Adaptive Inference for Change Points in High-Dimensional Data

Abstract: In this article, we propose a class of test statistics for a change point in the mean of high-dimensional independent data. Our test integrates the U-statistic based approach in a recent work by Wang et al. (2019) and the $L_q$-norm based high-dimensional test in He et al. (2020), and inherits several appealing features, such as being tuning parameter free and asymptotic independence of the test statistics corresponding to even $q$s. A simple combination of test statistics corresponding to several different $q$s leads to a test with an adaptive power property; that is, it can be powerful against both sparse and dense alternatives. On the estimation front, we obtain the convergence rate of the maximizer of our test statistic, standardized by sample size, when there is one change point in the mean and $q=2$, and propose to combine our tests with a wild binary segmentation (WBS) algorithm to estimate the number and locations of change points when there are multiple change points. Numerical comparisons using both simulated and real data demonstrate the advantage of our adaptive test and its corresponding estimation method.

Keywords: asymptotically pivotal, segmentation, self-normalization, structural break, U-statistics

1 Introduction

Testing and estimation of change points in a sequence of time-ordered data is a classical problem in statistics. There is a rich literature for both univariate and multivariate data of low dimension; see Csörgö and Horváth (1997), Chen and Gupta (2011) and Tartakovsky et al. (2014) for some book-length introductions, and Perron (2006), Aue and Horváth (2013), and Aminikhanghahi and Cook (2017) for recent reviews of the subject. This paper addresses testing and estimation for change points in high-dimensional data, where the dimension $p$ is high and can exceed the sample size $n$.

As high-dimensional data becomes ubiquitous due to technological advances in science, engineering and other areas, change point inference under the high-dimensional setting has drawn great interest in recent years. When the dimension $p$ is greater than the sample size $n$, traditional methods are often no longer applicable. Among recent work that addresses change point inference for the mean of high-dimensional data, we mention Horváth and Hušková (2012), Chan et al. (2013), Jirak (2015), Cho (2016), Wang and Samworth (2018), Enikeeva and Harchaoui (2019), Wang et al. (2019) and Yu and Chen (2020). In most of these papers, the proposed methods are powerful either when the alternative is sparse and strong, i.e., there are a few large non-zero values in the components of the mean difference, or when the alternative is weak and dense, i.e., there are many small values in the components of the mean difference. In some of these papers, sparsity appears either explicitly in the assumptions, e.g. Wang and Samworth (2018), who proposed to project the data onto an informative direction related to the mean change, to which a univariate change point detection algorithm can be applied, or implicitly in the methodology, e.g. Jirak (2015), who took the maximal CUSUM statistic and therefore essentially targeted the sparse alternative. Yu and Chen (2020) recently introduced a Gaussian multiplier bootstrap to calibrate critical values of the sup norm of CUSUM test statistics in high dimensions, and their test is also designed specifically for sparse alternatives. By contrast, Horváth and Hušková (2012) aggregated the univariate CUSUM test statistics using the sum, and their test is intended to capture the dense alternative, but the validity of their method requires the cross-sectional independence assumption. Wang et al. (2019) aimed at dense alternatives by extending the U-statistic based approach pioneered by Chen and Qin (2010) in the two-sample testing problem. An exception is the test developed in Enikeeva and Harchaoui (2019), which is based on a combination of a linear statistic and a scan statistic, and can be adaptive to both sparse and dense alternatives. However, its critical values were obtained under strong Gaussian and independent components assumptions, and they do not seem to work when these assumptions are not satisfied; see Section 4 for numerical evidence.

In practice, it is often unrealistic to assume a particular type of alternative, and there is little knowledge about the type of changes, if any. Thus there is a need to develop a new test that can be adaptive to different types of alternatives and have good power against a broad range of alternatives. In this article, we propose a new class of tests that have this adaptive power property, which holds without the strong Gaussian and independent components assumptions. Our test is built on two recent advances in the high-dimensional testing literature: Wang et al. (2019) and He et al. (2020). Wang et al. (2019) developed a mean change point test based on a U-statistic that is an unbiased estimator of the squared norm of the mean difference. They further used the idea of self-normalization [see Shao (2010), Shao and Zhang (2010), Shao (2015)] to eliminate the need to estimate the unknown nuisance parameter. He et al. (2020) studied both one-sample and two-sample high-dimensional testing problems for the mean and covariance matrix using the $L_q$-norm, where $q$ is some integer. They showed that the corresponding U-statistics at different $q$s are asymptotically independent, which facilitates a simple combination of the tests based on several values of $q$ (say and ) and their corresponding $p$-values, and that the resulting combined test is adaptive to both dense and sparse alternatives.

Building on these two recent advances, we propose a new $L_q$-norm based test for a change point in the mean of high-dimensional independent data. Our contributions to the literature are threefold. On the methodological front, we develop a new class of test statistics (indexed by $q$) based on the principle of self-normalization in the high-dimensional setting. Our test is tuning parameter free when testing for a single change point. A simple combination of tests corresponding to different $q$s can be easily implemented due to the asymptotic independence and results in an adaptive test that has well-rounded power against a wide range of alternatives. On the theory front, whereas He et al. (2020) proved the asymptotic independence of one-sample and two-sample U-statistics corresponding to different $q$s, we derive the asymptotic independence of several stochastic processes corresponding to different $q$s under significantly weaker assumptions. More precisely, we can define two-sample test statistics on different sub-samples for each $q$. These statistics can be viewed as smooth functionals of stochastic processes indexed by the starting and ending points of the sub-samples, which turn out to be asymptotically independent for different $q$s. Compared to the adaptive test in Enikeeva and Harchaoui (2019), which relied on the Gaussian and independent components assumptions, our technical assumptions are much weaker, allowing non-Gaussianity and weak dependence among components. Furthermore, we obtain the convergence rate of the argmax of our SN-based test statistic, standardized by sample size, when there is one change point and $q=2$. Lastly, in terms of empirical performance, we show in the simulation studies that the adaptive test can have accurate size and high power for both sparse and dense alternatives. Its power is always close to the highest one given by a single statistic under both dense and sparse alternatives.

The rest of the paper is organized as follows. In Section 2, we define our statistic, derive the limiting null distribution and analyze the asymptotic power when there is one change point. We also propose an adaptive procedure combining several tests with different $q$. In Section 3, we study the asymptotic behavior of change-point location estimators when there is a single change point, and combine the WBS algorithm with our test to estimate the locations when there are multiple change points. In Section 4, we present some simulation results for both testing and estimation and apply the WBS-based estimation method to a real data set. Section 5 concludes. All technical details and some additional simulation results are gathered in the supplemental material.

2 Test Statistics and Theoretical Properties

Mathematically, let be i.i.d random vectors with mean 0 and covariance . Our observed data is , where is the mean at time . The null hypothesis is that there is no change point in the mean vector and the alternative is that there is at least one change point, the location of which is unknown, i.e., we want to test

where and are unknown. Note that we assume temporal independence, which seems to be commonly adopted in change point analysis for genomic data; see Zhang et al. (2010), Jeng et al. (2010), and Zhang and Siegmund (2012) among others.

In this section, we first construct our two-sample U-statistic for a single change point alternative, which is the cornerstone for the estimation method we will introduce later. Then we derive the theoretical size and power results for our statistic. We also form an adaptive test that combines tests corresponding to different s. Throughout the paper, we assume and we may use to emphasize that can depend on . For a vector or matrix and , we use to denote , and in particular, for equals the Frobenius norm. We use to denote the spectral norm. Denote the number of permutations , and define to be the summation over all pairwise distinct indices. If , we denote , and if , we denote . Throughout the paper, we use “” to denote convergence in distribution, “” for convergence in probability, and “” for process convergence in some suitable function space. We use to denote the set of bounded functions on .

2.1 U-statistic and Self-normalization

In this subsection, we shall develop our test statistics for one change point alternative, i.e.,

where is unknown. In Wang et al. (2019), a U-statistic based approach was developed, and their test targets the dense alternative since the power is a monotone function of , where is the difference between pre-break and post-break means, i.e., , and is the covariance matrix of . Thus their test may not be powerful if the change in mean is sparse and is small. Note that several tests have been developed to capture sparse alternatives, as mentioned in Section 1. In practice, when there is no prior knowledge of the alternative for the data set at hand, it would be helpful to have a test that can be adaptive to different types and magnitudes of the change. To this end, we adopt the $L_q$-norm based approach, as initiated by Xu et al. (2016) and He et al. (2020), and develop a class of test statistics indexed by $q$, and then combine these tests to achieve adaptivity.

Denote . For any positive even number , consider the following two-sample U-statistic of order ,

for any . Simple calculation shows that for any under the null hypothesis, and under the alternative. When (i.e., is odd) and under the alternative, where . This is the main reason we focus on the statistics corresponding to even s since for an odd , does not imply .
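The display defining the statistic did not survive in this text, so the following is only a brute-force sketch under an assumed form: for each coordinate, sum the product of $q$ pre-split/post-split differences over all pairwise distinct index tuples on each side of the split, then sum over coordinates. This assumed form is consistent with the mean-zero property under the null and with the permutation-count notation introduced earlier, but the function name `two_sample_ustat` and the exact formula are assumptions, not the authors' definition. The cost grows combinatorially, so it is only meant for tiny examples.

```python
from itertools import permutations
from math import perm

import numpy as np


def two_sample_ustat(X, k, q):
    """Assumed order-q two-sample U-statistic at split k (brute force).

    X is an (n, p) array; rows 0..k-1 are "before", rows k..n-1 are "after".
    For every q-tuple of distinct "before" rows and every q-tuple of distinct
    "after" rows, multiply the q coordinatewise differences and sum over
    coordinates.
    """
    n = X.shape[0]
    total = 0.0
    for i_idx in permutations(range(k), q):
        for j_idx in permutations(range(k, n), q):
            diff = X[list(i_idx)] - X[list(j_idx)]  # shape (q, p)
            total += diff.prod(axis=0).sum()
    return total


# Noise-free illustration: constant mean a before the split, b after it.
a, b = np.array([1.0, 2.0]), np.array([0.0, 0.0])
X_demo = np.vstack([np.tile(a, (3, 1)), np.tile(b, (3, 1))])
value = two_sample_ustat(X_demo, k=3, q=2)
# Number of index tuples times sum_l (a_l - b_l)^q.
expected = perm(3, 2) * perm(3, 2) * np.sum((a - b) ** 2)
```

In the noise-free example the statistic equals the number of index tuples times the $q$-th power sum of the mean difference, which mirrors the expectation stated in the text for the alternative.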

If the change point location , is known, then we would use as our test statistic. As implied by the asymptotic results shown later, we have that under the null,

under suitable moment and weak dependence assumptions on the components of . In practice, a typical approach is to replace by a ratio-consistent estimator, which is available for $q=2$ [see Chen and Qin (2010)], but not for general $q$. Moreover, the location is unknown in practice, which adds complexity to the variance estimation and motivated Wang et al. (2019) to use the idea of self-normalization [Shao (2010), Shao and Zhang (2010)] in the case $q=2$. Self-normalization is a nascent inferential method [Lobato (2001), Shao (2010)] that has been developed for a low- and fixed-dimensional parameter in a low-dimensional time series. It uses an inconsistent variance estimator to yield an asymptotically pivotal statistic, and involves no tuning parameter or fewer tuning parameters than traditional procedures. See Shao (2015) for a comprehensive review of recent developments for low-dimensional time series. There have been two recent extensions to the high-dimensional setting: Wang and Shao (2019) adopted a one-sample U-statistic with trimming and extended self-normalization to inference for the mean of high-dimensional time series; Wang et al. (2019) used a two-sample U-statistic and extended the self-normalization (SN)-based change point test in Shao and Zhang (2010) to high-dimensional independent data. Both papers are $L_2$-norm based, and this seems to be the first time that an $L_q$-norm based approach is extended to the high-dimensional setting via self-normalization.

Following Wang et al. (2019), we consider the following self-normalization procedure. Define

which is an un-normalized version of applied to the subsample . Let

The self-normalized statistic is given by

Remark 2.1.

2.2 Limiting Null Distribution

Before presenting our main theorem, we need to make the following assumptions.

Assumption 2.1.

Suppose are i.i.d. copies of with mean 0 and covariance matrix , and the following conditions hold.

1. There exists not depending such that inf .

2. has up to -th moments, with and for there exist constants depending on only and a constant such that

Remark 2.2 (Discussion of Assumptions).

The above cumulant assumption is implied by geometric moment contraction [cf. Proposition 2 of Wu and Shao (2004)], by the physical dependence measure proposed by Wu (2005) [cf. Section 4 of Shao and Wu (2007)], or by $\alpha$-mixing [Andrews (1991), Zhurbenko and Zuev (1975)] in the time series setting. It basically imposes weak dependence among the components of the data. Our theory holds as long as some permutation of the components satisfies the cumulant assumption, since our test is invariant to permutations of the components.

To derive the limiting null distribution for , we need to define some useful intermediate processes. Define

for any . Note that under the null, ’s have the same mean. Therefore, we can rewrite as

In the above expression, considering the summand for each we can define, for any ,

if and and 0 otherwise.

Theorem 2.1.

If Assumption 2.1 holds, then under the null and for a finite set of positive even numbers, we have that

in jointly over , where , and are centered Gaussian processes. Furthermore, the covariance of and is given by

where ,, and . Additionally, and are mutually independent if .

For illustration, consider the case when and . We have

which implies, for example,

The proof of Theorem 2.1 is long and is deferred to the supplement.

Theorem 2.2.

It can be derived that the is a Gaussian process with the following covariance structure:

When ,

When ,

where is defined in Theorem 2.1. The limiting null distribution is pivotal and its critical values can be simulated as done in Wang et al. (2019) for the case $q=2$. The simulated critical values and their corresponding realizations for are available upon request. For practical reasons, we did not pursue larger $q$, such as , since a larger $q$ corresponds to more trimming on the two ends and the finite sample performance when is already very promising for detecting sparse alternatives; see Section 4. An additional difficulty with larger $q$ is the associated computational cost and the complexity of its implementation.

Remark 2.3.

Compared to He et al. (2020), we assume the th moment conditions, which are weaker than the uniform sub-Gaussian type conditions in their condition A.4(2), although the latter condition seems to be exclusively used for deriving the limit of the test statistic corresponding to . Furthermore, since their strong mixing condition with exponential decay rate [cf. condition A.4(3) of He et al. (2020)] implies the cumulant condition in our Assumption 2.1 [see Andrews (1991), Zhurbenko and Zuev (1975)], our overall assumption is weaker than condition A.4 in He et al. (2020). Despite the weaker assumptions, our results are stronger, as we derive the asymptotic independence of several stochastic processes indexed by , which implies the asymptotic independence of U-statistics indexed by .

Note that our current formulation does not include the $q=\infty$ case, which corresponds to the $L_\infty$-norm of the mean difference . The $L_\infty$-norm based test was developed by Yu and Chen (2020), and their test statistic is based on CUSUM statistics

and takes the form , where is the boundary removal parameter. They did not obtain the asymptotic distribution of but showed that a bootstrap CUSUM test statistic is able to approximate the finite sample distribution of using a modification of the Gaussian and bootstrap approximation techniques developed by Chernozhukov et al. (2013, 2017). Given the asymptotic independence between the $L_q$-norm based U-statistic and the $L_\infty$-norm based test statistic [He et al. (2020)] in the two-sample testing context, we conjecture that the test statistic in Yu and Chen (2020) is asymptotically independent of our for any under suitable moment and weak componentwise dependence conditions. A rigorous investigation is left for future work.

2.3 Adaptive Test

Let be a set of $q$ (e.g. {2,6}). Since s are asymptotically independent for different $q$ under the null, we can combine their corresponding $p$-values and form an adaptive test. For example, we may use , where is the $p$-value corresponding to , as a new statistic. Its $p$-value is equal to . To perform a level-$\alpha$ test, it is equivalent to conduct tests based on at level , and reject the null if one of the statistics exceeds its critical value. Therefore, we only need to compare each with its -quantile of the corresponding limiting null distribution.
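The combination rule can be made concrete with a small sketch. It assumes the standard minimum-$p$ combination for independent tests: with $m$ tests, the smallest $p$-value $p_{\min}$ is converted into a combined $p$-value $1-(1-p_{\min})^m$, and each individual test is run at level $1-(1-\alpha)^{1/m}$. The formulas in the paragraph above were lost, so treating them as this rule is an assumption, and the function names are hypothetical.

```python
def adaptive_pvalue(pvalues):
    """Combined p-value of the minimum of m independent p-values."""
    m = len(pvalues)
    p_min = min(pvalues)
    return 1.0 - (1.0 - p_min) ** m


def per_test_level(alpha, m):
    """Level for each of m independent tests so that the overall size is alpha."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


# Example with two hypothetical single-q p-values (say for q = 2 and q = 6).
combined = adaptive_pvalue([0.5, 0.2])   # 1 - 0.8**2
level = per_test_level(0.05, 2)          # 1 - sqrt(0.95)
```

Rejecting when the combined $p$-value is at most $\alpha$ is the same decision as rejecting when any single $p$-value is at most the per-test level, which is why only the individual critical values are needed.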

As we explained before, a smaller $q$ (say $q=2$) tends to have higher power under the dense alternative, which is also the main motivation for the proposed method in Wang et al. (2019). By contrast, a larger $q$ has higher power under the sparse alternative, as . Therefore, with the adaptive test, we can achieve high power under both dense and sparse alternatives with asymptotic size still equal to $\alpha$. This adaptivity will be confirmed by our asymptotic power analysis presented in Section 2.4 and the simulation results presented in Section 4.

2.4 Power Analysis

Theorem 2.3.

Assume that the change point location is at with the change in the mean equal to . Suppose Assumption 2.1, and the following conditions on hold. We have

1. If ;

2. If ;

3. If , then

where

Remark 2.4.

The following example illustrates the power behavior using different . For simplicity, we assume and consider a change in the mean equal to . In addition to demonstrating that large (small) is favorable to the sparse (dense) alternatives, our local asymptotic power results stated in Theorem 2.3 also allow us to provide a rule to classify an alternative, which is given by

To have nontrivial power, it suffices to have , which implies . Therefore, when , a smaller corresponds to a larger . On the contrary, when , a smaller that yields a larger is preferable to have higher power. A similar argument still holds for more general and , as long as we have a similar order for and , and the latter is guaranteed by Assumption 2.1.

We can summarize the asymptotic powers of the tests under different alternatives in the following table. Note that when at least one single-$q$ based test attains asymptotically nontrivial power (power 1), our adaptive test also achieves nontrivial power (power 1).

| Alternative | | | | |
|---|---|---|---|---|
| Dense | | | | |
| 1 | 1 | | | |
| & | | | | |
| 1 | 1 | | | |
| 1 | 1 | 1 | | |
| Sparse ) | | | | |
| 1 | 1 | | | |
| & | | | | |
| 1 | 1 | | | |
| 1 | 1 | 1 | | |

Liu et al. (2020) recently studied the detection of a sparse change in the high-dimensional mean vector under the Gaussian assumption as a minimax testing problem. Let . In the fully dense case, i.e., when , where denotes the norm, Theorem 8 in Liu et al. (2020) states that the minimax rate is given by . Thus under the assumption that , the $L_2$-norm based test in Wang et al. (2019) achieves rate optimality up to a logarithmic factor. Consequently, any adaptive test based on is rate optimal (up to a logarithmic factor) as long as .

In the special case , the minimax rate is given by

Recall that . In the sparse setting and under the assumptions that , and , the minimax rate is (up to a logarithmic factor), which corresponds to . Our $L_q$-norm based test is not minimax rate optimal since the detection boundary is , which gets closer to as gets larger. In the dense setting and under the assumptions that , and , the minimax rate is (up to a logarithmic factor), which corresponds to . Therefore the $L_2$-norm based test in Wang et al. (2019) is again rate optimal (up to a logarithmic factor).

3 Change-point Estimation

In this section, we investigate change-point location estimation based on the change-point test statistics proposed in Section 2. Specifically, Section 3.1 presents the convergence rate for the argmax of the SN-based test statistic upon suitable standardization. Section 3.2 proposes a combination of the wild binary segmentation (WBS) algorithm [Fryzlewicz (2014)] with our SN-based test statistics, for both the single-$q$ test and the adaptive test, to estimate multiple change points.

3.1 Single Change-point Estimation

In this subsection, we propose to estimate the location of a change point assuming that the data is generated from the following single change-point model,

where is the location of change point. In the literature, it is common to focus on the convergence rate of the estimators of the relative location , that is, we shall focus on the convergence rate of , where is an estimator for .

Given the discussions about size and power properties of the SN-based test statistic in Section 2, it is natural to use the argmax of the test statistic as the estimator for . That is, we define

To present the convergence rate for , we shall introduce the following assumptions.

Assumption 3.1.

1. ;

2. , for ;

3. .

Let so . We have the following convergence rate of for the case .

Theorem 3.1.

Suppose Assumption 3.1 holds and . It holds that as , for any .

Remark 3.1.

Assumption 3.1(1) and (2) have been assumed in Wang et al. (2019), and they are implied by Assumption 2.1; see Remark 3.2 in Wang et al. (2019). Assumption 3.1(3) is equivalent to , which implies that is a consistent estimator of . Note that even in the low-dimensional setting, no convergence rate for the argmax of the SN-based statistic (standardized by the sample size) is obtained in Shao and Zhang (2010). Thus this is the first time the asymptotic rate for the argmax of an SN-based test statistic is studied. On the other hand, the proof for the more general case is considerably more involved than the special case and is deferred to future investigation.

3.2 Multiple Change-point Estimation

In practice, the interest is often in change point estimation or segmentation, once the presence of change points is confirmed by testing or based on prior knowledge. In the high-dimensional context, the literature on change point estimation is relatively scarce; see Cho (2016), Wang and Samworth (2018) and Wang et al. (2019). Here we follow the latter two papers and use wild binary segmentation [Fryzlewicz (2014)], coupled with our test developed for a single $q$ or the adaptive test, to estimate the number and locations of change points. Note that the standard binary segmentation procedure may fail when the change in means is not monotonic, as shown in Wang et al. (2019) via simulations.

For any integers satisfying , define

Note that is essentially the statistic based on the sub-sample . Denote a random sample of s.t. as , where the sample is drawn independently with replacement of size . In practice, we may require the segments to be slightly longer to reduce unnecessary fluctuations of the critical values. Then define and we stop the algorithm if , where is some threshold to be specified below, and estimate the change point otherwise; see Algorithm 1 for details.
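The recursion described above can be sketched as follows. This is only an illustration of the wild binary segmentation control flow with a pluggable statistic: `cusum_stat` is a plain CUSUM-type $L_2$ contrast used as a stand-in and is not the self-normalized statistic of this paper, and the function names, the minimum interval length and the threshold value in the example are all assumptions.

```python
import numpy as np


def cusum_stat(X, s, e):
    """Stand-in statistic on rows s..e (inclusive): (max contrast, best split).

    The split index returned is the last row of the left part.
    """
    seg = X[s:e + 1]
    m = seg.shape[0]
    csum = np.cumsum(seg, axis=0)
    best_val, best_k = -np.inf, s
    for k in range(1, m):
        left = csum[k - 1] / k
        right = (csum[-1] - csum[k - 1]) / (m - k)
        val = k * (m - k) / m * float(np.sum((left - right) ** 2))
        if val > best_val:
            best_val, best_k = val, s + k - 1
    return best_val, best_k


def draw_intervals(n, M, min_len, rng):
    """Draw M random intervals (s, e) with replacement, each of length >= min_len."""
    intervals = []
    while len(intervals) < M:
        s, e = sorted(int(v) for v in rng.integers(0, n, size=2))
        if e - s + 1 >= min_len:
            intervals.append((s, e))
    return intervals


def wbs(X, s, e, intervals, threshold, stat=cusum_stat, min_len=10):
    """Recursively estimate change points on rows s..e."""
    inside = [(a, b) for (a, b) in intervals if s <= a and b <= e]
    if e - s + 1 < min_len or not inside:
        return []
    best_val, best_k = max((stat(X, a, b) for (a, b) in inside),
                           key=lambda r: r[0])
    if best_val <= threshold:  # stop: no interval exceeds the threshold
        return []
    left = wbs(X, s, best_k, intervals, threshold, stat, min_len)
    right = wbs(X, best_k + 1, e, intervals, threshold, stat, min_len)
    return left + [best_k] + right
```

A possible usage: simulate `X` with a mean shift, call `draw_intervals(n, M, min_len, rng)` once, and pass the same interval list to `wbs(X, 0, n - 1, intervals, threshold)`. Swapping in another statistic only requires a function with the same `(X, s, e) -> (value, split)` signature.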

One anonymous reviewer asked whether it is possible to derive the limiting distribution of under the null, which turns out to be challenging for two reasons: (1) the SN-based test statistics for different intervals could be highly dependent, especially when the two intervals overlap substantially; (2) the number of such randomly generated intervals is usually large, and it would be more valuable to develop an asymptotic distribution under the assumption that both the sample size and the number of intervals go to infinity. It seems difficult to use the classical argument for this problem, and we leave this for future investigation.

To obtain the threshold value as needed in the Algorithm 1, we generate standard Gaussian samples each of which has sample size and dimension . For the -th sample (, we calculate

where is the SN-based test statistic applied to the th Gaussian simulated sample. We can take to be the 95% quantile of . Since the self-normalized test statistic is asymptotically pivotal, the above threshold is expected to approximate the 95% quantile of the finite sample distribution of maximized SN-based test statistic applied to randomly drawn sub-samples from the original data.
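The threshold calibration can be sketched in a few lines. The sketch assumes that the same collection of random intervals is reused for every simulated Gaussian sample and again uses a plain CUSUM-type contrast (`max_cusum`) as a stand-in for the SN-based statistic; the names, the number of replications `R` and the default arguments are hypothetical.

```python
import numpy as np


def max_cusum(Z, s, e):
    """Stand-in statistic: maximal CUSUM-type L2 contrast on rows s..e."""
    seg = Z[s:e + 1]
    m = seg.shape[0]
    csum = np.cumsum(seg, axis=0)
    k = np.arange(1, m)
    left = csum[:-1] / k[:, None]
    right = (csum[-1] - csum[:-1]) / (m - k)[:, None]
    return float(np.max(k * (m - k) / m * np.sum((left - right) ** 2, axis=1)))


def simulate_threshold(n, p, intervals, stat=max_cusum, R=200, level=0.95, seed=0):
    """Quantile of the maximal statistic over intervals under pure Gaussian noise."""
    rng = np.random.default_rng(seed)
    maxima = np.empty(R)
    for r in range(R):
        Z = rng.standard_normal((n, p))  # r-th simulated null sample
        maxima[r] = max(stat(Z, s, e) for (s, e) in intervals)
    return float(np.quantile(maxima, level))
```

Because the statistic in the paper is asymptotically pivotal, a threshold simulated from standard Gaussian data is expected to transfer to the observed data; that property is not guaranteed for the stand-in statistic used here.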

To apply the adaptive test, we calculate with -th sample using different . Denote We calculate -value for each single- based statistic and select the most significant one for location estimation, which gives the adaptive version; see Algorithm 2.

4 Numerical Studies

In this section, we present numerical results to examine the finite sample performance of our testing and estimation method in comparison with the existing alternatives. Section 4.1 shows the size and power for the single change point tests; Section 4.2 presents the estimation result when there is one single change-point; Section 4.3 compares several WBS-based estimation methods for multiple change point estimation, including the INSPECT method in Wang and Samworth (2018). Finally, we apply our method to a real data set in Section 4.4.

4.1 Single Change Point Testing

In this subsection, we examine the size and power properties of our single-$q$ and adaptive tests in comparison with the one in Enikeeva and Harchaoui (2019) (denoted as EH), which seems to be the only adaptive method in the literature. The data , where for under the null. We set and and performed 2000 Monte Carlo replications. We consider four different configurations of as follows,

They correspond to independent components (Id), autoregressive models of order 1 (AR(0.5) and AR(0.8)) and compound symmetric (CS), respectively. The first three configurations imply weak dependence among components and thus satisfy Assumption 2.1, whereas the compound symmetric covariance matrix corresponds to strong dependence among components and violates our assumption. The sizes of our tests, including those at a single $q$ and the combined tests with (2,4), (2,6) and (2,4,6), are presented in Table 2. It appears that all tests are oversized when is compound symmetric, which is somewhat expected since the strong dependence among components brings non-negligible errors into the asymptotic approximation. As a matter of fact, we conjecture that our limiting null distribution no longer holds in this case. Below we focus our comments on the first three configurations (Id, AR(0.5) and AR(0.8)).
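The four covariance configurations can be generated along the following lines. The display giving the exact matrices was lost, so this sketch assumes the usual forms: AR(1)-type entries $\rho^{|i-j|}$ and a compound symmetric matrix with unit diagonal and constant off-diagonal entry. The off-diagonal value of the CS matrix, the Gaussian sampling and the function names are assumptions for illustration only.

```python
import numpy as np


def ar1_cov(p, rho):
    """AR(1)-type covariance with entries rho^{|i-j|}."""
    idx = np.arange(p)
    return rho ** np.abs(idx[:, None] - idx[None, :])


def cs_cov(p, rho):
    """Compound symmetric covariance: 1 on the diagonal, rho elsewhere."""
    return (1.0 - rho) * np.eye(p) + rho * np.ones((p, p))


def sample_gaussian(n, p, sigma, rng):
    """Draw n independent N(0, sigma) rows via a Cholesky factor."""
    chol = np.linalg.cholesky(sigma)
    return rng.standard_normal((n, p)) @ chol.T


rng = np.random.default_rng(1)
configs = {
    "Id": np.eye(4),
    "AR(0.5)": ar1_cov(4, 0.5),
    "AR(0.8)": ar1_cov(4, 0.8),
    "CS": cs_cov(4, 0.5),  # off-diagonal 0.5 is a placeholder value
}
samples = {name: sample_gaussian(10, 4, sig, rng) for name, sig in configs.items()}
```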

The size for $q=2$ (i.e., the test in Wang et al. (2019)) appears quite accurate except for some degree of under-rejection in the Id case. For , it is oversized and its size seems inferior to the case , which also shows some over-rejection for the AR(1) models when , but the size distortion improves quite a bit when we increase to . Among the three combined tests, there are apparent over-rejections for (2,4) and (2,4,6) for the AR(1) models, and the test corresponding to (2,6) exhibits the most accurate size overall. By contrast, EH shows serious size distortions in all settings, with severe over-rejection when the componentwise dependence is strong (e.g., AR(0.8) and CS), which is consistent with the fact that its validity strongly relies on the Gaussian and componentwise independence assumptions. We also checked the sensitivity of the size to non-Gaussianity and observed serious distortion for EH when the data is generated from a non-Gaussian distribution (results not shown). Overall, the adaptive test with (2,6) seems preferred to all other tests (including the adaptive test with ) in terms of size accuracy.

Please insert Table 2 here!

To investigate the power, we let for and for . We take and , which corresponds to a sparse alternative; and let to examine the power under the dense alternative; see Table 3. In the case of sparse alternative, we can see that the powers corresponding to and are much higher than that for , which is consistent with our intuition. When , the power is slightly higher than that for , which might be explained by the over-rejection with (in the case of AR(1) models), and we expect no power gain as we increase to etc, so the results for these larger are not included. Also for larger , there is more trimming involved as the maximum runs from to in our test statistics, so if the change point occurs outside of the range , our test has little power. In the dense alternative case, the power for is the highest as expected, and the power for is again slightly higher than that for .

The power of the combined tests (i.e., (2,4), (2,6) or (2,4,6)) is always fairly close to the best single one within the set. For example, the power for is very close to the power for in the sparse case and is quite close to the power for in the dense case, indicating the adaptiveness of the combined test. In the sparse case, the powers for and are slightly higher than that for , which could be related to the over-rejection of the tests with and , especially when the data is generated from AR(1) models. Overall, the adaptive tests (i.e., (2,4), (2,6) or (2,4,6)) have good all-around power against both sparse and dense alternatives and are the preferred choices when there is no prior knowledge about the type of alternative the data falls into. Since the size for (2,6) is more accurate than that for (2,4) and (2,4,6), we slightly favor the (2,6) combination. EH exhibits high power in all settings, but at the cost of serious size distortion. We do not present size-adjusted power, as the distortion is too great to recommend its use when there is componentwise dependence in the data.

Please insert Table 3 here!

4.2 Estimation for Single Change-point

In this subsection, we present the square root of mean-square-error (RMSE, multiplied by 1000 for readability) of SN-based location estimators and compare with the EH-based estimator under the same settings as we used in Section 4.1.

For both dense and sparse alternatives, the proposed estimators (i.e., SN(2), SN(4) and SN(6)) perform better than the EH method when the signal is relatively weak (i.e., ). However, as the signal becomes stronger (i.e., ), the EH method can outperform ours in the identity covariance matrix case. On the other hand, the performance of the EH estimator apparently deteriorates as the cross-sectional dependence gets stronger, indicating its strong reliance on the componentwise independence assumption. It is interesting to note that the SN-based method performs fairly well, even in the case of the compound symmetric covariance matrix, and SN(6) outperforms the other two in all settings. A theoretical justification for the latter phenomenon would be intriguing.

Please insert Table 4 here!

4.3 Estimation for Multiple Change Points

In the following simulations, we compare our WBS-based method with the INSPECT method proposed by Wang and Samworth (2018). Following Wang et al. (2019), we generate 100 samples of i.i.d. standard normal variables with . The 3 change points are located at and . Denote the changes in mean by with . We use, e.g., Dense(2.5) to denote dense changes with for and Sparse(4) to denote sparse changes with for . In particular, Dense() & Sparse() refers to , where we have a mixture of dense and sparse changes.

We compare WBS with INSPECT, for which we use the default parameters of the "InspectChangepoint" package in R. We use two different metrics for evaluating the performance of the methods. The first is the mean squared error (MSE) of the estimated number of change points. The second takes the accuracy of location estimation into account. We utilize the correlated rand index (CRI), which measures the accuracy of change point location estimation. See Rand (1971), Hubert and Arabie (1985) and Wang et al. (2019) for more details. For perfect estimation, the calculated CRI is 1. In general it is a number between 0 and 1, and the more precisely we estimate the change point locations, the higher the CRI. We average the CRI over all Monte Carlo replications and record the average rand index (ARI). We report the MSE and ARI of the different methods based on 100 replications in Table 5.
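The two metrics can be sketched as follows. This is only an illustration: it assumes that the index in use is the chance-corrected Rand index of Hubert and Arabie (1985), computed between the segmentations induced by the true and the estimated change points (this version can be slightly negative for very poor estimates). All function names are hypothetical.

```python
from collections import Counter
from math import comb


def labels_from_change_points(n, change_points):
    """Segment label of each time point 1..n; a change point c ends a segment at c."""
    cps = sorted(change_points)
    labels, seg, idx = [], 0, 0
    for t in range(1, n + 1):
        while idx < len(cps) and t > cps[idx]:
            seg += 1
            idx += 1
        labels.append(seg)
    return labels


def adjusted_rand_index(labels_a, labels_b):
    """Chance-corrected Rand index between two labelings of the same points."""
    if len(labels_a) != len(labels_b) or len(labels_a) < 2:
        raise ValueError("need two labelings of the same length >= 2")
    n = len(labels_a)
    sum_ij = sum(comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values())
    sum_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    sum_b = sum(comb(c, 2) for c in Counter(labels_b).values())
    expected = sum_a * sum_b / comb(n, 2)
    max_index = 0.5 * (sum_a + sum_b)
    if max_index == expected:
        return 1.0  # degenerate case: both labelings are trivial and agree
    return (sum_ij - expected) / (max_index - expected)


def mse_of_count(estimated_counts, true_count):
    """Mean squared error of the estimated number of change points."""
    return sum((k - true_count) ** 2 for k in estimated_counts) / len(estimated_counts)


# 100 illustrative replications with 3 true change points:
# 1 finds one, 11 find two, 75 find three, 13 find four.
counts = [1] * 1 + [2] * 11 + [3] * 75 + [4] * 13
mse = mse_of_count(counts, true_count=3)  # (4 + 11 + 0 + 13) / 100 = 0.28
```

A perfect location estimate gives an index of exactly 1, while shifting a single change point by one position already lowers it.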

When there are only sparse changes and , the performance of the adaptive procedure (WBS(2,6)) is similar to that of WBS(6), whose estimation is much more accurate than that of WBS(2) and INSPECT. When we strengthen the signal by increasing from to , the detection power of all methods increases, and INSPECT has the best estimation accuracy in this case, closely followed by WBS(6) and WBS(2,6). In the case of purely dense changes with , the performance of WBS(2) dominates the others and WBS(2,6) is the second best. When we increase from to in this setting, the adaptive test slightly outperforms INSPECT, and its performance is comparable to that of WBS(2). For both dense settings, the performance of WBS(6) is rather poor. Under all four of these settings, the performance of WBS(2,6) is always close to the best, indicating its adaptiveness to different types of change points. Moreover, when there is a mixture of dense and sparse changes, the adaptive method outperforms all the others. In practice, the type of changes is often unknown, and therefore our adaptive procedure could be appealing for practitioners.

Please insert Table 5 here!

4.4 Real data illustration

In this subsection, we study a genomic micro-array data set that contains log intensity ratios of 43 individuals with bladder tumor, measured at 2215 different loci. The data set is available in the R package ecp and was also studied by Wang et al. (2019) and Wang and Samworth (2018). We compare our results with theirs.

We take the first 200 loci for our study. For the WBS algorithm, we generate 10000 samples from i.i.d. standard normal distributions with , and draw 5000 random intervals to calculate the supremum statistics and get the 98%-quantile as our critical value. The change points detected by WBS at 0.98 level and the 20 most significant points detected by INSPECT are given as follows.
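The simulation-based critical value can be sketched as below. This is a simplified illustration rather than the procedure used in the paper: it works with univariate data and substitutes a plain absolute CUSUM statistic for the SN-based statistic, and it assumes that one set of random intervals is drawn once and reused across replications. The names `max_abs_cusum`, `random_intervals` and `simulated_critical_value` are hypothetical.

```python
import math
import random


def max_abs_cusum(x):
    """Largest absolute CUSUM value over all split points of the sequence x."""
    n = len(x)
    total = sum(x)
    best, left = 0.0, 0.0
    for b in range(1, n):
        left += x[b - 1]
        scale = math.sqrt(b * (n - b) / n)
        best = max(best, abs(scale * (left / b - (total - left) / (n - b))))
    return best


def random_intervals(n, m, rng, min_len=2):
    """Draw m random intervals [s, e) with at least min_len points each."""
    out = []
    while len(out) < m:
        s, e = sorted((rng.randrange(n + 1), rng.randrange(n + 1)))
        if e - s >= min_len:
            out.append((s, e))
    return out


def simulated_critical_value(n, n_rep, n_intervals, level,
                             statistic=max_abs_cusum, seed=0):
    """Empirical `level`-quantile of the supremum statistic under the null."""
    rng = random.Random(seed)
    intervals = random_intervals(n, n_intervals, rng)
    sup_stats = []
    for _ in range(n_rep):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # null data: no change
        sup_stats.append(max(statistic(x[s:e]) for s, e in intervals))
    sup_stats.sort()
    idx = min(n_rep - 1, max(0, math.ceil(level * n_rep) - 1))
    return sup_stats[idx]


# Small illustrative run; the paper uses far more samples and intervals.
crit = simulated_critical_value(n=40, n_rep=100, n_intervals=10, level=0.98)
```

Passing a different `statistic` callable is the intended way to plug in another test statistic; the quantile logic does not depend on the choice.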

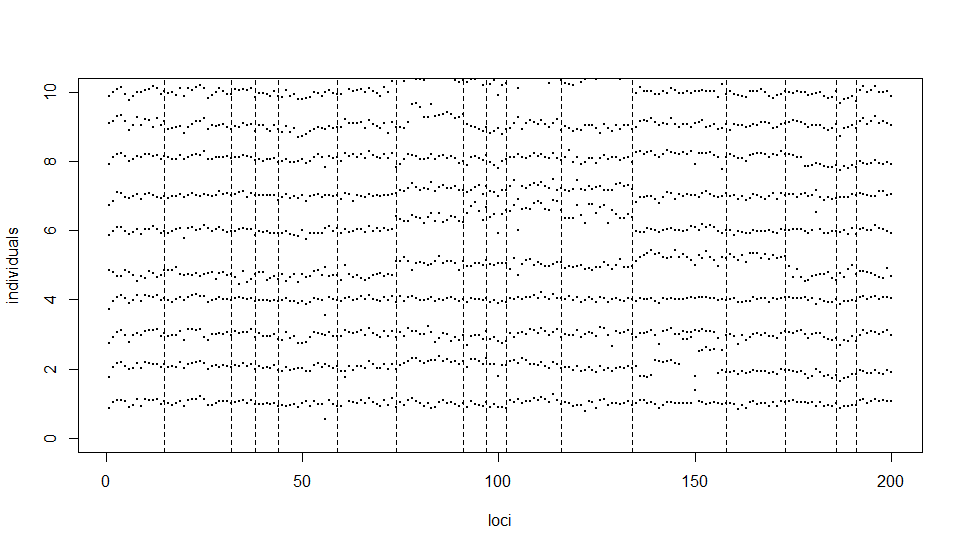

We can see that the set of change points detected by the adaptive WBS method is roughly a union of the sets corresponding to two single WBS methods (that is, for a single ), which suggests that the adaptive WBS method captures both sparse and dense alternatives as expected. In particular, 32(33), 44(46), 74, 134(135), 158(155), 173 are detected by both single methods, 38(39), 97, 102, 191 are detected only by , and 15, 59, 91, 116, 186 only by . The set of change points detected by the adaptive WBS method overlaps substantially with the set for INSPECT, including 15, 32(33), 38(36), 74(73), 91, 97, 102, 116(119), 134, 158(155), 173(174), and 191. It is worth noting that the change points at locations 91, 97 and 191 were each detected by only one of the two single WBS methods and by INSPECT, whereas the adaptive WBS method captures all of them, again reflecting its good all-round power against a broad range of alternatives. In Figure 1, we plot the log intensity ratios of the first 10 individuals at the first 200 loci, and the locations of the change points estimated by the adaptive method.

Please insert Figure 1 here!

This example clearly demonstrates the usefulness of the proposed adaptive test and corresponding WBS-based estimation method. An important practical choice is the threshold, which can be viewed as a tuning parameter in the implementation of WBS algorithm. We shall leave its choice for future investigation.
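To show where the threshold enters, here is a minimal sketch of wild binary segmentation in the spirit of Fryzlewicz (2014). It is not the SN-based procedure of this paper: it handles a univariate sequence and uses the plain absolute CUSUM statistic as a stand-in, and all names (`cusum_argmax`, `wbs`) are hypothetical.

```python
import math
import random


def cusum_argmax(x, s, e):
    """Maximal absolute CUSUM on x[s:e] and the split b (s < b < e) attaining it."""
    n = e - s
    total = sum(x[s:e])
    best_val, best_b, left = 0.0, None, 0.0
    for k in range(1, n):
        left += x[s + k - 1]
        val = abs(math.sqrt(k * (n - k) / n) * (left / k - (total - left) / (n - k)))
        if val > best_val:
            best_val, best_b = val, s + k
    return best_val, best_b


def wbs(x, threshold, n_intervals=100, seed=0):
    """Return sorted change points b, meaning x[:b] and x[b:] differ in mean."""
    rng = random.Random(seed)
    n = len(x)
    intervals = []
    for _ in range(n_intervals):
        s, e = sorted((rng.randrange(n + 1), rng.randrange(n + 1)))
        if e - s >= 2:
            intervals.append((s, e))
    found = []

    def recurse(s, e):
        if e - s < 2:
            return
        # Random intervals contained in [s, e), plus the segment itself.
        candidates = [(a, b) for a, b in intervals if s <= a and b <= e] + [(s, e)]
        best_val, best_b = max((cusum_argmax(x, a, b) for a, b in candidates),
                               key=lambda t: t[0])
        # The threshold decides whether the strongest candidate is a change point.
        if best_b is not None and best_val > threshold:
            found.append(best_b)
            recurse(s, best_b)
            recurse(best_b, e)

    recurse(0, n)
    return sorted(found)


# Noise-free toy sequence with mean shifts after positions 20 and 40.
signal = [0] * 20 + [5] * 20 + [0] * 20
detected = wbs(signal, threshold=1.0)
```

A larger threshold yields fewer detected change points and a smaller one yields more, which is why its choice acts as a tuning parameter.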

5 Conclusion

In this paper, we propose a class of asymptotically pivotal statistics for testing a mean change in high-dimensional independent data. The test statistics are formed on the basis of an unbiased estimator of -th power of the norm of the mean change via U-statistic and self-normalization. They are asymptotically independent for different , and therefore, we can form an adaptive test by taking the minimum of -values corresponding to test statistics indexed by . The resulting test is shown to have good overall power against both dense and sparse alternatives via theory and simulations. On the estimation front, we obtain the convergence rate for the argmax of SN-based test statistic standardized by sample size under the one change-point model and . We also combine our tests with WBS algorithm to estimate multiple change points. As demonstrated by our simulations, the WBS-based estimation method inherits the advantage of the adaptive test, as it outperforms other methods under the setting where there is a mixture of dense and sparse change points, and has close-to-best performance for purely dense and purely sparse cases.

To conclude, we mention that it would be interesting to extend our adaptive test to the high-dimensional time series setting, for which a trimming parameter seems necessary to accommodate weak temporal dependence in view of recent work by Wang and Shao (2019). In addition, the focus of this paper is on mean change, whereas in practice the interest could be on other high-dimensional parameters, such as vector of marginal quantiles, variance-covariance matrix, and even high-dimensional distributions. It remains to be seen whether some extensions to these more general parameters are possible in the high-dimensional environment. We shall leave these open problems for future research.

| DGP | | 5% | 5% | 5% | 5% | 5% | 5% | EH (5%) |
|---|---|---|---|---|---|---|---|---|
| Id | (200,100) | 0.028 | 0.065 | 0.056 | 0.052 | 0.032 | 0.045 | 0.01 |
| Id | (400,200) | 0.036 | 0.068 | 0.051 | 0.055 | 0.041 | 0.045 | 0 |
| AR(0.5) | (200,100) | 0.049 | 0.109 | 0.077 | 0.087 | 0.063 | 0.085 | 0.111 |
| AR(0.5) | (400,200) | 0.043 | 0.089 | 0.058 | 0.081 | 0.056 | 0.074 | 0.093 |
| AR(0.8) | (200,100) | 0.051 | 0.12 | 0.079 | 0.097 | 0.063 | 0.086 | 0.613 |
| AR(0.8) | (400,200) | 0.045 | 0.094 | 0.046 | 0.082 | 0.047 | 0.069 | 0.66 |
| CS | (200,100) | 0.095 | 0.103 | 0.081 | 0.109 | 0.088 | 0.098 | 0.729 |
| CS | (400,200) | 0.11 | 0.085 | 0.061 | 0.116 | 0.099 | 0.104 | 0.898 |

| DGP | | | | 5% | 5% | 5% | 5% | 5% | 5% | EH (5%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Sparse | Id | 1 | (200,100) | 0.742 | 0.981 | 0.962 | 0.982 | 0.967 | 0.981 | 0.844 |
| Sparse | Id | 1 | (400,200) | 0.94 | 1 | 1 | 1 | 1 | 1 | 0.998 |
| Sparse | Id | 2 | (200,100) | 0.995 | 1 | 1 | 1 | 1 | 1 | 1 |
| Sparse | Id | 2 | (400,200) | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Sparse | AR(0.5) | 1 | (200,100) | 0.566 | 0.947 | 0.91 | 0.933 | 0.894 | 0.93 | 0.809 |
| Sparse | AR(0.5) | 1 | (400,200) | 0.82 | 1 | 1 | 1 | 0.996 | 1 | 0.994 |
| Sparse | AR(0.5) | 2 | (200,100) | 0.94 | 1 | 1 | 1 | 0.999 | 1 | 0.999 |
| Sparse | AR(0.5) | 2 | (400,200) | 0.998 | 1 | 1 | 1 | 1 | 1 | 1 |
| Sparse | AR(0.8) | 1 | (200,100) | 0.298 | 0.887 | 0.84 | 0.883 | 0.82 | 0.876 | 0.912 |
| Sparse | AR(0.8) | 1 | (400,200) | 0.522 | 0.99 | 0.994 | 0.99 | 0.988 | 0.992 | 1 |
| Sparse | AR(0.8) | 2 | (200,100) | 0.703 | 0.997 | 0.995 | 0.997 | 0.994 | 0.997 | 0.994 |
| Sparse | AR(0.8) | 2 | (400,200) | 0.928 | 1 | 1 | 1 | 1 | 1 | 1 |
| Sparse | CS | 1 | (200,100) | 0.231 | 0.971 | 0.937 | 0.966 | 0.927 | 0.962 | 0.964 |
| Sparse | CS | 1 | (400,200) | 0.226 | 1 | 1 | 1 | 1 | 1 | 1 |
| Sparse | CS | 2 | (200,100) | 0.592 | 1 | 1 | 1 | 1 | 1 | 1 |
| Sparse | CS | 2 | (400,200) | 0.656 | 1 | 1 | 1 | 1 | 1 | 1 |
| Dense | Id | 1 | (200,100) | 0.718 | 0.326 | 0.292 | 0.677 | 0.661 | 0.645 | 0.444 |
| Dense | Id | 1 | (400,200) | 0.94 | 0.348 | 0.282 | 0.912 | 0.896 | 0.89 | 0.82 |
| Dense | Id | 2 | (200,100) | 0.995 | 0.567 | 0.612 | 0.991 | 0.99 | 0.985 | 0.978 |
| Dense | Id | 2 | (400,200) | 1 | 0.682 | 0.628 | 1 | 1 | 1 | 1 |
| Dense | AR(0.5) | 1 | (200,100) | 0.589 | 0.357 | 0.312 | 0.581 | 0.554 | 0.552 | 0.566 |
| Dense | AR(0.5) | 1 | (400,200) | 0.808 | 0.36 | 0.338 | 0.762 | 0.754 | 0.732 | 0.832 |
| Dense | AR(0.5) | 2 | (200,100) | 0.927 | 0.616 | 0.573 | 0.917 | 0.909 | 0.899 | 0.93 |
| Dense | AR(0.5) | 2 | (400,200) | 0.994 | 0.68 | 0.608 | 0.99 | 0.988 | 0.984 | 0.998 |
| Dense | AR(0.8) | 1 | (200,100) | 0.385 | 0.33 | 0.262 | 0.41 | 0.358 | 0.376 | 0.831 |
| Dense | AR(0.8) | 1 | (400,200) | 0.502 | 0.32 | 0.242 | 0.474 | 0.436 | 0.422 | 0.912 |
| Dense | AR(0.8) | 2 | (200,100) | 0.693 | 0.537 | 0.474 | 0.699 | 0.656 | 0.667 | 0.94 |
| Dense | AR(0.8) | 2 | (400,200) | 0.872 | 0.564 | 0.51 | 0.866 | 0.848 | 0.84 | 0.986 |
| Dense | CS | 1 | (200,100) | 0.345 | 0.277 | 0.235 | 0.363 | 0.33 | 0.338 | 0.84 |
| Dense | CS | 1 | (400,200) | 0.36 | 0.284 | 0.214 | 0.368 | 0.334 | 0.348 | 1 |
| Dense | CS | 2 | (200,100) | 0.544 | 0.455 | 0.414 | 0.551 | 0.526 | 0.532 | 0.919 |
| Dense | CS | 2 | (400,200) | 0.588 | 0.474 | 0.424 | 0.584 | 0.55 | 0.562 | 1 |

| | Method | Sparse, Id | Sparse, AR(0.5) | Sparse, AR(0.8) | Sparse, CS | Dense, Id | Dense, AR(0.5) | Dense, AR(0.8) | Dense, CS |
|---|---|---|---|---|---|---|---|---|---|
| 1 | SN(2) | 38.7 | 53.7 | 72.3 | 84.5 | 41.1 | 50.6 | 72.6 | 91.4 |
| 1 | SN(4) | 20.3 | 24.5 | 26.7 | 20.9 | 44.6 | 43.0 | 49.1 | 49.6 |
| 1 | SN(6) | 18.5 | 22.0 | 22.3 | 19.6 | 33.1 | 31.6 | 32.6 | 31.4 |
| 1 | EH | 150.3 | 214.9 | 300.6 | 326.8 | 155.6 | 216.9 | 291.3 | 332.3 |
| 2 | SN(2) | 26.0 | 33.5 | 41.7 | 44.3 | 27.5 | 36.6 | 54.8 | 76.8 |
| 2 | SN(4) | 14.4 | 17.5 | 19.6 | 16.3 | 37.9 | 38.3 | 45.8 | 48.8 |
| 2 | SN(6) | 12.1 | 14.1 | 14.9 | 11.9 | 29.7 | 28.6 | 31.9 | 30.5 |
| 2 | EH | 41.4 | 90.2 | 196.8 | 272.7 | 40.1 | 110.8 | 210.2 | 286.6 |
| 4 | SN(2) | 21.8 | 24.8 | 29.5 | 30.4 | 20.7 | 26.1 | 39.2 | 64.2 |
| 4 | SN(4) | 12.1 | 14.7 | 16.5 | 14.1 | 22.8 | 27.8 | 39.0 | 44.3 |
| 4 | SN(6) | 9.9 | 10.8 | 11.6 | 10.1 | 26.1 | 26.5 | 29.1 | 33.6 |
| 4 | EH | 8.7 | 16.7 | 66.8 | 153.2 | 9.7 | 29.9 | 109.4 | 209.6 |

| | | -3 | -2 | -1 | 0 | 1 | 2 | 3 | MSE | ARI |
|---|---|---|---|---|---|---|---|---|---|---|
| Sparse(2.5) | WBS-SN(2) | 0 | 1 | 11 | 75 | 13 | 0 | 0 | 0.28 | 0.8667 |
| Sparse(2.5) | WBS-SN(4) | 0 | 0 | 0 | 98 | 2 | 0 | 0 | 0.02 | 0.958 |
| Sparse(2.5) | WBS-SN(6) | 0 | 0 | 0 | 94 | 5 | 1 | 0 | 0.09 | 0.9552 |
| Sparse(2.5) | WBS-SN(2,6) | 0 | 0 | 0 | 90 | 10 | 0 | 0 | 0.1 | 0.9489 |
| Sparse(2.5) | INSPECT | 0 | 26 | 0 | 69 | 5 | 0 | 0 | 1.09 | 0.7951 |
| Sparse(4) | WBS-SN(2) | 0 | 0 | 0 | 86 | 14 | 0 | 0 | 0.14 | 0.9188 |
| Sparse(4) | WBS-SN(4) | 0 | 0 | 0 | 98 | 2 | 0 | 0 | 0.02 | 0.9684 |
| Sparse(4) | WBS-SN(6) | 0 | 0 | 0 | 94 | 5 | 1 | 0 | 0.09 | 0.9707 |
| Sparse(4) | WBS-SN(2,6) | 0 | 0 | 0 | 90 | 10 | 0 | 0 | 0.1 | 0.9678 |
| Sparse(4) | INSPECT | 0 | 0 | 0 | 91 | 8 | 1 | 0 | 0.12 | 0.9766 |
| Dense(2.5) | WBS-SN(2) | 0 | 2 | 10 | 74 | 13 | 1 | 0 | 0.35 | 0.8662 |
| Dense(2.5) | WBS-SN(4) | 94 | 4 | 2 | 0 | 0 | 0 | 0 | 8.64 | 0.0263 |
| Dense(2.5) | WBS-SN(6) | 70 | 20 | 7 | 3 | 0 | 0 | 0 | 7.17 | 0.1229 |
| Dense(2.5) | WBS-SN(2,6) | 5 | 5 | 7 | 64 | 9 | 0 | 0 | 0.91 | 0.7809 |
| Dense(2.5) | INSPECT | 0 | 40 | 0 | 46 | 13 | 0 | 1 | 1.82 | 0.6656 |
| Dense(4) | WBS-SN(2) | 0 | 0 | 0 | 85 | 13 | 2 | 0 | 0.21 | 0.9186 |
| Dense(4) | WBS-SN(4) | 47 | 33 | 14 | 6 | 0 | 0 | 0 | 5.69 | 0.2748 |
| Dense(4) | WBS-SN(6) | 46 | 28 | 21 | 5 | 0 | 0 | 0 | 5.47 | 0.2642 |
| Dense(4) | WBS-SN(2,6) | 0 | 0 | 0 | 87 | 13 | 0 | 0 | 0.13 | 0.9214 |
| Dense(4) | INSPECT | 0 | 7 | 0 | 68 | 22 | 2 | 1 | 0.67 | 0.9027 |
| Sparse(2.5) & Dense(4) | WBS-SN(2) | 0 | 1 | 12 | 73 | 14 | 0 | 0 | 0.3 | 0.8742 |
| Sparse(2.5) & Dense(4) | WBS-SN(4) | 0 | 0 | 62 | 37 | 1 | 0 | 0 | 0.63 | 0.7855 |
| Sparse(2.5) & Dense(4) | WBS-SN(6) | 0 | 0 | 60 | 38 | 2 | 0 | 0 | 0.62 | 0.7743 |
| Sparse(2.5) & Dense(4) | WBS-SN(2,6) | 0 | 0 | 0 | 91 | 9 | 0 | 0 | 0.09 | 0.9439 |
| Sparse(2.5) & Dense(4) | INSPECT | 0 | 21 | 1 | 70 | 6 | 1 | 1 | 1.04 | 0.8198 |

References

- Aminikhanghahi and Cook (2017) Aminikhanghahi, S. and Cook, D. J. (2017), “A survey of methods for time series change point detection,” Knowledge and Information Systems, 51, 339–367.

- Andrews (1991) Andrews, D. (1991), “Heteroskedasticity and autocorrelation consistent covariance matrix estimation,” Econometrica, 59, 817–858.

- Aue and Horváth (2013) Aue, A. and Horváth, L. (2013), “Structural breaks in time series,” Journal of Time Series Analysis, 34, 1–16.

- Chan et al. (2013) Chan, J., Horváth, L., and Hušková, M. (2013), “Darling–Erdős limit results for change-point detection in panel data,” Journal of Statistical Planning and Inference, 143, 955–970.

- Chen and Zhang (2015) Chen, H. and Zhang, N. (2015), “Graph-based change-point detection,” The Annals of Statistics, 43, 139–176.

- Chen and Gupta (2011) Chen, J. and Gupta, A. K. (2011), Parametric Statistical Change Point Analysis: with Applications to Genetics, Medicine, and Finance, Springer Science & Business Media.

- Chen and Qin (2010) Chen, S. X. and Qin, Y.-L. (2010), “A two-sample test for high-dimensional data with applications to gene-set testing,” The Annals of Statistics, 38, 808–835.

- Chernozhukov et al. (2013) Chernozhukov, V., Chetverikov, D., and Kato, K. (2013), “Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors,” Annals of Statistics, 41, 2786–2819.

- Chernozhukov et al. (2017) — (2017), “Central limit theorems and bootstrap in high dimensions,” Annals of Probability, 45, 2309–2352.

- Cho (2016) Cho, H. (2016), “Change-point detection in panel data via double CUSUM statistic,” Electronic Journal of Statistics, 10, 2000–2038.

- Csörgö and Horváth (1997) Csörgö, M. and Horváth, L. (1997), Limit Theorems in Change-Point Analysis, Wiley Series in Probability and Statistics, Wiley.

- Enikeeva and Harchaoui (2019) Enikeeva, F. and Harchaoui, Z. (2019), “High-dimensional change-point detection with sparse alternatives,” The Annals of Statistics, 47, 2051–2079.

- Fryzlewicz (2014) Fryzlewicz, P. (2014), “Wild binary segmentation for multiple change-point detection,” The Annals of Statistics, 42, 2243–2281.

- He et al. (2020) He, Y., Xu, G., Wu, C., and Pan, W. (2020), “Asymptotically independent U-Statistics in high-dimensional testing,” The Annals of Statistics, forthcoming.

- Horváth and Hušková (2012) Horváth, L. and Hušková, M. (2012), “Change-point detection in panel data,” Journal of Time Series Analysis, 33, 631–648.

- Hubert and Arabie (1985) Hubert, L. and Arabie, P. (1985), “Comparing partitions,” Journal of Classification, 2, 193–218.

- Jeng et al. (2010) Jeng, X., Cai, T., and Li, H. (2010), “Optimal sparse segment identification with application in copy number variation analysis,” Journal of the American Statistical Association, 105, 1156–1166.

- Jirak (2015) Jirak, M. (2015), “Uniform change point tests in high dimension,” The Annals of Statistics, 43, 2451–2483.

- Kley et al. (2016) Kley, T., Volgushev, S., Dette, H., and Hallin, M. (2016), “Quantile spectral processes: asymptotic analysis and inference,” Bernoulli, 22, 1770–1807.

- Liu et al. (2020) Liu, H., Gao, C., and Samworth, R. (2020), “Minimax Rates in Sparse High-Dimensional Changepoint Detection,” Annals of Statistics, to appear.

- Lobato (2001) Lobato, I. N. (2001), “Testing that a dependent process is uncorrelated,” Journal of the American Statistical Association, 96, 1066–1076.

- Perron (2006) Perron, P. (2006), “Dealing with structural breaks,” Palgrave Handbook of Econometrics, 1, 278–352.

- Rand (1971) Rand, W. M. (1971), “Objective criteria for the evaluation of clustering methods,” Journal of the American Statistical Association, 66, 846–850.

- Shao (2010) Shao, X. (2010), “A self-normalized approach to confidence interval construction in time series,” Journal of the Royal Statistical Society, Series B, 72, 343–366.

- Shao (2015) — (2015), “Self-normalization for time series: a review of recent developments,” Journal of the American Statistical Association, 110, 1797–1817.

- Shao and Wu (2007) Shao, X. and Wu, W. B. (2007), “Local Whittle estimation of fractional integration for nonlinear processes,” Econometric Theory, 23, 899–929.

- Shao and Zhang (2010) Shao, X. and Zhang, X. (2010), “Testing for change points in time series,” Journal of the American Statistical Association, 105, 1228–1240.

- Tartakovsky et al. (2014) Tartakovsky, A., Nikiforov, I., and Basseville, M. (2014), Sequential Analysis: Hypothesis Testing and Changepoint Detection, Chapman and Hall/CRC.

- Wang et al. (2020) Wang, D., Yu, Y., and Rinaldo, A. (2020), “Optimal change point detection and localization in sparse dynamic networks,” forthcoming at Annals of Statistics, arXiv preprint arXiv:1809.09602.

- Wang and Shao (2019) Wang, R. and Shao, X. (2019), “Hypothesis testing for high-dimensional time series via self-normalization,” The Annals of Statistics, to appear.

- Wang et al. (2019) Wang, R., Volgushev, S., and Shao, X. (2019), “Inference for change points in high dimensional data,” arXiv preprint arXiv:1905.08446.

- Wang and Samworth (2018) Wang, T. and Samworth, R. J. (2018), “High dimensional change point estimation via sparse projection,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 80, 57–83.

- Wu (2005) Wu, W. B. (2005), “Nonlinear system theory: Another look at dependence,” Proceedings of the National Academy of Sciences USA, 102, 14150–14154.

- Wu and Shao (2004) Wu, W. B. and Shao, X. (2004), “Limit theorems for iterated random functions,” Journal of Applied Probability, 41, 425–436.

- Xu et al. (2016) Xu, G., Lin, L., Wei, P., and Pan, W. (2016), “An adaptive two-sample test for high-dimensional means,” Biometrika, 103, 609–624.

- Yu and Chen (2020) Yu, M. and Chen, X. (2020), “Finite sample change point inference and identification for high-dimensional mean vectors,” Journal of the Royal Statistical Society, Series B, to appear.

- Zhang and Siegmund (2012) Zhang, N. R. and Siegmund, D. O. (2012), “Model selection for high-dimensional multi-sequence change-point problems,” Statistica Sinica, 22, 1507–1538.

- Zhang et al. (2010) Zhang, N. R., Siegmund, D. O., Ji, H., and Li, J. Z. (2010), “Detecting simultaneous changepoints in multiple sequences,” Biometrika, 97, 631–645.

- Zhang and Lavitas (2018) Zhang, T. and Lavitas, L. (2018), “Unsupervised self-normalized change-point testing for time series,” Journal of the American Statistical Association, 113, 637–648.

- Zhao et al. (2019) Zhao, Z., Chen, L., and Lin, L. (2019), “Change-point detection in dynamic networks via graphon estimation,” arXiv preprint arXiv:1908.01823.

- Zhurbenko and Zuev (1975) Zhurbenko, I. and Zuev, N. (1975), “On higher spectral densities of stationary processes with mixing,” Ukrainian Mathematical Journal, 27, 364–373.

Supplement to “Adaptive Inference for Change Points in High-Dimensional Data”

The supplement contains all the technical proofs in Section 6 and some additional simulation results on network change-point detection in Section 7.

6 Technical Appendix

In the following, we will denote and if .

Proof of Theorem 2.2.

Recall that under the null, as ’s have the same mean,

Therefore, we can calculate the covariance structure of based on that of given in Theorem 2.1.

When ,

When ,

When ,

For , we have

For , since the covariance of and is 0, the covariance of and is also 0. Since their arbitrary linear combinations are also Gaussian by the previous proofs, they are jointly Gaussian, and therefore independence is implied by uncorrelatedness. The rest follows from an application of the continuous mapping theorem.

Note that our Assumption 2.1 is a counterpart to the assumption made by Remark 3.2 in Wang et al. (2019). Their results are derived with some weaker assumption (i.e. Assumption 3.1 therein), whose -norm based counterpart for is given as follows.

Assumption 6.1.

For any , the following statements hold:

A.1 .

A.2 has up to th moments and there exists a constant independent of such that

for .

Proof of the claim.

Define

By the triangle inequality, , and therefore,

On the other hand,

RHS has order if . Now a simple computation shows that Assumption 6.1 is satisfied if for and which is equivalent to .

We are now ready to introduce the following lemma, which is vital in proving the main result.

Lemma 6.1.

Under Assumption 2.1, for any that are all distinct, , and ,

In particular, for , we have

In addition, for any ,

Proof of Lemma 6.1.

Applying the generalized Hölder’s Inequality, we obtain

since are all different, and are i.i.d. Again by Hölder’s Inequality,

The last line in the above inequalities is due to the CR inequality and the definition of joint cumulants, where runs through the list of all partitions of , runs through the list of all blocks of the partition . As all blocks in a partition are disjoint, we can further bound it as

where the first inequality in the above is due to Assumption 6.1, A.2, which is a consequence of Assumption 2.1, and the fact that there are only finite number of distinct partitions over . This completes the proof of the first result.

For the second result, we first define as the notation for the element-wise -th power of any real matrix , i.e. . Then we have

where is the largest eigenvalue. First observe that . By properties of norm, , if , and , if . This implies .

Next, for any symmetric matrix , . This, together with Assumption 2.1 (A.2), implies

for some . This is equivalent to . Note that , which leads to . So

since , for . This completes the proof for the second result.

This lemma is a generalization to its counterpart in Wang et al. (2019), in which we only have . To prove Theorem 2.1, we need the following lemmas to show tightness and finite dimensional convergence.

Lemma 6.2.

Under Assumption 2.1, for any , and define the 3-dimensional index set

for some constant , any such that .

Proof of Lemma 6.2.

By CR-inequality,

We shall only analyze , as the analysis of the other two terms is similar.

Note that for any and ,

where we have applied Lemma 6.1-(2) to , and the summation is over for . Therefore, we have

Lemma 6.3.

Fix for any , we have

where

Proof of Lemma 6.3.

WLOG, we can assume . The other terms are similar. Define

and

Define , we can see that under the null , is a martingale difference sequence w.r.t. , and

To apply the martingale CLT (Theorem 35.12 in Billingsley (2008)), we need to verify the following two conditions

.

.

To prove (1), it suffices to show that

Observe that

Straightforward calculations show that

The same result holds for . Therefore,

As regards (2), we decompose as follows,

We still focus on the case . Note that

where denotes the summation over terms s.t. , and is over the other terms.

It is straightforward to see that as ’s are independent, and

Note that

where the summation is over the range of s.t. Note that RHS can be further decomposed into 2 parts. The first part corresponds to the summation of the terms s.t. for and has no intersection with that for , which has order

The second part corresponds to the summation of the terms s.t. for and has at least one intersection with that for . Since at least one "degree of freedom" for is lost, the summation still has the form as in Lemma 6.1-(2), which has order . We can conclude that the second part has order , and hence goes to 0.

Therefore, , which implies . Therefore, we can conclude that . It remains to show that .

It suffices to show that . Based on the same argument as before, by applying Lemma 6.1-(2) we know that every kind of summation has the same order no matter how intersects with each other. Therefore, the terms in the expansion of for which has highest degree of freedom should dominate. For these terms, each index in should have exactly one pair. The number of these terms is of order . The summation has forms , s.t. and . We need to show that it is of order to complete the proof. By symmetry, we can assume , and therefore . Note that for ,

where we have used Hölder’s inequality, along with A.1 and the fact that

When , it must be the case that , the term becomes , and directly applying A.1 can yield the desired order.

We can then conclude that and hence . Combining what we have proved so far, we obtain .

Similar argument shows that

Therefore, we conclude that

which completes the proof.

We can generalize the above lemma to the case when are not identical.

Lemma 6.4.

Fix for any , we have

where and are independent Gaussian processes if , or or . And when , we have

Proof of Lemma 6.4.

We use the same notation as in the proof of the previous lemma, since the proof is similar and again involves applying the martingale CLT, where we have decomposed into 2 parts. Since the argument there can be directly applied, the only additional work is to calculate the mean.

To prove the second statement, we take , as the example case, since the proofs for the other cases are similar. With the same technique we have used, it can be shown that

To derive the convergence in the statement, we can follow the same argument as before to show the variance goes to 0, and therefore, we have the convergence in distribution, with desired covariance structure.

As for the first statement, it is straightforward to see that the expectation for the crossing term (corresponding to ) is 0 for each of the cases in the first statement, which implies that the Gaussian processes have to be independent due to asymptotic normality.

Now we are ready to complete the proof of Theorem 2.1.

Proof of Theorem 2.1.

The tightness is guaranteed by Lemma 6.2 and applying Lemma 7.1 in Kley et al. (2016) with . We omit the detailed proof as the argument is similar to the tightness proof in Wang et al. (2019). Lemma 6.4 has provided finite dimensional convergence of , which has asymptotic covariance structure as after normalization. Therefore, we have derived desired process convergence.

Proof of Theorem 2.3.

Now we are under the alternative, with the location point and the change of mean equal to . Suppose WLOG .

First suppose , which is equivalent to . It suffices to show that in this case,

Since converges to some non-degenerate process, and

it remains to show that .

Note that consists of terms that are each ratio consistent to

for some constant depending on and , where

which can be further decomposed as

for some constants depending on . Therefore, it suffices to show

Similar argument for showing tightness and finite dimensional convergence in proving Theorem 2.1 can be applied. More precisely, we can get a similar moment bound as in Lemma 6.2 and follow the argument there to show the tightness, since we have

by Lemma 6.1-(1).

Furthermore, following the proof of Lemma 6.3, Lemma 6.1-(3) implies finite dimensional convergence to 0, as

We have the desired process convergence for , which along with the continuous mapping theorem further implies the convergence of the statistic.

When , note that . Since is the location of the change point, the denominator has the same value as the null. On the contrary, it is immediate to see that the numerator diverges to infinity after normalizing (with ). Therefore, we have .

Before we prove the convergence rate for SN-based estimator, we state the following useful propositions.

Proposition 6.1.

For any , and , we have:

1. if or , ;

2. if ,

3. if ,

Let . We have the following result.

Proposition 6.2.

Now we are ready to prove the convergence rate for SN-based statistic .

Proof of Theorem 3.1.

Due to the fact that is the global maximizer, we have

Since , and are all strictly positive almost surely, we can then conclude that or . Define . If , then there exists at least one such that or . This implies

By Proposition 6.2, it is straightforward to see that , and this completes the proof.

Proof of Proposition 6.1.

If or , then are all identical, for . This implies that .

When , there are two scenarios depending on the value of . If , note that for any and zero otherwise, then by straightforward calculation we have

Similarly if we have

Proof of Proposition 6.2.

To show the first result, we first assume . Then according to Proposition 6.1,

Similarly we have

It is easy to verify that , for . Furthermore, by Theorem 2.1 in Wang et al. (2019) and the argument therein, we have

since by Assumption 3.1 (3), and

These imply that

since by Assumption 3.1 (3). Therefore, we have

In addition,

Since , we have

Finally, it is straightforward to see that

And

since both and converge to 1. This is equivalent to

and it implies that . Similar tactics can be applied to the case and by combining the two parts we have . Therefore this completes the proof for the first result.

When is between and , by Proposition 6.1 we have

and from the above decomposition we observe that , which is the second term in the above equality. Then

since . Furthermore,

due to Assumption 3.1, Theorem 2.1 and the argument in Wang et al. (2019).

Similarly when is between and , we have

where , and

Therefore by combining the above results we obtain that

since . And

since and . By very similar arguments, we can obtain the same bound for , and hence . On the other hand, Theorem 2.1 implies that . This indicates that , and consequently,

This completes the whole proof.

7 Application to network change-point detection

Our change-point testing and estimation methods are applicable to network change-point detection in the following sense. Suppose we observe independent networks over time with nodes. Here is the adjacency matrix at time . We assume the edges in each network are generated from Bernoulli random variables and are un-directed. That is,

Let and assume . Let .

Suppose that we are interested in testing

versus certain change point alternatives. Here we can convert the adjacency matrix into a high-dimensional vector, and apply our test and estimation procedures. Note that a mean shift in implies a shift in variance matrix of , so the variance matrix is not constant under the alternative. However, the asymptotic distribution of our SN-based test statistics still holds under the null, and our change-point detection method is applicable. Note that our method allows the edges to be weakly dependent, which can be satisfied by many popular network models; see Wang et al. (2020).

To examine the finite sample performance of our change-point testing and estimation in the network framework, we consider the following stochastic block model as in Wang et al. (2020). We generate as a matrix with entries being i.i.d. Bernoulli variables with mean matrix where is the membership matrix and is the connectivity matrix. We set to be the first columns of identity matrix so that , and be a matrix of ones.
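The data-generating step can be sketched as follows. This is an illustration under stated assumptions, not the exact design above: block membership is passed as a list of labels instead of a membership matrix, the edge probability between two distinct nodes is taken to be a scaling factor `rho` times the connectivity entry of their blocks, self-loops are excluded, and the undirected adjacency matrix is converted to a vector by stacking its upper triangle. All names are hypothetical.

```python
import random


def sbm_mean_matrix(membership, connectivity, rho=1.0):
    """Edge probabilities rho * Q[g_i][g_j] for i != j, with a zero diagonal."""
    m = len(membership)
    return [[0.0 if i == j else rho * connectivity[membership[i]][membership[j]]
             for j in range(m)] for i in range(m)]


def sample_adjacency(mean, rng):
    """Symmetric 0/1 adjacency matrix with independent Bernoulli upper-triangle edges."""
    m = len(mean)
    a = [[0] * m for _ in range(m)]
    for i in range(m):
        for j in range(i + 1, m):
            edge = 1 if rng.random() < mean[i][j] else 0
            a[i][j] = a[j][i] = edge
    return a


def vectorize_upper(a):
    """Stack the strict upper triangle of a square matrix into one vector."""
    m = len(a)
    return [a[i][j] for i in range(m) for j in range(i + 1, m)]


# Two blocks of three nodes; edges occur only within blocks in this toy case.
rng = random.Random(0)
mean = sbm_mean_matrix([0, 0, 0, 1, 1, 1], [[1.0, 0.0], [0.0, 1.0]])
adj = sample_adjacency(mean, rng)
vec = vectorize_upper(adj)  # length 6 * 5 / 2 = 15
```

A sequence of such vectors, one per time point, is the high-dimensional input to which a mean change-point test can be applied.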

Table 6 presents the size with 1000 Monte Carlo repetitions. We take with .

| DGP | | 5% | 5% | 5% | 5% | 5% | 10% | 10% | 10% | 10% | 10% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | (200,10) | 0.035 | 0.096 | 0.068 | 0.08 | 0.048 | 0.075 | 0.152 | 0.135 | 0.124 | 0.096 |
| 1 | (400,20) | 0.054 | 0.084 | 0.049 | 0.071 | 0.048 | 0.097 | 0.142 | 0.094 | 0.135 | 0.099 |
| 0.2 | (200,10) | 0.065 | 0.117 | 0.08 | 0.116 | 0.062 | 0.095 | 0.153 | 0.151 | 0.147 | 0.121 |
| 0.2 | (400,20) | 0.05 | 0.101 | 0.043 | 0.09 | 0.047 | 0.099 | 0.153 | 0.096 | 0.137 | 0.083 |

As regards the power simulation, we generate the network data with a change point located at , which leads to . We take with and . We obtain the empirical power based on 1000 Monte Carlo repetitions.

| DGP | | 5% | 5% | 5% | 5% | 5% | 10% | 10% | 10% | 10% | 10% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| (0.2,1) | (200,10) | 0.152 | 0.172 | 0.116 | 0.19 | 0.145 | 0.223 | 0.254 | 0.225 | 0.265 | 0.222 |
| (0.2,1) | (400,20) | 0.83 | 0.309 | 0.238 | 0.787 | 0.775 | 0.908 | 0.411 | 0.364 | 0.865 | 0.85 |
| (0.5,1) | (200,10) | 0.93 | 0.628 | 0.527 | 0.917 | 0.904 | 0.963 | 0.723 | 0.666 | 0.952 | 0.937 |
| (0.5,1) | (400,20) | 1 | 0.995 | 0.97 | 1 | 1 | 1 | 0.997 | 0.99 | 1 | 1 |
| (0.2,0.2) | (200,10) | 0.804 | 0.677 | 0.61 | 0.798 | 0.755 | 0.866 | 0.75 | 0.708 | 0.86 | 0.829 |
| (0.2,0.2) | (400,20) | 1 | 0.994 | 0.991 | 1 | 1 | 1 | 0.997 | 0.999 | 1 | 1 |
| (0.5,0.2) | (200,10) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| (0.5,0.2) | (400,20) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

We can see that the size behavior of our method is similar to that observed for Gaussian data in Section 4.1. The power also appears to be quite good and increases with the signal strength. We are not aware of any testing method tailored to a single change point in network data, so we did not include any other method in the comparison.

To estimate the change-points in the network time series, we again combine our method with WBS. We generate 100 samples of networks with connection probability and sparsity parameter . The 3 change points are located at and . We take . We report the MSE and ARI over 100 Monte Carlo simulations as before. We compare our method with the modified neighborhood smoothing (MNBS) algorithm in Zhao et al. (2019) and the graph-based test in Chen and Zhang (2015) combined with binary segmentation (denoted as CZ). We do not include a comparison with Wang et al. (2020), as their method requires two i.i.d. samples. We can see that CZ performs worse than the other two methods, as our simulation involves non-monotonic changes in the mean, which do not favor binary segmentation. When the network becomes sparse, i.e. , our method also performs better than MNBS. Overall, the performance of our method (e.g., WBS-SN(2), WBS-SN(2,6)) seems quite stable. Of course, the scope of this simulation is quite limited, and we leave a more in-depth investigation of network change-point estimation to future work.
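
To make the role of WBS concrete, the following is a minimal sketch of the wild binary segmentation recursion. It is not the procedure used in this paper: in place of the SN-based statistics it uses a plain squared-norm CUSUM contrast as a stand-in, and the threshold, the number of random intervals, the minimum segment length, and all function names are illustrative assumptions.

```python
import random

def cusum_stat(X, s, e, b):
    """Stand-in statistic: squared L2 norm of the CUSUM contrast for X[s:b] vs X[b:e]."""
    p = len(X[0])
    nl, nr = b - s, e - b
    total = 0.0
    for j in range(p):
        mean_left = sum(X[t][j] for t in range(s, b)) / nl
        mean_right = sum(X[t][j] for t in range(b, e)) / nr
        total += (mean_left - mean_right) ** 2
    return nl * nr / (nl + nr) * total

def best_split(X, s, e):
    """Largest statistic over all splits of X[s:e], with its location."""
    b = max(range(s + 1, e), key=lambda k: cusum_stat(X, s, e, k))
    return cusum_stat(X, s, e, b), b

def wbs(X, threshold, n_intervals=50, min_len=4, seed=0):
    """Wild binary segmentation with randomly drawn intervals (sketch)."""
    rng = random.Random(seed)
    n = len(X)
    intervals = []
    for _ in range(n_intervals):
        s = rng.randrange(0, n - min_len + 1)
        e = rng.randrange(s + min_len, n + 1)
        intervals.append((s, e))
    found = []

    def recurse(s, e):
        if e - s < min_len:
            return
        # Use the segment itself plus every random interval lying inside it.
        candidates = [(s, e)] + [(a, c) for a, c in intervals if s <= a and c <= e]
        stat, b = max(best_split(X, a, c) for a, c in candidates)
        if stat > threshold:
            found.append(b)   # b is the first index of the new segment
            recurse(s, b)
            recurse(b, e)

    recurse(0, n)
    return sorted(found)

# Noise-free toy data: mean 0, then 3, then back to 0 (a non-monotonic change).
X = [[0.0, 0.0]] * 15 + [[3.0, 3.0]] * 15 + [[0.0, 0.0]] * 15
print(wbs(X, threshold=20.0))
```

In the toy data the mean returns to its original level, so the contrast over the full sample is weak, while shorter random intervals that contain only one change give a much larger contrast. This is the kind of non-monotonic configuration in which drawing random intervals can help relative to plain binary segmentation.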

| | Method | -3 | -2 | -1 | 0 | 1 | 2 | 3 | MSE | ARI |
|---|---|---|---|---|---|---|---|---|---|---|
| (0.2, 1, 1) | WBS-SN(2) | 0 | 1 | 14 | 74 | 10 | 1 | 0 | 0.32 | 0.865 |
| | WBS-SN(4) | 90 | 9 | 1 | 0 | 0 | 0 | 0 | 8.47 | 0.0373 |
| | WBS-SN(6) | 32 | 23 | 24 | 16 | 4 | 1 | 0 | 4.12 | 0.278 |
| | WBS-SN(2,6) | 1 | 2 | 18 | 39 | 32 | 8 | 0 | 0.99 | 0.728 |
| | CZ | 46 | 50 | 4 | 0 | 0 | 0 | 0 | 6.18 | 0.165 |
| | MNBS | 0 | 2 | 17 | 55 | 23 | 3 | 0 | 0.6 | 0.847 |
| (0.1, 1, 0.3) | WBS-SN(2) | 0 | 0 | 4 | 82 | 14 | 0 | 0 | 0.18 | 0.893 |
| | WBS-SN(4) | 12 | 17 | 38 | 33 | 0 | 0 | 0 | 2.14 | 0.604 |
| | WBS-SN(6) | 28 | 27 | 27 | 14 | 4 | 0 | 0 | 3.91 | 0.383 |
| | WBS-SN(2,6) | 0 | 1 | 8 | 60 | 29 | 2 | 0 | 0.49 | 0.852 |
| | CZ | 55 | 33 | 6 | 4 | 1 | 1 | 0 | 6.38 | 0.156 |
| | MNBS | 97 | 0 | 2 | 1 | 0 | 0 | 0 | 8.75 | 0.019 |
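
The MSE column can be cross-checked against the count columns. Assuming, as the reported values suggest, that the columns labeled -3 to 3 count how often the estimated number of change points differs from the true number by that amount, and that MSE is the average squared difference over the 100 replications, the sketch below recomputes it for the first block of rows. The helper name is illustrative.

```python
def mse_from_counts(counts, diffs=(-3, -2, -1, 0, 1, 2, 3)):
    """Average squared difference implied by a row of counts."""
    reps = sum(counts)
    return sum(c * d * d for c, d in zip(counts, diffs)) / reps

# Count rows copied from the first block of the table above.
rows = {
    "WBS-SN(2)":   [0, 1, 14, 74, 10, 1, 0],
    "WBS-SN(4)":   [90, 9, 1, 0, 0, 0, 0],
    "WBS-SN(6)":   [32, 23, 24, 16, 4, 1, 0],
    "WBS-SN(2,6)": [1, 2, 18, 39, 32, 8, 0],
    "CZ":          [46, 50, 4, 0, 0, 0, 0],
    "MNBS":        [0, 2, 17, 55, 23, 3, 0],
}
for name, counts in rows.items():
    print(name, mse_from_counts(counts))
```

The recomputed values agree with the reported MSE column (0.32, 8.47, 4.12, 0.99, 6.18 and 0.6), which supports this reading of the count columns.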