Adaptive self-organization in a realistic neural network model

Abstract

Information processing in complex systems is often found to be maximally efficient close to critical states associated with phase transitions. It is therefore conceivable that also neural information processing operates close to criticality. This is further supported by the observation of power-law distributions, which are a hallmark of phase transitions. An important open question is how neural networks could remain close to a critical point while undergoing continual change in the course of development, adaptation, learning, and more. An influential contribution was made by Bornholdt and Rohlf, introducing a generic mechanism of robust self-organized criticality in adaptive networks. Here we address the question whether this mechanism is relevant for real neural networks. We show in a realistic model that spike-time dependent synaptic plasticity can self-organize neural networks robustly toward criticality. Our model reproduces several empirical observations and makes testable predictions on the distribution of synaptic strength, relating them to the critical state of the network. These results suggest that the interplay between dynamics and topology may be essential for neural information processing.

pacs:

87.18.Sn, 05.65.+b, 89.75.Fb, 64.60.HtI Introduction

Dynamical criticality has been shown to bring about optimal information transfer and storage capabilities Beggs and Plenz (2003); Trusina et al. (2005); Legenstein and Maass (2007) and sensitivity to external stimuli Haldeman and Beggs (2005); Kinouchi and Copelli (2006) making it an attractive concept for neural dynamics Bienenstock and Lehmann (1998); Freeman et al. (2000); Chialvo (2006). Evidence for dynamical criticality in neural networks is found in vitro, in cell cultures and in slices of rat cortex Beggs and Plenz (2003), and in vivo Gireesh and Plenz (2008), where avalanches of neuronal activity observed to follow a power-law size distribution with exponent -1.5. On a larger spatial scale of human electroencephalography (EEG), measurements of electrical activity in the brain show that the power spectrum of background activity follows a power-law Barlow (1993); Barrie et al. (1996); Freeman et al. (2000); de Arcangelis et al. (2006) which has also been linked to criticality Novikov et al. (1997); de Arcangelis et al. (2006). Recently, also measures of phase synchronization were demonstrated to follow power-law scaling in functional magnetic resonance imaging (MRI) and magnetoencephalographic (MEG) data recorded from humans, indicating critical dynamics Kitzbichler et al. (2009).

Despite the empirical evidence, few generative mechanisms explaining the purported criticality have been proposed. What is needed is an robust mechanism that drives the system back to the critical state after a perturbation. In the theoretical literature, self-organized criticality (SOC), the ability of systems to self-tune their operating parameters to the critical state, has been discussed for a long time Bak et al. (1987). A major new impulse came from the discovery of network-based mechanisms, which were first reported in Christensen et al. (1998) and explained in detail in Bornholdt and Rohlf (2000); Bornholdt and Röhl (2003). These works showed that adaptive networks, i.e., networks combining topological evolution of the network topology with dynamics in the network nodes Gross and Blasius (2008); Gross and (Eds.), can exhibit highly robust SOC based on simple local rules.

The essential characteristic of adaptive networks is the interplay between dynamics ON the network and dynamics OF the network. In the case of neural networks the dynamics on the networks is the activity of the individual neurons. Dynamics of the network appear in many forms, but include the rewiring of neural connections in the developing brain and synaptic plasticity, the dynamical change of synaptic strength. In real world neural networks the formation of connections and all but the fastest mechanism of plasticity are clearly much slower than the dynamics of the neurons. For this reason both types of dynamics can be assumed to take place on separate timescales: On a short timescale the dynamics of neuronal activity occurs in a network with quasi-static topology. Only on a longer timescale, the topology evolves depending on the time-averaged, and therefore also quasi-static patterns of neuronal activity.

The basic mechanism of adaptive SOC can be explained as follows: Dynamics on networks are in general sensitive to the network topology, the specific pattern of nodes and links. Remarkably, even the dynamics in a single network node may provide information on global topological order parameters, if the node is observed for sufficient time. Thus, the dynamics explores the network making certain global topological properties locally accessible in every network node. In adaptive networks this information can then be utilized by a local topological update rule that slowly drives the network toward criticality.

The investigation of conceptual models of adaptive SOC Bornholdt and Röhl (2003); Gross and (Eds.) has shown that the presence of this type of self-organization in human neural networks is plausible. Independently, robust SOC has recently been demonstrated in neural models Shin and Kim (2006); Levina et al. (2007); Siri et al. (2007), which also fall into the class of adaptive networks. The aim of the present work is to assess whether adaptive SOC can self-organize a realistic model of neural network robustly to criticality. In particular, we consider topological self-organization driven by spike-time dependent synaptic plasticity (STDP). We find that the final state the network approaches is critical, albeit different from the states that were approached in previous models using activity-dependent (homeostatic) mechanism of plasticity.

II Description of the Model

We consider a network of leaky integrate-and-fire neurons. In contrast to previous works, which focused on inhibitory interactions Levina et al. (2007), we study a network of 80% excitatory to 20% inhibitory neurons, which is realistic for cortical networks Braitenberg and Schütz (1991). In the absence of a stimulus, the membrane potential, , of neuron follows the equation

| (1) |

describing an exponential return to the resting potential on a timescale given by . Whenever a neuron receives an input from a pre-synaptic neuron we update the membrane potential by adding if the pre-synaptic neuron is excitatory, or if the pre-synaptic neuron is inhibitory. If the update causes the membrane potential to reach or exceed the threshold then the potential is reset to for a refractory period after which it evolves again according to Eq. (1). Upon reaching the threshold the neuron fires a spike, which reaches the connected post-synaptic neurons after a travel-time delay .

Note that spikes can only be fired directly upon receiving an excitatory input. Consequently spikes are fired at times that are integer multiples of the travel-time delay. Thus the travel-time defines a natural timestep which we also use as the timestep of our simulation. Between spikes the membrane potential of a neuron is updated using the analytical solution of Eq. (1). The system can thus be simulated without suffering from the inaccuracies that are often introduced by numerical integration.

The topology of the network changes due to spike-time dependent plasticity (STDP) Bi and Poo (1998); Debanne et al. (1998), which is believed to alter the topology of the network on a timescale that is much slower than the spiking dynamics (some hundred milliseconds to some seconds Markram et al. (1997); Bi and Poo (1998)).

Exploiting the timescale separation we proceed as follows: We simulate the dynamics on the network according to the rules described above for a long time . Only when the simulation has finished the topology is changed according to an update rule explained below. The timescale is chosen sufficiently long for the system to reach a dynamical attractor. Specifically, we assume that this is the case when the neurons have fired in average spikes or all activity on the network has stopped. Once the attractor has been reached further simulation does not reveal additional information, as the system remains on the attractor (see e.g. Bornholdt and Rohlf (2000)). The exact choice of is therefore of no consequence, provided that it is sufficiently long. In the present model, this was confirmed numerically.

The STDP update rule captures the effect of the temporal order of spikes between pairs of neurons Markram et al. (1997); Bi and Poo (1998); Debanne et al. (1998). Following Refs. Pfister and Gerstner (2006); Morrison et al. (2008), we model this behavior by introducing internal variables and linked to the activity of a neuron . The variable encodes the time that has passed since the last spike of neuron , while counts the total number of spikes observed so far. At all times at which neuron spikes, both and are increased by . Between spikes decays with a time constant , such that

| (2) |

The temporal order of spikes between two neurons can be captured by introducing one more variable . When neuron spikes this variable is decreased by , while when spikes it is increased by . Therefore the variable will be increased if the neurons spike in the sequence while it is decreased if the neurons spike in the sequence . The increase/decrease is more pronounced in neurons spiking shortly after each other.

At the end of the simulation run the topology is updated depending on the variables introduced above. Depending on the specific question under consideration we use either one of two variants of the topological update rule. The first mimics the formation of neural connections in the developing brain where activity-dependent processes are considered to be a prominent mechanism in shaping topographic maps during development of cerebral connectivity Innocenti and Price (2005); Price et al. (2006). At the time of the topology update, a random is picked. If is greater or equal than the threshold , a new synapse from neuron to neuron with is created, if it is smaller than the threshold and a synapse from neuron to neuron exists, this synapse is deleted.

For the investigation of the self-organization of synaptic conductance, we use a variant update rule, in which we alter the conductance of a synapse from neuron to as . If is greater or equal than the threshold , the weight is increased, if it is smaller, is decreased by a fixed value , unless this would cause to become negative or exceed one.

After the update rule has been applied, we restart the system by assigning random membrane potentials to the neurons and setting two percent of the neurons above threshold. The procedure of simulating the dynamics and then applying the topology update rule is iterated to allow the network topology to relax to a self-organized state.

While our model aims to be as realistic as possible, there are three particulars of real brain networks that we cannot capture: the detailed organization of brain areas, the enormous number of neurons (approx. ) and the large average number of synapses connecting to every neuron (approx. for cortical neurons) Kandel et al. (2000). While it can be assumed that the detailed organization is only of secondary importance for the questions at hand, it is clear that the level of neuronal activity depends on the average number of synaptic inputs. As we will see in the following, the activity self-organizes to a certain level. Setting the number of synapses to a low/high value therefore causes the synaptic conductances to self-organize to correspondingly high/low levels. Conversely, if we fix the synaptic conductance at a low/high level, the number of synapses self-organizes to a high/low value in turn. Therefore (unrealistically) strong synapses have to be assumed in numerical simulations to keep the number of synapses in a feasible range. The impact of this assumption is discussed below.

III Results

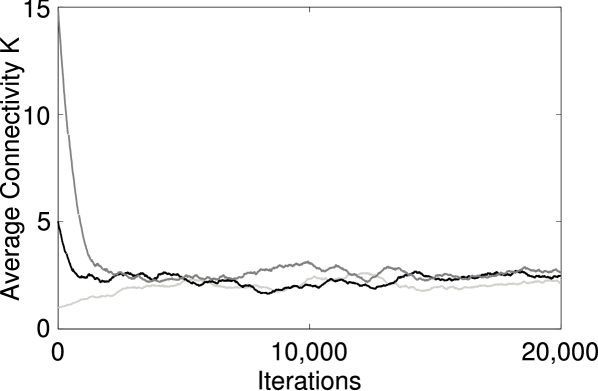

As a first test for self-organization, we monitor the connectivity of the model networks, while they evolve under the influence of the STDP update rule. For this purpose the first variant of the update rule is used. Starting with random networks with each neuron having on average synaptic connections to other neurons, the system approaches a characteristic connectivity independent of initial conditions. A representative set of timeseries is shown in Fig. 1. Additional investigations (not shown) confirm that is robust against variation of numerical constants such as .

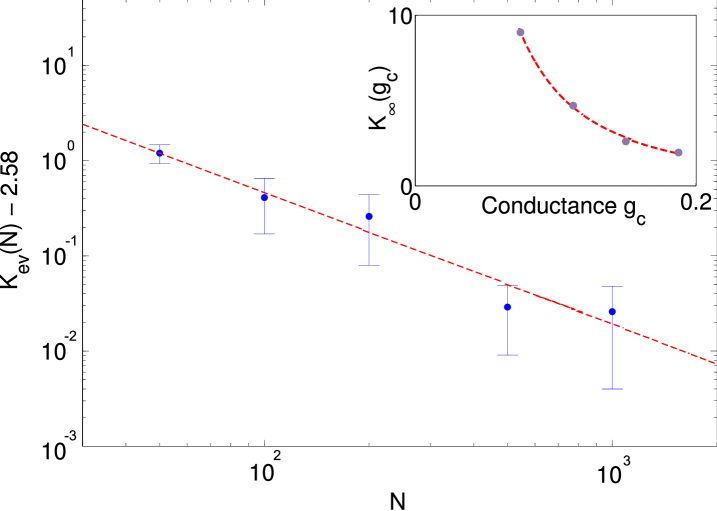

In order to investigate how our assumptions affect the self-organized level of connectivity, we simulate the evolution of networks for different synaptic strength and network sizes. We find that the value of scales with system size according to the scaling law

| (3) |

shown in Fig. 2. The best fit to the numerical observations is provided by the parameter values , and . Computing similar fits for different values of the synaptic conductance we find the scaling law

| (4) |

with , and (see Fig. 2 inset).

In real neural networks, a few tens of simultaneous excitatory post-synaptic potentials are sufficient to elevate the membrane potential from its reset value to spike threshold Kandel et al. (2000). A typical number is about inputs corresponding to a conductance of in our model. Substituting this value into Eq. (4) we obtain . While this extrapolation certainly provides only a very rough estimate it is reassuring to see that the result is in the order of magnitude of the connectivity observed for cortical neurons Braitenberg and Schütz (1998).

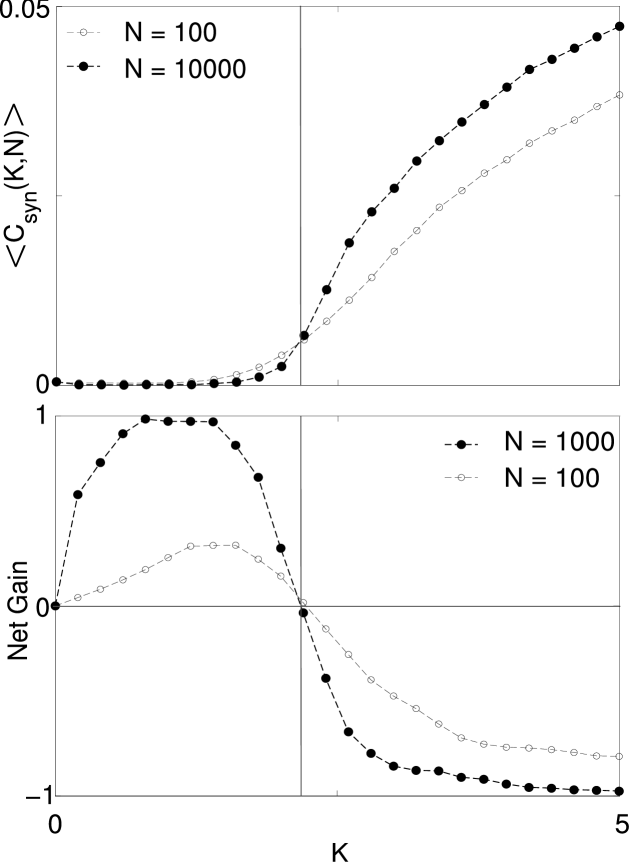

To show that the state approached by the network is critical, we first investigate the dynamics on random networks without synaptic plasticity so that the topology remains static. Previous studies Bornholdt and Rohlf (2000); Bornholdt and Röhl (2003) have shown that it is advantageous to quantify the dynamics by defining an order parameter as an average over pairs of neurons of the correlations between neurons

| (5) |

where is one if the neuron spiked at time and zero otherwise. This quantity is evaluated over a time which we here consider equal . Figure 3 shows averaged over random network topologies, for different connectivities and network sizes. This averaged order parameter, , increases at a threshold around which becomes more pronounced in larger networks indicating the existence of a continuous phase transition corresponding to the onset of synchrony in neural activity in the limit of large network size.

As a second step, we compute the net gain of links if the STDP rule were applied. Figure 3 shows that the STDP increases the connectivity of sparsely connected networks but decreases the connectivity of more densely connected networks. This constitutes an effective force toward the phase transition at .

The critical threshold at which the transition occurs in Fig. 3 corresponds approximately to the self-organized connectivity in the dynamic network. An exact match cannot be expected since the evolved networks are no longer random graphs but may exhibit long range correlations in the topology that shift the threshold to higher or lower connectivities.

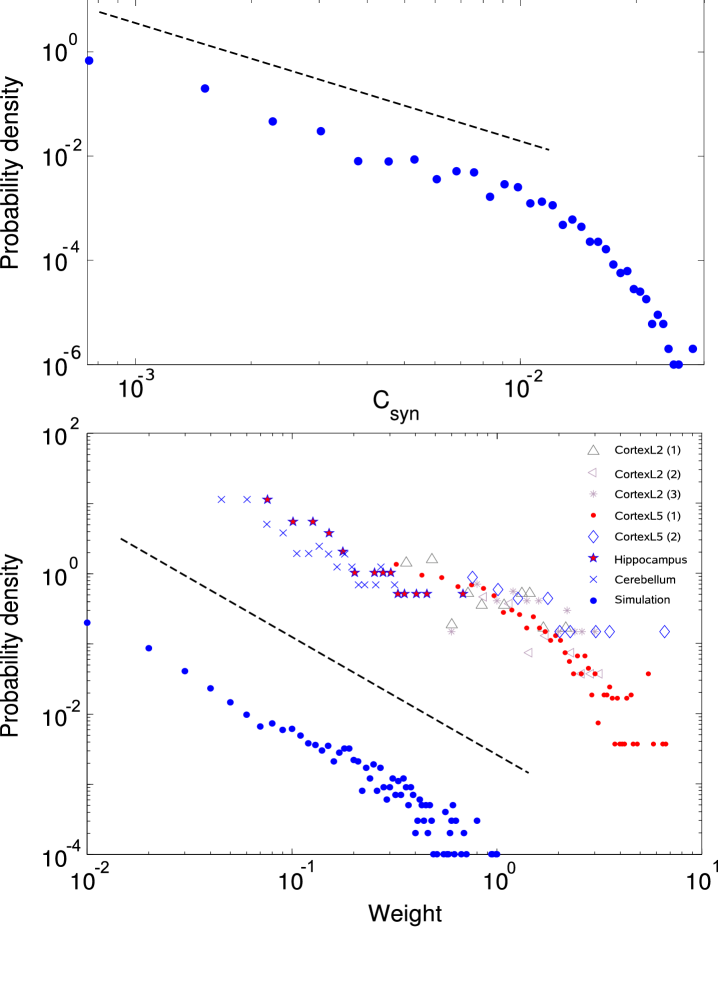

A hallmark of continuous phase transitions is the power-law distribution of microscopic variables related to the order parameter. We therefore compute the distribution of the spike-time correlation, , in the evolving networks. In the self-organized state, we find to be distributed according to a power-law (Fig. 4). While our numerical approach imposes strong cut-offs, already the observation of power-law scaling of the variable associated with the order parameter over one decade, provides additional evidence for the dynamical criticality in the evolved state. Our results are reminiscent of recent functional MRI and MEG measurements in human brains, which demonstrated a remarkably robust power-law scaling of two different synchronization measures over a broad range of frequencies and different pairs of anatomical regions Kitzbichler et al. (2009).

A current question not yet fully resolved by empirical data concerns the distribution of synaptic weights in real neural networks. To investigate this distribution in our model, we abandon the deletion and creation of synapses and instead switch to the variant STDP update-rule in which the weights of the synapses are increased or decreased. With the variant update rule, we observe that the connectivity, now defined as , approaches the same value that is found with the boolean update rule. In the critical state a large fraction of synaptic weights is zero, which is in agreement with empirical evidence Barbour et al. (2007). In our simulation the exact size of this fraction depends strongly on the number of synapses.

As shown in Fig. 4, the distribution of synaptic weights in the evolved state follows a power-law with exponent . The self-organization to an identical distribution was also observed in further simulations (not shown) using different and/or asymmetric values of for strengthening or weakening updates, as proposed in Morrison et al. (2008). Interestingly, a similar scaling behavior regarding the observed power-law for synaptic strengths in our model was found to play a role in models of network pruning Kurten and Clark (2008). The authors relate this observation to the sparseness of networks which is also an outcome of the present model.

For comparison of the self-organized distribution with empirical data, Fig. 4 also shows measurements of synaptic weights from somatic recordings of different anatomical areas in the brain Sayer et al. (1990); Mason et al. (1991); Turrigiano and Nelson (2001); Isope and Barbour (2002); Holmgren et al. (2003); Song et al. (2005); Frick et al. (2008) summarized in Barbour et al. (2007). From these recordings the two smallest values are neglected, since measurements of small weights are typically underestimated as they are likely to fall below the detection threshold Barbour et al. (2007). Comparing the combined data sets to the numerical results reveals a close resemblance. While this could be interpreted as an indication that the brain as a whole is in a self-organized critical state, such a claim is highly questionable, as, at least, the organization into different brain areas is certainly not self-organized. Considered individually, the data-sets curve slightly downwards in the double-logarithmic plot, which is indicative of an exponential distribution Pajevic and Plenz (2009). However, statistical tests Clauset et al. (2009) reveal that a power-law relationship can not be excluded at least for CortexL2 (1), CortexL2 (2), CortexL5 (2), Hippocampus and Cerebellum. The exponent providing the best fit for such a power law closely matches the value of found in our simulations.

IV Discussion

In this work we have investigated a realistic model of neural networks, capturing the interplay between the integrate-and-fire dynamics of neurons and spike-time dependent synaptic plasticity. We observed that the networks robustly evolved to a state characterized by the presence of power-laws in the distribution of synaptic conductances and the synchronization order parameter .

Our results provide strong evidence for self-organized criticality. In particular they indicate that a previously proposed mechanism, based on the interplay of dynamics and topology in adaptive networks Bornholdt and Rohlf (2000); Bornholdt and Röhl (2003), can robustly self-organize realistic models of neural networks to critical states. This suggests that this mechanism could also drive the self-organization to criticality recently observed in other models Shin and Kim (2006); Levina et al. (2007, 2009).

Apart from the higher degree of realism, our model differs from previous works in the nature of the synaptic plasticity. We find that spike-time dependent plasticity drives the network to a critical state that marks the onset of synchronous activity. By contrast, previous models based on activity-dependent update rules, found a self-organization toward a different critical state corresponding to an order-disorder phase transition Bornholdt and Rohlf (2000); Levina et al. (2007). Since both types of plasticity have been observed in nature it is promising to study their combined effect in future models. It is interesting to note that the order-disorder transition mainly depends on the average connectivity of the network, while the synchronization transition is much more sensitive to larger topological motifs. The combined effect of activity-dependent and spike-time dependent mechanisms could therefore potentially self-organize the network to both thresholds simultaneously.

One question that we have not studied in detail in this work concerns the topology that evolves in the model. However, the observation of sustained activity in relatively small networks with realistic refractory times suggests the evolution of highly non-random topologies on which activity can propagate similarly to synfire chains. Results from an earlier work showed that STDP can reorganize a globally connected neural network into a functional network, which is both small-world and scale-free with a degree distribution following a power-law with an exponent similar to the one for synaptic weights in our model Shin and Kim (2006). Similarly, Siri et al. (2007) showed that Hebbian learning can rewire the network to show small-world properties and operate at the edge of chaos. Robust self-organization of scale-free topologies was also observed in a study of spike-time dependent network modifications with node dynamics based on coupled logistic maps Jost and Kolwankar (2009).

Certainly the most important question is if the mechanism studied here is also at work in real neural networks. To this end note that our observations were based on the interplay of two well-accepted ingredients: spike-time dependent plasticity and integrate-and-fire neurons. We observed that the coupling of these ingredients yields results that are in good agreement with empirical observations. We therefore conclude that also the self-organization to criticality should take place in nature, unless factors exist that specifically disrupt it. The evolution of such factors should be disfavored as critical states are believed to be advantageous for information processing. Furthermore, the basic adaptive mechanism for self-organization, considered here, has by now been observed in several different models. It is therefore unlikely that this mechanism is disrupted by specific details rooted in the biochemistry and biophysics of the neurons. Also, the local nature of the mechanism conveys a high robustness against noise Bornholdt and Rohlf (2000), which appears in real world networks due to true stochasticity and in the form of external inputs. Finally, finite-size effects, which strongly constrain self-organized criticality in many systems, are unlikely to play a role in human cortical networks because of the large number of neurons and synapses. Therefore, the observation of self-organization to criticality in the realistic model, studied here, shows that similar self-organization in real neural networks is likely.

Perhaps the most controversial prediction of the current model is that synaptic weights should follow a power-law. Although it is often assumed that the real weight distribution is exponential, to our knowledge, no mechanistic model reproducing the observed distributions has been proposed. Moreover, at least in certain brain areas, the hypothesis that synaptic weights are distributed according to a power-law cannot be rejected on empirical grounds. While further investigations are certainly necessary, the mechanism studied here can therefore potentially provide a rationale explaining the observed distributions in these brain areas.

Acknowledgments

CM thanks Jens Timmer and Stefan Rotter for help and discussions.

References

- Beggs and Plenz (2003) J. Beggs and D. Plenz, The Journal of Neuroscience 23, 11167 (2003).

- Trusina et al. (2005) A. Trusina, M. Rosvall, and K. Sneppen, Physical Review Letters 94, 238701 (2005).

- Legenstein and Maass (2007) R. Legenstein and W. Maass, Neural Networks 20, 323 (2007).

- Haldeman and Beggs (2005) C. Haldeman and J.M. Beggs, Physical Review Letters 94, 058101 (2005).

- Kinouchi and Copelli (2006) O. Kinouchi and M. Copelli, Nature Physics 2, 348 (2006).

- Bienenstock and Lehmann (1998) E. Bienenstock and D. Lehmann, Advances in Complex Systems 1, 361 (1998).

- Freeman et al. (2000) W. Freeman, L. Rogers, M. Holmes, and D. Silbergeld, Journal of Neuroscience Methods 95, 111 (2000).

- Chialvo (2006) D. Chialvo, Nature Physics 2, 301 (2006).

- Gireesh and Plenz (2008) E. Gireesh and D. Plenz, Proceedings of the National Academy of Science USA 105, 7576 (2008).

- Barlow (1993) J. Barlow, The Electroencephalogram: Its Patterns and Origins. (Cambridge MA: MIT, 1993).

- Barrie et al. (1996) J. Barrie, W. Freeman, and M. Lenhart, Journal of Neurophysiology 76, 520 (1996).

- de Arcangelis et al. (2006) L. de Arcangelis, C. Perrone-Capano, and H.J. Herrmann, Physical Review Letters 96, 028107 (2006).

- Novikov et al. (1997) E. Novikov, A. Novikov, D. Shannahoff-Khalsa, B. Schwartz, and J. Wright, Phys. Rev. E 56, R2387 (1997).

- Kitzbichler et al. (2009) M. Kitzbichler, M. Smith, S. Christensen, and E. Bullmore, PLoS Computational Biology 5, e1000314 (2009).

- Bak et al. (1987) P. Bak, C. Tang, and K. Wiesenfeld, Physical Review Letters 59, 381 (1987).

- Christensen et al. (1998) K. Christensen, R. Donangelo, B. Koiller, and K. Sneppen, Physical Review Letters 81, 2380 (1998).

- Bornholdt and Rohlf (2000) S. Bornholdt and T. Rohlf, Physical Review Letters 84, 6114 (2000).

- Bornholdt and Röhl (2003) S. Bornholdt and T. Röhl, Physical Review E 67, 066118 (2003).

- Gross and Blasius (2008) T. Gross and B. Blasius, Journal of the Royal Society - Interface 5, 259 (2008).

- Gross and (Eds.) T. Gross and H. S. (Eds.), Adaptive Networks: Theory, Models and Applications (Springer Verlag Heidelberg, 2009).

- Shin and Kim (2006) C.-W. Shin and S. Kim, Physical Review E 74, 045101(R) (2006).

- Levina et al. (2007) A. Levina, J. Herrmann, and T. Geisel, Nature Physics 3, 857 (2007).

- Siri et al. (2007) B. Siri, M. Quoy, B. Delord, B. Cessac, and H. Berry, Journal of Physiology-Paris 101, 136 (2007).

- Braitenberg and Schütz (1991) V. Braitenberg and A. Schütz, Anatomy of the Cortex (Springer: Berlin, 1991).

- Bi and Poo (1998) G. Bi and M. Poo, The Journal of Neuroscience 18, 10464 (1998).

- Debanne et al. (1998) D. Debanne, B. Gahwiler, and S. Thompson, Journal of Physiology (London) 507, 237 (1998).

- Markram et al. (1997) H. Markram, J. Lübke, M. Frotscher, and B. Sakmann, Science 275, 213 (1997).

- Pfister and Gerstner (2006) J.-P. Pfister and W. Gerstner, Advances in Neural Information Processing Systems 18, 1083 (2006).

- Morrison et al. (2008) A. Morrison, M. Diesmann, and W. Gerstner, Biological Cybernetics 98, 459 (2008).

- Innocenti and Price (2005) G. Innocenti and D. Price, Nature Reviews Neuroscience 6, 955 (2005).

- Price et al. (2006) D. Price, H. Kennedy, C. Dehay, L. Zhou, M. Mercier, Y. Jossin, A. Goffinet, F. Tissir, D. Blakey, and Z. Molnar, European Journal of Neuroscience 23, 910 (2006).

- Kandel et al. (2000) E. Kandel, J. Schwartz, and T. Jessell, Principles of Neural Science, 4th ed. (McGraw-Hill, New York, 2000).

- Diesmann et al. (1999) M. Diesmann, M.-O. Gewaltig, and A. Aertsen, Nature 402, 529 (1999).

- Tsodyks et al. (2000) M. Tsodyks, A. Uziel, and H. Markram, The Journal of Neuroscience 20, 1 (2000).

- Gewaltig et al. (2001) M.-O. Gewaltig, M. Diesmann, and A. Aertsen, Neural networks 14, 657 (2001).

- Braitenberg and Schütz (1998) V. Braitenberg and A. Schütz, Cortex: Statistics and Geometry of Neuronal Connectivity (2nd ed.) (Springer, Berlin, 1998).

- Barbour et al. (2007) B. Barbour, N. Brunel, V. Hakim, and J.-P. Nadal, TRENDS in Neurosciences 30 (2007).

- Kurten and Clark (2008) K. E. Kurten and J. W. Clark, Phys. Rev. E 77, 046116 (2008).

- Sayer et al. (1990) R. Sayer, M. Friedlander, and S. Redman, Journal of Neuroscience 10, 826 (1990).

- Mason et al. (1991) A. Mason, A. Nicoll, and K. Stratford, Journal of Neuroscience 11, 72 (1991).

- Turrigiano and Nelson (2001) P. S. G. Turrigiano and S. Nelson, Neuron 32, 1149 (2001).

- Isope and Barbour (2002) P. Isope and B. Barbour, Journal of Neuroscience 22, 9668 (2002).

- Holmgren et al. (2003) C. Holmgren, T. Harkany, B. Svennenfors, and Y. Zilberter, The Journal of Physiology 551, 139 (2003).

- Song et al. (2005) S. Song, P. Sjöström, M. Reigl, S. Nelson, and D. Chklovskii, PLoS Biol. 3, e68 (2005).

- Frick et al. (2008) A. Frick, D. Feldmeyer, M. Helmstaedter, and B. Sakmann, Cerebral Cortex 18, 397 (2008).

- Pajevic and Plenz (2009) S. Pajevic and D. Plenz, PLoS Computational Biology 5, e1000271 (2009).

- Clauset et al. (2009) A. Clauset, C. Shalizi, and M. Newman, SIAM Review (2009).

- Levina et al. (2009) A. Levina, J.M. Herrmann, and T. Geisel, Physical Review Letters 102, 118110 (2009).

- Jost and Kolwankar (2009) J. Jost and K. M. Kolwankar, Physica A 388, 1959 (2009).