Adaptive Transmission Scheduling in Wireless Networks for Asynchronous Federated Learning

Abstract

In this paper, we study asynchronous federated learning (FL) in a wireless distributed learning network (WDLN). To allow each edge device to use its local data more efficiently via asynchronous FL, transmission scheduling in the WDLN should be carefully determined considering system uncertainties, such as time-varying channels and stochastic data arrivals, and the scarce radio resources in the WDLN. To address this, we propose a metric, called an effectivity score, which represents the amount of learning from asynchronous FL. We then formulate an Asynchronous Learning-aware transmission Scheduling (ALS) problem to maximize the effectivity score and develop three ALS algorithms, called ALSA-PI, BALSA, and BALSA-PO, to solve it. If the statistical information about the uncertainties is known, the problem can be optimally and efficiently solved by ALSA-PI. Even if it is not known, the problem can still be optimally solved by BALSA, which learns the uncertainties based on a Bayesian approach using the state information reported from devices. BALSA-PO solves the problem suboptimally, but it addresses a more restrictive WDLN in practice, where the AP can observe only limited state information compared with the information used in BALSA. We show via simulations that the models trained by our ALS algorithms achieve performance close to that of an ideal benchmark and outperform those trained by other state-of-the-art baseline scheduling algorithms in terms of model accuracy, training loss, learning speed, and robustness of learning. These results demonstrate that the adaptive scheduling strategy in our ALS algorithms is effective for asynchronous FL.

Index Terms:

Asynchronous learning, distributed learning, federated learning, scheduling, wireless network

I Introduction

Nowadays, a massive amount of data is generated by devices, such as mobile phones and wearable devices, which can be used for a wide range of machine learning (ML) applications from healthcare to autonomous driving. As the computational and storage capabilities of such distributed devices keep growing, distributed learning has become attractive to efficiently exploit the data from devices and to address privacy concerns. Given this need, federated learning (FL) has been widely studied as a potentially viable solution for distributed learning [1, 2]. FL allows a shared central model to be learned from the locally trained models of distributed devices under the coordination of a central server, while the local training data at the devices is not shared with the central server.

In FL, the central server and the devices should communicate with each other to transmit the trained models. For this, a wireless network composed of one access point (AP) and multiple devices has been widely considered [3, 4, 5, 6, 7, 8, 9, 10, 11], and the AP in the network plays the role of the central server in FL. FL operates in multiple rounds. In each round, the AP first broadcasts the current central model. In typical FL, all devices replace their local models with the received central one and train them using their local training data. Then, the locally trained model of each device is uploaded to the AP and the AP aggregates them into the central model. This enables training the central model in a distributed manner while avoiding privacy concerns because it does not require each device to upload its local training data.

However, as FL has been implemented in wireless networks, the limited radio resources of wireless networks have been raised as a critical issue since they restrict the number of devices that can upload their local models to the AP simultaneously [8, 7]. To address the issue, device scheduling procedures for FL have been proposed in [10, 5, 6, 7, 8, 9, 11], in which the AP schedules the devices to train their local models and upload them considering the limited radio resources. Then, in each round, only the scheduled devices do so and the AP aggregates the local models uploaded from the scheduled devices. On the other hand, the not-scheduled devices do not train their local models, and their local data that has arrived during the round is stored for future use. Hence, every locally trained model in each round is synchronously aggregated into the central model in the round (i.e., once a device trains its local model in a round, it must be aggregated into the central model in that round and its use in future rounds is not allowed), and such FL is called synchronous FL in the literature.

Device scheduling procedures in synchronous FL have effectively addressed the issue of the limited radio resources in wireless networks, but at the same time, they may cause inefficient use of local computation resources and the loss of local data due to too much pileup [12, 13]. These issues arise because the not-scheduled devices in each round do not train their local models. Hence, to address them, we can allow even the not-scheduled devices to train their local models and store them for future use. This enables each device to continually train its local model by using the arriving local data in an online manner, which avoids waste of local computation resources and too much pileup of the local data [12, 13]. However, at the same time, aggregating the stored locally trained models introduces a time lag between the stored local models and the current central model because the local models are aggregated asynchronously. In the literature, such FL with the time lag is called asynchronous FL. In addition, the devices that store previously trained local models are typically called stragglers, and when their models are aggregated into the central model, they may have an adverse effect on the convergence of the central model because of the time lag [13].

Recently, in the ML literature, several works on asynchronous FL have addressed the harmful effects of the stragglers, which inevitably exist due to network circumstances such as time-varying channels and scarce radio resources [13, 14, 12, 15]. They introduced various approaches that address the stragglers when updating the central model and the local models: balancing between the previous local model and the current one [15, 13], adopting dynamic learning rates [14, 13, 12], and using a regularized loss function [15, 13]. However, they mainly focused on handling stragglers once they have occurred and did not take into account the issues relevant to the implementation of FL, which are related to the occurrence of the stragglers. Hence, it is necessary to study an asynchronous FL procedure in which the key challenges of implementing asynchronous FL in a wireless network are carefully considered.

When implementing FL in the wireless network, the scarcity of radio resources is one of the key challenges. Hence, several existing works focus on reducing the communication cost of a single local model transmission of each edge device: analog model aggregation methods using the inherent characteristic of a wireless medium [4, 3], a gradient reusing method [16], and local gradient compressing methods [17, 18]. Meanwhile, other existing works in [6, 5, 8, 7, 10, 9, 11] focus on scheduling the transmission of the local models for FL, in which only scheduled edge devices participate in FL. Since typical scheduling strategies in wireless networks [19, 20] do not consider FL, various scheduling strategies for FL have been proposed for effective learning based on traditional scheduling policies [5], scheduling criteria to minimize the training loss [6, 9, 7, 8], an effective temporal scheduling pattern for FL [10], and multi-armed bandits [11]. They allow the AP to efficiently use the radio resources to accelerate FL.

However, these existing works on FL implementation in the wireless network have been studied only for synchronous FL, and do not consider the characteristics of asynchronous FL, such as the stragglers and the continual online training of the local models, at all. Nevertheless, the existing methods for reducing the communication costs can be used for asynchronous FL since in asynchronous FL, the edge devices should transmit their local models to the AP as in synchronous FL. On the other hand, the methods for scheduling the transmission of the local models may cause significantly inefficient learning if they are adopted for asynchronous FL. This is because most of them consider each individual FL round separately and do not address transmission scheduling over multiple rounds. In asynchronous FL, the rounds are highly inter-dependent since the current scheduling affects the subsequent rounds due to the use of the stored local models from the stragglers. Hence, the transmission scheduling for asynchronous FL should be carefully determined considering the stragglers over multiple rounds. In addition, in such scheduling over multiple rounds, the effectiveness of learning depends on system uncertainties, such as time-varying channels and stochastic data arrivals. In particular, the stochastic data arrivals become more important in asynchronous FL since, due to the continual online training, the data arrivals are directly related to the amount of the straggled local data. However, the existing works do not consider stochastic data arrivals at edge devices.

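Table I: Comparison of our work and the existing works on transmission scheduling in FL

| | Wireless channel | Multiple rounds | Stochastic data arrivals | Stragglers from async. FL |
| [6, 9, 7, 8, 5] | | | | |
| [10, 11] | | | | |
| Our work | | | | |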

In this paper, we study asynchronous FL considering the key challenges of implementing it in a wireless network. To the best of our knowledge, our work is the first to study asynchronous FL in a wireless network. Specifically, we propose an asynchronous FL procedure in which the characteristics of asynchronous FL, time-varying channels, and stochastic data arrivals of the edge devices are considered for transmission scheduling over multiple rounds. The comparison of our work and the existing works on transmission scheduling in FL is summarized in Table I. We then analyze the convergence of the asynchronous FL procedure. To address scheduling the transmission of the local models in the asynchronous FL procedure, we also propose a metric called an effectivity score. It represents the amount of learning from asynchronous FL considering the properties of asynchronous FL including the harmful effects on learning due to the stragglers. We formulate an asynchronous learning-aware transmission scheduling (ALS) problem to maximize the effectivity score while considering the system uncertainties (i.e., the time-varying channels and stochastic data arrivals). We then develop the following three ALS algorithms that solve the ALS problem:

• First, an ALS algorithm with the perfect statistical information about the system uncertainties (ALSA-PI) optimally and efficiently solves the problem using the state information reported from the edge devices in the asynchronous FL procedure and the statistical information.

• Second, a Bayesian ALS algorithm (BALSA) solves the problem using the state information without requiring any a priori information. Instead, it learns the system uncertainties based on a Bayesian approach. We prove that BALSA is optimal in terms of the long-term average effectivity score by its regret bound analysis.

• Third, a Bayesian ALS algorithm for a partially observable WDLN (BALSA-PO) solves the problem only using partial state information (i.e., channel conditions). It addresses a more restrictive WDLN in practice, where each edge device is allowed to report only its current channel condition to the AP.

Through experimental results, we show that ALSA-PI and the BALSAs (i.e., BALSA and BALSA-PO) achieve performance close to an ideal benchmark with no radio resource constraints and no transmission failures. We also show that they outperform other baseline scheduling algorithms in terms of training loss, test accuracy, learning speed, and robustness of learning.

The rest of this paper is organized as follows. Section II introduces a WDLN with asynchronous FL. In Section III, we formulate the ALS problem considering asynchronous FL. In Section IV, we develop ALSA-PI, BALSA, and BALSA-PO, and in Section V, we provide experimental results. Finally, we conclude in Section VI.

II Wireless Distributed Learning Network with Asynchronous FL

In this section, we introduce typical learning strategies of asynchronous FL provided in [15, 14, 13, 12]. We then propose an asynchronous FL procedure to adopt the learning strategies in a WDLN. For ease of reference, we summarize some notations in Table II.

| Notation | Description |

| Central parameters of the AP in round | |

| Local parameters of device in round | |

| Central learning weight of device in round | |

| Number of aggregated samples of device from the latest successful local update transmission to at the beginning of round | |

| Number of arrived samples of device during round | |

| Total number of samples from device used for the central updates before the beginning of round | |

| Local update of device in round | |

| Local learning rate of device in round | |

| Local gradient of device in round | |

| Channel gain of device in round | |

| Transmission scheduling indicator of device in round | |

| Successful transmission indicator of device in round | |

| System parameters related to the channel gain of device | |

| System parameter related to the sample arrival of device | |

| Prior distribution for the parameters | |

| Posterior distribution for the parameters in round | |

| Start time of stage of BALSA | |

| Length of stage of BALSA |

II-A Central and Local Parameter Updates in Asynchronous FL

Here, we introduce typical learning strategies to update the central and local parameters in asynchronous FL [15, 14, 13, 12], which address the challenges due to the stragglers. To this end, we consider one access point (AP) that plays a role of a central server in FL and edge devices. The set of edge devices is defined as . In asynchronous FL, an artificial neural network (ANN) model composed of multiple parameters is trained in a distributed manner to minimize an empirical loss function , where denotes the parameters of the ANN. Asynchronous FL proceeds over a discrete-time horizon composed of multiple rounds. The set of rounds is defined as and the index of rounds is denoted by . Then, we can formally define the problem of asynchronous FL with a given local loss function at device , , as follows:

| (1) |

where denotes the number of data samples of device , , and is an empirical local loss function defined by the parameters at the -th data sample of device . (For simple presentation, we omit the word “edge” from “edge device” in the rest of the paper.) To solve this problem, in asynchronous FL, every device trains its parameters by using its locally available data in an online manner, which makes learning on each device efficient and avoids too much pileup of the local data. Then, a subset of the devices is scheduled to transmit the trained parameters to the AP. By using the received parameters from the scheduled devices, the AP updates its parameters. We call the parameters at the AP central parameters and those at each device local parameters. The details of how to update the local and central parameters in asynchronous FL are provided in the following.

II-A1 Local Parameter Updates

We describe local parameter updates at each device in asynchronous FL. Let the central parameters of the AP in round be denoted by . Note that the central parameters are calculated by the AP in round , as described later in Section II-A2. In round , the AP first broadcasts the central parameters to all devices and each device replaces its local parameters with . Thus, become the initial parameters of local training at each device in round . Then, the device trains its local parameters using its local data samples that have arrived since its previous local parameter update. To this end, it applies a gradient-based update method using the regularized loss function defined as follows [15, 13]:

| (2) |

where the second term mitigates the deviations of the local parameters from the central ones and is the parameter for the regularization. We denote the local gradient of device calculated using the local parameters and its local data samples in round by . In asynchronous FL, a local gradient that has not been transmitted in the previous rounds is aggregated into the current local gradient. Such local gradients not transmitted in the previous rounds are called delayed local gradients. In the literature, the devices that have such delayed local gradients are called stragglers. It has been shown that they adversely affect model convergence since the parameters used to calculate the delayed local gradients are different from the current local parameters used to calculate the current local gradients [21, 15, 13].

To address this issue, when aggregating the previous local gradients and current ones, we need to balance between them. To this end, in [15, 13], the decay coefficient is introduced and used when aggregating the local gradients as

| (3) |

where is the aggregated local gradient of device in round . By using the aggregated local gradient, we define the local update of device in round as

| (4) |

where is the local learning rate of the local parameters of device in round . It is worth emphasizing that each device uploads its local update to the AP for updating the central parameters if scheduled. Moreover, a dynamic local learning rate has been widely applied to address the stragglers. The dynamic learning rate of device in round is determined as follows [22, 12, 13]:

| (5) |

where is an initial value of the local learning rate and is the number of delayed rounds since the latest successful local update transmission of device in round . Each device transmits its local update to the AP according to the transmission scheduling, and then, updates its aggregated local gradient according to the local update transmission as

| (6) |
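The local update steps described in this subsection can be sketched as follows. This is a minimal illustration, not the exact formulas of (2)–(6): it assumes a quadratic regularization penalty, a delayed gradient weighted by the decay coefficient, and a dynamic learning rate of the form `base_rate * max(1, log(delayed_rounds))`, a form used in the asynchronous FL literature. All function and argument names are hypothetical.

```python
import math

def regularized_gradient(loss_grad, w_local, w_central, reg):
    """Gradient of loss + (reg / 2) * ||w_local - w_central||^2 (assumed penalty)."""
    return [g + reg * (wl - wc) for g, wl, wc in zip(loss_grad, w_local, w_central)]

def aggregate_gradient(current_grad, delayed_grad, decay):
    """Blend the current gradient with the decayed, not-yet-transmitted one."""
    return [g + decay * d for g, d in zip(current_grad, delayed_grad)]

def dynamic_learning_rate(base_rate, delayed_rounds):
    """Assumed form: the rate grows slowly with the number of delayed rounds."""
    return base_rate * max(1.0, math.log(max(delayed_rounds, 1)))

def local_update(aggregated_grad, rate):
    """Local update uploaded to the AP if the device is scheduled."""
    return [rate * g for g in aggregated_grad]

def carry_over(aggregated_grad, transmitted_ok):
    """After a successful upload nothing is carried over; otherwise keep the aggregate."""
    return [0.0] * len(aggregated_grad) if transmitted_ok else list(aggregated_grad)

# One round at a single device with a two-parameter model.
grad = regularized_gradient([1.0, -2.0], [0.5, 0.5], [0.0, 1.0], reg=0.1)
agg = aggregate_gradient(grad, delayed_grad=[0.2, 0.2], decay=0.5)
update = local_update(agg, dynamic_learning_rate(0.01, delayed_rounds=3))
next_delayed = carry_over(agg, transmitted_ok=False)
```

Keeping the carry-over step separate from the update makes the straggler behavior explicit: a device that is not scheduled, or whose transmission fails, enters the next round with a non-zero delayed gradient.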

II-A2 Central Parameter Updates

In round , device obtains its local update, , by using its local data and delayed local gradients as in (3) and (4), respectively. After the scheduled devices transmit their local updates to the AP, the AP recalculates its central parameters by aggregating the successfully received local updates from the scheduled devices. To represent this, we define the set of the devices whose local updates are successfully received at the AP in round by . Then, the central parameters are updated as follows [13, 12]:

| (7) |

where is the central learning weight of device in round . It is worth emphasizing that is calculated by the AP in round and will be broadcast to all devices in round . The central learning weight of each device is determined according to the contribution of the device to the central parameter updates so far, which is evaluated according to the number of the samples used for the central parameter updates from the device [3, 4, 5, 6, 7, 8, 9, 11, 10, 13, 14, 12, 15]. We define the total number of samples from device used for the central updates before the beginning of round as , the number of aggregated samples of device from the latest successful local update transmission to the beginning of round as , and the number of samples of device that have arrived during round as . Then, the central learning weight of device in round is determined as

| (8) |
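As a rough illustration of the central update, the sketch below applies weighted local updates from the successfully received devices to the central parameters. The sample-proportional weight is only an assumed stand-in for the central learning weight in (8), and the descent-style subtraction is an assumption about the sign convention in (7). The names are hypothetical.

```python
def central_learning_weights(samples_per_device):
    """Assumed form: weight proportional to each received device's sample count."""
    total = sum(samples_per_device.values())
    if total == 0:
        return {u: 0.0 for u in samples_per_device}
    return {u: n / total for u, n in samples_per_device.items()}

def central_update(w_central, local_updates, weights):
    """Subtract the weighted local updates of the successfully received devices."""
    w_new = list(w_central)
    for u, update in local_updates.items():
        for i, delta in enumerate(update):
            w_new[i] -= weights[u] * delta
    return w_new

# Two devices were received successfully in this round.
weights = central_learning_weights({"a": 30, "b": 10})
w_next = central_update([1.0, 1.0], {"a": [0.4, 0.0], "b": [0.0, 0.8]}, weights)
```

Only devices present in `local_updates` influence the result, which mirrors the restriction of the sum to the set of successfully received local updates.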

II-B Asynchronous FL Procedure in WDLN

We consider a WDLN consisting of one AP and devices that cooperatively operate FL over the discrete-time horizon composed of multiple rounds that have an identical time duration. In the WDLN, the local data stochastically and continually arrives at each device and the device trains its local parameters in each round by using the arrived data. Due to the scarce radio resources of the WDLN, it is impractical for all devices to transmit their local parameters to the AP simultaneously. Hence, FL in the WDLN becomes asynchronous FL and the devices that will transmit their local parameters in each round should be carefully scheduled by the AP for training the central parameters effectively.

We now propose an asynchronous FL procedure for the WDLN in which the central and local parameter update strategies introduced in the previous subsection are adopted. In the procedure, the characteristics of the WDLN, such as time-varying channel conditions and system bandwidth, are considered as well. This allows us to implement asynchronous FL in the WDLN while addressing the challenges due to the scarcity of radio resources in the WDLN. We denote the channel gain of device in round by . We assume that the channel gain of device in each round follows a parameterized distribution and denote the corresponding system parameters by . For example, for Rician fading channels, represents the parameters of the Rician distribution, and . The vector of the channel gain in round is defined by .

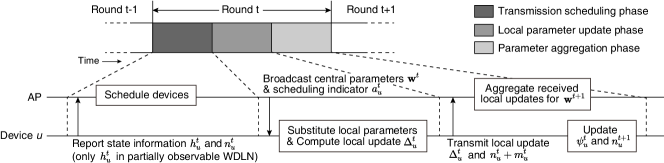

In the procedure, each round is composed of three phases: transmission scheduling phase, local parameter update phase, and parameter aggregation phase. In the transmission scheduling phase, the AP observes the state information of the devices which can be used to determine the transmission scheduling. Specifically, we define the state information in round as , where is the vector of the number of aggregated samples of each device from its latest successful local update transmission to the beginning of round . It is worth noting that in the following sections, we consider a partially observable WDLN as well, in which the AP can observe the channel gain only, to address more restrictive environments in practice. Then, the AP determines the transmission scheduling for asynchronous FL based on the state information. In the local parameter update phase, the AP broadcasts its central parameters to all devices. We do not consider the transmission failure for at each device as in the related works [9, 7, 8, 10], i.e., all devices receive successfully. This is reasonable because the probability of the transmission failure for is small in practice since the AP can reliably broadcast the central parameters by using much larger transmission power than that of the devices and robust transmission methods considering the worst-case device. (It is worth noting that this assumption is only to avoid unnecessarily complicated modeling. Besides, our proposed method can be easily used even without this assumption by allowing each device to maintain its previous local parameters when its reception of the central parameters fails.) Each device replaces its local parameters with the received central parameters (i.e., ). Then, it trains the parameters using its local data samples which have arrived since the local parameter update phase in the previous round and calculates the local update in (4) as described in Section II-A1. Finally, in the parameter aggregation phase, each scheduled device transmits its local update to the AP. Then, the AP updates the central parameters by averaging them as in (7). In the following, we describe the asynchronous FL procedure in the WDLN in more detail.

First, the AP schedules the devices to transmit their local updates based on the state information to effectively utilize the limited radio resources in each round. We define the maximum number of devices that can be scheduled in each round as . It is worth noting that is restricted by the bandwidth of the WDLN and typically much smaller than the number of devices (i.e., ). We define a transmission scheduling indicator of device in round as , where 1 indicates that device is scheduled to transmit its local update in round and 0 indicates that it is not. The vector of the transmission scheduling indicators in round is defined as . In round , the AP schedules the devices to transmit their local updates satisfying the following constraint:

| (9) |

We then define the successful transmission indicator of the local update of device in round as a Bernoulli random variable , where 1 represents a successful transmission and 0 represents a transmission failure. Since the probability of the successful transmission of device in round is determined according to the channel gain of device , we can model it by using the approximation of the packet error rate (PER) with the given signal-to-interference-plus-noise ratio (SINR) [23, 24]. Moreover, we can use the PER provided in [25, 26] to model it considering HARQ or ARQ retransmissions. For example, the PER of an uncoded packet can be given by , where is the SINR with the channel gain , is the bit error rate for the given SINR, and is the packet length in bits. We refer the readers to [24] for more examples of the PER approximations with coding. The vector of the successful transmission indicators in round is defined as .
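The uncoded-packet example can be made concrete with the standard relation that a packet is received correctly only if all of its bits are, i.e., PER = 1 − (1 − BER)^L for a packet of L bits under independent bit errors. The sketch below is one possible instantiation under stated assumptions: BPSK over an AWGN channel for the bit error rate, a power-domain channel gain, and no interference. The function names and the transmit and noise power arguments are hypothetical.

```python
import math

def bpsk_bit_error_rate(sinr):
    """BER of uncoded BPSK over AWGN: Q(sqrt(2 * sinr)) = 0.5 * erfc(sqrt(sinr))."""
    return 0.5 * math.erfc(math.sqrt(sinr))

def uncoded_packet_error_rate(sinr, packet_bits, ber=bpsk_bit_error_rate):
    """A packet fails if any of its bits is in error (independent bit errors assumed)."""
    return 1.0 - (1.0 - ber(sinr)) ** packet_bits

def success_probability(channel_gain, tx_power, noise_power, packet_bits):
    """Success probability of one local update transmission (linear-scale inputs)."""
    sinr = tx_power * channel_gain / noise_power  # interference ignored in this sketch
    return 1.0 - uncoded_packet_error_rate(sinr, packet_bits)

# A better channel gain yields a higher success probability for the same packet.
p_weak = success_probability(0.5, tx_power=1.0, noise_power=0.1, packet_bits=1000)
p_strong = success_probability(1.0, tx_power=1.0, noise_power=0.1, packet_bits=1000)
```

Passing the bit error rate as a function keeps the packet-level relation independent of the modulation, so a different BER model can be swapped in without touching the rest.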

We assume the number of the samples that have arrived at device during round , , is an independent and identically distributed (i.i.d.) Poisson random variable with a system parameter . (It is worth noting that the assumption of Poisson data arrivals is only for simple implementation of the Bayesian approach in our proposed method. We can easily generalize our proposed method to any other i.i.d. data arrivals by using sampling algorithms such as Gibbs sampling.) At the end of round (i.e., at the beginning of round ), the number of aggregated samples of device from the latest successful local update transmission to the beginning of round , (for brevity, to denote , we simply write “the number of aggregated samples of device at the beginning of round ” in the rest of this paper), and the total number of samples from device used for the central updates before the beginning of round , , should be calculated depending on the local update transmission as follows:

| (10) |

and

| (11) |
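The bookkeeping of the two sample counters can be sketched as below. This reflects one reading of the description above and is an assumption rather than a transcription of (10) and (11): after a successful transmission the aggregated samples are credited to the total used for central updates and only the samples that arrived during the round remain pending, while otherwise the arrivals pile up on the pending count. The function and argument names are hypothetical.

```python
def advance_sample_counts(aggregated, used_total, arrived, scheduled, success):
    """Return (aggregated_next, used_total_next) for one device after one round."""
    if scheduled and success:
        # The aggregated samples have now contributed to a central update.
        return arrived, used_total + aggregated
    # Not scheduled or transmission failed: samples stay pending and accumulate.
    return aggregated + arrived, used_total

# A scheduled device whose transmission succeeds, then one whose transmission fails.
after_success = advance_sample_counts(12, 40, arrived=5, scheduled=True, success=True)
after_failure = advance_sample_counts(12, 40, arrived=5, scheduled=True, success=False)
```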

With the scheduling and successful transmission indicators, ’s and ’s, we can rewrite the equation for the central parameter update at the AP in (7) as follows:

| (12) |

We summarize the asynchronous FL procedure in the WDLN in Algorithm 1 and illustrate it in Fig. 1.

II-C Convergence Analysis of Asynchronous FL in WDLN

We analyze the convergence of the asynchronous FL procedure proposed in the previous subsection. We first introduce some definitions and an assumption on the objective function of asynchronous FL in (1) for the analysis.

Definition 1

(-smoothness) The function is -smooth if it has a Lipschitz continuous gradient with constant (i.e., ),

Definition 2

(-strong convexity) The function is -strongly convex with if ,

Definition 3

Assumption 1

The objective function of asynchronous FL, , in (1) is bounded from below, and there exists such that holds for all .

It is worth noting that this assumption is a typical one used in the literature [27, 15, 13]. With this assumption, we can show the convergence of the asynchronous FL procedure in the WDLN in the following theorem.

Theorem 1

Suppose that the objective function of asynchronous FL in the WDLN, , in (1) is -smooth and -strongly convex, and the local gradients ’s are -dissimilar. Then, if Assumption 1 holds, after rounds, the asynchronous FL procedure in the WDLN satisfies

| (13) | |||

In addition, if there exists any that satisfies and , the following holds:

| (14) |

where and .

Proof:

See Appendix A. ∎

Compared with the convergence analysis of asynchronous FL in [13], Theorem 1 takes the asynchronous FL procedure in the WDLN into consideration. As a result, the theorem implies that faster convergence can be expected with a larger expected number of successful transmissions in the WDLN, . Besides, it theoretically shows the convergence of the asynchronous FL procedure in the WDLN as if the expected number of successful transmissions is non-zero since . However, various aspects of learning, such as the learning speed and the robustness to stragglers, are not directly shown in this theorem, and they may differ according to the transmission scheduling strategies used in the asynchronous FL procedure, as will be shown in Section V.
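For reference, the smoothness and strong-convexity conditions named in Definitions 1 and 2 are commonly written as below. This is the textbook form for a differentiable function $f$ with generic arguments $x$ and $y$; the symbols $f$, $x$, $y$, $L$, and $\mu$ are assumed here for illustration and may differ from the notation used elsewhere in the paper.

```latex
% L-smoothness: Lipschitz continuous gradient with constant L > 0
\|\nabla f(x) - \nabla f(y)\| \le L \,\|x - y\| \quad \forall x, y,
% which implies the quadratic upper bound
f(y) \le f(x) + \langle \nabla f(x),\, y - x \rangle + \frac{L}{2}\,\|y - x\|^{2} \quad \forall x, y.

% mu-strong convexity with mu > 0: quadratic lower bound
f(y) \ge f(x) + \langle \nabla f(x),\, y - x \rangle + \frac{\mu}{2}\,\|y - x\|^{2} \quad \forall x, y.
```

Together, the two bounds sandwich the function between two quadratics around any point, and this sandwich is the usual basis for linear-rate convergence arguments.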

III Problem Formulation

We now formulate an asynchronous learning-aware transmission scheduling (ALS) problem to maximize the learning performance of FL. In the literature, it is empirically shown that the learning performance of FL mainly depends on the local update of each device, which is determined by the data samples that have arrived at the devices [2, 15]. However, it has not yet been clearly investigated which characteristics of a local update have a larger impact than others on learning the optimal parameters [28, 2]. Moreover, it is hard for the AP to exploit information relevant to the local updates because the AP cannot obtain the local updates before the devices transmit them to the AP and cannot access the data samples of the devices due to the limited radio resources and privacy issues. For these reasons, instead of directly finding the impactful local updates, the existing works [9, 7, 8] schedule the transmissions according to the factors in the bound of the convergence rate of FL, which implicitly represent the impact of the local updates in terms of the convergence. However, as pointed out in Section I, the existing works on transmission scheduling for FL do not consider asynchronous FL over multiple rounds. As a result, their scheduling criterion based on the convergence rate may become inefficient in asynchronous FL since, in the long term, the deterioration of the central model’s convergence due to the absence of some devices in model aggregation can be compensated for later thanks to the nature of asynchronous FL.

Contrary to the existing works, we focus on maximizing the average amount of learning from the local data samples considering asynchronous FL. The number of data samples used in learning can implicitly represent the amount of learning. Roughly speaking, a larger number of data samples brings the empirical loss in (1) closer to the true loss in the real world. It is also empirically accepted in the literature that a larger number of data samples generally results in better performance [29, 30]. In this context, in FL, the number of the local samples used to calculate the local gradients represents the amount of learning since the central parameters are updated by aggregating the local gradients. However, in asynchronous FL, the local gradients delayed due to scheduling or transmission failure are aggregated into the current one, which may cause an adverse effect on learning. Hence, here, we maximize the total number of samples used to calculate the local gradients aggregated in the central updates while treating their delay as a cost to minimize its adverse effect on learning.

We define a state in round by a tuple of the channel gain and the numbers of the aggregated samples , and the state space . The AP can observe the state information in the transmission scheduling phase of each round. It is worth noting that in the following section, we will also consider the more restrictive environments in which the AP can observe only a partial state in round (i.e., the channel gain ). We denote system uncertainties in the WDLN, such as the successful local update transmissions and data sample arrivals, by random disturbances. The vector of the random disturbances in round is defined as , where is the vector of the successful transmission indicators in round and is the vector of the number of samples that have arrived at each device during round . Then, an action in round , , is chosen from the action space, defined by . In each round , the AP determines the action based on the observed state while considering the random disturbances which are unknown to the AP. To evaluate the effectiveness of the transmission on learning in each round, we define a metric, called an effectivity score of asynchronous FL, as

| (15) |

where is the set of the devices whose local update transmission was successful in round and is a delay cost coefficient to consider the adverse effect of the stragglers. Note that this metric is correlated over time since depends on the previous scheduling and the transmission result. The first term of the effectivity score represents the total number of the samples that will be effectively used in the central update while the second term represents the total number of the samples that may cause the adverse effects in learning due to the delayed local updates. We now formulate the ALS problem maximizing the total expected effectivity score of asynchronous FL as

| (16) |

where is a policy that maps a state to an action. This problem maximizes the number of samples used in asynchronous FL while trying to minimize the adverse effect of the stragglers due to the delay.
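The two terms of the effectivity score described above can be illustrated with a minimal sketch. It assumes the score is the number of samples delivered in successful transmissions minus the delay cost coefficient times the number of samples left at the remaining devices; this form is read off the prose description of (15), and the function and argument names are hypothetical.

```python
def effectivity_score(num_samples, succeeded, gamma):
    """Effectivity score of one round under the assumed two-term form.

    num_samples[u]: aggregated samples held by device u in this round
    succeeded[u]:   True if device u's local update was delivered this round
    gamma:          delay cost coefficient applied to samples that stay delayed
    """
    # Samples that reach the AP and are used in the central update.
    used = sum(n for n, ok in zip(num_samples, succeeded) if ok)
    # Samples that remain at the devices and will arrive late.
    delayed = sum(n for n, ok in zip(num_samples, succeeded) if not ok)
    return used - gamma * delayed


# Three devices; only the first and third deliver their updates.
score = effectivity_score([40, 25, 10], [True, False, True], gamma=0.01)
```

With a small delay cost coefficient, the score is dominated by the delivered samples, and the penalty only matters when many samples stay undelivered for a long time.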

Remark 1

We highlight that the proposed effectivity score for asynchronous FL shares the same principle as the metrics in conventional works [9, 8]. Roughly speaking, those metrics try to maximize the number of data samples used in learning the transmitted local updates, since doing so maximizes the convergence rate of the central model according to the convergence analysis of synchronous FL. Similarly, the proposed effectivity score tries to maximize the number of data samples used in learning the successfully transmitted local updates, so as to maximize the amount of learning conveyed to the AP. At the same time, however, it tries to minimize the number of data samples used in learning the delayed local updates to limit their adverse effects, whereas the conventional metrics do not introduce any penalty for delayed local updates.

We define the system parameters related to the system uncertainties as

| (17) |

where is a system parameter space. These system parameters express the system uncertainties, such as the channel statistics and the average number of arrived samples (i.e., arrival rate) of each device in each round, in the WDLN, and thus, we can solve the problem if they are known. We define the true system parameters for the WDLN as , where and are the true system parameters for the channel gains and the arrival rates, respectively. Formally, if the system parameters are perfectly known in advance as a priori information, the state transition probabilities, , can be derived by using the probability of successful transmission, the distribution of the channel gains, and the Poisson distribution with the a priori information. Then, based on the transition probabilities, the problem in (16) can be optimally solved by standard dynamic programming (DP) methods [31]. However, this is impractical since it is hard to obtain such a priori information in advance. Besides, even if such a priori information is perfectly known, the computational complexity of the standard DP methods is too high as will be shown in Section IV-E. Hence, we need a learning approach such as reinforcement learning (RL) that learns the information while proceeding the asynchronous FL, and develop algorithms based on it in the following section.

IV Asynchronous Learning-Aware Transmission Scheduling

In this section, we develop three ALS algorithms, each of which requires different information to solve the ALS problem: an ALS algorithm with perfect a priori information (ALSA-PI), a Bayesian ALS algorithm (BALSA), and a Bayesian ALS algorithm with partially observable state information (BALSA-PO). We summarize the information required by ALSA-PI, BALSA, and BALSA-PO in Table III. The table shows that the required information is gradually reduced from ALSA-PI to BALSA-PO.

| ALSA-PI | BALSA | BALSA-PO | |

| a priori information on true system parameters | - | - | |

| State information reported from the devices |

IV-A Parameterized Markov Decision Process

Before developing algorithms, we first define a parameterized Markov decision process (MDP) based on the ALS problem in the previous section. Since the system uncertainties in the WDLN, such as the channel statistics and the arrival rates of the samples, depend on the system parameters in (17), the MDP is parameterized by system parameters . Specifically, it is defined as , where and are the state space and action space of the ALS problem, respectively, is the reward function, and is the transition probability such that . We define the reward function as the effectivity score in (15). For theoretical results, we assume that the state space is finite and the reward function is bounded as . (Footnote 5: We can easily implement such a system by quantizing the channel gain and truncating and with a large constant. Then, the state space becomes finite and we can use the reward function as the normalized version of with the maximum expected reward that can be derived from the constant. In practice, such a system is more realistic to implement, since these variables are typically finite in a real system.) The average reward per round of a stationary policy is defined as

| (18) |

The optimal average reward per round satisfies the Bellman equation:

| (19) |

where is the value function at state . We can define the corresponding optimal policy, , as the maximizer of the above optimization. Then, the policy becomes the optimal solution to the problem in (16).

IV-B ALSA-PI: Optimal ALS with Perfect a Priori Information

We now develop ALSA-PI to solve the ALS problem when is perfectly known at the AP in advance. Typically, the true system parameters are unknown, but we introduce ALSA-PI here since it will be used to develop BALSA in Section IV-C. Besides, in some cases, might be precisely estimated from past experience. Since the true system parameters are known, the problem in (16) can be solved by finding the optimal policy for . However, in general, computing the optimal policy for given parameters requires a computational complexity that increases exponentially with the number of devices, which is often called the curse of dimensionality. Hence, even if the true system parameters are perfectly known in advance, it is hard to compute the corresponding optimal policy . For the ALS problem, however, a greedy policy, which myopically chooses the action that maximizes the expected reward in the current round, is optimal, as stated in the following theorem.

Theorem 2

For the parameterized MDP with given finite parameters, the greedy policy in (23) is optimal.

Proof:

See Appendix B. ∎

With this theorem, we can easily develop ALSA-PI by adopting the greedy policy with the known system parameters thanks to the structure of the reward. This also implies that we can significantly reduce the computational complexity to solve the ALS problem with the known parameters compared with the DP methods that are typical ones to solve MDPs. The computational complexity will be analyzed in Section IV-E.

In round , the greedy policy for given system parameters chooses the action by solving the following optimization problem:

| (20) |

where represents the expectation over the probability distribution of the given system parameters . With the scheduling and successful transmission indicators, ’s and ’s, we rearrange the objective function in (15) as

| (21) |

Then, we can reformulate the problem as

| (22) |

since the last term in (21) depends on neither the transmission scheduling indicator nor the uncertainty in the successful transmission . In addition, the conditional expectation in the problem depends only on the system parameters for the arrival rates of the data samples, , because is solely determined by the current channel gain. To solve the problem, the AP estimates the expected number of samples of each device , , by using its channel gain and the system parameter . Let the devices be sorted in descending order of their expected number of samples and indexed by . Then, the greedy policy in round is easily obtained as

| (23) |

In ALSA-PI, the AP schedules the devices according to the greedy policy with the true system parameter (i.e., in (23) with ) in each round.
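The sorting step behind the greedy policy can be sketched as follows. The sketch takes the per-device expected numbers of samples as given, since how they are computed from the channel gain and the arrival-rate parameter follows (22) and is not reproduced here; the function and argument names are hypothetical.

```python
def greedy_schedule(expected_samples, max_scheduled):
    """Return a 0/1 scheduling vector selecting the top devices.

    expected_samples[u]: expected number of samples delivered if device u
                         is scheduled (assumed to be precomputed by the AP)
    max_scheduled:       maximum number of devices schedulable in a round
    """
    # Sort device indices in descending order of expected samples.
    order = sorted(range(len(expected_samples)),
                   key=lambda u: expected_samples[u], reverse=True)
    chosen = set(order[:max_scheduled])
    return [1 if u in chosen else 0 for u in range(len(expected_samples))]


# Four devices, at most two scheduled per round.
action = greedy_schedule([3.2, 7.5, 0.4, 5.1], max_scheduled=2)
```

Because the selection only needs a sort, its per-round cost is that of a typical sorting algorithm over the devices, which is what makes the greedy policy attractive compared with DP-based methods.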

Remark 2

For the time-varying maximum number of devices that can be scheduled in each round, the greedy policy in (23) can be easily extended by substituting into , where denotes the maximum number in round . This implies that the ALS algorithms (i.e., ALSA-PI, BALSA, and BALSA-PO) can be easily extended for it as well since they are implemented by using the greedy policy and in the methods, is related to the greedy policy only.

IV-C BALSA: Optimal Bayesian ALS without a Priori Information

We now develop BALSA, which solves the parameterized MDP without requiring any a priori information. To this end, it adopts a Bayesian approach to learn the unknown system parameters . We define the policy determined by BALSA as each of which chooses an action according to the history of states, actions, and rewards, . To apply the Bayesian approach, we assume that the system parameters that belong to are independent of each other. We denote a prior distribution for the system parameters by . For the prior distribution, a non-informative prior can typically be used if there is no prior information about the system parameters. On the other hand, if there is any prior information, then the prior can be defined by taking that information into account. For example, if we know that a parameter belongs to a certain interval, then the prior distribution can be defined as a truncated distribution whose probability measure outside of the interval is zero. We then define the Bayesian regret of BALSA up to time as

| (24) |

where the expectation in the above equation is over the prior distribution and the randomness in state transitions. This Bayesian regret has been widely used in the literature of Bayesian RL as a metric to quantify the performance of a learning algorithm [32, 33, 34].

In BALSA, the system uncertainties are estimated by a Bayesian approach. Formally, in the Bayesian approach, the posterior distribution of in round , which is denoted by , is updated by using the observed information about according to the Bayes’ rule. Then, the posterior distribution is used to estimate or sample the system parameters for the algorithm. We will describe how the posterior distribution is updated in detail later. We define the number of visits to any state-action pair before time as

| (25) |

where denotes the cardinality of the set.

BALSA operates in stages each of which is composed of multiple rounds. We denote the start time of stage of BALSA by and the length of stage by . With the convention, we set . Stage ends and the next stage starts if or for some . This balances the trade-off between exploration and exploitation in BALSA. Thus, the start time of stage is given by

| (26) |

and . This stopping criterion allows us to bound the number of stages over rounds. At the beginning of stage , system parameters are sampled from the posterior distribution . Then, the action is chosen by the optimal policy corresponding to the sampled system parameter , , until the stage ends. It is worth noting that this posterior sampling procedure, in which the system parameters sampled from the posterior distribution are used for choosing actions, has been widely applied to address the exploration-exploitation dilemma in RL [32, 33, 34, 35]. Since the greedy policy, , is the optimal policy for the ALS problem as shown in the previous subsection, we can easily implement BALSA by using it. Besides, this relieves the AP of estimating the posterior distribution of the parameters for the channel gains, , since the greedy policy does not use them.
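The stage structure can be sketched as below. The two stopping conditions are an assumption: they are taken to be the usual ones in posterior-sampling methods with dynamic stages, namely that the current stage has outlasted the previous one or that the visit count of some state-action pair has more than doubled since the stage began. All names are hypothetical.

```python
def stage_should_end(t, stage_start, prev_stage_len, visits_now, visits_at_start):
    """Check the (assumed) two-part stopping rule for the current stage.

    t:               current round
    stage_start:     first round of the current stage
    prev_stage_len:  length of the previous stage
    visits_now:      dict mapping (state, action) to visit counts before round t
    visits_at_start: the same counts frozen at the start of the stage
    """
    # Condition 1: the stage has become longer than the previous one.
    if t > stage_start + prev_stage_len:
        return True
    # Condition 2: some state-action pair has more than doubled its visits.
    return any(count > 2 * visits_at_start.get(pair, 0)
               for pair, count in visits_now.items())


start_counts = {("s0", "a1"): 3}
keep_going = stage_should_end(7, 5, 3, {("s0", "a1"): 4}, start_counts)
doubled = stage_should_end(7, 5, 3, {("s0", "a1"): 7}, start_counts)
too_long = stage_should_end(9, 5, 3, {("s0", "a1"): 4}, start_counts)
```

Under this rule, stage lengths can grow by at most one round per stage, while the doubling condition forces a fresh posterior sample once substantially more data about some state-action pair has been collected.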

Here, we describe BALSA in more detail. In round , the AP observes the states . Then, the AP can obtain the numbers of arrived samples of all devices during round , ’s, as follows: for each device whose local model was transmitted to the AP in round (i.e., ), it can be known because is transmitted to the AP in round for the local update, and for the other devices (i.e., ), it can be obtained by subtracting from . Then, the AP updates the posterior distribution of , , according to Bayes’ rule. We denote the posterior distribution for a system parameter that belongs to in round by . Then, the posterior distribution of is updated by using ’s. For example, if the non-informative Jeffreys prior for the Poisson distribution is used for the system parameter , then its posterior distribution in round is derived as follows [36]:

| (27) |

where . In this paper, we implement BALSA based on the non-informative prior. In each stage of the algorithm, the AP samples the system parameters by using the posterior distribution in (27) and schedules the local update transmission according to the greedy policy in (23) using the sampled system parameters . We summarize BALSA in Algorithm 2.
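The posterior update and sampling step for one device's arrival rate can be sketched with the standard library only. The sketch assumes Poisson arrivals with the Jeffreys prior (density proportional to the rate raised to the power -1/2), for which the posterior after observing a total of S arrivals over t rounds is a Gamma distribution with shape S + 1/2 and rate t. The arrival-recovery rule is one reading of the description above, and all names are hypothetical.

```python
import random


def recover_arrivals(n_prev, n_next, delivered):
    """Arrivals per device during a round, from consecutive sample counts.

    If a device delivered its update, its counter restarted, so the next
    count equals the arrivals; otherwise the arrivals are the difference.
    """
    return [nn if ok else nn - np_
            for np_, nn, ok in zip(n_prev, n_next, delivered)]


class PoissonRatePosterior:
    """Gamma posterior of a Poisson rate under the Jeffreys prior."""

    def __init__(self):
        self.total_arrivals = 0
        self.rounds = 0

    def update(self, arrivals, rounds=1):
        self.total_arrivals += arrivals
        self.rounds += rounds

    def mean(self):
        if self.rounds == 0:
            raise ValueError("posterior is improper before any observation")
        return (self.total_arrivals + 0.5) / self.rounds

    def sample(self, rng):
        if self.rounds == 0:
            raise ValueError("posterior is improper before any observation")
        # random.gammavariate takes (shape, scale); scale = 1 / rate.
        return rng.gammavariate(self.total_arrivals + 0.5, 1.0 / self.rounds)


arrivals = recover_arrivals([4, 2], [3, 5], [True, False])
posterior = PoissonRatePosterior()
for a in (3, 1, 2):
    posterior.update(a)
sampled_rate = posterior.sample(random.Random(0))
```

The sampled rate would then be plugged into the greedy policy for the whole stage, which is the posterior sampling step described in the text.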

We can prove the regret bound of BALSA as the following theorem.

Theorem 3

Suppose that the maximum value function over the state space is bounded, i.e., for all . Then, the Bayes regret of BALSA satisfies

| (28) |

where and denote the numbers of states and actions, i.e., and , respectively.

Proof:

See Appendix C. ∎

The regret bound in Theorem 3 is sublinear in . Thus, in theory, it is guaranteed that the average reward of BALSA per round converges to that of the optimal policy (i.e., ), which implies the optimality of BALSA in terms of the long-term average reward per round.

IV-D BALSA-PO: Bayesian ALS for Partially Observable State Information

In the WDLN, the state, , can be reported to the AP from the devices. In typical wireless networks, reporting ’s to the AP needs data transmissions of the devices, which require exchanging control messages between the AP and the devices individually. Besides, it is expected that the number of devices participating in FL is typically much larger than the capacity of wireless networks that represents the number of devices that can transmit the local updates simultaneously [2]. Hence, it might be impractical (at least inefficient) that all individual devices report their numbers of the aggregated samples to the AP in each round because of the excessive exchanges of the control messages between the AP and the individual devices for reporting . On the other hand, the channel gains may not require such data transmissions in an uplink case since the AP can estimate the channel gains of the devices by using the pre-defined reference signals transmitted from the devices [37]. In this context, in this section, we consider a WDLN in which in each round, the AP observes the channel gain only and the numbers of the aggregated samples are not reported to the AP. We can model this as a partially observable MDP (POMDP) based on the description in Section III, but the POMDP is often hard to solve because of the intractable computation. In this section, we develop an algorithm to solve the ALS problem in the partially observable WDLN by slightly modifying BALSA, which is called BALSA-PO.

In the fully observable WDLN, the AP observes the numbers of the aggregated samples, ’s, from all devices in each round . In BALSA, ’s are used to choose the action according to the greedy policy in (23) and to obtain the numbers of the arrived samples of all devices during round , ’s, for updating the posterior distribution of . Hence, in the partially observable WDLN, where ’s are not observable, the AP cannot choose the action according to the greedy policy as well as obtain ’s. To address these issues, first, in BALSA-PO, the AP approximates by using the sampled system parameter of as , where is the number of the rounds from the latest successful local update transmission to the current round (i.e., ) and is the sampled system parameter of in stage . Then, the AP can choose the action according to the greedy policy by using the approximated ’s, i.e., . However, the chosen action will be meaningful only if the posterior distribution keeps updated correctly since ’s are approximated based on the sampled system parameters. In the partially observable WDLN, it is a challenging issue updating the posterior distribution correctly since the AP cannot obtain ’s which are required to update the posterior distribution. However, fortunately, even in the partially observable WDLN, the AP can still observe the information about the number of samples in every successful local update transmission since the information is included in the local update transmission (line 10 of Algorithm 1). Note that denotes the sum of ’s over the rounds from the latest successful local update transmission to the current round. This sum of ’s in the partially observable WDLN is less informative than all individual values of ’s. Nevertheless, it is informative enough to update the posterior distribution because the update of the posterior distribution of requires only the sum of ’s as in (27). 
Hence, in the partially observable WDLN, the AP can update the posterior distribution of when device successfully transmits its local update. Then, BALSA-PO can be implemented by substituting the state in BALSA, , to , where and changing the posterior distribution update procedure from BALSA in line 9 of Algorithm 2 as follows: “Update as in (27) using for device ”.
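The two changes that BALSA-PO makes to BALSA can be sketched as follows: the unobserved sample count is replaced by the sampled arrival rate multiplied by the number of rounds since the last successful transmission, and the posterior statistics are updated only when a device's update is delivered, using the reported total. Keeping the posterior as a pair of running sums is an illustrative choice, and all names are hypothetical.

```python
def approximate_samples(sampled_rate, rounds_since_success):
    """Stand-in for the unobserved aggregated sample count of one device."""
    return sampled_rate * rounds_since_success


def update_on_success(total_arrivals, observed_rounds,
                      reported_samples, rounds_since_success):
    """Fold one successful report into the running posterior statistics.

    reported_samples is the sum of arrivals over the rounds since the
    device's previous successful transmission, which is all that a
    Gamma posterior of a Poisson rate needs besides the round count.
    """
    return (total_arrivals + reported_samples,
            observed_rounds + rounds_since_success)


# A device last heard from 4 rounds ago, with a sampled rate of 2.5.
n_hat = approximate_samples(sampled_rate=2.5, rounds_since_success=4)
# The device then succeeds and reports 9 samples accumulated over 4 rounds.
stats = update_on_success(total_arrivals=12, observed_rounds=5,
                          reported_samples=9, rounds_since_success=4)
```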

| Computational complexity up to round | |

| DP-based with perfect information | |

| DP-based without information | Upper-bound: Lower-bound: |

| ALSA-PI,BALSA(-PO) | |

| and | |

IV-E Comparison of Computational Complexity

We compare the computational complexities of the DP-based algorithms with/without a priori information, ALSA-PI, and BALSAs. The DP-based algorithm with perfect a priori information requires running one of the standard DP methods once to compute the optimal policy for the perfectly known system parameters. For the complexity analysis of computing the optimal policy, we consider the well-known value iteration, whose computational complexity is given by , where , , is the set of the possible channel gains, and is the set of the possible numbers of the aggregated samples. Hence, the computational complexity of the DP-based algorithm with perfect a priori information is given by . The DP-based algorithm without a priori information denotes a learning method based on the posterior sampling in [34]. It operates over multiple stages as in BALSAs, but the optimal policy for the sampled system parameters in stage is computed by the standard DP methods. Thus, its upper-bound complexity up to round is given by if the policy is computed in all rounds. Since the system parameters are sampled once for each stage, we can also derive the lower bound of its computational complexity up to round as from Lemma 3 in Appendix C.

Contrary to the DP-based algorithms, ALSA-PI and BALSAs use the greedy policy, which finds the devices with the largest expected numbers of samples in each round. Thus, their computational complexity up to round is given by , where is the number of devices, according to the complexity of typical sorting algorithms. It is worth emphasizing that the complexity of BALSAs grows at the rate of , while the complexity of the DP algorithms increases exponentially with . Besides, the DP-based algorithms have much larger computational complexities than BALSAs in terms of not only the asymptotic behavior but also the constant factors hidden in the Big-O notation, since the DP-based algorithms require complex computations based on the Bellman equation, while BALSAs require only the computations to update the posteriors in (27) and to sort the expected numbers of samples. From these analyses, we can see that the complexity of BALSAs is significantly lower than that of the DP-based algorithms. The computational complexities of the algorithms are summarized in Table IV.

V Experimental Results

In this section, we provide experimental results to evaluate the performance of our algorithms. To this end, we develop a dedicated Python-based simulator on which the following simulations and asynchronous FL are run. We consider a WDLN composed of one AP and 25 devices. We use a shard, which consists of multiple data samples, as the unit of data samples. The number of data samples in each shard is determined according to the dataset. The arrival rate of the data samples of each device (i.e., the system parameter ) and the distance between the AP and each device are provided in Table V. The maximum number of scheduled devices in the WDLN, , is set to 5 as in [11]. The channel gains are composed of the Rayleigh small-scale fading, which follows an independent exponential distribution with unit mean, and the large-scale fading based on the pathloss model , where the pathloss exponent is given by 3.76 and represents the distance in km. The transmission power of each device is set to 23 dBm and the noise power is set to -96 dBm. In the transmission of the local updates, the PER is approximated according to the given SNR as in [25] based on turbo code. The delay cost coefficient in the ALS problem is set to 0.01. In asynchronous FL, we set the local learning rate to 0.01 and the decay coefficient to 0.001.

| Device index | 1-3 | 4-6 | 7-9 | 10-12 | 13-15 | 16 | 17 | 18 |

| Arr. rate (shards/round) | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 |

| Distance (m) | 100 | 200 | 300 | 400 | 500 | 300 | 350 | 400 |

| Device index | 19 | 20 | 21 | 22 | 23 | 24 | 25 | - |

| Arr. rate (shards/round) | 3 | 5 | 5 | 5 | 5 | 10 | 10 | - |

| Distance (m) | 450 | 300 | 350 | 400 | 450 | 400 | 450 | - |
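The channel setup above can be turned into a received-SNR calculation along the following lines. The transmission power, noise power, pathloss exponent, and unit-mean exponential fading are taken from the text; the reference loss at 1 km is not recoverable from it, so the default of 128.1 dB is purely a placeholder assumption exposed as a parameter, and all names are hypothetical.

```python
import math
import random

TX_POWER_DBM = 23.0       # transmission power of each device
NOISE_POWER_DBM = -96.0   # noise power
PATHLOSS_EXPONENT = 3.76  # pathloss exponent


def pathloss_db(distance_km, reference_loss_db=128.1):
    """Log-distance pathloss; reference_loss_db is an assumed placeholder."""
    return reference_loss_db + 10.0 * PATHLOSS_EXPONENT * math.log10(distance_km)


def received_snr_db(distance_km, fading_power, reference_loss_db=128.1):
    """SNR in dB for a given distance and small-scale fading power gain."""
    return (TX_POWER_DBM
            - pathloss_db(distance_km, reference_loss_db)
            + 10.0 * math.log10(fading_power)
            - NOISE_POWER_DBM)


# Rayleigh fading: the power gain is exponential with unit mean.
rng = random.Random(0)
fading = rng.expovariate(1.0)
snr_device_at_400m = received_snr_db(0.4, fading)
```

The resulting SNR would feed the PER approximation of [25], which is not sketched here because its form is not given in this section.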

In the experiments, we consider MNIST and CIFAR-10 datasets. For the MNIST dataset, we set the number of data samples in each shard to be 10 and consider a convolutional neural network model with two convolution layers with max pooling, a fully connected layer, and a final softmax output layer. The first convolution layer is with 1 channel and the second one is with 10 channels. The fully connected layer is composed of 320 units with ReLU activation. When training the local models for MNIST, we set the local batch size to be 10 and the number of local epochs to train the local model to be 10. For the CIFAR-10 dataset, we set the number of data samples in each shard to be 50 and consider a well-known VGG19 model [38]. We also set the local batch size to be 50 and the number of local epochs to be 5.

To evaluate the performance of our algorithms, we compare them with an ideal benchmark and state-of-the-art scheduling algorithms. (Footnote 6: In this paper, we do not compare our algorithms with model compression methods [16, 17, 18], which reduce the communication cost of a single local model transmission, since they are orthogonal to our algorithms and can be used together with them.) The descriptions of the algorithms are provided as follows.

- Bench represents an ideal benchmark algorithm, where in each round, the AP updates the central parameters by aggregating the local updates of all devices as in FedAvg [1]. This provides the upper bound of the model performance because it is based on an ideal system with no radio resource constraints and no transmission failures.

- RR represents an algorithm in which the devices are scheduled in a round-robin manner [5]. This algorithm considers neither the arrival rates of the data samples nor the channel information of the devices.

- •

- •

- •

- BALSA-PO is implemented as described in Section IV-D. The Jeffreys prior is used as in BALSA. This algorithm is for the partially observable WDLN.

The models are trained by asynchronous FL with the above transmission scheduling algorithms. We run 50 simulation instances for the MNIST dataset and 20 simulation instances for the CIFAR-10 dataset. In the following figures, the 95% confidence interval is illustrated as a shaded region.

V-A Effectivity Scores in the ALS Problem

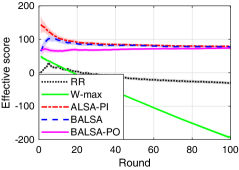

We first provide the effectivity scores of the algorithms, which are the objective function of the ALS problem in (16), in Fig. 2. Note that Bench is not provided in the figure since, in Bench, all devices transmit their local updates and all the transmissions succeed. From the figure, we can see that our BALSAs achieve effectivity scores similar to that of ALSA-PI, which is optimal. In particular, as the rounds proceed, the effectivity scores of BALSAs converge to that of ALSA-PI. This clearly shows that our BALSAs can effectively learn the uncertainties in the WDLN. On the other hand, RR and -max achieve much lower effectivity scores than BALSAs. In particular, the effectivity score of -max decreases as the rounds proceed, since it fails not only to maximize the number of data samples used in training but also to minimize the adverse effect of the stragglers. To show the validity of the effectivity score for effective learning in asynchronous FL, the following subsections show that the algorithms with higher effectivity scores achieve better trained-model performance in terms of training loss, accuracy, robustness against stragglers, and learning speed.

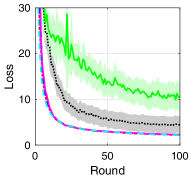

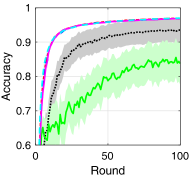

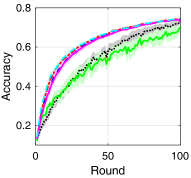

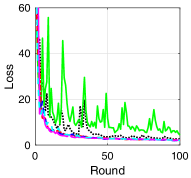

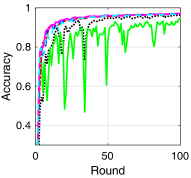

V-B Training Loss and Test Accuracy

To compare the performance of asynchronous FL under the transmission scheduling algorithms, which is our ultimate goal, we provide the training loss and test accuracy of the algorithms. Fig. 3 provides the training loss and test accuracy with the MNIST and CIFAR-10 datasets. From Figs. 3a and 3c, we can see that ALSA-PI, BALSA, and BALSA-PO achieve training losses similar to that of Bench. Compared with them, RR and -max achieve larger training losses. A larger training loss of a model typically implies a lower accuracy of the model. This is clearly shown in Figs. 3b and 3d. From the figures, we can see that the models trained with RR and -max achieve significantly lower accuracy than the models trained with the other algorithms, and their variances are much larger than those of the other algorithms. These results imply that RR and -max fail to effectively gather the local updates while addressing the stragglers, because they cannot account for the characteristics of asynchronous FL and the uncertainties in the WDLN. On the other hand, our BALSAs gather enough local updates to train the model while effectively addressing the stragglers in asynchronous FL by considering the effectivity score.

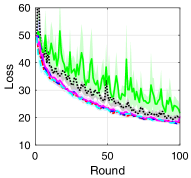

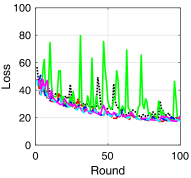

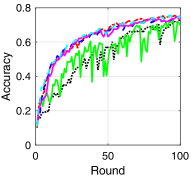

V-C Robustness of Learning against Stragglers

In Fig. 3, the unstable learning of the algorithms due to the stragglers is not clearly shown, since the fluctuations of the training loss and accuracy are averaged out over the multiple simulation instances. Hence, in Fig. 4, we show the robustness of the algorithms against the stragglers more clearly through the training loss and test accuracy results of a single simulation instance. First of all, it is worth emphasizing that Bench is the most stable one in terms of the stragglers because it has no stragglers. From Figs. 4a and 4c, we can see that the training losses of the algorithms considering asynchronous FL based on the effectivity score (i.e., ALSA-PI and BALSAs) are as stable as that of Bench. Accordingly, their corresponding test accuracies are also stable. On the other hand, for the algorithms not considering asynchronous FL (i.e., RR and -max), many spikes (i.e., short-lasting peaks) appear in their training losses, and accordingly, their test accuracies are also unstable. Moreover, the training loss and test accuracy of -max are significantly more unstable than those of RR. This is because the scheduling strategy of -max is highly biased according to the average channel gain, while RR sequentially schedules all the devices. Such biased scheduling in -max produces many more stragglers than RR. This clearly shows that a transmission scheduling algorithm may cause unstable learning if its scheduling strategy is biased without considering the stragglers.

V-D Satisfaction Rate and Learning Speed

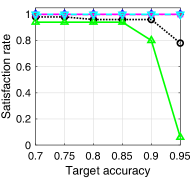

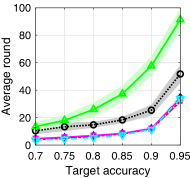

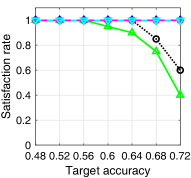

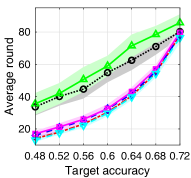

In Fig. 5, we provide the target accuracy satisfaction rate and the average number of rounds required to satisfy the target accuracy for each algorithm. For the MNIST dataset, we vary the target accuracy from 0.7 to 0.95, and for the CIFAR-10 dataset, we vary it from 0.48 to 0.72. The target accuracy satisfaction rate of each algorithm is obtained as the ratio of the number of simulation instances in which the test accuracy of the trained model exceeds the target accuracy at the end of the simulation to the total number of simulation instances. For the average required rounds, we find the minimum number of rounds required to satisfy the target accuracy in each simulation instance. To avoid the effect of the spikes, we set the criterion for satisfying the target accuracy as follows: the test accuracies in three consecutive rounds all exceed the target accuracy. From Figs. 5a and 5c, we can see that the satisfaction rates of ALSA-PI and BALSAs are similar to that of Bench. On the other hand, the satisfaction rates of RR and -max decrease significantly as the target accuracy increases. Figs. 5b and 5d provide the average number of rounds required by the algorithms to satisfy the target accuracy. From the figures, we can see that the algorithms considering asynchronous FL (ALSA-PI and BALSAs) require fewer rounds to satisfy the target accuracy. This clearly shows that the algorithms that consider the effectivity score of asynchronous FL (i.e., ALSA-PI and BALSAs) learn faster than the algorithms that do not consider it (i.e., RR and -max).
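The two evaluation quantities described above can be computed with a short sketch. Reporting the round at which the three-round window completes is an assumption, since the text does not say which round of the window is counted; the function names are hypothetical.

```python
def first_satisfying_round(accuracies, target, window=3):
    """Earliest round (1-indexed) at which `window` consecutive test
    accuracies, ending at that round, all exceed the target; None if never."""
    streak = 0
    for round_idx, acc in enumerate(accuracies, start=1):
        # Extend the streak on success, reset it on any miss.
        streak = streak + 1 if acc > target else 0
        if streak == window:
            return round_idx
    return None


def satisfaction_rate(final_accuracies, target):
    """Fraction of simulation instances whose final test accuracy
    exceeds the target."""
    return sum(a > target for a in final_accuracies) / len(final_accuracies)


# One instance: the spike at round 2 does not count, rounds 4-6 do.
required = first_satisfying_round([0.5, 0.92, 0.88, 0.91, 0.93, 0.95], 0.9)
# Four instances, three of which end above the target.
rate = satisfaction_rate([0.95, 0.85, 0.91, 0.97], 0.9)
```

Requiring a full window rather than a single round prevents an isolated spike in test accuracy from being counted as reaching the target.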

VI Conclusion and Future Work

In this paper, we proposed an asynchronous FL procedure in the WDLN and investigated its convergence. We also investigated transmission scheduling in the WDLN for effective learning. To this end, we first proposed the effectivity score of asynchronous FL, which represents the amount of learning while accounting for the harmful effects of the stragglers on learning. We then formulated the ALS problem that maximizes the effectivity score of asynchronous FL. We developed ALSA-PI, which solves the ALS problem when perfect a priori information is given. We also developed BALSA and BALSA-PO, which effectively solve the ALS problem without a priori information by learning the uncertainty in the stochastic data arrivals with a minimal amount of information. Our experimental results show that ALSA-PI and BALSAs achieve performance similar to that of the ideal benchmark. In addition, they outperform the other baseline scheduling algorithms. These results clearly show that the transmission scheduling strategy based on the effectivity score, which is adopted in our algorithms, is effective for asynchronous FL. Besides, our BALSAs effectively schedule the transmissions even without any a priori information by learning the system uncertainties. As future work, a non-i.i.d. distribution of data samples over devices can be incorporated into the ALS problem for more effective learning in non-i.i.d. data distribution scenarios. In addition, subchannel allocation and power control can be considered to minimize the time consumed by the FL update procedure by utilizing the resources more effectively in asynchronous FL.

Appendix A Proof of Theorem 1

Lemma 1

If is -strongly convex, then with Assumption 1, we have

| (29) |

Using Lemma 1, we now prove Theorem 1. Since is -smooth provided in Definition 1 (i.e., ), the following holds:

| (30) |

Let us define . Then, and with Assumption 1 and gradient dissimilarity, the following holds:

| (31) |

Let us define . Then, because for all , we have Now, with Lemma 1, we can rewrite the inequality in (31) as

| (32) | |||

By subtracting from both sides and rearranging the inequality, we have

| (33) | |||

By taking expectation of both sides, we can get

| (34) | |||

and by telescoping the above, we obtain (13) in Theorem 1. Then, if , we can rewrite (34) as

| (35) |

where and . Telescoping the above again yields (14) in Theorem 1.
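The telescoping step can be checked numerically on a generic one-step recursion. The sketch below assumes a bound of the form e_{t+1} <= (1 - rho) * e_t + c, treated with equality as the worst case. The symbols e_t, rho, and c are hypothetical placeholders and not the constants of Theorem 1. Unrolling the recursion over t rounds gives the closed form (1 - rho)^t * e_0 + c * (1 - (1 - rho)^t) / rho, which is the usual shape of a linear-rate bound with a residual term.

```python
def unroll_recursion(e0, rho, c, num_rounds):
    """Iterate e_{t+1} = (1 - rho) * e_t + c and return the whole trajectory."""
    errors = [e0]
    for _ in range(num_rounds):
        errors.append((1.0 - rho) * errors[-1] + c)
    return errors


def telescoped_bound(e0, rho, c, t):
    """Closed form from telescoping: (1-rho)^t * e0 + c * (1 - (1-rho)^t) / rho."""
    decay = (1.0 - rho) ** t
    return decay * e0 + c * (1.0 - decay) / rho


if __name__ == "__main__":
    # Hypothetical values chosen only for illustration.
    e0, rho, c = 10.0, 0.1, 0.05
    trajectory = unroll_recursion(e0, rho, c, 50)
    for t in (0, 10, 50):
        print(t, trajectory[t], telescoped_bound(e0, rho, c, t))
```

The iterated values and the closed form agree up to floating-point error, and both approach the residual c / rho as t grows.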

Appendix B Proof of Theorem 2

Suppose that there exists an optimal policy whose chosen action in a round with state is not identical to that of the greedy policy. We denote the expected instantaneous effectivity score of round with the optimal policy by and that with the greedy policy by . Based on (21), we can decompose the expected instantaneous effectivity score into two sub-rewards as , where and . In the problem, the samples are accumulated if they are not used for central training. Moreover, the channel gains and arrival rates of the samples of the devices are i.i.d. Hence, if a policy satisfies

| (36) |

where represents from policy , any arrived samples will eventually be reflected in the sub-reward regardless of the policy due to the accumulation of the samples. This implies that the average of sub-reward converges to as . Accordingly, if a policy satisfies the condition in (36), its optimality for the ALS problem depends on minimizing the delay cost (i.e., the sub-reward ). With the greedy policy, any device will be scheduled when the number of its aggregated samples is large enough. Hence, the greedy policy satisfies the condition if the parameters are finite. We now denote the expected sub-rewards of round with the optimal policy and the greedy policy by and , respectively. From the definition of the greedy policy, it is obvious that which implies that for any random disturbances. Consequently, this leads to which implies that the greedy policy is optimal.
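The accumulation argument can be illustrated with a toy simulation. The sketch below is not the greedy policy of the paper, whose criterion is the expected instantaneous effectivity score in (21). As a simplified stand-in, it schedules the devices with the largest backlog of unused samples. The Poisson arrivals, the rates, and all function names are assumptions made for illustration. The point is only that, because unused samples accumulate, almost all arrived samples are eventually used once every device keeps being scheduled.

```python
import math
import random


def poisson_sample(rng, lam):
    """Draw one Poisson(lam) variate with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_backlog_scheduling(rates, num_rounds, num_scheduled=1, seed=0):
    """Accumulate arrivals per device and serve the largest backlogs each round.

    Returns (total_arrived, total_used, leftover) sample counts.
    """
    rng = random.Random(seed)
    backlog = [0] * len(rates)
    total_arrived = 0
    total_used = 0
    for _ in range(num_rounds):
        # New samples arrive and are added to whatever was not used before.
        for i, lam in enumerate(rates):
            arrivals = poisson_sample(rng, lam)
            backlog[i] += arrivals
            total_arrived += arrivals
        # Stand-in rule (assumption): schedule the devices holding the most samples.
        ranked = sorted(range(len(rates)), key=lambda i: backlog[i], reverse=True)
        for i in ranked[:num_scheduled]:
            total_used += backlog[i]
            backlog[i] = 0
    return total_arrived, total_used, sum(backlog)


if __name__ == "__main__":
    arrived, used, leftover = simulate_backlog_scheduling([1.0, 2.0, 3.0, 4.0], 2000)
    print("arrived:", arrived, "used:", used, "leftover:", leftover)
    print("fraction of arrived samples used:", used / arrived)
```

Every arrived sample is either used or still waiting in a backlog, so the used fraction approaches one as the number of rounds grows. What then separates such policies is how long samples wait, which corresponds to the delay cost discussed above.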

Appendix C Proof of Theorem 3

We define , which represents the number of stages in BALSA until round . For in stage , we have the following equation from (19):

| (37) |

Then, the expected regret of BALSA is derived as

| (38) |

where

We can bound the regret of BALSA by deriving the bounds on , , and as in the following lemma:

Lemma 2

For the expected regret of BALSA, we have the following bounds:

- The first term is bounded as .

- The second term is bounded as .

- The third term is bounded as .

In addition, we can bound the number of stages as follows.

Lemma 3

The number of stages in BALSA until round is bounded as .
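As background for the regret analysis, the Bayesian learning step that a posterior-sampling scheduler relies on can be sketched as follows. The sketch assumes Poisson sample arrivals with a Gamma prior on the arrival rate, which is the standard conjugate pair. The class name, the prior parameters, and the observed counts are hypothetical and are not taken from BALSA itself.

```python
import random


class GammaPoissonPosterior:
    """Gamma(shape, rate) belief over the arrival rate of a Poisson process."""

    def __init__(self, shape=1.0, rate=1.0):
        self.shape = shape  # prior shape (assumed value)
        self.rate = rate    # prior rate (assumed value)

    def update(self, arrivals):
        """Conjugate update: add the observed counts and the number of rounds."""
        self.shape += sum(arrivals)
        self.rate += len(arrivals)

    def mean(self):
        """Posterior mean of the arrival rate."""
        return self.shape / self.rate

    def sample(self, rng):
        """Draw one plausible arrival rate, as done at the start of a stage."""
        # random.gammavariate takes (shape, scale), so the scale is 1 / rate.
        return rng.gammavariate(self.shape, 1.0 / self.rate)


if __name__ == "__main__":
    rng = random.Random(0)
    posterior = GammaPoissonPosterior()
    posterior.update([3, 5, 4])  # hypothetical per-round sample counts
    print("posterior mean:", posterior.mean())
    print("sampled rate:", posterior.sample(rng))
```

As more rounds are observed, the posterior concentrates around the true arrival rate, so the sampled rates used for planning deviate less and less from it.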

References

- [1] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Proc. AISTATS, 2017.

- [2] W. Y. B. Lim, N. C. Luong, D. T. Hoang, Y. Jiao, Y.-C. Liang, Q. Yang, D. Niyato, and C. Miao, “Federated learning in mobile edge networks: A comprehensive survey,” IEEE Commun. Surveys Tuts., vol. 22, no. 3, pp. 2031–2063, 2020.

- [3] M. M. Amiri and D. Gündüz, “Federated learning over wireless fading channels,” IEEE Trans. Wireless Commun., vol. 19, no. 5, pp. 3546–3557, May 2020.

- [4] ——, “Machine learning at the wireless edge: Distributed stochastic gradient descent over-the-air,” IEEE Trans. Signal Process., vol. 68, pp. 2155–2169, Mar. 2020.

- [5] H. H. Yang, Z. Liu, T. Q. Quek, and H. V. Poor, “Scheduling policies for federated learning in wireless networks,” IEEE Trans. Commun., vol. 68, no. 1, pp. 317–333, Jan. 2020.

- [6] S. Wang, T. Tuor, T. Salonidis, K. K. Leung, C. Makaya, T. He, and K. Chan, “Adaptive federated learning in resource constrained edge computing systems,” IEEE J. Sel. Areas Commun., vol. 37, no. 6, pp. 1205–1221, June 2019.

- [7] M. M. Amiri, D. Gündüz, S. R. Kulkarni, and H. V. Poor, “Convergence of update aware device scheduling for federated learning at the wireless edge,” IEEE Trans. Wireless Commun., vol. 20, no. 6, pp. 3643–3658, June 2021.

- [8] W. Shi, S. Zhou, Z. Niu, M. Jiang, and L. Geng, “Joint device scheduling and resource allocation for latency constrained wireless federated learning,” IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 453–467, Jan. 2021.

- [9] M. Chen, Z. Yang, W. Saad, C. Yin, H. V. Poor, and S. Cui, “A joint learning and communications framework for federated learning over wireless networks,” IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 269–283, Jan. 2021.

- [10] J. Xu and H. Wang, “Client selection and bandwidth allocation in wireless federated learning networks: A long-term perspective,” IEEE Trans. Wireless Commun., vol. 20, no. 2, pp. 1188–1200, Feb. 2021.

- [11] W. Xia, T. Q. Quek, K. Guo, W. Wen, H. H. Yang, and H. Zhu, “Multi-armed bandit-based client scheduling for federated learning,” IEEE Trans. Wireless Commun., vol. 19, no. 11, pp. 7108–7123, Nov. 2020.

- [12] Y. Chen, X. Sun, and Y. Jin, “Communication-efficient federated deep learning with layerwise asynchronous model update and temporally weighted aggregation,” IEEE Trans. Neural Netw. Learn. Syst., vol. 31, no. 10, pp. 4229–4238, Oct. 2020.

- [13] Y. Chen, Y. Ning, M. Slawski, and H. Rangwala, “Asynchronous online federated learning for edge devices with non-IID data,” in Proc. 2020 IEEE Int. Conf. on Big Data (Big Data), 2020.

- [14] S. Zheng, Q. Meng, T. Wang, W. Chen, N. Yu, Z.-M. Ma, and T.-Y. Liu, “Asynchronous stochastic gradient descent with delay compensation,” in Proc. ICML, 2017.

- [15] C. Xie, S. Koyejo, and I. Gupta, “Asynchronous federated optimization,” arXiv preprint arXiv:1903.03934, 2020.

- [16] T. Chen, G. Giannakis, T. Sun, and W. Yin, “LAG: Lazily aggregated gradient for communication-efficient distributed learning,” in Proc. NIPS, 2018.

- [17] Y. Lin, S. Han, H. Mao, Y. Wang, and B. Dally, “Deep gradient compression: Reducing the communication bandwidth for distributed training,” in Proc. ICLR, 2018.

- [18] J. Xu, W. Du, Y. Jin, W. He, and R. Cheng, “Ternary compression for communication-efficient federated learning,” IEEE Trans. Neural Netw. Learn. Syst., to be published.

- [19] F. Li, D. Yu, H. Yang, J. Yu, H. Karl, and X. Cheng, “Multi-armed-bandit-based spectrum scheduling algorithms in wireless networks: A survey,” IEEE Wireless Commun., vol. 27, no. 1, pp. 24–30, 2020.

- [20] H.-S. Lee, J.-Y. Kim, and J.-W. Lee, “Resource allocation in wireless networks with deep reinforcement learning: A circumstance-independent approach,” IEEE Syst. J., vol. 14, no. 2, pp. 2589–2592, 2020.

- [21] V. Smith, C.-K. Chiang, M. Sanjabi, and A. S. Talwalkar, “Federated multi-task learning,” in Proc. NIPS, 2017.

- [22] I. M. Baytas, M. Yan, A. K. Jain, and J. Zhou, “Asynchronous multi-task learning,” in IEEE ICDM, 2016.

- [23] Y. Xi, A. Burr, J. Wei, and D. Grace, “A general upper bound to evaluate packet error rate over quasi-static fading channels,” IEEE Trans. Wireless Commun., vol. 10, no. 5, pp. 1373–1377, May 2011.

- [24] P. Ferrand, J.-M. Gorce, and C. Goursaud, “Approximations of the packet error rate under quasi-static fading in direct and relayed links,” EURASIP J. on Wireless Commun. and Netw., vol. 2015, no. 1, p. 12, Jan. 2015.

- [25] J. Wu, G. Wang, and Y. R. Zheng, “Energy efficiency and spectral efficiency tradeoff in type-I ARQ systems,” IEEE J. Sel. Areas Commun., vol. 32, no. 2, pp. 356–366, Feb. 2014.

- [26] S. Ge, Y. Xi, S. Huang, and J. Wei, “Packet error rate analysis and power allocation for CC-HARQ over Rayleigh fading channels,” IEEE Commun. Lett., vol. 18, no. 8, pp. 1467–1470, Aug. 2014.

- [27] T. Li, A. K. Sahu, M. Zaheer, M. Sanjabi, A. Talwalkar, and V. Smith, “Federated optimization in heterogeneous networks,” in Proceedings of Machine Learning and Systems, vol. 2, 2020, pp. 429–450.

- [28] Z. Tao and Q. Li, “eSGD: Communication efficient distributed deep learning on the edge,” in Proc. USENIX Workshop Hot Topics Edge Comput. (HotEdge 18), 2018.

- [29] P. Domingos, “A few useful things to know about machine learning,” Commun. of the ACM, vol. 55, no. 10, pp. 78–87, 2012.

- [30] I. Goodfellow, Y. Bengio, and A. Courville, Deep Learning. Cambridge, MA: MIT Press, 2016.

- [31] D. P. Bertsekas, Dynamic Programming and Optimal Control. Belmont, MA: Athena Scientific, 1995, vol. 1.

- [32] A. Gopalan and S. Mannor, “Thompson sampling for learning parameterized Markov decision processes,” in Proc. Conf. on Learn. Theory, 2015.

- [33] I. Osband and B. Van Roy, “Why is posterior sampling better than optimism for reinforcement learning?” in Proc. ICML, 2017.

- [34] Y. Ouyang, M. Gagrani, A. Nayyar, and R. Jain, “Learning unknown Markov decision processes: A Thompson sampling approach,” in Proc. NIPS, 2017.

- [35] H.-S. Lee, C. Shen, W. Zame, J.-W. Lee, and M. van der Schaar, “SDF-Bayes: Cautious optimism in safe dose-finding clinical trials with drug combinations and heterogeneous patient groups,” in Proc. AISTATS, 2021.

- [36] M. Misgiyati and K. Nisa, “Bayesian inference of Poisson distribution using conjugate and non-informative priors,” in Prosiding Seminar Nasional Metode Kuantitatif, no. 1, 2017.

- [37] X. Hou and H. Kayama, Demodulation reference signal design and channel estimation for LTE-Advanced uplink. INTECH Open Access Publisher, 2011.

- [38] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proc. ICLR, 2015.

- [39] L. Bottou, F. E. Curtis, and J. Nocedal, “Optimization methods for large-scale machine learning,” SIAM Review, vol. 60, no. 2, pp. 223–311, 2018.