AAMAS’18: Proc. of the 17th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2018), M. Dastani, G. Sukthankar, E. Andre, S. Koenig (eds.), July 2018, Stockholm, Sweden. © 2018.

Adversarial Classification on Social Networks

Abstract.

The spread of unwanted or malicious content through social media has become a major challenge. Traditional examples of this include social network spam, but an important new concern is the propagation of fake news through social media. A common approach for mitigating this problem is to use standard statistical classification to distinguish malicious instances (e.g., fake news) from benign ones (e.g., actual news stories). However, such an approach ignores the fact that malicious instances propagate through the network, which matters both for quantifying consequences (e.g., fake news diffusing through the network) and for capturing detection redundancy (bad content can be detected at different nodes). An additional concern is evasion attacks, whereby the generators of malicious instances modify them to escape detection. We model this problem as a Stackelberg game between a defender, who chooses the parameters of the detection model, and an attacker, who chooses both the node at which to initiate malicious spread and the nature of the malicious content. We develop a novel bi-level programming approach for this problem, as well as a novel solution approach based on implicit function gradients, and experimentally demonstrate the advantage of our approach over alternatives that ignore network structure.

Electrical Engineering and Computer Science, Vanderbilt University, Nashville, TN

Electrical Engineering and Computer Science, Vanderbilt University, Nashville, TN

Computer Science, Amherst College, Amherst, MA

CCS Concepts: Computing methodologies → Multi-agent systems

1. Introduction

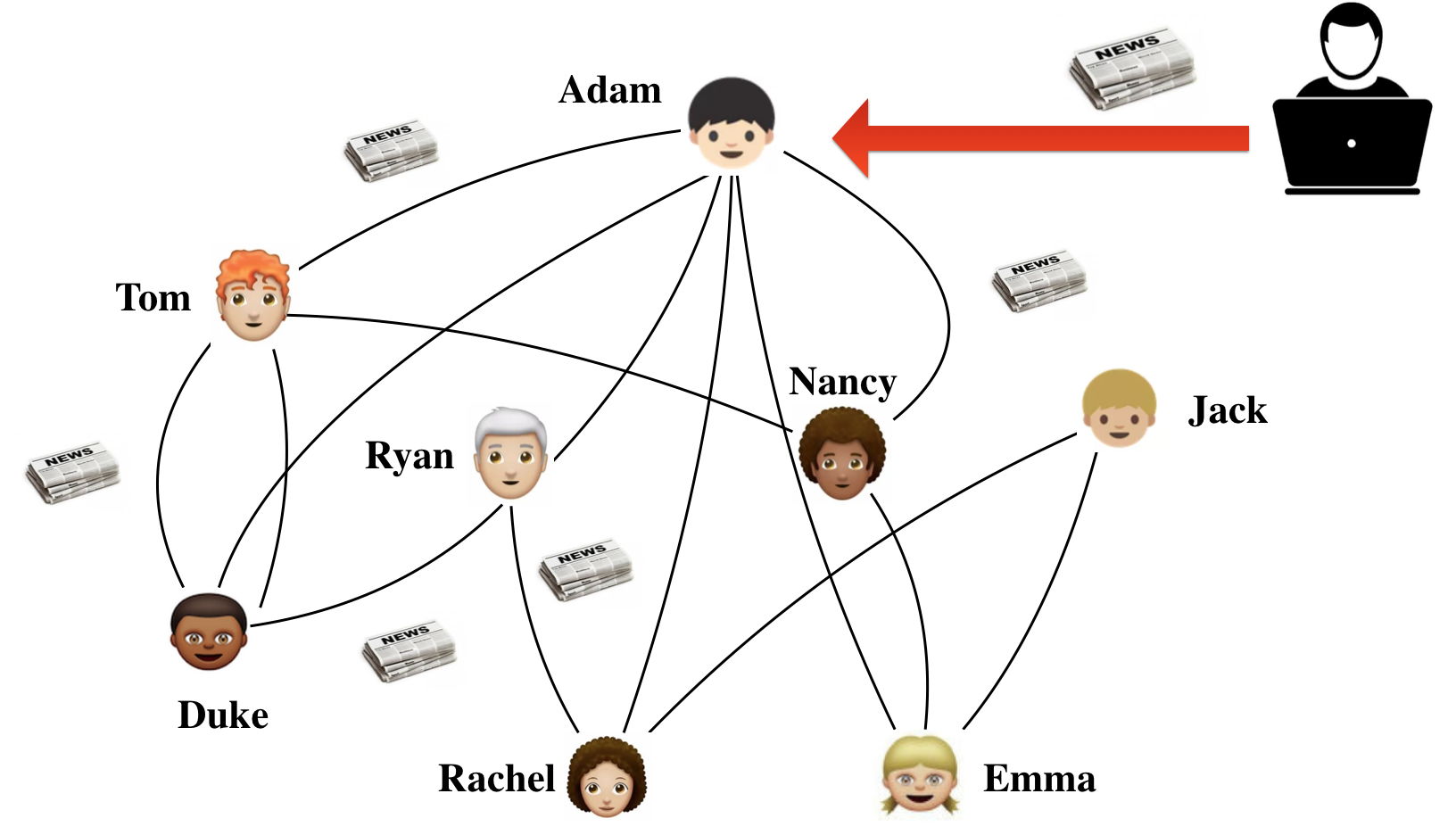

Consider a large online social network, such as Facebook or Twitter. It enables unprecedented levels of social interaction in the digital space, as well as the sharing of valuable information among individuals. It is also a treasure trove of potentially vulnerable individuals whom unscrupulous parties can exploit to gain an economic, social, or political advantage. In a perfect world, the social network is an enabler, allowing valuable information to diffuse. We can think of this “benign” information as originating stochastically from some node and subsequently propagating over the network to its neighbors (e.g., through retweeting a news story), then to their neighbors, and so on. But just as the network is a conduit for valuable information, so it is for “malicious” content. Unlike benign content, however, malicious content can be targeted: first, by selecting an influential starting point on the network (akin to influence maximization), and second, by tuning the content for maximal impact. For example, an adversary may craft the headline of a fake news story to capture the most attention. Consider the illustration in Figure 1, where an attacker crafts a fake news story and shares it with Adam, who then shares it with his friends, and so on.

These are not abstract concerns. Recently, widespread malicious content (e.g., fake news, antisocial posts) in online social networks has become a major concern. For example, considering that a substantial share of adults in the U.S. regard social media as their primary source of news holcomb2013news , the negative impact of fake news can be considerable. According to Allcott et al. allcott2017social , over 37 million news stories that were later proven fake were shared on Facebook in the last three months of the 2016 U.S. presidential election. In addition to fake news, antisocial posts in online communities negatively affect other users and damage community dynamics cheng2015antisocial , while social network spam and phishing can defraud users and spread malicious software cormack2008email .

The managers of online social networks are not powerless against these threats: they can deploy detection methods, such as statistical classifiers, to identify and stop the spread of malicious content. However, such traditional mitigations have not yet proved adequate. We focus on two reasons for this: first, adversaries can tune content to avoid detection, and second, traditional learning approaches do not account for network structure. The fact that the network mediates both spread and detection has, in turn, two consequences: first, we must account for the impact of detection errors in terms of benign or malicious content subsequently propagating through the network; and second, the fact that we can potentially detect malicious content at multiple nodes of the network creates a degree of redundancy. Consequently, while traditional detection methods use training data to learn a single “global” classifier of malicious and benign content, we show that specializing such learning to network structure, and using different classifiers at different nodes, can dramatically improve performance.

To address the problem of malicious content detection on social networks, we propose two significant modeling innovations. First, we explicitly model the diffusion process of content over networks as a function of the content (or, rather, its features). This generalizes typical network influence models, which abstract away the nature of the information being shared. The generalization is crucial in our setting, as it allows us to directly model the attacker's balancing act between increasing social influence and avoiding detection. Second, we consider the problem of designing a collection of heterogeneous statistical detectors that explicitly account for network structure and diffusion at the level of individual nodes, rather than relying merely on training data of past benign and malicious instances. We formalize the overall problem as a Stackelberg game between a defender (the manager of the social network), who deploys a collection of heterogeneous detectors, and an attacker, who optimally chooses both the starting node for malicious content and the content itself. This results in a complex bi-level optimization problem, for which we introduce a novel technical approach. We first consider a simplified model in which the defender knows the node being attacked, which allows us to develop a projected gradient descent approach for this restricted problem; we then use it to devise a heuristic algorithm for the original problem. We show that our approach offers a dramatic improvement over both traditional homogeneous statistical detection and a common adversarial classification approach.

Related Work

A number of prior efforts have considered limiting adversarial influence on social networks. Most of these pit two influence-maximization players against one another, each choosing a subset of nodes to maximize the spread of its own influence (and block the influence of the other). For example, Budak et al. budak2011limiting consider the problem of blocking a “bad” campaign using a “good” campaign that spreads and thereby neutralizes the “bad” influence. Similarly, Tsai et al. tsai2012security study a zero-sum game between two parties with competing interests in a networked environment, with each party choosing a subset of nodes for initial influence. Vorobeychik et al. vorobeychik2015securing consider an influence-blocking game in which the defender chooses from a small set of security configurations for each node, while the attacker chooses an initial set of nodes to start a malicious cascade. The main differences between this prior work and ours are that (a) our diffusion process depends on the malicious content in addition to network topology, (b) detection at each node is explicitly accomplished using machine learning techniques, rather than an abstract small set of configurations, and (c) we consider an attacker who, in addition to choosing the starting point of a malicious cascade, chooses the content in part to evade the machine learning-based detectors. The use of heterogeneous (personalized) filters was previously studied by Laszka et al. Laszka15 , but this work did not consider network structure or adversarial evasion.

Our paper is also related to prior research in single-agent influence maximization and adversarial learning. Kempe et al. kempe2003maximizing initiated the study of influence maximization, where the goal is to select a set of nodes that maximizes the expected portion of the network affected under discrete-time diffusion processes. Gomez-Rodriguez et al. gomez2012influence and Du et al. du2012learning ; du2013uncover ; du2013scalable considered continuous-time diffusion processes to model information diffusion; we extend this model. Prior adversarial machine learning work, in turn, focuses on the design of a single detector (classifier) that is robust to evasion attacks dalvi2004adversarial ; bruckner2011stackelberg ; li2014feature . However, this work does not consider malicious content spreading over a social network.

2. Model

We are interested in protecting a set of agents on a social network from malicious content originating from an external source, while allowing regular (benign) content to diffuse. The social network is represented by a graph $G = (V, E)$, where $V$ is the set of vertices (agents) and $E$ is the set of edges. An edge between a pair of nodes represents communication or influence between them. For example, an edge from $u$ to $v$ may mean that $v$ can see and repost a video or a news article shared by $u$. For simplicity, we assume that the network is undirected; the generalization to directed networks is direct.

We suppose that each message (benign or malicious) originates from a node on the network (which may differ across messages) and then propagates to others. We use a finite set $D$ of instances diffusing over the network (both malicious and benign) as a training dataset. Each instance, malicious or benign, is represented by a feature vector $x \in \mathbb{R}^d$, where $d$ is the dimension of the feature space. The dataset is partitioned into $D^+$ and $D^-$, where $D^+$ corresponds to malicious and $D^-$ to benign instances.

To analyze the diffusion of benign and malicious content on social networks in the presence of an adversary, we develop formal models of (a) the diffusion process, (b) the defender, who aims to prevent the spread of malicious content while allowing benign content to diffuse, (c) the attacker, who attempts to maximize the influence of a malicious message, and (d) the game between the attacker and the defender. We present these next.

2.1. Continuous-Time Diffusion

Given an undirected network with a known topology, we use a continuous-time diffusion process to model the propagation of content (malicious or benign) through the social network, extending Gomez-Rodriguez et al. gomez2012influence . In our model, diffusion depends not merely on the network structure, but also on the nature of the item propagating through the network, which we quantify by a feature vector $x$ as above.

Suppose that the diffusion process for a single message $x$ originates at a node $s$. First, $x$ is transmitted from $s$ to its direct neighbors. The time taken by a propagation through an edge $(i, j)$ is sampled from a distribution over time, $f(t; \alpha_{ij}, x)$, which is a function of the edge itself and the entity $x$, and is parametrized by $\alpha_{ij}$. The affected (influenced) neighbors of $s$ then propagate $x$ to their neighbors, and so on. We assume that an affected agent remains affected throughout the diffusion process.

Given a sample of propagation times over all edges, the time taken to affect an agent $v$ is the length of the shortest path between $s$ and $v$, where the edge weights are the propagation times associated with these edges. The continuous-time diffusion process is supplied with a time window $T$, which captures the time-sensitive nature of propagation; for example, people are generally concerned about a news story for several months but not for years. An agent $v$ is affected if and only if its shortest-path distance to $s$ is at most $T$. The diffusion process terminates when the path from $s$ to every unaffected agent is longer than $T$. We define the influence $\mu(s, x)$ of an instance $x$ initially affecting a network node $s$ as the expected number of affected agents over the fixed time window $T$.
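The influence $\mu(s, x)$ just defined can be estimated by Monte Carlo simulation: sample a propagation time for every edge, run a shortest-path computation from $s$, and count the agents reached within $T$, then average over samples. The following is a minimal sketch, assuming an undirected graph stored as adjacency lists and a caller-supplied edge-time sampler; the names `affected_nodes`, `estimate_influence`, and `sample_time` are hypothetical and not taken from the paper. The source itself counts as affected at time zero.

```python
import heapq
import random
from typing import Callable, Dict, FrozenSet, List, Set

Graph = Dict[int, List[int]]            # undirected adjacency lists
Edge = FrozenSet[int]                   # an undirected edge {u, v}


def affected_nodes(graph: Graph, source: int, weights: Dict[Edge, float],
                   window: float) -> Set[int]:
    """Agents whose shortest-path time from `source` is at most `window` (Dijkstra)."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    done: Set[int] = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue
        if d > window:          # heap pops in time order, so nothing else is within T
            break
        done.add(u)
        for v in graph[u]:
            nd = d + weights[frozenset((u, v))]
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return done


def estimate_influence(graph: Graph, source: int,
                       sample_time: Callable[[Edge, random.Random], float],
                       window: float, n_samples: int = 1000, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected number of affected agents."""
    rng = random.Random(seed)
    edges = sorted({frozenset((u, v)) for u in graph for v in graph[u]}, key=sorted)
    total = 0
    for _ in range(n_samples):
        weights = {e: sample_time(e, rng) for e in edges}   # one sample of edge times
        total += len(affected_nodes(graph, source, weights, window))
    return total / n_samples
```

For example, on the path 0-1-2 with every propagation time fixed to 1.0, a window of 1.5 started at node 0 reaches two agents, while a window of 2.0 reaches all three.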

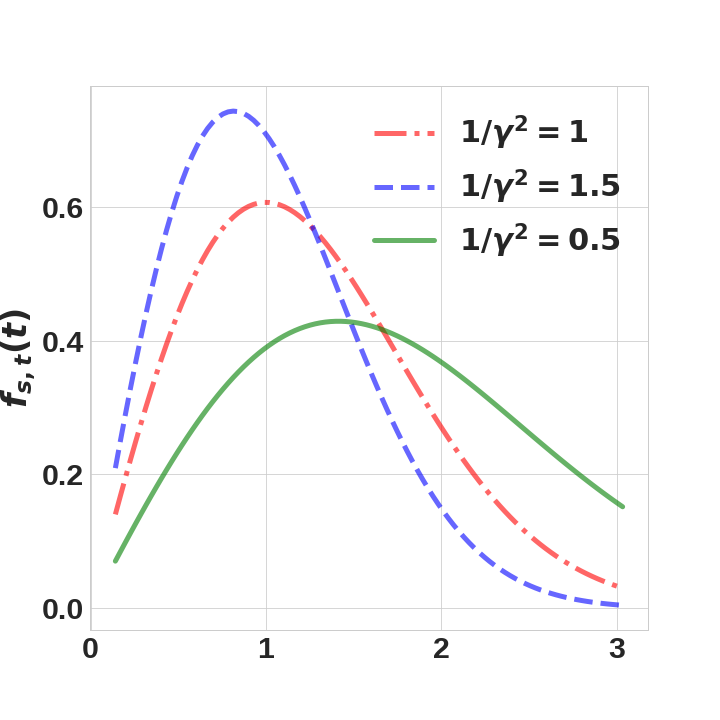

We assume that the distributions associated with edges are Rayleigh distributions (illustrated in Figure 2), which have density function $f(t; \sigma) = \frac{t}{\sigma^2} \exp\!\left(-\frac{t^2}{2\sigma^2}\right)$, where $t \ge 0$ and $\sigma > 0$ is the scale parameter. (It is straightforward to allow for alternative distributions, such as Weibull.) The Rayleigh distribution is commonly used in epidemiology and survival analysis wallinga2004different and has recently been applied to model information diffusion in social networks gomez2012influence ; du2013uncover . To account for heterogeneity among the mutual interactions of agents, and to let the influence of a process depend on the content being diffused, we parameterize the Rayleigh distribution of each edge $(i, j)$ by letting $1/\sigma_{ij}^2 = \alpha_{ij}^\top x$, where $\alpha_{ij}$ is sampled from the uniform distribution over $[0, 1]^d$. This parameterization results in the following density function for an arbitrary edge $(i, j)$:

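$$f(t; \alpha_{ij}, x) = (\alpha_{ij}^\top x)\, t\, \exp\!\left(-\tfrac{1}{2}(\alpha_{ij}^\top x)\, t^2\right), \qquad t \ge 0 \tag{1}$$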

We denote by $\alpha = \{\alpha_{ij}\}_{(i,j) \in E}$ the joint parametrization of all edges.

Throughout, we assume that the parameters $\alpha$ are given and known to both the defender and the attacker. Several other studies explore how to learn these parameter vectors from data du2012learning ; du2013uncover .
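To make the content-dependent Rayleigh parameterization concrete, the sketch below evaluates density (1) and samples edge times with NumPy's `Generator.rayleigh`, whose `scale` argument is the Rayleigh scale $\sigma_{ij} = (\alpha_{ij}^\top x)^{-1/2}$. It assumes $\alpha_{ij}^\top x > 0$, which holds, for instance, when $\alpha_{ij}$ has entries in $[0, 1]$ and $x$ is nonnegative and nonzero; the function names are illustrative only.

```python
import numpy as np


def edge_rate(alpha_ij: np.ndarray, x: np.ndarray) -> float:
    """alpha_ij^T x, which plays the role of 1 / sigma_ij^2 in the Rayleigh density."""
    r = float(np.dot(alpha_ij, x))
    if r <= 0.0:
        raise ValueError("alpha_ij^T x must be positive to define a Rayleigh scale")
    return r


def edge_density(t, alpha_ij, x):
    """Density (1): (alpha^T x) * t * exp(-(alpha^T x) t^2 / 2) for t >= 0, else 0."""
    r = edge_rate(alpha_ij, x)
    t = np.asarray(t, dtype=float)
    return np.where(t >= 0.0, r * t * np.exp(-0.5 * r * t**2), 0.0)


def sample_edge_times(alpha_ij, x, size, rng: np.random.Generator) -> np.ndarray:
    """Draw propagation times for edge (i, j) when it carries content x."""
    sigma = 1.0 / np.sqrt(edge_rate(alpha_ij, x))   # Rayleigh scale sigma_ij
    return rng.rayleigh(scale=sigma, size=size)
```

Scaling $x$ up increases $\alpha_{ij}^\top x$ and shortens the expected propagation time $\sigma_{ij}\sqrt{\pi/2}$, which is why a larger inner product means faster, wider spread within a fixed window $T$.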

2.2. Defender Model

To protect the network against the spread of malicious content, the network manager—henceforth, the defender—can deploy statistical detection, which considers a particular instance (e.g., a tweet with an embedded link) and decides whether or not it is safe. The traditional way of deploying such a system is to take the dataset of labeled malicious and benign examples, train a classifier, and use it to detect new malicious content. However, this approach entirely ignores the fact that a social network mediates the spread of both malicious and benign entities. Moreover, both the nature (as captured in the feature vector) and the origin of malicious instances are deliberate decisions by the adversary aiming to maximize impact (and harm, from the defender’s perspective). Our key innovations are (a) to allow heterogeneous parametrization of classifiers deployed at different nodes, and (b) to explicitly consider both diffusion and adversarial manipulation during learning. In combination, this enables us to significantly boost detection effectiveness in social network settings.

Let $\theta = (\theta_1, \dots, \theta_{|V|})$ be a vector of parameters of the detection models deployed on the network, where each $\theta_i$ represents the model used for content shared by node $i$. (Below, we focus on $\theta_i$ corresponding to detection thresholds as an illustration; generalization is direct.) We now extend our definition of expected influence to be a function of the detector parameters, denoting it by $\mu(s, x; \theta)$, since any content (malicious or benign) starting at node $s$ that is classified as malicious at a node $i$ (not necessarily the same as $s$) will be blocked from spreading any further.

We define the defender’s utility as

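$$U_D(\theta) = \sum_{x \in D^-} \frac{1}{|V|} \sum_{s \in V} \mu(s, x; \theta) \;-\; \lambda \sum_{x \in D^+} \mu\big(s^*, x^*; \theta\big) \tag{2}$$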

where $s^*$ is the starting node targeted by the adversary, and $x^*$ is the content into which the same adversary modifies its original content $x$ (in an attempt to bypass detection). The first part of the utility represents the influence of benign content, which the defender wishes to maximize, while the second part denotes the influence of malicious content, which the defender aims to limit, with $\lambda$ trading off the relative importance of these two considerations. Observe that we assume that benign content originates uniformly over the set of nodes, while the origin of malicious content is selected by the adversary. The defender’s action space is the set of all possible parameters $\theta$ of the detectors deployed at all network nodes. Note that, as is typical in machine learning, we use the finite labeled dataset as a proxy for expected utility with respect to malicious and benign content generated from the same distribution as the data.
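The empirical defender utility can be sketched for one fixed sample of edge times (the expectation in $\mu$ would be approximated by averaging over many samples). The sketch makes two loudly flagged assumptions that the text leaves open: a node whose detector flags the content still counts as affected but does not share it further, and the attack pairs $(s^*, x^*)$ are supplied by the caller. `blocked_influence`, `defender_utility`, and `detect` are hypothetical names.

```python
import heapq
from typing import Dict, FrozenSet, List

Graph = Dict[int, List[int]]


def blocked_influence(graph: Graph, source: int, weights: Dict[FrozenSet[int], float],
                      window: float, flagged: set) -> int:
    """Affected agents when agents in `flagged` receive the content but do not share it."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    affected = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in affected or d > window:
            continue
        affected.add(u)
        if u in flagged:                  # assumption: u's detector stops further spread
            continue
        for v in graph[u]:
            nd = d + weights[frozenset((u, v))]
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return len(affected)


def defender_utility(graph, weights, window, detect, benign, attacks, lam):
    """Empirical version of the defender's utility for one sample of edge times.

    detect(x, i) -> True if node i's detector flags content x.
    attacks: list of (s_star, x_star) pairs, one per malicious instance.
    """
    nodes = list(graph)
    benign_term = 0.0
    for x in benign:                      # benign content starts uniformly at random
        flagged = {i for i in nodes if detect(x, i)}
        benign_term += sum(blocked_influence(graph, s, weights, window, flagged)
                           for s in nodes) / len(nodes)
    malicious_term = 0.0
    for s_star, x_star in attacks:
        flagged = {i for i in nodes if detect(x_star, i)}
        malicious_term += blocked_influence(graph, s_star, weights, window, flagged)
    return benign_term - lam * malicious_term
```

On the path 0-1-2 with thresholds (0.9, 0.5, 0.9) applied to a scalar score, a benign score of 0.3 reaches all three agents from any start, while a malicious score of 0.7 launched at node 0 is stopped at node 1, giving a utility of 3 - 2 = 1 for $\lambda = 1$.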

2.3. Attacker Model

The attacker’s decision is twofold: (1) find a node $s$ at which to start diffusion; and (2) transform malicious content from $x$ (its original, or ideal, form) into another feature vector $x'$ with the aim of avoiding detection. The first decision is reminiscent of the influence maximization problem kempe2003maximizing . The second decision is commonly known as an evasion attack on classifiers lowd2005adversarial ; li2014feature . In our case, the adversary attempts to balance three considerations: (a) impact, mediated by the diffusion of malicious content, (b) evasion, or avoiding being detected (a critical consideration for impact as well), and (c) the cost of modifying the original “ideal” content into another form, which corresponds to the reduced effectiveness of the transformed content, or to the effort involved in the transformation. We impose this last consideration as a hard constraint $\|x' - x\|_p \le B$ for an exogenously specified budget $B$, where $\|\cdot\|_p$ is the $\ell_p$ norm.

Consider the collection of detectors with parameters $\theta$ deployed on the network. We say that a malicious instance $x'$ is detected at a node $i$ if $\mathbb{I}(x', \theta_i) = 1$, where $\mathbb{I}$ is the 0-1 indicator function. The optimization problem of the attacker corresponding to an original malicious instance $x \in D^+$ is then:

| (3) | ||||||

where the first constraint is the attacker’s budget limit, while the second constraint requires that the attack instance remain undetected. If Problem (3) has no feasible solution, the attacker sends the original malicious instance $x$ without modification. Consequently, the pair $(s^*, x^*)$ in the defender’s utility function above is the solution to Problem (3).

2.4. Stackelberg Game Formulation

We formally model the interaction between the defender and the attacker as a Stackelberg game in which the defender is the leader (choosing the parameters of node-level detectors) and the attacker is the follower (choosing a node at which to start malicious diffusion, as well as its content). We assume that the attacker knows $\theta$, as well as all relevant parameters (such as $\alpha$), before constructing its attack. The equilibrium of this game is the joint choice of $(\theta, s^*, x^*)$, where $s^*$ and $x^*$ solve Problem (3), thereby maximizing the attacker’s utility, and $\theta$ maximizes the defender’s utility given $s^*$ and $x^*$. More precisely, we aim to find a Strong Stackelberg Equilibrium (SSE), in which the attacker breaks ties in the defender’s favor.

We propose finding solutions to this Stackelberg game using the following optimization problem:

| (4) | ||||||

This is a hierarchical optimization problem, where the upper-level optimization corresponds to maximizing the defender’s utility. The constraints of the upper-level problem constitute the lower-level problem, which is the attacker’s optimization problem.

The optimization problem (4) is generally intractable for two reasons. First, Problem (4) is a bilevel optimization problem colson2007overview , which is hard even when the upper- and lower-level problems are both linear colson2007overview . Second, maximizing $\mu(s, x'; \theta)$ (the attacker’s problem) is difficult because this objective function has no explicit closed-form expression. In what follows, we develop a principled approach to address these technical challenges.

3. Solution Approach

We start by making a strong assumption that the defender knows the node being attacked. This will allow us to make considerable progress in transforming the problem into a significantly more tractable form. Subsequently, we relax this assumption, developing an effective heuristic algorithm for computing the SSE of the original problem.

First, we utilize the tree structure of a continuous-time diffusion process to convert (4) into a tractable bilevel optimization. We then collapse the bilevel optimization into a single-level optimization problem by leveraging the Karush-Kuhn-Tucker (KKT) conditions boyd2004convex . The assumption that the defender knows the node being attacked allows us to solve the resulting single-level optimization problem using projected gradient descent.

3.1. Collapsing the Bilevel Problem

A continuous-time diffusion process proceeds in a breadth-first-search fashion. It starts from an agent $s$, which tries to influence each of its neighbors. Then its neighbors try to influence their neighbors, and so on. Notice that once an agent becomes affected, it is no longer affected by others. The main consequence of this propagation process is that it results in a propagation tree rooted at $s$, whose structure is intimately connected to the starting node $s$. This is where we leverage the assumption that the defender knows the starting node of the attack: in this case, the tree structure can be pre-computed and fixed for the optimization problem.

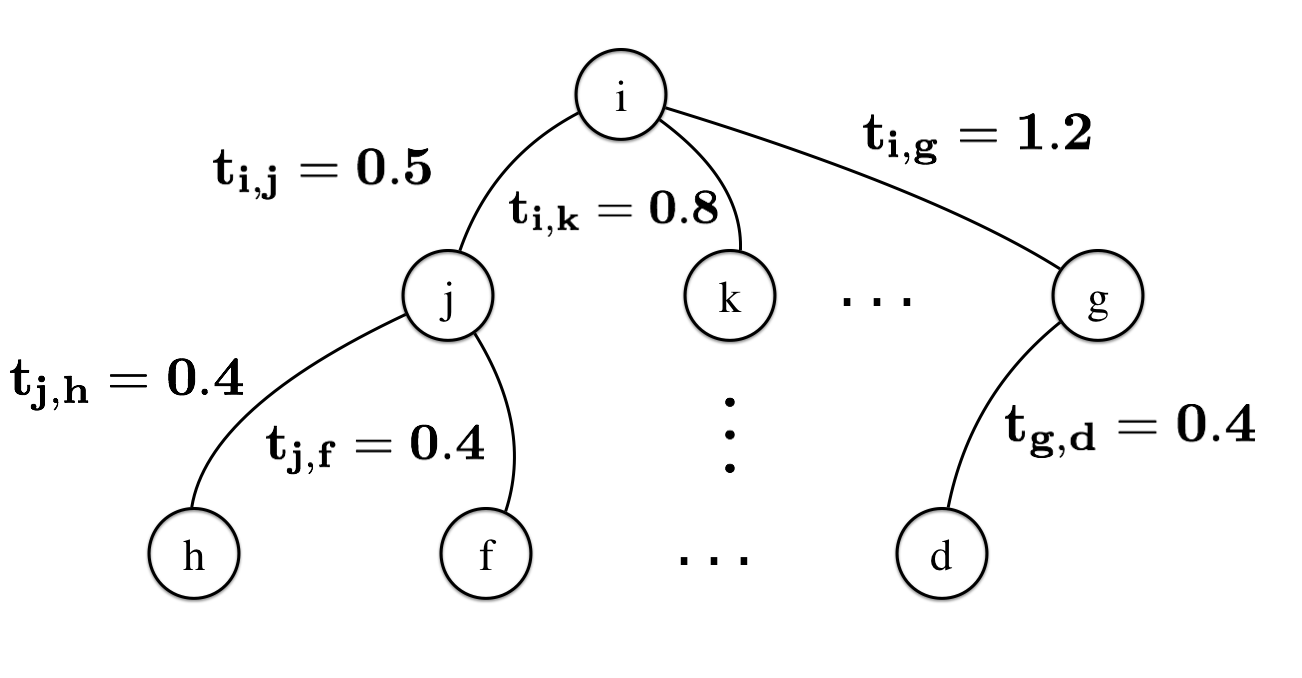

We divide the agents traversed by the tree into several layers according to their distances to the source, where each layer is indexed by $l$. Since the structure of the tree depends on $s$, the number of layers $L$ is a function of $s$, i.e., $L = L(s)$. An example of the influence propagation tree is depicted in Figure 3, where the first layer consists of the agents reached directly from the source. The number next to each edge represents the weight sampled from the associated distribution.
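For one sample of edge times, the propagation tree and its layers can be read off a shortest-path computation: run Dijkstra from $s$, keep each affected agent's best predecessor, and place an agent one layer below its predecessor. The sketch assumes that "distance to the source" means the number of hops along the tree, which matches the paper's definition of an edge in layer $l$ (one endpoint in layer $l-1$, the other in layer $l$); `propagation_layers` is a hypothetical helper, not the authors' code.

```python
import heapq
from typing import Dict, FrozenSet, List, Tuple

Graph = Dict[int, List[int]]


def propagation_layers(graph: Graph, source: int, weights: Dict[FrozenSet[int], float],
                       window: float) -> Dict[int, List[Tuple[int, int]]]:
    """Group the tree edges (parent, child) of one diffusion sample by the child's layer."""
    dist = {source: 0.0}
    parent: Dict[int, int] = {}
    heap = [(0.0, source)]
    order: List[int] = []          # agents in the order they become affected
    done = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in done or d > window:
            continue
        done.add(u)
        order.append(u)
        for v in graph[u]:
            nd = d + weights[frozenset((u, v))]
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                parent[v] = u      # best predecessor found so far
                heapq.heappush(heap, (nd, v))
    depth = {source: 0}            # the source is in layer zero
    layers: Dict[int, List[Tuple[int, int]]] = {}
    for u in order[1:]:            # a parent is always affected before its child
        p = parent[u]
        depth[u] = depth[p] + 1
        layers.setdefault(depth[u], []).append((p, u))
    return layers
```

Because the tree is built from sampled times, different samples (or different content $x$) can yield different layers from the same source.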

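We define a matrix $A_l \in \mathbb{R}^{n_l \times d}$, where $n_l$ is the number of agents in layer $l$ and $d$ is the feature dimension of $x$. Each row of $A_l$ corresponds to the parametrization vector $\alpha_{ij}$ of an edge in layer $l$ (an edge is in layer $l$ if one of its endpoints is in layer $l-1$ while the other is in layer $l$; the source is always in layer zero). For example, in Figure 3, the rows of $A_1$ are the parameter vectors of the edges joining the source to the first-layer agents. The product $A_l x$ is a vector in $\mathbb{R}^{n_l}$, where each element corresponds to the parameter $\alpha_{ij}^\top x$ of an edge in layer $l$.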
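Given the layers of a propagation tree, written as lists of (parent, child) tree edges, and the edge parameter vectors $\alpha_{ij}$, the matrices $A_l$ can be assembled by stacking one row per tree edge. Since every agent in layer $l$ has exactly one parent edge, the number of rows equals $n_l$. A minimal NumPy sketch; the input format and the name `layer_matrices` are assumptions for illustration.

```python
import numpy as np
from typing import Dict, FrozenSet, List, Tuple


def layer_matrices(layers: Dict[int, List[Tuple[int, int]]],
                   alpha: Dict[FrozenSet[int], np.ndarray]) -> Dict[int, np.ndarray]:
    """A_l has one row alpha_ij^T per tree edge (i, j) in layer l, so A_l is n_l x d."""
    return {l: np.vstack([alpha[frozenset(e)] for e in edges])
            for l, edges in sorted(layers.items())}
```

Multiplying `A[l] @ x` then yields the vector of edge parameters $\alpha_{ij}^\top x$ for layer $l$.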

Recall that a sample of random variables from the Rayleigh distributions associated with edges corresponds to a sample of weights on these edges. With a fixed time window $T$, small edge weights result in wider diffusion of the content over the social network. For example, in Figure 3, if the number next to each edge represents a sample of weights, then with a small enough time window $T$ the propagation starting from the source reaches only some of the agents; if, in another sample, all edge weights were much smaller, the same time window could let the propagation reach every agent in the network. Under (1), a larger $\alpha_{ij}^\top x$ yields shorter expected propagation times on edge $(i, j)$, so the attacker’s goal is to increase $\alpha_{ij}^\top x$ for each edge $(i, j)$. This suggests that, in order to increase its influence, the attacker can modify the malicious instance so that the inner products between $x'$ and the parameter vectors of edges are large. Consequently, we can formulate the attacker’s optimization problem with respect to malicious content for a given original feature vector $x$ as

| (5) | ||||||

The attacker aims to make $\alpha_{ij}^\top x'$ as large as possible for each edge $(i, j)$, which is captured by the objective function of (5), built from the terms $\mathbf{1}^\top A_l x'$, where $\mathbf{1}$ is a vector with all elements equal to one. Intuitively, this means the attacker is trying to maximize, on average, the parameter of every edge at layer $l$. Here, $\beta$ is a vector of decreasing coefficients that provides more flexibility in modeling the attacker’s behavior: its entries re-weight the importance of each layer. For example, a sharply decreasing $\beta$ models an attacker who tries to make malicious instances spread wider at the earlier layers of the diffusion.
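Once the layers are fixed, the objective of (5) is linear in $x'$, so a relaxed version of the attacker's problem has a closed form. The sketch below rests on three assumptions of ours: (i) each layer's term is the average $\frac{1}{n_l}\mathbf{1}^\top A_l x'$, our reading of "on average"; (ii) the budget is an $\ell_2$ ball $\|x' - x\|_2 \le B$; and (iii) the detection constraint is dropped. Under these assumptions the maximizer of $c^\top x'$, with $c = \sum_l \beta_l \frac{1}{n_l} A_l^\top \mathbf{1}$, moves $x$ by the full budget along $c$. With the detection constraint included, a general constrained solver would be needed instead.

```python
import numpy as np
from typing import Dict


def layered_direction(A: Dict[int, np.ndarray], beta: Dict[int, float]) -> np.ndarray:
    """c = sum_l beta_l * (1/n_l) * A_l^T 1, so the relaxed objective is c^T x'."""
    d = next(iter(A.values())).shape[1]
    c = np.zeros(d)
    for l, A_l in A.items():
        c += beta[l] * A_l.mean(axis=0)   # (1/n_l) A_l^T 1 equals the column means of A_l
    return c


def l2_best_response(x: np.ndarray, A, beta, budget: float) -> np.ndarray:
    """argmax_{x'} c^T x' subject to ||x' - x||_2 <= budget (detection constraint ignored)."""
    c = layered_direction(A, beta)
    norm = np.linalg.norm(c)
    if norm == 0.0:
        return x.copy()
    return x + budget * c / norm          # spend the whole budget along c
```

By the Cauchy-Schwarz inequality, no other point in the ball attains a larger value of $c^\top x'$.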

We now use similar ideas to convert the upper-level optimization problem of (4) into a more tractable form. Suppose that the node being attacked is $s$ (and known to the defender). Then the defender wants the detection model at $s$ to accurately identify both malicious and benign content. This is achieved by the two indicator functions inside the benign and malicious terms of the defender’s reformulated objective function (6):

| (6) | ||||

Notice that this expression includes a vector, defined in (7), that does not appear in (5). It is a function of $\theta$ and $x$ for a given node $s$ that triggers diffusion (we omit $s$ below for clarity):

| (7) |

Slightly abusing notation, we let $v_k^l$ denote the $k$th agent in layer $l$. The benign term of (6) can be expanded as follows:

| (8) | ||||

noting again that the layer structure (the number of layers and the agents in each layer) depends on $s$, the starting node of the diffusion process. From expression (8), the defender tries to find $\theta$ that minimizes the impact of false positives while maximizing the impact of true negatives. This is because if each benign instance is correctly identified (false-positive rates are zero and true-negative rates are one), the summation on the second line of expression (8) attains its maximum possible value.

In addition to facilitating the propagation of benign content, the defender wants to limit the propagation of malicious content, which is embodied in the malicious term of (6). The equations in this term are similar to those in the benign term, except that the summation is over malicious content $x \in D^+$, and the indicator function accounts for false negatives. In this case, the vector defined in (7) is a function of $x^*$, the adversarial feature vector into which the attacker transforms the original instance $x$.

We now reformulate problem (4) as a new bilevel optimization problem (9). The upper-level problem corresponds to the defender’s strategy (6), and the lower-level problem to the attacker’s optimization problem (5). Here, $s$ is again the node chosen by the attacker.

| (9) | ||||

where the last constraint ensures that every attack instance remains undetected.

The final step, inspired by mei2015security ; mei2015using , is to convert (9) into a single-level optimization problem via the KKT conditions boyd2004convex of the lower-level problem. With an appropriate norm constraint (any $\ell_p$ norm with $p \ge 1$ yields a convex feasible set) and a convex relaxation of the indicator functions (i.e., convex surrogates of the indicator functions), the lower-level problem of (9) is convex. A convex optimization problem that satisfies a constraint qualification (such as Slater's condition) can be equivalently represented by its KKT conditions burges1998tutorial . The single-level optimization problem then becomes:

| (10) | ||||

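where the objective is that of Problem (9), and the three remaining vectors of variables are Lagrange multipliers. One constraint function encodes the attacker’s budget constraint, another collects the equality constraints of the lower-level problem, and $\circ$ denotes the Hadamard (elementwise) product, which expresses complementary slackness between a multiplier vector and its constraints.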

3.2. Projected Gradient Descent

In this section we demonstrate how to solve the single-level optimization problem obtained above by projected gradient descent. The key technical challenge is that we do not have an explicit representation of the gradients with respect to the defender’s decision $\theta$, as these are only indirectly related through the optimal solution to the attacker’s optimization problem. We derive these gradients via the implicit function that the attacker's optimality conditions define between the optimal attack and $\theta$.

We begin by outlining the overall iterative projected gradient descent procedure. In iteration $t$, we update the parameters of the detection models by taking a projected gradient step:

| (11) | ||||

where $\Theta$ is the feasible domain of $\theta$ and $\eta$ is the learning rate. Given $\theta^{(t)}$, we solve for $x^*(\theta^{(t)})$, the optimal attack for the fixed $\theta^{(t)}$; the gradient in (11) is the gradient of the upper-level problem.
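The iteration in (11) alternates between re-solving the attacker's problem for the current parameters and taking a projected step on the defender's side. This sketch assumes the feasible set $\Theta$ is a box, as with the thresholds $\theta_i \in [0, 1]$ of Section 3.5, for which Euclidean projection is componentwise clipping; it is written as descent on a loss such as the negated defender utility. The callables `grad_loss` and `best_response` are hypothetical placeholders for the implicit-function gradient and the attacker's solver.

```python
import numpy as np
from typing import Callable


def project_box(theta: np.ndarray, lo: float = 0.0, hi: float = 1.0) -> np.ndarray:
    """Euclidean projection onto the box [lo, hi]^n (componentwise clipping)."""
    return np.clip(theta, lo, hi)


def projected_gradient(grad_loss: Callable, best_response: Callable, theta0,
                       lr: float = 0.1, n_iter: int = 200) -> np.ndarray:
    """Minimize a loss (e.g., the negated defender utility) over the box.

    best_response(theta) re-solves the lower-level (attacker) problem;
    grad_loss(theta, x_star) returns the total derivative at that response.
    """
    theta = project_box(np.asarray(theta0, dtype=float))
    for _ in range(n_iter):
        x_star = best_response(theta)                      # lower level, theta held fixed
        theta = project_box(theta - lr * grad_loss(theta, x_star))
    return theta
```

On a toy quadratic loss $\frac{1}{2}\|\theta - \tau\|^2$ with $\tau = (0.3, 1.7, -0.2)$, the iterates converge to the projection of $\tau$ onto the box, $(0.3, 1.0, 0.0)$.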

Expanding the gradient of the upper-level objective using the chain rule, and still using $s$ as the initially attacked node, we obtain

| (12) | ||||

In both terms of (12), we note that the derivatives of the detection indicators depend on the specific detection models. We give a concrete example of their derivation in Section 3.5.

The malicious term contains two quantities that are functions of $\theta$: the detection indicators and the optimal attack $x^*$. Consequently, its gradient can be expanded as:

| (13) | ||||

Note that only the detection models of the agents at layer $l$ contribute to the layer-$l$ term. Thus, the derivative of this term with respect to $\theta^l$ is a Jacobian matrix of dimension $n_l \times n_l$, where $n_l$ is the number of agents at layer $l$ and $\theta^l$ denotes the detection models of those agents. Since this Jacobian also depends on the specific detection models of the agents, we defer its derivation to Section 3.5.

The Jacobian matrix $\partial x^* / \partial \theta$ is the main difficulty, because we do not have an explicit expression for the attacker’s optimal decision $x^*$ as a function of $\theta$. Fortunately, the constraints in (10) implicitly define $x^*$ in terms of $\theta$:

| (14) | ||||

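and the attacked malicious instance $x^*$ (together with the Lagrange multipliers) satisfies this system. The Implicit Function Theorem zorichmathematical states that if the function defining the system is continuously differentiable and its Jacobian matrix with respect to $x^*$ and the multipliers

has full rank, then, locally, there is a unique implicit function mapping $\theta$ to $x^*$ and the multipliers. Moreover, its derivative is: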

| (15) | ||||

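Each factor in (15) is a Jacobian of the system in (14) with respect to the indicated variables (for example, with respect to $\theta$). The Jacobian $\partial x^* / \partial \theta$ consists of the first $d$ rows of the result, and its columns can be indexed by the nodes at layer $l$.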
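The implicit-function step can be checked numerically on a small problem whose lower-level optimality condition is a smooth system $g(x, \theta) = 0$. The toy below is an illustrative assumption, not the paper's KKT system: $x^*(\theta)$ minimizes $\frac{1}{2}x^\top Q x + \frac{1}{4}\sum_k x_k^4 - \theta^\top x$, so $g(x, \theta) = Qx + x^3 - \theta$ (cube taken elementwise), and the theorem gives $\partial x^*/\partial \theta = -(\partial g/\partial x)^{-1}\,\partial g/\partial \theta = (Q + \mathrm{diag}(3x^2))^{-1}$. In (15), $x$ would be replaced by the stacked vector of the attack and the Lagrange multipliers.

```python
import numpy as np

Q = np.array([[2.0, 0.5],
              [0.5, 1.0]])          # symmetric positive definite


def g(x, theta):
    """Stationarity condition of the toy lower-level problem."""
    return Q @ x + x**3 - theta


def solve_lower(theta, n_iter=50):
    """Newton's method on g(x, theta) = 0."""
    x = np.zeros_like(theta, dtype=float)
    for _ in range(n_iter):
        jac = Q + np.diag(3.0 * x**2)
        x = x - np.linalg.solve(jac, g(x, theta))
    return x


def implicit_jacobian(x, theta):
    """dx*/dtheta = -(dg/dx)^{-1} (dg/dtheta), evaluated at a solution x = x*(theta)."""
    dg_dx = Q + np.diag(3.0 * x**2)
    dg_dtheta = -np.eye(len(theta))
    return -np.linalg.solve(dg_dx, dg_dtheta)
```

Comparing `implicit_jacobian` against central finite differences of `solve_lower` is a cheap sanity check that the derivative is wired correctly before it is used inside the projected gradient loop.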

The gradient of the benign term can be expanded in the same way as that of the malicious term, except that the attacker does not modify benign content, so $x$ is no longer a function of $\theta$:

| (16) | ||||

The full projected gradient descent approach is given by Algorithm 1.

3.3. Optimal Attack

So far, we have assumed that the network node being attacked is fixed. However, the ultimate goal is to allow the attacker to choose both the node $s$ and the modification $x'$ of the malicious content $x$. We begin our generalization by allowing the attacker to optimize these jointly.

The full attacker algorithm which results is described in Algorithm 2.

Recall that the tree structure of a propagation depends on the agent being attacked, which makes the objective function of (5) a function of that agent. Thus, for a given fixed $\theta$, the attacker iterates through each agent $v$ and solves problem (5), assuming the propagation starts from $v$, resulting in an associated utility $u_v$ and an attacked instance $x'_v$. Then $v$, $u_v$, and $x'_v$ are appended to a list of 3-tuples (the sixth step in Algorithm 2). When the iteration completes, the attacker picks the optimal 3-tuple in terms of utility (the eighth step in Algorithm 2, where the function OptimalTuple(ret) finds the optimal 3-tuple in the list ret). The node and the corresponding attack instance in this optimal 3-tuple constitute the optimal attack.
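The enumeration in Algorithm 2 can be sketched in a few lines of Python. Here `solve_for_node` is a hypothetical callable that solves problem (5) with the propagation tree rooted at a given node (detectors held fixed) and returns the attacker's utility and attack instance; the tuple layout and the tie-breaking rule are our assumptions.

```python
from typing import Any, Callable, Hashable, Iterable, List, Tuple


def optimal_attack(nodes: Iterable[Hashable],
                   solve_for_node: Callable[[Hashable], Tuple[float, Any]]
                   ) -> Tuple[Hashable, Any, float]:
    """Enumerate start nodes, solve problem (5) for each, keep the best 3-tuple."""
    ret: List[Tuple[Hashable, float, Any]] = []
    for v in nodes:
        utility, x_attack = solve_for_node(v)   # tree rooted at v, detectors fixed
        ret.append((v, utility, x_attack))
    # Stand-in for OptimalTuple(ret): highest attacker utility. Ties go to the
    # earliest node, which is not necessarily the defender-favorable choice an SSE needs.
    v_best, u_best, x_best = max(ret, key=lambda tup: tup[1])
    return v_best, x_best, u_best
```

The cost is one lower-level solve per node, so the enumeration scales linearly with $|V|$ for a fixed $\theta$.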

3.4. SSE Heuristic Algorithm

Now we take the final step, relaxing the assumption that the attacker attacks a fixed node that is known to the defender prior to choosing $\theta$. Our main observation is that fixing $s$ in the defender’s algorithm above allows us to find a collection of heterogeneous detector parameters $\theta$, and that we can evaluate the actual utility of the associated defense (i.e., when the attacker optimally chooses both $s$ and $x'$ in response) by using Algorithm 2. We use this insight to devise a simple heuristic: iterate over all potential nodes $v$ that can be attacked, compute the associated defense $\theta^{(v)}$ (using the optimistic definition of the defender’s utility in which $v$ is assumed fixed), and then find the actual optimal attack in response to each $\theta^{(v)}$. Finally, choose the $\theta^{(v)}$ with the best actual defender utility.

This heuristic algorithm is described in Algorithm 3.

The fifth step in the algorithm includes the function DefenderUtility, which evaluates the defender’s utility $U_D$. Note that the input argument $s^*$ of this function is used to determine the tree structure of the propagation started from $s^*$.
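The heuristic of Algorithm 3 can be sketched as a loop over candidate nodes. All three callables are hypothetical placeholders: `defend_assuming(v)` stands for Algorithm 1 run with the attacked node fixed to $v$, `best_attack(theta)` for Algorithm 2, and `defender_utility(theta, s_star, x_star)` for DefenderUtility.

```python
from typing import Any, Callable, Hashable, Iterable, Tuple


def sse_heuristic(nodes: Iterable[Hashable],
                  defend_assuming: Callable[[Hashable], Any],
                  best_attack: Callable[[Any], Tuple[Hashable, Any]],
                  defender_utility: Callable[[Any, Hashable, Any], float]) -> Tuple[Any, float]:
    """Try one optimistic defense per candidate node; keep the best against a real best response."""
    best_theta, best_value = None, float("-inf")
    for v in nodes:
        theta = defend_assuming(v)              # Algorithm 1 with the attacked node fixed to v
        s_star, x_star = best_attack(theta)     # Algorithm 2: attacker re-optimizes node and content
        value = defender_utility(theta, s_star, x_star)
        if value > best_value:
            best_theta, best_value = theta, value
    return best_theta, best_value
```

The key design choice is that each candidate defense is scored against the attacker's actual best response, not against the node it was optimized for.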

Recall that Algorithm 1 solves (10), which depends on the specific detection model through the relevant gradients. Therefore, in what follows, we present a concrete example of how to solve (10) when the detection models are logistic regressions. Specifically, we illustrate how to derive the two derivative terms that depend on the particular details of the detection model.

3.5. Illustration: Logistic Regression

We consider the logistic regression model used for detection at individual nodes to illustrate the ideas developed above. For a node $i$, its detection model has two components: the logistic regression $p(x) = 1/(1 + e^{-w^\top x})$, where $w$ is the weight vector of the logistic regression and $x$ the instance propagated to $i$, and a detection threshold $\theta_i$ (the parameter the defender optimizes). An instance is classified as benign if $p(x) \le \theta_i$. Thus (slightly abusing notation as before), $\mathbb{I}(x, \theta_i) = 1$ ($x$ is classified as malicious) if $p(x) > \theta_i$.

With the specific forms of the detection models, we can derive the two detector-dependent derivative terms (omitting the node index for clarity). A technical challenge is that the indicator function $\mathbb{I}$ is neither continuous nor differentiable, which makes it difficult to characterize its derivative with respect to $\theta$. However, observe that for logistic regression $\mathbb{I}(x, \theta) = 1$ is equivalent to $p(x) - \theta > 0$. Therefore we use $p(x) - \theta$ as a surrogate function for $\mathbb{I}(x, \theta)$. Then the derivative of the surrogate with respect to $x$ is a $d$-dimensional vector whose $k$th element equals $p(x)(1 - p(x))\, w_k$. The corresponding vector then becomes:

| (17) |

and the remaining term becomes a diagonal matrix:

| (18) |
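The general idea of a differentiable surrogate can be illustrated as follows; the specific forms in (17) and (18) are not reproduced here, so this sketch rests on assumptions of our own. It uses the fact that, because the sigmoid is strictly increasing, sigmoid(w^T x) >= θ holds exactly when w^T x >= log(θ / (1 - θ)) for θ in (0, 1). It then relaxes this hard test with a sigmoid of a hypothetical temperature `tau` and differentiates with respect to the thresholds. Since each node's surrogate depends only on that node's threshold, the Jacobian with respect to the vector of thresholds is diagonal.

```python
import numpy as np


def surrogate_and_jacobian(logits, theta, tau=0.05):
    """Smooth stand-in for the per-node indicator 1[sigmoid(z_v) >= theta_v].

    logits: z_v = w^T x_v for the instance reaching node v.
    theta:  per-node thresholds, strictly inside (0, 1).
    tau:    hypothetical temperature; smaller values approach the hard indicator.
    Returns the surrogate vector and its Jacobian with respect to theta.
    """
    z = np.asarray(logits, dtype=float)
    th = np.asarray(theta, dtype=float)
    margin = z - np.log(th / (1.0 - th))        # sigmoid(z) >= th  <=>  margin >= 0
    s = 1.0 / (1.0 + np.exp(-margin / tau))     # smooth indicator with values in (0, 1)
    # Chain rule: d margin / d th = -1 / (th (1 - th)),  d s / d margin = s (1 - s) / tau.
    ds_dth = -s * (1.0 - s) / (tau * th * (1.0 - th))
    return s, np.diag(ds_dth)                   # s_v depends only on theta_v: diagonal
```

Smaller `tau` makes the surrogate closer to the true decision but its gradient sharper and more localized around the threshold, which is the usual trade-off with such relaxations.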

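With the earlier gradient equations (ending with (16)) and the two terms above, we can now calculate the gradient used in the update (11). Since each threshold lies in [0, 1], the defender's action space is the unit hypercube with one coordinate per node. When updating the thresholds by (11), we therefore project them back onto this set in each iteration.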
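Projecting onto the box of valid thresholds amounts to coordinate-wise clipping. A minimal sketch of one projected gradient-descent update follows; the fixed `step_size` is an assumption, since the step-size rule of (11) is not restated here.

```python
import numpy as np


def projected_gradient_step(theta, grad, step_size):
    """One projected gradient-descent update of the per-node thresholds.

    The Euclidean projection onto the box [0, 1]^n is coordinate-wise clipping.
    """
    theta_new = np.asarray(theta, dtype=float) - step_size * np.asarray(grad, dtype=float)
    return np.clip(theta_new, 0.0, 1.0)


updated = projected_gradient_step([0.5, 0.05, 0.95], [1.0, 1.0, -1.0], step_size=0.1)
```

Clipping is exact for a box constraint because the box is a product of intervals, so the projection decouples across coordinates.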

4. Experiments

In this section we experimentally evaluate our proposed approach. We used the Spam dataset from the UCI Machine Learning Repository [22] as the training data for the logistic regression model. The Spam dataset contains 4601 emails, each represented by a 57-dimensional feature vector. We divided the dataset into three disjoint subsets: a training set, used to learn the logistic regression (fitting the weight vectors with the thresholds held fixed) as well as the other models to which we compare; a defense set, used in Algorithm 3 to find the optimal defense strategy; and a test set, used to evaluate the performance of the defense strategy. These subsets contain 3681, 460, and 460 emails, respectively, and are all randomly sampled from the full dataset.
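A random three-way split with the sizes reported above can be produced from a single permutation of the example indices. A minimal sketch; the seed is hypothetical.

```python
import numpy as np


def split_indices(n_total=4601, sizes=(3681, 460, 460), seed=0):
    """Randomly partition range(n_total) into disjoint index sets of the given sizes."""
    if sum(sizes) != n_total:
        raise ValueError("subset sizes must add up to n_total")
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_total)
    cut_points = np.cumsum(sizes)[:-1]           # boundaries between consecutive subsets
    return np.split(perm, cut_points)            # [training, defense, test]


train_idx, defense_idx, test_idx = split_indices()
print(len(train_idx), len(defense_idx), len(test_idx))  # 3681 460 460
```

Slicing one permutation guarantees disjointness by construction, rather than relying on repeated sampling without replacement.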

Our experiments were conducted on two types of synthetic networks with 64 nodes: Barabási-Albert preferential attachment networks (BA) [2] and Watts-Strogatz networks (Small-World) [30]. BA networks are characterized by a power-law degree distribution, in which connectivity is heavily skewed towards a few high-degree nodes. The power-law degree distribution, $P(k) \propto k^{-\gamma}$, gives the probability that a randomly selected node has $k$ neighbors. The degree distributions of many real-world social networks have previously been shown to be reasonably well approximated by a power law [3]. Our experiments for BA were conducted with two sets of parameters.
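Preferential attachment and the empirical degree distribution $P(k)$ can be illustrated with a small pure-Python sketch of BA growth. The attachment parameter `m = 2` and the seed are hypothetical (the parameter values used in the experiments are not given here), and this is one standard variant of the BA process in which the initial nodes start isolated, not necessarily the generator used in the paper.

```python
import random
from collections import Counter
from typing import Dict, Set


def barabasi_albert(n: int, m: int, seed=None) -> Dict[int, Set[int]]:
    """Grow an undirected preferential-attachment graph; each new node adds m edges."""
    rng = random.Random(seed)
    adj: Dict[int, Set[int]] = {v: set() for v in range(n)}
    targets = list(range(m))   # the first new node links to the m initial nodes
    repeated = []              # node v appears here once per edge incident to v
    for new in range(m, n):
        for t in targets:
            adj[new].add(t)
            adj[t].add(new)
        repeated.extend(targets)
        repeated.extend([new] * m)
        # Draw from `repeated` (each draw is proportional to degree) until m distinct nodes.
        chosen: Set[int] = set()
        while len(chosen) < m:
            chosen.add(rng.choice(repeated))
        targets = list(chosen)
    return adj


def degree_distribution(adj: Dict[int, Set[int]]) -> Dict[int, float]:
    """Empirical P(k): the fraction of nodes with exactly k neighbors."""
    counts = Counter(len(nbrs) for nbrs in adj.values())
    return {k: c / len(adj) for k, c in sorted(counts.items())}


graph = barabasi_albert(64, 2, seed=1)
print(degree_distribution(graph))
```

With only 64 nodes the empirical distribution is noisy, but the few hub nodes with many neighbors are usually visible, which is the degree heterogeneity the BA experiments rely on.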

The Small-World topology is well known for balancing short path lengths between pairs of nodes with local clustering in a way that qualitatively resembles real networks [27]. In our experiments we consider two kinds of Small-World networks, each specified by its average shortest path length and its local clustering coefficient. In the first, the local clustering coefficient is close to what has been observed in large-scale Facebook friendship networks [27]. In the second, the local clustering coefficient is close to that of the electric power grid of the western United States [30].
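Both statistics used to characterize the Small-World networks, the average shortest path length and the (node-averaged) local clustering coefficient, can be computed with breadth-first search and neighbor counting. The sketch below pairs them with a basic Watts-Strogatz generator; the lattice degree `k`, rewiring probability `p`, and seed are hypothetical, since the generator settings are not stated here. With `p = 0` the graph is a ring lattice whose clustering coefficient is 3(k - 2) / (4(k - 1)), which gives a simple sanity check.

```python
import random
from collections import deque
from typing import Dict, Set

Graph = Dict[int, Set[int]]


def watts_strogatz(n: int, k: int, p: float, seed=None) -> Graph:
    """Ring lattice where each node links to its k nearest neighbors (k even), then each
    lattice edge is rewired to a uniformly random new endpoint with probability p."""
    rng = random.Random(seed)
    adj: Graph = {v: set() for v in range(n)}
    for v in range(n):
        for j in range(1, k // 2 + 1):
            u = (v + j) % n
            adj[v].add(u)
            adj[u].add(v)
    for v in range(n):
        for j in range(1, k // 2 + 1):
            u = (v + j) % n
            if u in adj[v] and rng.random() < p:
                candidates = [w for w in range(n) if w != v and w not in adj[v]]
                if candidates:
                    w = rng.choice(candidates)
                    adj[v].discard(u)
                    adj[u].discard(v)
                    adj[v].add(w)
                    adj[w].add(v)
    return adj


def average_clustering(adj: Graph) -> float:
    """Mean local clustering coefficient; nodes with fewer than two neighbors count as 0."""
    total = 0.0
    for v, nbrs in adj.items():
        d = len(nbrs)
        if d < 2:
            continue
        links = sum(1 for a in nbrs for b in nbrs if a < b and b in adj[a])
        total += 2.0 * links / (d * (d - 1))
    return total / len(adj)


def average_shortest_path(adj: Graph) -> float:
    """Mean hop distance over all ordered pairs of distinct nodes (graph must be connected)."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            for u in adj[v]:
                if u not in dist:
                    dist[u] = dist[v] + 1
                    queue.append(u)
        if len(dist) != len(adj):
            raise ValueError("graph is disconnected")
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


lattice = watts_strogatz(64, 4, 0.0)
print(average_clustering(lattice), average_shortest_path(lattice))
```

Increasing `p` from zero shortens paths quickly while clustering decays more slowly; tuning `k` and `p` is one way to hit target values of the two statistics.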

Our node-level detectors use logistic regression, with our algorithm producing the threshold for each of them. The trade-off parameter and the time window were held fixed across all experiments. We applied standard pre-processing to scale each feature to lie between zero and one. The attacker's budget is measured by a squared norm, and the budget limit is varied across a range of values. We compare our strategy with three others based on traditional approaches: Baseline, Re-training, and Personalized-single-threshold; we describe these next.
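The pre-processing and the budget constraint can be sketched as follows. We assume min-max scaling with statistics computed on the training data, and we assume the budget is the squared Euclidean (l2) distance between an original instance and its modified version, since the norm is not named here. The helper that shrinks an over-budget modification back onto the budget ball is our own illustration, not part of the paper's attack model.

```python
import numpy as np


def minmax_scale(X_fit: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Map each feature of X into [0, 1] using per-feature min/max computed on X_fit."""
    lo, hi = X_fit.min(axis=0), X_fit.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)      # guard against constant features
    return np.clip((X - lo) / span, 0.0, 1.0)   # clip unseen values to the unit range


def squared_l2_cost(x: np.ndarray, z: np.ndarray) -> float:
    """Assumed budget measure: squared Euclidean distance between original x and modified z."""
    return float(np.sum((z - x) ** 2))


def project_to_budget(x: np.ndarray, z: np.ndarray, budget: float) -> np.ndarray:
    """Shrink the modification z - x so that its squared l2 norm is at most `budget`."""
    delta = z - x
    norm = np.linalg.norm(delta)
    radius = np.sqrt(budget)
    if norm <= radius:
        return z
    return x + delta * (radius / norm)
```

A budget of zero forces the modified instance to equal the original, so under this assumed cost the attacker can then only choose where the attack starts.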

Baseline: This is the typical approach, which simply learns a logistic regression on the training data, sets all thresholds to the same fixed value, and deploys this model at all nodes.

Re-training: The idea of re-training, common in adversarial classification, is to iteratively augment the original training data with attacked instances, re-training the logistic regression each time, until convergence [4, 21]. The logistic regressions deployed at the nodes are homogeneous, with all thresholds set to the same fixed value.
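A generic re-training loop can be sketched as below; it is not the specific procedure of [4, 21]. The callables `fit` (a learner) and `evade` (an evasion attack against the current model) are hypothetical, instances are tuples so that duplicates can be detected, and `max_rounds` is an assumed safeguard. Convergence is declared when the attack produces no new evasive instances.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Instance = Tuple[float, ...]


def retrain(
    X: Sequence[Instance],
    y: Sequence[int],
    fit: Callable[[List[Instance], List[int]], object],
    evade: Callable[[object, Instance], Optional[Instance]],
    max_rounds: int = 50,
):
    """Generic re-training loop (label 1 = malicious, 0 = benign)."""
    X_aug, y_aug = list(X), list(y)
    originals = [x for x, label in zip(X_aug, y_aug) if label == 1]
    model = fit(X_aug, y_aug)
    for _ in range(max_rounds):
        seen = set(X_aug)
        new: List[Instance] = []
        for x in originals:
            z = evade(model, x)              # None: no evasion within the attacker's budget
            if z is not None and z not in seen:
                seen.add(z)
                new.append(z)
        if not new:                          # no new attacked instances: converged
            break
        X_aug.extend(new)
        y_aug.extend([1] * len(new))         # evasive instances keep the malicious label
        model = fit(X_aug, y_aug)
    return model, X_aug, y_aug


def fit_threshold(X, y):
    """Toy learner: flag x as malicious when x[0] >= the smallest malicious feature value."""
    return min(x[0] for x, label in zip(X, y) if label == 1)


def evade_threshold(t, x):
    """Toy attack: move just below the threshold if that costs at most 2 units."""
    target = t - 1.0
    return (target,) if x[0] - target <= 2.0 else None


model, X_aug, y_aug = retrain([(0.0,), (5.0,), (6.0,)], [0, 1, 1], fit_threshold, evade_threshold)
print(model)  # 3.0
```

In the toy run the threshold moves down until the attacker's budget no longer suffices to cross it; note that the resulting detector is the same at every node, which is the homogeneity the comparison targets.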

Personalized-single-threshold: This strategy is only allowed to tune a single node's threshold. It has access to a dataset of unattacked emails. The strategy iterates through each node and finds that node's optimal threshold, where optimality is measured by the defender's utility as defined in (2) and the expected influence of an instance is estimated by simulating 1000 propagations from the initial node. The strategy then picks the node with the largest utility and sets its threshold to the corresponding optimal value.
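The selection rule of this baseline can be sketched as follows. The finite grid of candidate thresholds and the `utility(node, threshold)` callable (a hypothetical stand-in for the simulation-based estimate of the defender's utility in (2) when only that node's threshold is changed) are assumptions; the search method for each node's optimal threshold is not stated here.

```python
from typing import Callable, Hashable, Iterable, Sequence, Tuple


def personalized_single_threshold(
    nodes: Iterable[Hashable],
    grid: Sequence[float],
    utility: Callable[[Hashable, float], float],
) -> Tuple[Hashable, float, float]:
    """Tune one node's threshold: return (node, threshold, utility) with the largest utility."""
    best = None
    for v in nodes:
        # Best threshold for node v when every other node keeps its default threshold.
        t_v = max(grid, key=lambda t: utility(v, t))
        u_v = utility(v, t_v)
        if best is None or u_v > best[2]:
            best = (v, t_v, u_v)
    if best is None:
        raise ValueError("no nodes supplied")
    return best


# Toy utility (made up): peaks at threshold 0.3 and favors higher-numbered nodes.
print(personalized_single_threshold([0, 1, 2], [0.1, 0.3, 0.5],
                                    lambda v, t: -(t - 0.3) ** 2 + 0.1 * v))  # (2, 0.3, 0.2)
```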

As stated earlier, the network topology and the parameter vectors associated with edges are assumed to be known by both the defender and the attacker. The attacker has full knowledge of the defense strategy, including the weight vectors of the logistic regressions as well as their thresholds. As in the definition of a Stackelberg game, the evaluation procedure lets the defender first choose its strategy, after which the attacker computes its best response, choosing the initial node for the attack and the transformations of malicious content aimed at evading the classifiers. Finally, the defender's utility is calculated by (2), where the expected influence is estimated by simulating 1000 propagations originating from the chosen initial node for each malicious instance.
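The simulation-based estimate of expected influence can be sketched as follows. The paper's diffusion model is content-dependent and defined in earlier sections; purely for illustration, this sketch substitutes a discrete independent-cascade process with fixed edge probabilities, and it assumes that a node whose detector flags the instance neither counts as accepting it nor forwards it. `edge_prob`, `detects`, and the seed are hypothetical; `n_sims=1000` matches the number of simulated propagations reported above.

```python
import random
from typing import Callable, Dict, Hashable


def estimate_influence(
    edge_prob: Dict[Hashable, Dict[Hashable, float]],
    source: Hashable,
    detects: Callable[[Hashable], bool],
    n_sims: int = 1000,
    seed: int = 0,
) -> float:
    """Average number of nodes that accept (do not block) an instance started at `source`."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_sims):
        if detects(source):
            continue                           # blocked at the initial node
        seen = {source}
        frontier = [source]
        accepted = 1
        while frontier:
            next_frontier = []
            for u in frontier:
                for v, p in edge_prob.get(u, {}).items():
                    # Each newly activated node gets one transmission attempt per out-edge.
                    if v in seen or rng.random() >= p:
                        continue
                    seen.add(v)
                    if not detects(v):         # undetected: v accepts and forwards it
                        accepted += 1
                        next_frontier.append(v)
            frontier = next_frontier
        total += accepted
    return total / n_sims
```

Blocking at a detecting node also cuts off everything downstream of it, which is why detector placement relative to the propagation tree matters in this setting.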

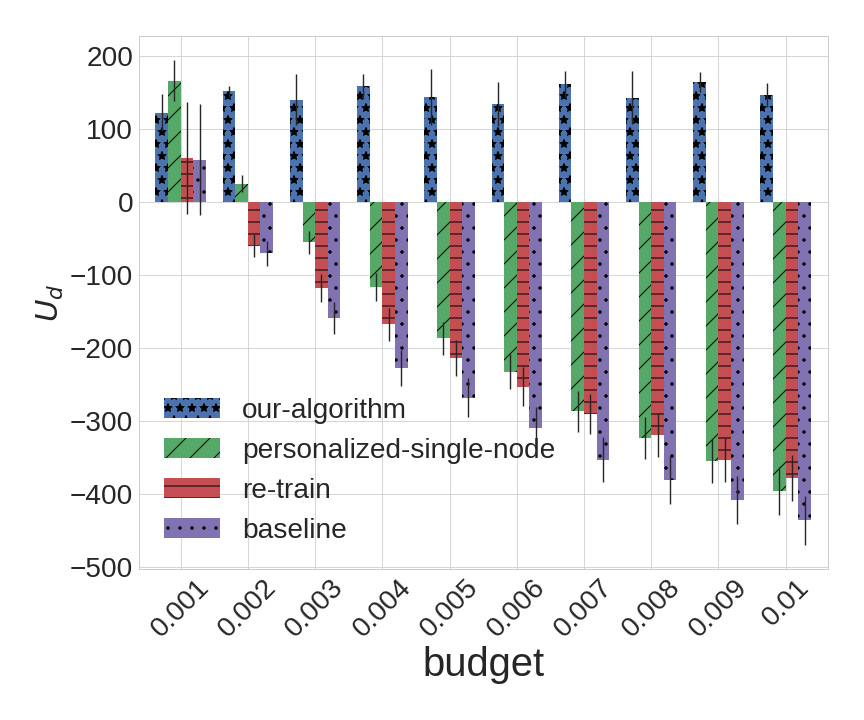

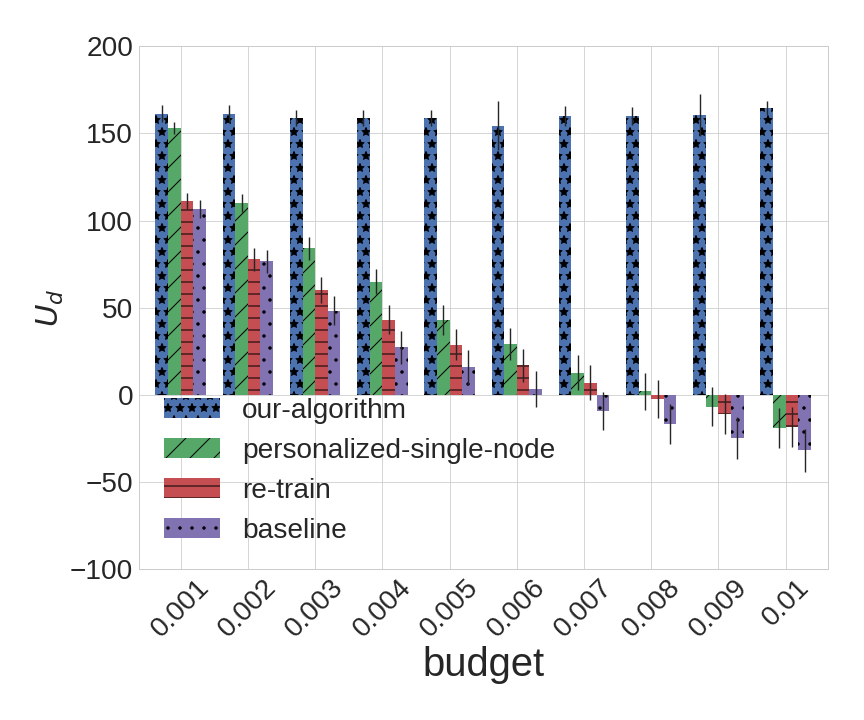

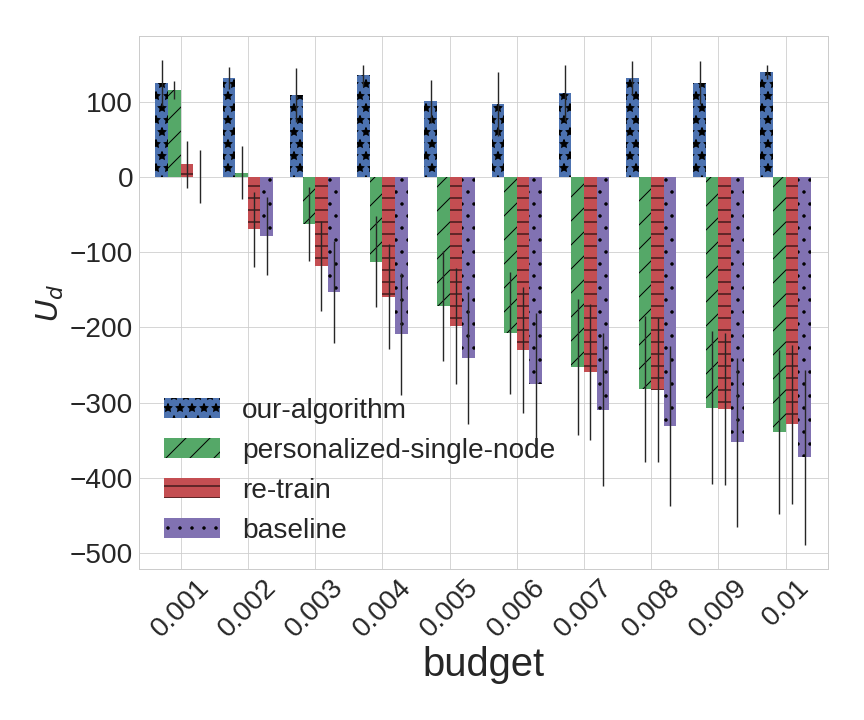

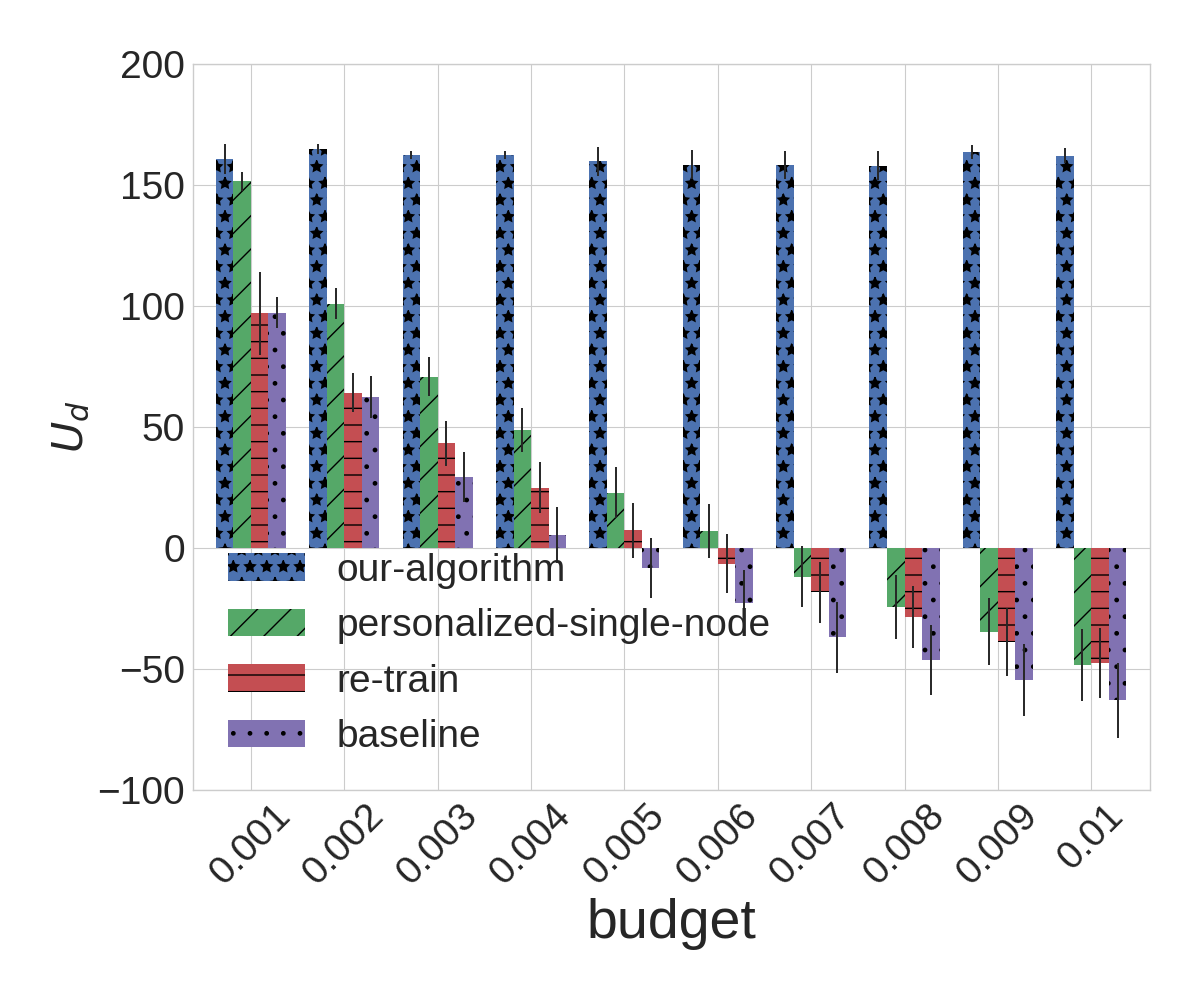

The experimental results for one BA parameter setting and one Small-World configuration are shown in Figure 4, and the results for the other BA parameter setting and the other Small-World configuration are shown in Figure 5.


As we can observe from the experiments, our algorithm outperforms all of the alternatives in nearly every instance; the sole exception is when the attacker budget is zero, which effectively eliminates the adversarial component from learning. For larger budgets, our algorithm remains remarkably robust even as the other algorithms perform quite poorly, so that at higher budgets there is a rather dramatic gap between our approach and all alternatives. Not surprisingly, the most dramatic differences are observed in the BA topology: given the large variance in the degrees of different nodes, our heterogeneous detection is particularly valuable in this setting. In contrast, the degradation of the other methods on Small-World topologies is less dramatic, although the improvement offered by the proposed approach is still quite pronounced. Among the alternatives, it is also revealing that personalizing thresholds yields the second-best performance, which again shows that taking network topology into account is crucial. Somewhat surprisingly, it often outperforms re-training, which explicitly accounts for adversarial evasion but not for network topology.

5. Conclusion

We address the problem of adversarial detection of malicious content spreading through social networks. Traditional approaches use either a homogeneous detector or a personalized filtering approach. Both ignore (and thus fail to exploit knowledge of) the network topology, and most filtering approaches in prior literature ignore the presence of an adversary. We present a combination of modeling and algorithmic advances to address this problem systematically. On the modeling side, we extend diffusion modeling to allow for dependence on the content propagating through the network, model the attacker as choosing both the malicious content and the initial target in the social network, and allow the defender to choose heterogeneous detectors over the network to block malicious content while allowing benign diffusion. On the algorithmic side, we solve the resulting Stackelberg game by first representing it as a bilevel program, then collapsing this program into a single-level program by exploiting the problem structure and applying KKT conditions, and finally deriving a projected gradient descent algorithm using explicit and implicit gradient information. Our experiments show that our approach dramatically outperforms homogeneous classification, adversarial learning, and heterogeneous but non-adversarial alternatives.

6. Acknowledgements

This work was supported in part by the National Science Foundation (CNS-1640624, IIS-1649972, and IIS-1526860), the Office of Naval Research (N00014-15-1-2621), and the Army Research Office (W911NF-16-1-0069).

References

- [1] H. Allcott and M. Gentzkow. Social media and fake news in the 2016 election. Technical report, National Bureau of Economic Research, 2017.

- [2] A.-L. Barabási and R. Albert. Emergence of scaling in random networks. Science, 286(5439):509–512, 1999.

- [3] A.-L. Barabási, H. Jeong, Z. Néda, E. Ravasz, A. Schubert, and T. Vicsek. Evolution of the social network of scientific collaborations. Physica A: Statistical mechanics and its applications, 311(3):590–614, 2002.

- [4] M. Barreno, B. Nelson, R. Sears, A. D. Joseph, and J. D. Tygar. Can machine learning be secure? In Proceedings of the 2006 ACM Symposium on Information, computer and communications security, pages 16–25. ACM, 2006.

- [5] S. Boyd and L. Vandenberghe. Convex optimization. Cambridge University Press, 2004.

- [6] M. Brückner and T. Scheffer. Stackelberg games for adversarial prediction problems. In Proceedings of the 17th ACM SIGKDD, pages 547–555. ACM, 2011.

- [7] C. Budak, D. Agrawal, and A. El Abbadi. Limiting the spread of misinformation in social networks. In Proceedings of the 20th international conference on World wide web, pages 665–674. ACM, 2011.

- [8] C. J. Burges. A tutorial on support vector machines for pattern recognition. Data mining and knowledge discovery, 2(2):121–167, 1998.

- [9] J. Cheng, C. Danescu-Niculescu-Mizil, and J. Leskovec. Antisocial behavior in online discussion communities. In International Conference on Weblogs and Social Media, pages 61–70, 2015.

- [10] B. Colson, P. Marcotte, and G. Savard. An overview of bilevel optimization. Annals of operations research, 153(1):235–256, 2007.

- [11] G. V. Cormack et al. Email spam filtering: A systematic review. Foundations and Trends® in Information Retrieval, 1(4):335–455, 2008.

- [12] N. Dalvi, P. Domingos, S. Sanghai, D. Verma, et al. Adversarial classification. In Proceedings of the tenth ACM SIGKDD, pages 99–108. ACM, 2004.

- [13] N. Du, L. Song, M. G. Rodriguez, and H. Zha. Scalable influence estimation in continuous-time diffusion networks. In Advances in neural information processing systems, pages 3147–3155, 2013.

- [14] N. Du, L. Song, H. Woo, and H. Zha. Uncover topic-sensitive information diffusion networks. In Artificial Intelligence and Statistics, pages 229–237, 2013.

- [15] N. Du, L. Song, M. Yuan, and A. J. Smola. Learning networks of heterogeneous influence. In Advances in Neural Information Processing Systems, pages 2780–2788, 2012.

- [16] M. Gomez-Rodriguez and B. Schölkopf. Influence maximization in continuous time diffusion networks. In Proceedings of the 29th International Conference on Machine Learning, pages 579–586. Omnipress, 2012.

- [17] J. Holcomb, J. Gottfried, and A. Mitchell. News use across social media platforms. Pew Research Journalism Project, 2013.

- [18] D. Kempe, J. Kleinberg, and É. Tardos. Maximizing the spread of influence through a social network. In Proceedings of the ninth ACM SIGKDD, pages 137–146. ACM, 2003.

- [19] A. Laszka, Y. Vorobeychik, and X. Koutsoukos. Optimal personalized filtering against spear-phishing attacks. In AAAI Conference on Artificial Intelligence, 2015.

- [20] B. Li and Y. Vorobeychik. Feature cross-substitution in adversarial classification. In Advances in neural information processing systems, pages 2087–2095, 2014.

- [21] B. Li, Y. Vorobeychik, and X. Chen. A general retraining framework for scalable adversarial classification. arXiv preprint arXiv:1604.02606, 2016.

- [22] M. Lichman. UCI machine learning repository, 2013.

- [23] D. Lowd and C. Meek. Adversarial learning. In Proceedings of the eleventh ACM SIGKDD, pages 641–647. ACM, 2005.

- [24] S. Mei and X. Zhu. The security of latent dirichlet allocation. In Artificial Intelligence and Statistics, pages 681–689, 2015.

- [25] S. Mei and X. Zhu. Using machine teaching to identify optimal training-set attacks on machine learners. In AAAI Conference on Artificial Intelligence, pages 2871–2877, 2015.

- [26] J. Tsai, T. H. Nguyen, and M. Tambe. Security games for controlling contagion. In AAAI Conference on Artificial Intelligence, 2012.

- [27] J. Ugander, B. Karrer, L. Backstrom, and C. Marlow. The anatomy of the facebook social graph. arXiv preprint arXiv:1111.4503, 2011.

- [28] Y. Vorobeychik and J. Letchford. Securing interdependent assets. Autonomous Agents and Multi-Agent Systems, 29(2):305–333, 2015.

- [29] J. Wallinga and P. Teunis. Different epidemic curves for severe acute respiratory syndrome reveal similar impacts of control measures. American Journal of Epidemiology, 160(6):509–516, 2004.

- [30] D. J. Watts and S. H. Strogatz. Collective dynamics of small-world networks. Nature, 393(6684):440–442, 1998.

- [31] V. A. Zorich and R. Cooke. Mathematical analysis I. Springer, 2004.