Adversarial for Social Privacy: A Poisoning Strategy to Degrade User Identity Linkage

Abstract

Privacy issues on social networks have been extensively discussed in recent years. The user identity linkage (UIL) task, aiming at finding corresponding users across different social networks, would be a threat to privacy if unethically applied. The sensitive user information might be detected through connected identities. A promising and novel solution to this issue is to design an adversarial strategy to degrade the matching performance of UIL models. However, most existing adversarial attacks on graphs are designed for models working in a single network, while UIL is a cross-network learning task. Meanwhile, privacy protection against UIL works unilaterally in real-world scenarios, i.e., the service provider can only add perturbations to its own network to protect its users from being linked. To tackle these challenges, this paper proposes a novel adversarial attack strategy that poisons one target network to prevent its nodes from being linked to other networks by UIL algorithms. Specifically, we reformalize the UIL problem in the perspective of kernelized topology consistency and convert the attack objective to maximizing the structural changes within the target network before and after attacks. A novel graph kernel is then defined with Earth mover’s distance (EMD) on the edge-embedding space. In terms of efficiency, a fast attack strategy is proposed by greedy searching and replacing EMD with its lower bound. Results on three real-world datasets indicate that the proposed attacks can best fool a wide range of UIL models and reach a balance between attack effectiveness and imperceptibility.

Index Terms:

social privacy, user identity linkage, social network, adversarial attack, graph kernelI Introduction

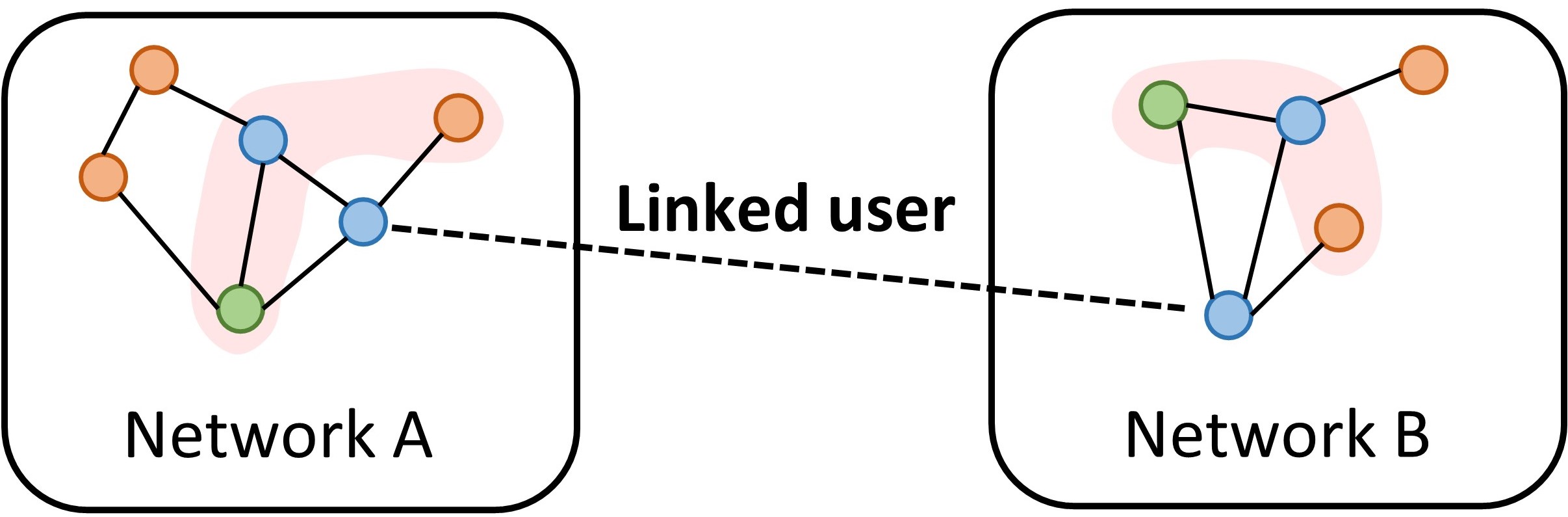

The privacy concern has attracted great attention on social networking services recently [1, 2]. The sensitive user information may be unintentionally divulged by user identity linkage (UIL) [3, 4, 5] which aims to link socialized user data across different social networks. Associated with cross-network linkages, user properties can be accurately aligned [6] and thus privacy data, including family, job, age, email, etc., can be easily revealed. Therefore, the lack of management on UIL would hold a candle to the devil.

To combat the user privacy leakage problem led by malicious usage of UIL, the key is to degrade matching performance of UIL models when applied in practice. According to most literature, social relationships play a pivotal role in exploring corresponding user identities across networks [6, 7, 8, 9, 10]. Typically, users who share similar neighborhoods in different graphs could be recognized as matched ones, which is known as topology consistency [10, 11] (shown in Fig. 1). Thus, disturbing topology consistency can lead to performance degradation on a series of UIL models relied on structures.

Previous studies [12] on adversarial attacks have shown that imposing slight perturbations in graph structure can drastically worsen the performance of graph models, e.g., node classification [13, 14, 15], link prediction [16, 17], and graph classification [18]. However, these adversarial attacks are constrained to fool learning models in a single network while UIL needs to consider multiple networks. To degrade matching performance across networks, GMA [19] adopts a perturbation method calculated by node density estimation on two graphs, aiming to increase the possibility of deriving wrong matching. Although perturbations on two graphs take effects on degrading UIL models, it is still hard to be generalized into real applications. This is because the authority of perturbations for privacy protection can hardly be extended to multiple networks in practice.

To tackle these challenges, we propose a novel poisoning strategy named TOAK (Topology-Oriented Attack via Kernel method) to attack a wide range of UIL models via removing edges in a single network. The key idea of TOAK is to vastly break the topology consistency so that UIL models cannot identify similar users on different social networks. We first reformalize UIL problem by graph kernels [20, 21], which measures similarity between two structural objects by kernel functions defined in reproducing kernel Hilbert space (RKHS). Based on kernel-based modeling methods, we prove an equivalent attack objective on minimizing kernel function values between clean and poisoned target network (i.e., maximizing the structural changes in the target network). A novel kernel based on earth mover’s distance (EMD) [22] in edge-embedding space is also proposed. Extensive experimental results on real datasets demonstrate the effectiveness of our proposed strategy in preventing user privacy leakage via UIL.

The main contributions of this paper are listed as follows:

-

•

Problem formulation. We reformulate UIL problem on attribute graph with a unified Kernel-based Framework and a closed-form solution is finally obtained. It provides new insight on how to efficiently break topology consistency so as to worsen performances of UIL models.

-

•

Novel poisoning strategy. With the new perspective on UIL, we propose the TOAK method to attack common UIL models. TOAK converts the attack objective to minimize kernel function values within the clean and poisoned target network. Meanwhile, a novel graph kernel is proposed based on EMD [22] among edge embedding distributions that are obtained via variational graph autoencoder (VGAE) [23] and the random walk process.

-

•

Optimized computation. We utilize a greedy strategy to remove edges to avoid repeated calculation. Furthermore, a lower bound is used to approximate EMD in the attacking objective, which reduces the complexity from to . The optimized method is more time-saving.

-

•

Performance. We conduct extensive experiments to validate the performance of proposed methods. The experimental results demonstrate that (1) Transferability. TOAK can be broadly applied to attack typical unsupervised and supervised UIL models; (2) Attack performance. Guided by proposed attacks, the quality of UIL models shows a higher mismatching rate than the attacks from other state-of-the-art attack models. Meanwhile, the proposed attack model still achieves a good balance between effectiveness and imperceptibility than compared methods; (3) Efficiency. The proposed acceleration strategy leads to more than 10 speed-up while receiving almost similar attack performance compared with the exact unaccelerated version.

II Related Works

II-A Network Alignment

Network alignment aims to search related nodes across different networks and has been widely applied in diverse research fields [6], including chemistry [24], bioinformatics [25], knowledge graph [6], and social computing [3]. In this paper, attentions are paid to network alignment models in social fields. Sometimes, it is also known as user identity linkage [26] or anchor link prediction [3].

Most previous works utilize the “topology consistency” in such kind of matching issues. Inspired by PageRank [27], IsoRank [25] and FINAL [10] algorithm iteratively propagate the pairwise node similarity in graph. Additionally, BigAlign [28] uses the alternating projected gradient descent to solve the bipartite network alignment. Then, with the development of graph representation, topology consistency can be also addressed by specific embedding. PALE [8] and IONE [7] learn cross-network mapping via embedding that captures the specific structural regularities. DeepLink [9] uses embeddings inspired by word embedding technologies. Based on heterogeneous networks, TALP [29] design a type-aware embedding method to better model matching objectives.

II-B Adversarial Attack on Graph

Generally, graph adversarial attack methods can be divided into three categories according to specific tasks, i.e., node-relevant, link-relevant, and graph-relevant tasks. Node-relevant models focus on node-level tasks such as node classification and node embedding. Zügner et al. [13] first propose NETTACK to fool the node classification models. RL-S2V [14] utilizes a reinforcement learning method to learn the attack policy. Additionally, Bojchevski et al. [30] analyze adversarial vulnerability by random walks and propose approaches to worsen the quality of node embeddings. Most link-relevant models attack link prediction tasks. Zhou et al. [16] try to minimize the similarity metrics to lower the accuracy of link prediction. FPTA [17] designs heuristic rules to attack target models. Moreover, graph-relevant models concentrate on graph-level tasks such as graph classification. Xi et al. [18] propose the backdoor attack on graphs to worsen the model.

However, most attack models concentrate on single network tasks while UIL is related to multiple networks, thus existing models may be invalid in degrading the performance of UIL. Although the work like GMA [19] can perform its effectiveness on graph matching, the requirement on cooperative perturbations cross networks limits the real application on privacy protection.

III Notations and Preliminaries

This section introduces basic concepts used in the paper.

III-A Notations

In this paper, a graph is defined as a tuple , where and denote the set of nodes and edges respectively. Symbol denotes a node and represents the edge with node and as its endpoints. Let and denote the total number of nodes and edges. Each node has -dimensional attributes which are represented by a row in . The adjacency matrix of is represented by .

For a node , the -hop neighborhood of contains nodes at a distance less than or equal to from . The -ego network of node is a subgraph of induced by the -hop neighborhood of and itself, and is denoted by .

III-B Graph Kernel

Kernel methods are commonly used to measure similarity between two objects with a kernel function which corresponds to an inner product in reproducing kernel Hilbert space (RKHS). Kernels on graphs are generally defined on R-Convolution framework [31]. The main idea of R-Convolution is to decompose a graph into sub-structures and kernel value is a combination of sub-structure similarities. In this way, the kernel defined on graphs is given by:

where are decompositions of graph , denotes the relation from sub-structures to graph , and is a set of sub-structures in graph . The is a general kernel and satisfies:

Here, function maps a vector to a high dimensional space, and denotes the inner product.

III-C Earth Mover’s Distance

Let be a -dimensional compact set and denotes space of probability measures defined on . Earth mover’s distance (EMD) defines the discrepancy between two distribution :

where is the set of all joint distributions whose marginals are and . The can be intuitively interpreted as “mass” transportation from to so that EMD is the minimum cost for transforming one distribution into another distribution . The determines transportation cost from to , which is generally derived from distance measures. The discrete form of EMD is as follows:

III-D Variational Graph Autoencoder

Variational graph auto-encoder (VGAE) [23] is a commonly used network embedding framework, which encodes both graph structures and node attributes into the embedding. Given adjacency matrix and attribute for a graph, the encoder part of VGAE computes latent variables and with GCNs [32]: , , and latent embedding is given by a normal distribution :

The decoder part reconstructs the graph via inner product of latent embeddings. In this paper, we use VGAE to generate node embeddings that combine structural and attribute features.

IV Attacks on UIL Models

IV-A Problem Formulation

Given source network and target network . Let represents the prior knowledge matrix. The goal of UIL algorithm is to find the matching matrix:

The matrix has size and the entry reflect the probability that node (in source networ) and node (in target network) are linked. Typically, matrix denotes labeled data for supervised model. Otherwise, can also be pairwise node similarity. If no prior knowledge is known, all entries of are set to 0.

In this paper, the goal of adversarial attacks on UIL algorithms is to remove a set of edges to maximize changes in matching matrix. Let denotes the matching matrix between source network and poisoned target network , the objective is:

where is an allowed threshold of removed edges. Here, we only consider the removing edge attack because graphs in real applications are usually sparse, thus the solution spaces are relatively small compared with other kinds of attack. Meanwhile, removing edge is easy to implement on social platforms.

IV-B Unified Kernel-based Framework on UIL

Previous studies on UIL have reached a consensus [10, 11] that, among different networks, nodes that have similar local structures are more likely to be linked, which is known as the “topology consistency”. Accordingly, instead of assuming one specific UIL algorithm as the threat model, we propose a unified framework to re-formalize the UIL problem based on the widely used topology consistency, which is conducive to devising an effective and universal strategy to degrade diverse UIL models.

The local structure of a node can be well captured by the -ego network of and the similarity across different structures is measured by graph kernels. Thus, consistency between node in graph and node in graph can be measured as: . The UIL task can be uniformly expressed as the following form:

| (1) |

The above equation means nodes with higher topology consistency should have a higher probability to be aligned and, at the same time, the final alignment matrix needs to be consistent with prior knowledge matrix . Here, is a hyperparameter that controls the importance of prior knowledge.

Let denote the Kernel Matrix and . Eq (1) can be written in the matrix form as follows:

The closed-form solution is obtained by setting its derivative to be zero:

and finally we can get

| (2) |

Eq. (2) gives a unified view of UIL tasks based on the topology consistency. With such a perspective, the matching matrix is a linear combination of the kernel matrix and the prior knowledge matrix. Although most existing UIL models do not use kernel-based modeling explicitly, the pairwise similarity comparison in them is closely related to kernel methods. For example, the matching results of FINAL [10] are relevant to random walk kernels and the similarity comparison in DeepLink [9] can be viewed as a variant of path-based kernels.

Next, we will display our attack strategy based on the proposed unified kernel-based framework on UIL.

IV-C Topology-oriented Attack via Kernel Method (TOAK)

With the unified kernel-based framework on UIL, the matching matrix between source network and poisoned target network is calculated as:

| (3) |

where denote the Kernel Matrix for source network and poisoned target network so that . Integrating Eq (2) and Eq (3), the original objective of the attack problem can be transformed into the following form:

| (4) |

Here, -ego network changes as the removing edge set changes and the goal is to maximize the Eq (IV-C). It can be noticed that the source network and the target network are both involved in the objective function which indicates the optimization process needs information about two networks. However, collecting details across different networks is usually difficult in real applications. Therefore, the following theorem is utilized to simplify the objective function:

Theorem 1

For node in source network and node in target network , the following relation holds:

The proof is referred to in Appendix A-A. Theorem 1 indicates that maximizing the differences on kernel function values between source network and target network before and after the attack as in Eq. (IV-C) is equivalent to minimizing the kernel values for the clean and poisoned target network. With this transformation, only the target network information is required and the objective is converted to:

| (5) |

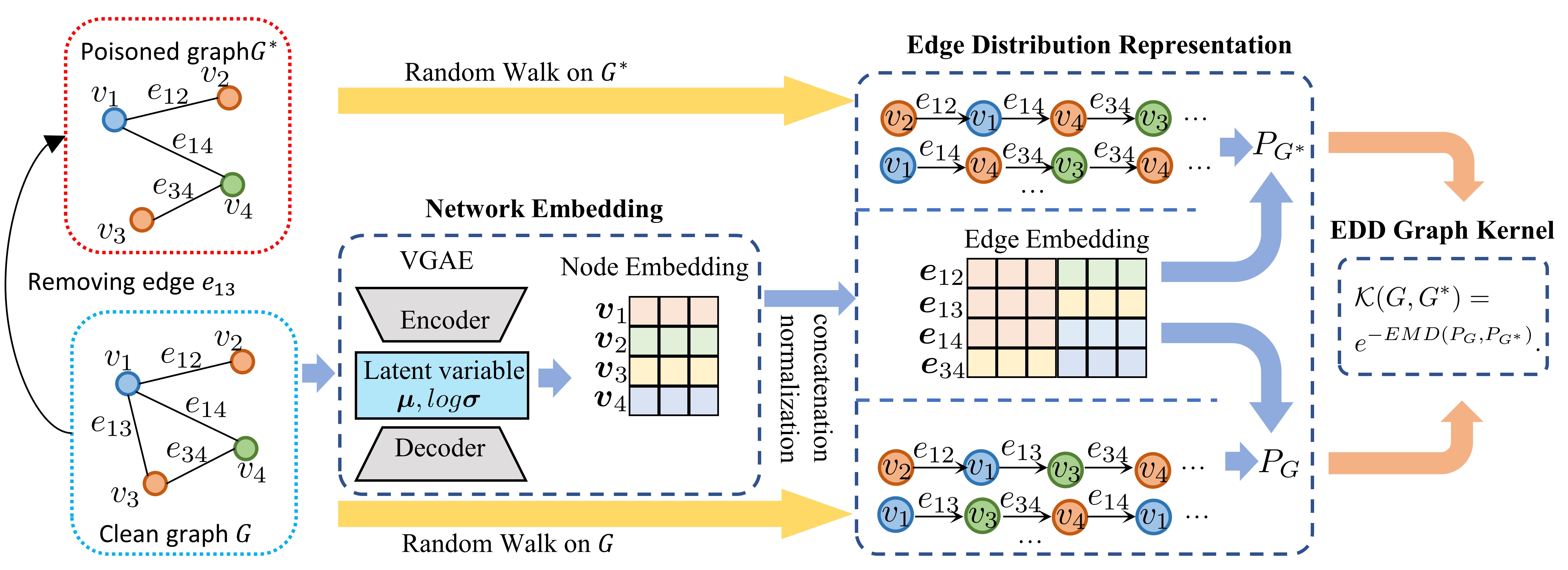

IV-D Edge Distribution Distance (EDD) Kernels

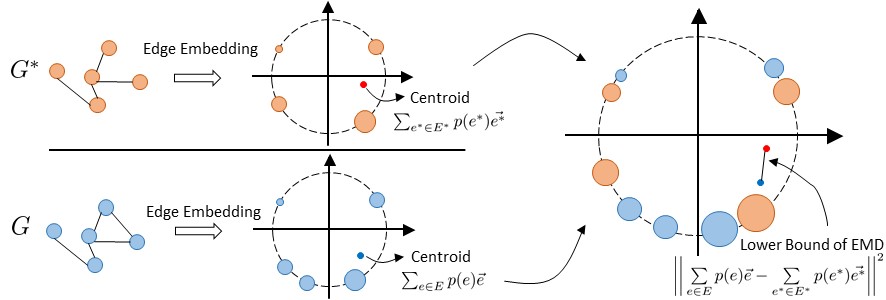

In this section, we will define a specific graph kernel applied in TOAK (shown in Fig. 2). Following the R-Convolution framework mentioned in Section III-B, a naive decomposition treats the graph as a bag-of-edges, where pre-image corresponds to the set of edges in graph . In this way, the R-Convolution kernel related to and can be reduced to:

| (6) |

where refers to the set of comparable edge pairs between and , and denotes representations of edges respectively.

Generally, edge representation can be well interpreted by its endpoints’ representations [7]. With consideration of the decomposition insensitive to topology variance in Eq. (6), we apply VGAE to generate powerful node representations that fuses the structural and attribute information:

where is node representation matrix and row vector denotes corresponding node representation, With the calculated node representation, the edge representation is obtained with concatenation and normalization of endpoints’ representations:

| (7) |

Here, represents the concatenation operation of two vectors and denotes the norm of a vector.

Based on edge representations, kernel is formalized as a radial basis function, i.e.,

| (8) |

where is a shape parameter to scale the distance between edges. Substitute Eq. (8) into Eq. (6), the kernel can be formulated as

| (9) |

Then, we formalize the unresolved parameter . We regard a graph as a distribution on its edge embedding space and measures the discrepancy between two distributions with Earth mover’s distance as follows:

| (10) | ||||

Here and are the converted distribution of and respectively. Parameter is exactly defined as the joint probability. With this definition, the kernel reflects not only the similarity between two graphs but also the importance of different edges in a graph. The effect of removing an edge can be calculated conveniently, which is essential in the attack task.

The marginal probability in Eq. (10) is defined as follows:

| (11) |

where is a function counting the frequency that edge appears in random walk process. The and represent the sum of -th row and -th row in prior knowledge matrix respectively. Function is an indicator, it equals 1 when and equals 0 otherwise. Note that, random walk and prior knowledge indicate the edge importance in graphs and UIL task respectively.

V Optimization and Analysis

In this section, we equip the proposed kernel with a speed-up technique and then display the complete attack strategy. Some analyses on time complexity are given at the end.

V-A Acceleration

Directly applying the proposed kernel to objective Eq. (5) will calculate EMD with two -ego networks. The time complexity of computing EMD in Eq. (10) is , where represents the number of nodes in -ego network. The high cost of computing makes it hard to be applied to dense or large-scale graphs. In this way, we estimate EMD by its lower bound:

Theorem 2

EMD between and has the following lower bound:

Proof and discussion on the lower bound can be found in Appendix A-B. Using the lower bound in Theorem 2 to replace the EMD in Eq. (12) and we have:

| (13) |

This form of kernel can be viewed as a variant of typical Gaussian kernels which is positive definite. Otherwise, the complexity is reduced to , which is applicable to large-scale datasets.

V-B Greedy Poisoning Strategy on Edges

With the defined kernel functions, we can measure the impact of removing one edge within the object Eq (5):

| (14) |

Here, poisoned graph is obtained via removing edge in clean graph . The graph is the -ego network of node on poisoned graph. Note that, if edge is not in the edge set of , which means removing edge will not affect the -ego network of , thus the kernel value equals 1. Usually, -ego network contains only a small amount of edges. Therefore, most terms in score(e) equal 1 and only a small part of kernels needs to be calculated.

After computing scores, the edge with the minimum score is removed. By repeating the computing-removing process for times, the set is finally obtained. However, recalculating the score for times is time-consuming. Therefore, we utilize the greedy selecting strategy. According to the score, we choose edges at once with -smallest values to compose the removing edge set . The overall algorithm is shown in Algorithm 1.

Input: The target network , prior knowledge matrix .

Parameter: Removing edge number , coefficient . Random walk length and random walk amoumt .

Output: Removing edge set .

V-C Complexity Analysis

The time complexity of the random walk process is , in which denotes random walk amount and denotes length of random walk. The score calculating has a complexity of , where the number represents the average nodes among the -ego networks and the number denotes average times an edge appears in -ego networks. The total complexity of TOAK is . As and , the algorithm is almost linear about node numbers and edge numbers.

Note that the proposed score calculating method in Eq. (14) can also compute the effect of non-existing edges, which represents the adding-edge attack. However, due to the sparsity of graphs, the possible candidates of adding-edge attack are huge, causing nearly time complexity of score calculating process. As a result, in this paper, we only consider removing-edges attacks. Efforts on designing other kinds of attacks will be our future task.

VI Experiment

VI-A Experimental Setup

In this section, we describe the datasets, baselines, UIL models, metrics, and other details of experiment111The code and data of TOAK will be available at Github after acceptance..

Datasets We conduct experiments on three real-world datasets to evaluate the performance of proposed TOAK model. Basic information of datasets is listed in Table I. The Douban and TF datasets come from famous social networks while the ARXIV dataset contains co-author networks. By reversing the source and target network in datasets, six user identity linkage datasets can be built and we denote them as: Douban (OnlineOffline), Douban (Offline Online), TF (TwitterFoursqure), TF (FoursquareTwitter), ARXIV (MatDM), and ARXIV (CSDM), where the former in brackets is the source network and the later is the target network.

| Dataset | Network | #nodes | #edges | #linked users |

|---|---|---|---|---|

| Douban [35] | Online | 3906 | 8164 | 1118 |

| Offline | 1118 | 1511 | ||

| TF [36] | 5120 | 130575 | 3148 | |

| Foursquare | 5313 | 54233 | ||

| ARXIV [37] | Mat | 3506 | 7341 | 1400 (Mat-DM) 3896 (CS-DM) |

| DM | 5465 | 14485 | ||

| CS | 4946 | 11600 |

Baselines Our TOAK model is compared with 6 baselines including state-of-the-art methods. Random attack randomly removes edges to generate perturbed graphs. DEG attack removes edges that connect to nodes with the largest degree and PR attack deletes edges connected to nodes with the highest pagerank [27] score. DW3 [30] perturbs the random walk based node embedding via analysis on the PPMI matrix of the graph. FPTA [17] is a heuristic approach that relies on hand-crafted rules, which remove edges connected with known linked users. GMA [19] push nodes to dense regions in graphs via the density estimation approach and achieves state-of-the-art performance. Also, we compare TOAK with its variants TOAK-, which runs without the EMD acceleration technique. To ensure the fairness of comparison, baseline models only remove edges on the target network as TOAK does.

| Douban (Online Offline) | TF (Twitter Foursquare) | ARXIV (Mat DM) | |||||||||||||

| FINAL | REGAL | PALE | DeePLink | IONE | FINAL | REGAL | PALE | DeePLink | IONE | FINAL | REGAL | PALE | DeePLink | IONE | |

| Clean | 40.70 | 96.19 | 65.97 | 91.13 | 69.85 | 46.41 | 47.87 | 89.08 | 97.66 | 90.27 | 47.57 | 95.46 | 78.57 | 82.68 | 87.39 |

| Random | 47.41 | 96.73 | 73.01 | 91.87 | 73.99 | 47.30 | 50.71 | 91.02 | 97.64 | 91.23 | 49.86 | 95.84 | 80.45 | 85.23 | 89.59 |

| DEG | 40.88 | 96.60 | 68.76 | 91.22 | 72.20 | 46.63 | 48.21 | 89.01 | 97.83 | 89.82 | 47.71 | 95.77 | 79.68 | 84.32 | 89.04 |

| PR | 42.75 | 96.92 | 68.80 | 91.75 | 72.74 | 47.20 | 48.24 | 89.15 | 97.78 | 89.88 | 47.57 | 95.86 | 80.79 | 83.55 | 90.43 |

| DW3 | 42.93 | 96.80 | 73.45 | 92.54 | 75.42 | 46.57 | 51.55 | 92.05 | 98.09 | 92.12 | 45.43 | 95.93 | 80.54 | 83.80 | 89.14 |

| FPTA | 48.21 | 96.99 | 70.68 | 92.29 | 71.84 | 47.52 | 47.06 | 90.07 | 98.02 | 90.83 | 52.14 | 95.73 | 80.32 | 83.18 | 89.04 |

| GMA | - | - | 71.15 | 91.84 | 74.55 | - | - | 92.58 | 98.27 | 92.75 | - | - | 82.46 | 85.23 | 89.54 |

| TOAK- | 55.01 | 97.53 | 76.29 | 93.25 | 76.31 | 50.07 | 52.30 | 95.03 | 99.38 | 95.89 | 53.06 | 95.89 | 90.89 | 93.21 | 93.04 |

| TOAK | 54.95 | 97.78 | 75.96 | 93.27 | 76.52 | 50.13 | 52.69 | 95.89 | 99.35 | 95.35 | 53.87 | 96.49 | 91.05 | 93.84 | 93.34 |

| Douban (Offline Online) | TF (Foursquare Twitter) | ARXIV (CS DM) | |||||||||||||

| FINAL | REGAL | PALE | DeePLink | IONE | FINAL | REGAL | PALE | DeePLink | IONE | FINAL | REGAL | PALE | DeePLink | IONE | |

| Clean | 41.50 | 96.15 | 64.49 | 85.14 | 70.68 | 46.41 | 44.10 | 85.03 | 96.94 | 90.36 | 15.48 | 90.36 | 67.80 | 71.72 | 84.39 |

| Random | 46.60 | 95.87 | 69.90 | 85.79 | 72.67 | 46.54 | 47.33 | 86.28 | 97.44 | 91.77 | 18.35 | 93.56 | 70.05 | 75.38 | 86.23 |

| DEG | 43.29 | 96.12 | 65.50 | 84.76 | 72.40 | 46.95 | 45.65 | 85.91 | 97.26 | 91.00 | 16.45 | 90.66 | 69.12 | 72.94 | 85.72 |

| PR | 43.38 | 96.53 | 66.19 | 85.30 | 72.25 | 47.01 | 46.14 | 85.63 | 97.11 | 90.58 | 15.76 | 90.39 | 70.72 | 73.69 | 85.61 |

| DW3 | 47.41 | 95.64 | 71.44 | 87.04 | 75.33 | 46.16 | 47.06 | 86.87 | 97.23 | 91.80 | 16.14 | 93.81 | 73.29 | 76.87 | 88.31 |

| FPTA | 47.50 | 96.91 | 71.22 | 88.27 | 72.78 | 46.47 | 45.59 | 85.88 | 97.65 | 91.46 | 20.74 | 93.25 | 69.36 | 76.59 | 86.38 |

| GMA | - | - | 69.50 | 87.26 | 72.36 | - | - | 89.51 | 98.78 | 93.63 | - | - | 73.44 | 77.83 | 85.08 |

| TOAK- | 54.29 | 97.01 | 93.05 | 96.34 | 89.03 | 49.62 | 51.08 | 93.26 | 99.01 | 96.02 | 26.17 | 93.92 | 80.98 | 87.95 | 90.07 |

| TOAK | 54.09 | 97.09 | 93.72 | 96.14 | 88.01 | 49.71 | 51.97 | 94.06 | 99.67 | 95.42 | 27.10 | 94.62 | 81.99 | 88.63 | 90.30 |

Target UIL models The effectiveness of the proposed attack method is validated with kinds of UIL models, and differences among them are displayed in Table II. FINAL [10] is a network alignment model which finds aligned nodes through iterations on the Kronecker product of adjacency matrix. REGAL [38] leverages the power of automatically learned node representations to match nodes across different graphs. PALE [8] employs network embedding with awareness of observed anchor links as supervised information. DeepLink [9] utilize more powerful embedding approaches inspired by word embedding technologies. IONE [7] learns multiple node embeddings and tries to model followers/followees as different context vectors. Note that, FINAL and REGAL are not deep models, thus the GMA method can not be applied to them.

Metrics The accuracy index is the most popular measure in UIL tasks, which calculates the ratio of correctly linked user pairs among all linked pairs. To directly reflect the attacking quality, we use a variant of Accuracy named Mismatching Rate (MR) as the metric. MR can be calculated as follows:

As for the measurement of imperceptibility of attacks, it is generally weighted by the total removed edges. However, this index can only reflect the imperceptibility of the whole graph. To go a further step, we consider the imperceptibility at a more precise node level, and computes the ratio of unpoisoned edges on each node, which is called Node Imperceptibility Score ():

The higher the , the harder the attack is to be perceived at the node .

Experimental Details In this part, we describe the implementation details of TOAK and the experimental settings.

TOAK utilizes typical VGAE222https://github.com/dmlc/dgl/tree/master/examples/pytorch/vgae to embed the network. The latent variable of VGAE is calculated with 2-layers GCN with hidden dimensions of 32 and 16 respectively. The learning rate is set to 0.001 and the total running epochs are 1000. The prior knowledge matrix in unsupervised UIL algorithms is the node degree similarity matrix and for supervised UIL models, for training node pairs and otherwise. For all of the datasets, the random walk length (hyperparameter ) is set to 5 and repeated 1000 times (hyperparameter ). The hyperparameter in Douban (online offline) and for Douban (offline online). For TF and ARXIV datasets, are all set to .

As for the details of UIL algorithms. FINAL and REGAL are unsupervised algorithms, and all the linked users are used for testing. The supervised UIL algorithms including PALE, DeepLink, and IONE randomly select 20% of linked user node pairs as training set and remains 80% of linked user pairs for testing. The hyperparameters of UIL models are adjusted to the best. We repeat the experiment 5 times and report the average mismatching rate.

All of the experiments are conducted on a compute server running on Linux with 2 CPUs of Intel Xeon E5-2640 v4 (at 2.40 GHz), 2 GPUs of NVIDIA Telsa K80 (11GB memory), and 128GB of RAM.

VI-B Overall Comparision

Firstly, we make an overall comparison of the attack performance among all the models when removing 10% edges in target network. The results are shown in Table III.

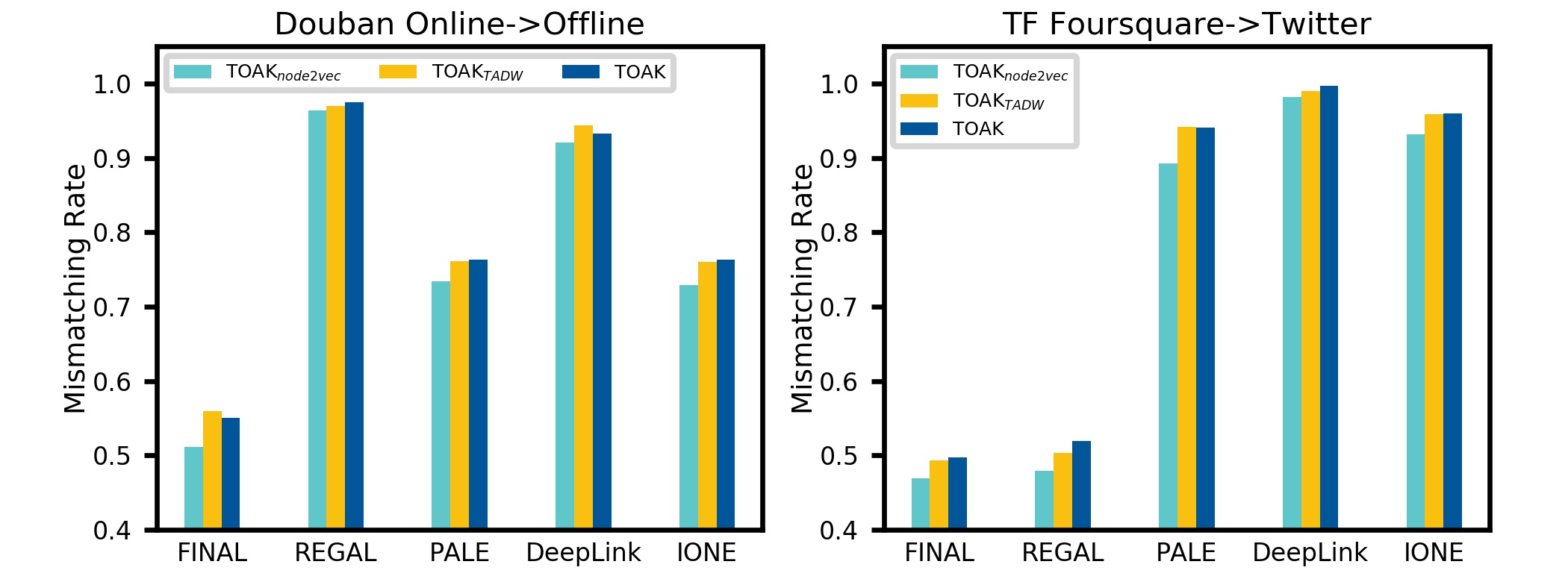

We can observe that the proposed model outperforms other baselines on all datasets. Among different experimental settings over datasets and UIL algorithms, the proposed TOAK improves mismatching rate with an average of 4.58% when compared with best baselines, and gains at most 22.28% increasing on PALE in Douban (OfflineOnline), indicating the effectiveness of TOAK. Meanwhile, results show that attacks from baselines perform unsteady over UIL models. Besides, with a constraint on gradient descent method, GMA cannot be applied to FINAL and REGAL. Meanwhile, TOAK has no such limits and gains the best performance, reflecting better transferability than compared attack methods.

It is worth noting that, on ARXIV datasets, barely removing edges in DM network can dramatically confused the linkage on both the MatDM and CSDM. Such a result demonstrates the potential application of TOAK in real-world, where only the target network can be modified. Interestingly, TOAK always obtains more MR promotions on supervised deep UIL models. The phenomenon may be attributed to the vulnerability of deep linkage methods. Finally, despite approximated EMD used in TOAK, it can still achieve similar mismatching rate as TOAK- (which applies exact calculation on EMD). The comparison on TOAK and TOAK- demonstrates the correctness of the accelerating process.

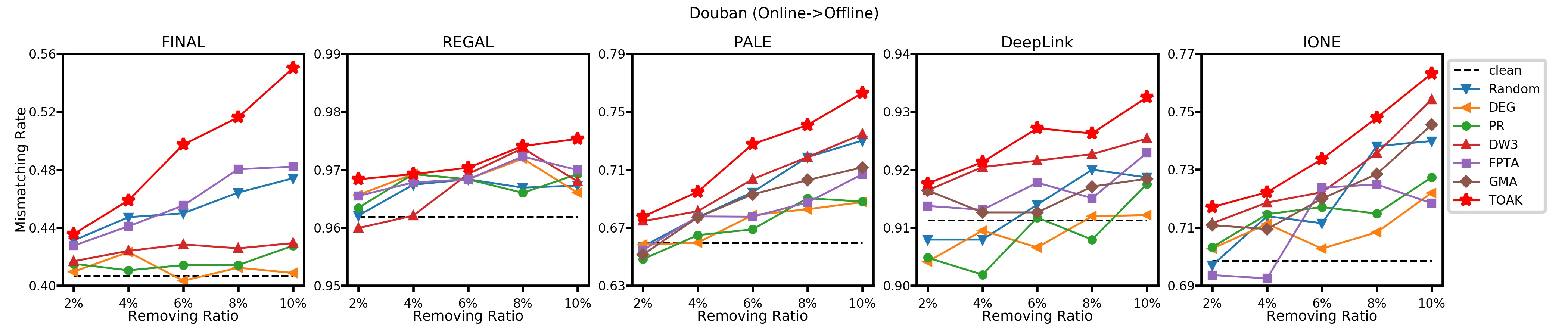

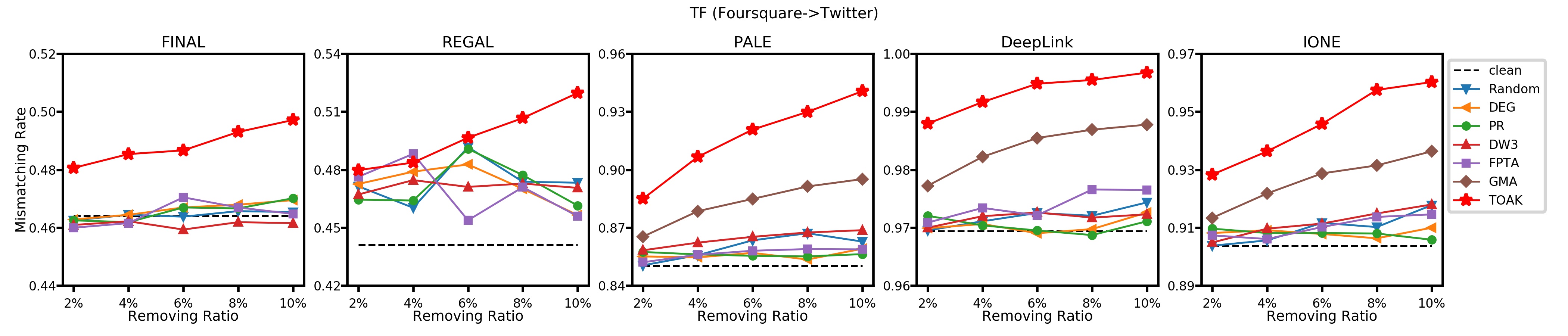

VI-C Ablation Study

Fig. 3 presents mismatching rate on various UIL models by varying the ratios of removed edges from 2% to 10%. As it illustrates, TOAK model always has higher mismatching rates under different ratios of removed edges when compared with other baselines. Meanwhile, mismatching rates can be consistently increased along with higher removing ratios resulting from TOAK.

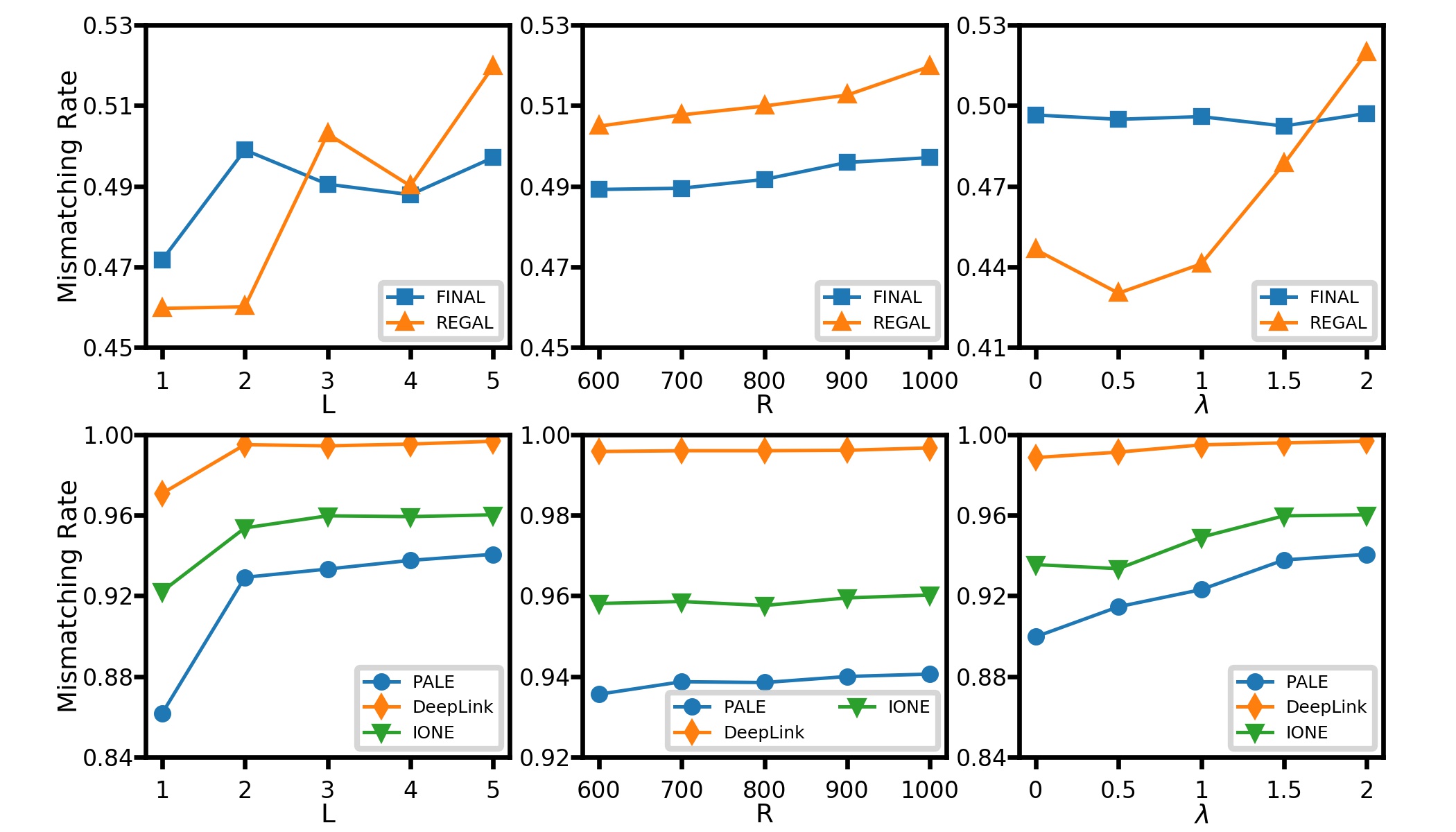

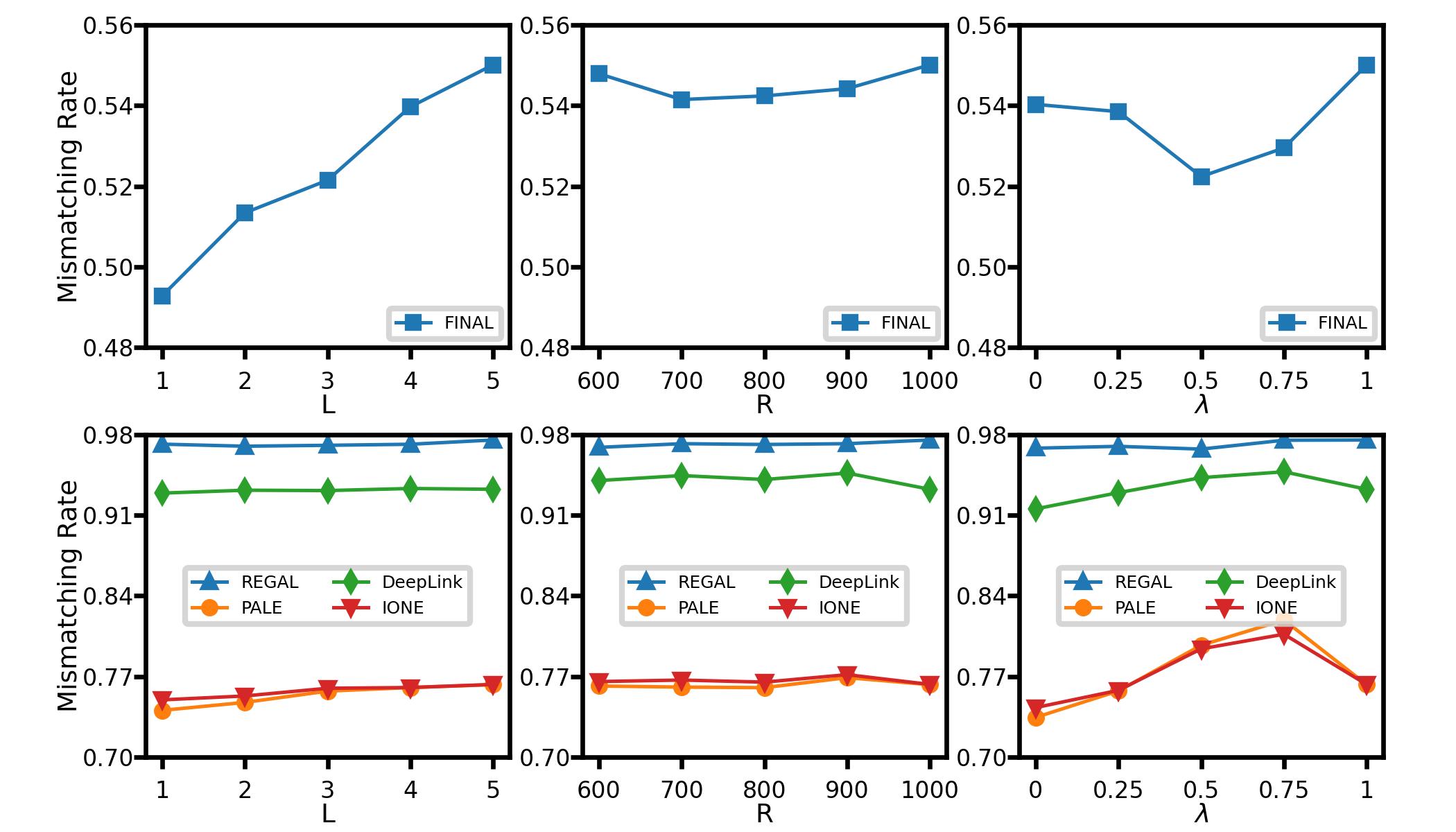

Next, we discuss the influence of hyper-parameters in the proposed method, including random walk length , random walk amount , and coefficient . Comparisons are conducted with 10% edges in the target network removed. The experimental results on TF (FoursquareTwitter) dataset are illustrated in Figure 4. Besides, the influence on other datasets has similar trends and we show them in Appendix A-C. As for random walk length, we can notice that using a longer walk length can improve the performance, and the mismatching rate becomes stable when the length is extended. The phenomenon can be explained that the longer walk length can reflect more comprehensive local structures around nodes thus leading a better performance. In addition, random walk amount has no prominent influence on TOAK, where mismatching rate keeps similar as random walk amount varies from 600 to 1000. The last hyper-parameter plays an important role in TOAK, which controls the ratio of the prior knowledge in edge distributions. TOAK has almost the worst performance when , which means no prior knowledge to refer to, and the mismatching rate starts rising as becomes larger. Results on indicate that prior knowledge plays an important role in attacking.

Additionally, the effect of network embedding methods in TOAK is also verified and the results are shown in Fig. 5. Here TOAK is the proposed model with VGAE embedding. The TOAKnode2vec and TOAKTADW uses node2vec [39] and TADW [40] embedding respectively. The mismatching rate is similar on TOAK and TOAKTADW while TOAKnode2vec has worse performance. It is because node2vec considers only the graph structure features and ignores the node attributes.

VI-D Imperceptibility & Efficiency

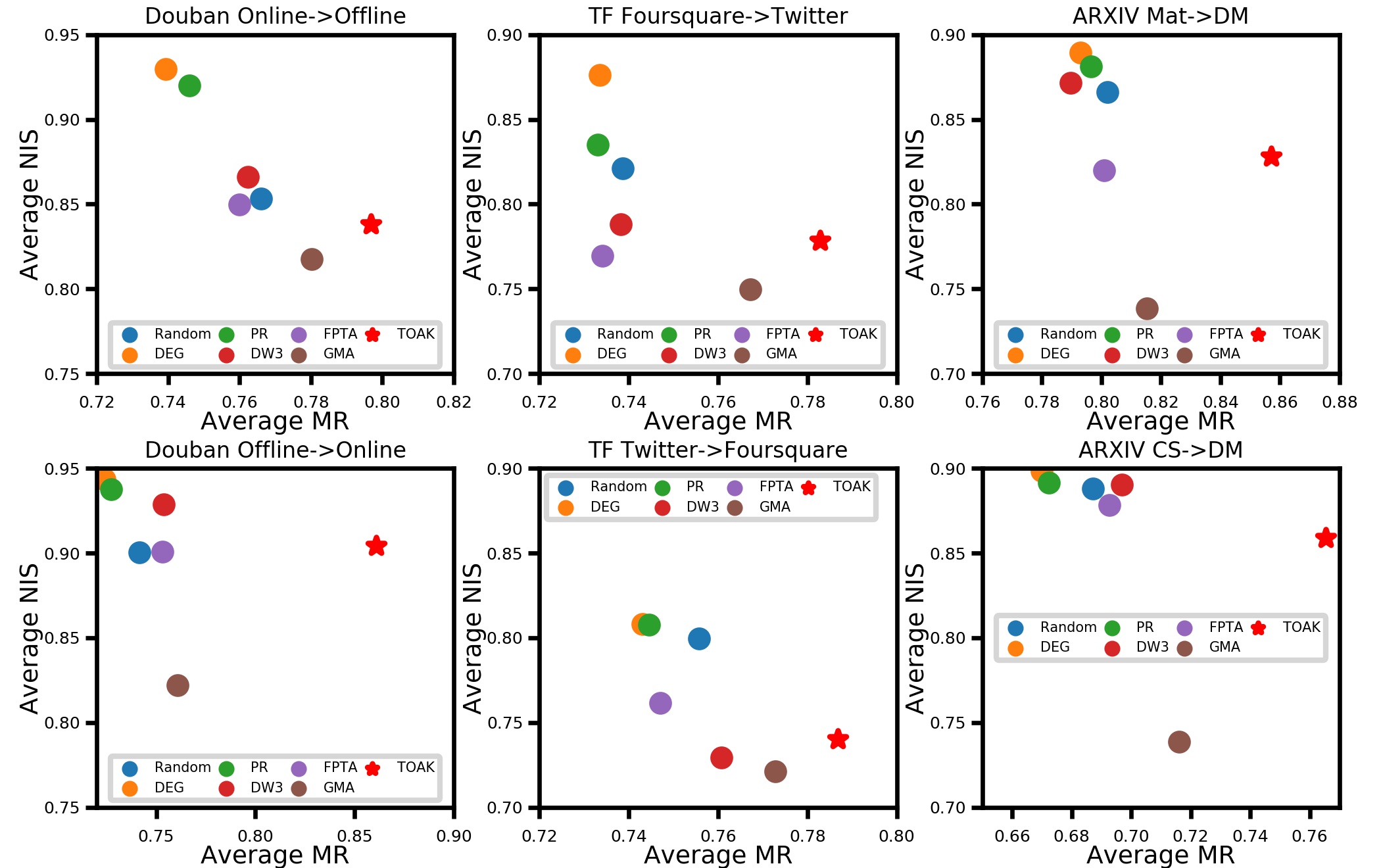

In addition to the effectiveness of the model, the imperceptibility of attack and efficiency are also of great significance. Generally, the imperceptibility of the attack is weighted by the total removed edges, which can only reflect the attack imperceptibility on the whole graph. In practice, users are more sensitive to changes that appear closely around them. As a result, we consider the average node imperceptibility score to better reflect the attack imperceptibility of a single user. The results are shown in Fig 6. It is obvious that our model has the best mismatching rate performance and has higher imperceptibility than the state-of-the-art baseline GMA. Another baseline like DEG or PR has higher but their performances are limited. Overall, the proposed TOAK model can reach a balance between attack effectiveness and attack imperceptibility.

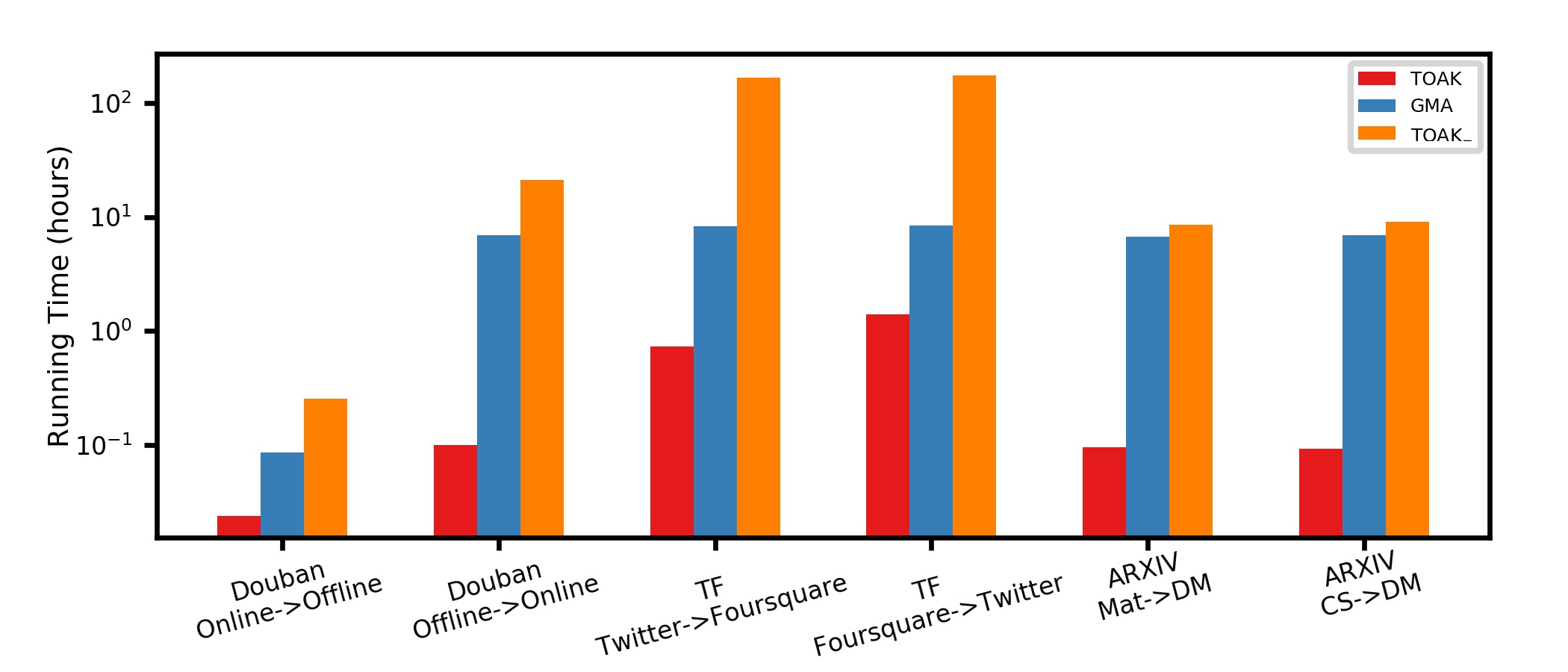

Fig 7 shows the time cost of GMA, TOAK, and TOAK- on different datasets for removing 10% edges in the target network. The TOAK-, which computes earth mover’s distance directly, spends much more running time than TOAK and GMA, especially for large target networks. With acceleration techniques, TOAK shows more than 10 times speedup compared with TOAK- among different datasets. The acceleration process becomes necessary when the graph is large. The TOAK and TOAK- have almost the same mismatching rate whereas TOAK has a lower time cost. At the same time, TOAK performs better than GMA and is less time-consuming. Comparison of running times shows the proposed TOAK is superior on not only the effectiveness but also the efficiency.

VII Conclusion

In this paper, we notice the potential privacy leakage caused by user identity linkage and design a novel strategy to degrade UIL algorithms via removing edges in a network. The proposed method re-formalizes the genera UIL problem in the graph kernel framework, which models the topology consistency. The attack problem is then converted to maximizing the structural changes in a single network. A novel kernel based on earth mover’s distance among edge embedding distributions is proposed as well, and the attack strategy is designed according to the kernel. To make the proposed model more time-efficient, we adopt an approximate method and greatly decreased the complexity. Experimental results on real-world datasets show that TOAK achieves state-of-the-art performance and obtains a balance between attack effectiveness and imperceptibility.

In the future, we will consider more types of attacks, such as flipping edges or modifying node attributes, based on the proposed kernelized framework. Moreover, some heuristic strategies would be also introduced to improve the capability in more practical scenarios, e.g., partial observation of datasets. Also, the concealment of attacks should be meticulously considered. It might be conducive to explicitly model the imperceptibility in the objective function.

References

- [1] S. Zhang, W. Ni, and N. Fu, “Community preserved social graph publishing with node differential privacy,” in ICDM, 2020, pp. 1400–1405.

- [2] A. Rezaei and J. Gao, “On privacy of socially contagious attributes,” in ICDM, 2019, pp. 1294–1299.

- [3] X. Kong, J. Zhang, and P. S. Yu, “Inferring anchor links across multiple heterogeneous social networks,” in CIKM, 2013, pp. 179–188.

- [4] Q. Zhan, J. Zhang, P. Yu, and J. Xie, “Community detection for emerging social networks,” World Wide Web, vol. 20, no. 6, pp. 1409–1441, 2017.

- [5] H. T. Trung, N. T. Toan, T. Van Vinh, H. T. Dat, D. C. Thang, N. Q. V. Hung, and A. Sattar, “A comparative study on network alignment techniques,” Expert Systems with Applications, vol. 140, p. 112883, 2020.

- [6] K. Shu, S. Wang, J. Tang, R. Zafarani, and H. Liu, “User identity linkage across online social networks: A review,” Acm Sigkdd Explorations Newsletter, vol. 18, no. 2, pp. 5–17, 2017.

- [7] L. Liu, W. K. Cheung, X. Li, and L. Liao, “Aligning users across social networks using network embedding.” in Ijcai, 2016, pp. 1774–1780.

- [8] T. Man, H. Shen, S. Liu, X. Jin, and X. Cheng, “Predict anchor links across social networks via an embedding approach.” in Ijcai, vol. 16, 2016, pp. 1823–1829.

- [9] F. Zhou, L. Liu, K. Zhang, G. Trajcevski, J. Wu, and T. Zhong, “Deeplink: A deep learning approach for user identity linkage,” in IEEE INFOCOM. IEEE, 2018, pp. 1313–1321.

- [10] S. Zhang and H. Tong, “Final: Fast attributed network alignment,” in KDD, 2016, pp. 1345–1354.

- [11] Y. Yan, S. Zhang, and H. Tong, “Bright: A bridging algorithm for network alignment,” in WWW, 2021, pp. 3907–3917.

- [12] L. Sun, Y. Dou, C. Yang, J. Wang, P. S. Yu, L. He, and B. Li, “Adversarial attack and defense on graph data: A survey,” arXiv preprint arXiv:1812.10528, 2018.

- [13] D. Zügner, A. Akbarnejad, and S. Günnemann, “Adversarial attacks on neural networks for graph data,” in KDD, 2018, pp. 2847–2856.

- [14] H. Dai, H. Li, T. Tian, X. Huang, L. Wang, J. Zhu, and L. Song, “Adversarial attack on graph structured data,” in ICML. PMLR, 2018, pp. 1115–1124.

- [15] D. Zügner and S. Günnemann, “Adversarial attacks on graph neural networks via meta learning,” arXiv preprint arXiv:1902.08412, 2019.

- [16] K. Zhou, T. P. Michalak, M. Waniek, T. Rahwan, and Y. Vorobeychik, “Attacking similarity-based link prediction in social networks,” in AAMAS, 2019, pp. 305–313.

- [17] P. Dey and S. Medya, “Manipulating node similarity measures in networks,” arXiv preprint arXiv:1910.11529, 2019.

- [18] Z. Xi, R. Pang, S. Ji, and T. Wang, “Graph backdoor,” in USENIX Security 21, 2021, pp. 1523–1540.

- [19] Z. Zhang, Z. Zhang, Y. Zhou, Y. Shen, R. Jin, and D. Dou, “Adversarial attacks on deep graph matching,” Advances in Neural Information Processing Systems, vol. 33, pp. 20 834–20 851, 2020.

- [20] P. Yanardag and S. Vishwanathan, “Deep graph kernels,” in KDD, 2015, pp. 1365–1374.

- [21] B. Schölkopf, “The kernel trick for distances,” Advances in neural information processing systems, vol. 13, 2000.

- [22] Y. Rubner, C. Tomasi, and L. J. Guibas, “The earth mover’s distance as a metric for image retrieval,” International journal of computer vision, vol. 40, no. 2, pp. 99–121, 2000.

- [23] T. N. Kipf and M. Welling, “Variational graph auto-encoders,” arXiv preprint arXiv:1611.07308, 2016.

- [24] A. Smalter, J. Huan, and G. Lushington, “Gpm: A graph pattern matching kernel with diffusion for chemical compound classification,” in 2008 8th IEEE International Conference on BioInformatics and BioEngineering. IEEE, 2008, pp. 1–6.

- [25] R. Singh, J. Xu, and B. Berger, “Global alignment of multiple protein interaction networks with application to functional orthology detection,” Proceedings of the National Academy of Sciences, vol. 105, no. 35, pp. 12 763–12 768, 2008.

- [26] X. Mu, F. Zhu, E.-P. Lim, J. Xiao, J. Wang, and Z.-H. Zhou, “User identity linkage by latent user space modelling,” in KDD, 2016, pp. 1775–1784.

- [27] L. Page, S. Brin, R. Motwani, and T. Winograd, “The pagerank citation ranking: Bringing order to the web.” Stanford InfoLab, Tech. Rep., 1999.

- [28] D. Koutra, H. Tong, and D. Lubensky, “Big-align: Fast bipartite graph alignment,” in ICDM. IEEE, 2013, pp. 389–398.

- [29] X. Li, Y. Shang, Y. Cao, Y. Li, J. Tan, and Y. Liu, “Type-aware anchor link prediction across heterogeneous networks based on graph attention network,” in AAAI, vol. 34, no. 01, 2020, pp. 147–155.

- [30] A. Bojchevski and S. Günnemann, “Adversarial attacks on node embeddings via graph poisoning,” in ICML. PMLR, 2019, pp. 695–704.

- [31] D. Haussler, “Convolution kernels on discrete structures,” Technical report, Department of Computer Science, University of California …, Tech. Rep., 1999.

- [32] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” arXiv preprint arXiv:1609.02907, 2016.

- [33] M. Togninalli, E. Ghisu, F. Llinares-López, B. Rieck, and K. Borgwardt, “Wasserstein weisfeiler-lehman graph kernels,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [34] A. Feragen, F. Lauze, and S. Hauberg, “Geodesic exponential kernels: When curvature and linearity conflict,” in CVPR, 2015, pp. 3032–3042.

- [35] Y. Dong, J. Tang, S. Wu, J. Tian, N. V. Chawla, J. Rao, and H. Cao, “Link prediction and recommendation across heterogeneous social networks,” in ICDM. IEEE, 2012, pp. 181–190.

- [36] J. Zhang, X. Kong, and P. S. Yu, “Transferring heterogeneous links across location-based social networks,” in WSDM, 2014, pp. 303–312.

- [37] M. De Domenico, A. Lancichinetti, A. Arenas, and M. Rosvall, “Identifying modular flows on multilayer networks reveals highly overlapping organization in interconnected systems,” Physical Review X, vol. 5, no. 1, 2015.

- [38] M. Heimann, H. Shen, T. Safavi, and D. Koutra, “Regal: Representation learning-based graph alignment,” in CIKM, 2018, pp. 117–126.

- [39] A. Grover and J. Leskovec, “node2vec: Scalable feature learning for networks,” in KDD, 2016, pp. 855–864.

- [40] C. Yang, Z. Liu, D. Zhao, M. Sun, and E. Chang, “Network representation learning with rich text information,” in IJCAI, 2015.

Appendix A APPENDIX

A-A Proof of Theorem 1

According to the definition of graph kernels, for any graph , there exists a mapping function that satisfies: , where denotes the inner product.

Therefore, for node in source network and node in target network , we have:

In the above equation, terms , and are the inner product of theirself. Thus they can be regarded as a constant. By ignoring the constant, we have:

The above equation proves that:

A-B Proof of Theorem 2

Let denotes the joint distribution that minimize the transportation cost from distribution to distribution . According to the definition of EMD, we have

| (15) | ||||

| (16) |

The inequality (A-B) is obtained via Jensen Inequality. Recall that, for any :

| (17) |

where and denote the edge set of and respectively.

An intuitive expression on this lower bound is shown in Fig 8. With the normalized edge embeddings, edges in a graph distributing on the unit circle. The centroid of a distribution is the probability-weighted sum of all edge embeddings in a graph. The lower bound of EMD calculates the distance between two centroids of distributions.

A-C Effect of hyperparameters on dataset Douban (online offline)

As shown in Fig. 9, we can notice that using a longer walk length can improve the TOAK performance on FINAL, which might be because the longer the length the more features about neighbors are considered, while the performance shows a slight improvement and then becomes stable on other algorithms. In addition, the TOAK model is not sensitive to the random walk number. Finally, the influence of is also important. On the FINAL and REGAL algorithms, the mismatching rate first decreases and then increases. However, the trend is opposite on PALE, DeepLink, and IONE, where too large and too small will all worsen the performance.