AFEC: Active Forgetting of Negative Transfer in Continual Learning

Abstract

Continual learning aims to learn a sequence of tasks from dynamic data distributions. Without accessing to the old training samples, knowledge transfer from the old tasks to each new task is difficult to determine, which might be either positive or negative. If the old knowledge interferes with the learning of a new task, i.e., the forward knowledge transfer is negative, then precisely remembering the old tasks will further aggravate the interference, thus decreasing the performance of continual learning. By contrast, biological neural networks can actively forget the old knowledge that conflicts with the learning of a new experience, through regulating the learning-triggered synaptic expansion and synaptic convergence. Inspired by the biological active forgetting, we propose to actively forget the old knowledge that limits the learning of new tasks to benefit continual learning. Under the framework of Bayesian continual learning, we develop a novel approach named Active Forgetting with synaptic Expansion-Convergence (AFEC). Our method dynamically expands parameters to learn each new task and then selectively combines them, which is formally consistent with the underlying mechanism of biological active forgetting. We extensively evaluate AFEC on a variety of continual learning benchmarks, including CIFAR-10 regression tasks, visual classification tasks and Atari reinforcement tasks, where AFEC effectively improves the learning of new tasks and achieves the state-of-the-art performance in a plug-and-play way.

1 Introduction

The ability to continually learn numerous tasks from dynamic data distributions is critical for deep neural networks, which needs to remember the old tasks by avoiding catastrophic forgetting [18] while effectively learn each new task by improving forward knowledge transfer [17]. Due to the dynamic data distributions, forward knowledge transfer might be either positive or negative, and is difficult to determine without accessing to the old training samples. If the forward knowledge transfer is negative, i.e., learning a new task from the old knowledge is worse than learning the new task on a randomly-initialized network [37, 17], then precisely remembering the old tasks will severely interfere with the learning of the new task, thus decreasing the performance of continual learning.

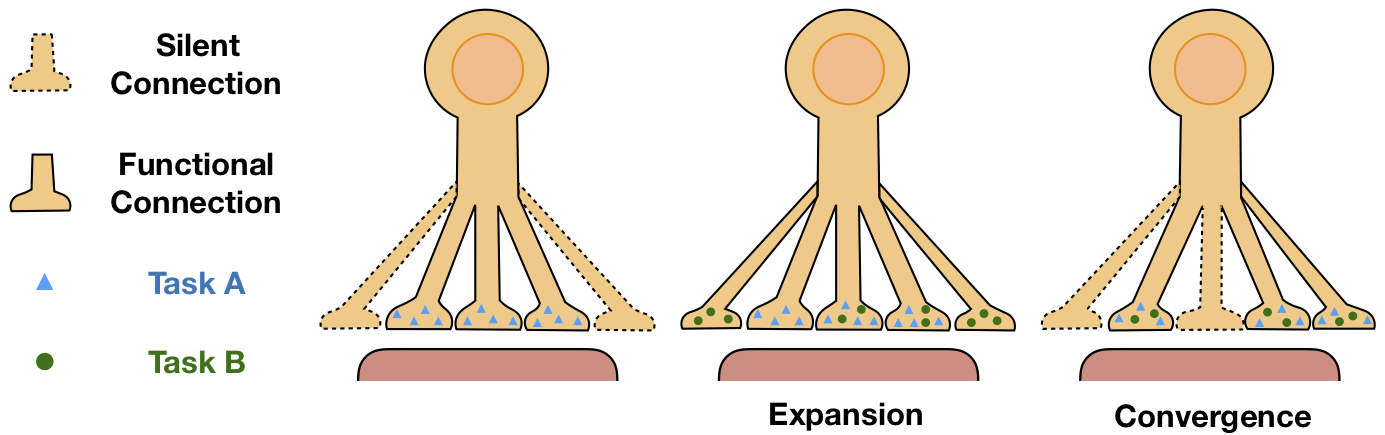

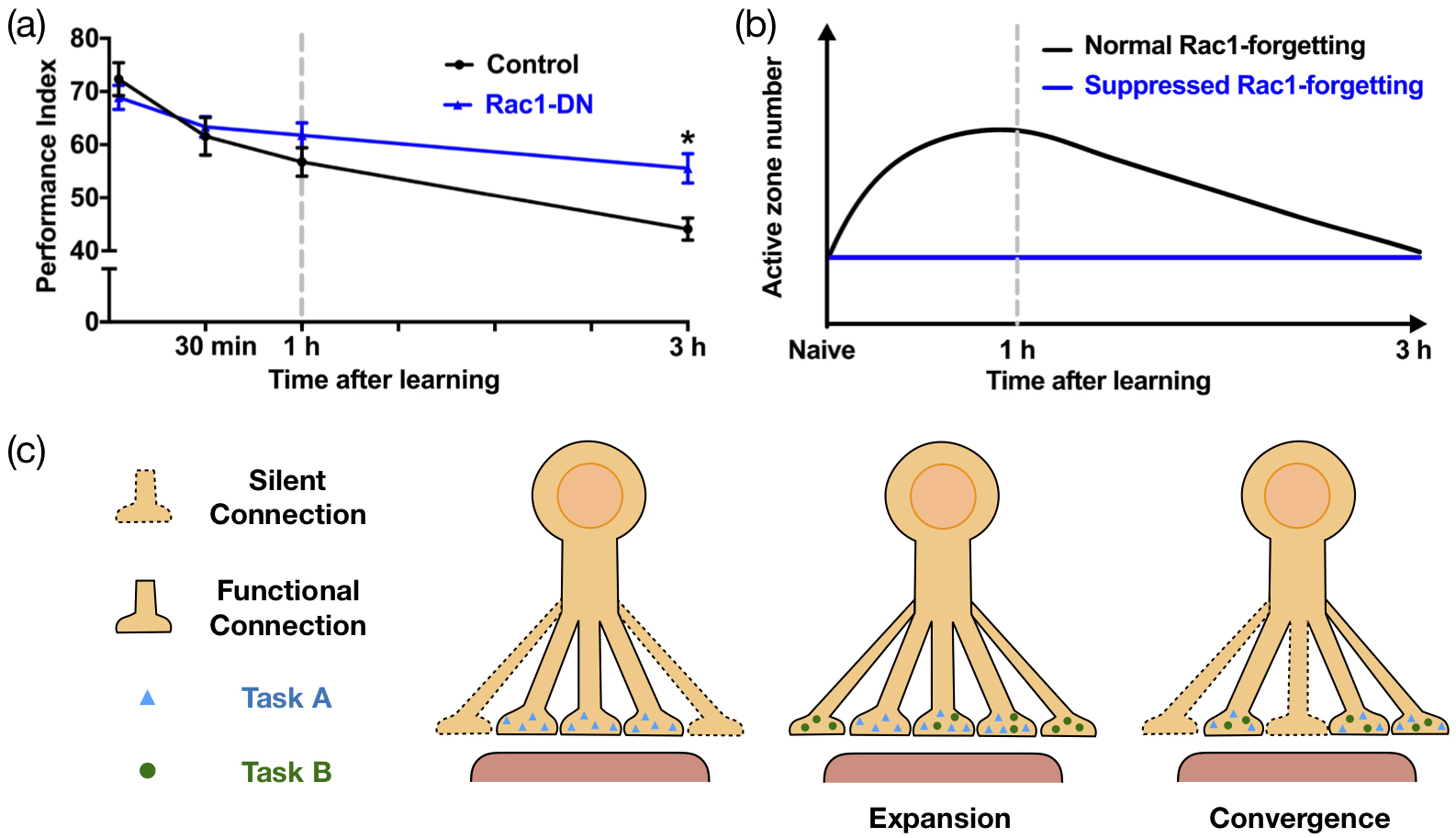

By contrast, biological neural networks can effectively learn a new experience on the basis of remembering the old experiences, even if they conflict with each other [18, 5]. This advantage, called memory flexibility, is achieved by active forgetting of the old knowledge that interferes with the learning of a new experience [28, 5]. The latest data suggested that the underlying mechanism of biological active forgetting is to regulate the learning-triggered synaptic expansion and synaptic convergence (Fig. 1, see Appendix A for neuroscience background and our biological data). Specifically, the biological synapses expand additional functional connections to learn a new experience together with the previously-learned functional connections (synaptic expansion). Then, all the functional connections are pruned to the amount before learning (synaptic convergence).

Inspired by the biological active forgetting, we propose to actively forget the old knowledge that interferes with the learning of new tasks without significantly increasing catastrophic forgetting, so as to benefit continual learning. Specifically, we adopt Bayesian continual learning and actively forget the posterior distribution that absorbs all the information of the old tasks with a forgetting factor to better learn each new task. Then, we derive a novel method named Active Forgetting with synaptic Expansion-Convergence (AFEC), which is formally consistent with the underlying mechanism of biological active forgetting at synaptic structures. Beyond regular weight regularization approaches [12, 1, 36, 2], which selectively penalize changes of the important parameters for the old tasks, AFEC dynamically expands parameters only for each new task to avoid potential negative transfer from the main network, while the forgetting factor regulates a penalty to selectively merge the main network parameters with the expanded parameters, so as to learn a better overall representation of both the old tasks and the new task.

We extensively evaluate AFEC on continual learning of CIFAR-10 regression tasks, a variety of visual classification tasks, and Atari reinforcement tasks [10], where AFEC achieves the state-of-the-art (SOTA) performance. We empirically validate that the performance improvement results from effectively improving the learning of new tasks without increasing catastrophic forgetting. Further, AFEC can be a plug-and-play method that significantly boosts the performance of representative continual learning strategies, such as weight regularization [12, 1, 36, 2] and memory replay [21, 9, 6].

Our contributions include: (1) We draw inspirations from the biological active forgetting and propose a novel approach to actively forget the old knowledge that interferes with the learning of new tasks for continual learning; (2) Extensive evaluation on a variety of continual learning benchmarks shows that our method effectively improves the learning of new tasks and achieves the SOTA performance in a plug-and-play way; and (3) To the best of our knowledge, we are the first to model the biological active forgetting and its underlying mechanism at synaptic structures, which suggests a potential theoretical explanation of how the underlying mechanism of biological active forgetting achieves its function of forgetting the past and continually learning conflicting experiences [28, 5].

2 Related Work

Continual learning needs to minimize catastrophic forgetting and maximize forward knowledge transfer. Existing work in continual learning mainly focuses on mitigating catastrophic forgetting. Representative approaches include: weight regularization [12, 1, 36, 2], which selectively penalizes changes of the previously-learned parameters; parameter isolation [24, 10], which allocates a dedicated parameter subspace for each task; and memory replay [21, 9, 31, 32], which approximates and recovers the old data distributions through storing old training data, their embedding or learning a generative model. In particular, Adaptive Group Sparsity based Continual Learning (AGS-CL) [10] proposed to regularize the group sparsity with separation of the important nodes for the old tasks to prevent catastrophic forgetting, which takes advantages of weight regularization and parameter isolation, and achieved the SOTA performance on various continual learning benchmarks.

Several studies suggested that forward knowledge transfer is critical for continual learning [17, 4], which might be either positive or negative due to the dynamic data distributions. Although it is highly nontrivial to mitigate potential negative transfer while overcoming catastrophic forgetting, the efforts that specifically consider this challenging issue are limited. [3] developed a method to mitigate negative transfer when fine-tuning tasks on a pretrained network. For the scenario where the old tasks can be learned again, [26] learned an additional active column to better exploit potential positive transfer. [22] tried to maximize transfer and minimize interference from a memory buffer containing a few old training data. Similarly, [6, 16, 34] attempted to more effectively balance stability and plasticity with the memory buffer in class incremental learning, while [33] stored and updated the old features. By contrast, since pretraining or old training data might not be available in continual learning, we mainly focus on a more restrict yet realistic setting that a neural network incrementally learns a sequence of tasks from scratch, without storing old training data. Further, we extend our method to the scenarios where pretraining or memory buffer can be used, as well as the scenarios other than classification tasks, such as regression tasks and reinforcement tasks.

3 Method

In this section, we first describe the framework of Bayesian continual learning [12, 20]. Under such framework, we propose an active forgetting strategy, which is formally consistent with the underling mechanism of biological active forgetting at synaptic structures.

3.1 Basics of Bayesian Continual Learning

Continual learning needs to remember the old tasks and learn each new task effectively. Let’s consider a simple case that a neural network with parameter continually learns two independent tasks, task and task , from their training datasets and [12]. The training dataset of each task is only available when learning the task.

Bayesian Learning: After learning , the posterior distribution

incorporates the knowledge of task . Then, we can get the predictive distribution for the test data of task :

As the posterior is generally intractable (except very special cases), we must resort to approximation methods, such as the Laplace approximation [12] or other approaches of approximate inference [20]. Let’s take Laplace approximation as an example. If is smooth and majorly peaked around the mode , we can approximate it with a Gaussian distribution whose mean is and covariance is the inverse Hessian of the negative log posterior (detailed in Appendix B.1).

Bayesian Continual Learning: Next, we want to incorporate the new task into the posterior, which uses the posterior as the prior of the next task [12]:

| (1) |

Then we can test the performance of continual learning by evaluating

| (2) |

Similarly, can be approximated by a Gaussian using Laplace approximation whose mean is the mode of the posterior:

| (3) | ||||

| (4) |

This MAP estimation is also known as the Elastic Weight Consolidation (EWC) [12]:

| (5) |

where is the loss for task and is the label of each parameter. is the Fisher Information matrix (FIM) of on (the computation is detailed in Appendix B.1), which indicates the “importance” of parameter for task . The hyperparameter explicitly controls the penalty that selectively merges each to to alleviate catastrophic forgetting.

3.2 Active Forgetting with Synaptic Expansion-Convergence

However, if precisely remembering task interferes with the learning of task , e.g., task and task are too different, it might be useful to actively forget the original data, similar to the biological strategy of active forgetting. Based on this inspiration, we introduce a forgetting factor and replace that absorbs all the information of with a weighted product distribution [8, 19]:

| (6) |

where we use to denote that we are ‘mixing’ and to produce the new distribution . is the normalizer that depends on , which keeps following a Gaussian distribution if and are both Gaussian (detailed in Appendix B.2). When , will be dominated by and remember all the information about task . When , will actively forget all the information about task . Modified from Eqn. (2), our target becomes:

| (7) |

We first need to determine , which decides how much information from task is forgotten to maximize the probability of learning task well. A good should be as follows:

| (8) |

Since the integral is difficult to solve, we can make a grid search to determine , which should be between 0 and 1. Next, can also be approximated by a Gaussian using Laplace approximation (the proof is detailed in Appendix B.3), and the MAP estimation is

| (9) |

Then we obtain the loss function of Active Forgetting with synaptic Expansion-Convergence (AFEC):

| (10) |

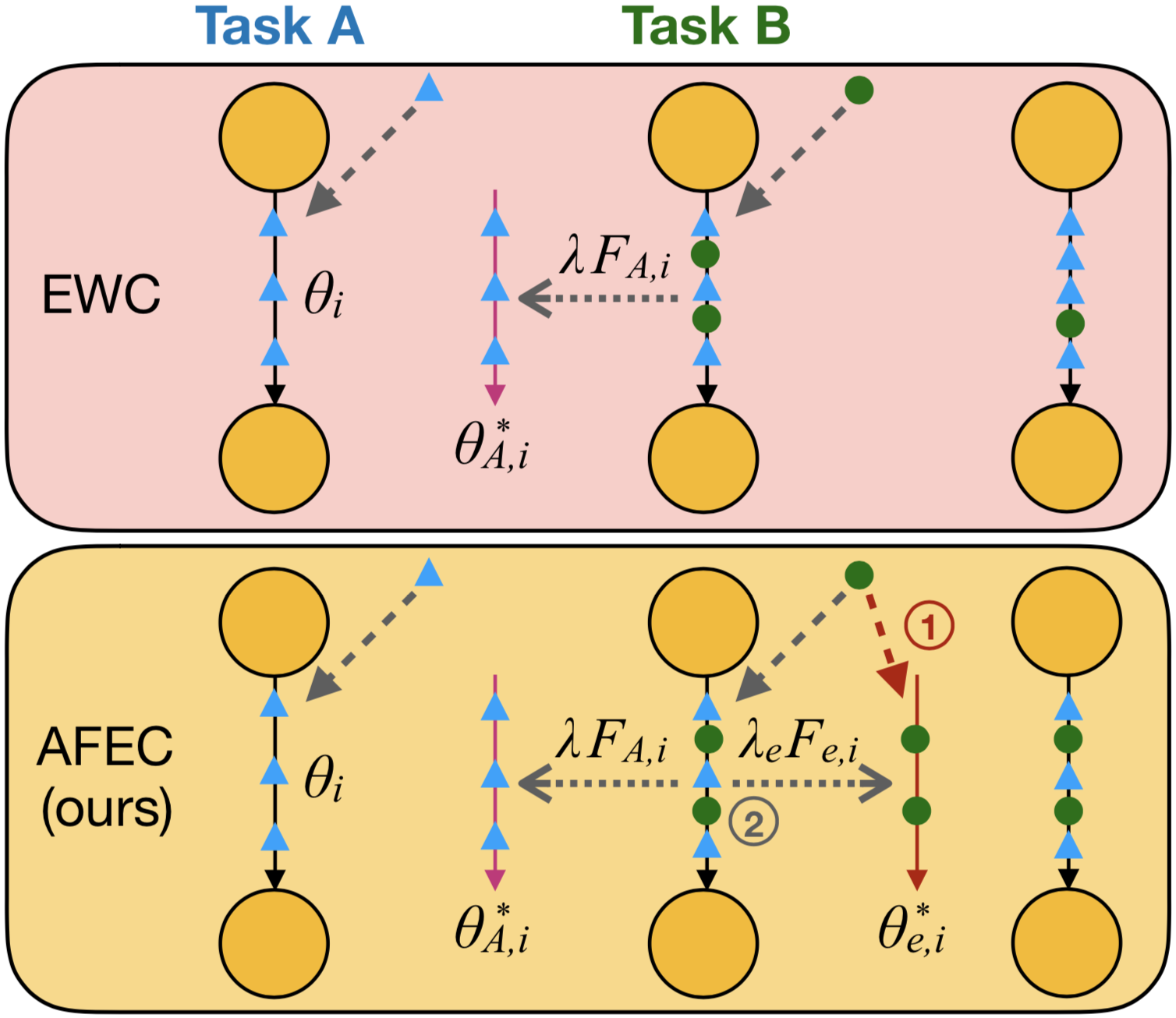

are the optimal parameters for the new task and is the FIM of (the computation is detailed in Appendix B.1). As shown in Fig. 2, we first learn a set of expanded parameters with to obtain and . Then we can optimize Eqn. (10), where two weight-merging regularizers selectively merge with for the old tasks and for the new task. The forgetting factor is integrated into a hyperparameter to control the penalty that promotes active forgetting. Therefore, derived from active forgetting of the original posterior in Eqn. (6), we obtain an algorithm that dynamically expands parameters to learn a new task and then selectively converges the expanded parameters to the main network. Intriguingly, this algorithm is formally consistent with the underlying mechanism of biological active forgetting (the neuroscience evidence is detailed in Appendix A), which also expands additional functional connections for a new experience (synaptic expansion) and then prunes them to the amount before learning (synaptic convergence).

As the proposed active forgetting is integrated into the third term, our method can be used in a plug-and-play way to improve continual learning (detailed in Appendix E, F). Here we use Laplace approximation to approximate the intractable posteriors, which can be other strategies of approximate inference [20] in further work. Note that and are not stored in continual learning, and the architecture of the main network is fixed. Thus, AFEC does not cause additional storage cost compared with regular weight regularization approaches such as [12, 36, 1, 2]. Further, it is straightforward to extend our method to continual learning of more than two tasks. We discuss it in Appendix B.4 with a pseudocode.

Now we conceptually analyze how AFEC mitigates potential negative transfer in continual learning (see Fig. 2). When learning task on the basis of task , regular weight regularization approaches [12, 36, 1, 2] selectively penalize changes of the old parameters learned for task , which will severely interfere with the learning of task if they conflict with each other. In contrast, AFEC learns a set of expanded parameters only for task to avoid potential negative transfer from task . Then, the main network parameters selectively merge with both the old parameters and the expanded parameters, depending on their contributions to the overall representations of task and task .

4 Experiment

In this section, we evaluate AFEC on a variety of continual learning benchmarks, including: CIFAR-10 regression tasks, which is a toy experiment to validate our idea about negative transfer in continual learning; visual classification tasks, where the forward knowledge transfer might be either positive or negative; and Atari reinforcement tasks, where the forward knowledge transfer is severely negative. All the experiments are averaged by 5 runs with different random seeds and task orders.

4.1 CIFAR-10 Regression Tasks

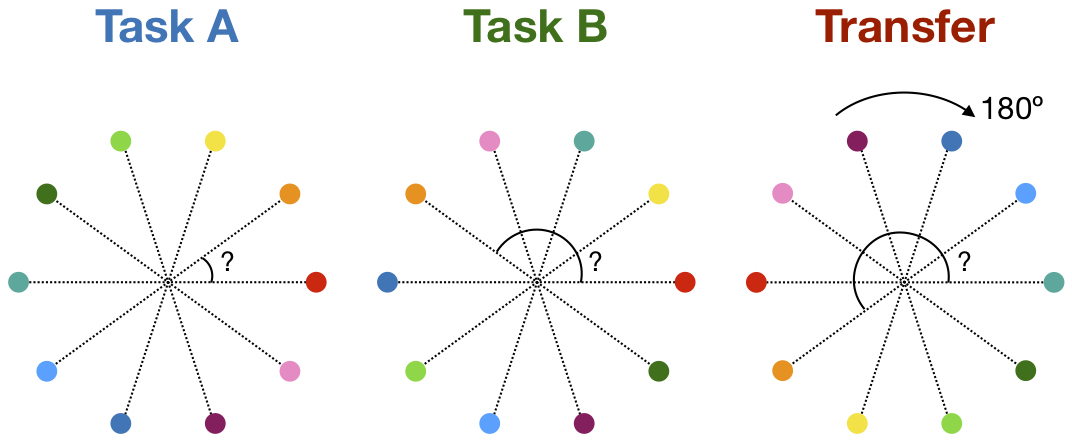

First, we propose CIFAR-10 regression tasks to explicitly show how negative transfer affects continual learning, and how AFEC effectively addresses this challenging issue. CIFAR-10 dataset [13] contains 50,000 training samples and 10,000 testing samples of 10-class colored images of size . The regression task is to evenly map the ten classes around the origin of the two-dimensional coordinates and train the neural network to predict the angle of the origin to each class (see Fig. 3). We change the relative position of the ten classes to construct different regression tasks with mutual negative transfer, in which remembering the old knowledge will severely interfere with the learning of a new task.

| Methods | LeNet [15] | VGG11 [29] | VGG11BN [29] | ResNet10 [7] | |

|---|---|---|---|---|---|

| Two-Task | Fine-tuning | 29.23 | 46.37 | 46.54 | 60.67 |

| EWC [12] | 39.91 | 73.55 | 82.00 | 71.94 | |

| AFEC (ours) | 44.45 | 77.76 | 86.07 | 75.67 | |

| Ten-Task | Fine-tuning | 46.57 | 18.03 | 18.08 | 54.97 |

| EWC [12] | 49.95 | 79.39 | 85.98 | 82.91 | |

| AFEC (ours) | 53.50 | 82.50 | 88.31 | 85.33 | |

| Transfer | Fine-tuning | 38.93 | 80.37 | 84.30 | 85.69 |

| EWC [12] | 35.87 | 76.66 | 82.25 | 84.96 | |

| AFEC (ours) | 40.90 | 83.81 | 86.30 | 87.80 |

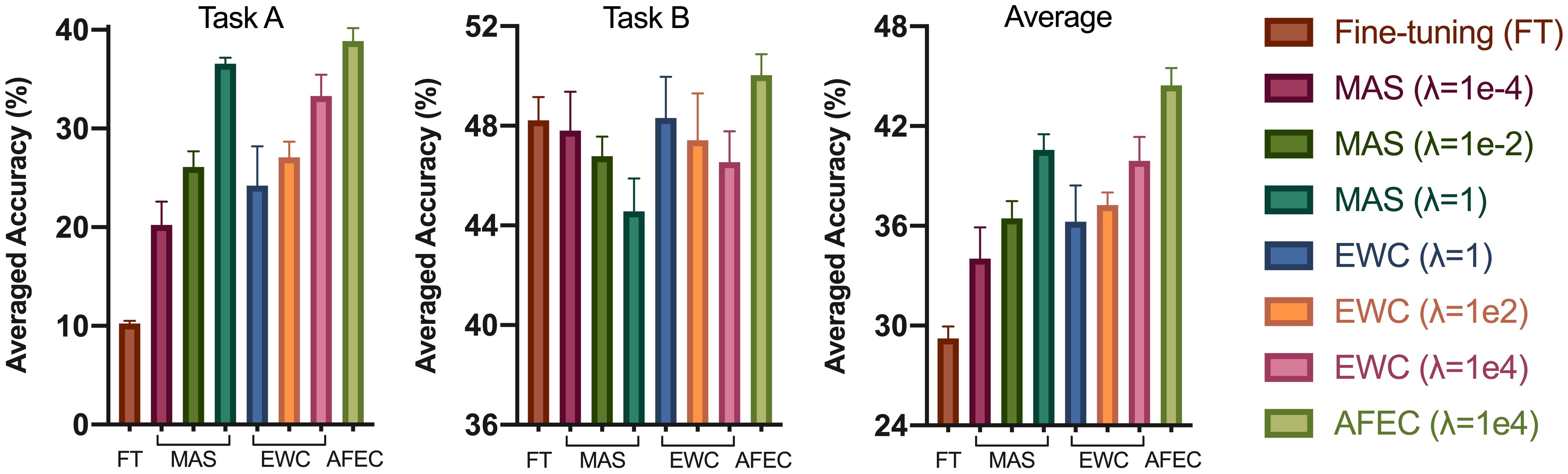

As shown in Fig. 4 for continual learning of two different regression tasks, regular weight regularization approaches, such as MAS [1] and EWC [12], can effectively remember the old tasks, but limits the learning of new tasks. In particular, larger strength of the weight regularization results in better performance of the first task but worse performance of the second task. In contrast, AFEC improves the learning of new tasks on the basis of remembering the old tasks, so as to achieve better averaged accuracy. Note that EWC is equal to the ablation of active forgetting in AFEC, i.e., , so the performance improvement of AFEC on EWC validates the effectiveness of our proposal. We further demonstrate the efficacy of AFEC on a variety of architectures and a larger amount of tasks (see Table 1).

In addition, we evaluate the ability of transfer learning after continual learning of two different regression tasks. We fix the feature extractor of the neural network and only fine-tune a linear classifier to predict a new task that is similar to the first task. Specifically, the similar task applies the same relative position as the first task, but rotates by , , , or . Therefore, if the neural network effectively remembers and transfers the relative position learned in the first task, it will be able to learn the similar task well. As shown in Table 1, AFEC can more effectively learn the similar task, while EWC is even worse than sequentially fine-tuning without weight regularization.

4.2 Visual Classification Tasks

Dataset: We evaluate continual learning on a variety of benchmark datasets for visual classification, including CIFAR-100, CUB-200-2011 and ImageNet-100. CIFAR-100 [13] contains 100-class colored images of the size , where each class includes 500 training samples and 100 testing samples. CUB-200-2011 [30] is a large-scale dataset including 200 classes and 11,788 colored images of birds, split as 30 images per class for training while the rest for testing. ImageNet-100 [9] is a subset of iILSVRC-2012 [23], consisting of randomly selected 100 classes of images and 1300 samples per class. We follow the regular preprocessing pipeline of CUB-200-2011 and ImageNet-100 as [10], which randomly resizes and crops the images to the size of before experiment.

Benchmark: We consider five representative benchmarks of visual classification tasks to evaluate continual learning in different aspects. The first three are on CIFAR-100, with forward knowledge transfer from more negative to more positive (detailed in Fig. 5), while the second two are on large-scale images. (1) CIFAR-100-SC [35]: CIFAR-100 can be split as 20 superclasses (SC) with 5 classes per superclass dependent on semantic similarity, where each superclass is a classification task. Since the superclasses are semantically different, forward knowledge transfer in such a task sequence is relatively more negative. (2) CIFAR-100 [21]: The 100 classes in CIFAR-100 are randomly split as 20 classification tasks with 5 classes per task. (3) CIFAR-10/100 [10]: The 10-class CIFAR-10 are randomly split as 2 classification tasks with 5 classes per task, followed by 20 tasks with 5 classes per task randomly split from CIFAR-100. This benchmark is adapted from [10] to keep the number of classes per task the same as benchmark (1, 2), where the large amounts of training data in the first two CIFAR-10 tasks bring a relatively more positive transfer. (4) CUB-200 [10]: The 200 classes in CUB-200-2011 are randomly split as 10 classification tasks with 20 classes per task. (5) ImageNet-100 [21]: The 100 classes in ImageNet-100 are randomly split as 10 classification tasks with 10 classes per task.

Architecture: We follow [10] to use a CNN architecture with 6 convolution layers and 2 fully connected layers for benchmark (1, 2, 3), and AlexNet [14] for benchmark (4, 5). Since continual learning needs to quickly learn a usable model from incrementally collected data, we mainly consider learning the network from scratch. Following [10], we also try AlexNet with ImageNet pretraining for CUB-200.

| CIFAR-100-SC | CIFAR-100 | CIFAR-10/100 | CUB-200 w/ PT | CUB-200 w/o PT | ImageNet-100 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Methods | ||||||||||||

| Fine-tuning | 32.58 | 28.40 | 40.92 | 33.53 | 78.96 | 37.81 | 78.75 | 78.13 | 31.91 | 39.82 | 50.56 | 44.80 |

| P&C [26] | 53.48 | 52.88 | 70.10 | 70.21 | 86.72 | 78.29 | 81.42 | 81.74 | 33.88 | 42.79 | 76.44 | 74.38 |

| AGS-CL [10] | 55.19 | 53.19 | 71.24 | 69.99 | 86.27 | 80.42 | 82.30 | 81.84 | 32.69 | 40.73 | 51.48 | 47.20 |

| EWC [12] | 52.25 | 51.74 | 68.72 | 69.18 | 85.07 | 77.75 | 81.37 | 80.92 | 32.90 | 42.29 | 76.12 | 73.82 |

| ∗AFEC (ours) | 56.28 | 55.24 | 72.36 | 72.29 | 86.87 | 81.25 | 83.65 | 82.04 | 34.36 | 43.05 | 77.64 | 75.46 |

| \cdashline1-13[2pt/2pt] MAS [1] | 52.76 | 52.18 | 67.60 | 69.41 | 84.97 | 77.39 | 79.98 | 79.67 | 31.68 | 42.56 | 75.48 | 74.72 |

| w/ AFEC (ours) | 55.26 | 54.89 | 69.57 | 71.20 | 86.21 | 80.01 | 82.77 | 81.31 | 34.08 | 42.93 | 75.64 | 75.66 |

| \cdashline1-13[2pt/2pt] SI [36] | 52.20 | 51.97 | 68.72 | 69.21 | 85.00 | 76.69 | 80.14 | 80.21 | 33.08 | 42.03 | 73.52 | 72.97 |

| w/ AFEC (ours) | 55.25 | 53.90 | 69.34 | 70.13 | 85.71 | 78.49 | 83.06 | 81.88 | 34.04 | 43.20 | 75.72 | 74.14 |

| \cdashline1-13[2pt/2pt] RWALK [2] | 50.51 | 49.62 | 66.02 | 66.90 | 85.59 | 73.64 | 80.81 | 80.58 | 32.56 | 41.94 | 73.24 | 73.22 |

| w/ AFEC (ours) | 52.62 | 51.76 | 68.50 | 69.12 | 86.12 | 77.16 | 83.24 | 81.95 | 33.35 | 42.95 | 74.64 | 73.86 |

Baseline: First, we consider a restrict yet realistic setting of continual learning without access to the old training data, and perform multi-head evaluation [2]. Since AFEC is a weight regularization approach, we mainly compare with representative approaches that follow a similar idea, such as EWC [12], MAS [1], SI [36] and RWALK [2]. We also compare with AGS-CL [10], the SOTA method that takes advantage of weight regularization and parameter isolation, and P&C [26], which learns an additional active column on the basis of EWC to improve forward knowledge transfer. We reproduce the results of all the baselines from the officially released code of [10], where we do an extensive hyperparameter search and report the best performance for fair comparison (detailed in Appendix C). Then, we relax the restriction of using old training data and plug AFEC in representative memory replay approaches, where we perform single-head evaluation [2] (detailed in Appendix F).

Averaged Accuracy: In Table 2, we summarize the averaged accuracy of all the tasks learned so far during continual learning of visual classification tasks. AFEC achieves the best performance on all the continual learning benchmarks and is much better than EWC [12], i.e., the ablation of active forgetting in AFEC. In particular, AGS-CL [10] is the SOTA method on relatively small-scale images and on CUB-200 with ImageNet pretraining (CUB-200 w/ PT). While, AFEC achieves a better performance than AGS-CL on small-scale images from scratch and CUB-200 w/ PT, and substantially outperforms AGS-CL on the two benchmarks of large-scale images from scratch. Further, since regular weight regularization approaches are generally in a re-weighted weight decay form, AFEC can be easily adapted to such approaches (the adaptation is detailed in Appendix E) and effectively boost their performance on the benchmarks above.

Knowledge Transfer: Next, we evaluate knowledge transfer in the three continual learning benchmarks developed on CIFAR-100 in Fig. 5. We first present the accuracy of learning each new task in continual learning, where AFEC learns each new task much better than other baselines. Since continual learning of more tasks leads to less network resources for a new task, the overall trend of all the baselines is declining, indicating the necessity to improve forward knowledge transfer on the basis of overcoming catastrophic forgetting. Then we calculate forward transfer (FWT) [17], i.e., the averaged influence that learning the previous tasks has on a future task, and backward transfer (BWT) [17], i.e., the averaged influence that learning a new task has on the previous tasks (detailed in Appendix D). FWT is from more negative to more positive in CIFAR-100-SC, CIFAR-100 and CIFAR-10/100, while AFEC achieves the highest FWT among all the baselines. The BWT of AFEC is comparable as EWC, indicating that the proposed active forgetting does not cause additional catastrophic forgetting. Therefore, the performance improvement of AFEC in Table 2 is achieved by effectively improving the learning of new tasks in continual learning. In particular, AFEC achieves a much larger improvement on the learning of new tasks than P&C, which attempted to improve forward transfer of EWC through learning an additional active column. Due to the progressive parameter isolation, although AGS-CL achieves the best BWT, its ability of learning each new task drops more rapidly than other baselines. Thus, it underperforms AFEC in Table 2.

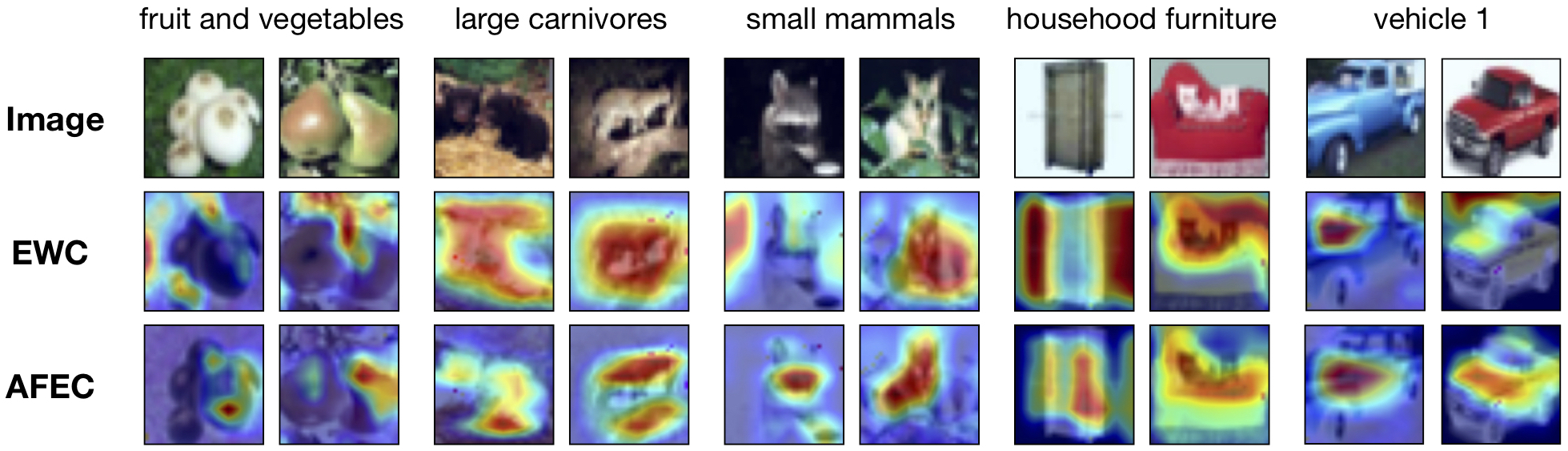

Visual Explanation: To explicitly show how AFEC improves continual learning, in Fig. 6 we use Grad-CAM [27] to visualize predictions of the latest task after continual learning on CIFAR-100-SC, where FWT is more negative as discussed above. The predictions of EWC overfit the background information since it attempts to best remember the old tasks with severe negative transfer, which limits the learning of new tasks. In contrast, the visual explanation of AFEC is much more reasonable than EWC, indicating the efficacy of active forgetting to address potential negative transfer and benefit the learning of new tasks.

Plugging-in Memory Replay: We further implement AFEC in representative memory replay approaches in Appendix F, where we perform single-head evaluation [2]. On CIFAR-100 and ImageNet-100 datasets, we follow [9, 6] that first learn 50 classes and then continually learn the other 50 classes by 5 phases (10 classes per phase) or 10 phases (5 classes per phase), using a small memory buffer of 20 images per class. AFEC substantially boosts the performance of representative memory replay approaches such as iCaRL [21], LUCIR [9] and PODNet [6].

4.3 Atari Reinforcement Tasks

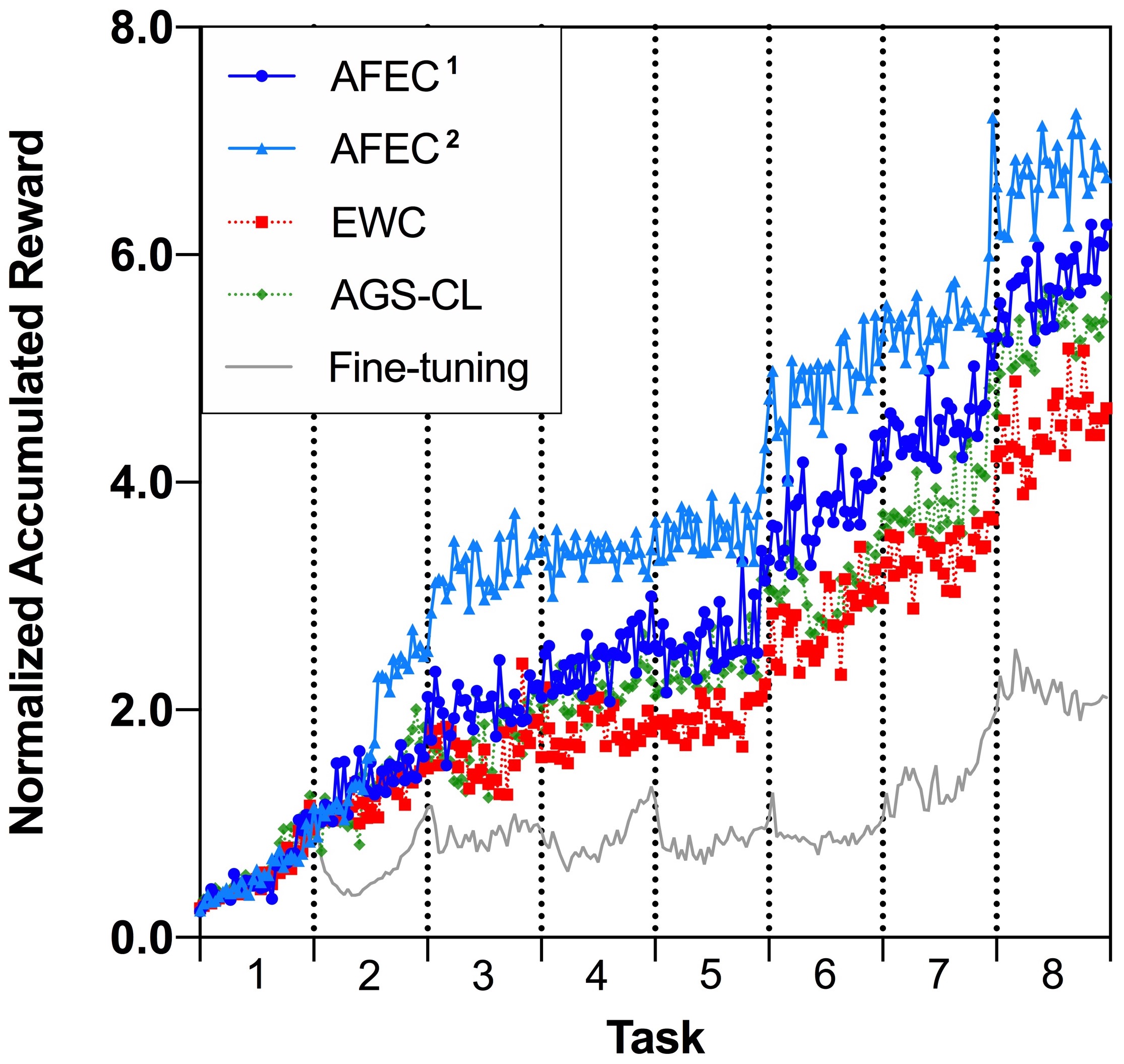

Next, we evaluate AFEC in continual learning of Atari reinforcement tasks (Atari games). We follow the implementation of [10] to sequentially learn eight randomly selected Atari games. Specifically, we applies a CNN architecture consisting of 3 convolution layers with 2 fully connected layers and identical PPO [25] for all the methods (detailed in Appendix G). The evaluation metric is the normalized accumulated reward: the evaluated rewards are normalized with the maximum reward of fine-tuning on each task, and accumulated. We present the results of three different orders of task sequence, averaged by five runs with different random initialization.

| Sequence 1 | Sequence 2 | Sequence 3 | |

|---|---|---|---|

| AFEC1 on EWC | +35.28 | +50.55 | +28.00 |

| AFEC2 on MAS | +30.09 | +61.12 | +26.63 |

For continual learning of Atari reinforcement tasks, forward knowledge transfer is severely negative, possibly because the optimal policies of each Atari games are highly different. We first measure the normalized rewards of learning each task with a randomly initialized network, which are 2.16, 1.44 and 1.68 on the three task sequences, respectively. That is to say, the initialization learned from the old tasks results in an averaged performance decline by , and , compared with random initialization. Then, we evaluate the maximum reward of learning each new task in Table 3, and the normalized accumulated reward of continual learning in Fig. 7. AFEC effectively improves the learning of new tasks and thus boosts the performance of EWC and MAS, particularly when learning more incremental tasks. AFEC also achieves a much better performance than the reproduced results of AGS-CL on its officially released code [10] (see Appendix G for an extensive analysis).

5 Conclusion

In this work, we draw inspirations from the biological active forgetting and propose a novel approach to mitigate potential negative transfer in continual learning. Our method achieves the SOTA performance on a variety of continual learning benchmarks through effectively improving the learning of new tasks, and boosts representative continual learning strategies in a plug-and-play way. Intriguingly, derived from active forgetting of the past with Bayesian continual learning, we obtain the algorithm that is formally consistent with the synaptic expansion and synaptic convergence (detailed Appendix A), and is functionally consistent with the advantage of biological active forgetting in memory flexibility [5]. This connection provides a potential theoretical explanation of how the underlying mechanism of biological active forgetting achieves its function of forgetting the past and continually learning conflicting experiences. We will further explore it with artificial neural networks and biological neural networks in the future.

Acknowledgements

This work was supported by NSF of China Projects (Nos. 62061136001, 61620106010, U19B2034, U181146, 62076145), Beijing NSF Project (No. JQ19016), Tsinghua-Peking Center for Life Sciences, Beijing Academy of Artificial Intelligence (BAAI), Tsinghua-Huawei Joint Research Program, a grant from Tsinghua Institute for Guo Qiang, and the NVIDIA NVAIL Program with GPU/DGX Acceleration.

References

- Aljundi et al. [2018] Rahaf Aljundi, Francesca Babiloni, Mohamed Elhoseiny, Marcus Rohrbach, and Tinne Tuytelaars. Memory aware synapses: Learning what (not) to forget. In Proceedings of the European Conference on Computer Vision (ECCV), pages 139–154, 2018.

- Chaudhry et al. [2018] Arslan Chaudhry, Puneet K Dokania, Thalaiyasingam Ajanthan, and Philip HS Torr. Riemannian walk for incremental learning: Understanding forgetting and intransigence. In Proceedings of the European Conference on Computer Vision (ECCV), pages 532–547, 2018.

- Chen et al. [2019] Xinyang Chen, Sinan Wang, Bo Fu, Mingsheng Long, and Jianmin Wang. Catastrophic forgetting meets negative transfer: Batch spectral shrinkage for safe transfer learning. In Advances in Neural Information Processing Systems, pages 1908–1918, 2019.

- Díaz-Rodríguez et al. [2018] Natalia Díaz-Rodríguez, Vincenzo Lomonaco, David Filliat, and Davide Maltoni. Don’t forget, there is more than forgetting: new metrics for continual learning. arXiv preprint arXiv:1810.13166, 2018.

- Dong et al. [2016] Tao Dong, Jing He, Shiqing Wang, Lianzhang Wang, Yuqi Cheng, and Yi Zhong. Inability to activate rac1-dependent forgetting contributes to behavioral inflexibility in mutants of multiple autism-risk genes. Proceedings of the National Academy of Sciences, 113(27):7644–7649, 2016.

- Douillard et al. [2020] Arthur Douillard, Matthieu Cord, Charles Ollion, Thomas Robert, and Eduardo Valle. Podnet: Pooled outputs distillation for small-tasks incremental learning. In Proceedings of the European Conference on Computer Vision (ECCV), volume 12365, pages 86–102, 2020.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778, 2016.

- Hinton [2002] Geoffrey E Hinton. Training products of experts by minimizing contrastive divergence. Neural Computation, 14(8):1771–1800, 2002.

- Hou et al. [2019] Saihui Hou, Xinyu Pan, Chen Change Loy, Zilei Wang, and Dahua Lin. Learning a unified classifier incrementally via rebalancing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 831–839, 2019.

- Jung et al. [2020] Sangwon Jung, Hongjoon Ahn, Sungmin Cha, and Taesup Moon. Continual learning with node-importance based adaptive group sparse regularization. arXiv e-prints, pages arXiv–2003, 2020.

- Kay [1993] Steven M Kay. Fundamentals of statistical signal processing. Prentice Hall PTR, 1993.

- Kirkpatrick et al. [2017] James Kirkpatrick, Razvan Pascanu, Neil Rabinowitz, Joel Veness, Guillaume Desjardins, Andrei A Rusu, Kieran Milan, John Quan, Tiago Ramalho, Agnieszka Grabska-Barwinska, et al. Overcoming catastrophic forgetting in neural networks. Proceedings of the National Academy of Sciences, 114(13):3521–3526, 2017.

- Krizhevsky et al. [2009] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. Technical report, Citeseer, 2009.

- Krizhevsky et al. [2012] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, volume 25, pages 1097–1105, 2012.

- LeCun [1998] Yann LeCun. The mnist database of handwritten digits. http://yann. lecun. com/exdb/mnist/, 1998.

- Liu et al. [2020] Yaoyao Liu, Bernt Schiele, and Qianru Sun. Meta-aggregating networks for class-incremental learning. arXiv preprint arXiv:2010.05063, 2020.

- Lopez-Paz et al. [2017] David Lopez-Paz et al. Gradient episodic memory for continual learning. In Advances in Neural Information Processing Systems, pages 6467–6476, 2017.

- McCloskey and Cohen [1989] Michael McCloskey and Neal J Cohen. Catastrophic interference in connectionist networks: The sequential learning problem. In Psychology of Learning and Motivation, volume 24, pages 109–165. Elsevier, 1989.

- Minka [2004] Thomas Minka. Power ep. Technical report, Microsoft Research, Cambridge, 2004.

- Nguyen et al. [2017] Cuong V Nguyen, Yingzhen Li, Thang D Bui, and Richard E Turner. Variational continual learning. arXiv preprint arXiv:1710.10628, 2017.

- Rebuffi et al. [2017] Sylvestre-Alvise Rebuffi, Alexander Kolesnikov, Georg Sperl, and Christoph H Lampert. icarl: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2001–2010, 2017.

- Riemer et al. [2018] Matthew Riemer, Ignacio Cases, Robert Ajemian, Miao Liu, Irina Rish, Yuhai Tu, and Gerald Tesauro. Learning to learn without forgetting by maximizing transfer and minimizing interference. arXiv preprint arXiv:1810.11910, 2018.

- Russakovsky et al. [2015] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. Imagenet large scale visual recognition challenge. International Journal of Computer Vision, 115(3):211–252, 2015.

- Rusu et al. [2016] Andrei A Rusu, Neil C Rabinowitz, Guillaume Desjardins, Hubert Soyer, James Kirkpatrick, Koray Kavukcuoglu, Razvan Pascanu, and Raia Hadsell. Progressive neural networks. arXiv preprint arXiv:1606.04671, 2016.

- Schulman et al. [2017] John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- Schwarz et al. [2018] Jonathan Schwarz, Wojciech Czarnecki, Jelena Luketina, Agnieszka Grabska-Barwinska, Yee Whye Teh, Razvan Pascanu, and Raia Hadsell. Progress & compress: A scalable framework for continual learning. In Proceedings of International Conference on Machine Learning, pages 4528–4537. PMLR, 2018.

- Selvaraju et al. [2017] Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 618–626, 2017.

- Shuai et al. [2010] Yichun Shuai, Binyan Lu, Ying Hu, Lianzhang Wang, Kan Sun, and Yi Zhong. Forgetting is regulated through rac activity in drosophila. Cell, 140(4):579–589, 2010.

- Simonyan and Zisserman [2014] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- Wah et al. [2011] Catherine Wah, Steve Branson, Peter Welinder, Pietro Perona, and Serge Belongie. The caltech-ucsd birds-200-2011 dataset. 2011.

- Wang et al. [2021a] Liyuan Wang, Bo Lei, Qian Li, Hang Su, Jun Zhu, and Yi Zhong. Triple-memory networks: A brain-inspired method for continual learning. IEEE Transactions on Neural Networks and Learning Systems, 2021a.

- Wang et al. [2021b] Liyuan Wang, Kuo Yang, Chongxuan Li, Lanqing Hong, Zhenguo Li, and Jun Zhu. Ordisco: Effective and efficient usage of incremental unlabeled data for semi-supervised continual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5383–5392, 2021b.

- Wang et al. [2021c] Shipeng Wang, Xiaorong Li, Jian Sun, and Zongben Xu. Training networks in null space of covariance for continual learning. arXiv preprint arXiv:2103.07113, 2021c.

- Yan et al. [2021] Shipeng Yan, Jiangwei Xie, and Xuming He. Der: Dynamically expandable representation for class incremental learning. arXiv preprint arXiv:2103.16788, 2021.

- Yoon et al. [2019] Jaehong Yoon, Saehoon Kim, Eunho Yang, and Sung Ju Hwang. Scalable and order-robust continual learning with additive parameter decomposition. arXiv preprint arXiv:1902.09432, 2019.

- Zenke et al. [2017] Friedemann Zenke, Ben Poole, and Surya Ganguli. Continual learning through synaptic intelligence. In Proceedings of International Conference on Machine Learning, pages 3987–3995, 2017.

- Zhang et al. [2020] Wen Zhang, Lingfei Deng, and Dongrui Wu. Overcoming negative transfer: A survey. arXiv preprint arXiv:2009.00909, 2020.

Appendix A Neural Mechanism of Biological Active Forgetting

Forgetting is an important mechanism in biological learning and memory. The biological forgetting is not simply passive, but can be actively regulated by specialized signaling pathways. An identified pathway is called Rac1 signaling pathway, where the active forgetting regulated by Rac1 signaling pathway is called Rac1-dependent active forgetting [28]. So, why do organisms evolve such a mechanism to actively forget the learned information? A study discovered that the abnormality of Rac1-dependent active forgetting results in severe defects of memory flexibility, where the organisms cannot effectively learn a new experience that conflicts with the old memory [5].

However, the understanding to the neural mechanism of active forgetting is still limited. Our latest biological data in drosophila indicated that Rac1-dependent active forgetting is achieved by regulating a synaptic expansion-convergence process. Specifically, learning of a new experience triggers the increase and subsequent elimination in the number of presynaptic active zones (AZs, i.e., the site of neurotransmitter release), which is regulated by Rac1 signaling pathway (Fig. 8). After learning an aversive olfactory conditioning task, the number of AZs is significantly increased followed by elimination within the mushroom body lobe where a new memory is formed (Fig. 8, a, b). The time course of AZ addition-induced elimination is in parallel with Rac1-dependent active forgetting that lasts for only hours (Fig. 8, a, b). In particular, inhibition of Rac1 and its downstream Dia specifically blocks the increase of the number rather than the size of AZs. Suppressing activity of either Rac1 or its downstream signaling pathway blocks AZ addition. Such manipulations that block AZ addition-induced elimination all prevent forgetting.111The detailed biological evidence will be published elsewhere, so we do not include them in the published version of this paper.

The evidences above suggested that Rac1-dependent active forgetting is achieved by regulating the learning-triggered synaptic expansion-convergence, both sufficiently and necessarily. Since Rac1-dependent active forgetting is critical for organisms to continually learn a new task that conflicts with the old knowledge [5], we adapt the synaptic expansion-convergence to the scenario of continual learning (see Fig. 8, c). After learning the historical experiences (task ), the neural network continually learns a new experience (task ). The learning of task triggers the synaptic expansion, where both the expanded and the old AZs can learn the new experience. While, the subsequent synaptic convergence eliminates the AZs to the amount before learning.

Appendix B Computation Details

B.1 Laplace Approximation

The objective of continual learning is to estimate , which can be written as:

| (11) |

As the posterior is generally intractable (except very special cases), we must resort to approximation methods, such as the Laplace approximation [12]. If is smooth and majorly peaked around its point of maxima (i.e., ), we can approximate it with a Gaussian distribution with mean and variance . To determine and of the Gaussian distribution, we begin with computing the second order Taylor expansion of a function around as follows:

| (12) |

where is the higher order term. Neglecting the higher order term, we have:

| (13) |

Now we approximate with Eqn. (13). Noting that , we have:

| (14) |

Then, we can rewrite Eqn. (14) to obtain the Laplace approximation of as:

| (15) |

| (16) |

The variance represents the inverse of Fisher Information matrix (FIM) , which can be approximated from the first order derivatives to avoid computing the Hessian matrix [11]:

| (17) |

Taking Eqn. (14) and Eqn. (11) together, we obtain the objective of EWC [12] in Eqn. (5). To address continual learning of more than two tasks, we follow [12] that averages the FIM among all the tasks ever seen for EWC and AFEC. If the network continually learns tasks, we compute the FIM of the current task as and update the FIM of all the old tasks as

| (18) |

In Eqn. (9), the posterior can be similarly approximated as a Gaussian distribution with mean and variance . In particular, the inverse of can be computed as:

| (19) |

B.2 Weighted Product Distribution with Forgetting Factor

Here we prove that if two distribution , , then we can find a normalizer that depends on to keep following a Gaussian distribution. The probability density functions of and are

| (20) |

| (21) |

So we get

| (22) |

where

| (23) |

| (24) |

| (25) |

Then we get

| (26) |

| (27) |

B.3 New Log Posterior of AFEC

In AFEC, the original posterior is replaced by defined in Eqn. (6). Then the new log posterior becomes:

| (28) |

where only depends on and is constant to . Note that Eqn. (28) can be further derived as

| (29) |

| (30) |

As proved in Appendix B.2, the new posterior follows a Gaussian distribution if and are both Gaussian. Therefore, we can use a Laplace approximation of it, as discussed in the main text.

B.4 Continual Learning of More than Two Tasks

Here we discuss the scenario of continual learning of more than two tasks. First, let’s consider the case of three tasks, where the neural network continually learns task from its training dataset after learning task and task with active forgetting. Now we use the old posterior as the prior to incorporate the new task, where is the forgetting factor used to learn task :

| (31) |

To mitigate potential negative transfer to task , we replace that absorbs all the information of and with

| (32) |

is the forgetting factor to learn task . is the normalizer that depends on , which keeps following a Gaussian distribution if and are both Gaussian (proved in Appendix B.2). Next, we use a Laplace approximation of , and the MAP estimation is

| (33) |

Then we obtain the loss function to learn the third task:

| (34) |

where is the loss for task , has been obtained after learning task and task , and is the FIM updated with Eqn. (18). is obtained by optimizing the expanded parameter with and its FIM is calculated similarly as Eqn. (19).

Similarly, for continual learning of more tasks, where a neural network with parameter continually learns tasks from their task specific training datasets (), the loss function is

| (35) |

To demonstrate our method more clearly, we provide a pseudocode as below:

Appendix C Details of Visual Classification Tasks

C.1 Implementation

| Methods | Hyperparameter | CIFAR-100-SC | CIFAR-100 | CIFAR-10/100 | 1CUB-200 w/ PT | CUB-200 w/o PT | ImageNet-100 |

|---|---|---|---|---|---|---|---|

| 2AGS-CL [10] | 400, 800, 1600, 3200, 6400 | 400, 800, 1600, 3200 | 1000, 4000, 7000, 10000 | 1.5 | 0.0001, 0.001, 0.01, 0.1, 1, 1.5 | 0.0001, 0.001, 0.01, 0.1, 1, 1.5, 3 | |

| 5, 10, 20, 40 | 5, 10, 20 | 10, 20, 40 | 0.5 | 0.001, 0.01, 0.1, 0.5 | 0.0001, 0.001, 0.01, 0.1, 0.5 | ||

| 0.3 | 0.3 | 0.2 | 0.1 | 0.05, 0.1, 0.2 | 0.05, 0.1, 0.2 | ||

| EWC [12] | 10000, 20000, 40000, 80000 | 10000, 20000, 40000 | 10000, 25000, 50000, 100000 | 40 | 0.1, 1, 5, 10, 20, 40, 80 | 40, 80, 160, 320 | |

| 3P&C [26] | 10000, 20000, 40000, 80000 | 10000, 20000, 40000 | 10000, 25000, 50000, 100000 | 40 | 0.1, 1, 10 | 40, 80, 160 | |

| MAS [1] | 4, 8, 16, 32 | 2, 4, 8 | 1, 2, 5, 10 | 0.6 | 0.001, 0.01, 0.05, 0.5, 1.2 | 0.01, 0.03, 0.1, 0.3, 1 | |

| SI [36] | 4, 8, 16, 32 | 2, 4, 8, 10, 20 | 0.7, 3, 6, 12 | 0.75 | 0.1, 0.2, 0.4, 0.75, 1.5 | 0.3, 1, 3, 10 | |

| RWALK [2] | 64, 128, 256 | 1, 3, 6, 8 | 6, 12, 24, 48, 96 | 50 | 10, 25, 50, 100 | 0.3, 1, 3, 10 | |

| 4 w/ AFEC (ours) | 0.1, 1, 10 | 0.1, 1, 10 | 0.1, 1, 10 | 0.1, 0.01, 0.001, 0.0001 | 1, 0.1, 0.01, 0.001 | 0.01, 0.001, 0.0001 |

We follow the implementation of [10] for visual classification tasks with small-scale and large-scale images. For CIFAR-100-SC, CIFAR-100 and CIFAR-10/100, we use Adam optimizer with initial learning rate 0.001 to train all methods with mini-batch size of 256 for 100 epochs. For CUB-200 w/ PT, CUB-200 w/o PT and ImageNet-100, we use SGD with momentum 0.9 and initial learning rate 0.005 to train all methods with mini-batch size of 64 for 40 epochs. We make an extensive hyperparameter search of all methods and report the best performance for fair comparison. The range of hyperparameter search and the selected hyperparameter are summarized in Table 4. Due to the space limit, we include error bar (standard deviation) of the classification results in Table. 5.

C.2 Longer Task Sequence

We further evaluate AFEC on 50-split Omniglot [10]. The averaged accuracy of the first 25 tasks is 66.45% for EWC and 84.08% for AFEC, while the averaged accuracy of all the 50 tasks is 76.53% for EWC and 83.00% for AFEC, respectively. Therefore, AFEC can still effectively improve continual learning for a much larger number of tasks.

Appendix D ACC, FWT and BWT

We evaluate continual learning of visual classification tasks by three metrics: averaged accuracy (AAC), forward transfer (FWT) and backward transfer (BWT) [17].

| (36) |

| (37) |

| (38) |

where is the test accuracy task after continual learning of task , and is the test accuracy of each task at random initialization. Averaged accuracy (ACC) is the averaged performance on all the tasks ever seen to evaluate the performance of both the old tasks and the new tasks.

Forward transfer (FWT) indicates the averaged influence that learning a task has on a future task, which can be either positive or negative. If a new task conflicts with the old tasks, negative transfer will substantially decrease the performance on the task sequence, which is a common issue for existing continual learning strategies. Since AFEC aims to improve the learning of new tasks in continual learning, FWT should reflect this advantage.

Backward transfer (BWT) indicates the averaged influence that learning a new task has on the old tasks. Positive BWT exists when learning of a new task increases the performance on the old tasks. On the other hand, negative BWT exists when learning of a task decreases the performance on the old tasks, which is also known as catastrophic forgetting.

| CIFAR-100-SC | CIFAR-100 | CIFAR-10/100 | CUB-200 w/ PT | CUB-200 w/o PT | ImageNet-100 | |||||||

| Methods | ||||||||||||

| Fine-tuning | 32.58 | 28.40 | 40.92 4.33 | 33.53 | 78.96 | 37.81 | 78.75 | 78.13 | 31.91 | 39.82 | 50.56 1.97 | 44.80 2.65 |

| P&C [26] | 53.48 | 52.88 | 70.10 | 70.21 1.22 | 86.72 | 78.29 | 81.42 | 81.74 | 33.88 | 42.79 | 76.44 | 74.38 |

| AGS-CL [10] | 55.19 | 53.19 | 71.24 | 69.99 | 86.27 | 80.42 | 82.30 | 81.84 | 32.69 | 40.73 | 51.48 | 47.20 |

| EWC [12] | 52.25 | 51.74 | 68.72 | 69.18 | 85.07 | 77.75 | 81.37 | 80.92 | 32.90 | 42.29 | 76.12 | 73.82 |

| AFEC (ours) | 56.28 | 55.24 | 72.36 | 72.29 | 86.87 | 81.25 | 83.65 | 82.04 | 34.36 | 43.05 | 77.64 | 75.46 |

| \cdashline1-13[2pt/2pt] MAS [1] | 52.76 | 52.18 | 67.60 | 69.41 | 84.97 | 77.39 | 79.98 | 79.67 | 31.68 | 42.56 | 75.48 | 74.72 |

| w/ AFEC (ours) | 55.26 | 54.89 | 69.57 | 71.20 | 86.21 | 80.01 | 82.77 | 81.31 | 34.08 | 42.93 | 75.64 | 75.66 1.33 |

| \cdashline1-13[2pt/2pt] SI [36] | 52.20 | 51.97 | 68.72 | 69.21 | 85.00 | 76.69 | 80.14 | 80.21 | 33.08 | 42.03 | 73.52 | 72.97 |

| w/ AFEC (ours) | 55.25 | 53.90 | 69.34 | 70.13 | 85.71 | 78.49 | 83.06 | 81.88 | 34.04 | 43.20 | 75.72 1.06 | 74.14 |

| \cdashline1-13[2pt/2pt] RWALK [2] | 50.51 | 49.62 | 66.02 | 66.90 | 85.59 | 73.64 | 80.81 | 80.58 | 32.56 | 41.94 | 73.24 | 73.22 |

| w/ AFEC (ours) | 52.62 | 51.76 | 68.50 | 69.12 | 86.12 | 77.16 | 83.24 | 81.95 | 33.35 | 42.95 | 74.64 | 73.86 |

Appendix E Adapt AFEC to Representative Weight Regularization Approaches

Regular weight regularization approaches, such as EWC [12], MAS [1], SI [36] and RWALK [2], generally add a regularization (Reg) term to penalize changes of the important parameters for the old tasks. The loss function of such methods can be written as:

| (39) |

where is the hyperparameter that explicitly controls the strength to remember task , and indicates the “importance” of parameter to task .

Through plugging-in the regularization term of active forgetting, AFEC can be naturally adapted to regular weight regularization approaches. Here we consider a simple adaptation, and validate its effectiveness in Table 2:

| (40) |

where we learn the expanded parameters with to obtain , and calculate with Eqn. (19).

Appendix F Adapt AFEC to Representative Memory Replay Approaches

| CIFAR-100 | ImageNet-100 | |||

|---|---|---|---|---|

| Methods | 5-phase | 10-phase | 5-phase | 10-phase |

| iCaRL [21] | 57.12 | 52.66 | 65.44 | 59.88 |

| w/ AFEC (ours) | 62.76 | 59.00 | 70.75 | 65.62 |

| \cdashline1-5[2pt/2pt] LUCIR [9] | 63.17 | 60.14 | 70.84 | 68.32 |

| w/ AFEC (ours) | 64.47 | 62.26 | 73.38 | 70.20 |

| \cdashline1-5[2pt/2pt] PODNet [6] | 64.83 | 63.19 | 75.54 | 74.33 |

| w/ AFEC (ours) | 65.86 | 63.79 | 76.90 | 75.80 |

Here we relax the restriction of accessing to old training data, and plug AFEC in representative memory replay approaches such as iCaRL [21], LUCIR [9] and PODNet [6] with single-head evaluation [2]. We follow the setting widely-used in the above memory replay approaches that the neural network first learns 50 classes and then continually learns the other 50 classes by 5 phases (10 classes per phase) or 10 phases (5 classes per phase) with a small memory buffer of 20 images per class [21, 9, 6]. We implement AFEC in the officially released code of corresponding methods for fair comparison. For CIFAR-100, we use ResNet32 and train each model for 160 epochs with minibatch size of 128 and weight decay of . For ImageNet-100, we use ResNet18 and train each model for 90 epochs with minibatch size of 128 and weight decay of . For all the datasets, we use a SGD optimizer with momentum of 0.9, initial learning rate of 0.1 and cosine annealing scheduling. As shown in Table 6, AFEC substantially boosts the performance of representative memory replay approaches such as iCaRL [21], LUCIR [9] and PODNet [6].

Appendix G Details of Atari Reinforcement Tasks

G.1 Implementation

The officially released code of [10] provided the implementations of EWC, AGS-CL and fine-tuning. We reproduce the above baselines, and implement MAS, AFEC1 and AFEC2 on it with the same hyperparameters of PPO [25] and training details. Specifically, we use Adam optimizer with the initial learning rate of 0.0003 and evaluate the normalized accumulated reward of all the tasks ever seen for 30 times during training each task. Also, we follow [10] to search the hyperparameters of AGS-CL (; ), EWC () and MAS ().

We observe that the normalized rewards obtained in continual learning are highly unstable in different runs and random seeds for all the baselines, possibly because the optimal policies for Atari games are highly different from each other, which results in severe negative transfer. Thus, we average the performance for five runs with different random seeds to acquire consistent results, and evaluate three orders of the task sequence as below:

Sequence 1 (the original task order used in [10]): StarGunner - Boxing - VideoPinball - Crazyclimber - Gopher - Robotank - DemonAttack - NameThisGame

Sequence 2: DemonAttack - Robotank - Boxing - NameThisGame - StarGunner - Gopher - VideoPinball - Crazyclimber

Sequence 3: Crazyclimber - Robotank - Gopher - NameThisGame - DemonAttack - StarGunner - Boxing - VideoPinball

G.2 Reproduced Results of AGS-CL

Unfortunately, the officially released code cannot reproduce the reported performance of AGS-CL [10]. For the reported performance, the normalized rewards of AGS-CL are significantly higher than EWC on Task 1 and Task 7, while are comparable with or slightly higher than EWC on the other six tasks. Thus, the reported accumulated performance of AGS-CL significantly outperforms EWC [10]. However, the officially released code cannot reproduce the advantage of AGS-CL on Task 1 and Task 7, so the reproduced accumulated performance of AGS-CL only slightly outperforms EWC but underperforms both AFEC1 and AFEC2, as shown in Fig. 9.