Coventry University, Coventry, CV1 5FB, UK 11email:

{Dorian.Florescu, Matthew.England}@coventry.ac.uk

Algorithmically generating new algebraic

features of polynomial systems

for machine learning

Abstract

There are a variety of choices to be made in both computer algebra systems (CASs) and satisfiability modulo theory (SMT) solvers which can impact performance without affecting mathematical correctness. Such choices are candidates for machine learning (ML) approaches, however, there are difficulties in applying standard ML techniques, such as the efficient identification of ML features from input data which is typically a polynomial system. Our focus is selecting the variable ordering for cylindrical algebraic decomposition (CAD), an important algorithm implemented in several CASs, and now also SMT-solvers. We created a framework to describe all the previously identified ML features for the problem and then enumerated all options in this framework to automatically generation many more features. We validate the usefulness of these with an experiment which shows that an ML choice for CAD variable ordering is superior to those made by human created heuristics, and further improved with these additional features. We expect that this technique of feature generation could be useful for other choices related to CAD, or even choices for other algorithms with polynomial systems for input.

Keywords:

machine learning; feature generation; non-linear real arithmetic; symbolic computation; cylindrical algebraic decomposition1 Introduction

Machine Learning (ML), that is statistical techniques to give computer systems the ability to learn rules from data, is a topic that has found great success in a diverse range of fields over recent years. ML is most attractive when the underlying functional relationship to be modelled is complex or not well understood. Hence ML has yet to make a large impact in the fields which form SC2, Symbolic Computation and Satisfiability Checking [1], since these prize mathematical correctness and seek to understand underlying functional relationships. However, as most developers would acknowledge, our software usually comes with a range of choices which, while having no effect on the correctness of the end result, could have a great effect on the resources required to find it. These choices range from the low level (in what order to perform a search that may terminate early) to the high (which of a set of competing exact algorithms to use for this problem instance). In making such choices we may be faced with decisions where relationships are not fully understood, but are not the key object of study.

In practice such choices may be made by man-made heuristics based on some experimentation (e.g. [18]) or magic constants where crossing a single threshold changes system behaviour [11]. It is likely that many of these decisions could be improved by allowing learning algorithms to analyse the data. The broad topic of this paper is ML for algorithm choices where the input is a set of polynomials, which encompasses a variety of tools in computer algebra systems and the SMT theory of [Quantifier Free] Non-Linear Real Arithmetic, [QF]NRA.

There has been little research on the use of ML in computer algebra: only [28] [27] [24] on the topic of CAD variable ordering choice; [26], [27] on the question of whether to precondition CAD with Groebner Bases; and [31] on deciding the order of sub-formulae solving for a QE procedure. Within SMT there has been significant work on the Boolean logic side e.g. the portfolio SAT solver SATZilla [45] and MapleSAT [33] which views solver branching as an optimisation problem. However there is little work on the use of ML to choose or optimise theory solvers. We note that other fields of mathematical software are ahead in the use of ML, most notably the automated reasoning community (see e.g. [42], [32], [7], or the brief survey in [19]).

1.1 Difficulties with ML for problems in NRA

There are difficulties in applying standard ML techniques to problems in NRA. One is the lack of sufficiently large datasets, which is addressed only partially by the SMT-LIB. The experiment in [26] found that the [QF]NRA sections of the SMT-LIB too uniform, and had to resort to random generated examples (although the state of benchmarking in computer algebra is far worse [22]). There have been improvements since then, with the benchmarks increasing both in number and diversity of underlying application. For example, there are now problems arising from biology [4], [23] and economics [37], [38].

Another difficulty is the identification of suitable features from the input with which to train the ML models. There are some obvious candidates concerning the size and degrees of polynomials, and the distribution of variables. However, this provides a starting set (i.e. before any feature selection takes place) that is small in comparison to other machine learning applications. The main focus of this paper is to introduce a method to automatically (and cheaply) generate further features for ML from polynomial systems.

1.2 Contribution and plan

Our main contributions are the new feature generation approach described in Section 3 and the validation of its use in the experiments described in Sections 45. The experiments are for the choice of variable ordering for cylindrical algebraic decomposition, a topic whose background we first present in Section 2, but we emphasise that the techniques may be applicable more broadly.

2 Background on variable ordering for CAD

2.1 Cylindrical algebraic decomposition

A Cylindrical Algebraic Decomposition (CAD) is a decomposition of ordered space into cells arranged cylindrically: the projections of any pair of cells with respect to the variable ordering are either equal or disjoint. The projections form an induced CAD of the lower dimensional space. The cells are (semi)-algebraic meaning each can be described with a finite sequence of polynomial constraints.

A CAD is produced to be truth-invariant for a logical formula (so the formula is either true or false on each cell). Such a decomposition can then be used to perform Quantifier Elimination (QE) over the reals, i.e. given a quantified Tarski formula find an equivalent quantifier free formula over the reals. For example, QE would transform to the equivalent unquantified statement . A CAD over the -space could be used to ascertain this, so long as the variable ordering ensured that there was an induced CAD of -space. We test one sample point per cell and construct a quantifier free formula from the relevant semi-algebraic cell descriptions.

CAD was introduced by Collins in 1975 [15] and works relative to a set of polynomials. Collins’ CAD produces a decomposition so that each polynomial has constant sign on each cell (thus truth-invariant for any formula built with those polynomials). The algorithm first projects the polynomials into smaller and smaller dimensions; and then uses these to lift to incrementally build decompositions of larger and larger spaces according to the polynomials at that level. For further details on CAD see for example the collection [12].

2.2 Variable ordering

The definition of cylindricity and both stages of the algorithm are relative to an ordering of the variables. For example, given polynomials in variables ordered as we first project away and so on until we are left with polynomials univariate in . We then start lifting by decomposing the axis, and then the plane and so so on. The cylindricity condition refers to projections of cells in onto a space ) where . There have been numerous advances to CAD since its inception: new projection schemes [34], [36]; partial construction [16], [43]; symbolic-numeric lifting [40], [29]; adapting to the Boolean structure [5], [20]; and adaptations for SMT [30], [9]. However, in all cases, the need for a fixed variable ordering remains.

Depending on the application, the variable ordering may be determined, constrained, or free. QE, requires that quantified variables are eliminated first and that variables are eliminated in the order in which they are quantified. However, variables in blocks of the same quantifier (and the free variables) can be swapped, so there is partial freedom. Of course, in the SMT context there is only a single existentially quantified block and so there is a free choice of ordering. So the discriminant in the example above could have been found with any CAD which eliminates first. A CAD for the quadratic polynomial under ordering has only 27 cells, but needs 115 for the reverse ordering.

Since we can switch the order of quantified variables in a statement when the quantifier is the same, we also have some choice on the ordering of quantified variables. For example, a QE problem of the form could be solved by a CAD under either ordering or ordering .

The choice of variable ordering can have a great effect on the time and memory use of CAD, and the number of cells in the output. Further, Brown and Davenport presented a class of problems in which one variable ordering gave output of double exponential complexity in the number of variables and another output of a constant size [10].

2.3 Prior work on choosing the variable ordering

Heuristics have been developed to choose a variable ordering, with Dolzmann et al. [18] giving the best known study. After analysing a variety of metrics they proposed a heuristic, sotd, which constructs the full set of projection polynomials for each permitted ordering and selects the ordering whose corresponding set has the lowest sum of total degrees for each of the monomials in each of the polynomials. The second author demonstrated examples for which that heuristic could be misled in [6]; and then later showed that tailoring to an implementation could improve performance [21]. These heuristics all involved potentially costly projection operations on the input polynomials.

In [28] the second author of the present paper collaborated to use a support vector machine to choose which of three human made heuristics to believe when picking the variable ordering, based only on simple features of the input polynomials. The experiments identified substantial subclasses on which each of the three heuristics made the best decision, and demonstrated that the machine learned choice did significantly better than any one heuristic overall. This work was picked up again in [24] by the present authors, where ML was used to predict directly the variable ordering for CAD, leading to the shortest computing time, with experiments conducted for four different ML models.

3 Generating new features algorithmically

3.1 Existing features for sets of polynomials

An early heuristic for the choice of CAD variable ordering is that of Brown [8], which chooses a variable ordering according to the following criteria, starting with the first and breaking ties with successive ones.

-

(1)

Eliminate a variable first if it appears with the lowest overall degree in the input.

-

(2)

For each variable calculate the maximum total degree for the set of terms in the input in which it occurs. Eliminate first the variable for which this is lowest.

-

(3)

Eliminate a variable first if there is a smaller number of terms in the input which contain the variable.

Despite being computationally cheaper than the sotd heuristic (because the latter performs projections before measuring degrees) experiments in [28] suggested this simpler measure actually performs slightly better, although the key message from those experiments is that there were substantial subsets of problems for which each heuristic made a better choice than the others.

The Brown heuristic inspired almost all the features used by the authors of [28], [24] to perform ML for CAD variable ordering, with the full set of 11 features listed in Table 1 (column 3 will be explained later).

| # | Description | ||||

|---|---|---|---|---|---|

| 1 | Number of polynomials | ||||

| 2 | Maximum total degree of polynomials | ||||

| 3 | Maximum degree of among all polynomials | ||||

| 4 | Maximum degree of among all polynomials | ||||

| 5 | Maximum degree of among all polynomials | ||||

| 6 | Proportion of occurring in polynomials | ||||

| 7 | Proportion of occurring in polynomials | ||||

| 8 | Proportion of occurring in polynomials | ||||

| 9 | Proportion of occurring in monomials | ||||

| 10 | Proportion of occurring in monomials | ||||

| 11 | Proportion of occurring in monomials |

3.2 A new framework for generating polynomial features

Our new feature generation procedure is based on the observation that all the measurements taken by the Brown heauristic, and all those features used in [28], [24] can be formalised mathematically using a small number of functions. For simplicity, the following discussion will be restricted to polynomials of variables as these were used in the following experiments, but everything generalises in an obvious way to variables.

Let a problem instance be defined by a set of polynomials

| (1) |

This is the case for producing a sign-invariant CAD. Of course, any problem instance consisting of a logical formula whose atoms are polynomial sign conditions can also have such a set extracted.

In the following we define the notation for polynomial variables and coefficients that will be used throughout the manuscript. Each polynomial with index , for contains a different number of monomials, which will be labelled with index , where and denotes the number of monomials in polynomial . We note that these are just labels and are not setting an ordering themselves. The degrees corresponding to each of the variables are a function of and . These need to be explicitly labelled in order to allow a rigorous definition of our proposed procedure of feature generation.

We next write each polynomial as

| (2) |

Here, represents the polynomial variables (). Thus for each monomial in each polynomial there is a tuple of positive integers that label it. Then in turn we denote by the degree of variable in that monomial, and by the constant coefficient, i.e., tuple superscripts are giving a label for a monomial in a problem. The original indices are simply a labelling and not an ordering of the variables

Therefore, any one of our problem instances is uniquely represented by a set of sets

| (3) |

Observe now that each of Brown’s measures can be formalised as a vector of features for choosing a variable as follows.

-

(1)

Overall degree in the input of a variable: .

-

(2)

Maximum total degree of those terms in the input in which a variable occurs:

-

(3)

Number of terms in the input which contain the variable:

In the latter two we use the sign function to discriminate between monomials which contain a variable (sign of degree is positive) and those which do not (sign of degree is zero). Of course the sign of the degree is never negative.

Define now also the averaging functions

Then the features in Table 1 can be formalised similarly to Brown’s metrics, as shown in the third column of Table 1.

We can place all of these scalars into a single framework:

| (4) |

where

and , and are all taken from the set

In the above set , , and are all equal to the identity function.

For example, let . If , then .

3.3 Generating additional features

We will thus consider deriving all of the other features which fall into this framework, but to do so we must first impose a number of rules.

-

1.

The functions must all belong to distinct categories of function, i.e. one each of , , av, and sgn.

-

2.

Exactly one of the functions is computed over and exactly one is computed over (it may be the same one).

-

3.

The computation over is always performed by a function with an index greater or equal to that of the function computing over .

| max | av | sum | sgn |

|---|---|---|---|

| 0 | |||

| 0 | |||

| 0 | |||

| 0 | |||

| 0 | |||

| 0 | |||

| 1 | |||

| 1 | |||

| 1 | |||

| 1 | |||

| 1 | |||

| 1 |

The expression of can be interpreted as follows. The values are functions of variables and . Each of the functions either leave the function unchanged, or they turn it into a function of fewer variables (first into a function of , and then into a scalar value, representing the ML feature).

The rules above are justified as follows. Rule reduces the redundancy in the feature set. Rules and guarantee that the feature is well defined and is a scalar number. In particular, Rule is necessary because the computation over the terms in a polynomial is dependent on their number, which is not the same for all polynomials.

The final set has size for a problem with variables. This number is attained as follows: we have 12 possible distributions of indexes to the functions as shown in Table 2; then possible orderings of those functions; and 6 possible choices for . .

However, many of these features will be identical (e.g. to a different placement of the identify function). We do not identify these manually now: the task that is trivial for a given dataset, but substantial to do in generality.

4 Machine learning experiment with the new features

We now describe a ML experiment to choose the variable ordering for cylcindrical algebraic decomposition. The methodology here is similar to that in our recent paper [24] except for the addition of the extra features from Section 3. A more detailed discussion of the methodology can be found in [24].

4.1 Problem set

We use the nlsat dataset111Freely available from http://cs.nyu.edu/˜dejan/nonlinear/ produced to evaluate the work in [30], thus the problems are all fully existentially quantified. Although there are CAD algorithms that reduce what is being computed based on the quantifiers in the input (most notably via Partial CAD [16]), the conclusions drawn are likely to be applicable outside of the SAT context.

We use the problems with variables from this database, so each has a choice of six different variable orderings. We extracted only the polynomials involved, and randomly divided into two datasets for training () and testing (). Only the former was used to tune the parameters of the ML models.

4.2 Software

We used the CAD routine CylindricalAlgebraicDecompose which is part of the RegularChains Library for Maple. This algorithm builds decompositions first of -dimensional complex space before refining to a CAD of [14], [13], [3]. We ran the code in Maple but used an updated version of the RegularChains Library (http://www.regularchains.org). Training and evaluation of the ML models was done using the scikit-learn package [39] v0.20.2 for Python 2.7. The features for ML were extracted using code written in the sympy package v1.3 for Python 2.7, as was Brown’s heuristic. The sotd heuristic was implemented in Maple as part of the ProjectionCAD package [25].

4.3 Timings

CAD construction was timed in a Maple script that was called separately from Python for each CAD (to avoid Maple’s caching of results). The target variable ordering for ML was defined as the one that minimises the computing time for a given problem. All CAD function calls included a time limit. For the training dataset an initial time limit of seconds was used, doubled incrementally if all orderings timed out, until CAD completed for at least one ordering (a target variable ordering could be assigned for all problems using time limits no bigger than seconds). The problems in the testing dataset were processed with a larger time limit of seconds for all orderings (time outs set as s).

4.4 Feature simplification

When computed on a set of problems , some of the features turn out to be constant, i.e. Such features will have no benefit for ML and are removed. Further, other features may be repetitive, i.e. This repetition may represent a mathematical equality, or just be the case of the given dataset. Either way, they are merged into a single feature for the experiment. After this step, we are left with features: so while a large majority were redundant, we still have seven times those available in [28], [24].

4.5 Feature selection

Feature selection was performed with the training dataset to see if any features were redundant for the ML. We chose the Analysis of Variance (ANOVA) F-value to determine the importance of each feature for the classification task. Other choices we considered were unsuitable for our problem, e.g. the mutual information based selection requires very large amounts of data.

The training dataset consists of problems with variables, and each problem is assigned a target ordering, or class , where . Let denote problem number from the training dataset that is assigned class number , and , where denotes the number of problems that are assigned class Thus

The F-value for feature number is computed as follows [35].

| (5) |

where is the sample mean in class , and the overall mean of the data:

The numerator in (5) represents the between-class variability or explained variance and the denominator the within-class variability or unexplained variance.

Of the 78 features the three with the highest F-values were the following

The new features may be translated back into natural language. For example, feature is the proportion of monomials containing variable , averaged across all polynomials; feature the sum of the degrees of the variables in all monomials containing variable , averaged across all monomials and summed across all polynomials;and feature the maximum sum of the degrees of the variables in all monomials containing variable , summed across all polynomials.

Feature selection did not suggest to remove any features (they all contributed meaningful information), so we proceed with our experiment using all 78.

4.6 ML models

Four of the most commonly used deterministic ML models were tuned on the training data (for details on the methods see e.g. the textbook [2]).

Each model was trained using grid search -fold cross-validation, i.e. the set was randomly divided into and each possible combination of parts was used to tune the model parameters, leaving the last part for fitting the hyperparameters with cross-validation, by optimising the average F-score. Grid searches were performed for an initially large range for each hyperparameter; then gradually decreased to home into optimal values. This lasted from a few seconds for simpler models like KNN to a few minutes for more complex models like MLP. The optimal hyperparameters selected during cross-validation are in Table 3.

| Model | Hyperparameter | Value |

| Decision Tree | Criterion | Gini impurity |

| Maximum tree depth | ||

| K-Nearest | Train instances weighting | Inversely proportional to distance |

| Neighbours | Algorithm | Ball Tree |

| Support Vector | Regularization parameter | |

| Machine | Kernel | Radial basis function |

| Tolerance for stopping criterion | ||

| Multi-Layer | Hidden layer size | |

| Perceptron | Activation function | Hyperbolic tangent |

| Algorithm | Quasi-Newton based optimiser | |

| Regularization parameter |

4.7 Comparing with human made heuristics

The ML approaches were compared in terms of prediction accuracy and resulting CAD computing time against the two best known human constructed heuristics [8], [18] as discussed earlier. Unlike the ML, these can end up predicting several variable orderings (i.e. when they cannot discriminate). In practice if this were to happen the heuristic would select one randomly (or perhaps lexicographically), however that final pick is not meaningful. To accommodate this, for each problem, the prediction accuracy of such a heuristic is judged to be the the percentage of its predicted variable orderings that are also target orderings. The average of this percentage over all problems in the testing dataset represents the prediction accuracy. Similarly, the computing time for such methods was assessed as the average computing time over all predicted orderings, and it is this that is summed up for all problems in the testing dataset.

5 Experimental Results

The results are presented in Table 4. We compare the four ML models on the percentage of problems where they selected the optimum ordering, and the total computation time (in seconds) for solving all the problems with their chosen orderings. The first two rows reproduce the results of [24] which used only the 11 features from Table 1, while the latter two rows are the results from the new experiment in the present paper which has 78 features. We also compare with the two human constructed heuristics and the outcome of a random choice between the 6 orderings (which do not change with the number of features). We might expect a random choice to be correct one sixth of the time but it is higher as for some problems there were multiple variable orderings with equally fast timings.

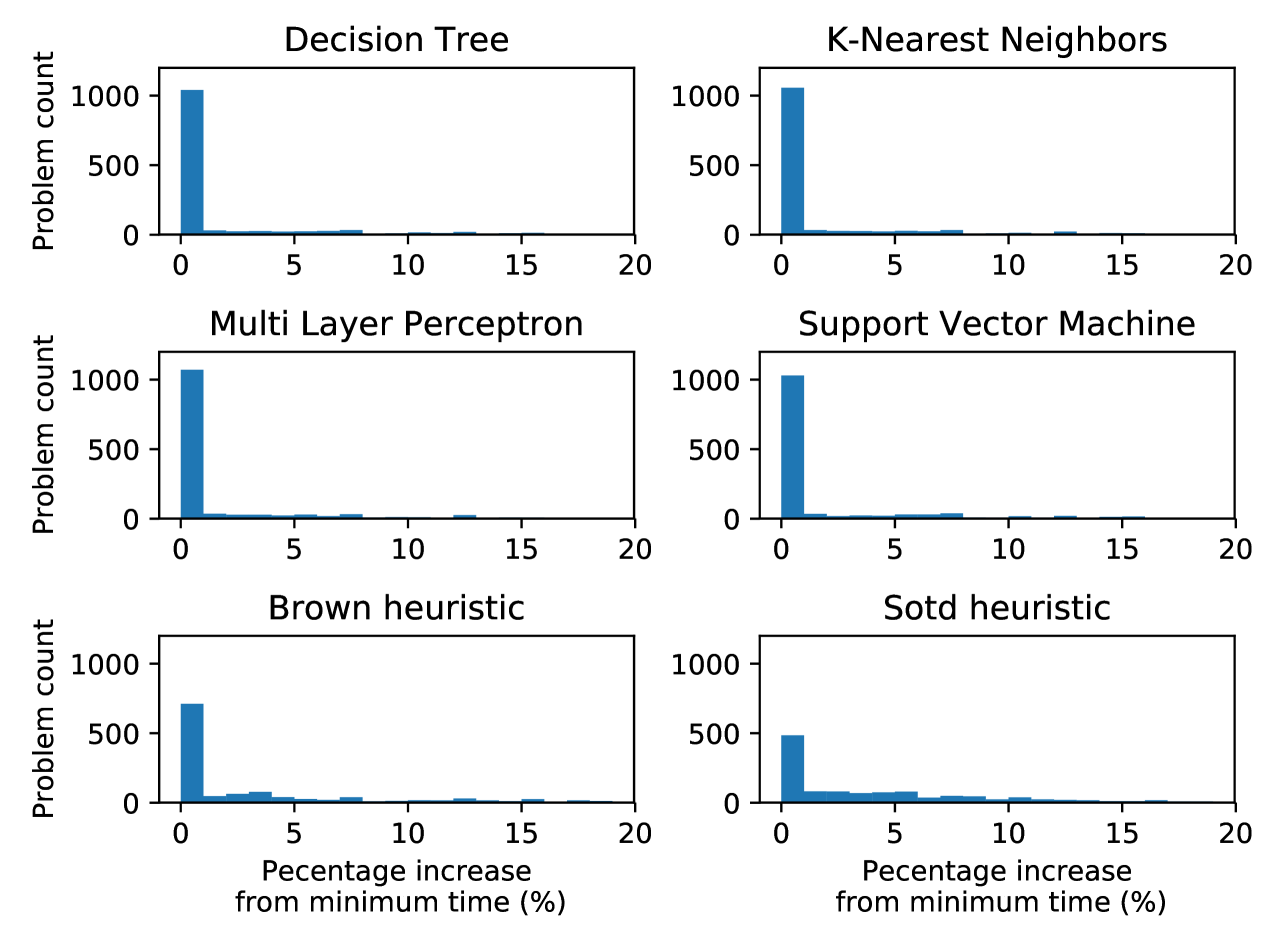

We also consider the distribution of the computation times: the differences between the computation time of each method and the minimum computation time, given as a percentage of the minimum time, are depicted in Figure 1.

| DT | KNN | MLP | SVM | Brown | sotd | rand | ||

|---|---|---|---|---|---|---|---|---|

| From [24] | Accuracy | |||||||

| (11 Features) | Time (s) | |||||||

| New Experiment | Accuracy | |||||||

| (78 Features) | Time (s) |

5.1 Range of possible outcomes

The minimum total computing time, achieved if we select an optimal ordering for every problem, is s. Choosing at random would take s, almost 4 times as much. The maximum time, if we selected the worst ordering for every problem, is s. The K-Nearest Neighbours model achieved the shortest time of our models and heuristics, with s, only more than the minimal possible.

5.2 Human-made heuristics

Since they are not affected by the new feature framework of the present paper the findings on the human made heuristics are the same as in [24]. Of the two human-made heuristics, Brown performed the best, surprising since the sotd heuristic has access to additional information (not just the input polynomials but also their projections). Obtaining an ordering for a problem instance with sotd hence takes longer than for Brown or any ML model generating an ordering with sotd for all problems in the testing dataset took over min. Using Brown we can solve all problems in 10,951s, 27% more than the minimum. While sotd is only 0.7% less accurate than Brown in identifying the best ordering, it is much slower at s or 38% more than the minimum. So, while Brown is not much better at identifying the best, it is much better at discarding the worst!

5.3 ML choices

The results show that all ML approaches outperform the human constructed heuristics in terms of both accuracy and timings. Moreover, the results show that the new algorithm for generating features leads to a clear improvement in ML performance compared to using only a small number of human generated features in [24]. For all four modules both accuracy has increased and computation time decreased. The best achieved time was 14% above the minimum using the original 11 features but now only 6% above with the new features.

The computing time for all the methods lies between the best (s) and the worst (s). Therefore, if we scale this time to so that the shortest time corresponds to and the slowest to , then the best human-made heuristic (Brown) lies at , and the best ML method (KNN) lies at . So using ML allows us to be times closer to the minimum possible computing time.

Figure 1 shows that the human-made heuristics result in computing times that are often significantly larger than of the corresponding minimum time for each problem. The ML methods, on the other hand, all result in over problems ( of the testing dataset) within of the minimum time.

6 Final Thoughts

In this experiment the MLP and KNN models offered the best performance, and a clear advance on the prior state of the art. But we acknowledge that there is much more to do and emphasise that these are only the initial findings of the project and we need to see if the findings are replicated. Planned extensions include: expanding the dataset to problems with more variables and quantifier structure; trying different feature selection techniques, and seeing if classifiers trained for the Maple CAD may be applied to other implementations.

Our main result is that a great many more features can be obtained trivially from the input (i.e. without any projection operations) than previously thought, and that these are relevant and lead to better ML choices. Some of these are easy to express in natural language, such as the number of polynomials containing a certain variable, but others do not have an obvious interpretation. This is important because something that is hard to describe in natural language is unlikely to be suggested by a human as a feature, which illustrates the benefit of our framework. This contribution to feature extraction for algebraic problems should be more widely applicable than the CAD variable ordering decision.

Acknowledgements

This work is supported by EPSRC Project EP/R019622/1: Embedding Machine Learning within Quantifier Elimination Procedures.

References

- [1] Ábrahám, E., et al.: : Satisfiability checking meets symbolic computation. In: Proc. CICM ’16, LNCS 9791, pp. 28–43. Springer (2016)

- [2] Bishop, C.: Pattern Recognition and Machine Learning. Springer (2006)

- [3] Bradford, R., Chen, C., Davenport, J., England, M., Moreno Maza, M., Wilson, D.: Truth table invariant cylindrical algebraic decomposition by regular chains. In: Proc. CASC ’14, LNCS 8660, pp. 44–58. Springer (2014)

- [4] Bradford, R., et al.: A case study on the parametric occurrence of multiple steady states. In: Proc. ISSAC ’17, pp. 45–52. ACM (2017)

- [5] Bradford, R., Davenport, J., England, M., McCallum, S., Wilson, D.: Truth table invariant cylindrical algebraic decomposition. J. Symb. Comp. 76, 1–35 (2016)

- [6] Bradford, R., Davenport, J., England, M., Wilson, D.: Optimising problem formulations for cylindrical algebraic decomposition. In: Intelligent Computer Mathematics, LNCS 7961, pp. 19–34. Springer Berlin Heidelberg (2013)

- [7] Bridge, J., Holden, S., Paulson, L.: Machine learning for first-order theorem proving. Journal of Automated Reasoning 53, 141–172 (2014)

- [8] Brown, C.: Tutorial: Cylindrical algebraic decomposition, at ISSAC ’04. http://www.usna.edu/Users/cs/wcbrown/research/ISSAC04/handout.pdf (2004)

- [9] Brown, C.: Constructing a single open cell in a cylindrical algebraic decomposition. In: Proc. ISSAC ’13, pp. 133–140. ACM (2013)

- [10] Brown, C., Davenport, J.: The complexity of quantifier elimination and cylindrical algebraic decomposition. In: Proc. ISSAC ’07, pp. 54–60. ACM (2007)

- [11] Carette, J.: Understanding expression simplification. In: Proc. ISSAC ’04, pp. 72–79. ACM (2004)

- [12] Caviness, B., Johnson, J.: Quantifier Elimination and Cylindrical Algebraic Decomposition. Texts & Monographs in Symbolic Computation, Springer-Verlag (1998)

- [13] Chen, C., Moreno Maza, M.: An incremental algorithm for computing cylindrical algebraic decompositions. In: Computer Mathematics, pp. 199—221. Springer Berlin Heidelberg (2014)

- [14] Chen, C., Moreno Maza, M., Xia, B., Yang, L.: Computing cylindrical algebraic decomposition via triangular decomposition. In: Proc. ISSAC ’09, 95–102. ACM (2009)

- [15] Collins, G.: Quantifier elimination for real closed fields by cylindrical algebraic decomposition. In: Proc. 2nd GI Conference on Automata Theory and Formal Languages. pp. 134–183. Springer-Verlag (reprinted in the collection [12]) (1975)

- [16] Collins, G., Hong, H.: Partial cylindrical algebraic decomposition for quantifier elimination. Journal of Symbolic Computation 12, 299–328 (1991)

- [17] Davenport, J., Bradford, R., England, M., Wilson, D.: Program verification in the presence of complex numbers, functions with branch cuts etc. In: Proc. SYNASC ’12, pp. 83–88. IEEE (2012)

- [18] Dolzmann, A., Seidl, A., Sturm, T.: Efficient projection orders for CAD. In: Proc. ISSAC ’04, pp. 111–118. ACM (2004)

- [19] England, M.: Machine learning for mathematical software. In: Mathematical Software – Proc. ICMS ’18. LNCS 10931, pp. 165–174. Springer (2018)

- [20] England, M., Bradford, R., Davenport, J.: Improving the use of equational constraints in cylindrical algebraic decomposition. In: Proc. ISSAC ’15, pp. 165–172. ACM (2015)

- [21] England, M., Bradford, R., Davenport, J., Wilson, D.: Choosing a variable ordering for truth-table invariant CAD by incremental triangular decomposition. In: Proc. ICMS ’14, LNCS 8592, pp. 450–457. Springer (2014)

- [22] England, M., Davenport, J.: Experience with heuristics, benchmarks & standards for cylindrical algebraic decomposition. In: Proc. ’16. CEUR-WS 1804 (2016)

- [23] England, M., Errami, H., Grigoriev, D., Radulescu, O., Sturm, T., Weber, A.: Symbolic versus numerical computation and visualization of parameter regions for multistationarity of biological networks. In: Computer Algebra in Scientific Computing (CASC ’17), LNCS 10490, pp. 93–108. Springer (2017)

- [24] England, M., Florescu, D.: Comparing machine learning models to choose the variable ordering for cylindrical algebraic decomposition. To appear in Proc. CICM ’19 (Springer LNCS) (2019). Preprint: https://arxiv.org/abs/1904.11061

- [25] England, M., Wilson, D., Bradford, R., Davenport, J.: Using the Regular Chains Library to build cylindrical algebraic decompositions by projecting and lifting. In: Mathematical Software – ICMS ’14. LNCS 8592, pp. 458–465. Springer (2014)

- [26] Huang, Z., England, M., Davenport, J., Paulson, L.: Using machine learning to decide when to precondition cylindrical algebraic decomposition with Groebner bases. In: Proc. SYNASC ’16. pp. 45–52. IEEE (2016)

- [27] Huang, Z., England, M., Wilson, D., Bridge, J., Davenport, J., Paulson, L.: Using machine learning to improve cylindrical algebraic decomposition. Mathematics in Computer Science, Volume to be assigned, 28 pages (2019)

- [28] Huang, Z., England, M., Wilson, D., Davenport, J., Paulson, L., Bridge, J.: Applying machine learning to the problem of choosing a heuristic to select the variable ordering for cylindrical algebraic decomposition. In: Intelligent Computer Mathematics, LNAI 8543, pp. 92–107. Springer International (2014)

- [29] Iwane, H., Yanami, H., Anai, H., Yokoyama, K.: An effective implementation of a symbolic-numeric cylindrical algebraic decomposition for quantifier elimination. In: Proc. SNC ’09, pp. 55–64. SNC ’09 (2009)

- [30] Jovanovic, D., de Moura, L.: Solving non-linear arithmetic. In: Gramlich, B., Miller, D., Sattler, U. (eds.) Automated Reasoning Proc. IJCAR ’12, LNCS 7364, pp. 339–354. Springer (2012)

- [31] Kobayashi, M., Iwane, H., Matsuzaki, T., Anai, H.: Efficient subformula orders for real quantifier elimination of non-prenex formulas. In: Proc. MACIS ’15, LNCS 9582, pp. 236–251. Springer International Publishing (2016)

- [32] Kühlwein, D., Blanchette, J., Kaliszyk, C., Urban, J.: MaSh: Machine learning for sledgehammer. In: Interactive Theorem Proving, LNCS 7998, pp. 35–50. Springer Berlin Heidelberg (2013)

- [33] Liang, J., Hari Govind, V., Poupart, P., Czarnecki, K., Ganesh, V.: An empirical study of branching heuristics through the lens of global learning rate. In: Proc. SAT ’17, LNCS 10491, pp. 119–135. Springer (2017)

- [34] McCallum, S.: An improved projection operation for cylindrical algebraic decomposition. In: [12], pp. 242–268. (1998)

- [35] Markowski, C.A. and Markowski, E.P.: Conditions for the effectiveness of a preliminary test of variance. The American Statistician, 44:4, 322–326 (1990)

- [36] McCallum, S., Parusińiski, A., Paunescu, L.: Validity proof of Lazard’s method for CAD construction. Journal of Symbolic Computation 92, 52–69 (2019)

- [37] Mulligan, C., Bradford, R., Davenport, J., England, M., Tonks, Z.: Non-linear real arithmetic benchmarks derived from automated reasoning in economics. In: Proc. ’18, pp. 48–60. CEUR-WS 2189 (2018)

- [38] Mulligan, C., Davenport, J., England, M.: TheoryGuru: A Mathematica package to apply quantifier elimination technology to economics. In: Mathematical Software – Proc. ICMS ’18. LNCS 10931, pp. 369–378. Springer (2018)

- [39] Pedregosa, F., et al.: Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011)

- [40] Strzeboński, A.: Cylindrical algebraic decomposition using validated numerics. Journal of Symbolic Computation 41(9), 1021–1038 (2006)

- [41] Sturm, T.: New domains for applied quantifier elimination. In: Computer Algebra in Scientific Computing, LNCS 4194, pp. 295–301. Springer (2006)

- [42] Urban, J.: MaLARea: A metasystem for automated reasoning in large theories. In: Proc. ESARLT ’07, CEUR-WS 257, p. 14. CEUR-WS (2007)

- [43] Wilson, D., Bradford, R., Davenport, J., England, M.: Cylindrical algebraic sub-decompositions. Mathematics in Computer Science 8, 263–288 (2014)

- [44] Wilson, D., Davenport, J., England, M., Bradford, R.: A “piano movers” problem reformulated. In: Proc. SYNASC ’13, pp. 53–60. IEEE (2013)

- [45] Xu, L., Hutter, F., Hoos, H., Leyton-Brown, K.: SATzilla: Portfolio-based algorithm selection for SAT. J. Artificial Intelligence Research 32, 565–606 (2008)