An approximation to peak detection power using Gaussian random field theory

1 Herbert Wertheim School of Public Health and Human Longevity Science, University of California San Diego, 9500 Gilman Dr., La Jolla, CA 92093, USA

2 Halicioǧlu Data Science Institute, University of California San Diego, 9500 Gilman Dr., La Jolla, CA 92093, USA

3 School of Mathematical and Statistical Sciences, Arizona State University, 900 S. Palm Walk, Tempe, AZ 85281, USA

Abstract

We study power approximation formulas for peak detection using Gaussian random field theory. The approximation, based on the expected number of local maxima above the threshold $u$, $\mathbb{E}[M_u]$, is proved to work well under three asymptotic scenarios: small domain, large threshold, and sharp signal. An adjusted version of $\mathbb{E}[M_u]$ is also proposed to improve accuracy when the expected number of local maxima exceeds 1.

Cheng and Schwartzman (2018) developed explicit formulas for $\mathbb{E}[M_u]$ of smooth isotropic Gaussian random fields with zero mean. In this paper, these formulas are extended to allow for rotationally symmetric mean functions, so that they are suitable for power calculations. We also apply our formulas to 2D and 3D simulated datasets; the 3D simulation uses parameters estimated from a group analysis of fMRI data from the Human Connectome Project, in order to measure performance in a realistic setting.

Key words: Power calculations, peak detection, Gaussian random field, image analysis.

1 Introduction

Detection of peaks (local maxima) is an important topic in image analysis. For example, a fundamental goal in fMRI analysis is to identify the local hotspots of brain activity (see, for example, Genovese et al., 2002 and Heller et al., 2006), which are typically captured by peaks in the fMRI signal. The detection of such peaks can be posed as a statistical testing problem intended to test whether the underlying signal has a peak at a given location. This is challenging because such tests are conducted only at locations of observed peaks, which depend on the data. Therefore, the height distribution of the observed peak is conditional on a peak being observed at that location. This is a nonstandard problem. Solutions exist using random field theory (RFT). RFT is a statistical framework that can be used to perform topological inference and modeling. RFT-based peak detection has been studied in Cheng and Schwartzman (2017) and Schwartzman and Telschow (2019), which provide the peak height distribution for isotropic noise under the complete null hypothesis of no signal anywhere.

In general, for any statistical testing problem, accurate power calculations help researchers decide the minimum sample size required for an informative test, and thus reduce cost. Power calculation formulas exist for common univariate tests, such as z-tests and t-tests. However, particular challenges arise when we perform power calculations in peak detection settings. Due to the nature of imaging data, the number and location of the signal peaks are unknown. In addition, the power is affected by other spatial aspects of the problem, such as the shape of the peak function and the spatial autocorrelation of the noise. These difficulties make deriving a power formula for peak detection nontrivial.

A formal definition of power in peak detection is necessary to perform power calculations. In Cheng and Schwartzman (2017) and Durnez et al. (2016), the authors explored approaches to control the false discovery rate (FDR). For the entire domain, average peakwise power, i.e. power averaged over all non-null voxels, is a natural choice for these approaches. For a local domain where a single peak exists, the power can be defined as the probability of successfully detecting that peak. Following this idea, we describe the null and alternative hypotheses and the definition of detection power. We do so informally here for didactic purposes and present formal definitions in Section 2.

Consider a local domain $D$ where a single peak may exist, and consider the hypotheses

$H_0$: there is no signal peak in $D$, versus $H_A$: there is a signal peak in $D$.

Suppose we observe a random field $X(t)$, $t \in D$, to be used as a test statistic at every location, typically as the result of statistical modeling of the data. For a fixed threshold $u$, the existence of observed peaks with height greater than $u$ leads to rejecting the null hypothesis. Therefore, we define the type I error and power as the probability that at least one local maximum of $X$ above $u$ exists under $H_0$ and $H_A$, respectively:

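$$\text{Type I error} = \mathbb{P}_{H_0}\big(\exists\, t \in D:\ t \text{ is a local maximum of } X \text{ and } X(t) > u\big),$$

$$\text{Power} = \mathbb{P}_{H_A}\big(\exists\, t \in D:\ t \text{ is a local maximum of } X \text{ and } X(t) > u\big). \qquad (1)$$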

Formulas for type I error have been developed for stationary fields in 1D and isotropic fields in 2D and 3D (Cheng and Schwartzman, 2015, Cheng and Schwartzman, 2017 and Cheng and Schwartzman, 2018). However, there is no formula to calculate power. To obtain an appropriate estimate of power, we need to know the peak height distribution for non-centered random fields (those whose mean function is not 0). Generally speaking, the peak height distribution is very difficult to calculate, especially when the random field has a non-zero mean. Durnez et al. (2016) suggest using a Gaussian distribution to describe the non-null peaks and a truncated Gaussian distribution to approximate the overshoot distribution. This approach is easy to implement but not very accurate, because in reality the peak height distribution is skewed and not close to any Gaussian distribution.

In this article, we propose to approximate the probability of an observed peak exceeding the detection threshold $u$ by the expected number of peaks above the threshold, $\mathbb{E}[M_u]$. We show that this approximation, which is also an upper bound, works well under certain scenarios. For the entire domain, we can approximate the average peakwise power by taking the arithmetic mean of the proposed approximation over non-null voxels.

The proposed approximation makes the problem more tractable, but in general it does not have an explicit form. To make it applicable in practice, we further simplify the formula under the isotropy assumption and show its explicit form in 1D, 2D, and 3D. The explicit results are validated through 2D and 3D computer simulations carried out in MATLAB. The simulations also cover multiple scenarios by varying the parameters used to generate the data. The performance of the power approximation and its conservative adjustment under these scenarios is discussed.

Finally, to assess the real-data performance of our power approximation method, we apply it to a 3D simulation induced by a real brain imaging dataset, where the parameters are estimated from the Human Connectome Project (Van Essen et al., 2012) fMRI data. By testing the method in a realistic setting, we also demonstrate how effect size and other parameters affect the power.

The paper is organized as follows. In Section 2, we present the problem setup and theoretical results for several asymptotic scenarios. In Section 3, we derive explicit formulas under isotropy. A simulation study in 2D is conducted in Section 4. Section 5 discusses how to estimate the required quantities from data in applied settings. The methodology is applied to a 3D real dataset in Section 6.

2 Power Approximation

2.1 Setup

Let $Y(t) = \mu(t) + \sigma Z(t)$, $t \in D$, where $Z(t)$, representing the noise, is a centered (zero-mean) smooth unit-variance Gaussian random field on an $N$-dimensional non-empty domain $D \subset \mathbb{R}^N$, $\sigma$ is the standard deviation of the noise and $\mu(t)$ is the mean function. Let $X(t) = Y(t)/\sigma = \mu(t)/\sigma + Z(t)$, where the ratio $\mu(t)/\sigma$ is the standardized mean function, which we assume to be $C^3$. Here $C^3$ is a sufficient smoothness condition for $X$, and this will be clarified in Assumption 1 below.

Let

We will make use of the following assumptions:

Assumption 1.

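$X \in C^2(\mathbb{R}^N)$ almost surely and its second derivatives $X_{ij} = \partial^2 X/\partial t_i \partial t_j$ satisfy the mean-square Hölder condition: for any $t_0 \in \mathbb{R}^N$, there exist positive constants $L$, $\eta$ and $\delta$ such that

$$\mathbb{E}\big(X_{ij}(t) - X_{ij}(s)\big)^2 \le L^2 \|t - s\|^{2\eta}, \quad \forall\, t, s \in U_{t_0}(\delta),\ i, j = 1, \ldots, N,$$

where $U_{t_0}(\delta)$ is the $N$-dimensional open cube of side length $\delta$ centered at $t_0$. This condition is satisfied, for example, if $X$ is $C^3$.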

Assumption 2.

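For every pair $(t, s) \in \mathbb{R}^N \times \mathbb{R}^N$ with $t \neq s$, the Gaussian random vector

$$\big(X(t), \nabla X(t), X_{ij}(t), X(s), \nabla X(s), X_{ij}(s),\ 1 \le i \le j \le N\big),$$

where $\nabla X$ denotes the gradient of $X$, is non-degenerate, i.e. its covariance matrix has full rank.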

2.2 Peak detection

Following the notation in the problem setup, the null and alternative hypotheses can be written as:

The mean function is not directly observed, so the hypothesis is tested based on the peak height of $X$. For a peak detection procedure that aims to test this hypothesis, a threshold $u$ for the peak height of $X$ needs to be set in advance. If a local maximum with height greater than $u$ is observed, we reject the null hypothesis because of the strong evidence against it. The probability that a peak of $X$ exceeds $u$,

$$\mathbb{P}\big(\exists\, t \in D:\ t \text{ is a local maximum of } X \text{ and } X(t) > u\big), \qquad (2)$$

is the type I error under $H_0$ and the power under $H_A$. The threshold $u$ can be obtained from the peak height distribution under $H_0$. A formula for the peak height distribution of smooth isotropic Gaussian random fields has been derived in Cheng and Schwartzman (2018), and it can also be derived directly as a special case of the formulas presented in this paper. Usually, $u$ is set to a quantile of the null distribution of peak height to maintain the nominal type I error. More details about selecting the threshold are discussed in the real data example. Selecting $u$ is not the main focus of this paper, and our method can be applied to any choice of $u$.

2.3 Power approximation

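Let $M_u(D)$ be the number of local maxima of the random field $X$ above $u$ over the local domain $D$. The power defined in (2) can be represented as $\mathbb{P}(M_u(D) \ge 1)$. We call this the power function, seen as a function of the threshold $u$. Note that, by Markov's inequality,

$$\mathbb{P}(M_u(D) \ge 1) \le \mathbb{E}[M_u(D)]. \qquad (3)$$

On the other hand, since $k - 1 \le k(k-1)/2$ for every integer $k \ge 1$,

$$\mathbb{E}[M_u(D)] - \mathbb{P}(M_u(D) \ge 1) = \sum_{k \ge 2} (k-1)\,\mathbb{P}(M_u(D) = k) \le \frac{1}{2}\,\mathbb{E}[M_u(D)(M_u(D) - 1)]. \qquad (4)$$

Thus, we have

$$\mathbb{E}[M_u(D)] - \frac{1}{2}\,\mathbb{E}[M_u(D)(M_u(D) - 1)] \le \mathbb{P}(M_u(D) \ge 1) \le \mathbb{E}[M_u(D)]. \qquad (5)$$

This inequality tells us that for any fixed $u$, the power is bounded within an interval of length $\frac{1}{2}\mathbb{E}[M_u(D)(M_u(D) - 1)]$. Thus, $\mathbb{E}[M_u(D)]$ is a good approximation of power if one of the two conditions below is satisfied: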

1. The factorial moment $\mathbb{E}[M_u(D)(M_u(D) - 1)]$ converges to 0 and $\mathbb{E}[M_u(D)]$ does not.

2. Both converge to 0 and $\mathbb{E}[M_u(D)(M_u(D) - 1)]$ converges faster than $\mathbb{E}[M_u(D)]$.

The convergence above refers to conditions on the signal and noise parameters. In the rest of this section, we introduce four interesting results. The first result can be useful for simplifying the power function and the other three results give different scenarios where one of the conditions above holds.
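The two-sided bound $\mathbb{E}[M_u] - \frac{1}{2}\mathbb{E}[M_u(M_u-1)] \le \mathbb{P}(M_u \ge 1) \le \mathbb{E}[M_u]$ holds for any non-negative integer count, so it also holds exactly for an empirical distribution. The sketch below checks it by Monte Carlo in a hypothetical 1D setting: Gaussian-kernel-smoothed noise, a Gaussian-shaped mean of height 1.5, threshold $u = 2$, and local maxima detected on the sampling grid as a discrete stand-in for the continuous definition. None of these settings come from the paper.

```python
import numpy as np

def smooth_processes(n_paths, length, kernel_sd, rng):
    """Unit-variance smooth 1D Gaussian processes: white noise convolved with a Gaussian kernel."""
    half = int(np.ceil(4 * kernel_sd))
    x = np.arange(-half, half + 1)
    kernel = np.exp(-x**2 / (2 * kernel_sd**2))
    kernel /= np.sqrt(np.sum(kernel**2))  # makes the convolved noise unit-variance
    noise = rng.standard_normal((n_paths, length + 2 * half))
    # 'valid' mode drops the edges, so each output row has exactly `length` points.
    return np.array([np.convolve(row, kernel, mode="valid") for row in noise])

def count_maxima_above(paths, u):
    """Number of interior strict local maxima with height > u in each row."""
    mid = paths[:, 1:-1]
    is_max = (mid > paths[:, :-2]) & (mid > paths[:, 2:]) & (mid > u)
    return is_max.sum(axis=1)

rng = np.random.default_rng(0)
t = np.arange(40)
mean_fn = 1.5 * np.exp(-((t - 20) ** 2) / (2 * 6.0**2))  # hypothetical peak signal
paths = smooth_processes(20_000, 40, kernel_sd=3.0, rng=rng) + mean_fn
M = count_maxima_above(paths, u=2.0)

power_hat = np.mean(M >= 1)               # empirical P(M_u >= 1)
first_moment = np.mean(M)                 # empirical E[M_u]
factorial_moment = np.mean(M * (M - 1))   # empirical E[M_u (M_u - 1)]
lower = first_moment - factorial_moment / 2
print(f"{lower:.4f} <= {power_hat:.4f} <= {first_moment:.4f}")
```

Shrinking the domain or raising $u$ in this sketch should make the factorial moment small relative to $\mathbb{E}[M_u]$, which is the regime the conditions above describe.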

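2.4 Adjusted $\mathbb{E}[M_u]$

We have provided evidence for using $\mathbb{E}[M_u(D)]$ to approximate power through (5). However, $\mathbb{E}[M_u(D)]$ alone might not be sufficient for power approximation since it only gives an upper bound. Also, unlike power, $\mathbb{E}[M_u(D)]$ sometimes exceeds 1. To correct for this, we define the adjusted $\mathbb{E}[M_u(D)]$ as

$$\widetilde{\mathbb{E}}[M_u(D)] = \frac{\mathbb{E}[M_u(D)]}{\max\{1,\ \mathbb{E}[M_{-\infty}(D)]\}}, \qquad (6)$$

where $M_{-\infty}(D)$ is the number of local maxima of any height in $D$.

The adjusted $\mathbb{E}[M_u(D)]$ is the same as $\mathbb{E}[M_u(D)]$ when the expected number of local maxima $\mathbb{E}[M_{-\infty}(D)]$ is less than or equal to 1. When $\mathbb{E}[M_{-\infty}(D)]$ is greater than 1, we divide $\mathbb{E}[M_u(D)]$ by $\mathbb{E}[M_{-\infty}(D)]$ to make sure it never exceeds 1. The adjusted $\mathbb{E}[M_u(D)]$ is more conservative, and we conjecture that it is a lower bound of power when there exists at least one local maximum in the domain $D$. In applications, a conservative estimator is often preferred so that the test is guaranteed to have enough power. Combining $\mathbb{E}[M_u(D)]$ and the adjusted $\mathbb{E}[M_u(D)]$, we can get an approximate range of the true power. We will compare $\mathbb{E}[M_u(D)]$ and the adjusted $\mathbb{E}[M_u(D)]$ in simulation studies.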
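The adjustment rule described above amounts to dividing $\mathbb{E}[M_u(D)]$ by $\max\{1, \mathbb{E}[M_{-\infty}(D)]\}$, where $\mathbb{E}[M_{-\infty}(D)]$ is the expected number of local maxima of any height. The helper below is a minimal, hypothetical sketch that takes both expectations as already-computed numbers (for example, from the explicit formulas in Section 3).

```python
def adjusted_expected_maxima(expected_above_u: float, expected_total: float) -> float:
    """Adjusted E[M_u(D)]: divide by E[M_{-inf}(D)] only when that total exceeds 1."""
    if expected_above_u < 0 or expected_total < 0:
        raise ValueError("expected counts must be non-negative")
    return expected_above_u / max(1.0, expected_total)

print(adjusted_expected_maxima(0.4, 0.9))  # 0.4: total <= 1, left unchanged
print(adjusted_expected_maxima(1.5, 2.0))  # 0.75: rescaled so it cannot exceed 1
```

Because $M_u(D) \le M_{-\infty}(D)$, the ratio is at most 1 whenever the division is applied.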

2.5 Height equivariance

Our first result does not yet concern the approximation (5), but it offers a simplification of the power function and $\mathbb{E}[M_u(D)]$ that will be used later. The proposition below states that the power function and $\mathbb{E}[M_u(D)]$ for peak detection are translation equivariant with respect to peak height.

Proposition 1.

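Let $a + h(t)$ be a peak signal with height $a$, where $h(t)$ is a unimodal mean function with maximum equal to 0 at $t_0$ in $D$. Then the power function for peak detection and $\mathbb{E}[M_u(D)]$ can be written in the form $g(u - a)$, where $g(u)$ is the power function or $\mathbb{E}[M_u(D)]$ at $a = 0$.

Proof.

The observed field is $X(t) = a + h(t) + Z(t)$. Let $X_0(t) = X(t) - a = h(t) + Z(t)$, and let $M^0_u(D)$ be the number of local maxima of the random field $X_0$ above $u$ over $D$. Since $X$ and $X_0$ have the same local maxima and their heights differ by $a$, the definition of power gives

$$\mathbb{P}(M_u(D) \ge 1) = \mathbb{P}(M^0_{u-a}(D) \ge 1) = g(u - a).$$

Given that $M_u(D) = M^0_{u-a}(D)$, it is also straightforward to show that $\mathbb{E}[M_u(D)]$ is translation equivariant with respect to $a$. ∎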

Next, we give three scenarios where the equality in (5) can be achieved asymptotically: small domain size, large threshold, and sharp signal.

2.6 Small domain

If the size of the local domain where a single peak exists is small enough, it can be shown that equality in (5) can be achieved asymptotically.

Theorem 1.

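Consider a local domain $U_{t_0}(\varepsilon)$ for any fixed $t_0 \in \mathbb{R}^N$, where $U_{t_0}(\varepsilon)$ is the $N$-dimensional open cube of side $\varepsilon$ centered at $t_0$. For sufficiently small $\varepsilon$ and fixed threshold $u$,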

| (7) |

Proof.

The proof is based on the proof of Lemma 3 in Piterbarg (1996) and Lemma 4.1 in Cheng and Schwartzman (2015).

| (8) |

Then we can take the Taylor expansion

where is a Gaussian vector field. Note that the determinant of is equal to the determinant of

| (9) |

For , multiply the th column of this matrix by , take the sum of all such columns and add the result to the first column. Since , we can derive , and obtain the matrix below with the same determinant as (9)

Let ,

So we have

where

Using the inequality of arithmetic and geometric means, we can bound the determinant

where is the entry of . Apply the inequality again

For any Gaussian variable and integer , the following inequality holds

where is a constant depending on . Next, we can focus on the conditional expectation and conditional variance of and .

By Assumption 1 and 2 and the fact that the conditional variance of a Gaussian variable is less or equal to the unconditional variance, we can conclude that the conditional variance of and are bounded above by some constant.

Summarizing the results above,

for some constant and

Combine the results above and with a fixed threshold

for some constant .

Next, by the proof of Lemma 4.1 in Cheng and Schwartzman (2015)

for some constant .

Therefore, there exists such that

For , by Kac-Rice formula in Adler and Taylor (2007)

Denote the integrand by . The function is continuous and positive over the compact domain . Thus , implying

For , by Kac-Rice formula

The integrand is also continuous and positive over the compact domain indicating for small . Thus we have

for sufficiently small . ∎

2.7 Large threshold

For a large threshold $u$, the following asymptotic result shows that power can be precisely approximated by $\mathbb{E}[M_u(D)]$.

Theorem 2.

For any fixed domain $D$, as $u \to \infty$,

| (10) |

where the error term is non-negative and is some constant.

Proof.

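Notice that the threshold $u$ does not affect the value of $\mathbb{E}[M_{-\infty}(D)]$, the expected number of local maxima of any height in $D$, which is part of the adjusted $\mathbb{E}[M_u(D)]$. By (10), the ratio of the adjusted $\mathbb{E}[M_u(D)]$ to the power therefore tends to $1/\max\{1, \mathbb{E}[M_{-\infty}(D)]\}$ as $u \to \infty$.

If $\mathbb{E}[M_{-\infty}(D)] > 1$, the adjusted $\mathbb{E}[M_u(D)]$ might be overly conservative for a large threshold $u$. Therefore, we only recommend $\mathbb{E}[M_u(D)]$ for this scenario.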

2.8 Sharp signal

The following theorem provides an asymptotic power approximation when the signal is sharp. Interestingly, while the power function is generally non-Gaussian, it becomes closer to Gaussian as the signal peaks become sharper.

Theorem 3.

Let where is a unimodal mean function with maximum equal to 0 at , , and represents the height. For any fixed threshold , as

| (11) |

where $\Phi$ is the CDF of the standard Gaussian distribution.

Proof.

By lemma A.1 of Cheng and Schwartzman (2017), as

where is some constant. Therefore .

Since and both of them only take non-negative integer values, and are bounded above by where is the number of critical points of the random field . Apply Kac-Rice formula

Denote the integrand by . The function is continuous and positive over the compact domain and as . Thus there exists such that . Then by dominated convergence theorem

as . Since , the adjusted

To calculate , apply Kac-Rice formula

| (12) |

where is the covariance matrix of . Let which attains its minimum only at . Similar to the proof of A.4 in Cheng and Schwartzman (2017), as , (12) can be approximated by applying Laplace's method

This finishes the proof.

∎

3 Explicit formulas

We have shown that the power for peak detection can be approximated by the expected number of local maxima above $u$, $\mathbb{E}[M_u(D)]$, under certain scenarios such as small domain and large threshold. Although we can apply the Kac-Rice formula to calculate $\mathbb{E}[M_u(D)]$, it remains difficult to evaluate it explicitly in general without further assumptions. In this section, we focus on computing $\mathbb{E}[M_u(D)]$ and show that a general formula can be obtained if the noise field is isotropic. Furthermore, explicit formulas for $N = 1, 2, 3$ are derived for application purposes.

3.1 Isotropic Gaussian fields

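Suppose $Z$ is a zero-mean unit-variance isotropic random field. We can write the covariance function of $Z$ as $\mathbb{E}[Z(t)Z(s)] = \rho(\|t - s\|^2)$ for an appropriate function $\rho(\cdot): [0, \infty) \to \mathbb{R}$. Denote

$$\rho' = \rho'(0), \quad \rho'' = \rho''(0), \quad \kappa = -\rho'/\sqrt{\rho''}, \qquad (13)$$

where $\rho'$ and $\rho''$ are the first and second derivatives of the function $\rho$, respectively.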

The following lemma comes from Cheng and Schwartzman (2018).

Lemma 1.

In particular, it follows from Lemma 1 that and for any , implying and and hence .

We can use theoretical results on Gaussian Orthogonally Invariant (GOI) matrices to make the calculation of $\mathbb{E}[M_u(D)]$ easier. GOI matrices were first introduced in Schwartzman et al. (2008) and were used for the first time in the context of random fields in Cheng and Schwartzman (2018). They form a class of Gaussian random matrices that are invariant under orthogonal transformations, and they are useful for computing the expected number of critical points of isotropic Gaussian fields. We call an $N \times N$ random matrix $G = (G_{ij})_{1 \le i,j \le N}$ GOI with covariance parameter $c$, denoted by GOI$(c)$, if it is symmetric and all entries are centered Gaussian variables such that

$$\mathbb{E}[G_{ij}G_{kl}] = \frac{1}{2}(\delta_{ik}\delta_{jl} + \delta_{il}\delta_{jk}) + c\,\delta_{ij}\delta_{kl}, \qquad (14)$$

where $\delta_{ij}$ is the Kronecker delta.
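For Monte Carlo checks of expectations involving GOI matrices, such as the conditional expectation in Theorem 4, it can help to sample GOI$(c)$ matrices directly. The sketch below relies only on the defining covariance $\mathbb{E}[G_{ij}G_{kl}] = \frac{1}{2}(\delta_{ik}\delta_{jl} + \delta_{il}\delta_{jk}) + c\,\delta_{ij}\delta_{kl}$: off-diagonal entries have variance 1/2 and are independent of the diagonal, while the diagonal has covariance $I + cJ$ ($J$ the all-ones matrix), which is a valid covariance only when $1 + Nc > 0$. The function name and the value $\kappa = 0.8$ are hypothetical.

```python
import numpy as np

def sample_goi(n_samples, N, c, rng):
    """Draw n_samples N x N GOI(c) matrices: symmetric, with centered Gaussian entries and
    E[G_ij G_kl] = (d_ik d_jl + d_il d_jk) / 2 + c d_ij d_kl (d = Kronecker delta)."""
    if 1 + N * c <= 0:
        raise ValueError("GOI(c) needs 1 + N*c > 0 for a valid covariance")
    # Diagonal: Var(G_ii) = 1 + c and Cov(G_ii, G_jj) = c, i.e. covariance I + c * J.
    diag_cov = np.eye(N) + c * np.ones((N, N))
    diag = rng.multivariate_normal(np.zeros(N), diag_cov, size=n_samples)
    # Off-diagonal: variance 1/2, independent of each other and of the diagonal.
    iu = np.triu_indices(N, k=1)
    off = rng.normal(0.0, np.sqrt(0.5), size=(n_samples, iu[0].size))
    G = np.zeros((n_samples, N, N))
    G[:, iu[0], iu[1]] = off
    G = G + np.transpose(G, (0, 2, 1))  # mirror the upper triangle
    idx = np.arange(N)
    G[:, idx, idx] = diag
    return G

rng = np.random.default_rng(1)
kappa = 0.8                  # hypothetical value of kappa
c = (1 - kappa**2) / 2       # covariance parameter of the form (1 - kappa^2)/2 used in Theorem 4
G = sample_goi(200_000, 3, c, rng)
print(np.var(G[:, 0, 1]), np.cov(G[:, 0, 0], G[:, 1, 1])[0, 1])  # near 0.5 and c
```

Sampling the diagonal jointly from $N(0, I + cJ)$ also covers negative $c$, which a construction of the form "GOE plus an independent scalar multiple of the identity" would not.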

The following lemma is Lemma 3.4 from Cheng and Schwartzman (2018).

Lemma 2.

Let the assumptions in Lemma 1 hold. Let and be and matrices respectively. denotes identity matrix.

(i) The distribution of is the same as that of .

(ii) The distribution of is the same as that of .

Lemma 2 gives the distribution and conditional distribution of the Hessian matrix of the centered random field $Z$. Next, we establish the corresponding result for the non-centered random field $X$.

Lemma 3.

Let and be and matrices respectively.

(i) The distribution of is the same as that of

(ii) The distribution of is the same as that of

3.2 General formula under isotropy

Theorem 4.

Let , where is a smooth zero-mean unit-variance isotropic Gaussian random field satisfying Assumption 1, 2. Let a smooth mean function such that is a non-singular matrix with ordered eigenvalues at all critical points . Then for any domain

| (15) |

where $\phi$ is the PDF of the standard Gaussian distribution, the random matrix defined as in Lemma 3 follows GOI$((1-\kappa^2)/2)$, and $\mathbb{1}\{\cdot\}$ denotes the indicator function.

Proof.

By the Kac-Rice formula

Next, we show the derivation from the third to the fourth line in the equation above. Since we assume is a non-singular matrix at all critical points, then there exists an orthonormal matrix, denoted by , such that , where are ordered eigenvalues of . On the other hand, GOI matrices are invariant under orthonormal transformations. By Lemma 3, the conditional expectation is therefore

| (16) |

∎

The expression (15) can be simplified further if we further assume the mean function to be a rotational symmetric paraboloid centered at . In this case, the Hessian of is the identity matrix multiplied by a constant, i.e.

Then we can write the mean function as . Define

| (17) |

and

| (18) |

can be simplified as

| (19) |

where we make a change of variable and . Note that the parameter depends on the correlation structure of .

3.3 Explicit formulas in 1D, 2D and 3D

In (19), a general formula for $\mathbb{E}[M_u(D)]$ under isotropy was derived. To make the formula easier to apply in practice, we give the following results for computing it in 1D, 2D, and 3D. When $N = 1$, the derivation is simple enough that we do not need additional assumptions on the mean function beyond those in Theorem 4, and it follows directly from the Kac-Rice formula. When $N = 2$ and $N = 3$, we assume the mean function is a rotationally symmetric paraboloid centered at the peak location. $\mathbb{E}[M_u(D)]$ is then calculated by first obtaining explicit formulas for the function defined in (18) and plugging them into (19).

Proposition 2.

Let , , where is a smooth zero-mean unit-variance Gaussian process and is a smooth mean function. Assume additionally that is stationary, then

| (20) |

where the function is defined as

Proof.

Since we assume that is stationary, is independent of and , and and do not depend on . Therefore,

Note that, by the formula of conditional Gaussian distributions,

By the Kac-Rice formula

The second to third line is due to the fact that

This finishes the proof. ∎

Note that when ,

| (21) |

We need the following lemmas to calculate the function defined in (18) explicitly when $N = 2$ and $N = 3$. They follow from direct integration by parts.

Lemma 4.

Let , for constant and

| (22) |

Lemma 5.

Let , for constant and

where

Proposition 3.

Proposition 4.

When $N = 3$, let the assumptions in Theorem 4 hold. Then the function defined in (18) can be written explicitly as

where

Proof.

This is a direct result of Lemma 5 above with and . ∎

Note that for $N = 2$ and $N = 3$, we need to evaluate an integral over the domain $D$ (see (19)) to get $\mathbb{E}[M_u(D)]$. Although we cannot derive an explicit form for the entire formula, it can be evaluated in applications with numerical integration.

3.4 Isotropic unimodal mean function

We have calculated the explicit formulas assuming the mean function is a concave paraboloid. This is a very strong assumption. However, in a more general setting where the unimodal mean function is rotationally symmetric but of any shape, we can apply a multivariate Taylor expansion at the peak and use the second-order approximation to estimate power. For example, suppose the mean function is proportional to a rotationally symmetric Gaussian density

$$\mu(t) = a \exp\left(-\frac{\|t - t_0\|^2}{2b^2}\right), \qquad (25)$$

where $t_0$ is the center of the mean function, $a$ is its height, and $b$ is the signal bandwidth. The Taylor expansion at the center is

$$\mu(t) = a - \frac{a}{2b^2}\|t - t_0\|^2 + O\big(\|t - t_0\|^4\big). \qquad (26)$$

When the domain is small, we neglect the remainder term and use the quadratic approximation as the mean function. With a quadratic mean function, it becomes convenient to compute $\mathbb{E}[M_u(D)]$ with the explicit formulas above. We will evaluate the performance of this approach for different domain sizes in the simulation study.
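To see how quickly the quadratic approximation degrades as the domain grows, the sketch below compares a Gaussian-shaped mean with its second-order Taylor expansion over disks of increasing radius. It assumes the mean is parametrized as $a\exp(-r^2/(2b^2))$ at distance $r$ from the center; the values $a = 3$ and $b = 5$ are illustrative only.

```python
import numpy as np

def gaussian_mean(r, a, b):
    """Gaussian-shaped mean a * exp(-r^2 / (2 b^2)) at distance r from the center."""
    return a * np.exp(-r**2 / (2 * b**2))

def quadratic_approx(r, a, b):
    """Second-order Taylor expansion at the center: a - a r^2 / (2 b^2)."""
    return a - a * r**2 / (2 * b**2)

a, b = 3.0, 5.0  # hypothetical height and bandwidth
errs = []
for radius in [1.0, 2.0, 5.0]:
    r = np.linspace(0.0, radius, 101)
    errs.append(np.max(np.abs(gaussian_mean(r, a, b) - quadratic_approx(r, a, b))))
    print(f"Rad(D) = {radius}: max |error| = {errs[-1]:.4f}")
```

The error equals $a\,(e^{-x} - 1 + x)$ with $x = r^2/(2b^2)$, which grows with $r$, so the approximation is worst at the edge of the domain.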

4 Simulations

In Section 2, we discussed power approximation under different scenarios. We showed that the factorial moment $\mathbb{E}[M_u(D)(M_u(D)-1)]$ decays faster than $\mathbb{E}[M_u(D)]$ under some circumstances, so that we can use $\mathbb{E}[M_u(D)]$ or the adjusted $\mathbb{E}[M_u(D)]$ to approximate power. In this section, a simulation study is conducted to validate each scenario and to visualize the power function, $\mathbb{E}[M_u(D)]$, and the adjusted $\mathbb{E}[M_u(D)]$. The simulation also gives a better sense of how to apply them to real data.

4.1 Paraboloidal mean function

We generate centered, unit-variance, smooth isotropic 2D Gaussian random fields over a grid of size pixels as , each field obtained as the convolution of white Gaussian noise with a Gaussian kernel of spatial standard deviation 5, and normalized to standard deviation . For the mean function , we use a concave paraboloid centered at . The equation of the paraboloid is

| (27) |

where controls the sharpness of the mean function. The smaller is, the sharper the paraboloid will be. controls the height of the signal. To maintain the rotational symmetric property of , we only consider those circles centered at as domain . The size of is measured by the radius . The default value of each parameter is listed in Table unless otherwise specified.

| Parameter | Default value |

|---|---|

| 10 | |

| 7 | |

| 3 |
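The noise-generation step described in this subsection (white Gaussian noise convolved with a Gaussian kernel of spatial standard deviation 5, then normalized to unit variance) can be sketched in NumPy as follows. The 100 × 100 grid, the 200 replicates, and the use of circular (FFT) convolution, which makes the field periodic, are assumptions for illustration; the original simulations were run in MATLAB, and a larger grid cropped to the region of interest would avoid wrap-around effects.

```python
import numpy as np

def smooth_gaussian_field(shape, kernel_sd, rng):
    """Centered, unit-variance smooth 2D Gaussian field: white noise convolved with an
    isotropic Gaussian kernel (circular convolution via FFT, so the field is periodic)."""
    ny, nx = shape
    # Kernel evaluated at wrapped (periodic) distances so it is centered at index (0, 0).
    dy = np.minimum(np.arange(ny), ny - np.arange(ny))
    dx = np.minimum(np.arange(nx), nx - np.arange(nx))
    kernel = np.exp(-(dy[:, None] ** 2 + dx[None, :] ** 2) / (2 * kernel_sd**2))
    kernel /= np.sqrt(np.sum(kernel**2))  # white noise * kernel then has variance 1
    noise = rng.standard_normal(shape)
    return np.fft.ifft2(np.fft.fft2(noise) * np.fft.fft2(kernel)).real

rng = np.random.default_rng(2)
fields = np.stack([smooth_gaussian_field((100, 100), 5.0, rng) for _ in range(200)])
print(fields.mean(), fields.var())  # close to 0 and 1
```

Normalizing the kernel by the square root of its sum of squares gives unit variance by construction, so no empirical rescaling of each field is needed.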

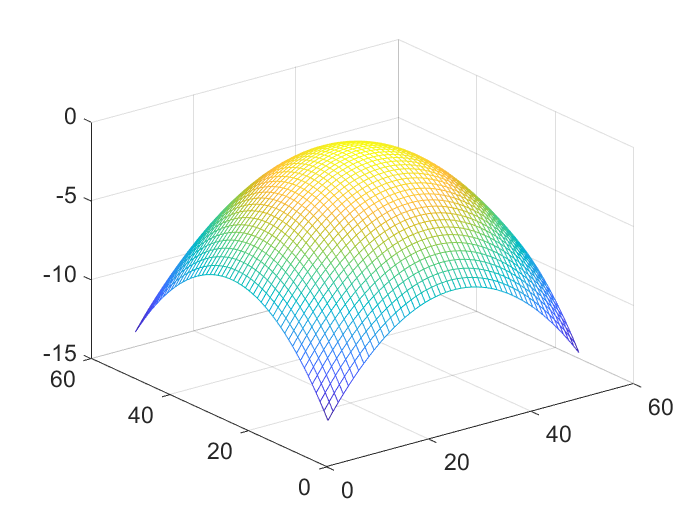

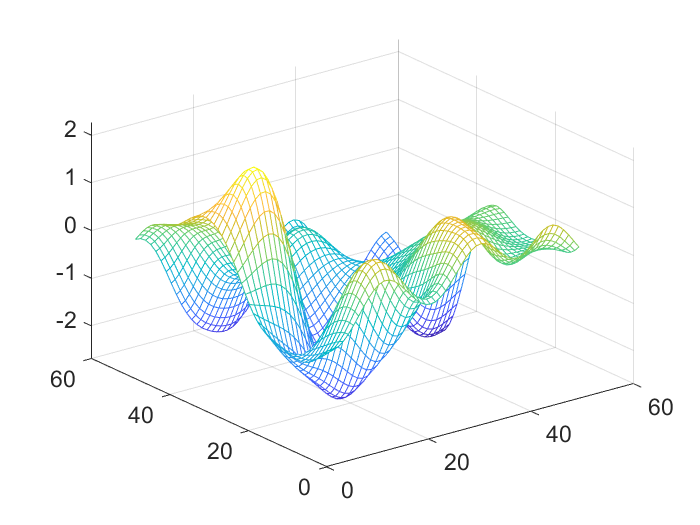

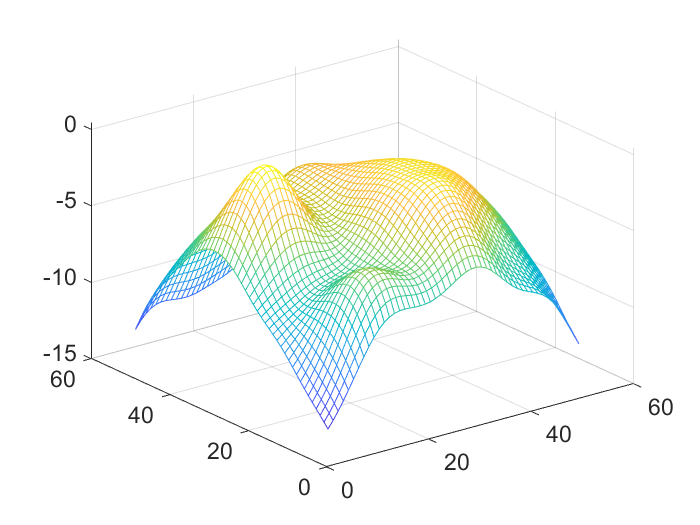

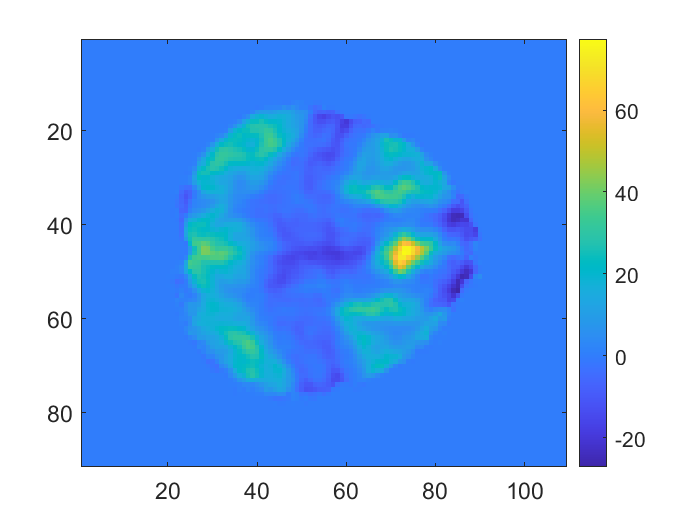

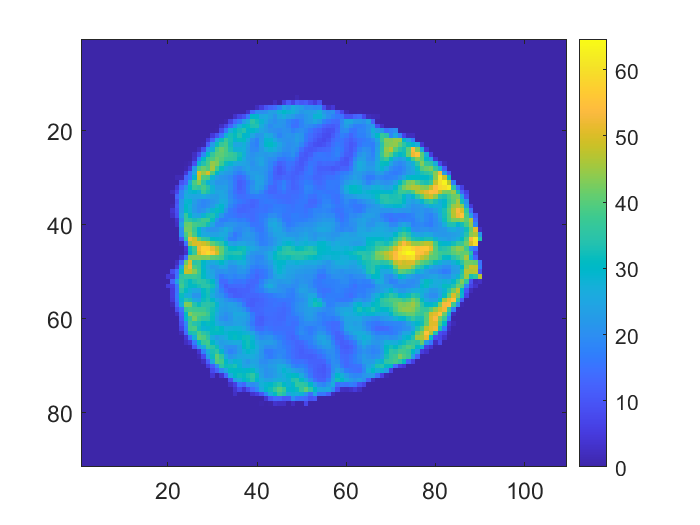

The first two panels of Figure 1 display the mean function and a single instance of the noise, respectively. The third panel displays their resulting sum, given by the signal-plus-noise model.

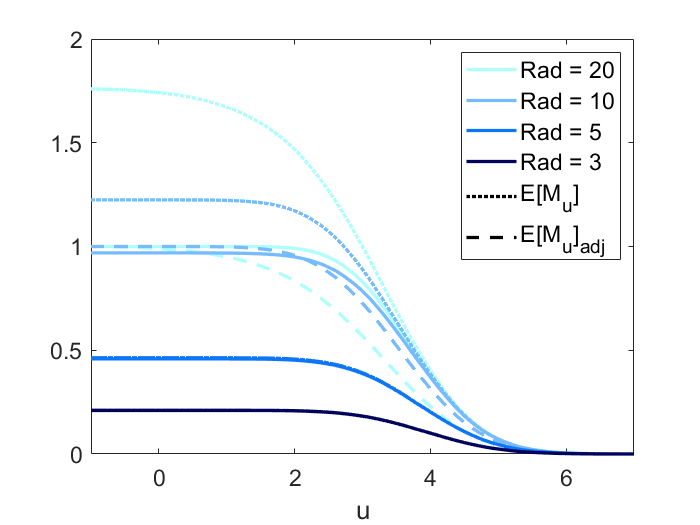

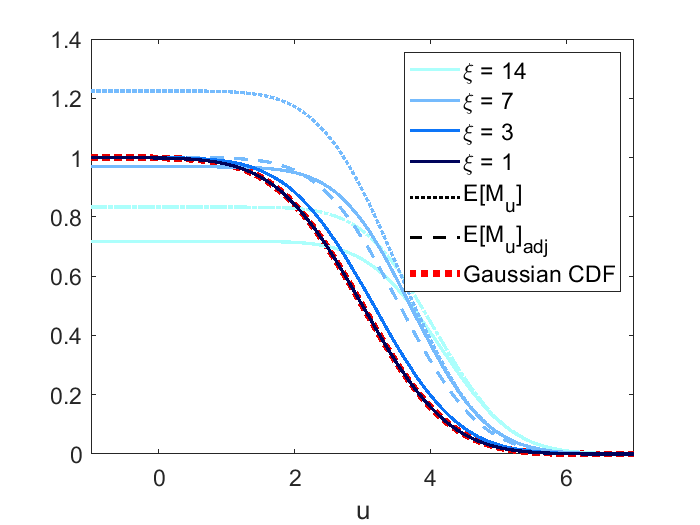

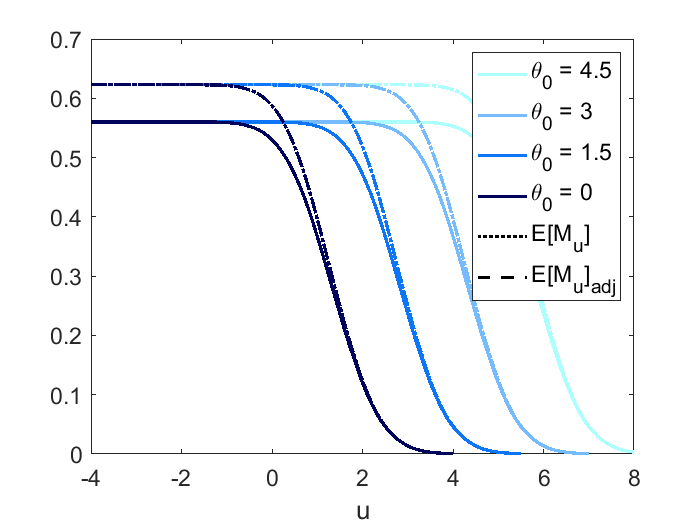

In the simulation, we validate and visualize the scenarios presented above, and check the effect of different choices of parameters on the power function, $\mathbb{E}[M_u(D)]$, and the adjusted $\mathbb{E}[M_u(D)]$. Four different scenarios are considered, as discussed in Section 2:

1. Height equivariance

2. Small domain size Rad(D)

3. Large threshold $u$

4. Sharp signal (small value of the sharpness parameter in (27))

Figure 1: 2D simulation: (a) the mean function, (b) a single instance of the noise, and (c) the resulting data.

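For each simulated random field, we record the height of its highest peak in $D$ if there exists at least one, and then for any threshold $u$, we calculate the empirical estimates of the detection power (1) and of $\mathbb{E}[M_u(D)]$:

$$\widehat{\text{Power}}(u) = \frac{1}{n}\sum_{i=1}^{n} \mathbb{1}\{M^{(i)}_u(D) \ge 1\}, \qquad (28)$$

$$\widehat{\mathbb{E}}[M_u(D)] = \frac{1}{n}\sum_{i=1}^{n} M^{(i)}_u(D), \qquad (29)$$

where $n$ is the number of simulated fields and $M^{(i)}_u(D)$ is the number of local maxima above $u$ in $D$ for the $i$th field.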
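Both empirical quantities need only the count of local maxima above $u$ inside $D$ for each simulated field; the power estimate is the fraction of fields with a positive count, which is the same as the fraction whose highest peak in $D$ exceeds $u$. The sketch below assumes a pixel is a local maximum when it is strictly greater than its 8 neighbors, and that $D$ is a disk of a given radius around a given center; both conventions and the function names are hypothetical.

```python
import numpy as np

def local_maxima_2d(field):
    """Boolean mask of interior pixels strictly greater than all 8 neighbors."""
    c = field[1:-1, 1:-1]
    mask = np.ones_like(c, dtype=bool)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if di == 0 and dj == 0:
                continue
            neighbor = field[1 + di : field.shape[0] - 1 + di, 1 + dj : field.shape[1] - 1 + dj]
            mask &= c > neighbor
    out = np.zeros(field.shape, dtype=bool)
    out[1:-1, 1:-1] = mask
    return out

def empirical_power_and_expected_maxima(fields, u, center, radius):
    """Empirical P(M_u(D) >= 1) and mean of M_u(D) over fields, with D = disk(center, radius)."""
    ny, nx = fields.shape[1:]
    yy, xx = np.mgrid[0:ny, 0:nx]
    in_disk = (yy - center[0]) ** 2 + (xx - center[1]) ** 2 <= radius**2
    counts = np.array([np.sum(local_maxima_2d(f) & in_disk & (f > u)) for f in fields])
    return np.mean(counts >= 1), np.mean(counts)

toy = np.zeros((2, 7, 7))
toy[0, 3, 3] = 2.5  # one peak above u = 2 at the center
toy[1, 3, 3] = 2.5
toy[1, 1, 5] = 3.0  # a second peak, outside a radius-1 disk around (3, 3)
print(empirical_power_and_expected_maxima(toy, u=2.0, center=(3, 3), radius=1.0))
```

In this toy example each field has exactly one peak above $u = 2$ inside the radius-1 disk, so both estimates equal 1; enlarging the disk to radius 3 picks up the second peak in the second field.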

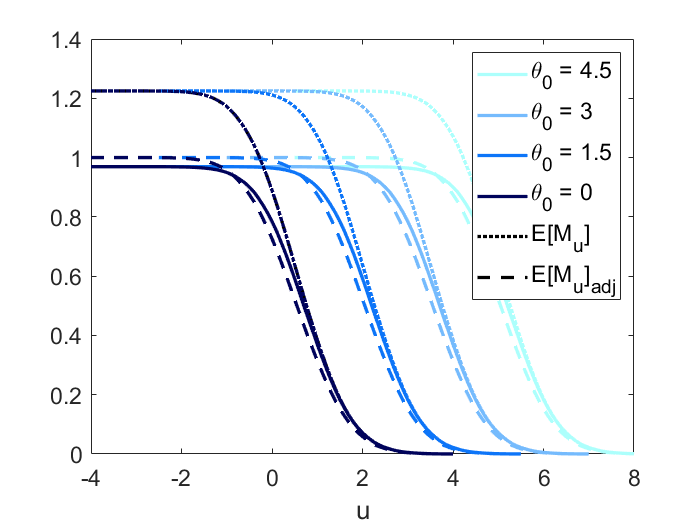

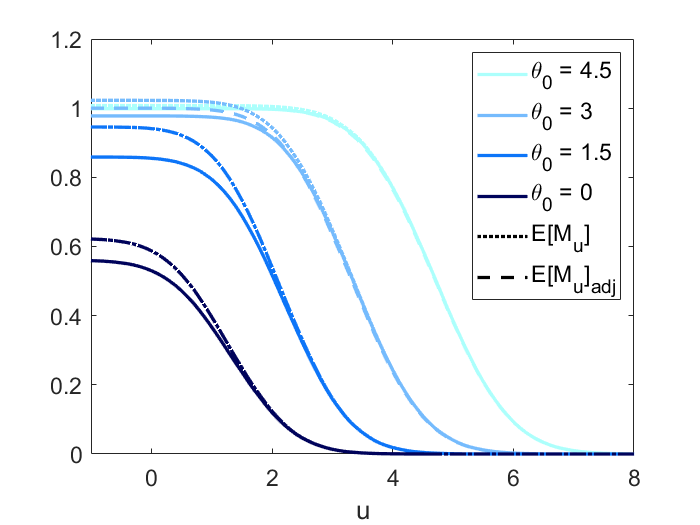

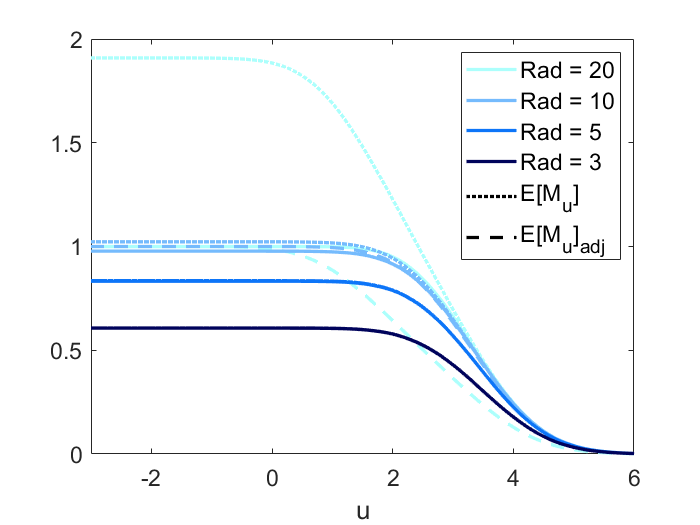

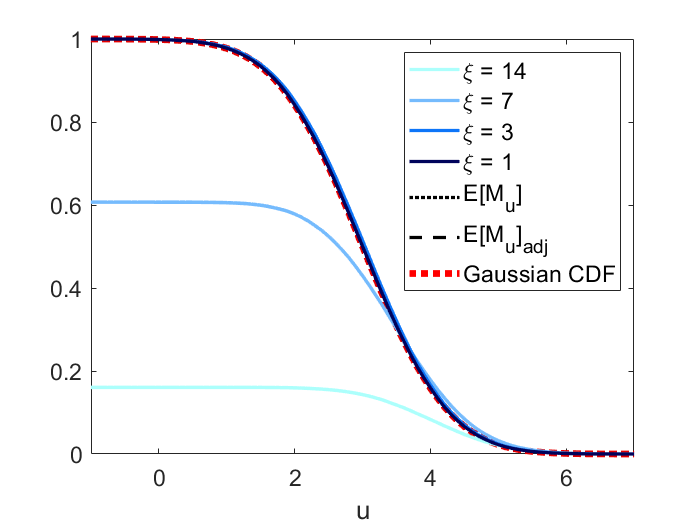

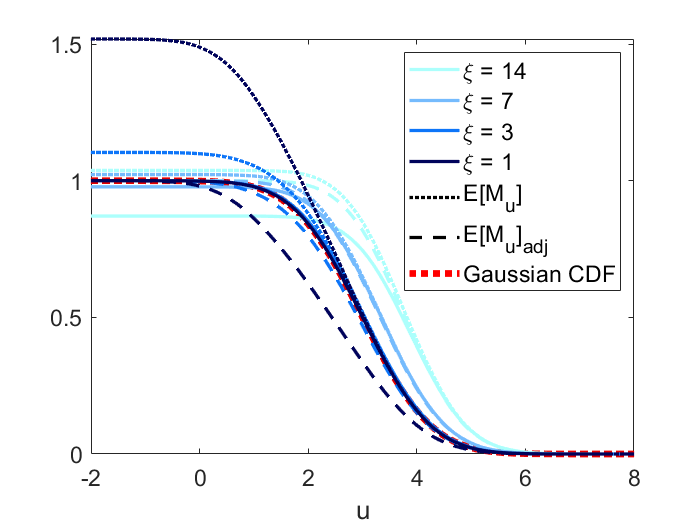

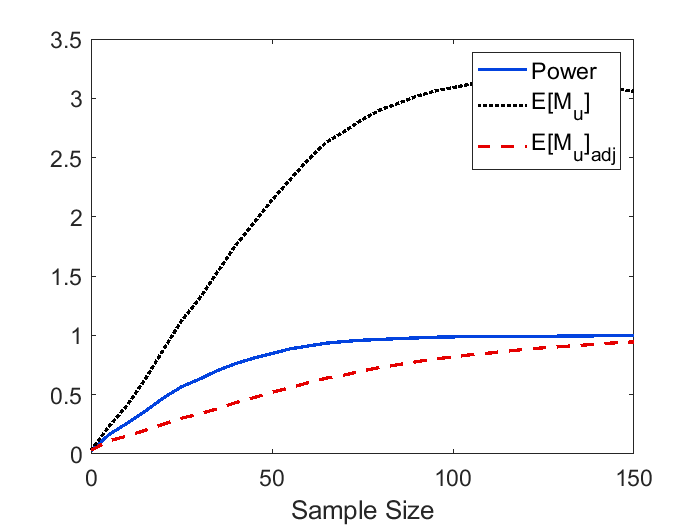

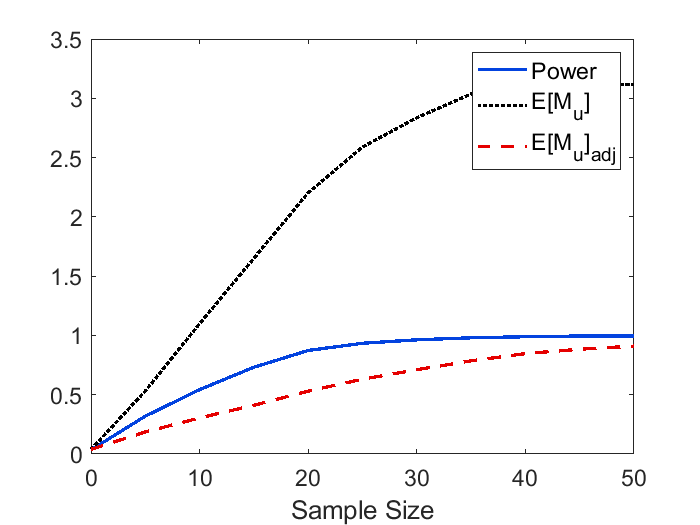

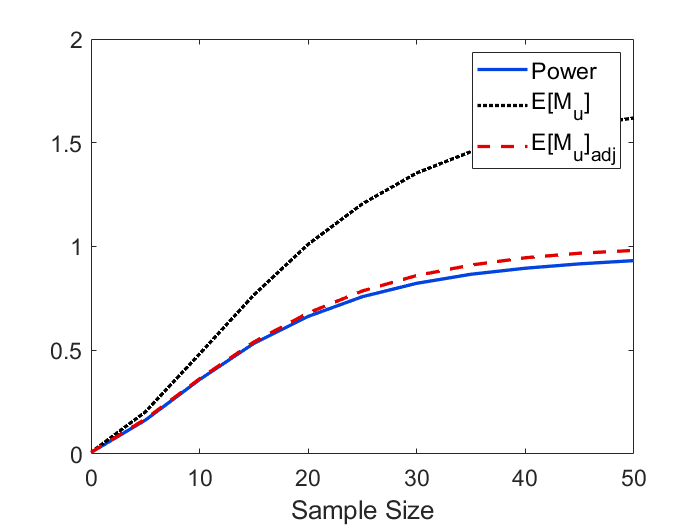

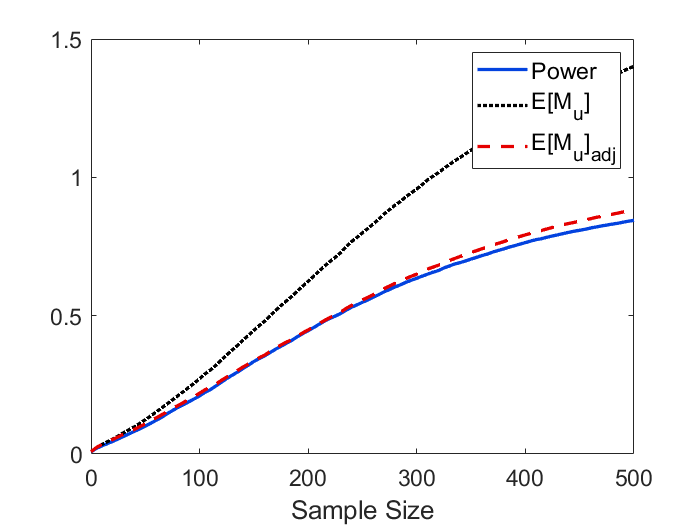

Figure 2 displays the power, $\mathbb{E}[M_u(D)]$, and adjusted $\mathbb{E}[M_u(D)]$ curves under the four scenarios. The first panel validates scenario 1 (height equivariance). As stipulated by Proposition 1, the power, $\mathbb{E}[M_u(D)]$, and adjusted $\mathbb{E}[M_u(D)]$ curves for different signal heights are parallel (horizontally shifted copies of one another) when the other parameters remain the same. In the second panel, both the $\mathbb{E}[M_u(D)]$ and adjusted $\mathbb{E}[M_u(D)]$ curves are close to the power curve under scenario 2 (small domain), which indicates that for a smaller domain (quantified by Rad($D$)), using $\mathbb{E}[M_u(D)]$ and adjusted $\mathbb{E}[M_u(D)]$ to approximate power becomes more accurate, as stipulated by Theorem 1. We can also see in all three panels that, for large enough $u$, the $\mathbb{E}[M_u(D)]$ curve converges to the power curve as $u$ increases, as stipulated by Theorem 2. The adjusted $\mathbb{E}[M_u(D)]$ curve also converges to the power curve, but at a slower rate than $\mathbb{E}[M_u(D)]$. The third panel shows that the power, $\mathbb{E}[M_u(D)]$, and adjusted $\mathbb{E}[M_u(D)]$ curves all converge to the Gaussian CDF for a sharp signal, as stipulated by Theorem 3.

4.2 Constant mean function

When the mean function is constant, i.e. it does not depend on the location $t$, the observed field reduces to an isotropic Gaussian random field shifted by that constant; in particular, a zero mean function gives a centered isotropic Gaussian random field. Within the context of this paper, the zero mean function can be seen as the null hypothesis, and the power function becomes the probability of type I error. We use the peak height distribution under zero mean (Cheng and Schwartzman, 2018) to choose the cutoff point such that the test attains the nominal type I error. The simulation result for zero mean is displayed in Figure 3.

The performance of the type I error approximation when the mean function is 0 is similar to what we find when the mean function is quadratic (scenario 4 is omitted since the sharpness parameter does not exist when the mean function is constant). The conclusion is that for a large threshold $u$ (which is required anyway to control the type I error) or a small domain, the type I error approximation is accurate.

4.3 Gaussian mean function

The simulation results under a Gaussian mean function are displayed in Figure 4. For scenarios 2 and 3, the results are consistent with those under the quadratic mean. For scenario 1, since a single parameter of the Gaussian mean function controls both the signal height and its sharpness, the power, $\mathbb{E}[M_u(D)]$, and adjusted $\mathbb{E}[M_u(D)]$ are no longer equivariant in terms of the signal height. For scenario 4, comparing Figure 4(c) with Figure 4(d) shows that as the signal becomes sharper, the power, $\mathbb{E}[M_u(D)]$, and adjusted $\mathbb{E}[M_u(D)]$ curves converge to the Gaussian CDF only when the domain size (quantified by Rad($D$)) is small. When the domain is large, the limiting curve is a mixture of the Gaussian CDF and the corresponding curve under a constant mean. This is due to the shape of the Gaussian mean function, which decays to 0 away from its center, so a larger domain includes regions where the signal is essentially absent.

In conclusion, for Gaussian mean function, we recommend applying our method to approximate power only when the domain size is small.

5 Estimation from data

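To use our power approximation formula in real peak detection problems, we need to estimate the spatial covariance function of the noise as well as the mean function from the data. In this section, we describe the 3D application setting and how to estimate the noise spatial covariance function and the mean function. Consider an imaging dataset with $n$ subjects, and let $Y_i(t)$ represent the signal plus noise for subject $i$:

$$Y_i(t) = \mu(t) + \sigma(t)\,\varepsilon_i(t), \quad i = 1, \ldots, n,$$

where $t \in D$, and the signal $\mu(t)$, standard deviation $\sigma(t)$ and noise $\varepsilon_i(t)$ are assumed to be smooth functions, with the noise fields independent across subjects and of zero mean and unit variance. If we compute the standardized mean over all subjects, we get a standardized random field

$$X(t) = \frac{\sqrt{n}\,\bar{Y}(t)}{\sigma(t)} = \frac{\sqrt{n}\,\mu(t)}{\sigma(t)} + \frac{1}{\sqrt{n}}\sum_{i=1}^{n}\varepsilon_i(t), \qquad (30)$$

where $\bar{Y}(t) = n^{-1}\sum_{i=1}^n Y_i(t)$. This standardized random field has constant standard deviation 1. We can treat $\sqrt{n}\,\mu(t)/\sigma(t)$ as the new signal and $n^{-1/2}\sum_{i=1}^n \varepsilon_i(t)$ as the new noise of the standardized field. We propose the following methods to estimate the new signal and noise.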
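In practice $\sigma(t)$ is unknown, so a natural plug-in replaces it with the voxelwise sample standard deviation, which turns the standardized mean into a one-sample t-statistic field. The sketch below assumes subjects are stacked along the first axis; the location-dependent noise SD and the null signal in the usage example are hypothetical and serve only to show that the standardized field has roughly unit standard deviation even when $\sigma(t)$ varies.

```python
import numpy as np

def standardized_mean_field(data):
    """sqrt(n) * voxelwise mean / voxelwise sample SD, computed over subjects (axis 0).
    With the SD estimated from the data, this is a one-sample t-statistic field."""
    n = data.shape[0]
    mean = data.mean(axis=0)
    sd = data.std(axis=0, ddof=1)
    return np.sqrt(n) * mean / sd

rng = np.random.default_rng(3)
n_subjects, n_voxels = 80, 5000
sigma = np.linspace(0.5, 2.0, n_voxels)  # hypothetical, location-dependent noise SD
mu = np.zeros(n_voxels)                  # null signal, so X should look like unit-variance noise
data = mu + sigma * rng.standard_normal((n_subjects, n_voxels))
X = standardized_mean_field(data)
print(round(float(X.std()), 2))  # close to 1 even though sigma varies
```

With $n = 80$ subjects the t-statistic has variance $79/77 \approx 1.03$ under the null, so the unit-variance description of (30) is only approximate once $\sigma(t)$ is estimated.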

5.1 Estimation of the noise spatial covariance function

We consider the noise to be constructed by convolving Gaussian white noise with a kernel:

$$Z(t) = \int_{\mathbb{R}^N} K(t - s)\, dW(s), \qquad (31)$$

where $K$ is an $N$-dimensional kernel function and $W$ is Gaussian white noise. Assume that the kernel is rotationally symmetric so that the noise is isotropic. Under model (31), we would be able to simulate the noise if we could estimate the kernel function from the data.

It can be shown that the autocorrelation of $Z$ is the convolution of the kernel with itself:

$$\mathbb{E}[Z(t)Z(t + h)] = \int_{\mathbb{R}^N} K(s)K(s + h)\, ds = (K * K)(h),$$

where the last equality uses the symmetry $K(-s) = K(s)$.

By the convolution theorem, convolution in the original domain equals point-wise multiplication in the Fourier-transformed domain. Thus the kernel function can be estimated empirically using the following method:

1. Determine a location of interest $t_0$ (e.g. the center of the peak), and calculate the empirical correlation vectors (across subjects) between the data at $t_0$ and at $t$, where $t$ lies on the three orthogonal axes through $t_0$ and belongs to a subdomain of interest.

2. Take the average of the three estimated correlation vectors (forcing the noise to be isotropic) and perform a Fourier transform.

3. Take the square root of the Fourier coefficients; the estimated kernel function is then obtained by performing the inverse Fourier transform.
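Steps 2 and 3 can be sketched for a single, already averaged 1D correlation profile as follows. The sketch assumes the profile has odd length with lag 0 in the middle, and that the kernel's discrete Fourier transform is non-negative (true for a Gaussian kernel up to numerical error); when that fails, the square root recovers $|\hat K|$, i.e. a different kernel with the same autocorrelation. Recovering a 1D profile from an axis correlation is exact for separable kernels such as the Gaussian. The function name is hypothetical.

```python
import numpy as np

def estimate_kernel_1d(corr):
    """Estimate a symmetric 1D kernel k from its autocorrelation r = k * k.
    `corr` has odd length with lag 0 at the middle entry."""
    corr = np.asarray(corr, dtype=float)
    if corr.size % 2 == 0:
        raise ValueError("correlation vector must have odd length, centered at lag 0")
    # Move lag 0 to index 0 so the FFT of a symmetric vector is real.
    spectrum = np.fft.fft(np.fft.ifftshift(corr)).real
    # Step 3: square root of the Fourier coefficients (clip tiny negatives from rounding).
    root = np.sqrt(np.clip(spectrum, 0.0, None))
    kernel = np.fft.ifft(root).real
    return np.fft.fftshift(kernel)  # put the kernel center back in the middle

x = np.arange(-10, 11)
true_kernel = np.exp(-x**2 / (2 * 2.0**2))
true_kernel /= np.sqrt(np.sum(true_kernel**2))  # unit-variance noise, so corr at lag 0 is 1
corr = np.convolve(true_kernel, true_kernel)    # full autocorrelation, lag 0 at index 20
est = estimate_kernel_1d(corr)
print(np.max(np.abs(est[10:31] - true_kernel)))  # numerical error only
```

Because the correlation vector has length $2L - 1$ for a kernel of length $L$, the circular operations in the FFT coincide with linear convolution, so no wrap-around correction is needed.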

5.2 Estimation of the mean function

Our explicit formulas are derived assuming the Hessian of the mean function is a constant times the identity matrix. Therefore, we aim to find a rotationally symmetric paraboloid that best represents the mean function:

$$\mu(t) = \beta_0 + \boldsymbol{\beta} \cdot t + \gamma\, (t \cdot t), \quad t \in \mathbb{R}^N, \qquad (32)$$

where the dot represents the vector inner product in $\mathbb{R}^N$. Note that all the quadratic terms share the same coefficient $\gamma$, which is due to the rotational symmetry. To estimate (32), we can fit a linear regression using all values of the standardized field within the subdomain as the outcome, with $t$ and $t \cdot t$ as covariates.
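Since the paraboloid is linear in its coefficients, the fit is an ordinary least-squares problem with covariates $1$, $t$, and $t \cdot t$. The sketch below uses `numpy.linalg.lstsq` and also returns the vertex $-\boldsymbol{\beta}/(2\gamma)$, the fitted peak location. The coefficient names and the noise-free 3D test surface are hypothetical.

```python
import numpy as np

def fit_isotropic_paraboloid(coords, values):
    """Least-squares fit of values ~ beta0 + beta . t + gamma * (t . t).

    coords: (m, N) array of locations t; values: (m,) outcomes.
    Returns beta0, beta (length N), gamma, and the vertex -beta / (2 * gamma)."""
    coords = np.asarray(coords, dtype=float)
    design = np.column_stack([np.ones(len(coords)), coords, np.sum(coords**2, axis=1)])
    coef, *_ = np.linalg.lstsq(design, np.asarray(values, dtype=float), rcond=None)
    beta0, beta, gamma = coef[0], coef[1:-1], coef[-1]
    return beta0, beta, gamma, -beta / (2.0 * gamma)

# Hypothetical noise-free 3D check on a 7 x 7 x 7 grid of voxel offsets.
coords = np.mgrid[-3:4, -3:4, -3:4].reshape(3, -1).T
true_center = np.array([1.0, -0.5, 0.3])
values = 5.0 - 0.2 * np.sum((coords - true_center) ** 2, axis=1)
beta0, beta, gamma, center = fit_isotropic_paraboloid(coords, values)
print(gamma, center)  # recovers -0.2 and the true center up to rounding
```

A negative fitted $\gamma$ indicates a concave paraboloid, the case covered by the explicit formulas.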

6 A 3D real data example

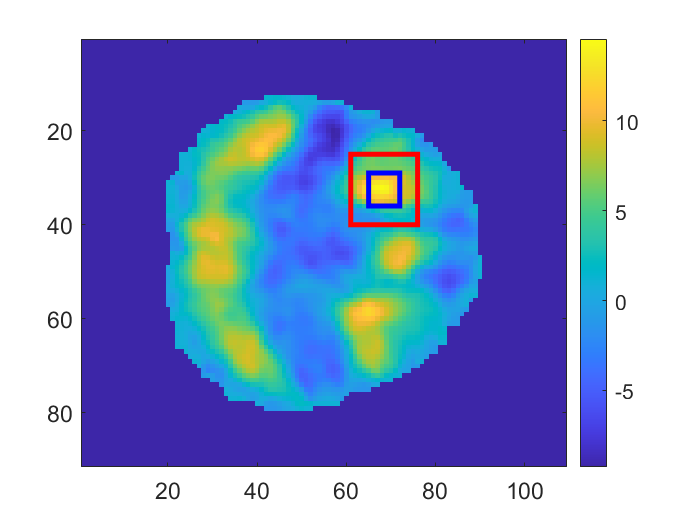

As an application, we illustrate the methods in a group analysis of fMRI data from the Human Connectome Project (HCP) (Van Essen et al., 2012). The data is used here to get realistic 3D signal and noise parameters from which to do 3D simulations as well as evaluate the performance of our formulas in power approximation. The dataset contains fMRI brain scans for 80 subjects. For each subject, the size of the fMRI image is voxels. The mean and standard deviation of the data are displayed in Figure 5(a) and 5(b).

6.1 Data preprocessing

Gaussian kernel smoothing is applied to the dataset to make the mean function unimodal around the peak and to increase the signal-to-noise ratio. The standard deviation of the smoothing kernel used in this example is 1 voxel, which corresponds to a full width at half maximum (FWHM) of about 2.355 voxels. Figure 5(b) shows that the standard deviation of the noise is not constant across locations, so we use the transformation described in Section 5 to standardize the smoothed data before analyzing it. The standardized mean of the smoothed data is displayed in Figure 5(c).
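The FWHM of a Gaussian kernel is $2\sqrt{2\ln 2}\,\sigma \approx 2.3548\,\sigma$, so a kernel standard deviation of 1 voxel gives an FWHM of about 2.355 voxels. A one-line check:

```python
import math

def sd_to_fwhm(sd):
    """FWHM of a Gaussian kernel with standard deviation `sd`: 2 * sqrt(2 ln 2) * sd."""
    return 2.0 * math.sqrt(2.0 * math.log(2.0)) * sd

print(round(sd_to_fwhm(1.0), 3))  # 2.355
```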

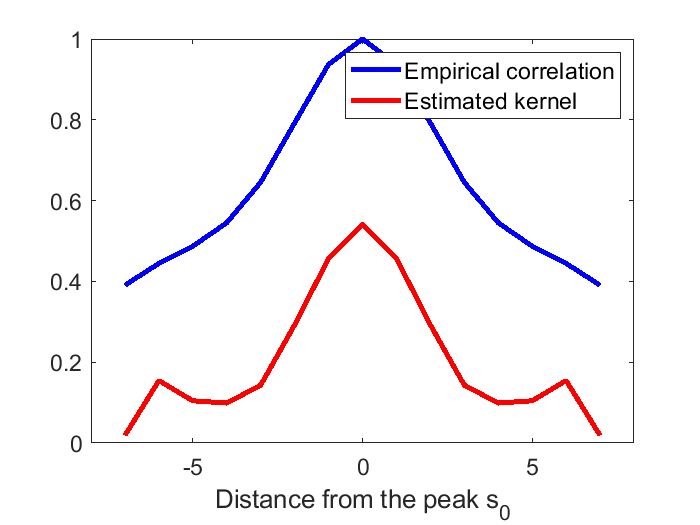

6.2 Estimation of the autocorrelation and mean functions

After standardizing the data, our next step is to estimate the mean and kernel functions using the methods described in Section 5. Here a subdomain (the red box in Figure 5(c)) around the peak is taken to estimate the kernel. Since we assume the noise is isotropic, the correlation around the peak is supposed to be strictly symmetric along any dimension. However, this is not always true in real data. To tackle this, for each of the three dimensions, we first save the correlation around the peak as a vector and create a new vector by flipping the saved correlation vector. Then we take the mean of the two vectors so that it is guaranteed to be symmetric. The empirical correlation after such symmetrization and the corresponding estimated kernel function are displayed in Figure 6.
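The symmetrization described above (average each axis correlation vector with its reversed copy) followed by averaging over the three axes can be sketched as below. It assumes the three vectors share the same odd length with lag 0 in the middle; the correlation values in the usage example are hypothetical.

```python
import numpy as np

def symmetrize_and_average(axis_correlations):
    """Average each correlation vector with its reversal, then average over axes.
    Every vector must have the same odd length, with lag 0 in the middle."""
    vecs = [np.asarray(v, dtype=float) for v in axis_correlations]
    if len({v.size for v in vecs}) != 1 or vecs[0].size % 2 == 0:
        raise ValueError("need equal-length, odd-length vectors centered at lag 0")
    symmetric = [(v + v[::-1]) / 2.0 for v in vecs]
    return np.mean(symmetric, axis=0)

axes = [  # hypothetical, slightly asymmetric correlations along x, y, z
    [0.30, 0.70, 1.0, 0.80, 0.40],
    [0.35, 0.75, 1.0, 0.75, 0.35],
    [0.40, 0.80, 1.0, 0.70, 0.30],
]
profile = symmetrize_and_average(axes)
print(profile)
```

The resulting profile is symmetric by construction, so its Fourier transform is real, which is what the kernel estimation step in Section 5.1 requires.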

We consider two approaches to estimate the mean function, nonparametric and parametric. The nonparametric mean estimation is obtained as a voxelwise average over subjects.

| (33) |

The parametric mean estimate is obtained by fitting the linear regression model (32) using all observed data within the subdomain (the blue box in Figure 5(c)) as the outcome and the corresponding location variables $t$ and $t \cdot t$ as covariates. The least-squares estimate of the mean is

| (34) |

We will compare the difference in simulated power and when the mean function is estimated by the nonparametric approach (33) vs the parametric approach (34).

6.3 3D Simulation induced by data

We have carried out several simulation studies in a carefully designed 2D setting where the formulas are expected to work well, but ultimately we want to apply the formulas to real data, which are more complicated. Moreover, fMRI images are inherently 3D. For these reasons, a 3D simulation study induced by real data is needed to validate the performance of the formulas in a more realistic setting.

In the previous two subsections, we studied the signal and noise of the HCP data. For the simulation, we generate 3D images using the estimated mean and kernel functions. Each noise field is generated by convolving the estimated kernel (displayed in Figure 6) with Gaussian white noise, and 10,000 such noise fields are generated for each simulation setting.
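A sketch of how one noise field can be generated by convolving Gaussian white noise with a kernel, using FFT-based convolution in NumPy. The estimated kernel of Figure 6 is not available here, so an isotropic Gaussian kernel stands in for it as an assumption. The kernel is normalized to unit L2 norm so that the resulting field has unit variance, and the grid size and number of replicates are illustrative.

```python
import numpy as np


def gaussian_kernel_3d(sigma, radius):
    """Isotropic Gaussian kernel on a (2*radius+1)^3 grid with unit L2 norm,
    so that white noise convolved with it has unit variance."""
    ax = np.arange(-radius, radius + 1, dtype=float)
    x, y, z = np.meshgrid(ax, ax, ax, indexing="ij")
    k = np.exp(-(x**2 + y**2 + z**2) / (2.0 * sigma**2))
    return k / np.sqrt((k**2).sum())


def simulate_noise(kernel, shape, rng):
    """One smooth Gaussian noise field: white noise convolved with `kernel`.

    The white noise is drawn on a padded grid and convolved via FFT; only the
    region free of circular wrap-around is returned, so the field is stationary.
    """
    padded = [s + k - 1 for s, k in zip(shape, kernel.shape)]
    white = rng.standard_normal(padded)
    spec = np.fft.rfftn(white) * np.fft.rfftn(kernel, s=padded)
    field = np.fft.irfftn(spec, s=padded)
    crop = tuple(slice(k - 1, k - 1 + s) for s, k in zip(shape, kernel.shape))
    return field[crop]


rng = np.random.default_rng(0)
kernel = gaussian_kernel_3d(sigma=1.0, radius=3)   # stand-in for the estimated kernel
fields = [simulate_noise(kernel, (8, 8, 8), rng) for _ in range(200)]
```

Padding by the kernel width and cropping avoids the wrap-around artifacts that a plain circular FFT convolution would introduce at the borders.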

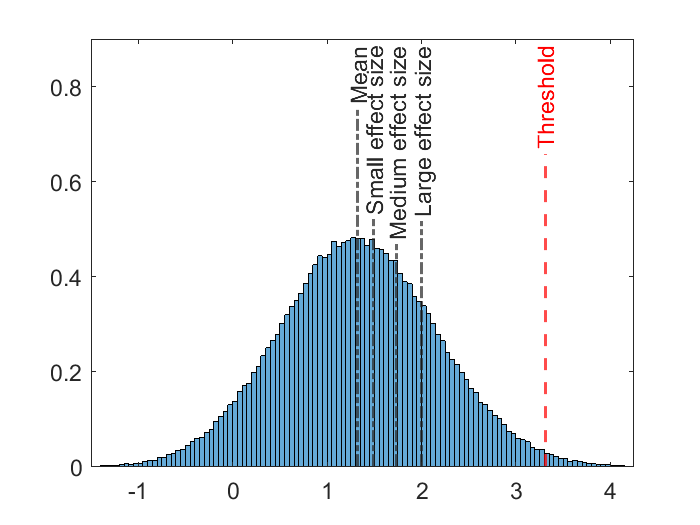

The signal in the standardized data is very strong (see Figure 5(c)). For illustrative purposes, we weaken the signal by scaling down the estimated mean function (34) while maintaining its shape. Signal strength is measured by effect size, and the amount of scaling is determined by the target effect size. In a traditional t-test or z-test, Cohen's d values of 0.2, 0.5, and 0.8 (corresponding to the 0.58, 0.69, and 0.79 quantiles of the standard Gaussian distribution) are considered small, medium, and large effect sizes, respectively (Cohen, 1988). The peak height distribution under the null hypothesis (zero mean function), displayed in Figure 7, is not Gaussian. Therefore, we take the 0.58, 0.69, and 0.79 quantiles of the null distribution minus its mean as the small (0.16), medium (0.40), and large (0.65) effect sizes (see the black dash-dot lines in Figure 7). For simplicity, we treat the peak height of the mean function as the effect size in this simulation, although this is not the most accurate definition of effect size in the peak detection setting; see Section 7.2 for details. The threshold for peak detection is chosen as the 0.99 quantile of the peak height distribution under the null () following Cheng and Schwartzman (2017) (see the red dashed line in Figure 7).
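The effect-size calibration can be sketched as follows: each Cohen's d is mapped to a probability through the standard Gaussian CDF Φ, and the corresponding quantile of the null peak-height distribution, minus its mean, is taken as the effect size; the threshold is the 0.99 quantile of the same distribution. The null peak heights are assumed to be available as a sample (for example, heights of local maxima collected from simulated null fields), and the function names are illustrative.

```python
import math

import numpy as np


def std_normal_cdf(x):
    """Standard Gaussian CDF, Phi(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def effect_sizes_from_null(null_heights, cohen_d=(0.2, 0.5, 0.8)):
    """Effect sizes on the null peak-height scale: quantile_null(Phi(d)) - mean(null)."""
    null_heights = np.asarray(null_heights, dtype=float)
    probs = [std_normal_cdf(d) for d in cohen_d]
    return np.quantile(null_heights, probs) - null_heights.mean()


def detection_threshold(null_heights, level=0.99):
    """Peak detection threshold u: the `level` quantile of the null peak heights."""
    return float(np.quantile(np.asarray(null_heights, dtype=float), level))


print([round(std_normal_cdf(d), 2) for d in (0.2, 0.5, 0.8)])  # [0.58, 0.69, 0.79]
```

As a sanity check, if the null sample were standard Gaussian, `effect_sizes_from_null` would return approximately the original d values, so the construction reduces to the usual definition in the Gaussian case.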

As in the 2D simulation, the search domain is assumed to be a sphere centered at the true peak, and we use its radius to control the domain size. Signal sharpness is fixed because it is estimated from the data. The empirical power and are computed using (28) and (29).
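Equations (28) and (29) are not restated in this section. The sketch below assumes the natural Monte Carlo estimators: the empirical power is the fraction of replicates with at least one local maximum above the threshold u inside the spherical domain, and the empirical expected number is the average count of such maxima per replicate. Treating local maxima as strict maxima over the 26-voxel neighborhood is our own discretization choice, and all names are hypothetical.

```python
import itertools

import numpy as np


def local_maxima_mask(field):
    """Strict local maxima over the 26-voxel neighborhood (border voxels excluded)."""
    f = np.asarray(field, dtype=float)
    nx, ny, nz = f.shape
    core = f[1:-1, 1:-1, 1:-1]
    is_max = np.ones(core.shape, dtype=bool)
    for dx, dy, dz in itertools.product((-1, 0, 1), repeat=3):
        if dx == dy == dz == 0:
            continue
        neighbor = f[1 + dx:nx - 1 + dx, 1 + dy:ny - 1 + dy, 1 + dz:nz - 1 + dz]
        is_max &= core > neighbor
    mask = np.zeros(f.shape, dtype=bool)
    mask[1:-1, 1:-1, 1:-1] = is_max
    return mask


def ball_mask(shape, center, radius):
    """Voxels within Euclidean distance `radius` of `center`."""
    grid = np.indices(shape, dtype=float)
    c = np.asarray(center, dtype=float).reshape(3, 1, 1, 1)
    return ((grid - c) ** 2).sum(axis=0) <= radius**2


def empirical_power_and_count(fields, u, center, radius):
    """Fraction of replicates with >= 1 local maximum above u in the ball, and the
    average number of such maxima per replicate."""
    counts = []
    for f in fields:
        f = np.asarray(f, dtype=float)
        hits = local_maxima_mask(f) & (f > u) & ball_mask(f.shape, center, radius)
        counts.append(int(hits.sum()))
    counts = np.array(counts)
    return float((counts >= 1).mean()), float(counts.mean())
```

In the simulation described above, each entry of `fields` would be a scaled mean function plus one simulated noise field.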

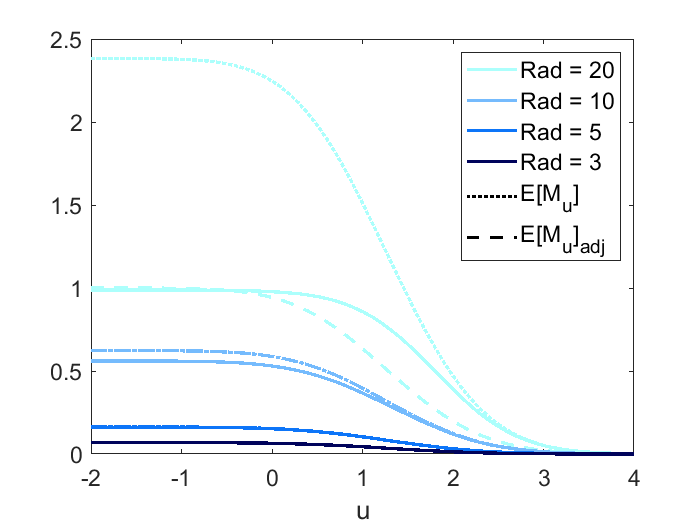

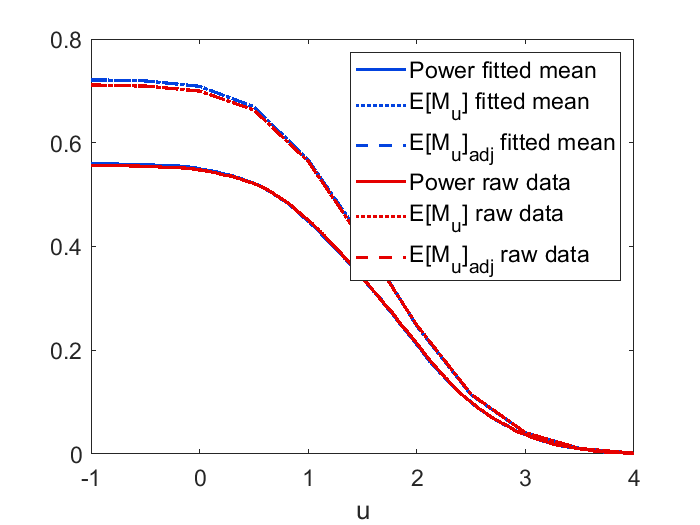

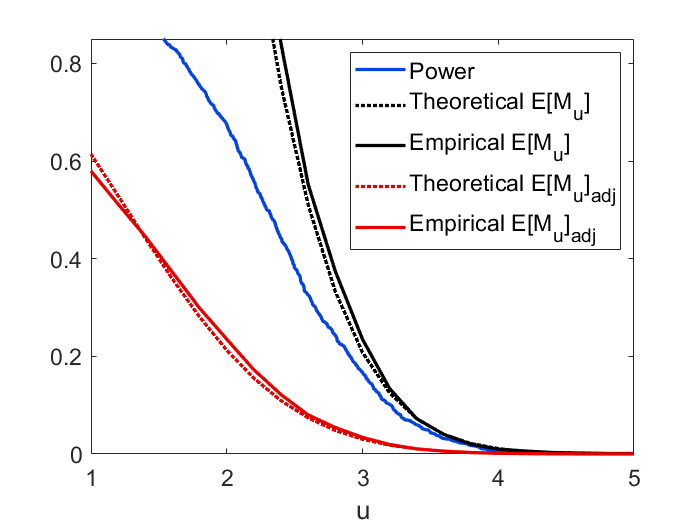

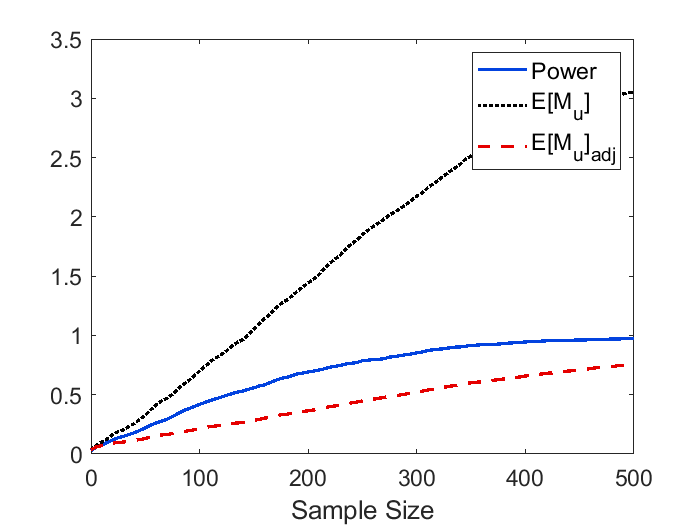

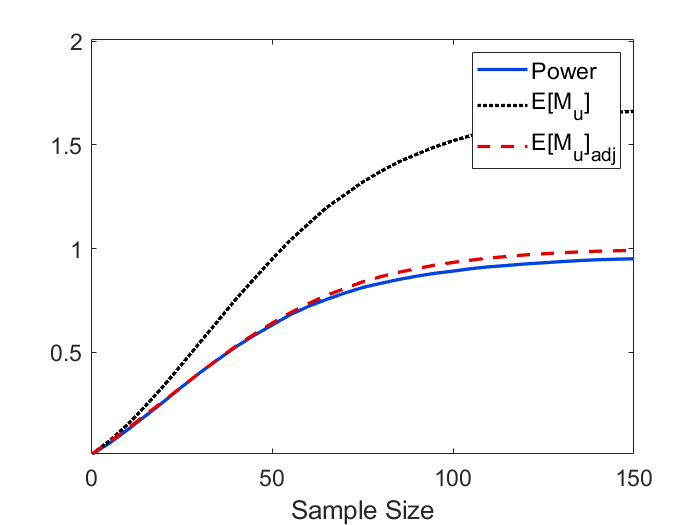

To derive the explicit formulas for , we assume the mean function to be a rotationally symmetric paraboloid, an assumption that may introduce some bias in applications. Figure 8 compares simulated power and when the mean function is estimated by the nonparametric approach (33) versus the parametric approach (34). In this example, the quadratic approximation has only a small impact on power and . In Figure 9, we validate our theoretical formula for (19) as well as the adjusted . The theoretical curves for and adjusted closely match the empirical curves. The figure also shows that the power approximation using is accurate for large , as stipulated by Theorem 2. Figure 10 displays power curves for three different effect sizes and compares large and small domain sizes. The approximation works well for both small and large sample sizes, and it gives a conservative sample size determination when exceeds 1. The power approximation using also improves as the domain size decreases, as stipulated by Theorem 7.

7 Discussion

7.1 Explicit formulas and approximations

Calculating the peak detection power (1) has been a difficult problem in random field theory because no formula is available to compute it directly. In this paper, we have discussed the rationale for using and to approximate peak detection power under different scenarios, and derived formulas to compute assuming isotropy. Isotropy allows us to use the GOI matrix (Cheng and Schwartzman, 2018) as a tool to calculate via the Kac-Rice formula.
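For readers who want to experiment with the GOI matrices mentioned above, the following sketch draws a GOI(c) matrix, assuming the covariance structure E[M_ij M_kl] = (1/2)(δ_ik δ_jl + δ_il δ_jk) + c δ_ij δ_kl as defined in Cheng and Schwartzman (2018). The construction used here (a GOE matrix plus sqrt(c)·ξ·I, with ξ a single standard Gaussian) only covers c ≥ 0; it is an illustration, not the procedure used in this paper.

```python
import numpy as np


def sample_goi(n, c, rng):
    """Draw one n x n GOI(c) matrix for c >= 0.

    A GOE part with Var(M_ii) = 1 and Var(M_ij) = 1/2 (i != j) is shifted by
    sqrt(c) * xi * I with a single standard Gaussian xi, which adds covariance c
    between every pair of diagonal entries.
    """
    if c < 0:
        raise ValueError("this construction requires c >= 0")
    a = rng.standard_normal((n, n))
    goe = (a + a.T) / 2.0
    xi = rng.standard_normal()
    return goe + np.sqrt(c) * xi * np.eye(n)


rng = np.random.default_rng(1)
samples = np.stack([sample_goi(3, 0.5, rng) for _ in range(20_000)])
```

Such draws can be used, for example, to check Monte Carlo averages of functions of the eigenvalues against closed-form expressions.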

We also derived explicit formulas for (defined in (18)) when the mean function is a paraboloid. Computing involves the probability density function of the eigenvalues of GOI matrices; details can be found in the proofs of Propositions 2, 3, and 4. Then can be calculated by plugging into (19). The integral in (19), however, cannot be evaluated in closed form; in practice, it may be evaluated numerically. For higher dimensions (), it remains difficult to obtain an explicit form of because, as Propositions 2, 3, and 4 indicate, the integration becomes extremely complicated as grows.

7.2 Effect size

We want to emphasize that the power depends on both the signal strength parameter and the shape parameter . In a traditional z-test or t-test of the single null hypothesis that the mean equals 0, the detection power depends only on a single parameter, which we call the effect size. Here, the test is conditional on the point being a local maximum. With a simple z-test or t-test, one would reject the null hypothesis whenever the peak height exceeds a pre-specified threshold. This approach is not accurate, since the peak height does not follow a Gaussian or t distribution. To address this, the threshold can be determined from the null distribution of the peak height (Cheng and Schwartzman, 2018) to control the type I error at a nominal level. However, a power calculation based on a test of peak height alone is still biased, since the true effect size depends on both the signal height and its curvature. The height of the peak affects the probability of exceeding the threshold, and the curvature affects the probability that such a peak exists in the domain. Consequently, a sharp, high peak is easier to detect than a flat, low peak, and yields a larger detection power.

For an interpretation of the parameter , we consider two types of mean function: paraboloid and Gaussian. Suppose the noise is the convolution of white noise with a Gaussian kernel with spatial standard deviation , resulting in the covariance function with as specified in Section 3.1. This is the same noise as we simulated in Section 5. When the mean function is a paraboloid, consider as in (27). Here we obtain and , yielding . Thus, is a shape parameter representing the sharpness of the mean function relative to the curvature of the noise. When the mean function is Gaussian, consider . This expression is obtained, for example, if the signal is the convolution of a delta function with a Gaussian kernel with spatial standard deviation . We obtain and , yielding . Thus, is the signal height a scaled by the ratio of the spatial extents of the noise and signal filters. In both cases, the parameter , and thus the power, is invariant under isotropic scaling of the domain, similarly to the peak height distribution under the null hypothesis (Cheng and Schwartzman, 2020).
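As a numerical check of the noise model, the sketch below (in 1D, for simplicity) verifies the standard fact that convolving white noise with a Gaussian kernel proportional to exp(-x²/(2ν²)) yields the correlation function exp(-t²/(4ν²)). The symbol ν and the grid parameters are our own choices for illustration and may not match the notation of Section 3.1.

```python
import numpy as np


def noise_correlation_from_kernel(nu, dx=0.01, half_width=8.0):
    """Correlation function (1D) of white noise smoothed by exp(-x^2 / (2 nu^2)),
    computed as the normalized autocorrelation of the sampled kernel."""
    x = np.arange(-half_width * nu, half_width * nu + dx / 2, dx)
    k = np.exp(-x**2 / (2.0 * nu**2))
    acf = np.correlate(k, k, mode="full")
    lags = dx * (np.arange(acf.size) - (k.size - 1))
    return lags, acf / acf[k.size - 1]


nu = 1.5
lags, rho = noise_correlation_from_kernel(nu)
near = np.abs(lags) <= 3 * nu
max_err = np.max(np.abs(rho[near] - np.exp(-lags[near] ** 2 / (4 * nu**2))))
```

The doubling of the variance (from ν² to 2ν²) is why the noise correlation is wider than the smoothing kernel itself; the same relation holds coordinatewise in higher dimensions.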

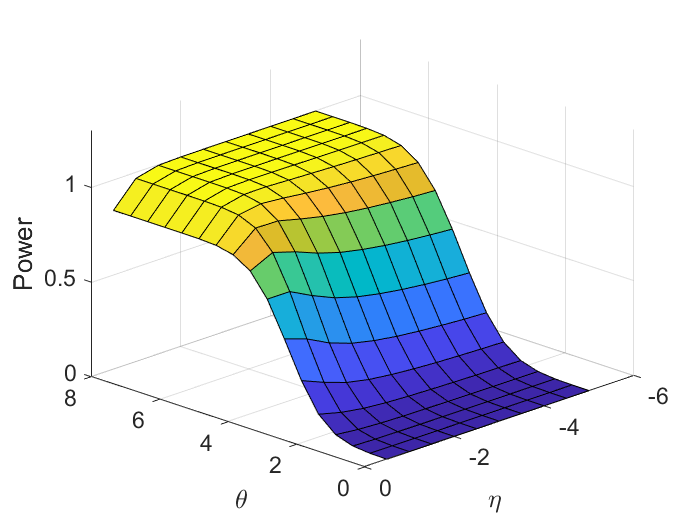

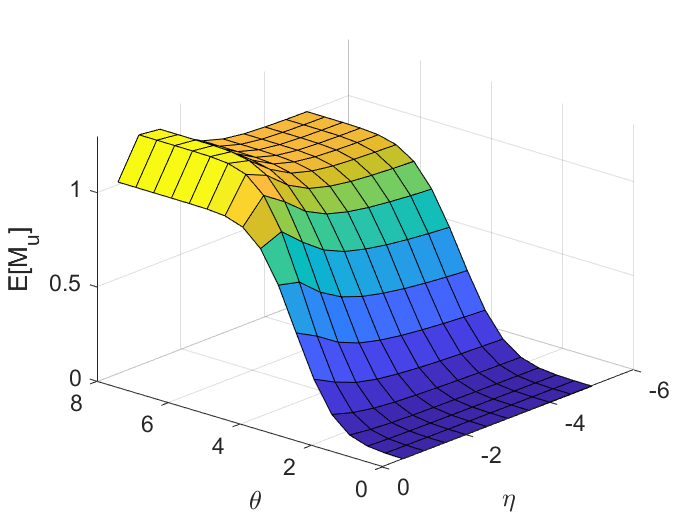

Figure 11 illustrates how and affect power and in the 2D simulation described in Section 4. As explained above, and together determine the effect size. Although an explicit form of the effect size as a function of and is difficult to derive, we can roughly show how the two parameters relate to power. The parameter , which can be seen as a signal-to-noise ratio (SNR), plays a major role: holding fixed, power increases monotonically in . Conversely, holding fixed, power decreases monotonically in . In this simulation example, if we fit a linear model of power on and , the estimated impact of on power is about 10 times stronger than that of . The figure also shows that the effect of on power is stronger for large than for small .
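The linear-model comparison mentioned above can be sketched as an ordinary least-squares fit of power on the two parameters. The function `linear_effects` is hypothetical, and its inputs would be the simulation grid and empirical powers behind Figure 11, which are not reproduced here. The ratio of raw coefficients depends on the ranges and units of the two parameters, so it should be read together with the grid used.

```python
import numpy as np


def linear_effects(strength, shape, power):
    """OLS fit of power ~ b0 + b1 * strength + b2 * shape; returns (b1, b2)."""
    strength, shape, power = (
        np.asarray(v, dtype=float).ravel() for v in (strength, shape, power)
    )
    design = np.column_stack([np.ones_like(strength), strength, shape])
    coef, *_ = np.linalg.lstsq(design, power, rcond=None)
    return float(coef[1]), float(coef[2])
```

A positive `b1` and a negative `b2` would correspond to the monotone patterns described above, and `abs(b1 / b2)` to the relative impact of the two parameters.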

7.3 Application to data

To use our formula to calculate power in practice, one needs to assume a particular peak shape, such as a paraboloid or a Gaussian. When such an assumption is not plausible, the resulting power estimate may be inaccurate.

The conjecture that is a lower bound when at least one local maximum exists in the domain  remains difficult to prove in general, but the real data example suggests that it holds in practice. In a real-life problem, we can take both and into consideration to better understand the required sample size. We suggest using as an approximation to power when the sample size is small, and considering when the sample size is large. also gives a more conservative estimate of power than , which is useful for ensuring that the test is powerful enough when designing future studies. Because of its difficulty, we leave further study of for future work.

8 Acknowledgments

Y.Z., D.C. and A.S. were partially supported by NIH grant R01EB026859 and NSF grant 1811659. Data were provided in part by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

9 Appendix

References

- Adler and Taylor (2007) R. J. Adler, J. E. Taylor, Random Fields and Geometry, Springer, New York, 2007.

- Cheng and Schwartzman (2015) D. Cheng, A. Schwartzman, Distribution of the height of local maxima of Gaussian random fields, Extremes 18 (2015) 213–240.

- Cheng and Schwartzman (2017) D. Cheng, A. Schwartzman, Multiple testing of local maxima for detection of peaks in random fields, Annals of Statistics 45(2) (2017) 529–556.

- Cheng and Schwartzman (2018) D. Cheng, A. Schwartzman, Expected number and height distribution of critical points of smooth isotropic Gaussian random fields, Bernoulli 24(4B) (2018) 3422–3446.

- Cheng and Schwartzman (2020) D. Cheng, A. Schwartzman, On critical points of Gaussian random fields under diffeomorphic transformations, Statistics & Probability Letters 158 (2020) 108672.

- Cohen (1988) J. Cohen, Statistical Power Analysis for the Behavioral Sciences, Routledge, 1988.

- Durnez et al. (2016) J. Durnez, J. Degryse, B. Moerkerke, R. Seurinck, V. Sochat, R. A. Poldrack, T. E. Nichols, Power and sample size calculations for fMRI studies based on the prevalence of active peaks (2016).

- Genovese et al. (2002) C. R. Genovese, N. A. Lazar, T. Nichols, Thresholding of statistical maps in functional neuroimaging using the false discovery rate, NeuroImage 15 (2002) 870–878.

- Heller et al. (2006) R. Heller, D. Stanley, D. Yekutieli, N. Rubin, Y. Benjamini, Cluster-based analysis of FMRI data, NeuroImage 33 (2006) 599–608.

- Piterbarg (1996) V. I. Piterbarg, Rice's method for large excursions of Gaussian random fields, Technical Report No. 478, Center for Stochastic Processes, Univ. North Carolina (1996).

- Schwartzman et al. (2008) A. Schwartzman, W. Mascarenhas, J. Taylor, Inference for eigenvalues and eigenvectors of Gaussian symmetric matrices, Annals of Statistics 36(6) (2008) 2886–2919.

- Schwartzman and Telschow (2019) A. Schwartzman, F. Telschow, Peak p-values and false discovery rate inference in neuroimaging, NeuroImage 197 (2019) 402–413.

- Van Essen et al. (2012) D. C. Van Essen, K. Ugurbil, E. Auerbach, D. Barch, T. E. J. Behrens, R. Bucholz, A. Chang, L. Chen, M. Corbetta, S. W. Curtiss, S. Della Penna, D. Feinberg, M. F. Glasser, N. Harel, A. C. Heath, L. Larson-Prior, D. Marcus, G. Michalareas, S. Moeller, R. Oostenveld, S. E. Petersen, F. Prior, B. L. Schlaggar, S. M. Smith, A. Z. Snyder, J. Xu, E. Yacoub, WU-Minn HCP Consortium, The human connectome project: a data acquisition perspective, NeuroImage 62 (2012) 2222–2231.