An Asynchronous Approximate Distributed Alternating Direction Method of Multipliers in Digraphs

Abstract

In this work, we consider the asynchronous distributed optimization problem in which each node has its own convex cost function and can communicate directly only with its neighbors, as determined by a directed communication topology (directed graph or digraph). First, we reformulate the optimization problem so that the Alternating Direction Method of Multipliers (ADMM) can be utilized. Then, we propose an algorithm, herein called Asynchronous Approximate Distributed Alternating Direction Method of Multipliers (AsyAD-ADMM), which uses finite-time asynchronous approximate ratio consensus to solve the multi-node convex optimization problem, and in which every node performs iterative computations and exchanges information with its neighbors asynchronously. More specifically, at every iteration of AsyAD-ADMM, each node solves a local convex optimization problem for one of the primal variables and utilizes a finite-time asynchronous approximate consensus protocol to obtain a value of the other primal variable that is close to the optimal one, since the cost function for the second primal variable is not decomposable. If the individual cost functions are convex but not necessarily differentiable, the proposed algorithm converges at a rate of $\mathcal{O}(1/k)$, where $k$ is the iteration counter. The efficacy of AsyAD-ADMM is exemplified via a proof-of-concept distributed least-squares optimization problem, for which different performance-influencing factors are investigated.

Index Terms:

Distributed optimization, asynchronous ADMM, directed graphs, ratio consensus, finite-time consensus.

I Introduction

In this paper, we are interested in developing a modified ADMM algorithm for solving large-scale optimization problems in digraphs. In that direction, two main communication topologies have been considered: (i) the master-worker communication topology [1, 2, 3] and (ii) the multi-node communication topology [4, 5, 6, 7]. When the ADMM has a master-worker communication topology, the worker nodes optimize their local objectives and communicate their local variables to the master node, which updates the global optimization variable and sends it back to the workers. When the ADMM has no master node, the optimization problem is solved over a network of nodes. Herein, we focus on the ADMM realized on multi-node communication topologies.

There are several real-life applications in which synchronous operation is infeasible, or costly and complicated. As a result, asynchronous information exchange becomes necessary. For example, resource allocation in data centers gives rise to large-scale problems and networks, which naturally call for asynchronous solutions. Recently, different asynchronous distributed ADMM versions have been proposed under different assumptions and communication topologies. For example, the version in [1, 2] is based on the master-worker architecture under a star topology. Even though worker updates are parallel and distributed, the master update is centralized, as the master collects information from the workers and broadcasts the updated information to them for their following iteration. The authors in [4, 5] proposed another version with almost sure convergence, in which the ADMM update uses information from a randomly selected subset of nodes, under the assumption that every node is selected with positive probability. Then, the authors in [3] combined the above two architectures and designed a master-local processor-link processor architecture with random node selection. It is worth noting that all the above asynchronous versions are only available for undirected communication graphs, i.e., they assume that every communication link is bidirectional. Very recently, distributed ADMM versions for digraphs have been proposed in [6, 7]. However, they are synchronous, which is the motivation for this work.

In this paper, we propose AsyAD-ADMM, an asynchronous distributed ADMM algorithm for digraphs. First, the optimization problem is restructured such that, at every optimization step, each node can individually solve a local convex optimization problem for one of the primal variables, while for the other primal variable communication among nodes is required, since its cost function is not decomposable. Then, we find the solution for the primal variable whose cost function is non-decomposable by means of a finite-time asynchronous approximate consensus algorithm. More specifically, we adopt the algorithm introduced in [8, Algorithm 2]. The algorithm relies on asynchronous max-consensus and min-consensus protocols to compute the approximate average consensus value, considering different communication delays, over a finite number of steps. Unlike [8], the consensus algorithm has to be repeated at every optimization step, which is also asynchronous. Towards this end, we propose a mechanism in which every next optimization step is initiated after the previous one has finished. The main contributions of the paper are as follows.

- We propose an asynchronous distributed ADMM algorithm which can be applied to digraphs.

- The convergence analysis shows that AsyAD-ADMM converges at a rate of $\mathcal{O}(1/k)$, where $k$ is the iteration counter. Factors influencing the algorithm performance are also investigated.

II Preliminaries

II-A Notation and graph theory

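The set of real (non-negative integer, positive integer) numbers is denoted by $\mathbb{R}$ ($\mathbb{Z}_{\geq 0}$, $\mathbb{Z}_{>0}$), and $\mathbb{R}^{n}_{+}$ denotes the non-negative orthant of the $n$-dimensional real space $\mathbb{R}^{n}$. $A^{\top}$ denotes the transpose of matrix $A$. For $A \in \mathbb{R}^{n \times n}$, $A_{ij}$ denotes the entry in row $i$ and column $j$. By $\mathbf{1}$ we denote the all-ones vector and by $I$ we denote the identity matrix (of appropriate dimensions). $\|\cdot\|$ denotes the 2-norm.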

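In multi-node systems with fixed communication links (edges), the exchange of information between nodes can be conveniently captured by a graph $\mathcal{G}(\mathcal{V}, \mathcal{E})$ of order $n$, where $\mathcal{V} = \{v_1, v_2, \ldots, v_n\}$ is the set of nodes and $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$ is the set of edges. A directed edge from node $v_i$ to node $v_j$ is denoted by $\varepsilon_{ji} = (v_j, v_i) \in \mathcal{E}$ and represents a communication link that allows node $v_j$ to receive information from node $v_i$. A graph is said to be undirected if and only if $\varepsilon_{ji} \in \mathcal{E}$ implies $\varepsilon_{ij} \in \mathcal{E}$. A digraph is called strongly connected if there exists a path from each vertex $v_i$ of the graph to each vertex $v_j$ ($v_j \neq v_i$). In other words, for any $v_i, v_j \in \mathcal{V}$, $v_i \neq v_j$, one can find a sequence of nodes $v_i = v_{l_1}, v_{l_2}, \ldots, v_{l_t} = v_j$ such that link $(v_{l_{s+1}}, v_{l_s}) \in \mathcal{E}$ for all $s = 1, 2, \ldots, t-1$. The diameter $D$ of a graph is the longest shortest path between any two nodes in the network.

All nodes that can transmit information to node $v_j$ directly are said to be in-neighbors of node $v_j$ and belong to the set $\mathcal{N}_j^{-} = \{v_i \in \mathcal{V} \mid \varepsilon_{ji} \in \mathcal{E}\}$. The cardinality of $\mathcal{N}_j^{-}$ is called the in-degree of $v_j$ and is denoted by $\mathcal{D}_j^{-}$. The nodes that receive information from node $v_j$ belong to the set of out-neighbors of node $v_j$, denoted by $\mathcal{N}_j^{+} = \{v_l \in \mathcal{V} \mid \varepsilon_{lj} \in \mathcal{E}\}$. The cardinality of $\mathcal{N}_j^{+}$ is called the out-degree of $v_j$ and is denoted by $\mathcal{D}_j^{+}$.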
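The graph notions above (in- and out-neighbors, strong connectivity, diameter) can be illustrated with a minimal Python sketch. The function names and the `(sender, receiver)` edge convention are assumptions made for this example only; they are not taken from the paper.

```python
from collections import deque

def in_out_neighbors(n, edges):
    """Build in-/out-neighbor sets from (sender, receiver) pairs (assumed convention)."""
    in_nb = {v: set() for v in range(n)}
    out_nb = {v: set() for v in range(n)}
    for sender, receiver in edges:
        out_nb[sender].add(receiver)
        in_nb[receiver].add(sender)
    return in_nb, out_nb

def hop_distances(out_nb, start):
    """Breadth-first search: shortest directed hop count from start to each reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        u = queue.popleft()
        for v in out_nb[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def diameter(n, edges):
    """Longest shortest path, or None if the digraph is not strongly connected."""
    _, out_nb = in_out_neighbors(n, edges)
    longest = 0
    for v in range(n):
        dist = hop_distances(out_nb, v)
        if len(dist) < n:  # some node is unreachable from v
            return None
        longest = max(longest, max(dist.values()))
    return longest

# Directed ring on four nodes: 0 -> 1 -> 2 -> 3 -> 0
ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
in_nb, out_nb = in_out_neighbors(4, ring)
print("in-degree of node 0:", len(in_nb[0]))
print("out-degree of node 0:", len(out_nb[0]))
print("diameter:", diameter(4, ring))
```

In the directed ring every node has in-degree and out-degree one, and a message needs three hops to reach the node "behind" its sender, which is why the diameter is larger than in the undirected ring.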

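In the type of algorithms we consider, we associate a positive weight $p_{ji}$ with each edge $\varepsilon_{ji} \in \mathcal{E} \cup \{(v_j, v_j) \mid v_j \in \mathcal{V}\}$. The nonnegative matrix $P = [p_{ji}] \in \mathbb{R}^{n \times n}_{+}$ is a weighted adjacency matrix that has zero entries at locations that do not correspond to directed edges (or self-edges) in the graph.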

II-B Standard ADMM Algorithm

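The standard ADMM algorithm solves the problem:

$$\begin{aligned} \min_{x,\,z}\quad & f(x) + g(z) \\ \text{s.t.}\quad & Ax + Bz = c, \end{aligned} \tag{1}$$

for variables $x \in \mathbb{R}^{p}$, $z \in \mathbb{R}^{m}$ with matrices $A \in \mathbb{R}^{q \times p}$, $B \in \mathbb{R}^{q \times m}$ and vector $c \in \mathbb{R}^{q}$ ($p, m, q \in \mathbb{Z}_{>0}$). The augmented Lagrangian is

$$L_{\rho}(x, z, \lambda) = f(x) + g(z) + \lambda^{\top}(Ax + Bz - c) + \frac{\rho}{2}\|Ax + Bz - c\|^{2}, \tag{2}$$

where $\lambda$ is the Lagrange multiplier and $\rho > 0$ is a penalty parameter. In ADMM, the primal variables $x$, $z$ and the Lagrange multiplier $\lambda$ are updated as follows: starting from some initial vector $(x^{0}, z^{0}, \lambda^{0})$, at each optimization iteration $k$,

$$x^{k+1} = \arg\min_{x}\ L_{\rho}(x, z^{k}, \lambda^{k}), \tag{3}$$

$$z^{k+1} = \arg\min_{z}\ L_{\rho}(x^{k+1}, z, \lambda^{k}), \tag{4}$$

$$\lambda^{k+1} = \lambda^{k} + \rho\,(Ax^{k+1} + Bz^{k+1} - c). \tag{5}$$

The step-size in the Lagrange multiplier update is the same as the augmented Lagrangian function parameter $\rho$.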
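As an illustration of updates (3)-(5), the following minimal sketch runs standard ADMM on a scalar toy problem, $\min \tfrac{1}{2}(x-a)^2 + \gamma|z|$ subject to $x - z = 0$ (that is, $A = 1$, $B = -1$, $c = 0$). The toy problem, the function names and the parameter values are assumptions chosen for this example; the problem is not one considered in the paper. Both minimizations have closed forms, and the minimizer is the soft-thresholded value of $a$.

```python
def soft_threshold(v, kappa):
    """Proximal operator of kappa * |.|, the closed-form minimizer of the z-update."""
    if v > kappa:
        return v - kappa
    if v < -kappa:
        return v + kappa
    return 0.0

def admm_scalar(a, gamma, rho=1.0, iterations=100):
    """Standard ADMM for min 0.5*(x - a)^2 + gamma*|z|  s.t.  x - z = 0 (toy problem)."""
    x, z, lam = 0.0, 0.0, 0.0
    for _ in range(iterations):
        # x-update (3): minimize 0.5*(x - a)^2 + lam*(x - z) + rho/2*(x - z)^2
        x = (a - lam + rho * z) / (1.0 + rho)
        # z-update (4): minimize gamma*|z| + lam*(x - z) + rho/2*(x - z)^2
        z = soft_threshold(x + lam / rho, gamma / rho)
        # multiplier update (5): the step size equals the penalty parameter rho
        lam = lam + rho * (x - z)
    return x, z, lam

x, z, lam = admm_scalar(a=3.0, gamma=1.0)
print(x, z, lam)
```

For $a = 3$ and $\gamma = 1$ the minimizer is $x = z = 2$ with multiplier $\lambda = 1$, which follows from the stationarity condition $(x - a) + \lambda = 0$.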

III Problem Formulation

In this work, we consider a strongly connected digraph $\mathcal{G}(\mathcal{V}, \mathcal{E})$ in which each node $v_i \in \mathcal{V}$ is endowed with a scalar cost function $f_i: \mathbb{R}^{p} \to \mathbb{R}$ assumed to be known to the node only. We assume that each node $v_i$ has knowledge of the number of its out-going links, $\mathcal{D}_i^{+}$, and has access to local information only via its communication with the in-neighboring nodes, $\mathcal{N}_i^{-}$. The only global information available to all the nodes in the network is given in Assumption 1.

Assumption 1

The diameter of the network $D$, or an upper bound, is known to all nodes.

While Assumption 1 is limiting, there exist distributed methods for extracting such information; see, e.g., [9, 10].

The objective is to design a discrete-time coordination algorithm that allows every node $v_i$ in a digraph to distributively and asynchronously solve the following global optimization problem:

$$\min_{x \in \mathbb{R}^{p}}\ \sum_{i=1}^{n} f_i(x), \tag{6}$$

where $x \in \mathbb{R}^{p}$ is a global optimization variable (or a common decision variable). In order to solve problem (6) and to enjoy the structure of the ADMM scheme at the same time, a separate decision variable $x_i \in \mathbb{R}^{p}$ for node $v_i$ is introduced and the constraint $\|x_i - x_j\| \leq \epsilon$ is imposed to allow the asynchronous distributed ADMM framework. Thus, problem (6) is reformulated as

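$$\min_{x_1, \ldots, x_n}\ \sum_{i=1}^{n} f_i(x_i) \tag{7}$$

$$\text{s.t.}\quad \|x_i - x_j\| \leq \epsilon, \quad \forall\, v_i, v_j \in \mathcal{V}, \tag{8}$$

where $\epsilon$ is a predefined error tolerance. Define a closed nonempty convex set $\mathcal{C}$ as

$$\mathcal{C} = \left\{ \begin{bmatrix} x_1^{\top} & x_2^{\top} & \cdots & x_n^{\top} \end{bmatrix}^{\top} \in \mathbb{R}^{np} \;:\; \|x_i - x_j\| \leq \epsilon,\ \forall\, v_i, v_j \in \mathcal{V} \right\}. \tag{9}$$

By denoting $X := \begin{bmatrix} x_1^{\top} & x_2^{\top} & \cdots & x_n^{\top} \end{bmatrix}^{\top}$ and introducing a variable $z \in \mathbb{R}^{np}$ as a copy of the vector $X$, constraint (8) related to problem (7) becomes

$$X = z, \quad z \in \mathcal{C}. \tag{10}$$

Then, define $g(z)$ as the indicator function of the set $\mathcal{C}$:

$$g(z) = \begin{cases} 0, & \text{if } z \in \mathcal{C}, \\ \infty, & \text{otherwise}. \end{cases} \tag{11}$$

Finally, problem (7) with constraint (10) is transformed to

$$\begin{aligned} \min_{z,\, x_i}\quad & \sum_{i=1}^{n} f_i(x_i) + g(z) \\ \text{s.t.}\quad & X - z = 0. \end{aligned} \tag{12}$$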

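For notational convenience, denote $F(X) := \sum_{i=1}^{n} f_i(x_i)$. Thus, the Lagrangian function is

$$L(X, z, \lambda) = F(X) + g(z) + \lambda^{\top}(X - z), \tag{13}$$

where $\lambda \in \mathbb{R}^{np}$ is the Lagrange multiplier. The following standard assumptions are required for problem (12).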

Assumption 2

Each cost function $f_i$ is closed, proper and convex.

Assumption 3

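The Lagrangian $L$ has a saddle point, i.e., there exists a solution $(X^{*}, z^{*}, \lambda^{*})$, for which

$$L(X^{*}, z^{*}, \lambda) \leq L(X^{*}, z^{*}, \lambda^{*}) \leq L(X, z, \lambda^{*}) \tag{14}$$

holds for all $X$ in $\mathbb{R}^{np}$, $z$ in $\mathbb{R}^{np}$ and $\lambda$ in $\mathbb{R}^{np}$.

Assumption 2 allows $f_i$ to be non-differentiable [11]. By Assumptions 2-3 and based on the definition of $g(z)$ in (11), $L(X, z, \lambda^{*})$ is convex in $(X, z)$ and $(X^{*}, z^{*})$ is a solution to problem (12) [11, 12].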

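At iteration $k$, the corresponding augmented Lagrangian of optimization problem (12) is written as

$$\begin{aligned} L_{\rho}(X^{k}, z^{k}, \lambda^{k}) &= \sum_{i=1}^{n} f_i(x_i^{k}) + g(z^{k}) + (\lambda^{k})^{\top}(X^{k} - z^{k}) + \frac{\rho}{2}\|X^{k} - z^{k}\|^{2} \\ &= \sum_{i=1}^{n} \Big( f_i(x_i^{k}) + (\lambda_i^{k})^{\top}(x_i^{k} - z_i^{k}) + \frac{\rho}{2}\|x_i^{k} - z_i^{k}\|^{2} \Big) + g(z^{k}), \end{aligned} \tag{15}$$

where $z_i \in \mathbb{R}^{p}$ is the $i$-th element of vector $z$. By ignoring terms which are independent of the minimization variables (i.e., $x_i$, $z$), for each node $v_i$, the standard ADMM updates (3)-(5) change to the following format:

$$x_i^{k+1} = \arg\min_{x_i}\ f_i(x_i) + (\lambda_i^{k})^{\top} x_i + \frac{\rho}{2}\|x_i - z_i^{k}\|^{2}, \tag{16}$$

$$z^{k+1} = \arg\min_{z}\ g(z) + (\lambda^{k})^{\top}(X^{k+1} - z) + \frac{\rho}{2}\|X^{k+1} - z\|^{2} = \arg\min_{z}\ g(z) + \frac{\rho}{2}\Big\|X^{k+1} - z + \frac{\lambda^{k}}{\rho}\Big\|^{2}, \tag{17}$$

$$\lambda_i^{k+1} = \lambda_i^{k} + \rho\,(x_i^{k+1} - z_i^{k+1}), \tag{18}$$

where the last term in (17) comes from the identity $2a^{\top}b + \|b\|^{2} = \|a + b\|^{2} - \|a\|^{2}$ with $a = \lambda^{k}/\rho$ and $b = X^{k+1} - z$.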

Update (16) for $x_i^{k+1}$ can be solved by a classical method, e.g., the proximity operator [11, Section 4]. Update (18) for the dual variable $\lambda_i^{k+1}$ can be implemented trivially by node $v_i$. Note that both updates can be done independently and in parallel by node $v_i$.

Since $g(z)$ in (11) is the indicator function of the closed nonempty convex set $\mathcal{C}$, update (17) for $z^{k+1}$ becomes

$$z^{k+1} = \Pi_{\mathcal{C}}\big(X^{k+1} + \lambda^{k}/\rho\big), \tag{19}$$

where $\Pi_{\mathcal{C}}$ denotes the projection (in the Euclidean norm) onto $\mathcal{C}$. Intuitively, from (17) and the definition of $g(z)$ in (11), one can see that the elements of $z$ (i.e., $z_1, z_2, \ldots, z_n$) should go into $\mathcal{C}$ in finite time. If not, one will have $g(z) = \infty$ and update (17) will never be finished. Then, from the definition of $\mathcal{C}$ in (9), one can see that $z$ going into $\mathcal{C}$ means $\|z_i - z_j\| \leq \epsilon$ for all $v_i, v_j \in \mathcal{V}$.

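It is worth noting that if $\epsilon = 0$, it means $z_i = z_j$ for all $v_i, v_j \in \mathcal{V}$, which is in the mathematical format of consensus. In other words, each node can have $z_i^{k+1}$ reach the average $\frac{1}{n}\sum_{j=1}^{n}\big(x_j^{k+1} + \lambda_j^{k}/\rho\big)$ in a finite number of steps, i.e., finite-time average consensus. Similarly, requiring $\epsilon > 0$ means asking nodes to have $z_i^{k+1}$ within $\epsilon$ closeness to the average consensus, i.e., to have $z_i^{k+1}$ enter a circle with its center at this average and its radius as $\epsilon$. Here we call it finite-time “approximate” ratio consensus.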
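The consensus interpretation can be made concrete with a small sketch. It checks membership in the approximate-consensus set (all pairwise block distances at most the tolerance) and computes the Euclidean projection in the exact case of zero tolerance, where projecting onto the consensus subspace amounts to replacing every block by the block average. The function names and the sample data are assumptions for this example; the projection for a strictly positive tolerance is not attempted here.

```python
import math
from itertools import combinations

def in_consensus_set(blocks, eps):
    """True if every pair of blocks z_i, z_j satisfies ||z_i - z_j|| <= eps."""
    return all(math.dist(zi, zj) <= eps for zi, zj in combinations(blocks, 2))

def project_exact_consensus(blocks):
    """Euclidean projection onto the consensus set for eps = 0: every block
    is replaced by the average of all blocks."""
    n = len(blocks)
    avg = [sum(column) / n for column in zip(*blocks)]
    return [list(avg) for _ in range(n)]

# Hypothetical per-node vectors standing in for x_i + lambda_i / rho (p = 2, n = 3)
v = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]
z = project_exact_consensus(v)

print(z[0])                       # the common average block
print(in_consensus_set(v, 1.0))   # the raw vectors are farther apart than 1.0
print(in_consensus_set(z, 0.0))   # the projected blocks agree exactly
```

With a positive tolerance, any set of blocks that all lie within half the tolerance of the average is also a member of the set, which is the slack that an approximate consensus protocol exploits.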

IV Asynchronous operation and consensus algorithms

Before we proceed to the description of our main algorithm, we describe the form of asynchrony that the nodes experience and some consensus algorithms that are key ingredients for the operation of our proposed algorithms.

IV-A Asynchrony description

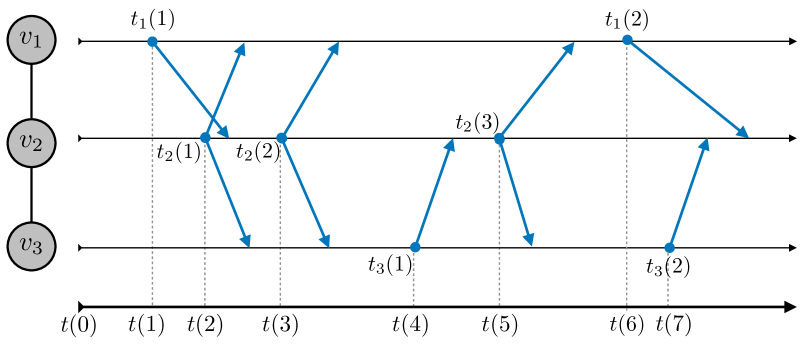

Let $t(0)$ be the time at which the iterations for the optimization start. We assume that there is a set of times $\mathcal{T} = \{t(1), t(2), t(3), \ldots\}$ at which one or more nodes transmit some value to their neighbors. In a synchronous setting, each node updates and sends its information to its neighbors at discrete times in $\mathcal{T}$ and no processing or communication delays are considered. In an asynchronous setting, a message that is received at time $t(k_1)$ and processed at time $t(k_2)$, $k_2 > k_1$, experiences a process delay of $t(k_2) - t(k_1)$ (or a time-index delay $k_2 - k_1$). In Fig. 1, we show through a simple example how the time steps evolve for each node in the network; with $t_j(k)$ we denote the time step at which iteration $k$ takes place for node $v_j$.

We index nodes’ information states and any other information at time $t(k)$ by time-index $k$. Hence, we use $y_j[k]$ to denote the information state of node $v_j$ at time $t(k)$. Note that $y_j[k]$ denotes (is equivalent to) $y_j(t(k))$.

Assumption 4

There exists an upper bound $\mathcal{B}$ on the number of time-index steps needed for a node to process the information received from other nodes.

Assumption 4 basically states that since the number of nodes is finite and they update their state regularly, there exists a finite number of steps before which all nodes have updated their values (and hence broadcast it to neighboring nodes). However, it is not possible for nodes to count the number of steps elapsed in the network. For this reason we make an additional assumption.

Assumption 5

There exists an upper bound $T$ on the actual time in seconds needed for a node to process the information received from other nodes.

In other words, the upper bound of $\mathcal{B}$ steps in Assumption 4 is translated to an upper bound of $T$ seconds, something that the nodes can count individually.

IV-A1 Asynchronous ratio consensus

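Here, we regard the asynchronous information exchange among nodes as delayed information. Thus, we adopt a protocol developed in [13] where each node $v_j$ updates its information state $y_j[k]$ by combining the delayed information received from its in-neighbors ($y_i[s]$, $s \leq k$, $v_i \in \mathcal{N}_j^{-}$) using constant positive weights $p_{ji}$. Integer $\tau_{ji}[k] \geq 0$ is used to represent the delay of a message sent from node $v_i$ to node $v_j$ at time instant $k$. We require that $0 \leq \tau_{ji}[k] \leq \bar{\tau}_{ji} \leq \bar{\tau}$ for all $k \geq 0$ for some finite $\bar{\tau} = \max\{\bar{\tau}_{ji}\}$, and $\bar{\tau} \leq \mathcal{B}$, where $\mathcal{B}$ is defined in Assumption 4. We make the reasonable assumption that $\tau_{jj}[k] = 0$, $\forall v_j \in \mathcal{V}$, at all time instances $k$ (i.e., the own value of a node is always available without delay). Each node updates its information state according to:

$$y_j[k+1] = p_{jj}\, y_j[k] + \sum_{v_i \in \mathcal{N}_j^{-}} \sum_{r=0}^{\bar{\tau}} p_{ji}\, y_i[k-r]\, I_{k-r,ji}[r], \tag{20}$$

for $k \geq 0$, where $y_j[0]$ is the initial state of node $v_j$; the weights $p_{ji}$, $\forall \varepsilon_{ji} \in \mathcal{E}$, form a matrix $P = [p_{ji}]$ that adheres to the graph structure and is primitive column stochastic; and

$$I_{k,ji}[\tau] = \begin{cases} 1, & \text{if } \tau_{ji}[k] = \tau, \\ 0, & \text{otherwise}. \end{cases} \tag{21}$$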

Lemma 1

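[13, Lemma 2] Consider a strongly connected digraph $\mathcal{G}(\mathcal{V}, \mathcal{E})$. Let $y_j[k]$ and $w_j[k]$ (for all $v_j \in \mathcal{V}$ and $k = 0, 1, 2, \ldots$) be the result of the iterations (20) and

$$w_j[k+1] = p_{jj}\, w_j[k] + \sum_{v_i \in \mathcal{N}_j^{-}} \sum_{r=0}^{\bar{\tau}} p_{ji}\, w_i[k-r]\, I_{k-r,ji}[r], \tag{22}$$

where $p_{lj} = \frac{1}{1 + \mathcal{D}_j^{+}}$ for $v_l \in \mathcal{N}_j^{+} \cup \{v_j\}$ (zeros otherwise) with initial conditions $y[0] = (y_1[0], \ldots, y_n[0])^{\top}$ and $w[0] = \mathbf{1}$; $I_{k,ji}[\tau]$ is an indicator function that captures the bounded delay $\tau_{ji}[k]$ on link $(v_j, v_i)$ at iteration $k$ (as defined in (21), $\tau_{ji}[k] \leq \bar{\tau}$). Then, the solution to the average consensus problem can be asymptotically obtained as

$$\lim_{k \to \infty} \frac{y_j[k]}{w_j[k]} = \frac{\sum_{v_l \in \mathcal{V}} y_l[0]}{n}, \quad \forall v_j \in \mathcal{V}.$$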
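The following minimal simulation sketches ratio consensus with bounded delays as described above. Each node splits its two states equally among itself and its out-neighbors, every transmitted share experiences a random integer delay of at most `max_delay` steps, and the ratio of the two states at each node approaches the average of the initial values. The function name, the example digraph, the uniformly random delay model and the name `w` for the auxiliary state are assumptions made for this example.

```python
import random

def delayed_ratio_consensus(out_nb, y0, max_delay, steps, seed=0):
    """Simulate ratio consensus with random bounded delays; return the final ratios."""
    rng = random.Random(seed)
    n = len(y0)
    y = list(y0)      # information states
    w = [1.0] * n     # auxiliary states, initialised to one
    inbox = {}        # arrival step -> {receiver: [y mass, w mass]}
    for k in range(steps):
        new_y = [0.0] * n
        new_w = [0.0] * n
        for j in range(n):
            share = 1.0 / (1 + len(out_nb[j]))   # weight 1 / (1 + out-degree)
            new_y[j] += share * y[j]             # own value is never delayed
            new_w[j] += share * w[j]
            for l in out_nb[j]:
                # a share sent at step k with delay r enters the state at step k + 1 + r
                arrival = k + 1 + rng.randint(0, max_delay)
                slot = inbox.setdefault(arrival, {}).setdefault(l, [0.0, 0.0])
                slot[0] += share * y[j]
                slot[1] += share * w[j]
        for l, (y_in, w_in) in inbox.pop(k + 1, {}).items():
            new_y[l] += y_in
            new_w[l] += w_in
        y, w = new_y, new_w
    return [yj / wj for yj, wj in zip(y, w)]

# Strongly connected digraph: 0 -> 1 -> 2 -> 3 -> 0 plus the extra edge 2 -> 0
out_nb = {0: [1], 1: [2], 2: [3, 0], 3: [0]}
y0 = [4.0, -2.0, 7.0, 3.0]
ratios = delayed_ratio_consensus(out_nb, y0, max_delay=3, steps=2000)
print(ratios)
```

The individual states do not converge to the average because part of the total mass is always in transit; only the ratios do, which is why the auxiliary iteration is needed in digraphs with delays.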

IV-A2 Asynchronous max-consensus

When the updates are asynchronous, for any node $v_i \in \mathcal{V}$, the update rule is as follows [14]:

$$x_i(t_i(k+1)) = \max_{v_j \in \mathcal{N}_i^{-} \cup \{v_i\}} \big\{ x_j(t_i(k) + \theta_{ij}(k)) \big\},$$

where $x_j(t_i(k) + \theta_{ij}(k))$, $v_j \in \mathcal{N}_i^{-}$, are the states of the in-neighbors available at the time of the update. Variable $\theta_{ij}(k)$, evaluated with respect to the update time $t_i(k)$ (defined in Sec. IV-A), is used here to express asynchronous state updates occurring at the neighbors of node $v_i$ between two consecutive updates of the state of node $v_i$. It has been shown in [14, Lemma 5.1] that this algorithm converges to the maximum value among all nodes in a finite number of steps, which is bounded in terms of $D$ and $\mathcal{B}$, defined in Assumptions 1 and 4, respectively.
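A simplified simulation of max-consensus with asynchronous updates is sketched below. It assumes a tick-based model in which every node updates at least once in any window of `max_gap` consecutive ticks and, when it updates, takes the maximum over its own value and the values its in-neighbors held at the end of the previous tick. The model, the function name and the example ring are assumptions for this example and are simpler than the setting analysed in [14].

```python
import random

def async_max_consensus(in_nb, x0, max_gap, ticks, seed=0):
    """Each node updates at random ticks that are at most max_gap apart."""
    rng = random.Random(seed)
    n = len(x0)
    x = list(x0)
    next_update = [rng.randint(1, max_gap) for _ in range(n)]
    for t in range(1, ticks + 1):
        snapshot = list(x)  # values visible to the nodes that update at tick t
        for i in range(n):
            if next_update[i] == t:
                x[i] = max([snapshot[i]] + [snapshot[j] for j in in_nb[i]])
                next_update[i] = t + rng.randint(1, max_gap)
    return x

# Directed ring on five nodes (node i listens to node i - 1); its diameter is 4
in_nb = {0: [4], 1: [0], 2: [1], 3: [2], 4: [3]}
x0 = [0.3, 5.0, -1.0, 2.5, 4.0]
diameter, max_gap = 4, 3
x_final = async_max_consensus(in_nb, x0, max_gap, ticks=diameter * max_gap)
print(x_final)
```

In this simplified model the maximum advances at least one hop per window of `max_gap` ticks, so `diameter * max_gap` ticks suffice for every node to hold it. The same routine yields min-consensus if `max` is replaced by `min`.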

V Main results

Our proposed algorithm works on two levels: i) at the optimization level, in which the distributed ADMM procedure is described for updating $X$, $z$ and $\lambda$ (Algorithm 1), and ii) at the distributed coordination level, for computing $z$ in an asynchronous manner (Algorithm 2). Algorithm 2 is executed within Algorithm 1.

V-A Algorithm 1

Unlike the asynchronous operation depicted in Fig. 1, in a synchronous algorithm all nodes need to agree on the update time $t(k)$, which usually requires synchronization among all nodes or the existence of a global clock. The question of whether the distributed ADMM update is synchronous or asynchronous arises not only when nodes exchange information for computing $z^{k+1}$ using (17), but also when they start the next optimization step (i.e., when optimization step $k$ ends and step $k+1$ begins). For now, we assume that the transition between optimization steps is somehow synchronized. We will discuss ways to achieve this later.

Our AsyAD-ADMM algorithm to solve optimization problem (12) is summarized in Algorithm 1. In Algorithm 1, at every optimization step, each node $v_i$ updates $x_i$ via (16), obtains $z_i$ for update (17) by running Algorithm 2, and updates $\lambda_i$ via (18).

For the optimization, we assume that all nodes are aware of the network diameter $D$ (or an upper bound on $D$), an upper bound on time-steps $\mathcal{B}$, the augmented Lagrangian function parameter $\rho$, and the ADMM maximum optimization step $k_{\max}$. (Note that the ADMM stopping criterion is the primal and dual feasibility condition in [11].)

V-B Algorithm 2

Under Assumption 1, Cady et al. in [15] proposed an algorithm which is based on the synchronous ratio-consensus protocol [16] and takes advantage of synchronous max- and min-consensus iterations to allow the nodes to determine the time step when their ratios (i.e., $z_i$ in ADMM) are within $\epsilon$ of each other. However, the algorithm in [16] cannot deal with asynchronous information exchange scenarios. To circumvent this problem, and inspired by Cady et al. [15], the authors in [17] adopted the robustified ratio consensus proposed in [13] to propose a termination mechanism for average consensus with delays. Similarly, [8] proposed the corresponding asynchronous termination algorithm in the context of a quadratic distributed optimization problem, in which the algorithm is executed only once. We adopt the asynchronous termination algorithm proposed in [8] to compute $z^{k+1}$, and we extend it to accommodate the repetition of this algorithm. The algorithm, under Assumption 4, is summarized in Algorithm 2. Specifically, Algorithm 2 makes use of the following ideas:

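- Each node $v_j$ runs the asynchronous ratio consensus in Sec. IV-A1; in our case, we use the initial condition $y_j[0] = x_j^{k+1} + \lambda_j^{k}/\rho$ for the information state and $1$ for the auxiliary state.

- Periodically, at intervals determined by $D$ and $\mathcal{B}$, each node $v_j$ checks whether $M_j - m_j < \epsilon$, where $M_j$ and $m_j$ denote its max-consensus and min-consensus states. If this is the case, then the ratios for all nodes are close to the asymptotic value and the node stops iterating. Otherwise, $M_j$ and $m_j$ are reinitialized to the node's current ratio.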
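The two ideas above can be sketched in a simplified synchronous, delay-free form, in the spirit of the max-/min-consensus stopping rule attributed to [15]. This is not the asynchronous Algorithm 2: it assumes a common clock, no delays and a check every `diameter` steps, and all names are assumptions for this example. After `diameter` max-/min-consensus steps every node holds the global maximum and minimum of the ratios that were stored at the last reinitialisation; since the ratios only contract over time, a gap below the tolerance certifies that all current ratios are within the tolerance of the average.

```python
def approx_ratio_consensus(out_nb, y0, diameter, eps, max_steps=100_000):
    """Ratio consensus with a max/min stopping check every `diameter` steps."""
    n = len(y0)
    in_nb = {j: [i for i in range(n) if j in out_nb[i]] for j in range(n)}
    y, w = list(y0), [1.0] * n
    M = [yj / wj for yj, wj in zip(y, w)]   # max-consensus states
    m = list(M)                             # min-consensus states
    for k in range(1, max_steps + 1):
        # one ratio-consensus step with weights 1 / (1 + out-degree)
        new_y, new_w = [0.0] * n, [0.0] * n
        for i in range(n):
            share = 1.0 / (1 + len(out_nb[i]))
            for l in [i] + list(out_nb[i]):
                new_y[l] += share * y[i]
                new_w[l] += share * w[i]
        y, w = new_y, new_w
        # one max-consensus and one min-consensus step on the stored ratios
        M = [max([M[j]] + [M[i] for i in in_nb[j]]) for j in range(n)]
        m = [min([m[j]] + [m[i] for i in in_nb[j]]) for j in range(n)]
        if k % diameter == 0:
            ratios = [yj / wj for yj, wj in zip(y, w)]
            if all(M[j] - m[j] < eps for j in range(n)):
                return ratios, k            # every node stops at the same step
            M, m = list(ratios), list(ratios)
    raise RuntimeError("tolerance not reached within max_steps")

# Strongly connected digraph with diameter 3
out_nb = {0: [1], 1: [2], 2: [3, 0], 3: [0]}
y0 = [4.0, -2.0, 7.0, 3.0]
eps = 1e-3
ratios, steps = approx_ratio_consensus(out_nb, y0, diameter=3, eps=eps)
print(steps, ratios)
```

A smaller tolerance gives ratios closer to the exact average at the price of more consensus iterations per ADMM step, which is the trade-off examined in the numerical example.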


As stated in Assumption 5, the upper bound on time-steps is guaranteed to be executed within seconds (actual time). So, nodes have a more loose synchronization of every seconds. The and are checked every seconds and if they have converged in one of the checks, the next optimization step is initiated for all seconds, after the termination of the algorithm. This approach requires some loose form of synchronization, but the time scale is much more coarse.

V-C Convergence analysis

The following Theorem 1 states that AsyAD-ADMM has an $\mathcal{O}(1/k)$ convergence rate.

Theorem 1 (Convergence)

Let be the iterates in AsyAD-ADMM algorithm for problem (12), where and . Let be respectively the ergodic average of . Considering a strongly connected communication graph, under Assumptions 1-5, the following relationship holds for any iteration as

| (23) | ||||

where is the update (17) tolerance whose value is independent of the researched problem.

Proof:

See Appendix A. ∎

Remark 1

Note that the convergence proof shows the convergence rate for the optimization steps. Because the distributed algorithm is approximate, an additional term is introduced; this term is a function of the error tolerance $\epsilon$ and the size of the network $n$, and it influences the solution precision.

Remark 2

The convergence proof here is fundamentally different from the ones in [12] and [6], as the investigated problems are different. Specifically, the D-ADMM proposed in [12] can only be applied to nodes communicating over undirected graphs, since minimizing the objective function (7) there relies on a constraint whose matrix is determined by the communication graph structure, which must be undirected. The authors in [6] proposed a D-ADMM for digraphs with the same type of constraint. However, it can only be applied to the synchronous case.

VI Numerical Example

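The distributed least-squares problem is considered as

$$\min_{x \in \mathbb{R}^{p}}\ \sum_{i=1}^{n} \frac{1}{2}\|A_i x - b_i\|^{2}, \tag{24}$$

where $A_i \in \mathbb{R}^{q \times p}$ is only known to node $v_i$, $b_i \in \mathbb{R}^{q}$ is the measured data and $x \in \mathbb{R}^{p}$ is the common decision variable that needs to be optimized. For the automatic generation of a large number of different matrices $A_i$, we choose $q = p$ so that $A_i$ is square. All elements of $A_i$ and $b_i$ are independent and identically distributed (i.i.d.) samples of the standard normal distribution $\mathcal{N}(0, 1)$.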
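To make the structure of the updates concrete for least squares, the sketch below runs consensus ADMM on a scalar instance with per-node costs of the form $\tfrac{1}{2}(a_i x - b_i)^2$. It is an idealised, centralised illustration under stated assumptions: the decision variable is scalar, the $z$-update uses the exact average of $x_i + \lambda_i/\rho$ (the zero-tolerance case) instead of Algorithm 2, and the data values are made up for this example.

```python
def consensus_admm_least_squares(a, b, rho=1.0, iterations=1000):
    """Consensus ADMM for min sum_i 0.5*(a_i*x - b_i)^2 with exact averaging."""
    n = len(a)
    x = [0.0] * n
    lam = [0.0] * n
    z = 0.0
    for _ in range(iterations):
        # x-update (16): argmin 0.5*(a_i*x - b_i)^2 + lam_i*x + rho/2*(x - z)^2
        x = [(a[i] * b[i] - lam[i] + rho * z) / (a[i] ** 2 + rho) for i in range(n)]
        # z-update (17) in the exact case: average of x_i + lam_i / rho
        z = sum(x[i] + lam[i] / rho for i in range(n)) / n
        # dual update (18)
        lam = [lam[i] + rho * (x[i] - z) for i in range(n)]
    return z, x

# Hypothetical data for four nodes
a = [1.0, 2.0, 0.5, 1.5]
b = [2.0, 1.0, 3.0, -1.0]
z, x = consensus_admm_least_squares(a, b)

# Closed-form minimiser of the global scalar problem, for comparison
x_star = sum(ai * bi for ai, bi in zip(a, b)) / sum(ai ** 2 for ai in a)
print(z, x_star)
```

Replacing the exact average in the $z$-update by an approximate consensus value introduces an error of at most the chosen tolerance per step, which is the effect the experiments below quantify.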

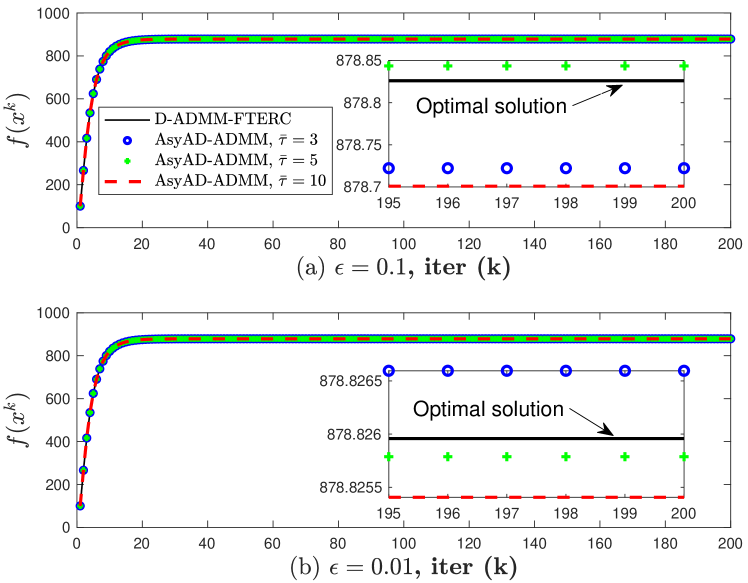

We choose and to have 600 nodes having a strongly connected digraph. We implement the D-ADMM-FTERC algorithm in [7] as a benchmark to evaluate factors (i.e., ) influencing AsyAD-ADMM performance as D-ADMM-FTERC is developed for calculating the optimal solution to distributed optimization problems for digraphs, i.e., all figures related to D-ADMM-FTERC are optimal. However, D-ADMM-FTERC is synchronous and this is the reason we develop AsyAD-ADMM in this paper.

Fig. 2 demonstrates three points: (i) AsyAD-ADMM solutions are very close to the optimal one from D-ADMM-FTERC. (ii) From Fig. 2 (a) to (b), the smaller the value of $\epsilon$ is, the closer the AsyAD-ADMM solutions are to the D-ADMM-FTERC one. This is reasonable, as a smaller value of $\epsilon$ means a better and more accurate “approximate” ratio consensus for update (17) using Algorithm 2.

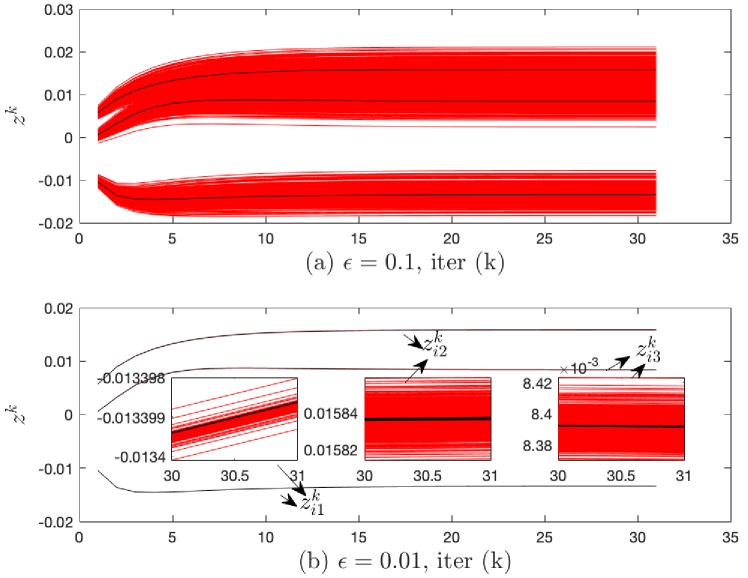

This can be also validated in Fig. 3 where red and black lines are the updates using AsyAD-ADMM and D-ADMM-FTERC, respectively. (iii) The values of (defined in Sec. IV-A1) do not influence the precision of AsyAD-ADMM, which also makes sense as decides the degree of asynchrony, not the asynchronous ADMM precision. Nodes use this information to decide when to update as shown in step 6 of Algorithm 2. The values of do influence the iteration steps of Algorithm 2 as described in Table I from which it shows the larger the value of is, the more iteration steps Algorithm 2 needs in each AsyAD-ADMM optimization step. For , the steps are the same as because we set the iteration step upper bound for Algorithm 2 as 1000; it means iteration steps are no less than 1000 when and .

Note that in Fig. 3, we have AsyAD-ADMM with the stopping condition which is for the convenience of figure presentation. The reason that Fig. 3 (a) and (b) have a big difference is , which means a smaller leads to a more accurate update (17) using Algorithm 2. And the reason is that a smaller leads to a larger Algorithm 2 iteration step as one can check it is and from Table I for and in this example. Also from Fig. 3, one can see the ADMM iteration numbers are the same for different values of for AsyAD-ADMM with the stopping condition. Furthermore, Table II describes the running time comparison on an Intel Core i5 processor at 2.6 GHz with Matlab R2020b for different values of and with . One can see for , from , even though Algorithm 2 iteration steps are the same as 1000, the running time is larger and larger.

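TABLE II: Running time of AsyAD-ADMM for increasing values of $\bar{\tau}$ (columns, left to right) and two values of $\epsilon$ (rows).

| 7.9677 s | 12.0282 s | 33.6401 s |
| 190.1420 s | 246.9963 s | 470.6576 s |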

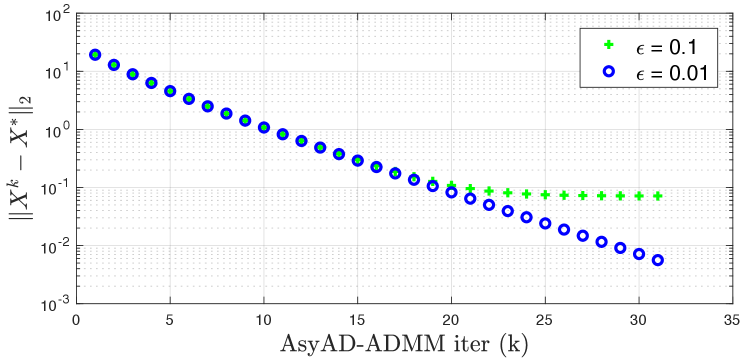

This is because the running time of Algorithm 2 is the time gap between two consecutive iterations multiplied by the number of iteration steps, and this time gap, which is set in step 6 of Algorithm 2, grows with $\bar{\tau}$. Fig. 4 demonstrates two points. (i) It validates Theorem 1, i.e., that AsyAD-ADMM has an $\mathcal{O}(1/k)$ convergence rate. (ii) It is in accordance with Fig. 2 and Fig. 3 in that a smaller value of $\epsilon$ yields a better solution precision.

What is more, from Fig. 2 and Table II, considering the AsyAD-ADMM solution precision and running time, it is better to have the value of neither too large nor too small; for this example, is a good value range.

VII Conclusions and Future Directions

An asynchronous approximate distributed alternating direction method of multipliers algorithm is proposed to solve distributed optimization problems with asynchronous information exchange among nodes in digraphs, under the assumptions that the time-index steps of asynchronous nodes are bounded and that the digraph diameter is known to all nodes. Because a finite-time asynchronous approximate ratio consensus algorithm is used for one of the ADMM primal variable updates, the solution is not exactly optimal, but it is acceptably close to the optimum. How to choose the algorithm parameters to achieve good performance is also discussed.

Future work will focus on designing a new asynchronous ADMM version without the communication graph diameter information to compute the optimal solution for distributed optimization problems.

Appendix A Proof of Theorem 1

The analysis is inspired by [12] and [6]. From the second inequality of the saddle point of Lagrangian function (14), the first inequality in (23) can be proved directly.

We now prove the second inequality in (23). For each node $v_i$, since $x_i^{k+1}$ minimizes the objective in (16), by the optimality condition, we have

| (25) |

where is the sub-gradient of at . By integrating from (18) into the above inequality, we have

| (26) |

The above inequalities can be written in compact form as

| (27) |

where . Denote

| (28) |

and . Based on “approximate” consensus Algorithm 2, we denote the real update (17) for the step as with , i.e., . Since minimizes in (17), similarly, we have

| (29) | ||||

for all , where is the sub-gradient of at . As both and are convex, by utilizing the sub-gradient inequality, from (27) and (29), we get

| (30) |

From (9), (11) and (28), we have as . Due to feasibility of the optimal solution , we obtain . By setting , (30) becomes

| (31) | ||||

where . Note that in Appendix A of [11], we know that both and are bounded; and goes to zero as . Therefore, for , there exist constant numbers and such that

Denote and . Adding to both sides of (31) yields

| (32) |

where the last equality is calculated from (18). Recall the well-known identity

| (33) | ||||

Then, by using equality (33), (32) changes to

| (34) | ||||

where the last inequality comes from using (18) and dropping the negative term . Now, by using , we change (34) to another format as

| (35) |

which holds true for all . Denote the constant . By summing (35) over and after telescoping calculation, we have

| (36) | ||||

Due to the convexity of both and , we get and . Thus, by the definition of and dropping the negative terms,

| (37) |

Based on , (37) combined with the definition of Lagrangian function [cf. (13)] prove (23).

References

- [1] R. Zhang and J. Kwok, “Asynchronous distributed ADMM for consensus optimization,” in International Conference on Machine Learning. PMLR, 2014, pp. 1701–1709.

- [2] T.-H. Chang, M. Hong, W.-C. Liao, and X. Wang, “Asynchronous distributed ADMM for large-scale optimization-Part I: Algorithm and convergence analysis,” IEEE Transactions on Signal Processing, vol. 64, no. 12, pp. 3118–3130, 2016.

- [3] S. E. Li, Z. Wang, Y. Zheng, Q. Sun, J. Gao, F. Ma, and K. Li, “Synchronous and asynchronous parallel computation for large-scale optimal control of connected vehicles,” Transportation research part C: emerging technologies, vol. 121, p. 102842, 2020.

- [4] E. Wei and A. Ozdaglar, “On the O(1/k) convergence of asynchronous distributed alternating direction method of multipliers,” in 2013 IEEE Global Conference on Signal and Information Processing, 2013, pp. 551–554.

- [5] N. Bastianello, R. Carli, L. Schenato, and M. Todescato, “Asynchronous distributed optimization over lossy networks via relaxed ADMM: Stability and linear convergence,” IEEE Transactions on Automatic Control, pp. 1–1, 2020.

- [6] V. Khatana and M. V. Salapaka, “D-DistADMM: A distributed ADMM for distributed optimization in directed graph topologies,” in Proc. 59th IEEE Conf. Dec. Control, Dec. 2020, pp. 2992–2997.

- [7] W. Jiang and T. Charalambous, “Distributed alternating direction method of multipliers using finite-time exact ratio consensus in digraphs,” in Proc. 19th European Control Conf., Jun. 2021, accepted.

- [8] A. Grammenos, T. Charalambous, and E. Kalyvianaki, “CPU scheduling in data centers using asynchronous finite-time distributed coordination mechanisms,” arXiv preprint arXiv:2101.06139, 2021.

- [9] I. Shames, T. Charalambous, C. Hadjicostis, and M. Johansson, “Distributed network size estimation and average degree estimation and control in networks isomorphic to directed graphs,” in 50th Ann. Allerton Conf. Commun., Control, and Computing, Oct. 2012, pp. 1885–1892.

- [10] T. Charalambous, M. G. Rabbat, M. Johansson, and C. N. Hadjicostis, “Distributed finite-time computation of digraph parameters: Left-eigenvector, out-degree and spectrum,” IEEE Transactions on Control of Network Systems, vol. 3, no. 2, pp. 137–148, 2016.

- [11] S. Boyd, N. Parikh, and E. Chu, Distributed optimization and statistical learning via the alternating direction method of multipliers. Now Publishers Inc, 2011.

- [12] E. Wei and A. Ozdaglar, “Distributed alternating direction method of multipliers,” in Proc. 51st IEEE Conf. Dec. Control, 2012, pp. 5445–5450.

- [13] C. N. Hadjicostis and T. Charalambous, “Average consensus in the presence of delays in directed graph topologies,” IEEE Transactions on Automatic Control, vol. 59, no. 3, pp. 763–768, March 2014.

- [14] S. Giannini, D. Di Paola, A. Petitti, and A. Rizzo, “On the convergence of the max-consensus protocol with asynchronous updates,” in IEEE Conference on Decision and Control (CDC), 2013, pp. 2605–2610.

- [15] S. T. Cady, A. D. Domínguez-García, and C. N. Hadjicostis, “Finite-time approximate consensus and its application to distributed frequency regulation in islanded AC microgrids,” in Proc. of Hawaii International Conference on System Sciences, 2015, pp. 2664–2670.

- [16] A. D. Domínguez-García and C. N. Hadjicostis, “Coordination and control of distributed energy resources for provision of ancillary services,” in IEEE Int. Conf. Smart Grid Commun., Oct. 2010, pp. 537–542.

- [17] M. Prakash, S. Talukdar, S. Attree, V. Yadav, and M. V. Salapaka, “Distributed stopping criterion for consensus in the presence of delays,” IEEE Transactions on Control of Network Systems, vol. 7, no. 1, pp. 85–95, 2020.