An Empirical Evaluation of Posterior Sampling for Constrained Reinforcement Learning

Abstract

We study a posterior sampling approach to efficient exploration in constrained reinforcement learning. As an alternative to existing algorithms, we propose two simple algorithms that are statistically more efficient, simpler to implement, and computationally cheaper. The first algorithm is based on a linear formulation of CMDP, and the second leverages the saddle-point formulation of CMDP. Our empirical results demonstrate that, despite its simplicity, posterior sampling achieves state-of-the-art performance and, in some cases, significantly outperforms optimistic algorithms.

1 Introduction

Reinforcement learning (RL) is a sequential decision-making paradigm that aims to improve an agent’s behavior over time through interaction with the environment. In standard reinforcement learning, the agent learns by trial and error from a scalar signal it receives from the environment, called the reward, and aims to maximize the average reward. In many settings, however, this is insufficient, because the desired properties of the agent’s behavior are better described using constraints. For example, a robot should not only fulfill its task but also control its wear and tear by limiting the torque exerted on its motors (Tessler et al., 2019); recommender platforms should not only focus on revenue growth but also optimize users’ long-term engagement (Afsar et al., 2021). In this paper, we study constrained reinforcement learning in the infinite-horizon average reward setting, which encompasses many real-world problems.

A standard way of formulating the constrained RL problem is to let the agent interact with a constrained Markov Decision Process (CMDP) (Altman, 1999), in which the agent must satisfy constraints on the expectations of auxiliary costs. Several approaches to solving CMDPs have been proposed in the literature. They are mainly based either on model-free RL algorithms (Chow et al., 2017; Achiam et al., 2017; Tessler et al., 2019; Bohez et al., 2019) or on model-based RL algorithms (Efroni et al., 2020; Brantley et al., 2020; Singh et al., 2020). While model-free methods may eventually converge to the optimal policy, they are notoriously sample inefficient and have no theoretical guarantees. These methods have demonstrated prominent successes in artificial environments but extend poorly to real-life scenarios. Model-based algorithms, in turn, focus on sample-efficient exploration. From a methodological perspective, most of these methods leverage the optimism in the face of uncertainty (OFU) principle to encourage adaptive exploration.

We study an alternative approach to efficient exploration in constrained reinforcement learning: posterior sampling. Our work is motivated by several advantages of posterior sampling over optimistic algorithms described in Osband et al. (2013). First, since posterior sampling only requires solving for an optimal policy of a single sampled CMDP, it is computationally efficient relative to many optimistic methods, which require simultaneous optimization across a family of plausible environments (Efroni et al., 2020; Singh et al., 2020). Second, optimistic algorithms require explicit construction of confidence bounds based on observed data, which is a complicated statistical problem even for simple models. By contrast, in posterior sampling, uncertainty about each policy is quantified in a statistically efficient way through the posterior distribution, which is simpler to implement. Finally, the presence of an explicit prior allows an agent to incorporate known environment structure naturally. This is crucial for most practical applications, as learning without prior knowledge requires exhaustive experimentation in each possible state.

As such, we propose two simple algorithms based on posterior sampling for constrained reinforcement learning in the infinite-horizon setting:

- Posterior Sampling of Transitions maintains posteriors over the transition function while estimating rewards and costs by their empirical means. At the beginning of each epoch, it samples an extended CMDP from the posterior distribution and executes its optimal policy. To solve the planning problem, we introduce a linear program (LP) in the space of occupancy measures.

- Primal-Dual Posterior Sampling exploits a primal-dual algorithm to solve the saddle-point problem associated with a CMDP. It performs incremental updates on both the primal and the dual variables. This reduces the computational cost, since the policy is computed with a simple dynamic programming approach instead of by solving a constrained optimization problem.

We provide a comprehensive comparison of OFU-based and posterior sampling-based algorithms across three environments. In all cases, despite its simplicity, posterior sampling achieves state-of-the-art results and, in some cases, significantly outperforms the alternatives. In addition, as we show in Section 5.2, posterior sampling is naturally suited to more complex settings where designing an efficient optimistic algorithm might not be possible.

2 Related work

Online learning under constraints has recently received extensive attention. Over the past several years, learning in CMDPs has been studied in different settings: episodic, infinite-horizon discounted reward, and infinite-horizon average reward. Below we give a short overview of the works most relevant to ours; they are summarized in Table 1.

OFU-based algorithms.

Several OFU-based algorithms have been proposed for learning policies in CMDPs. All algorithms in this group extend the idea of the UCRL2 algorithm (Jaksch et al., 2010), a seminal algorithm based on the optimism principle for classical reinforcement learning, but each of them implements optimistic exploration in a different form.

Efroni et al. (2020) and Brantley et al. (2020) consider sample-efficient exploration in the finite-horizon setting via double optimism: overestimation of rewards and underestimation of costs. This approach makes each state-action pair more appealing with respect to both rewards and costs simultaneously. Conversely, Zheng and Ratliff (2020) and Liu et al. (2021) consider conservative (safe) exploration in the infinite- and finite-horizon settings, respectively, using optimism (overestimation) over rewards and pessimism (overestimation) over costs. Unlike the previous algorithms, where the reward signal is perturbed to one side or the other, Singh et al. (2020) consider optimism over transition probabilities in the infinite-horizon setting. Specifically, the authors construct a confidence set of transition probabilities and choose "optimistic" transitions from it to force exploration at each step. In addition, Efroni et al. (2020), Qiu et al. (2020), and Liu et al. (2021) leverage the saddle-point formulation of CMDP in the episodic setting. They use standard dynamic programming for the policy update of the primal variable with the scalarized reward function. Recently, Chen et al. (2022) considered optimistic Q-learning in infinite-horizon CMDPs, providing a tighter bound on the span of the bias function and strictly improving the result of Singh et al. (2020).

Posterior sampling algorithms.

Somewhat surprisingly, posterior sampling has not yet gained similar traction in constrained reinforcement learning. Although it has been extensively studied in the unconstrained setting (Osband et al., 2013; Abbasi-Yadkori and Szepesvári, 2015; Agrawal and Jia, 2017; Ouyang et al., 2017), we are aware of only one work on posterior sampling in CMDPs (Agarwal et al., 2022). That article provides a theoretical analysis of posterior sampling for CMDPs with known reward and cost functions in the infinite-horizon average reward setting. Specifically, their algorithm samples transitions from a posterior distribution at each episode and solves an LP to find the optimal policy. Our Posterior Sampling of Transitions algorithm extends the algorithm of Agarwal et al. (2022) to unknown reward and cost functions.

Table 1: Summary of the most relevant works.

| Setting | Algorithm | Regret | Constraint violation | Exploration |
|---|---|---|---|---|
| Finite horizon | Efroni et al. (2020) | | | |
| | Brantley et al. (2020) | | | |
| | Qiu et al. (2020) | | | |
| | Liu et al. (2021) | | | |
| Infinite horizon | Singh et al. (2020) | | | |
| | Zheng and Ratliff (2020) | | 0 | |
| | Chen et al. (2022)¹ | | | Q-func. |
| | Agarwal et al. (2022) | | | |

¹ This algorithm uses unconventional terms, which complicates comparison based on the other parameters.

3 Problem formulation

CMDP.

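A constrained MDP model is defined as a tuple $\mathcal{M} = (\mathcal{S}, \mathcal{A}, P, \mathbf{r}, \boldsymbol{\tau}, \rho)$, where $\mathcal{S}$ is the state space, $\mathcal{A}$ is the action space, $P: \mathcal{S} \times \mathcal{A} \to \Delta_{\mathcal{S}}$ is the unknown transition function, where $\Delta_{\mathcal{S}}$ is the simplex over $\mathcal{S}$, $\mathbf{r} = (r_0, r_1, \dots, r_d): \mathcal{S} \times \mathcal{A} \to \mathbb{R}^{d+1}$ is the unknown reward vector function of interest, $\boldsymbol{\tau} = (\tau_1, \dots, \tau_d) \in \mathbb{R}^d$ is a threshold vector, and $\rho \in \Delta_{\mathcal{S}}$ is the known initial distribution of the state. In general, a CMDP is an MDP with multiple reward functions ($r_0, r_1, \dots, r_d$), one of which, $r_0$, is used to set the optimization objective, while the others, $r_i$ ($i = 1, \dots, d$), are used to restrict what policies can do.

For any policy $\pi$ and initial distribution $\rho$, the expected infinite-horizon average reward is defined as

$$J^{\pi}_{r_i}(\rho) = \lim_{T \to \infty} \frac{1}{T} \, \mathbb{E}^{\pi}_{\rho}\Big[\sum_{t=1}^{T} r_i(s_t, a_t)\Big], \quad i = 0, 1, \dots, d, \tag{1}$$

where $\mathbb{E}^{\pi}_{\rho}$ is the expectation under the probability measure $\mathbb{P}^{\pi}_{\rho}$ over the set of infinitely long state-action trajectories; $\mathbb{P}^{\pi}_{\rho}$ is induced by the policy $\pi$ (which determines the actions to take given the history) and the initial state $s_1 \sim \rho$. Given a fixed initial state distribution $\rho$ and reals $\tau_1, \dots, \tau_d$, the CMDP optimization problem is to find a policy $\pi$ that maximizes $J^{\pi}_{r_0}(\rho)$ subject to the constraints $J^{\pi}_{r_i}(\rho) \ge \tau_i$:

$$\max_{\pi} \; J^{\pi}_{r_0}(\rho) \quad \text{s.t.} \quad J^{\pi}_{r_i}(\rho) \ge \tau_i, \quad i = 1, \dots, d. \tag{2}$$

It is sometimes convenient to define a CMDP through a main scalar reward function $r$ and cost functions $c_i$ ($i = 1, \dots, d$). To do so, one can recast the definition of the CMDP by multiplying the original reward components $r_i$ ($i = 1, \dots, d$) and thresholds $\tau_i$ by $-1$ and, with slight abuse of notation, denoting $r_0$ as $r$ and $-r_i$ ($-\tau_i$) as $c_i$ ($\tau_i$). Then, in terms of costs, the CMDP is $\mathcal{M} = (\mathcal{S}, \mathcal{A}, P, r, \mathbf{c}, \boldsymbol{\tau}, \rho)$ with $\mathbf{c} = (c_1, \dots, c_d)$, and optimization problem (2) becomes

$$\max_{\pi} \; J^{\pi}_{r}(\rho) \quad \text{s.t.} \quad J^{\pi}_{c_i}(\rho) \le \tau_i, \quad i = 1, \dots, d. \tag{3}$$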

In contrast to episodic RL problems, in which the state is reset at the beginning of each episode, infinite-horizon RL problems are considerably more challenging, as the interaction between the agent and the environment never ends or resets. Consequently, in order to control the regret vector (defined below), we assume that the CMDP is unichain, i.e., for each stationary policy $\pi$, the Markov chain induced by $\pi$ contains a single recurrent class and possibly some transient states. Here, a stationary policy $\pi: \mathcal{S} \to \Delta_{\mathcal{A}}$ maps each state to a probability distribution over the action space $\mathcal{A}$ and does not depend on the time $t$.

Linear Programming approach for solving CMDP.

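When the CMDP is known and unichain, an optimal policy for (3) can be obtained by solving the following linear program (LP) (Altman, 1999):

$$\max_{x \ge 0} \; \sum_{s,a} x(s,a) \, r(s,a) \tag{4}$$

$$\text{s.t.} \quad \sum_{s,a} x(s,a) \, c_i(s,a) \le \tau_i, \quad i = 1, \dots, d, \tag{5}$$

$$\sum_{a} x(s',a) = \sum_{s,a} P(s' \mid s,a) \, x(s,a), \quad \forall s' \in \mathcal{S}, \tag{6}$$

$$\sum_{s,a} x(s,a) = 1, \tag{7}$$

where the decision variable $x(s,a)$ is the occupancy measure (the long-run fraction of visits to $(s,a)$). Given an optimal solution $x^{*}$, an optimal stationary policy is $\pi^{*}(a \mid s) = x^{*}(s,a) / \sum_{a'} x^{*}(s,a')$ for every state with $\sum_{a'} x^{*}(s,a') > 0$ (and arbitrary elsewhere).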

Regret vector.

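To measure the performance of a learning algorithm, we define its reward and cost regret. The cumulative reward regret and the cumulative cost regret for the $i$-th cost up to time $T$ are defined as

$$R_0(T) = \sum_{t=1}^{T} \big(J^{*} - r(s_t,a_t)\big), \qquad R_i(T) = \sum_{t=1}^{T} \big(c_i(s_t,a_t) - \tau_i\big), \quad i = 1, \dots, d, \tag{8}$$

where $J^{*}$ is the optimal value of problem (3); together they form the regret vector $(R_0(T), R_1(T), \dots, R_d(T))$.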
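In simulation studies, where the optimal value $J^*$ of (3) can be computed offline, the regret vector (8) can be tracked along a trajectory. The following is a minimal NumPy sketch under that assumption; the function name `regret_vector` and the array layout are illustrative choices, not part of the original experiments.

```python
import numpy as np

def regret_vector(rewards, costs, j_star, tau):
    """Cumulative reward and cost regret (8) after every round.

    rewards: shape (T,), r(s_t, a_t) collected at rounds t = 1..T
    costs:   shape (T, d), c_i(s_t, a_t) incurred at rounds t = 1..T
    j_star:  optimal value J* of problem (3); assumed known (e.g., in simulation)
    tau:     shape (d,), cost thresholds
    Returns R_0 with shape (T,) and (R_1, ..., R_d) with shape (T, d).
    """
    rewards = np.asarray(rewards, dtype=float)
    costs = np.asarray(costs, dtype=float)
    tau = np.asarray(tau, dtype=float)
    reward_regret = np.cumsum(j_star - rewards)     # sum_t (J* - r(s_t, a_t))
    cost_regret = np.cumsum(costs - tau, axis=0)    # sum_t (c_i(s_t, a_t) - tau_i)
    return reward_regret, cost_regret
```

Negative entries of the reward regret are possible, since an algorithm that violates the constraints can collect more reward than $J^*$; this is why both components are reported together.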

4 Posterior sampling algorithms

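In this section, we introduce two simple algorithms based on posterior sampling. Both algorithms use the doubling epoch framework of Jaksch et al. (2010). The rounds are broken into consecutive epochs as follows: the $k$-th epoch begins at the round $t_k$ immediately after the end of the $(k-1)$-th epoch and ends at the first round $t$ such that $\nu_k(s,a) \ge \max\{1, N_{t_k}(s,a)\}$ for some state-action pair $(s,a)$, where $\nu_k(s,a)$ is the number of visits to $(s,a)$ within epoch $k$; i.e., within epoch $k$ the algorithm has visited some state-action pair at least as many times as it had visited this pair before epoch $k$ started. The algorithm computes a new policy $\pi_k$ at the beginning of every epoch $k$ and uses that policy in all rounds of that epoch. The policy to be used in epoch $k$ is computed as the optimal policy of an extended CMDP $\tilde{\mathcal{M}}_k = (\mathcal{S}, \mathcal{A}, \tilde{P}_k, \hat{r}_k, \hat{\mathbf{c}}_k, \boldsymbol{\tau}, \rho)$ defined by the sampled transition probability vectors $\tilde{P}_k(\cdot \mid s,a)$ ($(s,a) \in \mathcal{S} \times \mathcal{A}$) and the empirical reward and cost functions

$$\hat{r}_k(s,a) = \frac{1}{\max\{1, N_{t_k}(s,a)\}} \sum_{t=1}^{t_k-1} r_t \, \mathbb{1}\{(s_t,a_t) = (s,a)\}, \tag{9}$$

$$\hat{c}_{i,k}(s,a) = \frac{1}{\max\{1, N_{t_k}(s,a)\}} \sum_{t=1}^{t_k-1} c_{i,t} \, \mathbb{1}\{(s_t,a_t) = (s,a)\}, \quad i = 1, \dots, d, \tag{10}$$

where $r_t$ and $c_{i,t}$ are the reward and the $i$-th cost observed at round $t$, and $N_t(s,a)$ and $N_t(s,a,s')$ denote the number of visits to $(s,a)$ and $(s,a,s')$ before round $t$, respectively.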
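The epoch rule and the sample means (9)-(10) need only a few count tables. Below is a minimal NumPy sketch for a tabular CMDP; the class `EpochStats` and its method names are hypothetical, and the estimates are meant to be read at the start of an epoch, when the running counts equal $N_{t_k}$.

```python
import numpy as np

class EpochStats:
    """Counts, sample means (9)-(10) and the doubling-epoch stopping rule.

    Hypothetical helper for a tabular CMDP with S states, A actions, d costs.
    """

    def __init__(self, S, A, d):
        self.N = np.zeros((S, A))          # N_t(s,a): visits so far
        self.N_sas = np.zeros((S, A, S))   # N_t(s,a,s'): observed transitions
        self.r_sum = np.zeros((S, A))      # sum of observed rewards per (s,a)
        self.c_sum = np.zeros((S, A, d))   # sums of observed costs per (s,a)
        self.N_start = np.zeros((S, A))    # N_{t_k}(s,a): counts at epoch start
        self.nu = np.zeros((S, A))         # nu_k(s,a): visits within epoch k

    def start_epoch(self):
        self.N_start = self.N.copy()
        self.nu[:] = 0

    def update(self, s, a, reward, costs, s_next):
        self.N[s, a] += 1
        self.N_sas[s, a, s_next] += 1
        self.r_sum[s, a] += reward
        self.c_sum[s, a] += costs
        self.nu[s, a] += 1

    def epoch_over(self):
        # Epoch k ends once nu_k(s,a) >= max(1, N_{t_k}(s,a)) for some (s,a).
        return bool(np.any(self.nu >= np.maximum(1, self.N_start)))

    def empirical_means(self):
        # (9)-(10); call right after start_epoch() so that N equals N_{t_k}.
        denom = np.maximum(1, self.N)
        return self.r_sum / denom, self.c_sum / denom[:, :, None]
```

A driver loop would call `start_epoch()`, plan with the current estimates, and then call `update(...)` after every round until `epoch_over()` returns `True`.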

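We present posterior sampling algorithms that use Dirichlet priors. The Dirichlet distribution is a convenient choice for maintaining posteriors over the transition probability vectors $P(\cdot \mid s,a)$, as it satisfies the following useful property: given a prior $\mathrm{Dir}(\alpha_1, \dots, \alpha_S)$ on $P(\cdot \mid s,a)$, where $\mathcal{S} = \{s_1, \dots, s_S\}$, after observing a transition from state $s$ to $s_i$ (with underlying probability $P(s_i \mid s,a)$), the posterior distribution is $\mathrm{Dir}(\alpha_1, \dots, \alpha_i + 1, \dots, \alpha_S)$. By this property, for any $(s,a)$, starting from the prior $\mathrm{Dir}(\mathbf{1})$ for $P(\cdot \mid s,a)$, where $\mathbf{1}$ is the vector of ones, the posterior at time $t$ is $\mathrm{Dir}\big(\{1 + N_t(s,a,s_i)\}_{i=1,\dots,S}\big)$.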
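Because of this conjugacy, the posterior step reduces to counting transitions and drawing one Dirichlet vector per state-action pair. A minimal NumPy sketch, assuming the counts are stored in an array of shape (S, A, S) (the function name `sample_transitions` is hypothetical), draws all vectors at once via the standard construction of a Dirichlet sample as normalized independent Gamma variables:

```python
import numpy as np

def sample_transitions(N_sas, rng):
    """Draw P_tilde(.|s,a) ~ Dir(1 + N_t(s,a,.)) independently for every (s,a).

    N_sas: array of shape (S, A, S) with transition counts N_t(s,a,s').
    rng:   a numpy.random.Generator.
    Returns an array of the same shape whose last axis sums to one.
    """
    alpha = 1.0 + np.asarray(N_sas, dtype=float)   # uniform prior Dir(1) plus counts
    # Normalising independent Gamma(alpha_j, 1) draws yields a Dir(alpha) sample.
    g = rng.gamma(alpha)
    return g / g.sum(axis=-1, keepdims=True)
```

The same result could be obtained with one `rng.dirichlet` call per pair; the Gamma form simply vectorizes over all $(s,a)$.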

Posterior Sampling of Transitions.

The first algorithm we propose proceeds similarly to many optimistic algorithms: at the beginning of every epoch $k$, the Posterior Sampling of Transitions algorithm solves LP (4)-(7), substituting the unknown parameters with the sampled transition vectors $\tilde{P}_k$ and the empirical estimates defined in (9), (10). The algorithm is summarized as Algorithm 1.
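The planning step of Algorithm 1 amounts to one call to an LP solver. Below is a sketch, not the authors' implementation, that builds LP (4)-(7) for a tabular CMDP with SciPy's `linprog` (HiGHS backend) and recovers the policy from the occupancy measure; the function name `solve_cmdp_lp` and the array shapes are assumptions. In Algorithm 1 it would be called with $\tilde{P}_k$, $\hat{r}_k$, and $\hat{\mathbf{c}}_k$.

```python
import numpy as np
from scipy.optimize import linprog

def solve_cmdp_lp(P, r, c, tau):
    """Solve LP (4)-(7) for a tabular CMDP and extract a stationary policy.

    P: (S, A, S) transition probabilities (e.g., sampled P_tilde_k)
    r: (S, A) rewards, c: (d, S, A) costs, tau: (d,) thresholds.
    Returns (policy of shape (S, A), occupancy x of shape (S, A)), or None
    if the solver reports that the LP could not be solved (e.g., infeasible).
    """
    P, r, c = (np.asarray(z, dtype=float) for z in (P, r, c))
    tau = np.asarray(tau, dtype=float)
    S, A, _ = P.shape
    n = S * A                                    # variable index s * A + a
    obj = -r.reshape(n)                          # (4): linprog minimises
    A_ub = c.reshape(len(tau), n)                # (5): cost constraints
    A_flow = np.zeros((S, n))                    # (6): flow conservation
    for s_next in range(S):
        A_flow[s_next, s_next * A:(s_next + 1) * A] += 1.0
        A_flow[s_next] -= P[:, :, s_next].reshape(n)
    A_eq = np.vstack([A_flow, np.ones((1, n))])  # (7): occupancy sums to one
    b_eq = np.concatenate([np.zeros(S), [1.0]])
    res = linprog(obj, A_ub=A_ub, b_ub=tau, A_eq=A_eq, b_eq=b_eq,
                  bounds=(0, None), method="highs")
    if res.status != 0:
        return None
    x = res.x.reshape(S, A)
    mass = x.sum(axis=1, keepdims=True)
    # pi(a|s) = x(s,a) / sum_a' x(s,a'); uniform on states with zero occupancy.
    policy = np.where(mass > 1e-12, x / np.maximum(mass, 1e-12), 1.0 / A)
    return policy, x
```

For example, in a one-state CMDP where only action 0 earns reward 1 and incurs cost 1, with budget $\tau_1 = 0.3$, the LP returns the randomized policy (0.3, 0.7), which no deterministic policy can match: always choosing action 0 violates the constraint, and always choosing action 1 earns nothing.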

The algorithm has to solve an LP with $S + d + 1$ constraints (in addition to nonnegativity) and $SA$ decision variables $K_T$ times, where $K_T$ is the number of epochs during horizon $T$ (for a bound on $K_T$, see, e.g., Ouyang et al. (2017)). Although this is computationally more efficient than algorithms that require simultaneous optimization across a family of plausible environments, solving such a linear program can become a formidable computational burden for large state or action spaces. For that reason, we propose the primal-dual posterior sampling algorithm below.

Primal-Dual Posterior Sampling.

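To overcome this limitation, we consider a heuristic variant of Algorithm 1. Specifically, we consider the Lagrangian relaxation of problem (3):

$$\min_{\boldsymbol{\lambda} \ge 0} \max_{\pi} \; J^{\pi}_{r}(\rho) - \boldsymbol{\lambda}^{\top} \big(\mathbf{J}^{\pi}_{\mathbf{c}}(\rho) - \boldsymbol{\tau}\big), \tag{11}$$

where $\boldsymbol{\lambda}$ and $\mathbf{J}^{\pi}_{\mathbf{c}}(\rho)$ are vectors in $\mathbb{R}^d$ composed of $\lambda_i$ and $J^{\pi}_{c_i}(\rho)$, respectively, for $i = 1, \dots, d$. For a fixed choice of Lagrange multipliers $\boldsymbol{\lambda}$, program (11) is an unconstrained optimization problem with the pseudo-reward

$$r_{\boldsymbol{\lambda}}(s,a) = r(s,a) - \boldsymbol{\lambda}^{\top} \big(\mathbf{c}(s,a) - \boldsymbol{\tau}\big).$$

Thus, the Lagrangian relaxation reduces the original CMDP to a standard MDP, i.e., a process with the same transitions and a modified, but scalar, reward function.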

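From MDP theory (Bertsekas, 2012), we know that if the MDP is unichain (or, more generally, weakly communicating), the optimal average reward $J^{*}_{\boldsymbol{\lambda}}$ satisfies the Bellman equation

$$J^{*}_{\boldsymbol{\lambda}} + h(s) = \max_{a \in \mathcal{A}} \Big[ r_{\boldsymbol{\lambda}}(s,a) + \sum_{s' \in \mathcal{S}} P(s' \mid s,a) \, h(s') \Big] \tag{12}$$

for all $s \in \mathcal{S}$, where $h$ is a bias (relative value) function. This allows us to reduce the computational cost by performing the policy update with standard dynamic programming instead of solving LP (4)-(7).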
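The Bellman equation (12) can be solved by dynamic programming. The sketch below uses classic relative value iteration with state 0 as the reference state; this is a swapped-in textbook method, not necessarily the Bertsekas (1998) variant used in Algorithm 2, and the helper names `pseudo_reward` and `relative_value_iteration` are hypothetical. It assumes the iteration converges, which may require an aperiodicity transformation in periodic MDPs.

```python
import numpy as np

def pseudo_reward(r, c, lam, tau):
    """r_lambda(s,a) = r(s,a) - lambda^T (c(s,a) - tau); c has shape (d, S, A)."""
    c = np.asarray(c, dtype=float)
    tau = np.asarray(tau, dtype=float)
    return np.asarray(r, dtype=float) - np.tensordot(lam, c - tau[:, None, None], axes=1)

def relative_value_iteration(P, r_lam, tol=1e-8, max_iter=10_000):
    """Approximately solve the average-reward Bellman equation (12).

    P: (S, A, S) transitions; r_lam: (S, A) pseudo-reward.
    Returns (gain J, bias h normalised so that h[0] = 0, greedy deterministic policy).
    Textbook relative value iteration; assumes it converges (aperiodic case).
    """
    S, A = r_lam.shape
    h = np.zeros(S)
    for _ in range(max_iter):
        q = r_lam + P @ h              # q(s,a) = r_lam(s,a) + sum_s' P(s'|s,a) h(s')
        v = q.max(axis=1)
        gain = v[0]                    # offset at reference state 0
        h_new = v - gain
        converged = np.max(np.abs(h_new - h)) < tol
        h = h_new
        if converged:
            break
    return gain, h, q.argmax(axis=1)
```

At a fixed point, `gain + h[s]` equals the maximum over actions in (12), and the returned policy is greedy and therefore deterministic, which is exactly the limitation discussed next.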

Algorithm 2 formalizes the logic above and presents the Primal-Dual Posterior Sampling algorithm. The algorithm consists of two main steps: the policy update and the dual update. For the policy update we use the value iteration method of Bertsekas (1998), and for the dual update we use projected gradient descent (Zinkevich, 2003). Note that the reduced computational cost comes with a drawback: by construction of the value iteration algorithm, the policy $\pi_k$ is deterministic, being an optimal solution for the extended CMDP with the scalarized reward function $r_{\boldsymbol{\lambda}_k}$. However, such a policy cannot be optimal in the original CMDP if all deterministic policies are suboptimal there, which can happen because optimal CMDP policies are in general randomized. To counter this drawback, we execute the mixture policy $\bar{\pi}_k$, defined in line 10 of Algorithm 2, instead of $\pi_k$.
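The dual update and the mixture step are each only a few lines. In the sketch below, `dual_step` performs one projected gradient step on the multipliers of (11), given an estimate of the constraint values $\mathbf{J}^{\pi_k}_{\mathbf{c}}$ of the current policy (how that estimate is obtained is left open), and `mixture_policy` assumes, hypothetically, that $\bar{\pi}_k$ averages the action choices of the deterministic policies $\pi_1, \dots, \pi_k$ state by state; the exact definition in Algorithm 2 may differ, and both names are illustrative.

```python
import numpy as np

def dual_step(lam, cost_estimate, tau, eta):
    """One projected gradient step on the multipliers of (11).

    The gradient of (11) w.r.t. lambda is -(J_c - tau), so a descent step
    raises lambda_i when constraint i is violated; projecting onto
    lambda >= 0 is a clip at zero. cost_estimate approximates J_c of pi_k.
    """
    lam = np.asarray(lam, dtype=float)
    grad = np.asarray(cost_estimate, dtype=float) - np.asarray(tau, dtype=float)
    return np.maximum(0.0, lam + eta * grad)

def mixture_policy(det_policies, A):
    """Hypothetical mixture: per-state average of deterministic policies.

    det_policies: list of integer arrays of shape (S,), state -> action.
    Returns a stochastic policy of shape (S, A).
    """
    S = len(det_policies[0])
    mix = np.zeros((S, A))
    for pi in det_policies:
        mix[np.arange(S), pi] += 1.0
    return mix / len(det_policies)
```

Averaging over past deterministic policies is one simple way to obtain the randomization that a single greedy policy lacks; the step size `eta` is a free parameter in this sketch.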

5 Empirical evaluation

This section presents a comprehensive empirical analysis of the proposed algorithms. Specifically, we compare the posterior sampling algorithms with state-of-the-art OFU-based algorithms (benchmarks) across three environments. Among the algorithms listed in Table 1, we choose those that are as diverse as possible while remaining comparable in an empirical analysis.

We use three OFU-based algorithms from the existing literature for comparison. These algorithms are based on the first three types of optimistic exploration mentioned in Section 2: double optimism, conservative optimism, and optimism over transitions. We compare the posterior sampling algorithms to ConRL (Brantley et al., 2020), C-UCRL (Zheng and Ratliff, 2020), and UCRL-CMDP (Singh et al., 2020). Although ConRL was originally developed for the episodic setting, we extend it to the infinite-horizon setting using the doubling epoch framework described in Section 4. Since the resulting algorithm differs considerably from the original, we rename it CUCRLOptimistic. To avoid ambiguity, we also rename C-UCRL and UCRL-CMDP as CUCRLConservative and CUCRLTransitions, respectively.

Below we describe the environments used in the experiments and present the empirical results. A more detailed description of the benchmarks can be found in Appendix B.1.

5.1 Environments

To demonstrate the performance of the algorithms, we consider three gridworld environments. Four actions are available in each state, $\mathcal{A} = \{\text{up}, \text{down}, \text{left}, \text{right}\}$, and they cause the corresponding state transitions, except that actions that would take the agent into a wall leave the state unchanged. Transitions are stochastic: even if the agent’s action is to go up, the environment can, with a small probability, move the agent to the left. Typically, the gridworld is an episodic task in which the agent receives a reward of -1 on all transitions until the terminal state is reached. We reduce the episodic setting to the infinite-horizon setting by connecting the terminal state to the initial state. Since there is no terminal state in the infinite-horizon setting, we call it the goal state instead. Thus, every time the agent reaches the goal, it receives a reward of 0, and every action from the goal state sends the agent back to the initial state. We introduce constraints by considering the following specifications of a gridworld environment: the Marsrover and Box environments.
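The transition structure just described can be sketched as a single step function. The slip model below (with probability `slip`, the intended action is replaced by a uniformly random one), the choice to pay the reward of 0 for the action taken at the goal, and all names are assumptions for illustration; the paper does not specify the exact noise model.

```python
import numpy as np

# Hypothetical action encoding: (row offset, column offset).
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right

def gridworld_step(pos, action, walls, start, goal, shape, slip, rng):
    """One transition of the infinite-horizon gridworld (illustrative sketch).

    pos, start, goal: (row, col) cells; walls: set of blocked cells;
    shape: (n_rows, n_cols); slip: assumed probability that the environment
    replaces the chosen action by a uniformly random one; rng: numpy Generator.
    Returns (next_pos, reward).
    """
    if pos == goal:
        return start, 0.0                       # any action at the goal: reward 0, back to start
    if rng.random() < slip:
        action = int(rng.integers(len(MOVES)))  # environment noise
    dr, dc = MOVES[action]
    nxt = (pos[0] + dr, pos[1] + dc)
    inside = 0 <= nxt[0] < shape[0] and 0 <= nxt[1] < shape[1]
    if not inside or nxt in walls:
        nxt = pos                               # moving into a wall leaves the state unchanged
    return nxt, -1.0                            # reward -1 on every other transition
```

The reset from the goal to the start is what turns the episodic task into a single, never-ending interaction.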

Marsrover.

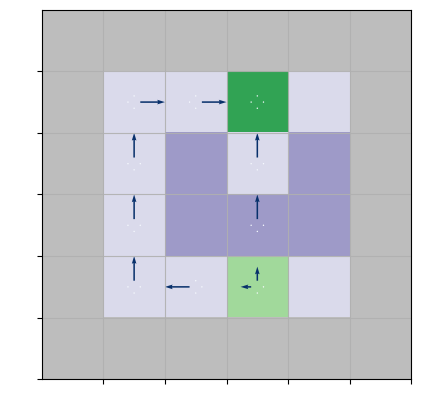

This environment was used in Tessler et al. (2019), Zheng and Ratliff (2020), and Brantley et al. (2020). The agent must move from the initial position to the goal while avoiding risky states. Figure 1(a) illustrates the CMDP structure: the initial position is light green, the goal is dark green, the walls are gray, and the risky states are purple. "In the Mars exploration problem, those darker states are the states with a large slope that the agents want to avoid. The constraint we enforce is the upper bound of the per-step probability of step into those states with large slope – i.e., the more risky or potentially unsafe states to explore" (Zheng and Ratliff, 2020). Each time the agent enters a purple state, it incurs a cost of 1; other states incur no cost.

Without safety constraints, the optimal policy is obviously to always go up from the initial state. With constraints, however, the optimal policy is a randomized policy that goes left and up with certain probabilities, as illustrated in Figure 1(a). In the experiments, we consider two Marsrover gridworlds: 4x4, as shown in Figure 1(a), and 8x8.

Box.

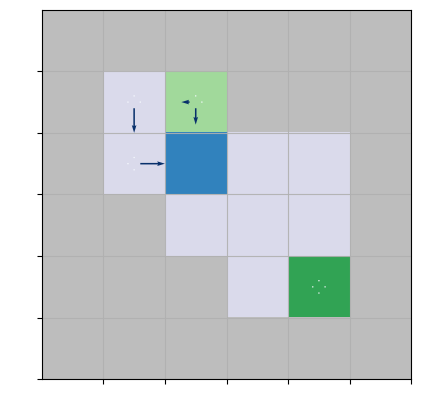

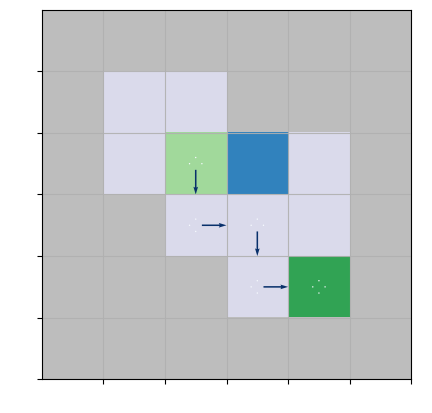

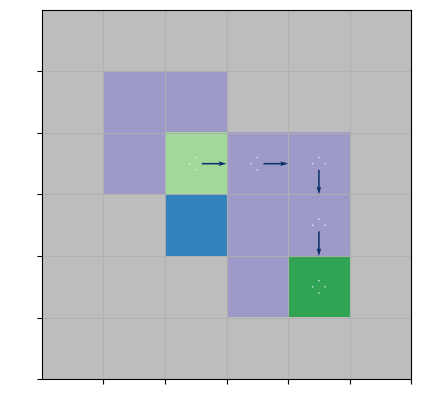

Another, conceptually different, specification of a gridworld is the Box environment from Leike et al. (2017). Unlike in the Marsrover example, there are no static risky states; instead, there is an obstacle, a box, which can only be pushed (see Figure 1(b)). Moving onto the blue tile (the box) pushes the box one tile in the same direction if that tile is empty; otherwise, the move fails as if the tile were a wall. The main idea of the Box environment is "to minimize effects unrelated to their main objectives, especially those that are irreversible or difficult to reverse" (Leike et al., 2017). If the agent takes the fast way (i.e., goes down from its initial state; see Figure 1(d)) and pushes the box into the corner, it can never get the box back, and the change to the initial configuration is irreversible. In contrast, if the agent chooses the safe way (i.e., approaches the box from the left side), it pushes the box to a reversible position (see Figure 1(d)). This example models situations such as performing a task without breaking a vase in the agent’s path, scratching furniture, or bumping into humans.

Each action incurs a cost of 1 if the box is in a corner (a cell adjacent to at least two walls) and no cost otherwise. Similarly to the Marsrover example in Figure 1(a), without safety constraints the optimal policy is to take the fast way (go down from the initial state). With constraints, however, the optimal policy is a randomized policy that goes down and left from the initial state.
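The cost rule can be written as a short predicate. This sketch takes the stated definition literally, counting the walls among the four neighbours of the box; the function name and the representation of walls as a set of cells are assumptions, and the grid boundary is expected to be listed as wall cells.

```python
def box_cost(box, walls):
    """Per-step cost in the Box environment: 1 if the box is in a 'corner'.

    Literal reading of the rule: a corner is a cell adjacent to at least two walls.
    box: (row, col) of the box; walls: set of (row, col) wall cells, assumed to
    include the boundary of the grid.
    """
    r, c = box
    neighbours = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    n_walls = sum(cell in walls for cell in neighbours)
    return 1.0 if n_walls >= 2 else 0.0
```

Because the cost depends on the box position rather than on the agent position, it keeps accruing at every step after an irreversible push, which is what makes the fast way expensive under a budget.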

5.2 Simulation results

In this part, we evaluate the performance of the posterior sampling algorithms against the benchmarks. We track two metrics: average reward and average cost. Reward graphs contain three horizontal reference lines corresponding to the fast, optimal, and safe solutions in each CMDP. Cost graphs show only the optimal solution line, as the safe solution corresponds to a zero budget (and, therefore, zero cost), while the fast solution corresponds to a budget high enough to afford the fast way all the time. The source code of the experiments can be found at https://github.com/danilprov/cmdp.

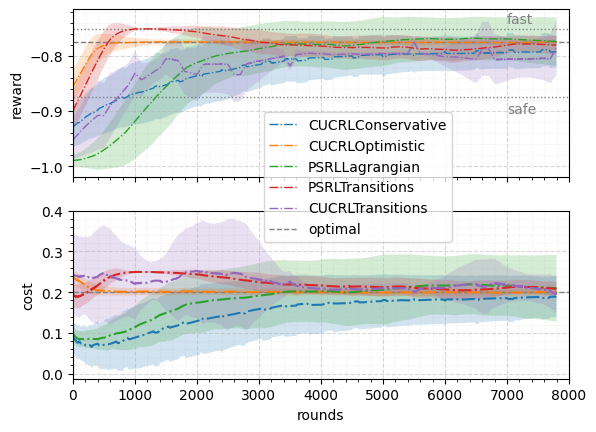

Figure 2 compares the average reward and cost incurred by the five algorithms in the Marsrover environments.³ We observe that all curves converge to the optimal solution in such a simple environment. CUCRLOptimistic reaches the optimal solution considerably faster than the other algorithms. In contrast, CUCRLConservative, PSRLLagrangian, and PSRLTransitions take longer to reach the optimal solution. For CUCRLConservative, this is the price of safe (conservative) exploration: the algorithm does not violate constraints during learning, even with a random exploration baseline (see Appendix B.1 for details). For PSRLLagrangian and PSRLTransitions, the slowdown is due to the number of parameters the algorithms learn: unlike the other algorithms, posterior sampling algorithms learn their parameters without any knowledge of confidence sets (as Figure 2(b) shows, an increase from 16 to 64 states slows learning down considerably).

³ CUCRLTransitions is presented only for the 4x4 Marsrover environment. In fact, this algorithm is impractical even for moderate CMDPs because of the nonlinear program it solves as a subroutine at the beginning of every epoch (see Appendix B.1 for details).

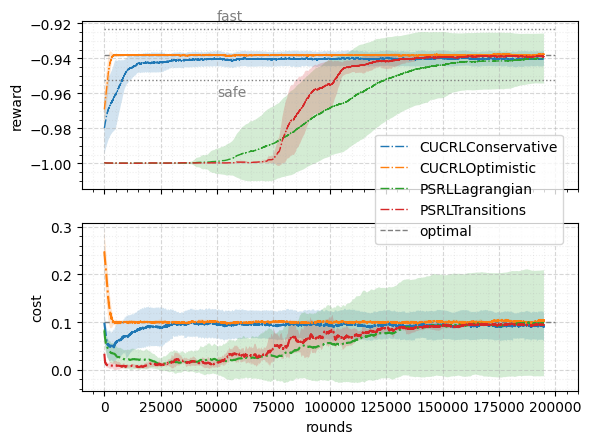

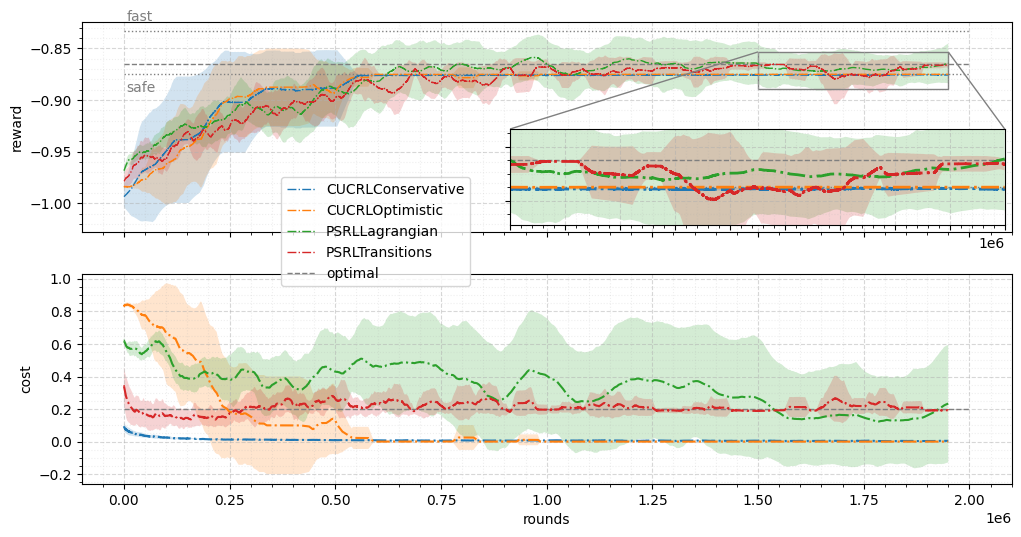

Figure 3 shows the results for the Box environment. Both the reward and cost graphs show that the optimistic algorithms reach the safe reward value relatively quickly (after roughly 600k rounds). However, these algorithms then remain stuck at the suboptimal solution, i.e., both algorithms exploit only the safe policy once it has been learned. In contrast, posterior sampling algorithms take slightly longer to deliver a sensible solution, but, as the zoomed inset shows, both posterior sampling algorithms converge to the optimal solution. We emphasize that while the absolute difference between the algorithms is small, the semantics of the achievable values (dotted lines representing the "safe" and "fast" solutions) reveal that the existing (OFU-based) algorithms are stuck with a suboptimal solution, while the proposed (posterior-based) algorithms eventually converge to the optimal solution.

Taking a closer look at the PSRLLagrangian algorithm in Figures 2 and 3, we see that its standard deviation is wider than that of PSRLTransitions. This is because the standard deviation was plotted using the ordinary (deterministic) policy $\pi_k$ rather than the mixture policy (see Section 4). Consequently, the spread highlights that each such policy is deterministic and may lie far from the optimal solution; the mixture policy nevertheless converges to the optimal solution. Overall, the comparison of the proposed algorithms comes down to the fact that PSRLTransitions converges faster than PSRLLagrangian, but at the expense of higher computational cost.

6 Conclusion

This paper addresses the practical issue of sample-efficient learning in CMDPs with infinite-horizon average reward. The experimental evaluation carried out in this paper reveals that posterior sampling is a very effective heuristic for this setting. Compared to feasible optimistic algorithms, we believe that posterior sampling is often statistically more efficient, simpler to implement, and computationally cheaper. In its simplest form, it has no parameters to tune and does not require explicit construction of confidence bounds. We highlight that the proposed algorithms consistently outperform the existing ones, making them valuable candidates for further research and implementation.

Our work addresses practically relevant RL issues, and we therefore firmly believe that it may help design algorithms for real-life reinforcement learning applications. The environments presented here expose critical issues of reinforcement learning tasks; yet they might overlook problems that arise from the complexity of more challenging tasks. A natural next step, therefore, is to scale this effort to more complex environments.

Future work also includes a theoretical analysis of the proposed algorithms. As mentioned above, Agarwal et al. (2022) theoretically analyzed a similar posterior sampling algorithm for constrained reinforcement learning. However, their analysis needs to be revised, since our PSRLTransitions algorithm extends their algorithm to unknown reward and cost functions. As regards PSRLLagrangian, we anticipate that the Lagrangian relaxation would introduce an additional complication in the theoretical analysis; we provide some initial thoughts on the analysis of this algorithm in Appendix A.

Acknowledgments and Disclosure of Funding

This project is partially financed by the Dutch Research Council (NWO) and the ICAI initiative in collaboration with KPN, the Netherlands.

References

- Abbasi-Yadkori and Szepesvári (2015) Yasin Abbasi-Yadkori and Csaba Szepesvári. Bayesian optimal control of smoothly parameterized systems. In Proceedings of the Thirty-First Conference on Uncertainty in Artificial Intelligence, UAI’15, 2015.

- Achiam et al. (2017) Joshua Achiam, David Held, Aviv Tamar, and Pieter Abbeel. Constrained policy optimization. In Proceedings of the 34th International Conference on Machine Learning - Volume 70, ICML’17. JMLR.org, 2017.

- Afsar et al. (2021) M. Mehdi Afsar, Trafford Crump, and Behrouz Far. Reinforcement learning based recommender systems: A survey, 2021.

- Agarwal et al. (2022) Mridul Agarwal, Qinbo Bai, and Vaneet Aggarwal. Regret guarantees for model-based reinforcement learning with long-term average constraints. In Proceedings of the Thirty-Eighth Conference on Uncertainty in Artificial Intelligence, 2022.

- Agrawal and Jia (2017) Shipra Agrawal and Randy Jia. Optimistic posterior sampling for reinforcement learning: worst-case regret bounds. In Advances in Neural Information Processing Systems, 2017.

- Altman (1999) Eitan Altman. Constrained Markov decision processes, 1999.

- Bertsekas (1998) Dimitri P. Bertsekas. A new value iteration method for the average cost dynamic programming problem. SIAM Journal on Control and Optimization, 1998.

- Bertsekas (2012) Dimitri P. Bertsekas. Dynamic Programming and Optimal Control, volume 2. Athena Scientific, Belmont, MA, USA, 4th edition, 2012.

- Bohez et al. (2019) Steven Bohez, Abbas Abdolmaleki, Michael Neunert, Jonas Buchli, Nicolas Manfred Otto Heess, and Raia Hadsell. Value constrained model-free continuous control. ArXiv, abs/1902.04623, 2019.

- Brantley et al. (2020) Kianté Brantley, Miro Dudik, Thodoris Lykouris, Sobhan Miryoosefi, Max Simchowitz, Aleksandrs Slivkins, and Wen Sun. Constrained episodic reinforcement learning in concave-convex and knapsack settings. In Advances in Neural Information Processing Systems, 2020.

- Chen et al. (2022) Liyu Chen, Rahul Jain, and Haipeng Luo. Learning infinite-horizon average-reward Markov decision processes with constraints, 2022. URL https://arxiv.org/abs/2202.00150.

- Chow et al. (2017) Yinlam Chow, Mohammad Ghavamzadeh, Lucas Janson, and Marco Pavone. Risk-constrained reinforcement learning with percentile risk criteria. J. Mach. Learn. Res., 18(1):6070–6120, 2017.

- Efroni et al. (2020) Yonathan Efroni, Shie Mannor, and Matteo Pirotta. Exploration-exploitation in constrained MDPs, 2020.

- Jaksch et al. (2010) Thomas Jaksch, Ronald Ortner, and Peter Auer. Near-optimal regret bounds for reinforcement learning. Journal of Machine Learning Research, 2010.

- Leike et al. (2017) Jan Leike, Miljan Martic, Victoria Krakovna, Pedro A. Ortega, Tom Everitt, Andrew Lefrancq, Laurent Orseau, and Shane Legg. AI safety gridworlds, 2017. URL https://arxiv.org/abs/1711.09883.

- Liu et al. (2021) Tao Liu, Ruida Zhou, Dileep Kalathil, P. R. Kumar, and Chao Tian. Learning policies with zero or bounded constraint violation for constrained MDPs, 2021. URL https://arxiv.org/abs/2106.02684.

- Osband et al. (2013) Ian Osband, Daniel Russo, and Benjamin Van Roy. (More) efficient reinforcement learning via posterior sampling, 2013. URL https://arxiv.org/abs/1306.0940.

- Ouyang et al. (2017) Yi Ouyang, Mukul Gagrani, Ashutosh Nayyar, and Rahul Jain. Learning unknown Markov decision processes: A Thompson sampling approach. In Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, 2017.

- Qiu et al. (2020) Shuang Qiu, Xiaohan Wei, Zhuoran Yang, Jieping Ye, and Zhaoran Wang. Upper confidence primal-dual reinforcement learning for CMDP with adversarial loss, 2020. URL https://arxiv.org/abs/2003.00660.

- Singh et al. (2020) Rahul Singh, Abhishek Gupta, and Ness B. Shroff. Learning in Markov decision processes under constraints. CoRR, abs/2002.12435, 2020. URL https://arxiv.org/abs/2002.12435.

- Tessler et al. (2019) Chen Tessler, Daniel J. Mankowitz, and Shie Mannor. Reward constrained policy optimization. In International Conference on Learning Representations, 2019.

- Zheng and Ratliff (2020) Liyuan Zheng and Lillian Ratliff. Constrained upper confidence reinforcement learning. In Proceedings of the 2nd Conference on Learning for Dynamics and Control, 2020.

- Zinkevich (2003) Martin Zinkevich. Online convex programming and generalized infinitesimal gradient ascent. In Proceedings of the Twentieth International Conference on International Conference on Machine Learning, ICML’03, 2003.

Appendix A Appendix: theoretical analysis of the Algorithm 2

This section provides some insights into the theoretical analysis of the Primal-Dual Posterior Sampling algorithm from Section 4. The complete analysis is left for future work.

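Let $\tilde{J}^{*}_k$ be the optimal solution for the approximate CMDP $\tilde{\mathcal{M}}_k$, $\tilde{J}^{\pi_k}_{\boldsymbol{\lambda}_k}$ be the solution governed by policy $\pi_k$ for the approximate relaxed MDP $\tilde{\mathcal{M}}_k^{\boldsymbol{\lambda}_k}$ (line 7 in Algorithm 2), $J^{\pi_k}_{\boldsymbol{\lambda}_k}$ be the solution governed by policy $\pi_k$ for the true relaxed MDP $\mathcal{M}^{\boldsymbol{\lambda}_k}$, and $K_T$ be the number of episodes over horizon $T$.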

We decompose the reward regret as

Term (1) measures how far the transition probabilities and rewards of the CMDP $\tilde{\mathcal{M}}_k$ are from the true transition probabilities and rewards under the policy $\pi_k$ at episode $k$. We bound this term by bounding the deviation of the sampled probabilities and empirical rewards from the true transition probabilities and rewards. In fact, this is the primary step in the analysis of the Posterior Sampling of Transitions algorithm, whose analog is analyzed in Agarwal et al. (2022).

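Term (2) represents the deviation of the approximate CMDP $\tilde{\mathcal{M}}_k$ from the approximate relaxed MDP $\tilde{\mathcal{M}}_k^{\boldsymbol{\lambda}_k}$. The near-optimality of the relaxation can be proved by leveraging the fact that we iteratively update $\pi_k$ and $\boldsymbol{\lambda}_k$ using no-regret online learning procedures (best response for $\pi_k$ and online gradient descent (OGD) for $\boldsymbol{\lambda}_k$).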

Term (3) brings us back to the true transitions and rewards (where the policy $\pi_k$ is actually executed), capturing the deviation between the approximate relaxed MDP $\tilde{\mathcal{M}}_k^{\boldsymbol{\lambda}_k}$ and the true relaxed MDP $\mathcal{M}^{\boldsymbol{\lambda}_k}$. We bound this term using the definition of the policy update and the bounded span of the value function. A similar analysis of the expected regret exists (Ouyang et al., 2017), but in a Bayesian setting. The authors decompose the regret into three terms. Two of these terms can be replicated in our case, but the third relies heavily on the Bayesian property of posterior sampling, which does not hold in our setting. Consequently, term (3) is where a thorough analysis is needed.

We expect all three terms be bounded by , where hides logarithmic factors in . The cost regret can be bounded following the same reasoning.

Appendix B Appendix: experimental details

B.1 Benchmarks: OFU-based algorithms

We borrow three OFU-based algorithms from the existing literature for comparison. These algorithms are based on the first three types of optimistic exploration mentioned in Section 2: double optimism, conservative optimism, and optimism over transitions. We take the conservative-optimism and optimism-over-transitions algorithms directly from their original sources, since their settings and assumptions match ours. These algorithms were originally called C-UCRL and UCRL-CMDP, respectively. Because these names are uninformative and potentially confusing, we rename them as specified above. The double-optimism algorithm was originally developed for the episodic setting, so we borrow only its main principle.

All algorithms we consider for empirical comparison solve LP (4)-(7) as a subroutine, substituting the unknown parameters with their empirical estimates . Although each algorithm defines these estimates differently, all of them are based on the sample means (9), (10), and

1. CUCRLOptimistic is a double-optimism algorithm that implements the principle of optimism in the face of uncertainty by introducing a bonus term that favors under-explored actions with respect to each component of the reward vector. In the original work, Brantley et al. [2020] consider an episodic problem; they add a bonus to the empirical rewards (9) and subtract it from the empirical costs (10):

We follow the same principle but recast the problem to the infinite-horizon setting by using the doubling epoch framework described in Section 4.

2. CUCRLConservative follows the principle of “optimism in the face of reward uncertainty; pessimism in the face of cost uncertainty.” This algorithm, developed by Zheng and Ratliff [2020], performs conservative (safe) exploration by overestimating both rewards and costs:

CUCRLConservative proceeds in epochs of linearly increasing number of rounds , where is the episode index and is a fixed duration given as input. In each epoch, the random policy is executed for steps for additional exploration, and then policy is applied for number of steps, making the total duration of episode . (The original algorithm uses a safe baseline, assumed to be known, during the first rounds of each epoch; to make the comparison as fair as possible, we apply a random policy instead.)

3. CUCRLTransitions: Unlike the previous two algorithms, which account for uncertainty by modifying the rewards and costs, the algorithm developed by Singh et al. [2020] constructs a confidence set over :

The CUCRLTransitions algorithm proceeds in epochs of fixed duration , where is an input to the algorithm. At the beginning of each round, the agent solves the following constrained optimization problem, in which the decision variables are (i) the occupation measure and (ii) the “candidate” transition :

(13) (14) (15) (16)

In all three cases, we use the original bonus terms and refer the reader to the corresponding papers for their precise definitions.
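The three benchmarks differ mainly in where they place the uncertainty. The minimal sketch below contrasts the three mechanisms for a single state-action pair: sample-mean estimates of the reward, costs, and next-state distribution; a bonus added to rewards and subtracted from costs (the CUCRLOptimistic principle) or added to both (the CUCRLConservative principle); and an L1 confidence set over transitions (the CUCRLTransitions principle). The Hoeffding-style bonus, the UCRL2-style L1 radius, the clipping to [0, 1], and all function names are assumptions made for illustration; they are not the original bonus terms. The default `coef=0.5` mirrors the bonus coefficient in the hyperparameter table below, but how that coefficient enters the original bonuses is not specified here.

```python
import math

def sample_means(n, reward_sum, cost_sums, next_counts):
    """Sample-mean estimates for one (s, a) pair visited n >= 1 times."""
    r_hat = reward_sum / n
    c_hat = [c / n for c in cost_sums]
    p_hat = [m / n for m in next_counts]  # empirical next-state distribution
    return r_hat, c_hat, p_hat

def hoeffding_bonus(n, t, coef=0.5, delta=0.05):
    """Assumed Hoeffding-style bonus; shrinks as 1/sqrt(n)."""
    return coef * math.sqrt(math.log(2.0 * max(t, 1) / delta) / max(n, 1))

def clip01(x):
    return min(1.0, max(0.0, x))

def double_optimism(r_hat, c_hat, b):
    """CUCRLOptimistic principle: raise rewards and lower costs by the bonus."""
    return clip01(r_hat + b), [clip01(c - b) for c in c_hat]

def conservative_optimism(r_hat, c_hat, b):
    """CUCRLConservative principle: raise rewards and raise costs by the bonus."""
    return clip01(r_hat + b), [clip01(c + b) for c in c_hat]

def l1_radius(n, n_states, n_actions, t, delta=0.05):
    """Assumed UCRL2-style L1 radius for the next-state distribution."""
    return math.sqrt(14 * n_states * math.log(2 * n_actions * max(t, 1) / delta) / max(n, 1))

def in_confidence_set(p_candidate, p_hat, radius, tol=1e-9):
    """CUCRLTransitions principle: a candidate transition is admissible if it is a
    probability distribution within L1 distance `radius` of the empirical one."""
    valid = all(p >= -tol for p in p_candidate) and abs(sum(p_candidate) - 1.0) <= tol
    dist = sum(abs(p - q) for p, q in zip(p_candidate, p_hat))
    return valid and dist <= radius + tol

# A pair visited 4 times: total reward 2.0, total cost 1.0, next states [1, 3] times.
r_hat, c_hat, p_hat = sample_means(4, 2.0, [1.0], [1, 3])
b = hoeffding_bonus(4, t=100)
print(double_optimism(r_hat, c_hat, b), conservative_optimism(r_hat, c_hat, b))
print(in_confidence_set([0.5, 0.5], p_hat, l1_radius(4, 2, 1, t=100)))
```

Lowering costs makes poorly explored actions look feasible, which drives exploration, whereas raising them steers the planner away from actions whose cost is still uncertain; the transition-based variant leaves rewards and costs untouched and instead lets the planner pick the most favorable candidate transition inside the confidence set.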

B.2 Hyperparameters

| Hyperparameter | Marsrover 4x4 | Marsrover 8x8 | Box 4x4 |
|---|---|---|---|
| CUCRLOptimistic | | | |
| bonus coefficient | 0.5 | | |
| CUCRLConservative | | | |
| duration | 20 | 1000 | 1000 |
| bonus coefficient | 0.5 | | |
| CUCRLTransitions | | | |
| duration | - | - | |
| planner | 20 | - | - |
| PSRLTransitions | | | |
| learning rate | | | |
| planner | 50000 | 50000 | 50000 |
| planner | | | |
| planner | 0.95 | 0.95 | 0.95 |
| PSRLLagrangian | | | |
| - | does not require any hyperparameters | | |