An Empirical Evaluation of the t-SNE Algorithm for Data Visualization in Structural Engineering

Abstract

A fundamental task in machine learning involves visualizing high-dimensional data sets that arise in high-impact application domains. When considering the context of large imbalanced data, this problem becomes much more challenging. In this paper, the t-Distributed Stochastic Neighbor Embedding (t-SNE) algorithm is used to reduce the dimensions of an earthquake engineering related data set for visualization purposes. Since imbalanced data sets greatly affect the accuracy of classifiers, we employ Synthetic Minority Oversampling Technique (SMOTE) to tackle the imbalanced nature of such data set. We present the result obtained from t-SNE and SMOTE and compare it to the basic approaches with various aspects. Considering four options and six classification algorithms, we show that using t-SNE on the imbalanced data and SMOTE on the training data set, neural network classifiers have promising results without sacrificing accuracy. Hence, we can transform the studied scientific data into a two-dimensional (2D) space, enabling the visualization of the classifier and the resulting decision surface using a 2D plot.

Index Terms:

Classification algorithms, supervised learning, dimensionality reduction, feature extraction, oversamplingI Introduction

Visualizing high-dimensional data is among the most fundamental tasks in modern machine learning to broaden its reach in various application domains, enabling practitioners and engineers to quickly grasp information in two- or three-dimensional (2D or 3D) maps [1]. A wide range of techniques for the visualization of high-dimensional data sets have been proposed. For example, Principal Component Analysis (PCA) is one of the prevalent techniques for reducing the dimensionality [2, 3]. The primary objective of PCA is to reduce dimensionality while preserving as much variance as possible in the given data set, where each principal component is a weighted combination of all the features or attributes in the original data set. One disadvantage of using PCA for visualization purposes is that data samples tend to be densely clustered together [4]. On the other hand, Linear Discriminant Analysis (LDA) maximizes the separation between classes while performing dimensionality reduction [5]. However, a significant drawback of LDA is that the output dimension is necessarily less than the number of categories, which makes it unappealing for binary classification problems.

The t-Distributed Stochastic Neighbor Embedding (t-SNE) algorithm [6, 7] is another popular method for visualizing high-dimensional data sets by giving each sample a location in a 2D or 3D map. In essence, t-SNE minimizes the Kullback-Leibler (KL) divergence between two probability distributions: a distribution that measures pairwise similarities in the input high-dimensional space, and another distribution that represents pairwise similarities of the corresponding low-dimensional embedded samples. Therefore, t-SNE is a nonlinear dimension reduction technique capable of preserving the local structure of the given data. As a result, t-SNE has found applications in many high-impact applications, such as visualizing biological [8] and geological [9] data sets.

Motivated by recent applications of t-SNE, we propose to employ this powerful technique for visualizing scientific data sets that originate from advanced computer simulations. Specifically, we consider a binary data set stemming from computational models of earthquake ground motions in structural engineering [10, 11]. The objective is to train binary classifiers to predict the severity of the damage, i.e., safe vs. failed simulations. However, unfortunately, interpreting trained classifiers in the high-dimensional input space comprising a multitude of features is a formidable task for engineers. Therefore, we conduct a comprehensive set of numerical experiments to demonstrate the effectiveness of t-SNE for scientific data visualization. In our experiments, the embedded data samples in a 2D map serve as the input for classification algorithms. Another contribution of this work is utilizing six different classifiers to enhance our understanding about the impact of dimensionality reduction on the performance of various classifiers. To the best of our knowledge, this paper is the first application of t-SNE in the field of structural and earthquake engineering, providing future research direction to guide academic and industry researchers. The broader impact of this paper can be related to the quantitative risk assessment in structures and infra-structures subjected to multi-hazard scenarios, such as earthquake, hurricane, and flooding.

Moreover, we systematically investigate the impact of class-imbalanced data on the resulting 2D embedding provided by t-SNE. When using data-driven approaches, the class imbalance issue (i.e., considerably more instances of one class than another) poses a significant challenge as most machine learning algorithms overlook samples from minority classes [12, 13, 14]. For example, in our case study, the number of safe simulations greatly exceeds the number of failed simulations. Although there are many techniques for tackling the class imbalance problem for classification problems, the remaining question is to examine the interface between t-SNE and imbalanced data. Hence, we propose to use a popular technique, known as the Synthetic Minority Oversampling Technique or SMOTE [15, 16], for improving the performance of t-SNE.

The remainder of the paper is outlined as follows. In Section II, we review the machine learning techniques used in this work to handle high-dimensional class-imbalanced data. In Section III, we describe our experimental setup and different approaches we take. In Section IV, we outline the scientific simulation model, which is our case study. Finally, we present our numerical experiments and discussions in Section V.

II Methods

II-A t-SNE

Given the difficulty of visualizing data with more than two dimensions, a fundamental task involves analyzing and interpreting high-dimensional data sets. For the sake of improved visualization, the t-SNE [6] algorithm is employed to reduce the dimensionality. This algorithm is a powerful nonlinear dimensionality reduction technique for the visualization of data sets containing hundreds or even thousands of dimensions in 2D and 3D maps (we focus on 2D maps in this work).

To be formal, let us consider a data set that contains samples in . The goal is to find a low-dimensional embedding or map points in . The idea is that if two points are close in the input space, their corresponding map points should be close too. To this end, we define a conditional similarity between pairs of samples:

The variance parameter is different for each sample in the data set. We can also define a symmetric similarity measure as: . Next, we should define a new similarity measure for the mapping, denoted by :

The final ingredient of t-SNE is to ensure that these two metrics are close to each other based on the Kullback-Leiber (KL) divergence between the two distributions and :

In the t-SNE algorithm, perplexity is one of the most critical hyperparameters to be adjusted by the user. The perplexity parameter in t-SNE serves as a knob that sets the number of influential nearest neighbors, thus significantly impacting the resulting mapping of the input data. The standard range for the perplexity parameter is typically between 5-100, requiring a grid search for hyperparameter optimization.

II-B SMOTE

The Synthetic Minority Oversampling Technique (SMOTE) [15] is arguably the most popular data-level method for handling the class imbalance problem. The key idea is to generate new artificial samples to increase the size of the minority class. Hence, SMOTE makes learning from the minority class easier by better aligning the decision boundary toward an appropriate acknowledgment of all categories [17].

III Experimental Settings

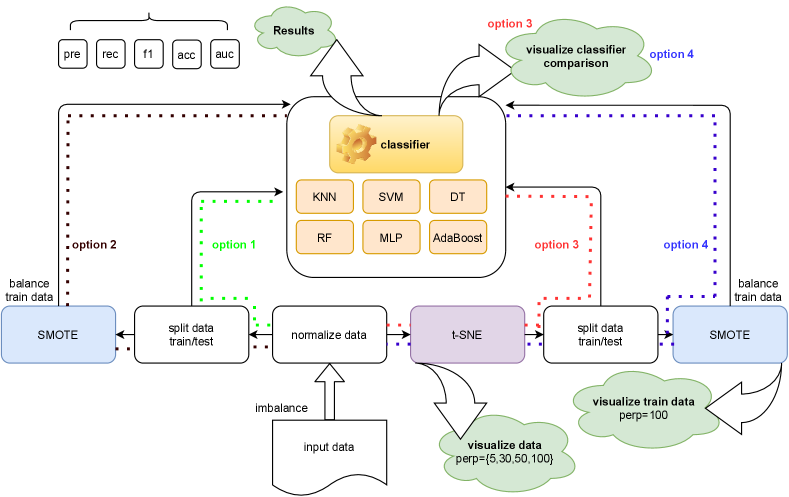

In this section, we outline four approaches that we take to systematically investigate the integration of t-SNE and SMOTE for analyzing scientific data. Fig. 1 depicts a graphical overview of these four approaches, discussed in the following.

III-A Option 1

In this approach, we work with the original input space without further actions to tackle the class imbalance problem and to visualize the high-dimensional data. We normalize the data and then divide it into train/test sets. Finally, we train different classification algorithms, described in Section III-E, while using the test set to evaluate their performance.

III-B Option 2

This is similar to option 1 with a minor difference. After splitting the data into train/test sets, we apply SMOTE to the training data set to generate synthetic data for balancing the number of samples. Note that we split our data set before using any over/under-sampling methods. Otherwise, we will bias our model, which is simply wrong because we are introducing data points for our future test set that do not exist.

III-C Option 3

Here, we aim to visualize the data before using oversampling methods and classifiers to better understand the anatomy and structure of the data. To achieve this goal of visualization, dimensionality reduction is required. To this end, after normalizing the input data, we apply t-SNE which is commonly used for visualization purposes in 2D scatter plots. Then, we split the data and fit our classifiers to get the performance results. The interesting point of this approach is that, thanks to the dimensionality reduction step, we can easily have a visual comparison between classifiers in the final step.

III-D Option 4

Similar to option 3, we first apply t-SNE to the normalized data, and we then split it into train/test sets. At this point, we use SMOTE to balance the training data set. We visualize the balanced training set and the resulting classifiers.

III-E Classification Algorithms

In this study, we incorporate six classifiers for the binary classification task. Our classifiers are: i) K-Nearest Neighbors (KNN), ii) Radial Basis Function (RBF) Support Vector Machine (SVM), iii) Decision Tree (DT), iv) Random Forest (RF), v) Multi-Layer Perceptron (MLP), and vi) AdaBoost.

-

•

K-Nearest Neighbors is a type of instance-based learning: it does not attempt to construct a general internal model, but simply stores instances of the training data. Classification is computed based on a majority vote of the nearest neighbors of each point: a query point is assigned the data class which has the most representatives within the nearest neighbors of the point. scikit-learn implements KNeighborsClassifier, where is an integer value specified by the user to define the number of nearest neighbors. In this work, we choose .

-

•

RBF Support Vector Machine finds the dividing hyperplane that separates both classes of the training set with the maximum possible margin. Then, the predicted label of a new, unseen data point is determined based on which side of the hyperplane it falls [18]. When training SVM with the radial basis function kernel, two parameters must be considered: and . In RBF kernel function, , must be greater than . The parameter , common to all SVM kernels, trades off misclassification of training examples against simplicity of the decision surface. A low makes the decision surface smooth, while a high aims at classifying all training examples correctly as much as possible. defines how much influence a single training example has. The larger is, the closer other examples must be to be affected. We consider in our model.

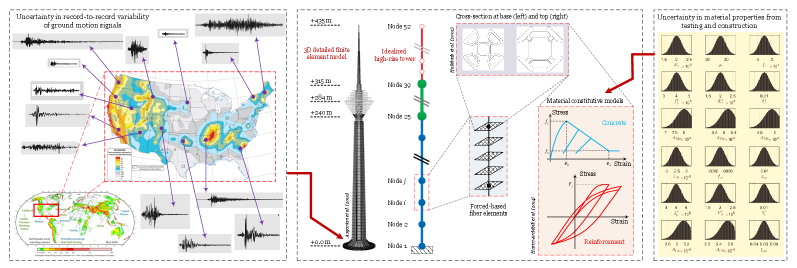

Figure 2: Developed numerical model of a complex structural system with combined uncertainty inputs (schematic plot): uncertainty in applied stochastic ground motion records, as well as randomness in the simulated material/modeling properties. -

•

Decision Trees are a non-parametric learning method which employ a straightforward idea to solve the classification problem. Trees are built beginning with the tree’s root and continuing down to its leaves. DT poses a set of carefully constructed questions with which a classification rule is developed through a set of attributes [19]. DT algorithms use a heuristic method or greedy strategy to direct their quest in the vast space of hypotheses for simplifying decision trees [20]. We consider the maximum depth of the tree max_depth = 5.

-

•

Random Forest builds multiple trees randomly among subsets of features to form branches of decision trees. Several trees are trained in random forests, instead of training a single tree [21]. Bagging method is applied to build each decision tree, and then all the decision trees are combined to form the RF. We consider max_depth = 5, n_estimators = 10, and max_features = 1.

-

•

MLPClassifier implements a multi-layer perceptron algorithm using backpropagation. MLP, a feedforward artificial neural network model, is a supervised learning algorithm. Our MLPClassifier model optimizes the log-loss function using adam, since this solver works pretty well on relatively large data sets (with thousands of training samples or more) in terms of both training time and validation score [22]. In our single hidden layer MLP model, we consider the regularization term parameter alpha=1 and the number of epochs max_iter=1000.

-

•

AdaBoost is an ensemble learning method based on creating powerful classifiers by combining weak learners. AdaBoost is an iterative algorithm in which, at any iteration, each weak classifier is trained, and the weight is assigned to, based on the accuracy achieved [23]. Classifiers with higher accuracy levels are assigned with higher weights to have a more impact on the final prediction.

In this work, all algorithms under comparison are implemented in Python. To perform the classification task and training binary classifiers, we use built-in estimators in the scikit-learn package (Version 0.24.1). We use these functions with default arguments unless otherwise stated.

IV Case Study

Risk-based safety management of infra-structures is a critical task that involves various uncertainty sources. According to the performance-based earthquake engineering (PBEE) framework proposed by the Pacific Earthquake Engineering Research (PEER) center, any realistic risk analysis should consider aleatory and epistemic uncertainties. The former originates from uncertainties in the applied load (in this case, the ground motion record-to-record variability), while the latter has its roots in material and modeling randomness. Multiple studies reported the hybrid impact of these uncertainty sources [24, 25, 26, 27, 28]. While the concept of uncertainty quantification in risk-based assessment can be studied from different viewpoints, in connection to the proposed methodology in this paper, we aim to answer an important question: how to classify safe/failure events in the case of imbalanced data?

For this purpose, we consider a high-rise telecommunication tower as a case study [29]. The height is over meters, made of reinforced concrete. The concrete shaft is the main load-carrying structure of the tower that transfers the lateral and gravitational loads to the foundation. We consider several modeling aspects, including material nonlinearities (i.e., cracking, crushing, and damage), and geometric nonlinearities. To reduce the computational burden, We develop a 2D model of the tower, including the head structure, shaft, and transition. A total of random models are generated using Latin Hypercube Sampling (LHS) to consider the variability of material/modeling parameters (i.e., concrete, steel, and system level damping). Probability density functions for all these variables are illustrated in Fig. 2 (right box).

Moreover, ground motions are selected world-wide to consider the aleatory uncertainty source. The record selection process is also random in order to cover a wide range of potential events. Fig. 2 (left box) illustrates a few selected records within the United States based on the national seismic hazard maps. Since as-recorded (i.e., unscaled) ground motion records are typically weak and may not cause failure to the structure, a scaling technique is used to generate other ground motions that are more destructive. Therefore, new records are generated (based on the original 100 ones), resulting in ground motion records that are used in our numerical model. While there are several optimal design of experiments to combine structural models from LHS with ground motion records [30, 31], we choose to use Randomized Complete Block Design, which is simply a full combination of all possible cases ( events). Each event is a unique combination of a random model and a random ground motion.

As discussed earlier, each random model has unique attributes from material/modeling (which are in the form of real numbers), and a time-dependent stochastic ground motion signal. Hence, we should convert the stochastic nature of the ground motion signal into a manageable number of scalar features. Such a conversion is already discussed in [32, 33] for generic infrastructural systems. For each ground motion, we extract intensity measure (IM) parameters (or meta-features), including all peak values, as well as the intensity-, frequency-, and duration-dependent parameters.

Combining the meat-features extracted from ground motion signals and the material/modeling attributes leads to features for each event or data sample. Therefore, the input data set consists of samples in . The outputs of our finite element simulation model provide the ground-truth labels, containing for safe and for failed simulations. To be precise, we have and (i.e., about of simulations are failed).

V Experimental Results and Discussion

This section reports the results of our empirical investigation given the experimental setup described in Section III, which is also depicted in Fig 1.

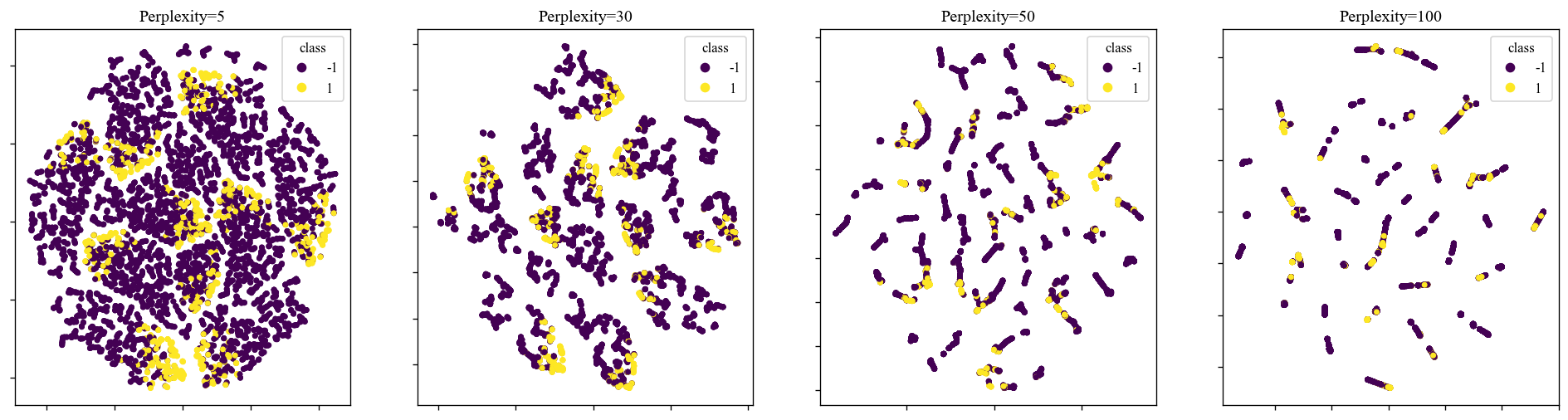

First, we plot 2D maps attained by the t-SNE algorithm for varying values of the perplexity parameter. As we observe in Fig. 3, when the perplexity parameter is set to , the resulting 2D map is not useful because the two classes are very close to each other. However, as we increase this parameter, we notice a larger separation between the two classes of safe and failed simulations, thus t-SNE offers a practical visualization technique for the discussed scientific data. Moreover, the reduced dimensionality data samples can be provided as inputs to the classification algorithms for developing prediction models.

In the second part of this section, we report several evaluation metrics for classification problems. These metrics are listed in the following:

-

•

Precision is defined as the ratio TP / (TP + FP), where TP is the number of true positives and FP denotes the number of false positives.

-

•

Recall is defined as the ratio TP / (TP + FN), where FN refers to false negatives.

-

•

F1-Score combines the precision and recall scores.

-

•

Balanced accuracy avoids inflated performance estimates on class-imbalanced data. It is defined as the average of recall obtained on each class or the arithmetic mean of sensitivity (true positive rate) and specificity (true negative rate) in the following form:

Balanced accuracy takes values between and , and higher values indicate better prediction models.

-

•

AUC-Score (Area Under Receiver-operating Characteristic Curve) score is a widely used metric for assessing performance in imbalanced learning [34].

| option 1 | option 2 | option 3 | option 4 | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| classifier | pre | rec | f1 | acc | auc | pre | rec | f1 | acc | auc | pre | rec | f1 | acc | auc | pre | rec | f1 | acc | auc |

| KNN | 0.92 | 0.93 | 0.92 | 0.82 | 0.81 | 0.92 | 0.9 | 0.91 | 0.88 | 0.88 | 0.91 | 0.91 | 0.91 | 0.8 | 0.8 | 0.91 | 0.88 | 0.89 | 0.85 | 0.9 |

| SVM | 0.93 | 0.93 | 0.93 | 0.83 | 0.83 | 0.93 | 0.91 | 0.92 | 0.88 | 0.88 | 0.9 | 0.91 | 0.91 | 0.78 | 0.78 | 0.92 | 0.89 | 0.9 | 0.85 | 0.9 |

| DT | 0.92 | 0.92 | 0.92 | 0.81 | 0.81 | 0.93 | 0.91 | 0.92 | 0.9 | 0.9 | 0.85 | 0.87 | 0.86 | 0.6 | 0.6 | 0.89 | 0.65 | 0.7 | 0.65 | 0.6 |

| RF | 0.92 | 0.93 | 0.92 | 0.82 | 0.82 | 0.93 | 0.87 | 0.89 | 0.9 | 0.9 | 0.87 | 0.88 | 0.84 | 0.6 | 0.6 | 0.89 | 0.64 | 0.7 | 0.7 | 0.7 |

| MLP | 0.93 | 0.93 | 0.93 | 0.81 | 0.81 | 0.93 | 0.89 | 0.9 | 0.9 | 0.9 | 0.88 | 0.88 | 0.82 | 0.5 | 0.5 | 0.91 | 0.83 | 0.86 | 0.89 | 0.9 |

| AdaBoost | 0.94 | 0.94 | 0.94 | 0.85 | 0.85 | 0.94 | 0.92 | 0.93 | 0.92 | 0.92 | 0.86 | 0.87 | 0.84 | 0.53 | 0.53 | 0.9 | 0.8 | 0.83 | 0.81 | 0.8 |

Table I reports these performance metrics when applying the six introduced classifiers to our scientific data set. According to this table, using SMOTE, improves balanced accuracy (acc) and AUC scores across all four options. Although this behavior is expected in the high-dimensional input space, we have shown similar trends when using t-SNE for visualization purposes. As can be seen from Table I, although option 2 achieved the highest balanced accuracy (acc) and AUC scores, we lose the opportunity for the visualization of the data’s structure and classifier’s decision surface to compare the performance of various classification algorithms beyond standard numerical metrics.

Furthermore, we notice that using SMOTE when working with 2D maps is crucial. For example, comparing option 3 with 4, the balanced accuracy score (acc) increases from to for MLP. Additionally, comparing option 2 with 4, balanced accuracy (acc) and AUC scores seem almost the same across all classifiers except DT and RF. Therefore, it is wise to choose option 4 since we can take advantage of visualization with no significant loss in performance. Looking at the Table I, the same rule applies to all performance metrics reported in this study. In this experimental investigation, we discussed balanced accuracy (acc) and AUC scores because they are considered the most critical performance metrics in imbalance learning. Also, it is interesting to notice that the K-Nearest Neighbors (KNN) classifier performs well in option 3 and 4 despite its simplicity. However, if we have to pick a single classifier in this experiment, MLP achieves the highest value of balanced accuracy among the six selected classifiers in option 4.

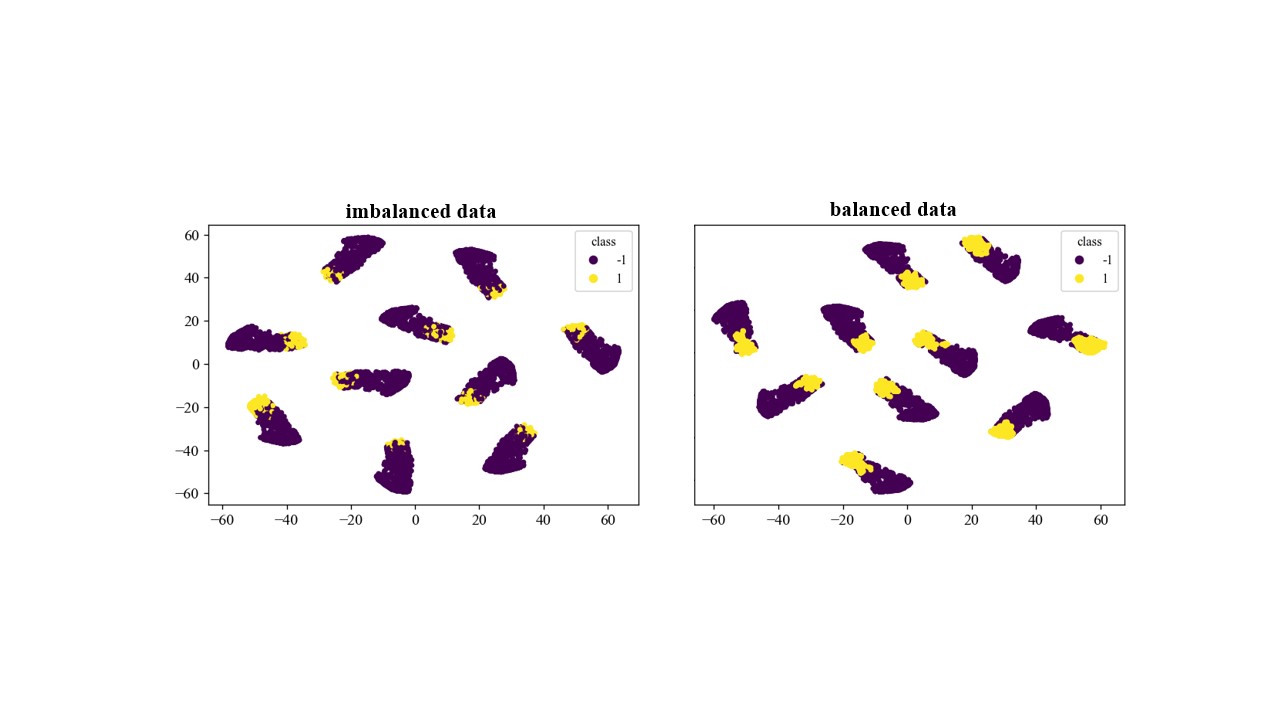

As depicted in Fig. 1, we can take advantage of data visualization in option 3 and 4, where we utilize the t-SNE algorithm. To see how SMOTE works to handle the imbalance nature of the scientific data in our case study, Fig. 4 shows 2D plots of the training data set. In this figure, purple color shows the safe simulations whereas the yellow color represents failed ones, i.e., the minority class in our imbalanced data set. As the right figure illustrates in Fig. 4, with the help of SMOTE, the re-sampled data set is well balanced. That is, the dense yellow region clearly shows that how oversampling methods (SMOTE here) is capable of generating synthetic data points to handle the class imbalance problem. As discussed earlier, to avoid bias in the model, SMOTE is only utilized on the training data set (instead of applying to the whole data set). Fig. 4 presents the imbalanced/balanced training data sets using option 3 vs. option 4, where the perplexity parameter is set to .

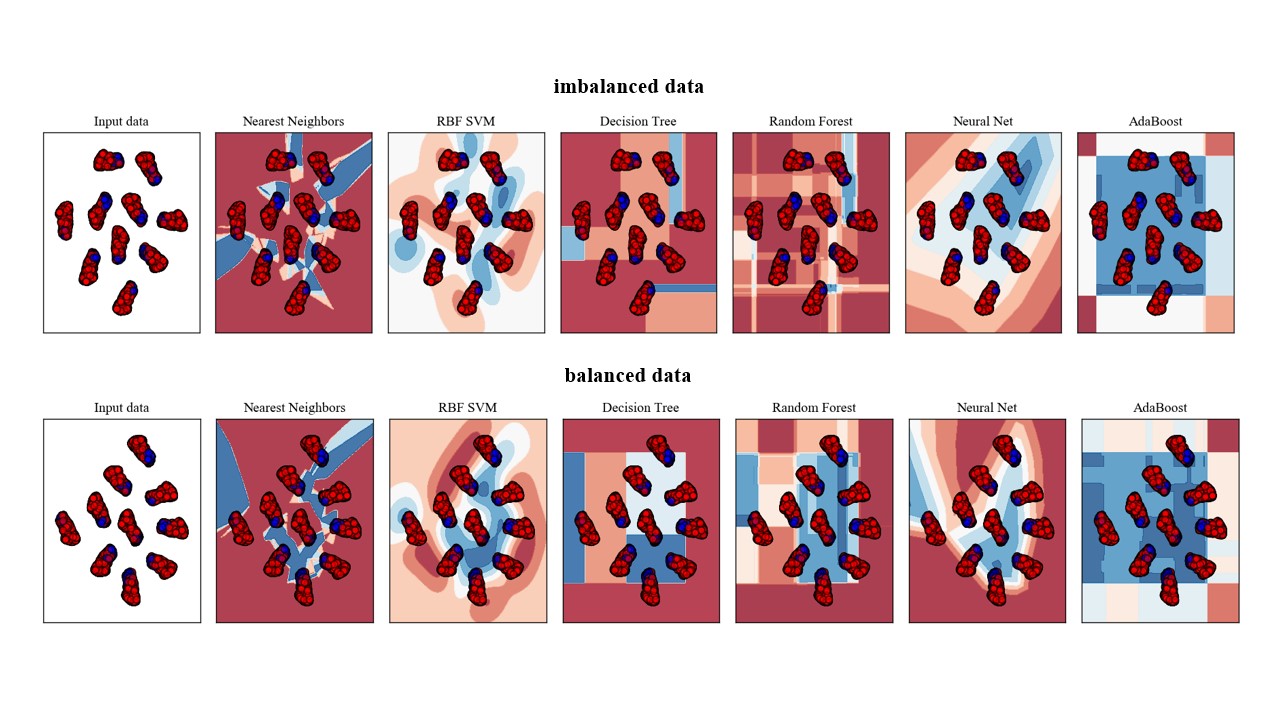

Finally, in Fig 5, we visualize and compare the trained classifiers using 2D plots attained by t-SNE with the perplexity value of . In this figure, safe/failed simulations are represented by red and blue colors, respectively. Also training points are showed in solid colors while testing points in semi-transparent.

The point of this figure is to illustrate the nature of decision surface for each classifier. We apply six different classification algorithms to our given data set to visualize the decision functions. The bottom plot refers to option 4, where we balance our training data using SMOTE, whereas the top plot is related to the imbalanced training data set, i.e., option 3.

We discern that the neural network model or MLP, RBF SVM, and K-Nearest Neighbors (KNN) on the balanced data set using SMOTE provide a meaningful decision surface to separate the two classes. On the other hand, DT, RF, and AdaBoost fail to provide reasonable decision boundaries based on visualizing classifiers. This observation is consistent with the performance metrics reported in Table I because DT, RF, and AdaBoost ranked as three classifiers with the lowest balanced accuracy scores in option 4.

To conclude, we note that t-SNE offers a powerful technique for visualizing high-dimensional scientific data sets, allowing for inspecting prediction models beyond standard numerical performance metrics. Hence, our empirical investigation provides a roadmap for practitioners and engineers who want to better understand the performance of machine learning models in high-dimensional data regimes. Furthermore, we showed that integrating re-sampling techniques and t-SNE is essential for class-imbalanced data sets.

This work just focused on the binary classification problem. In our future work, we plan to visualize high-dimensional multi-class scientific data sets using different dimensionality reduction techniques such as t-SNE, PCA, and LDA and make a fair comparison. Furthermore, we can investigate how imbalanced learning strategies handle cases with more than three classes (e.g., ten or more categories).

References

- [1] S. Ayesha, M. Hanif, and R. Talib, “Overview and comparative study of dimensionality reduction techniques for high dimensional data,” Information Fusion, vol. 59, pp. 44–58, 2020.

- [2] F. Pourkamali-Anaraki and S. Hughes, “Memory and computation efficient pca via very sparse random projections,” in International Conference on Machine Learning, 2014, pp. 1341–1349.

- [3] F. Pourkamali-Anaraki and S. Becker, “Preconditioned data sparsification for big data with applications to PCA and k-means,” IEEE Transactions on Information Theory, vol. 63, no. 5, pp. 2954–2974, 2017.

- [4] J. Wu, J. Wang, H. Xiao, and J. Ling, “Visualization of high dimensional turbulence simulation data using t-sne,” in 19th AIAA Non-Deterministic Approaches Conference, 2017, p. 1770.

- [5] P. Xanthopoulos, P. Pardalos, and T. Trafalis, “Linear discriminant analysis,” in Robust data mining. Springer, 2013, pp. 27–33.

- [6] L. Van der Maaten and G. Hinton, “Visualizing data using t-SNE,” Journal of Machine Learning Research, vol. 9, no. 11, 2008.

- [7] S. Arora, W. Hu, and P. Kothari, “An analysis of the t-sne algorithm for data visualization,” in Conference On Learning Theory, 2018, pp. 1455–1462.

- [8] D. Kobak and P. Berens, “The art of using t-SNE for single-cell transcriptomics,” Nature communications, vol. 10, no. 1, pp. 1–14, 2019.

- [9] M. Balamurali and A. Melkumyan, “t-SNE based visualisation and clustering of geological domain,” in International Conference on Neural Information Processing, 2016, pp. 565–572.

- [10] M. Hariri-Ardebili and F. Pourkamali-Anaraki, “Simplified reliability analysis of multi hazard risk in gravity dams via machine learning techniques,” Archives of Civil and Mechanical Engineering, vol. 18, no. 2, pp. 592–610, 2018.

- [11] F. Pourkamali-Anaraki and M. Hariri-Ardebili, “Neural networks and imbalanced learning for data-driven scientific computing with uncertainties,” IEEE Access, vol. 9, pp. 15 334–15 350, 2021.

- [12] B. Krawczyk, “Learning from imbalanced data: open challenges and future directions,” Progress in Artificial Intelligence, vol. 5, no. 4, pp. 221–232, 2016.

- [13] M. Buda, A. Maki, and M. Mazurowski, “A systematic study of the class imbalance problem in convolutional neural networks,” Neural Networks, vol. 106, pp. 249–259, 2018.

- [14] P. Hajibabaee, F. Pourkamali-Anaraki, and M. Hariri-Ardebili, “Kernel matrix approximation on class-imbalanced data with an application to scientific simulation,” IEEE Access, pp. 83 579–83 591, 2021.

- [15] N. Chawla, K. Bowyer, L. Hall, and P. Kegelmeyer, “Smote: synthetic minority over-sampling technique,” Journal of artificial intelligence research, vol. 16, pp. 321–357, 2002.

- [16] A. Fernández, S. Garcia, F. Herrera, and N. Chawla, “SMOTE for learning from imbalanced data: progress and challenges, marking the 15-year anniversary,” Journal of Artificial Intelligence Research, vol. 61, pp. 863–905, 2018.

- [17] G. Kovács, “Smote-variants: A python implementation of 85 minority oversampling techniques,” Neurocomputing, vol. 366, pp. 352–354, 2019.

- [18] C. Cortes and V. Vapnik, “Support-vector networks,” Machine learning, vol. 20, no. 3, pp. 273–297, 1995.

- [19] J. Quinlan, “Induction of decision trees,” Machine Learning, vol. 1, no. 1, pp. 81–106, 1986.

- [20] ——, “Simplifying decision trees,” International Journal of Man-Machine Studies, vol. 27, no. 3, pp. 221–234, 1987.

- [21] T. K. Ho, “Random decision forests,” 1995.

- [22] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay, “Scikit-learn: Machine learning in Python,” Journal of Machine Learning Research, vol. 12, pp. 2825–2830, 2011.

- [23] A. H. S. Bloehdorn, “Text classification by boosting weak learners based on terms and concepts,” 2005.

- [24] J. C. Helton and D. E. Burmaster, “Guest editorial: treatment of aleatory and epistemic uncertainty in performance assessments for complex systems,” Reliability Engineering & System Safety, vol. 54, no. 2-3, pp. 91–94, 1996.

- [25] O. Celik and B. Ellingwood, “Seismic fragilities for non-ductile reinforced concrete frames–role of aleatoric and epistemic uncertainties,” Structural Safety, vol. 32, pp. 1–12, 2010.

- [26] C. Jiang, J. Zheng, and X. Han, “Probability-interval hybrid uncertainty analysis for structures with both aleatory and epistemic uncertainties: a review,” Structural and Multidisciplinary Optimization, pp. 1–18, 2017.

- [27] M. Hariri-Ardebili and J. Xu, “Efficient seismic reliability analysis of large-scale coupled systems including epistemic and aleatory uncertainties,” Soil Dynamics and Earthquake Engineering, vol. 116, pp. 761–773, 2019.

- [28] J. Chen and Z. Wan, “A compatible probabilistic framework for quantification of simultaneous aleatory and epistemic uncertainty of basic parameters of structures by synthesizing the change of measure and change of random variables,” Structural Safety, vol. 78, pp. 76–87, 2019.

- [29] M. Hariri-Ardebili, H. Rahmani-Samani, and M. Mirtaheri, “Seismic stability assessment of a high-rise concrete tower utilizing endurance time analysis,” International Journal of Structural Stability and Dynamics, vol. 14, 2014.

- [30] M. Cavazzuti, Optimization methods: from theory to design scientific and technological aspects in mechanics. Springer Science & Business Media, 2012.

- [31] M. Hariri-Ardebili, “MCS-based response surface metamodels and optimal design of experiments for gravity dams,” Structure and Infrastructure Engineering, vol. 14, no. 12, pp. 1641–1663, 2018.

- [32] M. Hariri-Ardebili and V. Saouma, “Collapse fragility curves for concrete dams: Comprehensive study,” ASCE Journal of Structural Engineering, vol. 142, no. 10, p. 04016075, 2016.

- [33] M. Hariri-Ardebili and S. Barak, “A series of forecasting models for seismic evaluation of dams based on ground motion meta-features,” Engineering Structures, p. 109657, 2020.

- [34] M. Koziarski and M. Woźniak, “Ccr: A combined cleaning and resampling algorithm for imbalanced data classification,” International Journal of Applied Mathematics and Computer Science, vol. 27, no. 4, 2017.