∎

University of Engineering and Technology, Lahore, Pakistan

Tel: +92-42-37951901

22email: irfan.yousuf@uet.edu.pk

I. Anwer 33institutetext: Department of Transportation Engineering and Management

University of Engineering and Technology, Lahore, Pakistan

M. Abid 44institutetext: Department of Computer and Information Sciences

Pakistan Institute of Engineering and Applied Sciences, Islamabad, Pakistan

An Empirical Study of Compression-friendly Community Detection Methods

Abstract

Real-world graphs are massive in size and we need a huge amount of space to store them. Graph compression allows us to compress a graph so that we need a lesser number of bits per link to store it. Of many techniques to compress a graph, a typical approach is to find clique-like caveman or traditional communities in a graph and encode those cliques to compress the graph. On the other side, an alternative approach is to consider graphs as a collection of hubs connecting spokes and exploit it to arrange the nodes such that the resulting adjacency matrix of the graph can be compressed more efficiently. We perform an empirical comparison of these two approaches and show that both methods can yield good results under favorable conditions. We perform our experiments on ten real-world graphs and define two cost functions to present our findings.

Keywords:

Graph Compression Community Detection Node Ordering1 Introduction

A graph illustrates interconnections among entities. Mathematically, graphs are structures that represent pairwise relations between objects. A graph has two main components: nodes; which represent objects, edges; that represent relationships between nodes. A graph can have millions or even billions of nodes or edges in it and storage of such massive graphs require a huge amount of memory. To store big graphs, data compression is one of the possible techniques to reduce the number of bits required to represent a graph. Data compression can dramatically decrease the amount of storage a file takes up. However, the question is how can we compress graphs efficiently?

Many of the data reduction methods exploit the redundancy in data to compress it. A closer look at the well-known representations of graphs e.g., adjacency lists or adjacency matrix shows that we can exploit the redundancies in these representations to lower the number of bits required to store a graph (Adler and Mitzenmacher, 2001; Boldi and Vigna, 2004; Raghavan and Garcia-Molina, 2003; Randall et al., 2002; Suel and Yuan, 2001). Of the possible solutions, finding communities in a graph and then ordering the nodes such that the nodes with the same neighborhood are ordered in close proximity has shown good results (Buehrer and Chellapilla, 2008; Lim et al., 2014). We can say that the problems of compressing a graph and finding communities in it are closely related. If we can find good communities, we can compress the graph efficiently.

In this paper, we investigate the impact of community-based node ordering on graph compression. While research related to community detection dates back to the 70s in mathematical sociology and circuit design (Donath and Hoffman, 1972; Lorrain and White, 1971), Newman’s and Girvan’s work on modularity in complex systems just a couple of decades ago revitalized the field of community detection, making it one of the main pillars of network science research (Newman and Girvan, 2004; Newman, 2006b). Taking into account its importance, it is not surprising that many community detection methods have been developed (Girvan and Newman, 2002; Clauset et al., 2004; Blondel et al., 2008; Rosvall and Bergstrom, 2008; Raghavan et al., 2007; Newman, 2006a; Reichardt and Bornholdt., 2006; Pons and Latapy, 2005) using tools and techniques from variegated disciplines such as statistical physics, biology, applied mathematics, computer science, and sociology. Most recently, the authors (Lim et al., 2014) proposed a new approach to finding communities designed specifically for producing a compression-friendly ordering. The traditional community detection methods (Raghavan et al., 2007; Blondel et al., 2008; Clauset et al., 2004; Newman, 2006a; Rosvall and Bergstrom, 2008) focus on finding homogeneous regions in the graph so that nodes inside a region are tightly connected to each other than to nodes in other regions. However, the authors (Lim et al., 2014) go beyond the traditional methods and exploit the power-law degree distribution of real-world graphs to find compression-friendly communities.

In this work, we compare the compression ratios achieved by deploying some of the traditional state-of-the-art community detection methods to find communities (Girvan and Newman, 2002; Clauset et al., 2004; Blondel et al., 2008; Rosvall and Bergstrom, 2008; Raghavan et al., 2007; Newman, 2006a; Reichardt and Bornholdt., 2006; Pons and Latapy, 2005) versus the recent approach to find compression-friendly communities (Lim et al., 2014). Our purpose is twofold:

-

•

Comparing the different traditional community detection methods in terms of finding good communities for graph compression.

-

•

Comparing traditional approaches with the recent approach to community detection for better compression ratios.

The rest of the paper is organized as follows. In section 2, we provide some basic definitions to understand graphs and community detection methods. In section 3, we define graph compression and propose a naive node ordering scheme. In section 4, we present our findings. In section 5, we discuss the related work and conclude the paper in section 6.

2 Basic Definitions and Preliminaries

In this section, we provide some basic definitions and preliminaries.

2.1 Graphs and Their Representation

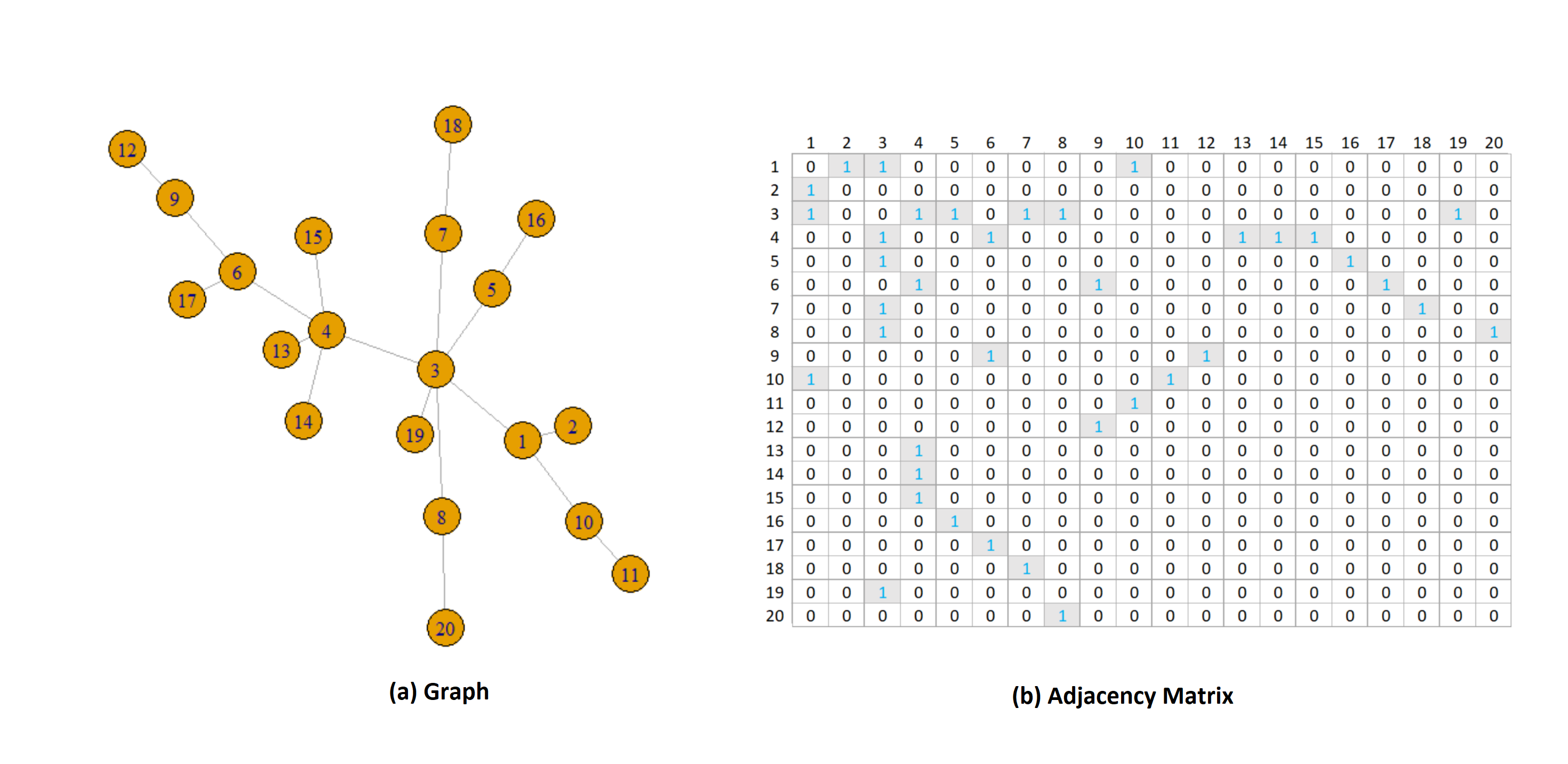

Graphs are data structures and offer a way of expressing relationships between objects. Graphs are used to represent networks, e.g., social networks, biological networks, collaboration networks, etc. A graph consists of nodes (or vertices) and edges (or links) between them to show relationships. Mathematically, a graph is written as where is the set of nodes and is the set of edges. There are different ways to represent a graph but in this paper, we will consider the adjacency matrix representation of a graph. Further, we consider only undirected graphs in this work. A small graph and its corresponding adjacency matrix are shown in Figure 1.

2.2 Community Detection Methods

A community in a graph forms a group of nodes such that these nodes have more connections with one another than with the nodes in the rest of the graph. Community structures are very common in real networks. Social networks include communities based on common location, interests, occupation, etc. In the last two decades, many methods have been proposed to detect non-overlapping communities in graphs. We select the following five traditional methods for this work. In addition, we compare these traditional methods with a recent approach, SlashBurn (Lim et al., 2014), specifically designed to find compression-friendly communities in a graph.

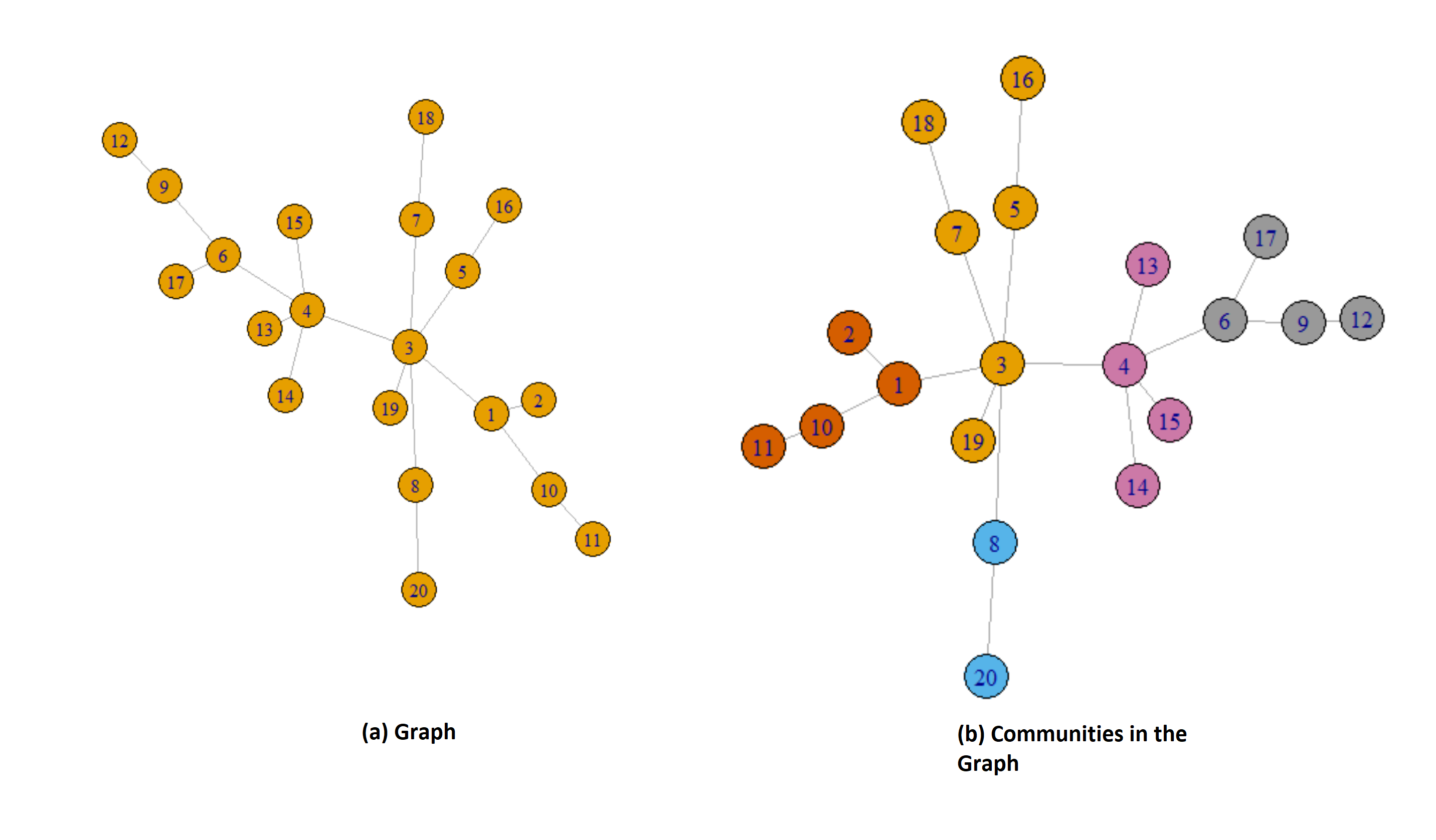

In Figure 2, we show a small graph and the communities found in it using the MultiLevel (Blondel et al., 2008) method of finding non-overlapping communities. We find five communities in the graph and show the nodes with a different color belonging to a community.

2.2.1 Label Propagation

This algorithm (Raghavan et al., 2007) assumes that each node in the network is assigned to the same community as the majority of its neighbors. At startup, it gives a distinct label to each node in the network. As the algorithm progresses, each node takes the label of the majority of its neighbors. The above step stops once each node has the same label as the majority of its neighbors.

2.2.2 MultiLevel

MultiLevel (Blondel et al., 2008) is a greedy approach to optimize modularity. This method first assigns a different community to each node of the network, then a node is moved to the community of one of its neighbors to improve modularity. The above step is repeated for all nodes until no further improvement can be achieved. Then each community is considered as a single node on its own and the second step is repeated until there is only a single node left or when the modularity cannot be increased in a single step.

2.2.3 Fast Greedy

Fast Greedy (Clauset et al., 2004) is also a greedy community detection method that optimizes the modularity score. It starts with a non-clustered initial assignment where each node belongs to a one-node community. Next, it computes the expected improvement of modularity for each pair of communities and merges them into one if that pair gives the maximum improvement of modularity. The above procedure is repeated until we cannot improve modularity by merging communities.

2.2.4 Leading Eigenvector

Leading Eigenvectors (Newman, 2006a) method optimizes the modularity by using the eigenvalues and eigenvectors of the modularity matrix. First, the leading eigenvector of the modularity matrix is calculated, and then the graph is divided into two parts such that modularity improvement is maximized based on the leading eigenvector. Repeatedly, the modularity contribution is calculated at each step in the subdivision of a network. It stops once the positive contribution to modularity is not possible.

2.2.5 Infomap

Infomap (Rosvall and Bergstrom, 2008) employs random walks to analyze the information flow through a network. Initially, it encodes the network into modules in a way that maximizes the amount of information about the original network. Then it sends the signal to a decoder through a channel with limited capacity. The decoder tries to construct a set of possible candidates for the original graph after decoding the message. The smaller the number of candidates, the more information about the original network has been transferred.

2.2.6 SlashBurn

In principle, SlashBurn (Lim et al., 2014) is a node-reordering algorithm specifically developed for graph compression. It goes beyond the traditional ‘caveman communities’ and finds communities by removing high degree nodes from the graph. The notion behind the algorithm is that removing the highest centrality nodes from the graph produces many small disconnected components that could be considered as a ‘community’. First, it removes high centrality nodes from the graph. Next, it reorders nodes such that high-degree nodes are assigned the lowest IDs, nodes from disconnected components are assigned the highest IDs, and nodes from the giant connected component are assigned the middle-range of IDs. In the next iteration, the process is repeated on the giant connected component. The process stops when the size of the giant component is smaller than the number of nodes to be deleted in the next iteration.

3 Graph Compression

The main objective of a graph compression algorithm is to reduce the number of bits required to store the graph. The recent research (Chierichetti et al., 2009; Boldi et al., 2011) shows that the ordering of nodes plays an important role in compressing graphs. Given a graph, we reorder the nodes such that a smaller number of bits are required to store the graph than the number of bits required for the unordered graph. Formally, we define our problem as:

Given a graph with adjacency matrix A, we find an ordering of nodes such that the storage cost function is minimized.

In general, the solution is to arrange the entries in the adjacency matrix so that we could group them in such a way that a group has (ideally) only 1s or 0s in it. For this purpose, we reorder the nodes such that the nodes belonging to a cluster (or community) get consecutive positions in the ordering. With such a compression-friendly ordering, we can compress the adjacency matrix by omitting the groups that have only 0s in it and compressing only those groups (or blocks) that have 1s in it.

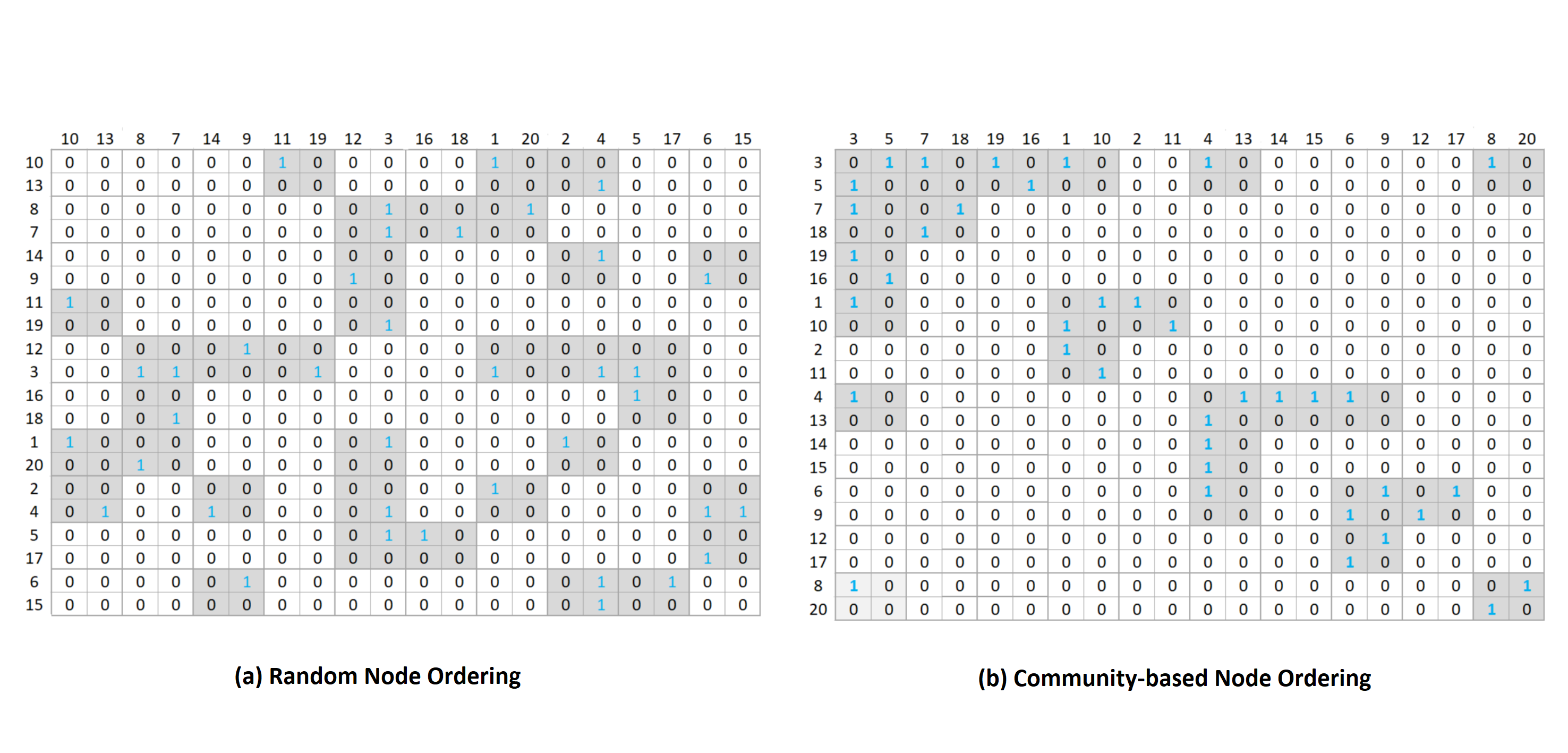

In Figure 3, we show the adjacency matrix of a small graph (see Fig. 1) with (a) random ordering of nodes and (b) compression-friendly node ordering. We apply 2 by 2 blocks to cover all the nonzero elements inside the matrix. we find that the right matrix requires a smaller number of blocks (24 blocks) than the left matrix (34 blocks). It is clear that the matrix on the right has a lesser number of non-empty blocks than the left matrix and therefore, we need a lesser number of bits to store the right matrix than the left one although both matrices are of the same graph.

3.1 Node Ordering

In the problem statement, we mentioned that we need to find a node ordering that reduces the storage cost function. We compare the five traditional community detection methods, i.e., Label Propagation, MultiLevel, Fast Greedy, Leading Eigenvectors, and Infomap with the latest node ordering and community detection method, i.e., SlashBurn. For the traditional community detection methods, we propose a naive node ordering as follows.

-

•

In the first step, we find communities in a graph using one of the community detection methods.

-

•

In the second step, we order the nodes in descending order of community sizes they belong to, i.e., the nodes belonging to the largest community are at the top of the ordered list whereas the nodes of the smallest community sink to the bottom of the ordered list.

-

•

In the last step, we order the nodes within a community in the descending order of their degrees.

To sum up, we order the nodes in descending order of community sizes and within a community, we order in descending order of degrees of nodes. The adjacency matrix of Figure 3(b) follows this node ordering.

To compare with the SlashBurn method, we follow the same node ordering as described in the original paper (Lim et al., 2014). The SlashBurn method removes hub nodes to create spokes and a giant connected component (GCC). The next iteration starts on the GCC. The method works iteratively until we reach a threshold. The method exploits the hubs to define alternative communities different from the traditional communities. Although this method achieves good compression ratios, there are two points to consider; 1) The graph shattering process to remove the hubs is computationally expensive. 2) The value of in the k-hubset, the set of nodes with top k highest centrality scores, and the value of , block width, do not have optimal values that will suit different types of real-world graphs.

3.2 Cost Functions

The main purpose of compressing a graph is to reduce the storage cost function. If we are given the adjacency matrix of a graph with compression-friendly node ordering, the cost to store this matrix should be minimum. In other words, we will need a lesser number of bits per link to store the graph. We define the following two cost functions as described in (Lim et al., 2014).

3.2.1 Number of Non-empty Blocks

Let be the width of a square block to cover all the nonzero elements in a matrix . If we use by blocks to cover all the 1s in the adjacency matrix , then the first cost function is defined as the number of nonempty blocks in .

| (1) |

where is the width of a block. It is obvious that a compression-friendly node ordering should give a smaller number of non-empty blocks than random node ordering. For example, the right matrix in Figure 3 has 24 non-empty blocks compared to the 34 blocks of the left matrix.

3.2.2 Number of Bits per Link

The second cost function uses the number of bits per link (or edge) required to encode the adjacency matrix using a block-wise encoding. We define this cost function assuming a compression method achieving the information-theoretic lower bound (Chakrabarti et al., 2004).

| (2) |

where is the number of nodes, is the set of non-empty blocks of width , is the number of 1s within block and the function is the binary Shannon entropy function defined as:

| (3) |

The first term in Equation (2) gives the number of bits to encode meta-information of blocks, i.e., row and column IDs of blocks whereas the second term calculates the number of bits to store 1s in the nonempty blocks. For example, we need 248 bits to store the right matrix of Figure 3 while the left matrix will consume 340 bits on storage.

4 Experimental Setup and Results

We apply the traditional community detection methods to find communities in a graph and then order the nodes as described above to get a compression-friendly adjacency matrix. Similarly, we also order the nodes as described in the SlashBurn method. We then find both the cost functions to compare these methods. The graphs used in our experiments are described in Table 1. The graphs are publicly available (Konect, 2015; Rossi and Ahmed, 2015).

| DataSet | Nodes | Edges | Description |

|---|---|---|---|

| 63,731 | 817,089 | A friendship network extracted from facebook consisting of people with edges representing friendship ties. | |

| BlogCatalog | 88,784 | 2,093,193 | BlogCatalog is a social blog directory. The dataset contains all links among users. |

| LiveMocha | 104,103 | 2,193,081 | LiveMocha is the largest language learning community in the world. The dataset contains all links among users. |

| Academia | 200,188 | 1,022,484 | Academia.edu is a platform for academics to share research papers. The dataset is a social network of its users. |

| GooglePlus | 211,336 | 1,141,861 | GooglePlus is a social networking service offered by Google. The network is a friendship network among its users. |

| TwitterHiggs | 456,635 | 12,508,466 | The dataset is used to study the spreading process on Twitter before, during and after the announcement of the discovery of Higgs Boson in 2012. |

| Delicious | 536,108 | 1,365,959 | Delicious is a social bookmarking service for storing, sharing and discovering web bookmarks. |

| Last.fm | 1,191,805 | 4,519,330 | This is social network of friendships of last.fm music website. The nodes are users and edges represent friendships among them. |

| YouTube | 1,134,890 | 2,987,624 | Friendship network of video sharing website YouTube. |

| Hyves | 1,402,673 | 2,777,919 | This is a social network of a Dutch online community. Nodes are users and Egdes are their friendships. |

4.1 Counting Non-empty Blocks

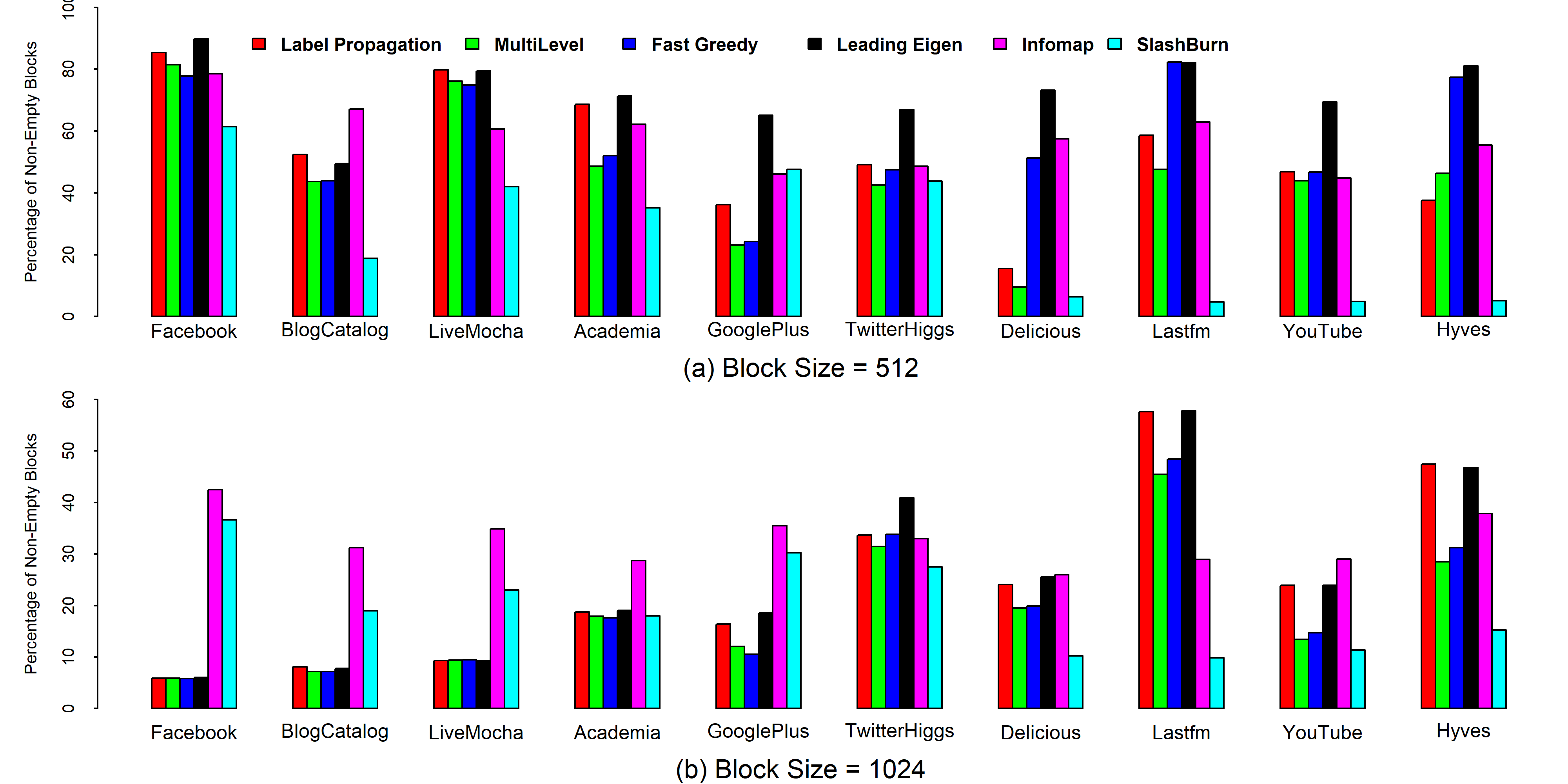

In this experiment, we count the number of non-empty blocks in the adjacency matrix of each graph for all the methods. We set the block width and so that we also see the effect of block size on this cost function. We present the results in Figure 4 in the form of bar graphs where the height of a bar shows the percentage of non-empty blocks. In Figure 4(a), we see that is minimum in the SlashBurn method in all the datasets except GooglePlus. In the last four datasets it has a very small value while in other datasets, it gives relatively higher values. On the other side, in Figure 4(b), is minimum in only four datasets in the SlashBurn method. Although SlashBurn was specially designed to produce a compression-friendly node ordering, it seems that it does not minimize the first cost function for any value of . This experiment also shows that the traditional community detection methods combined with a naive node ordering can yield a minimum number of non-empty blocks in the adjacency matrix of a graph.

| DataSet | Label Propagation | Multi Level | Fast Greedy | Leading Eigen | InfoMap | SlashBurn |

|---|---|---|---|---|---|---|

| 10.66 | 9.99 | 10.39 | 10.58 | 11.67 | 10.36 | |

| BlogCatalog | 8.37 | 8.27 | 8.35 | 8.25 | 8.26 | 6.68 |

| LiveMocha | 11.76 | 11.42 | 11.52 | 11.61 | 10.66 | 9.37 |

| Academia | 14.42 | 13.24 | 13.97 | 15.29 | 15.01 | 13.06 |

| GooglePlus | 9.14 | 9.24 | 9.85 | 13.14 | 12.34 | 11.12 |

| TwitterHiggs | 11.08 | 10.59 | 11.36 | 12.33 | 9.14 | 10.47 |

| Delicious | 14.49 | 14.09 | 14.36 | 15.76 | 18.34 | 12.77 |

| Last.fm | 18.61 | 17.13 | 17.83 | 18.70 | 20.84 | 13.09 |

| YouTube | 13.67 | 12.99 | 13.40 | 15.31 | 17.84 | 12.73 |

| Hyves | 18.29 | 16.44 | 17.19 | 19.09 | 15.47 | 14.53 |

| Average | 13.05 | 12.34 | 12.82 | 14.01 | 13.96 | 11.42 |

| DataSet | Label Propagation | Multi Level | Fast Greedy | Leading Eigen | Infomap | SlashBurn |

|---|---|---|---|---|---|---|

| 9.94 | 9.56 | 9.65 | 9.86 | 10.01 | 10.35 | |

| BlogCatalog | 6.83 | 7.54 | 7.18 | 6.95 | 7.44 | 6.88 |

| LiveMocha | 9.43 | 9.62 | 9.62 | 9.53 | 9.68 | 9.38 |

| Academia | 12.90 | 12.18 | 12.62 | 13.51 | 12.71 | 12.91 |

| GooglePlus | 8.69 | 8.92 | 8.83 | 11.05 | 8.82 | 10.81 |

| TwitterHiggs | 9.48 | 9.38 | 9.72 | 10.45 | 9.41 | 10.39 |

| Delicious | 13.08 | 12.56 | 12.50 | 14.34 | 14.63 | 12.82 |

| Last.fm | 13.92 | 12.75 | 13.24 | 13.94 | 16.95 | 12.88 |

| YouTube | 13.22 | 12.67 | 12.92 | 14.49 | 15.78 | 12.51 |

| Hyves | 15.62 | 13.46 | 13.97 | 15.29 | 17.47 | 13.90 |

| Average | 11.31 | 10.86 | 11.02 | 11.94 | 12.29 | 11.28 |

4.2 Calculating Bits per Link

In this experiment, we calculate the number of bits per link required to store a graph as per Equation (2) under two block widths and . We present the results in Tables 2 and 3. We see that when , on average, SlashBurn achieves the minimum number of bits per link to store a graph. However, when MultiLevel yields the best results on average. These results show that SlashBurn does not perform consistently and the number of bits achieved by it depends on the value of . In other words, we need to check the value of if we want to achieve good results with the SlashBurn. The same is true for other methods. It seems that going beyond caveman or traditional communities does not always offer benefits rather a good node ordering scheme combined with a traditional community detection method and an optimal value of can yield good compression ratios.

5 Related Work

There has been a lot of works on community detection and graph compression. A community detection method aims at finding homogeneous regions in the graph so that inter-region edges between different regions are minimized (Raghavan et al., 2007; Blondel et al., 2008; Clauset et al., 2004; Newman, 2006a; Rosvall and Bergstrom, 2008). In this context, network modularity is a closely related topic as it measures the strength of division of a graph into groups or communities. This is the reason these two topics usually go hand in hand (Newman, 2006b). Community detection in graphs or networks has applications in many areas of research including finance (Fenn et al., 2012; Wu et al., 2015), medical (Meunier et al., 2009; Alexander-Bloch et al., 2012), criminology (Sarvari et al., 2014; Pinheiro, 2012), and transportation (Oubaalla and Benhlima, 2018; Chen and Hu, 2013), to name a few. Graph compression has also been an active research topic and several techniques have been developed to compress large graphs. The authors (Boldi and Vigna, 2004) proposed graph compression techniques to encode large web graphs whereas (Chierichetti et al., 2009) extended the work to include social networks. These studies apply reference encoding to compress adjacency lists of big graphs. The authors (Maneth and Peternek, 2016) compress graphs by grammar while the study (Grabowski and Bieniecki, 2011) merges adjacency lists of a graph to store it efficiently.

6 Conclusion

In this work, we propose a naive node ordering scheme and show that we can achieve good compression ratios when the nodes belonging to a traditional community are arranged according to this naive node ordering. We consider five traditional community detection methods and a recent approach that exploits hubs and spokes in a graph to define alternative communities. We define two cost functions to measure the goodness of a compression-friendly community detection scheme. We perform experiments on ten real-world graphs and show that clique-like caveman communities are also compression-friendly when combined with a good node ordering. Both approaches can produce good results under suitable conditions.

References

- Adler and Mitzenmacher (2001) Adler M, Mitzenmacher M (2001) Towards compressing web graphs. In: Proceedings of the Data Compression Conference, IEEE Computer Society, Washington, DC, USA, DCC ’01, pp 203–212

- Alexander-Bloch et al. (2012) Alexander-Bloch A, Lambiotte R, Roberts B, Giedd J, Gogtay N, Bullmore E (2012) The discovery of population differences in network community structure: new methods and applications to brain functional networks in schizophrenia. Neuroimage 59(4):3889–3900

- Blondel et al. (2008) Blondel VD, Guillaume JL, Lambiotte R, Lefebvre E (2008) Fast unfolding of communities in large networks. Journal of Statistical Mechanics: Theory and Experiment p P10008

- Boldi and Vigna (2004) Boldi P, Vigna S (2004) The webgraph framework i: Compression techniques. In: Proceedings of the 13th International Conference on World Wide Web, pp 595–602

- Boldi et al. (2011) Boldi P, Rosa M, Santini M, Vigna S (2011) Layered label propagation: A multiresolution coordinate-free ordering for compressing social networks. In: Proceedings of the 20th International Conference on World Wide Web, pp 587–596

- Buehrer and Chellapilla (2008) Buehrer G, Chellapilla K (2008) A scalable pattern mining approach to web graph compression with communities. In: Proceedings of the 2008 International Conference on Web Search and Data Mining, pp 95–106

- Chakrabarti et al. (2004) Chakrabarti D, Papadimitriou S, Modha DS, Faloutsos C (2004) Fully automatic cross-associations. In: Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, p 79–88

- Chen and Hu (2013) Chen H, Hu Y (2013) Finding community structure and evaluating hub road section in urban traffic network. Procedia-Social and Behavioral Sciences 96:1494–1501

- Chierichetti et al. (2009) Chierichetti F, Kumar R, Lattanzi S, Mitzenmacher M, Panconesi A, Raghavan P (2009) On compressing social networks. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 219–228

- Clauset et al. (2004) Clauset A, M N, Moore C (2004) Finding community structure in very large networks. Physical Review E 70:066111

- Donath and Hoffman (1972) Donath W, Hoffman A (1972) Algorithms for partitioning graphs and computer logic based on eigenvectors of connection matrices. IBM Technical Disclosure Bulletin 15(3):938–944

- Fenn et al. (2012) Fenn DJ, Porter MA, Mucha PJ, McDonald M, Williams S, Johnson NF, Jones NS (2012) Dynamical clustering of exchange rates. Quantitative Finance 12(10):1493–1520

- Girvan and Newman (2002) Girvan M, Newman ME (2002) Community structure in social and biological networks. Proc Natl Acad Sci U S A 99(12):7821–7826

- Grabowski and Bieniecki (2011) Grabowski S, Bieniecki W (2011) Merging adjacency lists for efficient web graph compression. In: In Man-Machine Interactions 2

- Konect (2015) Konect (2015) Network dataset – KONECT. http://konectuni-koblenzde/networks

- Lim et al. (2014) Lim Y, Kang U, Faloutsos C (2014) Slashburn: Graph compression and mining beyond caveman communities. IEEE Transactions on Knowledge and Data Engineering 26(12):3077–3089

- Lorrain and White (1971) Lorrain F, White HC (1971) Structural equivalence of individuals in social networks. The Journal of Mathematical Sociology 1(1):49–80

- Maneth and Peternek (2016) Maneth S, Peternek F (2016) Compressing graphs by grammars. In: 2016 IEEE 32nd International Conference on Data Engineering (ICDE), pp 109–120

- Meunier et al. (2009) Meunier D, Lambiotte R, Fornito A, Ersche K, Bullmore ET (2009) Hierarchical modularity in human brain functional networks. Frontiers in neuroinformatics 3:37

- Newman (2006a) Newman ME (2006a) Finding community structure in networks using the eigenvectors of matrices. Physical Review E 74:036104

- Newman (2006b) Newman MEJ (2006b) Modularity and community structure in networks. Proceedings of the National Academy of Sciences 103(23):8577–8582

- Newman and Girvan (2004) Newman MEJ, Girvan M (2004) Finding and evaluating community structure in networks. Phys Rev E 69(2)

- Oubaalla and Benhlima (2018) Oubaalla W, Benhlima L (2018) An overview of community detection methods in transportation networks. In: Proceedings of the 12th International Conference on Intelligent Systems: Theories and Applications, SITA’18

- Pinheiro (2012) Pinheiro CAR (2012) Community detection to identify fraud events in telecommunications networks. In: Customer Intelligence

- Pons and Latapy (2005) Pons P, Latapy M (2005) Computing communities in large networks using random walks. Computer and Information Sciences pp 284–293

- Raghavan and Garcia-Molina (2003) Raghavan S, Garcia-Molina H (2003) Representing web graphs. In: Proceedings 19th International Conference on Data Engineering, pp 405–416

- Raghavan et al. (2007) Raghavan U, Albert R, Kumara S (2007) Near linear time algorithm to detect community structures in large-scale networks. Physical Review E 76:036106

- Randall et al. (2002) Randall KH, Stata R, Wickremesinghe RG, Wiener JL (2002) The link database: fast access to graphs of the web. In: Proceedings DCC 2002. Data Compression Conference, pp 122–131

- Reichardt and Bornholdt. (2006) Reichardt J, Bornholdt S (2006) Statistical mechanics of community detection. Physical Review E 74:016110

- Rossi and Ahmed (2015) Rossi RA, Ahmed NK (2015) The network data repository with interactive graph analytics and visualization. http://networkrepositorycom

- Rosvall and Bergstrom (2008) Rosvall M, Bergstrom C (2008) Maps of random walks on complex networks reveal community structure. Proceedings of the National Academy of Sciences 105(4):1118 – 1123

- Sarvari et al. (2014) Sarvari H, Abozinadah E, Mbaziira A, Mccoy D (2014) Constructing and analyzing criminal networks. In: 2014 IEEE Security and Privacy Workshops, pp 84–91

- Suel and Yuan (2001) Suel T, Yuan J (2001) Compressing the graph structure of the web. In: Data Compression Conference

- Wu et al. (2015) Wu S, Tuo M, Xiong D (2015) Community structure detection of shanghai stock market based on complex networks. In: Proceedings of 4th International Conference on Logistics, Informatics and Service Science, pp 1661–1666