An End-to-End Network for Upright Adjustment of Panoramic Images

Abstract

Nowadays, panoramic images can be easily obtained by panoramic cameras. However, when the panoramic camera orientation is tilted, a non-upright panoramic image will be captured. Existing upright adjustment models focus on how to estimate more accurate camera orientation, and attribute image reconstruction to offline or post-processing tasks. To this end, we propose an online end-to-end network for upright adjustment. Our network is designed to reconstruct the image while finding the angle. Our network consists of three modules: orientation estimation, LUT online generation, and upright reconstruction. Direction estimation estimates the tilt angle of the panoramic image. Then, a converter block with upsampling function is designed to generate angle to LUT. This module can output corresponding online LUT for different input angles. Finally, a lightweight generative adversarial network (GAN) aims to generate upright images from shallow features. The experimental results show that in terms of angles, we have improved the accuracy of small angle errors. In terms of image reconstruction, In image reconstruction, we have achieved the first real-time online upright reconstruction of panoramic images using deep learning networks.

Index Terms:

upright adjustment, LUT, panoramic imagesI Introduction

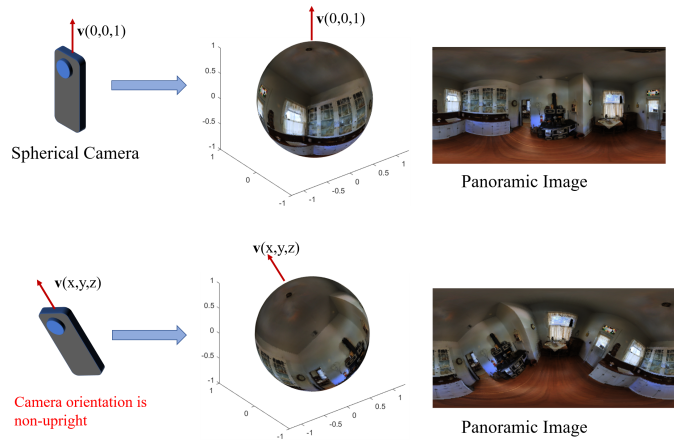

To realize immersive virtual/augmented reality, we often need to capture the surrounding scenes. The most convenient way to capture the surrounding scenes may be to use a spherical camera with 360 degrees field of view, as shown in Fig1. The spherical images captured by such a spherical camera are also widely used in virtual tourism [1, 2] depth estimation [3, 4, 5, 6], layout reconstruction [7], cultural heritage [8], recording crime scenes [9], etc. To use a spherical image, we need to know its orientation; for example, when we extend a spherical image onto an equirectangular image, the appearance of the equirectangular image will change due to a different orientation (see Fig1). Usually, similar to the research mentioned above, we assume that the spherical/equirectangular images are horizontal, which means that the horizon appears as a horizontal line in the images. For a spherical/equirectangular image captured with any orientation, we need to rectify it beforehand so that it has a canonical orientation. The processing is called the upright adjustment of panoramic images.

In a more theoretical way, as Fig1 shows, we used a vector to represent the camera orientation, denoted by . When the camera orientation is opposite to the direction of gravity, i.e.,, we can capture an upright spherical image. When the camera orientation is tilted, i.e.,, it will lead to a nonupright orientation of the captured image. That is, the canonical orientation is represented as , and the upright adjustment of panoramic images is to rotate an inclined image so that its orientation vector equals to . Since the canonical orientation can be obtained via a rotation with two rotational components, pitch and roll angles, the processing of upright adjustment of panoramic images involves two subtasks: rotational angle (pitch and roll) estimation and image remapping via rotation operation.

Recent studies on upright adjustment, either traditional feature-based methods or deep-learning-based methods, have until now focused on estimating the orientation of a panoramic image. Image reconstruction has not been much discussed and is usually processed offline using image remapping. An ideal situation would be to have a network that does upright adjustments directly without relying on post-processing.

To achieve this goal, in this paper, we propose an efficient end-to-end learning network to carry out upright adjustment of panoramic images, in which two subtasks, rotational angle (pitch and roll) estimation and image remapping via rotation operation, are completed by a single neural network. Our network is composed of three modules: orientation estimation, LUT online generation, and upright reconstruction. Our main contributions are as follows:

-

•

In contrast with the existing methods that output angles only and generate upright images offline, we are the first to propose a real-time online end-to-end solution to the best of our knowledge.

-

•

Our orientation estimation module has made some progress in the accuracy of small angle errors.

-

•

Upright reconstruction based on a lightweight cGAN is proposed to compensate for the error resulting from interpolation remapping to improve the fidelity of upright images.

-

•

As shown in the experiments, our proposed method can carry out upright adjustment of panoramic images online with about 11 fps and 429.6 MB storage. This work opens up the possibility of real-time upright adjustments on mobile devices.

II Related Works

In this section, we introduce the related works, focusing on inclination estimation and Large-scale dataset generation.

II-A Inclination Estimation of Panoramic Images

The related works on inclination estimation of panoramic images can be divided into traditional feature-based methods and deep-learning-based methods.

In traditional feature-based methods base on the observation that vertical lines should appear vertical and the horizon should appear horizontal in upright adjusted panoramic images. Martins et al.[10] proposed a probabilistic sequential orientation estimation method based on a Manhattan world assumption likelihood model. However, there are many scenarios that do not satisfy the Manhattan world assumption. Demonceaux et al.[11, 12] estimated the horizon line by maximizing a criterion for sky/ground photometric separation and computing the upright orientation by finding the horizon line. Because this method relies on the horizon line, it is only for cases where there is a clear distinction between the sky and the ground. Bazin et al. [13, 14] proposed a line-based model and vanishing points model. Jung et al. [15] proposed an automatic method for upright adjustment of spherical panorama images without any prior information, such as depths and gyro sensor data. They take the Atlanta world assumption and use the horizontal and vertical lines in the scene to formulate a cost function for upright adjustment. The last two methods rely on the ability to distinguish between vertical structures and vanishing points. However, the apparent weak point of this kind of methods is that it is difficult to applied to natural landscape.

Recently, deep-learning-based methods are reported to carry out the inclination estimation of images. Fischer et al. [16] proposed using a convolutional network to estimate image orientation. Olmschenk et al. [17] proposed using convolutional neural networks (CNNs) to automatically determine the pitch and roll of a camera using a single, scene agnostic, 2D image. However, these two methods are not applied to panoramic images of upright adjustment. Joshi and Guerzhoy [18] apply convolutional neural networks (CNNs) to the problem of image orientation detection in the context of determining the correct orientation (from 0, 90, 180, and 270 degrees). Shima et al. [19] proposed an orientation detection method for face images that relies on image category classification by deep learning. Rotated images are classified into four classes, namely, , clockwise, counterclockwise, or . However, these two methods only estimate specific angles and only for perspective images. Jeon et al. [20] proposed an upright adjustment framework based on a CNN. Instead of directly predicting the 3D rotation of the camera on a given panorama image, their method estimates the rotation by analyzing the projected 2D rotations of multiple images sampled from the panorama. Although their study was a task of panorama estimation, it did not directly use the network to estimate the panorama angle. Jung et al. [21] proposed a method that consists of two modules (a CNN and a graph convolutional network (GCN)).

As for the state-of-the-art methods for upright adjustment of panoramic images in recent years. Deep360up [22], uses DenseNet with a fully connected layer to predict the tilt direction of a spherical image, that is, the camera orientation v. The model was trained using a single angle loss, and the input of a panoramic image outputs the orientation. In [23], the Coarse2Fine approach was proposed to divide the prediction of panoramic image orientation into two stages: the first stage adjusted the image within , and the second stage refined the prediction accuracy within . The output of this model was still the predicted angle, and the final predicted angle was affected by the first stage. In [24], the camera orientation was predicted through the segmentation network combined with the vanishing point image and then adjusts the nonupright spherical image through the spherical rotation module. Although the above methods have greatly contributed to improving the accuracy of orientation estimation, they still divide the upright adjustment task into two steps: rotation angle estimation and panoramic image remapping. Their research only focuses on improving the accuracy of the angle estimation.

II-B Large-Scale Dataset Generation

In the upright adjustment of panoramic images, there is no large dataset of nonupright panoramic images for deep learning training. Therefore, as early as in previous research, Jung et al. [22] proposed methods to generate large-scale datasets. The method is divided into three steps: 1) project the input equirectangular image onto the unit sphere 2) rotate the spherical image by fixed angles, 3) back-project the rotated spherical image back into the 2D plane. And throughout the process, to speed up the image remapping process. The above three steps can be accomplished by using pre-calculated LUTs (Look Up Tables). Since the camera rotation has only two degrees of freedom affecting the tilt, two angle parameters (pitch and roll are used in this article, the same as existing methods) are used to describe the rotation, i.e., an LUT for the remapping of the upright adjustment panoramic images is generated from two rotational angles. All LUTs will be obtained after determining the angle range and angle interval. Next use a random LUT to rotate the upright image to get a non-upright image.

In the previous learning-based models, this method was used to generate dataset, so the method of generating dataset in this paper is consistent with it.

III End-to-end Learning Network for Panoramic Image Upright Reconstruction

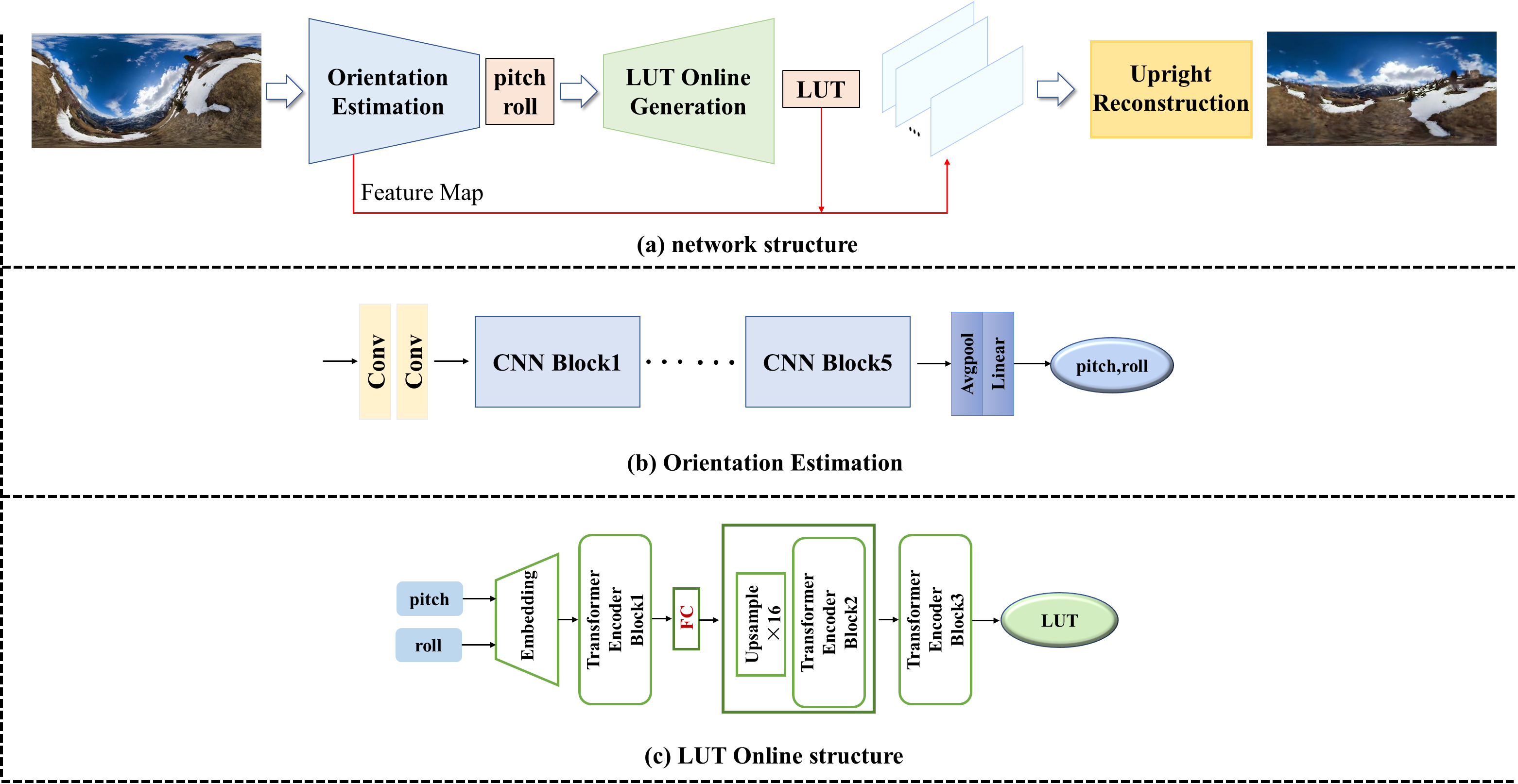

Our proposed end-to-end learning network for panoramic image upright reconstruction consists of three modules: orientation estimation, LUT online generation, and upright reconstruction. The data flow direction of the entire network is shown in Fig2(a). The nonupright image is input to the orientation estimation module to obtain pitch and roll. The whole process is represented as:

| (1) |

where represents the orientation estimation module. Next, the estimated angles are input to the LUT generation module to obtain the corresponding 256x512 size LUT, i.e.:

| (2) |

where represents the LUT online generation module. Then, the nonupright shallow feature maps in the orientation estimation module are rotated with the generated LUT to obtain upright feature maps , i.e.:

| (3) |

Finally, the upright feature maps are input to the upright reconstruction module to generate the final upright image with high fidelity, i.e.:

| (4) |

where represents the image generation module. We will explain the details of the three modules in later sections.

III-A Orientation Estimation

Similar to other methods[22, 23, 24] for upright adjustment mentioned in SectionII-A, orientation estimation is an indispensable part of the upright adjustment task. Therefore, we designed an orientation estimation to provide input for the subsequent LUT generation module.

SectionII-B mentioned that the rotation for upright adjustment is determined by two degrees of freedom, so in this paper, the orientation estimation of the camera is transformed into pitch and roll. Previous work has demonstrated that convolutional neural networks can achieve orientation estimation, so it is reasonable to assume that features can be extracted by convolution, and the features naturally contain angle information. As the number of network layers increases, the network gradually obtains higher-level features, and the final angle is obtained by classifying the high-level features through the fully connected layer. Therefore, we design a pure convolution structure for estimating pitch and roll. The structure of the inclination estimation module is shown in Fig2(b) and consists of five CNN blocks, an average pooling layer, and a fully connected layer. First, the image goes through two convolutions, which mainly represent the image with shallow features, such as some lines and contours, etc. These shallow features will be used as input in the subsequent upright reconstruction module.(SectionIII-C for details) Then, the shallow features enter five CNN blocks, each of which consists of one max pooling and two convolutions. After each CNN block, the feature map size is reduced by half, and the number of channels is doubled. The input image size is 256x512, and the size of the feature map becomes 8x16 after 5 CNN blocks. The feature map goes through the average pooling layer and the fully connected layer to return two values between 0 and 1. These two values correspond to pitch and roll, respectively. Because the tilt angle generally does not exceed for application, the pitch and roll of the predicted angles were calculated by .

In this module, we use smooth L1 loss[25] to calculate the loss, considering that the difference between the true value and the predicted value is not greater than 1. To ensure that the gradient is not too small in the initial phase of training, the coefficient is added. In summary, the angle loss is denoted as:

| (5) |

where represents the true value of pitch, represents the predicted pitch of the model, represents the true value of roll, represents the predicted roll of the model, and .

III-B LUT Online Generation

We aim to propose an end-to-end solution to the upright alignment task, but in our practice we find that convolutions alone cannot directly generate upright panoramic images. That is, the nonlinear transformation from a non-upright image to an upright image on a two-dimensional plane cannot be easily learned by a convolutional network. Inspired by methods for generating large-scale datasets, location information that cannot be easily learned by the network can be provided by LUTs.

Although the LUT has good rotational efficiency, the LUT has the obvious disadvantage of requiring a large amount of memory. Because the size and number of LUT are determined by the image size and angle respectively. To balance the rotation efficiency and space occupancy, we propose a solution for generating LUTs. From the point of view of the traditional algorithm, the parameters of the LUT generation process are only roll and pitch, i.e., different LUTs are obtained by different roll and pitch. The size of the LUT is determined by the image/feature map that needs to be rotated. In this paper, we need to rotate the feature map of 256x512 size, so we need to complete the task of LUT generation of 256x512 size. Overall, a LUT generation of size 256x512 needs to be achieved. From the point of view of data volume, the estimated angle would be transformed to . Although a unique LUT can be obtained after determining the angle, it is very difficult for the network to learn the direct mapping relationship . This difficulty stems from the fact that from to , the feature dimension span is too large, which will most likely lead to nonconvergence when training the network. Referring to the structure of CNN, which implemented downsampling followed by convolution to get the features, perhaps LUT online generation can be done in a similar reverse way to upsample the final 256x512 size LUT followed by feature encoder. Based on the above idea, our LUT online generation module can be divided into two steps:

-

•

Implementing to , a 16x32 LUT can provide a smooth transition.

-

•

Carry out upsampling with feature encoder: to .

The LUT online generation network structure is shown in Fig2(c). Next, we introduce the network details.

Embedding As shown in Fig2(c), input pitch and roll first into embedding. In fact, we use embedding to implement to . Embedding is used in natural language processing (NLP) to encode words and represent unstructured information as structured information. We embed pitch and roll values such as words in NLP, specifically embedding the two angles into two 1D vectors of 512 lengths, i.e.,

| (6) |

and the whole embedding process is learnable. Thus, during the training process, embedding is adjusted according to the loss to obtain a more suitable vector representation angle. Since the pitch and roll will obtain different LUTs if the values are exchanged, the angle position information is added after encoding the angle, where takes the value 1 or 2, corresponding to pitch and roll, respectively, and the final output of the network is obtained:

| (7) |

is input into the transformer encoder block.

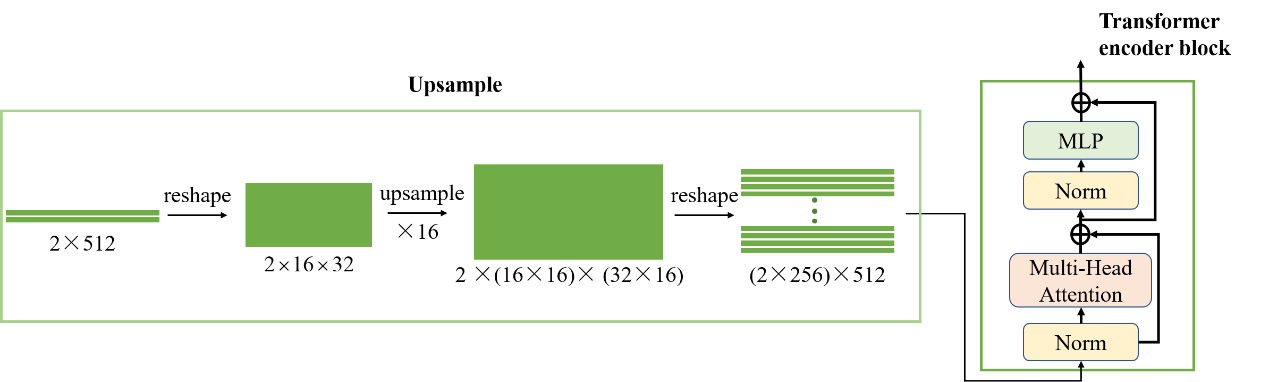

Transformer encoder block The tremendous success of Transformer in natural language processing (NLP) has proven its superiority in processing sequences [b27, 28]. Recent research has proven that the encoder of a transformer as the network backbone can have excellent performance in image generation tasks [29, 30]. In this paper, for the sequence obtained by embedding, our network backbone takes the encoder structure of transformer (Fig2(c)) as well as [31, 32]. The transformer encoder block consists of two main sublayers, the multihead self-attention mechanism and the fully connection feed-forward network, with a residual connection [33] used around each of the two sublayers, followed by layer normalization [34](the transformer encoder block details are shown on the right of Fig3). In transformer encoder block1 of the module, the correlation between two vectors will be calculated by the multihead self-attention mechanism, and then the vectors will be reencoded by the residual connection. The correlation between the i and j vectors is denoted by , where and can only take the values 1 and 2 because our network has only two vectors used for input before upsampling (as shown in Fig2(c), there are only two vectors obtained by embedding pitch and roll before upsampling). For the vectors and input into the multihead self-attention mechanism, the recoded and are denoted as:

| (8) |

| (9) |

where and are learnable parameters. and go through the feedforward network and the residual block to obtain the output of block 1. Although the two are represented as vectors related to each other, the output after these two vectors is still relatively independent. Since the LUT itself needs to be determined by two angles together and the angles are encoded into two vectors, that is, they need to be determined by two vectors together, we fuse the features of the two vectors through a layer of a fully connected layer (experiments show its necessity). At this time, the data volume of 2×512 is obtained after the first block.

To generate a LUT of size 2×256×512, we upsample the data output from the first block. The details of the upsampling are shown in Figure 3. Reshape the sequence into 2×16×32 form before upsampling, and then perform ×16 upsampling. To maintain the consistency of the ransformer encoder, we reshape the representation back to the sequence. Two more transformer encoders are added for feature learning.

In the LUT online generation module, we use L1loss for training, considering that the range of values stored inside LUT is , so the final loss multiplication factor amplifies the loss value to help training. The loss is denoted as:

| (10) |

where , denotes the real value of LUT and the generated value of LUT, respectively, and the coefficient .

III-C Upright Reconstruction

| Percentage of Predict Angle’s Deviation in Degrees | |||||||

| Dataset | Method | ||||||

| Sun360 | Ours | 29.9 | 65.3 | 80.3 | 86.3 | 89.2 | 95.2 |

| Segmentation[24](2020) | 19.7 | 53.6 | 75.5 | 87.2 | 92.6 | 98.4 | |

| Deep360up[22](2019) | 7.1 | 24.5 | 43.9 | 60.7 | 74.2 | 97.9 | |

| Coarse2Fine[23](2019) | 30.9 | 51.7 | 65.9 | 74.1 | 79.1 | 91.0 | |

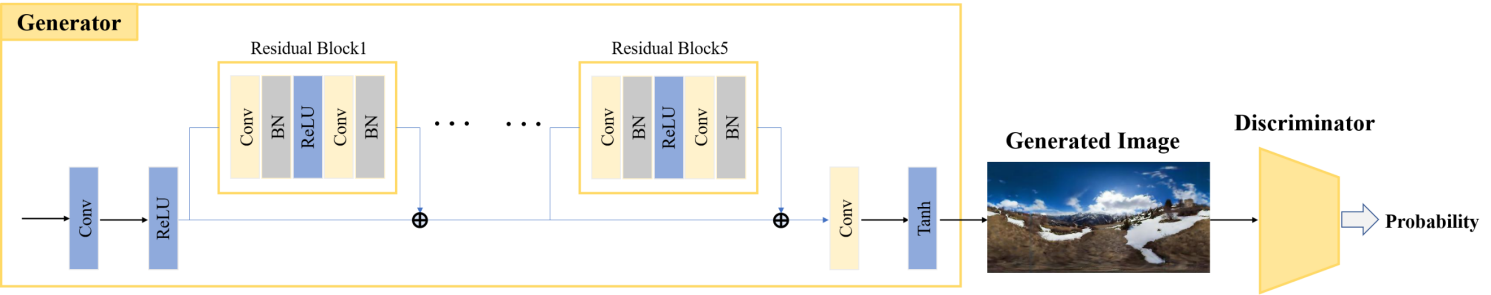

To get the final upright image, we designed a third module (Fig3). The input of the third module is the shallow feature map of the direction estimation module and the LUT of the LUT generation module. The shallow feature map is used to ease the learning difficulty of the generation module, which is consistent with the image size. For LUTs, in SectionIII-B, we have obtained LUTs adapted to the size of feature maps.

The upright reconstruction module consists of a generator and a discriminator. The backbone network of the generator consists of five residual blocks [33], which are used mainly to extract image features for the purpose of denoising. The structure of our discriminator comes from PatchGan [35]. The discriminator and the generator are alternately and iteratively trained. The purpose of the generator is to deceive the discriminator to generate a more realistic image. The probability judged by the discriminator is used as a loss to help the generator train. Overall, upright reconstruction is jointly trained with four losses. Specifically, the losses used by this module are as follows.

Image Perceptual Loss() [26]. In this study, we use VGG19 to compute the features of the image, in which image perceptual loss is denoted as:

| (11) |

where denotes the upright panorama, denotes the network generated image, denotes the VGG19 feature extraction module, and is used to calculate their mean-square error.

SSIM Loss()[36]. The structural similarity index (SSIM) is proposed for measuring the structural similarity between images, based on independent comparisons in terms of luminance, contrast, and structures. The SSIM Loss is denoted as:

| (12) |

Pixel Loss(). The above two losses are used to match the training result parameters with human perception. In addition, we use L1 loss to constrain the image in pixel space:

| (13) |

Discriminator Loss(). As shown in Fig4, the generated image is input to the discriminator, and the probability that the image is true is output. The discriminator loss is calculated as the binary cross-loss entropy of that probability with true. Experiments will show that the discriminator can solve the blurry in the upright reconstruction stage. The final joint loss is denoted as:

| (14) |

where and represents discriminator loss.

IV Experiments

IV-A Dataset and Training Details

Dataset In the deep-learning-based models of the previous research, the models all used the Sun360 dataset for training. Sun360 is a panoramic dataset and contains a variety of scenes, such as indoor, bridge building, and nature scenery scenes. Assume that the panoramic images in this dataset are all horizontal, i.e., . The upright adjustment task requires nonupright panoramic images to train the network, so we needed to generate a dataset similar to the previous upright adjustment task. As mentioned in SectionIII-A, the tilt range considered in this study was , so to generate the dataset, we generated 181x181 LUT_256x512 at 1° intervals in the range beforehand. It should be emphasized that LUT_256x512 here was different from LUT mentioned in SectionIII-B. LUT_256x512 implements the function of rotating an upright image to a nonupright image for dataset preparation. Finally, in the Sun360 database (approximately 67,000 images), we use 70% for training and 15% and 15% for testing and validation, respectively. We emphasize that since the Sun360 dataset contains multiple classifications, the Sun360 dataset is divided not simply by proportion but by ensuring the consistency of the classification images in the training, testing, and validation sets.

Because the LUT generation module requires 256x512 LUT truth values, similar to the above, we generate 181x181 LUTs at intervals in , but the function of the LUT generation module is to rotate the nonupright image into an upright image, which is contrary to dataset preparation.

Training details All three modules of the network were trained on TITAN RTX 24G. The three modules of the network are trained separately in a certain order. First, the angle estimation module is trained using the Adam optimizer, a batch size of 8, a learning rate of , 40 epochs for Sun360 and one epoch that takes approximately 16 minutes. Load the angle estimation pretraining model in the subsequent training, freeze the parameters and no longer participate in the training. Training the LUT generator module using the stochastic gradient descent (SGD) optimizer, a batch size of 8, a learning rate of , 60 epochs for LUT and one epoch takes approximately 6 minutes. Similarly, the LUT generation module provides a pretrained model for the image generation module and does not update the parameters in the subsequent image generation training. After obtaining the pretrained models of the first two modules, the training of the upright reconstruction module starts, using the Adam optimizer, a batch size of 8, a generation learning rate of , a discriminator learning rate of , 4 epochs for Sun360 and one epoch takes approximately 45 minutes.

IV-B Results of the orientation estimation module

Since the input of the LUT online generation module is provided by the output of the orientation estimation module, to generate an accurate LUT, the accuracy of the angle cannot be ignored. Before presenting the model results, we briefly discuss how to evaluate the results of the orientation estimation module. When the camera orientation is kept upright, . When the camera orientation is tilted, , where and are the truth values of roll and pitch. The network predicts the values of pitch and roll to be and , respectively, and the camera orientation is . The angle between these two vectors ( and ) is used to evaluate the error between the ground truth and the predicted value.

The results are shown in TableI. To more intuitively observe the estimation results, the direction estimation results of other state-of-the-art methods are also listed in the table. While our method is only slightly lower than [24] in results with large angle errors, we’ve made progress on small angular errors and 95% accuracy is sufficient for subsequent generation tasks from our proposed whole upright adjustment model.

IV-C Ablation analysis of LUT Online Generation

In this section, we will discuss the structure of the LUT online generation module, including the necessity of a fully connected layer (FC), different upsampling strategies and numbers of encoder blocks. PSNR and SSIM are used for quantitative analysis.

To illustrate the importance of FC in the LUT online generation module, we remove the FC to retrain the network. As Fig5 (No_FC) shows, the network does not converge in this situation, which proves the necessity of integrating the two angles in SectionIII-B.

Considering the error caused by upsampling, upsampling layer by layer is tested, the network is designed as four layers, and each layer is a x2 upsampling followed by a transformer encoder. The result is shown in Fig5 (x2). The proposed structure in SectionIII-B is shown in Fig5 (x16). According to PSNR and SSIM, the current x16 upsampling is the best.

To test the effect of encoder block numbers, we test x16 upsampling followed by 6 transformer blocks. Fig5 (x16_6) shows that it did not improve the effect. In our opinion, the LUT generation task is not as complicated as common image generation tasks; more layers may decrease the training efficiency, and a simple network is sufficient for our task.

IV-D Evaluating discriminator in upright reconstruction

IV-E Results for Upright Adjustment with Our Network

In this section, we introduce our upright adjustment models in three aspects: time, space, and generated image quality. Time We upright resize the entire test set of images on the server using our trained model, and the time measurement part excludes the image loading and preprocessing part. Measures the time from the beginning of the image entering the model to the end of the image output, that includes orientation estimation, LUT online generation and upright reconstruction. We measured an average spin time of 0.012 seconds. Obviously our model operation meets the requirements of real-time processing, which provides the possibility to realize the upright adjustment on the line.

Spatially SectionIII-B mentioned that by using LUT to help the network generate upright images, according to the LUT rotation image principle, the LUT should contain all possibilities so that it can be corrected for any rotation angle of the scene. For the range considered in our model, we needed a total of 32761 (181x181) LUTs. and the size of the LUTs has to be kept in line with the image size, while in our model, we use 256x512 size images, Thus taking up 4.65 GB of space in our server’s storage environment. While our final network (i.e., including orientation estimation, LUT online generation, upright reconstruction) only takes up 429.6 MB. Therefore it is very necessary to use the LUT generation module to optimize the storage occupied by the LUT, and 429.6MB reduces the storage requirements and can be suitable for more devices.

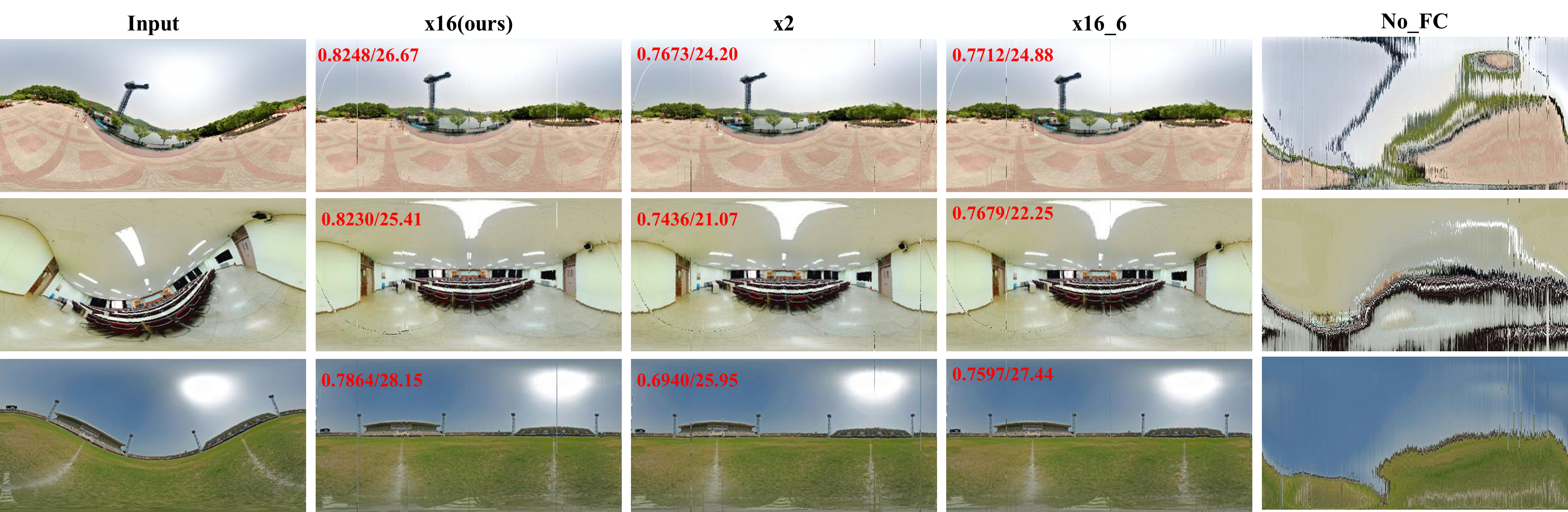

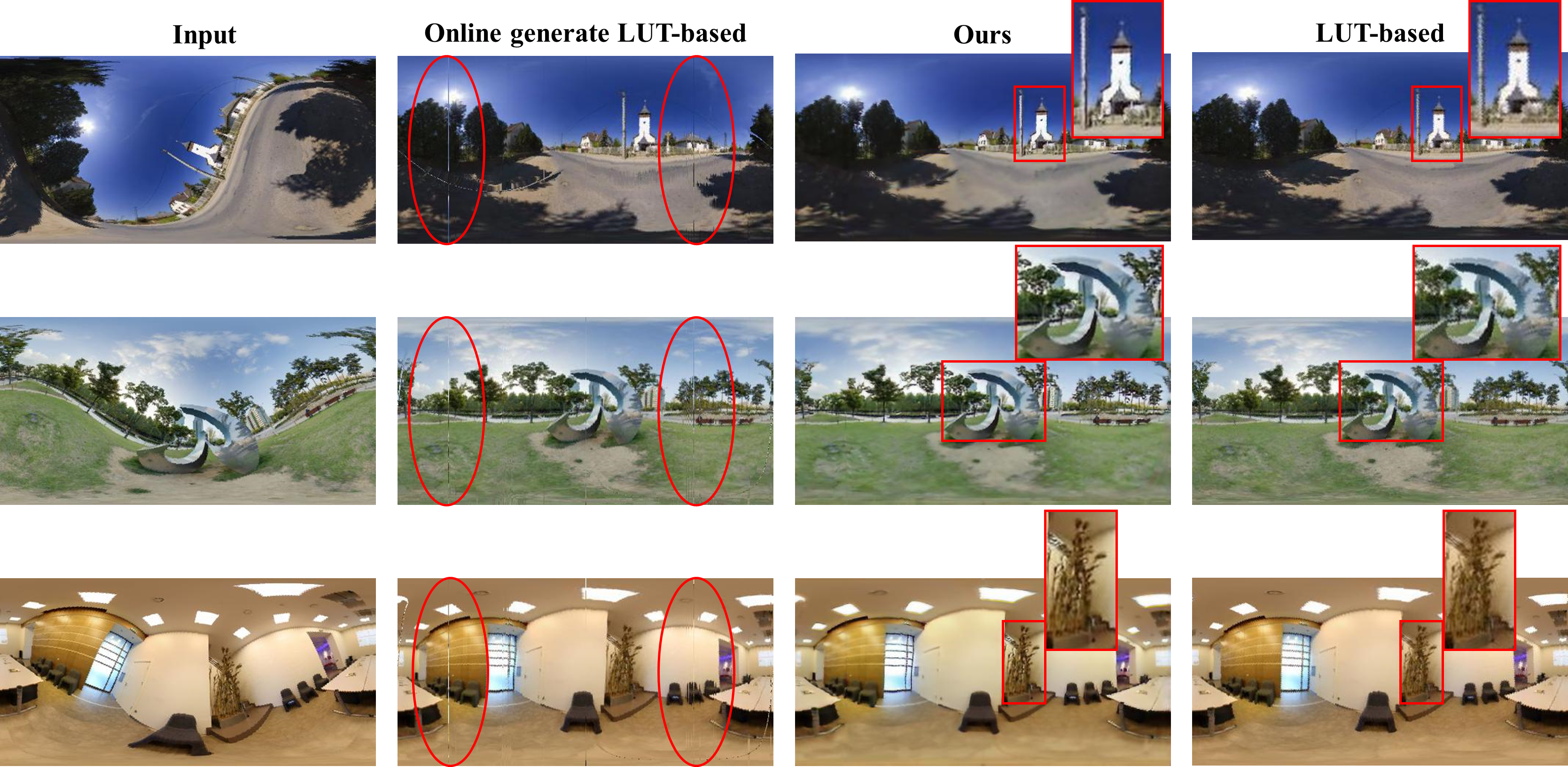

Image quality The upright images generated by our network are shown in Fig7. The objects we compared are images rotated directly using the LUT. From the point of view of the current upright adjustment methods, LUT-based is the second step they did not mention: use direct rotation or LUT-adjust for nonupright images. Since the current work only discusses how to improve the accuracy of the estimation and the actual method used to adjust the image is a default action (direct rotation or use LUT), we focus on the comparison with the traditional LUT-based. In Fig7, the image rotated with our generated LUT will have noise (the second column of Fig7), which is why we designed the upright reconstruction module. That is, the upright reconstruction module not only completes the task of generating images from feature maps but also denoises them. The LUT-based has a jagged edge due to the interpolation of remapping (enlarged in Fig7). The image we generated is relatively less jagged because it has been processed by the network and also has a certain elimination effect on the fixed noise caused by the LUT.

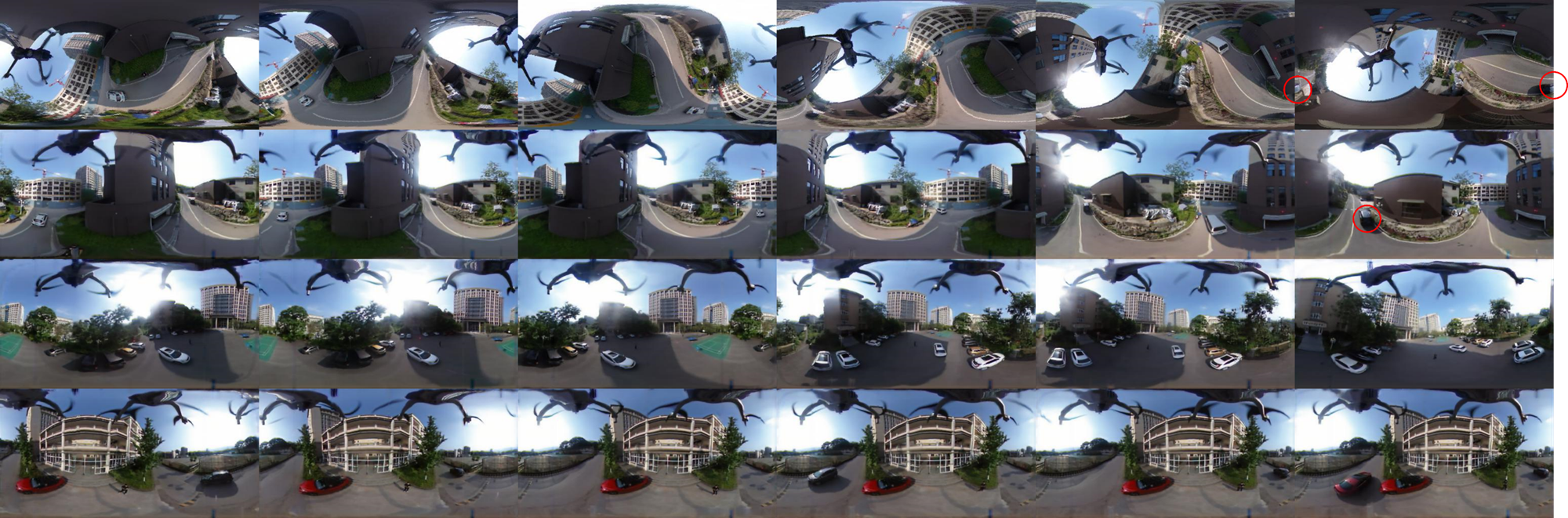

IV-F Panorama Reconstruction

To test our method in real situations, we took a sequence of real images with a drone carrying a commercial panoramic camera. Commercial panoramic cameras usually record the orientation by the gyroscope inside and then upright the panoramic images on computers offline. Supposing that the images directly acquired by the embedded system are at any orientation, without any adjustment. They should be considered the first row in Fig8, and the operator should try to observe the scene from the first-person perspective in real time. Obviously, these pictures cannot be transmitted to the unmanned aerial vehicle (UAV) observer since they are not user-friendly. If these images are fed into our end-to-end online network, a real-time rotation can be achieved Finally, all the images are horizontal (the last three rows in Fig8). The observer of this UAV would have no feeling that the drone has tiled due to some reasons, which brings better quality of continuity.

Furthermore, our online method accelerated the practicability of other panoramic image tasks. As we know, most panoramic image tasks require an upright image, and they cannot be used online if the adjustment step is offline. Now, their network could be connected directly followed by our network. For example, we can see that the last picture in the first line of Fig8 with a red circle, there is a black vehicle inside. The black vehicle should be horizontal in the real world but here vertical in images, since the camera is tilted. It would be difficult to identify, but it would be much easier after our method. After all, if online adjustment of panoramic images can be realized, it is quite significant for other real-time tasks.

V Conclusions

We proposed an online upright adjustment method for panoramic images. The greatest feature is the proposed online LUT generation module, which helps the convolutional network to generate upright images to achieve online upright adjustment. In addition, to achieve the upright adjustment task, we also design an upright reconstruction module to reduce the error of interpolation remapping. Furthermore, among existing models, we are the first to propose an online upright adjustment model, and our network achieves competitive image quality while achieving upright adjustment in real time, opening up the possibility that this task can be used in embedded systems.

References

- [1] Boukerch, Issam, et al. ”Development of Panoramic Virtual Tours System Based on Low Cost Devices.” International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 43 (2021): B2-2021.

- [2] bin Hashim, Khairul Hazrin, and Muhammad Jafni bin Jusof. ”Spherical high dynamic range virtual reality for virtual tourism: Kellie’s Castle, Malaysia.” 2010 16th International Conference on Virtual Systems and Multimedia. IEEE, 2010.

- [3] Pintore, Giovanni, et al., ”SliceNet: deep dense depth estimation from a single indoor panorama using a slice-based representation.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 11536-11545, 2021.

- [4] Jiang, Hualie, et al., ”Unifuse: Unidirectional fusion for 360 panorama depth estimation.” IEEE Robotics and Automation Letters, 6(2). pp. 1519-1526, 2021.

- [5] Rey-Area, Manuel, Mingze Yuan, and Christian Richardt., ”360MonoDepth: High-Resolution 360° Monocular Depth Estimation.” arXiv preprint arXiv:2111.15669, 2021.

- [6] Zhuang, Chuanqing, et al., ”ACDNet: Adaptively Combined Dilated Convolution for Monocular Panorama Depth Estimation.” arXiv preprint arXiv:2112.14440, 2021.

- [7] Sun, Cheng, Min Sun, and Hwann-Tzong Chen., ”Hohonet: 360 indoor holistic understanding with latent horizontal features.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 2573-2582, 2021.

- [8] Koeva, Mila, Mila Luleva, and Plamen Maldjanski., ”Integrating spherical panoramas and maps for visualization of cultural heritage objects using virtual reality technology.” Sensors 17.4: pp. 829, 2017.

- [9] ABATE, Dante, et al. ”A low-cost panoramic camera for the 3d documentation of contaminated crime scenes.” International Society for Photogrammetry and Remote Sensing. Vol. 42. 2017.

- [10] Martins, André T., Pedro MQ Aguiar, and Mário AT Figueiredo., ”Orientation in Manhattan: Equiprojective classes and sequential estimation.” IEEE transactions on pattern analysis and machine intelligence, 27(5). pp. 822-827, 2005.

- [11] Demonceaux, Cédric, Pascal Vasseur, and Claude Pégard., ”Robust attitude estimation with catadioptric vision.” 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE. pp. 3448-3453, 2006.

- [12] Demonceaux, Cédric, Pascal Vasseur, and Claude Pégard., ”Omnidirectional vision on UAV for attitude computation.” Proceedings 2006 IEEE International Conference on Robotics and Automation, 2006. ICRA 2006. IEEE. pp. 2842-2847, 2006.

- [13] Bazin, Jean-Charles, et al., ”Rotation estimation and vanishing point extraction by omnidirectional vision in urban environment.” The International Journal of Robotics Research,31(1). pp. 63-81, 2012.

- [14] Bazin, Jean-Charles, et al., ”Motion estimation by decoupling rotation and translation in catadioptric vision.” Computer Vision and Image Understanding, 114(2). pp. 254-273, 2010.

- [15] Jung, Jinwoong, et al., ”Robust upright adjustment of 360 spherical panoramas.” The Visual Computer, 33(6). pp. 737-747, 2017.

- [16] Fischer, Philipp, Alexey Dosovitskiy, and Thomas Brox., ”Image orientation estimation with convolutional networks.” German conference on pattern recognition. Springer, Cham. pp. 368-378, 2015.

- [17] Olmschenk, Greg, Hao Tang, and Zhigang Zhu., ”Pitch and roll camera orientation from a single 2D image using convolutional neural networks.” 2017 14th Conference on Computer and Robot Vision (CRV). IEEE. pp. 261-268, 2017.

- [18] Joshi, Ujash, and Michael Guerzhoy., ”Automatic photo orientation detection with convolutional neural networks.” 2017 14th Conference on Computer and Robot Vision (CRV). IEEE. pp. 103-108, 2017.

- [19] Shima, Yoshihiro, Yumi Nakashima, and Michio Yasuda., ”Detecting orientation of in-plain rotated face images based on category classification by deep learning.” TENCON 2017-2017 IEEE Region 10 Conference. IEEE. pp. 127-132, 2017.

- [20] Jeon, Junho, Jinwoong Jung, and Seungyong Lee., ”Deep upright adjustment of 360 panoramas using multiple roll estimations.” Asian Conference on Computer Vision. Springer, Cham. pp. 199-214, 2018.

- [21] Jung, Raehyuk, Sungmin Cho, and Junseok Kwon., ”Upright adjustment with graph convolutional networks.” 2020 IEEE International Conference on Image Processing (ICIP). IEEE. pp. 1058-1062, 2020.

- [22] Jung, Raehyuk, et al., ”Deep360Up: A deep learning-based approach for automatic VR image upright adjustment.” 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE. pp. 1-8, 2019.

- [23] Shan, Yuhao, and Shigang Li., ”Discrete spherical image representation for cnn-based inclination estimation.” IEEE Access 8. pp. 2008-2022, 2019.

- [24] Davidson, Benjamin, Mohsan S. Alvi, and João F. Henriques., ”360° Camera Alignment via Segmentation.” European Conference on Computer Vision. Springer, Cham. pp. 579-595, 2020.

- [25] Girshick, Ross., ”Fast r-cnn.” Proceedings of the IEEE international conference on computer vision. pp. 1440-1448, 2015.

- [26] Johnson, Justin, Alexandre Alahi, and Li Fei-Fei., ”Perceptual losses for real-time style transfer and super-resolution.” European conference on computer vision. Springer, Cham. pp. 694-711, 2016.

- [27] Kenton, Jacob Devlin Ming-Wei Chang, and Lee Kristina Toutanova. ”Bert: Pre-training of deep bidirectional transformers for language understanding.” Proceedings of naacL-HLT. 2019.

- [28] Vaswani, Ashish, et al. ”Attention is all you need.” Advances in neural information processing systems 30 (2017).

- [29] Zhao, Long, et al. ”Improved transformer for high-resolution gans.” Advances in Neural Information Processing Systems 34 (2021): 18367-18380.

- [30] Lee, Kwonjoon, et al. ”Vitgan: Training gans with vision transformers.” arXiv preprint arXiv:2107.04589 (2021).

- [31] Zheng, Sixiao, et al. ”Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers.” Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 2021.

- [32] Yang, Rui, et al. ”Scalablevit: Rethinking the context-oriented generalization of vision transformer.” arXiv preprint arXiv:2203.10790 (2022).

- [33] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778, 2016.

- [34] Vaswani, Ashish, et al. ”Attention is all you need.” Advances in neural information processing systems 30 (2017).

- [35] Isola, Phillip, et al. ”Image-to-image translation with conditional adversarial networks.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

- [36] Wang, Zhou, et al. ”Image quality assessment: from error visibility to structural similarity.” IEEE transactions on image processing 13.4 (2004): 600-612.