An FPGA framework for Interferometric Vision-Based Navigation (iVisNav)

Abstract

Interferometric Vision-Based Navigation (iVisNav) is a novel optoelectronic sensor for autonomous proximity operations. iVisNav employs laser-emitting structured beacons and precisely characterizes six-degree-of-freedom relative motion rates by measuring changes in the phase of the transmitted laser pulses. iVisNav’s embedded package must efficiently process high-frequency dynamics for robust sensing and estimation. A new embedded system for least squares-based rate estimation is developed in this paper. The resulting system is capable of interfacing with the photonics and implementing the estimation algorithm in a field-programmable gate array (FPGA). The embedded package is shown to be a hardware/software co-design that handles the estimation procedure using finite-precision arithmetic for high-speed computation. The accuracy of the finite-precision FPGA hardware design is compared with the floating-point software evaluation of the algorithm in MATLAB to benchmark its performance and statistical consistency with the error measures. Implementation results demonstrate the utility of FPGA computing capabilities for high-speed proximity navigation using iVisNav.

Index Terms:

Interferometry, state estimation, least squares, FPGA

I Introduction

Precise characterization of a vehicle’s state is critical to ensure safe navigation operations. Be it spacecraft rendezvous missions or aircraft landing/take-off operations, the state-of-the-art places great emphasis on safe and precise unmanned and autonomous execution [1]. Technical advancements in microelectronics and embedded systems aid in the autonomy of sensing and control. Reliable control actions demand high-quality information from the sensing devices as well as processing the information in real-time [2]. Hence, rapid enhancements in high-fidelity sensing and computing capabilities advance the real-time execution of autonomous navigation algorithms.

Traditionally, inertial sensors or inertial measurement units (IMUs) have been used to accomplish position and attitude sensing for navigation in both autonomous and guided applications [3, 1]. Although IMUs can capture the dynamics of a fast-moving vehicle, their measurements are typically corrupted by biases, drifts, and noise, which accumulate over the rendezvous and lead to considerable errors in pose measurements. Fusing the IMU data with GPS [4] partially addresses this problem by providing another set of measurements (global position) to periodically compensate for the IMU drifts. However, close-range rendezvous operations are too critical to depend entirely on GPS because of its bandwidth limitations and its ambiguity in resolving minute positional changes.

Optoelectronics and machine vision are being embraced at a rapid pace for relative navigation applications [5, 6, 7]. The corresponding vision-based sensing modalities provide the ego-vehicle with rich contextual information about the surrounding world [8, 9]. LiDAR sensors, in particular, directly provide the range measurements of interest for landing/take-off operations. However, the measurement density of LiDARs degrades with range [10, 11]. Moreover, computing range rate from range measurements may lead to errors that are unacceptably large for precise landing environments. Recent advancements that leverage structured light solutions to realize velocimetry capabilities are found to be robust to most of these issues [12, 13, 14, 15, 16].

In addition to sensing, filtering and optimal state estimation are essential to effectively utilize the available sensor modalities and thereby achieve mission objectives [17]. An online implementation of a filtering approach allows for real-time state estimation, which is critical for autonomous proximity operations [18]. Online sensing and filtering algorithms would benefit from execution on dedicated low-cost embedded solutions such as Field Programmable Gate Arrays (FPGAs) [19]. FPGAs implement customized logic on bare-metal hardware resources and provide infrastructure for processing measurements in real time.

Customized FPGA-based embedded solutions deploying hardware-software co-design approaches are highly sought-after in robotics. Modular interfacing with multiple sensing channels and parallel processing of estimation and control schemes, at significantly lower footprints, make them a choice too attractive for embedded developers to overlook. Consequently, FPGA-accelerated solutions are demonstrated to improve performance in sensor fusion and navigation operations utilizing filtering algorithms [20, 21, 22, 23, 24, 25] and digital signal processing [26, 27].

In this article, we propose a least squares-based method that leverages the FPGA architecture to estimate motion rates in real time. We first outline our previous work on sensor design and the estimation process, and then present the hardware design. We demonstrate the functionality of the FPGA framework with simulated results and qualify its performance relative to the legacy software implementation in MATLAB.

II Related Work

An analog vision sensor, VisNav, was first conceived to overcome the drawbacks of passive vision-based navigation for high-precision relative pose estimation [12, 13]. VisNav uses a set of optical beacons that radiate bursts of frequency-modulated light. Position Sensing Diodes (PSDs) on the approaching spacecraft or aircraft sense the modulated optical signals and determine the line of sight toward each beacon. Although the VisNav system supports high-speed optical measurements for position and attitude estimation, it demands a great amount of calibration and installation effort for the analog optoelectronic elements involved. Alternatively, high-speed digital cameras can replace the PSDs, with a custom embedded system design countering the bandwidth limitations [14]. The compatibility of a monocular camera and LEDs for a variation of VisNav is explored by Wong et al. [14]. To achieve the same level of robustness as VisNav using low-frequency camera measurements, a custom digital hardware design for filtering and estimation, along with sensor data processing, is needed.

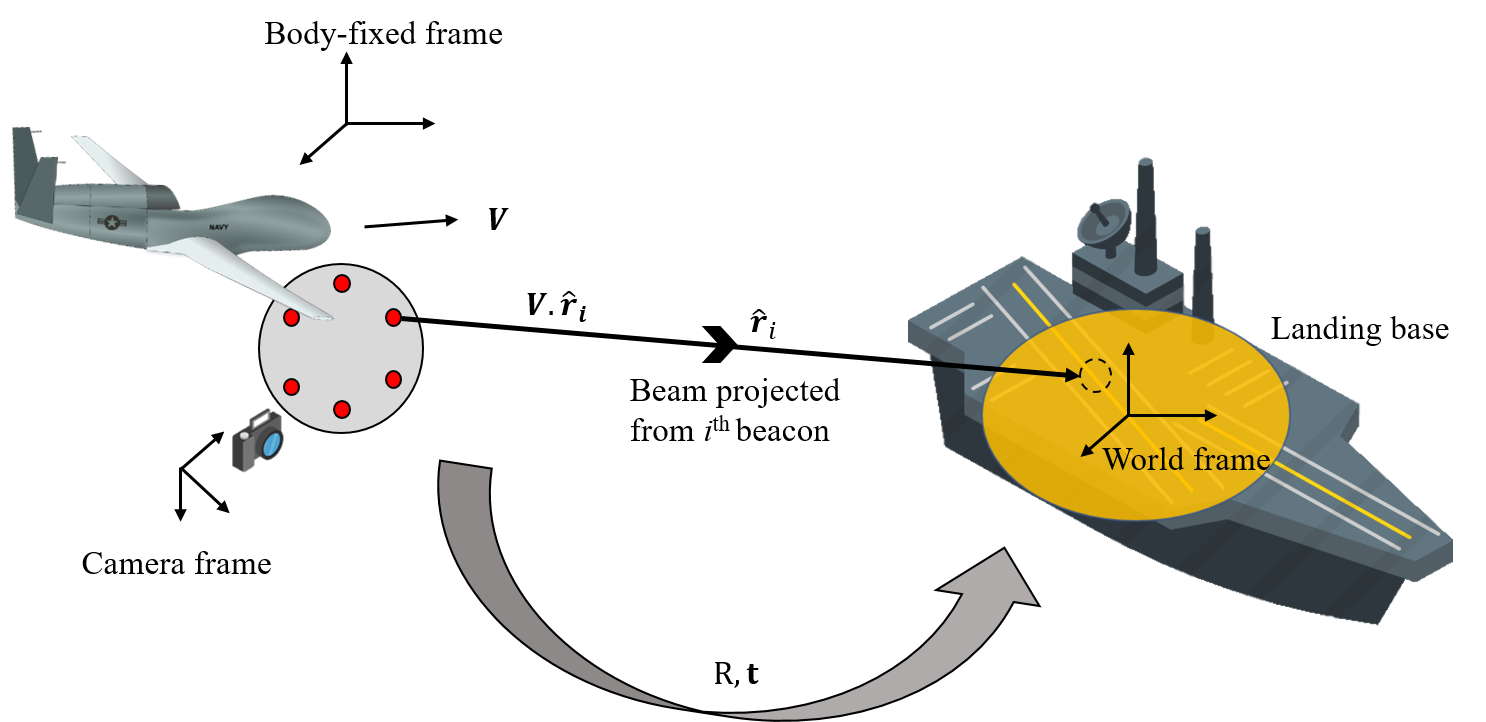

Building upon the VisNav system, an Interferometric Vision-based Navigation sensor (iVisNav) [28], shown in Fig. 1, is proposed for high-frequency velocimetry of a landing base with respect to the sensor system. This is accomplished by illuminating the base with modulated laser light from onboard structured beacons. In one method, Doppler shifts between the emitted and the reflected light could be captured to estimate the rates of the translational and angular degrees of freedom [29]. Doppler frequency measurements demand high-fidelity sensors with high sampling rates, supported by a high-performance digital computing framework. A simpler and more cost-effective approach is to replace the Doppler measurements with phase shift measurements attainable from a Time-of-Flight (ToF) LiDAR.

Referring to Fig. 1, the ToF LiDAR on board the aircraft illuminates a landing base with a modulated infrared (IR) laser source and computes the phase shift ($\phi$) of the detected reflection according to Eq. (1). The phase shift measurements translate to the range ($\rho$) of the moving base from the sensor system as

$$\rho = \frac{\lambda}{4\pi}\,\phi = \frac{c}{4\pi f}\,\phi \tag{1}$$

where $\lambda = c/f$ is the wavelength of the modulated laser signal, $f$ is the modulation frequency, and $c$ is the velocity of light ($\approx 3 \times 10^{8}$ m/s).

From consecutive records of phase shift data, a time derivative of the phase is evaluated to derive the relative radial velocity of the aircraft along the projected laser beam:

$$\dot{\rho} = \frac{c}{4\pi f}\,\dot{\phi} \tag{2}$$

This implies that, from digitized time-keeping and successive phase shift measurements captured at a high sampling rate, the relative velocity or range rate at measurement $k$ can be approximated as:

$$\dot{\rho}_k \approx \frac{c}{4\pi f}\,\frac{\phi_k - \phi_{k-1}}{t_k - t_{k-1}} \tag{3}$$
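The phase-to-range and range-rate relations can be illustrated with a short numerical sketch. It assumes the standard continuous-wave ToF relation, in which the range equals $c\phi/(4\pi f)$, together with a backward difference over one sampling interval. The modulation frequency and sampling interval used in the demo are hypothetical values chosen only for illustration, and the function names are not taken from the iVisNav implementation.

```python
import math

C_LIGHT = 299_792_458.0  # speed of light in m/s


def phase_to_range(phase_rad, mod_freq_hz):
    """Range from a round-trip phase shift: rho = c * phi / (4 * pi * f)."""
    return C_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def range_rate(phase_prev_rad, phase_curr_rad, dt_s, mod_freq_hz):
    """Backward-difference range rate from two consecutive phase samples."""
    return phase_to_range(phase_curr_rad - phase_prev_rad, mod_freq_hz) / dt_s


if __name__ == "__main__":
    f_mod = 10e6  # assumed modulation frequency in Hz (illustrative only)
    dt = 1e-3     # assumed sampling interval in s (illustrative only)
    print("range for phi = pi/2:", phase_to_range(math.pi / 2, f_mod), "m")
    print("range rate:", range_rate(1.0000, 1.0004, dt, f_mod), "m/s")
```

Under this relation, a full $2\pi$ phase wrap corresponds to the unambiguous range $c/(2f)$, which is one reason the choice of modulation frequency trades range coverage against rate sensitivity.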

The geometric setup of the structured beacon system, bench top prototype, and rate estimation procedures are described in [28, 18]. To re-emphasize the algorithmic procedure, least squares-based rate estimation steps are outlined in the next section.

II-A iVisNav: Rate estimation

A set of six or more measurement equations acquired from the iVisNav sensor model assists in the estimation of the 6-DOF translational and angular velocity profiles of a rigid body in relative motion. As shown in Eq. (3), the phase shift measurements (per sampling interval) along the beacon directions are obtained from the ToF LiDAR as

| (4) |

The ToF range measurements are combined with the direction vectors $\hat{\mathbf{s}}_i$ calibrated in the body-fixed frame to obtain range vectors from the beacons to the projections as $\boldsymbol{\rho}_i = \rho_i \hat{\mathbf{s}}_i$. The relative displacement of the landing base, in the body frame, is denoted by $\mathbf{r}$. The scalar $\rho_i$ is obtained from the ToF sensor’s range value measurements. The displacements $\mathbf{p}_i$ of the beacon projections from the origin of the world frame are expressed in terms of the range vectors as

| (5) |

The vectors $\boldsymbol{\rho}_i$ and $\mathbf{r}$ are coordinatized in the body-fixed frame of reference, while the projection displacements $\mathbf{p}_i$ are described in the world frame attached to the landing base.

The time derivative of Eq. (5) is written using the transport theorem [30] as

| (6) |

where $\boldsymbol{\omega}$ denotes the relative angular velocity vector and $[\boldsymbol{\omega}\times]$ denotes the corresponding skew-symmetric matrix.

By stacking the system of vectors obtained from each of the six projections, the least squares problem is obtained as

| (8) |

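The optimal estimate (in the least squares sense) for the translational and angular velocity profiles of the center of mass is obtained by the solution to the normal equations as

$$\hat{\mathbf{x}} = \left(H^{T} W H\right)^{-1} H^{T} W \tilde{\mathbf{y}} \tag{9}$$

where $\hat{\mathbf{x}}$ collects the estimated translational and angular velocities, $H$ and $\tilde{\mathbf{y}}$ are the stacked system matrix and measurement vector of Eq. (8), and the symmetric weight matrix $W$ is chosen to be the inverse of the measurement error covariance matrix $R$ such that $W = R^{-1}$. This choice of $W$ weights each measurement equation in inverse proportion to its error covariance.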

Equation (9) is the least squares solution for the estimation of translational and angular rates. The solution requires six phase shift measurements from the structured light setup as well as the displacements of the projections, which are obtained from the pose estimate of a low-frequency camera sensor.
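As a floating-point reference for the weighted normal-equation solution, the sketch below solves $(H^{T} W H)\,\hat{\mathbf{x}} = H^{T} W \tilde{\mathbf{y}}$ with a diagonal weight matrix built from per-measurement variances. The names `h`, `y`, and `variances`, the diagonal-covariance assumption, and the synthetic system matrix in the demo are all illustrative assumptions; the matrix does not represent the iVisNav beacon geometry.

```python
def transpose(m):
    """Return the transpose of a list-of-lists matrix."""
    return [list(col) for col in zip(*m)]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for k in range(n):
        pivot = max(range(k, n), key=lambda r: abs(aug[r][k]))
        if aug[pivot][k] == 0.0:
            raise ValueError("normal matrix is singular")
        aug[k], aug[pivot] = aug[pivot], aug[k]
        for r in range(k + 1, n):
            factor = aug[r][k] / aug[k][k]
            for c in range(k, n + 1):
                aug[r][c] -= factor * aug[k][c]
    x = [0.0] * n
    for k in reversed(range(n)):
        tail = sum(aug[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (aug[k][n] - tail) / aug[k][k]
    return x


def weighted_least_squares(h, y, variances):
    """Return x_hat = (H^T W H)^-1 H^T W y with W = diag(1 / variances)."""
    ht = transpose(h)
    n, m = len(ht), len(y)
    # H^T W H: each measurement row is weighted by the reciprocal of its variance.
    normal = [[sum(ht[i][k] * ht[j][k] / variances[k] for k in range(m))
               for j in range(n)] for i in range(n)]
    # H^T W y
    rhs = [sum(ht[i][k] * y[k] / variances[k] for k in range(m)) for i in range(n)]
    return solve(normal, rhs)


if __name__ == "__main__":
    # Synthetic, well-conditioned 8x6 system matrix (NOT the sensor geometry).
    h = [[2.0 if i % 6 == j else 0.1 * ((i + 2 * j) % 4) for j in range(6)]
         for i in range(8)]
    x_true = [0.1, -0.2, 0.3, 0.01, -0.02, 0.03]
    y = [sum(hij * xj for hij, xj in zip(row, x_true)) for row in h]
    print(weighted_least_squares(h, y, [1e-4] * 8))
```

The sketch solves the normal equations directly rather than forming the explicit inverse; the hardware design described later forms the inverse so that the same module sequence (transpose, multiply, invert, matrix-vector multiply) can be pipelined.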

III Hardware Design

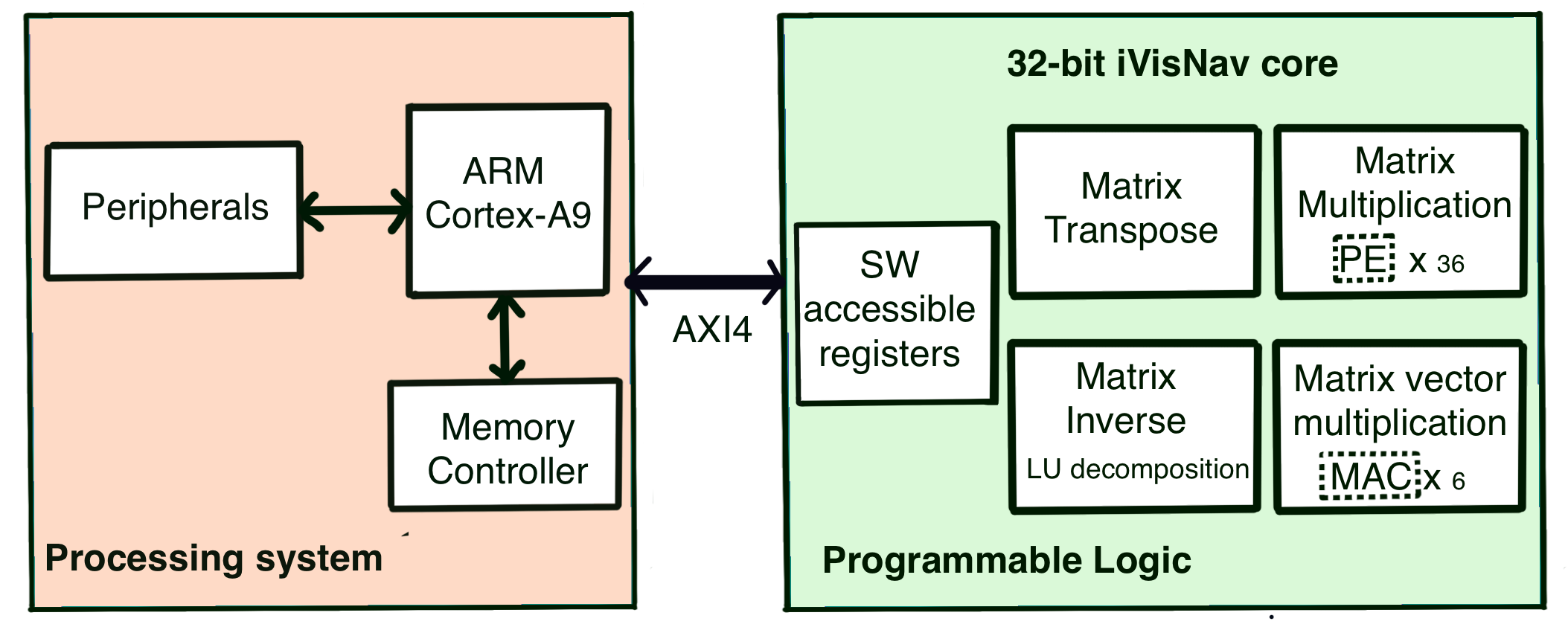

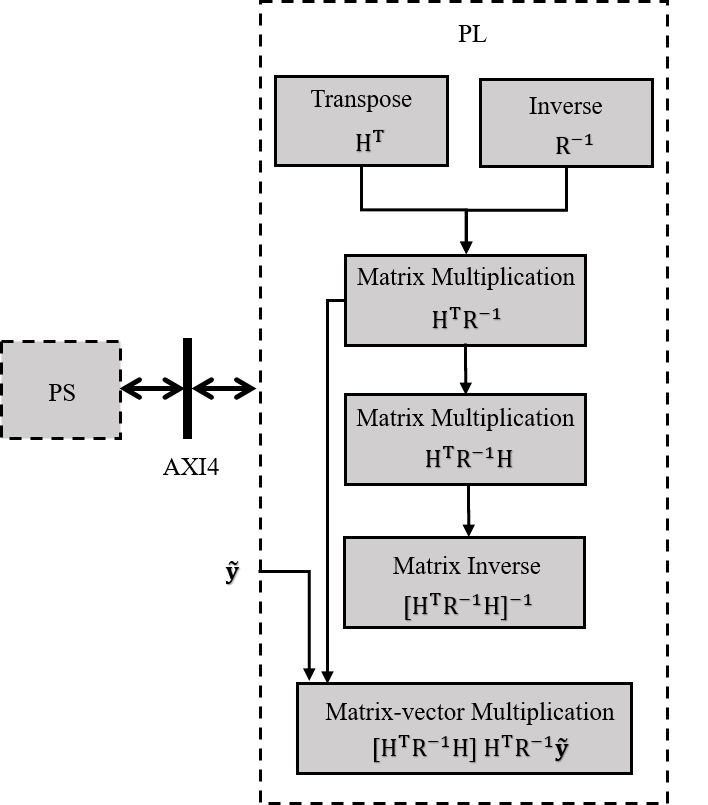

The embedded system design for the iVisNav estimation framework follows a hardware-software (HW/SW) co-design philosophy, as indicated in Fig. 2. The least squares-based state estimation is realized as a custom intellectual property (IP) core implemented on the programmable logic (PL) of the FPGA. The phase difference measurements and the projection vectors (Eq. (9)) are generated on the processing system (PS). Simulated measurements on the PS are utilized to validate the least squares implementation.

III-A Development Environment

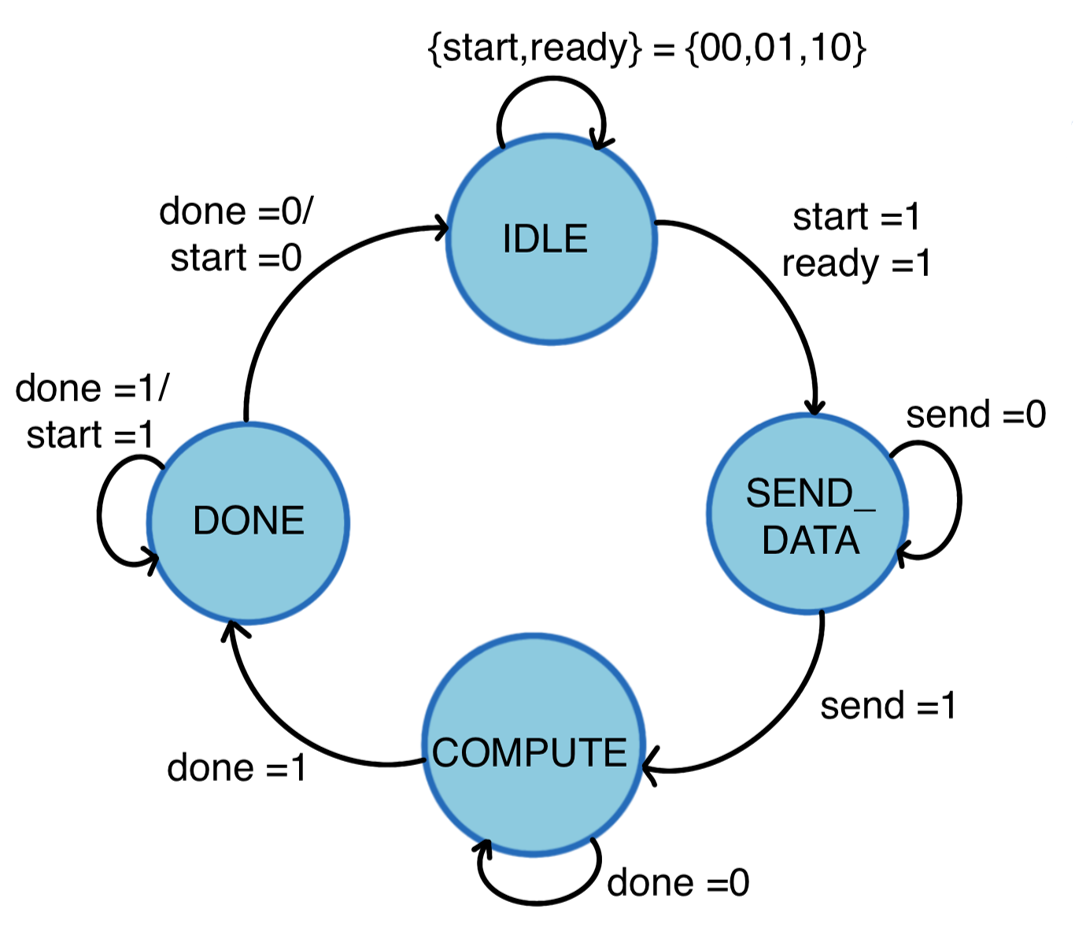

HW/SW co-design combines the development efficiency of a software implementation (ARM processor) with the high-performance capabilities of a hardware implementation (PL) for the design of FPGA embedded systems [31]. The iVisNav estimation framework is designed to evaluate the repetitive and computationally expensive least squares algorithm on the PL, while the application-specific operations are handled by the PS. The application-specific tasks for iVisNav include measurement pre-processing and data flow control procedures. The embedded system works by efficiently delivering data from the PS (via C code running on the ARM CPU cores) to the PL (reconfigurable FPGA logic, programmed using the Verilog hardware description language) for continuous and accelerated evaluation of the state estimation sequence. The PS-PL communication is controlled by a state machine (shown in Fig. 4) and supported by the Advanced eXtensible Interface (AXI4) bus protocol utilizing software-accessible registers on the PL. The proposed co-design is implemented on a Xilinx Zynq 7020 FPGA System-on-Chip (SoC) [32] and programmed using Vivado 2019.1 and Vivado High-Level Synthesis (HLS) tools. Operations on the PL are based on 32-bit fixed-point arithmetic, except for matrix inversion operations, which are evaluated in the IEEE 754 single-precision floating-point format for extended dynamic range.
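To make the 32-bit fixed-point arithmetic concrete, the following sketch models a signed fixed-point word in software. The split into 16 integer and 16 fractional bits (Q16.16) is an assumption made purely for illustration, since the fractional width used on the PL is not stated here; the saturation behavior and helper names are likewise hypothetical.

```python
WORD_BITS = 32
FRAC_BITS = 16  # assumed Q16.16 split; the actual fractional width is not stated
SCALE = 1 << FRAC_BITS
Q_MIN = -(1 << (WORD_BITS - 1))
Q_MAX = (1 << (WORD_BITS - 1)) - 1


def saturate(raw):
    """Clamp an integer to the signed 32-bit range instead of wrapping."""
    return max(Q_MIN, min(Q_MAX, raw))


def to_fixed(x):
    """Quantize a real value to the nearest representable fixed-point word."""
    return saturate(round(x * SCALE))


def to_float(q):
    """Convert a fixed-point word back to a real value."""
    return q / SCALE


def fixed_mul(a, b):
    """Multiply two fixed-point words and rescale the double-width product."""
    return saturate((a * b) >> FRAC_BITS)


if __name__ == "__main__":
    for value in (0.1, 1e-6, 1e6):
        q = to_fixed(value)
        print(value, "->", q, "->", to_float(q))
```

With this assumed format, any magnitude below about $2^{-17}$ rounds to zero and any magnitude above $2^{15}$ saturates, which illustrates why scaling the data before fixed-point processing matters.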

III-B Architecture: iVisNav Core

The pipelined architecture of the iVisNav core is built with a focus on implementing the sequence of linear algebra operations as depicted in Fig. 3. Four major modules evaluate the computationally expensive filter operations: matrix transpose, matrix multiplication, matrix inversion, and matrix-vector multiplication. The PS controls the data flow by reading and writing to the software-accessible registers. The PL polls these registers for system and measurement data streams as well as to read/write the status of operations. The implementation overview of the said major modules is as follows:

III-B1 Matrix transpose

Matrix transpose operation is performed by reshuffling the buffered row-major matrix data stream to output the data in a transposed column-major order. The transpose module utilizes of the lookup tables (LUTs) available on the Zynq 7020 FPGA SoC.

III-B2 Matrix multiplication

Matrix multiplication is based upon systolic array architecture (SAA) [33]. SAA is a pipelined network arrangement of Processing Elements (PEs) in a 2D mesh-like topology [18]. The PEs perform multiply and accumulate (MAC) operations on the incoming elements and share this information immediately with the neighboring PEs. SAA avoids repeated memory accesses for matrix elements and thereby is very effective for low-latency matrix multiply operations. The matrix multiplication is an area optimized implementation to meet the stringent resource constraints on the number of digital signal processor (DSP) slices on the Zynq 7020. The high-performance multiplication module occupies of DSPs and of LUTs on board the FPGA.
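The data movement in a systolic array can be illustrated with a cycle-by-cycle software model. The sketch below assumes an output-stationary arrangement, in which each PE keeps one element of the product while operands flow rightward and downward with a one-cycle skew per row and column. The dataflow variant used in the actual core is not specified here, so this arrangement and all names are assumptions.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m grid of MAC PEs."""
    n, k_dim, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]    # accumulator held inside each PE
    a_reg = [[0] * m for _ in range(n)]  # operand travelling to the right
    b_reg = [[0] * m for _ in range(n)]  # operand travelling downward
    cycles = k_dim + n + m - 2           # time for the last operands to arrive
    for t in range(cycles):
        # Visit PEs from the far corner so that each PE reads the value its
        # neighbour held during the previous cycle.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j > 0:
                    a_in = a_reg[i][j - 1]
                else:
                    k = t - i  # row i of a enters the array i cycles late
                    a_in = a[i][k] if 0 <= k < k_dim else 0
                if i > 0:
                    b_in = b_reg[i - 1][j]
                else:
                    k = t - j  # column j of b enters the array j cycles late
                    b_in = b[k][j] if 0 <= k < k_dim else 0
                a_reg[i][j] = a_in
                b_reg[i][j] = b_in
                acc[i][j] += a_in * b_in  # multiply and accumulate
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Each operand is read from memory once and then reused by neighbouring PEs, which is the property that removes repeated memory accesses.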

III-B3 Matrix inverse

A scalable single-precision floating-point matrix inversion is implemented using the LU decomposition algorithm [34]. The inversion is implemented in hardware in the following stages:

- (a) Decomposition of the full-rank matrix $A$, in an iterative manner, to compute a lower triangular matrix $L$, a diagonal matrix $D$, and an upper triangular matrix $U$ as

  $$A = L D U \tag{10}$$

- (b) Inversion of the matrices $L$, $D$, and $U$. Their special structures enable the computation of their respective inverses with much reduced complexity, as shown by Ruan [34].

- (c) Multiplication of the inverted matrices to obtain the final output as

  $$A^{-1} = U^{-1} D^{-1} L^{-1} \tag{11}$$

The inversion module is pipelined at the sub-system level and optimized for high throughput. The complex inversion module is programmed in C and synthesized into register transfer level (RTL) logic using Vivado HLS. The 32-bit inversion module operates using floating-point representation to accommodate a higher dynamic range for internal data representation. To be consistent with the fixed-point implementation of the core, fixed-point to floating-point and floating-point to fixed-point conversions are performed at the input and output ports of the inverse module, respectively. As an alternative to a floating-point solution, a scaled fixed-point inverse might not be able to avoid bit overflows and loss of precision, as commonly observed in higher-dimensional matrix operations. This conclusion is based upon failing edge cases in our previous development of a 32-bit fixed-point matrix inversion module using the Schur complement [18]. Resource-wise, the inverse module occupies LUTs, DSPs, block RAM, of on-board flip-flops, and of LUT based RAM.
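The three inversion stages can be sketched in software as follows. This is a textbook LDU factorization without pivoting, written in double precision; it is not the HLS design of [34], and the function names are hypothetical. It assumes that every leading pivot is nonzero, which holds for symmetric positive definite matrices such as a weighted normal matrix with full column rank.

```python
def identity(n):
    return [[float(i == j) for j in range(n)] for i in range(n)]


def transpose(m):
    return [list(col) for col in zip(*m)]


def ldu_decompose(a):
    """Stage (a): factor A = L D U with unit-triangular L and U (no pivoting)."""
    n = len(a)
    l, u, d = identity(n), identity(n), [0.0] * n
    w = [[float(v) for v in row] for row in a]  # working copy of A
    for k in range(n):
        d[k] = w[k][k]
        if d[k] == 0.0:
            raise ValueError("zero pivot; pivoting would be required")
        for i in range(k + 1, n):
            l[i][k] = w[i][k] / d[k]
            u[k][i] = w[k][i] / d[k]
        for i in range(k + 1, n):
            for j in range(k + 1, n):
                w[i][j] -= l[i][k] * d[k] * u[k][j]  # Schur complement update
    return l, d, u


def invert_unit_lower(l):
    """Stage (b): invert a unit lower triangular matrix by forward substitution."""
    n = len(l)
    inv = identity(n)
    for j in range(n):
        for i in range(j + 1, n):
            inv[i][j] = -sum(l[i][k] * inv[k][j] for k in range(j, i))
    return inv


def invert(a):
    """Stage (c): assemble A^-1 = U^-1 D^-1 L^-1."""
    l, d, u = ldu_decompose(a)
    l_inv = invert_unit_lower(l)
    u_inv = transpose(invert_unit_lower(transpose(u)))  # reuse the lower routine
    n = len(a)
    return [[sum(u_inv[i][k] * l_inv[k][j] / d[k] for k in range(n))
             for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    print(invert([[4.0, 3.0], [6.0, 3.0]]))
```

The triangular inverses need only substitution and the diagonal inverse needs only reciprocals, which is the reduced complexity that stage (b) relies on.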

III-B4 Matrix vector multiplication

Analogous to the systolic array architecture, the matrix-vector multiplication utilizes multiply-and-accumulate (MAC) units that operate on the time-aligned input streams of matrix and measurement vector channels. This module takes of LUTs and of DSPs on board the Zynq 7020 FPGA SoC.

Table I shows the FPGA resource utilization of the iVisNav hardware architecture.

| Resource | Available | Utilization | Utilization % |
|---|---|---|---|
| LUT | 53200 | 35488 | 66.71 |
| LUTRAM | 17400 | 1555 | 8.94 |
| FF | 106400 | 14968 | 14.07 |
| BRAM | 140 | 12.50 | 8.93 |
| DSP | 220 | 120 | 54.55 |

III-C State Machine

The incoming data stream is buffered on the PL using block memory, and a state machine shown in Fig. 4 controls the data flow on the PL subject to the state of operation. The PL remains IDLE until it is ready, and a start is signalled by the PS. Transfer of data via the software accessible registers takes place until the process of sending is complete. The PL remains in a COMPUTE state until the filtering process is DONE and the cycle continues.
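The control flow described above can be modeled as a small finite state machine. In this sketch the states IDLE, COMPUTE, and DONE follow the text, while the name RECEIVE for the data transfer phase and the three handshake flags are hypothetical labels introduced for illustration; the actual design is written in Verilog and driven through software-accessible registers.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    RECEIVE = auto()  # hypothetical name for the register transfer phase
    COMPUTE = auto()
    DONE = auto()


def next_state(state, start=False, transfer_complete=False, compute_complete=False):
    """Return the state after one clock tick given the handshake flags."""
    if state is State.IDLE:
        return State.RECEIVE if start else State.IDLE
    if state is State.RECEIVE:
        return State.COMPUTE if transfer_complete else State.RECEIVE
    if state is State.COMPUTE:
        return State.DONE if compute_complete else State.COMPUTE
    return State.IDLE  # DONE returns to IDLE so the cycle can repeat


if __name__ == "__main__":
    state = State.IDLE
    for flags in ({"start": True}, {"transfer_complete": True},
                  {"compute_complete": True}, {}):
        state = next_state(state, **flags)
        print(state.name)
```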

IV Results

Experimental prototyping of the iVisNav structured light sensor system is demonstrated in [28, 18]. Data obtained from the calibrated as well as the simulated sensor platform setup is utilized for validating the proposed hardware-based state estimation. The linear least squares estimation process is studied for analyzing sensitivity to a single axis rotation and translation maneuver of the sensor relative to the projection surface. In this work, we analyze the performance of the fixed-point hardware implementation of the least squares estimation in Eq. (9). Double-precision floating-point implementation of the least squares on MATLAB is taken as the golden reference for comparison with the FPGA hardware implementation.

The sensor calibration process involves configuring the direction vectors $\hat{\mathbf{s}}_i$ ($i = 1, \dots, 6$) in a bench-top experiment [28]. These values, for the said experiment with only an axial translation and rotation maneuver, are cataloged in Table II. The projection displacements $\mathbf{p}_i$ are determined using machine vision (such as in Ref. [28]). The ToF LiDARs in the sensor setup deliver the phase shift measurements from which the phase differences are computed. The least squares algorithm shown in Eq. (9) is applied to the acquired data while the projection plate is configured to translate and rotate axially with respect to the beacon setup.

| Beacon Index | Direction vector |
|---|---|

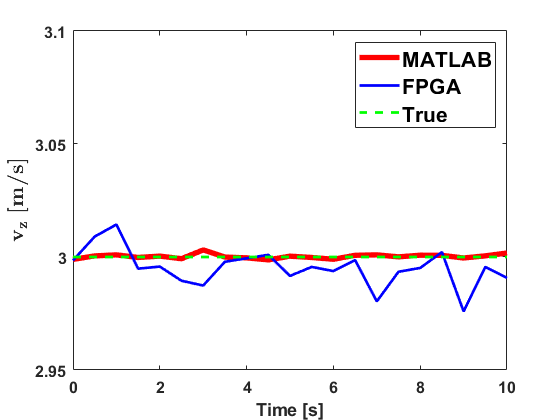

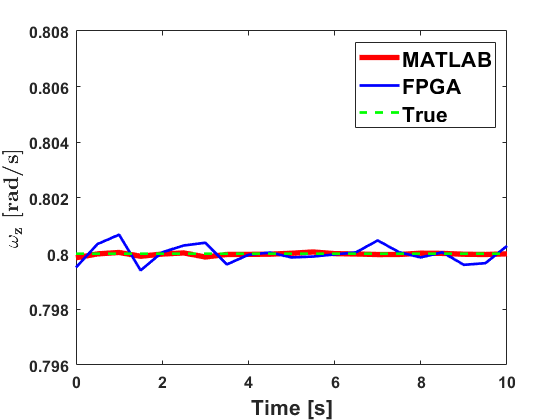

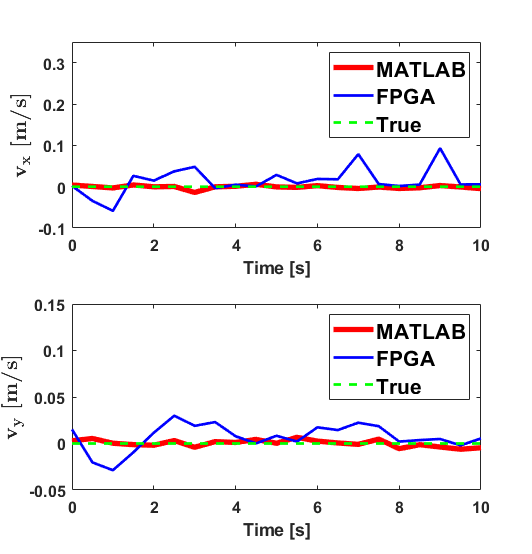

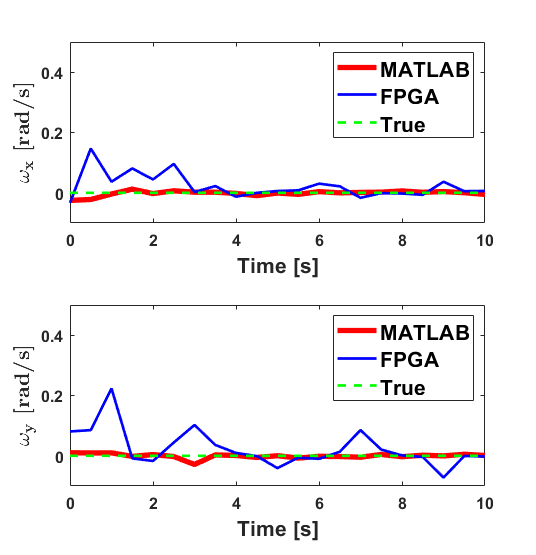

The hardware implementation of the iVisNav core is validated with simulated inputs that correspond to the system and covariance matrices, $H$ and $R$ respectively, as well as the phase shift measurements, $\tilde{\mathbf{y}}$. The results obtained from the hardware implementation are compared with the true rates and are shown in Figs. 5 and 6. The figures also juxtapose the estimates from the MATLAB implementation so that the accuracy of the hardware implementation can be compared with it. We report relatively larger deviations from MATLAB’s results along channels where no motion is induced. The finite-precision quantization errors appear to dominate the fractional bit representation when representing near-zero values. These errors appear to have propagated through the matrix operations, yielding the deviations. Scaling the data prior to finite-precision processing, using a higher number of fractional bits, and using floating-point representation are some techniques observed to mitigate this issue, and these alternative solutions are being studied to improve accuracy in the edge cases of the filter implementation.

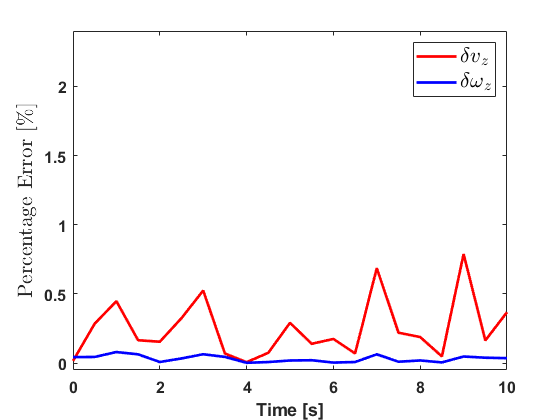

The percentage relative errors in the two channels where motion is induced are shown in Fig. 7. The percentage error $e_k$ for an estimate obtained at the $k$th instance from the hardware (HW), in contrast with the software (SW), is computed as indicated in Eq. (12). These errors are a relative comparison between the output obtained from MATLAB and that obtained from the iVisNav core’s hardware simulation. The errors are below which indicates the accuracy that our 32-bit finite precision hardware implementation offers. Although this error performance is not guaranteed across the range of measurements, scaling the data appropriately to represent it in the 32 available bits is paramount to achieving lower error percentages (). The latency of the least-squares implementation, measured via simulation, is observed to be about microseconds. The results demonstrate that the Register Transfer Level (RTL) design for the FPGA-based estimation reliably replicates a software implementation while offering high-speed compute capabilities.

$$e_k = \left| \frac{\hat{x}_k^{\mathrm{HW}} - \hat{x}_k^{\mathrm{SW}}}{\hat{x}_k^{\mathrm{SW}}} \right| \times 100 \tag{12}$$
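A minimal sketch of this error measure follows, assuming it is the absolute HW-to-SW difference normalized by the magnitude of the SW value and expressed in percent. The function name and the handling of a zero reference value are assumptions.

```python
def percent_relative_error(hw, sw):
    """Percentage error of each hardware estimate relative to the software one."""
    errors = []
    for h, s in zip(hw, sw):
        if s == 0.0:
            # The relative error is undefined for a zero reference value.
            errors.append(0.0 if h == 0.0 else float("inf"))
        else:
            errors.append(abs(h - s) / abs(s) * 100.0)
    return errors


if __name__ == "__main__":
    print(percent_relative_error([1.01, 0.0, 0.5], [1.0, 0.0, 0.5]))
```

Because the measure divides by the reference value, it grows without bound as that value approaches zero, so it is informative mainly on channels where motion is induced.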

V Conclusion and Future Work

A finite-precision FPGA framework to estimate the relative rates of a moving projection base with respect to the iVisNav sensor system is developed in this paper. While designed for electro-optical sensors like iVisNav, the framework is applicable for motion rate estimation utilizing other Doppler sensors that use RF or other forms of energy modulation. The embedded system is capable of achieving high data throughput and accuracy requirements for real-time navigation tasks. Simulated phase difference measurements are used to verify the functioning of the iVisNav estimation logic on the FPGA. The estimation results from the 32-bit fixed-point implementation are observed to have a deviation less than from a double-precision MATLAB implementation of the same method and with a compute latency of s. Although this conservative error performance is specific to the presented simulation case and carefully chosen data scaling scheme, the FPGA implementation is observed to be accurate to the quantization bandwidth and forms a basis for optimism to potentially replace floating-point operations. Thereby, the authors conclude that similar approaches can be effectively used for high-speed pipelined frameworks and advanced sensing architectures of the future.

The framework is designed as a standalone IP core for interfacing with external modules. The state estimation was found to be realizable in a flight system by implementing it on an FPGA combined with a high-speed digitizer. Robust estimation pipelines are also being researched to improve performance and accuracy in next-generation embedded avionics.

Acknowledgment

This work is supported by the Office of Naval Research Grant Number N00014-19-1-2435. The authors acknowledge Dr. Brian Holm-Hansen and Dr. David Gonzales for their support. The authors are also thankful to Peter Arslanian, Daniel Shafer, NAWCAD, Pax-River for their support.

References

- [1] E. Nebot, “Sensors used for autonomous navigation,” in Advances in Intelligent Autonomous Systems, pp. 135–156, Springer, 1999.

- [2] S. Lopez, T. Vladimirova, C. Gonzalez, J. Resano, D. Mozos, and A. Plaza, “The promise of reconfigurable computing for hyperspectral imaging onboard systems: A review and trends,” Proceedings of the IEEE, vol. 101, no. 3, pp. 698–722, 2013.

- [3] A. Noureldin, T. B. Karamat, and J. Georgy, Fundamentals of inertial navigation, satellite-based positioning and their integration. Springer Science & Business Media, 2012.

- [4] B. W. Parkinson, P. Enge, P. Axelrad, and J. J. Spilker Jr, Global positioning system: Theory and applications, Volume II. American Institute of Aeronautics and Astronautics, 1996.

- [5] J.-Y. Du, Vision based navigation system for autonomous proximity operations: an experimental and analytical study. PhD thesis, Texas A&M University, 2005.

- [6] Y. Watanabe, E. Johnson, and A. Calise, “Optimal 3-d guidance from a 2-d vision sensor,” in AIAA Guidance, Navigation, and Control Conference and Exhibit, p. 4779, 2004.

- [7] A. Verras, R. T. Eapen, A. B. Simon, M. Majji, R. R. Bhaskara, C. I. Restrepo, and R. Lovelace, “Vision and inertial sensor fusion for terrain relative navigation,” in AIAA Scitech 2021 Forum, p. 0646, 2021.

- [8] B. E. Tweddle, Computer vision based navigation for spacecraft proximity operations. PhD thesis, Massachusetts Institute of Technology, 2010.

- [9] J. A. Christian and S. Cryan, “A survey of lidar technology and its use in spacecraft relative navigation,” in AIAA Guidance, Navigation, and Control (GNC) Conference, p. 4641, 2013.

- [10] I. J. Gravseth, R. Rohrschneider, and J. Masciarelli, “Vision navigation sensor(vns) results from the storrm mission,” Advances in the Astronautical Sciences, vol. 144, pp. 223–242, 2012.

- [11] M. Majji, J. Davis, J. Doebbler, J. Junkins, B. Macomber, M. Vavrina, and J. Vian, “Terrain mapping and landing operations using vision based navigation systems,” in AIAA Guidance, Navigation, and Control Conference, p. 6581, 2011.

- [12] J. L. Junkins, D. Hughes, and H. Schaub, “Noncontact position and orientation measurement system and method,” July 24 2001. US Patent 6,266,142.

- [13] J. Valasek, K. Gunnam, J. Kimmett, M. D. Tandale, J. L. Junkins, and D. Hughes, “Vision-based sensor and navigation system for autonomous air refueling,” Journal of Guidance, Control, and Dynamics, vol. 28, no. 5, pp. 979–989, 2005.

- [14] X. I. Wong and M. Majji, “A structured light system for relative navigation applications,” IEEE Sensors Journal, vol. 16, no. 17, pp. 6662–6679, 2016.

- [15] K. Sung and M. Majji, “Spacecraft proximity navigation using the ivisnav sensor system,”

- [16] K. Sung and M. Majji, “Doppler measurement of modulated light for high speed vehicles,” Sensors, vol. 22, no. 4, p. 1444, 2022.

- [17] J. L. Crassidis and J. L. Junkins, Optimal estimation of dynamic systems. Chapman and Hall/CRC, 2004.

- [18] B. Ramchander Rao, “Hardware implementation of navigation filters for automation of dynamical systems,” Master’s thesis, Texas A&M University, 2021.

- [19] C. Wang, E. D. Burnham-Fay, and J. D. Ellis, “Real-time fpga-based kalman filter for constant and non-constant velocity periodic error correction,” Precision Engineering, vol. 48, pp. 133–143, 2017.

- [20] G. K. Gultekin and A. Saranli, “An fpga based high performance optical flow hardware design for computer vision applications,” Microprocessors and Microsystems, vol. 37, no. 3, pp. 270–286, 2013.

- [21] J. Soh, A scalable, portable, FPGA-based implementation of the Unscented Kalman Filter. PhD thesis, 2017.

- [22] S. Hajdu, S. T. Brassai, and I. Szekely, “Complementary filter based sensor fusion on fpga platforms,” in 2017 International Conference on Optimization of Electrical and Electronic Equipment (OPTIM) & 2017 Intl Aegean Conference on Electrical Machines and Power Electronics (ACEMP), pp. 851–856, IEEE, 2017.

- [23] L. Schäffer, Z. Kincses, and S. Pletl, “A real-time pose estimation algorithm based on fpga and sensor fusion,” in 2018 IEEE 16th International Symposium on Intelligent Systems and Informatics (SISY), pp. 000149–000154, IEEE, 2018.

- [24] V. Bonato, R. Peron, D. F. Wolf, J. A. de Holanda, E. Marques, and J. M. Cardoso, “An fpga implementation for a kalman filter with application to mobile robotics,” in 2007 International Symposium on Industrial Embedded Systems, pp. 148–155, IEEE, 2007.

- [25] S. Chappell, A. Macarthur, D. Preston, D. Olmstead, B. Flint, and C. Sullivan, “Exploiting real-time fpga based adaptive systems technology for real-time sensor fusion in next generation automotive safety systems,” in The IEE Seminar on Target Tracking: Algorithms and Applications 2006 (Ref. No. 2006/11359), pp. 61–68, IET, 2006.

- [26] A. Elhossini, S. Areibi, and R. Dony, “An fpga implementation of the lms adaptive filter for audio processing,” in 2006 IEEE International Conference on Reconfigurable Computing and FPGA’s (ReConFig 2006), pp. 1–8, IEEE, 2006.

- [27] P. K. Meher, S. Chandrasekaran, and A. Amira, “Fpga realization of fir filters by efficient and flexible systolization using distributed arithmetic,” IEEE transactions on signal processing, vol. 56, no. 7, pp. 3009–3017, 2008.

- [28] K. Sung, R. Bhaskara, and M. Majji, “Interferometric vision-based navigation sensor for autonomous proximity operation,” in 2020 AIAA/IEEE 39th Digital Avionics Systems Conference (DASC), pp. 1–7, IEEE, 2020.

- [29] D. C. Carmer and L. M. Peterson, “Laser radar in robotics,” Proceedings of the IEEE, vol. 84, no. 2, pp. 299–320, 1996.

- [30] J. L. Junkins and H. Schaub, Analytical mechanics of space systems. American Institute of Aeronautics and Astronautics, 2009.

- [31] W. Wolf, “A decade of hardware/software codesign,” Computer, vol. 36, no. 4, pp. 38–43, 2003.

- [32] Xilinx, Inc, “Zynq-7000 all programmable SoC: Technical reference manual, v1.12.2,” 2018. https://www.xilinx.com/support/documentation/user_guides/ug585-Zynq-7000-TRM.pdf, Last accessed on 2022-06-24.

- [33] H.-T. Kung, “Why systolic architectures?,” IEEE computer, vol. 15, no. 1, pp. 37–46, 1982.

- [34] M. Ruan, “Scalable floating-point matrix inversion design using vivado high level synthesis,” in Application Notes, pp. 1–20, Xilinx, 2017.