An information-theoretic learning model based on importance sampling

Abstract

A crucial assumption underlying the most current theory of machine learning is that the training distribution is identical to the test distribution. However, this assumption may not hold in some real-world applications. In this paper, we develop a learning model based on principles of information theory by minimizing the worst-case loss at prescribed levels of uncertainty. We reformulate the empirical estimation of the risk functional and the distribution deviation constraint based on the importance sampling method. The objective of the proposed approach is to minimize the loss under maximum degradation and hence the resulting problem is a minimax problem which can be converted to an unconstrained minimum problem using the Lagrange method with the Lagrange multiplier . We reveal that the minimization of the objective function under logarithmic transformation is equivalent to the minimization of the p-norm loss with . We applied the proposed model to the face verification task on Racial Faces in the Wild datasets and showed that the proposed model performs better under large distribution deviations.

keywords:

\KWDInformation theory , \KWDImportance sampling , \KWDMinimax principle , \KWDFace verification ,1 Introduction

A crucial assumption underlying the most current theory of machine learning is that the distribution of the training samples is identical to the distribution of the test samples. However, it is often violated in practice where the distribution of test data deviates from the distribution of training data, therefore we need to develop models that work well under realistic violations of this assumption. In this paper, we assume that the density distribution of future data, instead of being completely unknown, is restricted to a class of distributions and develop a method based on importance sampling and minimax principle[1]Although there is considerable freedom in quantizing distribution deviation[2, 3, 4], Kullback–Leibler divergence[5, 6] has been widely used as constraints for regularization in bounded rationality [7, 8], policy optimization[9, 10] and other learning problems. In this work, we use Kullback-Leibler divergence to measure distribution deviation.

The proposed model aims to minimize the empirical risk in maximum degradation for a given deviation level and hence the corresponding optimization problem is a minimax problem. Inspired from the importance sampling method[11, 12, 13], we convert the constraint between the training distribution and test distribution to a constraint over the importance sampling weights. Then, we use the Lagrange method to reformulate the constrained minimax optimization problem into unconstrained minimum optimization problem which can be solved by available algorithms like SGD, Adam, etc. The innovation of our proposed model is that the future uncertainty is controlled by , the Lagrange multiplier in the reformulated optimization problem. The objective function of the unconstrained optimization problem is called the Importance Sampling loss (ISloss). A logarithmic transformation of ISloss is called the Logarithmic Importance Sampling loss (LogISloss). We reveal that the minimization of LogISLoss is equivalent to the minimization of p-norm with .

Our proposed importance sampling loss can be applied to many machine learning problems over different applications, including regression, classification, clustering, etc. In this paper, we adopt the proposed model for the face verification task. Face verification[14] is a task used to determine whether a pair of images belongs to the same individual. In the training stage, the model learns a deep feature embedding for an image through the cross entropy loss. In the test stgae, the model calculates a cosine similarity between the two feature embeddings for a given pair of images. We conducted experiments on Racial Faces in the Wild datasets(RFW)[15, 16] and had the following findings. First, the importance sampling weights highlighted the hard classes in the training stage. Second, the model trained under LogISloss attained better performance when there was a large distribution deviation. Third, the model trained under LogISloss emphasized the hard pairs quantized by cosine similarity.

Outline of the paper Section 2 describes our proposed data variation robust learning model based on importance sampling and the algorithm to solve it. The relationship between LogISloss and p-norm is also revealed in this section. Section 3 applies the proposed model to the face verification task. Section 4 shows the experiment results on RFW datasets with respect to distribution deviation and hard samples. Finally, we conclude this paper in Section 5.

2 Proposed Method

In the learning problem considered in this paper, we have training data which are generated i.i.d according to some (usually unknown) probability density function and a set of loss function and no future data is available for the learning system. Our aim is to construct a learning algorithm for a population with an unknown distribution . We further assume that if are, instead of being completely unknown, restricted to a class of distributions, i.e.

| (1) |

Therefore, our goal becomes to minimize the worst-case expected loss over . A minimax approach is applied through minimizing the worst-case loss restricted to this constraint. Section 2.1 gives out the principled deviation of the minimax approach and solves the corresponding optimization problem. Section 2.2 presents the model under the logarithmic transformation of and reveals its relationship with p-norm.

2.1 Principle of ISloss

The performance of a learning system for a given distribution is measured by the following the risk functional

| (2) |

First, since we don’t know exactly the future data distribution in , we need to find a that maximizes the objective function. Given the worst-case distribution , we aim to find the which minimizes . Therefore, the corresponding optimization problem becomes a minimax problem

| (3) | ||||

Second, we reformulate the risk functional and the distribution deviation constraint using the idea of importance sampling method where a mathematical expectation with respect to is approximated by a weighted average of random draws from another distribution . For any probability density with whenever , the risk functional is

| (4) |

and the KL-divergence between and is

| (5) |

Third, we derive the empirical estimation of (4) and (5). A standard approach to train the models in statistical learning is to use Empirical Risk Minimization(ERM)[17, 1]. ERM learns the prediction rule by minimizing an approximated loss under the empirical distribution of samples, which is defined as

| (6) |

where the subscript E represents empirical. Suppose the observed dataset is i.i.d samples drawn from . The self-normalized importance sampling weight[18] for data point is

| (7) |

where . is called the importance sampling weight. Therefore, the empirical estimation of (4) is

| (8) |

and the empirical estimation of (5) is

| (9) | ||||

where denotes the discrete uniform distribution with samples. Plugging (8) and (9) back into (3), the optimization problem (3) becomes

| (10) | ||||

| s. t. | (11) | |||

| (12) |

where (12) is the constraint for the importance sampling weight . Next, the constrained optimization problem in (10) can be reformulated to the unconstrained optimization problem using the Lagrange method. Let the Lagrange multiplier for (11) and (12) be and respectively. The max problem in (10) can be reformulated as

| (13) |

Here is the temperature that governs the level of randomness of , which implicitly controls the allowed distribution deviations. Specifically, when , the distribution shift should be small while for , the distribution shift can be very large. Since is a pre-defined hyperparameter, is a constant and can be omitted. Then, the objective function becomes

| (14) |

Setting the derivative of with respect to to zero, we obtain the optimality necessary condition for , which is

| (15) |

Substituting (15) back into (14), we get the effective loss functional

| (16) |

The minimization of with respect to is equivalent to min-max of with respect to . In this paper, the objective function of the proposed model in (16) is named as Importance Sampling loss, ISloss for short.

2.2 LogISloss

In this section, we use the logarithmic transformation instead of as a loss measure and derive the corresponding objective function. The logarithmic risk functional is

| (17) |

Similarly, as the derivation for ISloss, the corresponding optimization problem becomes

| (18) | ||||

The optimality necessary condition for is

| (19) |

and the effective empirical loss functional becomes

| (20) |

In this paper, (20) is named Logarithmic Importance Sampling loss, LogISloss for short. Equation (20) shows the minimization problem of the logarithmic version is equivalent to the minimization of the p-norm loss function with . The objective function of ISloss(16) involves exponential and not stable in training, therefore we use LogISloss(20) in subsequent experiments.

3 Application on face verification task

Face verification[14, 19] is one of the most popular topics in the community of computer vision and pattern recognition. It is a technology used to determine whether a pair of images belong to the same individual and is widely used for identity authentication in many areas, such as attendance[20], finance[21], transportation[22], self-service and other fields. In the face verification community, researchers have proposed large-margin softmax variants [23, 24, 25, 26, 27] to enhance the inter-class discrepancy and intra-classs compactness, ArcMargin[23] and AddMargin[24, 25] are two widely-used ones. In this section, we apply the proposed model to face verification task under ArcMargin and AddMargin. We denote the cross entropy loss under ArcMargin and AddMargin as ArcLoss and AddLoss and derive the logarithmic importance sampling loss under ArcMargin and AddMargin.

3.1 IS-ArcLoss

To begin with, let us introduce some notations to facilitate the discussion in this section. Suppose is the number of training samples and is the number of classes. denotes the deep feature of -th sample. For , we refer as the target class and as the non-target class (the label excluding the ground truth one). denotes the -th column of the weight and is the bias term. We fix bias for simplicity. Suppose is the angle between and . Following [23, 25], we fix the weight by normalization and fix the deep feature by normalization and rescale it to . The feature scale is set to 64 following [23, 25].

ArcFace(Additive Angular Margin Loss)[23] uses the arc-cosine function to calculate the angle between the feature and the target weight . The model adds an additive angular margin to the target angle to enhance the inter-class discrepancy and intra-class compactness. The ArcLoss for the -th sample is defined as follows

| (21) |

Suppose denote the ArcLoss for the -th sample, and denote the number of samples in each training batch, then we name the proposed logarithmic importance sampling loss as IS-ArcLoss, which is defined as follows

| (22) |

Here, the superscript IS denotes Importance Sampling. In subsequent experiments for ArcLoss and IS-ArcLoss, the angular margin penalty is fixed to 0.5 as recommended by [23].

3.2 IS-AddLoss

AddMargin(Additive margin)[24], also known as the Large-Margin Cosine Loss[25], calculates the angle between the feature and the target weight . The model minus an additive margin to the cosine of the target angle to maximize inter-class variance and minimize intra-class variance. The AddLoss for the -th sample is defined as follows

| (23) |

Suppose denote the AddLoss for the -th sample, then we name the proposed logarithmic importance sampling loss as IS-AddLoss, which is defined as follows

| (24) |

In subsequent experiments for AddLoss and IS-AddLoss, the additive margin is fixed to 0.35 as recommended by [25].

4 Experiments and Results

In this section, we conducted experiments to show the effectiveness of the proposed method. Section 4.1 introduces datasets including training datasets, test datasets and the training schedule. Section 4.2 analyzes the importance sampling weights. Section 4.3 describes how the hyperparameter affects the model performance. Section 4.4 compares ArcLoss and IS-ArcLoss, AddLoss and IS-AddLoss with respect to distribution deviations. Section 4.5 compares ArcLoss and IS-ArcLoss with respect to hard examples.

4.1 Datasets

In the face verification system, training stage and test stage are different. The training stage is used to learn the deep feature embedding for a given image through a classification task. In the test stage, the score of a given image pair is usually calculated by the cosine similarity between the two feature embeddings. If the score is higher than a given threshold, the input pair is considered a positive pair. If the score is lower than a given threshold, the input pair is considered a negative pair. The given verification threshold is obtained from cross-validation by a pre-defined split in test datasets.

Training Datasets In this paper, we uses the Racial Faces in-the-Wild(RFW) database[15], which includes four races, namely Caucasian, African, Asian and Indian. We use BUPT-Equalizedface[15] as the training dataset which contains 27999 individuals and 1251416 images. The number of individuals in Caucasian, African, Asian and Indian are 7000, 7000, 7000 and 6999 respectively. The detailed statistics of BUPT-Equalizedface are given in Appendix-A. In later discussions, the four training subsets in BUPT-Equalizedface are denoted as RFW-Caucasian, RFW-African, RFW-Asian and RFW-Indian.

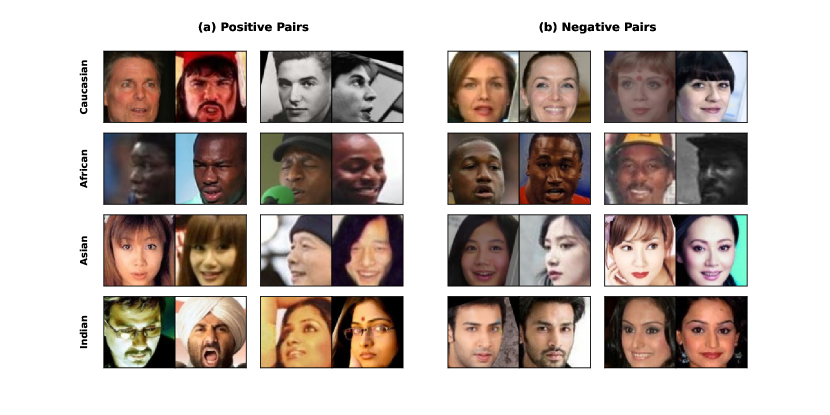

Test Datasets The RFW database has four test subsets, namely Caucasian, African, Asian and Indian. There are about 14K positive pairs and 50M negative pairs[15] for each subset in the RFW-test, most of which are easy to distinguish. In this work, the proposed model aims to emphasize large distribution deviations and hard samples. Therefore, we use the RFW-test for evaluation, which is composed of difficult pairs selected based on cosine similarity between embeddings of each pair[15]. Each subset (race) contains 3000 positive pairs and 3000 negative pairs, splited into 10 subsets in advance. We selected the verification threshold for one split based on the remaining nine splits. We propose RFW-extend as an extension to RFW-test and the details of the dataset are shown in Section 4.5. Figure 1 shows selected positive pairs and negative pairs from each race. It can be seen that some pairs are difficult even for human observers.

Experiment Settings The datasets were aligned and preprocessed in MXNet binary format and the size of the cropped image were set to . We set the training batch size, weight decay and momentum as 128, 5e-4 and 0.9, respectively. The dimension of embedding feature was set to 512 following [23, 25]. The initial learning rate was set to 0.1, and decreased by a factor of 10 at given epochs. For the BUPT-Equalizedface dataset, we trained the network for 27 epochs and decayed the learning rate at epochs 14, 20 and 24. We used half of the suggested epochs in [16] to save training time. We used IResNet-34[23], a 34-layer modified ResNet, as the default backbone in all experiments if not otherwise specified. We followed the Pytorch implementation of insightface111https://github.com/deepinsight/insightface/tree/master/recognition/arcface_torch on one GTX 1080ti and one GTX 3090ti for training.

4.2 Importance sampling weights

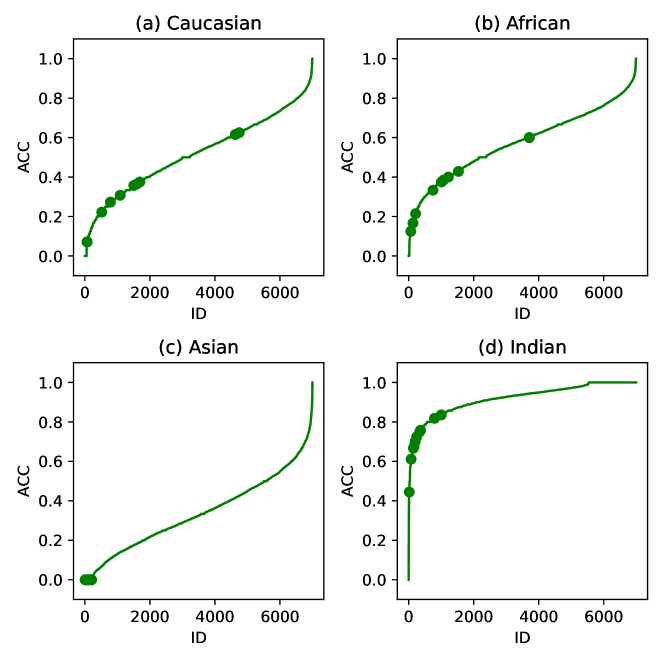

In this section, we give a heuristic example to show that the importance sampling weights emphasize the hard samples in the training dataset. We calculated the average classification accuracy for each individual (ID) and highlighted the individuals with the top-10 maximum average weights. We first calculated the cross entropy loss for each training sample then used (19) to calculate the weight. All models in Figure 2 were trained under IS-ArcLoss(). Figure 2 shows that the individuals with maximum weights are those with low accuracy, especially in (c) where the top-10 maximum weights clustered around 0. Hard samples are normally assumed to have low classification accuracy. Therefore, this observation showed the importance sampling weight emphasized hard training samples. We further analyze how the importance sampling weights change over time in Appendix-B. The experiment results indicated the importance sampling weights gradually concentrated on hard samples as the training proceeded.

4.3 Temperature

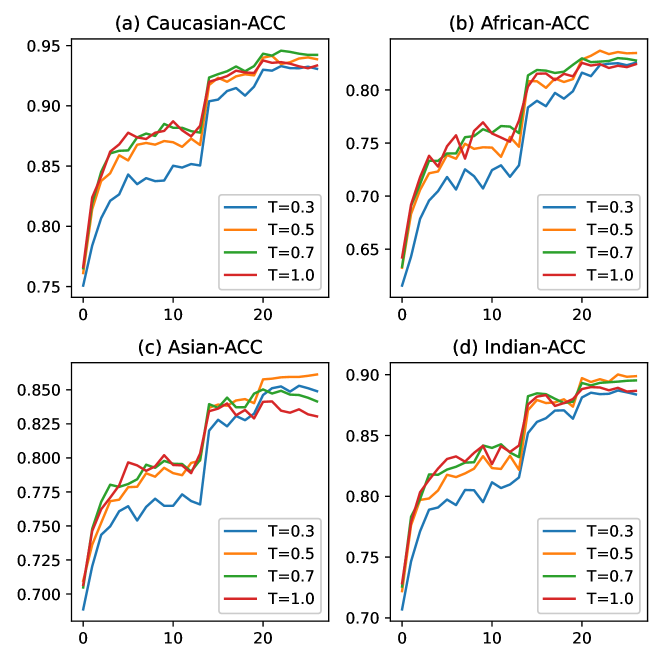

In this section, we analyze how the temperature affects the model performance. Figure 3 compares verification accuracy on four RFW-test datasets when . Figure 3 shows that achieves the best accuracy on African, Asian and Indian while achieves the best accuracy on Caucasian. One possible explanation is that the distribution deviation between RFW-Caucasian (training) and Caucasian(test) is smaller than the distribution deviations between RFW-Caucasian (training) and African/Asian/Indian(test), therefore, the test Caucasian favors a higher temperature, . This observation suggests that lower tolerates larger distribution deviations. In this paper, we used a fixed as the default temperature in all subsequent experiments. However, other training schedules on can be adopted. For example, starting at a high temperature and decaying it during training. How to choose a good schedule on for different learning problems will be explored in our future studies.

4.4 Distribution deviations

| Train | Loss | Cau | Afr | Asi | Ind |

|---|---|---|---|---|---|

| R-Cau | Arc | 93.55 | 82.23 | 84.70 | 88.77 |

| IS-Arc | 94.15(0.60) | 83.22(0.99) | 85.82(1.12) | 89.40(0.63) | |

| R-Afr | Arc | 89.23 | 92.37 | 84.02 | 88.20 |

| IS-Arc | 90.37(1.14) | 92.43(0.06) | 85.82(1.80) | 89.65(1.45) | |

| R-Asi | Arc | 85.50 | 79.13 | 90.90 | 84.45 |

| IS-Arc | 87.20(1.70) | 80.30(1.17) | 91.17(0.27) | 85.32(0.87) | |

| R-Ind | Arc | 87.98 | 81.47 | 83.60 | 91.50 |

| IS-Arc | 88.92(0.94) | 82.30(0.83) | 83.48(-0.12) | 91.80(0.3) |

In this section, we compare the performance of LogISLoss and cross-entropy loss with respect to distribution deviations. Table 1 compares verification accuracy between ArcLoss and IS-ArcLoss. The models were trained on RFW-Caucasian, RFW-African, RFW-Asian, RFW-Indian and tested on Caucasian, African, Asian, Indian. We report the result at the epoch where the training race achieves the highest verification accuracy. The accuracy gain (in small brackets) is the difference between our proposed IS-ArcLoss over ArcLoss. For example, the accuracy of the model trained on RFW-Caucasian and tested on African is 82.23% of ArcLoss and 83.22% of IS-ArcLoss, the accuracy gain is 0.99%. Table 1 shows the following findings. First, IS-ArcLoss performs better than ArcLoss in most cases. Second, the accuracy gains in non-training races are higher compared with the training race on three test datasets(RFW-Caucasian, RFW-African, RFW-Asian). Take the models trained on RFW-Caucasian(first row) for example, the accuracy gain on Caucasian, African, Asian, Indian is 0.6%, 0.99%, 1.12% and 0.63% respectively. The accuracy gain on Caucasian(0.6%) is the lowest. This observation indicates that IS-ArcLoss has better generalization ability compared with ArcLoss. If we assume the accuracy gap is a measure of the distribution shift, then a larger accuracy gap indicates a larger distribution shift. Next, consider the model trained on RFW-African(second row) for example, the test accuracy on Asian, Indian and Caucasian is 84.02%, 88.20% and 89.23%. Under the previous assumption, the distribution deviations between Asian, Indian, Caucasian and African are in increasing order. The accuracy gain on the three datasets is 1.80%, 1.45% and 1.14%, which is in decreasing order. This observation implies that the proposed IS-ArcLoss performs better when the distribution deviation is large. More experiment results on IS-ArcLoss with respect to TAR@FAR and on different backbones are shown in Appendix-C.

| Train | Loss | Cau | Afr | Asi | Ind |

|---|---|---|---|---|---|

| R-Cau | Add | 94.28 | 83.27 | 85.70 | 89.45 |

| IS-Add | 93.68(-0.60) | 83.07(-0.20) | 85.97(0.27) | 89.68(0.23) | |

| R-Afr | Add | 90.85 | 92.72 | 84.85 | 89.85 |

| IS-Add | 89.98(-0.87) | 92.50(-0.22) | 86.10(1.25) | 89.93(0.08) | |

| R-Asi | Add | 86.75 | 79.62 | 91.17 | 85.22 |

| IS-Add | 86.87(0.12) | 80.63(1.01) | 90.28(-0.89) | 85.75(0.53) | |

| R-Ind | Add | 88.93 | 82.78 | 83.93 | 92.13 |

| IS-Add | 88.47(-0.46) | 82.12(-0.66) | 83.02(-0.91) | 91.20(-0.93) |

Table 2 compares verification accuracy between AddLoss and IS-AddLoss. IS-AddLoss performs better than AddLoss on 3(RFW-Caucasian, RFW-African, RFW-Asian) out of 4 test datasets on non-training races. Another interesting observation is in line with the observation in Table 1. For the models trained on RFW-African, the accuracy gain on Asian(84.85%), Indian(89.85%) and Caucasian(90.85%) is 1.25%, 0.08% and -0.87%, which is in decreasing order. For the models trained on RFW-Asian, the accuracy gain on African(79.62%), Indian(85.22%) and Caucasian(86.75%) is 1.01%, 0.53% and 0.12%, which is in decreasing order. The results also confirm our findings in Table 1 that LogISloss performs better when the distribution shift is large.

Table 3 test LogISloss on several popular benchmarks, including Labeled Faces in the Wild(LFW)[28], Age-DB[29], Cross-age LFW(CALFW)[30], Cross-pose LFW(CPLFW)[31] and Celebrities in Frontal-Profile (CFP-FP and CFP-FF)[32], where LFW, AGE-DB, CALFW and CPLFW have 6000 pairs and CFP(large pose variation) has 7000 pairs. These datasets are also split into 10 subsets in advance. The results were evaluated on the final epoch. Table 3 shows that IS-ArcLoss performs better than ArcLoss in most cases. To be specific, the accuracy gains on LFW and CFP-FF are low, the accuracy gains on CALFW and CPLFW are medium, and the accuracy gains on CFP-FP and AgeDB are high.

| Train | Loss | LFW | CFP-FP | CFP-FF | AgeDB | CALFW | CPLFW |

|---|---|---|---|---|---|---|---|

| RFW-Caucasian | ArcLoss | 99.30 | 86.39 | 98.90 | 94.67 | 92.28 | 84.40 |

| IS-ArcLoss | 99.33(0.03) | 88.41(2.02) | 99.11(0.21) | 95.47(0.80) | 92.87(0.59) | 84.90(0.50) | |

| RFW-African | ArcLoss | 99.12 | 86.03 | 98.77 | 90.68 | 91.37 | 82.82 |

| IS-ArcLoss | 99.10(-0.02) | 87.09(1.06) | 98.97(0.20) | 92.20(1.52) | 91.77(0.40) | 83.62(0.80) | |

| RFW-Asian | ArcLoss | 98.13 | 89.46 | 97.49 | 89.55 | 88.42 | 82.65 |

| IS-ArcLoss | 98.62(0.49) | 91.51(2.05) | 97.37(-0.12) | 90.30(0.75) | 89.28(0.86) | 83.17(0.52) | |

| RFW-Indian | ArcLoss | 98.62 | 92.30 | 98.27 | 89.28 | 89.32 | 84.93 |

| IS-ArcLoss | 98.68(0.06) | 92.53(0.23) | 98.41(0.14) | 90.77(1.49) | 89.42(0.10) | 85.57(0.64) |

4.5 Hard Pairs

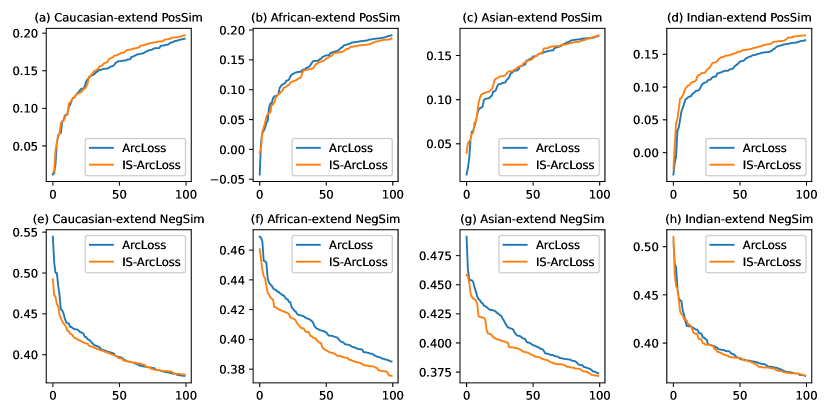

RFW-test-extend Dataset In this experiment, we propose an extended test dataset RFW-test-extend to include more pairs for evaluation. The extended dataset has four subsets, namely Caucasian-extend, African-extend, Asian-extend and Indian-extend. The number of positive pairs for the four subsets are 13650,14050,15690,13860 and the number of negative pairs are 42260,43370,54890,43120. All positive pairs and negative pairs are randomly split into 10 folds. The generation and statistics for RFW-extend are shown in Appendix-A.In face verification task, for positive pairs, the similarity between two images should be high, therefore hard positive pairs have low similarity. For negative pairs, the similarity between two images should be low, therefore hard negative pairs have high similarity. Figure 4 shows the similarity of top-100 hard positive and hard negative pairs. The models were trained on the whole BUPT-Equalizedface datasets (27999 IDs) under ArcMargin. Figure 4 shows that IS-ArcLoss has higher similarity for positive pairs compared with ArcLoss in (a)(Caucasian-extend), (c)(Asian-extend), (d)(Indian-extend) and it has lower similarity for negative pairs in all tested datasets. Visualization of the top-2 hardest pairs for each race is shown in Figure 1. This observation indicates that IS-ArcLoss emphasizes the hardest pairs.

5 Conclusion

In this paper, we develop an information-theoretic learning model derived from importance sampling to deal with the problem of data distribution deviation between current training data and future test data. We reveal that minimization of the objective function of LogISloss is equivalent to the minimization of the p-norm loss function when . We applied the proposed model to the face verification task. Experiments on distribution deviations showed that the learned features under LogISloss generalized well across different races and across different datasets compared with cross-entropy loss and experiments on the hardest pairs showed that LogISloss emphasized the hard pairs. Applying ISloss/LogISloss to other machine learning problems and designing a good schedule for remains to be explored in future endeavors.

Disclosure statement

No potential conflict of interest was reported by the authors.

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grants 61976174.

References

- [1] F. Farnia, D. Tse, A minimax approach to supervised learning, Advances in Neural Information Processing Systems 29 (2016).

- [2] L. Pardo, Statistical inference based on divergence measures, Chapman and Hall/CRC, 2018.

- [3] T. Kailath, The divergence and bhattacharyya distance measures in signal selection, IEEE transactions on communication technology 15 (1) (1967) 52–60.

- [4] A. Rényi, et al., On measures of entropy and information, in: Proceedings of the fourth Berkeley symposium on mathematical statistics and probability, Vol. 1, Berkeley, California, USA, 1961.

- [5] S. Kullback, R. A. Leibler, On information and sufficiency, The annals of mathematical statistics 22 (1) (1951) 79–86.

- [6] P. M. Williams, Bayesian conditionalisation and the principle of minimum information, The British Journal for the Philosophy of Science 31 (2) (1980) 131–144.

- [7] T. Genewein, F. Leibfried, J. Grau-Moya, D. A. Braun, Bounded rationality, abstraction, and hierarchical decision-making: An information-theoretic optimality principle, Frontiers in Robotics and AI 2 (2015) 27.

- [8] P. A. Ortega, D. A. Braun, Thermodynamics as a theory of decision-making with information-processing costs, Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 469 (2153) (2013) 20120683.

- [9] J. Schulman, S. Levine, P. Abbeel, M. Jordan, P. Moritz, Trust region policy optimization, in: International conference on machine learning, PMLR, 2015, pp. 1889–1897.

- [10] H. Hihn, S. Gottwald, D. A. Braun, An information-theoretic on-line learning principle for specialization in hierarchical decision-making systems, in: 2019 IEEE 58th conference on decision and control (CDC), IEEE, 2019, pp. 3677–3684.

- [11] P. W. Glynn, D. L. Iglehart, Importance sampling for stochastic simulations, Management science 35 (11) (1989) 1367–1392.

- [12] L. Shi, Hierarchical Bayesian inference in the brain: Psychological models and neural implementation, University of California, Berkeley, 2009.

- [13] L. Shi, T. Griffiths, Neural implementation of hierarchical bayesian inference by importance sampling, Advances in neural information processing systems 22 (2009).

- [14] J. Chen, Z. Guo, J. Hu, Ring-regularized cosine similarity learning for fine-grained face verification, Pattern Recognition Letters 148 (2021) 68–74.

- [15] M. Wang, W. Deng, J. Hu, X. Tao, Y. Huang, Racial faces in the wild: Reducing racial bias by information maximization adaptation network, in: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2019.

- [16] M. Wang, Y. Zhang, W. Deng, Meta balanced network for fair face recognition, IEEE Transactions on Pattern Analysis and Machine Intelligence (2021) 1–1doi:10.1109/TPAMI.2021.3103191.

- [17] V. Vapnik, The nature of statistical learning theory, Springer science & business media, 1999.

- [18] R. Y. Rubinstein, D. P. Kroese, Simulation and the Monte Carlo method, John Wiley & Sons, 2016.

- [19] S. Bianco, Large age-gap face verification by feature injection in deep networks, Pattern Recognition Letters 90 (2017) 36–42.

- [20] S. Pss, M. Bhaskar, Rfid and pose invariant face verification based automated classroom attendance system, in: 2016 International Conference on Microelectronics, Computing and Communications (MicroCom), IEEE, 2016, pp. 1–6.

- [21] O. E. Aru, I. Gozie, Facial verification technology for use in atm transactions, American Journal of Engineering Research (AJER) 2 (5) (2013) 188–193.

- [22] Q. T. Van, H. N. Van, L. D. V. Hoang, T. N. Ngoc, V. L. Duc, D. L. Dai, T. N. Bao, S. N. Quang, L. N. Van, A. D. Trung, et al., Intelligent parking system using automated license plate recognition and face verification, in: Proceedings of International Conference on Computing and Communication Networks: ICCCN 2021, Springer, 2022, pp. 219–227.

- [23] J. Deng, J. Guo, N. Xue, S. Zafeiriou, Arcface: Additive angular margin loss for deep face recognition, in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 4690–4699.

- [24] F. Wang, J. Cheng, W. Liu, H. Liu, Additive margin softmax for face verification, IEEE Signal Processing Letters 25 (7) (2018) 926–930.

- [25] H. Wang, Y. Wang, Z. Zhou, X. Ji, D. Gong, J. Zhou, Z. Li, W. Liu, Cosface: Large margin cosine loss for deep face recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 5265–5274.

- [26] X. Wang, S. Zhang, S. Wang, T. Fu, H. Shi, T. Mei, Mis-classified vector guided softmax loss for face recognition, in: Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34, 2020, pp. 12241–12248.

- [27] W. Liu, Y. Wen, B. Raj, R. Singh, A. Weller, Sphereface revived: Unifying hyperspherical face recognition, IEEE Transactions on Pattern Analysis and Machine Intelligence (2022).

- [28] G. B. Huang, M. Mattar, T. Berg, E. Learned-Miller, Labeled faces in the wild: A database forstudying face recognition in unconstrained environments, in: Workshop on faces in’Real-Life’Images: detection, alignment, and recognition, 2008.

- [29] S. Moschoglou, A. Papaioannou, C. Sagonas, J. Deng, I. Kotsia, S. Zafeiriou, Agedb: the first manually collected, in-the-wild age database, in: proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2017, pp. 51–59.

- [30] T. Zheng, W. Deng, J. Hu, Cross-age lfw: A database for studying cross-age face recognition in unconstrained environments, arXiv preprint arXiv:1708.08197 (2017). doi:https://doi.org/10.48550/arXiv.1708.08197.

- [31] T. Zheng, W. Deng, Cross-pose lfw: A database for studying cross-pose face recognition in unconstrained environments, Beijing University of Posts and Telecommunications, Tech. Rep 5 (2018) 7.

- [32] S. Sengupta, J.-C. Chen, C. Castillo, V. M. Patel, R. Chellappa, D. W. Jacobs, Frontal to profile face verification in the wild, in: 2016 IEEE winter conference on applications of computer vision (WACV), IEEE, 2016, pp. 1–9.