An Integrated Optimization-Learning Framework for Online Combinatorial Computation Offloading in MEC Networks

Abstract

Mobile edge computing (MEC) is a promising paradigm to accommodate the increasingly prosperous delay-sensitive and computation-intensive applications in 5G systems. To achieve optimum computation performance in a dynamic MEC environment, mobile devices often need to make online decisions on whether to offload the computation tasks to nearby edge terminals under the uncertainty of future system information (e.g., random wireless channel gain and task arrivals). The design of an efficient online offloading algorithm is challenging. On one hand, the fast-varying edge environment requires frequently solving a hard combinatorial optimization problem where the integer offloading decision and continuous resource allocation variables are strongly coupled. On the other hand, the uncertainty of future system parameters makes it hard for the online decisions to satisfy long-term system constraints. To address these challenges, this article overviews the existing methods and introduces a novel framework that efficiently integrates model-based optimization and model-free learning techniques. Besides, we suggest some promising future research directions of online computation offloading control in MEC networks.

I Introduction

To meet the stringent latency requirements of thriving wireless intelligent applications (e.g., online 3D gaming and autonomous driving), mobile edge computing (MEC) [1] has emerged as a new computation paradigm to provide ubiquitous computation services. Via pushing computation resources toward network edges, MEC enables flexible and rapid deployment of new services that require intensive computation and low latency. In MEC systems, mobile devices (MDs) with hardware limitations (e.g., limited local computational power, storage and energy) can offload their computation-intensive tasks to nearby resourceful edge servers. As shown in Fig. 1, the potential application of MEC technology ranges from typical IoT (internet of things) networks to newly-emerging UAV (unmanned aerial vehicle) communication systems.

Rather than persistently offloading tasks for edge processing, offloading computation tasks in an opportunistic manner offers substantial performance gain. For instance, MDs may prefer local computing to offloading to a server when the communication channel to the edge server (ES) is weak or the ES is heavily loaded. Due to the sharing of limited edge communication and computation resources among MDs, the binary offloading decisions of different MDs or tasks (e.g., offloading a computation task or computing locally) are strongly coupled and shall be jointly optimized. As a result, the optimal offloading solution needs to be selected from a discrete action set that grows exponentially with the problem size (e.g., number of MDs or tasks). Besides, the continuous resource allocation variables (e.g., CPU frequency, transmit power and bandwidth) need to be jointly optimized with the integer offloading decisions. This renders the computation offloading problem (COP) a mixed-integer nonlinear programming (MINLP) that is generally hard to be solved efficiently.

In practice, the volatile MEC environment urges MDs to perform online offloading decisions in real time under the uncertainty of future system parameters (e.g., random channel conditions and task arrivals). The corresponding online offloading algorithm design faces two challenges. First, the fast-varying edge environment requires frequently re-solving the hard COP when the system parameters vary significantly. When the problem size is large, it is computationally prohibitive to obtain the optimal offloading solution in real-time implementation. Second, with uncertain future system information, it is hard for the online offloading decisions to satisfy long-term system constraints, e.g., average power consumption constraint or achieving task data queue stability.

Existing online offloading algorithms are mainly categorized into model-based optimization and model-free machine learning algorithms. In particular, the former formulates and solves the COP based on explicit mathematical models that describe the physical system. A major advantage of model-based optimization is the theoretical guarantee of convergence toward an optimal offloading solution. Due to the combinatorial nature of COP, optimization-based methods often require a large number of iterations to achieve a satisfying solution. Besides, they fail to exploit the experience learned from historical data, and hence need to recalculate the COP from scratch once system parameters change. As a result, it is fundamentally difficult for optimization-based methods to adapt to fast-varying MEC environments.

Recently, machine learning based methods, especially deep reinforcement learning (DRL), have emerged as promising alternatives to mathematical optimization. Based on the past experience, DRL takes advantage of deep neural networks (DNNs) to construct a direct mapping from the input system parameters to the output offloading and resource allocation decisions without explicitly solving the mathematical optimization of COP. Its low execution latency is particularly appealing to online decision making. A key drawback of model-free DRL is the slow convergence when the dimension of the action space is large. The mixed integer-continuous variables in COP yield a very large, or even infinite, action space, yielding the curse-of-dimensionality. Besides, the actions produced by the native action-reward structure of DRL may not satisfy the constraints of COP, such as per-slot SINR (signal-to-interference-plus-noise ratio) constraint and long-term queue stability constraint.

In this article, we introduce a novel framework that skillfully integrates model-based optimization and model-free DRL to overcome their respective drawbacks mentioned above. We adopt an actor-critic structure (as illustrated in Fig. 2 in section III) to optimize the offloading and resource allocation decisions. Specifically, we apply a DNN-based actor module to generate integer offloading decisions and resort to conventional optimization in the critic module to obtain the continuous resource allocation solution given the generated offloading decisions. The two modules operate alternately: the critic module evaluates the integer offloading decision produced by the actor module, and the actor module uses the evaluations to update its mapping policy for generating the offloading decisions. Such a training process exploits the past experience to repeatedly update the DNN model parameters (i.e., the policy of the DNN in the actor module) until converging to the optimal mapping policy. Subsequently, we can directly map any new input system parameters to the optimal output offloading decisions without numerical optimization, taking negligible computation time against model-based optimization methods. This essentially facilitates real-time online decision making in a fast-varying MEC environment. On the other hand, compared to conventional DRL that treats both the integer offloading and continuous resource allocation decisions as the action, the proposed approach significantly reduces the action space of the actor module by considering only the binary offloading decisions as the action, leaving resource allocation to the numerical optimization in the critic module. This greatly simplifies the learning task to a classical classification problem. More importantly, the optimization-based critic module provides precise evaluation of the integer offloading decisions generated by the actor module. This greatly improves the convergence of the training process in comparison with conventional DRL, whose convergence is often jeopardized by the imprecise evaluation on actions before the critic network is sufficiently trained. Moreover, the optimization-based critic guarantees the feasibility of the resource allocation solutions. Last but not least, the proposed framework can flexibly incorporate various off-the-shelf learning and optimization methods according to different MEC application requirements. For instance, by introducing the well-established Lyapunov optimization technique, we are able to handle long-term performance constraints under random system parameters, as detailed in section IV. Overall, the proposed integrated optimization-learning framework employs the benefits of both sides. By learning from the past experience, it is able to generate optimal offloading solutions very quickly, greatly enhancing the practicality in fast-varying MEC environments. Meanwhile, model-based optimization in the critic module guarantees fast and robust convergence even when the action space is large.

The article is organized as follows. In section II, we introduce the online computation offloading problem in MEC networks and review some conventional methods. In section III, we introduce the novel integrated optimization-learning framework for online offloading control and discuss in section IV its extension to stochastic cases. At last, we suggest some future research directions and conclude the paper.

II Online Computation Offloading Problem and Conventional Solutions

We consider an MEC network consisting of one or multiple ESs and MDs. Depending on the application scenario, the MDs can be powered by grid power, batteries, or harvested energy. Suppose that the time is divided into consecutive slots, within each the system parameters (such as wireless channel condition) remain unchanged. In each time slot, the system operator makes computation offloading decision for each task based on the current system parameters. We adopt a binary computation offloading policy [1], namely, the tasks are either computed locally or at the ES. In general, an online computation offloading problem contains the following three key elements.

-

•

Problem Parameters: The problem parameters include exogenous and endogenous parameters. In time slot , the exogenous parameters, denoted as , capture the realizations of exogenous environment random variables, including the wireless channel gain, task data arrivals, and harvested renewable energy, etc. On the other hand, the endogenous parameters, denoted as , depict the time-varying system state of the ESs and the MDs, such as the battery level and data queue length, as well as the static hardware settings of the MEC system like the maximum CPU frequency and transmit power. For simplicity, we denote the combination of exogenous and endogenous parameters as .

-

•

Action: The action in time slot , denoted as , includes computation offloading and resource allocation decisions. In particular, consists of both integer and continuous variables, denoted as . The integer variables correspond to the binary offloading decisions and the continuous variables correspond to the resource allocation decisions such as the transmit power and bandwidth occupied by MDs. These actions are constrained by limited system resource and users’ performance requirements in each time slot. Besides, the sequential actions , for , may be subject to long-term constraints such as average power consumption and task data queue stability of the ESs and MDs.

-

•

System Utility: The system utility in the th time slot, denoted as , is a real-valued function that measures the per-slot performance of the adopted action under parameters . For example, in a computation-intensive application, the utility can be the data processing rate of the system. For energy-aware applications, it is the energy consumption of energy-scarce devices.

In a fast-varying edge environment, it is very challenging to obtain the optimal actions in real time due to the combinatorial nature of the problem. In the following, we provide a brief review of existing offloading algorithms in MEC systems.

II-A Model-Based Optimization

Optimization-based methods apply integer or convex optimization techniques to solve the COP based on explicit mathematical formulations (i.e., model-based). Some representative approaches include:

-

•

Relaxation-based approaches: The main idea is to first relax the integer variables to continuous ones and reformulate the problem into an “easier” continuous optimization problem. Then, a feasible but often suboptimal integer solution is recovered from the solution of the relaxed problem. The relaxation can be performed either directly on binary offloading decision variables through linear relaxation (LR) [2] and semidefinite relaxation (SDR) [3], or indirectly on some key metrics related to the binary decisions, e.g., the portion of energy consumed by computation offloading [4]. Despite the low computing complexity, the solution quality of relaxation-based methods is not guaranteed. Besides, some MINLP problems after relaxation are still hard non-convex optimization problems due to the strong coupling between the integer and continuous variables.

-

•

Local-search-based approaches: Local-search-based approaches start from an initial solution and iteratively find a better solution in the vicinity of the current solution until no further improvement can be made. For example, [4] applies the coordinate descent (CD) method to swap the computation mode (e.g., local computing or edge computing) of only one best MD in each iteration until a local optimum is reached. To avoid trapping into a local optimum, [5] uses Gibbs sampling to update the binary variables according to a specific probability distribution function.

-

•

Metaheuristic approaches: Instead of enumerating all the possible combinations, metaheuristics search over a subset of feasible solutions in a structured manner, e.g., using bio-inspired method. For example, [6] proposed a hierarchical algorithm based on genetic algorithm (GA) and particle swarm optimization (PSO) to optimize the combinatorial offloading decisions in a large action space.

-

•

Decomposition approaches: Decomposition methods effectively reduce the computational complexity of mixed-integer optimization by decomposing a large-size problem into smaller parallel sub-problems. Blending the benefits of dual decomposition and augmented Lagrangian methods, alternating direction method of multipliers (ADMM) decomposes the hard combinatorial COP into parallel subproblems with only one integer variable [7]. The optimal offloading decision of each subproblem is obtained by simply enumerating two individual offloading options, i.e., offloading and local computation, for each user.

Due to the NP-hardness of COP, model-based optimization methods require a large number of iterations to reach a satisfying solution. Besides, they fail to make use of the valuable historical data, and thus need to completely recalculate the COP once the system parameters vary. As a result, the high complexity prohibits their real-time implementation in a fast-varying environment.

II-B Model-Free DRL-based Methods

Instead of explicitly solving each new instance of COP numerically, DRL-based methods build on the past experience to construct a direct mapping from the problem parameters to the optimal control action. By model free, we mean DRL is data driven instead of relying on an explicit (and sometimes imprecise) mathematical model of the system. Once the deep learning network is fully trained by the past experience, DRL can output the control actions very quickly in response to a new set of input system parameters. Existing DRL methods are mainly categorized into value-based, policy-based, and hybrid approaches, as detailed below.

-

•

Value-based DRL: Value-based DRL methods approximate the state-action value function (generally referred to as Q-value) using a deep neural network (DNN) and select the most-rewarding action in each iteration based on the Q-value estimation of all feasible actions. Some recent application of value-based methods on online offloading control include deep Q-learning network (DQN) [8] and double DQN [9].

-

•

Policy-based DRL: Policy-based DRL methods employ a DNN (referred to as the actor network) to construct the optimal offloading policy by iteratively tuning the DNN parameters using the policy gradient technique. Meanwhile, the quality of the policy is evaluated by a dedicated critic network using a separate DNN or Monte Carlo based methods. Commonly-used policy-based DRL methods in MEC systems include the actor-critic DRL [10] and the deep deterministic policy gradient (DDPG) [11].

-

•

Hybrid DRL: Hybrid DRL methods combine the merits of both value-based and policy-based DRL. For example, [12] adopts a DDPG-based actor module to obtain continuous resource allocation, followed by a DQN-based critic module to evaluate the resource allocation decision and select the best integer offloading decision.

To solve COP, value-based methods are computationally inefficient due to the large and hybrid integer-continuous action space. Also, it is frequently reported that policy-based methods suffer slow convergence or even divergence, especially when the critic module fails to produce a precise and stable value function approximation. Besides, model-free DRL cannot guarantee that the output actions satisfy both the per-slot and long-term system constraints.

To address the fundamental drawbacks of the existing optimization- and DRL-based methods, we introduce a novel integrated optimization-learning framework, referred to as DROO (deep reinforcement learning-based online offloading) [13], in the following section.

III An Integrated Optimization and Learning Approach

In this section, we introduce the basic structure of DROO that solves a per-slot deterministic COP. For each slot , we maximize the per-slot utility given the realization of . In the next section, we will extend DROO to solve a general stochastic COP problem with long-term objective and constraints.

As shown in Fig. 2, DROO solves the COP via three interactive modules, namely actor module, critic module, and policy update module. Specifically, the actor module adopts model-free learning, while the critic module adopts model-based optimization. Below are the details of the three modules.

-

•

Learning-based Actor Module: The actor module consists of a DNN and an offloading action quantizer. It is different from the actor modules of conventional DRLs in the following two senses. First, instead of outputting an action , the proposed actor DNN outputs a relaxed offloading decision based on the input system parameters . This greatly reduces the action space of the DNN network and thus expediting the training process. Second, unlike the actor network of conventional policy-based DRL methods that generate only a single action, DROO quantizes into candidate binary offloading decisions (), from which one best decision will be selected later. The quantization procedure unifies the exploration and exploitation during the learning progress. In particular, setting a larger leads to more exploration than exploitation, and vice versa. As a rule of thumb, a larger is to be used when device number is large. Intuitively, the ’s should be sufficiently close to to make good use of the DNN’s output, and meanwhile sufficiently separated from each other to avoid premature convergence to a suboptimal solution. DROO designs the quantizer following an order-preserving rule to generate high quantization diversity [13]. We can further introduce random noise into the quantizer to increase exploration [14].

-

•

Optimization-based Critic Module: Instead of using another DNN in the critic module as in conventional actor-critic frameworks, DROO takes advantage of the model information to precisely assess the offloading decisions through model-based optimization. In particular, the critic module evaluates the binary decisions ’s by solving the corresponding resource allocation subproblem and obtains the score for each , where is the optimal resource allocation given . Then, the action selection step selects the offloading decision with the highest score, and outputs the action . The action is then executed in the current time slot. Notice that in most cases, the resource allocation subproblem is convex, so that we can obtain the optimal efficiently. From the above description, the major computation complexity of DROO arises from solving the resource allocation subproblem times to acquire the best in each time slot. A larger explores more binary decisions, which leads to faster convergence rate and better offloading policy of the DNN after convergence. However, a larger also incurs heavier computation complexity in the online offloading decision process. To balance the performance and computation complexity, DROO initially sets a large and adaptively decreases over time as the DNN gradually approaches the optimal policy [13]. By doing so, DROO incurs very low computational complexity even with a relatively large action space.

-

•

Policy Update Module: DROO follows the standard experience replay technique to update the policy of the actor module. Specifically, the best decision and the corresponding input are added as a newly labeled training sample to a replay memory, from which a batch of samples is randomly selected to update the parameters of the DNN at every training interval. When the replay memory is full, the oldest training samples will be overwritten by the newest ones.

As iterations proceed, DROO gradually approaches the optimal state-action mapping by learning from the best actions experienced most recently. After convergence, DROO can quickly output the optimal solution instead of computing the COP from scratch when observing new input problem parameters.

DROO significantly improves the convergence of the training process compared with conventional actor-critic DRL, thanks to the following two reasons. First, the COP is decoupled under the learning-optimization structure. Both the model-free actor module and the model-based critic module solve much simpler sub-problems than the original large-size MINLP. In particular, the actor module solves a conventional multi-class classification problem, and the critic module tackles an “easy” continuous optimization. Second, model-based optimization in the critic module not only guarantees the feasibility of the output action, but also provides quick, precise, and stable evaluation of the actions. This fundamentally improves the convergence of the DRL algorithm.

To evaluate the performance of DROO, we conduct numerical simulations in a multi-user MEC network. We define the reward of each MD as the computation rate (i.e., the total amount of task data processed at both MDs and ES) in a time slot and the system utility as the weighted sum computation rate of all MDs. We aim to maximize the system utility by treating the wireless channel gains as the input problem parameters. In the simulations, we set the weighting factor of the th MD as 1 if is odd and as 1.5 otherwise. We set the actor DNN as a fully-connected multi-layer perceptron consisting of one input layer, two hidden layers, and one output layer, where the first and the second hidden layers have and hidden neurons, respectively. The two hidden layers use ReLu activation function, and the output layer uses sigmoid activation function. The replay memory size and the training sample batch size is .111The complete source code implementing DROO is available on-line at https://github.com/revenol/DROO.

In Fig. 3, we first investigate the training loss and normalized computation rate (NCR) of DROO with MDs, where the training loss is computed by the averaged cross-entropy loss function and the computation rate is normalized by the maximum achievable rate. As shown in the figure, DROO only requires about slots to converge. The computation rate approaches the optimal one at convergence. To further verify the robustness of DROO, we alternate the values of all weighting factors (from 1 to 1.5 and vice versa) at both and . The results show that DROO quickly adapts to the new parameters and automatically converges to new optimum even with a sharp variation of the weighting factors.

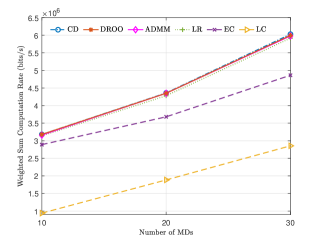

In Fig. 4, we compare the computation rate of DROO with five representative benchmark algorithms when the number of MDs varies. Specifically, local computing (LC) refers to the case where all MDs compute locally. Likewise, edge computing (EC) enforces the MDs to offload all the tasks for edge computing. Meanwhile, we plot the result of CD (coordinate descent) as an optimal benchmark, as CD is likely to achieve a close-to-optimal solution [4]. The results show that DROO achieves almost identical performance as the CD method, and significantly outperforms the LC and EC methods. To show the real-time performance of DROO, we compare in [13] the computational time for LR (linear relaxation), ADMM, CD and DROO to output a solution of . The training of the DNN is infrequent (once every tens of time slots) and can be performed in parallel with task offloading and computation, which does not incur additional delay overhead. Therefore, we only consider the computation delay of offloading action generation when comparing the computation delay with other benchmark methods. The results in [13] show that DROO requires substantially less computational time than LR, ADMM, and CD (only about 0.059s with 30 MDs compared to 0.81s for LR, 3.9s for ADMM and 3.8s for CD methods). This makes DROO technically viable for real-time offloading control in a fast-varying edge environment.

IV A Lyapunov-aided DRL Framework For the Stochastic COP

In this section, we extend the basic DROO framework to solve a stochastic COP with both long-term objective and constraints. The key is to integrate the well-known Lyapunov optimization in the design of the critic module, resulting in a Lyapunov-aided DROO framework named LyDROO [15]. As shown in Fig. 5, LyDROO makes three major modifications to the basic DROO framework.

-

•

Equivalent Queueing Modeling: Besides considering the physical queue backlogs, such as task data and battery energy queues, LyDROO introduces virtual queues for the long-term constraints such as average energy consumption and computation delay. Based on the Lyapunov optimization theory, LyDROO interprets the stochastic COP as an equivalent queue stability control problem, where the long-term constraints can be satisfied by stabilizing all the actual and virtual queues .

-

•

Lyapunov-based Online Control: LyDROO adopts Lyapunov optimization to remove the temporal correlations among actions in different time slots while keeping stable . Specifically, LyDROO slightly modifies the critic module of DROO in Fig. 2. That is, instead of maximizing in the critic, it minimizes a drift-plus-penalty function for each candidate binary candidate action, where increases with and decreases with the utility value in the th time frame. Intuitively, by constantly minimizing , LyDROO simultaneously maximizes the long-term utility and satisfies the long-term constraints by keeping all the queues stable. Notice that minimizing requires only the problem parameters of the th time frame. Therefore, we can implement the proposed LyDROO algorithm in a fully online manner without any future information..

-

•

Lyapunov-aided Critic: As illustrated at the right half of Fig. 5, LyDROO solves each per-slot deterministic MINLP following the idea of DROO. At the begining of the th time slot, LyDROO inputs the current problem parameters to the actor module, which outputs candidate binary offloading decisions. The critic module optimizes the resource allocation variables by minimizing the drift-plus-penalty function given the offloading decisions. After obtaining the scores of all the offloading actions, LyDROO stores the best-scored offloading action and the input as a new training sample into the replay memory to update the policy of the actor module. Then, LyDROO renews the queue state by executing the offloading action in the current time slot and starts a new iteration until convergence.

In the following, we conduct numerical simulations to evaluate the performance of LyDROO. We consider an MEC network of MDs with random task data arrivals. We intend to maximize the time-averaged weighted sum computation rate of all MDs while guaranteeing the task data queue stability and average power constraints at each MD. The input problem parameters include the wireless channel gains from all MDs to the ES, the task data arrivals at all MDs, and the data queue and virtual energy queue backlogs at all MDs. We assume that the task data arrival rates of all MDs follow exponential distribution with identical average rate . We set the average power constraint at each MD to 0.08 Watt. We consider two different deep learning models in the actor module of LyDROO: the DNN network and the CNN network.222The complete source code implementing LyDROO is available on-line at https://github.com/revenol/LyDROO. For performance comparison, we also consider two benchmark methods: Lyapunov-guided coordinated decent (LyCD) and Myopic optimization. Similar to LyDROO, LyCD transforms the stochastic problem into the per-slot deterministic MINLP, but solves the deterministic problem using optimization-based CD method instead of DROO. LyCD yields close-to-optimal performance, and yet is unsuitable for real-time implementation due to its high computational complexity. On the other hand, the Myopic method ignores the queue backlogs and greedily maximizes the weighted sum computation rate in each time slot until the prescribed energy budget exhausts.

The simulation results are plotted in Fig. 6. In the top sub-figure, we plot the variation of average data queue length of the MDs over time with Mbps. The results show that the Myopic method is unable to stabilize all the data queues. In contrast, the queue length of LyDROO becomes stable as time proceeds. Compared to the DNN network, the CNN network converges much faster and significantly reduces the data queue backlog during the learning stage. In the bottom sub-figure, we compare the average queue length of the three methods under different . The results show that, LyCD and LyDROO yield stable data queues for all considered data arrival rates. In contrast, Myopic cannot maintain a stable data queue when Mbps. It is also worth mentioning that both DNN- and CNN-based LyDROO have similar stable computation rate regions as LyCD, but taking much less computational time (e.g., 0.156s for DNN-based LyDROO vs. 8.02s for LyCD with 30 MDs.) [15]. These numerical results verify the effectiveness of LyDROO on solving the online offloading problem with long-term constraints.

V Future research directions

In this section, we outline several future research directions that we find particularly valuable.

V-A Online Offloading Control under Future Prediction

In a stochastic MEC system, the decision maker can predict future system information (e.g., locations of moving MDs) based on historical observation to assist online offloading decisions. One possible solution is to modify the actor module of DROO, e.g., by replacing the DNN with other neural networks with prediction capability such as recursive neural network (RNN) and long short term memory (LSTM) network. Besides, we may incorporate robust optimization techniques in the critic module to alleviate the negative impact of imprecise prediction.

V-B Distributed Online Offloading Control

When implementing DROO and LyDROO, the system operator makes centralized control based on the global system information collected from all MDs. Centralized control, however, could be costly in a large distributed MEC system. Meanwhile, due to the security and privacy concerns, MDs may be reluctant to share the parameters containing private data (e.g., device parameters which may expose user’s shopping habits). To address this problem, a promising approach is to exploit distributed learning/optimization techniques such as federated learning and multi-agent learning to design individual actor and critic modules at distributed MDs.

V-C Applications beyond Online Computation Offloading

Beyond solving the COPs in MEC networks, the DROO framework is applicable to online control in a wide-range of applications with hybrid integer-continuous optimization structure. As shown in Fig. 1, the binary decisions may denote the routing planning in an IoT network, mode selection in a device-to-device assisted communication system, user association in a UAV-assisted scenario, or service placement in a vehicular environment. Besides, DROO can be applied to on-off beamforming control in Intelligent reflecting surface (IRS) and massive multiple-input and multiple-output (MIMO) systems, etc.

VI Conclusion Remarks

In this article, we have provided an overview of the online computation offloading control methods in MEC systems. In particular, we introduced an integrated optimization-learning framework, DROO, that takes advantage of both past experience and model information to provide fast and robust convergence as well as close-to-optimal real-time offloading control. By incorporating the Lyapunov optimization, we demonstrated the flexibility of DROO to solve long-term stochastic online control problems. Besides, we have highlighted several valuable future research topics and discussed the challenges therein.

References

- [1] Y. Mao, C. You, J. Zhang, K. Huang, and K. B. Letaief, “A survey on mobile edge computing: the communication perspective,” IEEE Commun. Surv. Tutor., vol. 19, no. 4, pp. 2322–2358, Fourthquarter, 2017.

- [2] F. Wang, J. Xu, and Z. Ding, “Multi-antenna NOMA for computation offloading in multiuser mobile edge computing systems,” IEEE Trans. Commun., vol. 67, no. 3, pp. 2450–2463, Mar. 2019.

- [3] T. Q. Dinh, J. Tang, Q. D. La, and T. Q. S. Quek, “Offloading in mobile edge computing: Task allocation and computational frequency scaling,” IEEE Trans. Commun., vol. 65, no. 8, pp. 3571–3584, Apr. 2017.

- [4] S. Bi and Y. J. Zhang, “Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading,” IEEE Trans. Wireless Commun., vol. 17, no. 6, pp. 4177–4190, Jun. 2018.

- [5] J. Yan, S. Bi, Y. J. Zhang, and M. Tao, “Optimal task offloading and resource allocation in mobile-edge computing with inter-user task dependency,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 235–250, Oct. 2020.

- [6] F. Guo, H. Zhang, H. Ji, X. Li, and V. C. M. Leung, “An efficient computation offloading management scheme in the densely deployed small cell networks with mobile edge computing,” IEEE/ACM Trans. Netw., vol. 26, no. 6, pp. 2651–2664, Dec. 2018.

- [7] X. Li, S. Bi, and H. Wang, “Optimizing resource allocation for joint AI model training and task inference in edge intelligence systems,” IEEE Wireless Commun. Lett., vol. 10, no. 3, pp. 532–536, Mar. 2021.

- [8] M. Min, L. Xiao, Y. Chen, P. Cheng, D. Wu, and W. Zhuang, “Learning-based computation offloading for IoT devices with energy harvesting,” IEEE Trans. Veh. Technol., vol. 68, no. 2, pp. 1930–1941, Feb. 2019.

- [9] X. Chen, H. Zhang, C. Wu, S. Mao, Y. Ji, and M. Bennis, “Optimized computation offloading performance in virtual edge computing systems via deep reinforcement learning,” IEEE Internet Things J., vol. 6, pp. 4005–4018, Jun. 2019.

- [10] Y. Wei, F. R. Yu, M. Song, and Z. Han, “Joint optimization of caching, computing, and radio resources for fog-enabled IoT using natural actor-critic deep reinforcement learning,” IEEE Internet Things J., vol. 6, pp. 2061–2073, Apr. 2019.

- [11] Y. Dai, D. Xu, K. Zhang, S. Maharjan, and Y. Zhang, “Deep reinforcement learning and permissioned blockchain for content caching in vehicular edge computing and networks,” IEEE Trans. Veh. Technol., vol. 69, pp. 4312–4324, Apr. 2020.

- [12] J. Zhang, J. Du, Y. Shen, and J. Wang, “Dynamic computation offloading with energy harvesting devices: A hybrid-decision-based deep reinforcement learning approach,” IEEE Internet Things J., vol. 7, pp. 9303–9317, Oct. 2020.

- [13] L. Huang, S. Bi, and Y.-J. A. Zhang, “Deep reinforcement learning for online computation offloading in wireless powered mobile-edge computing networks,” IEEE Trans. Mobile Comput., vol. 19, no. 11, pp. 2581–2593, Nov. 2020.

- [14] J. Yan, S. Bi, and Y. J. A. Zhang, “Offloading and resource allocation with general task graph in mobile edge computing: A deep reinforcement learning approach,” IEEE Trans. Wireless Commun., vol. 19, no. 8, pp. 5404–5419, Aug. 2020.

- [15] S. Bi, L. Huang, H. Wang, and Y.-J. A. Zhang, “Lyapunov-guided deep reinforcement learning for stable online computation offloading in mobile-edge computing networks,” IEEE Trans. Wireless Commun., 2021, doi: 10.1109/TWC.2021.3085319.

Biographies

| Xian Li [M’20] is now a postdoctoral research fellow with the College of Electronics and Information Engineering, Shenzhen University, China. His research interests mainly include optimizations in mobile edge computing and wireless powered communication networks. |

| Liang Huang [M’16] is now an Associate Professor with the College of Computer Science and Technology, Zhejiang University of Technology, China. His research interests lie in the areas of queueing and scheduling in communication systems and networks. |

| Hui Wang is now a Professor with the Shenzhen Institute of Information Technology. His research interests include wireless communication, signal processing, and distributed computing systems. |

| Suzhi Bi [M’14, SM’19] is now an Associate Professor with the College of Electronics and Information Engineering, Shenzhen University, China, His research interests include the optimizations in wireless information and power transfer, mobile computing, and smart power grid communications. |

| Ying-Jun Angela Zhang [M’05, SM’11, F’19] is now a Professor with the Department of Information Engineering, The Chinese University of Hong Kong. Her research interests focus on optimization and learning in wireless communication systems. She is a Fellow of IEEE, a Fellow of IET, and an IEEE ComSoc Distinguished Lecturer. |