An interpretative and adaptive MPC for nonlinear systems

Abstract

Model predictive control (MPC) for nonlinear systems suffers a trade-off between the model accuracy and real-time computational burden. One widely used approximation method is the successive linearization MPC (SL-MPC) with EKF method, in which the EKF algorithm is to handle unmeasured disturbances and unavailable full states information. Inspired by this, an interpretative and adaptive MPC (IA-MPC) method, is presented in this paper. In our IA-MPC method, a linear state-space model is firstly obtained by performing the linearization of a first-principle-based model at the initial point, and then this linear state-space model is transformed into an equivalent ARX model. This interpretative ARX model is then updated online by the EKF algorithm, which is modified as a decoupled one without matrix-inverse operator. The corresponding ARX-based MPC problem are solved by our previous construction-free, matrix-free and library-free CDAL-ARX algorithm. This simple library-free C-code implementation would significantly reduce the difficulty in deploying nonlinear MPC on embedded platforms. The performance of the IA-MPC method is tested against the nonlinear MPC with EKF and SL-MPC with EKF method in four typical nonlinear benchmark examples, which show the effectiveness of our IA-MPC method.

Index Terms:

Model Predictive Control, Extended Kalman Filter, State-space model, ARX modelI Introduction

Model Predictive Control (MPC) is an advanced technique to control multi-input multi-output systems subject to constraints [1]. MPC has been widely used in diverse industrial areas, such as process [2], aerospace [3], power electronics [4], etc. The core idea of MPC is to predict the evolution of the controlled system through a dynamical model, solve an optimization problem over a finite time horizon, only implement the control input at the current time, and then repeat the optimization at the next sample step [2, 5].

After major developments in the field of MPC over the past three decades, MPC for linear plants described by the linear state-space model has made significant progress in theoretical stability analysis and real-time numerical algorithm implementation [6]. However, most industrial plants are nonlinear, and their constrained nonliner MPC (NMPC) formulation is a non-convex optimization problem that encounters practical difficulties in terms of computational complexity and algorithm implementation, such as solving it within sampling time on embedded platforms. It’s known that a trade-off exists between the described accuracy of the nonlinear model and the online NMPC computation cost: the more accurate/complicated the model, the greater the online computational cost.

I-A Related works

Most industrial approaches are based on linear models obtained from the linearization technique in solving MPC problems for nonlinear systems. A nonlinear plant generally admits a locally-linearized model when considering regulating a particular operating point. In most cases, a linear MPC yield adequate performance, especially for chemical process industries. This approach with one linear model has an advantage in designing and deploying the offline explicit MPC solutions based on multi-parametric quadratic programming [7] and the online MPC solutions based on fast convex optimization algorithms for embedded platforms to satisfy real-time requirements. For tracking problems that operate over a wide range of operating conditions, multiple linear model based MPC addresses the non-adequate accuracy issue in one linear model based MPC. Multiple linear models are also called linear parameter varying (LPV), often used in the context of gain-scheduling, namely an MPC controller scheduled by linear models [8]. One approach for obtaining an LPV model is the online successive linearization at the current states based on a first-principles model [9].

One improved approach is the linear time-varying (LTV) model, obtained from linearizing the nonlinear model at each prediction horizon [10]. However, the LTV-MPC approach comes at a higher computational cost than the LPV-MPC approach. Since the states and inputs during the prediction horizon are unknown, the typical way utilizes the shifted optimal inputs sequence of the previous MPC solution as the inputs, and the states are calculated by integrating the nonlinear model. Another successful and broadly used approach is the real-time iteration (RTI) scheme. The RTI approach assumes that the MPC solution at the current time is very similar to the solution obtained at the previous time. Under this assumption, a full Newton step can be taken, providing an excellent approximation of the fully converged NMPC solution. Not only that, but the RTI algorithm also divides the whole computation into preparation phase and feedback phase phases, to achieve a shorter feedback delay [11]. The RTI-NMPC approach has been implemented into the open-source software ACADO Toolkit, which allows exporting the optimized C-code for deployment [12, 13]. Another approximate scheme in the literature is the Continuation/GMRES method [14]. In [14, 15, 16], the authors also provide its full implementation within the tool AutoGenU to support the C-code generation.

In addition to the above approximating approaches, incorporating nonlinearity directly into the MPC problem can provide a systematic way of dealing with systems with nonlinear dynamics, constraints, and objectives but at the cost of heavy online computation. Solving the resulting non-convex optimization problem relies on an efficient and reliable nonlinear programming algorithm, which has been alleviated to some extent with the advent of tailored and professional software tools, such as the CasADi [17] and FORCES NLP [18].

To further reduce the online computation cost of NMPC, the tremendous advances in machine learning and deep learning inspired many works, such as learning a globally-linear model or learning an efficient representation of approximated MPC laws via deep neural networks. Herein we only name a few works related to MPC for nonlinear systems. In [19], the author utilized a lifting operator, called the Koopman operator, to lift the nonlinear dynamics into a higher dimensional space where its evolution is approximately linear. Then an MPC controller based on the lifted linear model can replace the NMPC. The lifted linear model can be learned by a user-defined dictionary library or deep neural networks from data [20, 21]. Another approach that directly learns the approximated MPC law to reduce the online computation cost has been explored in the literature. In [22], the authors show that a neural network can represent exactly the MPC law described by the piecewise affine function in the case of linear MPC. The authors further extended to the case of nonlinear systems [23], in which the deep neural network is utilized to learn the robust nonlinear MPC law.

In the practice of the MPC technique, in addition to numerical optimization algorithms needed to solve the MPC problem, another critical ingredient is estimation algorithms. Generally, the nonlinear dynamic model can be an interpretative first-principle state-space model or a black-box model built from experimental data via system identification (or modern machine learning) in an NMPC setting. A nonlinear first-principle state-space model can provide interpretability that is preferred in practice, but it often suffers the issues like unavailable full-state information, unmeasured disturbance, or unmodelled time-varying terms. Therefore, these cases require a state estimation algorithm or a joint state parameter estimation algorithm in which unmeasured disturbances and unmodeled time-varying terms are considered as parameters to be estimated. Many state estimation methods for nonlinear systems exist. The well-known extended Kalman Filter (EKF) is undoubtedly the predominant state estimation technique [24], and the EKF has also been applied in many joint state-parameter estimation applications [25, 26].

The identified nonlinear black-box model used in NMPC can be divided into two categories: state-space or input-output formulation, such as state-space based recurrent neural networks (RNNs) or input-output based neural-network autoregressive model with exogenous inputs (NNARX). One obvious advantage of input-output based models over state-space based models is that input-output based models don’t require an state estimation algorithm to estimate the generally higher-dimensional states. Despite this, input-output based models still require an online estimation algorithm to update the model parameters when discrepancies happen between the model output predictions and the streaming output measurements. The linear input-output ARX models are well known for their adaptability allowing efficient online recursive estimation algorithms, like recursive least-squares (RLS) or Kalman Filter [27]. In fact, nonlinear ARX models also have good adaptability, updating their model parameters online via the EKF algorithm [28].

As discussed above, the EKF algorithm is an essential component in NMPC practice, both for the first-principle based and black-box models. The EKF is based on the first-order linearization of nonlinear dynamics around the previously estimated vectors. Although the first-order linear approximation used by the EKF is sometimes not accurate enough, other advanced techniques can provide better estimation accuracy, but this comes at the cost of complicated implementation and expensive computational costs. The ease of implementation and computation burden are of great importance, especially in the cooperation of NMPC and EKF. Despite the widely practical usefulness of the EKF, its convergence and stability guarantee requires strict conditions, such as satisfying the nonlinear observability rank condition and having sufficiently small initial estimation error as well as disturbing noise term [29, 30]. In this paper we assume that the EKF algorithm will not suffer from divergence problems in its applications.

It is worth noting that the widely used SL-MPC approach and the EKF algorithm are both based on linearization techniques. Their combination, namely the SL-MPC with the EKF method presented in [31], has become a common choice to handle the nonlinear first-principle based model in the industrial practice of NMPC technique.In its EKF part, the states and unknown disturbances are recursively updated from the streaming input-output data based on the linearization of the discrete-time nonlinear model. In its SL-MPC part, the control input is calculated based on the estimated states and disturbances at the current time and the linearized state-space model, which is also updated online based on the linearization of a first-principle model at the estimated states and disturbances. Thus, the SL-MPC with the EKF method, in a sense, is utilizing the EKF algorithm and the streaming input-output data to continuously update online a linear state-space model, which is under the representation of states defined by the first-principle-based model. And a linear input-output plant could have infinite equivalent linear state-space realizations via coordinate transformation. Our previous work [32] also illustrated that a linear state-space model can be equivalently transformed into an input-output ARX model, which is well known for its adaptibility. In this paper, the SL-MPC with EKF method is the starting point to derive our interpretative and adaptive MPC (IA-MPC) method, which is illustrated in detail in Section II.

I-B Contribution

In our IA-MPC method, a linear state-space model is first obtained by performing the linearization of a first-principle based model at the initial point, then transformed into an equivalent ARX model; all of these are performed offline. This offline ARX model acquisition not only makes the black-box ARX model inherit the interpretability of the first-principle based model but also exploits its adaptive nature; this is why we call our method Interpretive and Adaptive MPC (IA-MPC). In the online closed-loop control, the EKF algorithm is used to update the ARX model parameters, and our previous construction-free CDAL-ARX algorithm [33] is used to compute the MPC control input.

The advantage of our IA-MPC method are sumarized as follows:

-

1.

In addition to the gained interpretability and adaptability, the acquisition of the offline ARX model also makes it possible to have a sufficiently small initial estimation error in ARX model parameters. All the EKF algorithm needs to do is the system tracking (or feedback correction), not the system identification.

-

2.

Compared to the EKF algorithm in the SL-MPC method, the EKF algorithm in our IA-MPC method can be decoupled when updating the ARX model parameters, thanks to the decoupling feature of ARX model. The decoupled EKF computation avoids matrix inversion and only involves scalar division, even allowing parallel calculation according to the number of outputs.

-

3.

The corresponding ARX-based MPC problem is solved by our previous construction-free, matrix-free, and library-free CDAL-ARX algorithm. Thus, our IA-MPC method would significantly reduce the difficulty in deploying nonlinear MPC on embedded platforms.

II Successive Linearization NMPC with EKF

This section considers a MPC tracking problem for nonlinear systems subject to the input and output constraints, considering a first-principle nonlinear model as follows

| (1a) | |||

| (1b) | |||

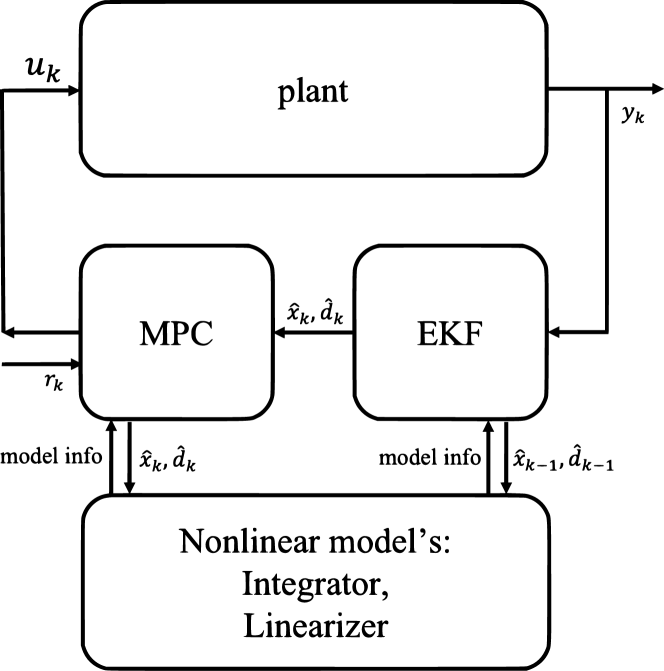

where , , and are the states, inputs, and outputs, respectively. And denotes the unmeasured disturbances. In industrial NMPC practice, one efficient approach for handling the nonlinearity of a first-principle model is based on successive linearization, which is applied both in state estimation and MPC computation [31]. Then, an output-feedback nonlinear MPC combines a state estimator (EKF) and a state-feedback successive linearization NMPC, and its schematic diagram is shown in Fig. 1.

II-A Extended Kalman Filter

The extended Kalman Filter exploits the idea that performs the linearization at each sampling time to approximate the nonlinear system as a time-varying system and apply the linear filtering theory. In digital controller design, and are assumed to a constant value between the sampling time. Thus, a discrete-time model of (1) can be formulated as follows:

| (2a) | |||

| (2b) | |||

where represents the the terminal state vector obtained by integrating the ordinary differential equation (1a) for one sample interval with the initial condition of and constant inputs of and . For a better state estimation, the unmeasured disturbance should also be estimated simultaneously. In general, can be modeled as the following stochastic difference equation

| (3) |

where is white noise sequence. Combining the Eqn (2) with (3), the following augmented model is obtained

| (4e) | |||||

| (4f) | |||||

where the measurement noise sequence is often to appear in the measured output . By linearizing (4) at , the EKF computes the new estimates from the feedback measurement and the model prediction (4e)

| (5e) | |||||

| (5j) | |||||

| (5k) | |||||

| (5m) | |||||

where

| (6) | |||

,, and are calculated using the following formula

| (7a) | |||

| (7b) | |||

| (7c) | |||

| (7d) | |||

where and represent Jacobian matrices of with respect to and , respectively. And and represent Jacobian matrices of with respect to and , respectively. Thus, we can conclude the EKF computation procedure which has been well presented and analysed, see Tab. I.

| Step | Formula |

|---|---|

| Initialization | , is a large number, |

| Initial guess , | |

| is the process noise covariance of , | |

| is the measurement noise covariance. | |

| 1-Prediction | |

| 2-Correction | |

II-B Successive Linearization NMPC

By recursively handling the streaming input-output data , the EKF algorithm could calculate the new estimated states and disturbances . The is not only used as the nominal values to linearize the nonlinear first-principle model but also used as the initial conditions of the linearized state-space model in the SL-MPC method. Note that the continuous-time model (1) has to be transformed into the discrete-time model for digital MPC design. Two approaches, first discretise then linearise and first linearise then discretise, have been reported. The EKF algorithm presented in Section II-A is based on the first discretise then linearise approach. In the context of SL-MPC, it is more common to use the first linearise then discretise. Here the continuous-time first-principle-based model (1) is linearized at current points ,

| (8a) | |||||

| (8b) | |||||

where , , , and . Here the differential eqn (8a) has to be discretised for obtaining a discrete-time model. The discretization method includes the exact and the approximated discretization. One common discretization method is the the one-step Euler’s approximate method, then a discrete-time model is obtained as follows

| (9a) | |||||

| (9b) | |||||

where , , , . Then, a MPC tracking problem formulation is listed as follows

| s.t. | (10) | ||||

The MPC tracking problem (II-B) can be solved by many quadratic programming (QP) algorithms, such as the active-set, the interior-point and the ADMM-based solver. Note that the model parameters in (9) are related to the recursively updated estimates and the last control input , it means that the MPC tracking problem (II-B) has to be reformulated at each sampling time. Most QP solvers requires an explicit condensing or sparse MPC-to-QP construction. In such a SL-MPC scenario, the MPC-to-QP construction has to be performed online at each sampling time, as does solving the QP problem. Indeed, often the online MPC-to-QP construction has a comparable computational cost to solving the QP problem itself online, especially when warm-starting strategies are employed. Our previous work [34], a construction-free CDAL solver, can avoid explicit MPC-to-QP construction in state-space MPC problem.

III Interpretative and Adaptive MPC

Compared to the exact NMPC method, the approximate method, SL-MPC with EKF, could significantly reduce the online computational burden for nonlinear output-feedback MPC problems. Nonetheless, the SL-MPC with EKF involves two-times linearizations, and its embedded implementation, including integrator, linearizer, EKF and time-changing MPC problem solver, remains a difficult task for control engineers.

In the SL-MPC with EKF method, the model parameters of the model (II-B) are dependant to the last control input and the , which are recursively estimated by the EKF algorithm. In a sense, the model are recursively updated (or feedback corrected) by the EKF algorithm. And our previous work [32] illustrates that an observable linear state-space (SS) model can be equivalently transformed into an ARX model, which will be also introduced in the following subsection III-A. Adding an SS-to-ARX transformation step in SL-MPC with EKF method would not affect the closed-loop control performance. However, an additional SS-to-ARX transformation step relies on the choosing observer design and additional matrix-matrix multiplication cost, which makes it no advantage in the online SL-MPC with EKF method. This inspired us to update the ARX model directly by the EKF algorithm from the streaming input-output data, to avoid an explicit SS-to-ARX transformation. By viewing the ARX model parameters as states of a system, the EKF algorithm could recursively updates the ARX model parameters. In fact, a system divides the states and parameters according to their changibng rate, states change fast and parameters change slowly. And this ARX model identification and tracking by the Kalman Filter based algorithm has been widely used in adaptive control [35].

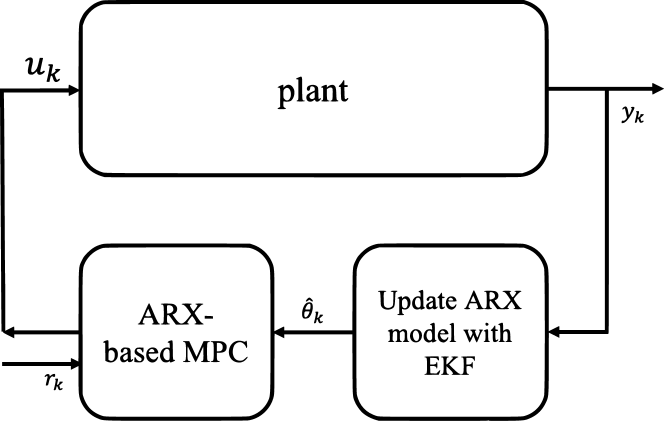

As the EKF algorithm requires a sufficiently small error in initial estimates, and the data-driven identification (initialization) of ARX model is black-box without interpretability like first-priciple-based model, we propose the introduction of the off-line SS-to-ARX transformation in our interretative and adaptive MPC IA-MPC framework. The main steps of our IA-MPC framework are list in Tab. II. Its Step 1 to 3 are performed offline, and the Step 1 is to obtain a linear state-space model by linearizing a first-principle-based model at initial point; the optional Step 2 is to obtain a minimal state-space realization by using model reduction when the state dimension is large such as in distributed parameter models or computational fluid dynamic models; After possible state elimination, the Step 3 is to obtain an equivalent ARX model by designing an SS-to-ARX transformation. In addition to the gained interpretability and consistent initial estimates, this ARX model acquisition also avoids the difficulty in choosing the ARX model orders in data-driven ARX model identification. The detailed equivalent SS-to-ARX transformation will be illustrated in the following subsection III-A. The Step 4 is the online closed-loop MPC control, in which the ARX model parameters are updated by the EKF algorithm from streaming input-output data (see the subsection III-B) and an corresponding ARX-based MPC problem are solved by our previous construction-free CDAL-ARX algroithm [33] (see the subsection III-C). For a comparison with the traditional SL-MPC with EKF framework shown in Fig. 1, the schematic diagram of our IA-MPC framework is showed in Fig. 2.

| Step | Detailed description | ||||

|---|---|---|---|---|---|

| 1 |

|

||||

| 2(optional) |

|

||||

| 3 |

|

||||

| 4 |

|

III-A Equivalent SS-to-ARX transformation

In our preivous work [32], we conclude three SS-to-ARX transformations, namely the Cayley-Hamilton-based, Observer-Theory-based and Kalman-Filter-based transformations. The Cayley-Hamilton-based tranformation can derive the unique and equivalent ARX model but with sensitivity to noises. The Observer-Theory-based and Kalman-Filter-based transformations has the same structure in the transformed ARX model, and the Kalman-Filter-based transformation requires a larger order than the Observer-Theory-based transformation. In fact, the order determines the noises robustness of the ARX model, which can easily tuned by the pole placement method. Clearly, larger order brings better noises robustness but also leads to more computational cost in ARX model update and ARX-based MPC. Herein we mainly present how to transform the adaptive linearized state-space model (9) with the help of the the Observer-Theory-based transformation.

By utilizing a gain matrix in the SS model (9) to begin the derivation of the SS-to-ARX transformation as follows

| (11) | ||||

Based on the above evolution equation (11), calculating from previous time to current time can lead to the following equation

| (12) | ||||

If a gain matrix exists such that vanishes to zero, i.e.,

| (13) |

From linear system theory, the existence of such an gain matrix is assured as long as the system is observable. Thus, the term in (12) is zero for , then multiplying the matrix on both sides of the equation (12) can derive the following ARX model,

| (14) |

where , and

Next, we provide the analysis to tell why the gain matrix can be viewed as an observer gain. The original state-space model (9) has an observer gain of the following form

| (15) | ||||

where is the estimated state. The state estimation error can be denoted as , then follows the dynamic equation,

| (16) |

The estimated state will converge the actual value as tends to infinity if the matrix is asymptotically stable, namely the condition (13), which can be satisfied by using the pole placement method.

III-B ARX model update with decoupled EKF algorithm

By viewing the time-varying ARX model parameters as system states, the ARX model (14) is re-formulateed as the following state-space form

| (17a) | |||

| (17b) | |||

where is a sequence of independent random vectors and is a output noise sequence. Although the variances of and are unknown in real applications and the actual parameters may change differently from the above random walk model (17a), the EKF algorithm can still work very well [36].

Let’s write the Eqn (17b) as the following separated formulation

| (18) |

where denotes the dimensions of , denotes the -th element of . and have the following relationship according to (14)

| (19) | ||||

The advantage of the above separated ARX formulation is that it allows the EKF algorithm to run in a parallel way and avoids the matrix-inverse operation, only involving scalar division, compared to the EKF in the SL-MPC (see Tab. I). We list the EKF computation scheme of our IA-MPC framework in Tab. III.

| Step | Formula | |

|---|---|---|

| Initialization | , is a large number, | |

| initial value from Step 3 in Tab. II, | ||

| is the process noise covariance, | ||

| is the measurement noise covariance. | ||

| 1-Prediction | ||

| 2-Correction | ||

|

|

||

III-C Construction-free ARX-based MPC algorithm

In our IA-MPC framework, the ARX model is updated by the EKF algorithm at each sampling time. Thus it leads to the corresponding time-changing ARX-based MPC tracking problem as follows.,

| s.t. | (20) | ||||

In such a a time-changing setting, the computation time spent in constructing its optimization problem and solving itself should be together considered. Indeed, often the online MPC-to-QP construction has a comparable computational cost to solving the MPC problem itself online, especially when warm-starting strategies are employed. Our previous construction-free CDAL-ARX algorithm [33] can avoid the explicit MPC-to-QP construction, thus allowing it to be suitable for this scenario.

In each iteration of our CDAL-ARX algorithm, an augmented Lagrangian (AL) subproblem is solved by the coordinate descent (CD) method, and the accelerated Nesterov’s scheme is used to update the Lagrangian dual variables. In particular, an efficient coupling scheme between CD and AL method is proposed to exploit the structure of the ARX-MPC problem, which can reduce the computation cost of each inner iteration. The detailed description of algorithm implementation can be found in [33]. In addition to the notable construction-free feature, our CDAL-ARX is also matrix-free and library-free which makes it practically useful in embedded deployment.

IV Numerical Examples

In this section, we test the performance of our proposed IA-MPC method against other two methods, namely the nonlinear MPC method with EKF and the SL-MPC with EKF method. Their MPC solution are based on CasADi v3.5.5[17] and our previous CDAL algorithm for state-space model based MPC [34], respectively, except for the same EKF computational procedure. The reported simulations are executed in MATLAB R2020a on a MacBook Pro with 2.7 GHz 4-core Intel Core i7 and 16GB RAM. Four typical nonlinear MPC numerical examples are used to investigate whether our IA-MPC method works well and provide comparisons with traditional methods.

IV-A Problem descriptions

-

1.

Two tank problem: we consider the cascaded two tanks system, which is a fluid level control system. The input signal controls the water pump that pumps the water from a reservoir into the upper water tank. The water of the upper water tank also flows through a small opening into the lower water tank. The water of the lower water tank flows through a small opening into the reservoir. Herein without considering the overflow effect, we adopt the Bernoulli’s principle and conservation of mass to derive the following first-principle model:

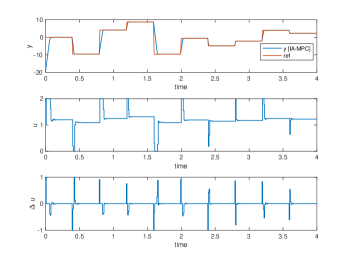

(21) where and are the water level of the upper and lower water tank, respectively. The full states cannot be measured only the as the measured output . is the input signal, and , and are constants depending on the system properties, herein we adopt value for all of them. The sampling time of discrete digital control is s and the control goal is to make the output track the given reference signal subject to the input constraints and the input increment constraints .

-

2.

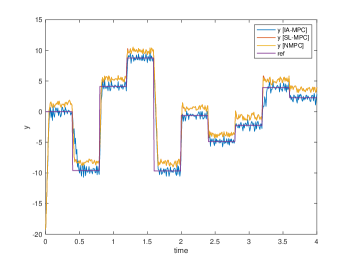

Bilinear motor problem: one common nonlinear control benchmark is a bilinear DC motor plant, whose equation is described as follows,

(22) where and are the rotor current and angular velocity, respectively. The full states cannot be measured only the as the measured output . The control input is the stator current. The system parameters are , , , , , , . The bilinearity of (22) appears between the state and the control input. The sampling time of discrete digital control is s and the control goal is to make the output track the given reference signal subject to the input constraints and the input increment constraints .

-

3.

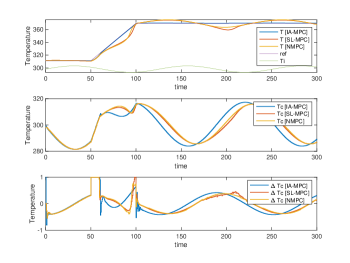

CSTR problem: one typical nonlinear process control benchmark is a continuous stirred tank reactor (CSTR) problem, which is described by the following continuous-time nonlinear model,

(23) where is the concentration of reagent A, is the temperature of the reactor, is the inlet feed stream concentration, which is assumed to have the constant value kgmol/m3. The unmeasured disturbance comes from the inlet feed stream temperature , which has slow fluctuations represented by . The manipulated variable is the coolant temperature . The constants and (in MKS units). The sampling time of discrete digital control is s and the control goal is to manipulates the coolant temperature to track a higher temperature of the reactor (equals a higher conversion rate) as well as reject the unmeasured disturbance . The physical constraints comes from the input increment constraints . Note that the unmeasured disturbance is estimated by the EKF algorithm in SL-MPC and NMPC methods.

-

4.

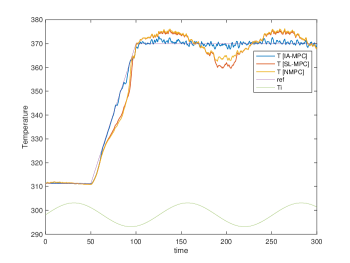

Van der Pol oscillator problem: we consider the nonlinear Van der Pol oscillator with a time varying parameter, and its dynamics are given by,

(24) where is the position, is the velocity, is the control input and is a piecewise function defined as

The sampling time of discrete digital control is s and the control goal is to make the output track the given reference signal subject to the input constraints and the input increment constraints . Note that the unmeasured time-varying parameter is estimated by the EKF algorithm in SL-MPC and NMPC methods.

IV-B Problems settings

The above four problems share the same MPC and EKF settings: the MPC prediction horizon , the MPC output weight and the MPC input increment weight , the initial error covariance matrix of EKF , the process noise covariance matrix of EKF and the measurement noise covariance of EKF . We consider two simulation scenarios, that is without and with process noises, respectively.

-

1.

Two tank problem: its process noise scenario is given a random noise rand(2,1), and the initial conditions are , and for obtaining the linearized state-space model, which is then transformed into the ARX model with order by using poles . Its tracking signal is randomly selected every s in the range .

-

2.

Bilinear motor problem: its process noise scenario is given a random noise rand(2,1), and the initial conditions are , and for obtaining the linearized state-space model, which is then transformed into the ARX model with order by using poles . Its tracking signal is randomly selected every s in the range .

-

3.

CSTR problem: its process noise scenario is given a random noise rand(2,1), and the initial state of the reactor is at a low conversion rate, with kgmol/m3, K, and then the linearized state-space model around the initial conditions is transformed into the ARX model with order by using poles . Its tracking signal gradually changes from K to K during s and then holds constant.

-

4.

Van der Pol oscillator problem: its process noise scenario is given a random noise rand(2,1), and the initial conditions are , and for obtaining the linearized state-space model, which is then transformed into the ARX model with order by using poles . Its tracking signal switches between and every s.

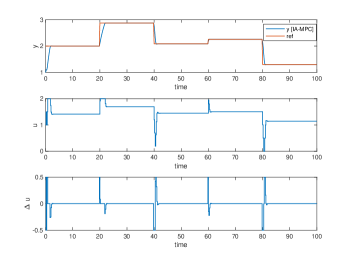

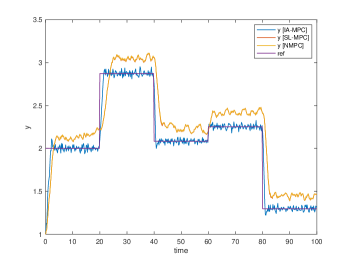

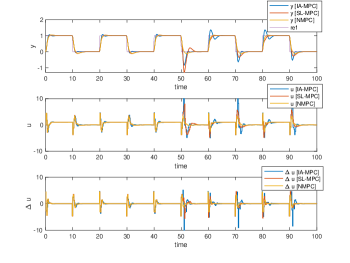

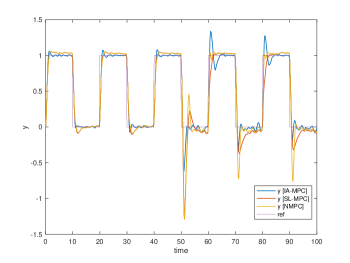

Their simulation results are plotted in Fig. 3, 4, 5 and 6, respectively. The Two tank and Bilinear motor problem have no unknown disturbances and the NMPC, SL-MPC and our IA-MPC method generate the same offset-free tracking performances subject to the input constraints in their noise-free scenario, which are plotted in Fig. 3(a) and 4(a). The CSTR and Van der Pol oscillator problems have the unknown time-varying disturbance, it’s found that our IA-MPC method generate the better offset-free tracking performances than the NMPC and SL-MPC method under the given EKF setting in their noise-free scenario, which are plotted in Fig. 5(a) and 6(a). Under the process noises, our IA-MPC method generate the better noise robustness than the NMPC and SL-MPC method in the four problems, which are plotted in Fig. 3(b), 4(b), 5(b) and 6(b). As expected, the online computation time of the exact NMPC method is much longer than the approximate SL-MPC and IA-MPC method, and the online computation time of the SL-MPC and IA-MPC method are almost the same since they both utilized our developed construction-free CDAL method, having no online construction time.

V Conclusion

This paper proposed a novel interpretative and adaptive MPC (IA-MPC) method for nonlinear systems, which was inspired by the SL-MPC with EKF method. In our IA-MPC method, a linear state-space model is firstly obtained by performing the linearization of a first-principle-based model at the initial point, and then an equivalent ARX model is obtained via the SS-to-ARX transformation. This novel initialization of ARX model allows us to keep the interpretability of a first-principle-based model and the adaptivity of the ARX model. The closed-loop control involves the EKF algorithm to update the ARX model parameters recursively and our previously developed construction-free CDAL-ARX algorithm to calculate the MPC control input. Note that our proposed implementation of our IA-MPC method is well suited for embedded platforms, thanks to its library-free C-code simple enough. The effectiveness of our IA-MPC method was illustrated by four nonlinear typical benchmarks.

Predictably our IA-MPC method has advantages over SL-MPC for high state-dimensional first-principle-based plants, such as distributed parameter models or computational fluid dynamic models, which are our main future applications.

The SL-MPC with EKF method lacks rigorous theoretical guarantee but is still widely adopted in many practical applications for its effectiveness. Its connected IA-MPC method presented in this paper, is also practically useful. Our future work will also focus on analyzing the convergence guarantee of the IA-MPC method, borrowing from work such as adaptive control of linear time-varying systems, or exploiting the covariance matrix that directly quantifies the uncertainty associated with ARX model parameters to setup robust MPC schemes.

References

- [1] C.E. Garcia, D.M. Prett, and M. Morari. Model predictive control: Theory and practice—A survey. Automatica, 25(3):335–348, 1989.

- [2] S.J. Qin and T.A. Badgwell. A survey of industrial model predictive control technology. Control engineering practice, 11(7):733–764, 2003.

- [3] U. Eren, A. Prach, B.B. Koçer, S.V. Raković, E. Kayacan, and B. Açıkmeşe. Model predictive control in aerospace systems: Current state and opportunities. Journal of Guidance, Control, and Dynamics, 40(7):1541–1566, 2017.

- [4] T. Geyer. Model predictive control of high power converters and industrial drives. John Wiley & Sons, 2016.

- [5] A. Bemporad and M. Morari. Robust model predictive control: A survey. In Robustness in identification and control, pages 207–226. Springer, 1999.

- [6] J.H. Lee. Model predictive control: Review of the three decades of development. International Journal of Control, Automation and Systems, 9(3):415–424, 2011.

- [7] A. Bemporad, M. Morari, V. Dua, and E.N. Pistikopoulos. The explicit linear quadratic regulator for constrained systems. Automatica, 38(1):3–20, 2002.

- [8] L. Chisci, P. Falugi, and G. Zappa. Gain-scheduling MPC of nonlinear systems. International Journal of Robust and Nonlinear Control: IFAC-Affiliated Journal, 13(3-4):295–308, 2003.

- [9] F. Kuhne, W.F. Lages, and J.G. da Silva Jr. Model predictive control of a mobile robot using linearization. Proceedings of mechatronics and robotics, 4(4):525–530, 2004.

- [10] P. Falcone, F. Borrelli, H.E. Tsengand J. Asgari, and D. Hrovat. Linear time-varying model predictive control and its application to active steering systems: Stability analysis and experimental validation. International Journal of Robust and Nonlinear Control: IFAC-Affiliated Journal, 18(8):862–875, 2008.

- [11] S.Gros, M. Zanon, R. Quirynen, A. Bemporad, and M. Diehl. From linear to nonlinear MPC: bridging the gap via the real-time iteration. International Journal of Control, 93(1):62–80, 2020.

- [12] R. Quirynen, M. Vukov, M. Zanon, and M. Diehl. Autogenerating microsecond solvers for nonlinear MPC: a tutorial using ACADO integrators. Optimal Control Applications and Methods, 36(5):685–704, 2015.

- [13] B. Houska, H.J. Ferreau, and M. Diehl. An auto-generated real-time iteration algorithm for nonlinear MPC in the microsecond range. Automatica, 47(10):2279–2285, 2011.

- [14] T. Ohtsuka. A continuation/GMRES method for fast computation of nonlinear receding horizon control. Automatica, 40(4):563–574, 2004.

- [15] T. Ohtsuka. A tutorial on C/GMRES and automatic code generation for nonlinear model predictive control. In 2015 European Control Conference (ECC), pages 73–86. IEEE, 2015.

- [16] S. Katayama and T. Ohtsuka. Automatic Code Generation Tool for Nonlinear Model Predictive Control with Jupyter. IFAC-PapersOnLine, 53(2):7033–7040, 2020.

- [17] J.A.E. Andersson, J. Gillis, G. Horn, J.B. Rawlings, and M. Diehl. CasADi – A software framework for nonlinear optimization and optimal control. Mathematical Programming Computation, 11(1):1–36, 2019.

- [18] A. Zanelli, A. Domahidi, J. Jerez, and M. Morari. FORCES NLP: an efficient implementation of interior-point methods for multistage nonlinear nonconvex programs. International Journal of Control, 93(1):13–29, 2020.

- [19] M. Korda and I. Mezić. Linear predictors for nonlinear dynamical systems: Koopman operator meets model predictive control. Automatica, 93:149–160, 2018.

- [20] B. Lusch, J.N. Kutz, and S.L. Brunton. Deep learning for universal linear embeddings of nonlinear dynamics. Nature communications, 9(1):1–10, 2018.

- [21] E. Kaiser, J.N. Kutz, and S.L. Brunton. Data-driven discovery of Koopman eigenfunctions for control. Machine Learning: Science and Technology, 2(3):035023, 2021.

- [22] B. Karg and S. Lucia. Efficient representation and approximation of model predictive control laws via deep learning. IEEE Transactions on Cybernetics, 50(9):3866–3878, 2020.

- [23] S. Lucia and B. Karg. A deep learning-based approach to robust nonlinear model predictive control. IFAC-PapersOnLine, 51(20):511–516, 2018.

- [24] M. Nørgaard, N.K. Poulsen, and O. Ravn. New developments in state estimation for nonlinear systems. Automatica, 36(11):1627–1638, 2000.

- [25] E.A. Wan and A.T. Nelson. Dual extended Kalman filter methods. Kalman filtering and neural networks, 123, 2001.

- [26] S.F. Fux, A. Ashouri, M.J. Benz, and L. Guzzella. EKF based self-adaptive thermal model for a passive house. Energy and Buildings, 68:811–817, 2014.

- [27] L. Guo and L. Ljung. Performance analysis of general tracking algorithms. IEEE Transactions on Automatic Control, 40(8):1388–1402, 1995.

- [28] A. Bemporad. Recurrent Neural Network Training with Convex Loss and Regularization Functions by Extended Kalman Filtering. arXiv preprint arXiv:2111.02673, 2021.

- [29] K. Reif, S. Gunther, E. Yaz, and R. Unbehauen. Stochastic stability of the discrete-time extended Kalman filter. IEEE Transactions on Automatic control, 44(4):714–728, 1999.

- [30] L. Ljung. Asymptotic behavior of the extended Kalman filter as a parameter estimator for linear systems. IEEE Transactions on Automatic Control, 24(1):36–50, 1979.

- [31] J.H. Lee and N.L. Ricker. Extended Kalman filter based nonlinear model predictive control. Industrial & Engineering Chemistry Research, 33(6):1530–1541, 1994.

- [32] L. Wu and A. Bemporad. Equivalence of SS-based MPC and ARX-based MPC. arXiv preprint arXiv:2209.00107, 2022.

- [33] L. Wu and A. Bemporad. A construction-free coordinate-descent augmented-Lagrangian method for embedded linear MPC based on ARX models. arXiv preprint arXiv:2207.06098, 2022.

- [34] L. Wu and A. Bemporad. A Simple and Fast Coordinate-Descent Augmented-Lagrangian solver for Model Predictive Control. arXiv preprint arXiv:2109.10205, 2021.

- [35] L. Guo. Estimating time-varying parameters by the Kalman filter based algorithm: stability and convergence. IEEE Transactions on Automatic Control, 35(2):141–147, 1990.

- [36] L. Cao and H.M Schwartz. Analysis of the Kalman filter based estimation algorithm: an orthogonal decomposition approach. Automatica, 40(1):5–19, 2004.