An Iterative Algorithm for Regularized Non-negative Matrix Factorizations

Abstract

We generalize the non-negative matrix factorization algorithm of Lee and Seung [3] to accept a weighted norm, and to support ridge and Lasso regularization. We recast the Lee and Seung multiplicative update as an additive update which does not get stuck on zero values. We apply the companion R package rnnmf [9] to the problem of finding a reduced rank representation of a database of cocktails.

1 Introduction

The term non-negative matrix factorization refers to decomposing a given matrix with non-negative elements approximately as the product , for comformable non-negative matrices and of smaller rank than . This factorization represents a kind of dimensionality reduction. The quality of the approximation is measured by some “divergence” between and , or some norm of their difference. Perhaps the most common is the Frobenius (or elementwise ) distance between them. Under this objective, non-negative matrix factorization can be expressed as the optimization problem:

| (1) |

where is the trace of the matrix , and means the elements of are non-negative. [10]

Lee and Seung described an iterative approach to this problem, as well as for a related optimization with a different objective. [3] Their algorithm starts with some unspecified initial iterates and , then iteratively improves and in turn. That is, given and , an update is made to arrive at ; then using and , one computes , and the process repeats. The iterative update for is

| (2) |

We use to mean the Hadamard, or ‘elementwise’, multiplication, and to mean Hadamard division. The iterative update for is defined mutatis mutandis. As should be apparent from inspection, this iterative update maintains non-negativity of estimates. That is, if , , and have only positive elements, then is finite and has only positive elements. It is not clear what should be done if elements of the denominator term are zero, and whether and how this can be avoided.

Gonzalez and Zhang describe a modification of the update to accelerate convergence. [2] Their accelerated algorithm updates row by row, and column by column, using the step length modification of Merritt and Zhang [5].

One attractive feature of the Lee and Seung iterative update is its sheer simplicity. In a high-level computer language with a rich set of matrix operations, such as Julia or octave, the iterative step can be expressed in a single line of code; the entire algorithm could be expressed in perhaps 20 lines of code. Conceivably this algorithm could even be implemented in challenged computing environments, like within a SQL query or a spreadsheet macro language. Simplicity makes this algorithm an obvious choice for experimentation without requiring an all-purpose constrained quadratic optimization solver, or other complicated software.

2 Regularized Non-Negative Matrix Factorization

We generalize the optimization problem of 1 to include a weighted norm, regularization terms, and orthogonality penalties. For a given , and non-negative weighting matrices , , , , , , , and , find matrices and to minimize

| (3) | ||||

Essentially we are trying to model as . Our loss function is quadratic in the error , with weights and , and and regularization via the terms with and and . This optimization problem includes that of 1 as a special case.

We note that a non-orthogonality penalty term on the can be expressed as

Thus a non-orthogonality penalty term can be expressed with the and . Indeed the motivation for including a sum of the terms is to allow for both straightforward regularization, where and , and orthogonality terms. For simplicity of notation, in the discussion that follows we will often treat the terms as if there were only one of them, i.e., , and omit the subscripts. Restoring the more general case consists only of expanding to a full sum of terms.

This problem formulation is appropriate for power users who can meaningfully select the various weighting matrices. And while it is easy to conceive of a use for general forms for and (the rows of have different importances, or the columns of are observed with different accuracies, for example), most users would probably prefer simpler formulations for the and matrices. To achieve something like an elasticnet factorization with non-orthogonality penalties, one should set and take

| (4) | ||||||

where here stands in for an all-ones matrix of the appropriate size. All the and parameters must be non-negative, and the identity matrices are all appropriately sized. This is the reduced form of the problem that depends mostly on a few scalars. A simple form for the weighted error is to take and to be diagonal matrices,

Depending on the regularization terms, the solution to the problem of 3 is unlikely to be unique. Certain the solution to 1 is not unique, since if is a permutation matrix of the appropriate size, then and are another set of non-negative matrices with the same objective value. Perhaps the regularization terms can help avoid these kinds of ambiguity in the solution.

2.0.1 Split Form

To approximately solve this problem our algorithm starts with some initial estimates of and and iteratively alternates between updating the estimate of with the estimate of fixed, and updating the estimate of with the estimate of fixed. To discuss these half-step updates, we can describe both of them in a single unified formulation. So we consider the optimization problem

| (5) |

where we define the objective function

| (6) | ||||

We must assume that has full column rank and has full row rank, , , and are square, symmetric and positive semidefinite. The has the same number of rows as , and has the same number of columns as . is the same size as ; we will make further restrictions on below. The matrices have the same number of rows as , and have the same number of columns as . All the are assumed to have non-negative elements, that is .

2.1 Factorization in Vector Form

The analysis is simplified if we express the problem as one of finding a vector unknown, so we will ‘vectorize’ the problem. For matrix let be the vector which consists of the columns of stacked on top of each other; let denote the Kronecker product of and . [11] See Section A in the appendix for some helpful identities involving the Kronecker product, matrix trace, and vectorization.

We write as a quadratic function of the vector

| (7) |

where

| (8) |

We write to mean . Note that , but will likely have negative elements. In fact, to use Lee and Seung’s iterative algorithm, the vector must have non-positive elements. This is equivalent to the restriction , taken elementwise. This imposes a restriction on which is hard to verify for the problem of non-negative matrix factorization. Note that by definition can be positive definite, depending on the , and the , but is only positive semi definite in the general case.

We can thus consider this problem as optimization over a vector unknown. In an abuse of notation, we reuse the objective function , writing

| (9) |

for symmetric, nonnegative positive semidefinite . So now we consider the positivity-constrained quadratic optimization problem

| (10) |

Note that the unconstrained solution to this problem is . When is positive definite, because the constraint space is convex, this solution will be unique. [6] In general, however, we will not have unique solutions. When the term is all zero, this a weighted least squares problem, possibly with Tikhonov regularization.

The gradient of the objective is is . When considering the matrix form of the problem, this has the value

We consider an iterative solution to this problem. We first select some . Then given current iterate , we seek to find the next iterate . Define

where we will set , and such that is tangent to at , and such that for all . We will take as some point which improves : its minimizer if it is non-negative, or another point if not. If improves , then it also improves , as

The tangency condition fixes the value of , and also gives the identity

To ensure that it is sufficient to select select such that is positive semidefinite. The following lemma gives us a way of selecting diagonal matrices that satisfy this condition.

First a bit of notation, for vector , is the diagonal matrix with for diagonal. For matrix , is the vector of the diagonal of . Thus we have .

Lemma 2.1.

Let be a symmetric matrix with non-negative elements, and full rank, and let be a vector with strictly positive elements. Then

(Here means that is positive semidefinite.)

Proof.

First note that if is symmetric, and has non-negative elements, then because the matrix is symmetric, with non-negative diagonal, and is diagonally dominant, thus it is positive semidefinite.

Apply that fact with . Then

as needed.

Note that the proof relies on strict positivity of the elements of . By this we can claim a bijection between and , which allows us to conclude the last line above. ∎

Lemma 2.2.

Let be a symmetric matrix with non-negative elements, and full rank. Let be some vector with non-negative elements such that has strictly positive elements. Letting

then

Proof.

First, consider the case where has strictly positive elements. Letting the minimum of occurs at , which has value

| (11) |

By Lemma 2.1, dominates , and so .

To prove the theorem for the general case where is simply non-negative, consider a sequence of strictly positive vectors which converge to , and apply the argument above, then appeal to continuity of . ∎

Theorem 2.3 (Lee and Seung).

Let be a symmetric matrix with non-negative elements, and full rank. Assume has non-negative elements, and has strictly positive elements. If

| (12) |

then . Moreover, the update preserves non-negativity of iterates as long as has non-positive elements.

Proof.

Returning to the original problem of minimizing the objective of Equation 6, the iterative update is

| (14) |

2.1.1 As a Matrix Factorization Algorithm

Now consider using this iterative update in the solution of Problem 3. One starts with initial guesses and , which are strictly positive, then computes in turn

| (15) | ||||

| (16) | ||||

Then one computes estimates of

The restriction on the from the symmetric form of the problem translates into the requirements that

| (17) | ||||

| (18) |

elementwise. This restriction suffices to keep iterates strictly positive if the initial iterates are also strictly positive. Note, however, it is not clear how this can be guaranteed a priori, as the iterates and may become small, and the may be large. In fact, the entire point of regularization is to encourage elements of and to take value zero. As a practical matter, then, code which allows arbitrary , should take the liberty of overriding the input values and always guaranteeing that the numerators are bounded elementwise by

| (19) |

for some . This is a compromise for simplicity, and thus this form of the algorithm does not always solve for the input problem. In the next section we describe a more principled approach that respects the user input.

Again the restriction on the from the symmetric problem cannot be guaranteed a priori; instead an implementation must keep the numerators bounded away from zero. We present the method in Algorithm 1. We call it a “multiplicative update” since the update steps are elementwise multiply and divide operations on the initial estimates of and . For this reason, it has the same limitations as the Lee and Seung algorithm, namely that once an element of or takes value zero, it can never take a non-zero value. This algorithm assumes the simplified form of Equations 4; the code for the more general form of the problem is an obvious modification of the update steps.

3 Additive Steps and Convergence

Theorem 2.3 only tells us that the sequence is non-decreasing. It does not guarantee convergence to a global, or even a local, minimum. In fact, if we only restrict to be non-positive, it is easy to construct cases where will not converge to the constrained optimum. For if an element of is zero, then that same element of will be zero for all . However, the optimum may occur for with a non-zero value for that element. (Certainly the unconstrained solution may have a non-zero value for that element.)

Here we analyze the Lee and Seung update as a more traditional additive step, rather than a multiplicative step. Expressing the iteration as an additive step, we have

| (20) |

where

| (21) | ||||

| (22) |

This may not be the optimal length step in the direction of . If we instead take

| (23) |

then is a quadratic function of with optimum at

| (24) |

However, if elements of could be negative. One would instead have to select a that results in a strictly non-negative . However, if , then the original algorithm would have overshot the optimum.

Lemma 3.1.

Proof.

Letting , is quadratic in with positive second derivative. Then since is between and , we have , which was to be proven. By construction the elements of are non-negative. ∎

We note that if then , since non-negativity is sustained for smaller step sizes.

This iterative update was originally described by Merritt and Zhang for solution of “Totally Nonnegative Least Squares” problems. [5] Their algorithm has guaranteed convergence for minimization of , without the weighting or regularization terms, under certain conditions. They assume strict positivity of , which we cannot easily express in terms of and , and it is not obvious that their proof can be directly used to guarantee convergence. Without guarantees of convergence, we express their method in our nomenclature as Algorithm 2, computing the step as . The vague language around selecting the step length is due to Merritt and Zhang; presumably one can choose it randomly, or always take .

3.1 Other Directions

Imagine, instead, plugging in different values of into the given by Lemma 2.2. We claim that setting would yield the global unconstrained minimizer for :

| (25) |

This would seem to be a totally useless computation because we cannot efficiently compute -, and it likely violates the positivity constraint. However, this suggests an alternative iterative step that might have accelerated convergence. For example, it might be the case that setting might give quicker convergence to a solution, where is the non-negative part of .

We can also imagine an iterative update based on different descent directions altogether. For example steepest descent, where . Both of these and the method above can be couched as the general iterative method of Algorithm 2. We note, however, that steepest descent will fail to make progress in this formulation when an element of is zero and the corresponding element of the gradient is positive. In this case, the step length would be computed as zero and the algorithm would terminate early. The Lee and Seung step naturally avoids this problem by scaling the step direction proportional element-wise to . It is not clear, however, whether the denominator part of the Lee and Seung step is necessary, and perhaps taking steps in the direction of sufficiently good convergence.

Converting this to the NMF problem as formulated in Section 2.1.1 is a straightforward, though somewhat tedious, exercise. We give the algorithm in Algorithm 3 for the case where the weighting matrices satisfy the conditions of Equations 4. We note that the restrictions on and that caused so much headache above are swept under the rug here with the check for maximum allowable step length, , in giqpm_step.

It is not clear a priori whether this algorithm converges quickly. It is very unlikely that Algorithm 2 is competitive for the constrained optimization problem on vectors given in 10. Converting to steepest descent is not recommended due to slow convergence, as noted above. One could swap out the iterative updates of and in Algorithm 3 with something like a constrained conjugate gradient. [4, 6] However, one should take several steps of conjugate gradient for each as the optimization problem changes as we update the and .

4 Simulations

The multiplicative and additive algorithms are implemented in the R package rnnmf written by the author. [9] Here we briefly summarize the results of using the code on generated data. We first generate a the exactly equals for some non-negative uniformly random and . In the first simulations we take to be , and to be . We randomly generate starting iterates, matrix and matrix . We use the same starting point for both the additive and multiplicative algorithms. We compute the Frobenius norm of the error, which is to say

for each iteration.

In Figure 1 we plot the error versus step for the additive and multiplicative methods. The additive method uses the optimal step size in the chosen direction. While not shown here, choosing the naive step without optimizing converges slower in iterations but may ultimately be faster computationally. The additive method outperforms the multiplicative method in convergence per step. Convergence of both is “lumpy”, with apparent phase changes where, we suspect, different directions become the dominant source of approximation error, and then are smoothed out.

We then apply the code to another test case. Here we generate to be , and to be . Again we test both the additive and multiplicative algorithms, but test the effect of sparse starting iterates. We randomly generate starting iterates, matrix and matrix . First we do this where about of the elements of and are zero, testing both algorithms. We repeat the experiment for the same , but generate strictly positive and .

In Figure 2 we plot the error versus step for both methods and both choices of starting iterates. Again we see somewhat slower convergence for the multiplicative method, at least measured in iterates. Moreover, the multiplicative method fails to converge for the sparse starting iterates. As we noted above, the multiplicative method cannot change an element from zero to non-negative, thus the lack of convergence for sparse initial estimates is not surprising.

5 Applications

One straightforward application of non-negative matrix factorization is in chemometrics [7], where one observes the absorption spectra of some samples at multiple wavelengths of light. The task is to approximate the observed absorption as the product of some non-negative combination of some non-negative absorption spectra of the underlying analytes. When the underlying analytes have known absorption spectra the task is like a regression; when they do not, it is more like a dimensionality reduction or unsupervised learning problem.

We consider a more unorthodox application here, namely an analysis of cocktail recipes. From a reductionist point of view, a cocktail is a mixture of a number of constituent liquid ingredients. We use a database of 5,778 cocktail recipes, scraped from the website www.kindredcocktails.com, and distributed in an R package. [8] We filter down this selection, removing ingredients which appear in fewer than 10 cocktails, then removing cocktails with those ingredients. This winnows the dataset down to 3,814 cocktails. The upstream website allows visitors to cast votes on cocktails. We use the number of votes to filter the cocktails. The median cocktail, however, has only 2 votes. We remove cocktails with fewer than 2 votes, resulting in a final set of 2,405 cocktails.

We express the cocktails as a sparse non-negative matrix whose entry at indicates the proportion of cocktail that is ingredient . We have normalized the matrix to have rows that sum to 1. We then performed a basic non-negative matrix factorization , without regularization. We do this for rank approximation, meanining has columns and has rows. To estimate the quality of the approximation we compute an value. This is computed as

| (26) |

Here is the vector of the column means of . One can think of as encoding the “Jersey Turnpike”, a drink with a small amount of every ingredient.

We plot versus in Figure 3, with the axis in square root space, giving a near-linear fit but with a crook, or knee, around , which has an We will consider factorizations with this , and also with for which To account for the differential popularity of different cocktails, we use the number of votes to weight the error of our approximation. We take to be the diagonal matrix consisting of the number of votes for each cocktail. We perform factorization under this weighted objective.

| Latent Cocktail | Ingredient | Proportion |

|---|---|---|

| factor1 | Gin | 0.433 |

| factor1 | Other (256 others) | 0.415 |

| factor1 | Lemon Juice | 0.067 |

| factor1 | Sweet Vermouth | 0.046 |

| factor1 | Lime Juice | 0.038 |

| factor2 | Bourbon | 0.474 |

| factor2 | Other (243 others) | 0.350 |

| factor2 | Sweet Vermouth | 0.071 |

| factor2 | Lemon Juice | 0.036 |

| factor2 | Campari | 0.035 |

| factor2 | Cynar | 0.034 |

| factor3 | Rye | 0.490 |

| factor3 | Other (234 others) | 0.408 |

| factor3 | Sweet Vermouth | 0.102 |

Before displaying the results, we note that a matrix factorization is only determined up to rescaling and rotation. That is, if is an invertible matrix, then the following two factorizations are equivalent:

These two factorizations might not be equivalent for the regularized factorization objective, however. We make use of this equivalence when demonstrating the output of our algorithm: we use matrix such that has unit row sums, and we rearrange the order of the factors such that the column sums of are non-increasing. With that in mind, we display the first three latent factor cocktails in in Table 1, lumping together those ingredients which make up less than of the factor cocktails.

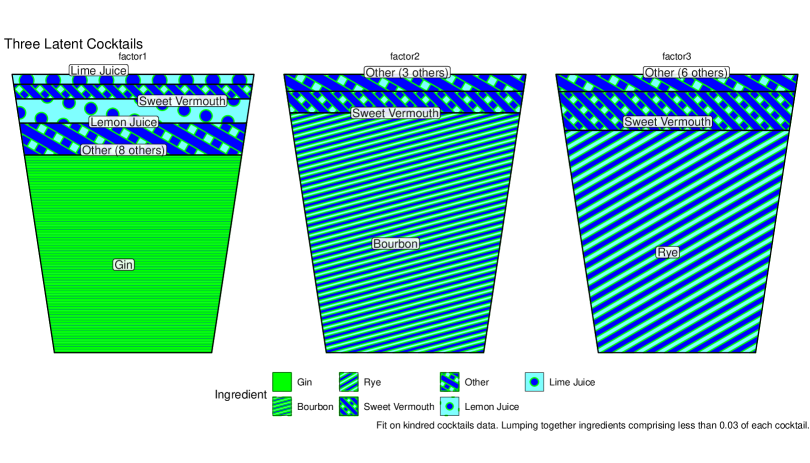

While the table is definitive, it is hard to appreciate the distribution of ingredients in the latent factor cocktails. Towards that end, we plot them in stacked barcharts in Figure 4. These barcharts are styled to look like drinks, what one might call “tumbler plots”. We notice that the latent factor cocktails contain a large number of ingredients falling under the display limit of 0.03. We also see repetition of ingredients in the factor cocktails, with Sweet Vermouth appearing in appreciable amounts in all three.

To remedy that, we fit again, this time using and . It is important to note that because we decompose that applying an penalty solely on could result in a rescaling of output such that elements of are small in magnitude and elements of are large. That is, because , we could take for large and make the penalty on irrelevant without really making the decomposition sparse. To address this, one mst typically apply an penalty to both and as we do here.

We chose the values of by trial and error, as unfortunately there is no theory to guide us here. Forging on, we plot the results in Figure 5. We see there that the factor cocktails are now contain far fewer ingredients, though they all hew to the formula of base spirit plus vermouth, with some extras. These seem more “spirit-forward” than a typical cocktail, but de gustibus non est disputandum.

Finally, we perform another fit, this time with , and using , , , values again chosen by trial and error. We plot the results of this fit in Figure 6. Again we see heavy use of base spirits with a sprinkling of secondary ingredients. One can imagine in the limit as approaches the number of base ingredients that the factorization could collapse to the trivial one . We see that behavior here with the top nine most popular ingredients dominating the latent factor cocktails. Thus it is hard to view non-negative matrix factorization as a good way of quantifying and describing the space of cocktails. Probably a better approach would be to recognize that cocktails evolve from other cocktails, then try to infer and describe the evolutionary structure.

References

- FC et al. [2024] Mike FC, Trevor L. Davis, and ggplot2 authors. ggpattern: ’ggplot2’ Pattern Geoms, 2024. URL https://github.com/coolbutuseless/ggpattern. R package version 1.1.1, commit 5d3c1de3f472abf041e0e79ced00479cd7c70796.

- Gonzalez and Zhang [2005] Edward F. Gonzalez and Yin Zhang. Accelerating the Lee-Seung algorithm for nonnegative matrix factorization. Technical Report TR05-02, Rice University, 2005. URL https://scholarship.rice.edu/bitstream/handle/1911/102034/TR05-02.pdf.

- Lee and Seung [2001] Daniel D. Lee and H. Sebastian Seung. Algorithms for non-negative matrix factorization. In T. K. Leen, T. G. Dietterich, and V. Tresp, editors, Advances in Neural Information Processing Systems 13, pages 556–562. MIT Press, 2001. URL http://papers.nips.cc/paper/1861-algorithms-for-non-negative-matrix-factorization.pdf.

- Li [2013] Can Li. A conjugate gradient type method for the nonnegative constraints optimization problems. Journal of Applied Mathematics, 2013:986317, 2013. doi: 10.1155/2013/986317. URL https://doi.org/10.1155/2013/986317.

- Merritt and Zhang [2005] Michael Merritt and Yin Zhang. Interior-point gradient method for large-scale totally nonnegative least squares problems. Journal of optimization theory and applications, 126(1):191–202, 2005. URL https://scholarship.rice.edu/bitstream/handle/1911/102020/TR04-08.pdf.

- Nocedal and Wright [2006] J. Nocedal and S. J. Wright. Numerical Optimization. Springer series in operations research and financial engineering. Springer, 2006. ISBN 9780387400655. URL http://books.google.com/books?id=VbHYoSyelFcC.

- Pav [2012] Steven E. Pav. System and method for unmixing spectroscopic observations with nonnegative matrix factorization, 2012. URL https://patentscope.wipo.int/search/en/detail.jsf?docId=US42758160.

- Pav [2023] Steven E. Pav. cocktailApp: ’shiny’ App to Discover Cocktails, 2023. URL https://github.com/shabbychef/cocktailApp. R package version 0.2.3.

- Pav [2024] Steven E. Pav. rnnmf: Regularized Non-negative Matrix Factorization, 2024. URL https://github.com/shabbychef/rnnmf. R package version 0.3.0.

- Saberi-Movahed et al. [2024] Farid Saberi-Movahed, Kamal Berahman, Razieh Sheikhpour, Yuefeng Li, and Shirui Pan. Nonnegative matrix factorization in dimensionality reduction: A survey, 2024. URL https://arxiv.org/abs/2405.03615.

- Schäcke [2013] Kathrin Schäcke. On the Kronecker product, 2013. URL https://www.math.uwaterloo.ca/~hwolkowi/henry/reports/kronthesisschaecke04.pdf.

Appendix A Matrix Identities

The following identities are useful for switching between matrix and vectorized representations. [11]

| (27) | ||||

| (28) | ||||

| (29) | ||||

| (30) | ||||

| (31) |