An $O(n^2)$ Algorithm for Computing Optimal Continuous Voltage Schedules

Abstract

Dynamic Voltage Scaling techniques allow the processor to set its speed dynamically in order to reduce energy consumption. In the continuous model, the processor can run at any speed, while in the discrete model, the processor can only run at a finite number of speeds given as input. The current best algorithm for computing the optimal schedules for the continuous model runs in $O(n^2 \log n)$ time for scheduling $n$ jobs. In this paper, we improve the running time to $O(n^2)$ by speeding up the calculation of s-schedules using a more refined data structure. For the discrete model, we improve the computation of the optimal schedule from the current best $O(dn \log n)$ to $O(n \log \max\{d, n\})$, where $d$ is the number of allowed speeds.

1 Introduction

Energy efficiency is a primary concern for chip designers, not only to prolong the lifetime of the batteries that power portable electronic devices, but also for environmental protection when large facilities such as data centers are involved. Processors capable of operating at a range of frequencies are already available, such as those with Intel’s SpeedStep technology and AMD’s PowerNow technology. The capability of the processor to change voltages is often referred to in the literature as DVS (Dynamic Voltage Scaling). For DVS processors, since energy consumption is at least a quadratic function of the supply voltage (which is proportional to CPU speed), it saves energy to let the processor run at the lowest possible speed while still satisfying all the timing constraints, rather than running at full speed and then switching to idle.

One of the earliest theoretical models for DVS was introduced by Yao, Demers and Shenker Yao95 in 1995. They assumed that the processor can run at any speed and each job has an arrival time and a deadline. They gave a characterization of the minimum-energy schedule (MES) and an $O(n^3)$ algorithm for computing it, which was later improved to $O(n^2 \log n)$ by Li06. No special assumption was made on the power consumption function except convexity. Several online heuristics were also considered, including the Average Rate Heuristic (AVR) and the Optimal Available Heuristic (OPA). Under the common assumption of power function $P(s) = s^\alpha$, they showed that AVR has a competitive ratio of $2^{\alpha-1}\alpha^\alpha$ for all job sets. Thus its energy consumption is at most a constant times the minimum required. Later on, under various related models and assumptions, more algorithms for energy-efficient scheduling have been proposed.

Bansal et al. Bansal04 further investigated the online heuristics for the model proposed by Yao95 and proved that the heuristic OPA has a tight competitive ratio of $\alpha^\alpha$ for all job sets. For the temperature model, where the temperature of the processor is not allowed to exceed a certain thermal threshold, they showed how to solve it within any error bound in polynomial time. Recently, Bansal et al. Bansal08 showed that the competitive analysis of the AVR heuristic given in Yao95 is essentially tight. Quan and Hu Quan01 considered scheduling jobs with fixed priorities and characterized the optimal schedule through transformations to MES Yao95. Yun and Kim Yun03 later showed that computing the optimal schedule is NP-hard.

Pruhs et al. Pruhs04 studied the problem of minimizing the average flow time of a sequence of jobs when a fixed amount of energy is available and gave a polynomial time offline algorithm for unit-size jobs. Bunde Bunde06 extended this problem to the multiprocessor scenario and gave further results for unit-size jobs. Chan et al. Soda07 investigated a slightly more realistic model where the maximum speed is bounded. They proposed an online algorithm which is $O(1)$-competitive in both energy consumption and throughput. More work on the speed bounded model can be found in ICALP08 TAMC07 ISAAC07.

Ishihara and Yasuura Ishihara98 initiated the research on the discrete DVS problem, where a CPU can only run at a set of given speeds. They solved the case when the processor is only allowed to run at two different speeds. Kwon and Kim Kwon03 extended it to the general discrete DVS model, where the processor is allowed to run at speeds chosen from a finite speed set. They gave an $O(n^3)$ algorithm for this problem based on the MES algorithm in Yao95, which was later improved in Li05 to $O(dn \log n)$, where $d$ is the number of allowed speeds.

When the CPU can only change speed gradually instead of instantly, Qu98 discussed some special cases that can be solved optimally in polynomial time. Later, Wu et al. Wu09 extended the polynomial solvability to jobs with agreeable deadlines. Irani et al. Irani03 investigated an extended scenario where the processor can be put into a low-power sleep state when idle. A certain amount of energy is needed when the processor changes from the sleep state to the active state. The technique of switching processors from idle to sleep and back to idle is called Dynamic Power Management (DPM), which is the other major technique for energy efficiency. They gave an offline algorithm that achieves a 2-approximation and online algorithms with constant competitive ratios. Recently, Albers and Antoniadis SODA12 proved the NP-hardness of the above problem and also showed some lower bounds on the approximation ratio. Pruhs et al. Pruhs10 introduced profit into DVS scheduling. They assume that the profit obtained from a job is a function of its finishing time and that, on the other hand, money needs to be paid to buy energy to execute jobs. They give a lower bound on how good an online algorithm can be and also give an online algorithm with a constant competitive ratio in the resource augmentation setting. A survey on algorithmic problems in power management for DVS by Irani and Pruhs can be found in Irani05. More recent surveys by Albers can be found in Albers10 Albers11b.

In LiB06, the authors showed that the optimal schedule for tree structured jobs can be computed in $O(n^2)$ time. In this paper, we prove that the optimal schedule for general jobs can also be computed in $O(n^2)$ time, improving upon the previously best known $O(n^2 \log n)$ result Li06. The rest of the paper is organized as follows. Section 2 gives the problem formulation. Section 3 discusses the linear-time implementation of an important tool, the s-schedule, used in the algorithm in Li06. We then use this linear-time implementation to improve the calculation of the optimal schedule in Section 4. In Section 5, we improve the computational complexity of the optimal schedule for the discrete model. Finally, we conclude the paper in Section 6.

2 Models and Preliminaries

We consider the single processor setting. A job set $J = \{j_1, j_2, \dots, j_n\}$ over $[0, 1]$ is given, where each job $j_k$ is characterized by three parameters: arrival time $a_k$, deadline $b_k$, and workload $R_k$. Here workload means the required number of CPU cycles. We also refer to $[a_k, b_k] \subseteq [0, 1]$ as the interval of $j_k$. A schedule $S$ for $J$ is a pair of functions $(s(t), job(t))$ which defines the processor speed and the job being executed at time $t$ respectively. Both functions are assumed to be piecewise continuous with finitely many discontinuities. A feasible schedule must give each job its required workload between its arrival time and deadline, with perhaps intermittent execution. We assume that the power $P$, or energy consumed per unit time, is $s^\alpha$ ($\alpha \ge 2$) where $s$ is the processor speed. The total energy consumed by a schedule $S$ is $E(S) = \int_0^1 P(s(t))\,dt$. The goal of the min-energy feasibility scheduling problem is to find a feasible schedule that minimizes $E(S)$ for any given job set $J$. We refer to this problem as the continuous DVS scheduling problem.
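As a small worked illustration of why running slowly saves energy (a sketch with assumed numbers, not taken from the paper), take a single job with workload 1 whose interval is the whole horizon $[0, 1]$, and assume the power equals the cube of the speed. Running at constant speed 1 throughout, and running at speed 2 for the first half and then idling, both complete the job, but they consume

$$E_{\text{const}} = \int_0^1 1^3 \, dt = 1, \qquad E_{\text{burst}} = \int_0^{1/2} 2^3 \, dt = 4.$$

By convexity of the power function, the constant-speed schedule is the cheaper of the two.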

For the continuous DVS scheduling problem, the optimal schedule is characterized by using the notion of a critical interval for the job set $J$, which is an interval $I$ in which a group of jobs must be scheduled at maximum constant speed $g(I)$ in any optimal schedule for $J$. The algorithm MES in Yao95 proceeds by identifying such a critical interval $I$, scheduling those ‘critical’ jobs at speed $g(I)$ over $I$, then constructing a subproblem for the remaining jobs and solving it recursively. The details are given below.

Definition 1

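For any interval $I \subseteq [0, 1]$, we use $J_I$ to denote the subset of jobs in $J$ whose intervals are completely contained in $I$. The intensity of an interval $I$ is defined to be $g(I) = \left(\sum_{j_k \in J_I} R_k\right) / |I|$, where $R_k$ is the workload of job $j_k$.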

An interval $I^*$ achieving maximum intensity $g(I)$ over all possible intervals $I$ defines a critical interval for the current job set. It is known that the subset of jobs $J_{I^*}$ can be feasibly scheduled at speed $g(I^*)$ over $I^*$ by the earliest deadline first (EDF) principle. That is, at any time $t$, a job which is waiting to be executed and has the earliest deadline will be executed during $[t, t + \epsilon]$. The interval $I^*$ is then removed from $[0, 1]$; all the remaining job intervals are updated to reflect the removal, and the algorithm recurses. We denote the optimal schedule which guarantees feasibility and consumes minimum energy in the continuous DVS model as OPT.
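The notion of intensity can be made concrete with a brute-force search. The following is a minimal sketch, not the algorithm of Yao95 or Li06: the function names, the `(arrival, deadline, workload)` tuple layout, and the integer time scale (used instead of $[0, 1]$ for readability) are all assumptions made for illustration. It relies on the observation that a maximum-intensity interval can be taken to start at some arrival time and end at some deadline.

```python
from fractions import Fraction

def intensity(jobs, lo, hi):
    """Workload of the jobs whose interval lies inside [lo, hi], divided by hi - lo."""
    work = sum(w for (a, b, w) in jobs if lo <= a and b <= hi)
    return Fraction(work) / Fraction(hi - lo)

def critical_interval(jobs):
    """Brute force over (arrival, deadline) endpoint pairs; returns (intensity, lo, hi)."""
    best = None
    for lo in sorted({a for a, _, _ in jobs}):
        for hi in sorted({b for _, b, _ in jobs}):
            if hi > lo:
                g = intensity(jobs, lo, hi)
                if best is None or g > best[0]:
                    best = (g, lo, hi)
    return best

# Hypothetical job set: (arrival, deadline, workload)
jobs = [(0, 4, 2), (1, 3, 4), (2, 6, 1)]
print(critical_interval(jobs))
```

For this job set the interval $[1, 3]$ has intensity 2 and is critical; an MES-style algorithm would schedule the job contained in it at speed 2, remove the interval, and recurse on the rest.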

The authors in Li06 later observed that the critical intervals do not in fact need to be located one after another. Instead, one can use a concept called the $s$-schedule, defined below, to bipartition the jobs in a way that gradually approaches the optimal speed curve.

Definition 2

For any constant $s$, the $s$-schedule for a job set $J$ is an EDF schedule which uses a constant speed $s$ in executing any jobs of $J$. It will give up a job when the deadline of the job has passed. In general, $s$-schedules may have idle periods or unfinished jobs.

Definition 3

In a schedule $S$, a maximal subinterval of $[0, 1]$ devoted to executing the same job $j_k$ is called an execution interval for $j_k$ (with respect to $S$). Denote by $I_k(S)$ the union of all execution intervals for $j_k$ with respect to $S$. Execution intervals with respect to the $s$-schedule will be called $s$-execution intervals.

It is easy to see that the $s$-schedule for $n$ jobs contains at most $2n$ $s$-execution intervals, since the end of each execution interval (including an idle interval) corresponds to the moment when either a job is finished or a new job arrives. Also, the $s$-schedule can be computed in $O(n \log n)$ time by using a priority queue to keep all jobs currently available, prioritized by their deadlines. In the next section, we will show that the $s$-schedule can be computed in linear time.
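The priority-queue computation mentioned above can be sketched as a small event-driven simulation. This is only an illustrative sketch under assumptions: the function name `s_schedule`, the tuple layout `(arrival, deadline, workload)`, the floating-point tolerance, and the integer time scale are not from the paper.

```python
import heapq

def s_schedule(jobs, s):
    """EDF at constant speed s, giving up a job once its deadline has passed.
    jobs: list of (arrival, deadline, workload).
    Returns (pieces, unfinished): pieces are (start, end, job_index) execution
    intervals; unfinished maps job_index to the workload left undone."""
    order = sorted(range(len(jobs)), key=lambda k: jobs[k][0])  # by arrival time
    remaining = [w for _, _, w in jobs]
    heap, pieces, unfinished = [], [], {}
    t, i, n = 0.0, 0, len(jobs)
    while i < n or heap:
        if not heap:                                  # idle: jump to the next arrival
            t = max(t, jobs[order[i]][0])
        while i < n and jobs[order[i]][0] <= t:       # release the jobs that have arrived
            k = order[i]
            heapq.heappush(heap, (jobs[k][1], k))
            i += 1
        deadline, k = heap[0]                         # available job with earliest deadline
        if deadline <= t:                             # deadline passed: give the job up
            heapq.heappop(heap)
            unfinished[k] = remaining[k]
            continue
        # Run job k until it finishes, its deadline, or the next arrival.
        end = min(t + remaining[k] / s, deadline)
        if i < n:
            end = min(end, jobs[order[i]][0])
        if pieces and pieces[-1][2] == k and pieces[-1][1] == t:
            pieces[-1] = (pieces[-1][0], end, k)      # extend the current execution interval
        else:
            pieces.append((t, end, k))
        remaining[k] -= (end - t) * s
        t = end
        if remaining[k] <= 1e-12:                     # finished (tolerance is an assumption)
            heapq.heappop(heap)
    return pieces, unfinished

# Hypothetical job set: (arrival, deadline, workload)
jobs = [(0, 4, 2), (1, 3, 4), (2, 6, 1)]
print(s_schedule(jobs, 2))   # every job finishes at speed 2
print(s_schedule(jobs, 1))   # job 1 is left unfinished at speed 1
```

Each job is pushed and popped at most once, and the loop body runs once per arrival, completion, or expiry, which gives the $O(n \log n)$ behaviour of the heap-based approach.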

3 Computing an $s$-Schedule in Linear Time

In this work, we assume that the underlying computational model is the unit-cost RAM model with word size $\Theta(\log n)$. This model is assumed only for the purpose of using a special union-find algorithm by Gabow and Tarjan Gabow1983.

Theorem 3.1

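If for each $1 \le k \le n$, the rank of the arrival time $a_k$ in $\{a_1, a_2, \dots, a_n\}$ and the rank of the deadline $b_k$ in $\{b_1, b_2, \dots, b_n\}$ are pre-computed, then the $s$-schedule can be computed in linear time in the unit-cost RAM model.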

We make the following two assumptions:

- the jobs are already sorted according to their deadlines;

- for each job $j_k$, we know the rank of its arrival time $a_k$ in the arrival time set $\{a_1, a_2, \dots, a_n\}$.

Because of the first assumption and without loss of generality, we assume that the deadlines satisfy $b_1 \le b_2 \le \dots \le b_n$. Algorithm 1 schedules the jobs in the order of their deadlines. When scheduling a job, the algorithm searches for the earliest available time interval and schedules the job in it, and then repeats the process until either all the workload of the job is scheduled or no such time interval can be found before the deadline. A more detailed discussion of the algorithm is given below.

Let be . Note that the times “” and “” (where is any fixed positive constant) are included in for simplifying the presentation of the algorithm. Denote the size of by . Denote to be the -th smallest element in . Note that the rank of any in is known. During the running of the algorithm, we will maintain the following data structure:

Definition 4

For each , the algorithm maintains a value , whose value is in the range . The meaning of is that: the time interval is fully occupied by some jobs, and the time interval is idle.

If is fully occupied, then is . Note that such a time always exists during the running of the algorithm, which will be shown later when we discuss how to maintain . At the beginning of the algorithm, we assume that the processor is idle for the whole time period. That means for (see line 1 of Algorithm 1).

Example 1

An example for demonstrating the usage of the data structure is given below: Assume that . At some point during the execution of the algorithm, if some jobs have been scheduled to run at time intervals , then we will have , , , , , , , , , and .

Before we analyze the algorithm, we need to define an important concept called canonical time interval.

Definition 5

During the running of the algorithm, a canonical time interval is a time interval of the form , where . When , we call it an empty canonical time interval.

Note that a non-empty canonical time interval is always idle based on the definition of . Any arrival time will not lie inside any canonical time interval but it is possible that will touch any of the two ending points, i.e., for any , we have either or . Therefore, if we want to search for a time interval to run a job at or after time , then we should always look for the earliest non-empty canonical time interval where .

In Algorithm 1, a variable is used to track the workload to be scheduled. Lines 1-1 try to schedule as early as possible if . Line 1 tries to search for an earliest non-empty canonical time interval no earlier than the arrival time of (i.e., ). Such a always exists because there is always a non-empty canonical time interval . Line 1-1 means that, if is not earlier than the deadline of , then the job cannot be finished. Line 1 sets a value of , whose meaning is that can be used to schedule the job. The value of is no later than the deadline of . Lines 1-1 process the case when the remaining workload of cannot be finished in the time interval . Lines 1-1 process the case when the remaining workload of can be finished in the time interval . In the first case, line 1 updates to because the time interval is occupied and is idle. In the second case, a time of is occupied by after the time , so is increased by .

Example 2

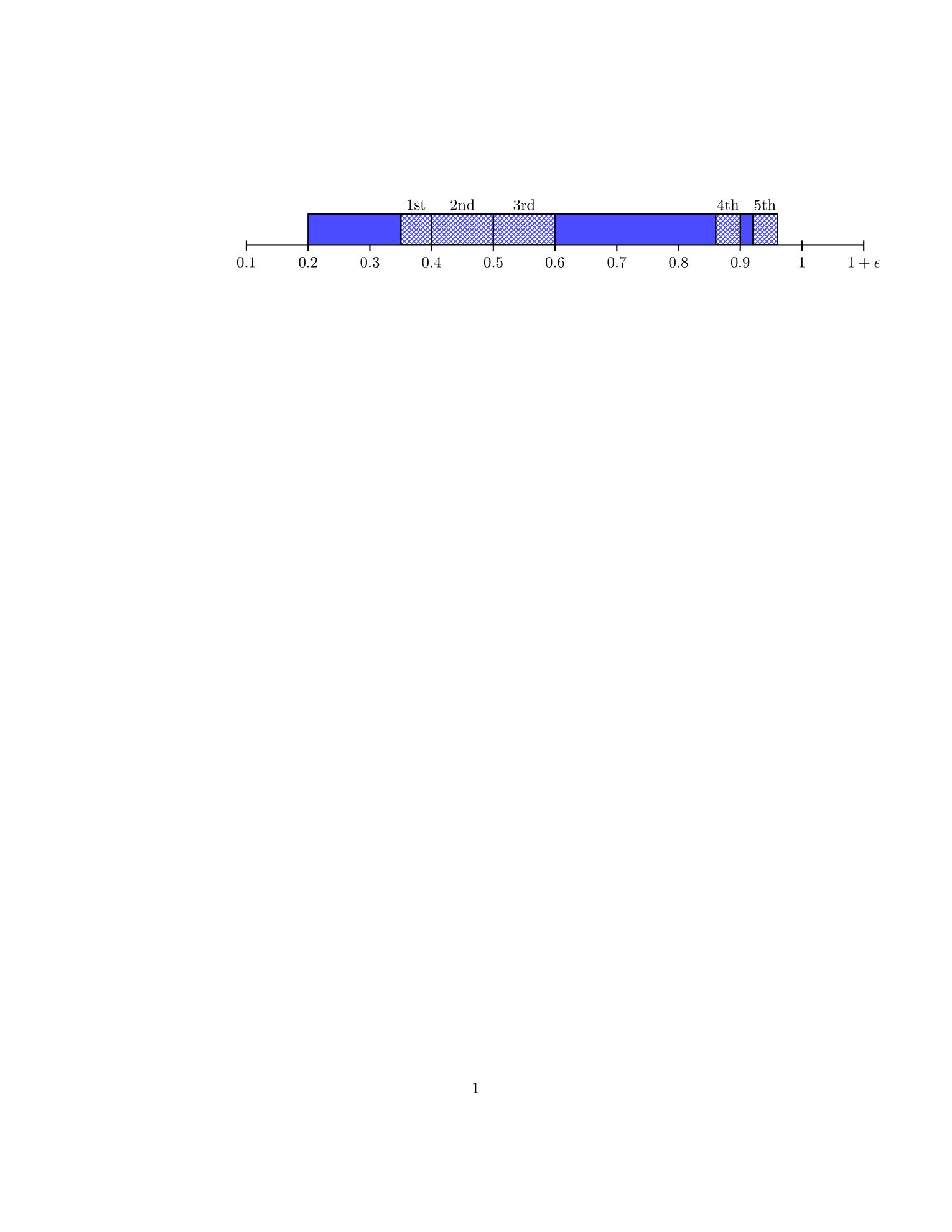

Following the example provided in the previous example, assume that the speed is , if we are to schedule a job , where , the algorithm will proceed as follows: At the beginning, will be initialized to , and (because ; see line 1). Line 1 will then get the interval as an earliest non-empty canonical time interval, and a workload of is scheduled at that time interval. The values of will be updated to accordingly. Now, becomes , and line 1 will get the time interval to schedule the job. After that becomes , and . Line 1 then gets the time interval to schedule the job, and will be further reduced to . The values of will be updated to . The next time interval found will be , and will become . The values of will be updated to . The remaining earliest non-empty canonical time interval is , but the deadline of the job is , so only will be used to schedule the job, and will be . The value of is then updated to . Finally, is the remaining earliest non-empty canonical time interval, but , so line 1-1 will break the loop, and will be an unfinished job. A graphical illustration is provided in Figure 1. The solid rectangles represent the time intervals occupied by some jobs before scheduling . The cross-hatched rectangles represent the time intervals that are used to schedule . The -th cross-hatched rectangle (where ) is the -th time interval scheduled according to this example. Note that all the cross-hatched rectangles except the -th one are canonical time intervals right before scheduling .

The most critical part of the algorithm is Line 1, which can be implemented efficiently by the following folklore method using a special union-find algorithm developed by Gabow and Tarjan Gabow1983 (see also the discussion of the decremental marked ancestor problem AlstrupHR1998 ). At the beginning, there is a set for each . The name of a set is the largest element of the set. Whenever is updated to (i.e., there is not any idle time in the interval ), we make a union of the set containing and the set containing , and set the name of this set to be the name of the set containing . After the union, the two old sets are destroyed. In this way, a set is always an interval of integers. For a set whose elements are , the semantic meaning is that, is fully scheduled but is idle. Therefore, to search for an earliest non-empty canonical time interval beginning at or after time , we can find the set containing , and let be the name of the set, then is the required time interval.

Example 3

An example of the above union-find process for scheduling in the previous example is given below: Before scheduling , we have the sets , , , , , , . The execution of line 1 will always try to search for a set that contains the element . Therefore, the first execution will find the set , so will be . After that, becomes , so the algorithm needs to make a union of the sets and to get . Similarly, the next execution will find the set , so . The algorithm will then make a union of and to get . For the next execution, the set will be found, and it will be merged with to get . In this case, , and the earliest non-empty canonical time interval is . After is updated to , the algorithm will merge with and obtain . Therefore, the next execution of line 1 will get . After the time interval is scheduled and is updated to , so the algorithm will not do any union. The last execution finds again, and a loop break is performed.
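The union-find search described above can be sketched as follows. This is a minimal illustration under stated assumptions: it uses an ordinary path-compressed union-find rather than the Gabow and Tarjan structure, so it does not by itself achieve the linear-time bound; the class and method names are hypothetical; and slots are numbered from 0, with the last slot acting as a sentinel that is never filled.

```python
class NextIdleSlot:
    """Slots 0..m-1. Each set is a run of consecutive slots named by its largest
    element, which is the only slot in the run that still has idle time."""

    def __init__(self, m):
        self.parent = list(range(m))      # every slot starts as its own set

    def find(self, i):
        """Return the earliest slot at or after i that still has idle time."""
        root = i
        while self.parent[root] != root:
            root = self.parent[root]
        while self.parent[i] != root:     # path compression
            self.parent[i], i = root, self.parent[i]
        return root

    def mark_full(self, i):
        """Slot i (which must be the name of its set) has no idle time left:
        union its set with the set containing i + 1."""
        self.parent[i] = i + 1

slots = NextIdleSlot(6)
slots.mark_full(1)
slots.mark_full(2)
print(slots.find(1))   # earliest slot with idle time at or after slot 1
```

Because a slot is only ever merged with its right-hand neighbour, every set stays a run of consecutive integers, which is the special structure that the Gabow and Tarjan algorithm exploits.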

Now, we analyze the time complexity of the algorithm.

Lemma 1

Each set always consists of consecutive integers.

Proof

The claim can be proved by induction. At the beginning, each singleton set trivially consists of consecutive integers. During the running of the algorithm, the union operation always merges two adjacent sets of consecutive integers to form a larger set of consecutive integers.

Lemma 2

There are at most unions.

Proof

It is because there are only sets.

Lemma 3

There are at most finds.

Proof

Some finds are from finding the set containing during each union. Note that there is no need to perform a find operation to find the set containing for union, because is just the name of such a set, where the set contains continuous integers with as the largest element. The other finds are from searching for earliest canonical time intervals beginning at or after time . This can be analyzed in the following way: Let be the number of times to search for an earliest non-empty canonical time interval when processing job . Let be the number of unions that are performed when processing job . We have , because each of the first finds must accompany a union. Therefore,

Since these unions and finds are operated on the sets of integer intervals, such an interval union-find problem can be solved in time in the unit-cost RAM model using Gabow and Tarjan’s algorithm Gabow1983 . Note that , so the total time complexity is . Theorem 3.1 holds.

If the union-find algorithm is implemented in the pointer machine model BenAmram1995 using the classical algorithm of Tarjan Tarjan1975, the complexity of our $s$-schedule algorithm will become $O(n\,\alpha(n))$, where $\alpha(n)$ is the one-parameter inverse Ackermann function.

Note that the number of finds can be further reduced with a more careful implementation of the algorithm as follows (but the asymptotic complexity will not change):

- Whenever the algorithm schedules a job to run at a time interval , the algorithm no longer needs to proceed to line 1 for the same job, because there will not be any idle time interval available before the deadline.

- For each job , the first time to find a non-empty canonical time interval requires one find operation. In any of the later times to search for earliest non-empty canonical time intervals for the same job, there must be a union operation just performed. The that determines the earliest non-empty canonical time interval is just the name of that new set after that union, so a find operation is not necessary in this case. Note that the find operations that accompany the unions are still required.

Using the above implementation, the number of finds to search for earliest non-empty canonical time intervals can be reduced to . Along with the finds for unions, the total number of finds of this improved implementation is at most .

4 An $O(n^2)$ Continuous DVS Algorithm

We will first take a brief look at the previous best known DVS algorithm of Li, Yao and Yao Li06. As in Li06, define the “support” $U$ of a job set $J$ to be the union of all job intervals in $J$. Define $\mathrm{avr}(J)$, the “average rate” of $J$, to be the total workload of $J$ divided by $|U|$. According to Lemma 9 in Li06, using $s = \mathrm{avr}(J)$ to do an $s$-schedule will generate two nonempty subsets of jobs requiring speed at least $s$ or less than $s$ respectively in the optimal schedule, unless the optimal speed for $J$ is a constant $s$. The algorithm will recursively do the scheduling based on the two subsets of jobs. Therefore, at most $n$ calls of $s$-schedules on a job set with at most $n$ jobs are needed before we obtain the optimal schedule for the whole job set. The most time-consuming part of their algorithm is the $s$-schedules.

To apply our improved $s$-schedule algorithm for solving the continuous DVS scheduling problem, we need to make sure that the ranks of the deadlines and arrival times are known before each $s$-schedule call. This can be done in the following way: before the first call, sort the deadlines and arrival times and obtain the ranks. In each of the subsequent calls, in order to get the new ranks within the two subsets of jobs, a counting sort algorithm can be used to sort the old ranks in linear time. Therefore, the time to obtain the ranks is at most $O(n^2)$ for the whole algorithm. Based on the improved computation of $s$-schedules, the total time complexity of the DVS problem is now $O(n^2)$, improving the previous $O(n^2 \log n)$ algorithm of Li06 by a factor of $O(\log n)$. We have the following theorem.

Theorem 4.1

The continuous DVS scheduling problem can be solved in $O(n^2)$ time for $n$ jobs in the unit-cost RAM model.
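The re-ranking step used between recursive calls in this section can be sketched with a counting-sort style pass. This is an illustrative sketch only: the function name `rerank` and the assumption that the old ranks are distinct integers in a known range are not from the paper.

```python
def rerank(old_ranks, max_rank):
    """Given the distinct old ranks (integers in 0..max_rank) of a subset of jobs,
    return their ranks within the subset, in time linear in len(old_ranks) + max_rank."""
    present = [False] * (max_rank + 1)
    for r in old_ranks:
        present[r] = True
    new_rank_of = {}
    next_rank = 0
    for r in range(max_rank + 1):         # sweep the old ranks in increasing order
        if present[r]:
            new_rank_of[r] = next_rank
            next_rank += 1
    return [new_rank_of[r] for r in old_ranks]

print(rerank([7, 2, 5], 9))
```

No comparison sort is needed, so each recursive call pays only linear time for restoring the rank information that the linear-time schedule computation assumes.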

5 Further Improvements

For the discrete DVS scheduling problem, we have an $O(n \log \max\{d, n\})$ algorithm to calculate the optimal schedule by doing binary testing on the given $d$ speed levels, improving upon the previously best known $O(dn \log n)$ algorithm of Li05. To be specific, given the input job set of size $n$ and a set of speeds $\{s_1, s_2, \dots, s_d\}$, we first choose the middle speed $s_{d/2}$ to bi-partition the job set into two subsets. Then within each subset, we again choose the middle speed level to do the bi-partition. We recursively do the bi-partition until all the speed levels are handled. In the recursion tree thus built, we claim that the re-sorting for subproblems on the same level can be done in $O(n)$ time, which implies that the total time needed is $O(n \log d + n \log n) = O(n \log \max\{d, n\})$. The claim can be shown in the following way. Based on the initial sorting, we can assign a new label to each job specifying which subgroup it belongs to when doing bi-partitioning. Then a linear scan can produce the sorted list for each subgroup.
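The labelling-and-scan argument can be sketched as a stable partition. This is a minimal sketch under assumptions: the function name `split_sorted`, the dictionary of labels, and the string job names are hypothetical.

```python
def split_sorted(jobs_sorted, label, groups):
    """Distribute an already sorted job list into `groups` sublists by label.
    A single left-to-right scan keeps each sublist in sorted order."""
    buckets = [[] for _ in range(groups)]
    for job in jobs_sorted:
        buckets[label[job]].append(job)
    return buckets

# Jobs sorted by deadline; label 0 or 1 says which side of the bi-partition a job falls on.
jobs_sorted = ["a", "b", "c", "d"]
label = {"a": 1, "b": 0, "c": 1, "d": 0}
print(split_sorted(jobs_sorted, label, 2))
```

Since the scan never reorders jobs within a group, the subproblems on one level of the recursion tree receive sorted inputs without any further sorting.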

6 Conclusion

In this paper, we improve the time for computing the optimal continuous DVS schedule from $O(n^2 \log n)$ to $O(n^2)$. The major improvement happens in the computation of s-schedules. Originally, the s-schedule computation is done in an online fashion, where the execution time is allocated from the beginning to the end sequentially and the time assigned to a certain job is decided gradually. In this work, by contrast, we allocate execution time to jobs in an offline fashion. When jobs are sorted by deadlines, the execution time of job $j_k$ is completely decided before we go on to consider $j_{k+1}$. Then, by using a suitable data structure and conducting a careful analysis, the computation time for s-schedules improves from $O(n \log n)$ to $O(n)$. We also design an algorithm to improve the computation of the optimal schedule for the discrete model from $O(dn \log n)$ to $O(n \log \max\{d, n\})$.

References

- (1) S. Albers. Energy-Efficient Algorithms. Communications of the ACM, 53(1), pp. 86-96, 2010.

- (2) S. Albers. Algorithms for Dynamic Speed Scaling. (STACS 2011), pp. 1-11.

- (3) S. Albers and A. Antoniadis: Race to idle: new algorithms for speed scaling with a sleep state. (SODA 2012), pp. 1266-1285.

- (4) S. Alstrup, T. Husfeldt, and T. Rauhe. Marked ancestor problems. In FOCS’98: Proceedings of the 39th Annual Symposium on Foundations of Computer Science, pages 534–544, 1998.

- (5) N. Bansal, D. P. Bunde, H. L. Chan, and K. Pruhs. Average Rate Speed Scaling. In Proceedings of the 8th Latin American Theoretical Informatics Symposium, volume 4957 of LNCS, 2008, pp. 240-251.

- (6) N. Bansal, H. L. Chan, T. W. Lam and L.-K. Lee. Scheduling for speed bounded processors. In Proceedings of the 35th International Symposium on Automata, Languages and Programming, 2008, pp. 409-420.

- (7) N. Bansal, T. Kimbrel, and K. Pruhs. Dynamic Speed Scaling to Manage Energy and Temperature. In Proceedings of the 45th Annual Symposium on Foundations of Computer Science, 2004, pp. 520-529.

- (8) A. M. Ben-Amram. What is a “pointer machine”? SIGACT News 26, 2 (June 1995), 88-95.

- (9) D. P. Bunde. Power-Aware Scheduling for Makespan and Flow. In Proceedings of the 18th annual ACM symposium on Parallelism in algorithms and architectures, 2006, pp. 190-196.

- (10) H. L. Chan, W. T. Chan, T. W. Lam, L. K. Lee, K. S. Mak, and P. W. H. Wong. Energy Efficient Online Deadline Scheduling. In Proceedings of the 18th annual ACM-SIAM symposium on Discrete algorithms, 2007, pp. 795-804.

- (11) W. T. Chan, T. W. Lam, K. S. Mak, and P. W. H. Wong. Online Deadline Scheduling with Bounded Energy Efficiency. In Proceedings of the 4th Annual Conference on Theory and Applications of Models of Computation, 2007, pp. 416-427.

- (12) H. N. Gabow and R. E. Tarjan. A linear-time algorithm for a special case of disjoint set union. In STOC ’83: Proceedings of the fifteenth annual ACM symposium on Theory of computing, pages 246–251, New York, NY, USA, 1983. ACM.

- (13) I. Hong, G. Qu, M. Potkonjak and M. B. Srivastava. Synthesis techniques for low-power hard real-time systems on variable voltage processors. In Proceedings of the IEEE Real-Time Systems Symposium, 1998, pp. 178-187.

- (14) S. Irani, R. K. Gupta and S. Shukla. Algorithms for Power Savings. ACM Transactions on Algorithms, 2007, 3(4).

- (15) S. Irani and K. Pruhs. Algorithmic Problems in Power Management. ACM SIGACT News, 2005, 36(2): pp. 63-76.

- (16) T. Ishihara and H. Yasuura. Voltage Scheduling Problem for Dynamically Variable Voltage Processors. In Proceedings of International Symposium on Low Power Electronics and Design, 1998, pp. 197-202.

- (17) W. Kwon and T. Kim. Optimal Voltage Allocation Techniques for Dynamically Variable Voltage Processors. In Proceedings of the 40th Conference on Design Automation, 2003, pp. 125-130.

- (18) T. W. Lam, L. K. Lee, I. K. K. To, and P. W. H. Wong. Energy Efficient Deadline Scheduling in Two Processor Systems. In Proceedings of the 18th International Symposium on Algorithm and Computation, 2007, pp. 476-487.

- (19) M. Li and F. F. Yao. An Efficient Algorithm for Computing Optimal Discrete Voltage Schedules. SIAM Journal on Computing, 2005, 35(3): pp. 658-671.

- (20) M. Li, Becky J. Liu, F. F. Yao. Min-Energy Voltage Allocation for Tree-Structured Tasks. Journal of Combinatorial Optimization, 2006, 11(3): pp. 305-319.

- (21) M. Li, A. C. Yao, and F. F. Yao. Discrete and Continuous Min-Energy Schedules for Variable Voltage Processors. In Proceedings of the National Academy of Sciences USA, 2006, 103(11), pp. 3983-3987.

- (22) K. Pruhs and C. Stein. How to Schedule When You Have to Buy Your Energy. In Proceedings of the 13th International Workshop on Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques, 2010, pp. 352-365.

- (23) K. Pruhs, P. Uthaisombut, and G. Woeginger. Getting the Best Response for Your Erg. In Scandinavian Workshop on Algorithm Theory, 2004, pp. 14-25.

- (24) G. Quan and X. S. Hu. Energy Efficient Fixed-Priority Scheduling for Real-Time Systems on Variable Voltage Processors. Proceedings of the 38th Design Automation Conference, 2001, pp. 828-833.

- (25) R. E. Tarjan. Efficiency of a Good But Not Linear Set Union Algorithm. J. ACM 22, 2 (April 1975), 215-225.

- (26) W. Wu, M. Li and E. Chen. Min-Energy Scheduling for Aligned Jobs in Accelerate Model. Theoretical Computer Science, 2011, 412(12-14), pp. 1122-1139.

- (27) F. Yao, A. Demers, and S. Shenker. A scheduling model for reduced CPU energy. In Proceedings of the 36th Annual IEEE Symposium on Foundations of Computer Science, 1995, pp. 374-382.

- (28) H. S. Yun and J. Kim. On Energy-Optimal Voltage Scheduling for Fixed-Priority Hard Real-Time Systems. ACM Transactions on Embedded Computing Systems, 2003, 2(3): pp. 393-430.