An Optimal Control Perspective on

Classical

and Quantum Physical Systems.

Abstract

In this paper, we analyze classical and quantum physical systems from an optimal control perspective. Specifically, we explore whether their associated dynamics can correspond to an open or closed-loop feedback evolution of a control problem. Firstly, for the classical regime, when it is viewed in terms of the theory of canonical transformations, we find that it can be described by a closed-loop feedback problem. Secondly, for a quantum physical system, if one realizes that the Heisenberg commutation relations themselves can be thought of as constraints in a non-commutative space, then the momentum must be dependent on the position for any generic wave function. This implies the existence of a closed-loop strategy for the quantum case. Thus, closed-loop feedback is a natural phenomenon in the physical world. For the sake of exposition, we give a short review of control theory, and some familiar examples at the classical and quantum levels are analyzed.

1 Introduction

In the last two decades, there has been increasing interest among physicists in applying ideas from physics to finance and economics, as one can see in some classical texts.[1] [2] [3] In this paper, we want to do the exact opposite, that is, we apply ideas from the optimal control theory usually used in finance and

economics,[4] [5] [6] [7] [8] to the classical and quantum physical world. Indeed, open and closed-loop control problems are not in the toolbox of mathematical methods in physics; nevertheless, dynamic optimization with its optimal control theory is the corner-stone of modern economic analysis.

There is not much literature associated with this endeavor.

Recently, there have appeared some studies of how a control problem associated with an economic model can be interpreted as a second class constrained physical system.[9] [10] [11] [12] In [9] it is found that at the classical level, the constrained dynamics given by Dirac’s brackets are the same as the dynamics given by the Pontryagin equations [13]. The right quantization of this second-class constrained system ended with a Schrödinger equation

that is just the Hamilton–Jacobi–Bellman equation used in optimal control theory and economics [14]. It was surprising to find that after the quantization of the Pontryagin theorem, Bellman’s maximum principle is obtained.

From the perspective of control theory, this is an exciting finding in terms of the feedback of these systems. The Pontryagin

theory is characterized by open-loop strategies for the Lagrangian multiplier, whereas the Hamilton–Jacobi–Bellman equation has closed-loop strategies intrinsically. Since Pontryagin’s theory is equivalent to a classical mechanical model and Bellman’s theory is just a quantum mechanical one,

this induces one to relate open-loop strategies with classical mechanics and closed-loop strategies with quantum mechanics. All this sounds as if the quantizations of open-loop strategies would be the closed-loop ones.

Thus, if an economic feedback system characterized by an optimal control theory model can be seen as a physical system, one can naturally ask the inverse question: can the physical systems be seen from an optimal control perspective? Or, more specifically, do there exist open-loop and closed-loop strategies in physical systems? If the answer is affirmative, then, where are they? Moreover, how and why do these feedback problems appear? This paper gives some clues and answers to all these questions.

For this purpose, we found that the classical theory must be represented as a closed-loop feed-back problem. Indeed, due to the invariance of the classical Hamiltonian equations of motion (which represent an open-loop dynamics) by canonical transformations, both schemes are equivalent. In the same way, from the perspective of quantum physics, we see that if one identifies the momentum with the Lagrange multiplier, the relation between momentum and position is just a closed-loop strategy in optimal control theory. Thus, both open and closed-loop feed-backs are also a natural phenomenon in our physical world.

In the following three sections we review, for the non specialist reader, the ideas of closed and open-loop strategies that appear in control theory.

2 Dynamic Optimization: The Pontryagin Approach

To emphasize the fundamental ideas and to keep the equations simple, a generic one-dimensional physical system will be considered. Generalizations to higher dimensions are straightforward. Consider an optimal control problem commonly used in financial applications.[4] [5] It is required to optimize the cost functional

| (1) |

where represents a state variable (for example, the production of a certain article) and is a control variable (such as the marketing costs). The state variable must satisfy the market dynamics

| (2) |

The problem is to determine how to obtain the production trajectory and the control path to optimize the cost functional. To get the solution, the method of Lagrange multipliers is applied, so an improved functional on the extended configuration space is considered, which is defined by

| (3) |

To obtain the solution, the integrand of (3) can be interpreted as the Lagrangian:

| (4) |

The extremal curves then satisfy the Euler–Lagrange equations:

| (5) |

These are also written respectively as

| (6) |

with defined by

| (7) |

Equations (6) are the well-known Pontryagin equations, which are obtained in optimal control theory, through the Pontryagin maximum principle, and give the solution to the optimization problem.

3 Open-loop and closed-loop strategies

The action (3) can be written in a compact form as

| (8) |

Strictly speaking, the Pontryagin equations must be obtained by optimizing the action (8) with respect to its three variables . If and are the corresponding functional variations of the initial variables, by expanding the Hamiltonian in a Taylor series, keeping the first-order terms only, integrating by parts, and using the fact that (the initial point is fixed by the initial condition (2)), then one obtains

| (9) |

To maximize the action, all the first-order terms in and must vanish. Now, it is well-known that there are two classes of control strategies:

-

•

open-loop strategies that depend only on time: , and

-

•

closed-loop strategies that depend on the state variable and time: . [15]

In the case of an open-loop strategy, the variables and are independent, and so and are linearly independent. Hence, the Pontryagin equations (6) and the transversality condition can be obtained from Equation (9). In fact, the solutions of the Pontryagin equations give the extremal curves

| (10) |

which are the solutions of Equations (6). But what happens, however, with closed-loop strategies? Here, due to the relations between the variables in , the functional variations are related by . Substituting this into (9) yields

| (11) |

If and remain independent, the optimization of the functional implies

| (12) |

plus the transversality condition, but the equation that gives the optimal condition for the control in (6) is lost. Then, if is not known as a fixed function of from the beginning, that is, one knows that the constraints exist, but one does not know their specific form, one has three unknowns , and but only two equations of motion. Thus, the variational problem is inconsistent for an arbitrary closed-loop strategy because the functional form of is not determined by the equations of motion. It must be given from the beginning.

Now, note that for a open-loop strategy, the control equation in (6) is, in fact, the following algebraic equation for :

| (13) |

From this equation, the optimal control can be obtained in principle, as a function of and :

| (14) |

One can conclude, then, that the same optimization problem implies that the naive optimal open-loop strategy in (10) is actually just a closed-loop strategy! How can this be consistent with the variational problem (9), in which , and

are independent variables?

To understand this, consider an arbitrary closed-loop strategy of the general form . Then .

After substituting this into (9), equals

| (15) |

If and are independent variables, we have the following equations of motion from the variational principle (15):

| (16) |

Obviously these equations, for an arbitrary closed-loop strategy , differ from the Pontryagin open-loop equations. Now, choose as the special optimal closed-loop strategy which is the solution of (13), so Equations (16) are then reduced to

| (17) |

But this last set of two equations is equivalent to the three Pontryagin equations. The solutions of (17) give optimal paths and , from which the optimal control open-loop strategy in (10) can be computed given the optimal closed-loop strategy (14), by

| (18) |

Thus, the special optimal closed-loop strategy is completely equivalent to an open-loop strategy over the optimal and trajectories. Then, the optimal closed-loop strategy is the same object given by the open-loop one from a dynamical point of view. So from now on, we will not distinguish between them for the case of Pontryagin’s theory.

Note that for an arbitrary given closed-loop strategy which is not optimal (), Equations (16) mean that the action is optimized, but these extremals are not necessarily global. In fact, its dynamics is not given by Pontryagin’s equations. The optimal condition in (6) gives the global extremal for the action , as was established by Pontryagin’s maximum principle.

To end this section, suppose that and are not independent but are related by ; then, the variation of is . Substituting this into (15), choosing the optimal control strategy , using the transversality condition and the fact that , we get

| (19) |

but, as , it follows that

Thus, (19) gives finally

or

where

| (20) |

is the reduced Hamiltonian in terms of . In this way, the optimization of the action implies that the closed-loop strategy must satisfy the optimal consistency condition

| (21) |

Equation (21) is closely related to the Hamilton–Jacobi–Bellman

equation. In fact, if the Lagrangian multiplier is of the form ,

then Equation (21) implies that satisfies the Hamilton–Jacobi–Bellman equation.[9]

Let be a solution of (21); then, the

optimal state variable can be obtained from (2),

by

| (22) |

Note that (22) can be seen as the Pontryagin equation for , in the sense that and are chosen in such a way that they are optimal closed-loop strategies, that is, these strategies maximize or minimize the action.

Thus, there exist three types of strategies in optimal control theory: open-loop (), inert closed-loop () and closed-loop () strategies. The first two are completely equivalent because these give the same dynamical equations.

4 Dynamic Optimization: The Bellman approach

A second approach to the optimization problem comes from dynamic programming theory, and was developed by Richard Bellman [14]. In this case, the fundamental variable is the optimal value of the action, defined by

| (23) |

subject to (2), with initial condition .

The optimality principle of Bellman implies that satisfies the Hamilton–Jacobi–Bellman equation[4]

| (24) |

The left-hand side of Equation (24) is just the maximization

of the Hamiltonian (13) with respect to the control

variable , where the Lagrangian multiplier of the Pontryagin approach must be identified with .

Thus, the Bellman theory is (from the Pontryagin perspective)

a model which has a closed-loop -strategy .

By maximizing and solving for the optimal control variable in the left-hand side of (24) as ,

the Hamilton–Jacobi–Bellman equation is

| (25) |

Differentiating in (25) with respect to , one gets

Using the fact that is optimal and replacing , one obtains

or

This equation is identical to (21). Thus, this optimal consistency condition for the closed-loop -strategy is nothing more than the derivative of the Hamilton–Jacobi–Bellman equation. Then, Equation (21) can be written, according to (25), as

| (26) |

Integrating the above equation gives finally

where is an arbitrary, time-dependent function.

Then, for an optimal closed-loop -strategy, the optimization problem gives a non-homogeneous Hamilton–Jacobi–Bellman equation (if one identifies the Lagrangian multiplier with ). The Bellman maximum principle instead gives a homogeneous Hamilton–Jacobi–Bellman equation.

5 Classical Mechanics and Open/Closed-Loop Strategies

The classical equation of motion of a generic physical system can be obtained from the Hamiltonian action defined by

| (27) |

(where is the Hamiltonian function) as an optimization problem. In this case, by expanding the Hamiltonian in a Taylor series, keeping the first-order terms only and integrating by parts, one has that the variation of the action is

| (28) |

To maximize the action, all the first-order terms in and must vanish. For this, it is supposed that:

-

•

the end points of the curve are fixed, so and , and

-

•

the variables and are considered independent,

so and are linearly independent, from which the Hamiltonian equations of motion are obtained from (28) as:

| (29) |

From an economic or dynamic optimization point of view, the problem of optimizing the action (27) is analogous to an optimal control problem, but without a control variable . As we have seen in the previous section, the solutions of the control problem are given by the Pontryagin equations (6) plus the transversality condition. Note that the first two Pontryagin equations in (6) are precisely the Hamiltonian equations of motion (29) if one identifies the Lagrangian multiplier with the canonical momentum . This implies that corresponds to a

open-loop strategy for the system ( and are independent),

which is consistent with the Hamiltonian equations of motions. Thus, the Hamiltonian theory is a open-loop model similar to Pontryagin’s theory.

So one may ask: is it possible that closed-loop strategies can occur in physical systems as they do in economic systems?

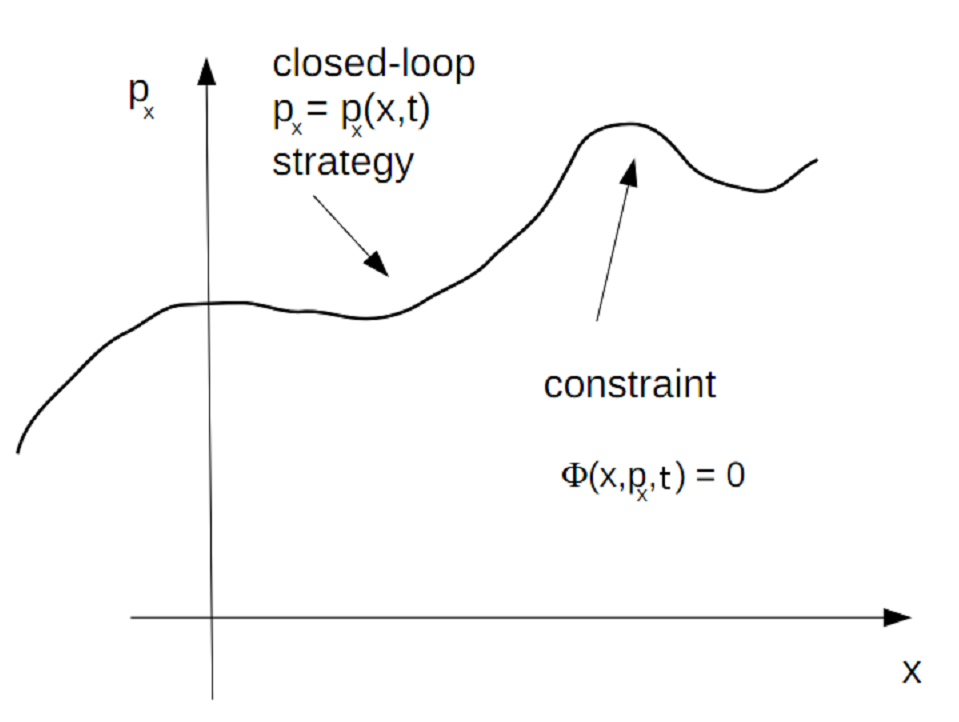

As is shown in this paper, the answer is affirmative, and they appear naturally in the context of canonical transformations. In order to give a first clue to the answer of the above question, suppose that one imposes a constraint over the phase space of the form

| (30) |

This constraint represents at each time a surface in the phase space (a line in our bi-dimensional case) where the system can evolve (see Figure 1).

Actually one can write in terms of from (30) by solving the constraint:

| (31) |

Using the analogy of the momentum with the Lagrangian multiplier , Equation (31) corresponds to the closed-loop -strategy from an economic point of view. Thus and are not independent in this case and their variations are related by . By replacing in (28) and using the fact that the endpoints of are fixed, one arrives at

| (32) |

By defining the reduced Hamiltonian , Equation (32) can be written as

| (33) |

The optimization of the action then gives

| (34) |

This last equation is a consistency condition that the closed-loop strategy

(31) must satisfy, to give an extremal of the action. That

is, if satisfies (34), then

is an optimal closed-loop strategy.

Now, if the closed-loop momentum strategy is

just the derivative of some function , such as

| (35) |

condition (34) gives

| (36) |

So, one obtains, by integration, that

| (37) |

for some function of time. The above equation is just an inhomogeneous Hamilton–Jacobi equation. Thus, the derivative of the Hamilton–Jacobi equation can be seen as the consistency condition to give to the action an extremal in the closed-loop -strategy case. Also, this little analysis implies that closed-loop momentum strategies are closely related to the Hamilton–Jacobi equation.

Now, it is well known that the Hamilton–Jacobi equation appears in classical mechanics in the context of the canonical transformations, but how and where do the closed-loop strategies appear there?

In the next section, a short review of canonical transformations will be given and it will be elucidated how the closed-loop strategies appear in that context.

6 Canonical Transformations and Closed-Loop Strategies

Consider a general coordinate transformation on the phase space

| (38) |

The transformation is called canonical if the Hamiltonian equations of motions are invariant under (38), that is, if

| (39) |

where

| (40) |

The function is called the generator of the canonical transformation, and the coordinate transformation (38) can be reconstructed from through Equations [16] [17]

| (41) |

One must note at this point that the Hamiltonian equations (29) refer to a unique coordinate system

(in this case, a Cartesian coordinate system). So, the Hamilton equations in (29) are “single observer” equations. Instead, the canonical transformation brings a new second observer into the problem, because one has two different coordinate systems:

the initial Cartesian and the second one . So

the theory of canonical transformations is a “two observers” view

of the classical mechanics. And this characteristic induces the closed-loop

-strategies from a pure classical point of view (closed-loop

-strategies can also be induced from Quantum Mechanics to

the classical realm, as we shall see later).

The Hamilton–Jacobi theory relies on the huge freedom that exists

in choosing . In fact this theory doesn’t work

directly with but with its Legendre transformation defined by

| (42) |

In this case, the canonical transformation is reconstructed via the equations

| (43) |

| (44) |

and the respective Hamiltonians are related by

| (45) |

Note again that one has huge freedom in choosing . The Hamilton–Jacobi theory corresponds to the choice of that makes the second-observer Hamiltonian equal zero:

| (46) |

So the equations of motion for the second observer are

| (47) |

Thus, for the second observer, the dynamical variable remain constant in time:

| (48) |

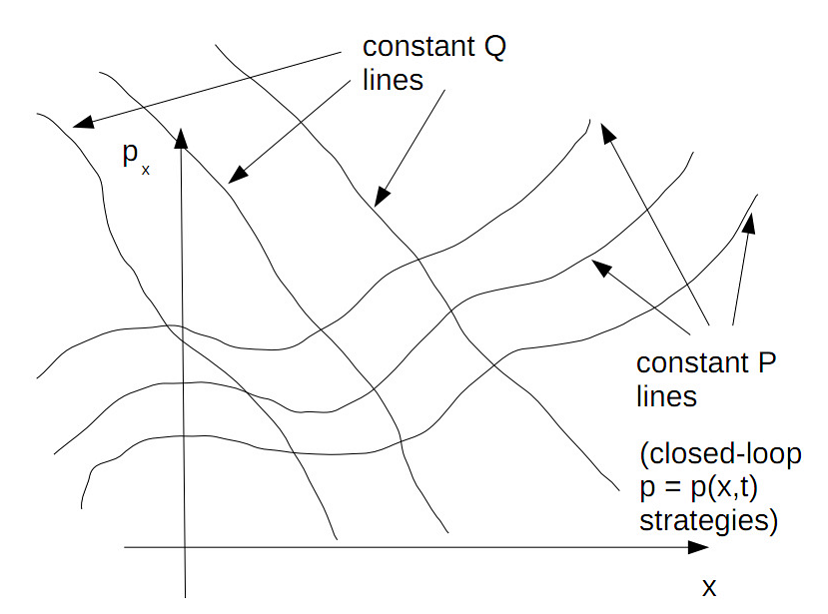

But what does the first observer see? First, due to Equations (48), the coordinate transformations (38) give

| (49) |

but each of these equations defines constant coordinate lines. These are constraints over the phase space of the first observer, from which one can generate two different closed-loop -strategies according to Equation (30)! (See Figure 2).

Thus, the “two observers” perspective of classical mechanics,

through the method of canonical transformation, is responsible for

the generation of the closed-loop -strategies.

From (49) it is not clear if

the closed-loops strategies thus generated satisfy the consistency

condition (34) or if they satisfy the second condition

(35) to obtain a Hamilton–Jacobi equation

as in (37) for . Instead, one can see these constant coordinate lines in terms of Equations

(43) and (44). In fact, these equations

are equivalent to (49), because

the canonical transformation can be reconstructed from (43)

and (44).

A constant -line in (49) is equivalent (from

(43)) to

| (50) |

thus, the constant -line in (49) satisfies

(35). From (45) and (46),

satisfies the Hamilton–Jacobi equation as in

(37) with . This implies that a closed-loop strategy generated by (50) satisfies the consistency relation (34), so (50) defines a true optimal closed-loop -strategy.

For the constant coordinate line in (49) one has

however due to (44) that

| (51) |

but from this equation one cannot obtain in terms of , so this line does not generates a closed-loop strategy at all. This is due to the structure of Equations (43) and (44). In order to reconstruct the canonical transformation

(38) it is required to invert the system (43),

(44). Note that only from (43) can the

momentum be written in terms of the first-observer variables

as . But from (44) alone one cannot

solve in terms of the . The other equation (43)

is needed to do that. Thus, a constant -line alone can not generate a true closed-loop strategy.

In this way, closed-loop -strategies appear in classical mechanics as a consequence of the two observers interpretation of the canonical transformation theory. These closed-loop strategies are inert in the same way that the optimal closed-loop ones are inert in control theory. This is because both closed-loop approaches and gives the same dynamical equation of the open-loop case. For the closed-loop case, the open-loop dynamics analogous to the given by Pontryagin’s equations are just provided by the Hamiltonian equations of motion of the first observer in the phase space.

7 Quantum Mechanics and Closed-Loop Strategies

In this section, the origins of the Hamilton–Jacobi–Bellman equation that appears in the limit in the quantum phenomena,

will be explained as a consequence of the emergence of closed-loop

-strategies in the quantum world.

Consider the Schrödinger equation for a non-relativistic particle

of mass :

| (52) |

Writing the wave function in the form

| (53) |

and by substituting (53) into the Schrödinger equation, the following equation Quantum Hamilton–Jacobi equation for is obtained:

| (54) |

Note that this equation is completely equivalent to Schrödinger’s equation, but here, the classical and quantum realms can be clearly identified. In fact, by taking the limit in (54), one gets

| (55) |

Equation (55) is just the classical Hamilton–Jacobi equation

| (56) |

for the classical Hamiltonian function associated to the non-relativistic particle

| (57) |

where one must identify with the derivative of

| (58) |

to make contact with the classical Hamiltonian theory. And it is precisely this identification which generates the closed-loop -strategy through (58). Note that it is induced from the quantum realm to the classical world, in the limit , through the classical Hamilton–Jacobi equation. The identification in (58) is a pure quantum phenomenon. In fact, considering the momentum operator

| (59) |

this operator is characterized by its eigenfunctions and eigenvalues:

| (60) |

where the solution of this equation gives

| (61) |

In this context, and are independent variables. In fact

the eigenfunction (61) corresponds to states with well

defined values of the momentum.

Note now that if one applies the momentum operator to a generic wave function which is a solution of the Schrödinger equation (written in the “momentum

form” (53)), one obtains

| (62) |

By looking at the wave function as a vector with a continuous index , the above equation implies that (locally at each point ) the momentum operator is diagonal, so that any wave function can be seen as an eigenstate of the momentum operator with momentum eigenvalue . Thus, one must identify the momentum eigenvalue in this quantum

state with the derivative of the function through (58).

It is just this identification which generates the closed-loop -strategies directly

in the quantum world.

On the other hand, the same Heisenberg canonical commutation relations

| (63) |

can be seen as a constraint in the non-commutative phase space . Thus, from (63) one could “solve” the momentum operator in terms of the operator. This necessarily implies the existence of a certain relation between and or between their eigenvalues. The representation of the canonical operator as a differential operator acting on a function space or Hilbert space as

| (64) |

is equivalent to solving the constraint (63), because on

any wave function , Equation (63) is satisfied

identically. The memory of the quantum constraint (63) is then transferred in a local way to the momentum eigenvalue, according to (62).

In a sense, the representation of the wave function as

locally diagonalizes the momentum operator over any quantum

state, and (54) is just the Schrödinger equation in

this diagonal basis. Note that all this is a kinematic effect created by the Heisenberg commutation relation (63); the

dynamical effects appear when the explicit form of is

needed, and for that, one must solve the full Quantum Hamilton–Jacobi equation

(54) explicitly. Note that Quantum Mechanics, as in (63), can be viewed as a constrained system in a non-commutative space, so, one would apply a generalization of Dirac’s method [18] [19] [20] [21] to non-commutative spaces [22] to study quantum mechanical systems.

We can say, then, that closed-loop -strategies correspond to a pure quantum phenomenon and are a consequence of Heisenberg’s uncertainty principle. In an arbitrary quantum state, momentum and position cannot be independent: they are related through the non-commutative character of the position and momentum operators. In a more defined momentum state, a less defined position state would emerge. Thus, these two variables must depend on one another in some way. Relation (58) is tantamount to a conversation between them. Only in a pure-momentum state, as given in (61), does the link disappear and position and momentum become independent variables. In fact, in a pure-momentum state, is constant, that is: all the eigenvalues are the same, the value of the position (so its matrix is a multiple of the identity matrix), so Equation (58) gives

| (65) |

as a solution, where is some function of time. Thus, the wave function is

| (66) |

which is the same momentum eigenstate amplified by a temporal arbitrary

phase. Then the linear character of in terms of implies

that and are independent variables, and no closed-loop

-strategy exists in this case.

The same can be said for a pure-position eigenstate. Thus, closed-loop

-strategies are an inherent part of the quantum mechanical

world and permeate the classical world in the limit

through the Hamilton–Jacobi equation. In the following two sections, we analyze some common textbook examples from closed-loop strategies’ point of view.

8 The Stationary Case

The quantum and classical Hamilton–Jacobi equations (54) and (55) are non-stationary equations, that is, they depend explicitly on time. In Quantum Mechanics, stationary states play a fundamental role. They are defined by

| (67) |

By substituting this into the time-dependent Schrödinger equation (52), the time independent or stationary Schrödinger equation is obtained:

| (68) |

Now by writing

| (69) |

and substituting into (68) the stationary Quantum Hamilton–Jacobi equation holds:

| (70) |

Taking again the classical limit in (70) the stationary Classical Hamilton–Jacobi equation appears:

| (71) |

Due to (67) and (69), we have which implies that

| (72) |

for the stationary case. In this case, the closed-loop -strategies are given by .

9 The Non-Stationary Case

But what about the non-stationary closed-loop -strategies in the classical limit? In order to analyze this case, consider the example of a free particle, that is, . The non-stationary classical Hamilton–Jacobi equation is now

| (73) |

One can find a solution of the form , so by substituting in (73) one gets , so , and the corresponding closed-loop -strategy is

| (74) |

One can evaluate using Equation (51)

| (75) |

where the integration constant must be identified with the constant momentum for the second observer in the coordinate system . Thus

| (76) |

from which is computed as

| (77) |

The associated open-loop strategy is found by similarly to Equation (18). Thus, by substituting (77) into (74):

| (78) |

so

| (79) |

where . These last equations are the solutions

for the motion of a free particle of course!

Now from the Hamiltonian equations, one gets the open-loop dynamics for the free particle:

| (80) |

so the open-loops dynamics is

| (81) |

Then, the open-loop -strategy for the free particle coming from the Hamiltonian equations of motions is identical to the non-stationary closed-loop -strategy coming from the non-stationary classical Hamilton–Jacobi equation. In fact, any solution of the Classical Hamilton–Jacobi equation would be an origin for a (stationary and non-stationary) closed-loop -strategy, and this one has to be equivalent with the open-loop strategy coming from the Hamiltonian equations of motion. This is because the Classical Hamilton–Jacobi equation corresponds to a “two observer” point of view of classical mechanics. The function is just the generator of the canonical transformation which leaves the Hamiltonian equations invariant. Thus, the two schemes,

-

1.

the Hamiltonian “one observer” approach with its open-loop -strategies and

-

2.

the Classical Hamilton–Jacobi “two observer” approach with its closed-loop -strategies

are equivalent, because they have the same equation of motion constructed through the canonical transformation. Closed-loop strategies coming from the Hamilton–Jacobi equation are similar in character to the inert optimal closed-loop strategies of the Pontryagin approach to optimal control theory, because they are equivalent to the open-loop ones.

10 The Pure Quantum Limit and Closed-Loop Strategies

In the previous section the classical limit was taken and its characteristics were explored in terms of the closed-loop strategies. In this section, the inverse limit is going to be taken, that is: , and the consequences of a higher non-commutative system of quantum variables explored:

| (82) |

Consider the non-stationary Quantum Hamilton–Jacobi equation (54). Taking the limit and supposing that the time derivative of the function has a higher value,

| (83) |

or, what is the same,

| (84) |

But this is the free-particle Schrödinger equation for ! In this way one can write

| (85) |

where satisfies the Quantum Hamilton–Jacobi equation

| (86) |

and the wave function is given by

| (87) |

The corresponding closed-loop -strategy is

| (88) |

Note that can be interpreted as a closed-loop -strategy for the Quantum Hamilton–Jacobi equation (86). Thus, denoting the closed-loop -strategy for (83) by , then both strategies are related by

| (89) |

Again, if the time derivative of has a higher value, and as , the Quantum Hamilton–Jacobi for (86) is in this limit again a Schrödinger equation,

| (90) |

Hence, we can write

| (91) |

where satisfies

| (92) |

and the wave function is

| (93) |

Putting the closed-loop strategy associated to the Quantum Hamilton–Jacobi equation for (92), the corresponding closed-loop -strategy is then in this case

| (94) |

Since one can keep iterating this procedure to infinity, quantum mechanical systems can admit multistage closed-loop strategies and they are connected in strongly non-linear way, as in (93).

11 Conclusions

In this article, we developed an optimal control perspective on the dynamical behavior of classical and quantum physical systems. The most crucial element of this view is the presence of feed-backs characterized by open or closed-loop strategies in the system.

Thus, in quantum theory, the closed-loop strategies appear naturally due thinking of Heisenberg’s commutation relations as a constraint in a non-commutative phase space, so this implies that there is a relation between the momentum and the particle position for any quantum state.

By taking the classical limit in the full Quantum Hamilton–Jacobi equation, one arrives at a closed-loop dynamics associated with the Classical Hamilton–Jacobi theory. The non-commutative character of quantum theory is transferred to the classical theory through the closed-loop -strategy. Since satisfies the Classical Hamilton–Jacobi equation, the dynamics generated by (by virtue of the properties of canonical transformations, whose generator is just ) is completely equivalent to those open-loop dynamics dictated by the Hamiltonian equations of motion.

From a purely classical point of view, the presence of these closed-loop strategies can be explained by the “two observers” character of the Hamilton–Jacobi theory. If the solutions of the equations of motion are constant for the first observer, then for the second one, their solutions look like constraints. This necessarily relates the momentum of the particle with its position for the second observer, generating in this way the closed-loop -strategy.

References

- [1] Rosario N. Mantegna and H. Eugene Stanley, An Introduction to Econophysics (Cambridge University Press, 2007).

- [2] Jean-Philippe Boucheaud and Marc Potters, Theory of Financial Risk and Derivative Pricing: From Statistical Physics to Risk Management (Cambridge University Press, 2009).

- [3] B. E. Baaquie, Quantum Finance: Path Integrals and Hamiltonians for Option and Interest Rates (Cambridge University Press, 2007).

- [4] Morton I. Kamien and Nancy L. Schwartz, The Calculus of Variations and Optimal Control in Economics and Management (Dover, 2012).

- [5] Suresh P. Sethi, Optimal Control Theory: Applications to Management Science and Economics (Springer, 2009).

- [6] Michael R. Caputo, Foundations of Dynamic Economic Analysis: Optimal Control Theory and Applications (Cambridge University Press, 2005).

- [7] Martin L. Weitzman , Income, Wealth, and the Maximum Principle (Harvard University Press, 2007).

- [8] Engelbert J. Dockner, Steffen Jorgensen, and Ngo Van Long, Differential Games in Economics and Management Science (Cambridge University Press, 2001).

- [9] M. Contreras, R. Pellicer, and M. Villena, “Dynamic optimization and its relation to classical and quantum constrained systems,” Physica A 479, 12–25 (2017).

- [10] Sergio A. Hojman, “Optimal Control and Dirac’s Theory of Singular Hamiltonian Systems,” unpublished.

- [11] Teturo Itami, “Quantum Mechanical Theory of Nonlinear Control” in IFAC Nonlinear Control Systems (IFAC Publications, St. Petersburg, Russia, 2001), p. 1411.

- [12] M. Contreras and J. P. Peña, “The quantum dark side of the optimal control theory,” Physica A 515, 450–473 (2019).

- [13] L. S. Pontryagin, V. G. Boltyanskii, R. V. Gamkrelidze, and E. F. Mishchenko, The Mathematical Theory of Optimal Processes, (CRC Press, 1987).

- [14] R. Bellman, “The theory of dynamic programming,” Bull. Am. Math. Soc. 60 (6), 503–516 (1954).

- [15] G. M. Erickson, “Differential game models of advertising competitions,” J. of Political Economy 8 (31), 637–654 (1973).

- [16] Alexander L. Fetter and John Dirk Walecka, Theoretical Mechanics of Particles and Continua (Dover, 2003).

- [17] Herbert Goldstein, Classical Mechanics, 3rd ed. (Pearson, 2001).

- [18] P. A. M. Dirac, “Generalized Hamiltonian dynamics,” Proc. Roy. Soc. London, A 246, 326 (1958).

- [19] P. A. M. Dirac, Lectures on Quantum Mechanics (Yeshiva University Press, New York, 1967).

- [20] C. Teitelboim and M. Henneaux, Quantization of Gauge Systems (Princeton University Press, 1994).

- [21] H. J. Rothe and K. D. Rothe, Classical and Quantum Dynamics of Constrained Hamiltonian Systems, World Scientific Lectures Notes in Physics, vol. 81, (World Scientific, 2010).

- [22] M. Contreras G., “Dirac’s method in a non-commutative phase space,” in preparation.