An Upper Confidence Bound Approach to Estimating the Maximum Mean

Abstract

Estimating the maximum mean finds a variety of applications in practice. In this paper, we study estimation of the maximum mean using an upper confidence bound (UCB) approach where the sampling budget is adaptively allocated to one of the systems. We study in depth the existing grand average (GA) estimator, and propose a new largest-size average (LSA) estimator. Specifically, we establish statistical guarantees, including strong consistency, asymptotic mean squared errors, and central limit theorems (CLTs) for both estimators, which are new to the literature. We show that LSA is preferable over GA, as the bias of the former decays at a rate much faster than that of the latter when sample size increases. By using the CLTs, we further construct asymptotically valid confidence intervals for the maximum mean, and propose a single hypothesis test for a multiple comparison problem with application to clinical trials. Statistical efficiency of the resulting point and interval estimates and the proposed single hypothesis test is demonstrated via numerical examples.

1 Introduction

Estimating the maximum mean of a number of stochastic systems finds a variety of applications in broad areas, ranging from financial risk measurement and evaluation of clinical trials to Markov decision processes and reinforcement learning. In financial risk measurement, coherent risk measures such as expected shortfall play an important role in determining risk capital charge for financial institutions, which is of great practical importance to both risk managers and regulators. In general, a coherent risk measure can be written as the maximum of expected losses (or means) under different probability measures. In practice, its estimation in practice often involves intensive simulation, and developing efficient methods for estimating coherent risk measures has been a topic of interest in simulation community. To improve upon a two-stage procedure in Chen and Dudewicz (1976) for constructing confidence intervals (CIs) for the maximum mean, Lesnevski et al. (2007) proposed a procedure for constructing a two-sided, fixed-width CI for a coherent risk measure, by combining stage-wise sampling and screening based on ranking-and-selection techniques. Estimating the maximum mean also finds applications in evaluation of clinical trials. One of the goals of clinical trials is to estimate the best treatment effect among a number of treatments with unknown mean effects. Estimates of the best mean effect provide useful information into the level of effectiveness of the best treatment.

In both applications in financial risk measurement and clinical trials, the accuracy of the maximum mean estimates is of great concern. For instance, FDA (U.S. Food and Drug Administration) is concerned about the bias and reliability of the estimates of treatment effects when using adaptive designs in clinical trials (FDA 2019). To address such concerns, statistical inference about the maximum mean is highly desirable, providing not only point estimates, but also CIs that offer information about the precision of the estimates. To provide theoretical guarantees for the CIs, central limit theorems (CLTs) are typically needed, which is part of the agenda in our study. In this paper, we aim to develop efficient estimators for the maximum mean, and provide theoretical results on the properties of the estimators, such as their biases, variances, and CLTs.

CLTs for maximum mean estimators can also be applied to a fundamental hypothesis testing problem in clinical trials, i.e., comparing treatments (with unknown mean effects denoted by ) to a control treatment with known mean effect , aiming to determine whether any of the treatments outperforms the control; see Dmitrienko and Hsu (2006) and references therein. In the current practice, a commonly used approach is to simultaneously test null hypotheses: , , where Bonferroni correction is often applied to control the type I error. However, it is well known that this approach may be conservative especially when is large, and it comes at the cost of increasing the probability of type II errors. With CLTs for estimators of the maximum mean , we propose an alternative approach by testing only a single hypothesis: , thus avoiding the use of Bonferroni correction and its subsequent drawbacks. The CLTs provide theoretical guarantees for the validity of the proposed single hypothesis test that is numerically shown to significantly outperform the multiple hypotheses approach.

Maximum mean estimation has also been studied in the literature on Markov decision processes (MDPs) and reinforcement learning, where the value function for a given state is usually the maximum of multiple expected values taken for different actions. Estimation of the value function is a fundamental sub-task in many solution methods for MDPs and reinforcement learning; see, e.g., Chang et al. (2005), van Hasselt (2010), and Fu (2017). A double (also referred to as cross-validation) estimator was proposed in van Hasselt (2010, 2013) based on two disjoint sets of samples, where one set of samples are used to select the best system (i.e., the system with the maximum mean) and the estimator is given by the average of the selected system using another set of samples. D’Eramo et al. (2016) proposed an estimator by using a weighted average of the sample means of individual systems, where the weight of a system is set as a Gaussian approximation of the probability that this system is the best. The double estimator leads to double Q-learning algorithms, and empirical studies in van Hasselt (2010) and van Hasselt et al. (2016) suggest that these algorithms may outperform conventional Q-learning and deep reinforcement learning algorithms. However, given that maximum mean estimation is only an intermediate module but not the ultimate goal in MDPs and reinforcement learning, how the estimation errors propagate through the state-action pairs and affect the optimal policies is beyond the scope of this paper and requires dedicated future research.

Estimating the maximum mean is closely related to the problem of selecting the best system, an area of active research in simulation community; see, e.g., Kim and Nelson (2006) for an overview. Arguably, the problem of estimating the maximum mean is more difficult than selecting the best system (Lesnevski et al. 2007), because the former requires a careful balance between two goals, including selecting the best system and allocating as many samples as possible to the best system. These two goals are not necessarily complementary, because correctly selecting the best system requires some samples to be allocated to all systems, which limits the achievement of the second goal.

One of the key issues in estimating the maximum mean is how to sample from individual systems. In this regard, both static sampling (van Hasselt 2010, 2013; D’Eramo et al. 2016) and adaptive sampling (Chang et al. 2005; Kocsis and Szepesvári 2006) have been studied in the literature. The former refers to sampling policies that maintain a pre-specified sampling frequency for each system, while the latter sequentially adjusts sampling frequencies based on historical samples drawn from the systems. Many adaptive sampling methods such as the upper confidence bound (UCB) policy and Thompson sampling share a common feature that higher sampling frequencies are geared towards systems with larger means. When estimating the maximum mean, this feature implies a smaller variance for the resulting estimator because more samples are allocated to the target system, thus offering better computational efficiency. Adaptive sampling/design using policies like UCB offers benefits beyond computational efficiency in the application of clinical trials, where each patient is assigned to one of the treatments to assess treatment effects. In this application, other than assessing treatment effects, another important goal is to treat patients as effectively as possible during the trials. Therefore, for the well-being of patients, it is desirable to adopt adaptive designs like the UCB policy so that more patients are assigned to more effective treatments. Interested readers are referred to Villar et al. (2015) and Robertson and Wason (2019) for recent work on adaptive designs in clinical trials.

In this paper, we study maximum mean estimation based on the UCB sampling policy. UCB is one of the most celebrated adaptive sampling methods used in online decision making with learning, which has been extensively used as a tool to balance the trade-off between exploration and exploitation during the course of learning; see, e.g., Agrawal (1995) and Auer et al. (2002) for early work on multi-armed bandit problems, and Hao et al. (2019) and references therein for recent related work. Via the UCB sampling policy, the expected amount of sampling budget allocated to the non-maximum systems is at most of a logarithmic order of the total budget, which implies that the majority of sampling budget is allocated to the system with the maximum mean. Within the UCB framework, one of the estimators being considered is the average of all the samples, no matter drawn from the system with the maximum mean or not. We refer to it as the grand average (GA) estimator. The rationale of the GA estimator stems from the fact that it is dominated by the sample average of the system with the maximum mean, from which the majority of the samples are drawn. The GA estimator is not new in the literature, and has served as a key ingredient for algorithms in MDPs; see, e.g., Chang et al. (2005) and Kocsis and Szepesvári (2006). However, to the best of our knowledge, very little is known about the statistical properties of the GA estimator, except its asymptotic unbiasedness. In this paper, we fill this gap by providing statistical guarantees for the GA estimator, including its strong consistency, mean squared error (MSE), and CLT. On the downside, a well-known drawback of the GA estimator is its bias that results from taking grand average of all systems, including those with smaller means. While this bias gradually dies out as sample size increases, its impact in finite-sample settings cannot be neglected.

As an attempt to remedy the above drawback, we propose a new estimator by taking the sample average of the system that has been allocated the largest amount of samples, referred to as the largest-size average (LSA) estimator. This estimator is intuitively appealing, as the number of allocated samples to the system with the maximum mean dominates those allocated to other systems, ensuring a high probability that the system identified by LSA is exactly the one with the maximum mean. LSA avoids taking average of systems with smaller means, and it is thus expected to have a smaller bias compared to the GA estimator.

To summarize, we make the following contributions in this paper.

-

•

We provide statistical guarantees for the GA estimator, including its strong consistency, MSE, and CLT, which are new to the literature to the best of our knowledge.

-

•

We develop a new LSA estimator for estimating the maximum mean with statistical guarantees, and show that it is preferable over the GA estimator, due to its bias that converges at least as fast as , a rate much faster than that () of the GA estimator, where and denote the total sample size and exploration rate, respectively. While polynomial exploration rates are allowed in our setup, both theoretical results and empirical evidence suggest that logarithmic exploration rate is asymptotically optimal when minimizing the MSEs of both the GA and LSA estimators.

-

•

Using the established CLTs, we construct asymptotically valid GA and LSA CIs for the maximum mean, and propose a single hypothesis test for a multiple comparison problem with application to clinical trials. Numerical experiments confirm the validity of the CIs, and show that the proposed single test exhibits lower type I error and higher statistical power, compared to a benchmarking test with multiple hypotheses.

The rest of the paper is organized as follows. In Section 2, we formulate the problem of estimating the maximum mean. The GA and LSA estimators, and a single hypothesis test for the multiple comparison problem are presented in Section 3. Theoretical analysis of the estimators is provided in Section 4. A numerical study is presented in Section 5 to demonstrate the efficiency of the estimators and the proposed single hypothesis test, followed by conclusions in Section 6.

2 Problem and Backgrounds

Consider stochastic systems, with their performances denoted by random variables . Let . For each , we assume that follows an unknown probability distribution, while independent samples can be drawn. This assumption is usually satisfied in many practical applications. For instance, may represent the stochastic performance of a complex simulation model and follows an unknown probability distribution, while samples of can be generated via simulation. We make the following assumption on ’s.

Assumption 1.

For any , follows a sub-Gaussian distribution, i.e., there exists a sub-Gaussian parameter such that , for any .

Assumption 1 is widely used in the literature on machine learning. Many commonly used distributions, such as those with bounded supports and normal distributions, are sub-Gaussian. Assumption 1 ensures the existence of a Hoeffding bound that serves as the basis for the theoretical analysis in this paper. This bound is presented in Lemma A1 in the appendix.

In this paper, we are interested in estimating the maximum mean of the systems, defined as

Let denote the index of the system with the largest mean, i.e., . Without loss of generality, is assumed to be unique.

Assumption 2.

The system with the largest mean is unique, i.e., for any .

Assumption 2 implies that the mean gap between systems and , given by , is positive for any .

Given samples of , denoted by , , a straightforward estimator of , referred to as the maximum estimator (see, e.g., D’Eramo et al. 2016), is given by

where is the sample average of . For notational convenience, where no confusion arises, we write simply in places for .

It has been well known that the maximum estimator has a positive bias and may overestimate ; see, e.g., Smith and Winkler (2006) and van Hasselt (2010). The positive bias may lead to adverse effects in various applications. For instance, in the estimation of coherent risk measures, it may lead to overestimation of risk, resulting in unduly high capital charges for risky activities (Lesnevski et al. 2007). In reinforcement learning, as errors propagate over all the state-action pairs, the positive bias may negatively affect the speed of learning (van Hasselt 2010). More importantly, when using the maximum estimator, it is not clear how to allocate sampling budget to the systems, i.e., setting the values of ’s, so as to ensure reasonable accuracy. Therefore, it is of both theoretical and practical interests to develop alternative estimators of .

3 An Upper Confidence Bound Approach

Sampling from the systems is essential for constructing any estimator of . In developing an efficient estimator, two issues are of major concern. The first issue is on how to efficiently allocate the sampling budget, while the second issue is on methods of constructing an estimator such that statistical guarantees can be established.

To address the first issue, adaptive sampling policies have been studied in the literature. A popular adaptive sampling policy is the UCB policy that was originally proposed for the multi-armed bandit (MAB) problem; see Auer et al. (2002). In traditional MAB problem, in each round, one of the systems is chosen and a random sample (reward) is drawn from the chosen system, and the decision maker aims to maximize the total rewards collected. At the heart of the MAB problem is to find an adaptive sampling policy that decides which system to sample from in each round so as to balance the tradeoff between exploration and exploitation. Among various sampling policies for MAB, it has been well known that the UCB policy achieves the optimal rate of regret, defined as the expected loss due to the fact that a policy does not always choose the system with the highest expected reward; see Lai and Robbin (1985) and Auer et al. (2002). Specifically, let denote the number of samples drawn from system during the first rounds, for . The UCB sampling policy is described in Algorithm 1.

Algorithm 1: UCB Sampling Policy

-

1.

Initialization: During the first rounds, draw a sample from each system.

-

2.

Repeat: For , draw a sample from the system indexed by

(1) where denotes the sample mean of system during the first rounds.

Update the sample average of system .

The UCB policy offers important insights into the adaptive allocation of sampling budget. Essentially, it balances the tradeoff between exploration and exploitation using the UCB defined on the right-hand-side (RHS) of (1). On the one hand, systems with higher on-going sample averages have a higher chance to be chosen in the current round, contributing to higher total rewards. On the other hand, systems that are chosen less frequently during the previous rounds, i.e., being smaller, may also have a sufficient chance to be chosen so that such systems will be sufficiently explored. The function in (1) can be interpreted as the exploration rate that controls the speed of exploring systems that may not have the largest mean.

In the MAB context, the exploration rate is set to be simply because it leads to the optimal rate of regret. However, it should be pointed out that when the objective is not to minimize the regret, as the case in our problem setting, it is not yet clear whether logarithmic exploration rate is optimal. For the sake of generality, we henceforth allow the exploration rate, denoted by , to take different forms as a function of , and refer to the resulting UCB policy as a generalized UCB (GUCB) policy, which is described in Algorithm 2.

Algorithm 2 (GUCB): Generalized UCB Sampling Policy

-

1.

Initialization: During the first rounds, draw a sample from each system.

-

2.

Repeat: For , draw a sample from the system indexed by

where denotes the sample mean of system during the first rounds.

Update the sample average of system .

3.1 Grand Average (GA) Estimator

Before developing estimators for , we highlight some key properties of the GUCB policy. To convey the main idea, for a while we focus on a special case where the exploration rate . In this case, it has been well known that (see Auer et al. 2002), for system (),

for some constant that depends on . Then, it can be easily seen that , the expected number of times system is chosen, is of order during the first rounds, because the summation of ’s equals .

In other words, it is expected that among the first rounds, system is chosen in the majority of rounds. Recall that our objective is to estimate , the mean of system . It is, therefore, reasonable to use the grand average of the samples of all the rounds as an estimator of , i.e., the GA estimator. Although it uses the samples that are not drawn from system , the validity of the GA estimator can be justified by the fact that the estimation error due to such samples may phase out when is sufficiently large, as the number of such samples is negligible compared to those drawn from system .

Specifically, the GA estimator is given by

| (2) |

where denotes the samples drawn from system during the first rounds using the GUCB policy in Algorithm 2.

The GA estimator is not new in the literature, which has served as a key ingredient for algorithms for MDPs and reinforcement learning under the special case that . However, research on the statistical properties of the estimator has been underdeveloped. To the best of our knowledge, only the asymptotic unbiasedness of the estimator has been established; see, e.g., Kocsis and Szepesvári (2006), while other statistical properties seem to be missing. One of the contributions of this paper is to fill this gap. In particular, we establish strong consistency, asymptotic MSE, and CLT for the GA estimator, which shall be discussed in detail in Section 4.

By taking grand average of the samples of all the systems including those with means smaller than , the GA estimator is naturally negatively biased. While this bias gradually dies out as the sample size increases, its impact cannot be neglected with finite samples, which has been considered as a main drawback of the GA estimator.

3.2 Largest-Size Average (LSA) Estimator

As an attempt to remedy the bias drawback of the GA estimator, we propose a new estimator based on a key observation that the number of samples drawn from system usually dominates those from other systems. We therefore define, at the th round,

| (3) |

In other words, is the index of the system from which the GUCB policy has sampled most frequently so far. It is reasonable to expect that it is very likely to observe . Recall that is the mean of the performance of system . It is, therefore, reasonable to propose a new estimator of by taking sample average of the system indexed by , i.e., the LSA estimator. Specifically, the LSA estimator is given by

| (4) |

where the samples are drawn using the GUCB policy in Algorithm 2.

The LSA estimator and the maximum estimator share a similar structure, both of them being sample averages of certain identified systems. However, the way of identifying the target system differs significantly. The maximum estimator chooses the system with the largest sample average and uses directly this sample average to estimate . Instead, the LSA estimator chooses the system from which the GUCB policy has sampled most frequently so far, and uses the sample average of this system to estimate . The latter seems to be more intuitively appealing in that it is virtually an unbiased sample-mean estimator conditioning on the event that system is correctly identified. Arguably, correctly identifying may be relatively easy as the number of rounds GUCB samples from system is usually overwhelmingly larger than those from other systems.

Compared to the GA estimator, the LSA estimator avoids taking averages of those systems with smaller means with a high probability. It is thus expected that the LSA estimator may have smaller bias, which shall be shown theoretically in Section 4.

3.3 Hypothesis Testing using Maximum Mean Estimators

In what follows, we demonstrate how the maximum mean estimators may lead to a new approach to a multiple comparison problem that finds important application in clinical trials.

In a clinical trial, suppose that one is interested in comparing treatments with unknown mean effects to a control treatment with known mean effect , where and a higher mean effect implies a better treatment. In the current practice, a commonly used approach to this comparison problem is to test simultaneously null hypotheses ’s against alternatives ’s, where

| (5) |

With the above multiple hypotheses framework, a meta null hypothesis is set as ’s being true simultaneously for all , which is rejected if any of ’s is rejected, implying that at least one of the treatments outperforms the control with certain confidence. Suppose that the overall type I error of this test with multiple hypotheses is set to be . In this case, type I errors associated with individual hypotheses cannot be set as , in order to control the overall type I error. Instead, Bonferroni correction is often applied, which assigns a type I error of to the individual tests. By doing so, it can be verified that the family-wise error rate (FWER), defined as the probability of rejecting at least one true is not larger than , provided that these individual tests are independent. To see this, suppose that there are true null hypotheses that are assumed to be without loss of generality, where takes values in and is unknown to the researcher. Then,

While it is easy to implement, Bonferroni correction has been criticized, due to its conservativeness, i.e., a large gap between FWER and especially when is large, and the drawback that it usually increases the probability of type II errors. It is thus desirable to develop other procedures for the comparison of treatments that avoid Bonferroni correction.

We note that with the estimators of the maximum mean, a more appealing approach can be developed for this multiple comparison problem. More specifically, in contrast to the multiple hypotheses formulation, we propose a single hypothesis test, with

| (6) |

Relabelling as , we note that the GA or LSA estimator, or , can be used to estimate . Essentially, by comparing (or ) to , one may be able to decide whether to reject or not. Nevertheless, one needs to know the asymptotic distribution of (or ), in order to formalize the test. We shall show in Section 4, more specifically, Theorems 5 and 8, that both and follow asymptotic normal distributions, thus providing theoretical support for the proposed single hypothesis test. Exact forms of the testing statistics resulting from the GA and LSA estimators are delayed to (11) and (14) in the subsequent section.

4 Theoretical Analysis

Throughout the paper, we denote , where ’s are the sub-Gaussian parameter defined in Assumption 1. To facilitate analysis, we first show a result on .

Proposition 1.

Proposition 1 provides an upper bound for the th moment of , the number of samples drawn from system during the first rounds, for . This upper bound relies on the exploration rate , under the assumption that the exploration rate is sufficiently fast, i.e., at least as fast as the logarithmic order. In a special case when and , the result is the same as that in Theorem 1 of Auer et al. (2002).

Essentially, Proposition 1 gives an upper bound on the rate at which the th moment of converges to as for . It implies that the sampling ratios may satisfy certain convergence properties. In particular, strong consistency of the sampling ratios are summarized in the following theorem, whose proof is provided in Section A.3 of the appendix.

Theorem 1.

Theorem 1 implies that in the long run, the number of samples drawn from system dominates the total numbers of samples drawn from other systems, in the almost sure sense. This result suggests that, it is natural to use , defined in (3), as an estimator of the unknown . Strong consistency of can also be established, which is summarized in the following theorem. Proof of the theorem is provided in Section A.4 of the appendix.

Theorem 2.

Under the same conditions of Theorem 1, as ,

4.1 GA Estimator

In what follows, we establish strong consistency, asymptotic MSE and asymptotic normality for the GA estimator . Let denote the sample drawn at the th round. Note that

Define

Then,

| (7) |

Let be the -field generated by the first samples for , and . Note that is -measurable. It follows that for ,

Therefore, and

i.e., is a martingale with mean 0.

Note that is sub-Gaussian with parameter for . Because is -measurable,

implies that is sub-Gaussian with parameter .

Further note that is a martingale difference sequence, the condition of Theorem 2 in Shamir (2011) is thus satisfied. Applying this theorem, for any , it holds that with probability at least ,

Let . Then, and

Applying the same argument to the sequence , symmetrically we have

Therefore,

and moreover,

By Borel-Cantelli lemma, as . i.e., the first term on the RHS of (7) converges to 0 almost surely.

Moreover, the second term on the RHS of (7) satisfies

where the convergence follows from Theorem 1 and the continuous mapping theorem. Therefore, by (7), we establish strong consistency of that is summarized as follows.

Theorem 3.

Under the same conditions of Theorem 1, is a strongly consistent estimator of , i.e., as ,

Theorem 3 ensures that the GA estimator, , converges to with probability 1, as , which serves as an important theoretical guarantee for the estimator. To further understand its probabilistic behavior, it is desirable to conduct error analysis, especially on its bias and variance. In the following theorem, we establish upper bounds for its bias, variance and MSE. The proof of the theorem is provided in Section A.5 of the appendix.

Theorem 4.

Theorem 4 shows that the MSE of the GA estimator converges to at a rate of . In particular, the variance is the dominant term in the MSE, compared to the square of the bias, which is of order . This result implies that when the exploration rate takes value in the range with , the bias of the GA estimator is negligible compared to its variance, making it possible to construct CIs by ignoring its bias. Furthermore, as suggested by Theorem 4, the leading term of the variance does not depend on . It is thus asymptotically optimal to choose logarithmic , the slowest possible rate, so as to reduce the asymptotic bias as far as possible. The superiority of this choice of is also confirmed by the numerical experiments in Section 5.

To provide a formal theoretical support for asymptotically valid CIs and the single hypothesis test in (6), we establish a CLT for in the following theorem, whose proof is provided in Section A.6 of the appendix.

Theorem 5.

Theorem 5 shows that the GA estimator is asymptotically normally distributed with mean and variance . Based on Theorem 5, an asymptotically valid CI can be constructed for . To do so, a remaining issue is on how to estimate the unknown . In light of the proof of Theorem 5, we estimate using

| (8) |

where for ,

| (9) |

It can be shown that converges to in probability, as . This result is summarized in the following proposition, whose proof is provided in Section A.7 of the appendix.

Proposition 2.

Under the same conditions of Theorem 5, as ,

From Theorem 5 and Proposition 2, it follows that

Then, an asymptotically valid 100 CI of is given by

| (10) |

where is the quantile of the standard normal distribution.

The CLT in Theorem 5 and the variance estimator in Proposition 2 also serve as the theoretical foundation for the proposed single hypothesis test in Section 3.3. More specifically, the following testing statistics can be constructed:

| (11) |

Note that under either the null or alternative hypothesis, . Therefore, a one-side test can be conducted using the GA-based statistics . In particular, the null hypothesis is rejected when , suggesting that at least one of the considered treatments outperform the control treatment, where denotes the overall type I error.

4.2 LSA Estimator

Strong consistency of the LSA estimator is stated in the following theorem, whose proof is provided in Section A.8 of the appendix.

Theorem 6 shows that the LSA estimator converges to as . To better understand its asymptotic error, we establish upper bounds for its asymptotic bias, variance and MSE, which are summarized in the following theorem with proof provided in Section A.9 of the appendix.

Theorem 7.

Under the same conditions of Theorem 6, for sufficiently large , and there exist constants and , such that

and thus

Theorem 7 shows that the bias of LSA estimator decays at a rate at least as fast as , which is much faster than that of GA estimator (see Theorem 4). Interestingly, the rate of convergence of its bias can be faster than if . Furthermore, as suggested by Theorem 7, the leading term of the variance does not depend on . It is thus asymptotically optimal to choose logarithmic , the slowest possible rate, so as to reduce the asymptotic bias as far as possible. The superiority of this choice of is also confirmed by the numerical experiments in Section 5.

It should be remarked that in Theorems 7 and 4, the dominant coefficients of the variance bounds for the LSA and GA estimators, i.e., and , are not tight and can be further reduced with more elaborate analysis. These coefficients do not imply that the GA estimator has a smaller variance, and in fact, numerical results in Section 5 suggest the opposite.

Similar to the case for GA, we establish a CLT for the LSA estimator, which is stated in the following theorem. Proof of the theorem is provided in Section A.10 of the appendix.

Theorem 8.

Under the same conditions of Theorem 6,

Theorem 8 shows the asymptotic normality of the LSA estimator, whose rate of convergence is . From its proof, it can also be seen that if replacing by , the CLT still holds.

While both CLTs for the GA and LSA estimators (in Theorems 5 and 8, respectively) have the same rate of convergence, it should be emphasized that they require different conditions on the exploration rate. Specifically, CLT holds for the LSA estimator so long as the exploration rate is within the range of , while CLT of the GA estimator can only be guaranteed under a more restricted condition that .

In what follows, we construct an asymptotically valid CI for based on Theorem 8. To do this, we propose to estimate the unknown variance by

Strong consistency of the estimator is established in the following proposition, whose proof is provided in Section A.11 of the appendix.

Proposition 3.

Under the same conditions of Theorem 6,

| (12) |

Similar to (10), an asymptotically valid 100 CI of is given by

| (13) |

5 Numerical Study

We consider three examples. The first example aims to illustrate the asymptotic behaviors of the GA and LSA estimators, including their errors and rates of convergence. While it has been argued in Sections 4.1 and 4.2 that logarithmic exploration rate is asymptotically optimal when minimizing MSEs of the GA and LSA estimators, we numerically confirm it using the first set of experiments. For the rest of the experiments in all examples, we choose logarithmic exploration rate given its superiority on both theoretical and empirical grounds.

In the second example, we consider an application of the GA and LSA estimator to the estimation of coherent risk measures, and compare our constructed GA and LSA CIs to the fixed-width CIs produced by the procedure of Lesnevski et al. (2007).

In the third example, we consider a clinical trial using the GA- and LSA-based single hypothesis tests proposed in (6), aiming to demonstrate their relative merits compared to an existing test with multiple hypotheses in the form of (5).

5.1 Implementation Issues

In the numerical experiments, we observed that the bias of the GA estimator with finite samples could be quite large, especially when the variances of the systems are large, or the exploration rate is large. This phenomenon is intuitively explained by that there may be too many samples drawn from systems other than , leading to a significant underestimation of the maximum mean. However, this adverse effect dies out gradually in the long run. As a means to alleviate this adverse effect in finite-sample setting, we propose to choose a certain amount of samples as a warm-up period and discard the warm-up samples in the estimation. Numerical experiments suggest that a small amount of warm-up samples, e.g. of the total sampling budget, may greatly improve the estimation quality of the GA estimator. Throughout our experiments, we set 10% of the budget as the warm-up period for the GA estimator.

By contrast, the LSA estimator is insensitive to the warm-up samples. Whether to keep or to discard these samples does not have much impact on the quality of the LSA estimator. During the implementation, we discard the warm-up period samples for the LSA estimator as well, to be parallel to the setting for the GA estimator.

From an implementation perspective, it has also been documented that slightly tuning the UCB policy by taking into consideration of the variances of the systems may substantially improve the performances of algorithms for multi-armed bandit problems; see, e.g., Auer et al. (2002). We observe the same phenomenon when estimating the maximum mean. Following the same idea of Auer et al. (2002), we propose to replace the UCB, i.e., , by

for during the implementation, where

is an estimate of the UCB of the variance of system .

5.2 Maximum of Normal Means

Consider systems, with samples from normal distributions with unknown means . Parameter configuration is similar to that of Fan et al. (2016), who focused on selecting the system with the largest mean rather than estimating the maximum mean. More specifically, the means of systems are set to be , , and the standard deviations are set to be for all systems.

We examine the performances of different estimators when estimating , by considering their biases, standard deviations, MSEs, and RRMSEs, where RRMSE is defined as the percentage of the root MSE to the true maximum mean. Results reported are based on independent replications unless otherwise stated.

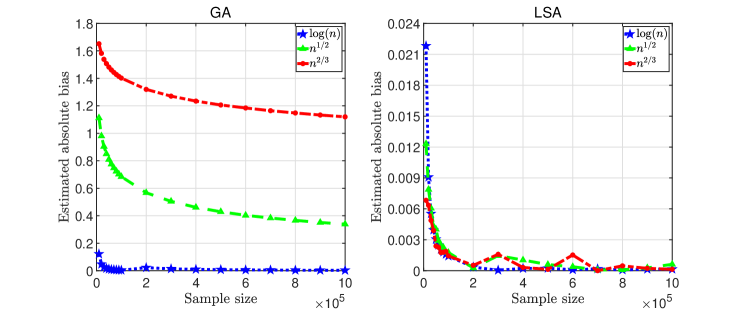

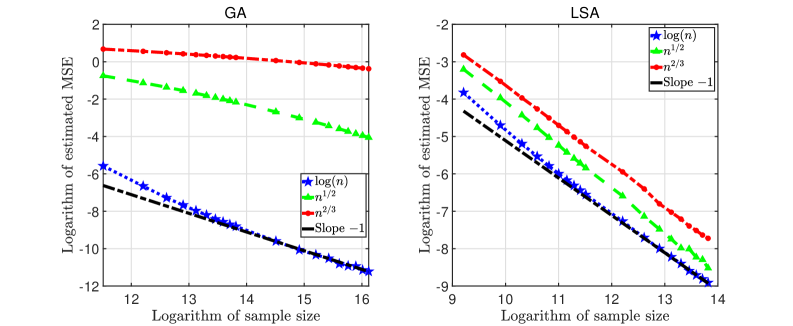

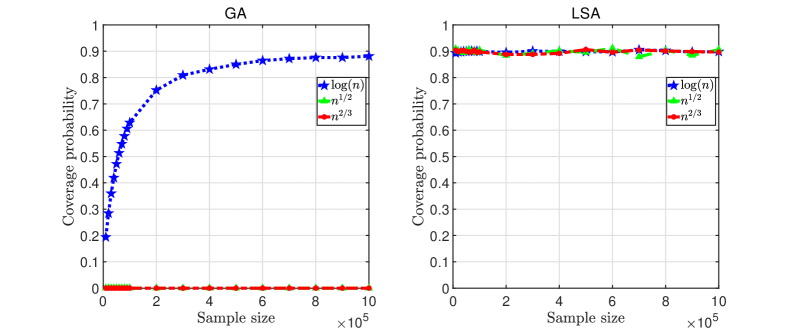

In the first set of experiments, let with the total sampling budget ranging between and . We compare biases, MSEs, and coverage probabilities for GA and LSA estimators with respect to (w.r.t.) , , and . The results are summarized in Figures 1, 2, and 3, illustrating the rates of convergence of absolute biases and MSEs, and coverage probabilities of the 90% CIs, for the GA (left panel) and LSA (right panel) estimators.

-

•

Figure 1 shows that for different choices of , the bias of the LSA estimator is substantially smaller than that of the GA estimator, consistent with Theorems 4 and 7 that the former converges to at a rate of at least , much faster than the latter (with a rate of ). Furthermore, it is observed that logarithmic leads to the smallest biases among the three choices, for both the GA and LSA estimators.

-

•

Figure 2 shows that the MSE of the LSA estimator converges approximately to at a rate of for all choice of . For the GA estimator, the same rate is observed for logarithmic , while the rate tends to be slower when and . This coincides with the requirement in Theorems 4 that must be slower than for the GA estimator.

-

•

Figure 3 shows the LSA CIs outperform the GA ones substantially in terms of coverage probabilities, especially when sample sizes are relatively small, due to the significantly smaller bias of the LSA estimator. When , both the GA and LSA CIs achieve the nominal coverage probability with sufficiently large sample sizes. However, the GA CIs fail to do so for and that violate the requirement in Theorem 5.

Given the superiority of logarithmic exploration rate, we set in the rest of the experiments in Section 5.

In the second set of experiments, we fix , and compare the GA and LSA estimators to four competing estimators given as follows. Consider two maximum average estimators that take the maximum of the sample means of the systems. In particular, the first is a maximum average estimator based on the GUCB adaptive sampling policy, referred to as adaptive maximum average (AMA) estimator, while the other is the maximum average estimator based on a simple static sampling policy that randomly allocates each sample to one of the systems with equal probabilities, referred to as simple maximum average (SMA) estimator. Moreover, we consider two heuristic estimators in the form of the sample average of certain selected system resulting from existing best arm identification methods. In particular, we consider two Bayesian best arm identification methods, including the top-two probability sampling (TTPS) method of Russo (2020), and the top-two expected improvement (TTEI) of Qin et al. (2017). For these two estimators, we assume known variances of the systems as required by the TTPS and TTEI methods, and set the tuning parameter to be the default value of , following Russo (2020) and Qin et al. (2017).

It should be pointed out that, in spite of being reasonable estimators, asymptotic properties of the AMA, TTPS and TTEI estimators, such as their biases, MSEs and central limit theorems, are not known in the literature. The comparison of these estimators in our experiments serves merely to numerically examine their merits, while development of theoretical underpinnings is a topic that deserves future investigation.

Relative biases and standard deviations as percentages of the true maximum mean, and RRMSEs are summarized in Table 1444For cases of and , reported errors are estimated based on independent replications.. From Table 1, it can be seen that all the considered estimators converge as sample size increases. Among them, LSA and AMA have almost the same biases and variances, and perform the best with the smallest MSEs. Their biases are also smaller or comparable to other estimators. GA and SMA suffer from a disadvantage of significantly larger biases compared to other estimators. TTPS and TTEI have biases comparable to those of LSA and AMA, but with larger variances. It is also interesting to observe that while SMA is positively biased, AMA is negatively biased, suggesting that the dependence structure among the samples drawn from the UCB policy may lead to a negative bias.

| LSA | GA | ||||||

|---|---|---|---|---|---|---|---|

| Relative bias | 0.12 | 2.7e-03 | 1.3e-03 | 2.38 | 0.40 | 0.03 | |

| Relative stdev | 1.28 | 0.33 | 0.10 | 1.31 | 0.36 | 0.10 | |

| RRMSE | 1.28 | 0.33 | 0.10 | 3.03 | 0.54 | 0.11 | |

| AMA | SMA | ||||||

| Relative bias | 2.7e-03 | 1.3e-03 | 0.98 | 0.01 | 3.0e-05 | ||

| Relative stdev | 1.27 | 0.33 | 0.10 | 3.76 | 1.40 | 0.45 | |

| RRMSE | 1.27 | 0.33 | 0.10 | 3.89 | 1.40 | 0.45 | |

| TTPS | TTEI | ||||||

| Relative bias | 9.0e-03 | 7.3e-03 | 6.1e-03 | 2.7e-03 | 7.5e-04 | ||

| Relative stdev | 1.51 | 0.49 | 0.14 | 1.77 | 0.45 | 0.14 | |

| RRMSE | 1.51 | 0.50 | 0.14 | 1.77 | 0.45 | 0.14 | |

5.3 Simulated CIs for Coherent Risk Measures

Consider a risk measurement application in which we are interested in estimating a coherent risk measure that is the maximum of the expected discounted portfolio loss taken over probability distributions. Lesnevski et al. (2007) proposed a simulation-based procedure to construct a fixed-width CI for the risk measure, and used an example with to demonstrate the performance of the constructed CIs. For the sake of completeness, we briefly describe this example as follows.

A coherent risk measure can be written as

where is the value of a portfolio at a future time horizon, is a stochastic discount factor which represents the time value of money, and is a set of probability measures simplified by . Denote and . Let be a random variable whose distribution under a probability measure is the same as that of under , i.e., . Then the coherent risk measure is exactly the maximum mean:

| (15) |

Consider estimating the coherent risk measure of a portfolio consisting of European-style call and put options written on three assets with price at time for . Let denote a market index. All options in the portfolio have a common terminal time . For each of , follows geometric Brownian motion with drift and volatility , and therefore , where is a standard normal random variable. The assets are dependent in the sense that , and for , where under probability measure , , , , and are independent standard normal random variables. In this model, corresponds to the market factor common to all assets, while , , and are idiosyncratic factors corresponding to each individual asset.

Denote by the standard normal distribution function. Each for is sampled restricted on four cases with equal probabilities: sampled conditional on exceeding , sampled conditional on being below , sampled conditional on being between these two numbers, and sampled with no restriction. Therefore, in (15), and each denotes the value of the portfolio corresponding to a combination of these four cases. The parameters are set as follows: is one week, , , , , , , and and for . The amounts of options in the portfolio could be referred to Table 1 in Lesnevski et al. (2007).

We estimate the coherent risk measure using the GA and LSA estimators. In the experiments, we examine the performance of the estimators, and compare the CIs to those of Lesnevski et al. (2007), which are constructed via the so-called “two-stage algorithm with screening” in the literature. When implementing this algorithm, we use exactly the same algorithm parameters as in Lesnevski et al. (2007).

The simulation-based procedure of Lesnevski et al. (2007) sets a certain amount of initial samples to enable the use of ranking-and-selection techniques. In our implementation, we set the same amount of warm-up samples. Moreover, a fixed width of the CI has to be set at the beginning of their procedure, the total sample size required to stop the process may thus vary across different replications. For the sake of fairness in the comparison, we first run the procedure of Lesnevski et al. (2007), and the same sample size is then used to construct the GA and LSA CIs. We then compare the width of our CIs to that of Lesnevski et al. (2007).

Relative errors of the GA and LSA estimators and coverage probabilities of their CIs w.r.t. different sample sizes are presented in Table 2. From the table, it can be seen that both the GA and LSA estimators exhibit high accuracy. In particular, when the sample size is larger than , RRMSEs of both estimators are less than , which are contributed mainly by the variances while their biases are negligible. However, when the sample size is relatively small, e.g., , the bias of the LSA estimator is substantially lower than that of the GA estimator, which also explains the low coverage probabilities of the GA CIs in this case. This result suggests that the LSA estimator is preferable over the GA estimator, especially for small or medium sample sizes.

| LSA | GA | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Bias | |||||||||

| Stdev | 1.21 | 0.29 | 0.09 | 0.03 | 1.54 | 0.31 | 0.09 | 0.03 | |

| RRMSE | 1.21 | 0.29 | 0.09 | 0.03 | 10.75 | 0.57 | 0.10 | 0.03 | |

| Cover. prob. | 93.1 | 95.7 | 95.3 | 95.8 | 0 | 60.6 | 93.0 | 95.6 | |

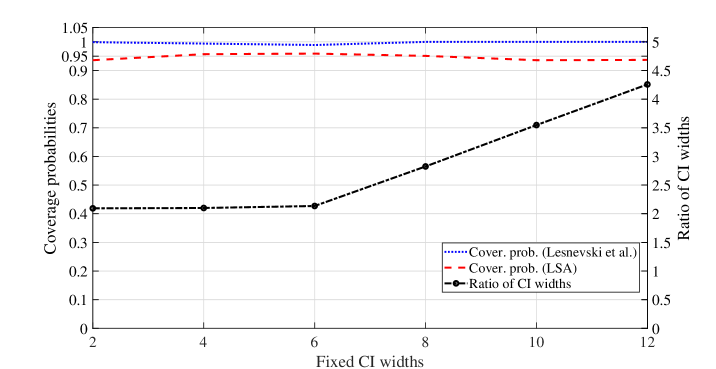

In the comparison of CIs, we observed that the widths of the GA and the LSA CIs are quite close as they have similar variances. Hence, we only report the result of the LSA CIs when comparing their widths to the method of Lesnevski et al. (2007). The comparison results are summarized in Figure 4, w.r.t. varying settings of the fixed width by Lesnevski et al. (2007). From the figure, it can be seen that the LSA CIs achieve the nominal coverage probability (), while the CIs constructed by Lesnevski et al. (2007) tend to be conservative, yielding coverage probabilities close to . The ratio between the width of the CI of Lesnevski et al. (2007) and that of the LSA CIs is also reported. It can be observed that the widths of the CIs of Lesnevski et al. (2007) are more than 2 times larger than LSA CIs, especially when the fixed width is set to be large. The ratio can be as large as 4 times when the fixed width is large, implying that the LSA method produces much narrower CIs and thus has better quality.

5.4 Hypothesis Testing in Clinical Trials

In a clinical trial, suppose that one is interested in comparing treatments with unknown mean effects to a control treatment with known mean effect . To examine the performances of different hypothesis tests, we consider respectively the GA-based and LSA-based testing statistics in (11) and (14), based on the proposed single hypothesis test in (6). We further consider a benchmarking test with multiple hypotheses in the form of (5). The benchmarking test uses a fixed randomization design that randomly assigns a patient to the treatments with equal probabilities, referred to as FR test.

Our numerical setting is the same as Mozgunov and Jaki (2020), where the outcomes of treatments for patients follow Bernoulli distributions with success probabilities , , , and overall type I error equal to . In addition to determining whether some treatments outperform the control, another important goal in the clinical trial is to treat the patients as effectively as possible during the trial (Villar et al. 2015). To quantify to what extent this goal is achieved, two measures are commonly used, including the expected proportion of patients assigned to the best treatment (POB) and the expected number of patient success (ENS); see, e.g., Mozgunov and Jaki (2020).

To measure the performance of each test, we estimate its POB and ENS. Furthermore, for a case with true means where is true, we estimate its overall type I error, while we estimate its power for another case with true means where is false. Comparison results are summarized in Table 3, based on independent replications.

It can be seen from Table 3 that the GA-based and LSA-based tests have an advantage over the FR test in that they consistently assign more patients to the best treatment and have higher ENS, and are thus preferable when taking into account the well-being of the patients. From a statistical perspective, when is true (i.e., Case 1), the estimated type I error of the LSA-based test is close to the nominal one (), while the rest tend to be conservative. Conservativeness of the GA-based test comes from its negative bias that is not negligible with a sample size of , and that of the FR test is due to drawbacks of Bonferroni correction. In Case 2 when is false, both the GA-based and LSA-based tests have much higher estimated power compared to the FR test. In summary, the LSA-based test outperforms the rest of the tests significantly in all the considered dimensions including POB, ENS, type I error, and statistical power.

| Case 1: | Case 2: | ||||||

| Test | Est. Type I | POB (s.e.) | ENS (s.e.) | Est. power | POB (s.e.) | ENS (s.e.) | |

| FR | 0.014 | 0.25(0.0001) | 121.6(0.03) | 0.814 | 0.25(0.0001) | 148.1(0.03) | |

| GA | 0.011 | 0.34(0.0006) | 123.1(0.03) | 0.966 | 0.78(0.0006) | 192.7(0.02) | |

| LSA | 0.044 | 0.34(0.0006) | 123.1(0.03) | 0.970 | 0.78(0.0006) | 192.7(0.02) | |

5.5 Robust Analysis of Stochastic Simulation: A Call Center Case

An application of the proposed maximum mean estimator is on robust analysis of stochastic simulation. Robust analysis aims to quantify the impact of input uncertainty (i.e., misspecification of the input models) inherent in stochastic simulation. For this purpose, Ghosh and Lam (2019) and Blanchet and Murthy (2019) considered worst-case performance bounds, i.e., maximum (and/or minimum) of the expected simulation output taken over a set of plausible input models. While these authors consider a collection of plausible input models in the space of continuous distributions by imposing constraints on their moments, distances from a baseline model or some other summary statistics, we consider in this paper a discrete setting where a finite number of plausible input models are specified by fitting to input data.

Specifically, the expected simulation outputs corresponding to a set of plausible input models are denoted by , respectively. From each input model, a simulation output with noise can be generated. We are interested in estimating the following worst-case bounds,

which help to quantify the input uncertainty of the plausible input models.

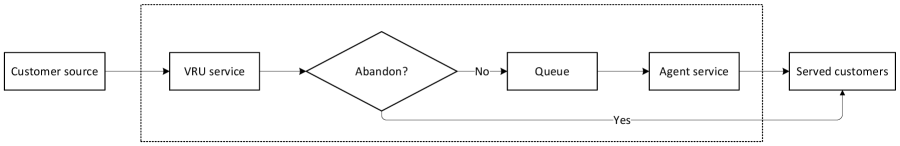

The maximum mean estimators studied in this paper can be applied to estimate , as well as by taking a negative sign on the simulation outputs. To illustrate how it works, we consider a case of a call center from an anonymous bank with real data available over a period of 12 months from January 1999 till December 1999 (Mandelbaum 2014). Workflows of the call center are described as follows. Customer phone calls arrive and ask for a banking service on their own. The call center consists of both automated service (computer-generated voice information, VRU) and agent service (AS) provided by eight agents. After the VRU service, customers either to a queue before AS, or directly to receive AS, or leave the system directly (see Figure 5). While privoviding VRU service seven days a week, 24 hours a day, the call center is staffed during 7:00 and 0:00 during weekdays. The information of each phone is stockpile, and each call has 17 fields in the data, e.g., Call ID (5 digits), VRU entry (6 digits), VRU exit (6 digits), Queue start (6 digits), Queue exit (6 digits), Outcome (AS or Hang), AS start (6 digits), AS exit (6 digits), etc.

We build up a simulation model with the logic flow described in Figure 5, to study customers’ expected waiting time during the interval 10:00-12:00 on a weekday. Key inputs to the simulation include interarrival (IA) distribution, and service time distributions for both VRU and AS services. During input modeling, we consider a base dataset and an extended dataset, including input data during the specified interval on January 1, 1999 (with 172 phone calls), and the combined data on both January 1 and 8 (with 311 phone calls in total), respectively. Comparison of simulation performances based on these two datasets helps to understand the impact of input data size on the magnitude of input uncertainty. Arena’s Input Analyzer tool is used to fit the input distributions, and Table 4 presents three candidate distributions for each of IA and AS times, all with -value larger than 0.01, while empirical distribution of VRU service time is used due to unsatisfactory -values for parametric distributions given by Inout Analyzer. The abandon rates for the two scenarios are 7.1% and 5.5%, respectively, according to the proportion of calls that hang out after VRU service.

| Dataset | IA time | AS time |

|---|---|---|

| Base dataset | -0.001 + ERLA(41.9, 1) | -0.001 + ERLA(225, 1) |

| -0.001 + EXPO(41.9) | -0.001 + EXPO(225) | |

| -0.001 + WEIB(38.4, 0.82) | -0.001 + WEIB(225, 0.992) | |

| Extended dataset | -0.001 + ERLA(41.8, 1) | -0.001 + ERLA(222, 1) |

| -0.001 + EXPO(41.8) | -0.001 + EXPO(222) | |

| -0.001 + WEIB(40.9, 0.951) | -0.001 + WEIB(222, 0.996) |

For each dataset, we specify the plausible input models as all possible combinations of the three candidate distributions for IA and AS times, while empirical distribution is employed for the VRU service time. In total there are () plausible models for each dataset, and the proposed GA and LSA estimators are applied to estimate and , the worst-case bounds for expected waiting time. Using real data, we also estimate the true value of the expected waiting time.

| LSA | GA | True value1 | |||||

|---|---|---|---|---|---|---|---|

| Dataset | |||||||

| Base dataset | CI of | [9.99, 10.87] | [10.04, 10.24] | [10.40, 10.94] | [10.20, 10.37] | 10.13 | |

| CI of | [13.70, 14.90] | [14.71, 14.97] | [13.89, 14.60] | [14.64, 14.87] | 14.81 | ||

| [10.43, 14.30] | [10.14, 14.84] | [10.67, 14.25] | [10.29, 14.75] | 11.98 | |||

| 3.87 | 4.70 | 3.58 | 4.46 | ||||

| Extended dataset | CI of | [8.78, 9.13] | [8.76, 8.91] | [9.29, 9.78] | [8.76, 8.91] | 8.84 | |

| CI of | [11.69, 12.51] | [11.98, 12.21] | [11.28, 11.86] | [11.86, 12.06] | 12.10 | ||

| [9.05, 12.10] | [8.83, 12.10] | [9.53, 11.57] | [8.84, 11.96] | 12.05 | |||

| 3.05 | 3.27 | 2.04 | 3.12 | ||||

-

1

In the last column, the first two numbers for each dataset are the (approximately) true values of and estimated based a large () sample size for each plausible input model, and the bold numbers represent the average waiting times obtained by taking averages from the real data.

Table 5 reports the constructed 90% CIs for and , the plausible performance range , as well as the width obtained by the GA and LSA estimators. Based on the table, we highlight our observations as follows.

-

•

Both the GA and the LSA estimators produce accurate estimates of and when sample size is sufficiently large, exemplified by CIs with narrow half-widths when . However, the GA estimator may have larger bias when sample size is not sufficiently large. For instance, when , the GA CIs of and lie above and below their true values (e.g. 10.13 and 14.81 for the base dataset) respectively, suggesting positive bias and negative bias for and , respectively. By contrast, the LSA CIs have better performance as they cover the true values for both the base and extended datasets.

-

•

The estimated plausible ranges of resulting from the LSA estimator cover the true waiting times for both datasets, while those from the GA estimator cover true waiting times for but not for due to its larger bias. Compared to the GA estimator, the LSA estimator produces more accurate point estimates of both and (and thus the gaps of the worst-case bounds ) for all sample sizes and both datasets. Comparing the gaps of the worst-case bounds for these two datasets (with 172 and 311 pone calls respectively), it can be seen that increasing the size of the input dataset helps reducing significantly this gap and thus the input uncertainty, e.g., the gap is reduced from 4.70 to 3.27 using the LSA estimator with .

6 Conclusions

We have studied two estimators, namely, the GA and LSA estimators, for estimating the maximum mean based on the UCB sampling policy. We have established strong consistency, asymptotic MSEs, and CLTs for both estimators, and constructed asymptotically valid CIs. Furthermore, a single hypothesis test has been proposed to solve a multiple comparison problem with application to clinical trials, and shown to significantly outperform an existing benchmarking test with multiple hypotheses, as the former exhibits lower type I error and higher statistical power.

The LSA estimator is preferable over the GA estimator, mainly due to the fact that its bias decays at a rate much faster than that of the GA estimator. The superiority of the LSA estimator over the GA estimator and several competing estimators has been demonstrated through numerical experiments.

Acknowledgements

This research is partially supported by the National Natural Science Foundation of China and Research Grants Council of Hong Kong under Joint Research Scheme project N_CityU 105/21 and GRF 11508217 and 11508620, and InnoHK initiative, The Government of the HKSAR, and Laboratory for AI-Powered Financial Technologies.

Appendix A A Appendix

A.1 Lemmas

Lemma A1.

(Hoeffding bound, Proposition 2.5 in Wainwright, 2019) Suppose that random variables , , are independent, and has mean and sub-Gaussian parameter . Then for all , we have

Lemma A2.

Suppose that ’s are i.i.d. mean-zero random variables, and is a stopping time taking positive integer values with w.p.1. Let . For any positive integer , if , then

Proof of Lemma A2.

First note that it suffices to show that for any , because these two are equivalent when is an even number, and if is an odd number, , following Hölder’s inequality.

For notational ease, denote and . Note that . Further let denote the filtration up to .

We first note the following fact. With being a constant, a nonnegative integer, and a martingale, if a process satisfies

then it can be seen that

is a martingale, by verifying the property that , where the shorthand notation , and the constant vector satisfies

| (16) |

Define and . For any nonnegative integer , if can be written in the form of

| (17) |

where ’s are constants, and are nonnegative integers depending on , and is an index set with a finite number of elements, then, we define

where is a contant vector obtained via (16) by setting and .

Then, using the aforementioned fact, the process is a martingale, and therefore,

| (18) |

is a linear combination of martingales.

In the rest of this proof, we use an induction argument. Suppose that for any integer satisfying , (a) and (b) hold, where

-

a)

is of the form in (17), satisfying when , and for any ; and

-

b)

for any and .,

we want to show that both (a) and (b) hold for .

It should be pointed out that combining (18) and (b) yields , because due to the martingale stopping theorem, and

Moreover, when , it can be easily checked that and , and thus both (a) and (b) are satisfied.

Therefore, it suffices to complete the induction argument, i.e., (a) and (b) must hold for if they hold for any . To see this, note that

| (19) |

For any , combining (a) and (18) suggests that is a linear combination of and ’s. Moreover, when and , equals and , respectively. Therefore, by (19), assembling terms and rearranging the index set lead to

for some , and , where it can be verified that when .

It can also be checked that

-

(i)

and for some and ; or,

-

(ii)

and for some .

Because , it is clear that . Hence, (a) holds for .

Furthermore, define

Then, is a martingale. Using the martingale stopping theorem, we have , i.e.,

| (20) |

where .

When (i) is true,

where the last equality follows from the fact that .

When (ii) is true, for some ,

Note that . If is an even number, then

where the first equality follows from (18) and (b). Otherwise, is an odd number, and by Cauchy-Schwartz inequality,

Therefore no matter is even or odd, . In other words, (b) holds for , which completes the induction. ∎

A.2 Proof of Proposition 1

To prove the proposition, we introduce a lemma.

Lemma A3.

Given a real , for any positive integer ,

Proof of Lemma A3.

The conclusion of the lemma for or 1 is trivial, and we thus mainly examine the case when . Because () is convex for , by Jensen’s Inequality,

It then follows that

and

Therefore, for and ,

and for ,

∎

Proof of Proposition 1. We first the result with and then .

(i) When , we want to prove that for

| (21) |

For and , we claim that at least one of the two following inequalities holds:

| (23) | |||||

| (24) |

where . In fact, by contradiction, if both the inequalities (23) and (24) are false when and , we have

where the second inequality is due to (22), which contradicts with .

In the following, we prove (21). Note that

| (25) |

Taking expectation, it follows that

Furthermore, by Lemma A1,

| (26) |

where the last inequality is due to the condition . Similarly, has the same bound. Therefore,

where the second inequality is by Lamma A3.

For any integer , by Minkowski’s inequality, we have

| (29) |

In what follows, we analyze . Note that

| (30) | |||||

where , and the last equality follows from the definition of in (27).

Consider when . By Lemma A3, for a positive integer ,

| (31) |

Therefore, for , we have

where the first inequality follows from the fact that , and the last inequality follows from (31) by letting and .

Similarly, for , we have

Hence,

The proof is complete.

A.3 Proof of Theorem 1

When , by Markov’s inequality and Proposition 1, for any and ,

Because , it follows that for ,

| (34) | |||||

Let . Then, for any ,

By Borel-Cantelli lemma, as , for , and thus

A.4 Proof of Theorem 2

In other words, for any and , there exists , such that for any ,

which implies

By the uniqueness assumption of , we have

| (35) |

i.e., as .

A.5 Proof of Theorem 4

We analyze the bias and variance of separately.

The Bias. We assert that is a stopping time. In fact, given the filtration generated by , denoted by , one has

Hence is a stopping time with respect to . Then by Wald’s equation,

leading to

Thus,

Because for , we have .

By Proposition 1,

| (36) |

The Variance. Note that

| (37) | |||||

We analyze the two terms on the RHS of (37) separately. By the Wald’s Lemma (see Theorem 13.2.14 in Athreya and Lahiri 2006),

| (38) |

Then, by (7) and Cauchy-Schwarz inequality, it can be seen that

| (39) |

where the last inequality follows from the fact that .

We then analyze the second term on the RHS of (37). Note that, by Cauchy-Schwarz inequality,

| (40) | |||||

Furthermore,

Therefore,

| (41) |

where .

A.6 Proof of Theorem 5

To prove the result, we first define a martingale difference array and introduce a lemma on asymptotic normality for martingale different arrays.

Definition A1.

(Definition 16.1.1 in Athreya and Lahiri (2006)) Let be a collection of random variables on a probability space and let be a filtration. Then, is called a martingale difference array if is -measurable and for each .

Lemma A4 (Theorem 16.1.1 of Athreya and Lahiri (2006)).

For each , let be a martingale difference array on , with for all and let be a finite stopping time w.r.t. . Suppose that for some constant , as ,

and that for each ,

Then,

We analyze the two terms on the RHS of (43) separately. We have shown that is a martingale. So is a martingale difference array (see Definition A1). To show the asymptotic normality of , it suffices to prove that the two conditions of Lemma A4 are satisfied for , and .

Second, by Cauchy-Schwarz inequality,

where , and .

Therefore, by Lemma A4, we have

It remains to prove that the second term on the RHS of (43) converges to 0 in probability. Note that

Therefore, the first moment of the second term on the RHS of (43) satisfies

where the convergence follows from Proposition 1 with and the condition .

Applying Slutsky’s Theorem to the RHS of (43) leads to the conclusion.

A.7 Proof of Proposition 2

Note that

| (44) |

We first prove that converges to in probability. From Theorem 2 of Lai and Robbins (1985), it follows that for , in probability; and from Theorem 1, it follows that a.s. By Theorem 2 in Richter (1965), we have that for ,

By the continuous mapping theorem, it follows that in probability.

Then by Theorem 1 and the continuous mapping theorem, converges to in probability.

A.8 Proof of Theorem 6

By Theorem 1, we have

| (45) |

By the strong law of large numbers (SLLN) and Theorem 1 in Richter (1965) or Theorem 8.2 in Chapter 6 of Gut (2013), we have

Denote . The definition of almost sure convergence says that

| (46) |

From (35), it follows that

| (47) |

A.9 Proof of Theorem 7

For notational ease, denote

Recall that sub-Gaussian distributions have finite moments up to any order. By Assumption 1 and the fact that , it can be seen that satisfies the conditions in Lemma A2 for any . We first note the following two facts that are useful in the proof. For any positive integer , by Proposition 1,

| (48) |

and by Lemma A2,

| (49) |

It suffices to analyze the bias and variance of , and then the MSE follows straightforwardly. Consider the bias first. Note that

where and denote the three terms on the RHS of (A.9), respectively.

Recall that due to Wald’s equation. It follows that

| (51) |

where denote the correlation coefficient between and , i.e.

It should also be noted that (and thus ), because and are positive correlated. To see this, note that

where the inequality follows naturally from the way that GUCB is defined, i.e., larger sample mean leads to higher chance of sampling from arm .

By (51), to understand the order , we only need to analyze , and . Note that by Proposition 1,

| (52) |

and

| (53) |

where the third inequality follows from Proposition 1 with .

Moreover,

| (54) |

We analyze the RHS of (54). Because ,

| (55) |

where the first equality follows from Wald’s lemma (Theorem 13.2.14 in Athreya and Lahiri 2006).

We also note that

| (56) | |||||

where the second inequality follows from Hölder’s inequality, and the third-to-last equality is due to the facts in (48) and (49) with and satisfying and for some .

In a similar manner, it can be shown that , and the details are omitted. Therefore, by (A.9),

Note further that the order of dominates those of and . Because , we know that for sufficiently large .

We now consider the variance. Note that

| (57) |

We note that by (55),

Similar to the proof of (56), we have

Moreover, using the fact in (48),

with .

Therefore,

which completes the proof.

A.10 Proof of Theorem 8

A.11 Proof of Proposition 3

Note that . It suffices to prove the two terms on the RHS of (12) converge to two terms of , respectively.

References

-

Agrawal, R. 1995. Sample mean based index policies by regret for the multi-armed bandit problem. Advances in Applied Probability, 27(4), 1054-1078.

-

Athreya, K. B., S. N. Lahiri. 2006. Measure Theory and Probability Theory. Springer Science & Business Media.

-

Auer, P., N. Cesa-Bianchi, P. Fischer. 2002. Finite-time analysis of the multiarmed bandit problem. Machine Learning, 47(2-3), 235-256.

-

Chang, H. S., M. C. Fu, J. Hu, S. I. Marcus. 2005. An adaptive sampling algorithm for solving Markov decision processes. Operations Research, 53(1), 126-139.

-

Chen, H. J., E. J. Dudewicz. 1976. Procedures for fixed-width interval estimation of the largest normal mean. Journal of the American Statistical Association, 71(355), 752-756.

-

D’Eramo, C., A. Nuara, M. Restelli. 2016. Estimating the maximum expected value through Gaussian approximation. International Conference on Machine Learning, 1032-1040.

-

Dmitrienko, A., J. C. Hsu. 2006. Multiple testing in clinical trials. S. Kotz, C. B. Read, N. Balakrishnan, B. Z. Vidakovic (Eds.), Encyclopedia of Statistical Sciences. Wiley, New Jersey.

-

Fan, W., L. J. Hong, B. L. Nelson. 2016. Indifference-zone-free selection of the best. Operations Research, 64(6), 1499-1514.

-

FDA (Food and Drug Administration). 2019. Adaptive designs for clinical trials of drugs and biologics: Guidance for industry. Available at https://www.fda.gov/media/78495/download.

-

Fu, M. C. 2017. Markov decision processes, AlphaGo, and Monte Carlo tree search: Back to the future. INFORMS TutORials in Operations Research, 68-88.

-

Gut, A. 2013. Probability: A Graduate Course. Springer Science & Business Media.

-

Hao, B., Y. Abbasi-Yadkori, Z. Wen, G. Cheng. 2019. Bootstrapping upper confidence bound. Advances in Neural Information Processing Systems, 12123-12133.

-

Kim, S.-H., B. L. Nelson. 2006. Selecting the best system. In Handbook in OR & MS: Simulation, S. G. Henderson and B. L. Nelson (Eds.), 501-534, Elsevier Science.

-

Kocsis, L., C. Szepesvári. 2006. Bandit based Monte-Carlo planning. Machine Learning: ECML, 282-293.

-

Lai, T. L., H. Robbins. 1985. Asymptotically efficient adaptive allocation rules. Advances in Applied Mathematics, 6(1), 4-22.

-

Lesnevski, V., B. L. Nelson, J. Staum. 2007. Simulation of coherent risk measures based on generalized scenarios. Management Science, 53(11), 1756-1769.

-

Mozgunov, P., T. Jaki. 2020. An information theoretic approach for selecting arms in clinical trials. Journal of the Royal Statistical Society Series B, 82(5), 1223-1247.

-

Qin, C., D. Klabjan, D. Russo. 2017. Improving the expected improvement algorithm. Proceedings of the 31st International Conference on Neural Information Processing Systems, 5387-5397.

-

Rényi, A. 1957. On the asymptotic distribution of the sum of a random number of independent random variables. Acta Mathematica Academiae Scientiarum Hungarica, 8(1-2), 193-199.

-

Richter, W. 1965. Limit theorems for sequences of random variables with sequences of random indeces. Theory of Probability & Its Applications, 10(1), 74-84.

-

Robertson, D. S., J. M. S. Wason. 2019. Familywise error control in multi-armed response-adaptive trials. Biometrics, 75, 885-894.

-

Russo, D. 2020. Simple bayesian algorithms for best arm identification. Operations Research, 68(6), 1625-1647.

-

Shamir, O. 2011. A variant of Azuma’s inequality for martingales with subgaussian tails. arXiv preprint arXiv:1110.2392.

-

Smith, J. E., R. L. Windler. 2006. The optimizer’s curse: Skepticism and postdecision surprise in decision analysis. Management Science, 52(3), 311-322.

-

van Hasselt, H. 2010. Double -learning. Advances in Neural Information Processing Systems (NIPS), 2613-2621.

-

van Hasselt, H. 2013. Estimating the maximum expected value: an analysis of (nested) cross validation and the maximum sample average. Available at arXiv preprint arXiv:1302.7175.

-

van Hasselt, H.,, A. Guez, D. Silver. 2016. Deep reinforcement learning with double Q-learning. Proceedings of the AAAI Conference on Artificial Intelligence, 30, 2094-2100.

-

Villar, S. S., J. Bowden, J. Wason. 2015. Multi-armed bandit models for the optimal design of clinical trials: Benefits and challenges. Statistical Science, 30(2), 199.

-

Wainwright, M. J. 2019. High-dimensional Statistics: A Non-asymptotic Viewpoint (Vol. 48). Cambridge University Press.