Anomaly Detection of Time Series with Smoothness-Inducing Sequential Variational Auto-Encoder

Abstract

Deep generative models have demonstrated their effectiveness in learning latent representation and modeling complex dependencies of time series. In this paper, we present a Smoothness-Inducing Sequential Variational Auto-Encoder (SISVAE) model for robust estimation and anomaly detection of multi-dimensional time series. Our model is based on Variational Auto-Encoder (VAE), and its backbone is fulfilled by a Recurrent Neural Network to capture latent temporal structures of time series for both generative model and inference model. Specifically, our model parameterizes mean and variance for each time-stamp with flexible neural networks, resulting in a non-stationary model that can work without the assumption of constant noise as commonly made by existing Markov models. However, such a flexibility may cause the model fragile to anomalies. To achieve robust density estimation which can also benefit detection tasks, we propose a smoothness-inducing prior over possible estimations. The proposed prior works as a regularizer that places penalty at non-smooth reconstructions. Our model is learned efficiently with a novel stochastic gradient variational Bayes estimator. In particular, we study two decision criteria for anomaly detection: reconstruction probability and reconstruction error. We show the effectiveness of our model on both synthetic datasets and public real-world benchmarks.

Index Terms:

Anomaly Detection, Time Series, Deep Generative Model, Variational Auto-Encoder, Recurrent Neural NetworkI Introduction

Time series anomaly detection is an important and challenging problem, and it has been studied over decades across wide application domains, including intelligence transportation [1], health care [2], KPI monitoring [3], web intrusion detection [4], environmental monitoring [5], and fault diagnosis [6], malware detection [7] etc. Such a broad application is also reflected in the diversity of formulations and models to time series anomaly detection. In contrast to anomaly detection on static data, one widely accepted idea is that the temporal continuity plays a key role in all formulations, and unusual changes in the time series data are detected and used to model anomalies. For multi-dimensional correlated time series data, the correlations make the problem further complex, which highlights the need for robust and flexible models.

Consider a matrix for a time series corpus that consists of correlated streams with time steps for each stream. On one hand, there are generally three scenarios of time series anomaly detection depending on the availability of training data and anomaly labels: supervised, semi-supervised and unsupervised [8]. Anomaly detection tasks without clean111We use ‘clean’ to describe time series data contain only normal patterns data or anomaly labels also referred to as unsupervised detection. Unsupervised methods have their advantages that no anomaly labels is needed, which is cost-saving, and they can enjoy better flexibility when the anomaly pattern drifts over time. On the other hand, time series anomaly detection can be in general divided into two settings: i) subsequence or whole sequence level anomaly whereby a subsequence is labeled as an anomaly; ii) point level anomaly for which a measurement at time in sequence is treated as an anomaly. The former scenario is usually more challenging than the latter. For more comprehensive surveys, readers are referred to [9, 10, 11].

Technique and methodology to address the above two settings are rather different, and we focus on the popular point-wise anomaly detection for multi-dimensional time series in this paper, which has received wide attention in literature [12, 13, 5]. In some cases, sequence or subsequence level anomaly detection can be generalized from point level model. In addition, many practical applications require point level detection results as abnormal signal can quickly disappear in the time series especially when the sampling rate is low. We leave it for future work for generalizing our model to more macro-level anomaly detection tasks. On the other hand, as a lack of labeled anomalies necessitates the use of unsupervised approaches, we focus on unsupervised detection model for its practical applicability. This also aligns with the rising trend of adopting unsupervised learning algorithms for anomaly detection such as one-class SVM [14], GMM [15], tDCGAN [7], VAE [3], and VRNN [16].

Different from general time series modeling aiming to fit with the data curve, anomaly detection often need to recover ‘normal’ patterns robustly in the presence of anomalies. This is challenging since the model is optimized to fit all data points. Previous works [17, 18] show that inductive bias is crucial for generalization when learning deep generative models. Motivated by previous works, we seek to take a Bayesian approach. Specifically, we devise a temporal smoothness prior which can be coherently incorporated in the Sequential Variational Auto-Encoder learning procedure, under the well-established probabilistic framework. As such, the learning of latent space can be more focused on the ‘normal’ pattern rather than blindly minimizing the reconstruction error between the raw signal and the generated one by the latent variables. Based on the learned generation model, the anomalies can be detected via a certain criterion by comparison of the reconstructed signal and the raw data point.

II Related Work

This paper is devoted to unsupervised point level anomaly detection for multi-dimensional time series. We review mostly related work by roughly dividing them into two groups: deterministic models and probabilistic methods.

Deterministic models. From the optimization perspective, classical approaches often introduce additional regularization term to account for temporal smoothness. The extra regularization enables trade-off between fitness and smoothness, making the model less sensitive to anomalies associated with the training data [19, 20]. Representative works include time series de-trending [21], and its extension for multi-dimensional correlated time series [22]. These methods tend to learn the general trend of time series. However, they often pay less attention to the stochastic nature of time series, as such the modeling capabilities are degraded when the data are noisy and anomaly-contaminated.

Probabilistic models. In these methods, the core idea is to learn the marginal likelihood of the data generation process. Then the anomalies are detected by thresholding reconstruction probability, using the techniques e.g. Hidden Markov Models [23, 24] and Matrix Factorizations [25, 26]. However, generative models are often computationally restricted to a simple linear model with conjugate probability distributions, due to the intractable integral of marginal likelihood . Moreover, limited by the potential intractability, the noise is often assumed constant, making them unsuitable for non-stationary time series.

Recently, the work [27] proposes the Stochastic Gradient Variational Bayes (SGVB) estimator and Auto-encoded Variational Bayes (AEVB) algorithm. One popular technique under this framework is Variational Auto-Encoder (VAE). By using neural network for parameterizing a flexible inference model to approximate posterior , along with the reparameterization trick, the lower bound of marginal likelihood is differentiable. In consequence, the model can be effectively trained. To a certain extent, the AEVB algorithm liberates the limitations when devising complex probabilistic generative models, especially for deep generative models.

One step further, by taking advantage of the AEVB algorithm, recent studies have introduced deep generative models for anomaly detection. The work [28] uses Variational Auto-Encoder (VAE) [27] for anomaly detection on non-sequential datasets. However, vanilla VAE makes i.i.d assumption among data points , which is unrealistic for real-world time series data. To solve this problem, the very recent approach [3] further extends vanilla VAE by preprocessing the raw data with sliding windows, and the windows are assumed to obey i.i.d. Although this approach mitigates the artifact, while the temporal structure is still not well modeled. Alternatively, researchers seek to use a deterministic Recurrent Neural Network (RNN) [29] to produce hidden states, then the latent variable is conditioned on the RNN hidden states and the previous latent variables, such that the temporal structure is captured. The resulting models include VRNN [16], STORN [30], which can be viewed as sequential versions of VAE. However, these models essentially hardly consider the presence of anomalies in their objective functions.

In this paper, we propose a Smoothness-Inducing Sequential Variational Auto-Encoder (SISVAE) model for learning multi-dimensional correlated time series data. In particular, we are focused on point level anomaly detection which can be naturally handled by our model.

The technical highlights of the paper are as follows:

i) We devise a Bayesian technique to incorporate the smoothness prior to the learning of deep generative model for multi-dimensional time series anomaly detection. Such a design to our best knowledge, has not been studied in literature and it inherits merits for the efficiency of the classical optimization model and the uncertainty modeling capability by the deep generative models. Specifically, the proposed variational smoothness regularizer encourages both smooth mean and variance transitions over time. The resulting objective, with the network can be learned by standard backpropagation.

ii) Different from Markov models that depend on constant noise assumption, our model parameterizes mean and variance individually for each time-stamp using neural networks. This enables the dynamic anomaly detection thresholds adaption according to estimated data noise. This feature is important as the variance of time series may vary over time.

iii) We perform extensive empirical evaluations on both synthetic datasets and several real-world benchmarks, showing that our model consistently outperforms the state-of-the-art competing models. We also study two decision criteria for performing anomaly detection tasks on a trained SISVAE model: reconstruction probability and reconstruction error.

| Terms | Symbol | Meaning |

| latent variable model | Description of the data generating process involving unobserved random variable . | |

| AEVB algorithm | Use a pair of encoder and decoder to learn a complex deep latent variable model. | |

| generative model | A probabilistic mapping from latent variable to observation , also called decoder. | |

| inference model | An approximation to the intractable true posterior , also called encoder. | |

| reparameterization trick | , | Using a deterministic variable to express random variable , where is an auxiliary variable with independent marginal . |

| latent state | latent variable associate with observation . | |

| latent temporal dependencies | Latent variables are not independent but exhibits some kinds of structure, such as 1st-order Markov chain. | |

| trending prior | A time-varying prior distribution for latent state. | |

| non-linear transitions | Non-linear relationship between latent space and past latent states. It is linear for Kalman filter and Hidden Markov Models. | |

| non-linear emission probability | Nonlinear relationship between latent space and observation. It is linear for Kalman filter and Hidden Markov Models. | |

| smoothness prior | A generic contextual constraint on the true signal based on human knowledge is the smoothness. | |

| non-stationary noise | The noise model varies over time, in many currently used models, only the mean of Gaussian is modeled with dynamics, while the variance is often set to be constant | |

| anomaly score | Score assigned by the model for each observation, higher score indicates higher probability of anomaly. |

III Preliminaries

Before devotion to the technical details of our proposed model, some preliminaries are described as background to facilitate the presentation of our main method.

III-A Problem Formulation

Due to different reasons such as faulty sensors or extreme events, the real-world time series data are often contaminated with anomalies. Consider we have time series data contaminated with anomalies, and each (indicating -dimensional time series) is modeled by:

| (1) |

where is the true signal, is non-stationary noise of observations, is a binary matrix, which is the indicator matrix of anomalies. The value when there is an anomaly at observation , and is the anomaly vector, and is element-wise multiplication. Our goal is to recover the true signal and detect anomaly from .

III-B Smoothness Prior Modeling

For the unsupervised learning problem, to recover true signal from anomaly-contaminated data, it is important to impose inductive bias or prior knowledge to the model as suggested in [17, 18]. For time series modeling, we first introduce smoothness prior modeling of time series as described in [31]. Consider the time series (for convenience, we deal with the uni-variate case), it is assumed to consist of the sum of a function and observation noise :

| (2) |

where is an unknown smooth function, and . The problem is to estimate from the observations. A common approach is to use a parametric model such as polynomial regression or neural network to approximate . The quality of estimation is dependent upon the appropriateness of the assumed model class. The idea is to balance a trade-off of goodness-of-fit to the data, and a smoothness criterion. The solution is to minimize the objective with an appropriately chosen smoothness trade-off parameter :

| (3) |

where is a -th order differential constraint on the solution , with , , etc.

The solution can be solved from a Bayesian perspective: consider a homoscedastic (variance is constant over time) time series with constant variance . The solution becomes maximizing:

| (4) |

Its Bayesian interpretation can be written by:

| (5) |

where is the prior distribution of , and is data distribution conditioned on and is the posterior of .

III-C Deep Generative Models and SGVB Estimator

Consider a dataset consisting of i.i.d data points . From a latent variable modeling perspective, we assume the data are generated by some random processes that capture the variations in the observed variables . Thus we have the integral of marginal likelihood:

| (6) |

where is generative model parameters, and is the prior distribution over latent variable , and is the conditional distribution that generates data . Variational Auto-Encoder (VAE) [27] models the conditional probability with a neural network as a flexible approximator. However, introducing a complex non-linear mapping for the generation process from to results in intractable inference of posterior . Hence VAE uses a variational approximation of the posterior, i.e. the evidence lower bound (ELBO) of the marginal likelihood as written by:

| (7) |

where the first R.H.S. term is the Kullback-Leibler divergence between two probability distributions and , and denotes variational parameters. The generative model and inference model are trained jointly by maximizing the ELBO w.r.t their parameters. The KL-divergence of Eq. 7 can often be integrated analytically. However, the gradient of the second term is problematic because latent variable is stochastic which blocks backpropagation. The Stochastic Gradient Variational Bayes (SGVB) [32] expresses the random variable as a deterministic variable , where is an auxiliary variable with independent marginal distribution . Then we have the SGVB estimator of the lower bound:

| (8) | ||||

Learning is performed by optimizing the SVGB estimator with backpropagation. VAE [27] uses centered isotropic multivariate Gaussian as prior of latent variable . The variational approximate posterior is a multivariate Gaussian with diagonal covariance matrix: , and its mean and variance are parameterized by a neural network. For , a Bernoulli distribution for binary data or multivariate Gaussian distribution (as adopted in our model) for continuous data is often used.

IV The Proposed Model

We present the proposed Smoothness-Inducing Sequential Variational Auto-Encoder (SISVAE) model for multi-dimensional time series point anomaly detection. We first describe how the Sequential VAE can be employed for time series density estimation, which involves a generative model and inference model to learn the latent variable for data points. Then we devise a smooth prior under the Bayesian framework which leads to a smoothness-induced sequential VAE model. We show the model can be used to detect the anomaly either by absolute reconstruction loss or reconstruction probability.

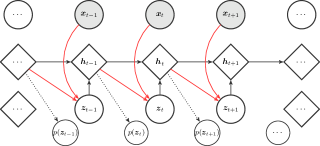

IV-A Sequential VAE as Density Estimator of Time Series

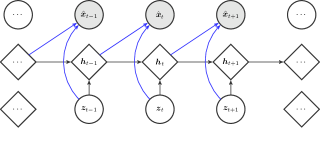

For time series modeling, many prior publications have extended VAE in a sequential manner [33, 34, 16, 35]. The main idea is to use a deterministic Recurrent Neural Network (RNN) as a backbone, represented by the sequence of RNN hidden states. At each timestamp, the RNN hidden state is conditioned on the previous observation and stochastic latent variable , such that the model is able to capture sequential features from time series data.

Let denote a multidimensional time series, such as observation data collected by multiple sensors of T time slots. Our goal is to learn the data distribution of the training time series . The model is composed of two parts, the generative model and the inference model.

IV-A1 Generative model

We specify the generative model of the following factorization:

| (9) |

The first factor of R.H.S of Eq. 9 is the conditional likelihood of a data point given all previous data and latent states. We notate as the random variable for the reconstructed data distribution of observation , we have:

| (10) |

where and denote the sufficient statistic parameters of the generated data distribution, and is the parameter set of the mapping function . In this multivariate Gaussian case, they denote mean and variance respectively. The hidden states are updated with the recurrence equation using gated recurrent unit. The second factor is the prior of latent state, depending on previous RNN hidden state :

| (11) |

where and are sufficient statistic parameters of the prior. By introducing temporal structure into the prior distribution of the latent variable, the representation power is enhanced. The hidden states are updated with the recurrence equation using gated recurrent unit (GRU) [36]:

| (12) |

The GRU transition equations of our recurrence model are:

| (13) |

where are model parameters of GRU in SISVAE model, and is the sigmoid function. In equations 11, 10 and 12, and are realized by networks. and are feature extractors of observed data and latent variable respectively, which are also realized by networks.

IV-A2 Inference model

We use variational inference to learn an approximate posterior over latent variables conditioned on the given data, which means we need to construct an approximating distribution parameterized by . We train the generative model using Auto-Encoding Variable Bayes (AEVB) algorithm [27]:

| (14) |

We construct a distribution of the following factorization:

| (15) |

The approximate posterior of latent variable depends on and :

| (16) |

where , denote the mean and variance of the approximate posterior, and is approximated by a neural network.

IV-B Anomaly Detection with Sequential VAE

As discussed before, we seek to devise suitable inductive bias for anomaly detection of time series in the sequential VAE framework. To this end, we begin with the factorization of the existing loss function. Specifically, we substitute the numerator and denominator in Eq. 14 with Eq. 9 and Eq. 15 respectively, the objective of Sequential VAE becomes:

| (17) |

The first term is the loss of inference model, which can be viewed as a regularizer, encouraging the model to learn disentangled feature by putting a prior over latent variable [37]. The second term is the reconstruction loss of the generative model. Suppose is an anomaly, and its associated reconstruction distribution is . The generative model tries to reconstruct the contaminated , rather than the true signal , otherwise the reconstruct loss, which is the negative log likelihood will be high. To minimize the objective loss function from the data with anomalies, the model will either learn a biased mean , or an over-estimated variance . As a result, the Sequential VAE can be vulnerable to anomalies.

To address account for such a bias caused by the anomalies, one natural idea is to induce smoothness prior to the generative model. Recall in Section III-B, the smoothness criterion refers to the accumulative difference of signal . One might be tempted to simply add such a smoothness regularization to the objective. However, since the Sequential VAE is under probabilistic framework, such a forced combination is not mathematically coherent. However, the classical approach treat observation as deterministic variable, however, in sequential VAE, we treat each observation as a random variable, and we estimate the probability distribution for each observation , such that the classical regularizer failed to work in probabilistic framework, which calls for more comprehensive method.

We extend the smoothness regularization to probabilistic framework, by following the common assumption that the probability density function over time would vary smoothly over time. Among all possible reconstructed series , the true signal should have a smooth transition of distribution. To construct a valid regularizer, we need to measure the smoothness of a time-varying probability distribution.

For two consecutive data points of a single time series: and , associated with reconstructions and . Let to be a distance metric between two distributions, we propose accumulative transition cost of time-varying probability distributions:

| (18) |

In this paper, we choose KL-divergence as the distance metric between distributions. For Isotropic Gaussian case in this paper, the transition cost of two Normal distributions is:

| (19) |

The final objective involves: 1) encoding loss for inference model; 2) expected reconstruction error for generative model; 3) the proposed variational smoothness reguarlizer. We apply stochastic gradient variational Bayes (SGVB) estimator to approximate the expected reconstruction error . The learning objective is:

| (20) |

where and , and is a smoothness regularization hyper-parameter. We set when .

IV-C Training

IV-C1 Preparing dataset

Ideally, we could train SISVAE with a whole time series as input, and the model outputs the whole reconstruction. However, in many real applications, the time series is typically very long. The objective function Eq. 20 of our model is summed sequentially over time, such that computing the gradient of it requires back propagation over time [38]. Researches [39] observe that RNNs are hard to train when sequence length is over 200, the model may face the problem of vanishing gradients and exploding gradients, which is also undesirable for anomaly detection tasks.

To solve this problem, we divide the long time series into short chunks, using sliding windows technique. We apply a sliding windows to the time series, which slides over multiple time series synchronously. The sliding windows is controlled by two parameters: window size and step size . Specifically, for each dataset , we have:

| (21) | ||||

where , each is a short time series chunk with length W, putting together, we have:

| (22) |

where is the whole dataset. Each item is a short time series chunk. The learning procedure is depicted in Algorithm 1.

| Traditional Models | Deep Latent Variable Models | ||||

| ARMA | LDS | Donut | STORN | SISVAE-p | |

| latent temporal dependencies | – | ✓ | ✗ | ✓ | ✓ |

| non-linear emission probability | – | ✗ | ✓ | ✓ | ✓ |

| non-linear latent transitions | – | ✗ | ✗ | ✓ | ✓ |

| trending prior | – | ✓ | ✗ | ✗ | ✓ |

| multi-dimensional | ✗ | ✓ | ✗ | ✓ | ✓ |

| non-stationary variance | ✗ | ✗ | ✓ | ✓ | ✓ |

| long sequence | ✗ | ✗ | ✓ | ✗ | ✓ |

| objective robustness | – | ✗ | ✗ | ✓ | |

IV-D Computing Anomaly Scores

The next step is to compute anomaly score for each data point . For a trained model, input a chunk of time series , each is a -dimensional vector, the model first encode it into , then decode to a sequence of random variables . The whole encoding and decoding process is called probabilistic reconstruction.

Recall that in Eq. 1 of Section III-A, we use to indicate the indicator matrix of anomaly labels. In this step, we use to indicate the matrix of anomaly scores.

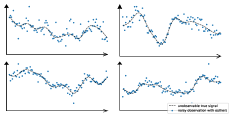

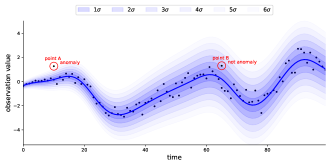

One natural choice is to use absolute reconstruction error, like anomaly detection with deterministic auto-encoders [40]. The anomaly score for observation is computed as:

| (23) |

where is the -th dimension of reconstructed mean . Intuitively, a large reconstruction error indicates anomaly behavior of time series, which is a common assumption for many reconstruction based anomaly detection algorithms. The underlying assumption of absolute reconstruction error is that the variance is stationary, which means that the variance is constant over time. However, in many real-world data, the variance is non-stationary, and it varies over time. We illustrate this using an example as shown in Fig. 3. We generate some time series data with Gaussian process as true signal, denoted as the real blue line centering scatters, then we add some Gaussian noise , where increases over time. The black dots is the noisy observation, and blue transparent regions increasing level of noise. Then, we add two points, point A and point B, they both deviate from the true signal with distance 1. We see that, point A is more likely to be an anomaly point, while point B is more likely to be a normal point that located at some noisy period. In this case, if we use absolute reconstruction error, we may fail to detect anomaly point A, or otherwise report normal point B as an anomaly.

Due to the non-stationary nature of real-world time series, we need a robust method for computing anomaly scores. Previous VAE-based anomaly detection methods have introduced reconstruction probability based anomaly score, here we devise a reconstruction probability for sequential Auto-encoders. Specifically, the probability density of input is:

| (24) |

Draw samples from , and the anomaly score is negative of average of probability. For numerical stability, we often work with probability, and we have

| (25) |

However, for Sequential VAE, this can be more challenging. Because is not independent, but has sequential dependencies. We choose to adopt Sequential Monte Carlo (SMC) [41], which is widely used in state-space models, such as Bayesian filters [42], and dynamical systems [43].

We conclude the SMC for computing anomaly scores and detecting anomalies in Algorithm 2. For the full multivariate time series matrix, , we slice them into non-overlapped chunks, and reconstruct each of them individually.

V Experiments

We present the experiment for robust modeling and anomaly detection for multi-dimensional correlated time series, compared with peer VAE methods. Recall that in this paper we deal with point level anomaly detection without supervision.

V-A Protocols and Settings

Performance Metrics. For time series data , the model outputs anomaly score matrix , and each item is the likelihood of data point being an anomaly. For detection, we set a threshold to obtain the final anomalies label indicator matrix . Then we compute precision, recall, true positive rate (TPR), false positive rate (FPR) at time series point level.

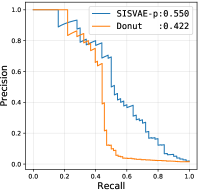

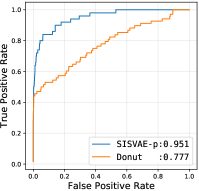

Trade-off is needed as the detection characteristics vary by different threshold . When threshold is tight, the precision will be higher and recall will be lower. We compute area under receiver operating characteristic (AUROC), area under precision recall curve (AUPRC) and the best F1-score. AUROC quantifies the FPR and TPR with different , and AUPRC quantifies the precision and recall with different . Note that all three metrics are independent of specific threshold.

There are inherent differences between AUROC and AUPRC. For anomaly detection tasks where class (anomaly or normal data points) distributions are heavily imbalances, the AUROC metric is less insensitive to false positives than the AUPRC metric. As discussed in [44, 45], the AUPRC score is more discriminative than AUROC score. Previous work may choose one of the metrics to evaluate the performance. To comprehensively evaluate the model’s performance, we report both AUROC and AUPRC scores, but we mainly focus on analysis AUPRC in this paper.

Peer methods. Recent deep learning based methods are compared, including Donut and STORN.

-

•

Donut [3]. The model proposed very recently is based on VAE, which takes a fixed length sliding windows of an uni-variate time series as input. To capture ‘normal patterns’ of data, the modified ELBO technique is devised to leverage generative model and latent regularization of VAE, such that the model is encouraged to learn only normal patterns.

-

•

STORN [30]. As a sequential version of VAE, the model is based on stochastic recurrent neural network (STORN). Different from SISVAE, the sequences of latent variables are generated independently. The model uses lower bound output as anomaly score.

We also include three traditional statistical models.

-

•

History Average (HA). We use absolute deviation from history average value as anomaly score. We include this model in comparison to show how a very simple model perform within different datasets.

- •

-

•

Linear Dynamical System (LDS). This model is also known as Kalman filter. Different from deep latent variable based state space models, the transition function and emission probability are linear (see Table II).

We further include some variants of our model, to study the contribution and behavior of each component in our model.

-

•

SISVAE-p Our recommended model, which uses the sliding windows of a time series matrix as input, similar to Donut. This model is more applicable for long sequences.

-

•

SISVAE-e. Different from SISVAE-p, it takes absolute reconstruction error as anomaly score. We use this model to show the performance gap by different detection criteria.

-

•

SISVAE-0 To show the effect of our smoothness regularizer, we let SISVAE-0 to be SISVAE-p for .

-

•

VAE-s. To test the contribution of the proposed smoothness prior technique, we add the regularization to vanilla VAE as a comparison named VAE-s. The model can be viewed as our SISVAE model without latent temporal structure. We use this model to show the effectiveness of introducing latent temporal structure.

In particular, we compare the characteristics of peer methods in Table II, whereby both traditional methods i.e. History Average (HA), Autoregressive Moving Average (ARMA), Kalman Filter (KMF) and deep latent model i.e. Donut, STORN, SISVAE, SISVAE-mb are included.

Implementation details For preprocessing, we set window size to be 120 for each dataset. The sequential VAE based models, STORN, and SISVAE have a similar network architecture, which is defined by two varying hyper-parameters, h_dim for dimension of hidden layer, and z_dim for dimension of latent variable . We set h_dim to be 200, and z_dim to be 40 for all datasets. We empirically set the smoothness regularization hyper-parameter for SISVAE during training process. The encoder, decoder, and RNN of models use the same hidden dimension. The three models are implemented using PyTorch [48] 0.4, and trained on a single Nvidia RTX 2080ti GPU. We train the three models 200 epochs using Adam [49] with learning rate 0.001. Note for Donut, the M-ELBO technique proposed by Donut may require some anomaly labels to learn ‘normal’ patterns. For fair comparison, we provide no anomaly label to the model, and the experiments are conducted under fully unsupervised setting. We use the code open sourced by the authors. We set h_dim to be 200, and z_dim to be 40, same as our model. The model is fundamentally designated for uni-variate time series and it is nontrivial to extend it to the multi-dimensional case. We test two settings: 1) train a model for each sequence individually, and compute anomaly score individually; 2) flatten the multi-dimensional time series data after preprocessing to accommodate the model, the anomaly score is computed together. We find the second protocol exhibits better performance. This may be because the normal patterns are common along different sequences. With more sequences as input, the model can be less influenced by anomalies. We adopt the second protocol for Donut in the experiments. For all deep latent variable models, we use negative reconstruction probability as anomaly score output. For all three traditional statistical models, we use the same preprocessing method. For history average (HA), the anomaly score is absolute observation value since all series have zero mean. For ARMA and LDS, we use absolute reconstruction error as anomaly score.

| Dataset | A1 | A2 | A3 | A4 | PMU |

| # sequences | 65 | 100 | 100 | 100 | 36 |

| # length | 1420 | 1421 | 1680 | 1680 | 1080 |

| # points | 92300 | 142100 | 168000 | 168000 | 38800 |

| # anomalies | 1462 | 426 | 943 | 1044 | 240 |

| # proportion | 1.58% | 0.32% | 0.56% | 0.62% | 0.62% |

.

V-B Experiments on Synthetic Data

In this section, we aim to gain insights into the behavior of our proposed SISVAE model. We investigate the anomaly detection performance of our model for different proportions of anomalies. Furthermore, we are interested in the anomaly detection performance under different settings of smoothness regularization hyper-parameter .

Referring the synthetic data generation protocol in [24], we generate 100 time series sequences of length 200 from a multi-output Gaussian process with a multiple output kernel [50], implemented by a python package named GPy [51]. Sequences are correlated through a random sampled positive definite coregionalization matrix, and we add random non-stationary Gaussian noise on the 100-dimensional sequence of length 200. The location of anomalies is simulated by a binary mask with Bernoulli distribution of probability {0.5%, 1%, 2%, 5%, 10%} for different fractions of anomalies. We insert Poisson noise where the binary mask is equal to one as anomalies, and the intensity of each anomaly is proportional to the amplitude of inserted place . The plus and minus sign is random sampled from Bernoulli distribution with .

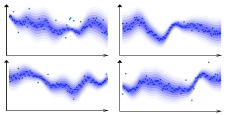

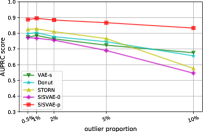

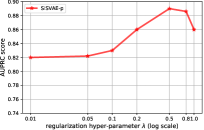

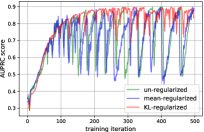

Effects of anomaly proportion. Model performance variation with respect to the proportion of anomalies is shown in Fig. 4(a). Since all the models are unsupervised, they are inevitably influenced by the anomalies, because a full reconstruction of both normal and anomaly data is encouraged in the objective function. The proposed variational smoothness regularizer places penalties at consecutive outputs of the generative model that violate the temporal smoothness assumption. As observed from the result, as the anomaly proportion grows, the AUPRC scores of two un-regularized model SISVAE-0 and STORN drop significantly, while the regularized models’ performance almost keeps maintained. SISVAE-0, the proposed SISVAE model shows superior performance over competing methods in all settings of anomaly proportion.

Effects of regularization hyper-parameter. The variational smoothness hyper-parameter in the objective by Eq. 17 determines the penalty of non-smooth output of the generative model. We analyze the detection performance of SISVAE model under different settings of hyper-parameters. We train the model with = {0.01,0.05,0.1,0.2,0.5,0.8,1.0} using synthetic dataset with 2% anomaly proportion. Figure 4(b) shows the change of AUPRC performance w.r.t , where the model achieves best performance when is set to be 0.5.

| Dataset | Metric | HA | ARMA | LDS | Donut | STORN | SISVAE-0 | VAE-s | SISVAE-e | SISVAE-p |

| A1 | AUROC | 0.616 | 0.845 | 0.664 | 0.825 | 0.867 | 0.862 | 0.886 | 0.865 | 0.876 |

| AUPRC | 0.276 | 0.305 | 0.099 | 0.178 | 0.166 | 0.184 | 0.185 | 0.300 | 0.369 | |

| F1 | 0.337 | 0.351 | 0.140 | 0.215 | 0.240 | 0.254 | 0.254 | 0.358 | 0.401 | |

| A2 | AUROC | 0.956 | 0.658 | 0.952 | 0.999 | 0.926 | 0.974 | 0.993 | 0.989 | 0.981 |

| AUPRC | 0.745 | 0.233 | 0.401 | 0.623 | 0.435 | 0.608 | 0.536 | 0.731 | 0.817 | |

| F1 | 0.774 | 0.457 | 0.480 | 0.691 | 0.515 | 0.588 | 0.611 | 0.709 | 0.789 | |

| A3 | AUROC | 0.724 | 0.486 | 0.989 | 0.990 | 0.917 | 0.912 | 0.907 | 0.951 | 0.993 |

| AUPRC | 0.252 | 0.005 | 0.835 | 0.753 | 0.732 | 0.746 | 0.713 | 0.865 | 0.954 | |

| F1 | 0.331 | 0.011 | 0.831 | 0.798 | 0.757 | 0.791 | 0.763 | 0.880 | 0.951 | |

| A4 | AUROC | 0.715 | 0.487 | 0.979 | 0.991 | 0.850 | 0.834 | 0.836 | 0.831 | 0.924 |

| AUPRC | 0.107 | 0.005 | 0.737 | 0.598 | 0.438 | 0.521 | 0.525 | 0.521 | 0.774 | |

| F1 | 0.165 | 0.010 | 0.745 | 0.704 | 0.478 | 0.582 | 0.573 | 0.582 | 0.820 | |

| PMU | AUROC | 0.629 | 0.587 | 0.805 | 0.714 | 0.684 | 0.628 | 0.629 | 0.630 | 0.814 |

| AUPRC | 0.084 | 0.023 | 0.303 | 0.312 | 0.185 | 0.174 | 0.089 | 0.153 | 0.310 | |

| F1 | 0.225 | 0.049 | 0.356 | 0.389 | 0.279 | 0.247 | 0.228 | 0.244 | 0.367 |

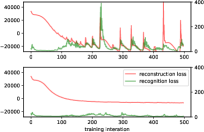

Convergence study. We analyze the contribution of our regularizer to convergence property. On the synthetic 2% anomaly proportion dataset, we train three models: i) SISVAE-p without regularization (). ii) classical smoothness regularizer of deterministic model where only the mean is regularized which is formulated as: . iii) proposed KL regularizer. We record the AUPRC score over training. As shown in Fig. 4(c), at the first 100 iterations, all the three models are trained very fast, and the detection performance increases stably. This suggests that the models are learning the major ‘normal’ patterns of the data, while the anomalies are temporally neglected. However, after 100 iterations, the performance of the un-regularized and mean-regularized models fluctuate severely between 0.45 and 0.85, and our KL-regularized model (i.e. SISVAE-p) also fluctuates but in a relatively small interval. We think the fluctuation is caused by contradiction between generative (decoder) model and recognition (encoder) model: the generative model is trying hard to reconstruct every data point perfectly, while the inference model aims to map the observed anomaly-contaminated data points into a simpler low-dimensional latent space. The decoder and encoder are playing a seesaw battle in the training procedure, thus causing a sawtooth shape AUPRC score. Under the probabilistic framework, classical mean-regularizer which is in its nature deterministic, cannot work well. Its convergence curve is akin to the un-regularzied model. In contrast, the proposed variational smoothness regularizer weakens the reconstruction objective, and guides the decoder to generate sequences that obey temporal smoothness assumption, preventing the model from overfitting ‘anomaly’ patterns. As shown in Fig. 4(d), the reconstruction loss and inference loss of regularized model converge stably, while the training of un-regularized model is unstable. Hence the KL-regularized SISVAE model reaches a higher AUPRC score.

V-C Experiments on Real-world Data

Datasets This experiment involves the following datasets, we conclude statistics of datasets in Table III.

-

•

Yahoo’s S5 Webscope Dataset: This dataset is a part of Yahoo’s Webscope program222https://webscope.sandbox.yahoo.com/. It consists of 367 time series. This dataset consists of four different classes A1/A2/A3/A4 with 65/100/100/100 time series respectively. Class A1 has real data collected from computational systems, which records real production traffic of Yahoo’s website, and the anomalies are labelled by human expert manually. Classes A2, A3 and A4 contain synthetic data, whereby anomalies are constructed and are inserted at random positions.

-

•

Open PMU power network dataset: This dataset is collected from a power distribution equipped in Alameda, CA, USA with advanced smart meters called phasor measurement units (PMU)333https://powerdata.lbl.gov [52]. There are three PMU devices, each recording three-phase voltage and current magnitude and phase angle. Anomalies caused by voltage disturbance are labeled by human experts. We collect measurement data and anomaly labels during the whole day of June 3 with 20 seconds resolution. We use 36-dimensional time series of length 1080.

Preprocessing In real application, different time series may have different scales, e.g. temperature and air pressure sensors have different scales. So we follow the common practice to normalize each sequence to standard normal distribution.

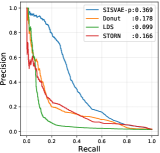

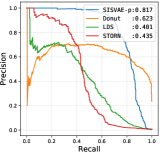

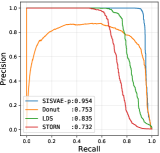

Threshold based anomaly detection. First, we conduct threshold based anomaly detection experiment. For each model, we collect anomaly score matrix for each model, then we compute AUROC, AUPRC and F1-score for each possible threshold . We select the highest F1-score the model could achieve as the final F1-score. We compare all the peer methods in this experiment, whose results are shown in Table IV. SISVAE-p outperforms other models in most datasets and metrics. For SISVAE-p and SISVAE-e, we find that our proposed SMC based reconstruction probability for computing anomaly score notably improves the performance. Among all the five datasets, we find SISVAE-p and SISVAE-e perform similar on A2 dataset. We further find that the A2 dataset is in general stationary regarding with variance, which means that modeling the time-varying variance is important for anomaly detection. For traditional statistical models, we find the simple method HA shows decent performance in some datasets, however, the potential of HA is very limited. We are surprised that ARMA failed on A3, A4 and PMU dataset. Our further study uncovers that these three datasets are highly non-stationary with both mean and variance, which violate the basic stationary assumption of ARMA. In contrast, we find LDS performs competitively on A3, A4 and PMU comparing to the best model, however, it fails on dataset A1. This suggests that linear transition and linear emission probability of LDS may not be able to capture complex non-linear relationship. For VAE-s, the proposed smoothness prior regularizer works even without a temporal structure model. In our analysis, the regularizer constructs inductive bias of the true signal, leading to robustness to unknown anomaly.

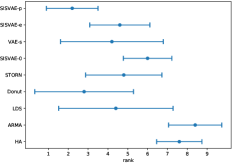

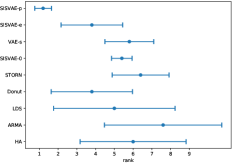

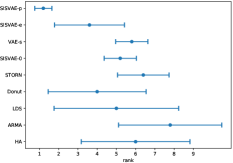

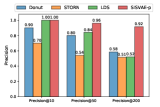

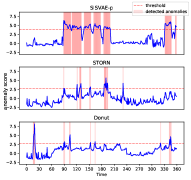

As discussed, we find models’ anomaly detection performance varies across datasets, such as LDS and ARMA. To make the overall performance comparison more intuitionistic, we compute the rank of models for each dataset and performance metric, and compute the mean and standard deviation of ranks for each models. The plots of rankings are shown in Fig. 5 showing our model outperforms other models notably.

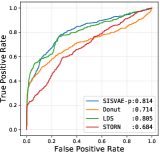

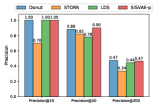

Ranking based anomaly detection. Due to limited budget, sometimes we can only deal with a limited number of detected anomalies hence the precision need be high. In this context, precision@K is a useful metric, which is widely used in information retrieval [53]. For each model, we select data points that are assigned with top highest anomaly score, and precision@K is computed by , where is the number of true anomalies in predictions. We examine models with , and the results are shown in Fig. 8. We can see that, when is 10, STORN, LDS and SISVAE-p perform well. However, Donut fails on A2, A3 and A4 dataset. The reason is that Donut places very high anomaly score on some normal data points. Overall, SISVAE-p’s precision keeps stable as increases. This means SISVAE-p makes more precise detection when the number of detection trials is limited.

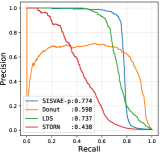

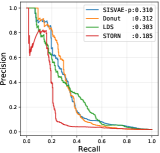

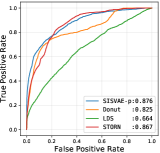

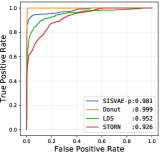

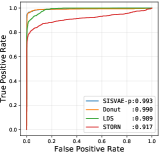

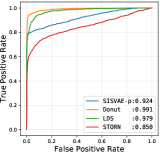

Model characteristics. The detection threshold is often set according to specific needs. In order to investigate the detailed detection characteristic, we plot the precision-recall curve (PRC), and receiver operating characteristic curve (ROC), as shown in Fig. 6 and Fig. 7. Comparing to LDS and STORN, SISVAE-p outperforms at most thresholds. For precision and recall curve in Yahoo A2, A3 and A4 dataset, note that Donuts performs strangely, where the precision and recall curves do not monotonically decrease as expected, but increase first and then decrease. We find this is because of Donut places very large anomaly scores to some normal points, while the other three models do not have this problem. This means modeling latent temporal dependencies is important for time series anomaly detection tasks.

The trade-off between recall and precision. Despite the fact that SISVAE-p is better than baselines in most cases, however, the preference of detection metric also affects the choice of model. It is important to know when to use which model. The cost of validating a detected anomaly can be expensive which calls for model’s high precision. For large-scale power grid system, it is required the precision need be strictly above 0.95. In this case, SISVAE-p outperforms other methods in recall. In contrast, for fraud detection, recall can be more important. If we set a hard bar for recall to 0.95, we find Donut is also competitive, it outperforms SISVAE-p in A2 and A4 dataset w.r.t. precision.

V-D Case study

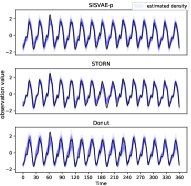

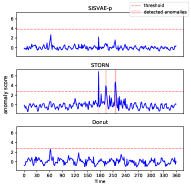

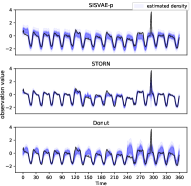

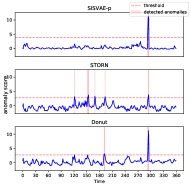

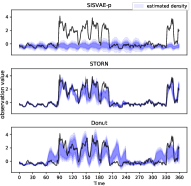

We compare the three deep generative models, SISVAE-p, STORN, and Donut with real-world examples from Yahoo-A1 dataset. For each model, we choose to set threshold when F1-score is maximized. The dataset consists of 65 sequences each with length 1420. In what follows we show some representative time series fragments for discussion.

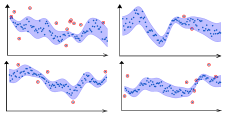

False alarms on normal time series. Figure 9(a) shows a normal sequence without any anomaly. Since the training data is contaminated with anomalies, the model can be influenced by anomalies and fails to capture normal patterns such that false alarms can be be reported. Figure 9(b) illustrates the density estimation of the three models, and Fig. 9(c) shows the anomaly score generated by three models. Note STORN reports three false alarms, while SISVAE-p and Donut do not. Moreover, we find the anomaly score generated by SISVAE-p is stably low, while Donut is more fluctuating given noisy data. The results demonstrate that SISVAE-p is robust to contaminated data for unsupervised training.

Detection of point-level anomalies. Figure 10(a) includes a sequence from Yahoo-A1 dataset with anomaly at around time point 300. This type of anomaly is often called additive outlier [54] or extreme value [55]. As shown in Fig. 10(b), density estimation of STORN and Donut are corrupted by the point anomaly, such that they place much probability density to the anomaly point. In contrast, SISVAE-p successfully recovers the true underlying density. Figure 10(c) shows the anomaly score generated by the three models, and we can see that all the models identify the anomaly point. However, STORN and Donut report some false alarms. Moreover, the anomaly score of SISVAE-p is more smooth than those of other models, suggesting that SISVAE-p is more robust to the moderate fluctuation of normal data. Overall, SISVAE is capable of detecting point-level anomalies robustly.

Detection of sub-sequence level anomalies. In some cases, the anomalies is in the presence of sub-sequences, such as anomaly cardiac cycle in ECG data [56]. Figure 11(a) shows a sequence with two anomaly sub-sequences, whereby the red shaded region covers the true anomaly. Figure 11(b) shows the density estimation, whereby Donut and STORN are notably influenced by the anomaly sub-sequence. In contrast, SISVAE-p estimates a smooth probability density over time, which is more likely to be the true density. Figure 11(c) shows SISVAE-p successfully detects most of the anomaly sub-sequences, while STORN and Donut suffer from false negative and false positives. The results demonstrate the effectiveness of the proposed smoothness prior regularizer, helping recover the true density and improve detection performance.

V-E Further Comparison with Donut

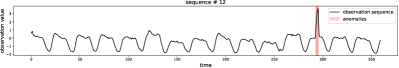

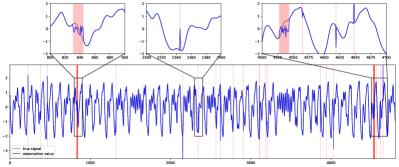

Simulation study on uni-variate time series. As discussed in Section V-A, Donut is designated for uni-variate time series. In order to investigate the detection performance in one-dimensional case, we conduct simulation study on data generated from Mackey-Glass equation [57], which serves a benchmark for nonlinear models since the true underlying function is nonlinear [58]. The time series is generated as:

| (26) |

with . We run the Mackey-Glass equation 5000 iterations, then we collect an uni-variate time series with length 5000. Two types of anomalies are added:

-

•

Point level anomaly: we insert point anomalies at time step randomly, each point anomaly is a combination of: i) Poisson noise with ; ii) Gaussian noise with zero mean and unit variance; iii) 0.2 fixed bias.

-

•

Sub-sequence level anomaly: two sub-sequences anomalies are inserted, starting at random time step, with random length sampled from even distribution of range . For each anomaly sub-sequence, true signal is replaced by a sequence sampled from a Gaussian process with RBF kernel of unit variance, and 0.3 length-scale.

The generated Mackey-Glass time series is shown in Fig. 12. To illustrate the generated anomalies, we zoom in three fragments out of the whole time series.

In this experiment, we find Donut shows better performance when z_dim is lower. We enumerate Donut’s performance on , and we find Donut performs best when . For SISVAE-p, we find the performance is relatively stable when we change z_dim. Hence we use the same setup as discussed in Section V-A. The ROC and PRC are shown in Fig. 13. In conclusion, benefiting from the sequential network structure of SISVAE, and the proposed smoothness prior regularizer, SISVAE-p exhibits better anomaly detection performance for uni-variate time series.

VI Conclusion

We have presented a deep generative model based method i.e. SISVAE (and the variants) for robust estimation and anomaly detection for multi-dimensional correlated time series. To capture ‘normal’ patterns from anomaly-contaminated time series, we propose a novel variational smoothness regularizer, which provides robustness by placing penalty on non-smooth output of the generative model. Furthermore, we discuss two decision criteria for anomaly detection: reconstruction probability and reconstruction error. Systemic experiments are conducted on both synthetic and real-world datasets, which demonstrate the proposed method outperforms state-of-the-art unsupervised anomaly detection models for multi-dimensional time series. For future work, we are interested in exploring heavy-tailed distribution such as Student- distribution as the output of the generative model.

Acknowledgments

This research was partially supported by China Major State Research Development Program (2018YFC0830400, 2018AAA0100704) and NSFC (61972250, U19B2035).

References

- [1] X. Li, Z. Li, J. Han, and J.-G. Lee, “Temporal outlier detection in vehicle traffic data,” in IEEE International Conference on Data Engineering. IEEE, 2009, pp. 1319–1322.

- [2] M. Hauskrecht, I. Batal, M. Valko, S. Visweswaran, G. F. Cooper, and G. Clermont, “Outlier detection for patient monitoring and alerting,” Journal of biomedical informatics, vol. 46, no. 1, pp. 47–55, 2013.

- [3] H. Xu, W. Chen, N. Zhao, Z. Li, J. Bu, Z. Li, Y. Liu, Y. Zhao, D. Pei, Y. Feng et al., “Unsupervised anomaly detection via variational auto-encoder for seasonal kpis in web applications,” in Proceedings of the 2018 World Wide Web Conference on World Wide Web. International World Wide Web Conferences Steering Committee, 2018, pp. 187–196.

- [4] V. Jyothsna, V. R. Prasad, and K. M. Prasad, “A review of anomaly based intrusion detection systems,” International Journal of Computer Applications, vol. 28, no. 7, pp. 26–35, 2011.

- [5] D. J. Hill and B. S. Minsker, “Anomaly detection in streaming environmental sensor data: A data-driven modeling approach,” Environmental Modelling & Software, vol. 25, no. 9, pp. 1014–1022, 2010.

- [6] J. Yan, C. Tian, J. Huang, and F. Albertao, “Incremental dictionary learning for fault detection with applications to oil pipeline leakage detection,” Electronics Letters, vol. 47, no. 21, pp. 1198–1199, 2011.

- [7] J.-Y. Kim, S.-J. Bu, and S.-B. Cho, “Zero-day malware detection using transferred generative adversarial networks based on deep autoencoders,” Information Sciences, vol. 460, pp. 83–102, 2018.

- [8] V. Chandola, A. Banerjee, and V. Kumar, “Anomaly detection: A survey,” ACM computing surveys (CSUR), vol. 41, no. 3, p. 15, 2009.

- [9] C. C. Aggarwal, “Outlier analysis,” in Data mining. Springer, 2015, pp. 237–263.

- [10] M. Gupta, J. Gao, C. C. Aggarwal, and J. Han, “Outlier detection for temporal data: A survey,” IEEE Transactions on Knowledge and Data Engineering, vol. 26, no. 9, pp. 2250–2267, 2014.

- [11] V. Chandola, A. Banerjee, and V. Kumar, “Anomaly detection for discrete sequences: A survey,” IEEE Transactions on Knowledge and Data Engineering, vol. 24, no. 5, pp. 823–839, 2012.

- [12] R. S. Tsay, D. Peña, and A. E. Pankratz, “Outliers in multivariate time series,” Biometrika, vol. 87, no. 4, pp. 789–804, 2000.

- [13] S. Basu and M. Meckesheimer, “Automatic outlier detection for time series: an application to sensor data,” Knowledge and Information Systems, vol. 11, no. 2, pp. 137–154, 2007.

- [14] S. M. Erfani, S. Rajasegarar, S. Karunasekera, and C. Leckie, “High-dimensional and large-scale anomaly detection using a linear one-class svm with deep learning,” Pattern Recognition, vol. 58, pp. 121–134, 2016.

- [15] R. Laxhammar, G. Falkman, and E. Sviestins, “Anomaly detection in sea traffic-a comparison of the gaussian mixture model and the kernel density estimator,” in Information Fusion, 2009. FUSION’09. 12th International Conference on. IEEE, 2009, pp. 756–763.

- [16] J. Chung, K. Kastner, L. Dinh, K. Goel, A. C. Courville, and Y. Bengio, “A recurrent latent variable model for sequential data,” in Advances in neural information processing systems, 2015, pp. 2980–2988.

- [17] F. Locatello, S. Bauer, M. Lucic, G. Raetsch, S. Gelly, B. Schölkopf, and O. Bachem, “Challenging common assumptions in the unsupervised learning of disentangled representations,” in International Conference on Machine Learning, 2019, pp. 4114–4124.

- [18] S. Zhao, H. Ren, A. Yuan, J. Song, N. Goodman, and S. Ermon, “Bias and generalization in deep generative models: An empirical study,” in Advances in Neural Information Processing Systems, 2018, pp. 10 792–10 801.

- [19] C. Shang, X. Huang, J. A. Suykens, and D. Huang, “Enhancing dynamic soft sensors based on dpls: A temporal smoothness regularization approach,” Journal of Process Control, vol. 28, pp. 17–26, 2015.

- [20] Y. Cai, H. Tong, W. Fan, and P. Ji, “Fast mining of a network of coevolving time series,” in Proceedings of the 2015 SIAM International Conference on Data Mining. SIAM, 2015, pp. 298–306.

- [21] W. Enders, Applied econometric time series. John Wiley & Sons, 2008.

- [22] Y. Zhou, H. Zou, R. Arghandeh, W. Gu, and C. J. Spanos, “Non-parametric outliers detection in multiple time series a case study: Power grid data analysis.” in AAAI, 2018.

- [23] Y. Cai, H. Tong, W. Fan, P. Ji, and Q. He, “Facets: Fast comprehensive mining of coevolving high-order time series,” in Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2015, pp. 79–88.

- [24] N. Görnitz, M. Braun, and M. Kloft, “Hidden markov anomaly detection,” in International Conference on Machine Learning, 2015, pp. 1833–1842.

- [25] L. Li, J. McCann, N. S. Pollard, and C. Faloutsos, “Dynammo: Mining and summarization of coevolving sequences with missing values,” in Proceedings of the 15th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, 2009, pp. 507–516.

- [26] L. Xiong, X. Chen, and J. Schneider, “Direct robust matrix factorizatoin for anomaly detection,” in Data Mining (ICDM), 2011 IEEE 11th International Conference on. IEEE, 2011, pp. 844–853.

- [27] D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” arXiv preprint arXiv:1312.6114, 2013.

- [28] J. An and S. Cho, “Variational autoencoder based anomaly detection using reconstruction probability,” Special Lecture on IE, vol. 2, pp. 1–18, 2015.

- [29] J. L. Elman, “Finding structure in time,” Cognitive Science, vol. 14, pp. 179–211, 1990.

- [30] M. Sölch, J. Bayer, M. Ludersdorfer, and P. van der Smagt, “Variational inference for on-line anomaly detection in high-dimensional time series,” arXiv preprint arXiv:1602.07109, 2016.

- [31] G. Kitagawa and W. Gersch, Smoothness priors analysis of time series. Springer Science & Business Media, 2012, vol. 116.

- [32] D. P. Kingma and M. Welling, “Stochastic gradient vb and the variational auto-encoder,” in Second International Conference on Learning Representations, ICLR, 2014.

- [33] O. Fabius and J. R. van Amersfoort, “Variational recurrent auto-encoders,” arXiv preprint arXiv:1412.6581, 2014.

- [34] J. Bayer and C. Osendorfer, “Learning stochastic recurrent networks,” arXiv preprint arXiv:1411.7610, 2014.

- [35] M. Fraccaro, S. K. Sønderby, U. Paquet, and O. Winther, “Sequential neural models with stochastic layers,” in Advances in neural information processing systems, 2016, pp. 2199–2207.

- [36] K. Cho, B. Van Merriënboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, “Learning phrase representations using rnn encoder-decoder for statistical machine translation,” arXiv preprint arXiv:1406.1078, 2014.

- [37] I. Higgins, L. Matthey, A. Pal, C. Burgess, X. Glorot, M. Botvinick, S. Mohamed, and A. Lerchner, “beta-vae: Learning basic visual concepts with a constrained variational framework,” in International Conference on Learning Representations, 2017.

- [38] P. J. Werbos, “Backpropagation through time: what it does and how to do it,” Proceedings of the IEEE, vol. 78, no. 10, pp. 1550–1560, 1990.

- [39] R. Pascanu, T. Mikolov, and Y. Bengio, “On the difficulty of training recurrent neural networks,” in International Conference on Machine Learning, 2013, pp. 1310–1318.

- [40] C. Zhou and R. C. Paffenroth, “Anomaly detection with robust deep autoencoders,” in Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2017, pp. 665–674.

- [41] A. Doucet, N. De Freitas, and N. Gordon, “An introduction to sequential monte carlo methods,” in Sequential Monte Carlo methods in practice. Springer, 2001, pp. 3–14.

- [42] A. Doucet, S. Godsill, and C. Andrieu, “On sequential monte carlo sampling methods for bayesian filtering,” Statistics and computing, vol. 10, no. 3, pp. 197–208, 2000.

- [43] J. S. Liu and R. Chen, “Sequential monte carlo methods for dynamic systems,” Journal of the American statistical association, vol. 93, no. 443, pp. 1032–1044, 1998.

- [44] J. Davis and M. Goadrich, “The relationship between precision-recall and roc curves,” in Proceedings of the 23rd international conference on Machine learning. ACM, 2006, pp. 233–240.

- [45] T. Saito and M. Rehmsmeier, “The precision-recall plot is more informative than the roc plot when evaluating binary classifiers on imbalanced datasets,” PloS one, vol. 10, no. 3, 2015.

- [46] P. Galeano, D. Peña, and R. S. Tsay, “Outlier detection in multivariate time series by projection pursuit,” Journal of the American Statistical Association, vol. 101, no. 474, pp. 654–669, 2006.

- [47] J. D. Hamilton, Time series analysis. Princeton university press Princeton, NJ, 1994, vol. 2.

- [48] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems, 2019, pp. 8024–8035.

- [49] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [50] M. A. Alvarez, L. Rosasco, N. D. Lawrence et al., “Kernels for vector-valued functions: A review,” Foundations and Trends® in Machine Learning, vol. 4, no. 3, pp. 195–266, 2012.

- [51] GPy, “GPy: A gaussian process framework in python,” http://github.com/SheffieldML/GPy, since 2012.

- [52] E. Stewart, A. Liao, and C. Roberts, “Open pmu: A real world reference distribution micro-phasor measurement unit data set for research and application development,” 2016.

- [53] R. Baeza-Yates, B. d. A. N. Ribeiro et al., Modern information retrieval. New York: ACM Press; Harlow, England: Addison-Wesley,, 2011.

- [54] P. Burridge and A. Robert Taylor, “Additive outlier detection via extreme-value theory,” Journal of Time Series Analysis, vol. 27, no. 5, pp. 685–701, 2006.

- [55] A. Siffer, P.-A. Fouque, A. Termier, and C. Largouet, “Anomaly detection in streams with extreme value theory,” in Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2017, pp. 1067–1075.

- [56] M. C. Chuah and F. Fu, “Ecg anomaly detection via time series analysis,” in International Symposium on Parallel and Distributed Processing and Applications. Springer, 2007, pp. 123–135.

- [57] M. C. Mackey and L. Glass, “Oscillation and chaos in physiological control systems,” Science, vol. 197, no. 4300, pp. 287–289, 1977.

- [58] H. Kantz and T. Schreiber, Nonlinear time series analysis. Cambridge university press, 2004, vol. 7.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c171d920-4f03-4a90-ac37-a35a7f823865/lly.jpg) |

Longyuan Li received the B.E. degree in Electronic Engineering from Huazhong University of Science and Technology in 2013. He is currently working toward the Ph.D. degree in the Department of Electronic Engineering, Shanghai Jiao Tong University, Shanghai, China. His research interests include machine learning and data mining. He focuses on probabilistic models and Bayesian non-parametric models, for sequential data such as multi-dimensional time series. He is also interested in deep probabilistic graphical models and uncertainty estimation. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c171d920-4f03-4a90-ac37-a35a7f823865/x41.jpg) |

Junchi Yan (M’10) is currently an Associate Professor with Department of Computer Science and Engineering, Shanghai Jiao Tong University, Shanghai, China. Before that, he was a Research Staff Member and Principal Scientist with IBM Research – China, where he started his career since April 2011. He obtained the Ph.D. degree in Electrical Engineering from Shanghai Jiao Tong University. His research interests are machine learning and computer vision. He serves as Area Chair for ICPR 2020, CVPR 2021, Senior PC for CIKM 2019, Associate Editor for IEEE ACCESS. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c171d920-4f03-4a90-ac37-a35a7f823865/haiyangwang.png) |

Haiyang Wang obtained B.E. degree and Ph.D. degree both in Electrical Engineering from Shanghai Jiao Tong University, Shanghai, China, in year 2013 and 2019, respectively. His research area include machine learning and data mining, especially massive spatial-temporal data mining and intelligent transportation. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c171d920-4f03-4a90-ac37-a35a7f823865/yaohui.png) |

Yaohui Jin was a Technical Staff Member with Bell Labs Research China. He joined Shanghai Jiao Tong University in 2002, where he is a Professor with the State Key Laboratory of Advanced Optical Communication Systems and Networks and the Deputy Director of Network and Information Center. His research interests include civic engagement and open innovation, cloud computing network architecture, and streaming data analysis. He is the Founder of OMNILab, which is an open innovation lab focusing on data analysis. He has published over 100 technical papers in leading conferences and journals and is the owner of over 10 patents. In 2014, OMNILab won the champion of CCF national big data challenge among nearly 1000 teams, and was the champion of the Shanghai open data innovation and creation competition. He has served over 10 technical committees. He enthuses public service and science popularization, actively promotes crowd engaged innovation, and interdisciplinary collaboration. |