Approximate Conditional Coverage & Calibration via Neural Model Approximations

Abstract

A typical desideratum for quantifying the uncertainty from a classification model as a prediction set is class-conditional singleton set calibration. That is, such sets should map to the output of well-calibrated selective classifiers, matching the observed frequencies of similar instances. Recent works proposing adaptive and localized conformal p-values for deep networks do not guarantee this behavior, nor do they achieve it empirically. Instead, we use the strong signals for prediction reliability from KNN-based approximations of Transformer networks to construct data-driven partitions for Mondrian Conformal Predictors, which are treated as weak selective classifiers that are then calibrated via a new Inductive Venn Predictor, the Venn-ADMIT Predictor. The resulting selective classifiers are well-calibrated, in a conservative but practically useful sense for a given threshold. They are inherently robust to changes in the proportions of the data partitions, and straightforward conservative heuristics provide additional robustness to covariate shifts. We compare and contrast with the quantities produced by recent Conformal Predictors on several representative and challenging natural language processing classification tasks, including class-imbalanced and distribution-shifted settings.

1 Introduction

Uncertainty quantification is challenging because the problem of the reference class (see, e.g., Vovk et al., 2005, p. 159) necessitates task-specific care in interpreting even well-calibrated probabilities that agree with the observed frequencies. It is made even more complex in practice with deep neural networks, for which the otherwise strong blackbox point predictions are typically not well-calibrated and can unexpectedly under-perform over distribution shifts. And it becomes even more involved for classification, given that the promising distribution-free approach of split-conformal inference (Vovk et al., 2005; Papadopoulos et al., 2002), an assumption-light frequentist approach suitable when sample sizes are sufficiently large, produces a counterintuitive p-value quantity in the case of classification (cf. regression).

Setting.

In a typical natural language processing (NLP) binary or multi-class classification task, we have access to a computationally expensive blackbox neural model, $\mathcal{M}$; a training dataset, $\mathcal{D}_{\text{tr}}$, of instances paired with their corresponding ground-truth discrete labels, $y \in \mathcal{Y}$; and a held-out labeled calibration dataset, $\mathcal{D}_{\text{ca}}$, of $N$ instances. We are then given a new test instance, $x_{N+1}$, from an unlabeled test set, $\mathcal{D}_{\text{te}}$. One approach to convey uncertainty in the predictions is to construct a prediction set, $\mathcal{C}(x_{N+1}) \subseteq \mathcal{Y}$, produced by some set-valued function $\mathcal{C}$, that contains the true unseen label $y_{N+1}$ with a specified level $1-\alpha$ on average. We consider two distinct interpretations: as coverage, and as a conservatively coarsened calibrated probability (after conversion to selective classification), both from a frequentist perspective.

Desiderata.

For such prediction sets to be of interest in typical classification settings with deep networks, we seek class-conditional singleton set calibration (ccs). We are willing to accept noise in the other size stratifications, but the singleton sets, $|\mathcal{C}(x)| = 1$, must contain the true label in a proportion of at least $1-\alpha$, on average per class. We further seek singleton set sharpness; that is, to maximize the number of singleton sets. We seek reasonable robustness to distribution shifts. Finally, we seek informative sets that avoid the trivial solution of full cardinality.

If we are willing to fully dispense with specificity in the non-singleton-set stratifications for tasks with $|\mathcal{Y}| > 2$, our desiderata can, in principle, be achieved with selective classifiers.¹

¹ The calibrated probabilities of the Venn-ADMIT Predictor, introduced here, can be presented as empirical probabilities, prediction sets, and/or selective classifications. We emphasize the latter in the present work, as it is useful as the minimal required quantity in typical classification settings, and it provides a clear contrast to, and means of comparison with, alternative distribution-free approaches that may be considered by practitioners.

Definition 1 (Classification with reject option).

A selective classifier, $g: \mathcal{X} \to \mathcal{Y} \cup \{\bot\}$, maps from the input to either a single class or the reject option (represented here with the falsum symbol, $\bot$).

Remark 1 (Prediction sets are selective classifications).

The output of any set-valued function $\mathcal{C}$ corresponds to that of a selective classifier: Map non-singleton sets, $|\mathcal{C}(x)| \neq 1$, to $\bot$. Map each singleton set to the single class it contains.
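As a minimal illustration of Remark 1, the conversion from a prediction set to a selective classification can be sketched in Python. The function name `set_to_selective` and the use of `None` to stand in for the reject option $\bot$ are assumptions made for this sketch.

```python
from typing import Hashable, Optional, Set

def set_to_selective(prediction_set: Set[Hashable]) -> Optional[Hashable]:
    """Map a prediction set to a selective classification (Remark 1).

    A singleton set admits its sole class; every other set (empty or with
    two or more classes) maps to the reject option, encoded here as None.
    """
    if len(prediction_set) == 1:
        (only_class,) = prediction_set  # unpack the single element
        return only_class
    return None
```

For example, `set_to_selective({2})` returns `2`, while `set_to_selective({0, 2})` and `set_to_selective(set())` both return `None`.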

To date, the typical approach for constructing prediction sets in a distribution-free setting is not via methods for calibrating probabilities, but rather via the hypothesis testing framework of Conformal Predictors, which carry a PAC-style $(\alpha, \delta)$-valid coverage guarantee. In the inductive (or “split”) conformal formulation (Vovk, 2012; Papadopoulos et al., 2002, inter alia), the p-value corresponds to confidence that a new point is as or more conforming than a held-out set with known labels. More specifically, we require a measurable function $A$, which measures the conformity between an instance $(x, y)$ and other instances. For example, given the softmax output of a neural network for $x_i$, $\hat{\pi}(x_i) \in \mathbb{R}^{|\mathcal{Y}|}$, with $\hat{\pi}_{y_i}(x_i)$ as the output of the true class, $A(x_i, y_i) = \hat{\pi}_{y_i}(x_i)$ is a typical choice. We construct a p-value, $p^{y}$, as follows: $p^{y} = \frac{|\{i = 1, \dots, N : s_i \le s^{y}_{N+1}\}| + 1}{N + 1}$, where $s_i = A(x_i, y_i)$ for each of the $N$ calibration points and $s^{y}_{N+1} = A(x_{N+1}, y)$, where we suppose the true label is $y$. We then construct the prediction set: $\mathcal{C}(x_{N+1}) = \{y \in \mathcal{Y} : p^{y} > \alpha\}$. This is accompanied by a finite-sample, distribution-free coverage guarantee, which we state informally here.²

² We omit the randomness component (for tie-breaking), given the large sample sizes considered here.

Theorem 1 (Marginal Coverage of Conformal Predictors (Vovk et al., 2005)).

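Provided the calibration and test points are drawn exchangeably from the same distribution (which need not be further specified), the following marginal guarantee holds for a given $\alpha \in (0, 1)$: $\mathbb{P}\left(y_{N+1} \in \mathcal{C}(x_{N+1})\right) \ge 1 - \alpha$.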
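The split-conformal construction above can be sketched in a few lines of Python. This is a sketch under stated assumptions, not the authors' implementation: it assumes the conformity score is the softmax output for a candidate label (larger means more conforming), so the calibration scores are the softmax outputs of each calibration point's true class, and it omits randomized tie-breaking, as in the text. The function names are hypothetical.

```python
from typing import List, Sequence

def conformal_p_value(cal_scores: Sequence[float], test_score: float) -> float:
    """p-value: share of calibration points (plus the test point itself)
    whose conformity is no greater than the test point's."""
    n_le = sum(1 for s in cal_scores if s <= test_score)
    return (n_le + 1) / (len(cal_scores) + 1)

def conformal_set(cal_scores: Sequence[float],
                  test_softmax: Sequence[float],
                  alpha: float) -> List[int]:
    """Keep every candidate label y whose p-value exceeds alpha."""
    return [y for y, score in enumerate(test_softmax)
            if conformal_p_value(cal_scores, score) > alpha]

# Hypothetical calibration conformity scores (true-class softmax outputs).
cal = [0.2, 0.4, 0.6, 0.8, 0.9, 0.95, 0.97, 0.98, 0.99]
print(conformal_set(cal, [0.7, 0.25, 0.05], alpha=0.1))
```

With these nine hypothetical calibration scores, the candidate labels with softmax outputs 0.7 and 0.25 receive p-values of 0.4 and 0.2 and are kept, while the label with 0.05 receives 0.1, which does not exceed $\alpha = 0.1$, so the printed set is `[0, 1]`.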

Split-conformal coverage, conditional on the calibration set, is Beta distributed (Vovk, 2012), from which a PAC-style $(\alpha, \delta)$-validity guarantee can be obtained, and from which we can determine a calibration sample size sufficient for the realized coverage to remain close to the nominal level. Unfortunately, this does not guarantee singleton set coverage (the hypothesis testing analogue of our ccs desideratum), a known, but under-appreciated, negative result that motivates the present work:

Corollary 1.

Conformal Predictors do not guarantee singleton set coverage. If they did, it would imply a stronger than marginal coverage guarantee.
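A small worked example shows why marginal coverage does not constrain singleton set coverage. The proportions below are hypothetical, chosen only to make the arithmetic transparent: most test points receive the full label set, which always covers, so the singleton sets can be wrong half the time while marginal coverage still meets a nominal 90% level.

```python
from typing import Tuple

def coverages(frac_full_sets: float, singleton_acc: float) -> Tuple[float, float]:
    """Marginal and singleton set coverage when a fraction of points receives
    the full label set (always covering) and the rest receive singletons."""
    frac_singletons = 1.0 - frac_full_sets
    marginal = frac_full_sets * 1.0 + frac_singletons * singleton_acc
    return marginal, singleton_acc

marginal, singleton = coverages(frac_full_sets=0.8, singleton_acc=0.5)
print(f"marginal coverage:  {marginal:.2f}")   # 0.90
print(f"singleton coverage: {singleton:.2f}")  # 0.50
```

Here $0.8 \cdot 1.0 + 0.2 \cdot 0.5 = 0.9$, so the marginal guarantee for $\alpha = 0.1$ is met even though only half of the singleton sets contain the true label.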

Existing approaches.

Empirically, Conformal Predictors are weak selective classifiers, limiting their real-world utility. We show that this problem is not resolved by re-weighting the empirical CDF near a test point (Guan, 2022), nor by applying separate per-class hypothesis tests, nor by APS conformal score functions (Romano et al., 2020), nor by adaptive regularization (RAPS; Angelopoulos et al., 2021), and that it occurs even on in-distribution data.

Solution.

In the present work, with a focus on Transformer networks (Vaswani et al., 2017), we first demonstrate that the closer notion of approximate conditional coverage obtained via the stronger validity guarantees of Mondrian Conformal Predictors is not in itself sufficient to satisfy our desiderata. Instead, we treat such Conformal Predictors as weak selective classifiers, which serve as the underlying learner to construct a taxonomy for a Venn Predictor (Vovk et al., 2003), a valid multi-probability calibrator. This is enabled by data-driven partitions determined by KNN (Devroye et al., 1996) approximations, which themselves encode strong signals for prediction reliability. The result is a principled, well-calibrated selective classifier, with a sharpness suitable even for highly imbalanced, low-accuracy settings, and with at least modest robustness to covariate shifts.

2 Mondrian Conformal Predictors and Venn Predictors

A stronger than marginal coverage guarantee can be obtained by Mondrian Conformal Predictors (Vovk et al., 2005), which guarantee coverage within partitions of the data, including partitions conditioned on the labels. Such Predictors are not sufficient for satisfying our desiderata, but they serve as a principled approach for constructing a Venn taxonomy with a desirable balance between specificity and generality (a.k.a. over-fitting vs. under-fitting), the classic problem of the reference class.

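Both Mondrian Conformal Predictors and Venn Predictors are defined by the choice of a particular taxonomy. A taxonomy is a measurable function $\kappa: \mathcal{X} \times \mathcal{Y} \to K$, where $K$ is a measurable space. An element $k \in K$ is referred to as a category, and corresponds to a classification of $(x, y)$, as via an attribute or label. The p-value of a Mondrian Conformal Predictor is then determined similarly to that of Conformal Predictors, but with conditioning on the category: $p^{y} = \frac{|\{i = 1, \dots, N : \kappa_i = \kappa^{y}_{N+1},\ s_i \le s^{y}_{N+1}\}| + 1}{|\{i = 1, \dots, N : \kappa_i = \kappa^{y}_{N+1}\}| + 1}$, where $\kappa_i = \kappa(x_i, y_i)$ and $\kappa^{y}_{N+1} = \kappa(x_{N+1}, y)$, and $s_i$ and $s^{y}_{N+1}$ are the conformity scores of the calibration points and of the test point under candidate label $y$. We will refer to the resulting coverage as approximate conditional coverage, a middle ground between marginal coverage and conditional coverage, the latter of which is not possible in the distribution-free setting with a finite sample (Lei & Wasserman, 2014):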

Theorem 2 (Approximate Conditional Coverage of Mondrian Conformal Predictors (Vovk et al., 2005; Vovk, 2012)).

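Provided the calibration and test points are exchangeable within their categories defined by taxonomy $\kappa$ (Mondrian-exchangeability), the following coverage guarantee holds for a given $\alpha \in (0, 1)$ and each category $k \in K$: $\mathbb{P}\left(y_{N+1} \in \mathcal{C}(x_{N+1}) \mid \kappa(x_{N+1}, y_{N+1}) = k\right) \ge 1 - \alpha$.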
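A minimal sketch of the Mondrian p-value, assuming, as above, conformity scores where larger means more conforming and no randomized tie-breaking. The only change from the marginal p-value is that the comparison is restricted to calibration points sharing the test point's category; for label-conditional conformal prediction, the category of a candidate label $y$ is simply $y$. The function name is hypothetical.

```python
from typing import Hashable, Sequence

def mondrian_p_value(cal_scores: Sequence[float],
                     cal_categories: Sequence[Hashable],
                     test_score: float,
                     test_category: Hashable) -> float:
    """p-value computed only against calibration points in the same category."""
    in_category = [s for s, k in zip(cal_scores, cal_categories)
                   if k == test_category]
    n_le = sum(1 for s in in_category if s <= test_score)
    return (n_le + 1) / (len(in_category) + 1)

# Label-conditional taxonomy: each calibration point's category is its label.
scores, labels = [0.9, 0.8, 0.3, 0.6], [0, 0, 1, 1]
print(mondrian_p_value(scores, labels, test_score=0.5, test_category=1))
```

Here only the two calibration points labeled 1 are compared against, giving a p-value of $(1 + 1)/(2 + 1) = 2/3$.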

Venn Predictors dispense with p-values (and coverage) and instead seek validity via calibration. They are multi-probability calibrators, in that they produce not one probability, but multiple probabilities for a single class, a compromise that yields an otherwise quite strong theoretical guarantee. Venn Predictors have a simple, intuitive appeal: They amount to calculating the empirical probability among points similar to the test instance. The device that enables the theoretical guarantee is that this calculation includes the test point itself, assigned each possible label in turn; hence, multiple empirical probability distributions are generated. Specifically, for $x_{N+1}$ we first determine its category, typically some classification from the underlying model.³ We will use $\mathcal{S}^{y}$ to indicate all calibration instances in the category, to which we have added $(x_{N+1}, y)$, assuming the true label is $y$. We then calculate the empirical probability:

$$\hat{p}^{y}(c) = \frac{|\{(x_i, y_i) \in \mathcal{S}^{y} : y_i = c\}|}{|\mathcal{S}^{y}|}, \quad c \in \mathcal{Y}, \qquad (1)$$

where $\mathcal{S}^{y}$ is the category of $x_{N+1}$ augmented with $(x_{N+1}, y)$.

³ Existing taxonomies for Venn Predictors include using the predictions from a classifier (Lambrou et al., 2015), variations on nearest neighbors (Johansson et al., 2018), and isotonic regression, the Venn-ABERS Predictor (Vovk & Petej, 2014; Vovk et al., 2015).

We repeat this assuming each label is the true label, in turn, equivariantly with respect to the taxonomy (that is, independently of the ordering of points in the category). Remarkably, one of the probabilities from a multi-probability Venn Predictor is guaranteed to be perfectly calibrated. For our purposes, it will be sufficient to show this for the binary case. For a random variable $P$, such as the probabilistic output of a classifier, and a binary random variable $Y$, we will follow previous work (Vovk & Petej, 2014) in saying $P$ is perfectly calibrated if $\mathbb{E}(Y \mid P) = P$ a.s. The validity of the Venn Predictor is then:

Theorem 3 (Venn Predictor Calibration Validity; Theorem 1 of Vovk & Petej (2014)).

Provided the calibration and test points are IID, among the two probabilities output by a Venn Predictor for $x_{N+1}$, $p_0$ and $p_1$, one is perfectly calibrated.
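The multi-probability construction of Eq. 1 can be sketched as follows, assuming the calibration labels of the test point's category have already been collected. For each hypothesized label $y$, the test point is added to its category with label $y$ and the empirical label distribution is recomputed. The function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence

def venn_probabilities(category_labels: Sequence[Hashable],
                       label_space: Sequence[Hashable]
                       ) -> Dict[Hashable, Dict[Hashable, float]]:
    """For each hypothesized test label y, the empirical label distribution of
    the category after adding the test point with label y (Eq. 1)."""
    base_counts = Counter(category_labels)
    size = len(category_labels) + 1  # category plus the test point
    distributions = {}
    for y in label_space:
        counts = base_counts.copy()
        counts[y] += 1  # the test point, assumed to have label y
        distributions[y] = {c: counts[c] / size for c in label_space}
    return distributions

probs = venn_probabilities([1, 1, 1, 0], label_space=[0, 1])
p0, p1 = probs[0][1], probs[1][1]  # probability of class 1 under y = 0 and y = 1
print(p0, p1)  # 0.6 0.8
```

In this binary example, the two probabilities for class 1, 0.6 and 0.8, play the roles of $p_0$ and $p_1$ in Theorem 3; the theorem guarantees that one of them is perfectly calibrated, not which one.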

3 Tasks: Classification with Transformers for NLP

The taxonomies will be chosen based on the need to partition the high-dimensional input of NLP tasks without having explicit attributes known in advance. We first introduce general notation for sequence labeling and document classification tasks. Each instance consists of a document, $x = x_1, \dots, x_T$, of $T$ tokens: here, either words or amino acids. In the case of supervised sequence labeling, we seek to predict $\hat{y}_1, \dots, \hat{y}_T$, the token-level labels for each token in the document, and we have the ground-truth token labels, $y_1, \dots, y_T$, for training. For document classification, we seek to predict the document-level label $\hat{y}$, and we have $y$ at training.

| Label | Task | Base network | Acc. | Characteristics | ||||

|---|---|---|---|---|---|---|---|---|

| 3 | 560k | {30k,7k} | Mid | In-domain (2 test sets) | ||||

| 2 | 35k | 93k | Low | Domain-shifted+imbalanced | ||||

| 2 | 16k | 488 | High | In-domain (acc. ) | ||||

| 2 | 16k | 5k | Mid-Low | Domain-shifted/OOD |

For each task, our base model is a Transformer network. After training and/or fine-tuning, we fine-tune a kernel-width 1 CNN over the output representations of the Transformer, producing predictions and representative dense vectors at a resolution (e.g., word-level or document-level) suitable for each task. Following past work, we will refer to these representations as “exemplar vectors”, primarily to contrast with “prototype”, which is sometimes taken to refer to class centroids, whereas the “exemplars” are unique to each instance. We will subsequently use $f_t$ for the prediction logits produced by the CNN corresponding to the token at index $t$; $\hat{\pi}^{c}_t$ as the corresponding softmax-normalized output for class $c$; and $r_t$ as the associated exemplar vector. For sequence labeling there are $T$ such logits and vectors. For document classification, $r$ corresponds to a single representation of the document, with $f$ formed by a combination of local and global predictions (as described further in the Appendix). In the present work, we will primarily only be concerned with Transformers at the level of abstraction of the exemplar vectors, $r$; we refer the reader to the original works describing Transformers (Vaswani et al., 2017) and the particular choice for the CNN (Schmaltz, 2021) for additional details.

Splits.

Our baseline comparisons use the training set and the full calibration set for training and calibration, respectively. For our methods, we will assume the existence of an additional disjoint split of the data for setting the parameters of the KNNs. We will also require two calibration sets: one that serves as the calibration set for the Mondrian Conformal Predictor, and one that serves as the calibration set for the Venn Predictor.

4 Methods

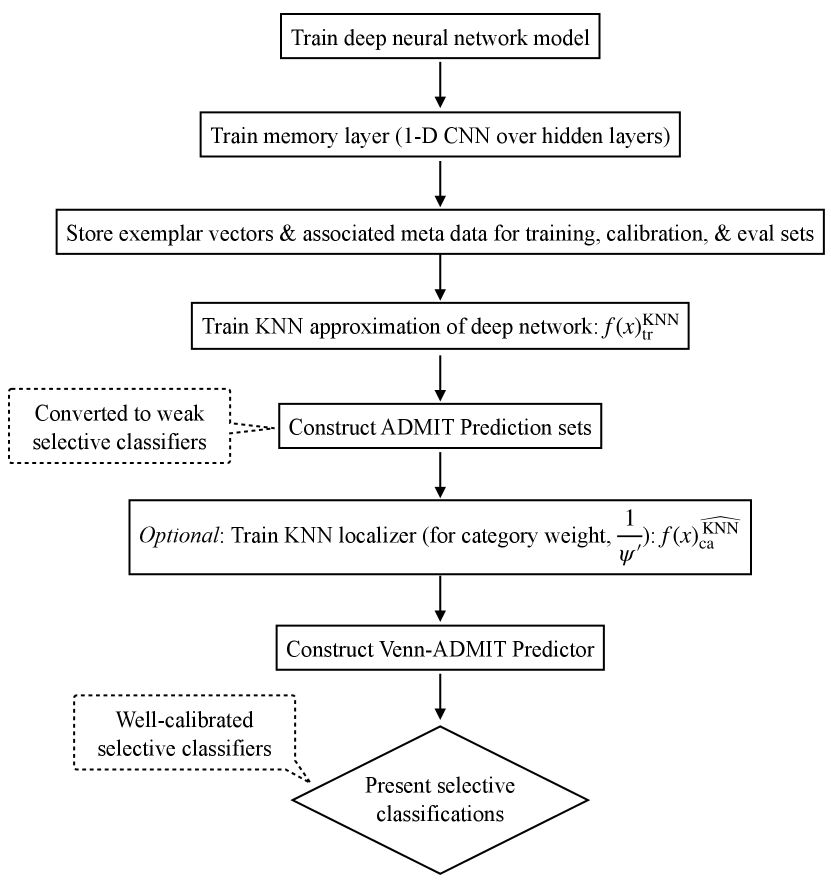

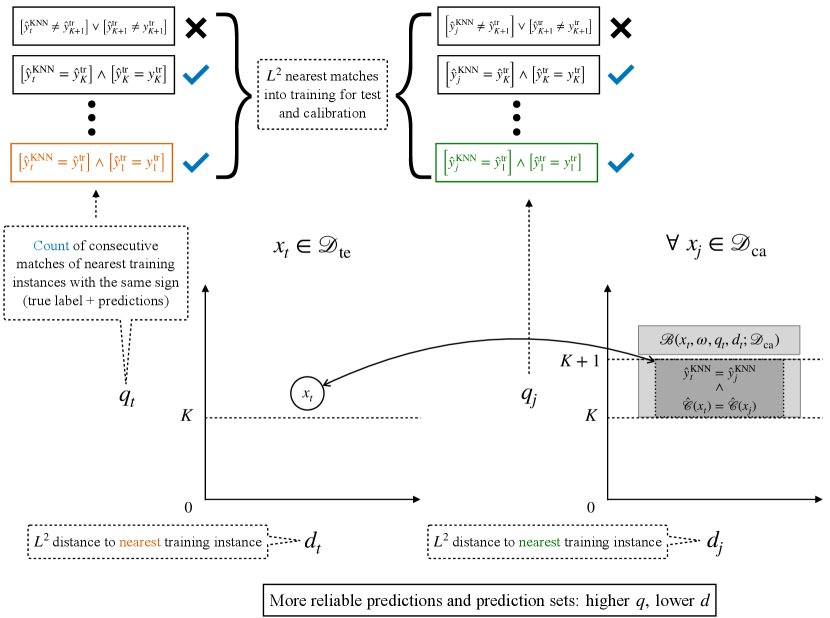

We first define the taxonomy for our Mondrian Conformal Predictor. The resulting sets will serve as baselines of comparison, but will primarily be used as weak selective classifiers for defining the taxonomy of our Venn Predictor, the Venn-ADMIT Predictor. In both cases, we make use of non-parametric approximations of Transformers, which encode strong signals for prediction reliability, including over distribution shifts, and are at least as effective as the model being approximated (Schmaltz, 2021). Predictions become less reliable at distances farther from the training set and with increased label and prediction mismatches among the nearest matches. We further introduce an additional KNN approximation that serves as a localizer, relating a test instance to the distribution of the conformal calibration set. We use this localizer to construct a conservative heuristic for category assignments. Figure 1 provides an overview of the components and a visualization of the prediction signals and Venn taxonomy.

4.1 KNN approximation of a Transformer network

In order to partition the feature space, we first approximate the Transformer as a weighted combination of predictions and labels over the training set (Section 4.1.1). This approximation, rather than the logits from the Transformer itself, then becomes the model we use in practice. We use this approximation to partition the data via a feature that separates more reliable points from less reliable points (Section 4.1.2) and via a distance-to-training band (Section 4.1.3).

4.1.1 Recasting a Transformer prediction as a weighting over the training set

We adapt the distance-weighted KNN approximation of Schmaltz (2021) for the multi-class setting. As in the original work, the KNN is trained to minimize prediction mismatches against the output of the model being approximated (not the ground-truth labels). Training is performed on a 50/50 split of the disjoint set reserved for training the KNNs, as described further in Appendix C:

| (2) |

| (3) |

Here, the ground-truth label of each training-set match (token-level for sequence labeling, document-level for document classification) for class $c$ is transformed to be in $\{-1, 1\}$. The number of nearest matches, $K$, is small in practice; a single value is used in all experiments here, and in general it can be chosen using held-out data. This approximation has a small number of learnable parameters: two for each class, and the temperature parameter, $\tau$. The output for each class is softmax normalized. This model is used to produce approximations over all calibration and test instances. The choice of this particular functional form is discussed in Schmaltz (2021) and is designed to be as simple as possible while obtaining a sufficiently close approximation to the deep network.
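The exact functional form of Eqs. 2 and 3 is not reproduced here, so the following is only a generic sketch of a distance-weighted KNN in the same spirit, not the approximation of Schmaltz (2021). Its assumptions are: neighbor weights are a softmax over negative distances with temperature `tau`; each neighbor's label and model prediction are encoded one-vs-rest in $\{-1, 1\}$ for each class; and the two per-class parameters are hypothetical weights `a[c]` and `b[c]` on the label and prediction terms. Fitting these parameters to match the model's predictions is not shown.

```python
import math
from typing import List, Sequence

def knn_class_scores(distances: Sequence[float],
                     neighbor_labels: Sequence[int],
                     neighbor_preds: Sequence[int],
                     a: Sequence[float], b: Sequence[float],
                     tau: float) -> List[float]:
    """Hypothetical distance-weighted combination of neighbor labels and
    neighbor model predictions, one score per class."""
    d_min = min(distances)  # shift for numerical stability; cancels in the softmax
    raw = [math.exp(-(d - d_min) / tau) for d in distances]
    total = sum(raw)
    weights = [r / total for r in raw]
    scores = []
    for c in range(len(a)):
        s = 0.0
        for w, y, p in zip(weights, neighbor_labels, neighbor_preds):
            y_sign = 1.0 if y == c else -1.0  # one-vs-rest label in {-1, 1}
            p_sign = 1.0 if p == c else -1.0  # one-vs-rest prediction in {-1, 1}
            s += w * (a[c] * y_sign + b[c] * p_sign)
        scores.append(s)
    return scores

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]

scores = knn_class_scores([0.1, 0.5, 2.0], [2, 2, 0], [2, 1, 0],
                          a=[1.0, 1.0, 1.0], b=[0.5, 0.5, 0.5], tau=1.0)
probs = softmax(scores)
print(max(range(len(probs)), key=probs.__getitem__))  # predicted class
```

Keeping the number of parameters this small is in line with the stated goal of a functional form that is as simple as possible while still closely tracking the deep network.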

4.1.2 Data-driven feature-space partitioning: True positive matching constraint

For each calibration and test point, we define the feature $q$ as the count of consecutive sign matches of the prediction of the KNN with both the true label and the model prediction of each of the up to $K$ nearest matches from the training set, taken in order of increasing distance:

| (4) |

We further use the distance to the nearest training-set match as a basis for subsetting the distribution into distance bands, as discussed in the next section:

| (5) |
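The two signals of this section can be sketched as follows. This sketch assumes that the up to $K$ training-set matches are already sorted by increasing distance, that a “sign match” in the multi-class case means equality of class indices, and that distances are Euclidean (`math.dist`, Python 3.8+); the function names are hypothetical.

```python
import math
from typing import Sequence

def consecutive_match_count(knn_pred: int,
                            neighbor_labels: Sequence[int],
                            neighbor_preds: Sequence[int],
                            K: int) -> int:
    """Count leading neighbors (nearest first) whose true label AND model
    prediction both agree with the KNN prediction, stopping at the first
    disagreement; the result lies in {0, ..., K}."""
    q = 0
    for label, pred in zip(neighbor_labels[:K], neighbor_preds[:K]):
        if label != knn_pred or pred != knn_pred:
            break
        q += 1
    return q

def distance_to_training(r: Sequence[float],
                         train_vectors: Sequence[Sequence[float]]) -> float:
    """Distance from an exemplar vector to its nearest training-set match."""
    return min(math.dist(r, t) for t in train_vectors)

# The third-nearest neighbor's label disagrees, so the count stops at 2.
print(consecutive_match_count(1, [1, 1, 0, 1], [1, 1, 1, 1], K=4))  # 2
print(distance_to_training([0.0, 0.0], [[3.0, 4.0], [1.0, 0.0]]))  # 1.0
```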

4.1.3 Data-driven feature-space partitioning: Distance-to-training band

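We define the partition around each distance-to-training value, constrained to a value of the consecutive-match feature of Section 4.1.2, as the $\omega$-distance-to-training band with a radius of $\omega\hat{\sigma}$, where $\omega$ is a user-specified parameter and $\hat{\sigma}$ is the estimated standard deviation of the constrained true-positive calibration set distances: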

| (6) |
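One reading of the distance-to-training band, sketched under assumptions: the band for a test point collects the calibration points that share its consecutive-match value and whose distance to training lies within $\omega\hat{\sigma}$ of the test point's distance, with $\hat{\sigma}$ taken as the sample standard deviation of the distances of correctly predicted calibration points meeting the same constraint. Eq. 6 may differ in detail, and the function names are hypothetical.

```python
import statistics
from typing import List, Sequence

def band_sigma(true_positive_distances: Sequence[float]) -> float:
    """Sample standard deviation of constrained true-positive distances
    (requires at least two values)."""
    return statistics.stdev(true_positive_distances)

def band_members(test_d: float, test_q: int,
                 cal_d: Sequence[float], cal_q: Sequence[int],
                 omega: float, sigma: float) -> List[int]:
    """Indices of calibration points in the test point's band: same q value,
    distance to training within omega * sigma of the test point's."""
    radius = omega * sigma
    return [i for i, (d, q) in enumerate(zip(cal_d, cal_q))
            if q == test_q and abs(d - test_d) <= radius]

sigma = band_sigma([1.0, 2.0, 3.0])  # 1.0
print(band_members(2.0, 3, cal_d=[1.5, 2.4, 3.5, 2.1], cal_q=[3, 3, 3, 2],
                   omega=0.5, sigma=sigma))  # [0, 1]
```

In the example, the third calibration point is too far from the test point's distance and the fourth has a different $q$, so only the first two fall in the band.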

4.2 Prediction sets with approximate conditional coverage:

We then define a taxonomy for our Mondrian Conformal Predictor by the partitions of Section 4.1.3 and the true labels. This conditioning on the labels means we apply split-conformal prediction separately for each label, i.e., “label-conditional” conformal prediction (Vovk et al., 2005; Vovk, 2012; Sadinle et al., 2018), which provides built-in robustness to label proportion shifts (cf. Podkopaev & Ramdas, 2021). We will refer to this method and the resulting sets with the label . We always include the predicted label in the set. Pseudo-code appears in Appendix E.
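A minimal sketch of the resulting label-conditional sets, assuming a taxonomy keyed by a (partition, label) pair, conformity scores where larger means more conforming, and no randomized tie-breaking. As stated above, the predicted label is always kept. The partition identifiers and the function name are hypothetical; the pseudo-code in Appendix E remains the reference.

```python
from typing import Hashable, List, Sequence

def label_conditional_set(test_scores: Sequence[float],
                          test_partition: Hashable,
                          predicted: int,
                          cal_scores: Sequence[float],
                          cal_partitions: Sequence[Hashable],
                          cal_labels: Sequence[int],
                          alpha: float) -> List[int]:
    """test_scores[y] is the test point's conformity under candidate label y."""
    prediction_set = []
    for y, s in enumerate(test_scores):
        # Category of candidate y: same data partition AND same label.
        category = [c for c, part, lab in zip(cal_scores, cal_partitions, cal_labels)
                    if part == test_partition and lab == y]
        p_value = (sum(1 for c in category if c <= s) + 1) / (len(category) + 1)
        if p_value > alpha or y == predicted:  # always include the prediction
            prediction_set.append(y)
    return prediction_set

cal_scores = [0.9, 0.8, 0.7, 0.2, 0.3, 0.4]
cal_parts = ["near"] * 6
cal_labels = [0, 0, 0, 1, 1, 1]
print(label_conditional_set([0.85, 0.1], "near", 0,
                            cal_scores, cal_parts, cal_labels, alpha=0.3))
```

Label 0 receives a p-value of $3/4$ and is kept; label 1 receives $1/4$, which does not exceed $\alpha = 0.3$, so the printed set is `[0]` (it would become `[0, 1]` if label 1 were the prediction).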

4.3 Inductive Venn-ADMIT Predictors & Selective Classifiers

Our Mondrian Conformal Predictor (Section 4.2) maps to a weak selective classifier (Remark 1). We instead seek a well-calibrated selective classifier, which we define as follows, as a straightforward coarsening of the probability, calculated only over the admitted subset:

Definition 2 (Well-calibrated selective classifiers).

We take $P$ as the random variable indicating the probability that a non-rejected prediction from a selective classifier, $g$, should be admitted. We will say a selective classifier is conservatively well-calibrated (or just “well-calibrated”) if $\mathbb{E}\left[\mathbb{1}\{Y = g(X)\} \mid P\right] \ge P$ for a given $\alpha$.

We construct such a selective classifier as follows. Construct Mondrian Conformal sets for the Venn Predictor calibration set and for the test set, in both cases using the Mondrian Conformal calibration set as the calibration set. Next, convert these sets into weak selective classifications, as in Remark 1. Calibrate the non-rejected predictions of the weak selective classifier (i.e., the predictions that were singleton sets and are now admitted predictions) using a Venn Predictor with a taxonomy defined by the data partitions and the prediction of the KNN, now using the Venn Predictor calibration set as the calibration set. The selective classifier is then the following decision rule, in terms of the two Venn probabilities associated with the predicted class:

| (7) |

We can then take $P$ as $1-\alpha$ for each point admitted by the selective classifier (as used in Def. 2); i.e., a coarsening of the probability of the points admitted by the selective classifier.
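The decision rule can be sketched for the binary target of whether the weak selective classifier's admitted prediction is correct. This sketch assumes that Eq. 7 admits a prediction exactly when the lower of its two Venn probabilities is at least $1-\alpha$ (consistent with the discussion of the lower probability under Proposition 3), and that the Venn category has already been selected from the Venn calibration set; the function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

def venn_correctness_probs(category_correct: Sequence[bool]) -> Tuple[float, float]:
    """Two Venn probabilities that the test prediction is correct: the test
    point is added to its category as incorrect (lower) or correct (upper)."""
    size = len(category_correct) + 1
    n_correct = sum(category_correct)
    return n_correct / size, (n_correct + 1) / size

def admit(predicted: int, category_correct: Sequence[bool],
          alpha: float) -> Optional[int]:
    """Return the prediction if admitted, else None (the reject option)."""
    lower, _upper = venn_correctness_probs(category_correct)
    return predicted if lower >= 1 - alpha else None

print(admit(2, [True] * 19 + [False], alpha=0.1))      # 2 (lower = 19/21)
print(admit(2, [True] * 18 + [False] * 2, alpha=0.1))  # None (lower = 18/21)
```

Basing the decision on the lower probability is the conservative choice: whichever of the two Venn probabilities is the calibrated one, it is at least as large as the lower one.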

Proposition 1 (Venn-ADMIT selective classifiers are well-calibrated).

This follows directly from Theorem 3.

4.4 Robustness

Venn-ADMIT selective classifiers are robust to covariate shifts that correspond to changes in the proportions of the partitions. We propose two simple heuristics that provide additional robustness. We first state two useful propositions that justify the heuristics.

Proposition 2 (Venn-ADMIT calibration invariance to partition censoring).

Venn-ADMIT selective classifiers remain well-calibrated in the sense of Definition 2 with censoring of 1 or more partitions.

This follows directly from the fact that both the weak selective classifier (from the Mondrian Conformal Predictor) and the well-calibrated selective classifier (Venn-ADMIT) treat each partition independently. We can thus construct a new selective classifier that maps any input in the censored partition(s) to the reject option, $\bot$.

Proposition 3 (Venn-ADMIT calibration invariance to test point up-weighting).

Venn-ADMIT selective classifiers remain well-calibrated in the sense of Definition 2 using any test point weight of at least 1 when calculating the empirical probabilities of the Venn-ADMIT Predictor.

Increasing the test point weight above 1 can only decrease the lower probability produced by the Venn-ADMIT Predictor, since the denominator in Eq. 1 can only increase while the numerator of the lower probability, which treats the test point as belonging to the other label, is unchanged. Since Def. 2 is only calculated for admitted points, this notion of conservative well-calibration is retained.
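The argument for Proposition 3 reduces to simple arithmetic. In the binary correctness setting, and assuming the test point enters the denominator of Eq. 1 with weight $w$ while the lower probability treats it as incorrect, the lower probability for a category with $k$ correct out of $n$ calibration points is $k/(n + w)$, which cannot increase as $w$ grows. The function below is a hypothetical illustration.

```python
from typing import List

def weighted_lower_prob(n_correct: int, n_category: int, w: float) -> float:
    """Lower Venn probability when the test point, assumed incorrect, has
    weight w >= 1: it adds w to the denominator but nothing to the numerator."""
    return n_correct / (n_category + w)

lows: List[float] = [weighted_lower_prob(18, 20, w) for w in (1.0, 2.0, 4.0)]
print([round(p, 3) for p in lows])  # [0.857, 0.818, 0.75]
```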

4.4.1 Censoring Less Reliable Data Partitions

The consecutive-match feature of Section 4.1.2 can be viewed as an ensemble across multiple similar instances from training: greater agreement suggests greater confidence in the prediction. We can restrict to partitions with the maximum value, $q = K$. By Prop. 2, calibration of the selective classifier is maintained.
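Censoring by Proposition 2 amounts to a post-hoc filter on the selective classifier's output. A minimal sketch, assuming each test point carries its consecutive-match value `q` and that `None` encodes the reject option; the names are hypothetical.

```python
from typing import List, Optional, Sequence

def censor_to_max_q(predictions: Sequence[Optional[int]],
                    q_values: Sequence[int], K: int) -> List[Optional[int]]:
    """Keep admitted predictions only in the q == K partitions; every other
    partition maps to the reject option (None)."""
    return [pred if q == K else None for pred, q in zip(predictions, q_values)]

print(censor_to_max_q([1, 0, None, 2], q_values=[3, 2, 3, 3], K=3))  # [1, None, None, 2]
```

Points already rejected stay rejected, and admitted points outside the $q = K$ partitions are newly rejected; nothing is ever newly admitted, which is why calibration of the admitted points is unaffected.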

4.4.2 Localized up-weighting based on category similarity

We can up-weight the test point when calculating the Venn probabilities using an additional KNN localizer, in this case with the Mondrian Conformal calibration set as the support set of the KNN and a single parameter, a temperature weight. Weights increase above 1 with greater dissimilarity between a test point and its assigned category. Additional details are in Appendix B. By Prop. 3, calibration of the selective classifier is maintained.

4.4.3 Robust Venn-ADMIT Selective Classifications

5 Experiments

We have established that the Venn-ADMIT selective classifications are conservatively well-calibrated; however, we have not said anything about the proportion of points that will be admitted. If the procedure is unnecessarily strict, we may nonetheless prefer the output from alternative approaches, such as Conformal Predictors. Additionally, the Venn-ADMIT Predictor is inherently robust to changes in the proportions of the data partitions, but whether that corresponds to real-world distribution shifts is task and data dependent. To address these concerns, we turn to empirical evaluations.

We evaluate on a wide range of representative NLP tasks, including challenging domain-shifted and class-imbalanced settings, and in settings in which the point prediction accuracies are quite high (marginally ) and in which they are relatively low. We follow past work in setting in our main experiments. We set . We summarize and label our benchmark tasks, the underlying parametric networks, and data in Table 1. A disjoint set of size 144k, the cb513 set from , was used for initial methods development. In the Table, is the original held-out validation set associated with each task. For the and approaches, a random sample of serves as the disjoint set for training the KNNs, with the remaining data split evenly for and . The baseline and comparison methods are given the full as . The Appendix provides implementation details on constructing the exemplar vectors, , for each of the tasks from the .

5.1 Comparison Models

As a distribution-free baseline of comparison we consider the size- and adaptiveness-optimized RAPS algorithm of Angelopoulos et al. (2021), and , which combine regularization and post-hoc Platt-scaling calibration (Platt, 1999; Guo et al., 2017), on the output of the . Using stratification of coverage by cardinality as a metric, , in particular, was reported to more closely approximate conditional coverage than the alternative, APS (Romano et al., 2020), with smaller sets. is a split-conformal point of reference for simply using the output of without further conditioning, nor post-hoc calibration. is a localized conformal (Guan, 2022) baseline using the KNN localizer . Across methods, the point prediction is included in the set, which ensures conservative (but not necessarily exact/upper-bounded) coverage by eliminating null sets.

We use the label to indicate the Mondrian Conformal sets. We use the label to indicate selective classifications with test-point up-weighting with the KNN localizer (Sec. 4.4.2), and as those with the further restriction of (Sec. 4.4.1). The results exclude test-point up-weighting; excludes test-point up-weighting, but restricts to .

Calibration in general is difficult to evaluate, with conflicting definitions and metrics (Kull et al., 2019; Gupta & Ramdas, 2022, inter alia). In the hypothesis testing framework, approaches have been proposed to make marginal Conformal Predictors more adaptive (i.e., to achieve closer approximations to conditional coverage), but evaluations tend to omit class-wise singleton set coverage, arguably the minimal required quantity in practice for classification. In contrast, our desiderata are easily evaluated and resolve these concerns: Of the admitted points, we calculate the proportion of points matching the true label for each class. That is, given an admitted prediction (or similarly, a singleton set), an end-user should have confidence that the per-class accuracy is at least $1-\alpha$. Additionally, other things being equal, the proportion of admitted points should be maximized.
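The evaluation can be computed as below. The sketch stratifies by the true class label, which is how the per-class accuracy and admitted-proportion columns of Tables 4 and 5 appear to be organized (an assumption on our part); `None` encodes a rejection, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Optional, Sequence, Tuple

def admitted_metrics(predictions: Sequence[Optional[Hashable]],
                     labels: Sequence[Hashable]
                     ) -> Dict[Hashable, Tuple[float, float]]:
    """Per true class: (accuracy among admitted points, admitted points as a
    proportion of the whole test set)."""
    n_total = len(labels)
    admitted: Dict[Hashable, int] = defaultdict(int)
    correct: Dict[Hashable, int] = defaultdict(int)
    for pred, label in zip(predictions, labels):
        if pred is None:  # rejected: excluded from the accuracy
            continue
        admitted[label] += 1
        correct[label] += int(pred == label)
    return {c: (correct[c] / admitted[c], admitted[c] / n_total) for c in admitted}

metrics = admitted_metrics([0, 0, None, 1, 1, None], [0, 0, 0, 1, 0, 1])
print(metrics)
```

A class whose admitted accuracy falls below $1-\alpha$ signals a violation of the ccs desideratum, and summing the second entries over classes gives the overall proportion of admitted points.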

| (Acc.) | () | (Acc.) | (Acc.) | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Model/Approx. | ts115 | casp12 | |||||||

| 0.75 | 0.77 | 0.70 | 0.59 | 0.40 | 0.92 | 0.93 | 0.92 | 0.78 | |

| 0.76 | 0.77 | 0.71 | 0.58 | 0.43 | 0.92 | 0.93 | 0.92 | 0.79 | |

| - | 0.77 | 0.70 | - | 0.42 | - | 0.93 | - | 0.78 | |

6 Results

| : Class Label (Amino-Acid/Token-Level Sequence Labeling) | ||||||||||||

| Subset | Acc. | Acc. | Acc. | Acc. | ||||||||

| 0.07 | 0.59 | 0.07 | 0.07 | 0.56 | 0.05 | 0.18 | 0.56 | 0.10 | 0.11 | 0.57 | 0.22 | |

| 0.96 | 0.98 | 0.12 | 0.95 | 0.94 | 0.04 | 0.92 | 0.92 | 0.08 | 0.94 | 0.96 | 0.24 | |

| 0.12 | 0.81 | 0.37 | 0.06 | 0.70 | 0.21 | 0.13 | 0.74 | 0.42 | 0.11 | 0.76 | 1. | |

| 0.09 | 0.64 | 0.02 | 0.07 | 0.65 | 0.01 | 0.16 | 0.55 | 0.03 | 0.12 | 0.60 | 0.07 | |

| 0.96 | 0.98 | 0.07 | 0.95 | 0.96 | 0.03 | 0.93 | 0.94 | 0.06 | 0.94 | 0.96 | 0.15 | |

| 0.27 | 0.87 | 0.16 | 0.09 | 0.81 | 0.08 | 0.14 | 0.79 | 0.18 | 0.16 | 0.82 | 0.43 | |

| 0.07 | 0.57 | 0.05 | 0.07 | 0.53 | 0.04 | 0.19 | 0.57 | 0.07 | 0.11 | 0.56 | 0.16 | |

| 0.96 | 0.98 | 0.05 | 0.04 | 0.90 | 0.01 | 0.03 | 0.87 | 0.02 | 0.93 | 0.94 | 0.09 | |

| 0.09 | 0.76 | 0.21 | 0.05 | 0.62 | 0.12 | 0.13 | 0.70 | 0.24 | 0.09 | 0.70 | 0.57 | |

Across tasks, the KNNs consistently achieve point accuracies similar to those of the base networks (Table 2). This justifies using them in place of the output logits of the underlying Transformers. Table 3 then highlights our core motivations for leveraging the signals from the KNNs: There are stark differences across instances as the consecutive-match feature increases and as the distance to training increases (shown here for the amino-acid sequence labeling task, but observed across tasks). In order to obtain calibration, coverage, or even similar point accuracies on datasets with proportionally more points with fewer consecutive matches and/or far from training, we must control for changes in the proportions of these partitions.

Table 4 and Table 5 contain the main results. The Conformal Predictors RAPS and APS behave as advertised, obtaining marginal coverage (not shown) for in-distribution data. However, the additional adaptiveness of these approaches does not translate into reliable singleton set coverage. More specifically: The Conformal Predictors tend to only obtain singleton set coverage when the point accuracy of the model is , including over in-distribution data. Only for the high-accuracy, in-distribution task (Table 5) is adequate singleton set coverage obtained. For the in-distribution task, coverage falls to the for casp12 and the low for ts115 for the class (Table 4). On the low-accuracy, class-imbalanced task (Table 5), in which the minority class occurs with a proportion less than , singleton set coverage for the minority class is very poor. Re-weighting the empirical CDF near a test point is not an adequate solution to obtain singleton set coverage. The approach obtains coverage on the distribution-shifted task (Table 5), but coverage is inadequate, as with simpler Conformal Predictors, for the task. The stronger per-class Mondrian Conformal guarantee is also not sufficient to obtain singleton set coverage in practice. The sets obtain less severe under-coverage on the task compared to the marginal Conformal Predictors, but singleton set coverage is not clearly better on the in-distribution task. The under-coverage of these approaches could come as a surprise to end-users. In this way, such split-conformal approaches are not ideal for instance-level decision making.

Fortunately, we can nonetheless satisfy our desiderata with distribution-free methods in a straightforward manner via Venn Predictors, recasting our goal in terms of calibration rather than coverage. The base approach with test-point up-weighting, , is well-calibrated across tasks. We further note that there is no cost to be paid on these datasets by rejecting points in all partitions other than those with , as seen with . That is, the admitted points are almost exclusively in the partition. By means of comparison with the and ablations, in which calibration is obtained by without weighting, we would recommend making this restriction (i.e., using ) as the default approach in higher-risk settings as an additional safeguard.

| by Class Label (Amino-Acid/Token-Level Sequence Labeling) | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Set | Method | ||||||||

| ts115 () | |||||||||

| 0.96 | 0.22 | 0.88 | 0.06 | 0.82 | 0.14 | 0.90 | 0.43 | ||

| 0.96 | 0.24 | 0.86 | 0.07 | 0.82 | 0.17 | 0.90 | 0.48 | ||

| 0.96 | 0.24 | 0.86 | 0.07 | 0.83 | 0.17 | 0.90 | 0.48 | ||

| 0.96 | 0.23 | 0.85 | 0.07 | 0.86 | 0.18 | 0.91 | 0.49 | ||

| 0.96 | 0.23 | 0.88 | 0.07 | 0.76 | 0.14 | 0.88 | 0.43 | ||

| 0.98 | 0.14 | 0.91 | 0.03 | 0.92 | 0.08 | 0.95 | 0.24 | ||

| 0.98 | 0.14 | 0.92 | 0.03 | 0.92 | 0.08 | 0.96 | 0.24 | ||

| 0.99 | 0.14 | 0.92 | 0.03 | 0.91 | 0.07 | 0.96 | 0.24 | ||

| 0.99 | 0.14 | 0.92 | 0.03 | 0.91 | 0.07 | 0.96 | 0.24 | ||

| casp12 () | |||||||||

| 0.96 | 0.14 | 0.85 | 0.05 | 0.77 | 0.13 | 0.87 | 0.31 | ||

| 0.95 | 0.16 | 0.85 | 0.06 | 0.78 | 0.15 | 0.86 | 0.36 | ||

| 0.95 | 0.15 | 0.86 | 0.05 | 0.74 | 0.15 | 0.85 | 0.36 | ||

| 0.97 | 0.14 | 0.82 | 0.03 | 0.84 | 0.12 | 0.90 | 0.30 | ||

| 0.94 | 0.16 | 0.87 | 0.06 | 0.67 | 0.12 | 0.83 | 0.34 | ||

| 0.95 | 0.09 | 0.85 | 0.01 | 0.90 | 0.06 | 0.92 | 0.16 | ||

| 0.96 | 0.09 | 0.87 | 0.01 | 0.89 | 0.06 | 0.93 | 0.16 | ||

| 0.96 | 0.09 | 0.87 | 0.01 | 0.89 | 0.06 | 0.93 | 0.16 | ||

| 0.96 | 0.09 | 0.87 | 0.01 | 0.89 | 0.06 | 0.93 | 0.16 | ||

| by Class Label (Binary and ) | |||||||

| Set | Method | ||||||

| () | |||||||

| (Acc.) | 0.86 | 0.50 | 0.72 | 0.50 | 0.79 | 1.0 | |

| 0.86 | 0.50 | 0.72 | 0.50 | 0.79 | 1.0 | ||

| 0.79 | 0.27 | 0.91 | 0.33 | 0.86 | 0.61 | ||

| 0.75 | 0.50 | 0.80 | 0.50 | 0.78 | 1.00 | ||

| 0.80 | 0.28 | 0.91 | 0.33 | 0.86 | 0.61 | ||

| 0.90 | 0.10 | 0.96 | 0.18 | 0.94 | 0.28 | ||

| 0.96 | 0.16 | 0.93 | 0.18 | 0.94 | 0.33 | ||

| 0.96 | 0.14 | 0.94 | 0.17 | 0.94 | 0.31 | ||

| 0.96 | 0.14 | 0.94 | 0.17 | 0.94 | 0.31 | ||

| 0.96 | 0.13 | 0.94 | 0.17 | 0.95 | 0.29 | ||

| 0.96 | 0.13 | 0.94 | 0.17 | 0.95 | 0.29 | ||

| () | |||||||

| (Acc.) | 0.94 | 0.50 | 0.91 | 0.50 | 0.93 | 1.0 | |

| 0.94 | 0.50 | 0.91 | 0.50 | 0.93 | 1.0 | ||

| 0.97 | 0.47 | 0.96 | 0.46 | 0.96 | 0.93 | ||

| 0.94 | 0.50 | 0.91 | 0.50 | 0.93 | 1.00 | ||

| 0.96 | 0.47 | 0.95 | 0.46 | 0.96 | 0.92 | ||

| 0.95 | 0.43 | 0.94 | 0.44 | 0.95 | 0.88 | ||

| 0.95 | 0.42 | 0.93 | 0.40 | 0.94 | 0.82 | ||

| 0.94 | 0.38 | 0.93 | 0.40 | 0.94 | 0.78 | ||

| 0.94 | 0.38 | 0.93 | 0.40 | 0.94 | 0.78 | ||

| 0.94 | 0.37 | 0.94 | 0.40 | 0.94 | 0.76 | ||

| 0.94 | 0.37 | 0.94 | 0.40 | 0.94 | 0.76 | ||

| () | |||||||

| (Acc.) | 0.98 | 0.93 | 0.27 | 0.07 | 0.93 | 1.0 | |

| 0.98 | 0.92 | 0.26 | 0.06 | 0.94 | 0.99 | ||

| 0.97 | 0.78 | 0.34 | 0.05 | 0.94 | 0.83 | ||

| 0.97 | 0.79 | 0.34 | 0.05 | 0.94 | 0.84 | ||

| 0.97 | 0.79 | 0.34 | 0.05 | 0.94 | 0.83 | ||

| 1.00 | 0.85 | 0.19 | 0.05 | 0.95 | 0.91 | ||

| 0.93 | 0.20 | 0.77 | 0.02 | 0.92 | 0.22 | ||

| 1.00 | 0.11 | 0.75 | 0.01 | 0.98 | 0.12 | ||

| 1.00 | 0.08 | 0.89 | 0.01 | 0.99 | 0.09 | ||

| 1.00 | 0.05 | 0.92 | 0.01 | 0.99 | 0.05 | ||

| 1.00 | 0.05 | 0.92 | 0.01 | 0.99 | 0.05 | ||

7 Conclusion

The finite-sample, distribution-free guarantees of Conformal Predictors are appealing; however, the coverage guarantee is too weak for typical classification use-cases. We have instead demonstrated that the key characteristics desired of prediction sets are achievable by calibrating weak selective classifiers with Venn Predictors, enabled by KNN approximations of the deep networks.

Reproducibility Statement

We will provide a link to our PyTorch code and replication scripts with the camera-ready version of the paper. The data and pre-trained weights of the underlying Transformers are publicly available and are further described for each experiment in the Appendix.

Ethics Statement

Uncertainty quantification is a cornerstone of trustworthy AI. We have demonstrated a principled approach to selective classification that achieves these desiderata in challenging settings (low accuracy, class-imbalanced, distribution-shifted) under a stringent class-wise evaluation scenario. We have also shown that existing alternative distribution-free approaches do not produce the quantities needed in typical classification settings.

Whereas the use-cases for prediction sets with marginal coverage are relatively limited, the use-cases for reliable selective classification are numerous. For example, reliable class-conditional selective classification applies directly to routing to reduce overall computation (e.g., using small, fast models and deferring to larger models only for rejected predictions), and to higher-risk settings in which less confident predictions must be sent to humans for further adjudication.

An unusual and advantageous aspect of a Predictor, which further distinguishes it from post-hoc Platt-scaling-style calibration (Platt, 1999; Guo et al., 2017), is a degree of inherent example-based interpretability: The calibrated distribution for a point is a simple transformation of the empirical probability among similar points, with partitions determined by a KNN that can be readily inspected. This matching component also yields a direct avenue for addressing group-wise fairness: Known group attributes can be incorporated as categories to ensure group-wise calibration.

Acknowledgments

We thank the organizers, reviewers, and participants of the Workshop on Distribution-Free Uncertainty Quantification at the Thirty-ninth International Conference on Machine Learning (ICML 2022) for feedback and suggestions on an earlier version of this work.

References

- Angelopoulos & Bates (2021) Anastasios N. Angelopoulos and Stephen Bates. A Gentle Introduction to Conformal Prediction and Distribution-Free Uncertainty Quantification. CoRR, abs/2107.07511, 2021. URL https://arxiv.org/abs/2107.07511.

- Angelopoulos et al. (2021) Anastasios Nikolas Angelopoulos, Stephen Bates, Michael Jordan, and Jitendra Malik. Uncertainty Sets for Image Classifiers using Conformal Prediction. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=eNdiU_DbM9.

- Berman et al. (2000) Helen M Berman, John Westbrook, Zukang Feng, Gary Gilliland, Talapady N Bhat, Helge Weissig, Ilya N Shindyalov, and Philip E Bourne. The Protein Data Bank. Nucleic Acids Research, 28(1):235–242, 2000.

- Chelba et al. (2014) Ciprian Chelba, Tomás Mikolov, Mike Schuster, Qi Ge, Thorsten Brants, Phillipp Koehn, and Tony Robinson. One Billion Word Benchmark for Measuring Progress in Statistical Language Modeling. In Haizhou Li, Helen M. Meng, Bin Ma, Engsiong Chng, and Lei Xie (eds.), INTERSPEECH 2014, 15th Annual Conference of the International Speech Communication Association, Singapore, September 14-18, 2014, pp. 2635–2639. ISCA, 2014. URL http://www.isca-speech.org/archive/interspeech_2014/i14_2635.html.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171–4186, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics. doi: 10.18653/v1/N19-1423. URL https://aclanthology.org/N19-1423.

- Devroye et al. (1996) Luc Devroye, László Györfi, and Gábor Lugosi. A Probabilistic Theory of Pattern Recognition. In Stochastic Modelling and Applied Probability, 1996.

- El-Gebali et al. (2019) Sara El-Gebali, Jaina Mistry, Alex Bateman, Sean R Eddy, Aurélien Luciani, Simon C Potter, Matloob Qureshi, Lorna J Richardson, Gustavo A Salazar, Alfredo Smart, Erik L L Sonnhammer, Layla Hirsh, Lisanna Paladin, Damiano Piovesan, Silvio C E Tosatto, and Robert D Finn. The Pfam protein families database in 2019. Nucleic Acids Research, 47(D1):D427–D432, 2019. ISSN 0305-1048. doi: 10.1093/nar/gky995. URL https://academic.oup.com/nar/article/47/D1/D427/5144153.

- Guan (2022) Leying Guan. Localized Conformal Prediction: A Generalized Inference Framework for Conformal Prediction. Biometrika, July 2022. ISSN 1464-3510. doi: 10.1093/biomet/asac040. URL https://doi.org/10.1093/biomet/asac040.

- Guo et al. (2017) Chuan Guo, Geoff Pleiss, Yu Sun, and Kilian Q. Weinberger. On Calibration of Modern Neural Networks. In Proceedings of the 34th International Conference on Machine Learning - Volume 70, ICML’17, pp. 1321–1330. JMLR.org, 2017.

- Gupta & Ramdas (2022) Chirag Gupta and Aaditya Ramdas. Top-label calibration and multiclass-to-binary reductions. In International Conference on Learning Representations, 2022. URL https://openreview.net/forum?id=WqoBaaPHS-.

- Johansson et al. (2018) Ulf Johansson, Tuve Löfström, and Håkan Sundell. Venn predictors using lazy learners. In The 2018 World Congress in Computer Science, Computer Engineering & Applied Computing, July 30-August 02, Las Vegas, Nevada, USA, pp. 220–226. CSREA Press, 2018.

- Kaushik et al. (2020) Divyansh Kaushik, Eduard Hovy, and Zachary Lipton. Learning The Difference That Makes A Difference With Counterfactually-Augmented Data. In International Conference on Learning Representations, 2020. URL https://openreview.net/forum?id=Sklgs0NFvr.

- Klausen et al. (2019) Michael Schantz Klausen, Martin Closter Jespersen, Henrik Nielsen, Kamilla Kjaergaard Jensen, Vanessa Isabell Jurtz, Casper Kaae Soenderby, Morten Otto Alexander Sommer, Ole Winther, Morten Nielsen, Bent Petersen, et al. NetSurfP-2.0: Improved prediction of protein structural features by integrated deep learning. Proteins: Structure, Function, and Bioinformatics, 2019.

- Kull et al. (2019) Meelis Kull, Miquel Perello-Nieto, Markus Kängsepp, Telmo Silva Filho, Hao Song, and Peter Flach. Beyond Temperature Scaling: Obtaining Well-Calibrated Multiclass Probabilities with Dirichlet Calibration. Curran Associates Inc., Red Hook, NY, USA, 2019.

- Lambrou et al. (2015) Antonis Lambrou, Ilia Nouretdinov, and Harris Papadopoulos. Inductive Venn Prediction. Annals of Mathematics and Artificial Intelligence, 74(1):181–201, 2015. doi: 10.1007/s10472-014-9420-z. URL https://doi.org/10.1007/s10472-014-9420-z.

- Lei & Wasserman (2014) Jing Lei and Larry Wasserman. Distribution-free prediction bands for non-parametric regression. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76(1):71–96, 2014. doi: 10.1111/rssb.12021. URL https://rss.onlinelibrary.wiley.com/doi/abs/10.1111/rssb.12021.

- Loshchilov & Hutter (2019) Ilya Loshchilov and Frank Hutter. Decoupled Weight Decay Regularization, 2019.

- Maas et al. (2011) Andrew L. Maas, Raymond E. Daly, Peter T. Pham, Dan Huang, Andrew Y. Ng, and Christopher Potts. Learning Word Vectors for Sentiment Analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, pp. 142–150, Portland, Oregon, USA, June 2011. Association for Computational Linguistics. URL https://aclanthology.org/P11-1015.

- Moult et al. (2018) John Moult, Krzysztof Fidelis, Andriy Kryshtafovych, Torsten Schwede, and Anna Tramontano. Critical assessment of methods of protein structure prediction (CASP)-Round XII. Proteins: Structure, Function, and Bioinformatics, 86:7–15, 2018. ISSN 08873585. doi: 10.1002/prot.25415. URL http://doi.wiley.com/10.1002/prot.25415.

- Papadopoulos et al. (2002) Harris Papadopoulos, Kostas Proedrou, Volodya Vovk, and Alex Gammerman. Inductive confidence machines for regression. In Proceedings of the 13th European Conference on Machine Learning, ECML’02, pp. 345–356, Berlin, Heidelberg, 2002. Springer-Verlag. ISBN 3540440364. doi: 10.1007/3-540-36755-1_29. URL https://doi.org/10.1007/3-540-36755-1_29.

- Platt (1999) John C. Platt. Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods. In Advances in Large Margin Classifiers, pp. 61–74. MIT Press, 1999.

- Podkopaev & Ramdas (2021) Aleksandr Podkopaev and Aaditya Ramdas. Distribution-free uncertainty quantification for classification under label shift. In Cassio de Campos and Marloes H. Maathuis (eds.), Proceedings of the Thirty-Seventh Conference on Uncertainty in Artificial Intelligence, volume 161 of Proceedings of Machine Learning Research, pp. 844–853. PMLR, 27–30 Jul 2021. URL https://proceedings.mlr.press/v161/podkopaev21a.html.

- Rao et al. (2019) Roshan Rao, Nicholas Bhattacharya, Neil Thomas, Yan Duan, Xi Chen, John Canny, Pieter Abbeel, and Yun S Song. Evaluating Protein Transfer Learning with TAPE. In Advances in Neural Information Processing Systems, 2019.

- Rei & Yannakoudakis (2016) Marek Rei and Helen Yannakoudakis. Compositional Sequence Labeling Models for Error Detection in Learner Writing. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 1181–1191, Berlin, Germany, August 2016. Association for Computational Linguistics. doi: 10.18653/v1/P16-1112. URL https://www.aclweb.org/anthology/P16-1112.

- Romano et al. (2020) Yaniv Romano, Matteo Sesia, and Emmanuel J. Candès. Classification with valid and adaptive coverage. In Proceedings of the 34th International Conference on Neural Information Processing Systems, NIPS’20, Red Hook, NY, USA, 2020. Curran Associates Inc. ISBN 9781713829546.

- Rosenthal et al. (2017) Sara Rosenthal, Noura Farra, and Preslav Nakov. SemEval-2017 Task 4: Sentiment Analysis in Twitter. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), pp. 502–518, Vancouver, Canada, August 2017. Association for Computational Linguistics. doi: 10.18653/v1/S17-2088. URL https://www.aclweb.org/anthology/S17-2088.

- Sadinle et al. (2018) Mauricio Sadinle, Jing Lei, and Larry A. Wasserman. Least ambiguous set-valued classifiers with bounded error levels. Journal of the American Statistical Association, 114:223–234, 2018.

- Schmaltz (2021) Allen Schmaltz. Detecting Local Insights from Global Labels: Supervised and Zero-Shot Sequence Labeling via a Convolutional Decomposition. Computational Linguistics, 47(4):729–773, December 2021. doi: 10.1162/coli_a_00416. URL https://aclanthology.org/2021.cl-4.25.

- Schmaltz & Beam (2020) Allen Schmaltz and Andrew Beam. Exemplar Auditing for Multi-Label Biomedical Text Classification. CoRR, abs/2004.03093, 2020. URL https://arxiv.org/abs/2004.03093.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is All you Need. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (eds.), Advances in Neural Information Processing Systems, volume 30, pp. 6000–6010. Curran Associates, Inc., 2017. URL https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf.

- Vovk (2012) Vladimir Vovk. Conditional validity of inductive conformal predictors. In Steven C. H. Hoi and Wray Buntine (eds.), Proceedings of the Asian Conference on Machine Learning, volume 25 of Proceedings of Machine Learning Research, pp. 475–490, Singapore Management University, Singapore, 04–06 Nov 2012. PMLR. URL https://proceedings.mlr.press/v25/vovk12.html.

- Vovk & Petej (2014) Vladimir Vovk and Ivan Petej. Venn-Abers Predictors. In UAI, 2014.

- Vovk et al. (2003) Vladimir Vovk, Glenn Shafer, and Ilia Nouretdinov. Self-calibrating Probability Forecasting. In S. Thrun, L. Saul, and B. Schölkopf (eds.), Advances in Neural Information Processing Systems, volume 16. MIT Press, 2003. URL https://proceedings.neurips.cc/paper/2003/file/10c66082c124f8afe3df4886f5e516e0-Paper.pdf.

- Vovk et al. (2005) Vladimir Vovk, Alex Gammerman, and Glenn Shafer. Algorithmic Learning in a Random World. Springer-Verlag, Berlin, Heidelberg, 2005. ISBN 0387001522.

- Vovk et al. (2015) Vladimir Vovk, Ivan Petej, and Valentina Fedorova. Large-scale probabilistic predictors with and without guarantees of validity. In C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, and R. Garnett (eds.), Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc., 2015. URL https://proceedings.neurips.cc/paper/2015/file/a9a1d5317a33ae8cef33961c34144f84-Paper.pdf.

- Yannakoudakis et al. (2011) Helen Yannakoudakis, Ted Briscoe, and Ben Medlock. A New Dataset and Method for Automatically Grading ESOL Texts. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, pp. 180–189, Portland, Oregon, USA, June 2011. Association for Computational Linguistics. URL https://www.aclweb.org/anthology/P11-1019.

- Zeiler (2012) Matthew D. Zeiler. ADADELTA: An Adaptive Learning Rate Method. CoRR, abs/1212.5701, 2012. URL http://arxiv.org/abs/1212.5701.

Appendix A Appendix: Contents

Appendix B describes the KNN localizer, and Appendix C provides additional details for training the KNNs. Appendix D provides guidelines on controlling for, and conveying the variance of, the sample size. We provide pseudo-code in Appendix E. In Appendices F, G, and H, we provide additional details for each of the tasks.

Appendix B KNN localizer

We use a KNN localizer over the calibration set both as a localized conformal (Guan, 2022) baseline for comparison and to re-weight category assignments for the Predictor. This KNN localizer recasts the test approximation output as a weighted linear combination over the calibration set approximations:

| (8) |

The single parameter, the temperature of , which is calculated analogously to Equation 3, is trained via gradient descent against to minimize prediction discrepancies between and . As with , training is performed using .
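To make the idea of a localizer concrete, the following is a minimal pure-Python sketch. It assumes, for illustration only, that the weights are a softmax over negative Euclidean distances between exemplar vectors, scaled by a single temperature; the exact forms of Equations 3 and 8 are not reproduced here, and the function names are hypothetical. The temperature is passed in as a fixed value rather than learned by gradient descent as described above.

```python
import math
from typing import List, Sequence


def localizer_weights(query: Sequence[float],
                      calib_vectors: Sequence[Sequence[float]],
                      temperature: float) -> List[float]:
    """Softmax over negative Euclidean distances (assumed form, for illustration)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scores = [-math.dist(query, v) / temperature for v in calib_vectors]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def localized_output(query: Sequence[float],
                     calib_vectors: Sequence[Sequence[float]],
                     calib_outputs: Sequence[Sequence[float]],
                     temperature: float) -> List[float]:
    """Weighted linear combination of the calibration-set approximation outputs."""
    weights = localizer_weights(query, calib_vectors, temperature)
    n_classes = len(calib_outputs[0])
    return [sum(w * out[c] for w, out in zip(weights, calib_outputs))
            for c in range(n_classes)]


# Toy usage: the query sits next to the first two calibration points.
calib_vectors = [[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]]
calib_outputs = [[2.0, -1.0], [1.5, -0.5], [-3.0, 2.0]]
weights = localizer_weights([0.1, 0.0], calib_vectors, temperature=0.5)
output = localized_output([0.1, 0.0], calib_vectors, calib_outputs, temperature=0.5)
```

A lower temperature concentrates the weight on the nearest calibration points, so the localized output approaches that of the single nearest neighbor.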

As noted in the main text, we can use the weights from this approximation as a guard against distribution shifts within the data partitions. For a given test point, we calculate (Eq. 8), and then determine the augmented distribution (i.e., , the empirical probability for a point when including the point itself, assuming a given label ) for the Venn Predictor by adding the test point up-weighted according to the weights of this KNN localizer. Specifically, the new weight for the test point is as follows:

| (9) |

where is the set of calibration points belonging to the same category as . When this weight is 1, we have the standard Venn Predictor; when this weight is greater than 1, it is a sign of a mismatch (due to a distribution shift, or an otherwise aberrant category assignment) and the minimum probability estimated by the Venn Predictor becomes smaller. satisfies Prop. 3, so calibration of the selective classifier is maintained when using this weight to up-weight the test point.
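The effect of up-weighting the test point can be seen in a small sketch of a Venn Predictor for one category. This is an illustration under stated assumptions, not the paper's Algorithm 3: the weight itself would come from Eq. 9, which is not reproduced here, so it is a plain input; we assume, consistent with the description above, that it is at least 1. The function name is hypothetical.

```python
from collections import Counter
from typing import Hashable, Iterable, Sequence, Tuple


def weighted_venn_interval(category_labels: Sequence[Hashable],
                           label: Hashable,
                           label_space: Iterable[Hashable],
                           test_weight: float = 1.0) -> Tuple[float, float]:
    """Lower and upper Venn probabilities of `label` for one test point.

    category_labels: true labels of the calibration points in the test
        point's category.
    test_weight: weight of the test point when it is added to the category
        under each hypothesized label; 1.0 gives the standard Venn Predictor.
    """
    if test_weight < 1.0:
        raise ValueError("test_weight is assumed to be >= 1")
    counts = Counter(category_labels)
    denom = len(category_labels) + test_weight
    probs = []
    for hypothesized in label_space:
        # Empirical frequency of `label` once the weighted test point is
        # added with the hypothesized label.
        extra = test_weight if hypothesized == label else 0.0
        probs.append((counts[label] + extra) / denom)
    return min(probs), max(probs)


# Category with 9 calibration points of class 1 and 1 point of class 0.
labels = [1] * 9 + [0]
lo_std, hi_std = weighted_venn_interval(labels, 1, (0, 1), test_weight=1.0)
lo_up, hi_up = weighted_venn_interval(labels, 1, (0, 1), test_weight=3.0)
```

With a weight of 1, the interval for class 1 is [9/11, 10/11]; raising the weight to 3 gives [9/13, 12/13], so the minimum probability decreases, which is the conservative direction described above.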

Appendix C KNN training

We train and with the same learning procedure, the only difference being the underlying model that is approximated. Here, we take as the unnormalized output logit for class of the model to be approximated (either the or ) and the unnormalized output logit for class of the approximation (either or ). The binary cross-entropy loss for a token, , is then calculated as follows:

| (10) |

That is, we seek to minimize the difference between the original model’s output and the KNN’s output, for each class, holding the parameters of the original model fixed. is averaged over all classes in mini-batches constructed from the tokens of shuffled documents. We train with Adadelta (Zeiler, 2012) with a learning rate of 1.0, choosing the epoch that minimizes

| (11) |

the total number of prediction discrepancies between the original model and the KNN approximation over the KNN dev set. During training, if the immediately preceding epoch did not yield a new minimum among the running epochs, we subsequently only calculate for the tokens with prediction discrepancies until a new minimum is found (after which we return to calculating the loss over all points), or until the maximum number of epochs is reached. This has a regularizing effect: There is signal in the magnitude of the KNN output, so we aim to optimize in the direction of minimizing the residuals; however, we seek to avoid over-fitting to the magnitude of the outliers.
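The training procedure above can be sketched in pure Python, standing in for the actual PyTorch training loop. Since Eq. 10 is not reproduced here, we assume for illustration that it is a binary cross-entropy of the sigmoid of the KNN logit against the sigmoid of the original model's logit used as a soft target; that form, and all names below (`MaskingSchedule`, `batch_loss`, and so on), are our assumptions. The schedule implements the masking rule as described: restrict the loss to discrepant tokens after an epoch without a new dev-set minimum, and return to all tokens once a new minimum is found.

```python
import math
from typing import Optional, Sequence


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def soft_target_bce(knn_logit: float, model_logit: float) -> float:
    """BCE of sigmoid(knn_logit) against the soft target sigmoid(model_logit)."""
    t = sigmoid(model_logit)
    # -[t log sigmoid(z) + (1 - t) log(1 - sigmoid(z))], rewritten with softplus.
    return t * softplus(-knn_logit) + (1.0 - t) * softplus(knn_logit)


def argmax(xs: Sequence[float]) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


def is_discrepancy(knn_logits: Sequence[float], model_logits: Sequence[float]) -> bool:
    return argmax(knn_logits) != argmax(model_logits)


def count_discrepancies(knn_batch, model_batch) -> int:
    """Number of tokens whose predicted class differs between KNN and model."""
    return sum(is_discrepancy(k, m) for k, m in zip(knn_batch, model_batch))


def batch_loss(knn_batch, model_batch, discrepancies_only: bool) -> float:
    """Mean per-class BCE over the tokens that are currently in the loss."""
    terms = [soft_target_bce(k, m)
             for knn, model in zip(knn_batch, model_batch)
             if not discrepancies_only or is_discrepancy(knn, model)
             for k, m in zip(knn, model)]
    return sum(terms) / len(terms) if terms else 0.0


class MaskingSchedule:
    """Tracks the dev-set discrepancy count and toggles the masked loss."""

    def __init__(self) -> None:
        self.best = math.inf
        self.best_epoch: Optional[int] = None
        self.discrepancies_only = False

    def end_epoch(self, epoch: int, dev_discrepancies: int) -> None:
        if dev_discrepancies < self.best:
            # New minimum: keep this epoch and return to the loss over all tokens.
            self.best, self.best_epoch = dev_discrepancies, epoch
            self.discrepancies_only = False
        else:
            # No new minimum: restrict the loss to tokens with discrepancies.
            self.discrepancies_only = True
```

In use, `count_discrepancies` would be evaluated over the KNN dev set at the end of each epoch and passed to `end_epoch`; `best_epoch` then identifies the epoch to keep.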

A key insight is that we can readily approximate the vast majority of the predictions from the Transformer networks (and possibly other networks) using such KNN approximations, and, critically, when the approximations diverge from the model, those points tend to be from the subsets over which the underlying model is itself unreliable. This implies a non-homogeneous error distribution, and we find that the aforementioned iterative masking procedure effectively learns the KNN parameters without the need to introduce other regularization approaches. In practice, we find that a relatively small amount of data (e.g., only 10% of the original validation sets for the tasks in the experiments in the main text) is sufficient to learn the small number of parameters of the KNNs.

Appendix D Controlling for sample size

Given a single sample from (i.e., our single of some fixed size), we need to convey the variance due to the observed sample size. We opt for a simple hard threshold, , given that split-conformal coverage, conditional on the calibration set, is Beta distributed (Vovk, 2012). With, for example , assuming exchangeability, the finite-sample guarantee then implies coverage variation within a conditioning band of size with . See the comprehensive tutorial of Angelopoulos & Bates (2021) for additional details. In our experiments, if the size of at least one label-specific band for a given point falls below , we revert to a set of full cardinality. For the , , and tasks, we set . With the low accuracy and low frequency of the minority class in the task, the partition is comparatively small. As such, for the task, we set to avoid heavily censoring the partition, at the expense of potentially higher variability.
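The Beta law referred to above can be evaluated directly. For split conformal with n calibration points, Angelopoulos & Bates (2021) give coverage, conditional on the calibration set, as Beta(n + 1 − k, k) with k = ⌊(n + 1)α⌋. The sketch below computes its mean and standard deviation and applies a hard size threshold of the kind described in this appendix; the threshold value used in our experiments is not restated here, so `min_size` and the other names are hypothetical.

```python
import math
from typing import Hashable, Iterable, Mapping, Set, Tuple


def split_conformal_coverage_beta(n: int, alpha: float) -> Tuple[int, int, float, float]:
    """Beta(n + 1 - k, k) law of split-conformal coverage, k = floor((n + 1) * alpha).

    Returns the two Beta parameters and the mean and standard deviation of the
    coverage, conditional on a calibration set of size n.
    """
    k = math.floor((n + 1) * alpha)
    if not 1 <= k <= n:
        raise ValueError("need 1 <= floor((n + 1) * alpha) <= n")
    a, b = n + 1 - k, k
    mean = a / (a + b)
    std = math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))
    return a, b, mean, std


def apply_size_threshold(pred_set: Set[Hashable],
                         band_sizes: Mapping[Hashable, int],
                         min_size: int,
                         label_space: Iterable[Hashable]) -> Set[Hashable]:
    """Revert to the full label set if any label-specific band is too small."""
    labels = set(label_space)
    if any(band_sizes[y] < min_size for y in labels):
        return labels
    return set(pred_set)


a, b, mean, std = split_conformal_coverage_beta(n=1000, alpha=0.1)
# a = 901, b = 100, mean = 901/1001 (about 0.900), std about 0.0095
```

The standard deviation shrinks roughly as the inverse square root of the band size, which is why small bands are censored rather than reported.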

Appendix E Pseudo-code

Algorithm 1 provides pseudo-code for constructing a well-calibrated selective classifier via the two-stage approach described in the text. First, Algorithm 2 constructs an prediction set for a test point, . If the set includes only a single class (i.e., the weak selective classifier admitted the class), the output is then calibrated via the Predictor (Algorithm 3). The class prediction is then returned if the calibrated probability matches or exceeds the provided threshold, . As an additional safeguard, we can further restrict the partitions based on , as described in Section 4.4.1.

In the main text, we also compare to a variation without the test-point weighting. This unweighted variation appears in Algorithm 4 and replaces the corresponding line in Algorithm 1.
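The control flow of the two-stage procedure can be sketched as follows. This is not a transcription of Algorithms 1 to 4, which are not reproduced in this section; the two callables are hypothetical stand-ins for the prediction-set construction and the Venn calibration, and we assume that the calibrated probability compared against the threshold is the lower (minimum) Venn probability, in keeping with the conservative use described in Appendix B.

```python
from typing import Callable, Hashable, Optional, Set, Tuple


def selective_classify(x: object,
                       prediction_set_fn: Callable[[object], Set[Hashable]],
                       venn_interval_fn: Callable[[object, Hashable], Tuple[float, float]],
                       threshold: float) -> Optional[Hashable]:
    """Two-stage selective classification (sketch). Returns a label or None (abstain)."""
    pred_set = prediction_set_fn(x)            # stage 1: weak selective classifier
    if len(pred_set) != 1:
        return None                            # empty or multi-class set: abstain
    (label,) = pred_set
    lower, _upper = venn_interval_fn(x, label)  # stage 2: Venn calibration
    return label if lower >= threshold else None
```

For example, with a threshold of 0.9, a singleton set whose lower Venn probability is 0.95 yields a prediction, whereas a lower probability of 0.85, or any non-singleton set, yields an abstention.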

Appendix F Task: Protein secondary structure prediction ()

In the supervised sequence labeling task, we seek to predict the secondary structure of proteins. For each amino acid, we seek to predict one of three classes, .

For training and evaluation, we use the TAPE datasets of Rao et al. (2019), which provide a standardized benchmark from existing models and data (El-Gebali et al., 2019; Berman et al., 2000; Moult et al., 2018; Klausen et al., 2019). We approximate the Transformer of Rao et al. (2019), which is not SOTA on the task; while not degenerate, this fine-tuned self-supervised model was outperformed by models with HMM alignment-based input features in the original work. Of interest in the present work is whether coverage can be obtained with a neural model with otherwise relatively modest overall point accuracy. We use the publicly available model and pre-trained weights (https://github.com/songlab-cal/tape).

F.1

The base network consists of a pre-trained Transformer similar to with a final convolutional classification layer, consisting of two 1-dimensional CNNs: The first over the final hidden layer of the Transformer corresponding to each amino acid (each hidden layer is of size 768), using 512 filters of width 5, followed by and a second CNN using 3 filters of width 3. Batch normalization is applied before the first CNN, and weight normalization is applied to the output of each of the CNNs. The application of the 3 filters of the final CNN produces the logits, , for each amino acid.

The consists of an additional 1-dimensional CNN, which uses 1000 filters of width 1. The input to the corresponding to each amino acid is the concatenation of the final hidden layer of the Transformer, the output of the final CNN of the base network, and a randomly initialized 10-dimensional word-embedding. The output of the CNN is passed to a LinearLayer of dimension 1000 by 3. (Unlike the sparse supervised sequence labeling task of , we use neither a , nor a max-pool operation. The sequences are very long in this setting—up to 1000 used in training and 1632 at inference to avoid truncation—so removing the max-pool bottleneck enables keeping the number of filters of the CNN lower than the total number of amino acids. In this way, we also do not use the decomposition of the CNN with the LinearLayer, as in the task, since the sparsity over the input is not needed for this task.) The exemplar vectors for the KNNs are then the filter applications of the CNN corresponding to each amino acid.

We fine-tune the base network and train the in an iterative fashion. In each epoch, we update the parameters of either the base network or the , freezing the counterpart. We start by updating the base network (and freezing the ), and we use separate optimizers for each: Adadelta (Zeiler, 2012) with a learning rate of 1.0 for the and Adam with weight decay (Loshchilov & Hutter, 2019) with a learning rate of 0.0001 and a warmup proportion of 0.01 for the base network. For the latter, we use the BertAdam code from the HuggingFace re-implementation of Devlin et al. (2019). We fine-tune for up to 16 epochs, and we use a standard cross-entropy loss.

Appendix G Supervised grammatical error detection ()

The task is a binary sequence labeling task in which we aim to predict whether each word in the input does () or does not () have a grammatical error. and consist of essays written by second-language learners (Yannakoudakis et al., 2011; Rei & Yannakoudakis, 2016) and consists of student-written essays and newswire text (Chelba et al., 2014). The test set is the FCE+news2k set of Schmaltz (2021).

The test set is challenging for two reasons. First, the class appears with a proportion of 0.07 of all of the words. This is less than our default value for , with the implication that marginal coverage can potentially be obtained by altogether ignoring that class. Second, the in-domain task itself is relatively challenging, but it is made yet harder by adding newswire text, as evident in the large score differences across and in Table 2.
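The arithmetic behind the first point is easy to check. The sketch below uses a toy label sequence in which the minority class has a proportion of 0.07 and a degenerate predictor that always outputs the majority-class singleton. The value α = 0.1 is purely illustrative (our assumption, since the default is not restated in this section), and the helper names are hypothetical.

```python
from typing import Dict, Hashable, Sequence, Set


def marginal_coverage(sets: Sequence[Set[Hashable]], labels: Sequence[Hashable]) -> float:
    """Fraction of points whose true label is in the prediction set."""
    return sum(y in s for s, y in zip(sets, labels)) / len(labels)


def class_conditional_coverage(sets: Sequence[Set[Hashable]],
                               labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Coverage computed separately over the points of each true class."""
    coverage = {}
    for c in set(labels):
        members = [s for s, y in zip(sets, labels) if y == c]
        coverage[c] = sum(c in s for s in members) / len(members)
    return coverage


ALPHA = 0.1                       # illustrative value only (hypothetical)
labels = [1] * 7 + [0] * 93       # minority class at a proportion of 0.07
sets = [{0} for _ in labels]      # always output the majority-class singleton
marginal = marginal_coverage(sets, labels)            # 0.93
per_class = class_conditional_coverage(sets, labels)  # {0: 1.0, 1: 0.0}
```

Marginal coverage of 0.93 exceeds 1 − α = 0.9 even though the minority class is never covered, which is why the evaluation is conducted class-wise.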

The exemplar vectors, , used in the KNNs are extracted from the filter applications of a penultimate CNN layer over a frozen model, as in Schmaltz (2021).

Appendix H Tasks: Sentiment classification () and out-of-domain sentiment classification ()

and are document-level binary classification tasks in which we aim to predict whether the document is of negative () or positive () sentiment. The training and calibration sets, as well as the base networks, are the same for both tasks; the tasks differ only in their test sets. The training set is the 3.4k IMDb movie review set used in Kaushik et al. (2020) from the data of Maas et al. (2011). For calibration, we use a disjoint 16k set of reviews from the original training set of Maas et al. (2011). The test set of is the 488-review in-domain test set of original reviews used in Kaushik et al. (2020), and the test set of consists of 5k Twitter messages from SemEval-2017 Task 4a (Rosenthal et al., 2017).

Similar to the task, the exemplar vectors, , are derived from the filter applications of a penultimate CNN layer over a frozen model. However, in this case, the vectors are the concatenation of the document-level max-pooled vector, , and the vector associated with a single representative token in the document, . To achieve this, we model the task as multi-label classification and fine-tune the penultimate-layer CNN and a final layer consisting of two linear layers with the combined min-max and global normalization loss of Schmaltz & Beam (2020). In this way, we can associate each word with positive sentiment, negative sentiment (or, in principle, both), or a neutral class, while nonetheless having a single exclusive global prediction. This provides sparsity over the detected features, and captures the notion that a document may, in totality, represent one of the classes (e.g., indicate a positively rated movie overall) while at the same time including sentences or phrases that are of the opposite class (e.g., aspects that the reviewer rated negatively). This behavior is illustrated with examples from the calibration set in Table 6. As the single representative word for the document, we use the max-scoring word for the document-level predicted class from the “convolutional decomposition”, a hard-attention-style approach. For the document-level prediction, we take the max over the multi-label logits, which combine the global and max local scores.
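The construction of the document-level exemplar vector can be sketched as follows, using plain lists. We assume that per-token vectors and per-token, per-class local scores from the convolutional decomposition are already available; the loss of Schmaltz & Beam (2020) and the combination of global and local logits are not shown, and `build_exemplar` is a hypothetical name.

```python
from typing import List, Sequence


def build_exemplar(token_vectors: Sequence[Sequence[float]],
                   token_class_scores: Sequence[Sequence[float]],
                   predicted_class: int) -> List[float]:
    """Concatenate the document max-pooled vector with one representative token vector."""
    if not token_vectors:
        raise ValueError("document has no tokens")
    # Element-wise max over tokens: the document-level max-pooled vector.
    doc_vector = [max(col) for col in zip(*token_vectors)]
    # Representative token: the highest local score for the predicted class.
    rep = max(range(len(token_vectors)),
              key=lambda i: token_class_scores[i][predicted_class])
    return doc_vector + list(token_vectors[rep])


vecs = [[0.1, 0.9], [0.7, 0.2], [0.3, 0.4]]
scores = [[0.2, 0.1], [0.9, -0.3], [0.1, 0.8]]
exemplar = build_exemplar(vecs, scores, predicted_class=1)  # [0.7, 0.9, 0.3, 0.4]
```

Because the representative token depends on the predicted class, the same document can yield different exemplar vectors for different predictions, which keeps the matching tied to the evidence for the predicted class.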

| Model predictions over |
|---|
| What an amazing film. [...] My only gripe is that it has not been released on video in Australia and is therefore only available on TV. What a waste. |
| [...] But the story that then develops lacks any of the stuff that these opening fables display. [...] I will say that the music by Aimee Mann was great and I’ll be looking for the Soundtrack CD. [...] |
| Kenneth Branagh shows off his excellent skill in both acting and writing in this deep and thought provoking interpretation of Shakespeare’s most classic and well-written tragedy. [...] |