Approximating arrival costs in distributed moving horizon estimation: A recursive method

Abstract

In this paper, we present a new approach to distributed moving horizon estimation for constrained nonlinear processes. The method involves approximating the arrival costs of local estimators through a recursive framework. First, distributed full-information estimation for linear unconstrained systems is presented, which serves as the foundation for deriving the analytical expression of the arrival costs for the local estimators. Subsequently, we develop a recursive arrival cost design for linear distributed moving horizon estimation. Sufficient conditions are derived to ensure the stability of the estimation error for constrained linear systems. Next, we extend the arrival cost design derived for linear systems to account for nonlinear systems, and a partition-based constrained distributed moving horizon estimation algorithm for nonlinear systems is formulated. A benchmark chemical process is used to illustrate the effectiveness and superiority of the proposed method.

Keywords: Distributed state estimation, moving horizon estimation, arrival cost approximation, nonlinear processes

1 Introduction

The partition-based distributed framework has emerged as a promising structure for developing scalable and flexible decision-making solutions for large-scale complex industrial processes, since it can provide higher fault tolerance, reduced computational complexity, and increased flexibility for system maintenance [1, 2, 3]. Within a partition-based distributed decision-making framework, a large-scale process is partitioned into smaller subsystems that are interconnected with each other. Multiple decision-making units are deployed for the subsystems and coordinate their decisions through real-time communication [4, 5, 6]. To enable distributed decision-making systems to take informed control actions for appropriate process operation, it is crucial to have distributed state estimation capabilities that can provide real-time full-state estimates for the underlying systems [2, 7, 8]. In this paper, we focus on partition-based distributed state estimation for general nonlinear systems.

As an effective distributed state estimation approach, distributed moving horizon estimation (DMHE) offers the capability to handle process nonlinearity and address constraints imposed on both state variables and process disturbances [7, 8, 9, 10, 11, 12, 13, 14, 15, 16]. In [7], non-iterative partition-based DMHE algorithms were proposed for linear systems considering constraints on state variables and process disturbances. These algorithms design local estimators based on partitioned subsystem models, with each local estimator handling state estimation for the corresponding subsystem with non-overlapping states. In [8, 9, 10, 11, 12], partition-based DMHE approaches that require iterative executions within each sampling period were proposed for linear systems; these designs ensure the convergence of the state estimates generated by DMHE to their corresponding centralized moving horizon estimation (MHE) counterparts. In these approaches, the objective function of centralized MHE is partitioned into several individual objective functions. An additional term is then incorporated into each partitioned objective function to construct the local objective function for the proposed DMHE algorithms. Particularly, in [8, 9, 10], a sensitivity term is integrated with the partitioned objective function to account for the impact of each local decision variable on the objective functions of interconnected subsystems, while in [11, 12], penalties on measurement noise from interconnected subsystems are incorporated to form the local objective function of each estimator. In [13, 14], partition-based DMHE approaches for nonlinear systems were proposed. In [15, 16], DMHE for constrained nonlinear systems was addressed by integrating an auxiliary observer with the corresponding MHE to form an enhanced MHE-based constrained estimator for each subsystem of the entire nonlinear process.

In MHE design, previous information not included in the current estimation window can be summarized by a function referred to as the arrival cost. An accurate approximation of the arrival cost can enhance estimation performance [17, 18]. Additionally, a well-approximated arrival cost allows for a reduction in the length of the estimation window without compromising the accuracy of state estimates [18]. This reduction in the estimation window length can enhance the computational efficiency by decreasing the complexity of the optimization problem. In centralized MHE designs, various methods have been proposed to approximate the arrival cost. For linear systems, the Kalman filter has been widely used in the approximation of the arrival cost [19, 20]. For nonlinear systems, solutions for approximating the arrival cost of centralized MHE include the extended Kalman filter [21, 22], the unscented Kalman filter [17], and the particle filter [18]. In a distributed context, accurately approximating the arrival costs for the local estimators of DMHE becomes a more complicated problem. Different arrival cost approximation methods have been adopted for linear DMHE. In [8, 9, 10, 11, 12], the arrival cost was formulated as a weighted squared error between the state estimate and the a priori prediction, weighted by a constant matrix, which is fine-tuned to satisfy stability conditions. Additionally, in [7], a Kalman filter design for an auxiliary system was leveraged to approximate the weighting matrix for the arrival cost at each sampling instant. In [23], a partition-based DMHE method was proposed for the state estimation of data-driven subsystem models. In that work, the update of the arrival cost for DMHE design was facilitated by using a partition-based distributed Kalman filter approach proposed in [24]. Meanwhile, results on approximating the arrival costs for nonlinear DMHE algorithms have been limited. In [13], where a two-time-scale nonlinear DMHE was proposed, the arrival costs for the local estimators were not considered. In [14], the weighting matrix for the arrival cost design of each estimator was updated at each sampling instant. However, that work only presents the conditions that the weighting matrix must satisfy and does not explicitly provide the update formula for the weighting matrix. In [15, 16], decentralized extended Kalman filters were utilized to approximate the arrival costs for local estimators of observer-enhanced DMHE. However, in each of the two designs, the interactive dynamics were not taken into account.

In this paper, we address the problem of approximating the arrival costs for the local estimators of a partition-based DMHE design and formulate a partition-based distributed estimation scheme for general nonlinear processes with state constraints. The objective of this work is achieved in four steps: 1) we derive an analytical expression of the arrival cost for each local estimator of the DMHE in the unconstrained linear context from the design of the distributed full-information estimation formulation in [24]; 2) we conduct the stability analysis for the proposed DMHE algorithm for linear systems with state constraints; 3) we extend the analytical expression of the arrival cost obtained for linear unconstrained systems to account for nonlinear systems; 4) we formulate a partition-based constrained DMHE algorithm for general nonlinear systems, where each local estimator incorporates output measurements of the interacting subsystems and approximates the local arrival cost using the derived recursive solution. The effectiveness of the proposed method is demonstrated using a simulated chemical process. Some initial findings from this study were presented in a conference paper [25]. Compared with [25], this paper presents the stability analysis for the proposed DMHE algorithm for linear systems with state constraints. We also include further comparisons to demonstrate the efficacy and superiority of the proposed DMHE approach.

2 Preliminaries

2.1 Notation

represents the block diagonal matrix with blocks , . represents a block matrix where the is the submatrix in the th row and the th column. is an identity matrix. is the square of the weighted Euclidean norm of vector . denotes a column vector containing a sequence . .

2.2 System description

Let us consider nonlinear systems consisting of interconnected subsystems. The dynamics of the th subsystem, , are expressed as follows:

| (1a) | ||||

| (1b) | ||||

where denotes the discrete sampling instant; and represent the state vector and output measurements of the th subsystem, respectively; is a vector of the states of all the subsystems that have direct influence on the dynamics of subsystem ; and represent the unknown process disturbances and measurement noise associated with subsystem , respectively; is a vector-valued nonlinear function characterizing the dynamics of subsystem ; is the output measurement function for subsystem , .

By considering the variables for the entire system as the aggregation of the variables for each subsystem , : for vector , the dynamics of the entire system can be formulated in the following compact form:

| (2a) | ||||

| (2b) | ||||

where and can be obtained from and , respectively.
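The aggregation of subsystem models into a compact model can be sketched as follows. This is a minimal illustration only: the three scalar subsystems, their coupling pattern (`NEIGHBORS`), and the additive disturbance are hypothetical assumptions and do not reproduce the process model used in this paper.

```python
# Hypothetical three-subsystem example; the functions and coupling below are
# illustrative assumptions, not the paper's process model.

def f1(x1, X1):
    # Subsystem 1 is influenced by subsystem 2.
    return [0.9 * x1[0] + 0.1 * X1[0]]

def f2(x2, X2):
    # Subsystem 2 is influenced by subsystems 1 and 3.
    return [0.8 * x2[0] + 0.05 * X2[0] + 0.05 * X2[1]]

def f3(x3, X3):
    # Subsystem 3 is influenced by subsystem 2.
    return [0.7 * x3[0] + 0.2 * X3[0]]

SUBSYSTEMS = [f1, f2, f3]
NEIGHBORS = {0: [1], 1: [0, 2], 2: [1]}  # indices of interacting subsystems

def global_step(x_blocks, w_blocks):
    """Advance every subsystem once and return the new list of state blocks."""
    next_blocks = []
    for i, f_i in enumerate(SUBSYSTEMS):
        # Stack the states of the subsystems that directly influence subsystem i.
        X_i = [v for j in NEIGHBORS[i] for v in x_blocks[j]]
        # Additive disturbance is an assumption made for this sketch.
        x_next = [fx + w for fx, w in zip(f_i(x_blocks[i], X_i), w_blocks[i])]
        next_blocks.append(x_next)
    return next_blocks

def stack(blocks):
    """Concatenate subsystem vectors into one global vector."""
    return [v for block in blocks for v in block]
```

Calling `stack(global_step(...))` gives the next global state, which is what the compact model computes in one step; each local estimator only ever needs its own block and the blocks listed in `NEIGHBORS`.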

2.3 Problem formulation

In this work, we aim to propose a partition-based DMHE algorithm for nonlinear systems, which integrates a recursive update mechanism for the arrival cost of each local estimator of the distributed scheme. To achieve this goal, we first present a distributed full-information estimation (FIE) design by partitioning the objective function of a centralized FIE problem. From this design, we derive the analytical expression of the arrival cost for the proposed DMHE method in a linear context. Subsequently, we formulate the proposed DMHE method based on the obtained arrival costs, and sufficient conditions are provided to guarantee the stability of the proposed DMHE approach under state constraints. Finally, the obtained arrival costs for linear systems are extended to account for nonlinear systems, and a partition-based DMHE algorithm for the constrained nonlinear context is formulated.

Following this plan, we first examine a class of linear systems consisting of subsystems. The dynamics of the th linear subsystem, , are described as follows:

| (3a) | ||||

| (3b) | ||||

where , , and , , , are subsystem matrices of compatible dimensions.

Based on the linear subsystem models in (3), we will design MHE-based local estimators. These estimators will then be integrated to formulate a linear DMHE design, which will be extended to account for state estimation of nonlinear systems in (1). A compact form of the linear system comprising all the subsystems in the form of (3) is described as follows:

| (4a) | ||||

| (4b) | ||||

where represents a block matrix where is the submatrix in the th row and the th column; .

2.4 Centralized full-information estimation

Full-information estimation (FIE) is an optimization-based state estimation approach, which can be viewed as a least-squares estimation method that minimizes the cumulative sum of squared errors from the initial time instant to the current time instant. Specifically, at each sampling instant , based on the linear model in (4), a centralized FIE design can be formulated as follows [26]:

| (5a) | |||

| (5b) | |||

where is an estimate of state ; is an a priori estimate of initial state ; and are estimates of process disturbances and measurement noise , respectively; , , and are positive-definite weighting matrices. It is noted that as the number of sampling instants increases over time, the associated optimization problem in (5) becomes increasingly complex and intractable. FIE is important for the design and analysis of the MHE approaches [26, 27]. In the next section, we introduce a distributed FIE design, which is used to guide the development of the DMHE approach.
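To make the least-squares structure of FIE concrete, the sketch below evaluates a full-information cost for a scalar linear system. It is an illustration under stated assumptions: the model `x(k+1) = a*x(k) + w(k)`, `y(k) = c*x(k) + v(k)`, the argument names, and the use of scalar inverse weights `p_inv`, `q_inv`, `r_inv` are all hypothetical choices and may differ in detail from (5).

```python
def fie_cost(x0_hat, w_hat, y, a, c, x0_bar, p_inv, q_inv, r_inv):
    """Evaluate a scalar full-information least-squares cost.

    x0_hat : candidate initial state
    w_hat  : candidate disturbances, one per transition (length k)
    y      : measurements y(0), ..., y(k) (length k + 1)
    """
    # Penalty on the deviation from the a priori initial estimate.
    cost = p_inv * (x0_hat - x0_bar) ** 2
    x = x0_hat
    for j, y_j in enumerate(y):
        cost += r_inv * (y_j - c * x) ** 2   # measurement-noise penalty
        if j < len(w_hat):
            cost += q_inv * w_hat[j] ** 2    # disturbance penalty
            x = a * x + w_hat[j]             # model constraint
    return cost
```

The number of decision variables (`x0_hat` plus one entry of `w_hat` per transition) grows with every new sample, which is the reason the problem becomes intractable over time and motivates a moving window with an arrival cost.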

3 Distributed full-information estimation

In this section, a distributed FIE problem is formulated based on the linear subsystem models in (3). First, we partition the global objective function of the centralized FIE design in (5), following [24]. Subsequently, each partitioned objective function is integrated with the sensor measurements from interconnected subsystems to construct the local objective function of each local estimator.

3.1 Construction of local objective functions

Following [24], the global objective function of the centralized FIE algorithm is decomposed into , , such that . The objective function of each local estimator is described as follows:

| (6) |

where is an estimate of the th subsystem state ; is an initial guess of ; and are the estimates of disturbances and measurement noise of the th subsystem, respectively. It is noted that the sensor measurements from the interconnected subsystems can provide valuable information for estimating the local subsystem states. Inspired by the objective function designs for the distributed state estimation algorithms proposed in [8, 10, 24], each local objective function of the local estimator of distributed FIE is presented as follows:

| (7) |

where is an estimate of measurement noise of the entire system within estimator ; , , and are the th diagonal blocks of weighting matrices , , and , respectively. The key difference between in (6) and in (7) is that includes additional information on the sensor measurements of interconnected subsystems, which can provide valuable insights for the reconstruction of the local subsystem states.
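The partitioning of a global quadratic objective into local objectives relies on the weighting matrices being block diagonal. The sketch below checks this for diagonal weights; the function names and the numerical values are hypothetical and serve only to illustrate why the sum of the local costs recovers the global cost.

```python
def weighted_sq(v, weights):
    """Squared weighted norm of v for a diagonal weighting matrix."""
    return sum(w * vi ** 2 for w, vi in zip(weights, v))

def global_cost(blocks, weight_blocks):
    """Cost evaluated on the stacked vector with the stacked diagonal weights."""
    v = [x for b in blocks for x in b]
    w = [x for b in weight_blocks for x in b]
    return weighted_sq(v, w)

def local_costs(blocks, weight_blocks):
    """One cost per subsystem, each using only its own diagonal block."""
    return [weighted_sq(b, w) for b, w in zip(blocks, weight_blocks)]
```

With a weighting matrix that has nonzero off-diagonal blocks, cross terms between subsystems would appear and the equality between the global cost and the sum of local costs would no longer hold.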

3.2 Distributed full-information estimation formulation

At sampling instant , the local estimator for the th subsystem of distributed FIE can be formulated by leveraging the local objective function proposed in (7):

| (8a) | |||

| (8b) | |||

| (8c) | |||

where and comprise the columns of and with respect to the state of , respectively; is a (conservative) state estimate of subsystem for sampling instant made available to the estimator of subsystem , which is determined as:

| (9) |

In (9), is the state estimate of calculated by the th estimator at sampling instant , and denotes an initial guess of , . We utilize the state estimates obtained at the previous time instant because they are calculated based on the most recent available sensor measurements. In the remainder of this paper, we simplify the subscript by omitting “”, and we denote by for brevity.

4 Distributed moving horizon estimation for linear systems

In this section, a linear DMHE design with a recursive update of arrival costs for the local estimators is presented. The stability of the proposed linear DMHE design is analyzed. First, we obtain an analytical recursive expression of the arrival cost by leveraging the distributed FIE in (8). Following this, we formulate a DMHE design where the arrival cost of each local estimator is updated using the derived recursive method for linear systems with state constraints. Additionally, we prove the stability of the proposed DMHE approach.

Inspired by the DMHE designs proposed by [8] and [12], the th estimator of the proposed DMHE at sampling instant solves the optimization below:

| (10a) | ||||

| with | ||||

| (10b) | ||||

where is the length of the estimation window; is the arrival cost that summarizes the historical information excluded from the estimation window. The detailed arrival cost design will be discussed in Section 4.1. Before proceeding, we introduce two lemmas, which will be used to derive the expression of the arrival cost for each estimator of the proposed DMHE design.

Lemma 1.

([28]) Consider the following two quadratic functions:

where and are positive definite matrices; a and b are two vectors of compatible dimensions. Then, it holds that

where
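As a sanity check on identities of this kind, the sketch below verifies the standard completing-the-square result for two scalar quadratics that share the same argument. This is an assumed special case chosen for illustration; the precise statement in [28] may be more general, and the names `combine_quadratics`, `W`, `c`, and `d` are hypothetical.

```python
def combine_quadratics(A, a, B, b):
    """Return (W, c, d) with A*(x-a)**2 + B*(x-b)**2 == W*(x-c)**2 + d.

    A and B must be positive (scalar counterpart of positive definiteness).
    """
    W = A + B                      # combined weight
    c = (A * a + B * b) / W        # weighted average of the two centers
    d = A * B / W * (a - b) ** 2   # constant term, independent of x
    return W, c, d
```

Because `d` does not depend on `x`, it can be dropped when the combined quadratic is minimized, which is how constant terms are treated in the derivations that follow.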

Lemma 2.

4.1 Arrival costs for linear systems

By comparing the design of the distributed FIE in (8) with the proposed DMHE in (10), the arrival cost can be constructed by deriving the analytical solution to the following optimization problem:

where

First, we consider the arrival cost , which can be formulated by deriving the analytical solution to the optimization problem below:

| (11a) | ||||

| where | ||||

| (11b) | ||||

Considering (8b) and Lemma 1, can be rewritten as

| (12) |

where

As is a constant, we do not need to take it into account when solving the optimization problem (11). According to (8a), Lemma 1, and Lemma 2, it is further obtained that

| (13) |

where

| (14a) | ||||

| (14b) | ||||

| (14c) | ||||

Let and . in (14c) can be reformulated as follows:

where

| (15) |

According to Lemma 2, we have

| (16) |

Then, the first term on the right-hand-side of (4.1) can be rewritten as:

| (17) |

Substituting (16) and (4.1) into (4.1) yields

| (18) |

Therefore, it is further obtained that . By minimizing in (4.1) with respect to , the arrival cost for the th estimator can be derived as follows:

Next, let us proceed to the arrival cost by addressing the following optimization problem:

| (19a) | ||||

| where | ||||

| (19b) | ||||

By following the same procedure adopted to derive (4.1), can be expressed as:

up to a constant term. Based on (8c), it is further obtained that

where

is a constant and is neglected in deriving . Then, by applying the same procedure used to obtain (4.1) and (4.1), it is derived that:

| (20) |

where

Let us further define . Based on (4.1), we apply the forward dynamic programming method to the optimization problem (19), and it is obtained that

| (21) |

It is noted that the optimal value of for optimization problem (21) is . Therefore, it is further derived

| (22) |

In the following, according to (8a), the objective function of (22) can be rewritten as

| (23) |

where

Let . By minimizing in (4.1) with respect to , the arrival cost in (22) of the th estimator can be obtained as:

By iteratively applying the same procedure, the arrival cost for the subsequent sampling instants can be derived. Specifically, the recursive expression of the arrival cost for the th subsystem is presented as follows:

| (24) |

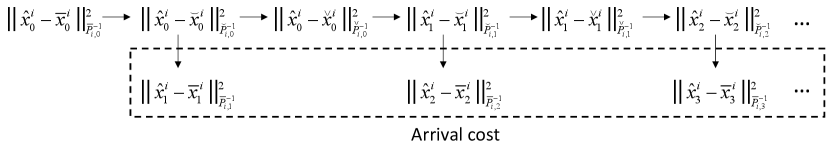

where the update of arrival cost has three steps, as illustrated in Figure 1. The details of the three steps are as follows:

• From to :

(25a) (25b)

• From to :

(25c) (25d)

• From to the arrival cost :

(25e) (25f)

By leveraging the arrival cost obtained in (25), the proposed DMHE design for the linear system in (4) is completed. Specifically, at sampling instant , the th estimator of the proposed DMHE algorithm solves an optimization problem as follows:

| (26a) | |||

| (26b) | |||

| (26c) | |||

| (26d) | |||

In (26), penalizes the deviation of estimated subsystem states from the nominal process subsystem model over an estimation window, ensuring that the state estimates can follow the system dynamics. penalizes the discrepancy between the predicted measurements and actual measurements to ensure that the estimated states comply with measured outputs. The arrival cost summarizes the previous information that is not considered within the current estimation window.

4.2 Stability analysis

In this section, inspired by [30], we perform the stability analysis for the proposed DMHE method in (26), where the arrival costs of local estimators are approximated using a recursive approach for linear systems in (4) with state constraints. Before proceeding further, we introduce several matrices and one lemma as follows:

Lemma 3.

To establish the stability of the proposed DMHE algorithm, we modify the objective function of (26) by incorporating a constant term and rewrite the th estimator of the proposed DMHE method in (26). Specifically, at sampling instant , the th estimator of the proposed DMHE approach in (26) can be written as:

| (27a) | |||

| (27b) | |||

| (27c) | |||

| (27d) | |||

| where | |||

| (27e) | |||

where is the optimal value of the th estimator of the proposed DMHE approach calculated at sampling instant . The inclusion of the constant ensures that the sequence is non-decreasing. By leveraging the monotonicity and boundedness of , the sequence is convergent, as demonstrated in the proof of Proposition 2. This convergence forms the basis for proving the stability of the proposed linear DMHE algorithm in Theorem 1. It is noted that is a known value at sampling instant and can be disregarded when solving the optimization problem (27). Therefore, the reformulated DMHE method in (27) is equivalent to the proposed DMHE design in (26).

By creating augmented vectors , , , , and , , the subsystem model in (27a)-(27c) can be concatenated to form a compact collective estimation model:

| (28a) | ||||

| (28b) | ||||

| (28c) | ||||

where ; ; ; ; , ; . We further define a collective form of the objective function by summarizing the local objective functions for all :

| (29) |

where ; ; ; . It is worth mentioning that the solution of the proposed DMHE design in (27) is equivalent to solving the following optimization problem:

| (30) |

Similar to the stability analysis conducted in [30, 14], for a sequence , , we define the transit cost of the proposed DMHE approach in (27) for the subsystem :

| (31) |

Let , the collective transit cost of (30) takes the following form:

such that

Before introducing Proposition 1 and Lemma 4, an unconstrained DMHE design is formulated as follows:

| (32) |

The associated transit cost of the unconstrained DMHE approach in (32) for the th subsystem is described as follows:

| (33) |

Proposition 1.

Proof.

Based on (27e), substituting the constraints (27a)-(27c) and the constraints for , into yields:

| (34) |

From Lemma 1, it holds that

| (35) |

where

represents a constant, which will be defined later. Similarly, it is further obtained:

| (36) |

where

and

| (37) |

where

Therefore, by substituting (Proof), (Proof), and (Proof) into (Proof), we have

| (38) |

It is noted that the optimal solution of (Proof) is . Consequently, we can obtain that

| (39) |

Define ,

Consequently, (Proof) can be rewritten as

| (40) |

where ; ; ; ; . According to Lemma 3, (Proof) is equivalent to the equation

| (41) |

where

| (42a) | ||||

| (42b) | ||||

By optimality, , where , are the global minimizers of transit cost in (4.2), and the corresponding global minimum of the transit cost in (4.2) is . Therefore, we have that , and the constant term in (41) is .

Lemma 4.

Before proceeding further, we introduce a matrix and an assumption that will be used to prove the stability of the proposed DMHE method in (27).

| (43) |

Proof.

Based on (4.2) and (30), we can obtain the following

| (45) |

Therefore, the sequence is increasing. According to optimality , it follows that

| (46) |

where , , is the actual state generated by (4) without process disturbances and measurement noise. From (28), by choosing and for , the trajectory of , for , can be generated. Then, we have

| (47) |

Therefore, by optimality, it holds that

| (48) | ||||

Next, our objective is to prove that . To achieve this, we analyze each term on the right-hand-side of (48). Specifically, the first term on the right-hand-side of (48) satisfies

| (49) |

where represents the block matrix in the th row and the th column of the matrix . Similarly, by analyzing the remaining terms on the right-hand-side of (48), one can obtain

| (50a) | ||||

| (50b) | ||||

| (50c) | ||||

where is composed of the columns of with respect to subsystem state . Then, substituting (Proof) and (50) into (48) yields

where is defined in (4.2). Considering Assumption 1 and Lemma 4, one can obtain

| (51) |

From (46) and (Proof), we can iterate this procedure and obtain that

| (52) |

Considering (45) and (52), the sequence of converges as it is increasing and bounded. Consequently, (44) is proven.

We further define the estimation error at sampling instant calculated at sampling instant as . Then, the estimation error of sampling instant calculated at the previous sampling instant is denoted by .

Theorem 1.

If Assumption 1 holds, then there exists a sequence , , such that the sequence of estimation error within the estimation window for the entire system in (4) generated by the proposed DMHE in (27) is described by

Additionally, the estimation error converges, if the spectral radius of matrix satisfies

where

| (60) | ||||

| (68) |

Proof.

In the noise-free setting (i.e., and , ), the actual state satisfies

| (69a) | ||||

| (69b) | ||||

Considering (28a) and (69a), one can obtain

| (70a) | ||||

| (70b) | ||||

From (70), it is further derived that

| (71) |

By taking into account (28c), (69b), and (71), we have

| (72) |

Therefore, (Proof) is equivalent to

Based on Proposition 2, it is obtained that

where and are defined in (60). Let and denote asymptotically vanishing variables, i.e., , . Then, it holds

| (73) |

By concatenating (71) for , we can obtain

| (74) |

where and are defined in (60). From (73) and (74), it holds

where , which satisfies . Additionally, the estimation error converges to zero when .
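The role of the spectral radius condition can be illustrated numerically. The sketch below assumes an error recursion of the generic form `e(k+1) = M e(k) + delta(k)` with a vanishing perturbation `delta(k)`; the 2x2 matrix, the choice `delta(k) = 0.5**k`, and the function names are hypothetical and are not taken from the matrices defined in Theorem 1.

```python
import cmath

def spectral_radius_2x2(m):
    """Largest eigenvalue magnitude of a 2x2 matrix via its characteristic polynomial."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    root = cmath.sqrt(tr * tr - 4.0 * det)
    return max(abs((tr + root) / 2.0), abs((tr - root) / 2.0))

def simulate_error(m, e0, steps):
    """Iterate e(k+1) = M e(k) + delta(k) with a vanishing delta(k) = 0.5**k."""
    e = list(e0)
    for k in range(steps):
        delta = 0.5 ** k
        e = [m[0][0] * e[0] + m[0][1] * e[1] + delta,
             m[1][0] * e[0] + m[1][1] * e[1] + delta]
    return e
```

For example, `[[0.5, 0.2], [0.1, 0.4]]` has eigenvalues 0.6 and 0.3, so its spectral radius is below one and the simulated error decays; a matrix such as `[[1.1, 0.0], [0.0, 0.5]]` violates the condition and the error grows.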

5 Distributed moving horizon estimation for nonlinear systems

In this section, the arrival cost design obtained for linear systems is extended to approximate the arrival costs of the local estimators in the nonlinear constrained context.

5.1 Arrival cost approximation for nonlinear systems

We extend the arrival cost design for linear unconstrained systems, as depicted in (24), to approximate the arrival costs for nonlinear systems. The nonlinear subsystem model in (2) is used as the model basis for each local estimator. Through successively linearizing the subsystem model in (2) at each sampling instant , we obtain an approximation of the arrival cost for the th estimator. The linearization is performed as follows:

| (75) |

Then, the corresponding matrices , and , , can be derived from in (75). These matrices are utilized to update the arrival cost of the proposed constrained nonlinear DMHE. Specifically, for each subsystem , , the expression of the proposed arrival cost design for nonlinear systems is obtained from (24) and (25) by replacing with , with , , and with .
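One way to obtain the linearized matrices in practice is a finite-difference Jacobian. The sketch below is an illustration under assumptions: the paper may well use analytical derivatives, the linearization point is left to the caller (for instance, the most recent state estimate), and `f_example` is a hypothetical map used only to exercise the routine.

```python
import math

def jacobian_fd(f, x, eps=1e-6):
    """Central-difference Jacobian of f at x; returns a list of rows."""
    fx = f(x)
    jac = [[0.0] * len(x) for _ in fx]
    for j in range(len(x)):
        xp = list(x)
        xm = list(x)
        xp[j] += eps   # perturb the j-th coordinate forward
        xm[j] -= eps   # and backward
        fp, fm = f(xp), f(xm)
        for i in range(len(fx)):
            jac[i][j] = (fp[i] - fm[i]) / (2.0 * eps)
    return jac

def f_example(x):
    # Hypothetical nonlinear map, not the process model of this paper.
    return [x[0] * x[1], math.sin(x[0])]
```

Columns of the Jacobian that correspond to the local subsystem states and those that correspond to the states of interacting subsystems can then be separated to form the local and interaction matrices required by the recursive arrival cost update.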

5.2 Formulation of nonlinear MHE-based estimators

Based on the arrival cost approximation for nonlinear constrained systems outlined in Section 5.1, at each sampling instant , the local estimator of the DMHE algorithm for the nonlinear system in (2) is as follows:

| (76a) | |||

| (76b) | |||

| (76c) | |||

| (76d) | |||

| (76e) | |||

In (76), }, where represents the state estimate of subsystem and serves as the decision variable of the optimization problem in (76), and is determined based on the estimate of each interconnected subsystem , , generated at the previous sampling instant ; concatenates all the of the interconnected subsystems , ; and are two compact sets that contain and , respectively. When solving the MHE-based optimization problem for the th subsystem, only the state estimates associated with subsystem , i.e., , , are treated as decision variables. The state estimates of the interconnected subsystems , , are considered as known inputs to the th estimator.

Algorithm 1 outlines the implementation steps for the proposed DMHE approach for the nonlinear system in (2) with a recursive update of arrival costs of the local estimators, which can be followed to generate the optimal state estimates for the th subsystem, .

At each sampling time , the MHE-based estimator for the th subsystem, , carries out the following steps:

1. Receive measured outputs , and optimal estimates of the th subsystem, , obtained at the previous sampling instant from each estimator , .

2.

3.

4. Solve (76) to generate optimal state estimates (i.e., ).

5. Set . Go to step 1.
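The per-sample loop of a single local estimator can be sketched as a skeleton. All four callbacks are hypothetical placeholders, and the two intermediate steps (linearizing the model and updating the arrival cost) are assumptions inferred from Section 5.1 rather than a transcription of the algorithm steps.

```python
def run_local_estimator(steps, receive, linearize, update_arrival_cost, solve_mhe):
    """Execute the per-sample loop of one local estimator.

    receive(k)                      -> data exchanged at sampling instant k
    linearize(data)                 -> linearized model (assumed intermediate step)
    update_arrival_cost(prev, mdl)  -> new arrival cost (assumed intermediate step)
    solve_mhe(data, arrival)        -> local state estimate
    """
    arrival = None   # no arrival cost information before the first update
    estimates = []
    k = 0
    while k < steps:
        data = receive(k)                               # receive outputs and neighbor estimates
        model = linearize(data)                         # assumed: successive linearization
        arrival = update_arrival_cost(arrival, model)   # assumed: recursive update
        estimates.append(solve_mhe(data, arrival))      # solve the local optimization
        k += 1                                          # advance to the next sampling instant
    return estimates
```

Each local estimator solves its optimization problem once per sampling period in this loop, so no inner iteration between estimators is needed within a sampling period.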

6 Application to a reactor-separator process

6.1 Process description

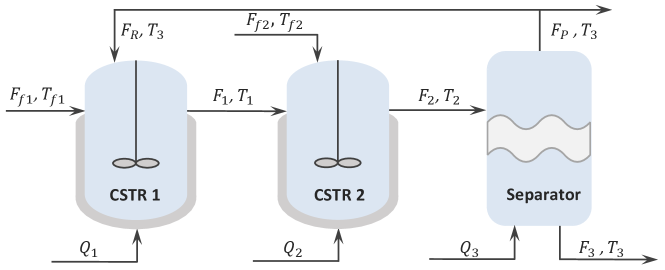

In this section, we consider a reactor-separator chemical process that consists of two continuous stirred tank reactors (CSTRs) and one flash tank separator. Based on the physical topology presented in Figure 2, we partition this process into three subsystems, with each subsystem accounting for one vessel.

This chemical process involves two reactions: the first reaction converts reactant into desired product ; the second reaction converts into side product . The system states include the mass fractions of reactant (denoted by , ), the mass fractions of product (denoted by , ), and the temperatures in three vessels (denoted by , ). Among these states, only temperatures in the three vessels can be measured online. The details of the first-principles nonlinear dynamic model and a more comprehensive description of this chemical process can be found in [15]. The objective is to implement the proposed DMHE approach, where the arrival costs for the local estimators are updated using a recursive method for estimating the nine system states based on the measured outputs , .

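Table 1: Initial state and initial guess of the process states, ordered per vessel as reactant mass fraction, product mass fraction, and temperature.

| | Reactant 1 | Product 1 | Temp. 1 | Reactant 2 | Product 2 | Temp. 2 | Reactant 3 | Product 3 | Temp. 3 |

| Initial state | 0.1939 | 0.7404 | 528.3482 | 0.2162 | 0.7190 | 520.0649 | 0.0716 | 0.7373 | 522.3765 |

| Initial guess | 0.2521 | 0.9625 | 686.8525 | 0.2810 | 0.9346 | 676.0844 | 0.0931 | 0.9585 | 679.0894 |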

In the simulations, the heat exchange rates considered in this process are , , and . The initial state that is utilized to generate the actual state trajectories of this process is presented in Table 1. All the states are scaled to ensure equal importance is assigned to states of different magnitudes. Unknown process disturbances and measurement noise are generated following a zero-mean Gaussian distribution with a standard deviation of for process disturbances and for measurement noise, which are further added to the states and output measurements in the scaled coordinate, respectively.

6.2 Simulation results

| | Proposed DMHE | DMHE-1 | DMHE-2 |

| RMSE | 0.1384 | 0.1425 | 0.2595 |

The estimation window is . The initial guess for the proposed DMHE is picked as , as presented in Table 1. The initial weighting matrices , , and , , are chosen as , , and . We impose constraints on the estimates of and , , generated by the proposed DMHE such that they stay within the range of , while the estimates of temperatures , , are made positive.
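The relation between the two rows of Table 1 can be checked with a few lines of arithmetic. The sketch below assumes that the first row is the actual initial state and the second row is the initial guess; under that reading, every entry of the guess is approximately 1.3 times the corresponding actual value.

```python
# Values copied from Table 1; the row interpretation is an assumption.
INITIAL_STATE = [0.1939, 0.7404, 528.3482, 0.2162, 0.7190, 520.0649,
                 0.0716, 0.7373, 522.3765]
INITIAL_GUESS = [0.2521, 0.9625, 686.8525, 0.2810, 0.9346, 676.0844,
                 0.0931, 0.9585, 679.0894]

def guess_ratios(actual, guess):
    """Element-wise ratio of the initial guess to the actual initial state."""
    return [g / a for a, g in zip(actual, guess)]
```

A ratio of about 1.3 corresponds to a 30% initial estimation error on every state, which gives a sense of how far the estimators start from the true trajectory.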

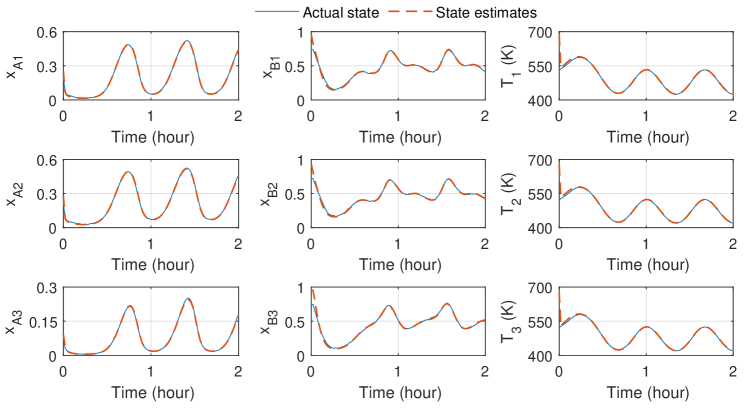

The trajectories of the state estimates given by the proposed DMHE algorithm and the actual states are presented in Figure 3. The proposed DMHE approach provides accurate estimates of the ground truth of all the process states, which demonstrates the robustness of the proposed DMHE approach against unknown disturbances. Additionally, we evaluate and compare the estimation performance of the proposed DMHE approach with two DMHE algorithms in which each local estimator uses only the sensor measurements of the corresponding subsystem: 1) DMHE-1, where the arrival cost is constructed as a weighted squared error between the state estimate and the a priori state prediction, with the weighting matrix determined by a constant matrix; 2) DMHE-2, where the arrival cost is not considered in the DMHE algorithm. The constant weighting matrices , , and , , of DMHE-1, are chosen the same as the initial weighting matrices of the proposed DMHE method. The root mean squared errors (RMSE) for the three DMHE algorithms in the scaled coordinate are shown in Table 2. The proposed DMHE algorithm achieves the lowest RMSE (0.1384), compared with 0.1425 for DMHE-1 and 0.2595 for DMHE-2.
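The comparison in Table 2 can be reproduced in form with a small helper. The sketch below assumes the usual definition of RMSE over paired scalar samples; how the errors are pooled over states and sampling instants in the reported numbers is not specified here, so `rmse` is an illustrative definition rather than the exact metric used.

```python
import math

def rmse(estimates, actuals):
    """Root mean squared error over paired scalar samples."""
    n = len(estimates)
    return math.sqrt(sum((e - a) ** 2 for e, a in zip(estimates, actuals)) / n)

def relative_reduction(baseline, proposed):
    """Fractional RMSE reduction of `proposed` relative to `baseline`."""
    return (baseline - proposed) / baseline
```

Applied to the values in Table 2, `relative_reduction(0.1425, 0.1384)` is about 0.029 and `relative_reduction(0.2595, 0.1384)` is about 0.467, i.e., roughly 2.9% lower RMSE than DMHE-1 and 46.7% lower than DMHE-2.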

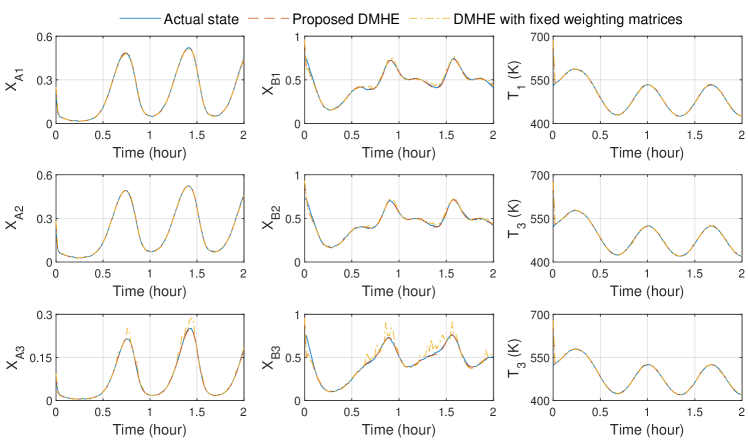

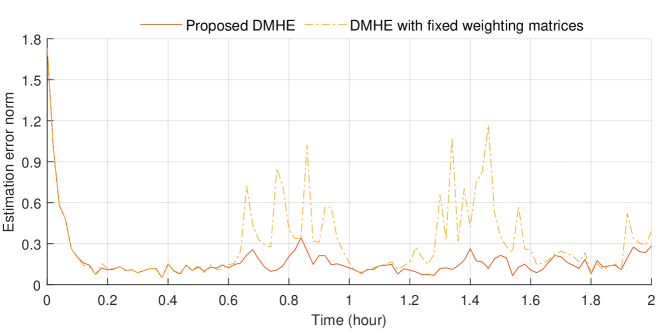

To further illustrate the superiority of employing a recursive approach to approximate and update the arrival costs at each sampling instant, we compare it with DMHE-3, where the arrival costs of local estimators are designed as a weighted squared error between the estimate of state and the initial guess of state, weighted by a constant matrix throughout the simulation period. Different from DMHE-1 in the previous comparison, DMHE-3 incorporates sensor measurements from the interconnected subsystems into the objective function of the local estimator design in the same manner as our proposed DMHE approach. In this comparison, we randomly select the weighting matrices of DMHE-3 without fine-tuning for this process. Specifically, the constant weighting matrices , , and for the th estimator of DMHE-3 are diagonal matrices with the main diagonal elements set to 1, 0.001, and 0.001, respectively. These matrices for DMHE-3 also serve as the initial weighting matrices for our proposed DMHE algorithm. In contrast, the matrix will be updated following (25) and (75) at every sampling instant when conducting state estimation using our proposed DMHE approach.

The trajectories of the actual states and the estimates provided by both the proposed DMHE algorithm and DMHE-3 are shown in Figure 4, and the corresponding trajectories of the estimation errors are presented in Figure 5. The results demonstrate that the proposed DMHE approach outperforms DMHE-3 overall in terms of estimation accuracy. It is worth mentioning that when constant weighting matrices are employed for DMHE, extensive trial-and-error tuning is typically needed to obtain weighting matrices that give good estimation performance. In contrast, the arrival cost of each local estimator is updated at each sampling instant in our proposed DMHE algorithm, which tolerates less accurate initial parameters and is therefore more favorable for implementation.
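The reduced sensitivity to the initial weighting can be illustrated with a simpler, well-known analogue. The sketch below does not implement the update rules (25) and (75); it uses the standard centralized Kalman covariance recursion, which gives the exact arrival cost weighting for unconstrained linear MHE, on a hypothetical two-state system. All matrices and the helper names `riccati_step` and `run` are assumptions chosen for illustration.

```python
import numpy as np

def riccati_step(P, A, C, Q, R):
    """One step of the standard Kalman covariance recursion."""
    S = C @ P @ C.T + R                       # innovation covariance
    K = A @ P @ C.T @ np.linalg.inv(S)        # predictor gain
    return A @ P @ A.T - K @ C @ P @ A.T + Q

def run(P0, steps, A, C, Q, R):
    """Apply the recursion `steps` times starting from P0."""
    P = P0.copy()
    for _ in range(steps):
        P = riccati_step(P, A, C, Q, R)
    return P

# Hypothetical observable two-state system
A = np.array([[0.9, 0.1], [0.0, 0.8]])
C = np.array([[1.0, 0.0]])
Q = 0.01 * np.eye(2)
R = np.array([[0.1]])

# Two very different initial weightings
P_small = run(1e-3 * np.eye(2), 200, A, C, Q, R)
P_large = run(1e3 * np.eye(2), 200, A, C, Q, R)
gap = float(np.max(np.abs(P_small - P_large)))
print(gap)
```

In this example, both initializations lead to essentially the same matrix after repeated updates, whereas a constant weighting would retain whatever value was chosen at the start.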

Remark 1.

Compared with the DMHE approaches in [8, 10, 12], which require iterative executions within each sampling period, our proposed DMHE method is computationally more efficient. This improvement is attributed to the update of the arrival cost at each sampling instant. Our approach employs a recursive method to provide a more accurate approximation of the arrival cost, which not only improves the accuracy of the state estimates but also reduces the computational complexity, because each local estimator needs to be executed only once within each sampling period.

Remark 2.

One of the important tuning parameters that affect the trade-off between the estimation accuracy and the computational complexity is the length of the estimation window for the local estimators, denoted by N. From an application perspective, a larger N has the potential to enhance the estimation accuracy of the DMHE approach. Meanwhile, increasing N also increases the complexity of the online optimization problems associated with the local estimators, leading to a higher computational burden. Therefore, the window length N should be adjusted appropriately to achieve a good trade-off between the estimation accuracy and the computational complexity.
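To give a feel for how the optimization problem grows with N, the following sketch counts decision variables and residual terms for a generic unconstrained linear MHE least-squares problem. It assumes the decision variables are the state at the start of the window plus one disturbance vector per step, and that measurements are available at both ends of the window; the function name `mhe_problem_size` and the cubic cost estimate for a dense solve are illustrative assumptions, not the formulation of the proposed local estimators.

```python
def mhe_problem_size(n_states, n_outputs, horizon):
    """Sizes of a generic unconstrained linear MHE least-squares problem."""
    # Initial state of the window plus one disturbance vector per step
    n_vars = n_states * (horizon + 1)
    n_residuals = (
        n_states                        # arrival cost term
        + n_states * horizon            # disturbance penalties
        + n_outputs * (horizon + 1)     # measurement residuals
    )
    # Order-of-magnitude cost of a dense solve (structure not exploited)
    dense_cost = n_vars ** 3
    return n_vars, n_residuals, dense_cost

# Hypothetical subsystem with 3 states and 1 measured output
sizes = {N: mhe_problem_size(3, 1, N) for N in (5, 10, 20)}
for N, size in sizes.items():
    print(N, size)
```

Under these assumptions, the number of variables grows linearly with N while the cost of a dense solve grows roughly cubically, which is the kind of growth that has to be weighed against the accuracy gained from a longer window.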

7 Concluding Remarks

We addressed a partition-based distributed state estimation problem for general nonlinear systems. A recursive approach was introduced to approximate the arrival cost for each MHE-based estimator of the DMHE scheme. A partition-based distributed full-information estimation formulation was employed to derive an analytical expression for the arrival costs of the local estimators of the DMHE algorithm in the linear unconstrained context. Based on the derived arrival cost for each local estimator, a DMHE estimator for constrained linear systems was formulated, and the stability of the resulting DMHE scheme for linear systems was proven. Subsequently, through successive linearization of the nonlinear subsystem models, the arrival cost design for linear unconstrained systems was extended to the nonlinear context. Accordingly, we proposed a partition-based DMHE algorithm for constrained nonlinear processes. The proposed DMHE method was applied to a simulated chemical process, and the results confirmed its efficacy and its advantage over the DMHE designs considered in the comparison.

In future research, we will investigate the stability of the DMHE approach for nonlinear systems and develop a robust DMHE algorithm for systems with uncertain model parameters.

Acknowledgment

This research is supported by the Ministry of Education, Singapore, under its Academic Research Fund Tier 1 (RG63/22), and Nanyang Technological University, Singapore (Start-Up Grant).

References

- [1] P. D. Christofides, R. Scattolini, D. M. de la Peña, J. Liu. Distributed model predictive control: A tutorial review and future research directions. Computers & Chemical Engineering, 51:21–41, 2013.

- [2] G. Battistelli, L. Chisci. Stability of consensus extended Kalman filter for distributed state estimation. Automatica, 68:169–178, 2016.

- [3] X. Yin, J. Zeng, J. Liu. Forming distributed state estimation network from decentralized estimators. IEEE Transactions on Control Systems Technology, 27(6):2430–2443, 2018.

- [4] P. Daoutidis, W. Tang, A. Allman. Decomposition of control and optimization problems by network structure: Concepts, methods, and inspirations from biology. AIChE Journal, 65(10), 2019.

- [5] W. Tang, A. Allman, D. B. Pourkargar, P. Daoutidis. Optimal decomposition for distributed optimization in nonlinear model predictive control through community detection. Computers & Chemical Engineering, 111:43–54, 2018.

- [6] S. Chen, Z. Wu, D. Rincon, P. D. Christofides. Machine learning-based distributed model predictive control of nonlinear processes. AIChE Journal, 66(11):e17013, 2020.

- [7] M. Farina, G. Ferrari-Trecate, R. Scattolini. Moving-horizon partition-based state estimation of large-scale systems. Automatica, 46(5):910–918, 2010.

- [8] R. Schneider, W. Marquardt. Convergence and stability of a constrained partition-based moving horizon estimator. IEEE Transactions on Automatic Control, 61(5):1316–1321, 2015.

- [9] R. Schneider. A solution for the partitioning problem in partition-based moving-horizon estimation. IEEE Transactions on Automatic Control, 62(6):3076–3082, 2017.

- [10] R. Schneider, R. Hannemann-Tamás, W. Marquardt. An iterative partition-based moving horizon estimator with coupled inequality constraints. Automatica, 61:302–307, 2015.

- [11] X. Yin, B. Huang. Event-triggered distributed moving horizon state estimation of linear systems. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 52(10):6439–6451, 2022.

- [12] X. Li, S. Bo, Y. Qin, X. Yin. Iterative distributed moving horizon estimation of linear systems with penalties on both system disturbances and noise. Chemical Engineering Research and Design, 194:878–893, 2023.

- [13] X. Yin, J. Liu. Distributed moving horizon state estimation of two-time-scale nonlinear systems. Automatica, 79:152–161, 2017.

- [14] M. Farina, G. Ferrari-Trecate, C. Romani, R. Scattolini. Moving horizon estimation for distributed nonlinear systems with application to cascade river reaches. Journal of Process Control, 21(5):767–774, 2011.

- [15] J. Zhang, J. Liu. Distributed moving horizon state estimation for nonlinear systems with bounded uncertainties. Journal of Process Control, 23(9):1281–1295, 2013.

- [16] J. Zeng, J. Liu. Distributed moving horizon state estimation: Simultaneously handling communication delays and data losses. Systems & Control Letters, 75:56–68, 2015.

- [17] C. C. Qu, J. Hahn. Computation of arrival cost for moving horizon estimation via unscented Kalman filtering. Journal of Process Control, 19(2):358–363, 2009.

- [18] R. López-Negrete, S. C. Patwardhan, L. T. Biegler. Constrained particle filter approach to approximate the arrival cost in moving horizon estimation. Journal of Process Control, 21(6):909–919, 2011.

- [19] C. V. Rao, J. B. Rawlings, J. H. Lee. Constrained linear state estimation – a moving horizon approach. Automatica, 37(10):1619–1628, 2001.

- [20] M. Gharbi, C. Ebenbauer. Proximity moving horizon estimation for linear time-varying systems and a Bayesian filtering view. IEEE Conference on Decision and Control, 3208–3213, Nice, France, 2019.

- [21] C. V. Rao, J. B. Rawlings. Constrained process monitoring: Moving-horizon approach. AIChE Journal, 48(1):97–109, 2002.

- [22] M. Gharbi, F. Bayer, C. Ebenbauer. Proximity moving horizon estimation for discrete-time nonlinear systems. IEEE Control Systems Letters, 5(6):2090–2095, 2020.

- [23] X. Li, S. Bo, X. Zhang, Y. Qin, X. Yin. Data-driven parallel Koopman subsystem modeling and distributed moving horizon state estimation for large-scale nonlinear processes. AIChE Journal, 70(3):e18326, 2024.

- [24] X. Li, A. W. K. Law, X. Yin. Partition-based distributed extended Kalman filter for large-scale nonlinear processes with application to chemical and wastewater treatment processes. AIChE Journal, 69(12):e18229, 2023.

- [25] X. Li, X. Yin. A recursive approach to approximate arrival costs in distributed moving horizon estimation. IFAC Conference on Nonlinear Model Predictive Control, Accepted.

- [26] P. K. Findeisen. Moving horizon state estimation of discrete time systems. Ph.D. thesis, University of Wisconsin–Madison, 1997.

- [27] S. Knüfer, M. A. Müller. Nonlinear full information and moving horizon estimation: Robust global asymptotic stability. Automatica, 150:110603, 2023.

- [28] J. Rawlings, D. Mayne. Postface to model predictive control: Theory and design. Nob Hill Pub, 5:155–158, 2012.

- [29] H. Henderson, S. Searle. On deriving the inverse of a sum of matrices. SIAM Review, 23(1):53–60, 1981.

- [30] M. Farina, G. Ferrari-Trecate, R. Scattolini. Moving horizon partition-based state estimation of large-scale systems–Revised version. arXiv:2401.17933, 2024.