Architecture-Aware Learning Curve Extrapolation via Graph Ordinary Differential Equation

Abstract

Learning curve extrapolation predicts neural network performance from early training epochs and has been applied to accelerate AutoML, facilitating hyperparameter tuning and neural architecture search. However, existing methods typically model the evolution of learning curves in isolation, neglecting the impact of neural network (NN) architectures, which influence the loss landscape and learning trajectories. In this work, we explore whether incorporating neural network architecture improves learning curve modeling and how to effectively integrate this architectural information. Motivated by the dynamical system view of optimization, we propose a novel architecture-aware neural differential equation model to forecast learning curves continuously. We empirically demonstrate its ability to capture the general trend of fluctuating learning curves while quantifying uncertainty through variational parameters. Our model outperforms current state-of-the-art learning curve extrapolation methods and pure time-series modeling approaches for both MLP and CNN-based learning curves. Additionally, we explore the applicability of our method in Neural Architecture Search scenarios, such as training configuration ranking.

I Introduction

Training neural architectures is a resource-intensive endeavor, often demanding considerable computational power and time. Researchers have developed various methodologies to predict the performance of neural networks early in the training process using learning curve data. Some methods (Domhan et al., 2015; Gargiani et al., 2019; Adriaensen et al., 2023) apply Bayesian inference to project these curves forward, while others employ time-series prediction techniques, such as LSTM networks. Despite their effectiveness, these approaches (Swersky et al., 2014; Baker et al., 2017) typically overlook the architectural features of networks, missing out on crucial insights that could be derived from the models’ topology.

On another front, architecture-based predictive models have been developed to forecast network performance based purely on NN structures (Shi et al., 2019; Friede et al., 2019; Wen et al., 2020; Tang et al., 2020; Yan et al., 2020; Ning et al., 2020; Xu et al., 2019; Siems et al., 2020). These models facilitate a deeper understanding of the relationship between architectures and their performance. However, they are limited in their ability to predict precise learning curve values at specific epochs and struggle to capture the variability in performance that a single architecture can exhibit under diverse training conditions.

Moreover, there is a growing interest in conceptualizing the optimization process during NN training as a dynamical system. By considering the step size in gradient descent as approaching zero, it is possible to formulate an ordinary differential equation for the model parameters (Su et al., 2016; Zhang et al., 2024; Maskan et al., 2023). This perspective is useful for analyzing the convergence of different optimization algorithms, especially for convex problems. Building on this foundational idea, we propose an innovative approach that models the evolution of learning curves using neural differential equations (Chen et al., 2018), tailored for a inductive setting where the trained learning curve predictor is applicable to new learning curves generated under various training configurations, such as different architectures, batch sizes, and learning rates. This approach leverages recent advancements in differential equations to provide a flexible framework capable of handling the complexities of modern neural training processes.

Our method merges the structural attributes of neural architectures with the dynamic nature of learning curves. We utilize a seq2seq variational autoencoder framework to analyze the initial stages of a learning curve and predict its future progression. This predictive capability is further enhanced by an architecture-aware component that produces a graph-level embedding from the architecture’s topology, employing techniques like Graph Convolutional Networks (GCN) (Kipf and Welling, 2016) and Differentiable Pooling (Ying et al., 2018). This integration not only improves the accuracy of learning curve extrapolations compared to existing methods but also significantly facilitates model ranking, potentially leading to more efficient use of computational resources, accelerated experimentation cycles, and faster progress in the field of machine learning.

Our contributions are twofold:

-

•

We introduce an architecture-aware, dynamical system-based approach to model learning curves from different architectures for a given source task. Our model can predict the learning curves of unseen architectures using only a few observed epochs.

-

•

Our method improves model ranking by analyzing just a limited number of learning curve epochs, such as 10. This approach speeds up model selection by 20 times compared to traditional full-cycle stochastic gradient descent training.

II Related Work

Learning curve extrapolation.

Previous studies have explored learning curve prediction through diverse approaches (Swersky et al., 2014; Domhan et al., 2015; Baker et al., 2017; Chandrashekaran and Lane, 2017; Gargiani et al., 2019; Ru et al., 2021; Klein et al., 2022; Adriaensen et al., 2023). A line of work has focused on Bayesian frameworks. Specifically, Domhan et al. (2015) utilized a weighted combination of functions to predict mean future validation accuracy and facilitate early termination of underperforming training runs. Building on this, Chandrashekaran and Lane (2017) extended basis function extrapolation by incorporating historical learning curves from previous training runs, while Klein et al. (2022) proposed a Bayesian neural network to flexibly model learning curves, removing the constraint that each epoch must outperform the previous one and thereby reducing instability in predictions. More recently, Adriaensen et al. (2023) applied a prior-data-fitted network training paradigm to enhance sampling efficiency from the posterior distribution of learning curves. Despite these advancements, existing methods overlook the role of architectural design, whereas our work explicitly incorporates this information to better model and understand the evolution of learning curves.

Architecture-based performance prediction. Advances in Graph Neural Networks (GNNs) have led to innovative methods for predicting the performance of neural network architectures (Shi et al., 2019; Friede et al., 2019; Wen et al., 2020; Tang et al., 2020; Yan et al., 2020; Ning et al., 2020; Xu et al., 2019; Siems et al., 2020). In exploring unsupervised learning strategies, Yan et al. (2020) used architecture embeddings created by a pre-trained model to feed a Gaussian Process model for performance prediction. These studies often incorporate foundational GNN models such as the GCN (Kipf and Welling, 2016) and the Graph Isomorphism Network (GIN) (Xu et al., 2018) to effectively process input architectures. Various training objectives have been considered, including Mean Squared Error (MSE) (Shi et al., 2019; Wen et al., 2020; Ning et al., 2020; Tang et al., 2020), graph reconstruction loss (Friede et al., 2019; Tang et al., 2020; Yan et al., 2020), and pair-wise ranking loss (Ning et al., 2020; Xu et al., 2019), highlights the diverse methods aimed at improving the prediction of architecture performance. Building on these foundations, our approach goes beyond simply using the graph representation to extract a scalar performance value; instead, it integrates the graph information into the ODE of loss and can extrapolate to any time step of interest, including the value at convergence.

Dynamical system modeling.

Neural ordinary differential equations (NODE) (Chen et al., 2018) introduce a general framework for parameterizing ODEs with deep neural networks, deriving backpropagation through the adjoint sensitivity method. Variational autoencoders combined with NODE, as introduced in (Rubanova et al., 2019), are used to predict dynamics from irregularly sampled time-series data. Building on these developments, recent works (Huang et al., 2020, 2021; Luo et al., 2023; Huang et al., 2024a; Jiang et al., 2023; Huang et al., 2024b) further integrate graph neural networks (GNNs) with NODE to model temporal graphs, allowing for the representation of evolving nodes and edges over time. In contrast to modeling individual nodal trajectories, this work employs GNNs as a graph reduction technique, using their embeddings to drive the evolution of learning curves within the NODE framework.

III Method

III-A Problem Formulation

Learning curves, such as train or test loss, are generated by optimizing a neural network on various source tasks including adult income classification, image classification, and housing price regression (Vanschoren et al., 2014). Our goal is to train a single latent ODE model capable of extrapolating learning curves for a given source task across different architectures. The model infers full learning curves of length using only the initial epochs and the corresponding network architecture, denoted as .

Our approach transforms the conventional discrete optimization process into a continuous domain, operating within the continuous time interval , where marks the start and corresponds to the last epoch . The time for each epoch, , is defined as with .

We employ a seq2seq variational autoencoder framework (Rubanova et al., 2019; Huang et al., 2020, 2021), where a sequence encoder parameterized by processes the early part of the learning curve to estimate the variational parameters of the posterior distribution , which determines the latent state at the start of the prediction period, represented by . A numerical solver then integrates an ODE function, denoted as , governing this latent state, starting from the initial condition . Each subsequent latent state is decoded independently to predict the output for . This process is mathematically represented as follows:

| (1) | ||||

| (2) | ||||

| (3) |

Here simulates the ODE function to compute the latent states at time steps (), given initial condition and the neural architecture . is a neural network mapping to the corresponding output Since the model has access to only partial information about the optimization process, it cannot fully determine the loss landscape or accurately trace the trajectory within it. Therefore, we employ a variational framework to quantify uncertainty by estimating the most probable values and their variability. We refer to our method as Learning Curve GraphODE (LC-GODE), highlighting the integration of architectures within the ODE framework to model learning curves. The illustration of our approach is shown in Figure 1.

III-B Architecture-aware Differential Equation

Observed time series encoder.

We use a sequence encoder to compute the mean and standard deviation of the posterior distribution , which is assumed to be Gaussian:

| (4) | |||

| (5) |

We implement this using an RNN with GRU units for the sequence encoder. Other encoder options include Self-Attention (Vaswani et al., 2017) and Temporal Convolutional Networks (TCN) (Pandey and Wang, 2019), which adapt well to varying observation lengths. We conduct an ablation study in the experimental section to evaluate the performance of these alternative sequence encoder implementations.

Architecture encoder.

Assuming the graph representation contains nodes, the adjacency matrix details node connections, where represents the edge from node to node . We analyze two foundational neural network types: MLPs and CNNs. In MLPs, nodes correspond to neurons and edges correspond to the presence of the connections between neurons, whereas in CNNs, nodes represent feature maps and edges depict operations like and convolutions, or average pooling. For CNNs, we adopt the cell-based representation (Dong and Yang, 2020; Liu et al., 2018), which consists of four principal building blocks: stems, normal cells, reduction cells, and classification heads. The stem block is a fixed sequence of convolutional layers to process the input images. This is followed typically by 14-20 cells with reduction cells placed at 1/3 and 2/3 of the total depth. A normal cell contains 4 nodes, each of which belongs to the set of operations: skip connections, identity or zero (indicating the presence or absence of connections between certain layers), and convolutions, and average pooling. Finally, the classification head employs a global pooling layer followed by a single fully connected layer and returns the network’s output. Since the cell is repeated throughout this macro-skeleton, a CNN can be uniquely represented by its cell.

To derive a graph-level embedding, we first implement node-level message passing, followed by global pooling to extract a global embedding. Our method differs from existing architecture-based performance prediction approaches (Liu et al., 2018; Wen et al., 2020; Knyazev et al., 2021) by treating the node as a feature map rather than focusing on operations. Nodal features are calculated from the in-degree and out-degree of each node, normalized by the total number of edges. For MLPs, edge features are binary, while for CNN cells, they are integers representing operation types: . The nodal feature matrix is denoted as . The graph encoding process returns a vector representation of the architecture.

| (6) |

For node-level message passing, we employ GCN layers and normalize the adjacency matrix to stabilize training, following (Kipf and Welling, 2016).

A GCN layer then transforms the nodal features into a hidden representation:

| (7) |

where is the feature transformation matrix. Applying GCN layers aggregates information from -hop neighbors. We employ learnable pooling, among other methods such as average- and max-pooling, and investigate each in our ablation study. The graph embedding contributes to the evolution of the latent state, as detailed in the next section, which introduces the latent ODE.

Latent ordinary differential equation.

The transformation from discrete to continuous domain is predicated on the assumption that the step size, or learning rate, approaches zero, thereby approximating the time derivative of NN parameters using (Su et al., 2016), where , denotes the objective function and parameters from the source task. Assuming the parameters implicitly depends on time , the time derivative of the training loss can be written as:

| (8) |

The ground truth ODE for loss is independent of time. Therefore we adopt autonomous differential equations to describe the continuous evolution of the learning curves. On the other hand, we do not directly use the exact formula, as this involves the computation of backpropagation of the source task, which is potentially computation intensive. Moreover, our primary goal is not to derive exact ODEs for each training configuration. Instead, we focus on efficiently inferring learning curves for new training configurations. To achieve this, we leverage the latent space, using the expressivity of hidden neurons to capture common patterns across learning curves from the same source task, despite variations in underlying architectures and hyperparameters.

Given that the training data for the source task remains fixed, the loss landscape varies depending on the architecture. Therefore, it’s sufficient to model a universal latent ODE as a function of the architecture, enabling it to describe the evolution of various learning curves, each corresponding to a different architecture. Our latent ODE is formalized by the equation:

| (9) |

Here, denotes the derivative of the latent state vector with respect to time, driven by the function , which takes as input both the latent state and a graph-level embedding . This combination allows the model to simultaneously consider the dynamic properties of the learning curve and the static characteristics of the architecture, enhancing the predictive capability of the system. Eq (9) can be regarded as a single-agent representation of the dynamics induced by NN training, which involves multiple trajectories of neurons, edge weights, and loss. This reduced representation of coupled dynamical system has been explored in (Gao et al., 2016; Laurence et al., 2019) to study the tipping point of the original network dynamics. The difference from the prior dynamical system reduction approach is that the graph reduction mechanism is learnable so that the model can be adapted to unseen trajectories derived from different optimization trials.

Finally, numerical integration of Eq (9) yields a time series of latent states (). Each latent state is independently decoded by a function .

Training objective.

To optimize our model, we maximize the evidence lower bound (ELBO), fomulated as follows

| (10) |

The first term in the ELBO equation represents the expected log-likelihood of observing future outputs given the latent states, as parameterized by , where , , and correspond to the architecture encoder, the ODE function, and the decoder, respectively. The second term penalizes the divergence (measured by the KL-divergence) between the posterior distribution of the latent states and their prior distribution, enforcing a regularization that anchors the posterior closer to the prior. This balance ensures that while the model remains flexible enough to capture complex patterns in data, it also maintains a level of generalization that prevents overfitting.

Scalability and computational cost.

The computational cost of numerical integration is minimal because the ODE models only the evolution of the loss embedding with dimension , rather than modeling each node in the architecture individually. The runtime of the forward pass is bounded by , where is the number of integration time steps, assuming the ODE function is implemented as an MLP with a fixed number of layers. Consequently, the runtime of the ODE component remains independent of the overall size of the neural network.

| car | segment | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MAPE | RMSE | MAPE | RMSE | |||||||||

| Epochs | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 |

| LC-BNN | 0.5487 | 0.4887 | 0.4534 | 0.4232 | 0.3838 | 0.3592 | 0.6211 | 0.5688 | 0.5370 | 0.5384 | 0.4960 | 0.4694 |

| LC-PFN | 0.0598 | 0.0681 | 0.0723 | 0.0443 | 0.0502 | 0.0528 | 0.0654 | 0.0708 | 0.0729 | 0.0497 | 0.0543 | 0.0555 |

| VRNN | 0.1857 | 0.1923 | 0.1923 | 0.1514 | 0.1511 | 0.1511 | 0.1840 | 0.1742 | 0.1742 | 0.1613 | 0.1557 | 0.1557 |

| LSTM | 0.0853 | 0.1104 | 0.1251 | 0.0561 | 0.0709 | 0.0790 | 0.0825 | 0.1126 | 0.1346 | 0.0478 | 0.0640 | 0.0758 |

| NODE | 0.0683 | 0.0730 | 0.0764 | 0.0459 | 0.0499 | 0.0531 | 0.0794 | 0.0837 | 0.0853 | 0.0574 | 0.0597 | 0.0609 |

| NSDE | 0.0751 | 0.0768 | 0.0779 | 0.0503 | 0.0515 | 0.0522 | 0.0817 | 0.0854 | 0.0864 | 0.0595 | 0.0610 | 0.0614 |

| LC-GODE | 0.0431 | 0.0463 | 0.0488 | 0.0328 | 0.0349 | 0.0365 | 0.0566 | 0.0591 | 0.0608 | 0.0462 | 0.0477 | 0.0487 |

| cifar10 | cifar100 | |||||||||||

| MAPE | RMSE | MAPE | RMSE | |||||||||

| Epochs | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 |

| LC-BNN | 0.4235 | 0.4204 | 0.4036 | 0.2101 | 0.2116 | 0.2260 | 1.2441 | 1.4087 | 1.2946 | 0.1161 | 0.1219 | 0.1373 |

| LC-PFN | 0.1514 | 0.1618 | 0.1782 | 0.0835 | 0.1023 | 0.1325 | 0.3115 | 0.3536 | 0.3749 | 0.0738 | 0.1062 | 0.1549 |

| VRNN | 0.1231 | 0.1316 | 0.1419 | 0.0905 | 0.0917 | 0.0987 | 0.3621 | 0.5105 | 0.5801 | 0.1153 | 0.1161 | 0.1224 |

| LSTM | 0.1391 | 0.1467 | 0.1309 | 0.0752 | 0.0736 | 0.0682 | 0.3853 | 0.5599 | 0.5761 | 0.0659 | 0.0715 | 0.0720 |

| NODE | 0.1457 | 0.1525 | 0.1404 | 0.0711 | 0.0717 | 0.0727 | 0.4895 | 0.6593 | 0.6592 | 0.0681 | 0.0760 | 0.0798 |

| NSDE | 0.1491 | 0.1454 | 0.1245 | 0.0686 | 0.0670 | 0.0645 | 0.4547 | 0.5076 | 0.4201 | 0.0629 | 0.0669 | 0.0665 |

| LC-GODE | 0.1107 | 0.1093 | 0.0913 | 0.0645 | 0.0603 | 0.0557 | 0.2708 | 0.3205 | 0.2661 | 0.0621 | 0.0636 | 0.0625 |

IV Experiments

We demonstrate the capability of LC-GODE to forecast model performance across a range of common AutoML benchmarks. First, we evaluate LC-GODE against six learning curve extrapolation techniques on real-world datasets obtained using stochastic gradient descent on both tabular and image tasks. The training process is conducted individually for each source task. Furthermore, we assess our method’s effectiveness in ranking training configurations by predicted optimal performance. Lastly, we explore model sensitivity to variants of architecture, observed time-series encoders and hyper-parameters. Our code and supplemental materials are publicly available111https://github.com/dingyanna/LC-GODE.git.

Datasets.

We consider test loss and test accuracy curves for both MLP-based and CNN-based architectures. Specifically for MLPs, we use car and segment tabular data binary classification tasks from OpenML (Vanschoren et al., 2014) as source tasks. Following LCBench (Zimmer et al., 2021), we randomly generate training configurations that include variables such as the number of layers, number of hidden units, and learning rates. For each dataset, we conduct 550 optimization trials across 200 epochs. For CNN-based models, we employ the NAS-Bench-201 dataset (Dong and Yang, 2020), which provides comprehensive learning curves for each architecture over a span of 200 epochs across two image datasets: CIFAR-10, CIFAR-100 (Krizhevsky et al., 2009). We randomly select 5,000 architectures from CIFAR-10 and CIFAR-100 to form our dataset. Furthermore, we reserve 20% of all trials as the test set for each MLP source task and 25% for each CNN source task.

IV-A Extrapolating Real-world Learning Curves

Experimental setup.

The goal of this experiment is to evaluate the LC-GODE model against established learning curve prediction methods using real-world benchmarks. We train our model separately on the test loss curves of each source task. The condition length is set to 10 epochs for all methods in this experiment. The instantiation of LC-GODE that we report features: (i) an architecture encoder that utilizes 2 layers and employs a learnable pooling technique, (ii) an observed time-series encoder implemented using GRU, (iii) an ODE function with a 2-layer MLP and integrated using the Runge-Kutta 4 method (Butcher, 1996). Further details on the evaluation metrics and the training settings can be found in the supplemental materials.

Baselines.

We evaluate our model against six methods, including three Bayesian approaches and three general time-series prediction approaches. (i) LC-BNN: This method utilizes a Bayesian neural network to model the posterior distribution of future learning curves. The probability function is constructed from a combination of basis functions, following the approach described by Domhan et al. (2015). (ii) LC-PFN: A transformer-based model is trained on synthetic curves that are generated from a pre-defined prior. This method serves as an efficient alternative to traditional Markov Chain Monte-Carlo (MCMC) techniques for sampling from posterior distributions. (iii) VRNN: A probabilistic model utilizing random forests and Bayesian recurrent neural networks. (iv) LSTM: This Recurrent Neural Network captures sequential dependencies. Before the observation cutoff at , input states are derived from actual observations. Post , the model uses its own predictions from previous timestamps as inputs. (v) NODE: Latent Ordinary Differential Equations, focusing primarily on modeling the latent loss representation without incorporating architectural information. (vi) NSDE: Latent Stochastic Differential Equations, consisting of a drift term and a diffusion term. The drift term is the same as NODE, and the sequence encoder and decoder is the same as in our model. Both NODE and NSDE are trained using the variational framework.

Results.

We evaluate performance using Mean Absolute Percentage Error (MAPE) and Root Mean Squared Error (RMSE) with different prediction lengths. Table I shows the extrapolation error for test accuracy curves from four datasets. Due to space constraints, the comparison results for loss curve extrapolation are provided in the supplement. Our proposed LC-GODE outperforms all baselines on these datasets. Specifically, LC-GODE reduces the error on test accuracy curves by 36.13%, 30.72%, 34.97%, and 59.63%, and on test loss curves by 65.5%, 44.61%, 20.1%, and 23.45% compared to the NODE model without architecture information. This improvement is due to the incorporation of architecture information with graph embedding. The architectures with similar performance are mapped to nearby locations in the hidden space, as shown in Figure 2(a). To demonstrate the advantage of jointly training the time-series encoder with the graph encoder, we compare the optimal performance difference of two configurations with the distance between their initial latent states in Figure 2(b). When architecture information is included, the correlation between these distances increases from 0.77 to 0.83 for the CIFAR-10 test accuracy curves. For a detailed comparison over epochs, we plot MAPE at 10 prediction lengths for NODE, NSDE, and LC-GODE on both test accuracy and loss curves (Figure 3). Overall, models perform better and have larger improvement on test accuracy curves compared to loss curves. This could be attributed to the fact that classification accuracy is less sensitive to decision boundary changes than cross-entropy loss, making it less variable and easier to predict.

Regarding the baseline comparisons, LC-PFN emerges as the second most effective approach for extrapolating test accuracy curves of the car and segment source tasks, while the NSDE model ranks as the second in predicting test loss curves for CIFAR-10 and CIFAR-100. Both NSDE and NODE models demonstrate comparable performance, with NSDE marginally outperforming NODE. These results underscore the viability of employing time-series approaches for addressing learning curve extrapolation challenges. Notably, the slight advantage of NSDE over NODE suggests subtle benefits in capturing stochastic dynamics that may be present in complex learning scenarios. This comparison highlights the potential for refined time-series models to enhance predictive accuracy and adaptability in diverse training environments.

Model ranking

| Metric | Dataset | Accuracy | Loss | ||||

|---|---|---|---|---|---|---|---|

| NODE | NSDE | LC-GODE | NODE | NSDE | LC-GODE | ||

| regret | car | 0.0023 | 0.0023 | 0.0023 | 0.4570 | 0.0950 | 0.0000 |

| segment | 0.0052 | 0.0017 | 0.0017 | 0.0100 | 0.0100 | 0.0100 | |

| cifar10 | 0.0025 | 0.0101 | 0.0004 | 0.0164 | 0.0243 | 0.0048 | |

| cifar100 | 0.0000 | 0.0374 | 0.0000 | 0.0262 | 0.0606 | 0.0303 | |

| ranking | car (110) | 2 | 2 | 2 | 43 | 4 | 1 |

| segment (110) | 18 | 7 | 4 | 2 | 2 | 2 | |

| cifar10 (1250) | 10 | 86 | 2 | 21 | 73 | 3 | |

| cifar100 (1250) | 1 | 142 | 1 | 87 | 607 | 137 | |

We evaluate our method’s efficacy in ranking training configurations by comparing the predicted and true optimal performances at a fixed snapshot, employing two metrics: regret (the performance discrepancy between the actual and predicted best configurations) and ranking (the position of the predicted best configuration according to the true learning curves). These metrics demonstrate whether the predicted performance can effectively guide the selection of a performant configuration.

As shown in Table II, our proposed approach, LC-GODE, enhances the ranking of the predicted best model by 3 positions on the segment dataset and by 8 positions on the CIFAR-10 dataset, and reduces regret by 96% on CIFAR-10 compared to the superior baseline among NODE and NSDE for test accuracy curves. Nevertheless, when employing test loss to identify the best model, the overall ranking for all methods declines, indicating that predicted test loss is less effective for model selection.

Our approach identified a performant model with a 20x speedup compared to exhaustive brute-force training using stochastic gradient descent (SGD) on the MLP datasets. The speedup is computed as the total runtime needed to fully train all configurations via SGD, divided by the sum of the actual training time for epochs and the inference time required by LC-GODE. The inference time for LC-GODE is approximately 0.8 seconds, and the majority of the model selection time is spent on the initial epochs of SGD training. Additional results on the training and inference runtime of all methods are provided in the supplement.

Figure 4 further shows the true vs predicted best test accuracy for the 2 tabular data and 2 image classification source tasks, further indicating a high correlation between predicted and true metrics.

IV-B Ablation Study

We explore three variations for each of the following components: the message passing mechanism, graph pooling, and time series encoder. The message passing mechanisms include GCN (Kipf and Welling, 2016), Graph Attention Networks (GAT) (Veličković et al., 2017), and Graph Transformers (Hu et al., 2020). For pooling methods, we use average pooling, max-pooling, and a learnable pooling method based on DiffPool (Ying et al., 2018), where a separate GCN module determines each node’s contribution to the global embedding. The time-series encoder variations include self-attention (Vaswani et al., 2017), TCN (Pandey and Wang, 2019), and an autoregressive version of GRU (Dey and Salem, 2017; Kipf et al., 2018). The model is trained using early stopping if no improvement is observed after 50 epochs.

As shown in Figure 5, for CIFAR-100, the combination of graph transformers, DiffPool, and GRU achieved the best results, while for CIFAR-10, GAT, max-pooling, and TCN performed better. This suggests that different combinations of encoders can affect extrapolation accuracy, with a more significant impact observed on CIFAR-100.

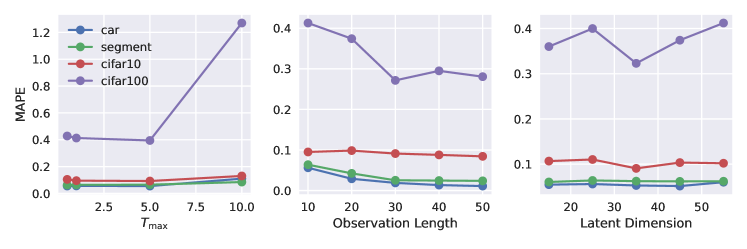

Hyperparameter sensitivity.

We analyze the impact of three hyperparameters: maximal time , observation length, and hidden dimension (Figure 6). A larger negatively impacts performance. As observation length increases, the model receives more information about the curve, requiring less extrapolation and thus reducing error. The performance remains relatively constant when the latent dimension is within the range of 20 to 50.

V Conclusion

In this study, we introduced LC-GODE, a novel approach that merges architectural insights with learning curve extrapolation from a dynamical systems perspective. Our model uses early performance data to predict future learning curve trajectories, significantly enhancing predictions of both test loss and accuracy. This architecture-aware, dynamical system-based method not only surpasses existing extrapolation techniques but also enhances model ranking and the selection of optimal configurations by analyzing just a small number of epochs. This efficient approach achieves a 20x speedup in model selection compared to traditional full training cycles using stochastic gradient descent. Future work could improve by incorporating the impact of source data to generalize across source tasks.

VI Acknowledgement

Y.N.D. and J.X.G. are supported by the National Science Foundation (No. 2047488), and by the Rensselaer-IBM AI Research Collaboration. This work was partially supported by NSF 2211557, NSF 2119643, NSF 2303037, NSF 2312501, NASA, SRC JUMP 2.0 Center, Amazon Research Awards, and Snapchat Gifts.

References

- Domhan et al. (2015) T. Domhan, J. T. Springenberg, and F. Hutter, “Speeding up automatic hyperparameter optimization of deep neural networks by extrapolation of learning curves,” in Twenty-fourth international joint conference on artificial intelligence, 2015.

- Gargiani et al. (2019) M. Gargiani, A. Klein, S. Falkner, and F. Hutter, “Probabilistic rollouts for learning curve extrapolation across hyperparameter settings,” arXiv preprint arXiv:1910.04522, 2019.

- Adriaensen et al. (2023) S. Adriaensen, H. Rakotoarison, S. Müller, and F. Hutter, “Efficient bayesian learning curve extrapolation using prior-data fitted networks,” in Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023, A. Oh, T. Naumann, A. Globerson, K. Saenko, M. Hardt, and S. Levine, Eds., 2023. [Online]. Available: http://papers.nips.cc/paper_files/paper/2023/hash/3f1a5e8bfcc3005724d246abe454c1e5-Abstract-Conference.html

- Swersky et al. (2014) K. Swersky, J. Snoek, and R. P. Adams, “Freeze-thaw bayesian optimization,” arXiv preprint arXiv:1406.3896, 2014.

- Baker et al. (2017) B. Baker, O. Gupta, R. Raskar, and N. Naik, “Accelerating neural architecture search using performance prediction,” arXiv preprint arXiv:1705.10823, 2017.

- Shi et al. (2019) H. Shi, R. Pi, H. Xu, Z. Li, J. T. Kwok, and T. Zhang, “Multi-objective neural architecture search via predictive network performance optimization,” 2019.

- Friede et al. (2019) D. Friede, J. Lukasik, H. Stuckenschmidt, and M. Keuper, “A variational-sequential graph autoencoder for neural architecture performance prediction,” arXiv preprint arXiv:1912.05317, 2019.

- Wen et al. (2020) W. Wen, H. Liu, Y. Chen, H. Li, G. Bender, and P.-J. Kindermans, “Neural predictor for neural architecture search,” in European conference on computer vision. Springer, 2020, pp. 660–676.

- Tang et al. (2020) Y. Tang, Y. Wang, Y. Xu, H. Chen, B. Shi, C. Xu, C. Xu, Q. Tian, and C. Xu, “A semi-supervised assessor of neural architectures,” in proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 1810–1819.

- Yan et al. (2020) S. Yan, Y. Zheng, W. Ao, X. Zeng, and M. Zhang, “Does unsupervised architecture representation learning help neural architecture search?” Advances in neural information processing systems, vol. 33, pp. 12 486–12 498, 2020.

- Ning et al. (2020) X. Ning, Y. Zheng, T. Zhao, Y. Wang, and H. Yang, “A generic graph-based neural architecture encoding scheme for predictor-based nas,” in European Conference on Computer Vision. Springer, 2020, pp. 189–204.

- Xu et al. (2019) Y. Xu, Y. Wang, K. Han, H. Chen, Y. Tang, S. Jui, C. Xu, Q. Tian, and C. Xu, “Rnas: Architecture ranking for powerful networks,” arXiv preprint arXiv:1910.01523, 2019.

- Siems et al. (2020) J. Siems, L. Zimmer, A. Zela, J. Lukasik, M. Keuper, and F. Hutter, “Nas-bench-301 and the case for surrogate benchmarks for neural architecture search,” arXiv preprint arXiv:2008.09777, vol. 4, p. 14, 2020.

- Su et al. (2016) W. Su, S. Boyd, and E. J. Candes, “A differential equation for modeling nesterov’s accelerated gradient method: Theory and insights,” Journal of Machine Learning Research, vol. 17, no. 153, pp. 1–43, 2016.

- Zhang et al. (2024) R. Zhang, S. Frei, and P. L. Bartlett, “Trained transformers learn linear models in-context,” J. Mach. Learn. Res., vol. 25, pp. 49:1–49:55, 2024. [Online]. Available: https://jmlr.org/papers/v25/23-1042.html

- Maskan et al. (2023) H. Maskan, K. Zygalakis, and A. Yurtsever, “A variational perspective on high-resolution odes,” in Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023, A. Oh, T. Naumann, A. Globerson, K. Saenko, M. Hardt, and S. Levine, Eds., 2023. [Online]. Available: http://papers.nips.cc/paper_files/paper/2023/hash/0569458210c88d8db2985799da830d27-Abstract-Conference.html

- Chen et al. (2018) R. T. Chen, Y. Rubanova, J. Bettencourt, and D. K. Duvenaud, “Neural ordinary differential equations,” Advances in neural information processing systems, vol. 31, 2018.

- Kipf and Welling (2016) T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” arXiv preprint arXiv:1609.02907, 2016.

- Ying et al. (2018) Z. Ying, J. You, C. Morris, X. Ren, W. Hamilton, and J. Leskovec, “Hierarchical graph representation learning with differentiable pooling,” Advances in neural information processing systems, vol. 31, 2018.

- Chandrashekaran and Lane (2017) A. Chandrashekaran and I. R. Lane, “Speeding up hyper-parameter optimization by extrapolation of learning curves using previous builds,” in Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2017, Skopje, Macedonia, September 18–22, 2017, Proceedings, Part I 10. Springer, 2017, pp. 477–492.

- Ru et al. (2021) R. Ru, C. Lyle, L. Schut, M. Fil, M. van der Wilk, and Y. Gal, “Speedy performance estimation for neural architecture search,” Advances in Neural Information Processing Systems, vol. 34, pp. 4079–4092, 2021.

- Klein et al. (2022) A. Klein, S. Falkner, J. T. Springenberg, and F. Hutter, “Learning curve prediction with bayesian neural networks,” in International conference on learning representations, 2022.

- Xu et al. (2018) K. Xu, W. Hu, J. Leskovec, and S. Jegelka, “How powerful are graph neural networks?” arXiv preprint arXiv:1810.00826, 2018.

- Rubanova et al. (2019) Y. Rubanova, R. T. Chen, and D. K. Duvenaud, “Latent ordinary differential equations for irregularly-sampled time series,” Advances in neural information processing systems, vol. 32, 2019.

- Huang et al. (2020) Z. Huang, Y. Sun, and W. Wang, “Learning continuous system dynamics from irregularly-sampled partial observations,” Advances in Neural Information Processing Systems, vol. 33, pp. 16 177–16 187, 2020.

- Huang et al. (2021) ——, “Coupled graph ode for learning interacting system dynamics,” in Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 2021, pp. 705–715.

- Luo et al. (2023) X. Luo, J. Yuan, Z. Huang, H. Jiang, Y. Qin, W. Ju, M. Zhang, and Y. Sun, “Hope: High-order graph ode for modeling interacting dynamics,” 2023.

- Huang et al. (2024a) Z. Huang, W. Zhao, J. Gao, Z. Hu, X. Luo, Y. Cao, Y. Chen, Y. Sun, and W. Wang, “Physics-informed regularization for domain-agnostic dynamical system modeling,” Advances in Neural Information Processing Systems, 2024.

- Jiang et al. (2023) S. Jiang, Z. Huang, X. Luo, and Y. Sun, “Cf-gode: Continuous-time causal inference for multi-agent dynamical systems,” in Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2023.

- Huang et al. (2024b) Z. Huang, J. Hwang, J. Zhang, J. Baik, W. Zhang, D. Wodarz, Y. Sun, Q. Gu, and W. Wang, “Causal graph ode: Continuous treatment effect modeling in multi-agent dynamical systems,” in Proceedings of the ACM Web Conference 2024, ser. WWW ’24, 2024, p. 4607–4617.

- Vanschoren et al. (2014) J. Vanschoren, J. N. Van Rijn, B. Bischl, and L. Torgo, “Openml: networked science in machine learning,” ACM SIGKDD Explorations Newsletter, vol. 15, no. 2, pp. 49–60, 2014.

- Vaswani et al. (2017) A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- Pandey and Wang (2019) A. Pandey and D. Wang, “Tcnn: Temporal convolutional neural network for real-time speech enhancement in the time domain,” in ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2019, pp. 6875–6879.

- Dong and Yang (2020) X. Dong and Y. Yang, “Nas-bench-201: Extending the scope of reproducible neural architecture search,” arXiv preprint arXiv:2001.00326, 2020.

- Liu et al. (2018) H. Liu, K. Simonyan, and Y. Yang, “Darts: Differentiable architecture search,” arXiv preprint arXiv:1806.09055, 2018.

- Knyazev et al. (2021) B. Knyazev, M. Drozdzal, G. W. Taylor, and A. Romero Soriano, “Parameter prediction for unseen deep architectures,” Advances in Neural Information Processing Systems, vol. 34, pp. 29 433–29 448, 2021.

- Gao et al. (2016) J. Gao, B. Barzel, and A.-L. Barabási, “Universal resilience patterns in complex networks,” Nature, vol. 530, no. 7590, pp. 307–312, 2016.

- Laurence et al. (2019) E. Laurence, N. Doyon, L. J. Dubé, and P. Desrosiers, “Spectral dimension reduction of complex dynamical networks,” Physical Review X, vol. 9, no. 1, p. 011042, 2019.

- Zimmer et al. (2021) L. Zimmer, M. Lindauer, and F. Hutter, “Auto-pytorch: Multi-fidelity metalearning for efficient and robust autodl,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 9, pp. 3079–3090, 2021.

- Krizhevsky et al. (2009) A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” 2009.

- Butcher (1996) J. C. Butcher, “A history of runge-kutta methods,” Applied numerical mathematics, vol. 20, no. 3, pp. 247–260, 1996.

- Veličković et al. (2017) P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio, “Graph attention networks,” arXiv preprint arXiv:1710.10903, 2017.

- Hu et al. (2020) Z. Hu, Y. Dong, K. Wang, and Y. Sun, “Heterogeneous graph transformer,” in Proceedings of the web conference 2020, 2020, pp. 2704–2710.

- Dey and Salem (2017) R. Dey and F. M. Salem, “Gate-variants of gated recurrent unit (gru) neural networks,” in 2017 IEEE 60th international midwest symposium on circuits and systems (MWSCAS). IEEE, 2017, pp. 1597–1600.

- Kipf et al. (2018) T. Kipf, E. Fetaya, K.-C. Wang, M. Welling, and R. Zemel, “Neural relational inference for interacting systems,” in International conference on machine learning. PMLR, 2018, pp. 2688–2697.

- Loshchilov and Hutter (2017) I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” arXiv preprint arXiv:1711.05101, 2017.

Appendix A Experimental Details

Datasets.

To generate MLP learning curves, we selected 2 tabular data classification tasks from OpenML (Vanschoren et al., 2014) as detailed in Table IV. We adhere to the standard procedure outlined in LCBench (Zimmer et al., 2021), with the exception that we introduce variability by randomly sampling the number of hidden units for each layer, rather than sampling only the maximal number of hidden units. All optimization runs are performed using SGD with decaying learning rate by 0.5 every 80 epochs. The total number of epochs is 200. We discard runs The parameters randomly sampled include four floating-point values and integers, where denotes the number of layers.

| Hyperparameter | Value | Log-scale |

|---|---|---|

| Batch size | Y | |

| Learning rate | Y | |

| Batch size | Y | |

| Weight decay | N | |

| Number of layers | N | |

| Number of units per layer | Y | |

| Dropout | N |

| Tabular dataset | # train samples | # test samples | # features | # labels |

|---|---|---|---|---|

| car | 1296 | 432 | 6 | 2 |

| segment | 1732 | 578 | 19 | 2 |

Metrics.

We adopt six metrics to evaluate our approach from three dimensions.

-

•

Trajectory reconstruction. To evaluate curve reconstruction error, we utilize MAPE. Let denote the prediction length. Let denote the th trajectory in the test set. Let denote the ground truth trajectory. The reconstruction error for one trajectory is comuted as

(11) The error metric for the entire test dataset is the corresponding average over all trajectories.

(12) -

•

Model Selection. We use Pearson correlation as adopted in [?] and Kendall [NasWOT].

-

•

Efficiency. We report the training runtime per epoch and the the wall-clock time to perform inference for one architecture using our model. The speedup is computed as

(13)

Hyperparameter Setting.

Without further specification, the hyperparameters to train both our model and the baselines are set according to Table V

| Hyperparameter | Value |

|---|---|

| Latent Dimension | 16 |

| Learning Rate | 0.001 |

| Batch Size | 128 (CNN) 40 (MLP) |

| Optimizer | AdamW (Loshchilov and Hutter, 2017) |

| Number of Epochs | 400 |

| Condition Length | 20% |

| Prediction Length | 80% |

A-A Baseline Configurations.

LC-BNN.

LC-BNN is a function that maps a tuple containing a configuration and an epoch to the loss value associated with that configuration at the specified epoch. We represent the trajectory data as pairs of input and target values: where denotes the th configuration, represents an epoch, and is the corresponding metric value. For MLP configurations, we use the hyperparameters specified in LCBench, detailed in Table III. In the case of NAS-Bench-201, we utilize the hyperparameters outlined in their respective publication. Note that all architectures within NAS-Bench-201 utilize a uniform set of hyperparameters. Nevertheless, we include these hyperparameters as input to LC-BNN, as it requires at least one additional input beyond the epoch number.

Our dataset is structured within an inductive learning framework, comprising multiple training trajectories, with the test set including unseen trajectories. Since LC-BNN does not have an associated conditional length, to ensure a fair comparison, we incorporate the conditional window from our test set into the training data for LC-BNN.

LC-PFN.

To apply LC-PFN, we preprocess our data using the normalization procedure outlined in Appendix A by Adriaensen et al. (2023). This approach ensures that our data is consistently formatted and scaled according to the specified guidelines. The normalization process transforms observed curves into a constrained range where . LC-PFN takes as input the normalized observations and outputs inferred loss values. These values are then mapped back to their original space using the inverse of the normalization function. The parameters of the normalization function are defined as :

-

•

min?: A Boolean indicating whether the curve is to be minimized or maximized.

-

•

: Hard bounds defining the absolute limits of the learning curve.

-

•

: Soft bounds that guide the behavior of the learning curve.

The normalization function is formulated as:

where:

The inverse of is defined as

| (14) |

For normalizing observed log loss values, we utilize . Here is the log loss value at the first epoch of the trajectories to train AutoML models. To normalize accuracy curves, we apply .

LSTM.

The LSTM implementation is adapted from the published code associated with NRI (Kipf et al., 2018). This implementation employs teacher forcing by utilizing the observation window. Specifically, it features a step() module, which comprises a Long-short-term memory (LSTM) block and a two-layer MLP with ReLU activation. The step() function processes the previous input state and hidden state, outputting the prediction and hidden state for the next immediate time step. The forward() function iteratively calls step() for a total of times. During the initial steps, the observed learning curve data is used as the input state. For the subsequent steps beyond , the output from the previous prediction is used as the new input state.

Appendix B Additional Results

| car | segment | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MAPE | RMSE | MAPE | RMSE | |||||||||

| Epochs | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 |

| LC-BNN | 0.4545 | 0.3715 | 0.3295 | 0.2798 | 0.2348 | 0.2096 | 0.4322 | 0.3893 | 0.3785 | 0.2358 | 0.2036 | 0.1877 |

| LC-PFN | 0.0723 | 0.0906 | 0.0999 | 0.0349 | 0.0397 | 0.0425 | 0.0795 | 0.0890 | 0.0937 | 0.0329 | 0.0348 | 0.0361 |

| VRNN | 0.2216 | 0.2054 | 0.2054 | 0.1306 | 0.1281 | 0.1281 | 0.3308 | 0.3660 | 0.3660 | 0.1518 | 0.1660 | 0.1660 |

| LSTM | 0.1104 | 0.1517 | 0.1733 | 0.0539 | 0.0697 | 0.0774 | 0.0782 | 0.0953 | 0.1076 | 0.0343 | 0.0386 | 0.0429 |

| NODE | 0.2110 | 0.2183 | 0.2218 | 0.0909 | 0.0927 | 0.0939 | 0.1665 | 0.1662 | 0.1670 | 0.0623 | 0.0635 | 0.0649 |

| NSDE | 0.2039 | 0.2141 | 0.2183 | 0.0884 | 0.0900 | 0.0906 | 0.1493 | 0.1574 | 0.1607 | 0.0558 | 0.0581 | 0.0593 |

| LC-GODE | 0.0644 | 0.0722 | 0.0765 | 0.0292 | 0.0307 | 0.0317 | 0.0816 | 0.0892 | 0.0925 | 0.0342 | 0.0368 | 0.0378 |

| cifar10 | cifar100 | |||||||||||

| MAPE | RMSE | MAPE | RMSE | |||||||||

| Epochs | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 | 80 | 140 | 200 |

| LC-BNN | 0.2978 | 0.3847 | 0.5879 | 0.1990 | 0.1963 | 0.2174 | 0.2843 | 0.2157 | 0.1867 | 0.2656 | 0.2155 | 0.1871 |

| LC-PFN | 0.2274 | 0.3797 | 0.6008 | 0.1166 | 0.1519 | 0.1961 | 0.0557 | 0.0922 | 0.1505 | 0.0542 | 0.0834 | 0.1282 |

| VRNN | 0.2396 | 0.2386 | 0.2378 | 0.1301 | 0.1371 | 0.1407 | 0.2138 | 0.2131 | 0.2113 | 0.1652 | 0.1668 | 0.1661 |

| LSTM | 0.1851 | 0.1993 | 0.2093 | 0.1110 | 0.1092 | 0.1017 | 0.0502 | 0.0613 | 0.0709 | 0.0526 | 0.0614 | 0.0648 |

| NODE | 0.1655 | 0.1810 | 0.2039 | 0.1017 | 0.1026 | 0.0978 | 0.0705 | 0.0808 | 0.0823 | 0.0708 | 0.0788 | 0.0775 |

| NSDE | 0.1518 | 0.1555 | 0.1639 | 0.0956 | 0.0913 | 0.0839 | 0.0477 | 0.0597 | 0.0723 | 0.0492 | 0.0587 | 0.0647 |

| LC-GODE | 0.1487 | 0.1536 | 0.1629 | 0.0953 | 0.0926 | 0.0860 | 0.0460 | 0.0571 | 0.0630 | 0.0481 | 0.0577 | 0.0598 |

| car | segment | cifar10 | cifar100 | |||||

|---|---|---|---|---|---|---|---|---|

| Train | Test | Train | Test | Train | Test | Train | Test | |

| LC-BNN | 0.0350 | 0.0183 | 0.0395 | 0.0558 | 0.0333 | 0.2833 | 0.0381 | 0.2814 |

| LC-PFN | - | 9.6855 | - | 9.4088 | - | 109.8517 | - | 112.8367 |

| VRNN | 0.4180 | 10.6489 | 0.4053 | 12.1125 | 1.7594 | 88.9615 | 1.5898 | 128.4061 |

| LSTM | 0.8364 | 0.0005 | 0.8794 | 0.0005 | 6.5582 | 0.0043 | 6.7457 | 0.0049 |

| NODE | 1.4698 | 0.1647 | 1.4684 | 0.1631 | 1.3543 | 0.1770 | 1.3206 | 0.1715 |

| NSDE | 1.5931 | 0.3403 | 1.5817 | 0.3342 | 1.3347 | 0.3229 | 1.4389 | 0.3494 |

| LC-GODE | 3.1136 | 0.8692 | 3.1476 | 0.8325 | 3.3043 | 0.7077 | 3.3879 | 0.7572 |

Table VI shows the extrapolation error of six baselines and LC-GODE for test loss curves originated from 2 tabular tasks and 2 image classification tasks computed over three prediction lengths, observing 10 epochs. Table VII shows the runtime (in seconds) of training one epoch and that of performing inference on the entire test set. For LC-PFN, the model is trained on synthetic curves drawn from a prior distribution and therefore no further training is needed, as long as the test learning curves are normalized according to the above description. Note that the inference time for Bayesian approaches, except for LC-BNN, is much greater than other approaches.