12016

Action editor: {action editor name}. Submission received: DD Month YYYY; revised version received: DD Month YYYY; accepted for publication: DD Month YYYY.

ArguGPT: evaluating, understanding and identifying argumentative essays generated by GPT models

Abstract

Content generated by artificial intelligence models have presented considerable challenge to educators around the world. When students submit AI generated content (AIGC) as their own work, instructors will need to be able to detect such text, either with the naked eye or with the help of computational tools. There is also growing need and interest to understand the lexical, syntactic and stylistic features of AIGC among computational linguists.

To address these challenges in the context of argumentative essay writing, we present ArguGPT, a carefully balanced corpus of 4,038 argumentative essays generated by 7 GPT models in response to essay prompts from three sources: (1) in-class or homework exercises, (2) TOEFL writing tasks and (3) GRE writing tasks. These machine-generated texts are paired with roughly equal number of human-written essays with low, medium and high scores matched in essay prompts. We also include an out-of-distribution test set where the machine essays are generated by models other than the GPT family—claude-instant, bloomz and flan-t5—to examine AIGC detectors’ generalization ability.

We then hire English instructors to distinguish machine essays from human ones. Results show that when first exposed to machine-generated essays, the instructors only have an accuracy of 61 percent in detecting them. But the number rises to 67 percent after one round of minimal self-training. Next, we perform linguistic analyses of the machine and human essays, which show that machines produce sentences with more complex syntactic structures while human essays tend to be lexically more complex. Finally, we test existing AIGC detectors and build our own detectors using SVMs as well as the RoBERTa model. Our results suggest that a RoBERTa fine-tuned with the training set of ArguGPT can achieve above 90% accuracy in document, paragraph and sentence-level classification. The document-level RoBERTa can generalize to other models as well (such as claude-instant), while off-the-shelf detectors such as GPTZero fail to generalize to our out-of-distribution data.

To the best of our knowledge, this is the first comprehensive analysis of argumentative essays produced by generative large language models. Our work demonstrates the need for educators to acquaint themselves with AIGC, presents the characteristics of AI generated argumentative essays and shows that detecting AIGC from the same domain seems to be an easy task for machine-learning based classifiers while transferring to essays generated by other models is challenging. Machine-authored essays in ArguGPT and our models are publicly available at https://github.com/huhailinguist/ArguGPT.

1 Introduction

Recent large language models (LLM) such as ChatGPT have shown incredible generative abilities. They have created many opportunities, as well as challenges for students and educators around the world.111This paper is written and polished by humans, with the exception of Appendix D. While students can use them to obtain information and increase efficiency in learning, many educators are concerned that ChatGPT will make it easier for students to cheat in their homework assignments, for example, by asking ChatGPT to summarize their readings, solve math problems, and even write responses and essays: tasks that are supposed to be completed by students themselves. Educators have started to find that well-written essays submitted by students, some even deemed “the best in class”, were actually written by ChatGPT, making it increasingly difficult to evaluate students’ performance in class. For instance, a philosophy professor from North Michigan University has discovered that the best essay in his class was in fact written by ChatGPT.222See https://www.nytimes.com/2023/01/16/technology/chatgpt-artificial-intelligence-universities.html Thus it is critical for educators to identify AI generated content (AIGC), either with the naked eye, or the help of some tools.

There is also growing interest and need among computational linguists to study texts generated by language models. Several studies have examined whether humans can identify AI-generated text (Brown et al., 2020; Clark et al., 2021; Dou et al., 2022). Others have built text classifiers to distinguish AI-written from human-written text (Gehrmann, Strobelt, and Rush, 2019; Mitchell et al., 2023; Guo et al., 2023).

The focus of this paper is on argumentative essays in the context of English as Other or Second Language (EOSL). There are an estimated 2 billion people learning/speaking English, and at least 12 million instructors worldwide333Data released by British Council in 2013 (See https://www.britishcouncil.org/research-policy-insight/policy-reports/the-english-effect). It is thus of practical significance for EOSL instructors to be able to identify AIGC; computational linguists also need to build efficient educational applications that accurately detect AI-generated essays.

Therefore, our first goal is to establish a baseline of the performance of the EOSL instructors in distinguishing AIGC from texts written by non-native speakers. We also want to examine whether their accuracy could be improved with minimal training. Next, we analyze the linguistic features of AIGC which contributes to a growing body of literature on AI-generated text (Dou et al., 2022; Guo et al., 2023). Last but not least, we aim to build and evaluate the performance of machine-learning classifiers on detecting AIGC.

Concretely, we ask the following questions:

-

•

Can human evaluators (language teachers) distinguish argumentative essays in English generated by GPT models from those written by human language learners?

-

•

What are the linguistic features of machine-generated essays, compared with essays written by language learners?

-

•

Can machine learning classifiers distinguish machine-generated essays from human-written ones?

To answer these questions, we first collect 4,038 machine-generated essays using seven models of the GPT family (GPT2-XL, variants of GPT3, and ChatGPT), in response to 632 prompts from multiple levels of English proficiency and writing tasks (in-class writing exercises, TOEFL and GRE). We then pair these essays with 4,115 human-written ones at low, medium and high level to form the ArguGPT corpus. Also, an out-of-distribution test set is collected to evaluate the generalization ablity of our detectors, containing 500 machine essays and 500 human essays. We conduct human evaluation tests by asking 43 novice and experienced English instructors in China to identify whether a text is written by machine or human. Next, we compare the 31 syntactic and lexical linguistic measures of human-authored and machine-generated essays, using the tools and methods from Lu (2010, 2012), aiming to uncover the textual characteristics of GPT-generated essays. Finally, we benchmark existing AIGC detectors such as GPTZero444https://gptzero.me/ and our own detectors based on SVM and RoBERTa on the development and test sets of the ArguGPT corpus.

Our major findings and contributions are: (1) We provide the first large-scale, balanced corpus of AI-generated argumentative essays for NLP and ESOL researchers. (2) We show that it is difficult for English instructors to identify GPT-generated texts. English instructors distinguish human- and GPT-authored essays with an accuracy of 61.6% in the first round, and after some minimal training, the accuracy rises to 67.7%, roughly 10 points higher than previously reported in Clark et al. (2021), probably due to the instructors’ familiarity with student-written texts. Interestingly, they are better at detecting low-level human essays but high-level machine essays. (3) In terms of syntactic and lexical complexity, we find that the best GPT models produce syntactically more complex sentences than humans (English language learners), but GPT-authored essays are often lexically less complex. (4) We discover that machine-learning classifiers can easily distinguish between machine-generated and human-authored essays, usually with very high accuracy, similar to results from Guo et al. (2023). GPTZero has 90+% accuracy both at the essay level and sentence level. Our best performing RoBERTa-large model finetuned on ArguGPT achieves 99% accuracy on the test set at the essay level and 93% at the sentence level. (5) However, on the out-of-distribution test set, only the RoBERTa model finetuned on ArguGPT corpus shows consistent transfer learning ability. Performance of the two off-the-shelf detectors dropped dramatically, especially on detecting essays written by models not included in ArguGPT, e.g., gpt-4 and claude-instant. (6) The machine-authored essays555As we do not have copyright to the human-authored essays, we will only release the index of the human essays use in our study. Interested readers can purchase relevant corpora from their owners to reproduce our results. will be released at https://github.com/huhailinguist/ArguGPT. Demo of our ArguGPT detector and related models are/will be available at https://huggingface.co/spaces/SJTU-CL/argugpt-detector.

This paper is structured in the following manner. Section 2 introduces the compilation process of the ArguGPT corpus. Section 3 describes the method used for conducting human evaluation. Section 4 presents the linguistic analysis we conduct from syntactic and lexical perspectives. Section 5 discusses the performance of existing AIGC detectors and our own detectors on the test set of ArguGPT. Section 6 introduces related works on Large Language Models (LLMs), human evaluation of AIGC, AIGC detectors, and AIGC’s impact on education. Section 7 concludes the paper.

2 The ArguGPT corpus

In this section, we describe how we compile the ArguGPT corpus and an out-of-distribution (OOD) dataset for the evaluation of generalization ability.

2.1 Description of the ArguGPT corpus

The ArguGPT corpus contains 4,115 human-written essays and 4,038 machine-generated essays produced by 7 GPT models. These essays are responses to prompts from three sources: (1) in-class writing exercises (WECCL, Written English Corpus of Chinese Learners), (2) independent writing tasks in TOEFL exams (TOEFL11), and (3) the issue writing task in GRE (GRE) (see Table 1). We first collect human essays from two existing corpora—WECCL (Wen, Wang, and Liang, 2005) and TOEFL11 (Blanchard et al., 2013), and compile a corpus for human-written GRE essays from GRE-prep materials ourselves. Next, we use the essay prompts from the three corpora of human essays to generate essays from seven GPT models listed in Table 5. Example essay prompts can be found in Table 2.

Here we list the characteristics of the ArguGPT corpus:

-

•

The human-written and machine-generated portions are comparable and matched in several respects: the number of the essays, the corpus size in tokens, the mean length of the essays, and the levels of the essays.

-

•

Each machine-generated essay comes with a score given by an automated scoring system. (see Section 2.3 for details).

-

•

The essays cover different levels of English proficiency. WECCL essays are written by low and intermediate English learners; TOEFL contains essays from all levels of writing ability; GRE essays are model essays written by highly proficient language users.

-

•

The human-written GRE essays are, to the best of our knowledge, the first corpus of GRE essays.

| Sub-corpus | # essays | # tokens | mean len | # prompts | # low level | # mid level | # high level |

| WECCL-human | 1,845 | 450,657 | 244 | 25 | 369 | 1,107 | 369 |

| WECCL-machine | 1,813 | 442,531 | 244 | 25 | 281 | 785 | 747 |

| TOEFL11-human | 1,680 | 503,504 | 299 | 8 | 336 | 1,008 | 336 |

| TOEFL11-machine | 1,635 | 442,963 | 270 | 8 | 346 | 953 | 336 |

| GRE-human | 590 | 341,495 | 578 | 590 | 6 | 152 | 432 |

| GRE-machine | 590 | 268,640 | 455 | 590 | 2 | 145 | 443 |

| OOD-human | 500 | 132,902 | 266 | - | - | - | - |

| OOD-machine | 500 | 180,120 | 360 | - | - | - | - |

| Total (w/o OOD) | 8,153 | 2,449,790 | 300 | 623 | 1,340 | 4,150 | 2,663 |

| Sub-corpus | Example Essay Prompt |

| WECCL | Education is expensive, but the consequences of a failure to educate, especially in an increasingly globalized world, are even more expensive. |

| Some people think that education is a life-long process, while others don’t agree. | |

| TOEFL11 | It is better to have broad knowledge of many academic subjects than to specialize in one specific subject. |

| Young people enjoy life more than older people do. | |

| GRE | Major policy decisions should always be left to politicians and other government experts. |

| The surest indicator of a great nation is not the achievements of its rulers, artists, or scientists, but the general well-being of all its people. |

To the best of our knowledge, this is the first large-scale, prompt-balanced corpus of human and machine written English argumentative essays, with automated scores. We believe it can be beneficial to ESOL instructors, corpus linguists, and AIGC researchers.

2.2 Collecting human-written essays

Our goal is to collect human essays representing different levels of English proficiency. To this end, we decided to include essays from three sources: (1) WECCL, which we believe is representative of the writings from low to intermediate EOSL learners, (2) TOEFL11, representative of intermediate to advanced learners, and (3) GRE, which represents more advanced learners as well as native speakers. We elaborate on how the essays are collected and sampled below.

2.2.1 WECCL

WECCL (Written English Corpus of Chinese Learners) corpus is the sub-corpus of SWECCL (Spoken and Written English Corpus of Chinese Learners) (Wen, Wang, and Liang, 2005). Texts in WECCL are essays written by English learners from Chinese universities, collected in the form of in-class writing tasks or after-class writing assignments. WECCL contains exposition essays and argumentative essays, but only argumentative essays are used in our corpus. The original WECCL corpus has 4,678 essays in response to 26 prompts. We score these essays with aforementioned automated scoring system, and then categorize them into three levels: low (score 13), medium (14 score 17), and high (score 18). To keep it balanced with the TOEFL subcorpus, we down-sample WECCL into 1,845 essays, ensuring the ratio of low:medium:high is 1:3:1. From Table 1, we can see that WECCL essays are shorted in length among the three human sub-corpora, with mean length 244 words per essay.

2.2.2 TOEFL11

We use the TOEFL11 corpus released by ETS (Blanchard et al., 2013), which includes 12,100 essays written for the independent writing task in the Test of English as Foreign Language (TOEFL) by English learners with 11 native languages in response to 8 prompts. Since the essays come with three score levels (i.e., low, medium, high), we do not score them using the YouDao system. We down-sample TOEFL11 to 1,680 essays, making sure that we have the same number of essays per prompt. The ratio of low, medium, high is set to 1:3:1 as well.

2.2.3 GRE

We also collect essays in response to the GRE issue task. The Graduate Record Exam (GRE) has two writing tasks. The issue task asks the test taker to write an essay on a specific issue whereas the argument task requires the test take to read a text first and analyze the argument presented in the text mainly from logical aspect666More information about GRE writing could be found: https://www.ets.org/gre/test-takers/general-test/prepare/content/analytical-writing.html. In keeping with the prompts of WECCL and TOEFL11, we only consider the issue task in GRE.

As there are no publicly available corpus of GRE essays, we first collected 981 human written essays from 14 GRE-prep materials. An initial inspection shows that the collected essays have following two problems: 1) some prompts do not conform to the usual GRE writing prompts (e.g., some prompts have only one phrase: “Imaginative works vs. factual accounts”), 2) some essays show up in different GRE-prep materials. After removing the problematic prompts and keeping only one of the reduplicated essays, a total of 590 essays remained. We then score these essays using the YouDao automatic scoring system, and assign essays to three levels: low (score 3), medium (3 score 5) and high (score 5).

Note that as these essays are sample essays from humans, only 6 out of the 590 essays are grouped into low level (see Table 1).

2.3 Automatic scoring of essays

We use automated scoring systems to score the essays in ArguGPT for two reasons: (1) to allow balanced sampling from different levels of human essays, and (2) to estimate the quality of machine essays generated by different models.777We did not score human TOEFL essays as they come with a three-level (low, medium and high) score from the TOEFL11 corpus (Blanchard et al., 2013).

In the pilot study, we use two automated scoring systems (YouDao and Pigai888YouDao: https://ai.youdao.com; Pigai: http://www.pigai.org/) to score a total of 480 machine essays on 10 prompts with 6 GPT models. Analyses show that the scores given by the two systems are highly correlated: we see a Pearson correlation of 0.7570 for all 480 essays, 0.8730 when scores are grouped by prompts, and 0.9510 when grouped by models. Thus we decide to use only one system—YouDao, which provides an API for easy scoring. We further experiment with different settings of the YouDao system and decide to use their 30-point scale for TOEFL, 6-point scale for GRE, as they are optimized for TOEFL and GRE writing tasks, and a 20-point scale for WECCL, as our experiments show that this scale is most discriminating for essays generated by different models responding to WECCL prompts (see Appendix B.1 for details).

2.4 Collecting machine-generated essays

In this section, we introduce how we collect machine-generated essays. We conduct minimal prompt-tuning to select a proper format of prompt according to scores given by the automated scoring system. Finally, we use those prompts to generate essays.

2.4.1 Prompt selection

GPT models are prompt-sensitive (Chen et al., 2023). Thus for this study, we perform prompt tuning in our pilot.

We distinguish essay prompt from added prompt. An essay prompt is the sentence(s) that the test taker should respond to, e.g., “Young people enjoy life more than older people do.” An added prompt is the prompt or instruction added by us to prompt the model, e.g., “Please write an essay of 400 words.” One example is shown in Table 3.

| Prompt Type | Example |

| added prompt (prefix) | Do you agree or disagree with the following statement? |

| essay prompt | Young people enjoy life more than older people do. |

| added prompt (suffix) | Use specific reasons and examples to support your answer. |

An added prompt consists of two parts as the prefix and suffix to the essay prompt. Yet the prefix part is optional in our experimental settings. Therefore, the full prompt given to the machines is in the following format, where sometimes only the suffix part of the added prompt is used:

Our goal is to find the best-added prompt that maximizes the scores given by the YouDao automated system. To this end, we first devise 20 added prompts and manually inspect the generated essays, which are then narrowed down to 5 added prompts that produce good essays. Next, we generate essays using each of the 5 added prompts and 2 essay prompts from each of the WECCL, TOEFL11 and GRE sub-corpus. The mean score of the essays generated by these prompts are shown in Table 4.

| No. | Content of the Added Prompt | TOEFL11 | WECCL | GRE |

| 1 | Do you agree or disagree? Use specific reasons and examples to support your answer. Write an essay of roughly 300/400/500 words. | 20.53 | 20.56 | 20.97 |

| 2 | Do you agree or disagree? It is a test for English writing. Please write an essay of roughly 300/400/500 words. | 19.68 | 20.09 | 20.21 |

| 3 | Do you agree or disagree? Pretend you are the best student in a writing class. Write an essay of roughly 300/400/500 words, with a large vocabulary and a wide range of sentence structures to impress your professor. | 20.41 | 19.71 | 19.65 |

| 4 | Do you agree or disagree? Pretend you are a professional American writer. Write an essay of roughly 300/400/500 words, with the potential of winning a Nobel prize in literature. | 20.09 | 20.52 | 19.79 |

| 5 | Do you agree or disagree? From an undergraduate student’s perspective, write an essay of roughly 300/400/500 words to illustrate your idea. | 20.65 | 20.32 | 19.99 |

From Table 4, we see that essays from different prompts seem to be very close on their scores. Thus we choose prompt 01 because it has the highest average score for the three subcorpora (for more detail, see Appendix C):

<Essay prompt> + Do you agree or disagree? Use specific reasons and examples to support your answer. Write an essay of roughly 300/400/500 words.

To balance the length of machine essays with human essays, the prompts for WECCL, TOEFL11 and GRE differ in their requirement of essay length (300, 400 and 500 words respectively).

2.4.2 Generation configuration

We experiment with essay generation using 7 GPT models (see Table 5). We use all 7 models to generate essays in response to prompts in TOEFL11 and WECCL 999We give gpt2-xl beginning sentences randomly chosen from human essays for continuous writing, and remove those beginning sentences after generation.. However, as our GRE essays are mostly sample essays with high scores, we generate all 590 GRE machine essays using the two more powerful models: text-davinci-003 and gpt-3.5-turbo.

For the balance of the ArguGPT corpus, we generate 210 essays for each TOEFL11 prompt (30 essays per model with 6 essays per temperature for temp ), 35-210 essays for each WECCL prompt (the number of human essays for each WECCL prompt is different), and only 1 essay for each GRE prompt.

In our pilot study, we find that GPT-generated essays may have the following three problems: 1) Short: Essays contain only one or two sentences. 2) Repetitive: One essay contains repetitive sentences or paragraphs. 3) Overlapped: Essays generated by the same model may overlap with each other.

Thus we filter out essays with any of the three problems. First, we remove essays shorter than 100 words101010The minimal length of gpt2-xl is set to 50, for it is more difficult for gpt2-xl to generate longer texts.. Then we compute the similarity of each sentence pair in an essay by comparing how many words co-occur in both sentences. If 80% of words co-occur, then the sentence pair is considered to be two similar sentences. If 40% of sentences in one essay are similar sentences, then the essay is considered to be repetitive and will be removed. In like manner, we pair sentences in Essay A with sentences in Essay B. If 40% of sentences in Essay A and Essay B altogether are similar, then Essay A is considered to be overlapped with Essay B, which will result in the removal of Essay B.

The proportion of essays generated is given in Table 5. We have generated 9,647 essays in total, with 4,708 valid. Then we sample essays from machine-written WECCL/GRE essays to match the number of essays in human-written WECCL/GRE essays. We also manually remove some gpt2-generated essays that are apparently not in the style of argumentative writing (See Appendix A), resulting in 4,038 machine-generated essays in total.

| Model | Time stamp | # total | # valid | # short | # repetitive | # overlapped |

| gpt2-xl | Nov, 2019 | 4,573 | 563 | 1,637 | 0 | 2,373 |

| text-babbage-001 | April, 2022 | 917 | 479 | 181 | 240 | 17 |

| text-curie-001 | April, 2022 | 654 | 498 | 15 | 110 | 31 |

| text-davinci-001 | April, 2022 | 632 | 493 | 1 | 41 | 97 |

| text-davinci-002 | April, 2022 | 621 | 495 | 1 | 56 | 69 |

| text-davinci-003 | Nov, 2022 | 1,130 | 1,090 | 0 | 30 | 10 |

| gpt-3.5-turbo | Mar, 2023 | 1,122 | 1,090 | 0 | 4 | 28 |

| # total | - | 9,647 | 4,708 | 1,835 | 481 | 2,625 |

2.5 Preprocessing

We preprocess all human and machine texts in the same manner, so that the GPT/human-authored texts would not be recognized based on superficial features such as spaces after punctuation and inconsistent paragraph breaks.

Specifically, we perform the following preprocessing steps.

-

•

Essays generated by gpt-3.5-turbo often begin with “As an AI model…”, which gives away its author. Therefore, we remove sentences beginning with “As an AI model …”.

-

•

There are incorrect uses of capitalization. We capitalize the first letter of every sentence and the pronoun “I”.

-

•

There are incorrect uses of spaces and line breaks. We normalize the use of spaces and line breaks. One space is inserted after every punctuation; one space is inserted between two words; all spaces at the beginning of each paragraph are deleted; two line breaks are inserted at the end of each paragraph.

-

•

We normalize the use of apostrophes (e.g., don-t -> don’t).

2.6 Collecting out-of-distribution data

An out-of-distribution (OOD) test set is collected to evaluate the generalization ability of the detectors trained on the in-distribution dataset. Ideally, we should pair human and machine essays with the same writing prompts to compose the OOD test set, with which we can see the performance of detectors on both positive and negative samples at the same time. However, after compiling the ArguGPT dataset, we find no more human-written argumentative essays with accessible writing prompts. Therefore, we simply collect 500 human essays and 500 machine essays respectively without pairing them together.

The OOD test set is divided into two parts, as Machine OOD and Human OOD. Machine essays are generated by LLMs and human essays are written by Chinese English learners (see Table 6). Human essays and machine ones in OOD dataset share different writing prompts. In the two independent sub-sets, we can evaluate the performance on negative and positive samples respectively.

| OOD_machine | OOD_human | ||||

| sub-corpus | # essays | # tokens | sub-corpus | # essays | # tokens |

| gpt-3.5-turbo | 100 | 44,028 | st2: high school students | 100 | 19,975 |

| gpt-4 | 100 | 43,986 | st3: junior college students | 100 | 16,318 |

| claude-instant | 100 | 31,815 | st4: senior college students | 100 | 17,165 |

| bloomz-7b | 100 | 29,659 | st5: junior English majors | 100 | 24,978 |

| flan-t5-11b | 100 | 30,632 | st6: senior English majors | 100 | 54,466 |

Human OOD essays

The human OOD dataset is collected to test how the detectors trained with limited writing prompts perform on human essays in response to unseen ones. Human essays are sampled from CLEC (Chinese Learner English Corpus) Gui and Yang (2003), containing argumentative essays written by Chinese English learners of five different levels111111Writing prompts of these essays are not published by authors of CLEC. (see Table 6 for details).

Being written by Chinese English learners, essays in CLEC share similar linguistic properties as WECCL. However, the writing prompts in CLEC are speculated to be different from WECCL for the topics of these essays never occur in the ArguGPT dataset. Therefore, this dataset can be used to evaluate the performance of detectors on the out-of-distribution writing prompts while the linguistic features are probably in-distribution.

Machine OOD essays

The machine OOD test set is collected to evaluate the performance of the detectors in two cases: (1) new writing prompts, and (2) LLMs that are not used to generate the essays in the training set.

As the writing prompts in ArguGPT have two parts (i.e., essay prompt and essay prompt), we use the following steps to generate the prompts (see Table 7): half of the writing prompts are composed of 25 unseen essay prompts generated by ChatGPT, with the same added prompt used in the training portion of the ArguGPT dataset as the suffix; another half are 25 prompts resulted from combinations of 5 essay prompts sampled from the training set, with 5 unseen added prompts that are again generated by ChatGPT.

| type | # | source | example | |

| w/ unseen added prompts | essay | 5 | sampled from ArguGPT | Young people enjoy life more than older people do. |

| added | 5 | generated by ChatGPT | Analyze the statement <essay prompt> , by examining its causes, effects, and potential solutions. Write an essay of roughly 400 words. | |

| w/ unseen essay prompts | essay | 25 | generated by ChatGPT | Social media has more harmful effects than beneficial effects on society. |

| added | 1 | the one used in ArguGPT | <essay prompt> Do you agree or disagree? Use specific reasons and examples to support your answer. Write an essay of roughly 400 words. |

We use five models to generate machine-written argumentative essays in response to above 50 prompts, four of which are not used in the generation of the training data, thus serving our purpose to examine the generalization ability of our detectors:

-

•

gpt-3.5-turbo: gpt-3.5-turbo is an in-distribution (ID) model used in the ArguGPT dataset. We want to test the generalization ability on the ID model in response to OOD prompts. The essays of gpt-3.5-turbo are collected via OpenAI’s API.

-

•

gpt-4: gpt-4 is an OOD model from the GPT family. Therefore, we can see how the detectors trained on data generated by previous models predict ones written by the latest one. The essays of gpt-4 are accessed via the web-interface.

-

•

claude-instant: claude-instant121212https://claude-ai.ai/ is a large language model developed by Anthropic. With essays generated by claude-instant, we can test how the detectors transfer the ability to the model from non-GPT family. The essays of claude-instant are collected in the web-interface as gpt-4.

-

•

bloomz-7b (Workshop et al., 2023) and flan-t5-11b (Chung et al., 2022): We also use two language models in a much smaller scale, to investigate how well these detectors can detect essays generated by smaller language models. For these two models we run them locally on two 24GB RAM GPUs to generate essays.

Each model is asked to generate 2 essays for each prompt, amounting to 500 argumentative essays in total, serving as the machine OOD test set for positive samples.

3 Human evaluation

Our first research question is whether ESL instructors can identify the texts generated by GPT models. To answer this question, we recruit a total of 43 ESL instructors for two rounds of Turing tests. In each round, they are asked to identify which 5 essays are machine-written from 10 randomly sampled TOEFL essays. They are also asked to share their observations on the linguistic and stylistic characteristics of GPT-generated essays. In Section 3.1, we describe details of this experiment. In Section 3.2 we present and analyze the results.

3.1 Methods

Task

We ask human participants to determine whether an essay is written by a human or a machine. Previous research show that it is difficult for a layperson to spot a machine generated text (Brown et al., 2020; Dou et al., 2022; Guo et al., 2023). In light of such discoveries, we present 5 machine generated essays and 5 human essays each round to the participant, and ask them to rate the probability of each text being written by a human/machine on a 6-point Likert Scale, where 1 corresponds to “definitely human” and 6 corresponds to “definitely machine”. For the 5 machine essays, we sample 1 from each of the following 5 models: gpt2-xl, text-babbage-001, text-curie-001, text-davinci-003, gpt-3.5-turbo. For the 5 human essays, we sample 1 low, 3 medium and 1 high level essays, disregarding the native language of the human author.

Each participant will perform such a rating task for two rounds, on two different sets of essays. Answers given by participants are correct when they rating 1-3 point for human essays and 4-6 point for machine essays. After each round, they will be presented with the correct answers, giving them a chance to observe the features of the GPT-generated essays, which they are asked to write down and submit in a text box. Then they will be presented with the next set of essays. See Figure 1 for the pipeline of the experiment. We expect the accuracy to be higher in the second set of essays as the participants have seen machine essays and the correct answers in the first round. Instructions for the experiment can be found in Appendix D.

Participants

We recruit a total of 43 ESL instructors/teaching assistants from over 5 universities across China. The instructors from the English Department and/or College English Department131313The former is responsible for teaching English majors, while the latter is responsible for teaching general English courses to non-English majors in the university. include assistant professors/lecturers, associate professors, professors, and Ph.D. and MA students who have experiences as teaching assistants. Details are presented in Table 8. Each participant are compensated RMB (40 + 2correct answers), as an incentive for them to try their best in the task. The mean time of completion for round 1 and 2 is 15 minutes and 10 minutes respectively141414We did not see a strong correlation between completion time and the participants’ accuracy in the rating task(Pearson around 0.1).

| Identity | # Participants | Accuracy |

| MA student | 4 | 0.5875 |

| Ph.D. Student | 16 | 0.6656 |

| Assi. Professor/Lecturer | 11 | 0.6364 |

| Asso. Professor | 7 | 0.6929 |

| Professor | 3 | 0.6500 |

| Other | 2 | 0.5000 |

| total | 43 | - |

3.2 Results

The 43 participants make 860 ratings in response to 280 essays, which are taken from the 300 TOEFL essays in the test split of the corpus (see Section 5). We count the number of correct answers among the 860 choices in order to obtain the accuracy.

3.2.1 Quantitative analysis

The accuracy of our human participants in identifying machine essays is presented in Table 9. From the left side of Table 9, we see that the mean accuracy from all subjects in both rounds is 0.6465, roughly 15 percent more than the baseline, which is 0.5, since we have an equal number of human and machine texts in the test set.

One interesting discovery is that it is much easier for our participants to identify human essays. The accuracy of identifying human essays reaches 0.7744, while the accuracy of machine is only at chance level: 0.5186. We believe this is because all of our participants have a lot of experience reading ESOL learners’ writings and are thus quite familiar with the style and errors one can find in an essay written by a human language learner.

However, only 11 out of the 43 participants indicated that they are familiar with the (Chat)GPT models; that is, most of them are unfamiliar with the type of text generated by these models, which could explain why they have lower accuracy when identifying GPT-authored texts.

Going down the left side of Table 9, participants who self-report that they have some familiarity with LLMs have better performance on our task than those who are not familiar with LLMs (0.69 vs 0.64).

We also observe some interesting trends, as shown on the right side of Table 9. Participants are better at identifying low level human essays (acc: 0.8372), and essays generated by more advanced models such as text-davinci-003 and gpt-3.5-turbo (acc: 0.6279). Participants are particularly bad at identifying essays generated by gpt2-xl (acc: 0.3721). This is different from Clark et al. (2021, section 1) who suggested that the evaluators “underestimated the quality of text current models are capable of generating”. When our experiments were conducted, ChatGPT has become the latest model and participants seem to overestimate the non-ChatGPT models, by assigning gpt2-xl essays to human essays, as they have commonly seen student essays with low quality.

| Group by | Group | Accuracy | Author | Accuracy | |

| Essay type | Overall | 0.6465 | M | gpt2-xl | 0.3721 |

| Human essays | 0.7744 | text-babbage-001 | 0.4651 | ||

| Machine essays | 0.5186 | text-curie-001 | 0.4651 | ||

| Same essay prompt | Yes | 0.6472 | text-davinci-003 | 0.6628 | |

| for 10 essays | No | 0.6460 | gpt-3.5-turbo | 0.6279 | |

| Familiarity | Not familiar (600 ratings) | 0.6400 | H | human-low | 0.8372 |

| w/ GPT | Familiar (220 ratings) | 0.6909 | human-medium | 0.7752 | |

| Other (40 ratings) | 0.5000 | human-high | 0.7093 |

| Round 1 | Round 2 | |||||

| Overall | Human | Machine | Overall | Human | Machine | |

| Accuracy | 0.6163 | 0.7535 | 0.4791 | 0.6767 | 0.7954 | 0.5581 |

Subjects’ overall accuracy in the first round is 0.6163, while performance in the second round rises to 0.6767 (see Table 10). This suggests that after some exposure to machine texts (i.e., 5 machine texts and 5 human texts side by side) and reflection on the linguistic features of machine texts, our subjects become better at identifying machine texts, with the accuracy rising from 0.4791 to 0.5581. This result is in line with Clark et al. (2021) who employed 3 methods that ultimately improved human evaluators’ judgment accuracy from chance level to 55%. We also see a 4% improvement in the accuracy of identifying our human-written essays.

3.2.2 Qualitative analysis

In this section, we summarize the features of machine-essays provided by our participants in the experiment.

First, participants associate two distinctive features with human essays: (1) human essays have more spelling and grammatical errors, which is also mentioned in Dou et al. (2022), and (2) human essays may contain more personal experience. Seeing typos/grammatical mistakes and personal experience in one essay, participants are very likely to categorize it as human-authored.

As for machine essays, participants generally think (1) machine essays provide many similar examples, and (2) machine essays have repetitive expressions. These two features corroborate the findings of Dou et al. (2022). Reasons when participants make the right choices are presented in Table 11.

| Text Excerpt | Author | Choice | Reason |

| So to the oppsite of the point that mentioned in the theme, I think there will more people choose cars as their first transpotation when they are out and certainly there will be more cars in twenty years. | human- medium | Human | There are too many typos and grammatical errors. |

| Apart from that the civil service is the Germnan alternative to the militarz service. For the period of one year young people can help in there communities. | human- high | Human | The essay might be written by a German speaker. |

| Firstly… when I traveled to Japan… Secondly… when I went on a group tour to Europe… Thirdly… when I went on a safari in Africa… | gpt- 3.5- turbo | Machine | Examples provided are redundant. |

| I wholeheartedly… getting a more personalized experience… Some of the benefits… getting a more personalized experience… So, overall… get a more personalized experience… | text- curie- 001 | Machine | There are too many repetitive expressions. |

However, even with the knowledge of the two features of machine essays discussed above, participants are not very confident when identifying machine essays. There are redundant and repetitive expressions in human writings as well, which might confuse participants in this regard. Another important feature frequently mentioned by participants is off-prompt, meaning the writing digresses from the topic given by the prompt. Some tend to think it is a feature of machine essays, while others think it features human essays.

After being presented with correct answers in the human evaluation experiment, participants summarize their impression on AI-generated argumentative essays. We list some of the commonly mentioned ones below.

-

•

Language of machines is more fluent and precise. (1) There are no grammatical mistakes or typos in machine-generated essays. (2) Sentences produced by machines have more complex syntactic structures. (3) The structure of argumentation in machine essays is complete.

-

•

Machine-generated argumentative essays avoid subjectivity. (1) Machines never provide personal experience as examples. (2) Machines seldom use “I” or “I think”. (3) It is impossible to speculate the background information of the author in machine essays.

-

•

Machines hardly provide really insightful opinions. (1) Opinions or statements provided by machines are very general, which seldom go into details. (2) Examples in machine-generated essays are comprehensive, but they are plainly listed rather than coherently organized.

After browsing the reasons given by our participants, we find that grammatical mistakes (including typos) and use of personal experience are usually distinctive and effective features to identify human essays (English learners in our case), according to which participants can have a higher accuracy. However, a fixed writing format and off-prompt are not so reliable. If participants identify machine essays by these two features, the accuracy will drop. For a fixed writing format and off-prompt often feature human-authored essays as well.

3.3 Summary

Our results indicate that knowing the answers of the first round test is helpful for identifying texts in the second round, which is consistent with Clark et al. (2021). Contra Brown et al. (2020), our results show that essays generated by more advanced models are more distinguishable. Moreover, the accuracy on identifying texts generated by models from GPT2 and GPT3 series are lower than what is reported in previous literature (Uchendu et al., 2021; Clark et al., 2021; Brown et al., 2020). It indicates that human participants anticipate that machines are better than human at writing argumentative essays. When the models (e.g., gpt2-xl) generate an essay of lower quality, our human participants might feel more confused because they expect the machines should have better performance.

Looking into the summaries of AIGC features provided by participants, we can see that participants have a more detailed picture of how human essays look like (e.g., the mistakes that human English learners are likely to make). On the other hand, they can also capture some features of machine essays after reading several of them, though these features were not strong enough to help participants determine whether essays are human- or machine-authored.

Therefore, we think that if English teachers become more familiar with AIGC, they will be more capable to identify the features between human and machine.

4 Linguistic analysis

In this section, we compare the linguistic features of machine and human essays. We group the essays by author: (1) low-level human, (2) medium-level human, (3) high-level human, (4) gpt2-xl, (5) text-babbage-001, (6) text-curie-001, (7) text-davinci-001, (8) text-davinci-002, (9) text-davinci-003, and (10) gpt-3.5-turbo.

We first present some descriptive statistics of human and machine essays. We then use established measures and tools in second-language (L2) writing research to analyze the syntactic complexity and lexical richness of both human and machine written texts (Lu, 2012, 2010).

4.1 Methods

Descriptive statistics

We use in-house Python scripts and NLTK (Bird, 2002) to obtain descriptive statistics of the essays in the following 5 measures: (1) mean essay length, (2) mean number of paragraphs per essay, (3) mean paragraph length, (4) mean number of sentences per essay, and (5) mean sentence length.

Syntactic complexity

To analyze the syntactic complexity of the essays, we apply the L2 Syntactic Complexity Analyzer to calculate 14 syntactic complexity indices for each text (Lu, 2010). These measures have been widely used in L2 writing research. Table 12 presents details of the indices. However, only six out of these 14 measures are reported by Lu (2010) to be linearly correlated with language proficiency levels. Therefore, we only present the results for these six measures.

| Measure | Code | Definition | ||

| Length of production unit | ||||

| Mean length of clause | MLC | # of words / # of clauses | ||

| Mean length of T-unit | MLT | # of words / # of T-units | ||

| Coordination | ||||

| Coordinate phrases per clause | CP/C | # of coordinate phrases / # of clauses | ||

| Coordinate phrases per T-unit | CP/T | # of coordinate phrases / # of T-units | ||

| Particular structures | ||||

| Complex nominals per clause | CN/C | # of complex nominals / # of clauses | ||

| Complex nominals per T-unit | CN/T | # of complex nominals / # of T-units | ||

Lexical complexity

Lexical complexity or richness is a good indicator of essay quality and has been considered a useful and reliable measure to examine the proficiency of L2 learners (Laufer and Nation, 1995). Many L2 studies have discussed the criteria for evaluating lexical complexity. In this study, we follow Lu (2012) who compared 26 measures in language acquisition literature and developed a computational system to calculate lexical richness from three dimensions: lexical density, lexical sophistication, and lexical variation.

Lexical density means the ratio of the number of lexical words to the total words in a text. We follow Lu (2012) to define lexical words as nouns, adjectives, verbs, as well as adverbs with an adjectival base, such as “well" and the words formed by “-ly" suffix. Modal verbs and auxiliary verbs are not included. Lexical sophistication calculates the advanced or unusual words in a text (Read, 2000). We further operationalize sophisticated words as the lexical words, and verbs which are not on the list of the 2,000 most frequent words generated from the American National Corpus. As for lexical variation, it refers to the use of different words and reflects the learner’s vocabulary size.

N-gram analysis

We use the NLTK package to extract trigrams, 4-grams and 5-grams from both human and machine essays and calculate their frequencies in order to find out the usage preferences in human and machine essays. We then compute log-likelihood (Rayson and Garside, 2000) for each N-gram in order to uncover phrases that are overused in either machine or human essays.

4.2 Results

4.2.1 Descriptive statistics

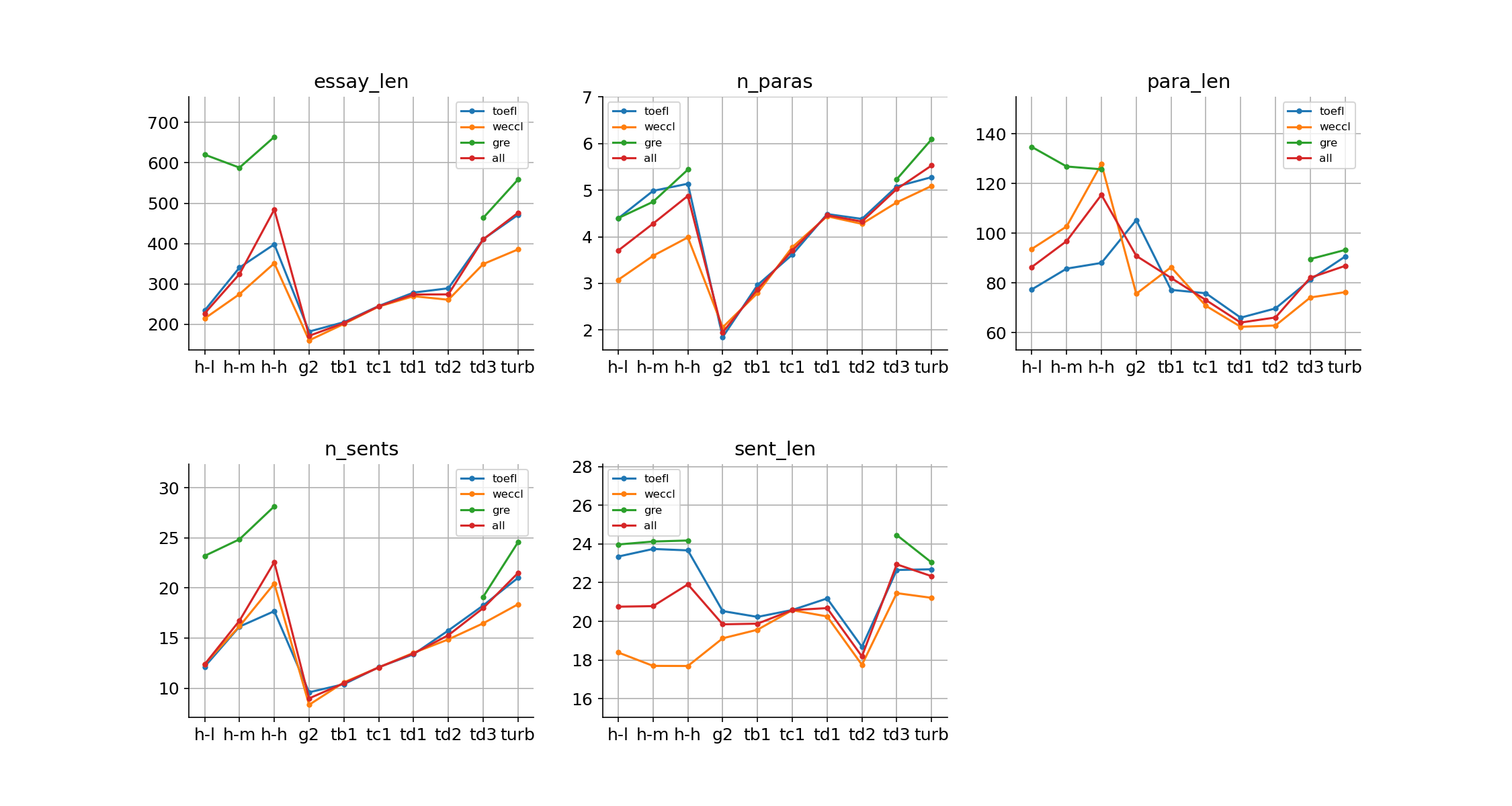

The descriptive statistics of sub-corpora in the ArguGPT corpus are presented in Figure 2. As for humans, essays with higher scores are likely to have a longer length from both essay-level and paragraph-level and more paragraphs and sentences in one essay. However, humans of all three levels write sentences of similar length.

In like manner, more advanced AI models are likely to have longer essay length, more paragraphs and sentences. However, the mean length of paragraphs goes down from gpt2-xl to text-davinci-001, and goes up slightly from text-davinci-002 to gpt-3.5-turbo. As for the mean length of sentences, text-davinci-002 writes the shortest sentences among machines.

Different machines match humans of different performance levels of mean essay-, paragraph-, and sentence-level length. However, regarding the length of paragraphs, human writers outperform machine writers.

4.2.2 Syntactic complexity

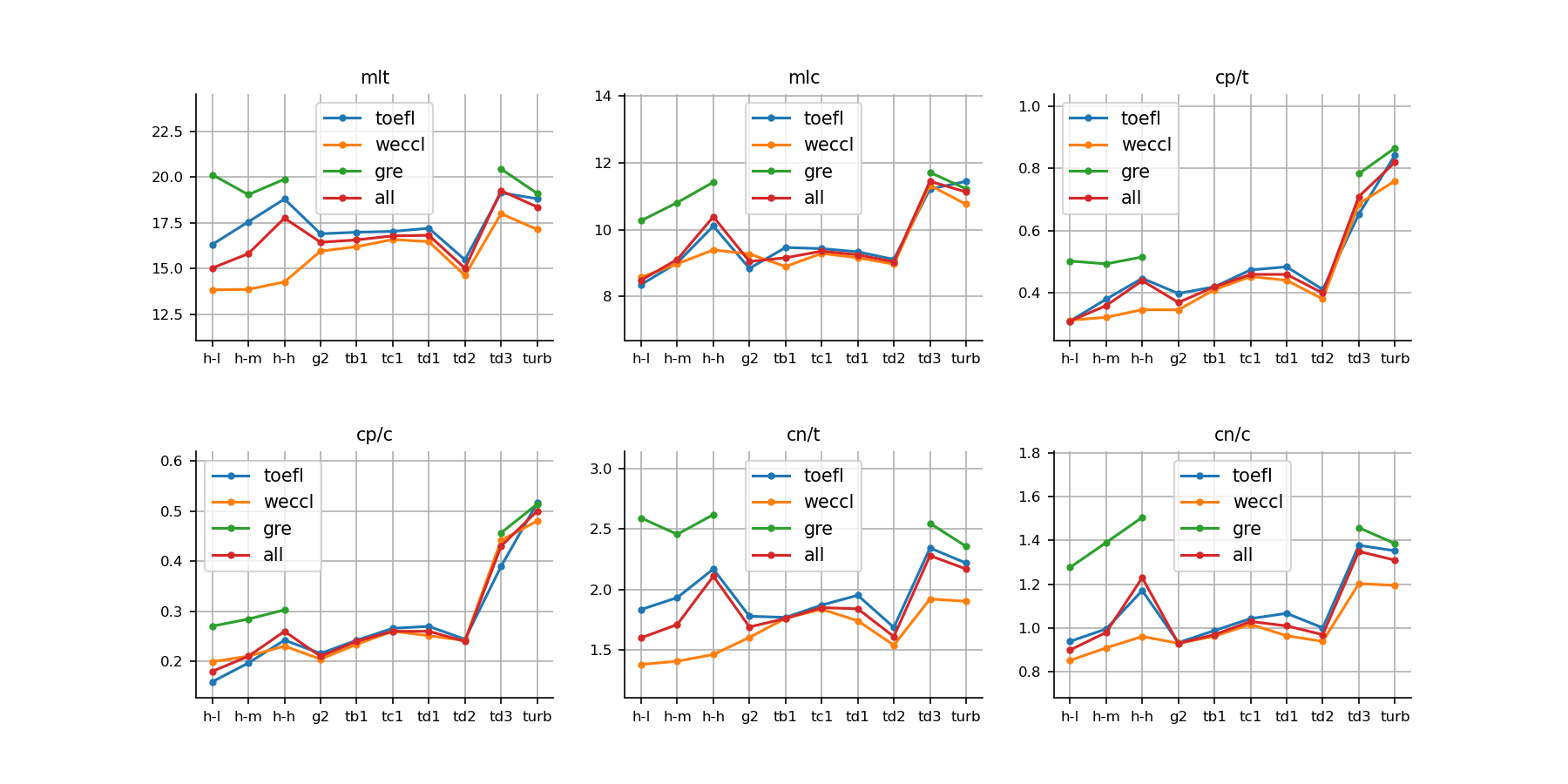

Figure 3 gives the means of the six syntactic complexity values of the essays which are grouped by sub-corpora in the ArguGPT corpus.

As shown in Figure 3, all 6 chosen syntactic complexity values progress linearly across 3 score levels for human essays. They also indicate a general growing trend across the language models following the order of development. It is noticeable that text-davinci-002 is worse w.r.t. these measures than previous and later models. According to MLT (Mean length of T-unit) and CN/T (complex nominals per T-unit), it is even outperformed by gpt2-xl.

When we compare human essays with machine essays, even high-level human English learners are outperformed by gpt-3.5-turbo and text-davinci-003 in all 6 measures. This is particularly true for CP/T and CP/C, which indicates that coordinate phrases are much more common in the essays from the last two models than from human learners. On the other hand, gpt2, text-babbage-001, text-curie-001, and text-davinci-001/002 seem to be on par with human learners on these measures.

We take the above results to suggest that in general, more powerful models such as davince-003 and ChatGPT produce syntactically more complex essays than even high-level English learners.

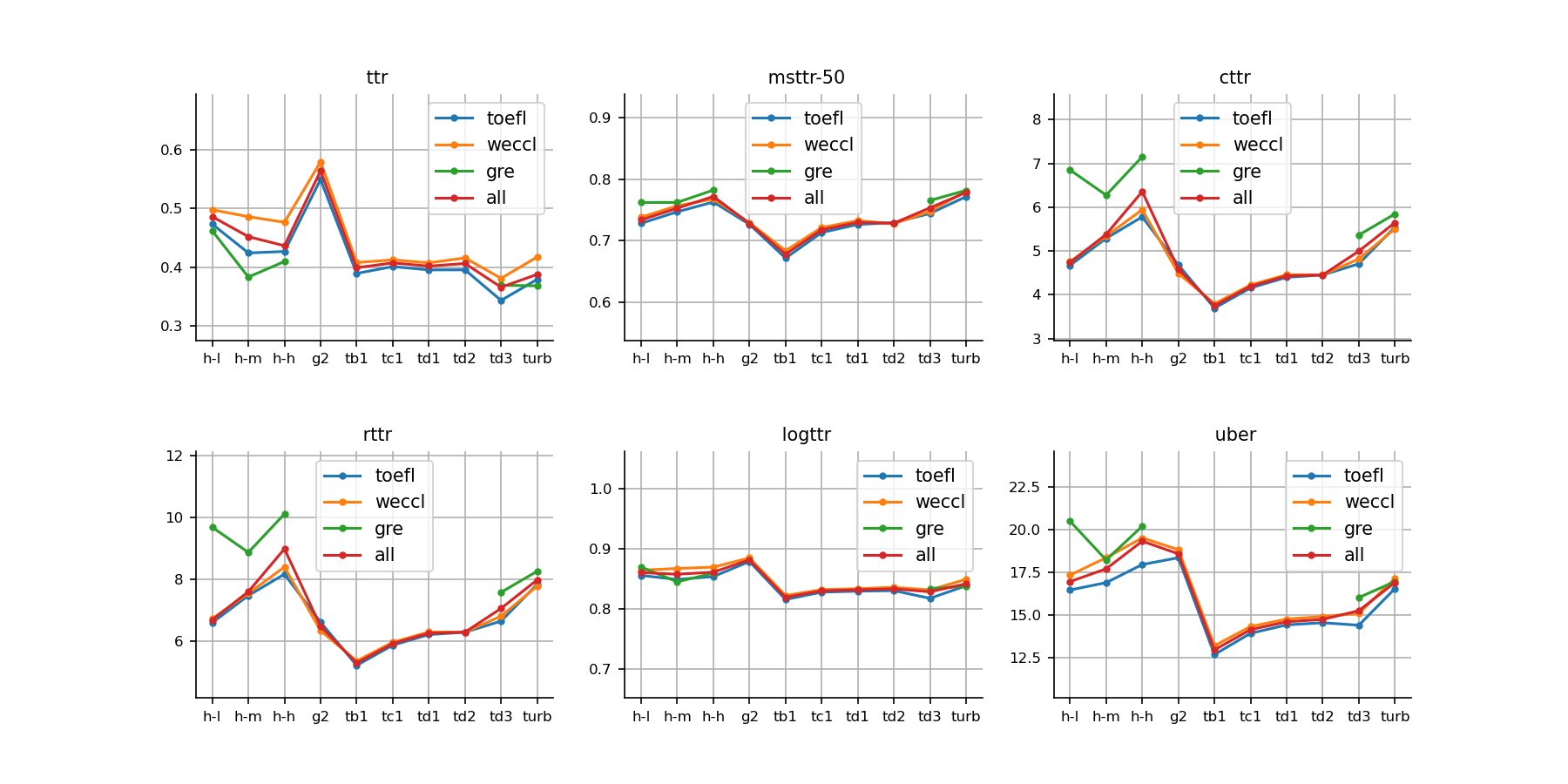

4.2.3 Lexical complexity

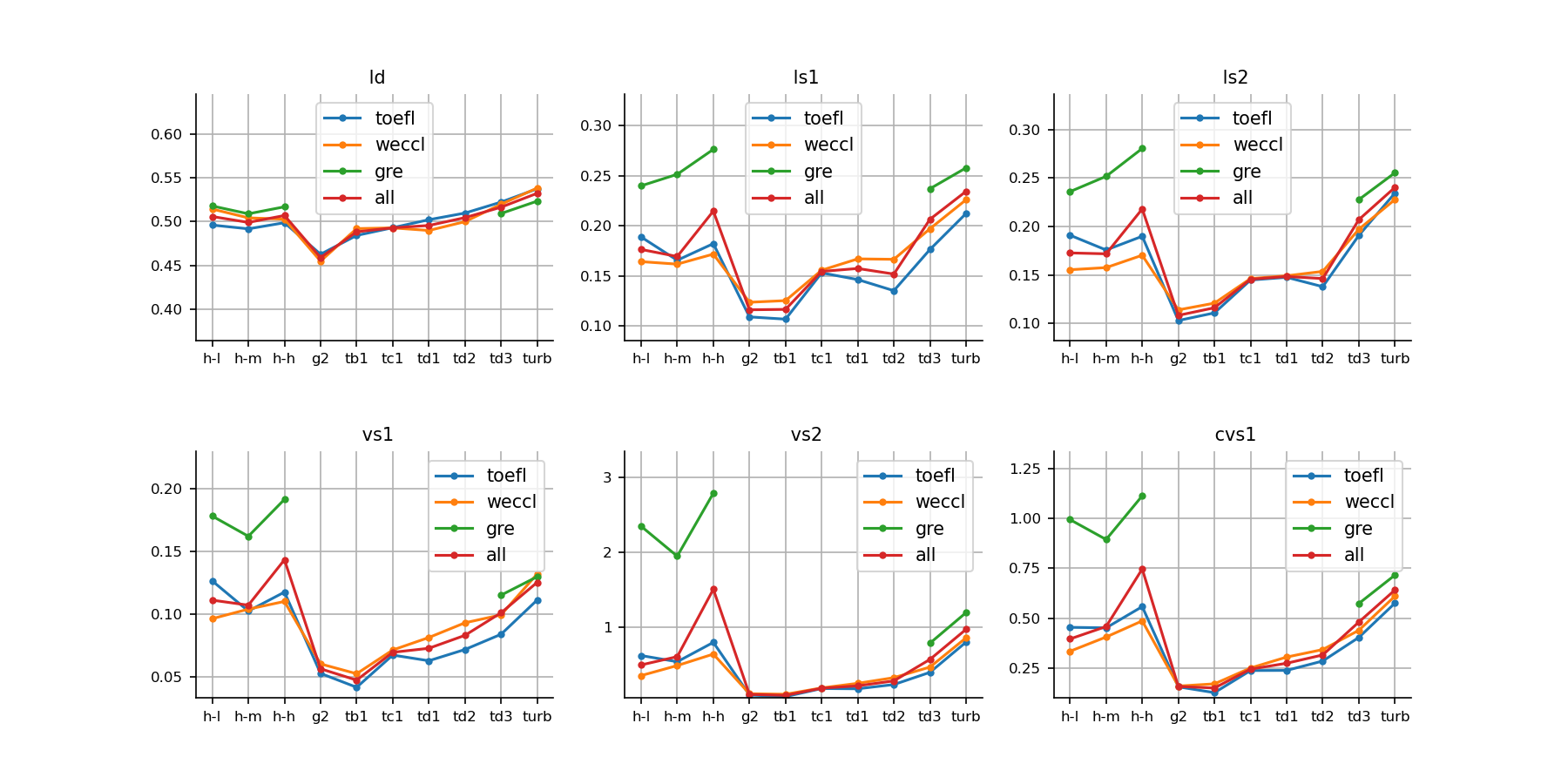

The lexical complexity of ArguGPT corpus is presented in Figures 4 to 7 (for actual numbers, see Table 27 and Table 28 in the Appendix).

Lexical density

Lexical density in Figure 4 shows that human essays tend to use more function words compared to text-davinci-003 and gpt-3.5-turbo, as these two models prefer more lexical words.

Lexical sophistication

As for lexical sophistication (also shown in Figure 4), advanced L2 learners outperform or are on par with gpt-3.5-turbo in all five indicators (lexical sophistication 1/2, and three measures of verb sophistication).

In terms of verb sophistication (bottom row of Figure 4), the differences are pronounced between low/medium level and high level of human writing. Advanced learners surpass gpt-3.5-turbo while the intermediate level is on par with text-davinci-003. However, high-level human essays in WECCL perform worse than gpt-3.5-turbo. Moreover, GRE essays have much higher values than WECCL and TOEFL in these three measures, especially for the advanced level. We attribute this to the nature of our GRE corpus, where essays are all example essays for those preparing for the GRE test to emulate rather than representative of all levels of test takers.

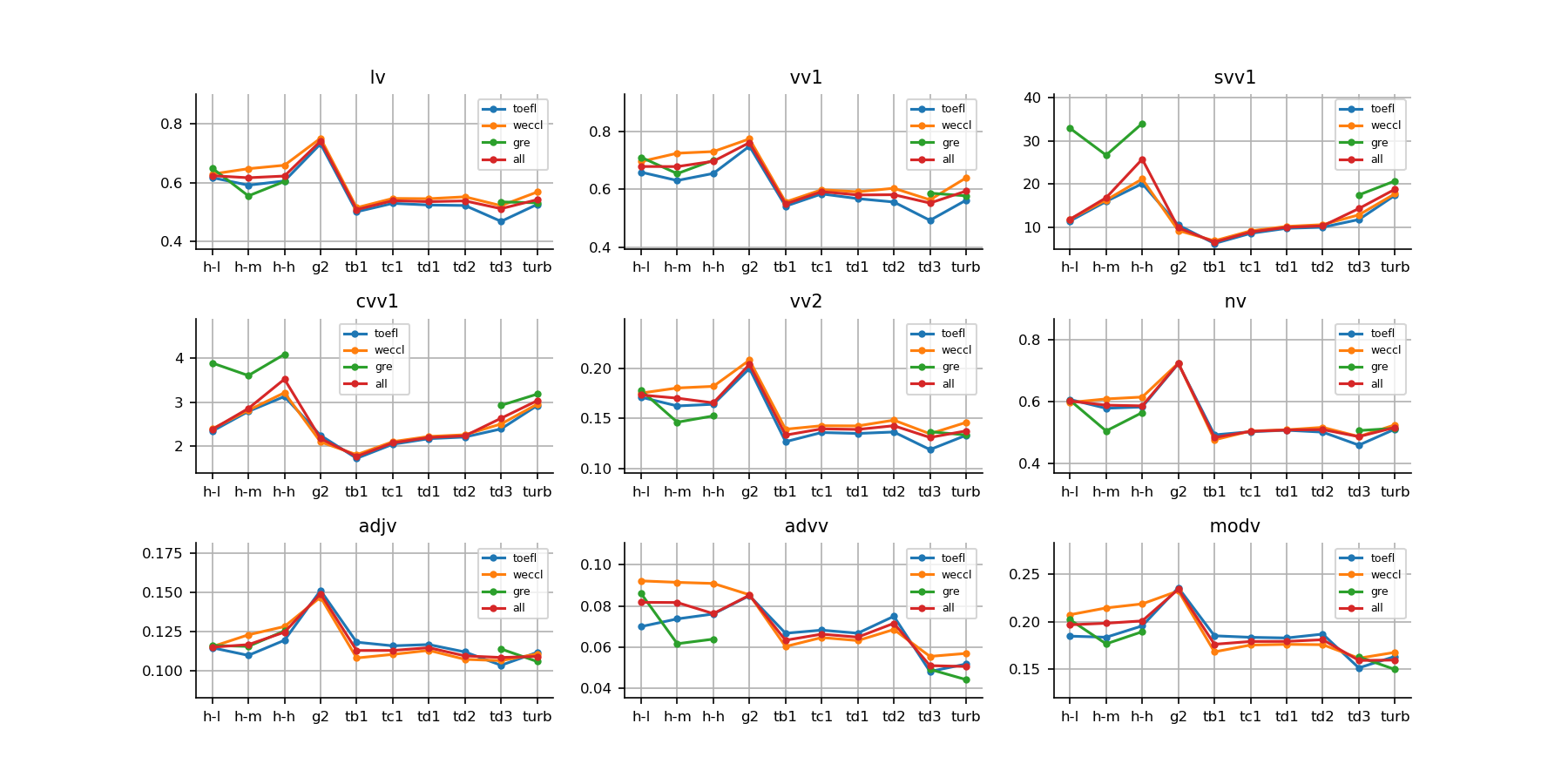

Lexical variation

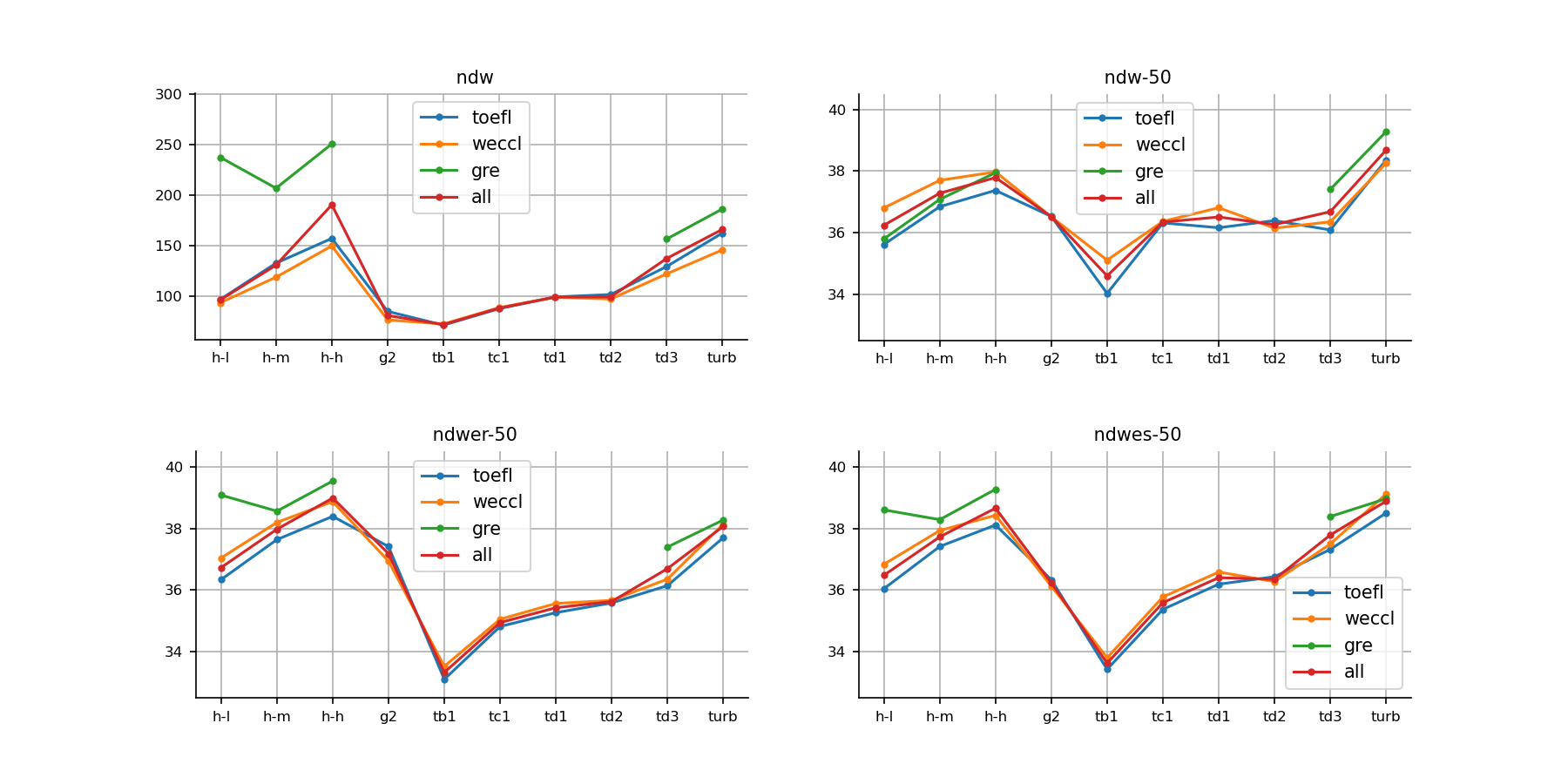

Measures for lexical variation are shown in Figure 5 (number of different words), Figure 6 (type-token ratio) and Figure 7 (type-token ratio of word class). They indicate the range and diversity of a learner’s vocabulary.

Among the four measures of the number of different words in Figure 5, advanced learners exceed gpt-3.5-turbo in two metrics. Text-davinci-003 and gpt-3.5-turbo are comparable to medium and high levels of L2 speakers’ writing, respectively. The trend is similar in WECCL and TOEFL corpora, except for the GRE corpus. Our GRE essays have the largest vocabulary and surpass gpt-3.5-turbo in three metrics.

Type-token ratio (TTR) is an important measure of lexical richness. Six indices of TTR in Figure 6 all suggest that, while gpt-3.5-turbo excels the medium-skilled test takers in WECCL and TOEFL, there is still a discernible gap between the performance of machines and that of skillful non-native speakers. GRE test takers at all levels outstrip the machines. It should be emphasized that TTR is not standardized compared to CTTR (corrected TTR) and its other variants, and shorter essays tend to have a higher TTR. Therefore, essays generated by gpt2-xl have a higher TTR. Other standardized variants better represent the lexical richness of the essays.

Type-token ratio can be further explored with respect to each word class. Figure 7 shows the variation of lexical words and other five ratios, including verbs, nouns, adjectives, adverbs and modifiers. We observe that advanced learners outperform gpt-3.5-turbo in all metrics among the three corpora. The margins are obvious in lexical words, verbs, nouns and adverbs. Note that SVV1 and CVV1 are standardized compared with other metrics, and may be more suitable for analysis of the discrepancies. The verb system is recognized as the focus in second language acquisition, for it is essential to construct any language (Housen, 2002), and humans showcase a stronger ability in applying abundant verbs than machines.

We take these results to suggest that unlike syntactic complexity, high-level English learners and native speakers of English are on par or even exceed gpt-3.5-turbo in terms of lexical complexity.

4.2.4 N-gram analysis

Table 13 lists 20 trigrams that are used significantly more frequently by language models than human. It is worth noting that “i believe that” appears 2,056 times in 3,338 machine-generated essays, but only 207 times in 3,415 human essays. This seems to be a pet phrase for text-davinci-001, as the phrase occurs 503 times in 509 texts generated by text-davinci-001, while the number of occurrences in 284 essays produced by gpt2-xl is only 31.

| Overused by machines | Overused by humans | ||||||

| Trigram | log-lklhd | M | H | Trigram | log-lklhd | M | H |

| i believe that | 1987.2 | 2056 | 207 | more and more | 313.4 | 179 | 753 |

| can lead to | 1488.6 | 1152 | 32 | what’s more | 230.4 | 2 | 197 |

| more likely to | 1257.1 | 1034 | 43 | the young people | 205.6 | 5 | 193 |

| it is important | 1063.8 | 1130 | 122 | we have to | 194.7 | 29 | 269 |

| are more likely | 831.9 | 679 | 27 | in a word | 184.7 | 1 | 154 |

| be able to | 775.3 | 1296 | 311 | to sum up | 178.7 | 3 | 161 |

| is important to | 646.7 | 707 | 82 | most of the | 177.6 | 27 | 247 |

| lead to a | 644.0 | 554 | 29 | in the society | 175.9 | 1 | 147 |

| a sense of | 531.4 | 562 | 60 | and so on | 171.1 | 24 | 232 |

| this can lead | 528.2 | 364 | 2 | we all know | 157.9 | 4 | 149 |

| can help to | 496.6 | 373 | 8 | the famous people | 156.7 | 4 | 148 |

| understanding of the | 493.7 | 507 | 50 | the same time | 156.4 | 48 | 282 |

| believe that it | 470.6 | 439 | 32 | of the society | 147.3 | 15 | 182 |

| this is because | 468.2 | 564 | 81 | we can not | 147.1 | 43 | 260 |

| likely to be | 459.7 | 422 | 29 | i think the | 144.6 | 17 | 186 |

| this can be | 455.0 | 445 | 38 | as far as | 144.3 | 3 | 133 |

| believe that the | 427.9 | 499 | 67 | so i think | 142.1 | 2 | 126 |

| the world around | 421.2 | 345 | 14 | at the same | 138.4 | 57 | 284 |

| may not be | 410.9 | 504 | 75 | his or her | 133.7 | 19 | 182 |

| skills and knowledge | 404.3 | 292 | 4 | i want to | 133.5 | 13 | 163 |

The right side of Table 13 lists 20 trigrams which are used significantly more frequently by humans. We can see from the log-likelihood value that the difference in usage of these phrases between humans and machines is not as prominent as those overused by machines. Nevertheless, it is still noticeable that “more and more” appears more often in human writing. When looking into the usage of this phrase in the TOEFL corpus, which contains essays written by English learners with 11 different native languages, we found that it is preferred by students whose first language is Chinese or French.

4.3 Summary

To sum up, the results of the linguistic analysis suggest that for syntactic complexity, text-davinci-003 and gpt-3.5-turbo produce syntactically more complex essays than most ESOL learners, especially on measures related to coordinating phrases, while other models are on par with the human essays in our corpus.

For lexical complexity, models like gpt2-xl, text-babbage/curie-001, and text-davinci-001/002, for the most part, are on par with elementary/intermediate English learners on several measures, while text-davinci-003 and gpt-3.5-turbo are approximately on the level of advanced English learners. For some lexical richness measures (verb sophistication, for instance) and GRE essays, our human data show even greater complexity than the best AI models.

In other words, sentences produced by machines are of greater length and syntactic complexity, but words used by machines are likely of less richness and diversity when compared to human writers (mostly English learners in our case).

Moreover, from the N-gram analysis, machines prefer expressions such as “I believe that”, “can lead to”, and “more likely to”, while these phrases are seldom used in our human-authored essays.

Additionally, we find that there is a general trend of increasing lexical/syntactic complexity as the models progress, but for certain measures, gpt2-xl and text-davinci-002 seem to be the exceptions.

5 Building and testing AIGC detectors

In this section, we examine whether machine-learning classifiers can distinguish machine-authored essays from human-written ones.

Specifically, we test two existing AIGC detectors—GPTZero and RoBERTa of Guo et al., and build and test our own detectors—SVM models with different linguistic features and a deep learning classifier based on RoBERTa. We also experiment zero/few-shot learning with gpt-3.5-turbo.

5.1 Experimental settings

We split the ArguGPT corpus into train, dev, and test sets (see Table 14), where the dev and test sets contain 700 essays respectively. In the dev and test sets, the proportion of low : medium : high level human essays is kept to 1:3:1 for WECCL and TOEFL11. As for WECCL and TOEFL11 machine essays, we sample 150 essays for each exam that are generated by 5 models (excluding text-davinci-001 and text-davinci-002). For GRE essays in the dev and test sets, we randomly sample 50 human essays, and 50 machine essays generated by text-davinci-003 and gpt-3.5-turbo.

For the two existing detectors—GPTZero and the RoBERTa model from (Guo et al., 2023), we use them as off-the-shelf tools without further fine-tuning or modification.

We train SVMs and finetune a RoBERTa-large model using the training set of ArguGPT. We perform zero/few-shot learning with gpt-3.5-turbo via OpenAI’s API.

For all experiments, we train and evaluate on document-, paragraph-, and sentence-level.

| Split | # WECCL | # TOEFL11 | # GRE | # Doc | # Para | # Sent |

| train | 3,058 | 2,715 | 980 | 6,753 | 29,124 | 111,283 |

| dev | 300 | 300 | 100 | 700 | 2,947 | 11,302 |

| test | 300 | 300 | 100 | 700 | 2,953 | 11,704 |

5.2 Results

The results for all detectors are summarized in Table 15.

| Test set | Our detectors | Existing detectors | |||||

| Test data | maj. bsln | Train data | RoBERTa | Best SVM | GPTZero | Guo et al. (2023) | |

| Doc | 50 | Doc | all | 99.38 | 95.14 | 96.86 | 89.86 |

| 50% | 99.76 | 94.14 | |||||

| 25% | 99.14 | 93.86 | |||||

| 10% | 97.67 | 92.29 | |||||

| Para | 52.62 | Doc | all | 74.58 | 83.61 | 92.11 | 79.95 |

| Para | 97.88 | 90.55 | |||||

| Sent | 54.18 | Doc | all | 49.73 | 72.15 | 90.10 | 71.44 |

| Sent | 93.84 | 81 | |||||

5.2.1 Existing detectors for AIGC: GPTZero and RoBERTa from (Guo et al., 2023)

We test two existing detectors for AIGC: GPTZero and finetuned RoBERTa of Guo et al. 151515https://huggingface.co/Hello-SimpleAI/chatgpt-detector-roberta.

We evaluate each detector’s performance on the ArguGPT test set. The RoBERTa model from Guo et al. (2023), which was finetuned on text in other genres such as finance and medicine, achieves an accuracy of around 90% for document-level classification, lower than the other classifiers, possibly due to the nature of their training data. The same model achieves 79.95% and 71.44% respectively on paragraph and sentence level classification.

As for GPTZero, we use their API, which returns the probability of being written by an AI model; the returned result includes probabilities for the entire essay, each paragraph and each sentence. We consider any text with a probability higher than 0.65 to be AI-written, following the documentation of GPTZero. Its performance is shown in Table 15. We can see that GPTZero reaches very high accuracy on document, paragraph and sentence levels (all above 90%).

5.2.2 SVM detector

In this section, we aim to find out if AIGC and human-written essays are distinguishable using hand-crafted linguistic features and a SVM classifier. By training SVMs on document-level with syntactic and stylistic features (i.e., no content information) as well as word unigrams (which include content words) and comparing their performance, we select the best set of features for classification and apply these features on paragraph and sentence levels.

Linguistic Features

We select some commonly used linguistic features and extract them with translationese101010https://github.com/huhailinguist/translationese which is extended from https://github.com/lutzky/translationese. package, which has been used in linguistically informed text classification tasks for translated and non-translated texts (Volansky, Ordan, and Wintner, 2015; Hu and Kübler, 2021). The implementation uses Stanford CoreNLP (Manning et al., 2014) for POS-tagging and constituency parsing. Specifically, we experimented with the following features:

-

•

CFGRs: The ratio of each context-free grammar (CFG) rule in the given text to the total number of CFGRs.

-

•

function words: The normalized frequency of each function word’s occurrence in the chunk. Our list of function words comes from (Koppel and Ordan, 2011). It consists of function words and some content words that are crucial in organizing the text, which constitutes 467 words in total.

-

•

most frequent words: The normalized frequencies (ratio to the token number) of the most frequent words () in a large reference corpus TOP_WORDS111111https://github.com/huhailinguist/translationese/blob/master/translationese/word_ranks.py. The top words are mainly function words.

-

•

POS unigrams: The frequency of each unigram of POS.

-

•

punctuation: The frequency (ratio to the token number) of punctuation in a given chunk.

-

•

word unigrams: The frequency of the unigram of words. We only include unigrams with more than three occurrences in the given text.

Among the features, CFGRs and POS unigrams separately represent the sentence structures and part of speech preference. They are the stylistic features reflecting the patterns underlying the superficial language expressions. function words, word unigrams and most frequent words reveal the concrete choices in lexical items. punctuation represents the habit of punctuation use.

We use the general SVM model from scikit-learn package (Pedregosa et al., 2011). We optimize its parameters including the and and the kernel functions by training with different combinations of them and selecting the one with the best performance on the development set. We also normalize our feature matrices before passing it to the model.

| Linguistic Features | Training Set | Feature Number | |||

| All | 50% | 25% | 10% | ||

| CFGRs (frequency 10) | 91.71 | 90.29 | 90.14 | 87 | 939 |

| CFGRs (frequency 20) | 78.71 | 78 | 78.14 | 76.71 | 131 |

| Function Words | 95.14 | 94.14 | 93.86 | 92.29 | 467 |

| Top 10 Frequent Words | 75.14 | 76.29 | 75.71 | 75.43 | 10 |

| Top 50 Frequent Words | 89.00 | 87.14 | 87.00 | 86.00 | 50 |

| POS Unigrams | 90.71 | 88.86 | 88.71 | 87.71 | 45 |

| Punctuation | 80 | 80.14 | 78.86 | 79.14 | 14 |

| Word Unigrams | 90.71 | 87.86 | 87.57 | 86.14 | 2,409 |

Results

As shown in Table 16, the classifier trained with function words attains an accuracy of 94.33% with the full training set. The detector trained with POS unigrams also perform well in this task, reaching an accuracy of 87%. The detectors using context free grammar rules and word unigrams as training feature also report an over 85% accuracy but with more features than POS unigrams. Increasing the number of frequent words from 10 to 50 enhances the accuracy by nearly 10%. It is interesting to note that using only 14 punctuations as features can give us an accuracy of 80%.

Analysis

We observe that training with syntactic and stylistic features results in high performance in our detector, which is shown in the accuracy in detector trained with POS unigrams and context free grammar rules. In particular, the detectors trained with CFGRs and POS unigrams outperform that trained with word unigrams. This indicates that relying on syntactic information alone, the detector can tell the differences between AIGC and human-written essays.

The detector trained with function words achieve the highest accuracy (95.14%, suggesting that humans and machines have different usage patterns for these words.

The performance of the model trained with the distribution of punctuation is around 80% with only 14 features, which suggests that humans and machines use punctuations very differently.

Overall, our results show that the SVM detector can distinguish the AIGC from human-written essays with high accuracy based on syntactic features alone. When trained with functions words as features, the SVM can achieve 95% accuracy at the document level.

5.2.3 Fine-tuning RoBERTa-large for classification

Methods

To further investigate whether AI generated essays are statistically distinguishable from human essays, we fine-tune a RoBERTa-large161616We use the huggingface implementation (Wolf et al., 2019) from https://huggingface.co/roberta-large. (Liu et al., 2019). Similar to how we train SVM detectors, we also train RoBERTa detectors on training sets of different granularities or different sizes to analyze the difficulties of AIGC detection. We train the model for 2 epochs using the largest batch size that can fit into a single GPU with 24 GB RAM. The full set of training hyperparameters are presented in Table 24. We evaluate the detector on the test set, but also report its performance on the portions of essays generated by different models, as presented in Table 17.

| Train data | gpt2-xl | babbage | curie | davinci-003 | turbo | all |

| gpt2-xl | 97.46 | 100.00 | 98.33 | 98.82 | 97.67 | 98.05 |

| babbage-001 | 98.31 | 100.00 | 98.33 | 98.82 | 98.84 | 99.19 |

| curie-001 | 97.74 | 100.00 | 99.44 | 99.41 | 99.81 | 99.33 |

| davinci-001 | 98.02 | 100.00 | 100.00 | 99.41 | 99.23 | 99.24 |

| davinci-002 | 98.31 | 99.72 | 99.45 | 99.80 | 99.42 | 99.33 |

| davinci-003 | 86.44 | 99.45 | 99.17 | 99.61 | 99.61 | 97.19 |

| turbo | 81.36 | 97.50 | 99.44 | 99.22 | 99.23 | 96.00 |

| 10% | - | - | - | - | - | 99.67 |

| 25% | - | - | - | - | - | 99.14 |

| 50% | - | - | - | - | - | 99.76 |

| all | 99.15 | 99.72 | 99.45 | 99.41 | 100.00 | 99.38 |

Results

From Table 17, we observe that RoBERTa easily achieves 99 accuracy in detecting AI generated essays, even when trained only on less then 10% of the data. When directly transferred to detecting paragraph-level and sentence-level data (see Table 15), the model’s performance drops by 23 points and 44 points respectively, but this is due to the distinct length gaps between training data and test data rather then the inherent difficulty in discriminated machine-generated sentences from human-written ones, as models trained on paragraph-level and sentence-level data scores 97.88 and 99.38 accuracy respectively on each one’s i.i.d test data.

Table 17 also confirms that the essays generated by models from GPT-3.5 family share a similar distribution, while the essays generated by GPT-2 are likely from a different distribution. But we hypothesize that this is not because essays generated by GPT-2 are harder to distinguish from human essays, but rather because its essays are not as well-posed as those generated by other models, and thus introduces larger noise into the detector’s training process.

5.2.4 Zero/few-shot learning experiment of gpt-3.5-turbo as AIGC detector

Methods

We test the capability of gpt-3.5-turbo (ChatGPT) on the AIGC detection task. We experiment with zero/one/two-shot learning by putting zero/one/two pairs of positive and negative examples in the prompt (for details see Appendix F).

All evaluation is done on our validation set. Since the performance is very poor, we do not further evaluate it on the test set.

Results

The accuracy for gpt-3.5-turbo on the AIGC detection task is presented in Table 18. The results show that gpt-3.5-turbo performs poorly on the AIGC detection task. Under the zero-shot scenario, the model classifies almost all essays as AI-generated. Therefore, the accuracy of zero-shot is close to 50%. Under the one-shot/two-shot scenarios, the average accuracy for the six pairs of prompts is also roughly 50%, suggesting that perhaps this task is still too difficult for the model in a few-shot setting. The model also has poor performance at paragraph-level classification, and we do not further experiment on sentence-level evaluation.

| Doc | Para | |

| Zero-shot | 50.33 | 43.28 |

| One-shot | 44.56 | 36.47 |

| Two-shot | 51.66 | 37.81 |

5.2.5 Out-of-distribution performance

To investigate the generalization ability of AIGC detectors, four aforementioned detectors are evaluated on the OOD dataset on the doc-, para-171717We don’t paragraph human essays for CLEC does not provide explicit notations for paragraphs., and sent-level, including (1) our RoBERTa trained on the training set of ArguGPT dataset, (2) the SVM model trained on the features of function words, (3) GPTZero (version 2023-06-12), and (4) RoBERTa released by Guo et al.. The results are presented in Table 19.

We first make three general observations:

-

•

It is much easier for detectors to detect OOD human essays—all detectors have 90+% accuracy—than machine essays—some detectors have poor performance, at around 50%.

-

•

GPTZero has the best performance on the human OOD test set with document-level accuracy at 100.00% and sentence level at 96.92%.

-

•

Our RoBERTa trained on the ArguGPT dataset has the best performance on the machine OOD test set (doc: 97.00%; para: 93.13%; sent: 83.57%).

Results on the human OOD test set

We observe that the performance on the human OOD test set is much better than the performance on the machine OOD test set. Apart from the RoBERTa fine-tuned on other text genres (Guo et al., 2023) which has a sent-level accuracy of 60.60%, all other four detectors have the accuracy of 94%+ for human OOD test set at both sent- and doc-level, among which GPTZero is the best detector for human OOD essays (doc: 100.00%; sent: 96.92%).

| Machine | ||||||||

| Model | Level | Sub-corpus | Overall | ID acc./ | ||||

| turbo | gpt-4 | claude | bloomz | flan-t5 | OOD acc. | |||

| RoBERTa | doc | 99.67 | 100.00 | 97.00 | 95.67 | 92.67 | 97.00 | 99.71/2.71 |

| para | 98.85 | 95.82 | 90.33 | 79.27 | 75.67 | 93.13 | 98.71/5.58 | |

| sent | 97.01 | 92.83 | 83.81 | 63.85 | 77.80 | 83.57 | 97.26/13.69 | |

| Best SVM | doc | 85.00 | 88.00 | 75.00 | 60.00 | 53.00 | 72.20 | 94.00/21.80 |

| para | 83.80 | 60.69 | 59.61 | 39.00 | 46.00 | 64.43 | 89.42/24.99 | |

| sent | 72.65 | 57.83 | 56.14 | 16.00 | 28.00 | 53.13 | 78.33/25.20 | |

| GPTZero | doc | 94.00 | 32.00 | 11.00 | 54.00 | 76.00 | 53.40 | 95.42/42.02 |

| para | 94.27 | 50.00 | 21.16 | 56.09 | 84.00 | 57.72 | 94.06/36.34 | |

| sent | 96.77 | 56.25 | 22.52 | 62.13 | 87.50 | 65.37 | 96.57/31.20 | |

| Guo et al. (2023) | doc | 80.00 | 15.00 | 30.00 | 84.00 | 87.00 | 59.20 | 94.00/34.80 |

| para | 90.83 | 49.86 | 47.25 | 76.21 | 87.00 | 64.67 | 92.42/27.75 | |

| sent | 88.08 | 59.86 | 59.92 | 61.87 | 79.88 | 69.87 | 87.19/17.32 | |

| Human | ||||||||

| Model | Level | Sub-corpus | Overall | ID acc./ | ||||

| st2 | st3 | st4 | st5 | st6 | OOD acc. | |||

| RoBERTa | doc | 95.33 | 99.67 | 100.00 | 97.33 | 100.00 | 98.47 | 99.05/0.58 |

| para | - | - | - | - | - | - | - | |

| sent | 94.64 | 95.65 | 96.64 | 94.75 | 89.22 | 93.20 | 90.93/-2.27 | |

| Best SVM | doc | 92.00 | 91.00 | 95.00 | 97.00 | 99.00 | 94.80 | 96.29/1.49 |

| para | - | - | - | - | - | - | - | |

| sent | 92.89 | 90.01 | 92.00 | 89.75 | 81.61 | 87.91 | 83.25/-4.66 | |

| GPTZero | doc | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 98.28/-1.72 |

| para | - | - | - | - | - | - | - | |

| sent | 98.09 | 94.17 | 99.75 | 96.00 | 95.61 | 96.92 | 96.57/-0.35 | |

| Guo et al. (2023) | doc | 96.00 | 100.00 | 99.00 | 100.00 | 100.00 | 99.00 | 85.71/-13.29 |

| para | - | - | - | - | - | - | - | |

| sent | 71.11 | 62.64 | 71.46 | 64.82 | 46.98 | 60.60 | 58.23/-2.37 | |

Results on machine OOD test set

When we turn to results on OOD essays written by generative models, we see that the RoBERTa finetuned on ArguGPT training data achieves exceptionally high accuracy at all three levels (doc: 97%, para: 93%, sent: 83%). At the document level, the performance is only 2 percentage points below the in-distribution performance, while at the sentence level, we see a drop of roughly 13% from in-distribution performance (see last column of Table 19).

Our best model of SVMs performs second best at the document-level. Nevertheless, the SVM model has the lowest accuracy in prediction at the sentence level.

On the other hand, the two detectors that are not specifically trained for argumentative essay detection—GPTZero and RoBERTa of Guo et al.—exhibit surprisingly poor performance, with an accuracy of 53.40% and 59.20% respectively. For the RoBERTa from Guo et al. (2023), this could be attributed to the fact that their training data do not contain argumentative essays, and that all the texts in their training data are generated by one model—gpt-3.5-turbo.

The performance of GPTZero is particularly unsatisfactory for essays generated by gpt-4 (32% at doc-level) and claude-instant (11%). Thus one should be cautious when using GPTZero for detection essays written by AI models other than gpt-3.5-turbo. We also note that GPTZero has better performance on finer-grained prediction (i.e. sent-level para-level doc-level). However, there is a reverse trend for the ArguGPT-finetuned RoBERTa, namely it performs best at the document-level, but worst at sent-level.

For essays generated by different models, detectors have drastically different performance. For essays generated by gpt-3.5-turbo, all detectors have a similar performance compared to the in-distribution (ID) evaluation on the ArguGPT test set, except for the SVM and RoBERTa from Guo et al. (2023) which has a roughly 10% gap between the OOD and ID performance. However, for essays generated by gpt-4 and claude-instant, it becomes extremely difficult for two off-the-shelf detectors, GPTZero and Guo et al. (2023), with their accuracy between 11% and 32%. On the other hand, our RoBERTa finetuned on the ArguGPT training data shows almost no performance drop from ID to OOD evaluation at the document level (acc: 95+%). For essays generated by the two smaller language models bloomz-7b and flan-t5-11b, we observe that the two off-the-shelf detectors have a better performance, with accuracy between 54% and 87% at the doc-level.

Discussion and implications

Our experiments on the OOD test set have several implications.

First, when evaluating AIGC detectors, it is necessary to construct a more comprehensive evaluation set that covers text generated by multiple models, as detection accuracy varies dramatically for text generated by different models. In our experiments, detectors have much better performance of predicting gpt-3.5-turbo than other models. However, as these generative models quickly update and new models emerge, an evaluation set should ideally cover as many models as possible so as to reflect the actual detection performance.

Second, transferring to detect AIGC generated by a different model might be more difficult than transferring to a different text genre. This is manifest in the ID and OOD performance of the RoBERTa by Guo et al. (2023), which is fine-tuned on text of 5 genres generated solely by gpt-3.5-turbo: while it has 80+% accuracy on detecting argumentative essays written by gpt-3.5-turbo, the performance drops to 15% and 30% respectively for essays generated by gpt-4 and claude-instant. Our results suggest that text produced by different models may have distinctive textual features that can be challenging for transfer learning. This resonates with our first point where a more comprehensive evaluation set is necessary.

Third, it is easier for the detectors to identify human essays than machine essays. Our OOD results show that the detectors have higher performance on human essays, suggesting that the human essays in our OOD set are more or less homogeneous to the ID data, whereas the machine essays are likely from a genuine different distribution.

6 Related work

6.1 The evolution of large language models

Since Vaswani et al. (2017) proposed Transformer, a machine translation model that relies on self-attention, language models have kept advancing at an unprecedented pace in recent years. The field has seen innovative ideas ranging from the pretraining-finetuning paradigm (Radford et al., 2018; Devlin et al., 2018) and larger-scale mixed-task training (Raffel et al., 2019) to implicit (Radford et al., 2019) and explicit (Wei et al., 2021; Sanh et al., 2021) multitask learning and in-context learning (Brown et al., 2020). Some works scaled language models to hundreds of billions of parameters (Rae et al., 2021; Smith et al., 2022; Chowdhery et al., 2022), while others reevaluated scaling laws (Kaplan et al., 2020; Hoffmann et al., 2022) and trained smaller models on larger amount of higher-quality data (Anil et al., 2023; Li et al., 2023). Some trained them to follow natural (Mishra et al., 2022), supernatural (Wang et al., 2022b), and unnatural (Honovich et al., 2022) instructions. Others investigated their abilities in reasoning with chain-of-thought (Wei et al., 2022; Wang et al., 2022a; Kojima et al., 2022).