Assessing the significance of longitudinal data in Alzheimer’s Disease forecasting

Abstract

In this study, we employ a transformer encoder model to characterize the significance of longitudinal patient data for forecasting the progression of Alzheimer’s Disease (AD). Our model, Longitudinal Forecasting Model for Alzheimer’s Disease (LongForMAD), harnesses the comprehensive temporal information embedded in sequences of patient visits that incorporate multimodal data, providing a deeper understanding of disease progression than can be drawn from single-visit data alone. We present an empirical analysis across two patient groups—Cognitively Normal (CN) and Mild Cognitive Impairment (MCI)—over a span of five follow-up years. Our findings reveal that models incorporating more extended patient histories can outperform those relying solely on present information, suggesting a deeper historical context is critical in enhancing predictive accuracy for future AD progression. Our results support the incorporation of longitudinal data in clinical settings to enhance the early detection and monitoring of AD. Our code is available at https://github.com/batuhankmkaraman/LongForMAD.

Keywords:

Alzheimer’s Forecasting Longitudinal Data Transformer Neural Networks1 Introduction and Related Literature

Alzheimer’s Disease (AD) poses a significant challenge to global healthcare systems, affecting millions of individuals and their families. Early and accurate prediction of AD progression is crucial for effective management and treatment planning. The two key progression events to forecast in Alzheimer’s are the conversion from the cognitively normal (CN) state to the mild cognitive impairment (MCI) state, and from the MCI state to the AD state. Recent advancements in machine learning and deep learning have opened new avenues for predicting disease progression, leveraging vast amounts of medical data. Longitudinal studies have been instrumental in identifying risk factors and progression markers of various disease types [13].

In previous studies, various methods have been employed to leverage longitudinal data for AD. Longitudinal cortical thickness changes from multiple time points in patients’ history are used for classifying CN vs AD and sMCI (stable MCI) vs pMCI (progressive MCI) at the time of the most recent visit in [15] with a support vector machine (SVM). [23] uses longitudinal clinical data and biomarkers from multiple time points to classify sMCI vs pMCI using a multi-kernel SVM. In more recent years, [5] employs an RNN-based model for CN vs AD and sMCI vs pMCI classification using MRIs collected at multiple time points. [10] employs a convolutional architecture for survival prediction, using longitudinal clinical data and biomarkers collected from multiple time points in patients’ history to forecast non-AD vs AD outcomes in a 5-year future time horizon. Transformer encoders [20], have shown promise in medical data analysis by capturing the temporal dynamics of disease progression [21, 19]. Their capability to process variable-length and non-uniform input sequences makes them well-suited for analyzing heterogeneous longitudinal historical data commonly found in healthcare records. In the context of Alzheimer’s, [8] employs a transformer-based model for sMCI vs pMCI classification using 3D MRIs from the patient’s current visit and one prior visit. Similarly, [3] utilizes a transformer-based model to combine image embeddings extracted from MRIs for CN vs AD classification at the time of the last MRI. However, relying solely on the patient’s current visit and one prior visit is inadequate for comprehensively understanding the importance of longitudinal data. The aforementioned studies either do not predict future clinical state or focus solely on sMCI vs pMCI for the MCI group or non-AD vs AD discrimination, without distinguishing between CN and MCI groups. The sMCI and pMCI classifications are based on an arbitrary follow-up year. While some studies use all years in a 5-year follow-up [7], they do not thoroughly investigate the importance of longitudinal patient data across different prediction time horizons. Few studies explore the progression of CN baseline patients [4], and none have fully examined the impact of longitudinal patient history, such as history duration and data collection frequency.

In this work, we quantify the significance of longitudinal patient data in AD forecasting for both CN-baseline and MCI-baseline patient groups, across each year of a 5-year follow-up time horizon, and for various longitudinal data modalities. We utilize an extensive history of patients’ visits, going back up to three years, and assess the impact of history on a model’s predictions in terms of both history duration and data collection frequency. To facilitate this analysis, we present a Longitudinal Forecasting Model for Alzheimer’s Disease (LongForMAD), a transformer encoder-based model capable of making predictions under diverse data modality and patient history availability scenarios for both CN-baseline and MCI-baseline patients and for any future time point. Our analysis highlights the critical importance of longitudinal data in clinical environments, illustrating its pivotal role in enhancing the early detection of AD, especially in the CN cohort.

2 Materials and Methods

2.1 Dataset

All participants used in this work are from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database [18].

Participants are chosen based on their clinical diagnosis status. Specifically, we include individuals who have not been diagnosed with clinical AD at their baseline visit. Additionally, these participants must have undergone at least one follow-up diagnostic assessment after this baseline visit. We exclude CN baseline subjects who converted to AD because they are very few (n=9). In addition, we exclude CN baseline participants who converted to MCI before reverting to CN (n=41), and MCI baseline participants who were diagnosed as CN in a later follow-up year (n=284) since these subjects might have been diagnosed incorrectly at some point. Therefore, we focus solely on CN baseline subjects who progress to MCI and MCI baseline subjects who advance to AD, reflecting the irreversible progression of Alzheimer’s disease. After the exclusions, we are left with 1404 participants. Table 1 lists summary statistics for the participants.

| CN at | MCI at | |

|---|---|---|

| baseline | baseline | |

| Female/Male | 335 / 280 | 324 / 465 |

| Age | 73.19 6.18 | 73.46 7.39 |

| Education | 16.51 2.57 | 15.93 2.81 |

| APOE4 | 430/169/14 | 371/313/98 |

| CDR | 0.04 0.13 | 1.55 0.89 |

| MMSE | 29.11 1.11 | 27.52 1.82 |

The data, typical of many longitudinal studies, includes missing follow-up visits, irregular timings, and subject dropouts before study completion. Table 2 details the number of subjects in each diagnostic group for annual follow-ups. In all analyses, including Table 2, subjects who progressed to a later stage (CN to MCI, or MCI to AD) before dropping out are assumed to remain at the advanced stage. Stable subjects not present in a specific follow-up year are excluded from training and testing for that year.

| Patient | Follow-up | Follow-up year | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Group | DX | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |

| CN at | CN | 427 | 527 | 181 | 230 | 123 | 173 | 97 | 72 | 33 | 17 | 23 | 11 | 19 | 2 | 4 |

| baseline | MCI | 14 | 32 | 41 | 49 | 54 | 65 | 79 | 83 | 85 | 87 | 87 | 88 | 88 | 88 | 88 |

| MCI at | MCI | 674 | 431 | 317 | 202 | 127 | 93 | 88 | 57 | 30 | 20 | 6 | 8 | 3 | - | - |

| baseline | AD | 110 | 218 | 261 | 286 | 292 | 305 | 313 | 322 | 324 | 326 | 327 | 327 | 327 | 327 | 327 |

2.2 Input features

We use the clinical data and biomarkers as our input features. Clinical data includes subject demographics, genotype (the number of APOE4 alleles, specifically), cognitive test scores, and diagnosis (CN or MCI). The complete list of demographic features and cognitive tests can be found in [17]. The biomarkers are Cerebrospinal Fluid (CSF) measurements and Magnetic Resonance Imaging (MRI) volume measurements [9] (computed using the FreeSurfer software [6]). In the ADNI study, subjects undergo multiple cognitive assessments and MRI scans across visits, providing a detailed longitudinal medical history. The demographic and genotype variables remain static, and CSF is not collected at every visit. ADNI is divided into four phases (ADNI-1, ADNI-GO, ADNI-2, and ADNI-3), each with its own data acquisition protocol. There is considerable heterogeneity in the follow-up data collection, including irregular visit intervals and frequent missing visits. The extent of data missingness across various modalities for the 1404 participants in our study is detailed in Table 3.

| Data | CN at | MCI at |

|---|---|---|

| type | baseline | baseline |

| COGN | 45.28 | 43.42 |

| MRI | 58.22 | 48.00 |

| CSF | 85.54 | 83.97 |

Following [11], we begin the input preprocessing by recording the binary missingness mask for the feature set. Each participant has their own binary missingness mask indicating what variable was observed or not for that particular individual at a specific visit. Then, we perform mode/mean substitution for missing categorical/numerical features, respectively, following [2]. The categorical variables except the diagnosis are one-hot encoded, and numerical variables are z-score normalized in the last step of feature processing. To prevent any information leakage, both mode/mean substitution and z-score normalization statistics are collected from training data and used for training, validation and test data. Concatenating the input features, and the binary missingness mask yields a feature vector of length 113.

2.3 Model

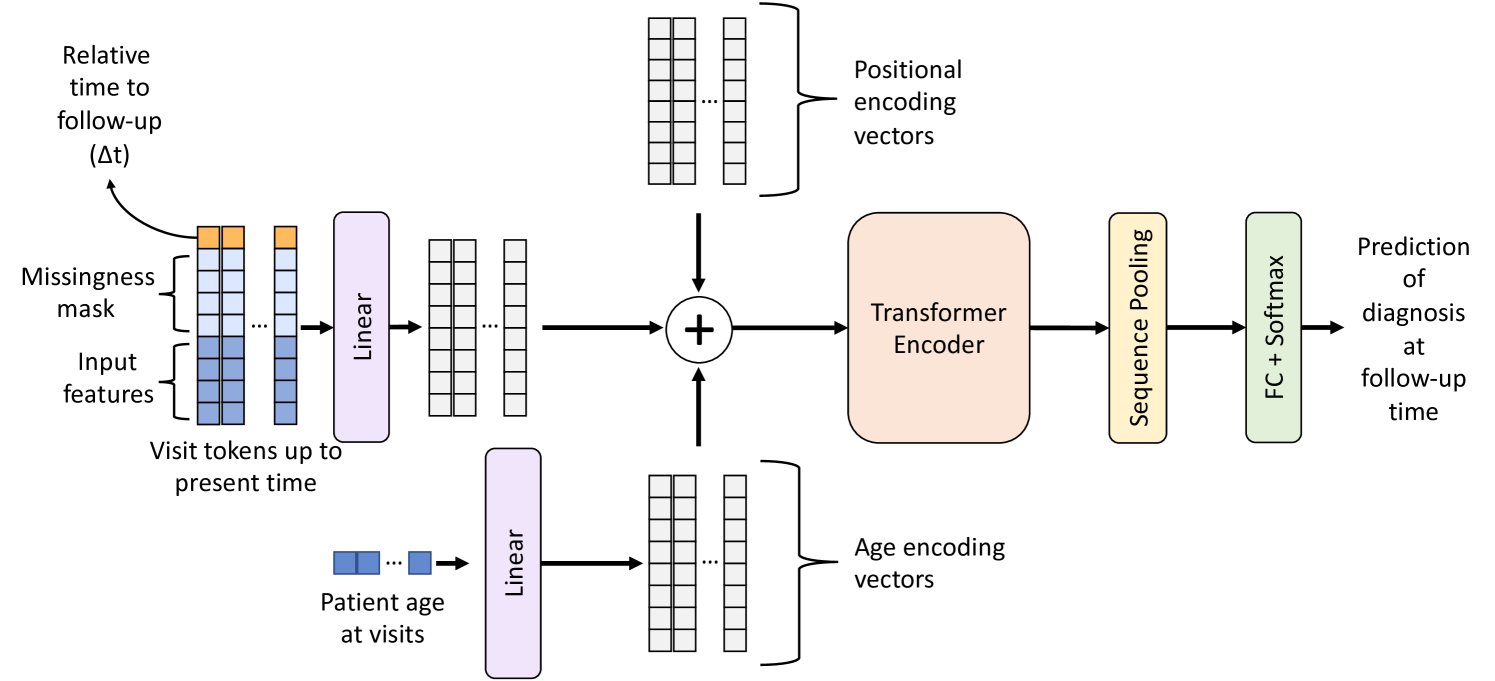

We focus on predicting an individual’s future diagnostic status, categorizing it into one of three classes: CN, MCI, or AD. This prediction is based on data obtained from preceding annual medical visits. The chronological nature of these visits forms the basis of our sequential input, with the goal of generating a corresponding classification prediction. To achieve this, we implement a transformer-based sequence-to-class neural network, LongForMAD, wherein each medical visit is represented as a distinct input token. The detailed architecture of LongForMAD is illustrated in Fig. 1.

Our model enhances each visit token by appending the prediction time horizon (t in Figure 1), defined as the interval between the visit’s data collection and the intended future prediction time point. Following [11], this increases the input token length to 114. This adaptation allows our model to make predictions over various future time horizons without the need to train distinct networks for each horizon. Instead, a singular, comprehensive network is trained across the entire dataset, covering all labels from subsequent follow-up years. In terms of network architecture, we employ a transformer encoder layer, a sequence pooling layer, and a fully connected classifier. Notably, our network integrates a standard learnable position encoding layer (initialized with 0 vectors) and a parameterized linear age encoding layer, as proposed by [16].

Following the addition of positional and age encodings to the input visit tokens, which are projected to the same latent space by a linear layer, the transformer encoder processes the input utilizing self-attention. This process enables the efficient identification and extraction of pertinent features from each token by analyzing the patient’s AD longitudinal data comprehensively. The sequence pooling layer, which applies average pooling along the token axis, then consolidates the information gathered from the entire longitudinal data of the patient. The aggregated feature set is subsequently passed through the fully connected classifier with softmax activation, which computes a diagnosis probability distribution, which represents the probabilities of CN, MCI, and AD diagnoses at the predicted future time point. Our network incorporates element-wise rectified linear units (ReLUs) as nonlinear activation functions between layers.

2.4 Training

Transformers, despite their effectiveness in various tasks, are notably data intensive and prone to overfitting due to their extensive use of self-attention mechanisms, which require substantial data to learn effectively. We have designed a training strategy incorporating several techniques to mitigate overfitting and enhance model performance.

To begin, we expand our training and validation datasets. We consider every visit with a diagnosis of CN or MCI within the training data as a present-time reference point, denoted as present point. Visits with a confirmed AD diagnosis are not designated as present point in our process, as projecting their future is beyond the purview of this study. For each visit designated as present point, we compile the patient’s historical data, which may extend to a maximum of three years prior. Consequently, the longest sequence of longitudinal data used for training encompasses four visits: the visit occurring three years before present point, the visit occurring two years before present point, the visit one year before present point, and the visit at the point of present point. Subsequently, we record all subsequent follow-up diagnoses occurring in the five years succeeding present point. By constructing these 9-year progression trajectories, we effectively triple the volume of training and validation samples, diversifying the dataset. To prevent information leakage, we ensure that all 9-year progression trajectories originating from the same subject are exclusively allocated to one of the splits: either training, validation, or test set.

We leverage the inherent capability of transformer encoders to process input sequences of variable lengths, utilizing this feature to perform data augmentation on longitudinal history records. We perform the augmentation by omitting randomly selected past visits from the training data in each training epoch. Such a technique prompts the model to generalize its learning across the full spectrum of a patient’s longitudinal data, rather than narrowly adapting to particular visits, thereby reducing the potential for overfitting.

Table 2 reveals two imbalances: the changing class label distribution over time and the decreasing availability of clinical labels due to dropouts. The fluctuation in the number of stable CN baseline individuals across different years can be linked to varying monitoring policies in the ADNI study phases. To address these imbalances, we employ a loss re-weighting scheme during training, similar to [11], which assigns higher penalties to misclassifications in minority classes by using sample-level weights. We categorize participants into four groups for each follow-up year (CN baseline non-converters, CN baseline converters, MCI baseline non-converters, and MCI baseline converters) and adjust the weights to ensure balanced representation across all years. Without this mitigation, the model might prioritize predicting follow-up years and patient groups with the highest number of samples, neglecting the minorities. The weights for each sample point are calculated based on the expanded datasets.

We calculate a single training loss using the sample points generated by our augmentation strategy. However, for validation, we evaluate the validation loss across every possible scenario regarding the presence or absence of a visit in a history year, which amounts to different scenarios. These scenarios include cases such as whether the visit at present point occurred, whether there was a visit 1 year before present point, and so forth. To generate these scenarios, we simply exclude the relevant visits from the history of the validation subjects. We then compute the average of these loss values and use this average as the criterion for early stopping.

2.5 Evaluation

In evaluation, our primary goal is to assess the influence of the presence of patient longitudinal history on the predictions made by our model. It is important to highlight that our transformer-based network is designed to handle cases where a patient’s longitudinal history is not fully complete, meaning it does not necessarily include all past visits before present point. This enables the model to generate predictions even with partial or no historical data. We perform the analysis across various longitudinal data modality cases present in the input. Our model is capable of making predictions with missing features in the visits, as discussed in Section 2.2. To create those “cases", we synthetically replace unwanted features with mean/mode values derived from the training set and flip the corresponding entries in the missingness mask. We calculate our performance scores in two patient groups (CN at present point and MCI at present point) and every follow-up year separately. Therefore, each score analysis we conduct is bifurcated, one corresponding to the CN-to-MCI conversion task and the other to the MCI-to-AD conversion task.

ADNI, like many real-world longitudinal studies, is subject to biases in subject recruitment and follow-up. A notable issue is “temporal bias" [22], arising from the non-uniform distribution of visits across disease stages. Employing an inference strategy that mitigates temporal bias is crucial when evaluating model performance. To compute the performance scores for a particular patient group, follow-up year, longitudinal data modality case, and longitudinal history scenario, the process begins by randomly selecting a single present point for each test subject. This selection is made by choosing from all available instances of present points, with each selected instance ensuring that the subject belongs to the specific patient group of interest (CN at present point or MCI at present point). Furthermore, each chosen instance should be linked with a diagnosis in the designated follow-up year. It is important to highlight that within a pseudo test set, each subject contributes a single follow-up diagnosis to be predicted from a certain historical instance. Thus, all sample points in a pseudo test set are independent. Then, we obtain predictions by utilizing all relevant input features and longitudinal history information implied by the longitudinal data modality case and history scenario. We record the performance scores and repeat this operation for multiple random pseudo test sets. In the final stage, we calculate the average of the performance score values obtained from those pseudo test sets. This average represents the final comprehensive score for that specific patient group, follow-up year, and longitudinal history scenario pair. The purpose of repeating the operation for multiple pseudo test sets is to broaden the range of disease progression trajectories we use in our evaluation. By doing so, we aim to mitigate the potential effects of temporal bias on our results. We note that since our dataset expansion strategy utilizes every possible visit as present point during training, as discussed in Section 2.4, it not only expands the dataset size but also addresses temporal bias.

3 Experiments

3.1 Experimental Details

We employed a randomized, diagnosis-stratified approach to divide the data into training and testing sets with an 80-20 ratio. This division process was replicated 200 times, with the results presented herein being the average outcomes of these iterations. Within each split, we conducted a 5-fold cross-validation on the training set, and the validation loss was used to determine early stopping for model training. For each fold, five models were trained with different random seeds. The final predictions for each test scenario were obtained by averaging the outputs of 25 models, combining 5 cross-validation folds and 5 unique initializations. We used Adam optimizer [12] for training. To tune the architecture of LongForMAD, we employ a grid search strategy across each of the train/test splits. The optimal architecture for each test set is determined based on the hyperparameter values that achieve the highest performance on a corresponding validation set. The details of fixed hyperparameters, along with the optimization grid used for the adjustable hyperparameters, are documented on our github repository. For the calculation of performance scores, we utilize 100 random pseudo test sets. Due to the unbalanced follow-up diagnoses in Table 2, we primarily use the area under the receiver operating characteristic curve (AUROC) to evaluate our model. Our analyses show nearly identical ROC curves for both one-versus-one and one-versus-rest approaches, indicating a low likelihood of CN subjects being predicted as AD and MCI subjects as CN. Therefore, we report the one-versus-rest analysis results, considering MCI as the positive class for CN-to-MCI conversions and AD for MCI-to-AD conversions.

3.2 Results

A comparison of LongForMAD’s performance with other existing longitudinal models in the literature for MCI-to-AD conversion in the third follow-up year is presented in Table 4. It is important to note that our comparison is limited to this specific patient group and follow-up year because the models against which we can benchmark our performance are primarily focused on this particular scenario. We also emphasize that this comparison is made primarily to demonstrate that our modeling approach is sufficiently robust, justifying the analysis of its predictions.

| Longitudinal | Sequential | 3-year | |

| data | network | MCI-to-AD | |

| Model | modality | architecture | AUROC |

| [5] | MRI | RNN | 73.03 |

| [14] | MRI | - | 74.60 |

| [1] | MRI | - | 77.97 |

| [1] | MRI | - | 79.26 |

| [8] | MRI | Transformer | 81.53 |

| LongForMAD (Ours) | MRI | Transformer | 78.65 |

| LongForMAD (Ours) | MRI | Transformer | 92.03 |

| COGN |

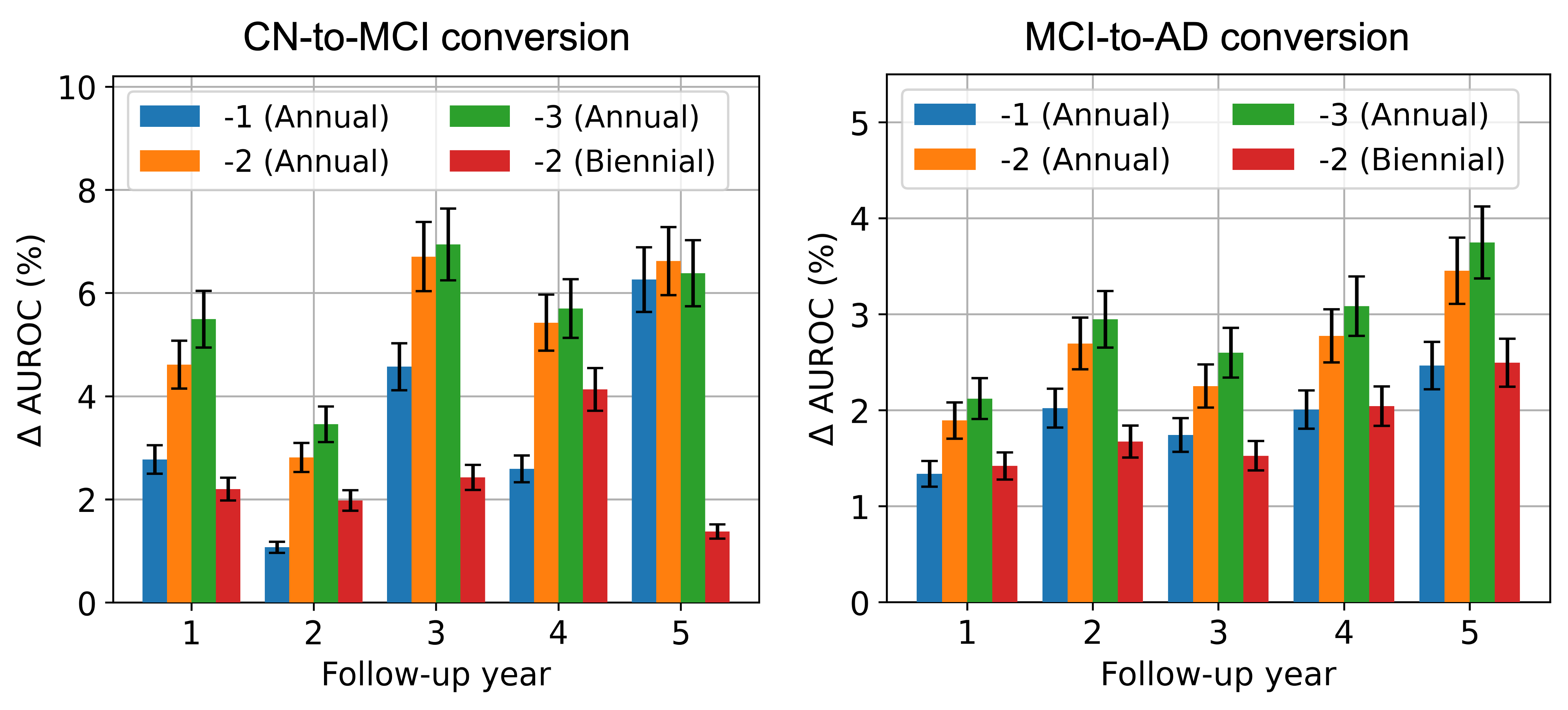

We assess the impact of patient longitudinal history availability in two ways: We compare the performance of our model when patient history information is available starting from years -3, -2, -1, and 0 to investigate the impact of longitudinal history duration, year 0 being the present point. Then, by keeping the history duration constant at 2 years (i.e., using history starting from year -2), we analyze the effect of longitudinal history data collection frequency. We compare the model’s performance when data is collected annually (involving years -2, -1, and 0) versus biennially (involving years -2 and 0). We note that in the analysis of history duration, we maintain a constant data collection frequency of 1 visit per year.

As detailed in Section 2.2, the modalities that present a longitudinal history are cognitive test scores and MRI biomarkers. We assess the importance of patient longitudinal history across two time-varying (i.e., longitudinal) data modality “cases.” In the first case, referred as “MRI-only", we synthetically induce missingness in cognitive test scores for all patients, leaving MRI biomarkers as the sole time-varying modality, to assess the significance of historical MRI biomarkers. Then, for a comprehensive assessment of the impact of longitudinal history, we incorporate all available input features in the second scenario, referred as “complete-set" case.

3.2.1 Impact of longitudinal history duration

3.2.2 MRI-only case

Figure 2 shows the change in AUCROC (AUROC) scores obtained with the addition of historical MRI data against the scenario where no longitudinal history is available. In Figure 2, incorporating longitudinal MRIs consistently improves prediction performance for CN-to-MCI conversion across all follow-up years. The largest improvement is observed in the third year of follow-up. We note that the benefits of a 3-year annual history appear to diminish slightly compared to a 2-year annual history. In fact, incorporating the visit data from year -3 results in a decrease in performance in follow-up year 5. This suggests that including patient history over longer durations might lead to overfitting, likely due to the inclusion of time points that offer no predictive value. For MCI-to-AD conversion, the addition of prior MRIs again leads to an increase in AUROC scores. Extending the history duration yields a diminishing return in performance improvement, similar to the CN-to-MCI conversion; however, a decrease in performance is not observed. Moreover, unlike CN-to-MCI conversion, the value of historical MRIs for MCI-to-AD conversion appears to increase almost monotonically as the prediction time horizon extends. This suggests that the earliest changes leading to AD are prominently reflected in longitudinal MRIs.

3.2.3 Complete-set case

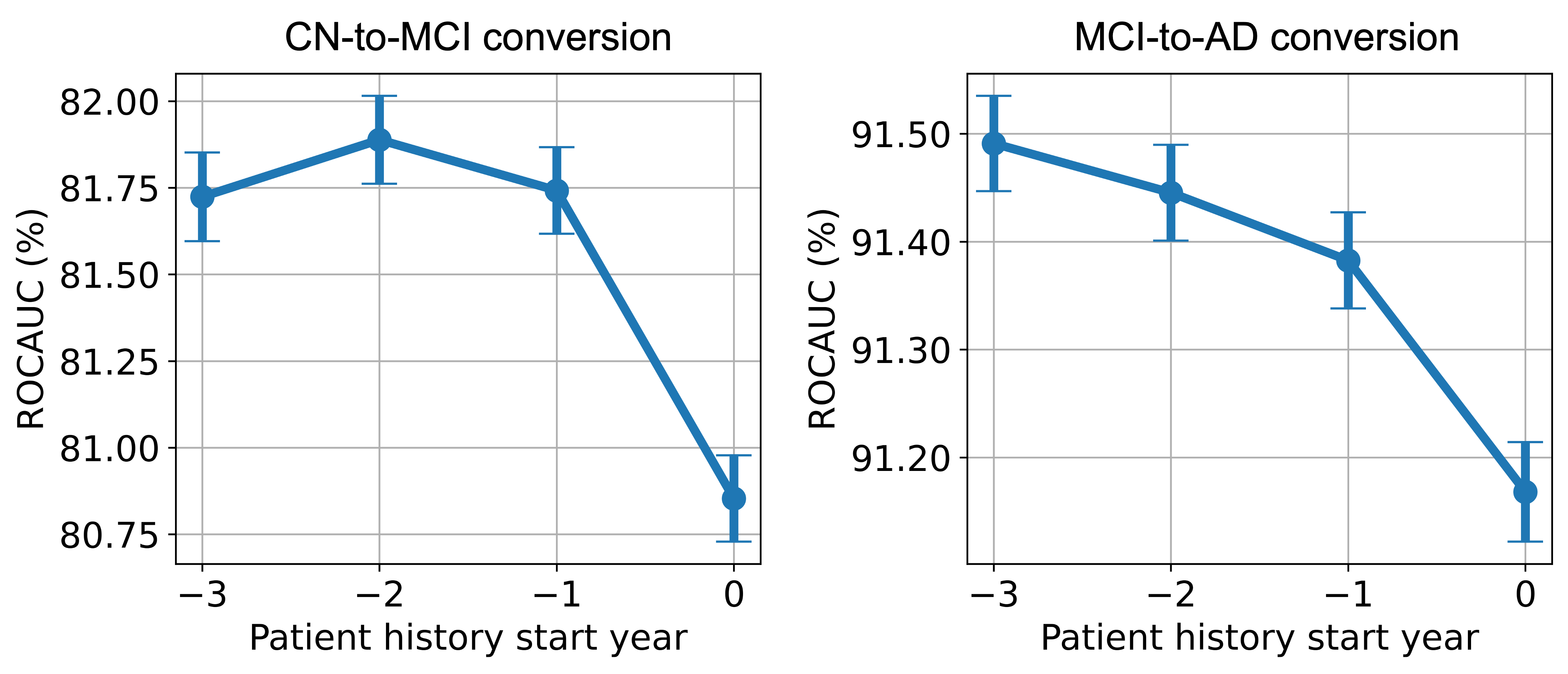

In the complete-set, i.e., when both longitudinal MRI and cognitive variable sets are included, we observe similar patterns in the AUROC gains of the different follow-up years. Hence, we only show the average AUROC score (obtained as the mean over the five follow-up years) in Figure 3.

In Figure 3, we observe that for CN-to-MCI conversion, the inclusion of a full set of data modalities from the patient’s history does indeed enhance the model’s performance. However, the magnitude of improvement is notably smaller compared to the MRI-only case in Figure 2. This indicates the cognitive assessments are highly predictive for AD forecasting, and having access to such data at the present point alone brings the model’s prediction performance close to its potential maximum. Compared to the MRI-only scenario, diminishing returns are more evident when historical data is extended from 1 to 3 years. The inclusion of data from year -3 results in reduced model performance averaged over all follow-up years, unlike the performance drop which occurred in only the fifth follow-up year in MRI-only case. This reduction in AUROC scores with broader history use implies that older data may be less relevant, possibly clouding the more recent, indicative information.

The MCI-to-AD conversion panel of Figure 3 shows a significantly smaller increase in performance with the inclusion of history compared to the MRI-only case in Figure 2, highlighting the predictive strength of cognitive test scores. Consistent with our earlier MRI-only findings, we do not observe a decrease in performance within the 3-year history window.

3.3 Impact of data collection frequency

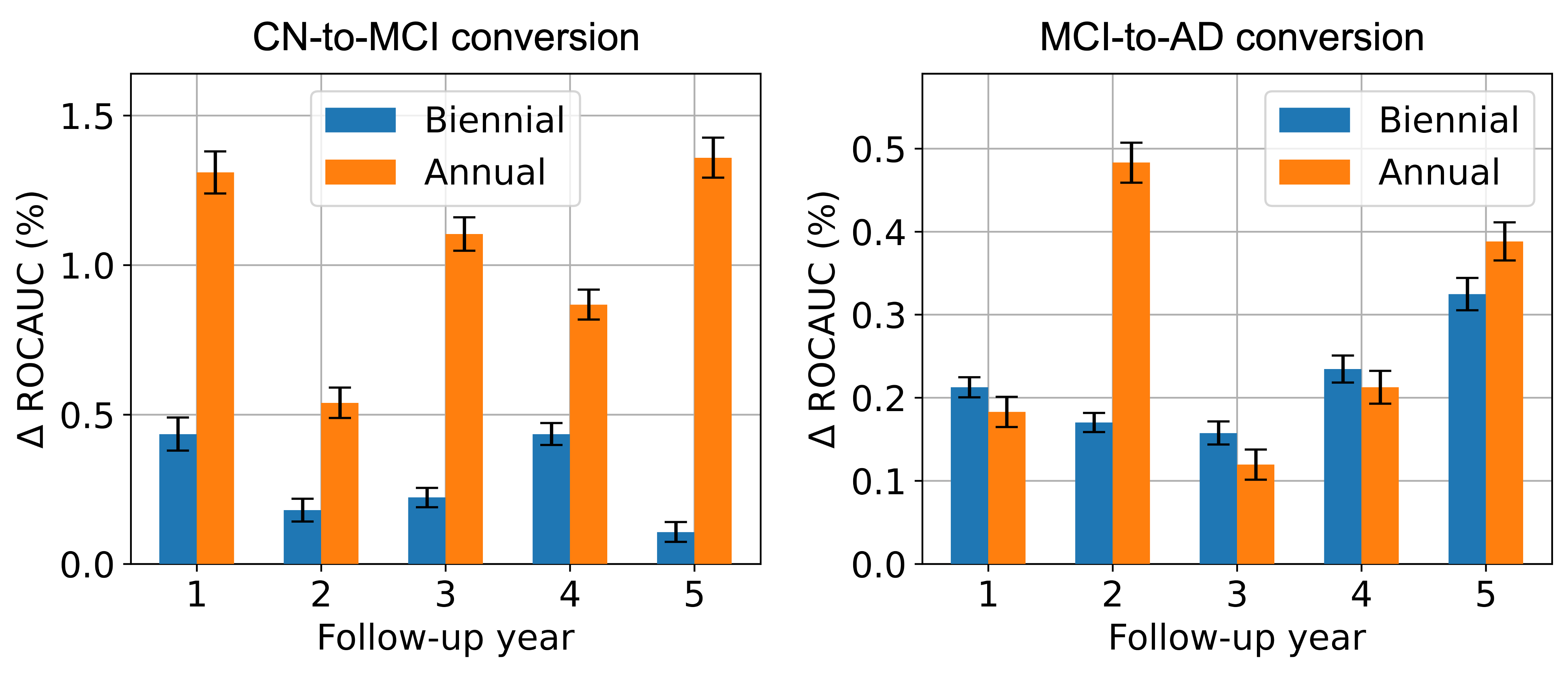

We examine the effect of longitudinal history data collection frequency by maintaining a constant history duration of two years and comparing AUROC scores between scenarios of annual and biennial data collection against the scenario with no patient history. For the MRI-only case depicted in Figure 2, it’s evident that annual data collection offers a significantly greater improvement in predictive performance compared to biennial collection for both patient groups. Additionally, it is worth noting that the gain in performance with annual data collection is smaller in MCI-to-AD conversion when compared to CN-to-MCI conversion within the MRI-only case.

Figure 4 illustrates a similar comparison for the complete-set case. For the CN-to-MCI conversion, the trends are nearly identical to those observed in the MRI-only case, albeit with a smaller overall increase in performance. For the MCI-to-AD conversion, the differences between annual and biennial data collection frequencies are less stark, yet on average, annual data collection shows a slight advantage, particularly due to a notable improvement in the second follow-up year. The results demonstrate that both CN and MCI patient groups benefit from higher frequencies of historical data collection, with the CN group showing a more pronounced and consistent improvement in AUROC in both data modality cases. This again highlights that more sophisticated modeling, such as including longitudinal history, offers a larger boost for the harder CN-to-MCI conversion prediction task, as stated in Section 3.2.1.

4 Conclusion

In this work, we presented a comprehensive analysis of AD forecasting with the use of patient’s longitudinal clinical data and biomarker histories. The results we obtained with LongForMAD reveal a significant benefit from incorporating more extensive longitudinal history, particularly for those with CN, who demonstrated a more substantial improvement in predictive performance. The observed trends from multiple data modalities encourage further investigation into personalized monitoring and intervention strategies based on imaging methods. In the future, incorporating image encoders into our model would be valuable for extracting more nuanced features from MRIs. Additionally, visualizing the changes in the localization of these encoders with respect to the changes in longitudinal history presence could provide valuable insights into disease progression.

References

- [1] Altay, F., Sanchez, G.R., James, Y., Faraone, S.V., Velipasalar, S., Salekin, A.: Preclinical stage alzheimer’s disease detection using magnetic resonance image scans. arXiv (Cornell University) (11 2020). https://doi.org/10.48550/arxiv.2011.14139

- [2] Campos, S., Pizarro, L., Valle, C., Gray, K.R., Rueckert, D., Allende, H.: Evaluating imputation techniques for missing data in adni: A patient classification study. In: Pardo, A., Kittler, J. (eds.) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. pp. 3–10. Springer International Publishing, Cham (2015)

- [3] Chen, Q., Hong, Y.: Longformer: Longitudinal transformer for alzheimer’s disease classification with structural mris (12 2023). https://doi.org/10.48550/arXiv.2302.00901, https://arxiv.org/abs/2302.00901

- [4] Chen, Y., Denny, K.G., Harvey, D., Farias, S.T., Mungas, D., DeCarli, C., Beckett, L.: Progression from normal cognition to mild cognitive impairment in a diverse clinic-based and community-based elderly cohort. Alzheimer’s & Dementia 13, 399–405 (04 2017). https://doi.org/10.1016/j.jalz.2016.07.151

- [5] Cui, R., Liu, M.: Rnn-based longitudinal analysis for diagnosis of alzheimer’s disease. Computerized Medical Imaging and Graphics 73, 1–10 (04 2019). https://doi.org/10.1016/j.compmedimag.2019.01.005

- [6] Fischl, B., van der Kouwe, A., Destrieux, C., Halgren, E., Ségonne, F., Salat, D.H., Busa, E., Seidman, L.J., Goldstein, J., Kennedy, D., Caviness, V., Makris, N., Rosen, B., Dale, A.M.: Automatically Parcellating the Human Cerebral Cortex. Cerebral Cortex 14(1), 11–22 (2004). https://doi.org/10.1093/cercor/bhg087, http://cercor.oxfordjournals.org/content/14/1/11.abstract

- [7] Fouladvand, S., Noshad, M., Periyakoil, V.J., Chen, J.H.: Machine learning prediction of mild cognitive impairment and its progression to alzheimer’s disease. Health science reports 6 (10 2023). https://doi.org/10.1002/hsr2.1438

- [8] Hu, Z., Wang, Z., Jin, Y., Hou, W.: Vgg-tswinformer: Transformer-based deep learning model for early alzheimer’s disease prediction 229, 107291–107291 (02 2023). https://doi.org/10.1016/j.cmpb.2022.107291

- [9] Jack, C.R., Barnes, J., Bernstein, M.A., Borowski, B.J., Brewer, J., Clegg, S., Dale, A.M., Carmichael, O., Ching, C., DeCarli, C., Desikan, R.S., Fennema-Notestine, C., Fjell, A.M., Fletcher, E., Fox, N.C., Gunter, J., Gutman, B.A., Holland, D., Hua, X., Insel, P., Kantarci, K., Killiany, R.J., Krueger, G., Leung, K.K., Mackin, S., Maillard, P., Malone, I.B., Mattsson, N., McEvoy, L., Modat, M., Mueller, S., Nosheny, R., Ourselin, S., Schuff, N., Senjem, M.L., Simonson, A., Thompson, P.M., Rettmann, D., Vemuri, P., Walhovd, K., Zhao, Y., Zuk, S., Weiner, M.: Magnetic resonance imaging in alzheimer’s disease neuroimaging initiative 2. Alzheimer’s & Dementia 11, 740–756 (07 2015). https://doi.org/10.1016/j.jalz.2015.05.002, https://www.sciencedirect.com/science/article/pii/S1552526015001685

- [10] Jarrett, D., Yoon, J., van der Schaar, M.: Dynamic prediction in clinical survival analysis using temporal convolutional networks. IEEE Journal of Biomedical and Health Informatics 24, 424–436 (02 2020). https://doi.org/10.1109/jbhi.2019.2929264

- [11] Karaman, B.K., Mormino, E.C., Sabuncu, M.R.: Machine learning based multi-modal prediction of future decline toward alzheimer’s disease: An empirical study. PLOS ONE 17, e0277322 (11 2022). https://doi.org/10.1371/journal.pone.0277322

- [12] Kingma, D., Lei Ba, J.: Adam: A method for stochastic optimization (01 2017), https://arxiv.org/pdf/1412.6980.pdf

- [13] Lee, H., Kim, J., Park, E., Kim, M., Kim, T., Kooi, T.: Enhancing breast cancer risk prediction by incorporating prior images. Lecture Notes in Computer Science pp. 389–398 (01 2023). https://doi.org/10.1007/978-3-031-43904-9_38

- [14] Li, F., Liu, M.: A hybrid convolutional and recurrent neural network for hippocampus analysis in alzheimer’s disease. Journal of Neuroscience Methods 323, 108–118 (07 2019). https://doi.org/10.1016/j.jneumeth.2019.05.006

- [15] Li, Y., Wang, Y., Wu, G., Shi, F., Zhou, L., Lin, W., Shen, D.: Discriminant analysis of longitudinal cortical thickness changes in alzheimer’s disease using dynamic and network features. Neurobiology of Aging 33, 427.e15–427.e30 (02 2012). https://doi.org/10.1016/j.neurobiolaging.2010.11.008

- [16] Li, Y., Rao, S., Solares, J.R.A., Hassaine, A., Ramakrishnan, R., Canoy, D., Zhu, Y., Rahimi, K., Salimi-Khorshidi, G.: Behrt: Transformer for electronic health records. Scientific Reports 10 (04 2020). https://doi.org/10.1038/s41598-020-62922-y

- [17] Adni | study documents, https://adni.loni.usc.edu/methods/documents/

- [18] Mueller, S.G., Weiner, M.W., Thal, L.J., Petersen, R.C., Jack, C.R., Jagust, W., Trojanowski, J.Q., Toga, A.W., Beckett, L.: Ways toward an early diagnosis in alzheimer’s disease: The alzheimer’s disease neuroimaging initiative (adni). Alzheimer’s & Dementia 1, 55–66 (07 2005). https://doi.org/10.1016/j.jalz.2005.06.003

- [19] Shen, Y., Park, J., Yeung, F., Goldberg, E., Heacock, L., Org, L., Geras, K.: Leveraging transformers to improve breast cancer classification and risk assessment with multi-modal and longitudinal data (11 2023), https://arxiv.org/pdf/2311.03217.pdf

- [20] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, L., Polosukhin, I.: Attention is all you need (06 2017), https://arxiv.org/abs/1706.03762

- [21] Wang, X., Tan, T., Gao, Y., Su, R., Zhang, T., Han, L., Teuwen, J., D’Angelo, A., Drukker, C.A., Schmidt, M.K., Beets-Tan, R., Karssemeijer, N., Mann, R.: Predicting up to 10 year breast cancer risk using longitudinal mammographic screening history. medRxiv (2023). https://doi.org/10.1101/2023.06.28.23291994, https://www.medrxiv.org/content/early/2023/06/29/2023.06.28.23291994

- [22] Yuan, W., Beaulieu-Jones, B.K., Yu, K.H., Lipnick, S.L., Palmer, N., Loscalzo, J., Cai, T., Kohane, I.S.: Temporal bias in case-control design: preventing reliable predictions of the future. Nature Communications 12 (02 2021). https://doi.org/10.1038/s41467-021-21390-2

- [23] Zhang, D., Shen, D.: Predicting future clinical changes of mci patients using longitudinal and multimodal biomarkers. PLoS ONE 7, e33182 (03 2012). https://doi.org/10.1371/journal.pone.0033182