Asymptotics of Network Embeddings Learned via Subsampling

Abstract

Network data are ubiquitous in modern machine learning, with tasks of interest including node classification, node clustering and link prediction. A frequent approach begins by learning an Euclidean embedding of the network, to which algorithms developed for vector-valued data are applied. For large networks, embeddings are learned using stochastic gradient methods where the sub-sampling scheme can be freely chosen. Despite the strong empirical performance of such methods, they are not well understood theoretically. Our work encapsulates representation methods using a subsampling approach, such as node2vec, into a single unifying framework. We prove, under the assumption that the graph is exchangeable, that the distribution of the learned embedding vectors asymptotically decouples. Moreover, we characterize the asymptotic distribution and provided rates of convergence, in terms of the latent parameters, which includes the choice of loss function and the embedding dimension. This provides a theoretical foundation to understand what the embedding vectors represent and how well these methods perform on downstream tasks. Notably, we observe that typically used loss functions may lead to shortcomings, such as a lack of Fisher consistency.

Keywords: networks, embeddings, representation learning, graphons, subsampling

1 Introduction

Network data are commonplace in modern-day data analysis tasks. Some examples of network data include social networks detailing interactions between users, citation and knowledge networks between academic papers, and protein-protein interaction networks, where the presence of an edge indicates that two proteins in a common cell interact with each other. With such data, there are several types of tasks we may be interested in. Within a citation network, we can classify different papers as belonging to particular subfields (a community detection task; e.g Fortunato, 2010; Fortunato and Hric, 2016). In protein-protein interaction networks, it is too costly to examine whether every protein pair will interact together (Qi et al., 2006), and so given a partially observed network we are interested in predicting the values of the unobserved edges. As users join a social network, they are recommended individuals who they could interact with (Hasan and Zaki, 2011).

A highly successful approach to solve network prediction tasks is to first learn an embedding or latent representation of the network into some manifold, usually a Euclidean space. A classical way of doing so is to perform principal component analysis or dimension reduction on the Laplacian of the adjacency matrix of the network (Belkin and Niyogi, 2003). This originates from spectral clustering methods (Pothen et al., 1990; Shi and Malik, 2000; Ng et al., 2001), where a clustering algorithm is applied to the matrix formed with the eigenvectors corresponding to the top -eigenvalues of a Laplacian matrix. One shortcoming is that for large data sets, computing the SVD of a large matrix to obtain the eigenvectors becomes increasingly computationally restrictive. Approaches which scale better for larger data sets originate from natural language processing (NLP). DeepWalk (Perozzi et al., 2014) and node2vec (Grover and Leskovec, 2016) are both network embedding methods which apply embedding methods designed for NLP, by treating various types of random walks on a graph as “sentences”, with nodes as “words” within a vocabulary. We refer to Hamilton et al. (2017b) and Cai et al. (2018) for comprehensive overviews of algorithms for creating network embeddings. See Agrawal et al. (2021) for a discussion on how such embedding methods are related to other classical methods such as multidimensional scaling, and embedding methods for other data types.

To obtain an embedding of the network, each node or vertex of the network (say ) is represented by a single -dimensional vector , which are learned by minimizing a loss function between features of the network and the collection of embedding vectors. There are several benefits to this approach. As the learned embeddings capture latent information of each node through a Euclidean vector, we can use traditional machine learning methods (such as logistic regression) to perform a downstream task. The fact that the embeddings lie within a Euclidean space also means that they are amenable to (stochastic) gradient based optimization. One important point is that, unlike in an i.i.d setting where subsamples are essentially always obtained via sampling uniformly at random, here there is substantial freedom in the way in which subsampling is performed. Veitch et al. (2018) shows that this choice has a significant influence in downstream task performance.

Despite their applied success, our current theoretical understanding of methods such as node2vec are lacking. We currently lack quantitative descriptions of what the embedding vectors represent and the information they contain, which has implications for whether the learned embeddings can be useful for downstream tasks. We also do not have quantitative descriptions for how the choice of subsampling scheme affects learned representations. The contributions of our paper in addressing this are threefold:

-

a)

Under the assumption that the observed network arises from an exchangeable graph, we describe the limiting distribution of the embeddings learned via procedures which depend on minimizing losses formed over random subsamples of a network, such as node2vec (Grover and Leskovec, 2016). The limiting distribution depends both on the underlying model of the graph and the choice of subsampling scheme, and we describe it explicitly for common choices of subsampling schemes, such as uniform edge sampling (Tang et al., 2015) or random-walk samplers (Perozzi et al., 2014; Grover and Leskovec, 2016).

-

b)

Embedding methods are frequently learned via minimizing losses which depend on the embedding vectors only through their pairwise inner products. We show that this restricts the class of networks for which an informative embedding can be learned, and that networks generated from distinct probabilistic models can have embeddings which are asymptotically indistinguishable. We also show that this can be fixed by changing the loss to use an indefinite or Krein inner product between the embedding vectors. We illustrate on real data that doing so can lead to improved performance in downstream tasks.

-

c)

We show that for sampling schemes based upon performing random walks on the graph, the learned embeddings are scale-invariant in the following sense. Suppose that we have two identical copies of a network generated from a sparsified exchangeable graph, and on one we delete each edge with probability . Then in the limit as the number of vertices increases to infinity, the asymptotic distributions of the embedding vectors trained on the two networks will be asymptotically distinguishable. We highlight that this may provide some explanation as to the desirability of using random walk based methods for learning embeddings of sparse networks.

1.1 Motivation

We note that several approaches to learn network embeddings (Perozzi et al., 2014; Tang et al., 2015; Grover and Leskovec, 2016) do so by performing stochastic gradient updates of the embedding vectors by updates

| (1) |

Here is the sigmoid function, the sets and are pairs of nodes which are chosen randomly at each iteration (referred to as positive and negative samples respectively) and is a step size. The goal of the objective is to force pairs of vertices within to be close in the embedding space, and those within to be far apart. At the most basic level, we could just have that consists of edges within the graph and non-edges, so that vertices which are disconnected from each other are further apart in the embedding space than those which are connected. In a scheme such as node2vec, arises through a random walk on the network, and arises by choosing vertices according to a unigram negative sampling distribution for each vertex in the random walk .

For simplicity, assume that the only information available for training is a fully observed adjacency matrix of a network of size . Moreover, we let and be random sets which consist only of pairs of vertices which are connected () and not connected () respectively. In this case, if we write , then the algorithm scheme described in (1) arises from trying to minimize the empirical risk function (which depends on the underlying graph )

| (2) |

with a stochastic optimization scheme (Robbins and Monro, 1951), where we write for the cross entropy loss.

This means that the optimization scheme in (1) attempts to find a minimizer of the function defined in (2). We ask several questions about these minimizers where there is currently little understanding:

-

Q1:

To what extent is there a unique minimizer to the empirical risk (2)?

-

Q2:

Does the distribution of the learnt embedding vectors change as a result of changing the underlying sampling scheme? If so, can we describe quantitatively how?

-

Q3:

During learning of the embedding vectors, are we using a loss which limits the information we can capture in a learned representation? If so, can we fix this in some way?

Answering these questions allow us to evaluate the impact of various heuristic choices made in the design of algorithms such as node2vec, where our results will allow us to describe the impact with respect to downstream tasks such as edge prediction. We go into more depth into these questions below, and discuss in Section 1.5 how our main results help address these questions.

1.1.1 Uniqueness of minimizers of the empirical risk

We highlight that the loss and risk functions in (1) and (2) are invariant under any joint transformation of the embedding vectors by an orthogonal matrix . As a result, we can at most ask whether the gram matrix induced by the embedding vectors is uniquely characterized. This is challenging as the embedding dimension is significantly less than the number of vertices - even for networks involving millions of nodes, the embedding dimensions used by practitioners are of the order of magnitude of hundreds. As a result the gram matrix is rank constrained. Consequently, when reformulating (2) to optimize over the matrix , the optimization domain is non-convex, meaning answering this question is non-trivial. Answering this allows us to understand whether the embedding dimension fundamentally influences the representation we are learning, or instead only influences how accurately we can learn such a representation.

1.1.2 Dependence of embeddings on the sampling scheme choice in learning

While we know that random-walk schemes such as node2vec are empirically successful, there has been little discussion as to how the representation learnt by such schemes compares to (for example) schemes where we sample vertices randomly and look at the induced subgraph. This is useful for understanding their performance on downstream tasks such as community detection or link prediction. Another useful example is for when embeddings are used for causal inference (Veitch et al., 2019), where there is the needed to validate assumptions that the embeddings containing information relevant to the prediction of propensity scores and expected outcomes. A final example arises in methods which try and attempt to “de-bias” embeddings through the use of adaptive sampling schemes (Rahman et al., 2019), to understand what extent they satisfy different fairness criteria.

We are also interested in understanding how the hyperparameters of a sampling scheme affect the expected value and variance of gradient estimates when performing stochastic gradient descent. The distinction is important, as the expected value influences the empirical risk being minimized - therefore the underlying representation - and the variance the speed at which an optimization algorithm converges (Dekel et al., 2012). When using stochastic gradient descent in an i.i.d data setting, the mini-batch size does not effect the expected value of the gradient estimate given the observed data, but only its variance, which decreases as the mini-batch size increases. However, for a scheme like node2vec, it is not clear whether hyperparameters such as the random walk length, or the unigram parameter affect the expectation or variance of the gradient estimates (conditional on the graph ).

1.1.3 Information-limiting loss functions

One important property of representations which make them useful for downstream tasks are their ability to differentiate between different graph structures. One way to examine this is to consider different probabilistic models for a network, and to then examine whether the resulting embeddings are distinguishable from each other. If they are not, then this suggests some information about the network has been lost in learning the representation. By examining the range of distributions which have the same learned representation, we can understand this information loss and the effect on downstream task performance.

1.2 Overview of results

1.2.1 Embedding methods implicitly fit graphon models

We highlight that the loss in (2) is the same as the loss obtained by maximizing the log-likelihood formed by a probabilistic model for the network of the form

| (3) |

using stochastic gradient ascent. Here is a closed set corresponding to a constrained set for the embedding vectors. In the limit as the number of vertices increases to infinity, such a model corresponds to an exchangeable graph (Lovász, 2012), as the infinite adjacency matrices are invariant to a permutation of the labels of the vertices.

In an exchangeable graph, each vertex has a latent feature , with edges arising independently with for a function called a graphon; see Lovász (2012) for an overview. Such models can be thought of as generalizations of a stochastic block model (Holland et al., 1983), which have a correspondence to when the function is a piecewise constant function on sets for some partition of , with the partitions acting as the different communities within the SBM. If is the size of , and we write for the value of on , this is equivalent to the usual presentation of a stochastic block model

| (4) |

where is the community label of vertex . One can also consider sparsified exchangeable graphs, where for a graph on vertices, edges are generated with probability for a graphon and a sparsity factor as . This accounts for the fact that most real world graphs are not “dense” and do not have the number of edges scaling as ; in a sparsified graphon, the number of edges now scales as .

For the purposes of theoretical analysis, we look at the minimizers of (2) when the network arises as a finite sample observation from a sparsified exchangeable graph whose graphon is sufficiently regular. We then examine statistically the behavior of the minimizers as the number of vertices grows towards infinity. As embedding methods are frequently used on very large networks, a large sample statistical analysis is well suited for this task. One important observation is that even when the observed data is from a sparse graph, embedding methods which fall under (3) are implicitly fitting a dense model to the data. As we know empirically that embedding methods such as node2vec produce useful representations in sparse settings, we introduce the sparsity to allow some insight as to how this can occur.

1.2.2 Types of results obtained

We now discuss our main results, with a general overview followed by explicit examples. In Theorems 10 and 19, we show that under regularity assumptions on the graphon, in the limit as the number of vertices increases to infinity, we have for any sequence of minimizers to that

| (5) |

for a function we determine, and rate . Both and depend on the graphon and the choice of sampling scheme. The rate also depends on the embedding dimension ; we note that our results may sometimes require as in order for , but will always do so sub-linearly with . As a result (5) allows us to guarantee that on average, the inner products between embedding vectors contain some information about the underlying structure of the graph, as parameterized through the graphon function . One notable application of this type of result is that it allows us to give guarantees for the asymptotic risk on edge prediction tasks, when using the values as scores to threshold for whether there is the presence of an edge in the graph. Our results apply to sparsified exchangeable graphs whose graphons are either piecewise constant (corresponding to a stochastic block model), or piecewise Hölder continuous.

To show how our results address the questions introduced in Section 1.1, and to highlight the connection with using the embedding vectors for edge prediction tasks, we give explicit examples (with minimal additiional notation) of results which can be obtained from the main theorems of the paper. For the remainder of the section, suppose that

denotes the cross-entropy loss function (where and ). We consider graphs which arise from a sub-family of stochastic block models - frequently called SBM models - where a graph of size is generated via the probabilistic model

| (6) |

Here is a sparsifying sequence. For our results below, we will consider the cases when or (so in the second case). With regards to the choice of sampling schemes, we consider two choices:

-

i)

Uniform vertex sampling: A sampling scheme where we select vertices uniformly at random, and then form a loss over the induced sub-graph formed by these vertices.

- ii)

Recall that defining a sampling scheme and a loss function induces a empirical risk as given in (2), with the sampling scheme defining sampling probabilities . Below we will give theorem statements for two types of empirical risks, depending on how we combine two embedding vectors and to give a scalar. The first uses a regular positive definite inner product , and the second uses a Krein inner product, which takes the form where is a diagonal matrix with entries .

Supposing we have embedding vectors , we consider the risks

| (7) | ||||

| (8) |

where and is the -dimensional identity matrix. With this, we are now in a position to state results of the form given in (5). As it is easier to state results when using the second risk , we will begin with this, and state two results corresponding to either the uniform vertex sampling scheme, or the node2vec sampling scheme. We then discuss implications of the results afterwards.

Theorem 1

Suppose that we use the uniform vertex sampling scheme described above, we choose the embedding dimension , and for all . Then for any sequence of minimizers to , we have that

in probability as , where is the matrix

Theorem 2

Suppose in Theorem 1 we instead use the node2vec sampling scheme described earlier, and now either or . Then the same convergence guarantee holds, except now the matrix takes the form

With these two results, we make a few observations:

-

i)

In our convergence theorems, we say that for any sequence of minimizers, the matrix will have the same limiting distribution. Although here we explicitly choose , can be any sequence which which diverges to infinity (provided it does so sufficiently slowly) and have the same result hold. Consequently, this suggests that up to symmetry and statistical error, the minimizers of the empirical risk will be essentially unique, giving an answer to Q1.

-

ii)

For different sampling schemes, we are able to give a closed form description of the limiting distribution of the matrices , and we can see that they are different for different sampling schemes. This affirms Q2 as posed above in the positive. One interesting observation from the Theorems 1 and 2 is the dependence on the sparsity factor. While a uniform vertex sampling scheme does not work well in the sparsified setting (and so we give convergence results only when ) in node2vec the representation remains stable in the limit when .

-

iii)

Theorem 1 tells us that if we use a uniform sampling scheme, then using the Krein inner product during learning and the as scores, we are able to perform edge prediction up to the information theoretic threshold.

-

iv)

If in Theorem 2 we instead let the walk length in node2vec to be of length , the term in the limiting distribution for node2vec would be replaced by . This means that in the limit , the limiting distribution is independent of the walk length. We discuss later in Section 4.1 the roles of the hyperparameters in node2vec, and argue that the walk length places a role in only reducing the variance of gradient estimates.

So far we have only given results for minimizers of the loss . We now give an example of a convergence result for , and afterwards discuss how this result addresses Q3 as posed above.

Theorem 3

Suppose the graph arises from a SBM() model. Let denote the inverse sigmoid function. Suppose that we use the uniform vertex sampling scheme described above, the embedding dimension satisfies and . Then for any sequence of minimizers to , we have that

and the values of and depend on and as follows:

-

a)

If and , then and ;

-

b)

If and , then ;

-

c)

If and , then ;

-

d)

Otherwise, .

From the above theorem we can see that the representation produced is not an invertible function of the model from which the data arose. For example in the regime where and , the representation depends only on the size of the gap , and so one can choose different values of for which the limiting distribution is the same. This answers the first part of Q3. (We discuss this further in Section 3.4; see the discussion after Proposition 20.) In contrast, this does not occur in Theorem 1 - the representation learned is an invertible function of the underlying model. Theorem 3 also highlights that, when using only the regular inner product during training and scores , there are regimes (such as when ) where the scores produced will be unsuitable for purposes of edge prediction.

The fundamental difference between Theorems 1 and 3 is that the risk we consider in Theorem 1 arises by making the implicit assumption that the network arises from a probabilistic model . This means the inverse-logit matrix of edge probabilities are not constrained to be positive-definite, whereas using as in (3) to give places a positive-definite constraint on this matrix. This can be interpreted as a form of model misspecification of the data generating process. To address the information loss which occurs when parameterizing the loss through inner products , we can fix this by replacing it with a Krein inner product. This gives an answer to the second part of Q3. We later demonstrate that making this change can lead to improved performance when using the learned embeddings for downstream tasks on real data (Section 5.2), suggesting these findings are not just an artefact of just the type of models we consider.

1.3 Related works

There is a large literature looking at embeddings formed via spectral clustering methods under various network models from a statistical perspective; see e.g Ma et al. (2021); Deng et al. (2021) for some recent examples. For models supporting a natural community structure, these frequently take the form of giving guarantees on the behavior of the embeddings, and then argue that using a clustering method with the embedding vectors allows for weak/strong consistency of community detection. See Abbe (2017) for an overview of the information theoretic thresholds for the different type of recovery guarantees.

Lei and Rinaldo (2015) consider spectral clustering using the eigenvectors of the adjacency matrix for a stochastic block model. Rubin-Delanchy et al. (2017) consider spectral embeddings using both the adjacency matrix and Laplacian matrices from models arising from generative models of the form where ) and the are i.i.d random variables with known and fixed - such graphs are referred to frequently as dot product graphs. These allow for a broader class of models than stochastic block models, such as mixed-membership models. The case was considered by Tang and Priebe (2018), with central limit theorem results given in Levin et al. (2021); see Athreya et al. (2018) for a broader review of statistical analyses of various methods on these graphs. In Lei (2021), they consider similar models where where is a Krein space (formally, this is a direct sum of Hilbert spaces equipped with an indefinite inner product, formed by taking the difference of the inner products on the summand Hilbert spaces), with their results applying to non-negative definite graphons and graphons which are Hölder continuous for exponents . They then discuss the estimation of the using the eigendecomposition of the adjacency matrix (which we have noted can be viewed as a type of embedding) from a functional data analysis perspective. We note that in our work we do not directly assume a model of such a form, but some of our proofs use some similar ideas.

With regards to embeddings learned via random walk approaches such as node2vec (Grover and Leskovec, 2016), there are a few works which study modified loss functions. To be precise, these suppose that each vertex has two embedding vectors and , with terms of the form replaced in the loss with , and , are allowed to vary independently with each other. Qiu et al. (2018) study several different embedding methods within this context (including those involving random walks) where they explicitly write down the closed form of the minimizing matrix for the loss having averaged over the random walk process when and is fixed. In order to be always able to write down explicitly the minimizing matrix, they rely on the assumption that and that and are unconstrained of each other, so that the matrix is unconstrained. This avoids the issues of non-convexity in the problem. We note that in our work we are able to handle the case where we enforce the constraints (as in the original node2vec paper) and , so we address the non-convexity.

Zhang and Tang (2021) then considers the same minimizing matrix as in Qiu et al. (2018) for stochastic block models, and examines the best rank approximation (with respect to the Frobenius norm) to this matrix, in the regime where and is less than or equal to the number of communities. We comment that our work gives convergence guarantees under broad families of sampling schemes, including - but not limited to - those involving random walks, and for general smooth graphons rather than only stochastic block models. Veitch et al. (2018) discusses the role of subsampling as a model choice, within the context of specifying stochastic gradient schemes for empirical risk minimization for learning network representations, and highlights the role they play in empirical performance.

1.4 Notation and nomenclature

For this section, we write for the Lebesgue measure, the interior of a set and as the closure of . We say that a partition of , written , is a finite collection of pairwise disjoint, connected sets whose union is , and and for all . For a partition of , we define

which gives a partition of . A refinement of is a partition where for every , there exists a (necessarily unique) such that .

We say a function is Hölder, where is closed and , are constants, if

We say a function is piecewise Hölder if the following holds: for any , the restriction admits a continuous extension to , with this extension being Hölder. Similarly, we say that a function is piecewise continuous on if for every , admits a continuous extension to .

For a graph with vertex set and edge set , we let denote the adjacency matrix of , so iff . Here we consider undirected graphs with no self-loops, so ; we count and together as one edge. For such a graph, we let

-

•

denote the number of edges of ;

-

•

denotes the degree of the vertex , so .

A subsample of a graph is a collection of vertices , along with a symmetric subset of the adjacency matrix of restricted to ; that is, a subset of . The notation therefore refers to whether is an element of the aforementioned subset of .

In the paper, we consider sequences of random graphs generated by a sequence of graphons . A graphon is a symmetric measurable function . To generate these graphs, we draw latent variables independently for , and then for set

independently, and for . We then let be the graph formed with adjacency matrix restricted to the first vertices. Unless mentioned otherwise, we understand that references to and - now dropping the superscript - refer to the above generative process. For a graphon , we will denote

-

•

for the edge density of ;

-

•

for the degree function of ;

-

•

, so .

Given a sequence of random graphs generated in the above fashion, we define the random variables and for the number of edges, and degrees of a vertex in , respectively.

For triangular arrays of random variables and , we say that if for all , , there exists such that for all we have that . If can be chosen uniformly in , then we simply write . We use similar notation for , (where iff ), (where iff ) and (where iff and ). For non-stochastic quantities, we use similar notation, except that we drop the subscript . Throughout, we use the notation to denote the measure of sets; specifically, if then is the number of elements of the set , and if then or is the Lebesgue measure of the set . Similarly, for sequences and functions, we use to denote the or norms respectively. The notation indicates the set of integers .

1.5 Outline of paper

In Section 2, we discuss the main object of study in the paper, and the assumptions we require throughout. The assumptions concern the data generating process of the observed network, the behavior of the subsampling scheme used, and the properties of the loss function used to learn embedding vectors. Section 3 consist of the main theoretical results of the paper, giving a consistency result for the learned embedding vectors under different subsampling schemes. Section 4 gives examples of subsampling schemes which our approach allows us to analyze, and highlights a scale invariance property of subsampling schemes which perform random walks on a graph. In Section 5, we demonstrate on real data the benefit in using an indefinite or Krein inner product between embedding vectors, and demonstrate the validity of our theoretical results on simulated data. Proofs are deferred to the appendix, with a brief outline of the ideas used for the main results given in Appendix B.

2 Framework of analysis

We consider the problem of minimizing the empirical risk function

| (9) |

where we have that

-

i)

the embedding vectors are -dimensional (where is allowed to grow with ), with corresponding to the embedding of vertex of the graph;

-

ii)

is a non-negative loss function;

-

iii)

is a (bilinear) similarity measure between embedding vectors; and

-

iv)

refers to a stochastic subsampling scheme of the graph , with representing a graph on vertices.

We now discuss our assumptions for the analysis of this object, which relate to a generative model of the graph , the loss function used, and the properties of the subsampling scheme. For purposes of readability, we first provide a simplified set of assumptions, and give a general set of assumptions for which our theoretical results hold in Appendix A.

2.1 Data generating process of the network

We begin by imposing some regularity conditions on the data generating process of the network. Recall that we assume the graphs are generated from a graphon process with latent variables and generating graphon , where is a graphon and is a sparsity factor which may shrink to zero as .

Remark 4

The above assumption corresponds to the graph being an exchangeable graph. Parameterizing such graphs through a graphon and one dimensional latent variables is a canonical choice as a result of the Aldous-Hoover theorem (e.g Aldous, 1981), and is extensive in the network analysis literature. However, this is not the only possible choice for the latent space. More generally we could consider some probability measure on , and a symmetric measurable function , where the graph is generated by assigning a latent variable independently for each vertex, and then joining vertices with an edge independently of each other with probability .

From a modelling perspective a higher dimensional latent space is desirable; an interesting fact is that any such graph is equivalent in law to one drawn from a graphon with latent variables (Janson, 2009, Theorem 7.1). As a simple illustration of this fact, suppose that users in a social network graph have characteristics for some , and that two individuals and are connected in the network (independently of any other pair of users) with probability , which depends only on their characteristics. Assuming that the are drawn i.i.d from a distribution on , we can always simulate such a distribution by partitioning according to the probability mass function , drawing a latent variable , and then assigning to the value corresponding to the part of the partition of for which landed in. Letting denote this mapping, the model is then equivalent to a one with a graphon . Consequently, our results will be presented mostly in terms of graphons . However, they can be extended with relative ease to graphons with higher dimensional latent spaces, which we discuss further in Section 3.3.

Assumption 1 (Regularity + smoothness of the graphon)

We suppose that the sequence of graphons generating are, up to weak equivalence of graphons (Lovász, 2012), such that i) the graphon is piecewise Hölder, , , for some partition of and constants , ; ii) there exist constants such that and a.e; and iii) the sparsifying sequence is such that .

Remark 5

We will briefly discuss the implications of the above assumptions. The smoothness assumptions in a) are standard when assuming networks are generated from graphon models (e.g Wolfe and Olhede, 2013; Gao et al., 2015; Klopp et al., 2017; Xu, 2018). The assumption in b) that is bounded from below is strong, and is weakened in the most general assumptions listed in Appendix A. This, along with the assumption that , implies that the degree structure of is regular, in that the degrees of every vertex are roughly of the same order, and will grow to infinity as does; this is a limitation in that real world networks do not always exhibit this type of behavior, and have either scale-free or heavy-tailed degree distributions (e.g Albert et al., 1999; Broido and Clauset, 2019; Zhou et al., 2020). Regardless of the sparsity factor, graphon models will tend to have structural deficits; for example, they tend to not give rise to partially isolated substructures (Orbanz, 2017). We note that assumptions on the sparsity factor where grows like for some , remain standard when using graphons as a tool for theoretical analyses (e.g Wolfe and Olhede, 2013; Borgs et al., 2015; Klopp et al., 2017; Xu, 2018; Oono and Suzuki, 2021). Future work could extend our results to generalizations of graphon models, such as graphex models (Veitch and Roy, 2015; Borgs et al., 2019), which better account for issues of sparsity and regularity of graphs.

2.2 Assumptions on the loss function and

We now discuss our assumptions on the loss function , which we follow with a discussion as to the form of the functions .

Assumption 2 (Form of the loss function)

We assume that the loss function is equal to the cross-entropy loss

| (10) |

where is the sigmoid function.

We note that our analysis can be extended to loss functions of the form

where corresponds to a distribution which is continuous, symmetric about and strictly log-concave. This includes the probit loss (Assumption BI), or more general classes of strictly convex functions which include the squared loss (Assumption B). We now discuss the form of .

Assumption 3 (Properties of the similarity measure )

Supposing we have embedding vectors , we assume that the similarity measure is equal to one of the following bilinear forms:

-

i)

(i.e a regular or definite inner product) or

-

ii)

for some (i.e an indefinite or Krein inner product);

where , for , and .

2.3 Assumptions on the sampling scheme

We now introduce our assumptions on the sampling scheme. For most subsampling schemes, the probability that the pair is part of the subsample depends only on local features of the underlying graph . We formalize this notion as follows:

Assumption 4 (Strong local convergence)

There exists a sequence of -measurable functions, with for each , such that

for some non-negative sequence .

We refer to the as sampling weights. This condition implies that the probability that is sampled depends approximately on only local information, namely the latent variables , and the value of , i.e that

| (11) |

As a result of the concentration of measure phenomenon, many sampling frameworks satisfy this condition (see Section 4). This includes those used in practice, such as uniform vertex sampling, uniform edge sampling (Tang et al., 2015), along with “random walk with unigram negative sampling” schemes like those of Deepwalk (Perozzi et al., 2014) and node2vec (Grover and Leskovec, 2016). In particular, we are able to give explicit formulae for the sampling weights in these scenarios. We also impose some regularity conditions on the conditional averages of the sampling weights.

Assumption 5 (Regularity of the sampling weighs)

Remark 6

For all the sampling schemes we consider, the conditions on and will follow from Assumption 1 and the formulae for the sampling weights we derive in Section 4; in particular, the exponent will be a function of and the particular choice of sampling scheme. To illustrate this, if we suppose that we use a random walk scheme with unigram negative sampling (Perozzi et al., 2014) as later described in Algorithm 4, we show later (Proposition 26) that

| (12) | ||||

| (13) |

where , and are hyperparameters of the sampling scheme. In particular, if is piecewise Hölder with exponent , then we show (Lemma 82) that and will be piecewise Hölder with exponent .

3 Asymptotics of the learned embedding vectors

In this section, we discuss the population risk corresponding to the empirical risk (9), show that any minimizer of (9) converges to a minimizer of this population risk, and then discuss some implications and uses of this result.

3.1 Convergence of empirical risk to population risk

Given the empirical risk (9), and assuming that the embedding vectors are constrained to lie within a compact set for some , our first result shows that the population limit analogue of (9) has the form

| (14) |

where the domain consists of functions for functions . We can interpret as giving embedding vectors for vertices with latent feature , with then measuring the similarity between two vertices with latent features and . We write

| (15) |

for all such functions which are represented in this fashion. We then have that the minimized empirical risk converges to the minimized population risk :

Theorem 7

The proof can be found in Appendix C (with Theorem 30 stating a more general result under the assumptions listed in Appendix A), with a proof sketch in Appendix B.

Remark 8

The error term above consists of three parts. The term relates to the fluctuations of the empirical sampling probabilities to the sampling weights and . The second term arises as the penalty for getting uniform convergence of the loss functions when averaged over the adjacency assignments. The final term arises from using a stochastic block approximation for the functions and , and optimizing the tradeoff between the number of blocks for approximating these functions, and the relative error in the proportion of the in a block versus the size of the block.

Remark 9

Typically for random walk schemes we have that and under Assumption 1, and so the error term is of the form

One affect of this is that as decreases in magnitude, the permissable embedding dimensions decrease also; we also always require that in order for the rate .

3.2 Convergence of the learned embedding vectors

We now argue that the minimizers of (9) converge in an appropriate sense to a minimizer of over a constraint set which depends on the choice of similarity measure . Before considering any constrained estimation of , we highlight that depending on the form of , we can write down a closed form to the unconstrained minimizer of over all (symmetric) functions . When is the cross-entropy loss, by minimizing the integrand of point-wise, the unconstrained minimizer of will equal

| (16) |

As and are proportional to and respectively, we are learning a re-weighting of the original graphon. As a special case, if the sampling formulae are such that (so the probability that a pair of vertices is sampled is asymptotically independent of whether they are connected in the underlying graph) then (16) simplifies to the equation . This is the case for a sampling scheme which samples vertices uniformly at random and then returns the induced subgraph (Algorithm 1). Otherwise, will still depend on , but may not be an invertible transformation of ; for example, for a random walk sampler with walk length , one negative sample per positively sampled vertex, and a unigram negative sampler with (Algorithm 4), we get that

| (17) |

As a result of Theorem 7, we posit that when taking as , the embedding vectors learned via minimizing (9) will converge to a minimizer of when is constrained to the “limit” of the sets in (15) as . As this set depends on , whether is a positive-definite inner product (or not) corresponds to whether is constrained to being non-negative definite (or not) in the following sense: suppose allows an expansion of the form

| (18) |

for some numbers and orthonormal functions . Then, are the all non-negative - in which case is non-negative definite - or not? We prove in Appendix H that as a consequence of our assumptions, we can write

| (19) |

where for each the collection of functions are orthonormal. With this, we begin with giving a convergence guarantee when for all . In this case, is the limiting distribution of the inner products of the embedding vectors learned via minimizing (9).

Theorem 10

Suppose that Assumptions 1, 2, 4 and 5 hold. Also suppose that Assumption 3 holds with with . Finally, suppose that in (19) the are non-negative for all . Then there exists sufficiently large such that whenever , for any sequence of minimizers , we have that

In the case where the and are piecewise constant on a fixed partition for all , where is a partition of into parts, then is piecewise constant on also, there exists such that, then provided , the above convergence result holds with

See Theorem 66 in Appendix D for the proof, with the latter theorem holding under more general regularity conditions. We highlight that in the above theorem, one can also take with and and have the convergence theorem also hold, with the term being replaced by a term.

Remark 11

In the above bound for , the first three terms correspond to the terms in the convergence of the loss function as in Theorem 7. The fourth term arises from relating the matrix back to the function . The fifth term arises from the error in considering the difference between and the best rank approximation to ; in particular, if is actually finite rank in that for all , for some free of , then provided we can discard the term, and so under the conditions in which the rate in Theorem 7 converges to zero, the rate in Theorem 10 also goes to zero as .

In general, from the above result we can argue that there exists a sequence of embedding dimensions such that as , albeit possibly at a slow rate (by choosing e.g for very small). If the and are piecewise constant on a partition of size , then it is in fact possible to obtain consistency as soon as and . Here, there is a tradeoff between choosing large enough so that we get a good rank approximation to , and keeping the capacity of the optimization domain sufficiently small that the convergence of the minimal loss values is quick (see Remark 13 for a discussion of choosing optimally).

We finally note that in the statement of Theorem 10 the constant is held fixed; it is however possible to take and have the bound increase only by a multiplicative factor of for some constant .

In the case where some of the are negative, we can obtain a similar result which gives convergence to , although now choosing is necessary. We show later in Proposition 20 an example of a two community SBM which highlights the necessity of using a Krein inner product.

Theorem 12

Suppose that Assumptions 1, 2, 3, 4 and 5 hold. Given an embedding dimension , pick and in where , such that is equal to the number of non-negative values out of the absolutely largest values of in (19). Then there exists sufficiently large such that whenever , for any sequence of minimizers , we have that

In the case where the and are piecewise constant on a fixed partition for all , where is a partition of into parts, then there exists for which, as soon as , we have that the above convergence result holds with

Remark 13

The term above is the analogue of the term in Theorem 10, which arises from the fact that the decay of the as a function of is quicker when we can guarantee that they are all positive. Consequently, we have analogous remarks for that if the are all zero for , then as soon as , this term will disappear. Similarly, the term arises from looking at the best rank approximation to . As the eigenvalues can be positive and negative, the choice of and means we choose the top eigenvalues (by absolute value) for any given , and so we can obtain the rate. To see how the rates of convergence are affected by the optimal choice of embedding dimension , when and , optimizing over gives

and so the last term will tend to dominate in the sparse regime.

To summarize, Theorems 10 and 12 characterize the distribution of pairs of embedding vectors, through the similarity measure used for training. They show that the distribution of embedding vectors asymptotically decouple in that, in an average sense, the distribution of depends only on the latent features for the respective vertices. Moreover, when we have a cross-entropy loss and the similarity measure is correctly specified, we can explicitly write down the limiting distribution in terms of the sampling formulae corresponding to the choice of sampling scheme, and the original generating graphon.

3.3 Extension to graphons on higher dimensional latent spaces

As discussed earlier in Remark 4, it is possible to consider graphons more generally as functions with latent variables drawn from some probability distribution on . As these can always be made equivalent to graphons , there is a natural question as to whether our results can be applied to higher dimensional graphons. To illustrate that we can do so, here we illustrate what occurs when we have a graphon with latent variables independently for some , with a graphon function :

Assumption 6 (Graphon with high dimensional latent factors)

Suppose that the are generated by a sequence of graphons where; the latent parameters for some ; the graphon is symmetric and piecewise Hölder for some partition of ; there exist constants such that a.e; and . Moreover, we suppose that the functions

defined for , are piecewise Hölder for some exponent ; are uniformly bounded above; and uniformly bounded below and away from zero.

To apply our existing results, we will make use of the following theorem.

Theorem 14

Let be a graphon on which is Hölder. Then there exists an equivalent graphon on which is Hölder where depends only on and . Moreover, for any and function we have that .

Proof [Proof of Theorem 14]

The first part is simply Theorem 2.1 of Janson and Olhede (2021), which uses the fact that there exists a measure preserving map which is Hölder(, ) for some constant , in which case is equivalent to and is Hölder. The second part then follows by the change of variables formulae and the fact that is measure preserving.

In this setting, the population risk (14) is now of the form

| (20) |

We can now obtain analogous versions of Theorems 7 and 12 as follows:

Theorem 16

Suppose that Assumptions 2, 3 and 6 hold, and that we use Algorithm 4 (random walk + unigram negative sampling) for the sampling scheme with , so that in Assumption 6. Under the same assumptions on the choice of the embedding dimension as given in Theorem 12, it follows that there exists sufficiently large such that whenever , for any sequence of minimizers , we have that

where

Remark 17

We note that the rates of convergence in Theorems 15 and 16 depend on the dimension of the latent parameters. This cannot be avoided by our proof strategy - if we manually modified the proof, rather than simply applying Theorem 14, we would still end up with the same rates of convergence. For example, part of our bounds depend on the decay of the eigenvalues of the operator , which under our smoothness assumptions will have eigenvalues decay as (Birman and Solomyak, 1977). We highlight that such dependence on the latent dimension is common for other tasks involving networks, such as graphon estimation (Xu, 2018), and such dependence commonly arises in non-parametric estimation tasks (Tsybakov, 2008).

Remark 18

We highlight that there is some debate as to the types of graphs which can arise from latent variable models when the latent dimension is high (Seshadhri et al., 2020; Chanpuriya et al., 2020). We highlight that this is distinct from matters of what embedding dimensions should be chosen when fitting an embedding model, as methods such as node2vec are not necessarily trying to recover exactly the latent variables used as part of a generative model. For example, from Theorem 16 above, if we suppose that and substitute this into the given formula for , we can see that is not a function of due to the terms in the denominator.

3.4 Importance of the choice of similarity measure

Theorem 10 only applies when the in (19) are all non-negative, and Theorem 12 only applies to the case where we have some negative and we make the choice of . We now study the case where there are some negative and we choose .

Theorem 19

Suppose that Assumptions 1, 2, 4 and 5 hold, and suppose that Assumption 3 holds with denoting the inner product on . Define

where the closure is taken in a suitable topology (see Appendix D.2). Note that the set does not depend on (see Lemma 55). Then there exists a unique minimizer to over . Under some further regularity conditions (see Theorem 66), there exists and a sequence of embedding dimensions , such that whenever , for any sequence of minimizers , we have that

If moreover we know that and are piecewise constant on a fixed partition for all , where is a partition of into parts, then is also piecewise constant on the partition , and can be calculated exactly via a finite dimensional convex program.

In the case where we select , we now argue that this leads to a lack of injectivity - it will not be possible to distinguish two different graph distributions from the learned embeddings alone. As a consequence, there is necessarily some information about the network lost, the importance of which depends on the downstream task at hand. For example, suppose the graph is generated by a two-community stochastic block model with even sized communities, with within-community edge probability and between-community edge probability . We then have the following:

Proposition 20

Suppose that the graphon corresponds to a SBM with two communities of equal size, such that the within-community edge probability is and the between-community edge probability is ; i.e that

and that we learn embeddings using a cross entropy loss and a uniform vertex subsampling scheme (Algorithm 1 in Section 4). Then the global minima of over is given by

where

-

a)

if and , then , ;

-

b)

if and , then ;

-

c)

if and , then ;

-

d)

otherwise, , .

Lack of injectivity: As mentioned earlier, we can have multiple graphons for which the minima of over non-negative definite are identical; for instance, note that in the above example when and , then the minima of over non-negative definite depends only on the gap .

Loss of information: In the case where and , Theorem 19 and Proposition 20 tell us that the embedding vectors learned via minimizing (9) will satisfy

In particular, the generating graphon cannot be directly recovered from as it only identified up to the value of . Despite this, we note that still preserves the community structure of the network, in that if and only if and belong to the same community. It therefore follows that asymptotically, on average the learned embedding vectors corresponding to vertices in the same community are positively correlated, whereas those in opposing communities are negatively correlated.

When the minima is a constant function (such as when above), the limiting distribution contains no usable information about the underlying graphon, and therefore neither do the inner products of the learned embedding vectors. We discuss when this occurs for general graphon models in Proposition 71. In all, this highlights the advantage in using a Krein inner product between embedding vectors, as these issues are avoided. Later in Section 5.2 we observe empirically the benefits of making such a choice.

3.5 Application of embedding convergence: performance of link prediction

We discuss the asymptotic performance of embedding methods when used for a link prediction downstream task. Consider the scenario where we make a partial observation of an underlying network , with the property that if then , but if , we do not know whether or . For example, this model is appropriate for when we are wanting to predict the future evolution of a network. The task is then to make predictions about using the observed data .

In the context above, link prediction algorithms frequently use the network to produce a score corresponding to the likelihood of whether the pair is an edge in the network . The scores are usually interpreted so that the larger is, the more likely it will occur that . We consider metrics to evaluate performance of the form

| (21) |

when using the scores to predict the presence of edges in a network . We write for a discrepancy measure between the predicted score and an observed edge or non-edge in the test set. For example, in the case where

| (22) |

is a zero-one loss (having thresholded the scores by to obtain a -valued prediction), (21) becomes the misclassification error. Smoother losses can be obtained by using

| (23) | ||||

| (24) |

i.e the softmax cross-entropy or hinge losses respectively. Given a network embedding with embedding vectors for each vertex , one frequent way of producing scores is to let where is a similarity measure as in Assumption 3. By applying Theorems 10, 12 or 19, we can begin to analyze the performance of a link prediction method using scores produced by embeddings learned via minimizing (9).

Proposition 21

Let be the set of symmetric adjacency matrices on vertices with no self-loops. Suppose that is a sequence of adjacency matrices drawn from a graphon process satisfying the conditions in one of Theorems 10, 12 or 19, with denoting the embedding vectors learned via minimizing (9) using . Let be the minimal value of which appears in the aforementioned convergence theorems, and the corresponding convergence rate. Recall that denotes the similarity measure in Assumption 3. Write and for the scoring matrices formed by using the learned embeddings from minimizing (9) and respectively. Then we have that for any loss function which is Lipschitz in for that

When denotes (21) using the zero-one loss with threshold , further assume that there exists a finite set for which

| (25) |

Then for any sequence with as , we have that

Remark 22

We note that examples of loss functions which are Lipschitz include the hinge loss (24), along with any ‘clipped’ version of the softmax cross entropy loss (23), where the scores are truncated so that the loss does not become unbounded as . A sufficient condition for the regularity condition (25) to hold is that the total number of jumps in the distribution functions associated to the for all is finite; for example, this occurs if is a piecewise constant function.

We now illustrate a use of the theorem above, in the context of the censoring example introduced at the beginning of the section. Suppose that the network is generated via a graphon . We then calculate that

independently across all pairs (as the probability that given is zero). If we further have that for some symmetric, measurable function , then also has the law of an exchangeable graph. As a simple example, we could consider , corresponding to edges being randomly deleted from .

If we instead assume that has the law of an exchangeable graph with graphon , then we can calculate that

independently across all pairs . Again, if , then will have the law of an exchangeable graph too. For example, in the context of the social network example, one may suppose that the likelihood of an edge forming between two vertices is linked to the proportion of users who they are both connected with, or that it is linked to their respective degrees. We could then hypothesize that e.g

If either of the conditions hold, we can switch between using or by using and respectively.

Now suppose that we learn an embedding using the network to produce a scoring matrix (as described above) to make predictions about . Moreover assume that in (9) we use the cross-entropy loss, a Krein inner product for the bilinear from , and that satisfies the conditions in Theorem 12. This implies that the optimal value of (where and are functions of , and so we make the dependence on explicit) is given by as in (16). Provided the number of vertices in is large, Proposition 21 tells us that will be approximately equal to . When is the softmax cross-entropy loss, we then get that

| (26) | ||||

With the expression on the right hand side, it is then possible to numerically investigate for which network models (given a fixed entropy) will a particular choice of sampling scheme be effective in combating particular types of censoring. This is because once the entropy of has been fixed, minimizing the RHS in (26) corresponds to minimizing the KL divergence between the measures with densities

defined for and .

4 Asymptotic local formulae for various sampling schemes

In this section we show that frequently used sampling schemes satisfy the strong local convergence assumption (Assumption 4) and give the corresponding sampling formulae and rates of convergence. We leave the corresponding proofs to Appendix F. We begin with a scheme which simply selects vertices of the graph at random.

Algorithm 1 (Uniform vertex sampling)

Given a graph and number of samples , we select vertices from uniformly and without replacement, and then return as the induced subgraph using these sampled vertices.

Proposition 23

We now consider uniform edge sampling (e.g Tang et al., 2015), complemented with a unigram negative sampling regime (e.g Mikolov et al., 2013). We recall from the discussion in Section 1.1 that a negative sampling scheme is intended to force pairs of vertices which are negatively sampled to be far apart from each other in an embedding space, in contrast to those which are positively sampled.

Algorithm 2 (Uniform edge sampling with unigram negative sampling)

Given a graph , number of edges to sample and number of negative samples per ‘positively’ sampled vertex, we perform the following steps:

-

i)

Form by sampling edges from uniformly and without replacement;

-

ii)

We form a sample set of negative samples by drawing, for each , vertices i.i.d according to the unigram distribution

and then adjoining if .

We then return .

Proposition 24

Alternatively to using a unigram distribution for negative sampling, one other approach is to select edges (such as via uniform sampling as above), and then return the induced subgraph as the entire sample.

Algorithm 3 (Uniform edge sampling and induced subgraph negative sampling)

Given a graph and number of edges to sample, we perform the following steps:

-

i)

Form by sampling edges from uniformly and without replacement;

-

ii)

Return as the induced subgraph formed from all of the vertices .

Proposition 25

We can also consider random walk based sampling schemes (see e.g. Perozzi et al., 2014).

Algorithm 4 (Random walk sampling with unigram negative sampling)

Given a graph , a walk length , number of negative samples per positively sampled vertex, unigram parameter and an initial distribution , we

-

i)

Select an initial vertex according to ;

-

ii)

Perform a simple random walk on of length to form a path , and report for as part of ;

-

iii)

For each vertex , we select vertices independently and identically according to the unigram distribution

and then form as the collection of which are non-edges in ;

and then return .

In the above scheme, there is freedom in how we can specify the initial vertex of the random walk. Here we will do so using the stationary distribution of a simple random walk on , namely , as this simplifies the analysis of the scheme.

Proposition 26

One important property of the samplers discussed in Algorithms 2, 3 and 4 is that they are essentially invariant to the scale of the underlying graph, in that the dominating parts of the expressions for the are free of the sparsity factor . We write this down for the random walk sampler.

Lemma 27

Remark 28

We note that in algorithmic implementations of negative sampling schemes in practice, there is usually not an explicit check for whether the negatively sampled edges are non-edges in the original graph. This is done for the reason that graphs encountered in the real world are frequently sparse, and so the check would take up computational time while only having a small effect on the learnt embeddings. This would correspond to removing the factor in the above formula for , and so Lemma 27 reaffirms the above reasoning.

4.1 Expectations and variances of random-walk based gradient estimates

Throughout we have studied the empirical risk induced through using a stochastic gradient scheme to learn a network embedding, given a subsampling scheme . Subsampling schemes used by practitioners (such as in node2vec) depend on some choice of hyperparameters. These are selected either via a grid-search, or by using default suggestions - for example, the unigram sampler in Algorithm 4 is commonly used with , as recommended in Mikolov et al. (2013). A priori, the role of such parameters is not obvious, and so we give some insights into the role of particular hyperparameters within the random walk scheme described in Algorithm 4. We focus on the expected value and variance of the gradient estimates used during training.

To illustrate the importance of these two values, we discuss first what happens in a traditional empirical risk minimization setting, where given data where is large and a loss function , we try to optimize over the empirical loss function by using a stochastic gradient scheme. More specifically, we obtain a sequence via

given an initial point , step sizes and a random gradient estimate . We then run this for a sufficiently large number of iterations such that ; see e.g Robbins and Monro (1951). For the empirical risk minimization setting detailed above, one common approach has take the form

where are sampled i.i.d uniformly from for each . We then get for any choice of , and when assuming that the gradient of is bounded. In general, the variance of the gradient estimates determines the speed of convergence of a stochastic gradient scheme - the smaller the variance, the quicker the convergence (Dekel et al., 2012) - and so choosing a larger batch size should leave to better convergence. Importantly, when comparing two gradient estimates, we cannot make a bona-fide comparison of their variances without ensuring that they have similar expectations, as otherwise the two schemes are optimizing different empirical risks.

In the network embedding setting, to form a gradient estimate we could take independent subsamples and average over these, to get an estimator which (when averaging over the subsampling process) gives an unbiased estimator of the gradient of the empirical risk . This also has the variance of the gradient estimates decaying as . A more interesting question is to study what occurs when we only use one subsampling scheme per gradient estimate - as in practice - and vary the hyperparameters. For example, in the random walk scheme Algorithm 4, as a consequence of Proposition 26, under the assumptions of Theorem 12, the matrix is approximately equal to

which is essentially free of the random walk length once is sufficiently large. A natural question is to therefore ask what the role of is in such a setting. In the result below, we highlight that the role of leads to producing gradient estimates with reduced variance. The proof is given on page F.2.

Proposition 29

Let be a single instance of the subsampling scheme described in Algorithm 4 given a graph . Define the random vector

so . Supposing that Assumptions 1, 2 and 3 hold, then we have that, writing ,

for some function free of , and letting be the -th component of , we have that

uniformly over all and . In particular, the representation learned by Algorithm 4 is approximately invariant to the walk length for large , as guaranteed by Theorem 12; the gradients are asymptotically free of the walk length when and are large; and the norm of the variance of the gradients decays as .

5 Experiments

We perform experiments111Code is available at https://github.com/aday651/embed-asym-experiments. on both simulated and real data, illustrating the validity of our theoretical results. We also highlight that the use of a Krein inner product between embedding vectors can lead to improved performance when using the learned embeddings for downstream tasks.

5.1 Simulated data experiments

To illustrate our theoretical results, we perform two different sets of experiments on simulated data. The first demonstrates some potential limitations of using the regular inner product between embedding vectors in the empirical risk being optimized. The second demonstrates the validity of the sampling formulae for different sampling schemes.

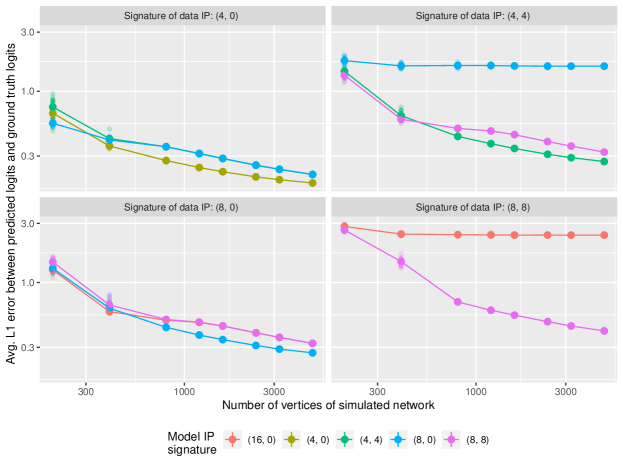

For the first experiment, we consider generating networks with vertices, where each vertex is given a latent vector drawn independently (where ), with edges formed between vertices independently with probability

Here is the sigmoid function, and for any . We simulate twenty networks for each possible combination of: , , , , , , , or ; and equal to , , , or . We then train each network using a constant step-size SGD method with a uniform vertex sampler for 40 epochs222By epochs, we are referring to the cumulative number of pairs of vertices which are used to form a gradient at each iteration, relative to the total number of edges in the graph., using a similarity measure between embedding vectors for various values of . Some are equal to , so that the similarity measure used for the data generating process and training are identical. Some are greater than , so the data generating process still falls within the constraints of the model. Finally, we also let some be less than , in which case the data generating process falls outside the specified model class for learning. With the learned embeddings we then calculate the value of

| (27) |

In words, we are computing the average error between the estimated edge logits using the learned embeddings (with a bilinear form between embedding vectors in the loss function), and the actual edge logits used to generate the network. The results are displayed in Figure 1. By the convergence theorems discussed in Sections 3.2 and 3.4, we expect that (27) will be if and only if and , and indeed this is the trend displayed in Figure 1.

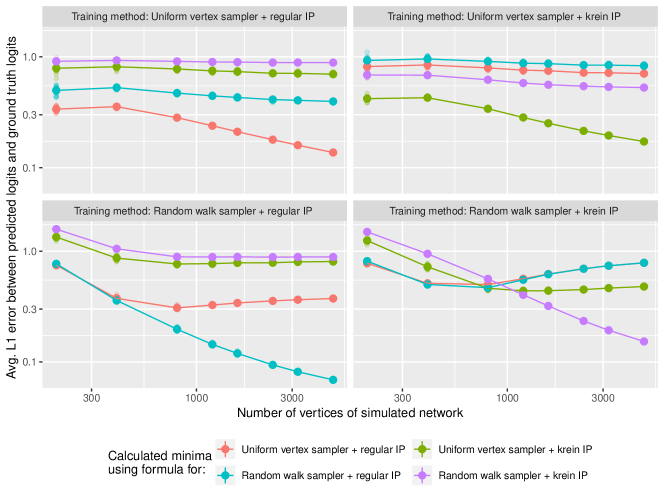

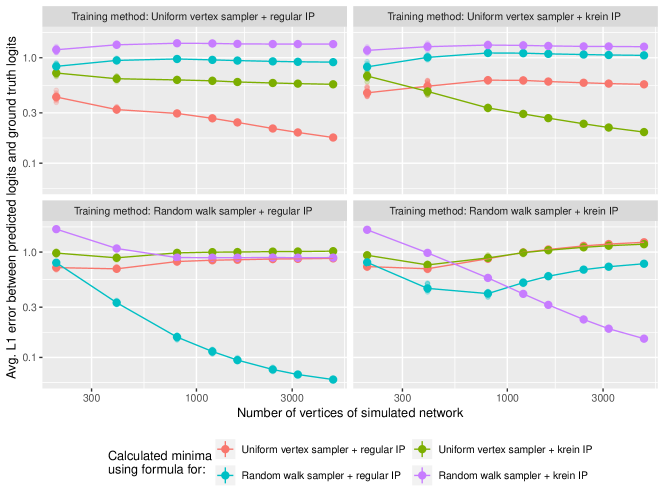

For the second result, we illustrate the validity of the sampling formulae calculated in Section 4. To do so, we begin by generating a network of vertices from one of the following stochastic block models, where denotes the community sizes and the community linkage matrices:

| SBM1: | ||||

| SBM2: |

Here each vertex is assigned a latent variable which is used to determine the corresponding community (depending on where lies within the partition of induced by ). As illustrated in Sections 3 and 4, depending on the sampling scheme (samp), and whether we use a regular or Krein inner product (IP) as the similarity measure between embedding vectors (recall Assumption C), there is a function for which the minimizers of (9) satisfy

| (28) |

We note that for stochastic block models, when we choose - corresponding to minimizing over - we can numerically compute the formula for via a convex program as a result of Proposition 59. In the case where we choose to be a Krein inner product, the discussion in Section 3.2 tells us that we can write down the minima of over exactly.

For each generated network, we train using either a) a random vertex sampler or a random walk + unigram sampler, and b) either the regular or Krein inner product for . We then calculate the value of (28) for each possible form of for the sampling schemes and inner products we consider. The experiments are then repeated for the same values of , and number of networks per choice of , as in the first experiment; the results are displayed in Figure 2. From the figure, we observe that the LHS of (28) decays to zero only when the choice of corresponds to the sampling scheme and inner product actually used, as expected.

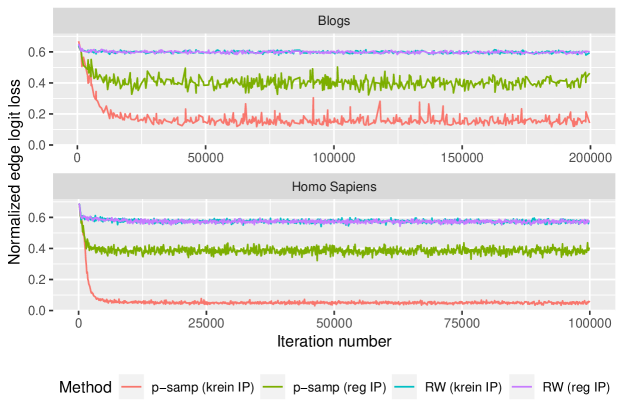

5.2 Real data experiments

We now demonstrate on real data sets that the use of the Krein inner product leads to improved prediction of whether vertices are connected in a network, and as a consequence can lead to improvements in downstream tasks performance. To do so, we will consider a semi-supervised multi-label node classification task on two different data sets: a protein-protein interaction network (Grover and Leskovec, 2016; Breitkreutz et al., 2008) with 3,890 vertices, 76,583 edges and 50 classes; and the Blog Catalog data set (Tang and Liu, 2009) with 10,312 vertices, 333,983 edges and 39 classes.

For each data set, we perform the same type of semi-supervised experiments as in Veitch et al. (2018). We learn 128 dimensional embeddings of the networks using two sampling schemes - random walk/skipgram sampling and p-sampling, both augmented with unigram negative samplers - and either a regular inner product (with signature ) or a Krein inner product (with signature ). We simultaneously train a multinomial logistic regression classifier from the embedding vectors to the vertex classes, with half of the labels censored during training (to be predicted afterwards), and the normalized label loss kept at a ratio of 0.01 to that of the normalized edge logit loss.

After training, we draw test sets according to three different methods (uniform vertex sampling, a random walk sampler and a p-sampler), and calculate the associated macro F1 scores333For a multi-class classification problem, the F1 score for a class is the harmonic average of the precision and recall; the macro F1 score is then the arithmetic average of these quantities over all the classes.. The results of this are displayed in Table 1, and the plots of the normalized edge loss during training for each of the data sets can be found in Figure 3. From these, we observe that for each of the data sets when using p-sampling with a unigram negative sampler, there is a large decrease in the normalized edge loss during training when using the Krein inner product compared to the regular inner product. We also see a sizeable increase in the average macro F1 scores. For the skipgram/random walk sampler, we do not observe an improvement in the edge logit loss, but observe a minor increase in macro F1 scores.

| Dataset | Sampling scheme | Inner product | Average macro F1 scores | ||

|---|---|---|---|---|---|

| Uniform | Random walk | p-sampling | |||

| PPI | Skipgram/RW + NS | Regular | 0.203 | 0.250 | 0.246 |

| Skipgram/RW + NS | Krein | 0.245 | 0.298 | 0.290 | |

| p-sampling + NS | Regular | 0.408 | 0.423 | 0.417 | |

| p-sampling + NS | Krein | 0.486 | 0.468 | 0.461 | |

| Blogs | Skipgram/RW + NS | Regular | 0.154 | 0.192 | 0.194 |

| Skipgram/RW + NS | Krein | 0.250 | 0.279 | 0.285 | |

| p-sampling + NS | Regular | 0.132 | 0.155 | 0.166 | |

| p-sampling + NS | Krein | 0.349 | 0.291 | 0.290 | |

6 Discussion

In our paper, we have obtained convergence guarantees for embeddings learnt via minimizing empirical risks formed through subsampling schemes on a network, in generality for subsampling schemes which depend only on local properties of the network. As a consequence of our theory, we also have argued that using an inner product between embedding vectors in losses of the form (9) can limit the information contained within the learned embedding vectors. Mitigating this through the use of a Krein inner product instead can lead to improved performance in downstream tasks.

We note that our results apply within the framework of (sparsified) exchangeable graphs. While such graphs are convenient for theoretical purposes, and can reflect how real world networks are sparse, they are generally not capable of capturing the power-law type degree distributions of observed networks. There are alternative families of models for network data which are not vertex exchangeable and alleviate some of these problems, such as graphs generated by a graphex process (Veitch and Roy, 2015; Borgs et al., 2017, 2018), along with other models such as those proposed by Caron and Fox (2017) and Crane and Dempsey (2018). As these models all contain enough structure similar to that of exchangeability (such as through an underlying point process to generate the network - see Orbanz (2017) for a general discussion on these points), we anticipate that our overall approach can be used to analyze the performance of embedding methods on broader classes of models for networks.

Our theory only considers embeddings learnt in an unsupervised, transductive fashion, whereas inductive methods for learning network embeddings are increasing popular. We highlight that inductive methods such as GraphSAGE (Hamilton et al., 2017a) work by parameterizing node embeddings through an encoder (possibly with the inclusion of nodal covariates), with the output embeddings then trained through a DeepWalk procedure. Provided that the encoder used is sufficiently flexible so that the range of embedding vectors is unconstrained (which is likely the case for the neural network architectures frequently employed), our results still apply in that we can give convergence guarantees for the output of the encoder analogously to Theorems 10, 12 and 19.

Acknowledgements

We acknowledge computing resources from Columbia University’s Shared Research Computing Facility project, which is supported by NIH Research Facility Improvement Grant 1G20RR030893-01, and associated funds from the New York State Empire State Development, Division of Science Technology and Innovation (NYSTAR) Contract C090171, both awarded April 15, 2010. Part of this work was completed while M. Austern was at Microsoft Research, New England. We thank the two anonymous reviewers and the editor for their feedback, which significantly improved the readability and contributions of the paper.

Appendix A Technical Assumptions

Here we introduce a more general set of technical assumptions than those introduced in Section 2 for which our technical results hold. For convenience, at points we will duplicate our assumptions to keep the labelling consistent, and so Assumptions A,B and E are generalizations of Assumptions 1, 2 and 5 respectively, and Assumptions C and D are the same as Assumptions 3 and 4 respectively.

Assumption A (Regularity and smoothness of the graphon)

We suppose that the underlying sequence of graphons generating are, up to weak equivalence of graphons (Lovász, 2012), such that:

-

a)

The graphon is piecewise Hölder, , , for some partition of and constants , ;

-

b)

The degree function is such that for some exponent ;

-

c)

The graphon is such that for some exponent ;

-

d)

There exists a constant such that a.e;

-

e)

The sparsifying sequence is such that if , and if .

Assumption B (Properties of the loss function)

Assume that the loss function is non-negative, twice differentiable and strictly convex in for , and is injective in the sense that if for and , then . Moreover, we suppose that there exists (where we call the growth rate of the loss function ) such that

-

i)

For , the loss function is locally Lipschitz in that there exists a constant such that

-

ii)

Moreover, there exists constants and such that, for all and , we have

These conditions ensure that and grows like as and respectively.

Note that the cross-entropy loss satisifies the above conditions with , and also satisifies the conditions below:

Assumption BI (Loss functions arising from probabilistic models)

In addition to requiring all of Assumption B to hold, we additionally suppose that there exists a c.d.f for which