Augmented Reality and Mixed Reality Measurement Under Different Environments: A Survey on Head-Mounted Devices

Abstract

Augmented Reality (AR) and Mixed Reality (MR) have been two of the most explosive research topics in the last few years. Head-Mounted Devices (HMDs) are essential intermediums for using AR and MR technology, playing an important role in the research progress in these two areas. Behavioral research with users is one way of evaluating the technical progress and effectiveness of HMDs. In addition, AR and MR technology is dependent upon virtual interactions with the real environment. Thus, conditions in real environments can be a significant factor for AR and MR measurements with users. In this paper, we survey 87 environmental-related HMD papers with measurements from users, spanning over 32 years. We provide a thorough review of AR- and MR-related user experiments with HMDs under different environmental factors. Then, we summarize trends in this literature over time using a new classification method with four environmental factors, the presence or absence of user feedback in behavioral experiments, and ten main categories to subdivide these papers (e.g., domain and method of user assessment). We also categorize characteristics of the behavioral experiments, showing similarities and differences among papers.

Index Terms:

Mixed Reality, Augmented Reality, Head-Mounted Device, environmental factor, metaverse

©2022 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. DOI: 10.1109/TIM.2022.3218303

I Introduction

Currently there is widespread and increasing interest in using virtual worlds and virtual objects for entertainment, education, training, and research applications. This includes the metaverse, a single fully-connected virtual universe. The most well known and widely used technologies for virtual environments are Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). In AR and MR specifically, the research focus is on the integration of virtual objects with real environments across a variety of applications and aspects of daily human life.

Because of the wide and growing number of potential applications, it is likely that AR and MR will have greatly increased market value in the near future. According to the Augmented Reality & Mixed Reality Market Trend article in ReportLinker [1], AR/VR demand has increased significantly due to COVID-19. Moreover, AR’s market value growth rate is expected to reach 83.3% from its current value, while the growth rate of MR is expected to be 41.8% from its current value, which means that these fields will have a high degree of development in the next few years. The Google Books Ngram Viewer [2] shows that the proportion of book mentions of AR began to increase significantly around 1990 and had grown by a factor of about 300 by 2019. Similarly, the proportion of mentions of MR began to increase significantly around 1994 and had grown by a factor of approximately 46 by 2019.

In recent years, industry and academic researchers have developed numerous AR, VR, and MR Head-Mounted Devices (HMDs), which combine virtual information with information in real-world environments. A user wearing an HMD enters a virtual or real-virtual hybrid world, enabling interactions that are not generally possible in the physical world alone. This capability has led to the use of HMDs in many different fields to introduce and develop new interactive methods. Example applications include entertainment/gaming (e.g., [3]), training (e.g., [4]), education (e.g., [5]), healthcare (e.g., [6]), and design (e.g., [7]).

A large body of existing research and development on HMDs focuses on two primary goals: making users feel at ease in virtual environments and making the devices affordable. Particularly for the first goal, assessment of human users wearing HMDs is indispensable to developing the devices and their corresponding software. Similarly, researchers in the various application fields described above (entertainment, education, etc.) rely on human studies to guide the development of virtual object display techniques and user interaction methods. In this survey paper, we summarize the literature for research with HMDs and user measurement using different categories. Our main target was papers assessing AR and/or MR HMDs, but because many measurement papers use VR HMDs or use both VR and AR devices in the same experiments, we also included papers using VR HMDs.

This paper provides three new contributions over existing related literature surveys:

• Latest (as of early 2022) environmental-related and HMD-related measurement survey paper: We summarize the AR/MR HMD literature from 1990 to early 2022, including trends over time, and identify gaps for future research directions.

• Establish four environmental factor categories and ten new measurement categories: We have integrated the environmental factors commonly used in existing papers and divided them into four main categories. We further subdivided these papers into ten main categories of measurement.

• Unify different measurement experiment designs: We analyzed different user measurement papers and summarized the experiment designs, including participant attributes and experimental procedure.

II Background

Here we define and summarize the relevant background literature about the key elements addressed in this survey paper: AR, MR, virtual environments, and HMDs.

II-A Augmented Reality

In AR, users see virtual objects (usually displayed through a handheld device) appearing in the real-world environment. The objects seen by the users are computer-rendered, while the surrounding scene is real. Common examples are Niantic’s Pokémon Go app and the IKEA mobile app.

The first concept related to AR appeared in 1901 in the novel The Master Key, written by Lyman Frank Baum, which describes a child who experimented with a wearable electronic display to change and overlay data onto a real-world environment. This concept was later implemented by researchers and has become increasingly popular. Many AR-related survey papers have been written to discuss existing AR capabilities, applications, and technological limitations. Moreover, according to [8], a description of the world’s first HMD using AR was published in 1960 [9].

The first and currently most cited AR-related survey paper was published in 1997 by Dr. Azuma [10], focusing on categories of applications for AR and registration problems. A subsequent (2001) highly cited survey paper [11] described methods of displaying AR applications such as "head-worn displays" (i.e., HMDs), handheld displays, and projection displays, as well as challenges for AR displays, such as outdoor environments and positional sensing. Then, in 2010, [8] published a survey of AR-related research that linked AR and MR using the concept of the reality-virtuality continuum. Similar to [11], this article summarized the display options and application examples for HMDs and also promising new technologies that have made rapid recent progress, such as optical see-through [12] and spatial displays.

II-B Mixed Reality

Similar to AR, MR also creates virtual objects in a real-world environment, using the environment in hybrid rendering methods to generate the objects. A current common example is the Microsoft HoloLens [13]. The most significant difference between AR and MR is that MR adds interaction between virtual items and the real environment. This difference allows the virtual objects in the MR environment to better simulate and correspond to the physical conditions of the real-world environment. Users can use their own hands to interact with virtual objects directly.

MR was first introduced in 1994 [14, 15], extending AR to see-through and monitor-based displays. These authors used a continuum to present the relation between the real environment, AR, Augmented Virtuality (AV), and the virtual environment. A more complete concept of MR, as an extension and mixing of AR and VR, was proposed in [16]. This paper included the concepts of space, objects, and users’ activity, which established an important foundation for future MR-related work. A further extension [17] introduced more detailed concepts and specific applications, as well as the possibility of collaboration (i.e., multiple simultaneous users) in MR. Collaboration in MR has since been widely used in research and applications (e.g., Microsoft Mesh: https://www.microsoft.com/en-us/mesh). Similarly, [18] presented collaborative MR works and their own calibration method.

II-C Virtual Environment

Virtual environments are widely used in various research fields such as computer science and psychology. In [27], the authors focused on distributed virtual environments, which incorporate Internet communication and protocols. Another group targeted potential uses of brain-computer interfaces in interactive virtual environments [28]. A recent survey paper on presence in virtual environments [29] suggests that the sense of presence has a positive correlation with authenticity [30].

In addition to review papers, many individual papers describe research on users in virtual environments. For example, [31] discussed the display system related to the virtual environment, providing an HMD prototype of the virtual environment display system, including hand position tracking sensors.

Later, [32] introduced interaction techniques in the virtual environment, including users’ movement, selection techniques, manipulation (position/rotation), and scaling methods. Although this paper was published in 1995, their implementation was presciently close to technology in 2022. In a different approach, [33] mainly focused on cameras (rotation and zooming) for image-based virtual environments using modeling and rendering.

To evaluate interaction techniques in virtual environments, [34] proposed a testbed evaluation method that provided multiple performance metrics for interactions, including object selection and manipulation as well as travel. Lastly, in [35], Immersive Virtual Environment Technology (IVET) used virtual avatars for research in social psychology such as self and group identity.

II-D Head-Mounted Devices

As described earlier, one of the most widely known tools for using AR, VR, and MR is the HMD, a helmet-shaped device that can move with the user and has screens in front of one or both eyes to display objects in the virtual environment. As of early 2022, the most commonly used HMDs were the NVIS nVisor [36], Google Glass [37], HTC Vive [38], Microsoft HoloLens [13], Oculus Rift [39], and Oculus Quest [3], pictured in Figure 1.

One category of HMD is the Optical See-Through Head-Mounted Device (OST-HMD). This technology uses optical methods to reflect virtual objects and allows the real-world objects to pass through the lens in front of the user’s eyes to achieve the appearance of virtual objects and real-world objects together in the same space. OST-HMDs are particularly useful for AR and MR.

In 1968, Dr. Sutherland published one of the earliest papers [40] regarding a complete design of an HMD. This paper introduced the display system for the HMD and the head position sensor for tracking. Later work [41] described hardware architecture for AR devices, including OST-HMD, virtual retinal system HMD, and video see-through HMD. In a comparison of user navigation performance, [42] found that performance was better with a desktop display than an HMD, although most users were satisfied with the HMD.

There have also been several survey papers reviewing different research areas using HMDs, usually associated with AR and VR. Some review papers have focused on HMD hardware. For example, [43] provided a survey of the history of HMD display technologies, such as Active-Matrix Liquid-Crystal-Displays (AM-LCDs), Ferroelectric Liquid Crystal on Silicon (FLCOS) [44], Organic Light Emitting Displays (OLEDs) [45], and Time Multiplex Optical Shutter (TMOS) [46].

There have also been comparisons among different HMDs in user experiments. In [47], the authors compared the distance estimation in multiple HMDs such as Oculus Rift, Nvis, and HTC Vive. Returning to HMD hardware, [48] published a survey paper on state-of-the-art OST-HMD-related techniques for AR and MR, such as Microsoft HoloLens and HoloLens 2 [13]. This survey focused on visual coherence (i.e., blending of virtual and real content) in OST-HMDs in AR and MR research areas. The authors also discussed existing challenges among OST-HMD-related papers, divided into three key areas: spatial realism, temporal realism, and visual realism.

There are a variety of examples of specific applications for HMDs. For example, [49] discussed the social acceptability of disabled/non-disabled people wearing HMDs. [50] analyzed the perception of view transition for dense multi-view content when users are wearing HMDs and transitioning their views. In [51], the authors compared four different kinds of HMDs with conventional optical low-vision aids. Then, [52] used HMDs to investigate the causal agents of head trauma in athletes. Also, in [53], the authors used HMDs to compare user preferences for different virtual navigation instructions that integrate with the real environment in the MR world. Lastly, [54] built a custom HMD to evaluate performance and eye fatigue for context and focal switching in AR.

The above background knowledge demonstrates how interconnected the topics of AR, MR, virtual environments, and HMDs are. Also, many measurement-related papers use the tools mentioned in these topics for experiments. Therefore, we aim to integrate these topics, and organize and discuss papers that use HMDs and environmental factors as the primary measurement goals.

III Literature Review

To focus our literature review, we searched for the terms "HMD" with "measurement" and "environmental factors" in various combinations in ACM Digital Library, IEEE Xplore, SPIE Digital Library, Springer Link, and other libraries. We also used Google Scholar to find additional papers published on other platforms or otherwise missed. After analyzing the content of papers found using the above keywords, we used our own defined paper categories to add keywords in advanced searches, for example "HMD signal measurement in environment" or "HMD comfort assessment in virtual environment."

We targeted measurement papers from 1990 to early 2022 and required that included papers contain three of the following four types of information: environmental factors, participant information (e.g., age distribution, male/female ratio), data collection method, and the type of HMD(s) used in the experiment.
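The inclusion rule above can be sketched as a simple screening function. This is a minimal illustration, not the procedure actually used for this survey: the field names, the `CandidatePaper` record, and the reading of "three of the following four" as "at least three" are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical labels for the four information types named in the text.
REQUIRED_INFO = frozenset({
    "environmental_factors",
    "participant_info",
    "data_collection_method",
    "hmd_type",
})


@dataclass
class CandidatePaper:
    """Hypothetical record for one paper found during the search."""
    title: str
    year: int
    reported: frozenset  # which of the REQUIRED_INFO types the paper reports


def is_included(paper, first_year=1990, last_year=2022, min_reported=3):
    """Return True if the paper is in the year window and reports enough
    of the four information types (assumed here: at least three)."""
    in_window = first_year <= paper.year <= last_year
    n_reported = len(paper.reported & REQUIRED_INFO)
    return in_window and n_reported >= min_reported
```

Under these assumptions, a 2015 paper that reports environmental factors, participant information, and the HMD type passes the screen, while one that reports only two of the four types does not.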

We also collected other information from these papers, such as measurement method, experimental procedure, data processing method, and challenges/limitations encountered, and organized them in the following sections. Categorizing and summarizing these papers by their methodology allows us to identify gaps in the literature, and inform methods for future experiments.

IV Categorization: Environmental Factors, User or Device Measurements, and Participants

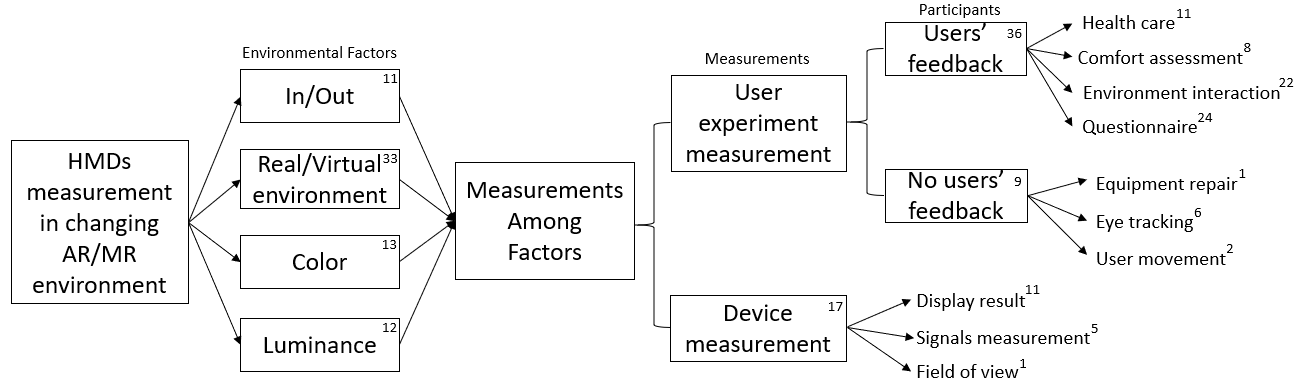

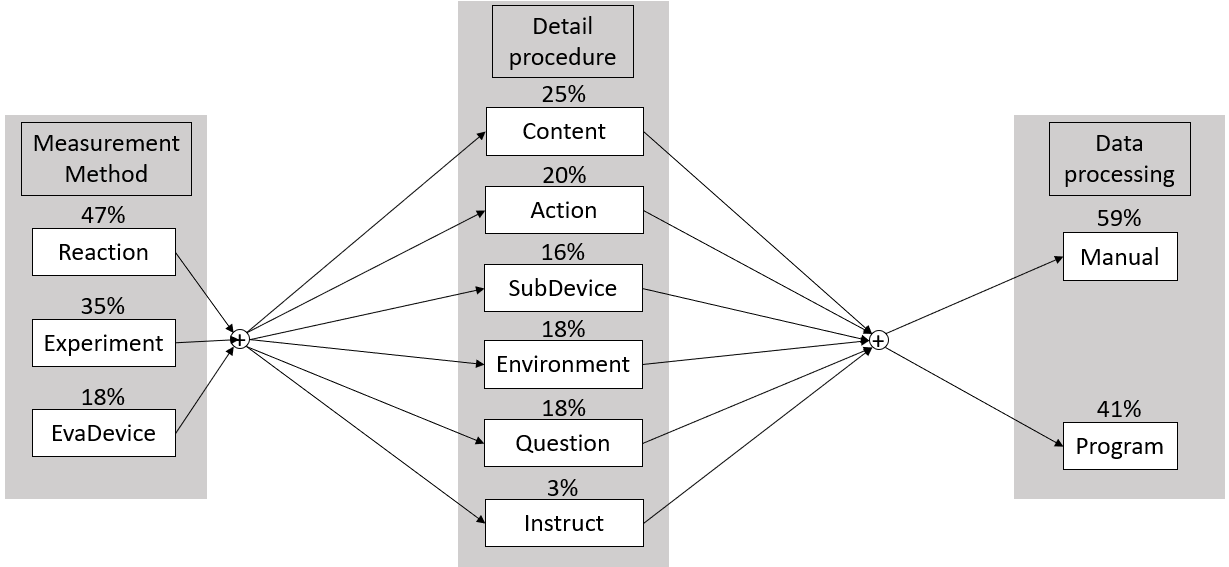

In this section, we introduce our method of categorizing the relevant papers. This is visualized in the tree diagram in Figure 2. In this diagram, the number of measurement-related papers assigned to each category appears in the upper right corner of that category (some papers may be classified in more than one category). First, we divide papers into four categories according to the main environmental factors manipulated and/or assessed in the papers, which are: indoor environment vs. outdoor environment (In/Out) [55, 56, 57, 58], virtual environment vs. virtual/real environment (Real/Virtual environment) [59, 60], color difference [61, 62], and luminance difference [63, 64]. (The reference list for each environmental factor is given in Appendix A.) We provide more detailed information about these four environmental factor categories in Section V.

We further divided the related papers according to the type of measurement conducted: user experiment measurement or device measurement [65, 66], as shown in the right half of Figure 2. The primary classification basis for these two categories is whether the researcher assesses some aspect of human experience or behavior (e.g., action patterns, reactions, or feedback), or assesses only aspects of the HMD.

User experiment measurement studies may be divided into two additional categories: users’ feedback and no users’ feedback. This classification is based on whether the experiment requires any type of response or input behavior from participants. Examples of users’ feedback include asking participants to report a target’s color [61, 67], to fill in a questionnaire after the experiment is over [68, 6], or automatically logged responses, such as time spent on achieving pre-set goals [69, 53], or accuracy in putting virtual objects in the correct position in the environment [70, 71]. Studies that incorporate users’ responses are the most common category among the types of measurement, likely because capturing direct feedback from users is a quick and easy way to obtain experimental data. Frequently used cases in this category are: health care (participants report their symptoms after using HMDs) [72, 73], comfort assessment (participants report whether they feel uncomfortable after using HMDs in different environments) [74, 75], environment interaction (participants provide feedback on changes in the environment) [76, 77], and questionnaire (the participants are asked to fill in questionnaires before or after the experiment) [49, 78].

In the less common category of no users’ feedback, experimenters observe participants’ actions externally and record them as experimental data. Instead of asking for specific feedback or responses from participants, experimenters record (manually or with external measurement devices) participants’ actions for comparison. Common use cases include equipment repair (participants repair some instruments while experimenters observe their actions) [79], eye tracking (experimenters record eye movements with a device and aggregate them into data) [80, 81], and user movement (experimenters observe participants’ movement as they complete a specific goal) [82, 83]. Although there are relatively few published papers at present in the category of using HMDs for equipment repair, this topic has boomed in recent years, with technologists trying to use MR or AR devices (such as Microsoft HoloLens) to accomplish this goal. We placed equipment repair into a separate category, as we expect this area to continue to grow.

For studies that fall into the category of device (as opposed to user) measurement, the primary goal is typically to test the technical performance of HMDs, such as performance in different environments, HMDs’ display quality, or the efficacy of HMD systems. Common themes of these papers are: display result [84, 85], signals measurement [86, 87], and field of view (FOV) measurement [6]. Although these studies may require human participants to manipulate the devices, the main goal and focus of assessment is on quantifying technical aspects of HMDs. (The reference list for each category is given in Appendix B.)
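The two decisions that define the measurement branches described above can be written as a small decision function. This is only a sketch of the classification logic as stated in the text; the enum and argument names are hypothetical, and it returns a single branch per study even though some papers may be classified in more than one category.

```python
from enum import Enum


class Measurement(Enum):
    """Hypothetical labels for the three measurement branches."""
    USER_FEEDBACK = "user experiment, users' feedback"
    USER_NO_FEEDBACK = "user experiment, no users' feedback"
    DEVICE = "device measurement"


def classify_measurement(assesses_human_behavior, requires_participant_response):
    """Classify one study by the two questions used in the text.

    assesses_human_behavior: does the study assess some aspect of human
        experience or behavior, rather than only aspects of the HMD?
    requires_participant_response: does the experiment require any type of
        response or input behavior from participants?
    """
    if not assesses_human_behavior:
        return Measurement.DEVICE
    if requires_participant_response:
        return Measurement.USER_FEEDBACK
    return Measurement.USER_NO_FEEDBACK
```

For example, a study in which experimenters only observe participants repairing equipment would map to `Measurement.USER_NO_FEEDBACK`, while a display-quality test that merely needs someone to operate the device would map to `Measurement.DEVICE`.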

In the next sections, we provide more detail on the papers included in the categories described above. Section V discusses the environmental factors on the left half of Figure 2, Section VI covers participants’ composition, data collection methods, and the mechanism (HMDs) used in related papers, and Section VII covers the detailed structure of the experiment, corresponding to the right half of Figure 2. Lastly, we provide general discussion, future directions and conclusions in Sections VIII and IX.

V Environmental Factors

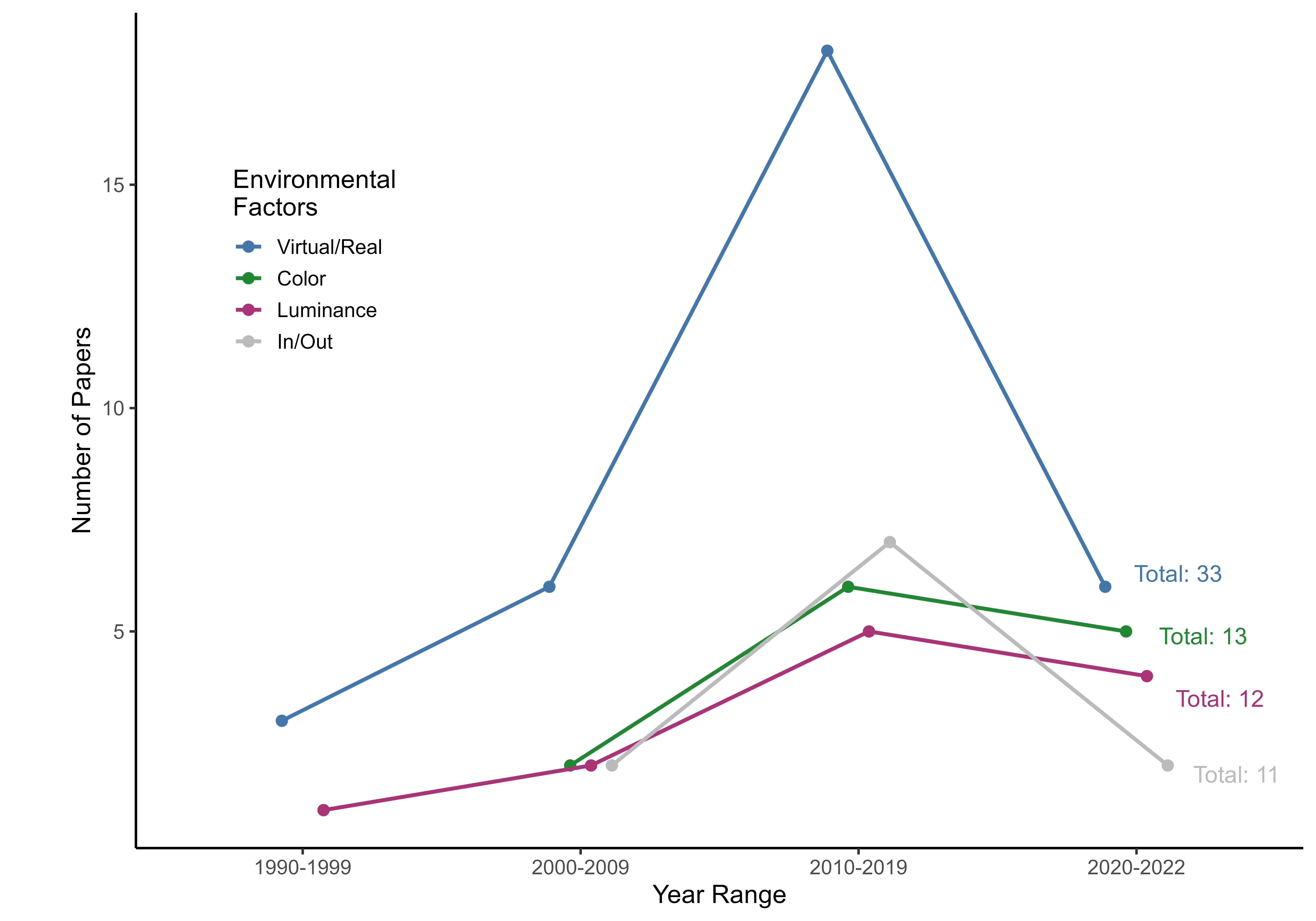

In this section, we discuss some common environmental factors, such as sunlight and surrounding color difference between indoor and outdoor, that may affect the results of HMD measurements, and their prevalence in the surveyed literature, illustrated in Figures 3a and 3b.

V-A Indoor Environment vs. Outdoor Environment

One of the most frequently mentioned environmental factors is the difference between indoor and outdoor environments. For example, [77] mentions that most OST-HMDs add AR light so they do not have to compete against real-world lighting. However, when the OST-HMDs are exposed to direct sunlight in outdoor environments, they lose a significant amount of contrast. The authors recommend that beyond reducing environment light, future OST-HMD works need to consider simulating and making adjustments to the intense environment lighting outdoors. In a comparison of egocentric distance judgments with two different HMDs using indoor and outdoor virtual environments, [88] found greater underestimation in outdoor than indoor environments (see also [47]). In addition, [89] describes how the typical settings of indoor and outdoor environments should be considered; for example, hallways are typical for indoor settings, while lawns are typical for outdoor settings. In [90], the experimenters tested their HMD-based system in both indoor and outdoor environments to achieve complete 3-D eye-tracking accuracy. Lastly, [91] used a VR device to simulate the MR world and compared the usability of ten different navigation instruction designs.

While the differences between indoor and outdoor environments are frequently discussed in the literature, Figure 3a shows that in the first two time intervals this factor was actually assessed in measurement studies relatively infrequently. Indoor experimental settings are often more convenient and afford more experimental control, especially over variables like luminance. HMD measurement experiments that compare outdoor and indoor environments faced significant challenges in the past. However, since the application of HMDs to the outdoors is a critical challenge, this factor became more prevalent after 2010, and we expect the same trend to continue in future studies.

V-B Virtual Environment vs. Virtual/Real Environment

Another important factor for measurement studies is the difference between two virtual environments or between virtual and real environments. When the environment changes, the virtual objects rendered in front of the user may be displayed differently through HMDs. Because the rendering effects differ, experimenters can repeat the same measurement across different environments to detect differences in the results.

In [92], the authors developed a Projective Head Mounted Display (PHMD) to build a compound environment of the workstation and surrounding virtual world. In addition, [93, 94] designed an experiment under real and virtual room conditions. The result indicated that the mock HMD in the real-world caused a reliable effect of underestimation. Then, [95] proposed an idea of how to compensate for the difference in space perception between the real-world and the VR environment by asking users to reach the farthest position in each environment.

Figure 3a shows that a large proportion of included papers compare either virtual vs. virtual environment or virtual vs. real environment. One reason to compare two virtual environments is to ensure that results (e.g., differences between two different HMDs) generalize beyond a single virtual environment. When virtual vs. real environments are compared, the main goal is usually to observe whether participants produce different responses and feedback to the same type of display in these two environments. Such experiments help researchers understand that the human response to the real environment may not be exactly the same as the response to the virtual environment, and that more attention and understanding are needed when designing HMD-related work.

V-C Color Difference

Similarly, recent papers also consider the difference, or lack thereof, between the color of virtual objects and the color of the environment. Rendering virtual objects with colors close to the color of the environment may make it difficult for participants to see the target objects created by HMDs. This could also impair judgments of distance.

The authors of [96] noted frequent errors in the AR application of OST-HMDs between the input and output colors on display, and applied a complete color reproduction method to solve this problem. In [86], the experimenters used different colors to indicate different target positions for the participants. In addition, [97] introduced an extended color discrimination model to address the problem that the combination of the color displayed by the HMD and the color of the external environment can lead to a false perception. By using optical and video see-through HMDs as evaluation devices in the MR world, [61] examined the effect of color parameters on users’ perceptions when using different types of MR devices, showing that users often overestimated color in MR environments. Finally, in recent years, high-fidelity color in MR environments has been an essential task for every OST-HMD. [67] proposed a rendering method to enhance the color contrast between virtual objects and the background of the real environment, which can raise color fidelity in MR environments and provide a better visual experience.

Figure 3a shows that older papers exploring the effects of color differences are rare, with more appearing recently. This may be explained by the relatively low resolution of older-version HMDs. The increased resolution of new versions of HMDs (for example, the display resolution of HoloLens 2 is 2048×1080 per eye, and the display resolution of Oculus Quest is 1440×1600 per eye) may mean that users are more sensitive to the colors displayed in HMDs. In future measurement-related research in AR/MR, the possible impact of virtual color with the real-world environment is likely to be increasingly important.

V-D Luminance Difference

The luminance of the environment, both alone and in combination with color, is also an important factor for the perception of virtual objects. Here, luminance indicates the intensity of light in the environment. For example, a dark red background will make bright green easier to notice, or excessively high luminance may prevent users from seeing the target object clearly in a dark environment.

In [98], the authors designed a series of experiments to examine the relation between luminance and distance judgments in HMDs, such as finding a certain threshold of the luminance that affects the result. In addition to luminance affecting distance judgment, [74] proposed that significant increases in luminance while viewing HMDs can cause visual discomfort. This paper evaluated the luminance change and maximum luminance that will cause discomfort to the users. Recent research has focused specifically on environment luminance affecting OST-HMDs, which use additive light models, meaning they lose a significant amount of contrast in high luminance environments (e.g., outdoor on a sunny day with no clouds). In [77], the authors designed a measurement to evaluate perceptual challenges with OST-HMDs and made some suggestions for future improvements.
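The contrast loss of additive-light displays in bright surroundings can be illustrated with a simplified calculation. The sketch below assumes an idealized model in which the light reaching the eye is the display light added to the background light passing through the optics; the function name, the `transmittance` parameter, and the luminance values in the usage note are illustrative assumptions and are not taken from any of the surveyed papers.

```python
def additive_contrast_ratio(display_luminance, background_luminance, transmittance=1.0):
    """Contrast ratio of a virtual pixel against the see-through background.

    Simplified additive model (an assumption of this sketch): the eye receives
    the background light that passes through the optics plus the light added
    by the display. All luminances are in cd/m^2.
    """
    seen_background = transmittance * background_luminance
    if seen_background <= 0:
        raise ValueError("background luminance seen through the optics must be positive")
    return (seen_background + display_luminance) / seen_background


# Illustrative values only: the same display output against a dim and a bright background.
dim_background_contrast = additive_contrast_ratio(500.0, 100.0)
bright_background_contrast = additive_contrast_ratio(500.0, 10000.0)
```

With these example numbers, the ratio is 6 against the dim background but falls close to 1 against the bright one, which is the sense in which an additive display loses contrast in high-luminance environments. Lowering `transmittance` (for example, with a tinted visor) raises the ratio in this model.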

As shown in Figure 3a, studies have assessed luminance differences as a factor throughout the time periods surveyed, with what appears to be a small increase over time. Common effects of different luminances are changing visibility of virtual objects for users, and high-luminance environments causing user discomfort. As mentioned above, indoor and outdoor luminances are often substantially different. Some related papers used indoor high luminance to simulate outdoor luminance while controlling for other differences between indoor and outdoor environments. Others have recently tried to make HMDs usable in both indoor and outdoor environments.

We also summarize the number of papers for each environmental factor over time and the specific topic areas for each environmental factor in Figure 3b. This figure again shows the relatively high proportion of papers in the Virtual/Real category, across multiple topic areas. By topic area, environmental interaction and questionnaires are frequently covered in papers assessing all four environmental factors. In contrast, the topic area of comfort assessment has only three papers, two of which focus on the luminance (magenta bar) conditions that cause participants to experience discomfort while wearing HMDs. Similarly, eye tracking has only a single paper for each of three environmental factors (color, luminance, and in/out). Because the attention-drawing factors used in these papers are often changes in color or luminance, these are common environmental factors when designing eye-tracking measurements.

VI Existing experiment designs among measurements

HMD-related research using AR, VR, and MR uses a variety of experimental designs, depending on the research question, including measurements to test the machine’s performance or to assess human response to specific aspects of the virtual environment. In this section, we summarize the experimental design of the included papers according to three frequent experimental factors: participants, data collection, and mechanism.

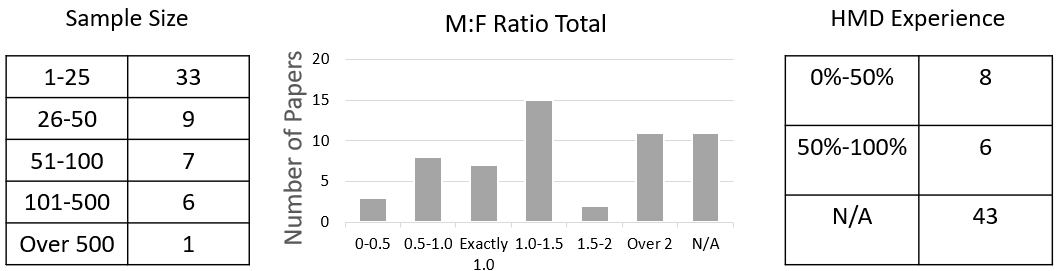

VI-A Participants

In this subsection, we examine the relationships among the number of participants recruited for measurement studies, the composition of participant samples, and the category of the studies. For example, many studies are conducted at universities [99], meaning that participants are largely students who are less than 30 years old. We explore the distribution of participant ages among different categories of measurement papers. Similarly, men generally outnumber women among students in computer science and engineering. There are potentially gender differences in the results of the experiments, so we also compare the male and female ratios of participant samples in the surveyed papers. Previous HMD experience may also potentially affect results; we organized papers by whether they assessed HMD experience in their participants and if so, the distribution of experience.

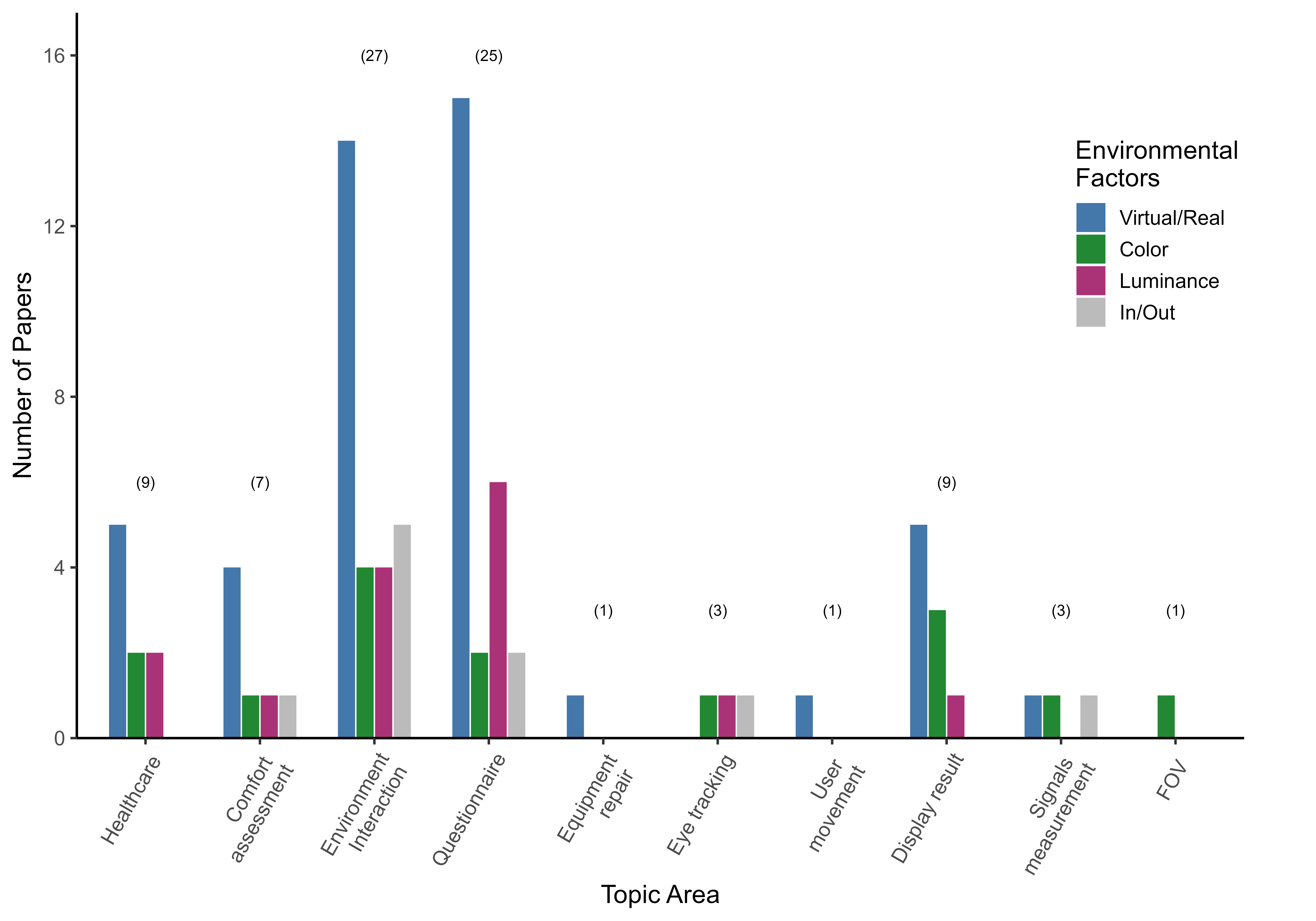

The left panel of Figure 4 shows the “sample size” or total number of participants recruited in each paper. Note that in the majority of papers the sample size tends to be limited, between N = 1 and 25. Many factors may affect the number of participants, such as: aims of the study (e.g., qualitative usability vs. quantitative performance), study design (e.g., whether or not there are repeated measures), study duration and resources needed to run the study, and whether participants with particular expertise are required. Nevertheless, in general, larger sample sizes are desirable for quantitative research using inferential statistics.

The Male-to-Female Ratio Total chart in the middle panel of Figure 4 does not support the above assumption that participant samples in most experiments are overwhelmingly male. Although there may be specific areas of research, such as sports-related studies of male athletes [52], that include only male participants, and some papers do not describe the ratio of men to women in detail, most of the measurement-related papers attempted to roughly balance the ratio of men to women. To ensure that experimental results generalize across genders, it is important to recruit both male and female participants. However, it can be challenging to achieve a male-to-female ratio of exactly 1. We consider an M:F ratio between 0.5 and 1.5 to be roughly balanced; Figure 4 shows that most papers fall within this guideline.
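The balance guideline above amounts to a simple check on participant counts. The following is a minimal sketch; the function names are hypothetical, and treating a sample with no female participants as an infinite ratio (and therefore unbalanced) is an assumption of this example rather than something stated in the surveyed papers.

```python
def mf_ratio(n_male, n_female):
    """Male-to-female ratio of a participant sample.

    Assumption of this sketch: a sample with no female participants
    is given an infinite ratio.
    """
    if n_male < 0 or n_female < 0:
        raise ValueError("participant counts must be non-negative")
    if n_female == 0:
        return float("inf")
    return n_male / n_female


def is_roughly_balanced(n_male, n_female, low=0.5, high=1.5):
    """Apply the guideline from the text: an M:F ratio between 0.5 and 1.5."""
    return low <= mf_ratio(n_male, n_female) <= high
```

For instance, a sample of 12 men and 10 women has a ratio of 1.2 and counts as roughly balanced, whereas 20 men and 5 women gives a ratio of 4 and does not.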

Prior experience with HMDs is also an important participant variable. As shown in the right panel of Figure 4, most papers did not report participant HMD experience (marked as “N/A”). The small number of papers (14 out of 57) that did report participants’ prior experience with HMDs were roughly balanced as to whether the majority of their sample had experience with HMDs. The level of HMD experience that researchers should recruit for depends on the category of the measurement experiment, since participants’ experience with HMDs may affect both the progress and the outcomes of the experiment. The most common case is in the category of health care, where recruiting experienced participants enables experiments to be performed more quickly and accurately because no additional training is required.

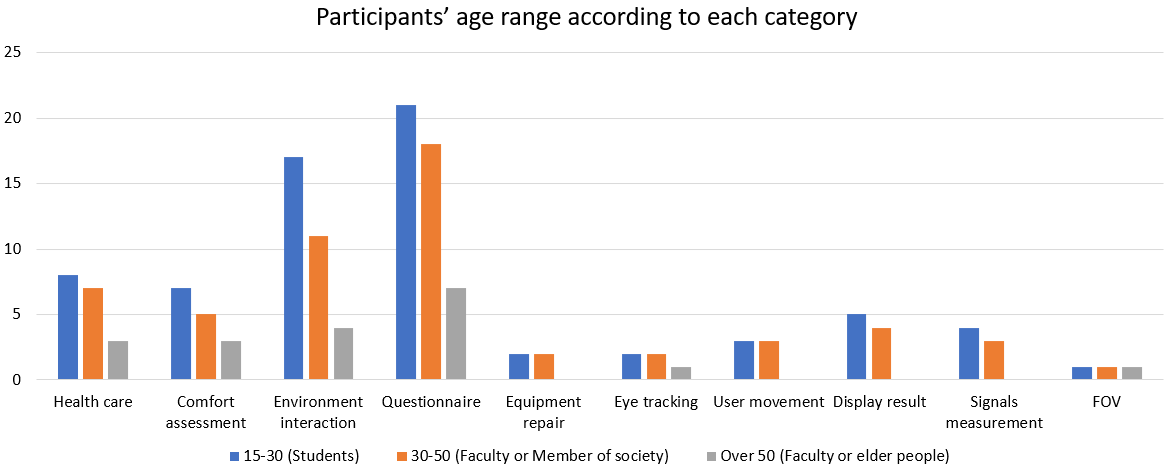

As [99] proposed, since most academic papers are written in universities, the age distribution of most participants tends to skew younger, corresponding to the typical age of university students. Figure 5 depicts the age range of participants in papers from different topic areas. Most papers provided an age range of participants, but only a small number of papers reported more detail such as the mean age or the source of the participant sample (e.g., university students). In support of [99], we see that the share of participants aged 15 to 30 is relatively high in many topic areas, likely because recruiting students in universities is convenient and inexpensive when the experiment requires many participants. Also, experiments with novel HMD technology may be more likely to attract students’ attention and make them more willing to participate. We do not see evidence in Figure 5 that the age distribution of participants is related to the paper category; in every category, most researchers recruited 15- to 30-year-old students as participants. However, participant age can often have an impact on experimental results. Much like balancing male-to-female ratios, it is important to assess a range of participant ages in order to be able to generalize results. Furthermore, there may be research applications where older users who are relatively unfamiliar with HMDs are the relevant population. Therefore, we recommend that researchers report detailed information about the ages of their participants, and also consider recruiting beyond the typically convenient population of university students.

VI-B Data Collection

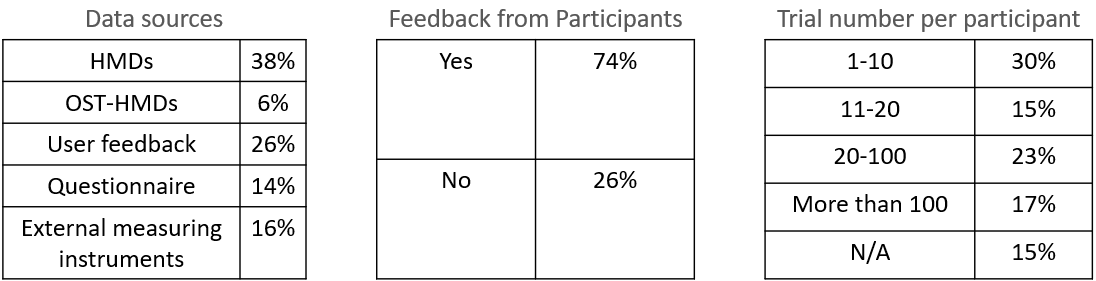

The method of data collection is a critical aspect of any measurement experiment, and there are a number of potential data sources for studies with HMDs, including sensor data (HMDs or external measuring instruments), user responses, and questionnaires. We collected the data acquisition methods in measurement papers related to HMDs using AR and MR and organized them in this section, and then summarized this information in Figure 6.

Although HMDs are the most common data source in the surveyed papers, measurements often require participant input, so many papers also use user feedback as a data source. User feedback is usually divided into verbal feedback during the experiment, such as verbally telling the experimenter the distance to a target, and written feedback, such as completing questionnaires after the test. Since questionnaires were commonly used in measurement-related papers, we created a separate questionnaire category in Figure 6 (Left). Other standard data sources include external sensors that record data in HMDs or computer software that connects to HMDs to collect data; we collectively call these “External measuring instruments.” Furthermore, our survey indicated that many recent measurement papers used OST-HMDs as their main measurement data sources. Therefore, we created OST-HMDs as an additional category in Figure 6 (Left).

In addition, Figure 6 (Center) shows that the majority of papers directly assessed feedback from participants, which can be used to improve the experiment process, time length, or other environmental factors. About half of the papers had 11 trials or more per participant, as shown in Figure 6 (Right). Note that 15% of papers did not report the number of trials for each participant, marked as “N/A.” Only 17% of papers had 100 repeated trials per participant; this may be because of the possibility of fatigue and/or discomfort with extended use of an HMD.

VI-C Mechanism

As of early 2022, there are many different types of HMDs on the market, including AR, VR, and MR HMDs. Different HMDs use different computer platforms and sensors, and experimenters need to design corresponding measurement experiments according to the capabilities of the device. In this section, we summarize the assessment of different HMDs across time and various topic areas. Since the number of HMDs for AR and MR is relatively small, we also include papers using VR HMDs in this section.

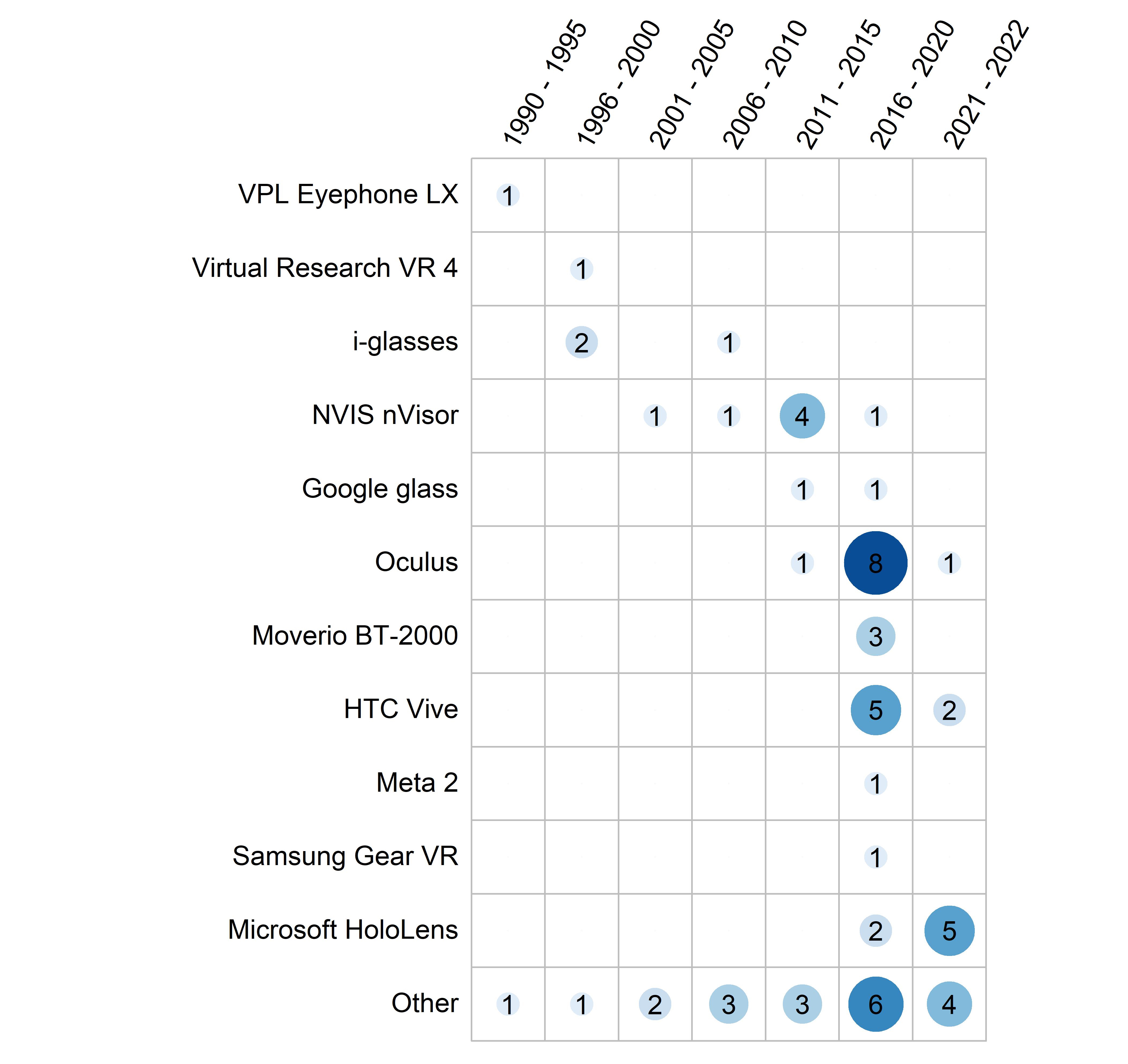

Figure 7a shows that the most widely used HMDs differ depending on the year. Earlier papers used many different types of HMDs, including HMDs built by the researchers themselves. From about 2004, authors of measurement-related papers began to use different versions of the NVIS nVisor [36], one of the most common HMDs of that time. After about 2014, other modern and well-known HMDs, such as Google Glass [37], Oculus [39], and HTC Vive [38], began to appear and were widely used by researchers. In addition to general HMDs, OST-HMDs have been frequently used in recent years; such devices include the Microsoft HoloLens 1st and 2nd generation [13], as shown in the rightmost columns of Figure 7a. Researchers’ widespread use of OST-HMDs means that MR has begun to flourish, and more information close to reality can be obtained. In addition, although not shown in detail in this figure, a newer Oculus device, the Oculus Quest, has been widely used in research in recent years. The Oculus Quest is wireless and thus will be more helpful to future research. Since a large number of papers use custom HMDs or rarely used HMDs, we classify them in the “Other” category. For example, in [100], the authors used swimming goggles, video cameras, transparent hot mirrors, and eye trackers to build a custom HMD. Many papers focus on technological advances in HMDs, and the use of custom HMDs enables researchers to break away from existing HMDs to achieve their goals. Therefore, the proportion of the category “Other” in Figure 7a and Figure 7b is relatively high.

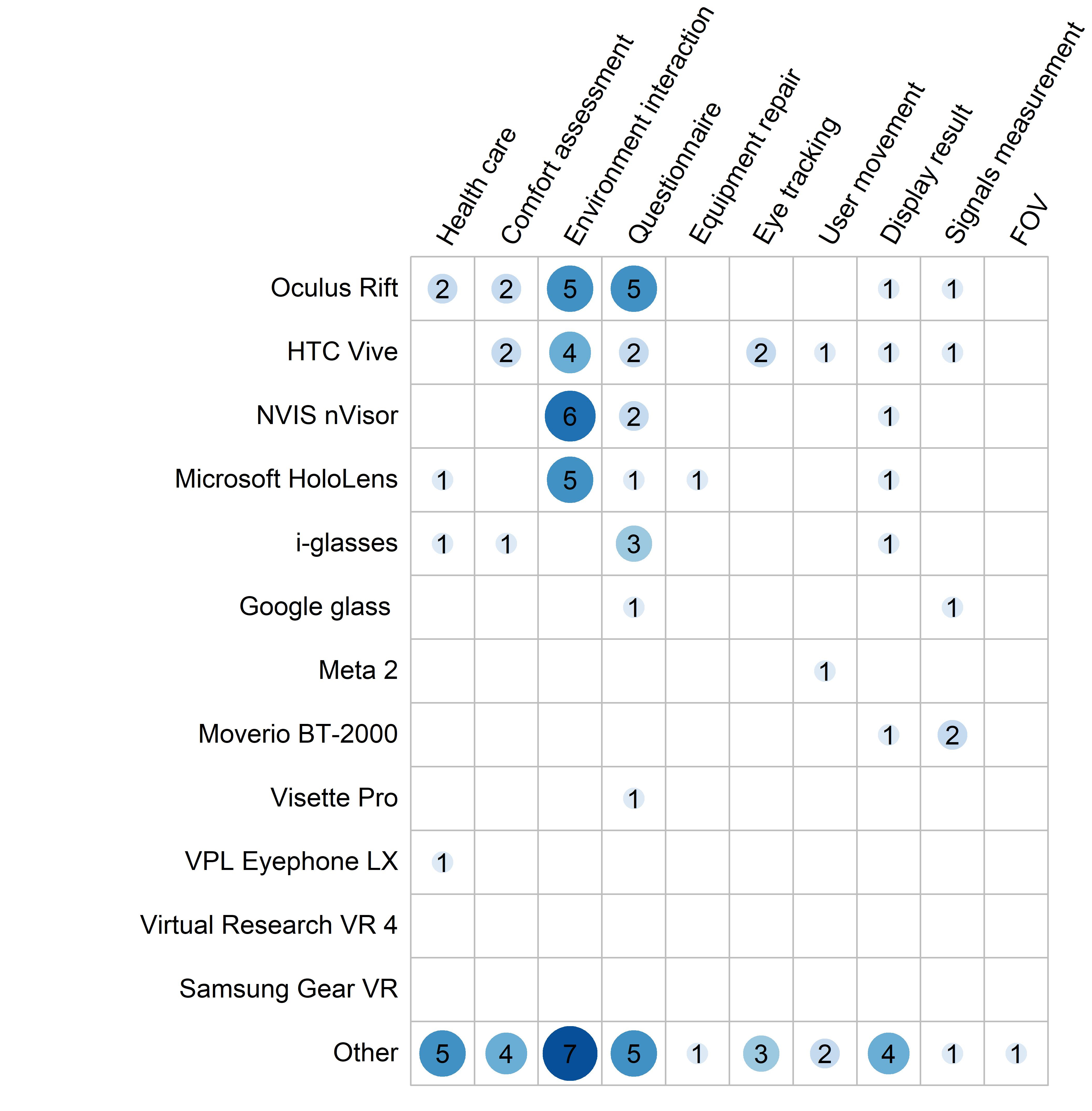

Figure 7b shows the HMDs used across different measurement categories. There is no clear relationship between category and device type. Experiments of the same type can often be conducted with several HMDs; for example, [47] in 2018 used several different HMDs in the topic of distance underestimation, including Oculus Rift, NVIS HMDs, and HTC Vive. We again see a large number of devices falling in the “Other” category in this figure. As described above, many of these are custom HMDs, which were often designed only for a specific research field. For example, in [51] in 2004, the target research area was health care, so the HMDs had to be selected to match medical needs.

VII Experimental Procedure

In this section, we summarize frequently used experimental procedures across the literature (depicted in Figure 8), including:

- Measurement method: classifies papers based on whether there are human participants and whether they provide data during or after the test.
- Detailed procedure: categorizes the design details of the experiment into content change, participants’ action, device substitution, environment change, questionnaire, and experimenter instruction.
- Data processing method: describes how the experimenter processes the data after collecting it, either manually or by computer program calculation.

In Figure 8, we first divide the measurement methods into two main categories: device evaluation (EvaDevice) ([84, 101]) and participants’ reaction. We further divide the category of participants’ reaction into Participants’ reaction during test (Reaction) ([93, 83]) and Participants’ user experiment after test (Experiment) ([102, 103]). Most measurement-related papers recruit participants to obtain user feedback. Sometimes participants’ reactions are collected during the experiment, and sometimes participant feedback is recorded after completing the experiment. Some measurement papers do not recruit participants but only conduct tests to compare differences between HMDs under certain factors, so we call this category device evaluation. Dividing papers into these three categories allows researchers to quickly understand how most measurement-related papers design their experimental procedures and to draw on these related works’ methods in their own designs.

In addition, the center of Figure 8 shows the six main categories of detailed procedure we encountered in the surveyed literature. These categories include: content change (Content; [104, 105, 106, 107]), participants’ action (Action; [108, 109]), device substitution (SubDevice; [110, 111, 112]), environment change (Environment; [55, 97]), questionnaire (Question; [113, 114]), and experimenter instruction (Instruct; [89, 6]). First, content change refers to changing the settings of items in the test environment, such as the color, luminance, or size of virtual/real objects. This procedure is the most common (25%) in measurement-related papers, perhaps because modifying the shape or attributes of an object is an intuitive and accessible change. Second, participants’ action refers to participants taking corresponding actions under the experimenter’s preset settings. For example, the participant moves the target object from one location to another under the preset settings of the experiment. This procedure is also very common (20%) among measurement-related papers. Third, the device substitution category refers to experiments that test and compare the results produced by different HMDs in the same situation. These results can inform the selection of appropriate HMDs to be used in future measurement-related experiments or implementations. Fourth, environment change includes papers that manipulate the test environment in which the assessment occurs during the experiment. As described in the Virtual Environment vs. Virtual/Real Environment subsection (V-B), the impact of the environment on participants and HMDs can be substantial. Fifth, questionnaire is one of the most commonly used methods for obtaining user feedback. Questionnaires are typically administered after the experiment, especially in the health care and comfort assessment categories. However, a small number of experiments also use questionnaires to obtain user information and user expectations before the experiment starts. Questionnaire information can be extremely useful for assessing individual differences in participants as well as usability and preferences. Finally, a rare (3%) but important procedure used in HMD measurement-related papers is experimenter instruction. Similar to the Action category, the experimenters instruct the participant to perform specific actions during the experiment, but in this procedure the experimenter provides different instructions according to the reactions of each participant. This method is reasonably practical in measurement, and changing the direction of the experiment according to the situation can yield insights that could not otherwise be obtained.

On the right side of Figure 8, we divide the data processing method into two categories: manual observation (Manual) ([73, 61]) and computer program calculation (Program) ([115, 62]). These two methods represent how the experimenter processes the data obtained via the measurement method and detailed procedure mentioned above. Manual observation means that after obtaining the feedback data from the participant, the experimenter manually sorts and analyzes the data. A common approach is to manually code subjective feedback data from questionnaires. The advantage of this method is that in collation and analysis, the experimenter may uncover unanticipated patterns in participant responses. However, the disadvantage is that it takes more time to process the data. Computer program calculation refers to the use of computer programs to organize and output the results needed by the experimenter after the data is obtained. The advantage is that it can quickly organize all the information and produce easy-to-read results, such as graphs or tables. The disadvantage is that it is easy to overlook subtle information that is not the main goal of the experiment, which may lead to incomplete or misleading results. We found that papers that used the manual observation method are more common (59%) than papers that used computer program calculation (41%), perhaps because manual observation is more straightforward and can be implemented relatively quickly.
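To make the computer program calculation category more concrete, the following is a minimal sketch of how per-condition summary statistics might be computed from trial records. It is an illustration under our own assumptions rather than the pipeline of any surveyed paper: the record layout (a condition label paired with one numeric measurement) and the function name `summarize_by_condition` are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize_by_condition(trials):
    """Group (condition, value) records and summarize each group.

    Returns a dict mapping each condition to its trial count, mean,
    and sample standard deviation (0.0 when only one trial exists,
    an assumption made to avoid an undefined value).
    """
    grouped = defaultdict(list)
    for condition, value in trials:
        grouped[condition].append(float(value))

    summary = {}
    for condition, values in grouped.items():
        sd = stdev(values) if len(values) > 1 else 0.0
        summary[condition] = {"n": len(values), "mean": mean(values), "sd": sd}
    return summary


if __name__ == "__main__":
    # Hypothetical measurements, for illustration only.
    records = [("indoor", 4.0), ("indoor", 6.0), ("outdoor", 3.0)]
    for condition, stats in summarize_by_condition(records).items():
        print(condition, stats)
```

A summary of this kind is fast to produce, but, as noted above, it reports only what it was written to report; patterns outside the chosen statistics would still require manual inspection.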

VIII Challenges, Limitations, and Discussion

Researchers will face a variety of challenges in the future, such as insufficient machine technology or difficulty simulating the real environment fully in the experimental virtual environment. Note that achieving high realism is not necessarily beneficial for effective performance in simulation-based training for aviation and health care, despite strong user preferences for high visual fidelity [116]. Nevertheless, as time goes by, some of the challenges encountered in previous papers are likely to be solved by researchers and documented in future papers. Therefore, in this section, we introduce several common challenges and possible solutions in measurement experiments related to HMDs.

Several of the surveyed measurement papers discussed the challenges and limitations they encountered. In a paper on environmental changes, the authors of [55] noted that future work should consider whether the experiment could proceed as smoothly when switching environments, which shows that the aforementioned environmental factor of virtual environment vs. virtual/real environment is both essential and challenging. Furthermore, they suggested that using more accurate medical equipment in addition to a questionnaire would yield better measurements; this highlights the benefit of using multiple data sources. In [96], the authors discussed the limitations in the method and hardware they faced for their OST-HMD color calibration method, including both eye model issues and HMD model issues. For the eye model issues, due to technical limitations there was and is no technology to fully understand how the human retina absorbs sunlight and allows the human brain to perceive colors. Therefore, even an OST-HMD cannot fully simulate the response of the human retina. The HMD model issue refers to the limited display color range of the OST-HMD and the interference from environmental light that can cause display distortion.

Another limitation mentioned by [77] is that color contrast decreases when using an OST-HMD in high-brightness indoor or outdoor environments. These and other authors [62] also describe limitations due to the outbreak of COVID-19, such as difficulties recruiting large enough participant samples for sufficient experimental data. If future work can develop methods to perform HMD experiments remotely, this will be extremely beneficial if a similar situation occurs again. Perhaps related to technical limitations of current HMDs, such as weight and FOV, distances tend to be underestimated more outdoors than indoors. For example, [88] stated that distance underestimation in outdoor environments had not been fully explored since outdoor environments have numerous variables (e.g., overall scale, realism, and geometrical complexity). In mixed reality, the misperception of space may also depend on the distance. Using mixed reality, [117] evaluated far distance perception. They found both overestimation (at 25 to 200 meters) and underestimation (at 300 to 500 meters) of distances. To the best of our knowledge, use of HMDs for far distances, whether indoors or outdoors, is a research gap. It is also a technological limitation of current devices (e.g., the optimal distance for projecting AR content with a HoloLens 2 is 1.25 to 5 meters from the user [13]).
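Over- and underestimation are commonly expressed as the ratio of the judged distance to the actual distance. The sketch below illustrates that idea only; it is not the analysis used in [117] or [88]. The function names and the 5% tolerance band for calling a judgment "accurate" are hypothetical choices made for this example.

```python
def estimation_ratio(estimated_m: float, actual_m: float) -> float:
    """Return judged distance divided by actual distance (both in meters)."""
    if actual_m <= 0:
        raise ValueError("actual distance must be positive")
    return estimated_m / actual_m


def classify_estimate(estimated_m: float, actual_m: float,
                      tolerance: float = 0.05) -> str:
    """Label one distance judgment.

    Assumption: ratios within +/- tolerance of 1.0 count as accurate;
    the 5% default is illustrative, not a value from the literature.
    """
    ratio = estimation_ratio(estimated_m, actual_m)
    if ratio < 1.0 - tolerance:
        return "underestimation"
    if ratio > 1.0 + tolerance:
        return "overestimation"
    return "accurate"


if __name__ == "__main__":
    # Hypothetical judgments as (estimated, actual), for illustration only.
    for estimated, actual in [(8.0, 10.0), (120.0, 100.0), (10.2, 10.0)]:
        print(estimated, actual, classify_estimate(estimated, actual))
```

Using a ratio rather than an absolute error makes judgments at near and far distances comparable, which matters when the direction of the error changes with distance.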

The authors in [98] mention the limited FOV users experience when wearing an HMD, which makes it difficult to reproduce the experience of the real world in an HMD. If a primary goal of HMD development is to increase user comfort with the devices, an HMD that covers the full FOV will be a critical goal. Several authors [68, 118, 119] mention the problem that while HMDs may provide a greater sense of presence to users, they can also cause simulator sickness. A recent systematic literature review of more than 300 papers [120] found that a widely used measure of simulator sickness did not fully capture other comfort factors (e.g., eye strain and physical comfort). Therefore, a range of side effects should also be considered in the design of experiments to avoid fatigue, dizziness, and other discomforts that may interfere with experimental results. Many researchers are now trying to solve these problems.

As summarized above, many common challenges and limitations are due to the current technical capabilities of HMDs, with the majority of recent ones related to the environmental factors of color (display) and luminance (outdoor environment). Therefore, solving existing challenges can directly affect the development of future HMDs and bring better experiences to users.

IX Conclusion

IX-A Summary

In this paper, we have categorized a total of 87 papers using HMDs in AR, MR, and VR. The categories included environmental factors and user vs. device measurements. We identified several potential research gaps. Comparison of indoor vs. outdoor was the environmental factor assessed by the fewest papers. This may be largely due to technical limitations with the performance of current HMDs in outdoor environments, although the number of such papers has continued to increase since 2010. In addition, only one paper evaluated user movement. This indicates that almost all of the papers had participants in a stationary standing or sitting position. However, real-world use of AR/MR for tasks such as maintenance, some health care applications (e.g., surgery), or spatial navigation will typically require walking and/or changing body positions (e.g., leaning to look from different perspectives). In the device measurement category, no papers proposed standardized methods for evaluating and comparing technical aspects of HMDs.

In terms of gaps for user experiments, we also found that these papers tended to have limited sample sizes. The majority of papers had 25 or fewer participants, although many papers had repeated measures (multiple trials per participant). Surprisingly, we found no clear gap in the ratio of male to female participants; the numbers of each were often similar. However, most papers did not report whether they assessed prior HMD experience. If HMD use continues to grow as predicted over time, assessing experience with HMDs is likely to become increasingly useful. Additionally, most studies tended to rely on a younger, student population for participant samples, which may not be ideal for all applications or for generalizability of results.

In terms of hardware, the most frequently used HMDs were the NVIS nVisor, Oculus, Google Glass, and Microsoft HoloLens. There were many “other” devices, and seven different HMDs were each used in only a single paper. While we categorized HMD hardware, a limitation of the current paper is that we cannot fully categorize the software used by each paper due to large software diversity (e.g., custom software versus commercial off-the-shelf software such as Unity or Unreal Engine versus open source software such as ARToolKit). Nevertheless, we list the software mainly used with each of the mentioned HMDs in Appendix C.

IX-B Future Directions

In the future, researchers can use this paper to find the reference materials they need and use our integrated figures to identify recent trends in these research topics. We hope this paper can serve as an informative overview to help further develop categories and experiments with environment-related measurements. Furthermore, this categorization may also be useful for other types of AR/MR devices, such as mobile AR/MR devices.

Appendix A References List For Each Environmental Factor

| Environmental factor | Years | References |
|---|---|---|
| In/Out (total 11) | 1990-1999 | (total 0) |
| | 2000-2009 | [56], [89] (total 2) |
| | 2010-2019 | [47], [55], [57], [58], [77], [87], [88] (total 7) |
| | 2020-2022 | [90], [91] (total 2) |
| Virtual/Real (total 33) | 1990-1999 | [72], [73], [92] (total 3) |
| | 2000-2009 | [42], [60], [82], [93], [94], [104] (total 6) |
| | 2010-2019 | [47], [49], [55], [57], [58], [59], [66], [68], [76], [88], [95], [96], [102], [105], [108], [109], [114], [115] (total 18) |
| | 2020-2022 | [53], [71], [79], [87], [111], [112] (total 6) |
| Color (total 13) | 1990-1999 | (total 0) |
| | 2000-2009 | [56], [104] (total 2) |
| | 2010-2019 | [6], [75], [86], [96], [97], [115] (total 6) |
| | 2020-2022 | [61], [62], [67], [80], [111] (total 5) |
| Luminance (total 12) | 1990-1999 | [72] (total 1) |
| | 2000-2009 | [56], [63] (total 2) |
| | 2010-2019 | [64], [78], [98], [102], [105] (total 5) |
| | 2020-2022 | [61], [69], [74], [77] (total 4) |

Appendix B References List For Each Category

| Category | References |
|---|---|
| Health care | [6], [51], [52], [63], [66], [72], [73], [86], [108], [112], [113] (total 11) |
| Comfort assessment | [54], [58], [59], [74], [75], [103], [108], [114] (total 8) |
| Environment interaction | [47], [53], [55], [69], [70], [71], [75], [76], [77], [82], [88], [91], [93], [95], [98], [102], [104], [105], [109], [110], [111], [115] (total 22) |
| Questionnaire | [42], [49], [53], [54], [55], [58], [60], [61], [68], [69], [71], [73], [74], [76], [78], [83], [91], [102], [103], [105], [109], [111], [113], [114] (total 24) |
| Equipment repair | [79] (total 1) |
| Eye tracking | [64], [78], [80], [81], [90], [100] (total 6) |
| User movement | [82], [83] (total 2) |
| Display result | [42], [60], [61], [62], [67], [68], [83], [84], [85], [109], [114] (total 11) |
| Signals measurement | [86], [87], [101], [106], [107] (total 5) |
| Field of view | [6] (total 1) |

Appendix C Software Mainly Used For Each HMD

| HMD | Software |
|---|---|
| VPL EyePhone LX | Reality Built For Two (RB2) |
| Virtual Research VR 4 | Developers created software |
| i-glasses | ViO SDK, Win95 driver |
| Visette Pro | Computer-based software created applications |
| NVIS nVisor | Computer modeling software “GO” |
| Google Glass | Glass Development Kit |
| Oculus | Oculus Developer Hub (ODH), Unreal Engine, Unity |
| Moverio BT-2000 | Android platform control by EPSON own API |
| HTC Vive | VIVE WAVE, VIVEPORT, VIVE Sense, OpenXR |
| Meta 2 | Meta 2 SDK, Unity |
| Samsung Gear VR | Unity |
| Microsoft HoloLens | Mixed Reality ToolKit, Unity, Unreal Engine, OpenXR, JavaScript |

Acknowledgments

This research was sponsored by the DEVCOM U.S. Army Research Laboratory under Cooperative Agreement Number W911NF-21-2-0145 to B.P.

We thank Jessica Schultheis for very helpful editing. Any errors or omissions are those of the authors.

The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the DEVCOM Army Research Laboratory or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation.

References

- [1] ReportLinker, “Augmented reality & mixed reality market - growth, trends, covid-19 impact, and forecasts (2021 - 2026),” 2021.

- [2] Google, “Google books ngram viewer,” 2022, http://books.google.com/ngrams.

- [3] Oculus, “Oculus quest 2,” 2021, https://www.oculus.com/quest-2/.

- [4] H. Zhang, “Head-mounted display-based intuitive virtual reality training system for the mining industry,” International Journal of Mining Science and Technology, vol. 27, no. 4, pp. 717–722, 2017, special Issue on Advances in Mine Safety Science and Engineering. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2095268617303439

- [5] Y. M. Tang, K. M. Au, H. C. Lau, G. T. Ho, and C. H. Wu, “Evaluating the effectiveness of learning design with mixed reality (MR) in higher education,” Virtual Reality, vol. 24, no. 4, pp. 797–807, 2020.

- [6] W. Wittich, M. C. Lorenzini, S. N. Markowitz, M. Tolentino, S. A. Gartner, J. E. Goldstein, and G. Dagnelie, “The Effect of a Head-mounted Low Vision Device on Visual Function,” Optometry and Vision Science, vol. 95, no. 9, pp. 774–784, 2018.

- [7] A. Vitali and C. Rizzi, “Acquisition of customer’s tailor measurements for 3d clothing design using virtual reality devices,” Virtual and Physical Prototyping, vol. 13, pp. 1–15, 05 2018.

- [8] R. Van Krevelen and R. Poelman, “A survey of augmented reality technologies, applications and limitations,” International Journal of Virtual Reality (ISSN 1081-1451), vol. 9, p. 1, 06 2010.

- [9] H. Tamura, “Steps toward a giant leap in mixed and augmented reality,” Proceedings of the International Display Workshops, vol. 1, pp. 5–8, 01 2012.

- [10] R. T. Azuma, “A Survey of Augmented Reality,” Presence: Teleoperators and Virtual Environments, vol. 6, no. 4, pp. 355–385, 08 1997. [Online]. Available: https://doi.org/10.1162/pres.1997.6.4.355

- [11] R. Azuma, Y. Baillot, R. Behringer, S. Feiner, S. Julier, and B. MacIntyre, “Recent advances in augmented reality,” IEEE Computer Graphics and Applications, vol. 21, no. 6, pp. 34–47, 2001.

- [12] G. J. Klinker, D. Stricker, and D. Reiners, “Augmented reality for exterior construction applications,” 2001.

- [13] Microsoft, “Hololens,” 2015, https://www.microsoft.com/en-us/hololens.

- [14] P. Milgram and F. Kishino, “A taxonomy of mixed reality visual displays,” IEICE Trans. Information Systems, vol. E77-D, no. 12, pp. 1321–1329, 12 1994.

- [15] P. Milgram, H. Takemura, A. Utsumi, and F. Kishino, “Augmented reality: a class of displays on the reality-virtuality continuum,” in Telemanipulator and Telepresence Technologies, H. Das, Ed., vol. 2351, International Society for Optics and Photonics. SPIE, 1995, pp. 282 – 292. [Online]. Available: https://doi.org/10.1117/12.197321

- [16] S. Benford, J. Bowers, L. E. Fahlén, J. Mariani, and T. Rodden, “Supporting Cooperative Work in Virtual Environments,” The Computer Journal, vol. 37, no. 8, pp. 653–668, 01 1994. [Online]. Available: https://doi.org/10.1093/comjnl/37.8.653

- [17] S. Benford, C. Greenhalgh, and G. Reynard, “292834.292836,” vol. 5, no. 3, pp. 185–223, 1998.

- [18] M. Billinghurst and H. Kato, “Collaborative mixed reality,” in Proceedings of the First International Symposium on Mixed Reality, 1999, pp. 261–284.

- [19] S. Mann, T. Furness, Y. Yuan, J. Iorio, and Z. Wang, “All reality: Virtual, augmented, mixed (x), mediated (x, y), and multimediated reality,” arXiv preprint arXiv:1804.08386, 2018.

- [20] M. K. Bekele, R. Pierdicca, E. Frontoni, E. S. Malinverni, and J. Gain, “A survey of augmented, virtual, and mixed reality for cultural heritage,” Journal on Computing and Cultural Heritage (JOCCH), vol. 11, no. 2, pp. 1–36, 2018.

- [21] M. Speicher, B. D. Hall, and M. Nebeling, “What is mixed reality?” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 2019, pp. 1–15.

- [22] C. E. Hughes, C. B. Stapleton, D. E. Hughes, and E. M. Smith, “Mixed reality in education, entertainment, and training,” IEEE computer graphics and applications, vol. 25, no. 6, pp. 24–30, 2005.

- [23] Z. Pan, A. D. Cheok, H. Yang, J. Zhu, and J. Shi, “Virtual reality and mixed reality for virtual learning environments,” Computers & graphics, vol. 30, no. 1, pp. 20–28, 2006.

- [24] Y.-M. Tang, K. M. Au, H. C. Lau, G. T. Ho, and C.-H. Wu, “Evaluating the effectiveness of learning design with mixed reality (mr) in higher education,” Virtual Reality, vol. 24, no. 4, pp. 797–807, 2020.

- [25] M. Fiorentino, R. de Amicis, G. Monno, and A. Stork, “Spacedesign: A mixed reality workspace for aesthetic industrial design,” in Proceedings. International Symposium on Mixed and Augmented Reality. IEEE, 2002, pp. 86–318.

- [26] A. D. Cheok, M. Haller, O. N. N. Fernando, and J. P. Wijesena, “Mixed reality entertainment and art,” International Journal of Virtual Reality, vol. 8, no. 2, pp. 83–90, 2009.

- [27] E. S. Liu and G. K. Theodoropoulos, “Interest management for distributed virtual environments: A survey,” ACM Comput. Surv., vol. 46, no. 4, Mar. 2014. [Online]. Available: https://doi.org/10.1145/2535417

- [28] B. Kerous and F. Liarokapis, “Brain-computer interfaces - a survey on interactive virtual environments,” in 2016 8th International Conference on Games and Virtual Worlds for Serious Applications (VS-GAMES), 2016, pp. 1–4.

- [29] V. Souza, A. Maciel, L. Nedel, and R. Kopper, “Measuring presence in virtual environments: A survey,” ACM Comput. Surv., vol. 54, no. 8, Oct. 2021. [Online]. Available: https://doi.org/10.1145/3466817

- [30] D. Sjölie, “Human brains and virtual realities: Computer-generated presence in theory and practice,” Ph.D. dissertation, Umeå universitet, 2013.

- [31] S. S. Fisher, M. McGreevy, J. Humphries, and W. Robinett, “Virtual environment display system,” in Proceedings of the 1986 workshop on Interactive 3D graphics, 1987, pp. 77–87.

- [32] M. R. Mine, “Virtual environment interaction techniques,” UNC Chapel Hill CS Dept, 1995.

- [33] S. E. Chen, “Quicktime vr: An image-based approach to virtual environment navigation,” in Proceedings of the 22nd annual conference on Computer graphics and interactive techniques, 1995, pp. 29–38.

- [34] D. A. Bowman, D. B. Johnson, and L. F. Hodges, “Testbed evaluation of virtual environment interaction techniques,” Presence, vol. 10, no. 1, pp. 75–95, 2001.

- [35] J. Blascovich, J. Loomis, A. C. Beall, K. R. Swinth, C. L. Hoyt, and J. N. Bailenson, “Immersive virtual environment technology as a methodological tool for social psychology,” Psychological inquiry, vol. 13, no. 2, pp. 103–124, 2002.

- [36] NVIS, “Nvis nvisor,” https://www.nvisinc.com/product.html.

- [37] Google, “Google glass,” 2013, https://www.google.com/glass/start/.

- [38] HTC, “Htc vive,” 2016, https://www.vive.com/us/.

- [39] Oculus, “Oculus rift,” 2012, https://www.oculus.com/rift/.

- [40] I. E. Sutherland, “A head-mounted three dimensional display,” in Proceedings of the December 9-11, 1968, fall joint computer conference, part I, 1968, pp. 757–764.

- [41] R. Silva, J. C. Oliveira, and G. A. Giraldi, “Introduction to augmented reality,” National laboratory for scientific computation, vol. 11, 2003.

- [42] B. S. Santos, P. Dias, A. Pimentel, J.-W. Baggerman, C. Ferreira, S. Silva, and J. Madeira, “Head-mounted display versus desktop for 3d navigation in virtual reality: a user study,” Multimedia tools and applications, vol. 41, no. 1, pp. 161–181, 2009.

- [43] J. P. Rolland and H. Hua, “Head-mounted display systems,” Encyclopedia of optical engineering, vol. 2, 2005.

- [44] S.-T. Wu and D.-K. Yang, Reflective liquid crystal displays. Wiley, 2001.

- [45] G. Rajeswaran, “Active-matrix low-temperature poly-si tft/oled full-color displays: Status of development & commercialization,” SID2000 Digest, Long Beach, USA, 2000.

- [46] M. Selbrede and B. Yost, “Time multiplexed optical shutter (TMOS) display technology for avionics platforms,” in Defense, Security, Cockpit, and Future Displays II, vol. 6225. International Society for Optics and Photonics, 2006, p. 62251B.

- [47] L. E. Buck, M. K. Young, and B. Bodenheimer, “A comparison of distance estimation in HMD-based virtual environments with different HMD-based conditions,” ACM Transactions on Applied Perception (TAP), vol. 15, no. 3, pp. 1–15, 2018.

- [48] Y. Itoh, T. Langlotz, J. Sutton, and A. Plopski, “Towards indistinguishable augmented reality: A survey on optical see-through head-mounted displays,” ACM Computing Surveys (CSUR), vol. 54, no. 6, pp. 1–36, 2021.

- [49] H. Profita, R. Albaghli, L. Findlater, P. Jaeger, and S. K. Kane, “The AT effect: how disability affects the perceived social acceptability of head-mounted display use,” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, 2016, pp. 4884–4895.

- [50] J. Cubelos, P. Carballeira, J. Gutiérrez, and N. García, “QoE analysis of dense multiview video with head-mounted devices,” IEEE Transactions on Multimedia, vol. 22, no. 1, pp. 69–81, 2019.

- [51] L. E. Culham, A. Chabra, and G. S. Rubin, “Clinical performance of electronic, head-mounted, low-vision devices,” Ophthalmic and Physiological Optics, vol. 24, no. 4, pp. 281–290, 2004.

- [52] B. Cummiskey, D. Schiffmiller, T. M. Talavage, L. Leverenz, J. J. Meyer, D. Adams, and E. A. Nauman, “Reliability and accuracy of helmet-mounted and head-mounted devices used to measure head accelerations,” Proceedings of the Institution of Mechanical Engineers, Part P: Journal of Sports Engineering and Technology, vol. 231, no. 2, pp. 144–153, 2017.

- [53] W.-y. Lin and Y.-c. Wang, “Empirical Evaluation of Calibration and Long-term Carryover Effects of Reverberation on Egocentric Auditory Depth Perception in VR,” pp. 232–240, 2022.

- [54] M. S. Arefin, N. Phillips, A. Plopski, J. L. Gabbard, and J. E. Swan II, “The effect of context switching, focal switching distance, binocular and monocular viewing, and transient focal blur on human performance in optical see-through augmented reality,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–1, 2022.

- [55] M. Nakao, T. Terada, and M. Tsukamoto, “An information presentation method for head mounted display considering surrounding environments,” in Proceedings of the 5th Augmented Human International Conference, ser. AH ’14. New York, NY, USA: Association for Computing Machinery, 2014. [Online]. Available: https://doi.org/10.1145/2582051.2582098

- [56] H. Renkewitz, V. Kinder, M. Brandt, and T. Alexander, “Optimal font size for Head-Mounted-Displays in outdoor applications,” Proceedings of the International Conference on Information Visualisation, pp. 503–508, 2008.

- [57] F. De Felice and A. Petrillo, “Proposal of a structured methodology for the measure of intangible criteria and for decision making,” International Journal of Simulation and Process Modelling, vol. 9, no. 3, pp. 157–166, 2014.

- [58] S. Rangelova, D. Decker, M. Eckel, and E. Andre, Simulation sickness evaluation while using a fully autonomous car in a head mounted display virtual environment. Springer International Publishing, 2018, vol. 10909 LNCS. [Online]. Available: http://dx.doi.org/10.1007/978-3-319-91581-4_12

- [59] M. T. Robert, L. Ballaz, and M. Lemay, “The effect of viewing a virtual environment through a head-mounted display on balance,” Gait and Posture, vol. 48, pp. 261–266, 2016. [Online]. Available: http://dx.doi.org/10.1016/j.gaitpost.2016.06.010

- [60] E. Patrick, D. Cosgrove, A. Slavkovic, J. A. Rode, T. Verratti, and G. Chiselko, “Using a large projection screen as an alternative to head-mounted displays for virtual environments,” Conference on Human Factors in Computing Systems - Proceedings, vol. 2, no. 1, pp. 478–485, 2000.

- [61] K. S. Shin, H. Kim, J. G. Lee, and D. Jo, “Exploring the effects of scale and color differences on users’ perception for everyday mixed reality (MR) experience: toward comparative analysis using MR devices,” Electronics (Switzerland), vol. 9, no. 10, pp. 1–14, 2020.

- [62] T. D. Do, J. J. LaViola, and R. P. McMahan, “The effects of object shape, fidelity, color, and luminance on depth perception in handheld mobile augmented reality,” in 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2020, pp. 64–72.

- [63] W. Birkfellner, M. Figl, K. Huber, F. Watzinger, F. Wanschitz, J. Hummel, R. Hanel, W. Greimel, P. Homolka, R. Ewers, and H. Bergmann, “A head-mounted operating binocular for augmented reality visualization in medicine - Design and initial evaluation,” IEEE Transactions on Medical Imaging, vol. 21, no. 8, pp. 991–997, 2002.

- [64] M. Cognolato, M. Atzori, and H. Müller, “Head-mounted eye gaze tracking devices: An overview of modern devices and recent advances,” Journal of Rehabilitation and Assistive Technologies Engineering, vol. 5, p. 205566831877399, 2018.

- [65] J. H. Ryu, J. W. Kim, K. K. Lee, and J. O. Kim, “Colorimetric background estimation for color blending reduction of OST-HMD,” 2016 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, APSIPA 2016, pp. 1–4, 2017.

- [66] Z. Y. Chan, A. J. MacPhail, I. P. Au, J. H. Zhang, B. M. Lam, R. Ferber, and R. T. Cheung, “Walking with head-mounted virtual and augmented reality devices: Effects on position control and gait biomechanics,” PLoS ONE, vol. 14, no. 12, pp. 1–14, 2019.

- [67] Y. Zhang, R. Wang, E. Y. Peng, W. Hua, and H. Bao, “Color Contrast Enhanced Rendering for Optical See-through Head-mounted Displays,” IEEE Transactions on Visualization and Computer Graphics, vol. PP, no. c, p. 1, 2021.

- [68] Y. Shu, Y.-Z. Huang, S.-H. Chang, and M.-Y. Chen, “Do virtual reality head-mounted displays make a difference? a comparison of presence and self-efficacy between head-mounted displays and desktop computer-facilitated virtual environments,” Virtual Reality, vol. 23, no. 4, pp. 437–446, 2019.

- [69] D. Kahl, M. Ruble, and A. Krüger, “Investigation of size variations in optical see-through tangible augmented reality,” in 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2021, pp. 147–155.

- [70] J. Swan, M. Livingston, H. Smallman, D. Brown, Y. Baillot, J. Gabbard, and D. Hix, “A perceptual matching technique for depth judgments in optical, see-through augmented reality,” in IEEE Virtual Reality Conference (VR 2006), 2006, pp. 19–26.

- [71] F. A. Khan, V. V. R. M. K. R. Muvva, D. Wu, M. S. Arefin, N. Phillips, and J. E. Swan, “Measuring the perceived three-dimensional location of virtual objects in optical see-through augmented reality,” in 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2021, pp. 109–117.

- [72] M. Mon-Williams, J. P. Wann, and S. Rushton, “Binocular vision in a virtual world: visual deficits following the wearing of a head-mounted display,” Ophthalmic and Physiological Optics, vol. 13, no. 4, pp. 387–391, 1993.

- [73] E. Peli, “The visual effects of head-mounted display (HMD) are not distinguishable from those of desk-top computer display,” Vision Research, vol. 38, no. 13, pp. 2053–2066, 1998.

- [74] H. Ha, Y. Kwak, H. Kim, and Y. jun Seo, “Discomfort luminance level of head-mounted displays depending on the adapting luminance,” Color Research and Application, vol. 45, no. 4, pp. 622–631, 2020.

- [75] M. Wille, B. Grauel, and L. Adolph, “Strain caused by Head Mounted Displays,” Proceedings of the Human Factors and Ergonomics Society Europe Chapter 2013 Annual Conference, vol. 4959, no. 2013, 2013. [Online]. Available: http://www.hfes-europe.org/books/proceedings2013/Wille.pdf

- [76] A. Peer and K. Ponto, “Evaluating perceived distance measures in room-scale spaces using consumer-grade head mounted displays,” 2017 IEEE Symposium on 3D User Interfaces, 3DUI 2017 - Proceedings, pp. 83–86, 2017.

- [77] A. Erickson, K. Kim, G. Bruder, and G. F. Welch, “Exploring the limitations of environment lighting on optical see-through head-mounted displays,” in Symposium on Spatial User Interaction, 2020, pp. 1–8.

- [78] Y. Wang, G. Zhai, S. Chen, X. Min, Z. Gao, and X. Song, “Assessment of eye fatigue caused by head-mounted displays using eye-tracking,” BioMedical Engineering Online, vol. 18, no. 1, pp. 1–19, 2019. [Online]. Available: https://doi.org/10.1186/s12938-019-0731-5

- [79] D. Ariansyah, J. A. Erkoyuncu, I. Eimontaite, T. Johnson, A. M. Oostveen, S. Fletcher, and S. Sharples, “A head mounted augmented reality design practice for maintenance assembly: Toward meeting perceptual and cognitive needs of AR users,” Applied Ergonomics, vol. 98, no. August 2021, p. 103597, 2022. [Online]. Available: https://doi.org/10.1016/j.apergo.2021.103597

- [80] Y. Imaoka, A. Flury, and E. D. de Bruin, “Assessing Saccadic Eye Movements With Head-Mounted Display Virtual Reality Technology,” Frontiers in Psychiatry, vol. 11, no. September, pp. 1–19, 2020.

- [81] M. Lidegaard, D. W. Hansen, and N. Krüger, “Head mounted device for point-of-gaze estimation in three dimensions,” in Proceedings of the Symposium on Eye Tracking Research and Applications, ser. ETRA ’14. New York, NY, USA: Association for Computing Machinery, 2014, pp. 83–86. [Online]. Available: https://doi.org/10.1145/2578153.2578163

- [82] B. J. Mohler, J. L. Campos, M. B. Weyel, and H. H. Bülthoff, “Gait parameters while walking in a head-mounted display virtual environment and the real world,” Proceedings of the 13th Eurographics Symposium on Virtual Environments, pp. 85–88, 2007. [Online]. Available: http://nguyendangbinh.org/Proceedings/IPT-EGVE/2007/papers/short1001_final.pdf

- [83] A. U. Batmaz, M. D. B. Machuca, D. M. Pham, and W. Stuerzlinger, “Do head-mounted display stereo deficiencies affect 3D pointing tasks in AR and VR?” 26th IEEE Conference on Virtual Reality and 3D User Interfaces, VR 2019 - Proceedings, pp. 585–592, 2019.

- [84] M. Mine, “Characterization of end-to-end delays in head-mounted display systems,” The University of North Carolina at Chapel Hill, TR93- …, pp. 1–11, 1993. [Online]. Available: http://www0.cs.ucl.ac.uk/teaching/VE/Papers/93-001.pdf, http://dl.acm.org/citation.cfm?id=897856

- [85] B. Watson, N. Walker, L. F. Hodges, and A. Worden, “Managing Level of Detail through Peripheral Degradation,” ACM Transactions on Computer-Human Interaction, vol. 4, no. 4, pp. 323–346, 1997.

- [86] A. Alamri, J. Cha, and A. El Saddik, “AR-REHAB: An augmented reality framework for poststroke-patient rehabilitation,” IEEE Transactions on Instrumentation and Measurement, vol. 59, no. 10, pp. 2554–2563, 2010.

- [87] D. Weber, S. Hertweck, H. Alwanni, L. D. J. Fiederer, X. Wang, F. Unruh, M. Fischbach, M. E. Latoschik, and T. Ball, “A Structured Approach to Test the Signal Quality of Electroencephalography Measurements During Use of Head-Mounted Displays for Virtual Reality Applications,” Frontiers in Neuroscience, vol. 15, no. November, 2021.

- [88] S. H. Creem-Regehr, J. K. Stefanucci, W. B. Thompson, N. Nash, and M. McCardell, “Egocentric distance perception in the Oculus Rift (DK2),” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception, ser. SAP ’15. New York, NY, USA: Association for Computing Machinery, 2015, pp. 47–50. [Online]. Available: https://doi.org/10.1145/2804408.2804422

- [89] T. Y. Grechkin, T. D. Nguyen, J. M. Plumert, J. F. Cremer, and J. K. Kearney, “How does presentation method and measurement protocol affect distance estimation in real and virtual environments?” ACM Transactions on Applied Perception (TAP), vol. 7, no. 4, pp. 1–18, 2010.

- [90] M. Liu, Y. Li, and H. Liu, “Robust 3-D gaze estimation via data optimization and saliency aggregation for mobile eye-tracking systems,” IEEE Transactions on Instrumentation and Measurement, vol. 70, pp. 1–10, 2021.

- [91] J. Lee and Y. Kim, “User Preference for Navigation Instructions in Mixed Reality,” pp. 802–811, 2022.

- [92] R. Kijima and T. Ojika, “Transition between virtual environment and workstation environment with projective head mounted display,” in Proceedings of IEEE 1997 Annual International Symposium on Virtual Reality. IEEE, 1997, pp. 130–137.

- [93] P. Willemsen, M. B. Colton, S. H. Creem-Regehr, and W. B. Thompson, “The Effects of Head-Mounted Display Mechanics on Distance Judgments in Virtual Environments,” Tech. Rep., 2004.

- [94] P. Willemsen, M. Colton, S. Creem-Regehr, and W. Thompson, “The effects of head-mounted display mechanical properties and field of view on distance judgments in virtual environments,” ACM Transactions on Applied Perception (TAP), vol. 6, Feb. 2009.

- [95] K. Hiramoto and K. Hamamoto, “Study on the difference of reaching cognition between the real and the virtual environment using HMD and its compensation,” in 2018 11th Biomedical Engineering International Conference (BMEiCON). IEEE, 2018, pp. 1–5.

- [96] Y. Itoh, M. Dzitsiuk, T. Amano, and G. Klinker, “Semi-Parametric Color Reproduction Method for Optical See-Through Head-Mounted Displays,” IEEE Transactions on Visualization and Computer Graphics, vol. 21, no. 11, pp. 1269–1278, 2015.

- [97] T. H. Harding, J. K. Hovis, C. E. Rash, M. K. Smolek, and M. R. Lattimore, “HMD daylight symbology: color discrimination modeling,” vol. 1064203, no. May 2018, p. 2, 2018.

- [98] B. Li, J. Walker, and S. A. Kuhl, “The Effects of Peripheral Vision and Light Stimulation on Distance Judgments through HMDs,” ACM Transactions on Applied Perception, vol. 15, no. 2, 2018.

- [99] A. Dey, M. Billinghurst, R. W. Lindeman, and J. E. Swan, “A systematic review of 10 Years of Augmented Reality usability studies: 2005 to 2014,” 2018.

- [100] E. Schneider, K. Bartl, S. Bardins, T. Dera, G. Boening, and T. Brandt, “Eye movement driven head-mounted camera: It looks where the eyes look,” Conference Proceedings - IEEE International Conference on Systems, Man and Cybernetics, vol. 3, pp. 2437–2442, 2005.

- [101] J. Hernandez, Y. Li, J. M. Rehg, and R. W. Picard, “BioGlass: Physiological parameter estimation using a head-mounted wearable device,” in 2014 4th International Conference on Wireless Mobile Communication and Healthcare - Transforming Healthcare Through Innovations in Mobile and Wireless Technologies (MOBIHEALTH), 2014, pp. 55–58.