Bagging cross-validated bandwidth selection in nonparametric regression estimation with applications to large-sized samples

Abstract

Cross-validation is a well-known and widely used bandwidth selection method in nonparametric regression estimation. However, this technique has two remarkable drawbacks: (i) the large variability of the selected bandwidths, and (ii) the inability to provide results in a reasonable time for very large sample sizes. To overcome these problems, bagging cross-validation bandwidths are analyzed in this paper. This approach consists in computing the cross-validation bandwidths for a finite number of subsamples and then rescaling the averaged smoothing parameters to the original sample size. Under a random-design regression model, asymptotic expressions up to a second-order for the bias and variance of the leave-one-out cross-validation bandwidth for the Nadaraya–Watson estimator are obtained. Subsequently, the asymptotic bias and variance and the limit distribution are derived for the bagged cross-validation selector. Suitable choices of the number of subsamples and the subsample size lead to an rate for the convergence in distribution of the bagging cross-validation selector, outperforming the rate of leave-one-out cross-validation. Several simulations and an illustration on a real dataset related to the COVID-19 pandemic show the behavior of our proposal and its better performance, in terms of statistical efficiency and computing time, when compared to leave-one-out cross-validation.

Keywords: cross-validation, subsampling, regression, Nadaraya–Watson, bagging

1 Introduction

The study of a variable of interest depending on other variable(s) is a common problem that appears in many disciplines. To deal with this issue, an appropriate regression model setting up the possible functional relationship between the variables is usually formulated. As part of this analysis, the unknown regression function, describing the general relationship between the variable of interest and the explanatory variable(s), has to be estimated. This task can be carried out using nonparametric methods that do not assume any parametric form for the regression function, providing flexible procedures and avoiding misspecification problems. Among the available nonparametric approaches, kernel-type regression estimators (Wand and Jones,, 1995) are perhaps the most popular. To compute this type of estimators the user has to select a kernel function (typically a density function) and a bandwidth or smoothing parameter that regulates the amount of smoothing to be used, which in turn determines the trade-off between the bias and the variance of the estimator. Although the choice of the kernel function is of secondary importance, the smoothing parameter plays a crucial role. In this regard, numerous contributions have been made over the last decades, providing methods to select the bandwidth. These approaches include, among others, cross-validation methods (Härdle et al.,, 1988) and plug-in selectors (Ruppert et al.,, 1995). In Köhler et al., (2014), a complete review and an extensive simulation study of different data-driven bandwidth selectors for kernel regression are presented. Due to their wide applicability and the good performance obtained in this complete comparison, in the present paper, we focus on analyzing cross-validation bandwidth selection techniques.

Cross-validation is a popular method of model selection that precedes an early discussion of the method by Stone, (1974). In its simplest form, cross-validation consists of splitting the dataset under study into two parts, using one part to fit one or more models, and then predicting the data in the second part with the models so-built. In this way, by not using the same data to fit and validate the models, it is possible to objectively compare the predictive capacity of different models. The leave-one-out version of cross-validation, which will be of interest in the present paper, is somewhat more involved. It excludes one datum from the dataset, fits a model from the remaining observations, uses this model to predict the datum left out, and then repeats this process for all the data.

The present paper studies the leave-one-out cross-validation bandwidth selection method and the application of bagging (Breiman,, 1996) to this procedure. We derive some asymptotic properties of the corresponding selectors when considering a random-design regression model and the Nadaraya–Watson kernel-type estimator is used. The Nadaraya–Watson estimator can be seen as a particular case of a wider class of nonparametric estimators, the so-called local polynomial estimators (Stone,, 1977; Cleveland,, 1979; Fan,, 1992), when performing a local constant fit. Given a random sample of size , bagging cross-validation consists of selecting subsamples of size , each without replacement, from the observations. One then computes a cross-validation bandwidth from each of the subsets, averages them, and then scales the average down appropriately to account for the fact that . It is well-known that the use of bagging can lead to substantial reductions in the variability of an estimator that is nonlinear in the observations (see Friedman and Hall,, 2007), as occurs in the case of the cross-validation criterion function. The use of bagging in conjunction with cross-validation for bandwidth selection has already been studied in the case of kernel density estimation by several authors (see, for example Barreiro-Ures et al.,, 2020; Hall and Robinson,, 2009). In addition to the potential improvement in statistical precision, even in the case of small sample sizes, the use of bagging (with appropriate elections of and ) can drastically reduce computation times, especially for very large sample sizes. Note that the complexity of cross-validation is , while the complexity of bagging cross-validation is . Larger reductions in computation time can also be additionally achieved with the application of binning techniques in the bagging procedure.

Apart from the theoretical analysis of the cross-validation bandwidth selection methods, another goal of this study is to apply the techniques studied in the present paper to a dataset related to the current COVID-19 pandemic. In particular, using a moderately large sample, provided by the Spanish Center for Coordinating Sanitary Alerts and Emergencies, consisting of the age and the time in hospital of people infected with COVID-19 in Spain, we are interested in studying the relationship between those two variables by means of the Nadaraya–Watson estimator. Apart from its purely epidemiological interest and due to the considerable size of the sample, this dataset is also useful to put into practice the techniques analyzed in the present paper.

The remainder of the paper is as follows. In Section 2, the regression model considered, the Nadaraya–Watson regression estimator and the important problem of bandwidth selection are presented. In Section 3, the leave-one-out cross-validation bandwidth selection method is described and some asymptotic properties of the corresponding selector are provided when the Nadaraya–Watson estimator is used. Section 4 considers the use of bagging for cross-validation in bandwidth selection for the Nadaraya–Watson estimator. Asymptotic expressions for the bias and the variance of the proposed bandwidth selector, as well as for its limit distribution, are also derived in this section. In Section 5, an algorithm is proposed to automatically choose the subsample size for the bandwidth selector studied in Section 4. The techniques proposed are empirically tested through several simulation studies in Section 6 and applied to the previously mentioned COVID-19 dataset in Section 7. Finally, concluding remarks are given in Section 8. The detailed proofs and some additional plots concerning the simulation study are included in the accompanying supplementary materials.

2 Regression model and Nadaraya–Watson estimator

Let be an independent and identically distributed (i.i.d.) sample of size of the two-dimensional random variable , drawn from the nonparametric regression model:

| (1) |

where denotes the regression function, and is the error term, satisfying that and .

The Nadaraya–Watson estimator or local constant estimator (Nadaraya,, 1964; Watson,, 1964) offers a nonparametric way to estimate the unknown regression function, . It is given by:

| (2) |

where denotes the bandwidth or smoothing parameter and the kernel function. As pointed out in the introduction, the value of the bandwidth is of great importance since it determines the amount of smoothing performed by the estimator and, therefore, heavily influences its behavior. Thus, in practice, data-driven bandwidth selection methods are needed. In the present paper, we deal with this problem, analyzing cross-validation bandwidth selection techniques when using the estimator (2) and considering the general regression model (1).

A possible way to select a (global) optimal bandwidth for (2) consists in minimizing a (global) optimality criterion, for instance, the mean integrated squared error (MISE), defined as:

| (3) |

where denotes the marginal density function of . The bandwidth that minimizes (3) is called the MISE bandwidth and it will be denoted by , that is,

| (4) |

The MISE bandwidth depends on and and, since in practice both functions are often unknown, cannot be directly calculated. However, it can be estimated, for example, using the cross-validation method.

3 Cross-validation bandwidth

Cross-validation is a method that offers a criterion for optimality which works as an empirical analogue of the MISE and so it allows us to estimate . The cross-validation function is defined as:

| (5) |

where denotes the Nadaraya–Watson estimator constructed using , that is, leaving out the -th observation,

| (6) |

Hence, the cross-validation bandwidth, , can be defined as

| (7) |

In order to obtain the asymptotic properties of (7) as an estimator of (4), it is necessary to study certain moments of (5) and its derivatives. However, the fact that the Nadaraya–Watson estimator has a random denominator makes this a very difficult task. To overcome this problem, the following unobservable modified version of the Nadaraya–Watson estimator was proposed in Barbeito, (2020):

| (8) |

Expression (8) does not define an estimator, but a theoretical approximation of (2). Of course, (8) can be used to define a modified version of the cross-validation criterion,

| (9) |

where denotes the leave-one-out version of (8) without the -th observation, that is,

| (10) |

For the sake of simplicity and convenience, from now on, we will denote by and the modified version of the cross-validation criterion defined in (9) and the bandwidth that minimizes (9), respectively. Using Taylor expansions, we can obtain the following approximation:

| (11) | |||||

where the second term of (11) is negligible with respect to the first one when it comes to the bias and the variance of . Since the first-order terms of and coincide for every , we need to calculate the second-order terms of both and in order to analyze the bias of the modified cross-validation bandwidth. As for the variance of the modified cross-validation bandwidth, it suffices to calculate the first-order term of . It is well-known that under suitable regularity conditions, up to first order,

where

with and , , provided that these integrals exist finite. Then, the first-order term of the MISE bandwidth, , has the following expression:

where

However, there are no results in the literature that obtain the higher-order terms of the MISE of the Nadaraya–Watson estimator. To undertake this task, let us start by defining

Then, we have that

| (12) |

where

Expression (12) splits as a sum of five terms with no random denominator plus an additional term, , which has a random denominator. This last term is negligible with respect to the others. This approach will help us study the higher-order terms of the MISE of (2). Let us define , then we have that

3.1 Asymptotic results

The asymptotic bias and variance of the cross-validation bandwidth minimizing (9) are derived in this section. For this, some previous lemmas are proved. The detailed proof of these results can be found in the supplementary material. The following assumptions are needed:

-

A1.

is a symmetric and differentiable kernel function.

-

A2.

For every , the integrals , and exist and are finite.

-

A3.

The functions and are eight times differentiable.

-

A4.

The function is four times differentiable.

Lemma 3.1 provides expressions for the first and second order terms of both the bias and the variance of .

Lemma 3.1.

Under assumptions A1–A4, the bias and the variance of satisfy:

and

Lemma 3.2 provides expressions for the first and second order terms of both the expectation and variance of .

Lemma 3.2.

Let us define

Then, under assumptions A1–A4,

| (13) | |||||

| (14) |

Finally, Theorem 3.1, which can be derived from (11), Lemma 3.1 and Lemma 3.2, provides the asymptotic bias and variance of the cross-validation bandwidth that minimizes (9).

Theorem 3.1.

Under assumptions A1–A4, the asymptotic bias and variance of the bandwidth that minimizes (9) are:

where

4 Bagged cross-validation bandwidth

While the cross-validation method is very useful to select reliable bandwidths in nonparametric regression, it also has the handicap of requiring a high computing time if the sample size is very large. This problem can be partially circumvented by using bagging (Breiman,, 1996), a statistical technique belonging to the family of ensemble methods (Opitz and Maclin,, 1999), in the bandwidth selection procedure. In this section, we explain how bagging may be applied in the cross-validation context. Additionally, the asymptotic properties of the corresponding selector are obtained. Apart from the obvious reductions in computing time, the bagging cross-validation selector also presents better theoretical properties than the leave-one-out cross-validation bandwidth. This will be corroborated in the numerical studies presented in Sections 6 and 7.

Let be a random sample of size drawn without replacement from the i.i.d sample defined in Section 2. This subsample is used to calculate a cross-validation bandwidth, . A rescaled version of , , can be viewed as a feasible estimator of the optimal MISE bandwidth, , for . Bagging consists of repeating this resampling procedure independently times, leading to rescaled bandwidths, . The bagging bandwidth is then defined as:

| (15) |

In the case of kernel density estimation, both the asymptotic properties and the empirical behavior of this type of bandwidth selector have been studied in Hall and Robinson, (2009) for and generalized in Barreiro-Ures et al., (2020), where the asymptotic properties of the bandwidth selector are derived for the more practical case of a finite .

In the next section, the asymptotic bias and variance of the bagging bandwidth (15) when using the Nadaraya–Watson estimator (2) and considering the regression model (1) are obtained. Moreover, its asymptotic distribution is also derived.

4.1 Asymptotic results

Expressions for the asymptotic bias and the variance of (15) are given in Theorem 4.1. The following additional assumption is needed:

-

A5.

As , and tends to a positive constant or .

Theorem 4.1.

Corollary 4.1.

It should be noted that, while converges in distribution at the rate , this result can be improved with the use of bagging and letting and tend to infinity at adequate rates. For example, if both and tended to infinity at the rate , then would converge in distribution at the rate , which is indeed a faster rate of convergence than .

5 Choosing an optimal subsample size

In practice, an important step of our approach is, for fixed values of and , choosing the optimal subsample size, . A possible optimality criterion could be to consider the value of that minimizes the main term of the variance of . In this case, we would get:

and the variance of the bagging bandwidth would converge to zero at the rate

which is a faster rate of convergence than that of the standard cross-validation bandwidth. In particular,

The obvious drawback of this criterion is that it would not allow any improvement in terms of computational efficiency, since the complexity of the algorithm would be the same as in the case of standard cross-validation, . This makes this choice of inappropriate for very large sample sizes. Another possible criterion for selecting would be to minimize, as a function of , the asymptotic mean squared error (AMSE) of , given by:

| (16) |

Since , and are unknown, we propose the following method to estimate

-

Step 1.

Consider subsamples of size , drawn without replacement from the original sample of size .

-

Step 2.

For each of these subsamples, obtain an estimate, , of the marginal density function of the explanatory variable (using kernel density estimation, for example) and an estimate, , of the regression function (for instance, by fitting a polynomial of a certain degree). Do the same for the required derivatives of both and .

-

Step 3.

Use the estimates obtained in the previous step to compute the constants , and for each subsample, where () denotes the subsample index.

-

Step 4.

Compute the bagged estimates of the unknown constants, that is,

and obtain by plugging these bagged estimates into (16).

-

Step 5.

Finally, estimate by:

Additionally, assuming that , then

and the rate of convergence to zero of the AMSE of the bagging bandwidth would be:

Hence,

and this ratio would tend to zero if tended to infinity at a rate faster than . Furthermore, if we let and , then the computational complexity of the algorithm would be , much lower than that of standard cross-validation. In fact, by selecting in this way, the complexity of the algorithm will only equal to that of standard cross-validation when tends to infinity at the rate .

6 Simulation studies

The behavior of the leave-one-out and bagged cross-validation bandwidths is evaluated by simulation in this section. We considered the following regression models:

-

M1:

, , , ,

-

M2:

, , , ,

-

M3:

, , , ,

whose regression functions are plotted in Figure 1. The Gaussian kernel was used for computing the Nadaraya–Watson estimator throughout this section. Moreover, to reduce computing time in the simulations, we used binning to select the ordinary and the bagged cross-validation bandwidths.

In a first step, we empirically checked how close the bandwidths that minimize the MISE of (2) and (8) are. For this, we simulated samples of different sizes (, and ) from models M1, M2 and M3 and compute the corresponding MISE curves for the standard Nadaraya–Watson estimator and for its modified version, given in (8). For the sake of brevity, the plot containing these curves is included in the accompanying supplementary materials. That plot shows that the bandwidth that minimizes the MISE of (8) and the MISE of the standard Nadaraya–Watson estimator appear to be quite close, even for moderate sample sizes. Of course, the distance between the minima of both curves tends to zero as the sample size increases. Moreover, the standard cross-validation bandwidths and the modified cross-validation selectors (using the standard and the modified version of the Nadaraya–Watson estimator, respectively) are obtained for samples of sizes ranging from to drawn from model M2. The corresponding figure is also included in the supplementary material. It shows that both bandwidth selectors provide similar results, which in turn get closer as increases.

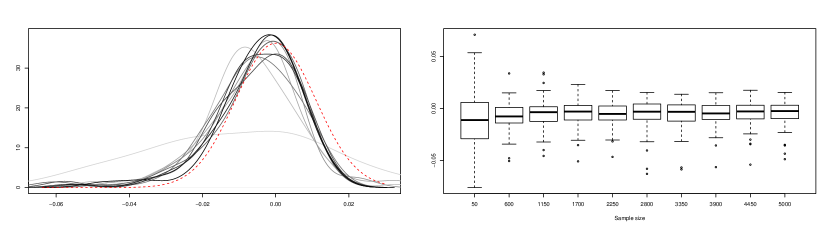

In a second step, we checked how fast the statistic approaches its limit distribution. For this, 1000 samples of size were simulated from model M2 (with values of ranging from to ) and the corresponding values of were computed. Figure 2 shows the kernel density estimates and boxplots constructed using these samples of . The empirical behavior observed in Figure 2 is in agreement with the result reflected in Corollary 3.1, since the sampling distribution of seems to tend to a normal distribution with zero mean and constant variance. Similar plots were obtained when considering models M1 and M3. They are not shown here for the sake of brevity.

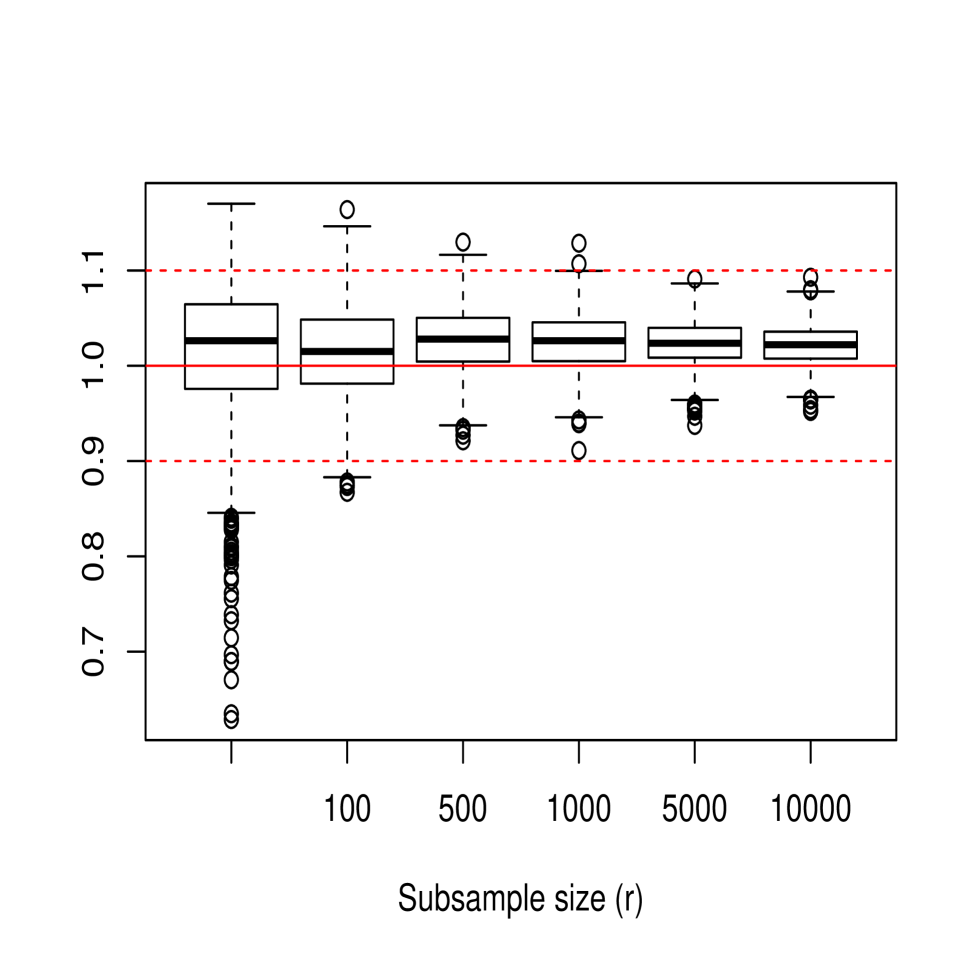

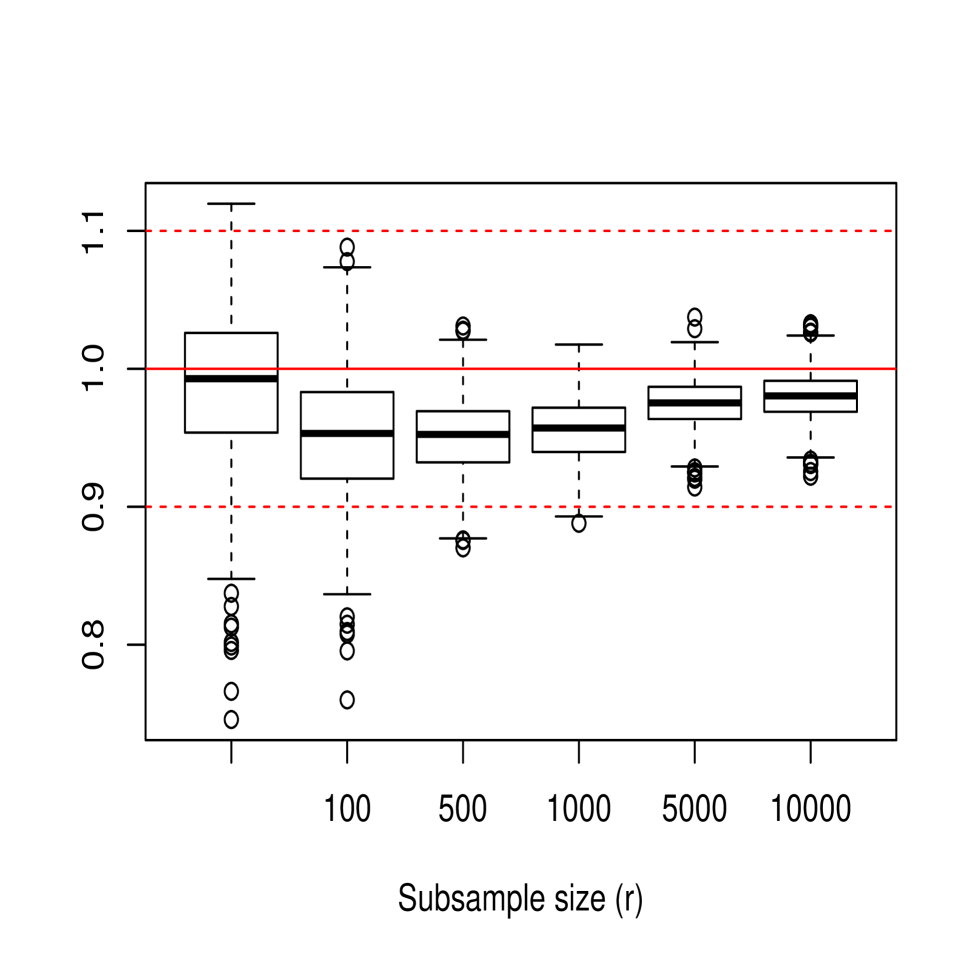

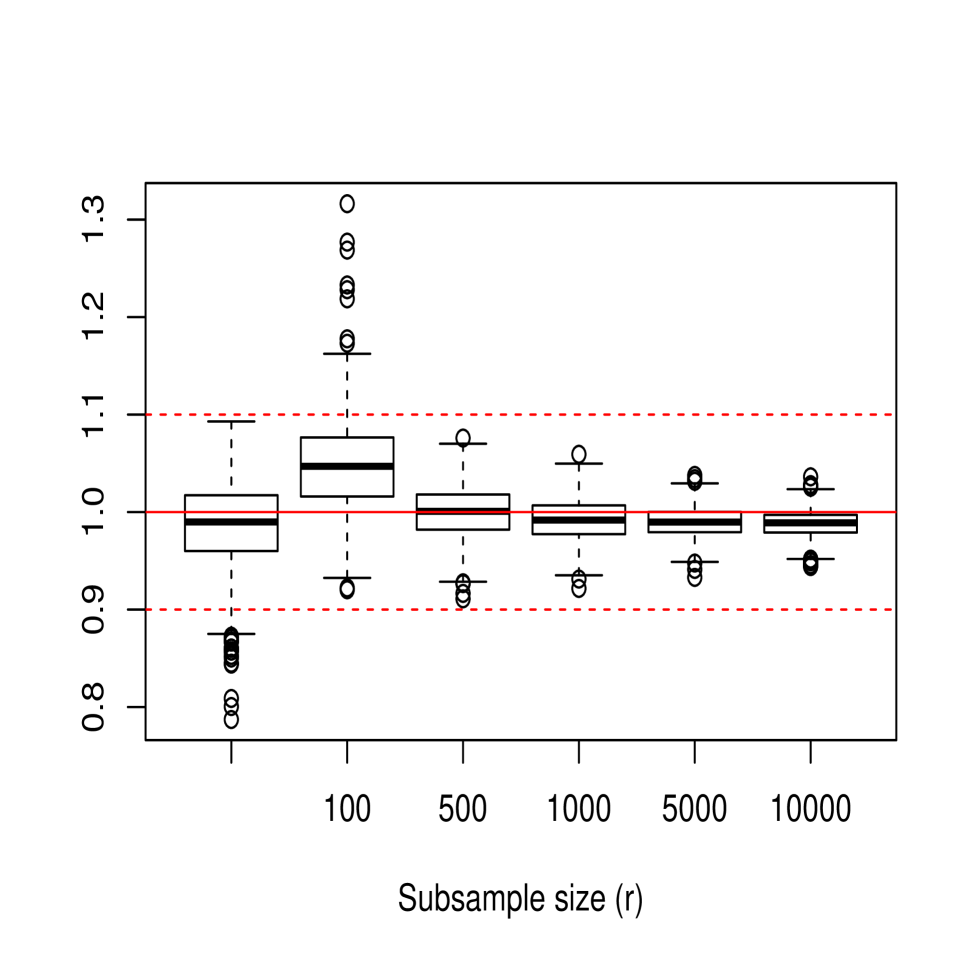

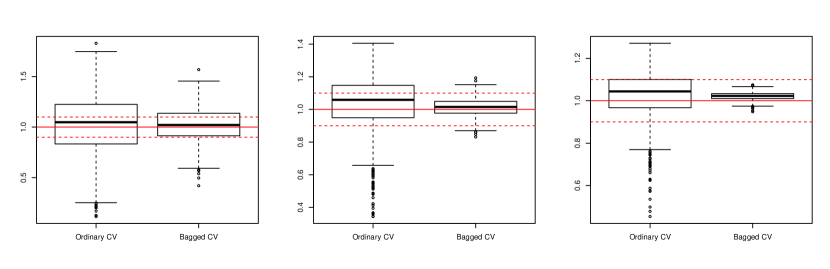

In the next part of the study, we focused on empirically analyzing the performance of the bagged cross-validation bandwidth , given in (15), for different values of , and . Figure 3 shows the sampling distribution of , where denotes either the ordinary or bagged cross-validation bandwidth. For this, 1000 samples of size from models M1, M2 and M3 were generated, considering in the case of the values and . For all three models, it is observed how the bias and variance of the bagging bandwidth decrease as the subsample size increases and how its mean squared error seems to stabilize for values of close to 5000. Moreover, the behavior of the bagging selector turns out to be quite positive even when considering subsample sizes as small as , perhaps excluding the case of model M3 for which the variance of the bagging bandwidth is still relatively high for , although it undergoes a rapid reduction as the subsample size increases slightly.

The effect that has on the mean squared error of the bagged bandwidth is also illustrated in Table 1, which shows the ratio of the mean squared errors of the bagged bandwidth and the ordinary cross-validation bandwidth, MSEMSE, for the three models.

| Model | |||

|---|---|---|---|

| M1 | M2 | M3 | |

| Subsample size () | MSE ratio | ||

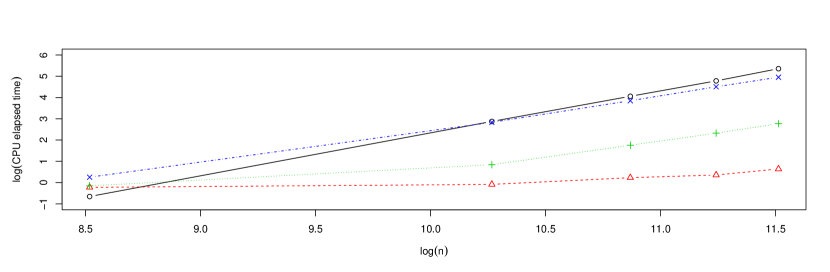

Apart from a better statistical precision of the cross-validation bandwidths selected using bagging, another potential advantage of employing this approach is the reduction of computing times, especially with large sample sizes. To analyze this issue, Figure 4 shows, as a function of the sample size, , the CPU elapsed times for computing the standard and the bagged cross-validation bandwidths. Both variables are shown on a logarithmic scale. In the case of the bagging selector, three different subsample size values () depending on were considered: , and . Calculations were performed in parallel using an Intel Core i5-8600K 3.6GHz CPU. The sample size and a fixed number of subsamples, , were used. In this experiment, binning techniques were employed using a number of bins of for standard cross-validation and in the case of bagged cross-validation. The time required to compute the bagged cross-validation bandwidth was measured considering the three possible growth rates for , mentioned above.

Fitting an appropriate model, these CPU elapsed times could be used to predict the computing times of the different selectors for larger sample sizes. Considering Figure 4, the following log-linear model was used:

| (17) |

where denotes the CPU elapsed time as a function of the original sample size, . In the case of the bagged cross-validation bandwidths, there is a fixed time corresponding to the one required for the setting up of the parallel socket cluster. This time, which does not depend on , or , but only on the CPU and the number of cores used in the parallelization, was estimated to be . Using this value, the corrected CPU elapsed times obtained for the bagged bandwidths, , were employed to fit the log-linear model (17) estimating by least squares and, subsequently, to make predictions. Table 2 shows the predicted CPU elapsed time for ordinary and bagged cross-validation for large sample sizes. Although we should take these predictions with caution, the results in Table 2 serve to illustrate the important reductions in computing time that bagging can provide for certain choices of and , especially for very large sample sizes.

| Sample size () | |||

|---|---|---|---|

| Method | Computing time | ||

| Standard CV | hours | days | years |

| Bagged CV () | seconds | minutes | hours |

| Bagged CV () | minutes | hours | days |

| Bagged CV () | hours | days | years |

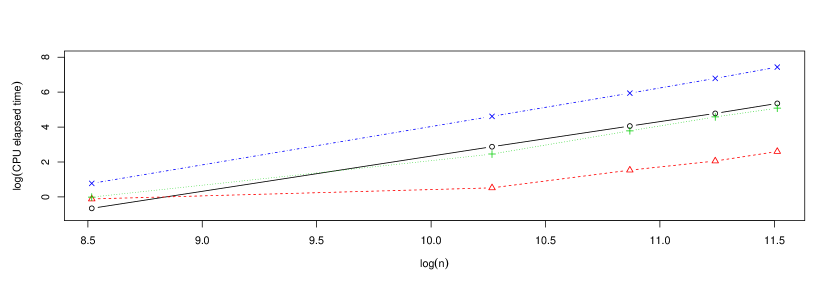

Next, the influence of the number of subsamples, , in the computing times of the bagged badwidths was studied. Similarly to Figure 4, Figure 5 shows the CPU elapsed times for computing the cross-validation bandwidths (standard and bagged). For the bagging method, the number of subsamples, , was selected depending on the original sample size () by . The growth rates used for are the same as in the case of Figure 4.

It should also be stressed that although the quadratic complexity of the cross-validation algorithm is not so critical in terms of computing time for small sample sizes, even in these cases, the use of bagging can still lead to substantial reductions in mean squared error of the corresponding bandwidth selector with respect to the one selected by ordinary cross-validation. In order to show this, samples from model M1 of sizes were simulated and the ordinary and bagged cross-validation bandwidths for each of these samples were computed. In the case of the bagged cross-validation bandwidth, both the size of the subsamples and the number of subsamples were selected depending on , choosing . Figure 6 shows the sampling distribution of , where denotes either the ordinary or bagged cross-validation bandwidth. In the three scenarios, it can be observed the considerable reductions in variance produced by bagging more than offset the slight increases in bias, thus obtaining significant reductions in mean squared error with respect to the ordinary cross-validation bandwidth selector. Specifically, the relative reductions in mean squared error achieved by the bagged bandwidth turned out to be , and for , and , respectively. This experiment was repeated with models M2 and M3, obtaining similar results.

7 Application to COVID-19 data

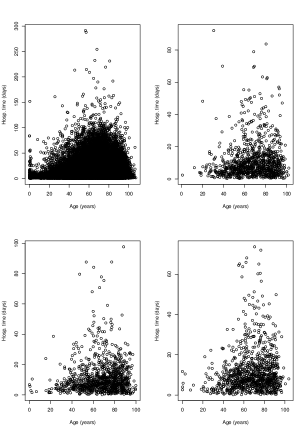

In order to illustrate the performance of the techniques studied in the previous sections, the COVID-19 dataset briefly mentioned in the introduction is considered. It consists of a sample of size which contains the age (the explanatory variable) and the time in hospital (the response variable) of people infected with COVID-19 in Spain from January 1, 2020 to December 20, 2020. Due to the high number of ties in this dataset and in order to avoid problems when performing cross-validation, we decided to remove the ties by jittering the data. In particular, three independent random samples of size , , and , drawn from a continuous uniform distribution defined on the interval , were generated. Then, was added to the original explanatory variable and to the original response variable. Figure 7 shows scatterplots for the complete sample as well as for three randomly chosen subsamples of size .

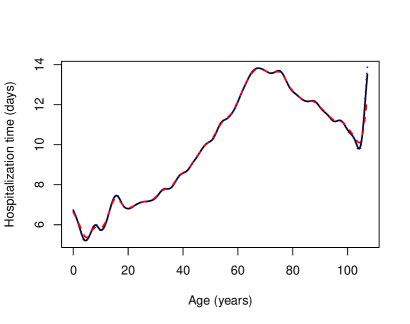

To compute the standard cross-validation bandwidth using binning, the number of bins was set to , that is, roughly of the sample size. The value of the bandwidth thus obtained was and computing it took seconds. For the bagged bandwidth, subsamples of size were considered. Binning was used again for each subsample, fixing the number of bins to . The calculations associated with each subsample were performed in parallel using cores. The value of the bagged bandwidth was and its computing time was seconds. Figure 8 shows the Nadaraya–Watson estimates with both standard and bagged cross-validation bandwidths. For comparative purposes, the local linear regression estimate with direct plug-in bandwidth (Ruppert et al.,, 1995) was also computed.

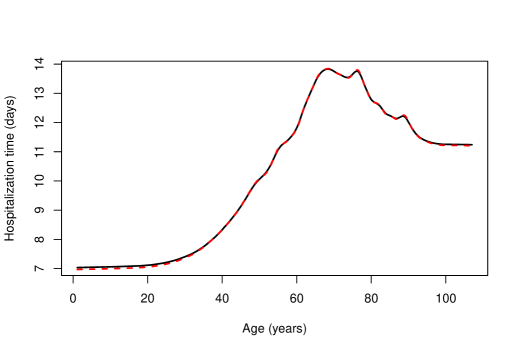

Figure 8 shows that the Nadaraya–Watson estimator with standard cross-validation bandwidth produces a slightly smoother estimate than the one obtained with the bagged bandwidth, the latter being almost indistinguishable from the local linear estimate computed with direct plug-in bandwidth. One can conclude that the expected time that a person infected with COVID-19 will remain in hospital increases non-linearly with age for people under approximately years. This trend is reversed for people aged between and years. This could be due to the fact that patients in this age group are more likely to die and, therefore, end the hospitalization period prematurely. Finally, the expected hospitalization time grows again very rapidly with age for people over years of age, although this could be caused by some boundary effect, since the number of observations for people over years old is very small, specifically , which corresponds to roughly of the total number of observations. In order to avoid this possible boundary effect, the estimators were also fitted to a modified version of the sample in which the explanatory variable was transformed using its own empirical distribution function. The resulting estimators are shown in Figure 9, where the explanatory variable was returned to its original scale by means of its empirical quantile function.

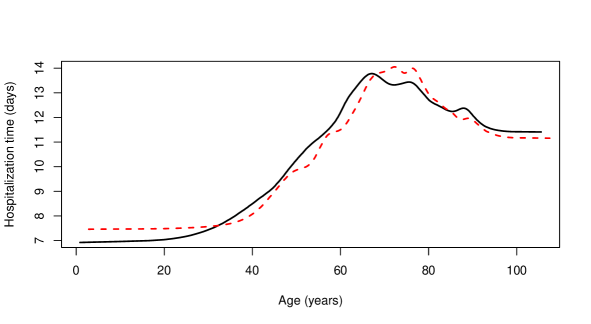

Finally, the same procedure was followed to estimate the expected time in hospital but splitting the patients by gender, as shown in Figure 10. This figure shows that the expected time in hospital is generally shorter for women, except for ages less than years or between and years. Anyhow, the difference in mean time in hospital for men and women never seems to exceed one day. In Figure 10, only the Nadaraya–Watson estimates computed with the bagged cross-validation bandwidths ( for men and for women) are shown. Both the Nadaraya–Watson estimates with standard cross-validation bandwidths ( for men and for women) and the local linear estimates with direct plug-in bandwidths produced very similar and graphically indistinguishable results from those shown in Figure 10.

8 Discussion

The asymptotic properties of the leave-one-out cross-validation bandwidth for the Nadaraya–Watson estimator considering a regression model with random design have been studied in this paper. Additionally, a bagged cross-validation selector have been also analyzed (theoretically and empirically) as an alternative to standard leave-one-out cross-validation. The advantage of this bandwidth selector is twofold: (i) to gain computational efficiency with respect to standard leave-one-out cross-validation by applying the cross-validation algorithm to several subsamples of size rather than a single sample of size , and (ii) to reduce the variability of the leave-one-out cross-validation bandwidth. Although the new bandwidth selector studied in the present paper can outperform the behavior of the standard cross-validation selector even for moderate sample sizes, improvements in computation time become truly significant only for large-sized samples.

The methodology presented in this paper can be applied to other bandwidth selection techniques, apart from cross-validation, as mentioned in Barreiro-Ures et al., (2020). Extensions to bootstrap bandwidth selectors is an interesting topic for a future research. The bootstrap resampling plans proposed by Cao and González-Manteiga, (1993) can be used to derive a closed form for the bootstrap criterion function in nonparametric regression estimation, along the lines presented by Barbeito et al., (2021) who have dealt with matching and prediction.

Another interesting future research topic is the extension of the results presented in this paper to the case of the local linear estimator, whose behavior is known to be superior to that of the Nadaraya–Watson estimator, especially in the boundary regions.

Supplementary Materials

Supplementary materials include proofs and some plots completing the simulation study.

Acknowledgments

The authors would like to thank the Spanish Center for Coordinating Sanitary Alerts and Emergencies for kindly providing the COVID-19 hospitalization dataset.

Funding

This research has been supported by MINECO grant MTM2017-82724-R, and by Xunta de Galicia (Grupos de Referencia Competitiva ED431C-2020-14 and Centro de Investigación del Sistema Universitario de Galicia ED431G 2019/01), all of them through the ERDF.

References

- Barbeito, (2020) Barbeito, I. (2020). Exact bootstrap methods for nonparametric curve estimation. PhD thesis, Universidade da Coruña. https://ruc.udc.es/dspace/handle/2183/26466.

- Barbeito et al., (2021) Barbeito, I., Cao, R., and Sperlich, S. (2021). Bandwidth selection for statistical matching and prediction. Technical report, University of A Coruña. Department of Mathematics. http://dm.udc.es/preprint/Bandwidth_Selection_Matching_Prediction_NOT_BLINDED.pdf and http://dm.udc.es/preprint/SuppMaterial_Bandwidth_Selection_Matching_Prediction_NOT_BLINDED.pdf.

- Barreiro-Ures et al., (2020) Barreiro-Ures, D., Cao, R., Francisco-Fernández, M., and Hart, J. D. (2020). Bagging cross-validated bandwidths with application to big data. Biometrika. https://doi.org/10.1093/biomet/asaa092.

- Breiman, (1996) Breiman, L. (1996). Bagging predictors. Machine Learning, 24:123–140.

- Cao and González-Manteiga, (1993) Cao, R. and González-Manteiga, W. (1993). Bootstrap methods in regression smoothing. Journal of Nonparametric Statistics, 2(4):379–388.

- Cleveland, (1979) Cleveland, W. S. (1979). Robust locally weighted regression and smoothing scatterplots. Journal of the American Statistical Association, 74(368):829–836.

- Fan, (1992) Fan, J. (1992). Design-adaptive nonparametric regression. Journal of the American Statistical Association, 87(420):998–1004.

- Friedman and Hall, (2007) Friedman, J. H. and Hall, P. (2007). On bagging and nonlinear estimation. Journal of Statistical Planning and Inference, 137:669–683.

- Hall and Robinson, (2009) Hall, P. and Robinson, A. P. (2009). Reducing variability of crossvalidation for smoothing parameter choice. Biometrika, 96(1):175–186.

- Härdle et al., (1988) Härdle, W., Hall, P., and Marron, J. S. (1988). How far are automatically chosen regression smoothing parameters from their optimum? Journal of the American Statistical Association, 83(401):86–95.

- Köhler et al., (2014) Köhler, M., Schindler, A., and Sperlich, S. (2014). A review and comparison of bandwidth selection methods for kernel regression. International Statistical Review / Revue Internationale de Statistique, 82(2):243–274.

- Nadaraya, (1964) Nadaraya, E. A. (1964). On estimating regression. Theory of Probability & Its Applications, 9(1):141–142.

- Opitz and Maclin, (1999) Opitz, D. and Maclin, R. (1999). Popular ensemble methods: an empirical study. Journal of Artificial Intelligence Research, 11:169–198.

- Ruppert et al., (1995) Ruppert, D., Sheather, S. J., and Wand, M. P. (1995). An effective bandwidth selector for local least squares regression. Journal of the American Statistical Association, 90(432):1257–1270.

- Stone, (1977) Stone, C. J. (1977). Consistent nonparametric regression. The Annals of Statistics, 5(4):595–620.

- Stone, (1974) Stone, M. (1974). Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society, Series B, 36:111–147.

- Wand and Jones, (1995) Wand, M. P. and Jones, M. C. (1995). Kernel smoothing. Chapman and Hall, London.

- Watson, (1964) Watson, G. S. (1964). Smooth regression analysis. Sankhyā: The Indian Journal of Statistics, Series A, 26(4):359–372.