Bargaining dynamics in exchange networks

Abstract

We consider a one-sided assignment market or exchange network with transferable utility and propose a model for the dynamics of bargaining in such a market. Our dynamical model is local, involving iterative updates of ‘offers’ based on estimated best alternative matches, in the spirit of pairwise Nash bargaining. We establish that when a balanced outcome (a generalization of the pairwise Nash bargaining solution to networks) exists, our dynamics converges rapidly to such an outcome.

We extend our results to the cases of (i) general agent ‘capacity constraints’, i.e., an agent may be allowed to participate in multiple matches, and (ii) ‘unequal bargaining powers’ (where we also find a surprising change in rate of convergence).

Keywords: Nash bargaining, network, dynamics, convergence, matching, assignment, exchange, balanced outcomes.

JEL classification: C78

1 Introduction

Bargaining has been heavily studied in the economics and sociology literature, e.g., [32, 36, 26, 35]. While the case of bargaining between two agents is fairly well understood [32, 36, 26], less is known about the results of bargaining on networks. We consider exchange networks [13, 27], also called assignment markets [39, 33], where agents occupy the nodes of a network, and edges represent potential partnerships between pairs of agents, which can generate some additional value for these agents. To form a partnership, the pair of agents must reach an agreement on how to split the value of the partnership. Agents are constrained in the number of partnerships they can participate in; for instance, under a matching constraint, each agent can participate in at most one partnership. The fundamental question of interest is: Who will partner with whom, and on what terms? Such a model is relevant to the study of the housing market, the labor market, the assignment of interns to hospitals, the marriage market and so on. An assignment model is suitable for markets with heterogeneous indivisible goods, where trades are constrained by a network structure.

Balanced outcomes (also called symmetrically pairwise-bargained allocations in [33] or Nash bargaining solutions in [27]) generalize the pairwise Nash bargaining solution to this setting. The key issue here is the definition of the threats or best alternatives of participants in a match: these are defined by assuming the incomes of other potential partners to be fixed. In a balanced outcome on a network, each pair plays according to the local Nash bargaining solution thus defined. Balanced outcomes have been found to possess some favorable properties:

Predictive power. The set of balanced outcomes refines the set of stable outcomes (the core), where players have no incentive to deviate from their current partners. For instance, in the case of a two player network, all possible deals are stable, but there is a unique balanced outcome. Balanced outcomes have been found to capture various experimentally observed effects on small networks [13, 27].

Computability. Kleinberg and Tardos [27] provide an efficient centralized algorithm to compute balanced outcomes. They also show that balanced outcomes exist if and only if stable outcomes exist.

Connection to cooperative game theory. The set of balanced outcomes is identical to the core intersection prekernel of the corresponding cooperative game [33, 4].

However, this leaves unanswered the question of whether balanced outcomes can be predictive on large networks, since there was previously no dynamical description of how players can find such an outcome via a bargaining process.

In this work, we consider an assignment market with arbitrary network structure and edge weights that correspond to the value of the potential partnership on that edge. We present a model for the bargaining process in such a network setting, under a capacity constraint on the number of exchanges that each player can establish. We define a model that satisfies two important requirements: locality and convergence.

Locality. It is reasonable to assume that agents have accurate information about their neighbors in the network (their negotiation partners). Specifically, an agent is expected to know the weights of the edges with each of her negotiation partners. Further, an agent may estimate the ‘best alternative’ of each negotiation partner in the current ‘state’ of negotiations, for instance based on her conversation with that partner. (We do not present a game theoretic treatment with fully rational/strategic agents in this work, cf. Section 6.) On the other hand, it is unlikely that the offer made to an agent during a negotiation depends on arbitrary other agents in the network. We thus require that agents make ‘myopic’ choices on the basis of their neighborhood in the exchange network. This is consistent with the bulk of the game theory literature on learning [18, 10].

Convergence. The duration of a negotiation is unlikely to depend strongly on the overall network size. For instance, the negotiation on the price of a house should not depend too much on the size of the town in which it takes place, all other things being equal. Thus a realistic model for negotiation should converge to a fixed point (hence to a set of exchange agreements) in a time roughly independent of the network size.

Our dynamical model is fairly simple. Players compute the current best alternative to each exchange, both for them, and for their partner. On the basis of that, they make a new offer to their partner according to the pairwise Nash bargaining solution. This of course leads to a change in the set of best alternatives at the next time step. We make the assumption that ‘pairing’ occurs at the end, or after several iterative updates, thus suppressing the effect of agents pairing up and leaving.

This dynamics is of course local. Each agent only needs to know the offers she is receiving, as well as the offers that her potential partner is receiving. The technical part of this paper is therefore devoted to the study of the convergence properties of this dynamics.

Remarkably, we find that it converges rapidly, in time roughly independent of the network size. Further its fixed points are not arbitrary, but rather a suitable generalization of Nash bargaining solutions [13, 33] introduced in the context of assignment markets and exchange networks (also referred to as ‘balanced outcomes’ [27] or ‘symmetrically pairwise-bargained allocations’ [33]). Thus, our work provides a dynamical justification of Nash bargaining solutions in assignment markets.

We now present the mathematical definitions of bargaining networks and balanced outcomes.

The network consists of a graph $G = (V, E)$, with positive weights $w_{ij} > 0$ associated to the edges $(i,j) \in E$. A player sits at each node of this network, and two players connected by edge $(i,j)$ can share a profit of $w_{ij}$ dollars if they agree to trade with each other. Each player can trade with at most one of her neighbors (this is called the $1$-exchange rule), so that a set of valid trading pairs forms a matching $M$ in the graph $G$.

We define an outcome or trade outcome as a pair $(M, \gamma)$ where $M \subseteq E$ is a matching of $G$, and $\gamma = \{\gamma_i : i \in V\}$ is the vector of players’ profits. This means $\gamma_i \ge 0$, and $(i,j) \in M$ implies $\gamma_i + \gamma_j = w_{ij}$, whereas for every node $i$ left unmatched by $M$ we have $\gamma_i = 0$.

A balanced outcome, or Nash bargaining (NB) solution, is a trade outcome $(M, \gamma)$ that satisfies the additional requirements of stability and balance. Denote by $\partial i$ the set of neighbors of node $i$ in $G$.

Stability. If player $i$ is trading with $j$, then she cannot earn more by simply changing her trading partner. Formally $\gamma_i + \gamma_k \ge w_{ik}$ for all $(i,k) \in E \setminus M$.

Balance. If player $i$ is trading with $j$, then the surplus of $i$ over her best alternative must be equal to the surplus of $j$ over his best alternative. Mathematically,

$$
\gamma_i - \max_{k \in \partial i \setminus j} (w_{ik} - \gamma_k)_+ \;=\; \gamma_j - \max_{l \in \partial j \setminus i} (w_{jl} - \gamma_l)_+ \tag{1}
$$

for all $(i,j) \in M$. Here $(x)_+$ refers to the non-negative part of $x$, i.e. $(x)_+ \equiv \max(0, x)$.

It turns out that the interplay between the $1$-exchange rule and the stability and balance conditions results in highly non-trivial predictions regarding the influence of network structure on individual earnings.
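The stability and balance conditions can be checked mechanically for a proposed outcome. The following Python sketch is only an illustration: the function names, the dictionary encoding of the weighted graph and the tolerance `tol` are assumptions made for this example, and the best alternative of a node with no other neighbor is taken to be 0.

```python
def weight(weights, i, j):
    """Weight of the undirected edge {i, j}."""
    return weights[(i, j)] if (i, j) in weights else weights[(j, i)]


def neighbors(weights, i):
    """Set of neighbors of node i."""
    return {b if a == i else a for (a, b) in weights if i in (a, b)}


def best_alternative(weights, gamma, i, j):
    """Best payoff i could obtain from a neighbor other than j,
    holding the incomes of those neighbors fixed."""
    alts = [max(0.0, weight(weights, i, k) - gamma[k])
            for k in neighbors(weights, i) if k != j]
    return max(alts, default=0.0)


def is_trade_outcome(weights, matching, gamma, tol=1e-9):
    """Matched pairs split their edge weight; unmatched nodes earn 0."""
    matched = [v for edge in matching for v in edge]
    if len(matched) != len(set(matched)):
        return False  # a node appears in two pairs: not a matching
    nodes = {v for edge in weights for v in edge}
    if any(gamma[v] < -tol for v in nodes):
        return False
    if any(abs(gamma[i] + gamma[j] - weight(weights, i, j)) > tol
           for (i, j) in matching):
        return False
    return all(abs(gamma[v]) <= tol for v in nodes - set(matched))


def is_stable(weights, gamma, tol=1e-9):
    """No pair of neighbors could jointly earn more by trading together."""
    return all(gamma[i] + gamma[j] >= w - tol
               for (i, j), w in weights.items())


def is_balanced(weights, matching, gamma, tol=1e-9):
    """Each matched pair has equal surplus over best alternatives."""
    for (i, j) in matching:
        s_i = gamma[i] - best_alternative(weights, gamma, i, j)
        s_j = gamma[j] - best_alternative(weights, gamma, j, i)
        if abs(s_i - s_j) > tol:
            return False
    return True


def is_balanced_outcome(weights, matching, gamma, tol=1e-9):
    return (is_trade_outcome(weights, matching, gamma, tol)
            and is_stable(weights, gamma, tol)
            and is_balanced(weights, matching, gamma, tol))
```

On a path of four nodes with unit weights and matching {(1,2), (3,4)}, the even split (1/2 for every node) passes the stability check but fails the balance check, whereas the earnings (1/3, 2/3, 2/3, 1/3) pass both.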

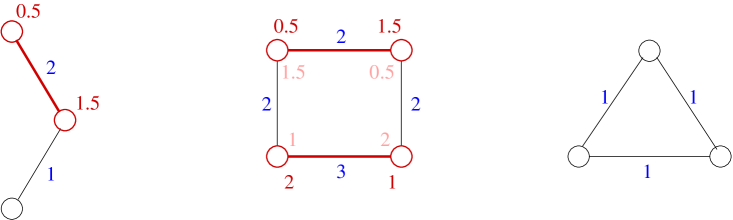

We conclude with some examples of networks and corresponding balanced outcomes (see Figure 1).

The network has a unique balanced outcome with the nodes and forming a partnership with a split of . Node remains isolated with . The best alternative of node is , whereas it is for node , and the excess of is split equally between and , so that each earns a surplus of over their outside alternatives.

The network admits multiple balanced outcomes. Each balanced outcome involves the pairing . The earnings are balanced, and so is the symmetric counterpart of this earnings vector . In fact, every convex combination of these two earnings vectors is also balanced.

The network does not admit any stable outcome, and hence does not admit any balanced outcomes. To see this, observe that for any outcome, there is always a pair of agents who can benefit by deviating.

1.1 Related work

Recall the LP relaxation to the maximum weight matching problem

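$$
\begin{aligned}
\text{maximize} \quad & \sum_{(i,j) \in E} w_{ij}\, x_{ij} \\
\text{subject to} \quad & \sum_{j \in \partial i} x_{ij} \le 1 \quad \forall\, i \in V, \qquad x_{ij} \ge 0 \quad \forall\, (i,j) \in E.
\end{aligned}
\tag{2}
$$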

Proposition 1.

Following [33, 13], Kleinberg and Tardos [27] first considered balanced outcomes on general exchange networks and proved that: a network admits a balanced outcome if and only if it admits a stable outcome.

The same paper describes a polynomial algorithm for constructing balanced outcomes. This is in turn based on the dynamic programming algorithm of Aspvall and Shiloach [1] for solving systems of linear inequalities. However, [27] left open the question of whether the actual bargaining process converges to balanced outcomes.

Rochford [33], and recent work by Bateni et al. [4], relate the assignment market problem to the extensive literature on cooperative game theory. They find that balanced outcomes correspond to the core intersect prekernel of the corresponding cooperative game. A consequence of the connection established is that the results of Kleinberg and Tardos [27] are implied by previous work in the economics literature. The existence result follows from Proposition 1, and the fact that if the core of a cooperative game is non-empty then the core intersect prekernel is non-empty. Efficient computability follows from work by Faigle et al. [17], who provide a polynomial time algorithm for finding balanced outcomes. (In fact, Faigle et al. [17] work in the more general setting of cooperative games. The algorithm involves local ‘transfers’, alternating at regular intervals with a non-local LP based step.)

However, [33, 4] also leave open the twin questions of finding (i) a natural model for bargaining, and (ii) convergence (or not) to NB solutions.

Azar and co-authors [2] studied the question as to whether a balanced outcome can be produced by a local dynamics, and were able to answer it positively. (Stearns [43] defined a very similar dynamics and proved convergence for general cooperative games. The dynamics can be interpreted in terms of the present model using the correspondence with cooperative games discussed in [4].) Their results left, however, two outstanding challenges: (i) The algorithm analyzed by these authors first selects a matching in the graph using the message passing algorithm studied in [5, 22, 6, 37], corresponding to the pairing of players that trade. In a second phase the algorithm determines the profit of each player. While such an algorithm can be implemented in a distributed way, Azar et al. point out that it is not entirely realistic. Indeed the rules of the dynamics change abruptly after the matching is found. Further, if the pairing is established at the outset, the players lose their bargaining power; (ii) The bound on the convergence time proved in [2] is exponential in the network size, and therefore does not provide a solid justification for convergence to NB solutions in large networks.

1.2 Our contribution

The present paper (based partly on our recent conference papers [25, 23]) aims at tackling these challenges. First we introduce a natural dynamics that is interpretable as a realistic negotiation process. We show that the fixed points of the dynamics are in one to one correspondence with NB solutions, and prove that it converges to such solutions. Moreover, we show that the convergence to approximate NB solutions is fast. Furthermore we are able to treat the more general case of nodes with unsymmetrical bargaining powers and generalize the result of [27] on existence of NB solutions to this context. These results are obtained through a new and seemingly general analysis method that builds on powerful quantitative estimates on mappings in Banach spaces [3]. For instance, our approach allows us to prove that a simple variant of the edge balancing dynamics of [2] converges in polynomial time (see Section 7).

We consider various modifications to the model and analyze the results. One direction is to allow arbitrary integer ‘capacity constraints’ that capture the maximum number of deals that a particular node is able to simultaneously participate in (the model defined above corresponds to a capacity of one for each node). Such a model would be relevant, for example, in the context of a job market, where a single employer may have more than one opening available. We show that many of our results generalize to this model in Section 5.

Another well motivated modification is to depart from the assumption of symmetry/balance and allow nodes to have different ‘bargaining powers’. Rochford and Crawford [34] mention this modification in passing, with the remark that it “…seems to yield no new insights”. Indeed, we show here (see Section 4) that our asymptotic convergence results generalize to the unsymmetrical case. However, surprisingly, we find that the natural dynamics may now take exponentially long to converge. We find that this can occur even in a two-sided network with the ‘sellers’ having slightly more bargaining power than the ‘buyers’. Thus, a seemingly minor change in the model appears to drastically change the convergence properties of our dynamics. Other algorithms like that of Kleinberg and Tardos [27] and Faigle et al [17] also fail to generalize, suggesting that, in fact, we may lose computability of solutions in allowing asymmetry. However, we show that a suitable modification to the bargaining process yields a fully polynomial time approximation scheme (FPTAS) for the unequal bargaining powers. The caveat is that this algorithm, though local, is not a good model for bargaining because it fixes the matching at the outset (cf. comment above).

Our dynamics and its analysis have similarities with a series of papers on using max-product belief propagation for the weighted matching problems [5, 22, 6, 37]. We discuss that connection and extensions of our results to those settings in one of our conference papers [25, Appendix F]. We obtain a class of new message passing algorithms to compute the maximum weight matching, with belief propagation and our dynamics being special cases.

Related work in sociology

Besides economists, sociologists have been interested in such markets, called exchange networks in that literature. The key question addressed by network exchange theory is that of how network structure influences the power balance between agents. Numerous predictive frameworks have been suggested in this context including generalized Nash bargaining solutions [13]. Moreover, controlled experiments [45, 29, 41] have been carried out by sociologists. The typical experimental set-up studies exactly the model of assignment markets proposed by economists [39, 33]. It is often the case that players are provided information only about their immediate neighbors. Typically, a number of ‘rounds’ of negotiations are run, with no change in the network, so as to allow the system to reach an ‘equilibrium’. Further, players are usually not provided much information beyond who their immediate neighbors are, and the value of the corresponding possible deals.

1.3 Outline of the paper

Our dynamical model of bargaining in a network is described in Section 2. We state our main results characterizing fixed points and convergence of the dynamics in Section 3.

We present two extensions of our model. In Section 4 we investigate the unsymmetrical case with nodes having different bargaining powers. We find that the (generalized) dynamics may take exponentially long to converge in this case, but we provide a modified local algorithm that provides an FPTAS. We consider the case of general capacity constraints in Section 5, and show that our main results generalize to this case.

In Section 7, we prove Theorems 2 and 3 on convergence of our dynamics. We characterize fixed points in Section 8 with a proof of Theorem 1 (the proof of Theorem 4 is deferred to Appendix D). Section 9 shows polynomial time convergence on bipartite graphs (proof of Theorem 5).

We present a discussion of our results in Section 6.

Appendix A contains a discussion on variations of the natural dynamics including time and node varying damping factors and asynchronous updates.

2 Dynamical model

Consider a bargaining network $G = (V, E)$, where the vertices represent agents, and the edges represent potential partnerships between them. There is a positive weight $w_{ij} > 0$ on each edge $(i,j) \in E$, representing the fact that players connected by edge $(i,j)$ can share a profit of $w_{ij}$ dollars if they agree to trade with each other. Each player can trade with at most one of her neighbors (this is called the $1$-exchange rule), so that a set of valid trading pairs forms a matching $M$ in the graph $G$. We define a trade outcome as in Section 1, in accordance with the above constraints.

We expect a natural dynamical description of a bargaining network to have the following properties: (i) It should be local, i.e. involve limited information exchange along edges and processing at nodes; (ii) It should be time invariant, i.e. the players’ behavior should be the same/similar on identical local information at different times; (iii) It should be interpretable, i.e. the information exchanged along the edges should have a meaning for the players involved, and should be consistent with reasonable behavior for players.

In the model we propose, at each time $t$, each player sends a message to each of her neighbors. The message has the meaning of ‘best current alternative’. We denote the message from player $i$ to player $j$ by $\alpha^t_{i \setminus j}$. Player $i$ is telling player $j$ that she (player $i$) currently estimates earnings of $\alpha^t_{i \setminus j}$ elsewhere, if she chooses not to trade with $j$.

The vector of all such messages is denoted by $\alpha^t$. Each agent makes an ‘offer’ to each of her neighbors, based on her own ‘best alternative’ and that of her neighbor. The offer from node $i$ to $j$ is denoted by $m^t_{i \to j}$ and is computed according to

$$
m^t_{i \to j} = (w_{ij} - \alpha^t_{i \setminus j})_+ - \tfrac{1}{2}\,(w_{ij} - \alpha^t_{i \setminus j} - \alpha^t_{j \setminus i})_+ . \tag{4}
$$

It is easy to deduce that this definition corresponds to the following policy: (i) An offer is always non-negative, and a positive offer is never larger than $w_{ij} - \alpha^t_{i \setminus j}$ (no player is interested in earning less than her current best alternative); (ii) Subject to the above constraints, the surplus $w_{ij} - \alpha^t_{i \setminus j} - \alpha^t_{j \setminus i}$ (if non-negative) is shared equally. We denote by $m^t$ the vector of offers.

Notice that $m^t$ is just a deterministic function of $\alpha^t$. In the rest of the paper we shall describe the network status uniquely through the latter vector, and use $m^t|_{\alpha^t}$ to denote $m^t$ defined by (4) when required so as to avoid ambiguity.

Each node can estimate its potential earning based on the network status, using

$$
\gamma^t_i = \max_{k \in \partial i} m^t_{k \to i}, \tag{5}
$$

the corresponding vector being denoted by $\gamma^t$. Notice that $\gamma^t$ is also a function of $\alpha^t$.

Messages are updated synchronously through the network, according to the rule

$$
\alpha^{t+1}_{i \setminus j} = (1 - \kappa)\, \alpha^t_{i \setminus j} + \kappa \max_{k \in \partial i \setminus j} m^t_{k \to i} . \tag{6}
$$

Here $\kappa \in (0, 1]$ is a ‘damping’ factor: $(1 - \kappa)$ can be thought of as the inertia on the part of the nodes to update their current estimates (represented by outgoing messages). The use of $\kappa < 1$ eliminates pathological behaviors related to synchronous updates. In particular, we observe oscillations on even-length cycles in the undamped synchronous version. We mention here that in Appendix A we present extensions of our results to various update schemes (e.g., asynchronous updates, time-varying damping factor).
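The update scheme described in this section (offers computed from messages, earnings estimates from offers, and damped synchronous message updates) can be simulated in a few lines. The Python sketch below is a minimal illustration under stated assumptions: offers split any non-negative surplus equally after each side keeps its own best alternative, a maximum over an empty set of neighbors is taken to be 0, and all messages start at 0. The function names, the dictionary encoding of the graph and the default values of `kappa` and `steps` are choices made for this example only.

```python
from collections import defaultdict


def pos(x):
    """Non-negative part of x."""
    return max(0.0, x)


def build_neighbors(weights):
    nbrs = defaultdict(set)
    for (i, j) in weights:
        nbrs[i].add(j)
        nbrs[j].add(i)
    return nbrs


def edge_weight(weights, i, j):
    return weights[(i, j)] if (i, j) in weights else weights[(j, i)]


def offers(weights, alpha):
    """Offer from i to j: what is left after i keeps her best alternative,
    minus half of the surplus when the surplus is non-negative (assumed form)."""
    m = {}
    for (i, j), a_ij in alpha.items():
        w = edge_weight(weights, i, j)
        m[(i, j)] = pos(w - a_ij) - 0.5 * pos(w - a_ij - alpha[(j, i)])
    return m


def earnings(nbrs, m):
    """Each node's earnings estimate is the best offer it receives."""
    return {i: max((m[(k, i)] for k in nbrs[i]), default=0.0) for i in nbrs}


def step(weights, nbrs, alpha, kappa):
    """One damped synchronous update of all messages."""
    m = offers(weights, alpha)
    new_alpha = {}
    for (i, j), a_ij in alpha.items():
        best_alt = max((m[(k, i)] for k in nbrs[i] if k != j), default=0.0)
        new_alpha[(i, j)] = (1.0 - kappa) * a_ij + kappa * best_alt
    return new_alpha


def run(weights, kappa=0.5, steps=200):
    nbrs = build_neighbors(weights)
    alpha = {(i, j): 0.0 for i in nbrs for j in nbrs[i]}
    for _ in range(steps):
        alpha = step(weights, nbrs, alpha, kappa)
    return alpha, earnings(nbrs, offers(weights, alpha))
```

For a single edge of weight 1, `run({(1, 2): 1.0})` returns earnings of 0.5 for both nodes. For a path of four nodes with unit weights, the earnings estimates settle near (1/3, 2/3, 2/3, 1/3).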

Remark 2.

An update under the natural dynamics requires agent to perform arithmetic operations, and operations in total.

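Let $W \equiv \max_{(i,j) \in E} w_{ij}$. Often in the paper we take $W = 1$, since this can always be achieved by rescaling the problem, which is the same as changing units. It is easy to see that $m^t \in [0, W]^{2|E|}$, $\gamma^t \in [0, W]^{|V|}$ and $\alpha^t \in [0, W]^{2|E|}$ at all times (unless the initial condition violates these bounds). Thus we call $\alpha$ a ‘valid’ message vector if $\alpha \in [0, W]^{2|E|}$.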

2.1 An Example

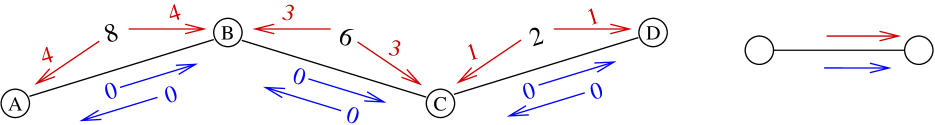

We consider a simple graph with , , , and .

The unique maximum weight matching on this graph is . By Proposition 1, stable outcomes correspond to matching and can be parameterized as

where are constrained as

For instance, the set of stable outcomes (all on matching ) includes , , and so on. Now suppose we impose the balance condition Eq. (1) in addition, i.e., we look for balanced outcomes. Using the algorithm of Kleinberg and Tardos [27], we find that the network admits a unique balanced outcome .

Now we consider the evolution of the natural dynamics proposed above on the graph . We arbitrarily choose to study the initialization , i.e., each node initially estimates its best alternatives to be with respect to each neighbor. We use the undamped update for simplicity of presentation. (Our results assume damping to avoid oscillatory behavior. However, it turns out that on graphs with no even cycles, for instance the graph under consideration, oscillations do not occur.) The evolution of the estimates and offers under the dynamics is shown in Figure 2. We now comment on a few noteworthy features demonstrated by this example. In the first step, nodes and receive their best offers from each other, node receives its best offer from and node receives its best offer from . Thus, we might expect nodes and to be considering the formation of a partnership already (though the terms are not yet clear), but this is not the case for and . After one iteration, at , both pairs and receive their best offers from each other. In fact, this property remains true at all future times (the case is shown). However, the vectors and continue to evolve from one iteration to the next. At iteration , a fixed point is reached, i.e., and remain unchanged for . Moreover, we notice that the fixed point captures the unique balanced outcome on this graph, with the matching and the splits and emerging from the fixed point .

We remark here that convergence to a fixed point in a finite number of iterations is not a general phenomenon. This occurs as a consequence of the simple example considered and the choice of an undamped update. However, as we prove below, we always obtain rapid convergence of the dynamics, and fixed points always correspond to balanced outcomes, on any graph possessing balanced outcomes, and for any initialization.

3 Main results: Fixed point properties and convergence

Our first result is that fixed points of the update equations (4), (6) (hereafter referred to as ‘natural dynamics’) are indeed in correspondence with Nash bargaining solutions when such solutions exist. Note that the fixed points are independent of the damping factor . The correspondence with NB solutions includes pairing between nodes, according to the following notion of induced matching.

Definition 3.

We say that a state (or just ) induces a matching if the following happens. For each node receiving non-zero offers (), is matched under and gets its unique best offer from node such that . Further, if then is not matched in . In other words, pairs in receive unique best offers that are positive from their respective matched neighbors whereas unmatched nodes receive no non-zero offers.

Consider the LP relaxation to the maximum weight matching problem (2). A feasible point $x$ for LP (2) is called half-integral if for all $e \in E$, $x_e \in \{0, \tfrac{1}{2}, 1\}$. It is well known that problem (2) always has an optimum $x^*$ that is half-integral [38]. An LP with a fully integral optimum $x^*$ ($x^*_e \in \{0, 1\}$ for all $e \in E$) is called tight.
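Because the LP relaxation always has a half-integral optimum, tightness can be checked on very small instances by brute force over half-integral points. The Python sketch below is an illustration only, not an efficient method: it enumerates all $3^{|E|}$ assignments, so it is usable only for a handful of edges. The function names and the dictionary encoding of the graph are assumptions made for this example.

```python
from fractions import Fraction
from itertools import product


def lp_optima_half_integral(weights):
    """Enumerate feasible half-integral points of the matching LP and
    return the best objective value together with all maximizers."""
    edges = list(weights)
    nodes = {v for e in edges for v in e}
    values = (Fraction(0), Fraction(1, 2), Fraction(1))
    best, maximizers = None, []
    for x in product(values, repeat=len(edges)):
        # Total fractional matching load at each node must not exceed 1.
        load = {v: Fraction(0) for v in nodes}
        for (i, j), xe in zip(edges, x):
            load[i] += xe
            load[j] += xe
        if any(total > 1 for total in load.values()):
            continue
        value = sum(Fraction(weights[e]) * xe for e, xe in zip(edges, x))
        if best is None or value > best:
            best, maximizers = value, [x]
        elif value == best:
            maximizers.append(x)
    return best, maximizers


def is_tight(weights):
    """True if some optimum of the LP relaxation is fully integral."""
    _, maximizers = lp_optima_half_integral(weights)
    return any(all(xe in (0, 1) for xe in x) for x in maximizers)
```

On a triangle with unit weights the best value is 3/2, attained only by placing 1/2 on every edge, so the LP is not tight. On a path of four nodes with unit weights the optimum is integral and the LP is tight.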

Theorem 1.

Let be an instance admitting one or more Nash bargaining solutions, i.e. the LP (2) admits an integral optimum.

(a) Unique LP optimum (generic case): Suppose the optimum is unique corresponding to matching . Let be a fixed point of the natural dynamics. Then induces matching and is a Nash bargaining solution. Conversely, every Nash bargaining solution has and corresponds to a unique fixed point of the natural dynamics with .

(b) Let be a fixed point of the natural dynamics. Then is a Nash bargaining solution for any integral maximum weight matching . Conversely, if is a Nash bargaining solution, is a maximum weight matching and there is a unique fixed point of the natural dynamics with .

We prove Theorem 1 in Section 8. Theorem 12 in Appendix C extends this characterization of fixed points of the natural dynamics to cases where Nash bargaining solutions do not exist.

Remark 4.

The condition that a tight LP (2) has a unique optimum is generic (see Appendix C, Remark 18). Hence, fixed points induce a matching for almost all instances (cf. Theorem 1(a)). Further, in the non-unique optimum case, we cannot expect an induced matching, since there is always some node with two equally good alternatives.

The existence of a fixed point of the natural dynamics is immediate from Brouwer’s fixed point theorem. Our next result says that the natural dynamics always converges to a fixed point. The proof is in Section 7.

Theorem 2.

The natural dynamics has at least one fixed point. Moreover, for any initial condition with , converges to a fixed point.

Note that Theorem 2 does not require any condition on LP (2). It also does not require uniqueness of the fixed point.

With Theorems 1 and 2, we know that in the limit of a large number of iterations, the natural dynamics yields a Nash bargaining solution. However, this still leaves unanswered the question of the rate of convergence of the natural dynamics. Our next theorem addresses this question, establishing fast convergence to an approximate fixed point.

However, before stating the theorem we define the notion of approximate fixed point.

Definition 5.

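We say that $\alpha$ is an $\epsilon$-fixed point, or $\epsilon$-FP in short, if, for all $(i,j) \in E$ we have

$$
\Big|\, \alpha_{i \setminus j} - \max_{k \in \partial i \setminus j} m_{k \to i} \,\Big| \le \epsilon , \tag{7}
$$

and similarly for $\alpha_{j \setminus i}$. Here, $m$ is obtained from $\alpha$ through Eq. (4) (i.e., $m = m|_{\alpha}$).

Note that $\epsilon$-fixed points are also defined independently of the damping $\kappa$.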
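The approximate fixed point condition can be read as a residual: for each message, how far it is from the best offer it is supposed to track. The Python sketch below computes this residual for a given message vector. It assumes the equal-split form of the offers and treats a maximum over an empty set of neighbors as 0; the function names and the dictionary encoding are assumptions made for this example.

```python
def fixed_point_residual(weights, alpha):
    """Largest gap between a message and the best offer it should track."""
    def w(i, j):
        return weights[(i, j)] if (i, j) in weights else weights[(j, i)]

    # Offers implied by the messages (equal split of non-negative surplus).
    m = {(i, j): max(0.0, w(i, j) - a)
                 - 0.5 * max(0.0, w(i, j) - a - alpha[(j, i)])
         for (i, j), a in alpha.items()}

    residual = 0.0
    for (i, j), a in alpha.items():
        # Offers arriving at i from neighbors other than j.
        incoming = [m[(k, t)] for (k, t) in m if t == i and k != j]
        residual = max(residual, abs(a - max(incoming, default=0.0)))
    return residual


def is_eps_fixed_point(weights, alpha, eps):
    return fixed_point_residual(weights, alpha) <= eps
```

On a path of four nodes with unit weights, the all-zero message vector has residual 0.5, so it is an approximate fixed point only for tolerances of at least 0.5.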

Theorem 3.

Let be an instance with weights . Take any initial condition . Take any . Define

| (8) |

Then for all , is an -fixed point. (Here )

Remark 6.

For any , it is possible to construct an example such that it takes iterations to reach an -fixed point. This lower bound can be improved to in the unequal bargaining powers case (cf. Section 4). However, in our constructions, the size of the example graph grows with decreasing in each case.

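We are left with the problem of relating approximate fixed points to approximate Nash bargaining solutions. We use the following definition of $\epsilon$-Nash bargaining solution, that is analogous to the standard definition of $\epsilon$-Nash equilibrium (e.g., see [14]).

Definition 7.

We say that $(M, \gamma)$ is an $\epsilon$-Nash bargaining solution if it is a valid trade outcome that is stable and satisfies $\epsilon$-balance. $\epsilon$-balance means that for every $(i,j) \in M$ we have

$$
\Big|\, \big[\gamma_i - \max_{k \in \partial i \setminus j} (w_{ik} - \gamma_k)_+\big] - \big[\gamma_j - \max_{l \in \partial j \setminus i} (w_{jl} - \gamma_l)_+\big] \,\Big| \le \epsilon . \tag{9}
$$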

A subtle issue needs to be addressed. For an approximate fixed point to yield an approximate Nash bargaining solution, a suitable pairing between nodes is needed. Note that our dynamics does not force a pairing between the nodes. Instead, a pairing should emerge quickly from the dynamics. In other words, nodes on the graph should be able to identify their trading partners from the messages being exchanged. As before, we use the notion of an induced matching (see Definition 3).

Definition 8.

Consider LP (2). Let be the set of half integral points in the primal polytope. Let be an optimum. Then the LP gap is defined as .

Theorem 4.

Let be an instance for which the LP (2) admits a unique optimum, and this is integral, corresponding to matching . Let the gap be . Let be an -fixed point of the natural dynamics, for some . Let be the corresponding earnings estimates. Then induces the matching and is an -Nash bargaining solution. Conversely, every -Nash bargaining solution has for any .

Note that is equivalent to the unique optimum condition (cf. Remarks 2, 5). The proof of this theorem requires generalization of the analysis used to prove Theorem 1 to the case of approximate fixed points. Since its proof is similar to the proof of Theorem 1, we defer it to Appendix D. We stress, however, that Theorem 4 is not, in any sense, an obvious strengthening of Theorem 1. In fact, this is a delicate property of approximate fixed points that holds only in the case of balanced outcomes. This characterization breaks down in the face of a seemingly benign generalization to unequal bargaining powers (cf. Section 4 and [23, Section 4]).

Theorem 4 holds for all graphs, and is, in a sense, the best result we can hope for. To see this, consider the following immediate corollary of Theorems 3 and 4.

Corollary 9.

Let be an instance with weights . Suppose LP (2) admits a unique optimum, and this is integral, corresponding to matching . Let the gap be . Then for any , there exists such that for any , induces the matching and is an -NB solution.

Proof.

Corollary 9 implies that for any , the natural dynamics finds an -NB solution in time .

This result is essentially the strongest bound we can hope for in the following sense. First, note that we need to find (see converse in Theorem 4) and balance the allocations. Max product belief propagation, a standard local algorithm for computing the maximum weight matching, requires iterations to converge, and this bound is tight [6]. Similar results hold for the Auction algorithm [7] which also locally computes . Moreover, max product BP and the natural dynamics are intimately related (see [25]), with the exception that max product is designed to find , but this is not true for the natural dynamics. Corollary 9 shows that natural dynamics only requires a time that is polynomial in the same parameters and to find , while it simultaneously takes rapid care of balancing the outcome.

3.1 Example: Polynomial convergence to -NB solution on bipartite graphs.

The next result further shows a concrete setting in which Corollary 9 leads to a strong guarantee on quickly reaching an approximate NB solution.

Theorem 5.

Let be a bipartite graph with weights . Take any . Construct a perturbed problem instance with weights , where are independent identically distributed random variables uniform in . Then there exists , such that for

| (10) |

the following happens for all with probability at least . State induces a matching that is independent of . Further, is a -NB solution for the perturbed problem, with .

represents our target in the probability that a pairing does not emerge, while represents the size of perturbation of the problem instance.

3.2 Other results

A different analysis allows us to prove exponentially fast convergence to a unique Nash bargaining solution. We describe this briefly in Section 7.1, referring to an unpublished manuscript [24] for the proof, in the interest of space.

We investigate the case of nodes with unsymmetrical bargaining powers in Section 4. We show that generalizations of the Theorems 1, 2 and 3 hold for a suitably modified dynamics. Somewhat surprisingly, we find that the modified dynamics may take exponentially long to converge (so Theorem 3 does not generalize). However, we find a different local procedure that efficiently finds approximate solutions.

In Section 5 we consider the case where agents have arbitrary integer capacity constraints on the number of partnerships they can participate in, instead of the one-matching constraint. We generalize our dynamics and the notion of balanced outcomes to this case. We show that Theorems 1, 2 and 3 generalize. As a corollary, we establish the existence of balanced outcomes whenever stable outcomes exist (Corollary 14) in this general setting. (The caveat here is that Corollary 14 does not say anything about the corner case in which the maximum weight matching under the capacity constraints is not unique.)

Appendix A presents extensions of our convergence results to cases where the damping factor varies in time or from node to node, and when updates are asynchronous. This shows that our insights are robust to variations in the nature of damping and the timing of iterative updates.

4 Unequal bargaining powers

It is reasonable to expect that not all edge surpluses on matching edges are divided equally in an exchange network setting. Some nodes are likely to have more ‘bargaining power’ than others. This bargaining power can arise, for example, from ‘patience’; a patient agent is expected to get more than half the surplus when trading with an impatient partner. This phenomenon is well known in the Rubinstein game [36] where nodes alternately make offers to each other until an offer is accepted – the more patient node (the one that discounts future payoffs less heavily) earns more in the subgame perfect Nash equilibrium. Moreover, a recent experimental study of bargaining in exchange networks [11] found that patience correlated positively with earnings.

A reasonable approach to model this effect would be to assign a positive ‘bargaining power’ to each node, and postulate that if a pair of nodes trade with each other, then the edge surplus is divided in the ratio of their bargaining powers. We choose instead a more general setting where on each edge there is an expected surplus split fraction quantified by $r_{ij}$. Namely, $r_{ij}$ is the fraction of surplus that goes to $i$ if $i$ and $j$ trade with each other, and similarly for $r_{ji}$. Note that we have $r_{ij} + r_{ji} = 1$. We call a weighted graph along with the postulated split fraction vector $r$ an unequal division (UD) instance.
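As an illustration, the bargaining-power model mentioned above is a special case of the split-fraction model. Writing $\beta_i > 0$ for the bargaining power of node $i$ and $r_{ij}$ for the fraction of the surplus on edge $(i,j)$ that goes to $i$ (both symbols are notation assumed here for illustration), dividing the surplus in the ratio $\beta_i : \beta_j$ corresponds to

```latex
r_{ij} = \frac{\beta_i}{\beta_i + \beta_j}, \qquad
r_{ji} = \frac{\beta_j}{\beta_i + \beta_j}, \qquad
r_{ij} + r_{ji} = 1 .
```

Equal bargaining powers give $r_{ij} = r_{ji} = 1/2$, which recovers the balanced (equal split) case. The general model allows split fractions that do not factor through node-level powers in this way.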

The balance condition is replaced by the correct division condition

| (11) |

on matched edges . We retain the stability condition. We call trade outcomes satisfying (11) and stability unequal division (UD) solutions. A natural modification to our dynamics in this situation consists of the following redefinition of offers.

| (12) |

We call the dynamics resulting from (12) and the update rule (6) the unsymmetrical natural dynamics, or UD-natural dynamics for short. One can check that the mapping defined in (24) is nonexpansive for offers defined as in (12). It follows that Theorems 2 and 3 hold for the UD-natural dynamics with damping (we retain Definition 5 of an $\epsilon$-FP). Further, Theorem 1 can also be extended to this case. The proof involves exactly the same steps as for the natural dynamics (cf. Section 8). Properties 1-6 in the direct part all hold (proofs nearly verbatim) and an identical construction works for the converse.
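The following Python sketch illustrates one way such a damped iteration could be simulated. It is not taken from the paper: it assumes that the offer from i to j has the form (w_ij - a_i)_+ - r_ij (w_ij - a_i - a_j)_+, where a_i and a_j are the current best-alternative estimates of i excluding j and of j excluding i, and that each estimate is updated as a convex combination (damping `kappa`) of its old value and the best offer received from the other neighbours. All function and variable names are hypothetical.

```python
def pos(x):
    """Positive part (x)_+."""
    return max(x, 0.0)


def ud_offers(weights, split, alpha):
    """Offers m[(i, j)] from i to j, given best-alternative estimates alpha.

    weights[(i, j)]: weight of the undirected edge, listed once per edge.
    split[(i, j)]:   assumed share of the surplus going to i; split[(j, i)] = 1 - split[(i, j)].
    alpha[(i, j)]:   i's estimated best alternative when excluding j.
    """
    offers = {}
    for (i, j), w in weights.items():
        surplus = pos(w - alpha[(i, j)] - alpha[(j, i)])
        offers[(i, j)] = pos(w - alpha[(i, j)]) - split[(i, j)] * surplus
        offers[(j, i)] = pos(w - alpha[(j, i)]) - split[(j, i)] * surplus
    return offers


def ud_step(weights, split, alpha, kappa):
    """One damped update of all best-alternative estimates."""
    offers = ud_offers(weights, split, alpha)
    new_alpha = {}
    for (i, j) in alpha:
        # Best offer that i receives from neighbours other than j (0 if there is none).
        best = max((m for (k, l), m in offers.items() if l == i and k != j),
                   default=0.0)
        new_alpha[(i, j)] = (1 - kappa) * alpha[(i, j)] + kappa * best
    return new_alpha


def ud_natural_dynamics(weights, split, kappa=0.5, iters=200):
    """Iterate from all-zero estimates; return final estimates and offers."""
    alpha = {}
    for (i, j) in weights:
        alpha[(i, j)] = 0.0
        alpha[(j, i)] = 0.0
    for _ in range(iters):
        alpha = ud_step(weights, split, alpha, kappa)
    return alpha, ud_offers(weights, split, alpha)


# Single edge of weight 1 where node 0 is assumed to receive 70% of the surplus.
alpha, offers = ud_natural_dynamics({(0, 1): 1.0}, {(0, 1): 0.7, (1, 0): 0.3})
print(offers)
```

With all split fractions equal to 1/2 the same code reduces to the equal-split case, which makes it easy to compare the two regimes on small instances.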

Theorem 6.

Let be an instance for which the LP (2) admits an integral optimum. Let be a fixed point of the UD-natural dynamics. Then is a UD solution for any maximum weight matching . Conversely, for any UD solution , matching is a maximum weight matching and there is a unique fixed point of the UD-natural dynamics with .

Further, if the LP (2) has a unique integral optimum, corresponding to matching , then any fixed point induces matching .

We note that the following generalization of the result on existence of Nash bargaining solutions [27] follows from Theorem 6 and the existence of fixed points.

Lemma 1.

UD solutions exist if and only if a stable outcome exists (i.e., LP (2) has an integral optimum).

Proof.

The direct part of Theorem 6, along with the existence of fixed points of the UD natural dynamics (from Brouwer’s fixed point theorem, also first part of Theorem 2 for UD) shows that UD solutions exist if LP (2) has an integral optimum. The converse is trivial since if LP (2) has no integral optimum, then there are no stable solutions (see Proposition 1) and hence no UD solutions. ∎

4.1 Exponential convergence time in the UD case

It is possible to derive a characterization similar to Theorem 4 also for the UD case. However, the bound on $\epsilon$ needed to ensure that the right pairing emerges in an $\epsilon$-FP turns out to be exponentially small in the size of the instance. As such, we are only able to show that a pairing emerges in exponential time. In fact, as the example below shows, it does take exponentially long for a pairing to emerge in the worst case.

Let . In Appendix G.1, we construct a sequence of instances , such that for each instance in the sequence the following holds.

(a) The instance admits a UD solution, and the gap .

(b) There is a message vector that is an $\epsilon$-FP for such that does not induce any matching. In fact, for any matching in our example, there exists such that gets an offer from outside the matching that exceeds the offer of its partner under the matching (if any) by at least 1. Thus, is ‘far’ from inducing a matching.

Further, split fractions are bounded within for arbitrary desired ( depends on ). Also, the weights are uniformly bounded by a constant .

Now, if we start at an $\epsilon$-FP, the successive iterates of our dynamics are all $\epsilon$-FPs (this follows from the nonexpansivity of the update operator, cf. Section 7). Hence, no offer can change by more than $\epsilon$ in each iteration. Thus, in our constructed instances, it requires a number of iterations of order at least $1/\epsilon$, starting from this $\epsilon$-FP, before the state induces a matching. Thus, our construction with the above properties implies that the unsymmetrical natural dynamics can take exponentially long to induce a matching, even on well behaved instances. Moreover, as discussed in Appendix G.1, our construction corresponds to a plausible two-sided market, so this is not to be dismissed as an unrealistic special case that can be ignored.
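The counting argument in the paragraph above can be spelled out as follows. The symbols are assumptions introduced for illustration: $d^t$ is the amount by which the best outside offer to the node in question exceeds its partner's offer at iteration $t$, and $\epsilon$ is the accuracy of the starting approximate fixed point. Assuming, as stated above, that each offer moves by at most $\epsilon$ per iteration, a difference of two offers moves by at most $2\epsilon$:

```latex
d^{0} \ge 1, \qquad |d^{t+1} - d^{t}| \le 2\epsilon
\quad\Longrightarrow\quad
d^{t} \;\ge\; 1 - 2\epsilon t \;>\; 0
\quad \text{for all } t < \frac{1}{2\epsilon}.
```

So the outside offer keeps beating the partner's offer for at least $1/(2\epsilon)$ iterations, and an exponentially small $\epsilon$ translates into exponentially many iterations before any matching can be induced.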

4.2 A fully polynomial time approximation scheme

We show that though the natural dynamics may take exponentially long to converge, there is a polynomial time iterative ‘re-balancing’ algorithm that enables us to compute an approximate UD solution.

First we define an approximate version of correct division, asking that Eq. (11) be satisfied to within an additive $\epsilon$, for all matched edges. For each edge $(i,j)$, we define the ‘edge surplus’ as the excess of the edge weight over the sum of best alternatives, i.e.,

| (13) |

Definition 10 ($\epsilon$-Correct division).

We define approximate UD solutions as follows:

Definition 11 ($\epsilon$-UD solution).

An outcome is an $\epsilon$-UD solution if it is stable and it satisfies $\epsilon$-correct division (cf. Definition 10).

It follows from Lemma 1 that $\epsilon$-UD solutions exist if and only if the LP (2) admits an integral optimum, which is the same as the requirement for existence of UD solutions. We prove the following:

Theorem 7.

There is an algorithm that is polynomial in the input size and $1/\epsilon$, such that for any problem instance with uniformly bounded weights:

- If the instance admits a UD solution, the algorithm finds an $\epsilon$-UD solution.

- If the instance does not admit a UD solution, the algorithm returns the message unstable.

Our approach to finding an -UD solution consists of two main steps:

1. Find a maximum weight matching and a dual optimum (a solution to the dual LP (3)), and thus form a stable outcome; otherwise, certify that the instance has no UD solution.

2. Iteratively update the allocation without changing the matching. Updates are local, and are designed to converge fast to an allocation satisfying $\epsilon$-correct division while maintaining stability. Thus, we arrive at an $\epsilon$-UD solution.

As mentioned earlier, this is similar to the approach of [2]. The crucial differences (enabling our results) are: (i) we stay within the space of stable outcomes, (ii) our analysis of convergence.

5 General capacity constraints

In several situations, agents may be less restricted: Instead of an agent being allowed to enter at most one agreement, for each agent $i$, there may be an integer capacity constraint $b_i$ specifying the maximum number of partnerships that $i$ can enter into. For instance, in a labor market for full time jobs, an employer may have 4 openings for a particular role ($b_i = 4$), another employer may have 6 openings for a different role, and so on, but the job seekers can each accept at most one job. In this section, we describe a generalization of our dynamical model to the case of general capacity constraints, in an attempt to model behavior in such settings. We find that most of our results from the one-matching case, cf. Section 3, generalize.

5.1 Preliminaries

Now a bargaining network is specified by an undirected graph $G = (V, E)$ with positive weights on the edges, and integer capacity constraints $b_i$ associated to the nodes $i \in V$. We generalize the notion of ‘matching’ to sets of edges that satisfy the given capacity constraints: Given capacity constraints $b = (b_i)_{i \in V}$, we call a set of edges $M \subseteq E$ a $b$-matching if the degree of $i$ in the graph $(V, M)$ is at most $b_i$, for every $i \in V$. We say that $i$ is saturated under $M$ if its degree in $(V, M)$ equals $b_i$.
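The definitions above translate directly into a small check. The sketch below is only an illustration: the edge set is given as a collection of node pairs, `capacity` maps every node to its capacity, and the function names are hypothetical.

```python
from collections import Counter


def degrees(matching):
    """Number of edges of `matching` incident to each node."""
    deg = Counter()
    for i, j in matching:
        deg[i] += 1
        deg[j] += 1
    return deg


def is_b_matching(matching, capacity):
    """True if no node exceeds its capacity (capacity must list every node)."""
    deg = degrees(matching)
    return all(deg[i] <= capacity[i] for i in deg)


def saturated_nodes(matching, capacity):
    """Nodes whose degree in the matching equals their capacity."""
    deg = degrees(matching)
    return {i for i in capacity if deg[i] == capacity[i]}


# An employer with two openings and three job seekers with one slot each.
capacity = {"employer": 2, "w1": 1, "w2": 1, "w3": 1}
hires = {("employer", "w1"), ("employer", "w2")}
print(is_b_matching(hires, capacity), saturated_nodes(hires, capacity))
```

Setting every capacity to 1 recovers the ordinary notion of a matching.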

We assume that there are no double edges between nodes. (This assumption was not needed in the one-exchange case since, in that case, utility maximizing agents $i$ and $j$ will automatically discard all but the heaviest edge between them. This is no longer true in the case of general capacity constraints.) Thus, an agent can use at most one unit of capacity with any one of her neighbors in the model we consider.

A trade outcome is now a pair , where is a -matching and is a splitting of profits , with if , and if .

Define if is saturated (i.e. ) and if is not saturated. Note that this definition is equivalent to . Here denotes the $k$-th largest of a set of non-negative reals, being defined as $0$ if there are fewer than $k$ numbers in the set. It is easy to see that our definition of here is consistent with the definition for the one-exchange case. (But is not consistent with , which is why we use different notation.)
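The ‘k-th largest’ operation used above can be sketched in a few lines of Python. The convention of returning zero when fewer than k numbers are available is an assumption made here for the sketch, and the function name is hypothetical.

```python
def kth_largest(values, k):
    """k-th largest entry of a collection of non-negative reals.

    Returns 0.0 when the collection has fewer than k entries (assumed convention).
    """
    ordered = sorted(values, reverse=True)
    return ordered[k - 1] if len(ordered) >= k else 0.0


print(kth_largest([0.2, 0.7, 0.5], 2))
print(kth_largest([0.4], 2))
```

For k = 1 this is the ordinary maximum, with the maximum of an empty collection taken to be zero.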

We say that a trading outcome is stable if for all . This definition is natural; a selfish agent would want to switch partners if and only if he can gain more utility elsewhere.

An outcome is said to be balanced if

| (15) |

for all .

Note that the definitions of stability and balance generalize those for the one-exchange case.

An outcome is a Nash bargaining solution if it is both stable and balanced.

Consider the problem of finding the maximum weight (not necessarily perfect) $b$-matching on a weighted graph . The LP-relaxation of this problem and its dual are given by

| (16) |

Complementary slackness says that a pair of feasible solutions is optimal if and only if

- For all .

- For all .

- For all .

Lemma 2.

Consider a network with edge weights and capacity constraints . There exists a stable solution if and only if the primal LP (16) admits an integer optimum. Further, if is a stable outcome, then is a maximum weight -matching, and for all and for all is an optimum solution to the dual LP.

Proof.

If is an integer optimum for the primal LP (and is the corresponding $b$-matching), the complementary slackness conditions read

(i) For all .

(ii) For all .

(iii) For all with .

We can construct a stable outcome by setting for , and otherwise: Using (iii) above, (cf. definition of above), so for any , we have , using (ii) above. It is easy to check that for any using (i) above. Thus, is a stable outcome.

For the converse, consider a stable allocation . We claim that forms an (integer) primal optimum. For this we simply demonstrate that there is a feasible point in the dual with the same value as the primal value at : Take , and for edges in , and otherwise. The dual objective is then exactly equal to the weight of . This also proves the second part of the lemma. ∎

5.2 Dynamical model

We retain the notation for the ‘best alternative’ estimated in iteration . As before, ‘offers’ are determined as in Eq. (4), in the spirit of the pairwise Nash bargaining solution.

Now the best alternative should be the estimated income from the ‘replacement’ partnership, if $i$ and $j$ do not reach an agreement with each other. This ‘replacement’ should be the one corresponding to the $b_i$-th largest offer received by $i$ from neighbors other than $j$. Hence, the update rule is modified to

| (17) |

where $\kappa$ is the damping factor.
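A minimal Python sketch of this modified update for a single node is given below. It assumes that the new best-alternative estimate is a convex combination, with damping `kappa`, of the old estimate and the b_i-th largest offer received from neighbours other than the excluded one (taken to be zero if there are fewer than b_i such offers). The names and the data layout are hypothetical.

```python
import heapq


def replacement_value(offers_to_i, exclude, b_i):
    """b_i-th largest offer to node i from neighbours other than `exclude`.

    offers_to_i maps each neighbour k to the offer it sends to i.
    Assumes b_i >= 1; returns 0.0 if fewer than b_i other offers exist.
    """
    others = [m for k, m in offers_to_i.items() if k != exclude]
    top = heapq.nlargest(b_i, others)  # sorted from largest to smallest
    return top[-1] if len(top) == b_i else 0.0


def damped_update(alpha_old, offers_to_i, exclude, b_i, kappa):
    """Damped update of i's best alternative when excluding `exclude`."""
    target = replacement_value(offers_to_i, exclude, b_i)
    return (1 - kappa) * alpha_old + kappa * target


offers_to_i = {"a": 0.9, "b": 0.6, "c": 0.3}
print(replacement_value(offers_to_i, exclude="a", b_i=2))
print(damped_update(0.0, offers_to_i, exclude="a", b_i=2, kappa=0.5))
```

With b_i = 1 the replacement value is simply the best offer from the other neighbours, as in the one-exchange case.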

Further, we define by

| (20) |

Here ties are broken arbitrarily in ordering incoming offers. Finally, we define

| (21) |

5.3 Results

Our first result is that fixed points of the new update equations (4), (17) are again in correspondence with Nash bargaining solutions when such solutions exist (analogous to Theorem 1). Note that the fixed points are independent of the damping factor . First, we generalize the notion of an induced matching.

Definition 12.

We say that a state (or just its offers) induces a $b$-matching $M$ if the following happens. For each node $i$ receiving at least $b_i$ non-zero offers: there is no tie for the $b_i$-th largest incoming offer to $i$, and node $i$ is matched under $M$ to the $b_i$ neighbors from whom it is receiving its $b_i$ highest offers. For each node $i$ receiving fewer than $b_i$ non-zero offers: node $i$ is matched under $M$ to all its neighbors from whom it is receiving positive offers.
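One possible reading of this definition as a procedure is sketched below in Python. Each node selects the senders of its top positive offers, up to its capacity, and the selections must be mutually consistent for a matching to be induced. The function name, the data layout and the choice to return `None` when no matching is induced are assumptions of this sketch.

```python
def induced_b_matching(offers, capacity):
    """Return the induced set of edges, or None if no b-matching is induced.

    offers[(k, i)] is the offer sent from k to i; capacity[i] is b_i >= 1
    and must list every node that appears in `offers`.
    """
    chosen = {}
    for i, b_i in capacity.items():
        incoming = sorted(
            ((m, k) for (k, l), m in offers.items() if l == i and m > 0),
            key=lambda pair: pair[0],
            reverse=True,
        )
        # A tie between the b_i-th and (b_i + 1)-th largest offers is not allowed.
        if len(incoming) > b_i and incoming[b_i - 1][0] == incoming[b_i][0]:
            return None
        chosen[i] = {k for _, k in incoming[:b_i]}
    # Node i selects k if and only if k selects i.
    for i, partners in chosen.items():
        for k in partners:
            if i not in chosen[k]:
                return None
    return {frozenset((i, k)) for i, partners in chosen.items() for k in partners}


capacity = {"e": 2, "a": 1, "b": 1, "c": 1}
offers = {("a", "e"): 0.6, ("b", "e"): 0.5, ("c", "e"): 0.2,
          ("e", "a"): 0.4, ("e", "b"): 0.5, ("e", "c"): 0.0}
print(induced_b_matching(offers, capacity))
```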

Consider the LP relaxation to the maximum weight matching problem (2). A feasible point for LP (2) is called half-integral if $x_e \in \{0, 1/2, 1\}$ for all $e \in E$. Again, it can be easily shown that the primal LP (16) always has an optimum that is half-integral [38, Chapter 31]. As before, an LP with a fully integral optimum (i.e., $x_e \in \{0, 1\}$ for all $e \in E$) is called tight.

Theorem 8.

Let with edge weights and capacity constraints be an instance such that the primal LP (16) has a unique optimum that is integral, corresponding to matching .

Let be a fixed point of the natural dynamics. Then induces matching and is a Nash bargaining solution.

Conversely, every Nash bargaining solution has and corresponds to a unique fixed point of the natural dynamics with .

Remark 13.

Corollary 14.

Let with edge weights and capacity constraints be an instance such that the primal LP (16) has a unique optimum that is integral. Then the instance possesses a Nash bargaining solution.

Thus, we obtain an (almost) tight characterization of when NB solutions exist in the case of general capacity constraints. (For simplicity, we have stated and proved, in Theorem 8, a generalization of only part (a) of Theorem 1. However, we expect that part (b) also generalizes, which would then lead to an exact characterization of when NB solutions exist in this case.)

Our convergence results, Theorems 2 and 3, generalize immediately, with the proofs (cf. Section 7) going through nearly verbatim:

Theorem 9.

Let with edge weights and capacity constraints be any instance. The natural dynamics has at least one fixed point. Moreover, for any initial condition with , converges to a fixed point.

We retain Definition 5 of an $\epsilon$-fixed point.

Theorem 10.

Let with weights and capacity constraints be any instance. Take any initial condition . Take any . Define

| (22) |

Then for all , is an -fixed point. (Again )

6 Discussion

Our results provide a dynamical justification for balanced outcomes, showing that agents bargaining with each other in a realistic, local manner can find such outcomes quickly. Refer to Section 1.2 for a summary of our contributions.

Some caution is needed in the interpretation of our results. Our dynamics avoids the question of how and when a pair of agents will cease to make iterative updates, and commit to each other. We showed that the right pairing will be found in time polynomial in the network size and the LP parameter. But how will agents find out when this convergence has occurred? After all, agents are not likely to know the network size, and even less likely to know the LP parameter. Further, why should agents wait for the right pairing to be found? It may be better for them to strike a deal after a few iterative updates because (i) they may estimate that they are unlikely to get a better deal later, (ii) they may be impatient, (iii) the convergence time may be very large on large networks. If a pair of agents do pair up and leave, then this changes the situation for the remaining agents, some of whom may have lost possible partners ([30] studies a model with this flavor). Our dynamics does not deal with this. A possible approach to circumventing some of these problems is to interpret our model in the context of a repeated game, where agents can pair up, but still continue to renegotiate their partnerships. Formalizing this is an open problem.

Related to the above discussion is the fact that our agents are not strategic. Though our dynamics admits interpretation as a bargaining process, it is unclear how, for instance, agent $i$ becomes aware of the best alternative of a neighbor $j$. In the case of a fixed best alternative, the work of Rubinstein [36] justifies the pairwise Nash bargaining solution, but in our case the best alternative estimates evolve in time. Thus, it is unclear how to explain our dynamics game theoretically. However, we do not consider this to be a major drawback of our approach. Non-strategic agent behavior is commonly assumed in the literature on learning in games [18], even in games of only two players. Alternative recent approaches to bargaining in networks assume strategic agents, but struggle to incorporate reasonable informational assumptions (e.g. [30] assumes common knowledge of the network and perfect information of all prior events). Prima facie, it appears that bounded rationality models like ours may be more realistic.

Several examples admit multiple balanced outcomes (see Example 3 in Section 1). In fact, this is a common feature of two-sided assignment markets, which typically contain multiple even cycles. It would be very interesting to investigate whether our dynamics favors some balanced outcomes over others. If this is the case, it may improve our ability to predict outcomes in such markets.

Our model assumes the network to be exogenous, which does not capture the fact that agents may strategically form links. It would be interesting (and very challenging) to endogenize the network. A perhaps less daunting proposition is to characterize bargaining on networks that experience shocks, like the arrival of new agents, the departure of agents or the addition/deletion of links. Our result showing convergence to an approximate fixed point in time independent of the network size provides hope of progress on this front.

Finally, we remark on the computational problem of computing exact UD solutions in the unsymmetric case (recall that we give an FPTAS). We conjecture that the problem is computationally hard (cf. Section 1.2), with the recently introduced complexity class continuous local search [15] providing a possible way forward. We leave it as a challenging open problem to prove or refute this conjecture.

7 Convergence to fixed points: Proofs of Theorems 2 and 3

Theorems 2 and 3 admit surprisingly simple proofs that build on powerful results in the theory of nonexpansive mappings in Banach spaces.

Definition 15.

Given a normed linear space $L$, and a bounded domain $D \subseteq L$, a nonexpansive mapping is a mapping $T : D \to L$ satisfying $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in D$.

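Mann [31] first considered the iteration $x^{t+1} = (1-\kappa)\, x^t + \kappa\, T x^t$ for $\kappa \in (0,1)$, which is equivalent to iterating $T_\kappa = (1-\kappa) I + \kappa T$. Ishikawa [20] and Edelstein-O’Brien [16] proved the surprising result that, if the sequence $\{x^t\}$ is bounded, then $\|T x^t - x^t\| \to 0$ (the sequence is asymptotically regular) and indeed $x^t \to x^*$ with $x^*$ a fixed point of $T$.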

Baillon and Bruck [3] recently proved a powerful quantitative version of Ishikawa’s theorem: If for all , then

| (23) |

The surprise is that such a result holds irrespective of the mapping and of the normed space (in particular, of its dimensions). Theorems 2 and 3 immediately follow from this theory once we recognize that the natural dynamics can be cast into the form of a Mann iteration for a mapping which is nonexpansive with respect to a suitably defined norm.
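The damped iteration and the quantitative rate can be explored numerically. The Python sketch below is only an illustration on a toy map, not the bargaining operator: it uses a quarter-turn rotation of the plane (an isometry, hence nonexpansive in the Euclidean norm) and compares the residual with a bound of the form diameter / sqrt(pi * kappa * (1 - kappa) * t). That form of the bound, and every name in the code, should be read as an assumption made for this sketch.

```python
import math


def mann_iterates(T, x0, kappa, steps):
    """Iterates of x -> (1 - kappa) * x + kappa * T(x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        Tx = T(x)
        xs.append(tuple((1 - kappa) * a + kappa * b for a, b in zip(x, Tx)))
    return xs


def rotate90(x):
    """Quarter-turn rotation of the plane; its only fixed point is the origin."""
    return (-x[1], x[0])


def residual(T, x):
    """Euclidean distance between x and T(x)."""
    return math.dist(x, T(x))


def rate_bound(kappa, t, diameter=1.0):
    """Assumed form of the asymptotic-regularity bound after t >= 1 steps."""
    return diameter / math.sqrt(math.pi * kappa * (1 - kappa) * t)


# Start on the disc of radius 1/2 (diameter 1), which the rotation maps to itself.
xs = mann_iterates(rotate90, (0.5, 0.0), kappa=0.25, steps=50)
for t in (1, 10, 50):
    print(t, residual(rotate90, xs[t]), rate_bound(0.25, t))
```

On this toy map the residual decays geometrically, so the bound is far from tight; its value lies in holding uniformly over all nonexpansive maps and all normed spaces.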

Let us stress that the nonexpansivity property does not appear to be a lucky mathematical accident, but rather an intrinsic property of bargaining models under the one-exchange constraint. It loosely corresponds to the basic observation that if earnings in the neighborhood of a pair of trade partners change by amounts , then the balanced split for the partners changes at most by , i.e., the largest of the neighboring changes.

Our technique seems therefore applicable in a broader context. (For instance, it can be applied successfully to prove fast convergence of a synchronous and damped version of the edge-balancing dynamics of [2].)

Proof (Theorem 2).

We consider the linear space indexed by directed edges in . On the bounded domain we define the mapping by letting, for ,

| (24) |

where is defined by Eq. (4). It is easy to check that the sequence of best alternatives produced by the natural dynamics corresponds to the Mann iteration . Also, is nonexpansive for the norm

| (25) |

Non-expansivity follows from:

(i) The ‘max’ in Eq. (24) is nonexpansive.

(ii) An offer as defined by Eq. (4) is nonexpansive. To see this, note that , where is given by

| (28) |

It is easy to check that is continuous everywhere in . Also, it is differentiable except in , and satisfies . Hence, is Lipschitz continuous in the norm, with Lipschitz constant 1, i.e., it is nonexpansive in sup norm.

Notice that maps into itself. The thesis follows from [20, Corollary 1]. ∎

Proof (Theorem 3).

With the definitions given above, consider (whence for all ) and apply [3, Theorem 1]. ∎

7.1 Exponentially fast convergence to unique Nash bargaining solution

Convergence of the natural dynamics was studied in an earlier version of this paper using a different (and much more laborious) technique [24]. While the results in Section 3 constitute a large improvement in elegance and generality over those of [24], the latter retain an independent interest. Indeed the analysis of [24] shows that convergence is exponentially fast in a well defined class of instances. We decided therefore to retain the main result of that analysis (recast from [24]).

Theorem 11.

Assume . Let be an instance having unique Nash bargaining solution with KT gap , and let denote the corresponding allocation. Then, for any , there exists such that, for any initial condition with , and any the natural dynamics yields earning estimates , with for all . Moreover, induces the matching and is a -NB solution for any .

We refer to Appendix F for a definition of the KT gap (here KT stands for Kleinberg-Tardos). Suffice it to say that it is related to the Kleinberg-Tardos decomposition of and that it is polynomially computable [27].

As mentioned above, the proof is based on a very different technique, namely on ‘approximate decoupling’ of the natural dynamics on different KT structures under the assumptions (which is generic) and that there is a unique NB solution. See preprint [24] for a complete proof.

8 Fixed point properties: Proof of Theorem 1

Let be the set of optimum solutions of LP (2). We call a strong-solid edge if for all and a non-solid edge if for all . We call a weak-solid edge if it is neither strong-solid nor non-solid.

Proof of Theorem 1: From fixed points to NB solutions. The direct part follows from the following set of fixed point properties. The proofs of these properties are given in Appendix C. Throughout is a fixed point of the dynamics (4), (6) (with given by (5)).

(1) Two players are called partners if . Then the following are equivalent: (a) and are partners, (b) , (c) and .

(2) Let be the set of all partners of . Then the following are equivalent: (a) and , (b) and , (c) , (d) and receive unique best positive offers from each other.

(3) We say that is a weak-dotted edge if , a strong-dotted edge if , and a non-dotted edge otherwise. If has no adjacent dotted edges, then .

(4) An edge is strong-solid (weak-solid) if and only if it is strongly (weakly) dotted.

(5) The balance property (1), holds at every edge (with both sides being non-negative).

Proof of Theorem 1 (a), direct implication.

Assume that the LP (2) has a unique optimum that is integral. Then, by property 4, the set of strong-dotted edges forms the unique maximum weight matching and all other edges are non-dotted. By property for that is unmatched under , . Hence by property , induces the matching . Finally, by properties 6 and 5, the pair is stable and balanced respectively, and thus forms a NB solution. ∎

The corresponding result for the non-unique optimum case (part (b)) can be proved similarly: it follows immediately from Theorem 12, Appendix C.

Remark 16.

Proof of Theorem 1: From NB solutions to fixed points.

Proof.

Consider any NB solution . Using Proposition 1, is a maximum weight matching. Construct a corresponding FP as follows. Set for all . Compute using . We claim that this is a FP and that the corresponding is . To prove that we are at a fixed point, we imagine updated offers based on , and show .

Consider a matching edge . We know that . Also stability and balance tell us and both sides are non-negative. Hence, . Therefore ,

By symmetry, we also have . Hence, the offers remain unchanged. Now consider . We have and, . A similar equation holds for . The validity of this identity can be checked individually in the cases when and . Hence, . This leads to . By symmetry, we know also that .

Finally, we show . For all , we already found that and vice versa. For any edge , we know . This immediately leads to . It is worth noting that making use of the uniqueness of LP optimum we know that , and we can further show that if and only if , i.e., the fixed point reconstructs the pairing . ∎

9 Polynomial convergence on bipartite graphs: Proof of Theorem 5

Theorem 5 says that on a bipartite graph, under a small random perturbation on any problem instance, the natural dynamics is likely to quickly find the maximum weight matching. Now, in light of Corollary 9, this simply involves showing that the gap of the perturbed problem instance is likely to be sufficiently large. We use a version of the well known Isolation Lemma for this. Note that on bipartite graphs, there is always an integral optimum to the LP (2).

Lemma 3 (Isolation Lemma).

Consider a bipartite graph . Choose . Edge weights are generated as follows: for each , is chosen uniformly in . Denote by the set of matchings in . Let be a maximum weight matching. Let be a matching having the maximum weight in . Denote by the weight of a matching . Then

| (29) |

Proof of Theorem 5.

Using Lemma 3, we know that the gap of the perturbed problem satisfies with probability at least . Now, the weights in the perturbed instance are bounded by . Rescale by dividing all weights and messages by , and use Corollary 9. The theorem follows from the following two elementary observations. First, an -NB solution for the rescaled problem corresponds to an -NB solution for the original problem. Second, induced matchings are unaffected by scaling. ∎

We remark that Theorem 5 does not generalize to any (non-bipartite) graph with edge weights such that the LP (2) has an integral optimum, for the following reason. We can easily generalize the Isolation Lemma to show that the gap of the perturbed problem is likely to be large also in this case. However, there is a probability arbitrarily close to 1 (depending on the instance) that a random perturbation will result in an instance for which LP (2) does not have an integral optimum, i.e. the perturbed instance does not have any Nash bargaining solutions!

Acknowledgements. We thank Eva Tardos for introducing us to network exchange theory and Daron Acemoglu for insightful discussions. We also thank the anonymous referees for their comments.

A large part of this work was done while Y. Kanoria, M. Bayati and A. Montanari were at Microsoft Research New England. This research was partially supported by NSF, grants CCF-0743978 and CCF-0915145, and by a Terman fellowship. Y. Kanoria is supported by a 3Com Corporation Stanford Graduate Fellowship.

References

- [1] B. Aspvall and Y. Shiloach, A polynomial time algorithm for solving systems of linear inequalities with two variables per inequality, Proc. 20th IEEE Symp. Found. of Comp. Sc., 1979.

- [2] Y. Azar, B. Birnbaum, L. Elisa Celis, N. R. Devanur and Y. Peres, Convergence of Local Dynamics to Balanced Outcomes in Exchange Networks, Proc. 50th IEEE Symp. Found. of Comp. Sc., 2009.

- [3] J. Baillon and R. E. Bruck, The rate of asymptotic regularity is $O(1/\sqrt{n})$, in: A.G. Kartsatos (ed.), Theory and applications of nonlinear operators of accretive and monotone type, Lecture Notes in Pure and Appl. Math. 178, Marcel Dekker, Inc., New York, 1996, pp. 51–81.

- [4] M. Bateni, M. Hajiaghayi, N. Immorlica and H. Mahini, The cooperative game theory foundations of network bargaining games, Proc. Intl. Colloq. Automata, Languages and Programming, 2010.

- [5] M. Bayati, D. Shah and M. Sharma, Max-Product for Maximum Weight Matching: Convergence, Correctness, and LP Duality, IEEE Trans. Inform. Theory, 54 (2008), pp. 1241–1251.

- [6] M. Bayati, C. Borgs, J. Chayes, R. Zecchina, On the exactness of the cavity method for Weighted b-Matchings on Arbitrary Graphs and its Relation to Linear Programs, arXiv:0807.3159, (2007).

- [7] D. P. Bertsekas, The Auction Algorithm: A Distributed Relaxation Method for the Assignment Problem, Annals of Operations Research, 14 (1988), pp. 105–123.

- [8] K. Binmore, A. Rubinstein and A. Wolinsky, The Nash Bargaining Solution in Economic Modeling, Rand Journal of Economics, 17(2) (1986), pp. 176–188.

- [9] N. Braun, T. Gautschi, A Nash bargaining model for simple exchange networks, Social Networks, 28(1) (2006), pp. 1–23.

- [10] M. Kandori, G. J. Mailath, and R. Rob, Learning, Mutation, and Long Run Equilibria in Games, Econometrica 61(1) (1993).

- [11] T. Chakraborty, S. Judd, M. Kearns, J. Tan, A Behavioral Study of Bargaining in Social Networks, Proc. 10th ACM Conf. Electronic Commerce, 2010.

- [12] T. Chakraborty, M. Kearns and S. Khanna, Network Bargaining: Algorithms and Structural Results, Proc. 10th ACM Conf. Electronic Commerce, July 2009.

- [13] K. S. Cook and T. Yamagishi, Power exchange in networks: A power-dependence formulation, Social Networks, 14 (1992), pp. 245–265.

- [14] C. Daskalakis, C. Papadimitriou, On oblivious PTAS’s for Nash equilibrium, Proc. ACM Symp. Theory of Computing, 2009.

- [15] C. Daskalakis, C. Papadimitriou, Geometrically Embedded Local Search, Proc. ACM-SIAM Symp. Discrete Algorithms, 2011.

- [16] M. Edelstein and R.C. O’Brien, Nonexpansive mappings, asymptotic regularity, and successive approximations, J. London Math. Soc. 1 (1978), pp. 547–554.

- [17] U. Faigle, W. Kern, and J. Kuipers, On the computation of the nucleolus of a cooperative game, International Journal of Game Theory, 30 (2001), pp. 79–98.

- [18] D. Fudenberg and D. K. Levine, The Theory of Learning in Games, The MIT Press, 1998.

- [19] H. N. Gabow and R. E. Tarjan, Faster scaling algorithms for general graph-matching problems, J. ACM, 38(4) (1991), pp. 815–853.

- [20] S. Ishikawa, Fixed points and iteration of a nonexpansive mapping in a Banach space, Proc. American Mathematical Society, 59(1) (1976).

- [21] D. Gamarnik, D. Shah and Y. Wei, Belief Propagation for Min-cost Network Flow: Convergence and Correctness, Proc. ACM-SIAM Symp. Disc. Alg., 2010.

- [22] B. Huang, T. Jebara, Loopy belief propagation for bipartite maximum weight b-matching, Artificial Intelligence and Statistics (AISTATS), March, 2007.

- [23] Y. Kanoria, An FPTAS for Bargaining Networks with Unequal Bargaining Powers, Proc. Workshop on Internet and Network Economics, 2010, (full version arXiv:1008.0212).

- [24] Y. Kanoria, M. Bayati, C. Borgs, J. Chayes, and A. Montanari, A Natural Dynamics for Bargaining on Exchange Networks, arXiv:0912.5176 (2009).

- [25] Y. Kanoria, M. Bayati, C. Borgs, J. Chayes, and A. Montanari, Fast Convergence of Natural Bargaining Dynamics in Exchange Networks, Proc. ACM-SIAM Symp. on Discrete Algorithms (2011), pp. 1518–1537.

- [26] E. Kalai, M. Smorodinsky, Other Solutions to Nash’s Bargaining Problem, Econometrica, 43(3) (1975).

- [27] J. Kleinberg and E. Tardos, Balanced outcomes in social exchange networks, in Proc. ACM Symp. Theory of Computing, 2008.

- [28] U. Kohlenbach, A Quantitative Version Of A Theorem Due To Borwein-Reich-Shafrir, Numerical Functional Analysis and Optimization, 22(5-6), August 2001.

- [29] J.W. Lucas, C.W. Younts, M.J. Lovaglia, and B. Markovsky, Lines of power in exchange networks, Social Forces, 80 (2001), pp. 185–214.

- [30] D. Abreu and M. Manea, Markov Equilibria in a Model of Bargaining in Networks, to appear in Games and Economic Behavior, http://economics.mit.edu/files/6985.

- [31] W. R. Mann, Mean value methods in iteration, Proc. Amer. Math Soc., 4 (1953), pp. 506–510.

- [32] J. Nash, The bargaining problem, Econometrica, 18 (1950), pp. 155–162.

- [33] S.C. Rochford, Symmetric pairwise-bargained allocations in an assignment market, J. Economic Theory, 34 (1984), pp. 262–281.

- [34] V. P. Crawford, S. C. Rochford, Bargaining and Competition in Matching Markets, Intl. Economic Review 27(2) (1986), pp. 329–348.

- [35] J. Z. Rubin, B. R. Brown, The Social Psychology of Bargaining and Negotiation, Academic Press 1975.

- [36] A. Rubinstein: Perfect equilibrium in a bargaining model, Econometrica, 50 (1982), pp. 97–109.

- [37] S. Sanghavi, D. Malioutov, A. Willsky, Linear Programming Analysis of Loopy Belief Propagation for Weighted Matching, Proc. Neural Inform. Processing Systems, 2007.

- [38] A. Schrijver, Combinatorial Optimization, Springer-Verlag, Vol. A (2003).

- [39] L. Shapley and M. Shubik, The Assignment Game I: The Core, Intl. J. Game Theory, 1 (1972), pp. 111–130.

- [40] M. Sotomayor, On The Core Of The One-Sided Assignment Game, 2005, http://www.usp.br/feaecon/media/fck/File/one_sided_assignment_game.pdf.

- [41] J. Skvoretz and D. Willer, Exclusion and power: A test of four theories of power in exchange networks, American Sociological Review, 58 (1993), pp. 801–818.

- [42] F. Spitzer, Principles of Random Walk, Springer, 2005.

- [43] R. E. Stearns, Convergent transfer schemes for n-person games, Transactions of American Mathematical Society, 134 (1968), pp. 449–459.

- [44] M. J. Wainwright, T. S. Jaakkola, and A. S. Willsky, Exact MAP estimates via agreement on (hyper)trees: Linear programming and message-passing, IEEE Trans. Inform. Theory, 51 (2005), pp. 3697–3717.

- [45] D. Willer (ed.) Network Exchange Theory, Praeger, 1999.

Appendix A Variations of the natural dynamics

Now that we have a reasonable dynamics that converges fast to balanced outcomes, it is natural to ask whether variations of the natural dynamics also yield balanced outcomes. What happens in the case of asynchronous updates, different nodes updating at different rates, damping factors that vary across nodes and in time, and so on? We discuss some of these questions in this section, focussing on situations in which we can prove convergence with minimal additional work. Note that we are only concerned with extending our convergence results, since the fixed point properties remain unchanged.

A.1 Node dependent damping

Suppose that the damping factor may differ across nodes but does not change over time. Denote by the damping factor for node . Assume that for some , i.e., damping factors are uniformly bounded away from 0 and 1. Define operator by

| (30) |

is nonexpansive. Now, the dynamics can be written as . Clearly, convergence to fixed points (Theorem 2) holds in this situation. Note that fixed points of are the same as fixed points of the natural dynamics. Moreover, we can use [3] to assert that and hence is an -FP. In short, we don’t lose anything with this generalization!
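The effect of node-dependent damping can be illustrated on a toy example. The sketch below does not implement the bargaining update itself: it uses a cyclic shift of coordinates as a stand-in nonexpansive map in sup-norm, and the names `shift`, `damped_step`, `run` and `residual` are hypothetical. It also assumes that an ε-FP means a point whose sup-norm residual is at most ε. With all damping factors equal to 1 the iteration merely permutes the coordinates forever, while distinct damping factors strictly between 0 and 1 drive the residual to zero.

```python
def shift(x):
    """Toy nonexpansive map in sup-norm (an assumption, not the paper's operator)."""
    return x[1:] + x[:1]

def damped_step(x, kappa, T=shift):
    """One update x_i <- (1 - kappa_i) * x_i + kappa_i * T(x)_i, one kappa per coordinate."""
    tx = T(x)
    return [(1 - k) * xi + k * ti for xi, ti, k in zip(x, tx, kappa)]

def run(x0, kappa, steps, T=shift):
    """Iterate the damped update for a fixed number of steps."""
    x = list(x0)
    for _ in range(steps):
        x = damped_step(x, kappa, T)
    return x

def residual(x, T=shift):
    """Sup-norm distance between T(x) and x."""
    return max(abs(ti - xi) for xi, ti in zip(x, T(x)))

x0 = [0.0, 1.0, 4.0]
undamped = run(x0, [1.0, 1.0, 1.0], 200)   # no damping: coordinates keep rotating
damped = run(x0, [0.3, 0.5, 0.7], 200)     # a different damping factor per coordinate
print(residual(undamped), residual(damped))
```

The fixed points of the toy map are the constant vectors, so a vanishing residual here means that all coordinates have reached a common value.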

A.2 Time varying damping

Now consider instead that the damping may change over time, but is the same for all nodes. Denote by the damping factor at time , i.e.

| (31) |

The result of [20] implies that, as long as and , the dynamics is guaranteed to converge to a fixed point. Note that again the fixed points are unchanged. [28] provides a quantitative estimate of the rate of convergence in this case, guaranteeing in particular that an -FP is reached in time if is uniformly bounded away from 0 and 1. Note that this estimate is much weaker than the one provided by [3], leading to Theorem 3. It seems intuitive that the stronger bound holds also for the time varying damping case in the general nonexpansive operator setting, but a proof has remained elusive thus far.
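A minimal numerical sketch of time-varying damping follows, again on a toy nonexpansive map rather than the bargaining update. The map `shift`, the schedule `kappa_schedule` (which keeps the damping inside [0.2, 0.8]) and the helper `run_time_varying` are all assumptions made for illustration, and the run says nothing about the general rate question raised above.

```python
import math

def shift(x):
    """Toy nonexpansive map in sup-norm (an assumption, not the paper's operator)."""
    return x[1:] + x[:1]

def kappa_schedule(t):
    """Hypothetical damping schedule, oscillating between 0.2 and 0.8."""
    return 0.5 + 0.3 * math.sin(t)

def run_time_varying(x0, steps, T=shift, schedule=kappa_schedule):
    """Iterate x <- (1 - kappa_t) * x + kappa_t * T(x), recording the sup-norm residual."""
    x = list(x0)
    residuals = []
    for t in range(steps):
        tx = T(x)
        residuals.append(max(abs(a - b) for a, b in zip(tx, x)))
        k = schedule(t)
        x = [(1 - k) * xi + k * ti for xi, ti in zip(x, tx)]
    return x, residuals

x_final, residuals = run_time_varying([0.0, 1.0, 4.0], 300)
print(residuals[0], residuals[-1])
```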

A.3 Asynchronous updates

Finally, we look at the case of asynchronous updates, i.e., one message is updated in any given step while the others remain unchanged. Define by

| (34) |

Let . There are such operators, two for each edge. Clearly, each is nonexpansive in sup-norm. Now, consider an arbitrary permutation of the directed edges . Consider the updates induced by in order, each with a damping factor of . Consider the resulting product

| (35) |

Here (35) defines , and is the identity operator. It is easy to deduce that above is nonexpansive from the following elementary facts: the product of nonexpansive operators is nonexpansive, and the convex combination of nonexpansive operators is nonexpansive. Also, . Thus, if we repeat these asynchronous updates periodically in a series of ‘update cycles’, we are guaranteed to quickly converge to an -FP of ([3]).

Proposition 17.

An -FP of is an -FP of the natural dynamics.

Proof.

Suppose we start an update cycle at , an -FP of . Then we know that at the end of the update cycle, no coordinate changes by more than . Note that among the steps in a cycle, any particular ‘coordinate’ only changes in one step. Thus, each such coordinate change is bounded by . Consider the -th step in the update cycle. The state before the -th step, call it , is -close to . Also, we know that the coordinate changes by at most in this step. Hence,

This holds for . Hence the result. ∎

Note that with , Proposition 17 tells us that fixed points of are fixed points of the natural dynamics. Thus, we are immediately guaranteed convergence to fixed points of the natural dynamics. Moreover, the quantitative estimate in Proposition 17 guarantees that in a small number of update cycles we reach approximate fixed points of the natural dynamics.

Finally, we comment that instead of ordering updates by a permutation of directed edges, we could have an arbitrary periodic sequence of updates satisfying non-starvation and obtain similar results. For example, this would include cases where some nodes update more frequently than others. Also, note that the damping factors of were chosen for simplicity and to ensure fast convergence. Any non-trivial damping would suffice to guarantee convergence.

It remains an open question to show convergence for non-periodic asynchronous updates.
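The periodic update cycles discussed in this section can be sketched on a toy example. The code below is not the bargaining dynamics: it updates one coordinate at a time of a cyclic-shift map, in the order given by a fixed permutation, and the damping value 0.5 is an assumption chosen for illustration. The names `shift_coord`, `update_cycle` and `run_cycles` are hypothetical. After many cycles it reports both the residual of the cycle operator and the residual of the synchronous map, in the spirit of Proposition 17.

```python
def shift_coord(x, i):
    """Coordinate i of a toy nonexpansive map: the cyclic shift (an assumption)."""
    return x[(i + 1) % len(x)]

def update_cycle(x, order, kappa=0.5):
    """One update cycle: coordinates are updated one at a time, in the given order."""
    x = list(x)
    for i in order:
        x[i] = (1 - kappa) * x[i] + kappa * shift_coord(x, i)
    return x

def run_cycles(x0, order, cycles, kappa=0.5):
    """Repeat the same update cycle periodically."""
    x = list(x0)
    for _ in range(cycles):
        x = update_cycle(x, order, kappa)
    return x

x0 = [0.0, 1.0, 4.0]
order = [2, 0, 1]  # an arbitrary permutation of the coordinates
x_async = run_cycles(x0, order, cycles=100)

# Residual of the cycle operator versus residual of the synchronous map.
cycle_residual = max(abs(a - b) for a, b in zip(update_cycle(x_async, order), x_async))
natural_residual = max(abs(shift_coord(x_async, i) - x_async[i]) for i in range(len(x_async)))
print(cycle_residual, natural_residual)
```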

Appendix B Proof of Isolation lemma

Our proof of the isolation lemma is adapted from [21].

Proof of Lemma 3.

Fix and fix for all . Let be a maximum weight matching among matchings that strictly include edge , and let be a maximum weight matching among matchings that exclude edge . Clearly, and are independent of . Define

Clearly, , since we cannot do worse by forcing exclusion of a zero weight edge. Thus, there is some unique such that . Define . Let be the event that . It is easy to see that occurs if and only if . Thus, . Now,

| (36) |

and the lemma follows by union bound.

∎

Appendix C Proofs of fixed point properties

In this section we state and prove the fixed point properties that were used for the proof of Theorem 1 in Section 8. Before that, however, we remark that the condition: “LP (2) has a unique optimum” in Theorem 1(a) is almost always valid.

Remark 18.

We argue that the condition “LP (2) has a unique optimum” is generic in instances with integral optimum:

Let be the set of instances having an integral optimum.

Let be the set of instances having a unique integral optimum.

It turns out that has dimension (i.e., the class of instances having an integral optimum is large) and that is both open and dense in .

Notation. In proofs of this section and Section D we denote surplus of edge by .

Lemma 4.

satisfies the constraints of the dual problem (3).

Proof.

Since offers are by definition non-negative, for all we have . So we only need to show for any edge . It is easy to see that and . Therefore, if then holds and we are done. Otherwise, for we have and which gives .∎

Recall that for any , we say that and are ‘partners’ if and denotes the partners of node . In other words .

Lemma 5.

The following are equivalent:

(a) and are partners,

(b) .

(c) and .

Moreover, if and then .

Proof.

We will prove .

: Since and always holds then .

: If then . But therefore . The argument for is similar.

: If then and which gives and we are done. Otherwise, we have which contradicts Lemma 4 that satisfies the constraints of the dual problem (3).

Finally, we need to show that and give . First note that by equivalence of and we should have . On the other hand . Now if we get which is a contradiction. Therefore . ∎

Lemma 6.

The following are equivalent:

(a) and ,

(b) and ,

(c) .

(d) and receive unique best positive offers from each other.

Proof.

: means that for all , . This means (using ). Hence, . From , it also follows that or . Therefore, which gives or . From this we can explicitly write which is strictly bigger than . Hence we obtain .

By symmetry . Thus, we have shown that , and are equivalent.

: implies that . Thus, receives its unique best positive offer from . Using symmetry, it follows that holds.

: implies and . By Lemma 5, and are partners, i.e. . Hence, . But since holds, and . This leads to .

This finishes the proof. ∎

Recall that is a weak-dotted edge if , a strong-dotted edge if , and a non-dotted edge otherwise. Basically, for any dotted edge we have and .

Corollary 19.

Lemma 7.

If has no adjacent dotted edges, then

Proof.

Assume that the largest offer to comes from . Therefore, . Now if then or is dotted edge which is impossible. Thus, and . ∎

Lemma 8.

The following are equivalent:

(a) ,

(b) ,

(c) .

Proof.

: “not (b)” “not (a)”.

: Follows from the definition of .

: From we have . Therefore, . ∎

Note that (b) is symmetric in and , so (a) and (c) can be transformed by interchanging and .

Corollary 20.

if and only if

Lemma 9.

holds

Proof.

If then the result follows from Lemma 8. Otherwise, is strongly dotted and , . From here we can explicitly calculate . ∎

Lemma 10.

The unmatched balance property, equation (1), holds at every edge , and both sides of the equation are non-negative.

Proof.

The next lemmas show that dotted edges are in correspondence with the solid edges that were defined in Section 8.

Lemma 11.

A non-solid edge cannot be a dotted edge, weak or strong.

Before proving the lemma, let us define alternating paths. A path in is called an alternating path if: (a) there exists a partition of the edges of into two sets such that either or ; moreover, () consists of all odd (even) edges, i.e., (); and (b) the path may intersect itself or even repeat its own edges, but no edge is repeated immediately, that is, for any and . is called an alternating cycle if .

Also, consider and that are optimum solutions for the LP and its dual, (2) and (3). The complementary slackness conditions (see [38] for more details) state that for all , and for all , . Therefore, for all solid edges the equality holds. Moreover, any node is adjacent to a solid edge if and only if .
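The complementary slackness conditions can be checked mechanically on small instances. The sketch below assumes that LP (2) is the standard fractional matching relaxation (maximize total weight subject to each node carrying total edge mass at most 1) and that (3) is its dual (non-negative node values that cover every edge weight). The function name `check_complementary_slackness` and the data layout are hypothetical, and the function tests only the slackness conditions, not feasibility.

```python
def check_complementary_slackness(weights, x, y, tol=1e-9):
    """Return True if (x, y) satisfy complementary slackness for the assumed LP pair.

    weights, x: dicts keyed by edge (i, j); y: dict keyed by node.
    """
    # Total edge mass at each node.
    load = {v: 0.0 for v in y}
    for (i, j), xe in x.items():
        load[i] += xe
        load[j] += xe
    # An edge with positive mass must have a tight dual constraint.
    for (i, j), w in weights.items():
        if x[(i, j)] > tol and abs(y[i] + y[j] - w) > tol:
            return False
    # A node with positive dual value must be fully matched.
    for v, yv in y.items():
        if yv > tol and abs(1.0 - load[v]) > tol:
            return False
    return True

# Triangle with unit weights: the half-integral solution and y = 1/2 everywhere.
triangle = {("a", "b"): 1.0, ("b", "c"): 1.0, ("a", "c"): 1.0}
x_half = {e: 0.5 for e in triangle}
y_half = {"a": 0.5, "b": 0.5, "c": 0.5}
ok_half = check_complementary_slackness(triangle, x_half, y_half)

# Same dual values with an integral matching that leaves node c unmatched.
x_int = {("a", "b"): 1.0, ("b", "c"): 0.0, ("a", "c"): 0.0}
ok_int = check_complementary_slackness(triangle, x_int, y_half)
print(ok_half, ok_int)
```

In the triangle, the half-integral solution passes the check, while the integral matching fails it because the unmatched node carries a positive dual value.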

Proof of Lemma 11.

First, we refine the notion of solid edges by calling an edge , 1-solid (-solid) whenever ().

We need to consider two cases:

Case (I). Assume that LP has an optimum solution that is integral as well (having a tight LP).

The idea of the proof is that if there exists a non-solid edge which is dotted, we use an analysis similar to that of [6] to construct an alternating path consisting of dotted and -solid edges, which leads to the creation of an optimal solution to LP (2) that assigns a positive value to . This contradicts the non-solid assumption on .

Now assume the contrary: take that is a non-solid edge but it is dotted. Consider an endpoint of . For example take . Either there is a -solid edge attached to or not. If there is not, we stop. Otherwise, assume is a -solid edge. Using Lemma 7, either or there is a dotted edge connected to . But if this dotted edge is then . Therefore, by Lemma 6 there has to be another dotted edge connected to . Now, depending on whether has (has not) an adjacent -solid edge we continue (stop) the construction. A similar procedure can be carried out by starting at instead of . Therefore, we obtain an alternating path with all odd edges being dotted and all even edges being -solid. Using the same argument as in [6] one can show that one of the following four scenarios occurs.

Path: Before intersects itself, both end-points of the path stop. Either the last edge is -solid (then for the last node) or the last edge is a dotted edge. Now consider a new solution to LP (2) by if and if . It is easy to see that is a feasible LP solution at all points and also for internal vertices of . The only nontrivial case is when (or ) and the edge (or ) is dotted. In both of these cases, by construction is not connected to an -solid edge outside of . Hence, making any change inside of is safe. Now denote the weight of all solid (dotted) edges of by (). Here, we only include edges outside in . Clearly,

| (39) |

But . Moreover, from Lemma 4, is dual feasible which gives . We are using the fact that if there is a -solid edge at an endpoint of the of the endpoint should be . Now Eq. (39) reduces to This contradicts that is non-solid since .