Barter Exchange with Shared Item Valuations

Abstract.

In barter exchanges, agents enter seeking to swap their items for other items on their wishlist. We consider a centralized barter exchange with a set of agents and items where each item has a positive value. The goal is to compute a (re)allocation of items maximizing the agents’ collective utility subject to each agent’s total received value being comparable to their total given value. Many such centralized barter exchanges exist and serve crucial roles; e.g., kidney exchange programs, which are often formulated as variants of directed cycle packing. We show that finding a reallocation where each agent’s total given and total received values are equal is NP-hard. On the other hand, we develop a randomized algorithm that achieves optimal utility in expectation and where (i) for any agent, with probability 1 their received value is at least their given value minus the value of said agent’s most valuable owned and wished-for item, and (ii) each agent’s given and received values are equal in expectation.

1. Introduction

Social media platforms have recently evolved into small-scale business websites. For example, platforms like Facebook and Instagram allow their users to buy and sell goods via verified business accounts. With the proliferation of such community marketplaces, there are growing communities for buying, selling and exchanging (swapping) goods amongst their users. We consider applications, viewed as Barter Exchanges, which allow users to exchange board games, digital goods, or any physical items amongst themselves. For instance, the subreddit GameSwap (www.reddit.com/r/Gameswap; 61,000 members) and the Facebook group BoardgameExchange (https://www.facebook.com/groups/boardgameexchange; 51,000 members) are communities where users enter with a list of owned video games and board games. The existence of these communities is a testament to the fact that although users could simply liquidate their goods and subsequently purchase the desired goods, it is often preferable to directly swap for desired items. Additionally, some online video games have fleshed-out economies allowing for the trade of in-game items between players while selling items for real-world money is explicitly illegal, e.g., Runescape (www.jagex.com/en-GB/terms/rules-of-runescape). In these applications, a centralized exchange would achieve greater utility, in collective exchanged value and convenience, as well as overcome legality obstacles.

A centralized barter exchange market provides a platform where agents can exchange items directly, without money or payments. Beyond the aforementioned applications, there exist a myriad of other markets facilitating the exchange of a wide variety of items, including books, children’s items, cryptocurrency, and human organs such as kidneys. There are both centralized and decentralized exchange markets for various items. HomeExchange (www.homeexchange.com) and ReadItSwapIt (www.readitswapit.co.uk) are decentralized marketplaces that facilitate pairwise exchanges by mutual agreement of vacation homes and books, respectively. Atomic cross-chain swaps allow users to exchange currencies within or across various cryptocurrencies (e.g., Herlihy, 2018; Thyagarajan et al., 2022). Kidney exchange markets (see, e.g., Aziz et al., 2021; Abraham et al., 2007) and children’s items markets (e.g., Swap, www.swap.com) are examples of centralized exchanges, facilitating swaps amongst incompatible patient-donor pairs and swaps of children’s items or services amongst parents, respectively. Finding optimal allocations is often NP-hard. As a result, heuristic solutions have been explored extensively (Glorie et al., 2014; Plaut et al., 2016).

Currently, the aforementioned communities GameSwap and BoardgameExchange make swaps in a decentralized manner between pairs of agents, but finding such pairwise swaps is often inefficient and ineffective because it demands a “double coincidence of wants” (Jevons, 1879). However, centralized multi-agent exchanges can help overcome such challenges by allowing each user to give items to and receive items from possibly different users. Moreover, the user’s goal is to swap a subset of their owned games for a subset of their desired games with comparable (or greater) value. Although an item’s value is subjective, a natural proxy is its re-sale price, which is easily obtained from marketplaces such as eBay.

We consider a centralized exchange problem where each agent has a have-list and a wishlist of distinct (indivisible) items (e.g., physical games) and, more generally, each item has a value agreed upon by the participating agents (e.g., members of the GameSwap community). The goal is to find an allocation/exchange that (i) maximizes the collective utility of the allocation such that (ii) the total value of each agent’s items before and after the exchange is equal (equivalently, the total value of the items given is equal to the total value of the items received). We call this problem barter with shared valuations, and it is our subject of study. Notice that bipartite perfect matching is a special case of this problem in which each agent has a single item in each of its have-list and wishlist and all the items have the same value. On the other hand, we show the problem is NP-Hard (Theorem 2).

In the following sections we formulate the problem as a bipartite graph-matching problem with additional barter constraints. Our algorithm BarterDR is based on rounding the fractional allocation of the natural LP relaxation to get a feasible integral allocation. A direct application of existing rounding algorithms (such as that of Gandhi et al. (2006)) to this fractional allocation can result in a worst case where some agents give away all their items and receive none in exchange. This is wholly unacceptable for any deployed centralized exchange. In contrast, our main result ensures BarterDR allocations have reasonable net value for all agents; more precisely, each agent gives and receives the same value in expectation, and the absolute difference between given and received values is at most the value of their most valuable item (Theorem 1).

1.1. Problem formulation:

Suppose we are given a ground set of items to be swapped, item values where denotes the non-negative real numbers, and a community of agents where denotes . Each agent possesses items and has wishlist . A valid allocation of these items involves agents swapping their items with other agents that desire said item. Let denote the utility gained when agent gives an item to an agent . The goal of is to find a valid allocation of maximum utility subject to no agent giving away more value than they received. Formally, any valid allocation is represented by the function such that if agent gives agent item and , otherwise. Additionally, each agent (i) receives only desired items, meaning if , and otherwise; and (ii) gives away only items they possess, thus if , and otherwise. We begin by defining a special variant of a graph matching problem called Value-balanced Matching (VBM).

Definition 0.

Value-balanced Matching (VBM) Suppose there is bipartite graph with vertex values . Let each edge have weight , , and . For a given matching , let and , where denotes disjoint union. The goal of VBM is to find of maximum weight subject to, for each , the value of items matched in and are equal i.e., .

Lemma 1.0.

For any instance of , there exists a corresponding instance of VBM such that the utility of an optimal allocation in the instance is equal to the optimal weight matching in the corresponding instance and vice-versa.

Proof.

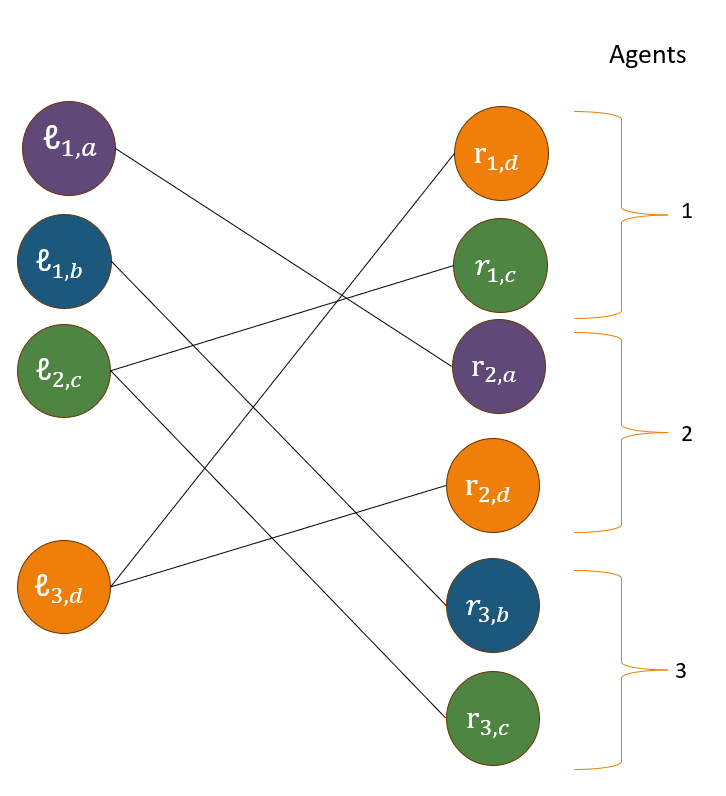

Given a instance given by we can reduce it to a corresponding instance of VBM via the construction of an appropriate bipartite graph with vertex values. For each agent , build the vertex sets and . Then the bipartite graph of interest has vertex sets and , as well as edge set . For each edge , the weight of is given by . Each vertex and has vertex values i.e., . Notice that the edges in represent vertices belonging the same item, hence they have identical values. Refer to Figure 1 for an example constructing a VBM instance.

Suppose we are given any feasible allocation for an instance of with utility of , then we can say construct a feasible matching corresponding to instance of weight . We construct the matching as follows. For any and , if , then add the edge to . The total weight of the matching is given by . It remains to show that is indeed a value-balanced matching. We begin by showing that is a feasible matching to the VBM problem. Since is a valid allocation to , we can say that for any agent , the value of the items received is equal to the value of the items given away i.e., . This implies that for each , we have , therefore, . Thus, is a feasible VBM matching.

To prove the other direction we begin by assuming that is a feasible matching to of weight . Given , we can construct a feasible allocation by assigning if the edge , and otherwise. Notice that is a valid allocation; for any agent , the value of items received is equal to the value of the items given away. This is because is a feasible matching to VBM, meaning for any , implying that . The utility of allocation is given by This completes our proof. ∎

Corollary 0.

is equivalent to VBM.

The proof of the corollary directly follows from Lemma 2.
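The construction in the proof of the lemma above can be sketched in a few lines of Python. This is only an illustration under assumptions: the input format (`have`, `wish`, `value`, `utility`) and the vertex encoding `("L", agent, item)` / `("R", agent, item)` are hypothetical choices made here, not notation from the paper.

```python
def build_vbm(have, wish, value, utility):
    """Build a VBM instance from a barter instance (illustrative input format).

    have[i], wish[i]: sets of items agent i owns / wishes for (assumed format).
    value[a]: shared value of item a.
    utility(i, j, a): utility gained when agent i gives item a to agent j.
    """
    left = [("L", i, a) for i in sorted(have) for a in sorted(have[i])]
    right = [("R", j, a) for j in sorted(wish) for a in sorted(wish[j])]
    # Each vertex inherits the value of the item it represents.
    vertex_value = {u: value[u[2]] for u in left + right}
    # An edge joins the copy of item a owned by agent i to the copy of the
    # same item wished for by a different agent j.
    edge_weight = {
        (l, r): utility(l[1], r[1], l[2])
        for l in left
        for r in right
        if l[2] == r[2] and l[1] != r[1]
    }
    return left, right, edge_weight, vertex_value


# Toy instance: agents 0 and 1 each own the game the other one wants.
have = {0: {"catan"}, 1: {"risk"}}
wish = {0: {"risk"}, 1: {"catan"}}
value = {"catan": 30, "risk": 30}
left, right, edge_weight, vertex_value = build_vbm(
    have, wish, value, utility=lambda i, j, a: value[a]
)
```

Because both endpoints of an edge stand for the same item, they always carry the same vertex value, which is the property the proof relies on.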

1.2. LP formulation of VBM

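Any feasible matching $M$ in $G$, encoded by setting $x_e = 1$ if $e \in M$ and $x_e = 0$ otherwise for each $e \in E$, is feasible in the following Integer Program (IP):

\begin{align}
\max \quad & \sum_{e \in E} w_e x_e \tag{1a} \\
\text{subj. to} \quad & x(\delta(\ell)) \le 1, && \forall \ell \in L \tag{1b} \\
& x(\delta(r)) \le 1, && \forall r \in R \tag{1c} \\
& \sum_{\ell \in L_i} v_\ell \, x(\delta(\ell)) = \sum_{r \in R_i} v_r \, x(\delta(r)), && \forall i \in [n] \tag{1d} \\
& x_e \in \{0, 1\}, && \forall e \in E. \tag{1e}
\end{align}

Here $G = (L \cup R, E)$ is the bipartite graph of the VBM instance with vertex values $v$ and edge weights $w$, and $L_i$ and $R_i$ are the vertices corresponding to agent $i$’s owned and wished-for items. For $u \in L \cup R$, we denote $x(\delta(u)) := \sum_{u' \in N(u)} x_{uu'}$, where $N(u)$ denotes the open neighborhood of $u$, i.e., $N(u) = \{u' : (u, u') \in E\}$. Thus an edge $(\ell, r) \in E$ with $\ell \in L_i$, $r \in R_j$ and $x_{\ell r} = 1$ says agent $i$ gives the item represented by $\ell$ to agent $j$ in the corresponding barter instance.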

Lemma 1.0.

Proof.

IP (1) is clearly equivalent to VBM and VBM is equivalent to , per Corollary 3. The objective of IP 1, is the allocation’s utility. By relaxing (1e) to for we arrive at the natural LP relaxation of , namely , which can only have a greater optimal objective value. ∎

We conclude this section by a simple note. For each we may set and recover the objective of maximizing the collective value received by all agents. Nevertheless, our results hold even if is set arbitrarily. For example, the algorithm designer could place greater value on certain item transactions, or they may maximize the sheer number of items received by uniformly setting .
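As a concrete reading of the program above, the following Python sketch checks a candidate (possibly fractional) solution against the matching and barter constraints and returns its objective. It rests on assumptions: the constraint forms are the ones suggested by the surrounding text (each vertex has fractional degree at most 1, and each agent's value-weighted degrees balance), and the function name, the vertex encoding, and the `agent_of` map are hypothetical.

```python
from fractions import Fraction


def lp_objective_if_feasible(x, weight, vertex_value, agent_of):
    """Return the objective of x if it satisfies the relaxed constraints, else None.

    x, weight: dicts keyed by edges (l, r); vertex_value: dict keyed by vertices.
    agent_of[u]: agent whose barter constraint vertex u participates in.
    Vertices are tuples whose first entry is "L" (owned) or "R" (wished for).
    """
    if any(not (0 <= x[e] <= 1) for e in x):      # relaxed integrality
        return None
    degree = {u: Fraction(0) for u in vertex_value}
    for (l, r), val in x.items():
        degree[l] += val
        degree[r] += val
    if any(d > 1 for d in degree.values()):       # matching constraints
        return None
    net = {}
    for u, d in degree.items():                   # barter constraints
        sign = 1 if u[0] == "L" else -1           # value given minus value received
        net[agent_of[u]] = net.get(agent_of[u], 0) + sign * vertex_value[u] * d
    if any(n != 0 for n in net.values()):
        return None
    return sum(weight[e] * x[e] for e in x)


# Agent 0 owns catan and wants risk; agent 1 owns risk and wants catan.
l0, r0 = ("L", "catan"), ("R", "risk")
l1, r1 = ("L", "risk"), ("R", "catan")
agent_of = {l0: 0, r0: 0, l1: 1, r1: 1}
vertex_value = {l0: 30, r1: 30, l1: 30, r0: 30}
weight = {(l0, r1): 30, (l1, r0): 30}
half = Fraction(1, 2)
balanced = lp_objective_if_feasible(
    {(l0, r1): half, (l1, r0): half}, weight, vertex_value, agent_of
)
one_sided = lp_objective_if_feasible(
    {(l0, r1): Fraction(1), (l1, r0): Fraction(0)}, weight, vertex_value, agent_of
)
```

The half-and-half swap is feasible for the relaxation, while the one-sided transfer is rejected because agent 0 would give value without receiving any.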

2. Preliminaries: GKPS dependent rounding

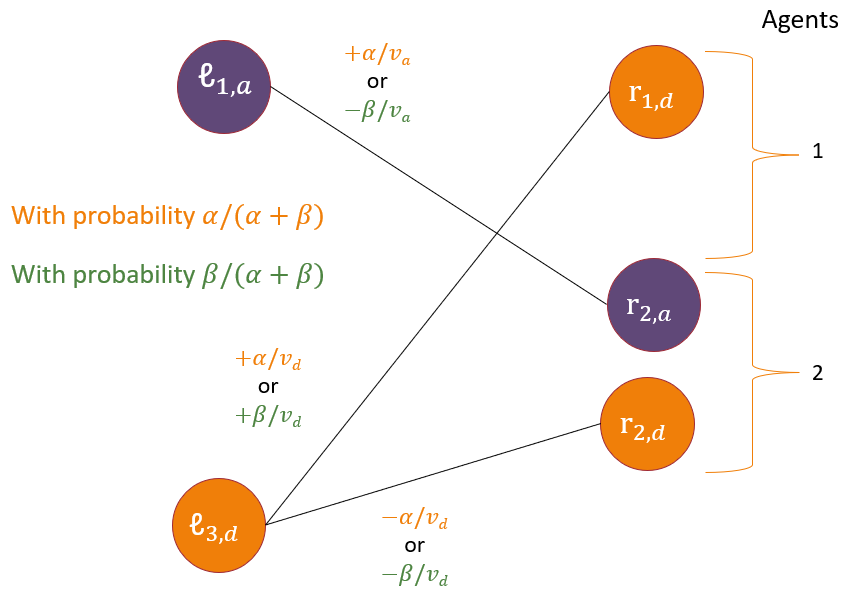

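Our results build on the dependent rounding algorithm due to Gandhi et al. (2006), henceforth referred to as GKPS-DR. GKPS-DR is an algorithm that takes $x \in [0,1]^{E}$ defined over the edge set $E$ of a bipartite graph and outputs $X \in \{0,1\}^{E}$. In each iteration GKPS-DR considers the graph of floating edges (those edges $e$ with $0 < x_e < 1$) and selects a maximal path or cycle $P$ on floating edges. The edges of $P$ are decomposed into alternate matchings $M_1$ and $M_2$ and rounded in the following way. Fix $\alpha = \min\{\gamma > 0 : (\exists e \in M_1 : x_e + \gamma = 1) \lor (\exists e \in M_2 : x_e - \gamma = 0)\}$ and $\beta = \min\{\gamma > 0 : (\exists e \in M_1 : x_e - \gamma = 0) \lor (\exists e \in M_2 : x_e + \gamma = 1)\}$. Thus, each $x_e$ is updated to $x'_e$ according to one of the following disjoint events: with probability $\beta/(\alpha+\beta)$, $x'_e = x_e + \alpha$ for $e \in M_1$ and $x'_e = x_e - \alpha$ for $e \in M_2$; with probability $\alpha/(\alpha+\beta)$, $x'_e = x_e - \beta$ for $e \in M_1$ and $x'_e = x_e + \beta$ for $e \in M_2$.

The selection of $\alpha$ and $\beta$ ensures at least one edge is rounded to $0$ or $1$ in every iteration. GKPS-DR guarantees (P1) marginal, (P2) degree preservation, and (P3) negative correlation properties: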

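- (P1) $\forall e \in E$, $\Pr[X_e = 1] = x_e$.

- (P2) $\forall u \in L \cup R$ and with probability 1, $X(\delta(u)) \in \{\lfloor x(\delta(u)) \rfloor, \lceil x(\delta(u)) \rceil\}$, where $x(\delta(u))$ denotes the sum of $x_e$ over the edges $e$ incident to $u$.

- (P3) $\forall u \in L \cup R$, $\forall S \subseteq \delta(u)$, $\forall c \in \{0, 1\}$, $\Pr[\bigwedge_{e \in S} (X_e = c)] \le \prod_{e \in S} \Pr[X_e = c]$.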

Remark 1.

When GKPS-DR rounds a path between vertices and , the signs of the changes to and are equal if and only if and belong to different graph sides.
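One rounding iteration of GKPS-DR on a single path can be sketched as follows. This is a minimal illustration based on the standard description in Gandhi et al. (2006): the edges of the path are split into two alternating matchings, and the values shift up on one matching and down on the other by the largest step that keeps every edge in [0, 1]. The function names and the edge encoding are assumptions made for this sketch.

```python
from fractions import Fraction
import random


def gkps_outcomes(x, path):
    """Return the two possible updates [(probability, new_x), ...] of one step.

    x: dict edge -> Fraction strictly between 0 and 1 on the edges of `path`.
    path: edges listed in order along a path (or even cycle) of floating edges.
    """
    m1, m2 = path[0::2], path[1::2]  # alternate matchings
    alpha = min([1 - x[e] for e in m1] + [x[e] for e in m2])
    beta = min([x[e] for e in m1] + [1 - x[e] for e in m2])

    def shifted(delta):
        new_x = dict(x)
        for e in m1:
            new_x[e] += delta
        for e in m2:
            new_x[e] -= delta
        return new_x

    # The probabilities are chosen so that every edge keeps its expectation.
    return [
        (beta / (alpha + beta), shifted(alpha)),
        (alpha / (alpha + beta), shifted(-beta)),
    ]


def gkps_step(x, path, rng=random):
    """Sample one of the two outcomes."""
    (p_up, x_up), (_, x_down) = gkps_outcomes(x, path)
    return x_up if rng.random() < p_up else x_down


# Path l1 - r1 - l2 - r2 with three floating edges.
e0, e1, e2 = ("l1", "r1"), ("l2", "r1"), ("l2", "r2")
path = [e0, e1, e2]
x = {e0: Fraction(1, 2), e1: Fraction(1, 3), e2: Fraction(3, 4)}
outcomes = gkps_outcomes(x, path)
```

In both outcomes the interior vertices r1 and l2 keep their fractional degrees, and only the two path endpoints see a change, which is the situation Remark 1 describes.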

3. Related work

Centralized barter exchanges have been studied by several others in the context of kidney exchanges (Abraham et al., 2007; Ashlagi et al., 2018; Aziz et al., 2021). Our problem generalizes a well-studied kidney-exchange problem in the following way. The Kidney Exchange Problem (KEP) is often formulated as directed cycle packing in patient-donor compatibility graphs (Abraham et al., 2007), where each node in the graph corresponds to a patient-donor pair and directed edges between nodes indicate compatibility. Abraham et al. (2007) and Biró et al. (2009) observed this problem reduces to bipartite perfect matching, which is solvable in polynomial time. We show our problem is NP-Hard and thus resort to providing a randomized algorithm with approximate guarantees on the agents’ net values via LP relaxation followed by dependent rounding.

There has been extensive work on developing dependent rounding techniques, that round the fractional solution in some correlated way to satisfy both the hard constraints and ensure some negative dependence amongst rounded variables that can result in concentration inequalities. For instance, the hard constraints might arise from an underlying combinatorial object such as a packing (Brubach et al., 2017), spanning tree (Chekuri et al., 2010), or matching (Gandhi et al., 2006) that needs to be produced. In our case, the rounded variables must satisfy both matching (1b), (1c), and barter constraints (1d) (i.e., each agent gives the items of same total value as it received). Gandhi et al. (2006) developed a rounding scheme where the rounded variables satisfy the matching constraints along with other useful properties. Therefore, we adapt their rounding scheme (to satisfy matching constraints) followed by a careful rounding scheme that results in rounded variables satisfying the barter constraints.

Centralized barter exchanges are well-studied under various barter settings. For instance, Abraham et al. (2007) showed that the bounded length edge-weighted directed cycle packing is NP-Hard which led to several heuristic based methods to solve these hard problems, e.g., by using techniques of operations research (Constantino et al., 2013; Glorie et al., 2014; Plaut et al., 2016; Carvalho et al., 2021), AI/ML modeling (McElfresh et al., 2020; Noothigattu et al., 2020). Recently several works focused on the fairness in barter exchange problems (Abbassi et al., 2013; Fang et al., 2015; Klimentova et al., 2021; Farnadi et al., 2021). Our work adds to the growing body of research in theory and heuristics surrounding ubiquitous barter exchange markets.

4. Outline of our contributions and the paper

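Firstly, we introduce the barter with shared valuations problem, a natural generalization of edge-weighted directed cycle packing, and show that it is NP-Hard to solve the problem exactly. Our main contribution is a randomized dependent rounding algorithm BarterDR with provable guarantees on the quality of the allocation. The following definitions help present our results. Suppose we are given an integral allocation $X$; we define the net value loss of each agent $i$ (i.e., the violation in the barter constraint (1d)) as

$$D_i(X) := \sum_{\ell \in L_i} v_\ell \, X(\delta(\ell)) - \sum_{r \in R_i} v_r \, X(\delta(r)), \tag{2}$$

where $L_i$ and $R_i$ are the vertices corresponding to agent $i$’s owned and wished-for items, $v$ gives the item values, and $X(\delta(u))$ is the number of selected edges incident to vertex $u$.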

Our main contribution is a rounding algorithm BarterDR that satisfies both matching ((1b) and (1c)) and barter constraints ((1d)) as desired in multi-agent exchanges. Recall that the rounding algorithm GKPS-DR (indeed a pre-processing step of BarterDR) rounds the fractional matching to an integral solution enjoying the properties mentioned in Section 2. The main challenge in our problem is satisfying the barter constraint. Here, a direct application of GKPS-DR alone can result in a worst case violation of on , corresponding to the agent losing all their items and gaining none (see the example in the Appendix). However, our algorithm BarterDR rounds much more carefully to ensure, for each agent , is at most , i.e., the most valuable item in . The two following theorems provide lower and upper bounds on tractable (i.e., (2)) guarantees for . BarterDR on a bipartite graph is worst-case time where . We view Theorem 1 as our main result.
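To make the net value loss concrete, here is a small Python sketch. It reads the loss as value given minus value received, and it treats the guarantee as "loss at most the value of the agent's most valuable owned or wished-for item", following the abstract; whether the bound is strict is not settled by this sketch. The function names and the transfer format are hypothetical.

```python
def net_value_loss(transfers, value, agents):
    """Value given minus value received, per agent.

    transfers: list of (giver, receiver, item) triples (assumed format).
    """
    loss = {i: 0 for i in agents}
    for giver, receiver, item in transfers:
        loss[giver] += value[item]
        loss[receiver] -= value[item]
    return loss


def within_bound(loss_i, items_i, value):
    """Check the loss against the agent's most valuable owned or wished-for item."""
    return loss_i <= max(value[a] for a in items_i)


value = {"catan": 30, "risk": 25, "chess": 5}
# Agent 0 gives away both of its games and receives nothing in exchange.
one_sided = net_value_loss([(0, 1, "catan"), (0, 2, "risk")], value, agents=[0, 1, 2])
# Agent 0 swaps catan for risk: a small loss, below its most valuable item.
swap = net_value_loss([(0, 1, "catan"), (1, 0, "risk")], value, agents=[0, 1, 2])
```

The one-sided allocation is the kind of outcome a direct application of GKPS-DR can produce, while the swap stays within the bound.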

Theorem 1.

Given a instance, BarterDR is an efficient randomized algorithm achieving an allocation with optimal utility in expectation and where, for all agents , with probability and .

Theorem 2.

Deciding whether a instance has a non-empty valid allocation with for all agents is NP-hard, even if all item values are integers.

Owing to its similarities to GKPS-DR, BarterDR enjoys similar useful properties:

Outline of the paper

In Section 5 we describe BarterDR (Algorithm 1), our randomized algorithm for , and its subroutines FindCCC and CCWalk in detail. Next, we give proofs and proof sketches for Theorems 1 and 3.

5. BarterDR: dependent rounding algorithm for

Notation

BarterDR is a rounding algorithm that proceeds in multiple iterations, therefore we use a superscript to denote the value of a variable at the beginning of iteration . An edge is said to be floating if . Analogously, let , is said to be a floating vertex if and the sets of floating vertices are and . . is defined similarly. . denotes the connected component in containing vertex . Define , for each , to be the set of participating vertices in each barter constraint. We say two vertices are partners if there exists such that and . Note if and are partners, then they are distinct vertices corresponding to items (owned or desired) of some common agent . In iteration , a vertex is said to be partnerless if ; i.e., is the only floating vertex in . We use the shorthand to denote and are partners. Edges and vertices not floating are said to be settled: once an edge (vertex ) is settled BarterDR will not modify (). For vertices and , denotes a simple path from to . Define to be , as defined in (2), but with variables instead of . The fractional degree of refers to .

Once an edge is settled, its value does not change. In each iteration BarterDR looks exclusively at the floating edges and the graph induced by them. Namely, where and is defined analogously. In each iteration, at least one edge or vertex becomes settled, i.e., . Therefore BarterDR terminates in iteration where and .

Algorithm and analysis outline.

BarterDR begins by making acyclic via the pre-processing step in Section 5.1. Next, BarterDR proceeds as follows. While there are floating edges find an appropriate sequence of paths constituting a CCC or CCW (defined in Section 5.2). The strategy for judiciously rounding is fleshed out in Section 5.3. Finally, Section 5.4 concludes with proofs for Theorems 1 and 3.

5.1. Pre-processing: remove cycles in

The pre-processing step consists of finding a cycle via depth-first search in the graph of floating edges and rounding via GKPS-DR until there are no more cycles. Let denote the LP solution and denote the output of the pre-processing step. BarterDR begins on .

GKPS-DR on cycles never changes fractional degrees, i.e., . Lemma 3 is used to construct CCC’s and CCW’s, and it is the raison d’être for the pre-processing step.
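The cycle-finding part of the pre-processing step can be sketched with a depth-first search over the graph of floating edges. This is an illustrative sketch only: the function name and edge encoding are assumptions, and the rounding of each cycle found (done by GKPS-DR in the paper) is not shown.

```python
def find_cycle(edges):
    """Return the vertices of some cycle in a simple undirected graph, or None."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    parent = {}

    def dfs(u):
        for w in adj[u]:
            if w == parent[u]:
                continue  # do not walk back along the tree edge
            if w in parent:
                # w was visited earlier and is an ancestor of u: walk the
                # tree path from u up to w to collect the cycle.
                cycle, node = [w], u
                while node != w:
                    cycle.append(node)
                    node = parent[node]
                return cycle
            parent[w] = u
            found = dfs(w)
            if found:
                return found
        return None

    for root in adj:
        if root not in parent:
            parent[root] = None
            found = dfs(root)
            if found:
                return found
    return None


# A 4-cycle l1 - r1 - l2 - r2 - l1 with a pendant edge l2 - r3.
cycle = find_cycle(
    [("l1", "r1"), ("l2", "r1"), ("l2", "r2"), ("l1", "r2"), ("l2", "r3")]
)
# A path has no cycle, so pre-processing would stop here.
forest = find_cycle([("l1", "r1"), ("l2", "r1"), ("l2", "r2")])
```

Repeating "find a cycle, round it" until `find_cycle` returns `None` leaves a forest of floating edges, which is what the later lemmas assume.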

Lemma 5.0.

The pre-processing step is efficient and gives for all agents with probability .

Proof.

The pre-processing step runs GKPS-DR on until no cycles remain. GKPS-DR on a cycle guarantees that at the end of each iteration the fractional vertex degrees remain unchanged. Moreover, the full GKPS algorithm takes time , bounding the pre-processing step’s runtime which can be seen as GKPS with an early stoppage condition. ∎

Lemma 5.0.

Let be a vector of floating edges over a bipartite graph . Then the number of floating vertices in is not 1.

Proof.

Let be the sole floating vertex, i.e., , and, without loss of generality, let . For , let . Then . But being the only floating vertex implies and . ∎

Lemma 5.0.

Each connected component of the graph of floating edges has at least two floating vertices.

Proof.

Fix an arbitrary connected component of . If has 0 floating vertices, then each vertex has degree at least two because . This implies the existence of a cycle in , but this cannot happen as the pre-processing step eliminates all cycles. Applying Lemma 2 to , the only remaining possibility is that has at least two floating vertices. ∎
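The statement can be checked on small examples with the sketch below. It assumes that a vertex is floating exactly when its fractional degree (the sum of the values on its incident edges) is not an integer, which is how the proofs above use the term; the function name and input format are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction


def floating_vertices_by_component(x):
    """For each connected component of the floating edges, list its floating vertices.

    x: dict edge (u, v) -> Fraction strictly between 0 and 1.
    """
    degree = defaultdict(Fraction)
    adj = defaultdict(set)
    for (u, v), val in x.items():
        assert 0 < val < 1, "only floating edges are expected"
        degree[u] += val
        degree[v] += val
        adj[u].add(v)
        adj[v].add(u)
    seen, result = set(), []
    for start in adj:
        if start in seen:
            continue
        component, stack = [], [start]
        seen.add(start)
        while stack:  # iterative traversal of one component
            u = stack.pop()
            component.append(u)
            for w in adj[u]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        # A vertex is floating when its fractional degree is not an integer.
        result.append({u for u in component if degree[u].denominator != 1})
    return result


x = {
    ("l1", "r1"): Fraction(1, 2),
    ("l2", "r1"): Fraction(1, 2),  # r1 has degree 1, so it is not floating
    ("l3", "r3"): Fraction(1, 3),
}
components = floating_vertices_by_component(x)
```

In an acyclic component every leaf has a fractional degree strictly between 0 and 1, so the two floating vertices the lemma promises can always be found among the leaves.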

5.2. Construction of CCC’s and CCW’s via FindCCC

This section introduces CCC’s and CCW’s. The definition of these structures facilitates rounding edges while respecting the barter constraints each iteration. The subroutines for constructing CCC’s and CCW’s, FindCCC and CCWalk, are described in Algorithms 2 and 3. The correctness of these subroutines, and thus the existence of CCC’s and CCW’s, follows from Lemma 5.

Definition 0.

A connected component cycle (CCC) is a sequence of paths such that, letting be the paths’ endpoint vertices,

-

(1)

, (taking ),

-

(2)

, ,

-

(3)

, belongs to a unique connected component, and

-

(4)

, and are floating vertices.

Instead, we have a connected component walk (CCW) if criteria 3) and 4) are met but 1) and 2) are relaxed to: 1) , and and are partnerless; and 2) .

Recall that a rounding iteration is fixed so whether a vertex is floating or partnerless is well-defined. When is rounded the set of vertices whose fractional degrees change is precisely . Requirements 1 and 2 of a CCC say and are partners and they do not have any other partner vertices in . Comparably, for CCW’s these requirements imply the same for all vertices but the “first” and “last,” which are partnerless. Therefore, for CCC’s and CCW’s the vertices in respectively appear in and distinct barter constraints. The requirements in the definitions of CCC and CCW come in handy during the analysis because: each path belongs to a different connected component hence they are vertex and edge disjoint; if a barter constraint has exactly two vertices in then these vertices’ fractional degree changes can be made to cancel each other out in the barter constraint; and floating vertices ensure paths can be rounded in a manner analogous to GKPS-DR. For comparison, GKPS-DR also needed paths with floating endpoints, but maximal paths always have such endpoints whereas the paths of need not be maximal. Consequently, the requirement that paths of have floating endpoints must be imposed.

Uncrossing the half-CCWs.

We define what we mean by ”crossing” half-CCW’s in FindCCC Algorithm 2 and how to resolve this into a CCC. Using and build . can be seen as the sequence of path endpoints (i.e., in CCWalk) resulting from a run of , which possibly did not stop when it should have returned a CCC. By the half-CCW’s ”crossing” we mean that in some iteration of the while-loop of CCWalk either a connected component is revisited or was partners with a vertex previously visited. But these cases are precisely Algorithms 3 and 3 from CCWalk where a CCC is resolved and returned.

Lemma 5.0.

If has no cycles, FindCCC efficiently returns a CCC or CCW.

Proof of Lemma 5.

for is guaranteed to be acyclic by the pre-processing step. Recall, this acyclic property is used by Lemma 3 to guarantee each CC has at least two floating vertices. This ensures Algorithm 3 of FindCCC is well defined.

Let be the sequence of vertices at the beginning of iteration , and let denote without some vertex . Like in CCWalk, “” denotes sequence concatenation. Let and be the first and last vertices of ; note does not change over iterations. We prove the correctness of CCWalk with the aid of the following loop invariants maintained at the beginning of each iteration of the while-loop.

-

(I1)

has no partners in .

-

(I2)

, has exactly one partner in .

-

(I3)

is the only vertex from in .

-

(I4)

, there are exactly two vertices from contained in .

Proceed by induction. When , so so all invariants are (vacuously) true. Let be the predicate saying all invariants hold at the beginning of iteration . We assume and show .

If there is an iteration , then CCWalk did not return during iteration and must have added to . If had a partner in then iteration would have been the last as a CCC would have been returned. Therefore (I1) holds at the beginning of iteration .

By , , has exactly one partner in and has zero partners in . By construction is selected to be the partner of so now and have exactly one partner each in . Therefore (I2) holds at the beginning of iteration .

If were to not meet (I3) then it must mean that either or . But in this case the connected component was revisited during iteration and a CCC would have been returned. Therefore, (I3) holds.

By construction . Moreover, otherwise was revisited and iteration would have been the last. Therefore, (I4) continues to hold.

Moreover note the while-loop runs at most many times since revisiting a connected component of causes the function to return.

Next we leverage the loop invariants to prove CCWalk returns valid CCC’s. First observe that by construction of , Properties 1) and 4) of a CCC are always immediate. CCWalk returns CCC’s when a connected component is revisited or when the added already has partners present in . We inspect both return cases to ensure indeed a valid CCC is returned. Fix some iteration .

Suppose a connected component , is revisited. Then is returned. By (I1), and are each others only partners. Recall by construction , and , so by (I2) it follows that each of has exactly one partner amongst themselves. Therefore, Property 2) of CCC’s holds. It remains to check Property 3). By (I4) each of , for belongs to a unique connected component. Therefore, , too, must belong to a unique connected component different from each ; otherwise there was a connected component containing , , and contradicting one of (I3) and (I4) (depending on whether or ).

Instead suppose a CCC is returned because had a partner . Let be the last vertex in such that . If then is clearly a CCC. So suppose . It must be that for some ; otherwise is not the last such vertex. So focus on proving is a CCC. Property 2) follows because and are each other’s only partners by (I1); and are each other’s only partners because by (I2) previously had only partner but we have cut it out from the CCC; and the rest of the pairs have unique partners by (I2). Lastly, Property 3) holds because was not a revisited CC so and share a unique CC, and the rest of the path endpoints belong to distinct CC’s by (I4).

The last remaining case is that where the two half-CCW’s overlap. This can be resolved into a CCC in the manner already described in the main text under the paragraph Uncrossing the half-CCW’s of Section 5.2.

Runtime of FindCCC

We conclude with comments about the runtime of FindCCC. We can build a hash-map mapping vertices to a set of all their floating partners. This hash-map can be constructed in time . Similarly, we can build a set to keep track of visited connected components. Finding the partner of can be done in time by checking the hash-map, and finding the vertex in can be done by starting a depth first search from until a floating vertex is reached. After rounding the CCC/CCW, remove vertices that became settled from their respective sets of floating partners. Altogether, the depth first searches starting from each altogether visit each vertex and edge at most times before returning and each vertex is removed from the hash-map’s set of floating partners at most once. Therefore, CCWalk runs in time . FindCCC calls CCWalk times and resolving the two-half CCW’s can be thought of as another run of CCWalk, as argued above. Therefore, FindCCC finishes in time . ∎

5.3. Rounding CCC’s and CCW’s

5.3.1. Roundable colorings

Now we shed light on what we mean by carefully rounding the paths of the CCC/CCW . But first we build some intuition. Focus on and for some fixed in case of a CCC (or in the case of a CCW). Since , whatever rounding procedure we use, we want the relative signs of the changes to and to depend on whether and fall on the same or different sides of (these sides being “left” and “right” corresponding to vertex sets and ; equivalently, left and right of “$=$” in (1d)). This way (1d) is preserved after rounding. Likewise, the magnitudes of fractional degree changes to and must be balanced depending on and so that (1d) is preserved for corresponding to . Intuitively, we round (1d) carefully by ensuring that i) the signs of the changes depend on which side of the equation they appear on, and ii) the magnitudes of said changes are weighted by the values of the items they represent. We make this precise via roundable colorings. If and are vertices belonging to different sides of the graph, we say ; otherwise we say .

Definition 0 (Roundable coloring).

The CCC has a roundable coloring if there exists such that i) for all , if and only if ; and ii) for all , if and only if . A roundable coloring for a CCW is defined the same way except ii) becomes , if and only if .

Property i) will ensure see same-sign fractional degree change if and only if , which happens if and only if they appear on the same side (1d). Property ii) is equivalent to Remark 1 and verifies each is roundable in GKPS-DR-inspired manner.

Lemma 5.0.

Every CCC and CCW admits an efficiently computable roundable coloring .

Proof of Lemma 7.

Consider the following heuristic to find a roundable coloring of . Assign an arbitrary color to , say . Because only has two colors, this immediately determines the color of , which depends on whether and are same-side vertices. Again, this immediately determines the color of , which in turn determines the color of , and so on.

If is a CCW then and are partnerless so their colors do not affect the first property. The vertices are colored in the order ensuring that both properties hold. Therefore, this scheme produces a roundable coloring for CCW’s in time .

Instead suppose is a CCC. It only remains to check that . We now verify this. Observe that the greedy algorithm ensures

and

where equals 1 if “” is true and 0 otherwise; this is a slight abuse of notation since is a relation but we are treating it as a boolean function. Expanding by repeated application of the above observations, we have

where is the number of same-side paths and is the number of same-side partners not counting the pair and . Then ensuring we have a roundable coloring reduces to ensuring that is even and is odd. Letting be the total number of same-side partners, the above is equivalent to asking be even, which we now prove.

Let be the number of different-side paths, and let , , respectively be the number of left-left, right-right, and left-right partner pairs. So,

| (3) |

Let be the number of left vertices in the CCC. Clearly, . Look at , this is the number of left vertices remaining after removing the different-side paths in . Since these vertices must be covered by same side paths we must have even. Then, with all congruences taken modulo ,

Plugging the above into (3) gives . Therefore, . ∎
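The greedy procedure from the proof can be sketched as follows. The sketch assumes one specific reading of the two coloring properties, namely that two consecutive endpoints (the two ends of a path, or two partners) receive the same color exactly when they lie on different graph sides, in line with Remark 1 and the way the coloring is used in the proof of Lemma 9. The function name, the color set {1, -1}, and the input format are hypothetical.

```python
from itertools import product


def roundable_coloring(paths, side, cyclic=True):
    """Greedily color path endpoints with +1 / -1.

    paths: list of (a_j, b_j) endpoint pairs in CCC/CCW order.
    side[u]: "L" or "R".
    Assumed rule: consecutive endpoints share a color iff they are on
    different graph sides.
    """

    def next_color(c, u, v):
        return c if side[u] != side[v] else -c

    first = paths[0][0]
    color = {first: 1}  # arbitrary color for the first endpoint
    for j, (a, b) in enumerate(paths):
        if j > 0:
            prev_b = paths[j - 1][1]
            color[a] = next_color(color[prev_b], prev_b, a)  # partner rule
        color[b] = next_color(color[a], a, b)  # path rule
    if cyclic:
        last_b = paths[-1][1]
        # For a CCC the last endpoint is a partner of the first one.
        if next_color(color[last_b], last_b, first) != color[first]:
            raise ValueError("greedy coloring is inconsistent around the cycle")
    return color


# Try every left/right assignment of the six endpoints of a 3-path CCC.
endpoints = [("a1", "b1"), ("a2", "b2"), ("a3", "b3")]
names = [u for pair in endpoints for u in pair]
colorings = [
    roundable_coloring(endpoints, dict(zip(names, sides)))
    for sides in product("LR", repeat=len(names))
]
```

No side assignment raises an error, which matches the parity argument in the proof: around a cyclic sequence of an even number of endpoints, the number of same-side consecutive pairs is always even.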

5.3.2. Using roundable colorings

Roundable colorings will ensure the signs of changes are as desired. We now introduce notation to use the roundable colorings and ensure that the magnitudes of said changes are scaled according to the values of the items involved.

Although the notation used next is cumbersome, the intuition is to fix “small enough” that all edge variables stay in $[0, 1]$ and vertex fractional degrees stay within their current ceilings and floors but “large enough” that at least one edge or vertex is settled. First, fix the roundable coloring , which is possible per Lemma 7. Next, decompose each path into alternating matchings and such that ; property ii) of guarantees this is possible. In other words, vertex is present in . For readability drop the superscripts briefly and let

| (4) |

and, symmetrically,

| (5) |

Finally, the magnitudes fixed (in analogy to Section 2) are

| (6) |

Both and are well defined as they are the minima of non-empty finite sets. The update proceeds probabilistically as follows: ,

| (7) |

| (8) |

At a high level and are large enough that every rounding iteration at least one vertex or edge is settled. Adding the terms ensures term appearing in (1d) will cancel out nicely, as seen in the proof of Lemma 9.

5.4. Algorithm analysis

Proof sketch of Theorem 3

Except for (J2), the proofs for the invariants are almost identical to those in (Gandhi et al., 2006). This is because BarterDR is crafted so as to be similar to GKPS-DR in the ways necessary for this analysis to carry over. Owing to proofs’ similarities, we defer their full treatment to Appendix B. The proof proceeds via the following invariants maintained at each iteration of BarterDR.

-

(J1)

, .

-

(J2)

and with probability 1, .

-

(J3)

, .

The main place where our proof deviates results from BarterDR choosing and differently. In particular, GKPS-DR selects a single path and at least one of its edges becomes settled each iteration. In BarterDR, the guarantee is that, among all paths of the fixed CCC/CCW, at least one edge or vertex becomes settled each iteration.

Lemma 8.

BarterDR achieves optimal objective in expectation and .

Proof of Lemma 8.

Lemma 9.

If and there exists distinct floating , then .

Proof of Lemma 9.

If no vertex from appears in the CCC/CCW’s endpoints then we are done. So suppose and in this -th rounding iteration. By assumption there exists another floating vertex, namely in iteration when was constructed. Therefore, is not partnerless hence it cannot be the endpoint of a CCW so there exists such that . Moreover, by property 2 of the definition of a CCC/CCW, said is unique. Therefore, and are the only vertices in affecting this iteration ; i.e., . Since , we may assume without loss of generality that and for some (or for a CCW); recall . We know if and only if and belong to opposite graph sides where is the valid coloring function corresponding to , which can be fixed efficiently per Lemma 7.

Consider the two possible rounding events, described in (7). Call these events and . Suppose and are opposite-side vertices, hence . Focus on event (the proof for is exactly the same but replacing with ). Under event we have

A moment’s reflection here shows that per the definitions in (4), (5) and (6). Note the factor of “” appears because belongs to for some . Conveniently, this leaves us with

| (9) | ||||

| (10) |

using the fact . Without loss of generality let . Therefore, expanding :

| (11) |

Having fixed , we know if and only if . Thus, take out and from the sums and substitute (10) to have

| (12) |

Now observe that for all . Moreover, if and only if , so we can reabsorb the terms “” and “” into their respective summations; thus yielding . But this is precisely , which we’ve assumed to be 0. ∎

Proof of Theorem 1.

It is straightforward to check that after solving there are at most floating edges. Each iteration of the pre-processing step finds a cycle, say using depth-first search, and rounds said cycle in time with at least one edge being settled every time a cycle is rounded. Therefore, the pre-processing step takes time at most . Similarly, FindCCC takes time to find and round a CCC or CCW. In each iteration in which a CCC/CCW is rounded, at least one edge or vertex becomes settled. Therefore BarterDR runs in time .

Let be like in (2) but with variables instead of . Then Lemma 9 guarantees that for each agent , implies until has exactly one floating vertex (if this happens at all). This means if in some iteration the number of floating vertices in went from at least 2 to 0, then by the degree preservation invariant (J2), proved in the proof of Theorem 3, and we are done. Therefore, the only case we must consider is when there is a solitary floating vertex . Let be the first iteration that started with having a sole vertex with . Then by expanding

| (13) | ||||

| (14) | ||||

| (15) | ||||

| (16) | ||||

| (17) |

which is our desired bound for Theorem 1. Equation (14) follows because we assume (as corresponds to the LP solution and the pre-processing step thus guarantees ) and is the first iteration where contains exactly one floating vertex; therefore, by induction and Lemma 9, . Inequality (16) follows from the triangle inequality. The strict inequality in (17) follows because was the sole floating vertex of in iteration ; hence by Lemma 2, and .

By assumption, for all since is an optimal solution to the corresponding and the pre-processing step ensures . Then, by Lemma 9, the only ’s that are not necessarily preserved are those where ends up with exactly one floating vertex in some algorithm iteration . As argued above, this case leads to . Together with Lemma 8 this completes the proof. ∎

As a consequence of Theorems 1 and 3:

Corollary 0.

with all items having equal values is integral.

To see this note that if all item values are equal then setting them all equal to gives an equivalent instance with . Then the guarantee recovers . In this special case, our randomized algorithm recovers an integral solution to the LP, proving its integrality.

6. Extension of to multiple copies of each item

In Section 1.1 we formulated where each agent has and wants at most one copy of any item . We now consider the natural generalization where agents may swap an arbitrary number of item copies. In addition to the previous inputs, for each agent , we take capacity functions and denoting the (non-negative) number of copies owned and desired of each . For simplicity we define for and for . Analogously, now and says agent gives copies of item to agent . Much like before, a valid allocation is one where each agent (i) receives only desired items within capacity, meaning ; and (ii) gives away only items they possess within capacity, thus . The goal of remains the same: to find a valid allocation of maximum utility (now defined as ) subject to no agent giving away more value than they receive (where the values given and received by agent are now and , respectively).

Like before, admits the following similar IP formulation with LP relaxation after relaxing (18e). For we relax to be in .

| (18a) | ||||||

| (18b) | subj. to | |||||

| (18c) | ||||||

| (18d) | ||||||

| (18e) | . | |||||
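The validity conditions for the capacitated setting, as stated in prose at the start of this section, can be checked mechanically. The sketch below is illustrative only: the function name, the `(giver, receiver, item)` keying of the allocation, and the nested-dictionary capacities are assumptions, not notation from the formulation.

```python
from collections import defaultdict

def is_valid_allocation(alloc, owned, wanted, value):
    """Check the capacitated validity conditions for an integral allocation.

    alloc:  {(giver, receiver, item): copies transferred}
    owned:  {agent: {item: copies owned}}     (give-side capacities)
    wanted: {agent: {item: copies desired}}   (receive-side capacities)
    value:  {item: positive shared value}
    """
    given = defaultdict(int)
    received = defaultdict(int)
    given_value = defaultdict(int)
    received_value = defaultdict(int)
    for (giver, receiver, item), copies in alloc.items():
        if copies < 0:
            return False
        given[(giver, item)] += copies
        received[(receiver, item)] += copies
        given_value[giver] += copies * value[item]
        received_value[receiver] += copies * value[item]
    # (ii) agents give away only copies they own
    for (agent, item), copies in given.items():
        if copies > owned.get(agent, {}).get(item, 0):
            return False
    # (i) agents receive only copies they want
    for (agent, item), copies in received.items():
        if copies > wanted.get(agent, {}).get(item, 0):
            return False
    # no agent gives away more value than they receive
    agents = set(owned) | set(wanted)
    return all(given_value[a] <= received_value[a] for a in agents)

# Hypothetical two-agent instance.
owned = {"A": {"catan": 2}, "B": {"chess": 1}}
wanted = {"A": {"chess": 1}, "B": {"catan": 2}}
value = {"catan": 10, "chess": 20}
balanced = {("A", "B", "catan"): 2, ("B", "A", "chess"): 1}
lopsided = {("A", "B", "catan"): 1, ("B", "A", "chess"): 1}
```

Here `balanced` passes all three checks, while `lopsided` fails because agent B gives 20 units of value and receives only 10.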

6.1. Equivalence of -Caps with single item copies

By explicitly making distinct items for each capacitated item in a instance we can reduce said instance to one of . Although the resulting and formulation now has a number of vertices exponential in the size of the input, this is not a problem. We only use this instance as a thought experiment to establish that BarterDR will give correct output. To avoid solving any large instances we first solve the polynomial-sized and subsequently observe BarterDR actually has polynomial size input in Lemma 2.

Lemma 1.

Any instance of has a corresponding (larger but) equivalent instance with unit capacities.

Proof of Lemma 1.

The instance with unit copies of each item will be large but it is only a thought experiment; we do not directly solve the corresponding LP nor write the full graph down. Fix an agent and an item in . Make copies of this vertex each with unit capacity, say . Similarly, for an item , make copies . Like before add edges between all vertices corresponding to the same original items. Keep all edge weights the same and use the same corresponding weights for edges between copies i.e., if and then . Call this new set of edges over vertex copies .

To see the two formulations are equivalent, we show , is feasible to if and only if , is feasible to . Moreover, and have the same objective value. Let then for and . Correspondingly let and set all equal to and . if and only if and are feasible. Moreover, both and each contribute to the objective and value given by agent and received by agent .

Therefore we always write and solve and use only as a thought experiment to facilitate the presentation of the problem. It is straightforward to check that the size of is where and similarly . ∎
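The unit-copy expansion used in the proof of Lemma 1 can be sketched in a few lines. This is only a thought-experiment aid, as in the proof: the vertex count grows with the capacities themselves rather than with their bit length. The tuple encoding of vertex copies and the exclusion of edges between an agent and itself are assumptions for illustration.

```python
from itertools import product

def expand_to_unit_copies(owned, wanted):
    """Replace each capacitated (agent, item) vertex by unit-capacity copies.

    owned / wanted: {agent: {item: capacity}}
    Returns (left, right, edges), where left holds give-side copies,
    right holds receive-side copies, and edges join copies of the same
    original item held by different agents.
    """
    left = [(agent, item, c)
            for agent, items in owned.items()
            for item, cap in items.items()
            for c in range(cap)]
    right = [(agent, item, c)
             for agent, items in wanted.items()
             for item, cap in items.items()
             for c in range(cap)]
    edges = [(u, w) for u, w in product(left, right)
             if u[1] == w[1] and u[0] != w[0]]
    return left, right, edges

# Hypothetical instance: A owns 2 copies, B wants 3 copies of the same item.
left, right, edges = expand_to_unit_copies({"A": {"catan": 2}},
                                           {"B": {"catan": 3}})
```

With capacities 2 and 3 the expansion has 2 left copies, 3 right copies, and all 6 edges between them, each of which would inherit the weight of the original edge.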

Let correspond to the graph with vertex copies as outlined in the proof of Lemma 1.

Lemma 6.0.

A solution to has at most floating variables.

7. Fairness

Fairness is an important consideration when resource allocation algorithms are deployed in the real world. Theorem 3 allows for adding fairness constraints to . Previous works such as (Duppala et al., 2023; Esmaeili et al., 2022, 2023) studied various group fairness notions, and formulated the fair variants of problems like Clustering, Set Packing, etc., by adding fairness constraints to the Linear Programs of the respective optimization problems.

Consider a toy example of such an approach where we are given communities of agents coming together to thicken the market. In order to incentivize said communities to join the centralized exchange, the algorithm designer may promise that each community will receive at least units of value on average. By adding the constraints

| (19) |

to the , the algorithm designer ensures that the expected utility of each group is at least . More precisely, (P1) and the linearity of expectation ensure

The same rationale can be extended to provide individual guarantees (in expectation) by adding analogous constraints for each agent. We conclude this brief discussion by highlighting the versatility of LPs and, as a result, of BarterDR.

8. Hardness of

We first prove Theorem 2: it is NP-Hard to find any non-empty allocation satisfying for all agents . By non-empty we mean the corresponding LP solution , i.e., at least one agent gives away an item. The proof proceeds by reducing from the NP-hard problem of Partition.

Definition 0 (Partition).

A Partition instance takes a set of positive integers summing to an integer . The goal of Partition is to determine if can be partitioned into disjoint subsets and such that each subset sums exactly to an integer .

Lemma 8.0.

Given a Partition instance, it can be reduced in polynomial time to a corresponding instance with two agents.

Proof.

Consider an instance of the Partition problem where is a set of integers with . Given , the corresponding instance is constructed as follows.

Let the set of items with item values for each item and for item . There are only two agents. Agent has item lists and . Symmetrically, agent has item lists and . The particular weights of allocating items (i.e., allocation’s utility) do not matter as we only care about whether some non-empty allocation exists. ∎

Recall the goal is to show there exists a non-empty allocation such that for each agent , if and only if the corresponding Partition instance has a solution.

Lemma 8.0.

There exists a polynomial time algorithm to find a non-empty allocation of items with for each agent in the instance if and only if there exists a polynomial time algorithm that solves the corresponding Partition instance.

Proof.

Forward direction (Partition ). Given a solution to the Partition instance, the corresponding instance has a solution in the following manner. Allocate the items to agent and allocate the item to agent . Thus, the value of the items received and given by both the agents is exactly resulting in a non-empty allocation with .

Backward Direction ( Partition). Take a non-empty allocation of items to each agent with . Such a non-empty allocation must have agent giving away their only item, which has value . Therefore implies agent received units of value. Let the items agent received be , letting . Thus, . Therefore, the corresponding partition instance has solution and . ∎

Together, Lemmas 2 and 3 prove Theorem 2: it is NP-hard to find a non-empty allocation of items with for any agent .
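The reduction can be illustrated with a small sketch. It follows the construction as described in the proofs: one agent owns one item per integer of the Partition instance and wants a single special item, while the other agent owns only that special item, worth half the total, and wants the integer items. The agent labels `1` and `2`, the item names `s0, s1, ...` and `t`, and the dictionary layout are hypothetical. The search is brute force and exponential, which is consistent with the hardness result; it is for illustration only.

```python
from itertools import combinations

def partition_to_barter(numbers):
    """Build the two-agent instance from a list of positive integers."""
    total = sum(numbers)
    if total % 2 != 0:
        raise ValueError("total must be even so the special item has integer value")
    values = {f"s{k}": n for k, n in enumerate(numbers)}
    values["t"] = total // 2  # special item worth half the total
    integer_items = set(values) - {"t"}
    owned = {1: integer_items, 2: {"t"}}
    wanted = {1: {"t"}, 2: integer_items}
    return {"values": values, "owned": owned, "wanted": wanted}

def find_balanced_swap(instance):
    """Brute-force search for a non-empty swap with equal given/received value.

    Any such swap has agent 2 giving its only item, so it suffices to look
    for a subset of agent 1's items whose values sum to the special item's.
    """
    values = instance["values"]
    items = sorted(instance["owned"][1])
    target = values["t"]
    for r in range(1, len(items) + 1):
        for subset in combinations(items, r):
            if sum(values[i] for i in subset) == target:
                return {"agent1_gives": set(subset), "agent2_gives": {"t"}}
    return None

yes_instance = partition_to_barter([3, 1, 1, 2, 2, 1])  # total 10, halves of 5
no_instance = partition_to_barter([1, 1, 4])            # total 6, no subset sums to 3
```

A balanced swap found for `yes_instance` directly yields one side of a Partition solution (the items agent 1 gives), and `no_instance` admits only the empty allocation.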

8.1. LP approaches will not beat

A natural follow-up question: is it possible to guarantee for some ? At least using , this is not possible. Consider the family of instances wherein two agents have one item each and desire each other’s item. The agents’ items are and , respectively, with values and . Let the utility be that of maximizing the total value reallocated (i.e., for , , in the corresponding VBM instance). The LP solution corresponds to agent 1 giving a -fraction of her item away and agent 2 giving his entire item away for an optimal objective value of . Even if agent always gives his item away, the objective achieved is only . Therefore, to achieve the optimal objective agent must give away her item with positive probability. This leads to the worst case, . Therefore,

Remark 2.

For any , there exists such that achieves .

A moment’s reflection makes it clear that the only feasible (integral) reallocation to this family of instances is the empty reallocation i.e., the zero vector. A direction to bypass this “integrality gap”-like barrier in might be to write only for instances in which we know has a feasible non-empty integral reallocation. However, this is in and of itself a hard problem as proved in Theorem 2.
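The two-agent family above can be worked through numerically. The sketch below assumes hypothetical values `v1 >= v2 > 0` for the two items (the names are placeholders) and uses the closed form of the LP optimum, which follows from the two balance constraints forcing the fractional values given by each agent to be equal.

```python
from fractions import Fraction

def two_agent_lp(v1, v2):
    """Closed-form LP optimum when each agent owns one item and wants the other's.

    Assumes v1 >= v2 > 0. The balance constraints force x1 * v1 == x2 * v2,
    so the objective x1 * v1 + x2 * v2 is maximized at x2 = 1, x1 = v2 / v1.
    """
    if not v1 >= v2 > 0:
        raise ValueError("expected v1 >= v2 > 0")
    x1 = Fraction(v2) / Fraction(v1)  # fraction of agent 1's item given away
    x2 = Fraction(1)                  # agent 2 gives the whole item
    objective = x1 * v1 + x2 * v2     # total value reallocated, equals 2 * v2
    return x1, x2, objective

def loss_when_agent1_gives(v1, v2):
    """Agent 1's net loss in the integral outcome where both items are swapped."""
    return v1 - v2

x1, x2, objective = two_agent_lp(10, 1)
loss = loss_when_agent1_gives(10, 1)
```

With values 10 and 1, the LP gives away one tenth of the expensive item for an objective of 2, so any rounding that matches this objective in expectation must sometimes swap the expensive item, at which point its owner loses 9 of the 10 units. Shrinking the cheaper item's value pushes this loss arbitrarily close to the full value of the expensive item.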

9. Conclusion

We introduce and study , a centralized barter exchange problem where each item has a value agreed upon by the participating agents. The goal is to find an allocation/exchange that (i) maximizes the collective utility of the allocation such that (ii) the total value of each agent’s items before and after the exchange is equal. Though it is NP-hard to solve exactly, we can efficiently compute allocations with optimal expected utility where each agent’s net value loss is at most a single item’s value. Our problem is motivated by the proliferation of large scale web markets on social media websites with 50,000-60,000 active users eager to swap items with one another. We formulate and study this novel problem with several real-world exchanges of video games, board games, digital goods and more. These exchanges have large communities, but their decentralized nature leaves much to be desired in terms of efficiency.

Future directions of this work include accounting for arbitrary item valuations i.e., different agents may value items differently. Directly applying our algorithm does not work because we rely on the paths of consisting of items with all-equal values. Additionally, we briefly touch on how some fairness criteria interact with our model and algorithm; however, the exploration of many important fairness criteria is left for future work. Finally, we argue why our specific LP-based approach cannot guarantee better than . It remains an open question whether other approaches (LP-based or not) can beat this bound or whether improving this result is NP-hard altogether.

Acknowledgements.

Sharmila Duppala, Juan Luque and Aravind Srinivasan are all supported in part by NSF grant CCF-1918749.

References


- Abbassi et al. (2013) Zeinab Abbassi, Laks V. S. Lakshmanan, and Min Xie. 2013. Fair Recommendations for Online Barter Exchange Networks. In International Workshop on the Web and Databases.

- Abraham et al. (2007) David J. Abraham, Avrim Blum, and Tuomas Sandholm. 2007. Clearing Algorithms for Barter Exchange Markets: Enabling Nationwide Kidney Exchanges. In Proceedings of the 8th ACM Conference on Electronic Commerce (EC ’07). Association for Computing Machinery, New York, NY, USA, 295–304. https://doi.org/10.1145/1250910.1250954

- Ashlagi et al. (2018) Itai Ashlagi, Adam Bingaman, Maximilien Burq, Vahideh Manshadi, David Gamarnik, Cathi Murphey, Alvin E. Roth, Marc L. Melcher, and Michael A. Rees. 2018. Effect of Match-Run Frequencies on the Number of Transplants and Waiting Times in Kidney Exchange. American Journal of Transplantation 18, 5 (2018), 1177–1186. https://doi.org/10.1111/ajt.14566

- Aziz et al. (2021) Haris Aziz, Ioannis Caragiannis, Ayumi Igarashi, and Toby Walsh. 2021. Fair Allocation of Indivisible Goods and Chores. Auton Agent Multi-Agent Syst 36, 1 (Nov. 2021), 3. https://doi.org/10.1007/s10458-021-09532-8

- Biró et al. (2009) Péter Biró, David F. Manlove, and Romeo Rizzi. 2009. Maximum Weight Cycle Packing in Directed Graphs, with Application to Kidney Exchange Programs. Discrete Math. Algorithm. Appl. 01, 04 (Dec. 2009), 499–517. https://doi.org/10.1142/S1793830909000373

- Brubach et al. (2017) Brian Brubach, Karthik Abinav Sankararaman, Aravind Srinivasan, and Pan Xu. 2017. Algorithms to Approximate Column-sparse Packing Problems. ACM Transactions on Algorithms (TALG) 16 (2017), 1 – 32.

- Carvalho et al. (2021) Margarida Carvalho, Xenia Klimentova, Kristiaan Glorie, Ana Viana, and Miguel Constantino. 2021. Robust models for the kidney exchange problem. INFORMS Journal on Computing 33, 3 (2021), 861–881.

- Chekuri et al. (2010) Chandra Chekuri, Jan Vondrák, and Rico Zenklusen. 2010. Dependent randomized rounding via exchange properties of combinatorial structures. In 2010 IEEE 51st Annual Symposium on Foundations of Computer Science. IEEE, 575–584.

- Constantino et al. (2013) Miguel Constantino, Xenia Klimentova, Ana Viana, and Abdur Rais. 2013. New insights on integer-programming models for the kidney exchange problem. European Journal of Operational Research 231, 1 (2013), 57–68.

- Duppala et al. (2023) Sharmila Duppala, Juan Luque, John Dickerson, and Aravind Srinivasan. 2023. Group Fairness in Set Packing Problems. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, IJCAI-23, Edith Elkind (Ed.). International Joint Conferences on Artificial Intelligence Organization, 391–399. https://doi.org/10.24963/ijcai.2023/44 Main Track.

- Esmaeili et al. (2023) Seyed Esmaeili, Sharmila Duppala, Davidson Cheng, Vedant Nanda, Aravind Srinivasan, and John P Dickerson. 2023. Rawlsian fairness in online bipartite matching: Two-sided, group, and individual. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 37. 5624–5632.

- Esmaeili et al. (2022) Seyed A. Esmaeili, Sharmila Duppala, John P. Dickerson, and Brian Brubach. 2022. Fair Labeled Clustering. https://doi.org/10.48550/arXiv.2205.14358 arXiv:2205.14358 [cs]

- Fang et al. (2015) Wenyi Fang, Aris Filos-Ratsikas, Søren Kristoffer Stiil Frederiksen, Pingzhong Tang, and Song Zuo. 2015. Randomized assignments for barter exchanges: Fairness vs. efficiency. In Algorithmic Decision Theory: 4th International Conference, ADT 2015, Lexington, KY, USA, September 27–30, 2015, Proceedings. Springer, 537–552.

- Farnadi et al. (2021) Golnoosh Farnadi, William St-Arnaud, Behrouz Babaki, and Margarida Carvalho. 2021. Individual Fairness in Kidney Exchange Programs. Proceedings of the AAAI Conference on Artificial Intelligence 35, 13 (May 2021), 11496–11505.

- Gandhi et al. (2006) Rajiv Gandhi, Samir Khuller, Srinivasan Parthasarathy, and Aravind Srinivasan. 2006. Dependent Rounding and Its Applications to Approximation Algorithms. J. ACM 53, 3 (May 2006), 324–360. https://doi.org/10.1145/1147954.1147956

- Glorie et al. (2014) Kristiaan M Glorie, J Joris van de Klundert, and Albert PM Wagelmans. 2014. Kidney exchange with long chains: An efficient pricing algorithm for clearing barter exchanges with branch-and-price. Manufacturing & Service Operations Management 16, 4 (2014), 498–512.

- Herlihy (2018) Maurice Herlihy. 2018. Atomic cross-chain swaps. In ACM Symposium on Principles of Distributed Computing (PODC). 245–254.

- Jevons (1879) William Stanley Jevons. 1879. The Theory of Political Economy. Macmillan and Company.

- Klimentova et al. (2021) Xenia Klimentova, Ana Viana, João Pedro Pedroso, and Nicolau Santos. 2021. Fairness models for multi-agent kidney exchange programmes. Omega 102 (2021), 102333.

- McElfresh et al. (2020) Duncan McElfresh, Michael Curry, Tuomas Sandholm, and John Dickerson. 2020. Improving Policy-Constrained Kidney Exchange via Pre-Screening. In Advances in Neural Information Processing Systems, Vol. 33. 2674–2685.

- Noothigattu et al. (2020) Ritesh Noothigattu, Dominik Peters, and Ariel D Procaccia. 2020. Axioms for learning from pairwise comparisons. Advances in Neural Information Processing Systems 33 (2020), 17745–17754.

- Plaut et al. (2016) Benjamin Plaut, John Dickerson, and Tuomas Sandholm. 2016. Fast optimal clearing of capped-chain barter exchanges. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 30.

- Thyagarajan et al. (2022) Sri AravindaKrishnan Thyagarajan, Giulio Malavolta, and Pedro Moreno-Sanchez. 2022. Universal atomic swaps: Secure exchange of coins across all blockchains. In IEEE Symposium on Security and Privacy (S&P). IEEE, 1299–1316.

Appendix A Direct application of GKPS-DR fails

Example 1 is a worst case instance where a direct application of GKPS-DR to the fractional optimal solution of results in a net loss of for some agent ; i.e., agent gives away all their items and does not receive any item from its wishlist.

Example 1.

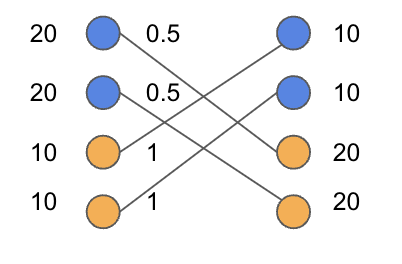

Consider an instance of with two agents where and . Let the values of the items be and .

Figure 3 shows the bipartite graph of the instance from Example 1. The edges are unweighted and the optimal LP solution is . The first two coordinates of correspond to items given by agent . GKPS-DR will round both of these coordinates to with positive probability thus resulting in agent incurring a net loss of units of value.

Observation 1.

GKPS-DR rounding results in the vector with positive probability; this is the worst case for agent where their net loss is units of value.

The above example can be easily generalized to instances with larger item-lists and more agents where some agent achieves net value loss with positive probability.

Appendix B Proof of Theorem 3

Lemma B.0.

BarterDR satisfies (J1).

Proof.

The property holds trivially for . Recall corresponds to the output of the pre-processing step. This fact is proved in (Gandhi et al., 2006). Therefore, focus on some fixed and proceed by induction. Fix and the CCC/CCW to be rounded . Proceed by considering the following two events.

Event :

does not appear in so does not change this iteration. Thus by the induction hypothesis .

Event :

appears in , say, in path for a fixed .

Recall values and from (6) are fixed and

is modified according to (7).

Assuming then

The same holds if instead . Hence

Let be the set of possible values for .

By the IH, then . ∎

Lemma B.0.

BarterDR satisfies (J2).

Proof.

The property holds trivially for . Recall corresponds to the output of the pre-processing step. This fact is proved in (Gandhi et al., 2006). Therefore, focus on some fixed and proceed by induction. Fix and the CCC/CCW to be rounded . Recall denotes the endpoints of the paths of . Proceed by cases.

Case : . Then either does not appear in or appears in but with two edges incident on it. In the former case clearly . In the latter case, the change of each incident edge is equal in magnitude and opposite in sign (since one edge belongs to and the other to ) therefore as well. Thus by the IH .

Case : . There is a single incident edge . Without loss of generality, said edge belongs to path and thus to (the proof for is identical). Then either or . In either case, by definition of and (i.e., (6)), and are small enough that . Observe and .

Having handled exhaustive cases, the proof is complete. ∎

Lemma B.0.

BarterDR satisfies (J3).

Proof.

The property holds trivially for . Recall corresponds to the output of the pre-processing step. This fact is proved in (Gandhi et al., 2006). Therefore, focus on some fixed and proceed by induction. Fix a vertex and a subset of edges incident on like in (J3). Also fix the CCC/CCW to be rounded . Proceed based on the following events.

Event : no edge in has its value modified. Then .

Event : two edges have their values modified. Said edges must both belong to , for some fixed , with one belonging to and the other to ; say and . Then

where and are fixed per (6). Let . Then

The above expectation can be written as , where

It is easy to show . Thus, for any fixed and for any fixed , and for fixed values of , the following holds:

Hence, .

Event : exactly one edge in the set has its value modified. Let denote the event that edge has its value changed in the following probabilistic way

Thus, . Letting , we get that equals

Since the equation holds for any , for any values of , and for any , we have . ∎