Bayes beats Cross Validation: Fast and Accurate Ridge Regression via Expectation Maximization

Abstract

We present a novel method for tuning the regularization hyper-parameter, $\lambda$, of a ridge regression that is faster to compute than leave-one-out cross-validation (LOOCV) while yielding estimates of the regression parameters of equal quality or, particularly in the setting of sparse covariates, superior quality to those obtained by minimising the LOOCV risk. The LOOCV risk can suffer from multiple and bad local minima for finite $n$ and thus requires the specification of a set of candidate $\lambda$, which can fail to provide good solutions. In contrast, we show that the proposed method is guaranteed to find a unique optimal solution for large enough $n$, under relatively mild conditions, without requiring the specification of any difficult-to-determine hyper-parameters. This is based on a Bayesian formulation of ridge regression that we prove to have a unimodal posterior for large enough $n$, allowing both the optimal $\lambda$ and the regression coefficients to be jointly learned within an iterative expectation maximization (EM) procedure. Importantly, we show that by utilizing an appropriate preprocessing step, a single iteration of the main EM loop can be implemented in $O(\min(n, p))$ operations, for input data with $n$ rows and $p$ columns. In contrast, evaluating a single value of $\lambda$ using fast LOOCV costs $O(n \min(n, p))$ operations when using the same preprocessing. This advantage amounts to an asymptotic improvement of a factor of $l$ for $l$ candidate values for $\lambda$ (in the regime $q, p \in O(\sqrt{n})$, where $q$ is the number of regression targets).

1 Introduction

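Ridge regression [25] is one of the most widely used statistical learning algorithms. Given training data $\mathbf{X} \in \mathbb{R}^{n \times p}$ and $\mathbf{y} \in \mathbb{R}^{n}$, ridge regression finds the linear regression coefficients $\hat{\boldsymbol{\beta}}_\lambda$ that minimize the $\ell_2$-regularized sum of squared errors, i.e.,

$$\hat{\boldsymbol{\beta}}_\lambda = \operatorname*{arg\,min}_{\boldsymbol{\beta} \in \mathbb{R}^{p}} \left\{ \|\mathbf{y} - \mathbf{X}\boldsymbol{\beta}\|^2 + \lambda \|\boldsymbol{\beta}\|^2 \right\}. \tag{1}$$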

In practice, using ridge regression additionally involves estimating the value for the tuning parameter $\lambda$ that minimizes the expected squared error $\mathbb{E}\big[(\mathbf{x}^\top\hat{\boldsymbol{\beta}}_\lambda - y)^2\big]$ for new data $\mathbf{x}$ and $y$ sampled from the same distribution as the training data. This problem is usually approached via the leave-one-out cross-validation (LOOCV) estimator, which can be computed efficiently by exploiting a closed-form solution for the leave-one-out test errors for a given $\lambda$. The wide and long-standing use of the LOOCV approach suggests that it solves the ridge regression problem more or less optimally, both in terms of its statistical performance and its computational complexity.

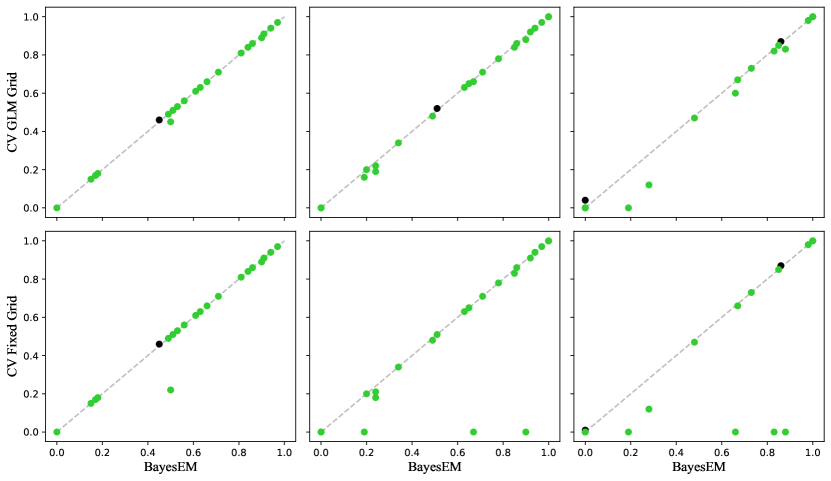

However, in this work, we show that LOOCV is outperformed by a simple expectation maximization (EM) approach based on a Bayesian formulation of ridge regression. While the two procedures are not equivalent, in the sense that they generally do not produce identical parameter values, the EM estimates tend to be of equal quality to or, particularly in sparse regimes, superior quality to the LOOCV estimates (see Figure 1). Specifically, the LOOCV risk estimates can suffer from multiple and bad local minima when using iterative optimization, or from misspecified candidates when performing grid search. In contrast, we show that the EM algorithm finds a unique optimal solution for large enough $n$ (outside pathological cases) without requiring any hard-to-specify hyper-parameters. This is a consequence of a more general bound on $n$ (Thm. 3.1) that we establish to guarantee the unimodality of the posterior distribution of Bayesian ridge regression, a result with potentially wider applications. In addition, the EM procedure is asymptotically faster than the LOOCV procedure by a factor of $l$, where $l$ is the number of candidate values for $\lambda$ to be evaluated (in the regime $p, q \in O(\sqrt{n})$, where $p$, $q$, and $n$ are the number of covariates, target variables, and data points, respectively). In practice, even in the usual case of and , the EM algorithm tends to outperform LOOCV computationally by an order of magnitude, as we demonstrate on a test suite of datasets from the UCI machine learning repository and the UCR time series classification archive.

While the EM procedure discussed in this paper is based on a recently published procedure for learning sparse linear regression models [44], the adaptation of this procedure to ridge regression has not been previously discussed in the literature. Furthermore, a direct adaptation would lead to a main loop complexity of $O(p^3)$ that is uncompetitive with LOOCV. Therefore, in addition to evaluating the empirical accuracy and efficiency of the EM algorithm for ridge regression, the main technical contributions of this work are to show how certain key quantities can be efficiently computed from either a singular value decomposition of the design matrix $\mathbf{X}$, when $n \geq p$, or an eigenvalue decomposition of the Gram matrix $\mathbf{X}\mathbf{X}^\top$, when $n < p$. This results in an E-step of the algorithm in time $O(r)$, where $r = \min(n, p)$, and an M-step found in closed form and solved in time $O(1)$, yielding an ultra-fast main loop for the EM algorithm.

These computational advantages result in an algorithm that is computationally superior to efficient, optimized implementations of the fast LOOCV algorithm. By utilizing a preprocessing step similar to that of the EM approach, our own implementation of LOOCV actually outperforms the implementation in scikit-learn by approximately a factor of two. This enables an $O(nr)$ evaluation for a single $\lambda$ (which is still slower than the evaluation for our new EM algorithm; see Table 1 for an overview of the asymptotic complexities of LOOCV and EM) and may be of interest to readers by itself. Our implementation of both algorithms, along with all experiment code, is publicly available in the standard package ecosystems of the R and Python platforms, as well as on GitHub (https://github.com/marioboley/fastridge.git).

In the remainder of this paper, we first briefly survey the literature of ridge regression with an emphasis on the use of cross validation (Sec. 2). Based on the Bayesian interpretation of ridge regression, we then introduce the EM algorithm and discuss its convergence (Sec. 3). Finally, we develop fast implementations of both the EM algorithm and LOOCV (Sec. 4) and compare them empirically (Sec. 5).

| Method | main loop | pre-processing | Overall () |
|---|---|---|---|
| Naive adaption of EM | | | |
| Proposed BayesEM | | | |
| Fast LOOCV | | | |

2 Ridge Regression and Cross Validation

Ridge regression [25] (also known as $\ell_2$-regularization) is a popular method for estimation and prediction in linear models. The ridge regression estimates are the solutions to the penalized least-squares problem given in (1). The solution to this optimization problem is given by:

$$\hat{\boldsymbol{\beta}}_\lambda = \left(\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I}_p\right)^{-1}\mathbf{X}^\top\mathbf{y}. \tag{2}$$

In the limit $\lambda \to 0$, the ridge estimates coincide with the minimum norm least squares solution [31, 22], which simplifies to the usual least squares estimator in cases where the design matrix $\mathbf{X}$ has full column rank (i.e., $\mathbf{X}^\top\mathbf{X}$ is invertible). Conversely, as $\lambda \to \infty$, the amount of shrinkage induced by the penalty increases, with the resulting ridge estimates becoming smaller for larger values of $\lambda$. Under fairly general assumptions [26], including misspecified models and random covariates of growing dimension, the ridge estimator is consistent and enjoys finite sample risk bounds for all fixed $\lambda$, i.e., it converges almost surely to the prediction risk minimizer, and its squared deviation from this minimizer is bounded for finite $n$ with high probability. However, its performance can still vary greatly with the choice of $\lambda$; hence, there is a need to estimate the optimal value from the given training data.

Earlier approaches to this problem [e.g. 28, 14, 30, 29, 2, 34, 19, 48, 23, 7] rely on an explicit estimate of the (assumed homoskedastic) noise variance, following the original idea of Hoerl and Kennard [25]. However, estimating the noise variance can be problematic, especially when $p$ is not much smaller than $n$ [18, 20, 24, 46]. More recent approaches adopt model selection criteria to select the optimal $\lambda$ without requiring prior knowledge or estimation of the noise variance. These methods involve minimizing a selection criterion of choice, such as the Akaike information criterion (AIC) [1], the Bayesian information criterion (BIC) [40], Mallows' $C_p$ criterion [33], and, most commonly, cross validation (CV) [4, 49].

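A particularly attractive variant of CV is leave-one-out cross validation (LOOCV), also referred to as the prediction error sum of squares (PRESS) statistic in the statistics literature [3]:

$$\mathrm{LOOCV}(\lambda) = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \mathbf{x}_i^\top\hat{\boldsymbol{\beta}}_{\lambda}^{(-i)}\right)^2, \tag{3}$$

where $\mathbf{x}_i^\top$ denotes the $i$-th row of $\mathbf{X}$, and $\hat{\boldsymbol{\beta}}_{\lambda}^{(-i)}$ denotes the solution to (2) when the $i$-th data point is omitted. LOOCV offers several advantages over alternatives such as 10-fold CV: it is deterministic, nearly unbiased [47], and there exists an efficient "shortcut" formula for the LOOCV ridge estimate [36]:

$$\mathrm{LOOCV}(\lambda) = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{e_i}{1 - h_{ii}}\right)^2, \tag{4}$$

where $\mathbf{H}_\lambda = \mathbf{X}(\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I}_p)^{-1}\mathbf{X}^\top$ is the regularized “hat”, or projection, matrix with diagonal entries $h_{ii}$, and $\mathbf{e} = \mathbf{y} - \mathbf{X}\hat{\boldsymbol{\beta}}_\lambda$ are the residuals of the ridge fit using all data points. As it only requires the diagonal entries of the hat matrix, Eq. (4) allows for the computation of the PRESS statistic with the same time complexity as a single ridge regression fit.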
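As a quick numerical illustration of (3) and (4), the following Python/NumPy sketch (ours, not taken from the authors' package) compares brute-force leave-one-out refitting with the shortcut formula. It forms $\mathbf{H}_\lambda$ explicitly for readability, which costs $O(n^2 p)$ and is not the efficient variant; the helper names `ridge`, `loocv_brute_force` and `loocv_shortcut` are illustrative.

```python
import numpy as np

def ridge(X, y, lam):
    """Closed-form ridge solution (2): (X^T X + lam I)^{-1} X^T y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def loocv_brute_force(X, y, lam):
    """LOOCV risk (3): refit n times, each time leaving one row out."""
    n = X.shape[0]
    errs = np.empty(n)
    for i in range(n):
        mask = np.arange(n) != i
        beta = ridge(X[mask], y[mask], lam)
        errs[i] = y[i] - X[i] @ beta
    return np.mean(errs ** 2)

def loocv_shortcut(X, y, lam):
    """LOOCV risk via the shortcut formula (4), using a single full fit."""
    p = X.shape[1]
    H = X @ np.linalg.solve(X.T @ X + lam * np.eye(p), X.T)  # hat matrix H_lambda
    e = y - H @ y                                           # residuals of the full fit
    return np.mean((e / (1.0 - np.diag(H))) ** 2)

rng = np.random.default_rng(0)
X = rng.standard_normal((30, 5))
y = X @ rng.standard_normal(5) + rng.standard_normal(30)
for lam in (0.1, 1.0, 10.0):
    print(lam, loocv_brute_force(X, y, lam), loocv_shortcut(X, y, lam))
```

The two columns printed for each $\lambda$ should agree up to floating-point error, since (4) is an exact identity for ridge regression without intercept.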

Moreover, unless , the LOOCV ridge regression risk as a function of $\lambda$ converges uniformly (almost surely) to the true risk function on and therefore optimizing it consistently estimates the optimal $\lambda$ [36, 22]. However, for finite $n$, the LOOCV risk can be multimodal and, even worse, there can exist local minima that are almost as bad as the worst [42]. Therefore, iterative algorithms like gradient descent cannot be reliably used for the optimization, giving theoretical justification for the predominant approach of optimizing over a finite grid of candidates $\Lambda = \{\lambda_1, \dots, \lambda_l\}$. Unfortunately, despite the true risk function being smooth and unimodal, a naïvely chosen finite grid cannot be guaranteed to contain any candidate with a risk value close to the optimum. While this might not pose a problem for small $n$, when the error in estimating the true risk via LOOCV is likely large, it can potentially become a dominating source of error for growing $n$ and $p$. Therefore, letting $l$ grow moderately with the sample size appears necessary, turning it into a relevant factor in the asymptotic time complexity.

As a further disadvantage, LOOCV (or CV in general) is sensitive to sparse covariates, as illustrated in Figure 1, where the performance of LOOCV, relative to the proposed EM algorithm, degrades as the noise variance grows. In the sparse covariate setting, a situation common in genomics, the information about each coefficient is concentrated in only a few observations. As LOOCV drops an observation to estimate future prediction error, the variance of the CV score can be very large when the predictor matrix is very sparse, as the estimates depend on only a small number of the remaining observations. In the most extreme case, known as the multiple means problem [27], $\mathbf{X} = \mathbf{I}_n$, and all the information about each coefficient is concentrated in a single observation. In this setting, $h_{ii} = 1/(1 + \lambda)$ and $e_i = \lambda y_i/(1 + \lambda)$, so every leave-one-out error equals $y_i$ and the LOOCV score reduces to $\frac{1}{n}\|\mathbf{y}\|^2$ for every $\lambda$, providing no information about how to select $\lambda$. In contrast, the proposed EM approach explicitly ties together the coefficients via the probabilistic Bayesian interpretation of $\lambda$ as the inverse-variance of the unknown coefficient vector. This “borrowing of strength” means that the procedure provides a sensible estimate of $\lambda$ even in the case of multiple means (see Appendix A).
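The multiple means degeneracy is easy to reproduce. In this small, hypothetical NumPy sketch with $\mathbf{X} = \mathbf{I}_n$, the shortcut LOOCV score is evaluated at very different values of $\lambda$ and, in each case, equals the mean of the squared targets:

```python
import numpy as np

def loocv_shortcut(X, y, lam):
    """LOOCV risk via the shortcut formula (4) with an explicit hat matrix."""
    p = X.shape[1]
    H = X @ np.linalg.solve(X.T @ X + lam * np.eye(p), X.T)
    e = y - H @ y
    return np.mean((e / (1.0 - np.diag(H))) ** 2)

rng = np.random.default_rng(1)
n = 8
X = np.eye(n)                        # multiple means design: one observation per coefficient
y = rng.standard_normal(n)
scores = [loocv_shortcut(X, y, lam) for lam in (0.01, 1.0, 100.0)]
print(scores, np.mean(y ** 2))       # the score does not depend on lambda
```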

3 Bayesian Ridge Regression

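The ridge estimator (2) has a well-known Bayesian interpretation; specifically, if we assume that the coefficients are a priori normally distributed with mean zero and common variance $\tau^2\sigma^2$, we obtain a Bayesian version of the usual ridge regression procedure, i.e.,

$$\begin{aligned}
\mathbf{y} \mid \mathbf{X}, \boldsymbol{\beta}, \sigma^2 &\sim N_n\big(\mathbf{X}\boldsymbol{\beta}, \sigma^2\mathbf{I}_n\big), \\
\boldsymbol{\beta} \mid \tau^2, \sigma^2 &\sim N_p\big(\mathbf{0}, \tau^2\sigma^2\mathbf{I}_p\big), \\
\tau^2 &\sim \pi(\tau^2)\,d\tau^2, \\
\sigma^2 &\sim \sigma^{-2}\,d\sigma^2,
\end{aligned} \tag{5}$$

where $\pi(\cdot)$ is an appropriate prior distribution assigned to the variance hyperparameter $\tau^2$. For a given $\tau^2$ and $\sigma^2$, the conditional posterior distribution of $\boldsymbol{\beta}$ is also normal [32]:

$$\begin{aligned}
\boldsymbol{\beta} \mid \tau^2, \sigma^2, \mathbf{y} &\sim N_p\big(\hat{\boldsymbol{\beta}}_\tau,\ \sigma^2\mathbf{A}_\tau^{-1}\big), \\
\hat{\boldsymbol{\beta}}_\tau &= \mathbf{A}_\tau^{-1}\mathbf{X}^\top\mathbf{y}, \qquad \mathbf{A}_\tau = \mathbf{X}^\top\mathbf{X} + \tau^{-2}\mathbf{I}_p,
\end{aligned} \tag{6}$$

where the posterior mode (and mean) $\hat{\boldsymbol{\beta}}_\tau$ is equivalent to the ridge estimate with penalty $\lambda = 1/\tau^2$ (we rely on the variable name in the subscript to indicate whether it refers to (6) or (2)).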

Shrinkage Prior

To estimate the hyperparameter $\lambda = 1/\tau^2$ in the Bayesian framework, we first must choose a prior distribution for the hypervariance $\tau^2$. We assume that no strong prior knowledge on the degree of shrinkage of the regression coefficients is available, and instead assign the recommended default beta-prime prior distribution for $\tau^2$ [15, 38] with probability density function:

$$\pi(\tau^2) = \frac{(\tau^2)^{a-1}(1 + \tau^2)^{-a-b}}{B(a, b)}, \qquad a, b > 0, \tag{7}$$

where $B(a, b)$ is the beta function. Specifically, we choose $a = b = 1/2$, which corresponds to a standard half-Cauchy prior on $\tau$. The half-Cauchy is a heavy-tailed, weakly informative prior that is frequently recommended as a default choice for scale-type hyperparameters such as $\tau$ [38]. Further, this estimator is very insensitive to the choice of $a$ or $b$. As demonstrated by Theorem 6.1 in [6], the marginal prior density over , has polynomial tails in for all , and has Cauchy or heavier tails for . This type of polynomial-tailed prior distribution over the norm of the coefficients is insensitive to the overall scale of the coefficients, which is likely unknown a priori. This robustness is in contrast to other standard choices of prior distributions for $\tau^2$, such as the inverse-gamma distribution [e.g., 41, 35], which are highly sensitive to the choice of hyperparameters [38].
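The correspondence between $a = b = 1/2$ and a standard half-Cauchy prior on $\tau$ follows from the change of variables $t = \tau^2$. The sketch below (standard-library Python; the helper names are illustrative) evaluates the beta-prime density and the density of $\tau^2$ implied by a half-Cauchy $\tau$ at a few points:

```python
import math

def beta_prime_pdf(t, a, b):
    """Beta-prime density (7) evaluated at t = tau^2 > 0."""
    B = math.gamma(a) * math.gamma(b) / math.gamma(a + b)  # beta function B(a, b)
    return t ** (a - 1) * (1 + t) ** (-a - b) / B

def half_cauchy_pdf(tau):
    """Standard half-Cauchy density for tau > 0."""
    return 2.0 / (math.pi * (1 + tau ** 2))

# If tau ~ C+(0, 1), then t = tau^2 has density f_tau(sqrt(t)) / (2 sqrt(t)).
for t in (0.01, 0.5, 1.0, 7.0, 250.0):
    implied = half_cauchy_pdf(math.sqrt(t)) / (2 * math.sqrt(t))
    print(t, implied, beta_prime_pdf(t, 0.5, 0.5))
```

Both expressions reduce to $1/(\pi\sqrt{t}\,(1 + t))$, since $B(1/2, 1/2) = \pi$.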

Unimodality and Consistency

The asymptotic properties of the posterior distributions in Gaussian linear models (5) have been extensively researched [45, 16, 17, 8]. These studies reveal that in linear models, the posterior distribution of $\boldsymbol{\beta}$ is consistent and converges asymptotically to a normal distribution centered on the true parameter value. When $p$ is fixed, this assertion can be established through the Bernstein-von Mises theorem [45, Sec. 10.2]. Our specific problem (5) satisfies the conditions for this theorem to hold: 1) both the Gaussian-linear model and the marginal distribution are identifiable; 2) they have well-defined Fisher information matrices; and 3) the priors over and are absolutely continuous. Further, these asymptotic properties remain valid when the number of predictors $p$ is allowed to grow with the sample size $n$ at a sufficiently slower rate [16, 8].

The following theorem (see the proof in Appendix B) provides a simple bound on the number of samples required to guarantee that the posterior distribution for the Bayesian ridge regression hierarchy given by (5) has only one mode outside a small neighborhood around zero.

Theorem 3.1.

Let , and let be the smallest eigenvalue of . If and then the joint posterior has a unique mode with . In particular, if with and then there is a unique mode with if .

In other words, all sub-optimal non-zero posterior modes vanish for large enough $n$ if the smallest eigenvalue of $\mathbf{X}^\top\mathbf{X}$ grows at least proportionally to some positive power of $n$. This is a very mild assumption that is typically satisfied in fixed as well as random design settings, e.g., with high probability when the smallest marginal covariate variance is bounded away from zero.

Expectation Maximization

Given the restricted unimodality of the joint posterior (5) for large enough $n$, in conjunction with its asymptotic concentration around the optimal , estimating the model parameters via an EM algorithm appears attractive, as the EM iterates are guaranteed to converge to an exact posterior mode. In particular, in the non-degenerate case that , there exist , such that for large enough, but finite $n$, the posterior concentrates around , and thus is identified by EM if initialized with a large enough $\tau^2$.

Specifically, we use the novel approach of [44], in which the coefficients $\boldsymbol{\beta}$ are treated as “missing data”, and $\tau^2$ and $\sigma^2$ as parameters to be estimated. Given the hierarchy (5), the resulting Bayesian EM algorithm then solves for the posterior mode estimates of $\tau^2$ and $\sigma^2$ by repeatedly iterating through the following two steps until convergence:

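E-step. Find the parameters of the Q-function, i.e., the expected complete negative log-posterior (with respect to $\boldsymbol{\beta}$), conditional on the current estimates $\hat{\tau}^2_t$ and $\hat{\sigma}^2_t$ and the observed data $\mathbf{y}$:

$$Q(\tau^2, \sigma^2) = \frac{n + p + 2}{2}\log\sigma^2 + \frac{\mathrm{ESS}}{2\sigma^2} + \frac{\mathrm{ESN}}{2\sigma^2\tau^2} + \frac{p + 1}{2}\log\tau^2 + \log(1 + \tau^2) + \text{const}, \tag{8}$$

where the quantities to be computed are the (conditionally) expected sum of squared errors $\mathrm{ESS} = \mathbb{E}\big[\|\mathbf{y} - \mathbf{X}\boldsymbol{\beta}\|^2\big]$ and the expected squared norm $\mathrm{ESN} = \mathbb{E}\big[\|\boldsymbol{\beta}\|^2\big]$, with both expectations taken with respect to (6) at the current estimates. Denoting by $\mathrm{tr}(\cdot)$ the trace operator, one can show (see Appendix C) that these quantities can be computed as

$$\begin{aligned}
\mathrm{ESS} &= \|\mathbf{y} - \mathbf{X}\hat{\boldsymbol{\beta}}_\tau\|^2 + \sigma^2\,\mathrm{tr}\big(\mathbf{X}^\top\mathbf{X}\mathbf{A}_\tau^{-1}\big), \\
\mathrm{ESN} &= \|\hat{\boldsymbol{\beta}}_\tau\|^2 + \sigma^2\,\mathrm{tr}\big(\mathbf{A}_\tau^{-1}\big).
\end{aligned} \tag{9}$$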

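M-step. Update the parameter estimates by minimizing the Q-function with respect to the shrinkage hyperparameter $\tau^2$ and the noise variance $\sigma^2$, i.e.,

$$\big(\hat{\tau}^2_{t+1}, \hat{\sigma}^2_{t+1}\big) = \operatorname*{arg\,min}_{\tau^2 > 0,\ \sigma^2 > 0} Q(\tau^2, \sigma^2). \tag{10}$$

Instead of numerically optimizing the two-dimensional Q-function (10), we can derive closed-form solutions for both parameters by first finding $\hat{\sigma}^2(\tau^2)$, i.e., the update for $\sigma^2$ as a function of $\tau^2$, and then substituting this into the Q-function. This yields a Q-function that is no longer dependent on $\sigma^2$, and solving for $\tau^2$ is straightforward. The resulting parameter updates in the M-step are given by:

$$\hat{\tau}^2 = \frac{-\omega + \sqrt{\omega^2 + 4(p + 3)(n + 1)\,\mathrm{ESS}\cdot\mathrm{ESN}}}{2(p + 3)\,\mathrm{ESS}}, \qquad \hat{\sigma}^2 = \frac{\mathrm{ESS} + \mathrm{ESN}/\hat{\tau}^2}{n + p + 2}, \tag{11}$$

where $\omega = (p + 1)\,\mathrm{ESS} - (n - 1)\,\mathrm{ESN}$. The derivations of these formulae are presented in Appendix D.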
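The closed-form M-step can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: it assumes the hierarchy with $\boldsymbol{\beta} \mid \tau^2, \sigma^2 \sim N_p(\mathbf{0}, \tau^2\sigma^2\mathbf{I}_p)$, the scale-invariant prior $\sigma^2 \sim \sigma^{-2}d\sigma^2$ and $a = b = 1/2$ in (7). Under these assumptions, profiling out $\sigma^2$ leaves a quadratic equation in $\tau^2$ with exactly one positive root. The check at the end compares the closed form against brute-force minimization of the same Q-function on a grid; the values of `n`, `p`, `ess` and `esn` are arbitrary.

```python
import numpy as np

def q_function(tau2, sigma2, ess, esn, n, p):
    """Negative expected log-posterior up to a constant (assumes a = b = 1/2, sigma^2 ~ 1/sigma^2)."""
    return ((n + p + 2) / 2 * np.log(sigma2) + ess / (2 * sigma2)
            + esn / (2 * sigma2 * tau2) + (p + 1) / 2 * np.log(tau2) + np.log1p(tau2))

def m_step(ess, esn, n, p):
    """Positive root of (p+3) ESS t^2 + omega t - (n+1) ESN = 0, then the profiled sigma^2."""
    omega = (p + 1) * ess - (n - 1) * esn
    tau2 = (-omega + np.sqrt(omega ** 2 + 4 * (p + 3) * (n + 1) * ess * esn)) / (2 * (p + 3) * ess)
    sigma2 = (ess + esn / tau2) / (n + p + 2)
    return tau2, sigma2

# Sanity check against brute-force minimisation over a log-spaced grid.
n, p, ess, esn = 50, 10, 42.0, 3.5
tau2, sigma2 = m_step(ess, esn, n, p)
grid = np.logspace(-4, 4, 1601)
T, S = np.meshgrid(grid, grid, indexing="ij")
Q = q_function(T, S, ess, esn, n, p)
i, j = np.unravel_index(np.argmin(Q), Q.shape)
print(tau2, sigma2, grid[i], grid[j])
```

Because the closed form is the exact minimizer, its Q-value should never exceed the best grid value.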

From (11), we see that updating the parameter estimates in the M-step requires only constant time. Therefore, the overall efficiency of the EM algorithm is determined by the computational complexity of the E-step. Computing the parameters of the Q-function directly via (9) requires inverting $\mathbf{A}_\tau$, resulting in $O(p^3)$ operations per iteration. In the next section, we show how to substantially improve this approach via singular value decomposition.
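For reference, the naive E-step that evaluates (9) by explicitly inverting $\mathbf{A}_\tau = \mathbf{X}^\top\mathbf{X} + \tau^{-2}\mathbf{I}_p$ can be sketched as below, together with a Monte Carlo check that draws $\boldsymbol{\beta}$ from the conditional posterior $N_p(\hat{\boldsymbol{\beta}}_\tau, \sigma^2\mathbf{A}_\tau^{-1})$. This is the $O(p^3)$-per-iteration baseline; the function name `naive_e_step` and all numerical settings are illustrative.

```python
import numpy as np

def naive_e_step(X, y, tau2, sigma2):
    """ESS and ESN via (9) using an explicit inverse of A_tau: O(p^3) per EM iteration."""
    p = X.shape[1]
    A_inv = np.linalg.inv(X.T @ X + np.eye(p) / tau2)   # A_tau^{-1}
    beta_hat = A_inv @ X.T @ y                          # posterior mean
    ess = np.sum((y - X @ beta_hat) ** 2) + sigma2 * np.trace(X.T @ X @ A_inv)
    esn = beta_hat @ beta_hat + sigma2 * np.trace(A_inv)
    return ess, esn, beta_hat, A_inv

# Monte Carlo check: average over draws from the conditional posterior of beta.
rng = np.random.default_rng(2)
n, p = 40, 6
X = rng.standard_normal((n, p))
y = X @ rng.standard_normal(p) + rng.standard_normal(n)
tau2, sigma2 = 0.8, 1.3
ess, esn, beta_hat, A_inv = naive_e_step(X, y, tau2, sigma2)
draws = rng.multivariate_normal(beta_hat, sigma2 * A_inv, size=100_000)
mc_esn = np.mean(np.sum(draws ** 2, axis=1))
mc_ess = np.mean(np.sum((y[None, :] - draws @ X.T) ** 2, axis=1))
print(ess, mc_ess, esn, mc_esn)
```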

4 Fast Implementations via Singular Value Decomposition

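To obtain efficient implementations of the E-step of the EM algorithm as well as of the LOOCV shortcut formula, one can exploit the fact that the ridge solution is preserved under orthogonal transformations. Specifically, let $m = \max(n, p)$ and $r = \min(n, p)$, and let $\mathbf{X} = \mathbf{U}\mathbf{D}\mathbf{V}^\top$ be a compact singular value decomposition (SVD) of $\mathbf{X}$. That is, $\mathbf{U} \in \mathbb{R}^{n \times r}$ and $\mathbf{V} \in \mathbb{R}^{p \times r}$ are semi-orthonormal column matrices, i.e., $\mathbf{U}^\top\mathbf{U} = \mathbf{V}^\top\mathbf{V} = \mathbf{I}_r$, and $\mathbf{D} = \mathrm{diag}(d_1, \dots, d_r)$ is a diagonal matrix that contains the non-zero singular values of $\mathbf{X}$. With this decomposition, and an additional $O(nr)$ pre-processing step to compute $\mathbf{c} = \mathbf{U}^\top\mathbf{y}$, we can compute the ridge solution for a given $\lambda$ with respect to the rotated inputs $\mathbf{X}\mathbf{V}$ in time $O(r)$ via

$$\tilde{\boldsymbol{\beta}}_\lambda = \mathbf{V}^\top\hat{\boldsymbol{\beta}}_\lambda = \big(\mathbf{D}^2 + \lambda\mathbf{I}_r\big)^{-1}\mathbf{D}\,\mathbf{c} = \mathbf{s}_\lambda \odot \mathbf{c}, \qquad \mathbf{s}_\lambda = \left(\frac{d_1}{d_1^2 + \lambda}, \dots, \frac{d_r}{d_r^2 + \lambda}\right)^{\!\top}, \tag{12}$$

where $\mathbf{a} \odot \mathbf{b}$ denotes the element-wise Hadamard product of vectors $\mathbf{a}$ and $\mathbf{b}$. The compact SVD itself can be obtained in time $O(mr^2)$ via an eigendecomposition of either $\mathbf{X}^\top\mathbf{X}$ in case $n \geq p$ or $\mathbf{X}\mathbf{X}^\top$ in case $n < p$, followed by the computation of the missing $\mathbf{U}$ or $\mathbf{V}$.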
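A minimal NumPy sketch of this pre-processing, assuming $\mathbf{X}$ has full rank $r = \min(n, p)$ so that all $d_j > 0$: the compact SVD is recovered from an eigendecomposition of the smaller Gram matrix, after which each candidate $\lambda$ costs $O(r)$ through $\tilde{\beta}_j = d_j c_j / (d_j^2 + \lambda)$ with $\mathbf{c} = \mathbf{U}^\top\mathbf{y}$. The helper names `compact_svd` and `rotated_ridge` are hypothetical, and a production implementation would also need to handle (near-)zero singular values.

```python
import numpy as np

def compact_svd(X):
    """Compact SVD X = U diag(d) V^T via the eigendecomposition of the smaller Gram matrix.

    Assumes X has full rank r = min(n, p), so all singular values d_j are positive.
    """
    n, p = X.shape
    if n >= p:
        evals, V = np.linalg.eigh(X.T @ X)      # p x p Gram matrix, O(n p^2)
        d = np.sqrt(np.clip(evals, 0.0, None))
        U = (X @ V) / d                         # recover U = X V D^{-1}
    else:
        evals, U = np.linalg.eigh(X @ X.T)      # n x n Gram matrix, O(n^2 p)
        d = np.sqrt(np.clip(evals, 0.0, None))
        V = (X.T @ U) / d                       # recover V = X^T U D^{-1}
    return U, d, V

def rotated_ridge(d, c, lam):
    """Rotated ridge solution V^T beta_hat: O(r) per candidate lambda."""
    return d / (d ** 2 + lam) * c

rng = np.random.default_rng(3)
for n, p in [(50, 8), (8, 50)]:
    X = rng.standard_normal((n, p))
    y = rng.standard_normal(n)
    U, d, V = compact_svd(X)
    c = U.T @ y                                 # O(n r) pre-processing
    lam = 2.5
    beta = V @ rotated_ridge(d, c, lam)         # O(p r) back-transformation
    direct = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)
    print(n, p, np.max(np.abs(beta - direct)))
```

Only the back-transformation touches $p$; all per-$\lambda$ work is on length-$r$ vectors.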

In summary, after an $O(mr^2)$ pre-processing step, we can obtain rotated ridge solutions for an individual candidate $\lambda$ in time $O(r)$. Moreover, for the optimal $\lambda$, we can find the ridge solution with respect to the original input matrix via an $O(pr)$ post-processing step $\hat{\boldsymbol{\beta}}_\lambda = \mathbf{V}\tilde{\boldsymbol{\beta}}_\lambda$. Below we show how the key statistics that have to be computed per candidate $\lambda$ (and $\tau^2$) can be computed efficiently based on $\tilde{\boldsymbol{\beta}}_\lambda$, the pre-computed $\mathbf{c}$, and the SVD. For the EM algorithm, these are the posterior expected squared norm and sum of squared errors, and for the LOOCV algorithm, this is the PRESS statistic. While the main focus of this work is the EM algorithm, the fast computation of the PRESS shortcut formula appears not to be widely known (e.g., the current implementations in both scikit-learn and glmnet do not use it) and may therefore be of independent interest.

ESN

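For the posterior expected squared norm $\mathrm{ESN} = \|\hat{\boldsymbol{\beta}}_\tau\|^2 + \sigma^2\,\mathrm{tr}(\mathbf{A}_\tau^{-1})$, we first observe that $\|\hat{\boldsymbol{\beta}}_\tau\|^2 = \|\mathbf{V}\tilde{\boldsymbol{\beta}}_\tau\|^2 = \|\tilde{\boldsymbol{\beta}}_\tau\|^2$, and then note that the trace can be computed as

$$\mathrm{tr}\big(\mathbf{A}_\tau^{-1}\big) = \mathrm{tr}\big(\mathbf{W}(\boldsymbol{\Gamma} + \tau^{-2}\mathbf{I}_p)^{-1}\mathbf{W}^\top\big) = \mathrm{tr}\big((\boldsymbol{\Gamma} + \tau^{-2}\mathbf{I}_p)^{-1}\big) = \sum_{j=1}^{r}\frac{1}{d_j^2 + \tau^{-2}} + (p - r)\,\tau^2, \tag{13}$$

where in the first equation we denote by $\mathbf{W}$ and $\boldsymbol{\Gamma}$ the full matrices of eigenvectors and eigenvalues of $\mathbf{X}^\top\mathbf{X}$ (including potential zeros), and in the second equation we use the cyclic property of the trace. Thus, all quantities required for $\mathrm{ESN}$ can be computed in time $O(r)$ given the SVD and $\mathbf{c}$.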
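A sketch of the $O(r)$ ESN computation, checked against the direct formula with an explicit inverse of $\mathbf{A}_\tau$. For brevity it obtains the compact SVD from `numpy.linalg.svd` rather than via the eigendecomposition route, and it uses $p > n$ so that the $p - r$ zero eigenvalues of $\mathbf{X}^\top\mathbf{X}$ (each adding $\tau^2$ to the trace) are exercised. The function name `esn_svd` is illustrative.

```python
import numpy as np

def esn_svd(d, c, p, tau2, sigma2):
    """ESN = ||beta_tilde||^2 + sigma^2 tr(A_tau^{-1}) in O(r), from singular values d and c = U^T y."""
    lam = 1.0 / tau2
    beta_t = d / (d ** 2 + lam) * c                        # rotated ridge solution
    r = d.size
    trace = np.sum(1.0 / (d ** 2 + lam)) + (p - r) * tau2  # zero eigenvalues contribute tau^2 each
    return beta_t @ beta_t + sigma2 * trace

rng = np.random.default_rng(4)
n, p = 12, 30                                              # p > n, so r = n
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)
U, d, Vt = np.linalg.svd(X, full_matrices=False)           # compact SVD
c = U.T @ y
tau2, sigma2 = 0.7, 1.1
A_inv = np.linalg.inv(X.T @ X + np.eye(p) / tau2)
beta_hat = A_inv @ X.T @ y
direct = beta_hat @ beta_hat + sigma2 * np.trace(A_inv)
print(esn_svd(d, c, p, tau2, sigma2), direct)
```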

ESS

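For the posterior expected sum of squared errors $\mathrm{ESS} = \|\mathbf{y} - \mathbf{X}\hat{\boldsymbol{\beta}}_\tau\|^2 + \sigma^2\,\mathrm{tr}(\mathbf{X}^\top\mathbf{X}\mathbf{A}_\tau^{-1})$, we can compute the residual sum of squares term via

$$\|\mathbf{y} - \mathbf{X}\hat{\boldsymbol{\beta}}_\tau\|^2 = \|\mathbf{y} - \mathbf{U}\mathbf{D}\tilde{\boldsymbol{\beta}}_\tau\|^2 = \|\mathbf{y}\|^2 - 2\,\mathbf{c}^\top\mathbf{D}\tilde{\boldsymbol{\beta}}_\tau + \|\mathbf{D}\tilde{\boldsymbol{\beta}}_\tau\|^2, \tag{14}$$

where we use $\mathbf{X} = \mathbf{U}\mathbf{D}\mathbf{V}^\top$ and $\hat{\boldsymbol{\beta}}_\tau = \mathbf{V}\tilde{\boldsymbol{\beta}}_\tau$ in the first equation and the orthonormality of $\mathbf{U}$ and the definition of $\mathbf{c}$ in the second. Finally, for the trace term, we find that

$$\mathrm{tr}\big(\mathbf{X}^\top\mathbf{X}\mathbf{A}_\tau^{-1}\big) = \mathrm{tr}\big(\mathbf{V}\mathbf{D}^2(\mathbf{D}^2 + \tau^{-2}\mathbf{I}_r)^{-1}\mathbf{V}^\top\big) = \sum_{j=1}^{r}\frac{d_j^2}{d_j^2 + \tau^{-2}}. \tag{15}$$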
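Analogously, a sketch of the $O(r)$ ESS computation from the singular values $\mathbf{d}$, the projection $\mathbf{c} = \mathbf{U}^\top\mathbf{y}$ and $\|\mathbf{y}\|^2$ (the latter computed once during pre-processing), checked against the direct formula. Again `numpy.linalg.svd` stands in for the eigendecomposition route, and `ess_svd` is an illustrative name.

```python
import numpy as np

def ess_svd(d, c, y_sq_norm, tau2, sigma2):
    """ESS in O(r): residual sum of squares plus sigma^2 tr(X^T X A_tau^{-1})."""
    lam = 1.0 / tau2
    beta_t = d / (d ** 2 + lam) * c                   # rotated ridge solution
    db = d * beta_t                                   # D beta_tilde
    rss = y_sq_norm - 2.0 * c @ db + db @ db          # ||y||^2 - 2 c^T D beta_t + ||D beta_t||^2
    trace = np.sum(d ** 2 / (d ** 2 + lam))           # sum_j d_j^2 / (d_j^2 + tau^{-2})
    return rss + sigma2 * trace

rng = np.random.default_rng(5)
n, p = 25, 9
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)
U, d, Vt = np.linalg.svd(X, full_matrices=False)
c = U.T @ y
tau2, sigma2 = 2.0, 0.5
A_inv = np.linalg.inv(X.T @ X + np.eye(p) / tau2)
beta_hat = A_inv @ X.T @ y
direct = np.sum((y - X @ beta_hat) ** 2) + sigma2 * np.trace(X.T @ X @ A_inv)
print(ess_svd(d, c, y @ y, tau2, sigma2), direct)
```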

PRESS

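The shortcut formula of the PRESS statistic (4) for a candidate $\lambda$ requires the computation of the diagonal elements of the hat matrix $\mathbf{H}_\lambda$ as well as the residual vector $\mathbf{e}$. With the SVD, the first simplifies to

$$\mathbf{H}_\lambda = \mathbf{X}\big(\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I}_p\big)^{-1}\mathbf{X}^\top = \mathbf{U}\mathbf{D}\big(\mathbf{D}^2 + \lambda\mathbf{I}_r\big)^{-1}\mathbf{D}\mathbf{U}^\top = \mathbf{U}\mathbf{D}^2\big(\mathbf{D}^2 + \lambda\mathbf{I}_r\big)^{-1}\mathbf{U}^\top,$$

where we use the fact that diagonal matrices commute. This allows us to compute the desired diagonal elements in time $O(nr)$ via

$$h_{ii} = \sum_{j=1}^{r} u_{ij}^2\,\frac{d_j^2}{d_j^2 + \lambda}, \qquad i = 1, \dots, n, \tag{16}$$

where $u_{ij}$ denotes the elements of $\mathbf{U}$. Computing the residual vector $\mathbf{e} = \mathbf{y} - \mathbf{U}\mathbf{D}\tilde{\boldsymbol{\beta}}_\lambda$ is easily done via the rotated ridge solution $\tilde{\boldsymbol{\beta}}_\lambda$. However, this still requires $O(nr)$ operations, simply because there are $n$ residuals to compute.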
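The same decomposition gives an $O(nr)$-per-candidate LOOCV evaluation. The sketch below computes the hat diagonal with one matrix-vector product of the element-wise squared $\mathbf{U}$ and then scans a log-spaced grid; the function name, grid bounds and grid size are arbitrary illustrative choices, not the settings used in the experiments.

```python
import numpy as np

def loocv_svd(U, d, c, y, lam):
    """LOOCV risk (4) from a compact SVD X = U diag(d) V^T and c = U^T y: O(n r) per lambda."""
    shrink = d ** 2 / (d ** 2 + lam)           # d_j^2 / (d_j^2 + lambda)
    h = (U ** 2) @ shrink                      # diagonal of the hat matrix
    fitted = U @ (shrink * c)                  # X beta_hat = U D beta_tilde
    e = y - fitted
    return np.mean((e / (1.0 - h)) ** 2)

rng = np.random.default_rng(6)
n, p = 40, 7
X = rng.standard_normal((n, p))
y = X @ rng.standard_normal(p) + rng.standard_normal(n)
U, d, Vt = np.linalg.svd(X, full_matrices=False)   # pre-processing, done once
c = U.T @ y
grid = np.logspace(-3, 3, 25)                       # hypothetical candidate grid
scores = [loocv_svd(U, d, c, y, lam) for lam in grid]
best = grid[int(np.argmin(scores))]
print(best)
```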

Thus, in summary, by combining the pre-processing with the fast computation of the PRESS statistic, we obtain an overall $O(mr^2 + qlnr)$ implementation of ridge regression via LOOCV, where $l$ denotes the number of candidate values of $\lambda$ and $q$ the number of regression target variables. In contrast, for the EM algorithm, by combining the fast computation of ESS and ESN, we end up with an overall complexity of $O(mr^2 + qnr + qkr)$, where $k$ denotes the number of EM iterations. If we further assume that $k \in O(1)$, which is supported by the experimental results (see Sec. 5), and that both $p, q \in O(\sqrt{n})$, there is an asymptotic advantage of a factor of $l$ for the EM approach. This regime is common in settings where more data allows for more fine-grained input as well as output measurements, e.g., in satellite time series classification via multiple target regression [11, 37]. All time complexities are summarized in Tab. 1, and detailed pseudocode for both the fast EM algorithm and the fast LOOCV algorithm is provided in the Appendix (see Tables 3 and 4).
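Putting the pieces together, the following is a compact sketch of an SVD-based EM loop for a single target. It is our illustration, not the fastridge implementation. It assumes the hierarchy with $\boldsymbol{\beta} \mid \tau^2, \sigma^2 \sim N_p(\mathbf{0}, \tau^2\sigma^2\mathbf{I}_p)$, $\sigma^2 \sim \sigma^{-2}d\sigma^2$ and a half-Cauchy prior on $\tau$, solves the M-step by profiling out $\sigma^2$ and taking the positive root of the resulting quadratic in $\tau^2$ (written in a cancellation-free form), and uses a hypothetical initialization and relative-change stopping rule. After the SVD, each iteration only touches length-$r$ vectors.

```python
import numpy as np

def fast_em_ridge(X, y, tau2=1.0, sigma2=None, tol=1e-8, max_iter=1000):
    """Illustrative SVD-based EM for Bayesian ridge regression (single target).

    Assumes beta | tau2, sigma2 ~ N(0, tau2 * sigma2 * I), sigma2 ~ 1/sigma2 and a
    half-Cauchy prior on tau. Returns (beta, lambda = 1/tau2, sigma2, iterations).
    """
    n, p = X.shape
    U, d, Vt = np.linalg.svd(X, full_matrices=False)  # compact SVD, O(m r^2)
    c = U.T @ y                                        # O(n r)
    yy = y @ y
    r = d.size
    if sigma2 is None:
        sigma2 = yy / n                                # hypothetical initialisation
    k = 0
    for k in range(1, max_iter + 1):
        lam = 1.0 / tau2
        beta_t = d / (d ** 2 + lam) * c                # rotated ridge solution
        db = d * beta_t
        # E-step: expected squared norm and expected sum of squared errors, O(r).
        esn = beta_t @ beta_t + sigma2 * (np.sum(1.0 / (d ** 2 + lam)) + (p - r) * tau2)
        ess = yy - 2.0 * c @ db + db @ db + sigma2 * np.sum(d ** 2 / (d ** 2 + lam))
        # M-step: positive root of (p+3) ESS t^2 + omega t - (n+1) ESN = 0, then sigma2.
        omega = (p + 1) * ess - (n - 1) * esn
        disc = np.sqrt(omega ** 2 + 4.0 * (p + 3) * (n + 1) * ess * esn)
        if omega >= 0:
            new_tau2 = 2.0 * (n + 1) * esn / (omega + disc)
        else:
            new_tau2 = (disc - omega) / (2.0 * (p + 3) * ess)
        new_sigma2 = (ess + esn / new_tau2) / (n + p + 2)
        converged = (abs(new_tau2 - tau2) <= tol * tau2
                     and abs(new_sigma2 - sigma2) <= tol * sigma2)
        tau2, sigma2 = new_tau2, new_sigma2
        if converged:
            break
    beta = Vt.T @ (d / (d ** 2 + 1.0 / tau2) * c)      # back-transform, O(p r)
    return beta, 1.0 / tau2, sigma2, k

rng = np.random.default_rng(7)
n, p = 200, 20
X = rng.standard_normal((n, p))
y = X @ rng.standard_normal(p) + rng.standard_normal(n)
beta, lam, sigma2, iters = fast_em_ridge(X, y)
print(lam, sigma2, iters)
```

Because the returned coefficients are computed from the final $\tau^2$, they coincide with the ridge solution (2) at $\lambda = 1/\tau^2$ whether or not the stopping rule was met.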

5 Empirical Evaluation

In this section, we compare the predictive performance and computational cost of LOOCV against the proposed EM method. We present numerical results on both synthetic and real-world datasets. To implement the LOOCV estimator, we use a predefined grid, $\Lambda = \{\lambda_1, \dots, \lambda_l\}$. We use the two most common methods for this task: (i) fixed grid: arbitrarily select a very small value as $\lambda_{\min}$ and a large value as $\lambda_{\max}$, and construct a sequence of $l$ values from $\lambda_{\min}$ to $\lambda_{\max}$ on a log scale; (ii) data-driven grid: find the smallest value of $\lambda$ that sets the entire regression coefficient vector to zero (i.e., $\hat{\boldsymbol{\beta}}_\lambda = \mathbf{0}$)², multiply this value by a ratio $\epsilon < 1$ such that $\lambda_{\min} = \epsilon\lambda_{\max}$, and create a sequence from $\lambda_{\max}$ to $\lambda_{\min}$ on a log scale. The latter method is implemented in the glmnet package in combination with an adaptive coefficient

$$\epsilon = \begin{cases} 10^{-4}, & n \geq p, \\ 10^{-2}, & n < p, \end{cases}$$

which we replicate here as input to our fast LOOCV algorithm (Appendix, Table 4) to efficiently recover the glmnet LOOCV ridge estimate.³

² For ridge regression, $\lambda_{\max} = \infty$. Following the glmnet package, the sequence of $\lambda$ is derived for $\alpha = 0.001$. The penalty function used by glmnet is $\lambda\big[(1 - \alpha)\|\boldsymbol{\beta}\|_2^2/2 + \alpha\|\boldsymbol{\beta}\|_1\big]$, where $\alpha = 0$ corresponds to ridge regression.

³ glmnet LOOCV is computed directly by model refitting via coordinate-wise descent, which can be slow.
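Both grid constructions can be sketched in a few lines. The value of $\epsilon$ below mirrors glmnet's default `lambda.min.ratio` (0.01 when there are fewer observations than variables, 0.0001 otherwise). How $\lambda_{\max}$ is obtained, and any rescaling between glmnet's $\lambda$ and the $\lambda$ in (1), are deliberately left out and are assumptions the caller has to supply; the function names are hypothetical.

```python
import numpy as np

def glmnet_style_grid(lam_max, n, p, length=100):
    """Descending log-spaced grid from lam_max to eps * lam_max.

    eps follows glmnet's default lambda.min.ratio: 1e-4 if n >= p, else 1e-2.
    lam_max must be supplied by the caller (e.g. from glmnet's alpha = 0.001 device).
    """
    eps = 1e-4 if n >= p else 1e-2
    return np.geomspace(lam_max, eps * lam_max, num=length)

def fixed_grid(lam_min, lam_max, length=100):
    """Fixed grid: `length` values from lam_min to lam_max on a log scale."""
    return np.geomspace(lam_min, lam_max, num=length)

grid = glmnet_style_grid(lam_max=50.0, n=200, p=20)   # lam_max is a placeholder value
print(grid[:3], grid[-1])
```

A data-driven grid of this kind shifts with the data through $\lambda_{\max}$, but its lower end is still tied to a fixed ratio, which is exactly what can leave small penalties unexplored.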

We consider a fixed grid of and the grid based on the glmnet heuristic; in both cases, we use a sequence of length 100. The latter is a data-driven grid, so we will have a different penalty grid for each simulated or real data set. Our EM algorithm does not require a predefined penalty grid, but it needs a convergence threshold, which we set to be . All experiments in this section are performed in Python and the R statistical platform. Datasets and code for the experimental results are publicly available. As is standard in penalized regression, and without any loss of generality, we standardized the data before model fitting. This means that the predictors are standardized to have zero mean and a standard deviation of one, and the target has a mean of zero, i.e., the intercept estimate is simply $\bar{y}$.

5.1 Simulated Data

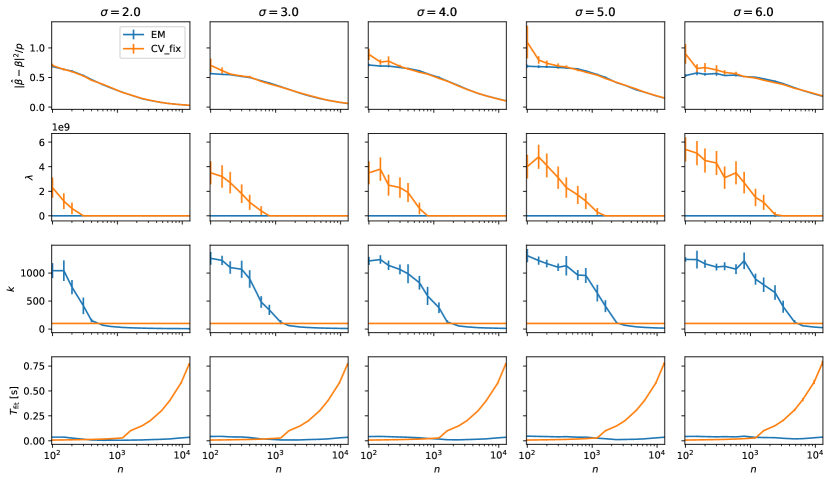

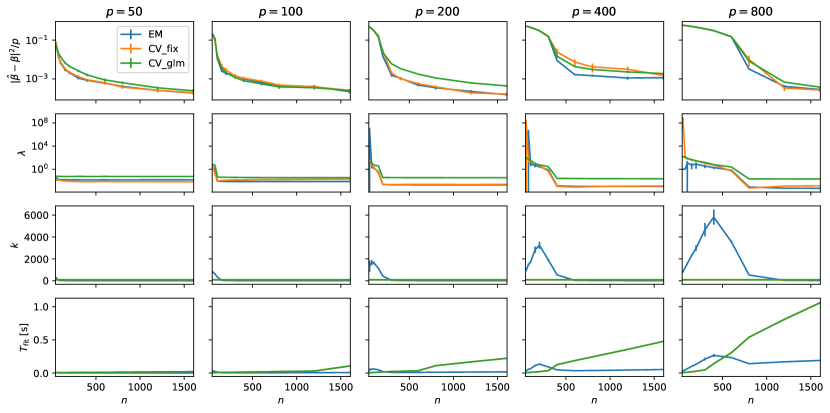

In this section, we use a simulation study to investigate the behavior of EM and LOOCV as a function of the sample size, $n$, and two other parameters of interest: the number of covariates, $p$, and the noise level of the target variable. In particular, we are interested in the parameter estimation performance, the corresponding $\lambda$-values, and the computational cost. To gain further insights into the latter, the number of iterations $k$ performed by the EM algorithm is of particular interest, as we do not have quantitative bounds for its behavior. We consider two settings that vary in the level of sparsity and correlation structure of the covariates. The first setting (Fig. 1) assumes i.i.d. Bernoulli distributed covariates with small success probabilities that result in sparse covariate matrices, while the second setting (Fig. 2) assumes normally distributed covariates with random non-zero covariances. In both cases, the target variable is conditionally normal with mean $\mathbf{x}^\top\boldsymbol{\beta}$ for a random $\boldsymbol{\beta}$ drawn from a standard multivariate normal distribution.

Looking at the results, a common feature of both settings is that the computational complexity of the EM algorithm is a non-monotone function of $n$. In contrast to LOOCV, the behavior of EM shows distinctive phases where the complexity temporarily decreases with $n$ before it settles into the usually expected, monotonically increasing phase. As can be seen, this is due to the behavior of the number of iterations $k$, which peaks for small values of $n$ before it declines rapidly to a small constant (around 10) when the cost of the pre-processing begins to dominate. The occurrence of these phases is more pronounced for both growing and growing . This behavior is likely due to the convergence to normality of the posterior distribution as the sample size $n \to \infty$, with convergence being slower for large $p$.

An interesting observation is that CV with the employed glmnet grid heuristic fails, in the sense that the resulting ridge estimator does not appear to be consistent for large $n$ in Setting 2. This is due to the minimum value of $\lambda$ produced by the glmnet heuristic being too large, and the resulting ridge estimates being overshrunk. This clearly underlines the difficulty of choosing a robust dynamic grid, a problem that our EM algorithm avoids completely.

5.2 Real Data

We evaluated our EM method on 24 real-world datasets. This includes 21 datasets from the UCI machine learning repository [5] (unless referenced otherwise) for normal linear regression tasks and 3 time-series datasets from the UCR repository [10] for multitarget regression tasks. The latter are multiclass classification problems in which the feature matrix was generated by the state-of-the-art HYDRA [12] time series classification procedure (which by default uses LOOCV ridge regression for classification), and we train $q$ ridge regression models in a one-versus-all fashion, where $q$ is the number of target classes. The datasets were chosen such that they covered a wide range of sample sizes, $n$, and numbers of predictors, $p$. We compared our EM algorithm against fast LOOCV in terms of predictive performance, measured in $R^2$ (and classification accuracy) on the test data, and computational efficiency.

Our linear regression experiments involve 3 settings: (i) standard linear regression; (ii) second-order multivariate polynomial regression with added interactions and second-degree polynomial transformations of variables; and (iii) third-order multivariate polynomial regression with added three-way interactions and cubic polynomial transformations. For each experiment, we repeated the process 100 times and used a random 70/30 train-test split. Due to memory limitations, we limit our design matrix size to a maximum of 35 million entries. If the number of transformed predictors exceeded this limit, we uniformly sub-sampled the interaction variables to ensure that $np \leq 3.5 \times 10^7$, and then fit the model using the sampled variables. Note that we always keep the original variables (main effects) and only sub-sample the interactions. In the case of multitarget regression, we performed a random 70/30 train-test split and repeated the experiment 30 times. To ensure efficient reproducibility of our experiments, we set a maximum runtime of 3 hours for each dataset. Any settings that exceeded this time limit were consequently excluded from the result table.
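The capped polynomial expansion can be sketched as follows. This is a hypothetical helper, not the authors' preprocessing code: it treats all monomials of degree 2 up to `degree` (built with `itertools.combinations_with_replacement`) as the pool of added terms, always keeps the main effects, and samples the added columns uniformly without replacement so that $np$ stays within the budget. For 13 input features this pool yields 104 and 559 columns for degrees 2 and 3, which matches the NPD rows of Table 2.

```python
import itertools
import numpy as np

def poly_features_capped(X, degree, max_entries=35_000_000, rng=None):
    """Polynomial expansion with uniform sub-sampling of the added (degree >= 2) columns.

    Main effects are always kept; interactions and powers up to `degree` are
    sub-sampled uniformly so that n * p <= max_entries.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, p0 = X.shape
    terms = [combo for deg in range(2, degree + 1)
             for combo in itertools.combinations_with_replacement(range(p0), deg)]
    budget = max(max_entries // n - p0, 0)           # columns left after the main effects
    if len(terms) > budget:
        keep = rng.choice(len(terms), size=budget, replace=False)
        terms = [terms[i] for i in sorted(keep)]
    extra = [np.prod(X[:, list(combo)], axis=1) for combo in terms]
    return np.column_stack([X] + extra) if extra else X.copy()

X = np.random.default_rng(8).standard_normal((1000, 10))
Z = poly_features_capped(X, 2, max_entries=30_000, rng=np.random.default_rng(0))
print(Z.shape)   # 10 main effects plus 20 of the 55 degree-2 terms
```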

| Dataset (q) | n | p (linear) | speed-up (linear) | EM (linear) | Fix (linear) | GLM (linear) | p (2nd order) | speed-up (2nd order) | EM (2nd order) | Fix (2nd order) | GLM (2nd order) | p (3rd order) | speed-up (3rd order) | EM (3rd order) | Fix (3rd order) | GLM (3rd order) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
|  | 408275 | 77 | 20 | 0.94 | 0.94 | 0.94 | 86 | 16 | 0.94 | 0.94 | 0.94 | - | - | - | - | - |
| Blog | 39355 | 275 | 13 | 0.46 | 0.46 | 0.46 | 804 | 9.1 | 0.51 | 0.51 | 0.51 | - | - | - | - | - |
| CT slices | 37450 | 379 | 12 | 0.86 | 0.86 | 0.86 | 930 | 7.7 | 0.92 | 0.91 | 0.92 | - | - | - | - | - |
| TomsHw | 19725 | 96 | 17 | 0.63 | 0.63 | 0.63 | 1775 | 6.5 | 0.71 | 0.71 | 0.71 | - | - | - | - | - |
| NPD - com | 8353 | 13 | 13 | 0.84 | 0.84 | 0.84 | 104 | 15 | 1.00 | 1.00 | 1.00 | 559 | 8.6 | 1.00 | 1.00 | 1.00 |
| NPD - tur | 8353 | 13 | 14 | 0.91 | 0.91 | 0.91 | 104 | 15 | 1.00 | 1.00 | 1.00 | 559 | 8.6 | 1.00 | 1.00 | 1.00 |
| PT - motor | 4112 | 19 | 13 | 0.15 | 0.15 | 0.15 | 208 | 12 | 0.25 | 0.19 | 0.21 | 1539 | 3.9 | -1.09 | 0.01 | 0.04 |
| PT - total | 4112 | 19 | 13 | 0.17 | 0.17 | 0.17 | 208 | 12 | 0.24 | 0.23 | 0.21 | 1539 | 3.7 | -1.38 | -0.04 | 0.00 |
| Abalone | 2923 | 9 | 13 | 0.53 | 0.53 | 0.53 | 51 | 16 | 0.38 | 0.35 | 0.50 | 209 | 12 | 0.28 | 0.12 | 0.12 |
| Crime | 1395 | 99 | 14 | 0.66 | 0.66 | 0.66 | 5049 | 1.3 | 0.67 | -0.74 | 0.66 | 17652 | 1.1 | 0.66 | -0.22 | 0.60 |
| Airfoil | 1052 | 5 | 17 | 0.51 | 0.51 | 0.51 | 20 | 14 | 0.62 | 0.62 | 0.62 | 55 | 11 | 0.73 | 0.73 | 0.73 |
| Student | 730 | 39 | 12 | 0.18 | 0.18 | 0.18 | 801 | 3.8 | 0.19 | -0.89 | 0.16 | 10693 | 1.1 | 0.19 | -6.22 | -0.18 |
| Concrete | 721 | 8 | 16 | 0.61 | 0.61 | 0.61 | 44 | 11 | 0.78 | 0.78 | 0.78 | 164 | 5.5 | 0.85 | 0.85 | 0.85 |
| F.Fires | 361 | 12 | 2.4 | -0.01 | -0.01 | -0.03 | 295 | 0.5 | -0.01 | -0.01 | -0.14 | 1984 | 0.3 | -0.01 | -50.6 | -0.45 |
| B.Housing | 354 | 13 | 11 | 0.71 | 0.71 | 0.71 | 104 | 8.1 | 0.84 | 0.83 | 0.80 | 559 | 2.2 | 0.83 | -3e2 | 0.83 |
|  | 346 | 15 | 15 | 0.91 | 0.91 | 0.91 | 167 | 6.6 | -5.09 | -26.4 | -3.99 | 1087 | 1.9 | -2.53 | -2e4 | -5.76 |
| Diabetes¹ | 309 | 10 | 13 | 0.49 | 0.49 | 0.49 | 65 | 6.5 | 0.49 | 0.48 | 0.48 | 285 | 2.1 | 0.47 | 0.47 | 0.47 |
| R.Estate | 289 | 6 | 13 | 0.56 | 0.56 | 0.56 | 27 | 10 | 0.65 | 0.65 | 0.65 | 83 | 5.5 | 0.65 | 0.65 | 0.65 |
| A.MPG | 278 | 8 | 13 | 0.81 | 0.81 | 0.81 | 35 | 8.3 | 0.86 | 0.86 | 0.86 | 119 | 4.1 | 0.86 | 0.86 | 0.86 |
| Yacht | 215 | 7 | 16 | 0.97 | 0.97 | 0.97 | 27 | 11 | 0.97 | 0.97 | 0.97 | 83 | 6.1 | 0.98 | 0.98 | 0.98 |
| A.mobile | 111 | 25 | 6.1 | 0.90 | 0.89 | 0.89 | 1076 | 1.7 | 0.90 | -4e3 | 0.89 | 12924 | 0.5 | 0.88 | -1e4 | 0.83 |
| Eye¹ | 84 | 200 | 2.7 | 0.50 | 0.26 | 0.45 | 20300 | 1.3 | 0.19 | 0.19 | 0.19 | - | - | - | - | - |
| Ribo¹ | 49 | 4088 | 2.3 | 0.64 | 0.64 | 0.64 | - | - | - | - | - | - | - | - | - | - |
| Crop (24)² | 11760 | 3072 | 49 | 0.75 | 0.75 | 0.76 | - | - | - | - | - | - | - | - | - | - |
| ElecD (7)² | 11645 | 4096 | 9.2 | 0.88 | 0.88 | 0.89 | - | - | - | - | - | - | - | - | - | - |
| StarL (3)² | 6465 | 7168 | 2.1 | 0.98 | 0.60 | 0.98 | - | - | - | - | - | - | - | - | - | - |

¹ This dataset is not from the UCI repository. Data references can be found in the appendix.

² Time-series dataset from the UCR repository. EM, Fix, and GLM are the classification accuracies on the test data.

Table 2 details the results of our experiments; specifically, the ratio of the time taken to run fast LOOCV to the time taken to run our EM procedure, and the $R^2$ values obtained by both methods on the withheld test set. The number of features, $p$, and observations, $n$, recorded are values after data preprocessing (missing observations removed, one-hot encoding transformation, etc.). The results demonstrate that our EM algorithm can be up to 49 times faster than fast LOOCV, with the speed-ups becoming more apparent as the sample size and the number of target variables increase. In addition, we see that this advantage in speed does not come at a cost in predictive performance, as our EM approach is comparable to, if not better than, LOOCV in almost all cases (also see Appendix, Figure 1, in which most of the $R^2$ values are distributed along the diagonal line).

An interesting observation is that LOOCV using the fixed grid can occasionally perform extremely poorly (as indicated by large negative $R^2$ values), while LOOCV using the glmnet grid does not seem to exhibit this behavior. This is likely due to the grid chosen by the glmnet heuristic: its performance is artificially improved because it is unable to evaluate sufficiently small values of $\lambda$, and hence does not actually select the very small $\lambda$ that minimizes the LOOCV score. In these examples, the incorrectly large $\lambda$ values provide protection from undershrinkage.

6 Conclusion

The introduced EM algorithm is a robust and computationally fast alternative to LOOCV for ridge regression. Relative to LOOCV grid search, the unimodality of the posterior guarantees robust behavior for finite sample sizes under mild conditions, and the SVD preprocessing enables a faster overall computation with an ultra-fast main loop. Combining this with a procedure such as orthogonal least squares to obtain a highly efficient forward selection procedure is a promising avenue for future research. As the Q-function is an expected negative log-posterior, it offers a score on which the usefulness of the predictors themselves may be assessed, i.e., a model selection criterion, potentially yielding a very accurate and fast procedure for sparse model learning. An important open problem is the theoretical analysis of the expected number of EM iterations required for convergence. The empirical evidence suggests that this number converges to a constant and is thus negligible in the asymptotic time complexity, which is consistent with the convergence of the posterior to a multivariate normal distribution. However, such intuitive and empirical arguments cannot replace a rigorous worst-case analysis.

Acknowledgments and Disclosure of Funding

We thank the anonymous reviewers for their valuable feedback that led to a substantially improved theoretical analysis. This work was supported by the Australian Research Council (DP210100045).

References

- Akaike [1974] Hirotugu Akaike. A new look at the statistical model identification. In Selected Papers of Hirotugu Akaike, pages 215–222. Springer, 1974.

- Alkhamisi et al. [2006] Mahdi Alkhamisi, Ghadban Khalaf, and Ghazi Shukur. Some modifications for choosing ridge parameters. Communications in Statistics-Theory and Methods, 35(11):2005–2020, 2006.

- Allen [1974] David M Allen. The relationship between variable selection and data augmentation and a method for prediction. Technometrics, 16(1):125–127, 1974.

- Arlot and Celisse [2010] Sylvain Arlot and Alain Celisse. A survey of cross-validation procedures for model selection. 2010.

- Asuncion and Newman [2007] Arthur Asuncion and David Newman. UCI machine learning repository, 2007.

- Barndorff-Nielsen et al. [1982] Ole Barndorff-Nielsen, John Kent, and Michael Sørensen. Normal variance-mean mixtures and z distributions. International Statistical Review/Revue Internationale de Statistique, pages 145–159, 1982.

- Bhat and Raju [2017] Satish Bhat and Vidya Raju. A class of generalized ridge estimators. Communications in Statistics - Simulation and Computation, 46(7):5105–5112, 2017. doi: 10.1080/03610918.2016.1144765. URL https://doi.org/10.1080/03610918.2016.1144765.

- Bontemps [2011] Dominique Bontemps. Bernstein–von Mises theorems for Gaussian regression with increasing number of regressors. 2011.

- Bühlmann et al. [2014] Peter Bühlmann, Markus Kalisch, and Lukas Meier. High-dimensional statistics with a view toward applications in biology. Annual Review of Statistics and Its Application, 1:255–278, 2014.

- Dau et al. [2019] Hoang Anh Dau, Anthony Bagnall, Kaveh Kamgar, Chin-Chia Michael Yeh, Yan Zhu, Shaghayegh Gharghabi, Chotirat Ann Ratanamahatana, and Eamonn Keogh. The UCR time series archive. IEEE/CAA Journal of Automatica Sinica, 6(6):1293–1305, 2019.

- Dempster et al. [2020] Angus Dempster, François Petitjean, and Geoffrey I Webb. ROCKET: Exceptionally fast and accurate time series classification using random convolutional kernels. Data Mining and Knowledge Discovery, 34(5):1454–1495, 2020.

- Dempster et al. [2023] Angus Dempster, Daniel F Schmidt, and Geoffrey I Webb. HYDRA: Competing convolutional kernels for fast and accurate time series classification. Data Mining and Knowledge Discovery, 2023.

- Efron et al. [2004] Bradley Efron, Trevor Hastie, Iain Johnstone, and Robert Tibshirani. Least angle regression. The Annals of Statistics, 32(2):407–499, 2004.

- Ehsanes Saleh and Golam Kibria [1993] AK Md Ehsanes Saleh and BM Golam Kibria. Performance of some new preliminary test ridge regression estimators and their properties. Communications in Statistics-Theory and Methods, 22(10):2747–2764, 1993.

- Gelman [2006] Andrew Gelman. Prior distributions for variance parameters in hierarchical models. Bayesian Analysis, 1(3):515–533, 2006.

- Ghosal [1999] Subhashis Ghosal. Asymptotic normality of posterior distributions in high-dimensional linear models. Bernoulli, pages 315–331, 1999.

- Ghosal et al. [2000] Subhashis Ghosal, Jayanta K Ghosh, and Aad W Van Der Vaart. Convergence rates of posterior distributions. Annals of Statistics, pages 500–531, 2000.

- Golub et al. [1979] Gene H Golub, Michael Heath, and Grace Wahba. Generalized cross-validation as a method for choosing a good ridge parameter. Technometrics, 21(2):215–223, 1979.

- Hamed et al. [2013] Ramadan Hamed, Ali EL Hefnawy, and Aya Farag. Selection of the ridge parameter using mathematical programming. Communications in Statistics-Simulation and Computation, 42(6):1409–1432, 2013.

- Hanson [1971] Richard J Hanson. A numerical method for solving Fredholm integral equations of the first kind using singular values. SIAM Journal on Numerical Analysis, 8(3):616–622, 1971.

- Harrison Jr and Rubinfeld [1978] David Harrison Jr and Daniel L Rubinfeld. Hedonic housing prices and the demand for clean air. Journal of Environmental Economics and Management, 5(1):81–102, 1978.

- Hastie et al. [2022] Trevor Hastie, Andrea Montanari, Saharon Rosset, and Ryan J Tibshirani. Surprises in high-dimensional ridgeless least squares interpolation. Annals of Statistics, 50(2):949, 2022.

- Hefnawy and Farag [2014] Ali El Hefnawy and Aya Farag. A combined nonlinear programming model and Kibria method for choosing ridge parameter regression. Communications in Statistics-Simulation and Computation, 43(6):1442–1470, 2014.

- Hilgers [1976] John W Hilgers. On the equivalence of regularization and certain reproducing kernel Hilbert space approaches for solving first kind problems. SIAM Journal on Numerical Analysis, 13(2):172–184, 1976.

- Hoerl and Kennard [1970] Arthur E Hoerl and Robert W Kennard. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics, 12(1):55–67, 1970.

- Hsu et al. [2012] Daniel Hsu, Sham M. Kakade, and Tong Zhang. Random design analysis of ridge regression. In Shie Mannor, Nathan Srebro, and Robert C. Williamson, editors, Proceedings of the 25th Annual Conference on Learning Theory, volume 23 of Proceedings of Machine Learning Research, pages 9.1–9.24, Edinburgh, Scotland, 25–27 Jun 2012. PMLR.

- James and Stein [1961] W. James and Charles Stein. Estimation with quadratic loss. In Proc. 4th Berkeley Symp. on Math. Statist. and Prob., 1961.

- Lawless and Wang [1976] J. F. Lawless and P. Wang. A simulation study of ridge and other regression estimators. Communications in Statistics-Theory and Methods, 5(4):307–323, 1976.

- Khalaf and Shukur [2005] Ghadban Khalaf and Ghazi Shukur. Choosing ridge parameter for regression problems. 2005.

- Kibria [2003] BM Golam Kibria. Performance of some new ridge regression estimators. Communications in Statistics-Simulation and Computation, 32(2):419–435, 2003.

- Kobak et al. [2020] Dmitry Kobak, Jonathan Lomond, and Benoit Sanchez. The optimal ridge penalty for real-world high-dimensional data can be zero or negative due to the implicit ridge regularization. The Journal of Machine Learning Research, 21(1):6863–6878, 2020.

- Lindley and Smith [1972] D. V. Lindley and A. F. M. Smith. Bayes estimates for the linear model. Journal of the Royal Statistical Society (Series B), 34(1):1–41, 1972.

- Mallows [2000] Colin L Mallows. Some comments on Cp. Technometrics, 42(1):87–94, 2000.

- Muniz and Kibria [2009] Gisela Muniz and BM Golam Kibria. On some ridge regression estimators: An empirical comparisons. Communications in Statistics-Simulation and Computation, 38(3):621–630, 2009.

- Park and Casella [2008] Trevor Park and George Casella. The Bayesian lasso. Journal of the American Statistical Association, 103(482):681–686, 2008.

- Patil et al. [2021] Pratik Patil, Yuting Wei, Alessandro Rinaldo, and Ryan Tibshirani. Uniform consistency of cross-validation estimators for high-dimensional ridge regression. In International Conference on Artificial Intelligence and Statistics, pages 3178–3186. PMLR, 2021.

- Petitjean et al. [2012] François Petitjean, Jordi Inglada, and Pierre Gançarski. Satellite image time series analysis under time warping. IEEE Transactions on Geoscience and Remote Sensing, 50(8):3081–3095, 2012.

- Polson and Scott [2012] Nicholas G Polson and James G Scott. On the half-Cauchy prior for a global scale parameter. Bayesian Analysis, 7(4):887–902, 2012.

- Scheetz et al. [2006] Todd E Scheetz, Kwang-Youn A Kim, Ruth E Swiderski, Alisdair R Philp, Terry A Braun, Kevin L Knudtson, Anne M Dorrance, Gerald F DiBona, Jian Huang, Thomas L Casavant, et al. Regulation of gene expression in the mammalian eye and its relevance to eye disease. Proceedings of the National Academy of Sciences, 103(39):14429–14434, 2006.

- Schwarz [1978] Gideon Schwarz. Estimating the dimension of a model. The Annals of Statistics, 6(2):461–464, 1978.

- Spiegelhalter et al. [1996] David Spiegelhalter, Andrew Thomas, Nicky Best, and Wally Gilks. BUGS 0.5: Bayesian inference using Gibbs sampling manual (version ii). MRC Biostatistics Unit, Institute of Public Health, Cambridge, UK, pages 1–59, 1996.

- Stephenson et al. [2021] Will Stephenson, Zachary Frangella, Madeleine Udell, and Tamara Broderick. Can we globally optimize cross-validation loss? Quasiconvexity in ridge regression. Advances in Neural Information Processing Systems, 34:24352–24364, 2021.

- Strawderman [1971] William E Strawderman. Proper Bayes minimax estimators of the multivariate normal mean. The Annals of Mathematical Statistics, 42(1):385–388, 1971.

- Tew et al. [2022] Shu Yu Tew, Daniel F Schmidt, and Enes Makalic. Sparse horseshoe estimation via expectation-maximisation. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pages 123–139. Springer, 2022.

- Van der Vaart [2000] Aad W Van der Vaart. Asymptotic statistics, volume 3. Cambridge University Press, 2000.

- Varah [1973] James M. Varah. On the numerical solution of ill-conditioned linear systems with applications to ill-posed problems. SIAM Journal on Numerical Analysis, 10(2):257–267, 1973.

- Wang and Zou [2021] Boxiang Wang and Hui Zou. Honest leave-one-out cross-validation for estimating post-tuning generalization error. Stat, 10(1):e413, 2021.

- Asar et al. [2013] Yasin Asar, Adnan Karaibrahimoğlu, and Aşır Genç. Modified ridge regression parameters: A comparative Monte Carlo study. Hacettepe Journal of Mathematics and Statistics, 43(5):827–841, 2013.

- Zhang and Yang [2015] Yongli Zhang and Yuhong Yang. Cross-validation for selecting a model selection procedure. Journal of Econometrics, 187(1):95–112, 2015.

Appendix A EM Procedure for Multiple Means

We show that the Bayesian EM procedure provides sensible estimates of the regularization parameter even in the setting of the normal multiple means problem with known variance . In this setting, LOOCV is unable to provide any guidance on how to choose the regularization parameter, because all the information about each regression parameter is concentrated in a single observation. We use the parameterisation of the hyperparameter, rather than , as the resulting estimator has an easy-to-analyse form.

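In the normal multiple means model, we are given $\mathbf{y} \sim N_p(\boldsymbol{\beta}, \mathbf{I}_p)$, i.e., $\mathbf{y}$ is a $p$-dimensional normally distributed vector with mean $\boldsymbol{\beta}$ and identity covariance matrix. The conditional posterior distribution of $\boldsymbol{\beta}$ is: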

| (17) |

where . In this setting, Strawderman [43] proved that if , then any estimator of the form

| (18) |

where and is non-decreasing, is minimax, i.e., it dominates least squares. We now show that, in contrast to LOOCV, our EM procedure not only yields reasonable estimates in this setting, but that these estimates are also minimax and hence dominate least squares.

For the normal means model, we can obtain a closed-form solution for the optimum , by solving for the stationary point for which , with :

and with , and . This yields

with solution . Plugging this solution into (17), we see that the resulting estimator is of the form (18) with

As we have when , the EM ridge estimator is minimax in this setting for .
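
To see EM produce a data-driven amount of shrinkage in this setting, here is a minimal sketch for a deliberately simplified hierarchy, $\mathbf{y} \sim N_p(\boldsymbol\beta, \mathbf{I}_p)$ and $\boldsymbol\beta \sim N_p(\mathbf{0}, \tau^2 \mathbf{I}_p)$ with a flat prior on $\tau^2$. This is an assumption for illustration: it is not necessarily the hierarchy or parameterisation behind (17), and `em_normal_means` is a hypothetical helper.

```python
def em_normal_means(y, tau2=1.0, tol=1e-12, max_iter=100_000):
    """EM for tau2 in y ~ N_p(beta, I), beta ~ N(0, tau2 I), flat prior on tau2.

    Simplified, hypothetical variant for illustration.
    Returns (tau2, shrinkage factor k, posterior mean k * y).
    """
    p = len(y)
    ysq = sum(v * v for v in y)                  # ||y||^2, computed once
    for _ in range(max_iter):
        k = tau2 / (1.0 + tau2)                  # E-step: beta | y ~ N(k y, k I)
        expected_sq_norm = k * k * ysq + p * k   # E[||beta||^2 | y] = ||k y||^2 + tr(k I)
        new_tau2 = expected_sq_norm / p          # M-step: maximise E[log p(beta | tau2)]
        converged = abs(new_tau2 - tau2) <= tol * tau2
        tau2 = new_tau2
        if converged:
            break
    k = tau2 / (1.0 + tau2)
    return tau2, k, [k * v for v in y]


tau2, k, estimate = em_normal_means([3.0, -1.0, 2.0, 0.5, -2.5])
print(tau2, k, estimate)
```

Under this simplified prior, the iteration converges to $\tau^2 = \lVert\mathbf{y}\rVert^2/p - 1$ whenever $\lVert\mathbf{y}\rVert^2 > p$, giving the shrinkage factor $1 - p/\lVert\mathbf{y}\rVert^2$, a James–Stein-type rule. When $\lVert\mathbf{y}\rVert^2 \le p$ it drifts towards $\tau^2 = 0$ (full shrinkage). LOOCV has nothing comparable to offer here, since each $\beta_j$ is informed by a single observation.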

Appendix B Proof of Theorem 3.1

We prove that, for sufficiently large , a continuous injective reparameterization of the negative log joint posterior of (5) and (7) is convex when restricted to . This is sufficient, since unimodality is preserved by strictly monotone transformations and continuous injective reparameterizations.

Specifically, for the presented hierarchical model, the negative log joint posterior up to an additive constant is

and reparameterising with , and and reorganising terms yields

The first three terms can easily be checked to be convex via a second-derivative test, where the convexity of the second term is contingent on the condition that , which holds in our specific scenario with . For the last two terms, the combined Hessian has block form with , , and . Symmetric matrices of this form are positive definite if and its Schur complement

are positive definite. Clearly, is positive definite. Moreover, for , we have

where we used the fact that is the orthogonal projection onto the column space of . The overall inequality implies the required positivity of the Schur complement.
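
Both linear-algebra facts used in this proof can be checked numerically: the Schur-complement test for positive definiteness of a symmetric block matrix, and the inequality $\mathbf{y}^\top\mathbf{H}\mathbf{y} \le \mathbf{y}^\top\mathbf{y}$ for an orthogonal projection $\mathbf{H}$ onto a column space. The sketch below uses arbitrary random matrices rather than the Hessian from the proof (whose entries are not reproduced here); the helper names are hypothetical.

```python
import numpy as np


def is_pd(M):
    """A symmetric matrix is positive definite iff its smallest eigenvalue is > 0."""
    return bool(np.linalg.eigvalsh(M).min() > 0)


def pd_via_schur(A, B, C):
    """Test PD of [[A, B], [B^T, C]] via A and the Schur complement C - B^T A^{-1} B."""
    if not is_pd(A):
        return False
    schur = C - B.T @ np.linalg.solve(A, B)
    return is_pd(schur)


def projected_sq_norm(X, y):
    """y^T H y for H = X (X^T X)^{-1} X^T, via a thin QR (X assumed full column rank)."""
    Q, _ = np.linalg.qr(X)              # orthonormal basis for the column space of X
    return float(np.sum((Q.T @ y) ** 2))


rng = np.random.default_rng(0)
L = rng.standard_normal((3, 3))
A = L @ L.T + np.eye(3)                 # positive definite by construction
B = rng.standard_normal((3, 2))
base = B.T @ np.linalg.solve(A, B)
for C in (base + 0.5 * np.eye(2), base - 0.5 * np.eye(2)):
    M = np.block([[A, B], [B.T, C]])
    print(pd_via_schur(A, B, C), is_pd(M))    # the two tests agree

X, y = rng.standard_normal((20, 4)), rng.standard_normal(20)
print(projected_sq_norm(X, y) <= float(y @ y))
```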

Appendix C Derivation of Equation 9

C.1 Derivation of

Here we show that

The proof is straightforward: we use the fact that, for a random variable $X$ with finite variance, the expected squared value of $X$ is $\mathbb{E}[X^2] = \operatorname{Var}[X] + \mathbb{E}[X]^2$.
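
A quick Monte Carlo sanity check of this identity, using only Python's standard library and illustrative parameters (a Gaussian with mean 2 and standard deviation 0.5, chosen arbitrarily rather than taken from the paper). On the sample itself the identity holds exactly up to floating-point rounding; against the population values it holds up to Monte Carlo error.

```python
import random


def moments(draws):
    """Return (mean, population variance, mean of squares) of a list of floats."""
    n = len(draws)
    mean = sum(draws) / n
    var = sum((x - mean) ** 2 for x in draws) / n
    second = sum(x * x for x in draws) / n
    return mean, var, second


rng = random.Random(0)
mu, sd = 2.0, 0.5                      # illustrative parameters only
draws = [rng.gauss(mu, sd) for _ in range(50_000)]
mean, var, second = moments(draws)
print(second, var + mean ** 2, mu ** 2 + sd ** 2)
```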

C.2 Derivation of

Here we show that

| (19) |

We first state an important fact about quadratic forms in random vectors, given in Lemma C.1 below:

Lemma C.1.

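Let $\mathbf{x}$ be a $p$-dimensional random vector and $\mathbf{A}$ a $p \times p$ symmetric matrix. If $\mathbb{E}[\mathbf{x}] = \boldsymbol{\mu}$ and $\operatorname{Var}[\mathbf{x}] = \boldsymbol{\Sigma}$, then $\mathbb{E}[\mathbf{x}^\top \mathbf{A} \mathbf{x}] = \operatorname{tr}(\mathbf{A}\boldsymbol{\Sigma}) + \boldsymbol{\mu}^\top \mathbf{A} \boldsymbol{\mu}$.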
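
The standard identity for the mean of a quadratic form, $\mathbb{E}[\mathbf{x}^\top\mathbf{A}\mathbf{x}] = \operatorname{tr}(\mathbf{A}\boldsymbol\Sigma) + \boldsymbol\mu^\top\mathbf{A}\boldsymbol\mu$ for $\mathbb{E}[\mathbf{x}] = \boldsymbol\mu$ and $\operatorname{Var}[\mathbf{x}] = \boldsymbol\Sigma$, holds for any distribution with those first two moments; a Gaussian is used below only to generate samples. This is a minimal NumPy check with arbitrarily chosen $\boldsymbol\mu$, $\boldsymbol\Sigma$, and $\mathbf{A}$ (not quantities from the paper).

```python
import numpy as np


def quad_form_mean(A, mu, Sigma):
    """E[x^T A x] = tr(A Sigma) + mu^T A mu for E[x] = mu, Var[x] = Sigma."""
    return float(np.trace(A @ Sigma) + mu @ A @ mu)


mu = np.array([1.0, -2.0, 0.5])
Sigma = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.3], [0.0, 0.3, 1.5]])   # positive definite
A = np.array([[1.0, 0.2, 0.0], [0.2, 2.0, -0.5], [0.0, -0.5, 1.0]])     # symmetric

rng = np.random.default_rng(0)
x = rng.multivariate_normal(mu, Sigma, size=200_000)
mc = float(np.mean(np.einsum("ni,ij,nj->n", x, A, x)))   # Monte Carlo average of x^T A x
exact = quad_form_mean(A, mu, Sigma)
print(exact, mc)
```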

Appendix D Solving for the parameter updates (Derivation of Equation 11)

Rather than solving the two-dimensional numerical optimization problem (10), we show that for a fixed , we can find a closed-form solution for , and vice versa. We begin by finding the solution for as a function of . First, we find the negative logarithm of the joint probability distribution of hierarchy (5):

| (21) |

Dropping terms that do not depend on yields:

| (22) |

Solving the above minimization problem involves differentiating the negative logarithm with respect to and solving for the that sets the derivative to zero. This gives:

| (23) |
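
Assuming (23) has the familiar ridge form $\hat{\boldsymbol\beta} = (\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I}_p)^{-1}\mathbf{X}^\top\mathbf{y}$ (for example with $\lambda = 1/\tau^2$ under a prior $\boldsymbol\beta \sim N(\mathbf{0}, \tau^2\sigma^2\mathbf{I}_p)$; the exact form of (23) is not reproduced here), a thin SVD $\mathbf{X} = \mathbf{U}\operatorname{diag}(\mathbf{d})\mathbf{V}^\top$ computed once lets the solution be re-evaluated for any $\lambda$ without refactorising. A minimal NumPy sketch; the helper names are hypothetical.

```python
import numpy as np


def svd_preprocess(X, y):
    """One-off step: thin SVD X = U diag(d) V^T and projected targets z = U^T y."""
    U, d, Vt = np.linalg.svd(X, full_matrices=False)
    return d, Vt, U.T @ y


def ridge_coef(d, Vt, z, lam):
    """(X^T X + lam I)^{-1} X^T y = V diag(d / (d^2 + lam)) z; valid for n >= p and n < p."""
    return Vt.T @ (d / (d ** 2 + lam) * z)


rng = np.random.default_rng(0)
X, y = rng.standard_normal((50, 8)), rng.standard_normal(50)
d, Vt, z = svd_preprocess(X, y)
print(ridge_coef(d, Vt, z, lam=2.5))
```

Only the shrinkage factors $d_j/(d_j^2+\lambda)$ change with $\lambda$, so updating them costs $O(r)$ for $r = \min(n, p)$ singular values; forming $\hat{\boldsymbol\beta}$ itself costs $O(pr)$.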

Next, to obtain the M-step updates for the shrinkage parameter , we repeat the same procedure: we find the negative logarithm of the joint probability distribution and remove the terms that do not depend on either or :

| (24) |

Substituting the solution (23) into equation (24) yields a Q-function that depends only on . We eliminate the dependency on by finding the optimal as a function of and substituting it into the Q-function of (24):

| (25) |

Differentiating (25) with respect to and solving for the that sets the derivative to zero yields:

| (26) |

The update is the positive solution to the quadratic equation (in terms of ) (26):
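
The coefficients of (26) are not reproduced here, but extracting the positive root of a quadratic $ax^2 + bx + c = 0$ deserves some care: when $b^2 \gg |4ac|$, the textbook formula loses precision through cancellation. Below is a minimal sketch of the standard numerically stable variant, assuming $a > 0$ and $c < 0$ (so the roots have opposite signs and exactly one is positive); `positive_root` is a hypothetical helper.

```python
import math


def positive_root(a, b, c):
    """Positive root of a*x**2 + b*x + c = 0, assuming a > 0 and c < 0.

    With q = -(b + sign(b) * sqrt(b^2 - 4ac)) / 2, the roots are q/a and c/q,
    and neither is formed by subtracting nearly equal numbers.
    """
    if not (a > 0 and c < 0):
        raise ValueError("expected a > 0 and c < 0 (one positive, one negative root)")
    disc = b * b - 4.0 * a * c                    # > b*b >= 0 under the assumptions
    q = -0.5 * (b + math.copysign(math.sqrt(disc), b))
    return max(q / a, c / q)                      # the roots have opposite signs


print(positive_root(1.0, -1.0, -6.0))   # roots are 3 and -2, prints 3.0
print(positive_root(2.0, 1e8, -1.0))    # tiny positive root, about 1e-8
```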

| EM Algorithm with SVD | Operations |
|---|---|
| Input: Standardised predictors , centered targets and convergence threshold | |
| Output: | |
| IF | |
| ELSE | |
| DO | |
| (E-step) | |
| (M-step) | |
| until | |
| return | |
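
The algorithm box above lost its equations in extraction. As a rough illustration of why an SVD makes each EM iteration cheap, here is a minimal NumPy sketch of EM for a simplified hierarchy, $\mathbf{y} \mid \boldsymbol\beta, \sigma^2 \sim N(\mathbf{X}\boldsymbol\beta, \sigma^2\mathbf{I}_n)$ and $\boldsymbol\beta \mid \tau^2, \sigma^2 \sim N(\mathbf{0}, \tau^2\sigma^2\mathbf{I}_p)$, with flat priors on $\tau^2$ and $\sigma^2$ (i.e., type-II maximum likelihood). This is an assumption: it is not the hierarchy (5) or the M-step (26) used in the paper, it is intended for $n > p$ (with flat priors, $\sigma^2$ can collapse when $p \ge n$), and `em_ridge_svd` is a hypothetical name. After the one-off SVD, each iteration touches only the $r$ singular values and the projected targets $\mathbf{z} = \mathbf{U}^\top\mathbf{y}$, so it costs $O(r)$ operations.

```python
import numpy as np


def em_ridge_svd(X, y, tol=1e-10, max_iter=10_000):
    """EM for y ~ N(X beta, s2 I), beta ~ N(0, t2 * s2 I), flat priors on (t2, s2).

    Hypothetical simplified variant (type-II maximum likelihood); returns (beta, t2, s2).
    """
    n, p = X.shape
    U, d, Vt = np.linalg.svd(X, full_matrices=False)    # one-off preprocessing
    z = U.T @ y                                          # projected targets
    d2 = d ** 2
    r = d.size
    out = max(float(y @ y - z @ z), 0.0)                # part of ||y||^2 outside col(X)
    t2, s2 = 1.0, float(y @ y) / n                       # crude starting values
    for _ in range(max_iter):
        lam = 1.0 / t2
        shrink = d2 / (d2 + lam)                         # every step in this loop is O(r)
        # E-step: moments of beta | y under the current (t2, s2)
        mean_sq = np.sum(shrink * z ** 2 / (d2 + lam))            # ||E[beta | y]||^2
        trace_cov = s2 * (np.sum(1.0 / (d2 + lam)) + (p - r) * t2)
        e_beta_sq = mean_sq + trace_cov                           # E[||beta||^2 | y]
        e_rss = out + np.sum(((1.0 - shrink) * z) ** 2) + s2 * np.sum(shrink)  # E||y - X beta||^2
        # M-step: closed-form joint maximisers of the expected complete-data log-likelihood
        new_s2 = e_rss / n
        new_t2 = e_beta_sq / (p * new_s2)
        done = abs(new_t2 - t2) <= tol * t2 and abs(new_s2 - s2) <= tol * s2
        t2, s2 = float(new_t2), float(new_s2)
        if done:
            break
    beta = Vt.T @ (d / (d2 + 1.0 / t2) * z)              # ridge solution at lambda = 1/t2
    return beta, t2, s2


rng = np.random.default_rng(1)
X = rng.standard_normal((200, 5))
y = X @ np.array([1.0, -0.5, 0.0, 2.0, 0.3]) + 0.5 * rng.standard_normal(200)
beta, t2, s2 = em_ridge_svd(X, y)
print(beta, t2, s2)
```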

| Fast LOOCV ridge with SVD | Operations |
|---|---|
| Input: Standardised predictors , centered targets and a grid of penalty parameters | |
| Output: | |
| IF | |
| ELSE | |
| } | |
| Find | |
| return | |
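
For comparison, here is a minimal NumPy sketch of fast LOOCV over a grid, using the standard closed-form leave-one-out residuals $e_i/(1 - h_{ii})$ of a linear smoother, where $\mathbf{H} = \mathbf{U}\operatorname{diag}\big(d_j^2/(d_j^2+\lambda)\big)\mathbf{U}^\top$ is the ridge hat matrix. As a simplification, it assumes the predictors are standardised and the targets centred once on the full data, with no intercept refitted per fold; `fast_loocv_ridge` is a hypothetical name, and the paper's implementation may differ.

```python
import numpy as np


def fast_loocv_ridge(X, y, lambdas):
    """Closed-form LOOCV for ridge (no intercept) over a grid, reusing one thin SVD.

    Returns (best lambda, list of mean squared leave-one-out errors).
    """
    U, d, _ = np.linalg.svd(X, full_matrices=False)    # one-off preprocessing
    z = U.T @ y
    d2 = d ** 2
    U_sq = U ** 2
    scores = []
    for lam in lambdas:
        shrink = d2 / (d2 + lam)            # eigenvalues of the hat matrix H
        fitted = U @ (shrink * z)           # H y, O(n r)
        leverage = U_sq @ shrink            # diag(H), O(n r)
        loo_resid = (y - fitted) / (1.0 - leverage)
        scores.append(float(np.mean(loo_resid ** 2)))
    return lambdas[int(np.argmin(scores))], scores


rng = np.random.default_rng(2)
X = rng.standard_normal((30, 6))
y = X @ rng.standard_normal(6) + rng.standard_normal(30)
grid = [0.01, 0.1, 1.0, 10.0, 100.0]
best_lam, scores = fast_loocv_ridge(X, y, grid)
print(best_lam, scores)
```

Each grid value costs $O(nr)$ here, because the fitted values and the hat-matrix diagonal must be formed for all $n$ observations. This per-candidate cost is what the EM main loop avoids.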

Appendix E Supplementary Results Material

E.1 Real Datasets Details

| Dataset | Abbreviation | Observations | Features | Target variable | Source |
|---|---|---|---|---|---|
| Buzz in social media (Twitter) | | 583250 | 77 | mean number of active discussion | UCI |
| Blog Feedback | Blog | 60021 | 281 | number of comments in the next 24 hours | UCI |
| Relative location of CT slices on axial axis | CT slices | 53500 | 386 | reference: Relative image location on axial axis | UCI |
| Buzz in social media (Tom’s Hardware) | TomsHw | 28179 | 97 | Mean Number of display | UCI |
| Condition-based maintenance of naval propulsion plants | NPD - com | 11934 | 16 | GT Compressor decay state coefficient | UCI |
| Condition-based maintenance of naval propulsion plants | NPD - tur | 11934 | 16 | GT Turbine decay state coefficient | UCI |
| Parkinson’s Telemonitoring | PT - motor | 5875 | 26 | motor UPDRS score | UCI |
| Parkinson’s Telemonitoring | PT - total | 5875 | 26 | total UPDRS score | UCI |
| Abalone | Abalone | 4177 | 8 | Rings (age in years) | UCI |
| Communities and Crime | Crime | 1994 | 128 | ViolentCrimesPerPop | UCI |
| Airfoil Self-Noise | Airfoil | 1503 | 6 | Scaled sound pressure level (decibels) | UCI |
| Student Performance | Student | 649 | 33 | final grade (with G1 & G2 removed) | UCI |
| Concrete Compressive Strength | Concrete | 1030 | 9 | Concrete compressive strength (MPa) | UCI |
| Forest Fires | F.Fires | 517 | 13 | forest burned area (in ha) | UCI |
| Boston Housing | B.Housing | 506 | 13 | Median value of owner-occupied homes in $1000’s | [21] |
| Facebook metrics | | 500 | 19 | Total Interactions (with comment, like, and share columns removed) | UCI |
| Diabetes | Diabetes | 442 | 10 | quantitative measure of disease progression one year after baseline | [13] |
| Real estate valuation | R.Estate | 414 | 7 | house price of unit area | UCI |
| Auto mpg | A.MPG | 398 | 8 | city-cycle fuel consumption in miles per gallon | UCI |
| Yacht hydrodynamics | Yacht | 308 | 7 | residuary resistance per unit weight of displacement | UCI |
| Automobile | A.mobile | 205 | 26 | price | UCI |
| Rat eye tissues | Eye | 120 | 200 | the expression level of TRIM32 gene | [39] |
| Riboflavin | Ribo | 71 | 4088 | Log-transformed riboflavin production rate | [9] |
| Crop | Crop | 24000 | 3072 | 24 crop classes | UCR |
| Electric Devices | ElecD | 16637 | 4096 | 7 electric devices | UCR |
| StarLight Curves | StarL | 9236 | 7168 | 3 starlight curves | UCR |