Bayesian Covariance Estimation for Multi-group Matrix-variate Data

Abstract

Multi-group covariance estimation for matrix-variate data with small within-group sample sizes is a key part of many data analysis tasks in modern applications. To obtain accurate group-specific covariance estimates, shrinkage estimation methods which shrink an unstructured, group-specific covariance either across groups towards a pooled covariance or within each group towards a Kronecker structure have been developed. However, in many applications, it is unclear which approach will result in more accurate covariance estimates. In this article, we present a hierarchical prior distribution which flexibly allows for both types of shrinkage. The prior linearly combines shrinkage across groups towards a shared pooled covariance and shrinkage within groups towards a group-specific Kronecker covariance. We illustrate the utility of the proposed prior in speech recognition and an analysis of chemical exposure data.

keywords:

2302.09211

and

1 Introduction

Matrix-variate datasets consisting of a sample of matrices each with dimensionality are increasingly common in modern applications. Examples of such datasets include repeated measurements of a multivariate response, two-dimensional images, and spatio-temporal observations. Often, a matrix-variate dataset may be partitioned into several distinct groups or subpopulations for which group-level inference is of particular interest. For example, a multi-group dataset may be subdivided by socio-economic population or geographic region.

In analyzing multi-group matrix-variate data of this type, describing heterogeneity across groups is often of particular interest. For instance, in remote-sensing studies, scientists may be interested in understanding variation across classes of land cover from repeated measurements of spectral information, as in Johnson et al. (2012). The information collected at each site may be represented as a matrix where the rows represent wavelengths and the columns represent dates when the images were taken. Accurate multi-group covariance estimation is necessary for such a task. Moreover, in many such applications, the population covariance of matrix-variate data may be near separable in that the covariances across the columns are near each other or the covariances across the rows are near each other. Incorporation of this structural information in an estimation procedure can improve estimation accuracy. More generally, accurate covariance estimation of multi-group matrix-variate data is a pertinent task in many statistical methodologies including classification, principal component analysis, and multivariate regression analysis, among others. For example, classification of a new observation based on a labeled training dataset with quadratic discriminant analysis (QDA) requires group-level estimates of population means and covariance matrices. As a result, adequate performance of the classification relies on, among other things, accurate group-level covariance estimates.

One approach to analyzing multi-group matrix-variate data is to vectorize each data matrix and utilize methods designed for generic multivariate data separately for each group. In this way, direct covariance estimates which only make use of within-group samples may be obtained from matrix-variate data by vectorizing each observation and computing the standard sample covariance matrices. While a group’s sample covariance may be unbiased, the estimate may have large variance unless the group-specific sample size is appreciably larger than the dimension . This is a limiting requirement as modern datasets often consist of many features, that is, often , or even . As a result, more accurate covariance estimates may be obtained via indirect or model-based methods which incorporate auxiliary information. Such methods may introduce bias, but can correspond to estimates with lower variance than unbiased methods.

To improve the accuracy of covariance estimates for multi-group data, researchers may estimate each group’s covariance with the pooled sample covariance matrix. This implicitly imposes an assumption of homogeneity of covariances across the populations and greatly reduces the number of unknown parameters to be estimated. Such an estimator may be biased, but can have lower error than the population-specific sample covariance. For example, linear discriminant analysis (LDA), which assumes homoscedasticity across groups, has been shown to outperform QDA when sample sizes are small, even in the presence of heterogeneous population covariances (see, for example, Marks and Dunn (1974)). A more robust approach estimates each group’s covariance as a weighted sum of a pooled estimate and the group-specific sample covariance. Such an approach is often referred to as partial-pooling, or, in the Bayesian framework, hierarchical modeling. For a nice introduction to partial pooling and an empirical Bayesian implementation, see Greene and Rayens (1989). More work on the topic is found in Friedman (1989); Rayens and Greene (1991); Brown et al. (1999). Relatedly, there has been work which assumes pooled elements of common covariance decompositions (e.g. pooled eigenvectors across groups (Flury, 1987)) and proposals to shrink elements of decompositions to pooled values (Daniels, 2006; Hoff, 2009b).

Alternatively, as opposed to pooling information across groups, accuracy may be improved by imposing structural assumptions on the covariances separately for each group. Some common structural assumptions include diagonality (Daniels and Kass, 1999), bandability (Wu and Pourahmadi, 2009), and sparsity (Friedman et al., 2008), among others. For matrix-variate data, a separable or Kronecker structure covariance assumption (Dawid, 1981) may be more appropriate. A Kronecker structured covariance represents each population covariance as the Kronecker product of two smaller covariance matrices of dimension and which respectively represent the across-row and across-column covariances. Again, while a separable covariance estimator may be biased, it may have smaller error than an unstructured covariance estimator. In practice, however, the population covariance may not be well represented by a Kronecker structure. To allow for robustness to misspecification, a researcher may proceed in a Bayesian manner and adaptively shrink to the Kronecker structure as in Hoff et al. (2022). Such an estimator can be consistent, but may have stability issues similar to that of an unstructured covariance matrix if the population covariance is not well represented by a Kronecker structure. In this instance, more accurate multi-group covariance estimates may be obtained by pooling across groups rather than shrinking within each group to a separable structure.

More generally, in covariance estimation based on matrix-variate data from multiple populations, it is rarely obvious whether shrinking each unstructured covariance separately towards a Kronecker structure or shrinking all unstructured covariances towards an unstructured pooled covariance will result in more accurate estimates. This is particularly difficult in the presence of small within-group sample sizes as popular classical statistical tests for both homogeneity of covariances (Box, 1949) and accuracy of a Kronecker structure assumption (Lu and Zimmerman, 2005) rely on approximations that require large sample sizes to achieve the desired precision.

To account for this uncertainty, we propose a hierarchical model that adaptively allows for both types of shrinkage. Specifically, in this article, we provide a model-based multi-group covariance estimation method for matrix-variate data that improves the overall accuracy of direct covariance estimates. We propose a hierarchical model for unstructured group-level covariances that adaptively shrinks each estimate either within-population towards a separable Kronecker structure, across-populations towards a shared pooled covariance, or towards a weighted additive combination of the two. The model features flexibility in the amount of each type of shrinkage. Furthermore, the proposed model has a latent-variable representation that results in straightforward Bayesian inference via a Metropolis-Hastings algorithm. The proposed model provides robustness to mis-specification of structural assumptions and improved stability if assumptions are wrong while maintaining coherence and interpretability.

The article proceeds as follows. In Section 2 we motivate our method and detail the proposed hierarchical model. We describe a Bayesian estimation algorithm in Section 3 and demonstrate properties of the proposed method via a simulation study in Section 4. The flexibility of the proposed method is shown in two examples in Section 5. In the first example, we demonstrate the usefulness of inference under the proposed model in speech recognition. In the second example, we perform inference on a chemical exposure data set where understanding heterogeneity across socio-demographic groups is of key interest. We conclude with a discussion in Section 6.

2 Methodology

In this section we introduce the Shrinkage Within and Across Groups (SWAG) covariance model, a hierarchical model developed for simultaneous covariance estimation for multi-group, matrix-variate data. We are particularly motivated by improving the overall accuracy of group-specific estimates of population covariances when the true covariance structures are unknown and group-specific sample sizes are small relative to the number of features. The proposed model adaptively allows for flexible shrinkage either across groups, within a group to a Kronecker structure, or an additive combination of the two. The SWAG model is constructed from semi-conjugate priors to allow for straightforward Bayesian estimation and interpretable parameters. The section proceeds by introducing motivation in Sections 2.1 and 2.2, presenting the proposed hierarchical covariance model in Section 2.3, and elaborating on parameter interpretation in Section 2.4.

2.1 Partial-pooling shrinkage for multi-group data

As detailed in the Introduction, a common method used to improve a population’s covariance estimate is linear shrinkage from the population’s sample covariance matrix towards some covariance term which can be estimated with greater precision. One such method for multi-group multivariate data is partial pooling, as detailed in Greene and Rayens (1989, GR). In particular, for population , let be an i.i.d. random sample of -dimensional vectors from a mean-zero normal population with unknown non-singular covariance matrix ,

GR use mutually independent inverse-Wishart priors for each population covariance,

for , parameterized such that .

The Bayes estimator for the covariance of population under squared error loss partially-pools each group’s sample covariance,

| (1) |

where and is the sample covariance matrix for population . GR use plug-in estimates for the pooled covariance and degrees of freedom which are obtained in an empirical Bayesian manner.

This partially-pooled estimator linearly shrinks a population’s sample covariance matrix towards a pooled covariance by a weight that depends on the degrees of freedom and the group-specific sample size . In this way, the degrees of freedom parameter determines the amount of shrinkage towards the pooled covariance. In particular, if the degrees of freedom is large relative to the sample size , the covariance estimate is strongly shrunk towards the pooled value. In populations where the group-specific sample size is large, or if the degrees of freedom estimate is comparatively small, more weight is placed on the sample covariance matrix.

2.2 Kronecker shrinkage for matrix-variate data

For a matrix-variate population, the accuracy of a covariance estimate may be improved via linear shrinkage towards a population-specific Kronecker structure. Let be an i.i.d. sample of random matrices, each of dimension , from a mean-zero normal population with non-singular covariance matrix where ,

Even if and are each relatively small, obtaining a statistically stable estimate of the unstructured -dimensional covariance may require a prohibitively large sample size. As a result, shrinkage towards a parsimonious Kronecker structured covariance may be used, where “” is the Kronecker product, is a “row” covariance matrix, and is a “column” covariance matrix. A linear shrinkage estimator that shrinks a population’s sample covariance towards a population-specific Kronecker separable covariance may be obtained from the following prior,

parameterized so that . Here, the Bayes estimator of the covariance under squared error loss is

| (2) |

where . An empirical Bayesian estimation approach based on shrinkage towards a Kronecker structure is presented in Hoff et al. (2022). In context to that article, here, we will take a fully Bayesian approach.

The estimator given in Equation 2 linearly shrinks the unstructured sample covariance towards a Kronecker structured covariance by the weight that depends on the sample size and the estimated degrees of freedom. As with the partially-pooled estimator, this estimator is strongly shrunk towards the Kronecker structure when the degrees of freedom is large relative to sample size. If the degrees of freedom is small, or the sample size is large, more weight is placed on the sample covariance.

2.3 Flexible shrinkage for multi-group matrix-variate data

For each group , let be an i.i.d. sample of random matrices, each of dimension , from a mean-zero normal population with non-singular covariance , that is,

| (3) |

As it is often unclear which of the approaches presented is most appropriate in the presence of multi-group matrix-variate data of this type, we propose an approach that combines the two methods of covariance shrinkage discussed. In particular, we propose the Shrinkage Within and Across Groups (SWAG) hierarchical prior distribution which linearly combines an estimate shrunk towards a pooled covariance and an estimate shrunk towards a group-specific Kronecker structure by a weight that is estimated from the data.

Specifically, the SWAG prior utilizes an over-parameterized representation of each group’s covariance. That is, for population ,

| (4) |

where each is shrunk towards a common covariance, and each is shrunk towards a group specific Kronecker covariance. Each covariance is shrunk across groups towards a common covariance matrix using the prior distribution

| (5) |

parameterized such that . When is estimated from data across all groups, this term is interpreted as a pooled covariance matrix. As we are interested in obtaining a covariance matrix estimate based on matrix-variate data, each term is shrunk towards a group-specific Kronecker structured covariance,

| (6) |

where . Here, as before, is a row covariance matrix from population and is the corresponding column covariance matrix. Furthermore, to more clearly separate these two notions of shrinkage (within population or across populations), a Wishart prior on the across-population covariance allows for flexible shrinkage of this pooled term towards an across-group Kronecker covariance:

| (7) |

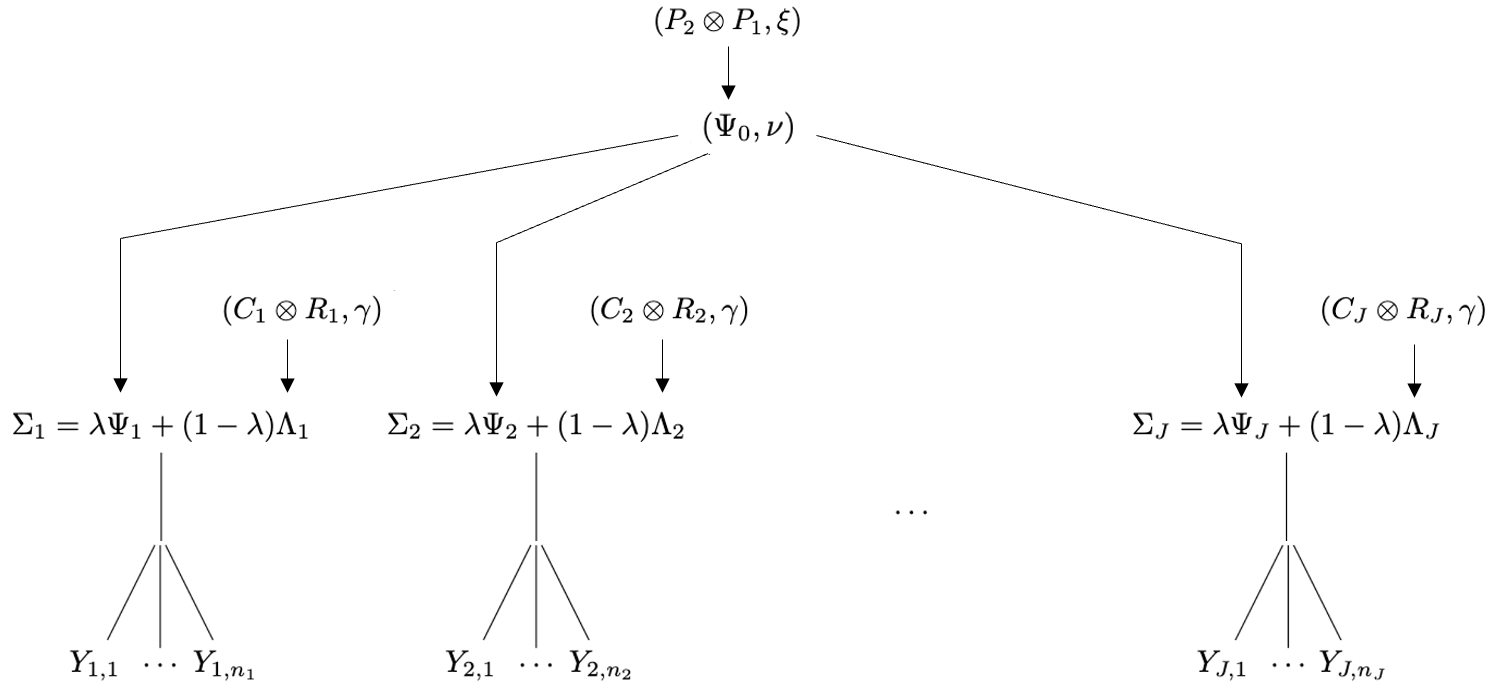

parameterized such that where and . In this way, the weight is interpreted as partially controlling the amount of shrinkage towards homogeneity versus towards heterogeneity of covariances across groups. A visualization of the proposed SWAG hierarchy is given in Figure 1. In summary, the SWAG model combines a standard hierarchical model on the across-group shrunk covariances with Bayesian shrinkage towards a separable structure on the within-group shrunk covariances and the pooled covariance .

We note that the SWAG model is primarily motivated by the need to obtain more accurate group-specific covariance estimates, so, while there is redundancy in this parameterization, the group-specific covariances are identifiable. As a result, this over-parameterization will not affect inference on estimation of group-level covariances, estimation of mean effects, imputation of missing data, or response prediction.

2.4 Interpretation of Parameters

In this section, we highlight properties of the proposed SWAG covariance priors under the normal sampling model given in Equation 3. A priori, regardless of the specified sampling model, the marginal expectation under the SWAG prior is a weighted sum of a pooled covariance and the heterogeneous Kronecker separable covariance:

| (8) |

for , where the weight quantifies the prior weight on each structure. As a result, the SWAG prior presents a flexible approach to combine shrinkage across groups towards a pooled value and shrinkage within groups towards a separable structure.

The role of the weight is further elucidated at the extremes of its sample space. Given , the SWAG model reduces to a Bayesian analogue of the partially-pooled estimators given in Greene and Rayens (1989), as given in Equation 1. At the weight’s opposite extreme, when , the SWAG model is equivalent to the Kronecker structure shrinkage model presented in Section 2.2 applied separately to each group. To alleviate some of the potential ambiguity in interpretation of , we further allow to be flexibly shrunk to a Kronecker structure. In this way, represents hierarchical shrinkage across populations towards a pooled covariance and represents within-population shrinkage.

Moreover, under the SWAG prior, the prior marginal expected value of a population’s covariance is a weighted sum of a pooled covariance and a population-specific separable covariance (Equation 8). Under normality, as the degrees of freedom and increase, the marginal sampling model of a random matrix converges to a normal distribution with this prior expectation as the covariance. That is, when the degrees of freedom parameters and are large, the unstructured covariances and are each strongly shrunk towards their respective prior expected value, and, therefore, the population covariances are approximately represented by the weighted sum of the pooled covariance and population-specific Kronecker structure.

3 Parameter Estimation

3.1 Latent Variable Representation

The SWAG model has a latent variable representation that allows for straightforward Bayesian inference. Specifically, consider the following representation of the proposed SWAG model:

| (9) | ||||

independently for each population where the priors on each and are as given in Equations 5 and 6. That is, each matrix is represented as a weighted sum of one factor () which partially pools covariances across populations and another factor () which flexibly shrinks the population covariance towards a group-specific Kronecker structure. Marginal with respect to the factors, this latent variable representation is equivalent to the sampling model proposed in the SWAG model,

Conditioning on the factor results in closed form full conditionals of the covariance parameters of interest, as detailed in subsequent subsections.

3.2 Posterior Approximation

In this section, we detail a Metropolis-Hastings algorithm for parameter estimation based on the latent variable representation of the SWAG model. Label , , , , , and . Then, Bayesian inference is based on the joint posterior distribution, with density

and a Monte Carlo approximation to this posterior distribution is available via a MetropolisHastings algorithm. Based on the latent variable representation presented in Equations 9, nearly all of the parameters in the SWAG model maintain semi-conjugacy leading to a straightforward Metropolis-Hastings algorithm which constructs a Markov chain in

Such Bayesian inference provides estimates and uncertainty quantification for arbitrary functions of the parameters. While we focus on the mean-zero case, the algorithm presented may be trivially extended to include estimation of mean parameters.

For a full Bayesian analysis, priors must be specified for all unknown parameters. For simplicity, a straightforward prior may be used to describe prior expectations of behavior of . On the degrees of freedom parameters and , negative binomial distributions with the appropriate support may be used, that is, , parameterized by size , success probability , and lower bound . Semi-conjugate priors on the remaining covariance parameters are proposed to facilitate computation,

A discussion of hyperparameter specification is provided in Section 3.3.

A Metropolis-Hastings sampler proceeds by iteratively generating new sets of model parameters based on their full conditional distributions. When iterated until convergence, this procedure will generate a Markov chain that approximates the joint posterior distribution . The sampling steps are now detailed.

3.2.1 Sampling of population model parameters

The full conditionals of sampling model covariances and factors, as well as the key shrinkage controlling parameters are discussed in this subsection. To reduce dependence along the Markov chain, we propose to sample the weight and the latent factor from their joint full conditional distribution, , where, to streamline notation, we let denote the set containing all variables in except for . A sample from must first be obtained where

As the full conditional distribution of is not available in closed form, a sample of may be obtained from a Metropolis step that proceeds by first obtaining a proposed value from a reflecting random walk around the previous value of in the Markov chain (Hoff, 2009a). That is, an initial value is sampled from , and it is reflected across the approporiate bound to retain the correct support, :

Then, the proposal is accepted as an updated value for with probability The full conditional of each latent factor for population is independently where

Again, to reduce dependence along the Markov chain, we propose to sample the degrees of freedom and covariances as well as and from their joint full conditional distribution. In particular, the joint full conditional of is where may be sampled from a reflecting random walk Metropolis step. In this case, a proposal may be obtained from a reflecting random walk based on the previous iteration’s value of , that is, sample an initial value from and utilize the following reassignment schema to ensure the sample has the correct support:

The proposal is accepted as an updated value for with probability

where . Then, sample each from its full conditional distribution,

for each . Samples from the joint full conditional distribution of , are obtained similarly. A proposal sample is obtained from a reflecting random walk based on an initial value drawn from and accepted with probability

where , and the full conditional of each is independently where .

In addition to facilitating computation, these full conditionals contribute to the interpretation of parameters. In particular, for population , the full conditional means of and resemble shrinkage estimators towards a pooled covariance and a Kronecker structured covariance, respectively. Specifically, the full conditional density of the across-group shrunk covariance , given all other model parameters and the observed data matrices , is inverse-Wishart with mean

| (10) |

where . That is, the prior on shrinks the sample covariance of the latent factor towards the pooled covariance by a shrinkage factor determined by the degrees of freedom and the within-group sample size . Similarly, the full conditional density of the within-group shrunk is inverse-Wishart with mean

| (11) |

where and . In this case, the prior on shrinks the sample covariance of the data residual towards a separable covariance by a weight which depends on the degrees of freedom and .

3.2.2 Full conditionals of Kronecker shrinkage parameters

The unstructured covariances are each shrunk towards a population-specific Kronecker structured covariance. The derivations of the full conditionals of these Kronecker covariances make use of a few Kronecker product properties, namely, and for of dimension and of dimension . Then, it is straightforward to derive the full conditional of each population ’s row covariance,

for from and column covariance

for from .

3.2.3 Full conditionals of pooled shrinkage parameters

The unstructured covariances are shrunk across-groups towards a pooled unstructured covariance. The full conditional of the pooled covariance is The final level of the hierarchy allows for shrinkage of this pooled unstructured covariance towards a pooled Kronecker structured covariance. The full conditional for the pooled row covariance is

for from , and the full conditional for the pooled column covariance is

for from . The corresponding degrees of freedom term may be sampled from a Metropolis step, similar to . Specifically, a proposal sample may be obtained from a reflecting random walk based on an initial value drawn from . Then, is accepted as an updated value for with probability

3.2.4 A note on computational expense

The computational complexity of the proposed Metropolis-Hastings algorithm is at least . However, while Bayesian computation of the SWAG model may appear cumbersome, we note that many of the computationally expensive steps may be run in parallel across group. Specifically, sampling the random effects , the covariance terms and , and the Kronecker covariance terms and may each be computed in parallel across the groups. In this way, the proposed algorithm may scale nicely with number of groups, depending on computational resources.

3.3 Hyperparameter specification

In the absence of meaningful external or prior information, weakly informative or non-informative priors on all unknown variables can be considered. On the weight , the prior hyperparameters may be selected in a way that weakly encourages favoring one of the types of shrinkage, within or across groups, by setting .

Specifying weakly informative hyperparameters for the degrees of freedom priors requires slightly more scrutiny as the impact of and on the Wishart prior distributions will depend on, among other things, covariance dimension . In a Wishart distribution, the degrees of freedom parameter controls the concentration of the distribution around the prior mean. A value that is large relative to the covariance dimension can correspond to considerable concentration, and a value near the lower bound corresponds to limited concentration. As such, we suggest using a weakly informative prior for a degree of freedom by specifying hyperparameters that allow for nontrivial prior mass on a range from to values large relative to . In practice, we found it worked well to set hyperparameters such that a large majority of the prior mass is placed on values in the range . The size parameter may be set such that the degrees of freedom prior mean is a value near the first quantile of this range. The prior success probability may be set such that there is a fair amount of dispersion around the mean. In analyses with moderate dimension, we use which corresponds to a degrees of freedom prior variance of 5 times the prior mean. In analyses with large dimension, we use to allow for a larger prior variance. In both cases, such a prior tends to be right skewed, whereby more prior mass is placed on small to moderate values in the parameter space while still incorporating nonnegligible prior mass on moderate to large values.

The hyperparameters on the covariance parameters, , and , require special attention due to the over-parameterization of the proposed model and the scale ambiguity property of the Kronecker product. That is, for a scalar and matrices , . To deal with these potential ambiguities, we propose standardizing the data in a pre-processing step before estimating model parameters and setting the scale hyperparameters such that the prior mean of these parameters is the identity matrix. The implications of this hyperparameter choice result in an a priori homoscedastic marginal expectation of the within-group covariances, for each . The corresponding degrees of freedom hyperparameters are taken as and to represent diffuse distributions that maintain finite first moments. For example, a Wishart prior of this type, , on corresponds to a diffuse distribution with prior expected value . Furthermore, this choice of prior suggests weak shrinkage to an isotropic covariance matrix at the lowest level of the hierarchy. Weakly shrinking to such a matrix has motivations in ridge regression and the regularization discriminant analysis literature, as in Friedman (1989).

4 Simulation Studies

We demonstrate the performance of the proposed SWAG model by comparing the accuracy of various covariance estimators obtained under four different population covariance regimes. In particular, each regime features either homogeneous (Ho) or heterogeneous (He) covariances across groups, and the covariances in each regime are either Kronecker structured (K) or not Kronecker structured (N). Our goal in this section is to generally explore results when the true group-specific covariance matrices have off-diagonal values relatively far from zero within a given group. Specifically, in unstructured regimes, each group’s true covariance is an exchangeable correlation matrix of dimension with a fixed correlation randomly generated between 0.35 and 0.9. In Kronecker structured regimes, each group’s true covariance is the Kronecker product of a exchangeable correlation matrix and a exchangeable correlation matrix. For a given regime and parameter size combination, the true covariance matrices do not vary within the simulation. Details of the within-group true covariances for each regime are contained in Contrast Table 1. While we do not necessarily expect the truth in real-world scenarios to be one of these extreme cases, this study provides insight into the behavior of the SWAG model.

| Ho | He | |

|---|---|---|

| K | ||

| N |

In a simulation study under each regime, we compare the loss of various covariance estimators under a range of dimensionality and number of groups. Specifically, we consider , , and . As we are particularly motivated by the small sample size case, we consider within-group sample sizes . For each regime, we take the MLEs of the covariances under the simplest correctly specified model as the oracle estimator. In total, this includes the standard sample MLE for each group’s covariance , the pooled sample MLE , the separable MLE for each group , and the pooled separable MLE . These oracle estimators for each group’s covariance under the four regimes considered are specified in Contrast Table 2. We note that the most accurate estimator of the He,U regime may vary depending on the problem dimension and the number of the groups due to high variance of the sample covariance estimator when sample size is small relative to number of features, as in this study.

| Ho | He | |

|---|---|---|

| K | ||

| N |

The simulation study proceeds as follows. For each permutation of regime, dimension, and number of groups, we sample 50 data sets each from a mean-zero matrix normal distribution of the appropriate dimension with population covariance as given in Table 1. For each data set, we compute the reference covariance estimates , , , and . Additionally, we run the proposed Metropolis-Hastings sampler for the SWAG model for 28,000 iterations removing the first 3,000 iterations as a burn-in period and saving every 10th iteration as a thinning mechanism. From the resulting 2,500 Monte Carlo samples, we obtain the Bayes estimate under an invariant loss, Stein’s loss, of each group’s covariance, where . Then, we compute Stein’s loss averaged across the populations where . We report the average of the 50 values to approximate frequentist risk for each scenario considered. In our analysis of results, we refer to as the loss and do not discuss group-specific Stein losses.

In general, we expect inference with the ‘oracle’ estimator to outperform that of the SWAG model in each regime considered. However, as knowledge of true structural behavior is rare in practice, the oracle estimator of one regime obtained from a correctly specified model may perform arbitrarily poorly in a different regime. In contrast, due to the flexibility of the proposed SWAG model, we expect the SWAG estimator to perform nearly as well as each regime’s oracle estimator, and outperform the other estimators considered. Specifically, the SWAG model is correctly specified for all four cases as each regime corresponds to particular limiting choices of parameters in the SWAG model. Furthermore, given that we consider problem dimension size similar to each population’s sample size, we expect the SWAG estimator to outperform the sample covariance estimators in all cases.

| Homogeneous, Kronecker | Heterogeneous, Kronecker | |||||||||

| J = 4, p = 6 | 1.36 | 6.82 | 0.85 | 1.77 | 0.31 | 1.66 | 6.82 | 2.54 | 1.77 | 1.95 |

| J = 4, p = 12 | 2.40 | 13.42 | 1.69 | 1.36 | 0.28 | 2.73 | 13.42 | 5.33 | 1.36 | 3.74 |

| J = 4, p = 24 | 3.21 | 25.77 | 3.47 | 1.81 | 0.44 | 4.38 | 25.77 | 10.76 | 1.81 | 7.42 |

| J = 10, p = 6 | 1.14 | 7.12 | 0.33 | 1.82 | 0.12 | 1.54 | 7.12 | 2.21 | 1.82 | 1.98 |

| J = 10, p = 12 | 2.32 | 13.48 | 0.65 | 1.39 | 0.12 | 2.67 | 13.48 | 4.98 | 1.39 | 4.37 |

| J = 10, p = 24 | 2.55 | 25.70 | 1.27 | 1.81 | 0.17 | 4.43 | 25.70 | 10.39 | 1.81 | 9.14 |

| Homogeneous, not Kronecker | Heterogeneous, not Kronecker | |||||||||

| J = 4, p = 6 | 1.49 | 6.82 | 0.85 | 4.81 | 2.42 | 1.36 | 6.82 | 1.96 | 6.27 | 4.53 |

| J = 4, p = 12 | 2.65 | 13.42 | 1.69 | 7.07 | 5.79 | 2.77 | 13.42 | 4.21 | 9.04 | 10.58 |

| J = 4, p = 24 | 4.63 | 25.77 | 3.47 | 22.02 | 20.27 | 5.03 | 25.77 | 8.63 | 31.70 | 31.47 |

| J = 10, p = 6 | 1.47 | 7.12 | 0.33 | 4.64 | 2.12 | 1.54 | 7.12 | 1.48 | 6.14 | 4.44 |

| J = 10, p = 12 | 2.51 | 13.48 | 0.65 | 7.20 | 5.60 | 2.63 | 13.48 | 3.14 | 9.66 | 10.97 |

| J = 10, p = 24 | 3.45 | 25.70 | 1.27 | 22.32 | 20.27 | 4.52 | 25.70 | 6.48 | 33.55 | 33.17 |

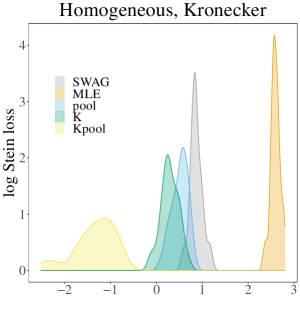

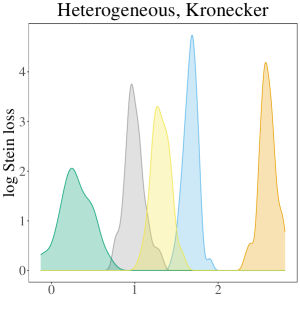

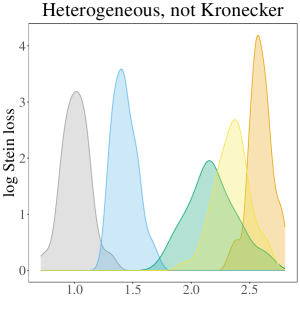

The results of the simulation study are presented in Table 3. In the table, the oracle estimator for each regime has a grey background, and the smallest two average losses are in bold font. In summary, the SWAG estimator performs best or second best in all cases considered except those in the Ho, Kr regime. In nearly all cases where the SWAG estimator has the second smallest loss, it is beat by the respective regime’s oracle estimator. Therefore, given that the oracle is unknown in practice, we conclude the SWAG model is particularly effective in accurate population covariance estimation. While the overarching conclusion of the performance of the SWAG model remains the same across most cases considered, the dynamics differ across regime. To gain a better understanding of the variation around the average losses displayed in the table, Figure 2 displays the empirical densities of the 50 values for each regime in the case where .

In the regime where population covariances are homogeneous across population and Kronecker structured, the oracle estimator has the smallest average loss in the cases explored, as expected. Interestingly, for a given , the average loss corresponding to and for is less than that for . This is a consequence of the scaling used within Stein’s loss function as, in this case, the difference between the first two terms of the loss increases by less than the increase in . In general, the pooled MLE and group-specific Kronecker MLEs perform well in this regime, which is not surprising given the variety of estimators considered and the overlap in their underlying assumptions. The SWAG estimator tends to have a similar to these estimators, and a notably smaller average loss than the sample covariance. Furthermore, in most cases considered, the empirical density of the loss corresponding to these three estimators ( and ) overlap, as seen in Figure 2.

Given population covariances homogeneous across population and not Kronecker structured, the pooled MLE and SWAG estimators have the two smallest average losses. All other estimators have comparatively large average loss. This is particularly apparent in Figure 2 where the densities of the losses corresponding to and are smaller than and distinct from the densities corresponding to the other estimators considered. In high dimension cases in particular, incorrectly assuming a Kronecker covariance in this regime results in estimators nearly as inaccurate as the sample covariances.

In the heterogeneous and Kronecker structured regime, the oracle estimator and the SWAG estimator have the two smallest average losses. Overall in this regime, these two estimators behave similarly and are notable improvements over the other estimators considered, as seen clearly in Figure 2. Again, in this regime, the average loss corresponding to the oracle estimator for is less than that for as a result of the scaling used in Stein’s loss function.

In the fourth, and perhaps most realistic, regime considered, where population covariances are heterogeneous across population and not Kronecker structured, the SWAG estimator has the smallest average loss in all cases except when , where it has the second smallest average loss. In this regime, the oracle estimator is outperformed by all other estimators considered. This is not surprising given the combination of the simplicity of the exchangeable population covariances in conjunction with large instability of the sample covariance due in part to the small sample sizes considered for each regime. Again, we see estimators based on an incorrect assumption of separability result in distinctly larger loss than the more flexible SWAG estimator (Figure 2).

In total, these results support the conclusion that the SWAG model outperforms standard alternatives given the true structure of the population covariances is unknown. The flexibility of the SWAG model is particularly useful given the large error under estimators based on incorrect assumptions. In particular, wrongly assuming a Kronecker structure can result in an error nearly as large as that obtained under the sample covariance. While the extreme regimes considered do not necessarily reflect the truth in real-world scenarios, this simulation study highlights the flexibility of the SWAG model.

4.1 Analysis of computational expense

| Ho, K | Ho, N | He, K | He, N | |

|---|---|---|---|---|

| J = 4; p = 6 | 10.58 | 12.49 | 9.89 | 14.32 |

| J = 4; p = 12 | 13.83 | 16.23 | 14.20 | 18.71 |

| J = 4; p = 24 | 24.46 | 28.36 | 25.07 | 32.59 |

| J = 10; p = 6 | 23.13 | 27.92 | 24.41 | 32.37 |

| J = 10; p = 12 | 31.12 | 36.96 | 31.63 | 42.61 |

| J = 10; p = 24 | 55.92 | 65.04 | 56.37 | 74.33 |

Table 4 displays the wall-clock run time of the Metropolis-Hastings sampler for 28,000 iterations, averaged across 50 replications. We sample all parameters as described in Section 3.2 and do not use any parallelization in the sampling of parameters. The algorithm was implemented for a given regime with code written with the R statistical programming language on the Duke University Compute Cluster on a single thread with 32 CPUs and 228 GB of RAM. The smallest parameter space considered () takes about 10-15 minutes to run, and the largest parameter space considered () takes about 50-80 minutes. In summary, doubling of the dimension from 6 to 12 results in about a 30% increase in computation time. Doubling from 12 to 24 results in about a 70% increase in computation time. For a given , an increase in the number of groups from 4 to 10 results in an increase in computation time of approximately 125%. As discussed in Section 3.2, the increase in computation time for large populations may be mitigated by parallelizing sampling across populations in Metropolis-Hastings algorithm.

5 Examples

We demonstrate the usefulness of the SWAG model for estimating covariance matrices in multi-group matrix-variate populations by analyzing two data sets. In the first example, we perform a speech recognition task on a publicly available spoken-word audio dataset. In the second example, we analyze chemical exposure data which features small group-specific sample sizes.

In general, a data matrix for a single group of dimension can be decomposed into two orthogonal matrices such that one may be used for mean estimation and the other, based on centered data, for covariance estimation. As such, while a mean estimation step could be included in the proposed SWAG Metropolis-Hastings algorithm, we will estimate the covariance matrices based on centered data matrices throughout the applications. To elaborate, first, define the -dimensional centering matrix where is a rank-1 idempotent projection matrix and is a rank- idempotent projection matrix (Christensen, 2011). Then, note that,

where is the length vector of column means of and is the residual matrix. Then, for , and where is the sample covariance matrix. and are uncorrelated, so and are uncorrelated (Mardia et al., 1979). In this way, may be estimated using centered data .

5.1 Classification of spoken-word audio data

Classification of a new observation based on a labeled training dataset consisting of matrices, each with common dimensionality , observed from each of populations is an important statistical task for speech recognition. To illustrate the utility of the SWAG covariance estimator, we analyze classification of spoken-audio samples of words “yes”, “no”, “up”, “down”, “left”, “right”, “on”, “off”,“stop”, and “go” from a dataset consisting of 1-second WAV files where each word has a sample size ranging from 1,987 to 2,103 (Warden, 2017, 2018). Audio data such as these are commonly described by mel-frequency cepstral coefficients (MFCCs) which represent the power spectrum of a sound across time increments. Accordingly, we represent each audio sample as a feature matrix of the first MFCCs across time bins (Ligges et al., 2018).

One popular classification method for generic multivariate data is quadratic discriminant analysis (QDA). QDA is based on the result that, assuming normality and equal a priori probabilities of group membership, the probability of misclassification is minimized by assigning an unlabeled matrix to group which minimizes the discriminant score function (Mardia et al., 1979),

| (12) |

where and , , are respectively the mean vector and covariance matrix for population and is the vectorization operator that stacks the columns of a matrix into a column vector. In practice, of course, these parameters are unknown and thus are estimated from a training data set. As a result, adequate performance of the classification relies on, among other things, accurate group-level covariance estimates.

We display the utility of the multiple population SWAG estimators over standard estimators by comparing correct classification rates resulting from QDA in a leave-some-out comparison. To do this, we retain a random selection of observations from each population as a testing dataset, and the remaining observations constitute the training dataset. For the discriminant analysis, as we are interested in comparing covariance estimation approaches, we use the sample mean to estimate each and a variety of covariance estimates for , all computed from the training dataset. Specifically, we obtain the SWAG covariance estimates from output from the proposed Metropolis-Hastings algorithm run for 5,100 iterations with a burn-in of 300 and a thinning mechanism of 25. We compare this with the unstructured sample covariance obtained separately for each word , labeled MLE in this section. Additionally, we consider the partially pooled empirical Bayesian covariance estimate outlined in Greene and Rayens (1989) (GR), and the core shrinkage estimate (Hoff et al., 2022) which partially shrinks each word’s sample covariance matrix towards a separable covariance (CSE). Prior to computing the various covariance estimates, the data for each word is standardized and centered. The scale is then re-introduced to the covariance estimates prior to conducting the classification analysis.

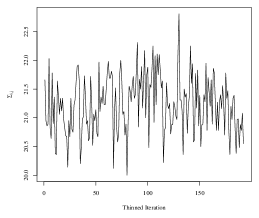

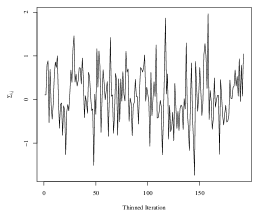

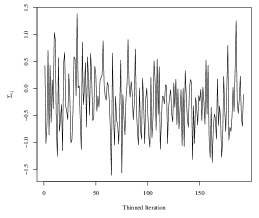

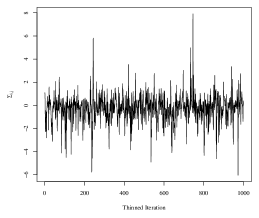

To obtain the SWAG estimate, we run the Metropolis-Hastings sampler in an implementation written in the Julia programming language. The code is run on the Duke University Compute Cluster using a single thread with 64 CPUs and 600 GB of RAM. We do not use any parallelization in the sampling of parameters. One implementation of the algorithm for 5,100 iterations took approximately 25 hours to run, including compilation of the code and saving the large MCMC output files. To assess convergence of the Markov chain, we analyze four randomly selected elements of the within-group covariances in detail. Trace plots corresponding to each of the four elements are plotted in Figure 4. The maximum lag-10 autocorrelation among the four elements was 0.10 in absolute value, and the effective sample sizes of the thinned chains corresponding to each element were and (out of 192 thinned iterations).

| SWAG | MLE | CSE | GR | |

| yes | 0.94 | 0.42 | 0.85 | 0.76 |

| no | 0.80 | 0.10 | 0.82 | 0.80 |

| up | 0.48 | 0.08 | 0.60 | 0.17 |

| down | 0.69 | 0.27 | 0.54 | 0.57 |

| left | 0.74 | 0.80 | 0.63 | 0.41 |

| right | 0.86 | 0.43 | 0.58 | 0.77 |

| on | 0.80 | 0.13 | 0.64 | 0.60 |

| off | 0.65 | 0.25 | 0.64 | 0.32 |

| stop | 0.88 | 0.80 | 0.70 | 0.63 |

| go | 0.64 | 0.05 | 0.47 | 0.47 |

| average | 0.75 | 0.33 | 0.65 | 0.55 |

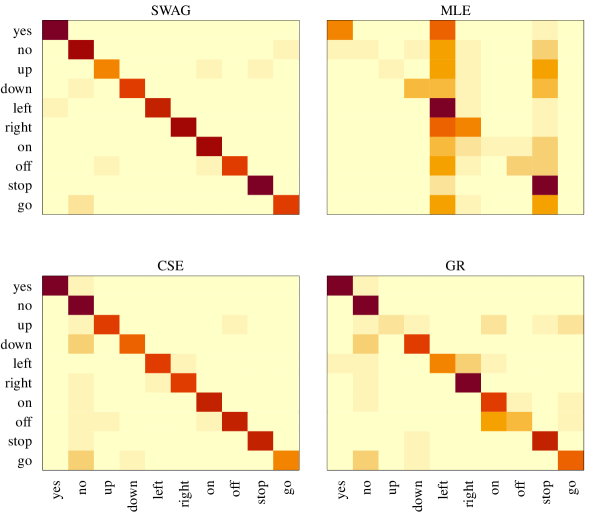

Classifications for the test observations are made using each covariance estimate, and results are summarized in confusion matrices displayed in Figure 3, with the true word classes along the rows and predicted word classes along the columns. The correct classification rates, or, the values of the diagonal elements in the confusion matrices, are contained in Table 5. In general, the SWAG classifier outperforms the other estimates. The SWAG estimate has a higher correct classification rate averaged over all words, and it features notably larger word-specific classification rates for the majority of words.

When comparing the SWAG estimate with the MLE, it features a significantly higher correct classification rate for every word except two, “left” and “stop”. Upon further inspection, however, the two large correct classification rates for the MLE are a feature of this classifier nearly always choosing one of these two words, as displayed in the confusion matrix. The correct classification rates obtained from the SWAG classifier are greater than or equal to those from the partially pooled estimate GR for every word, oftentimes by a large margin, which corresponds with a greater overall correct classification rate. Moreover, linear discriminant analysis, which uses a pooled covariance estimate for each population covariance, performs even worse with an across-word average correct classification rate of 0.27. The most convincing competing classifier is the core shrinkage estimate. It has a larger correct classification rate for for the word “up” and correctly classifies two more observations for the word “no” than the SWAG estimate. While the CSE features slightly better performance for these two words, though, the SWAG classifier performs better over all populations. Furthermore, the SWAG classifier performs much better overall than the separable MLEs obtained separately for each population, , which has an across-word average correct classification rate of 0.49. On the whole, the SWAG classifier outperforms the other classifiers considered with respect to accurate classification across all populations, and this example showcases the benefit of allowing for shrinkage both within and across populations.

5.2 Analysis of TESIE chemical exposures data

Many recent medical and environmental studies are concerned with understanding differences among socio-economic groups from repeated measurements of chemical exposures (James-Todd et al., 2017). In such an application, researchers may be interested in understanding within and across group heterogeneity. Additionally, researchers are often interested in understanding covariate effects and appropriately handling missing data. For all such tasks, accurate covariance modeling is critical.

In this section, we analyze a sample gathered in the Toddlers Exposure to SVOCS in Indoor Environments (TESIE) study (Hoffman et al., 2018). In this study, biomarkers of various semi-volatile organic compounds (SVOCs) were extracted from paired samples of urine, blood, and silicone wristbands (see, e.g., Hammel et al. (2018)). In particular, each observation is a matrix where the rows represent biomarkers from SVOCs obtained from the sources. Additionally, socio-economic covariates were collected for each individual in the study including highest education level attained and race, among others. We will analyze the covariance matrices across education level as a proxy for different socio-economic populations. Specifically, the three education levels considered are less than high school (LHS), high school degree or GED (HS), and some college or college degree (C). The sample sizes are 30, 19, and 24 for the three populations defined by education levels LHS, HS, and C, respectively.

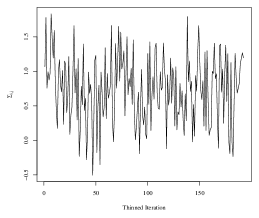

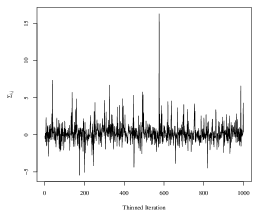

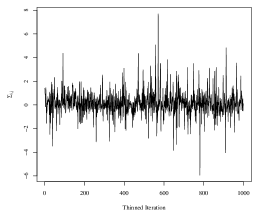

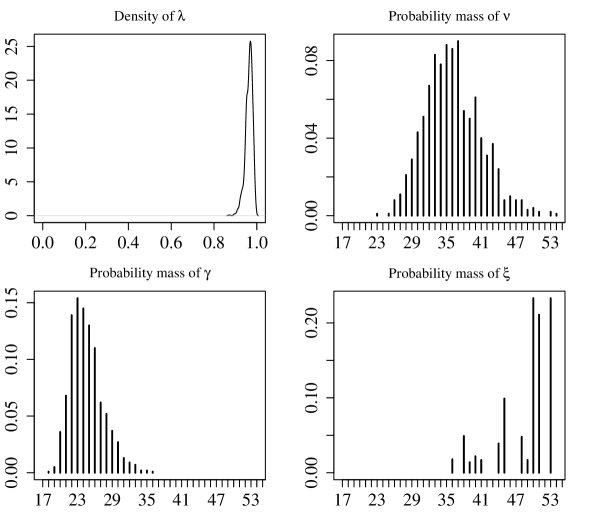

We proceed with simultaneously estimating each group’s covariance with the SWAG model. While we remove the mean effect, the SWAG model can be extended to include a regression on covariates of interest, for example, with the addition of sampling step for a regression coefficient in the proposed MCMC sampler. We run the Metropolis-Hastings sampler for 33,000 iterations, remove the first 3,000 iterations as a burn-in period, and retain every 30th sample as a thinning mechanism. The 33,000 iterations were completed in 3 minutes in an implementation of the sampler using the R statistical programming language on a personal machine with an Apple Silicon processor and 8 GB of RAM. Mixing of the Markov chain for model parameters was good. The autocorrelation for the thinned chains corresponding to each of the elements in was low, with a maximum lag-10 autocorrelation among all 360 elements of 0.11 in absolute value. Furthermore, the average effective sample size of the thinned chains was 773.73, with a range of 250.40 to 1000 (out of 1000 thinned iterations). For reference, see Figure 5 for trace plots of four randomly selected elements of .

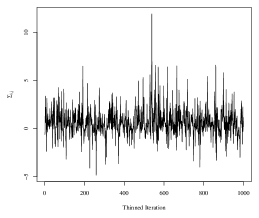

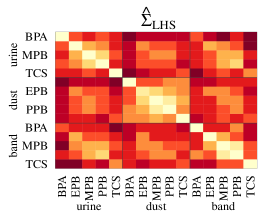

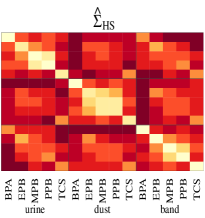

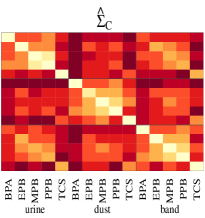

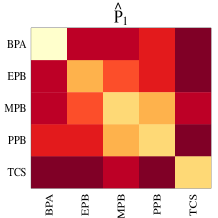

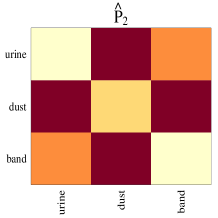

Approximations to the posteriors of shrinkage-controlling parameters and are plotted in Figure 6. The posterior density of the weight on within versus across population shrinkage, , is concentrated around the upper bound of one, indicating that most of the weight is being placed on shrinking across groups towards a pooled covariance. Additionally, the posteriors of the degrees of freedom for across-group shrinkage and are concentrated around large values indicating strong shrinkage from an unstructured covariance towards a pooled Kronecker structured covariance. In this way, interpretation of summarizing across-row and across-column covariance estimates is straightforward.

This shrinkage behavior is evident upon comparing the Bayes estimates of the group-specific covariances and to the Bayes estimates of the pooled Kronecker covariances and (Figure 7). Here, is the across-SVOC covariance estimate and is the across-source covariance estimate. The shrinkage towards a pooled separable structure is recognizable throughout the three group-specific covariance estimates. In particular, the general pattern of across-SVOC heterogeneity seen in is roughly present in each 5x5 block in the group-specific covariances, seen in the top row of Figure 7. However, a benefit of the SWAG model is the ability to allow for divergences from this separable structure, a feature also discernible in the group-specific estimates. For example, within a given population, say, the LHS population, the pattern among the covariances between BPA and the other SVOCs from samples obtained from urine differs across measurement source by more than a single factor. Moreover, this pattern differs across populations which reflects heterogeneity across populations. In total, the output of the SWAG model allows for interpretation of a row covariance and a column covariance, shared across groups, while allowing for deviations from this structure at the group level.

6 Discussion

In this article, we propose a flexible model-based covariance estimation procedure for multi-group matrix-variate data. The SWAG hierarchical model provides a coherent approach to combining two common types of shrinkage, within populations towards a Kronecker structure and across populations towards a pooled covariance. Bayesian inference of model parameters is straightforward with a Metropolis-Hastings algorithm and allows for uncertainty quantification of covariance estimates. In simulation studies, we show the flexibility of the proposed method results in covariance estimates that outperform standard estimates in a wide array of settings in terms of loss.

The flexibility of the SWAG model is developed specifically for matrix-variate data, but the model and estimation procedure can be adapted for other types of data or applications. If the data being analyzed are not matrix-variate, different structures can be utilized in place of the Kronecker product. Additionally, the shrinkage towards a pooled covariance can be replaced to represent more complex relationships across the populations such as, for example, an autoregressive relationship.

While an inverse-Wishart prior results in computationally convenient inference and has a practically useful interpretation in that the Bayes estimator of the population covariance under a normal-inverse-Wishart hierarchical model is a linear shrinkage estimator, it is potentially limiting in that, for inference on the covariance of a single group, one degree of freedom parameter controls concentration around the prior for the standard deviations and the correlation structure. As such, a promising direction for future work is to explore SWAG priors on the correlation decomposition of unstructured covariances, as in Barnard et al. (2000). This could allow for informative priors to be placed on the correlation structure, while using uninformative priors on the variable-specific standard deviations, or, vice-a-versa.

Replication codes are available at https://github.com/betsybersson/SWAG.

[Acknowledgments] The authors thank Kate Hoffman and Heather Stapleton for providing data from the Toddlers Exposure to SVOCS in Indoor Environments (TESIE) study analyzed in Section 5.2.

Supplement A: Metropolis-Hastings Algorithm for SWAG \sdescriptionThis supplement contains explicit details of a Metropolis-Hastings algorithm for parameter estimation for the SWAG model.

References

- Barnard et al. (2000) Barnard, J., Mcculloch, R., and Meng, X.-L. (2000). “Modeling covariance matrices in terms of standard deviations and correlations, with applications to shrinkage.” Statistica Sinica, 10: 1281–1311.

- Box (1949) Box, G. E. P. (1949). “A General Distribution Theory for a Class of Likelihood Criteria.” Biometrika, 36(3): 317–346.

- Brown et al. (1999) Brown, P. J., Fearn, T., and Haque, M. S. (1999). “Discrimination with many variables.” Journal of the American Statistical Association, 94(448): 1320–1329.

- Christensen (2011) Christensen, R. (2011). Plane Answers to Complex Questions. New York: Springer, fourth edition.

- Daniels (2006) Daniels, M. J. (2006). “Bayesian modeling of several covariance matrices and some results on propriety of the posterior for linear regression with correlated and/or heterogeneous errors.” Journal of Multivariate Analysis, 97: 1185–1207.

- Daniels and Kass (1999) Daniels, M. J. and Kass, R. E. (1999). “Nonconjugate Bayesian Estimation of Covariance Matrices and its Use in Hierarchical Models.” Journal of the American Statistical Association, 94(448): 1254–1263.

- Dawid (1981) Dawid, A. P. (1981). “Some matrix-variate distribution theory: notational considerations and a Bayesian application.” Biometrika, 68(1): 265–274.

- Flury (1987) Flury, B. K. (1987). “Two generalizations of the common principal component model.” Biometrika, 74(1): 59–69.

- Friedman et al. (2008) Friedman, J., Hastie, T., and Tibshirani, R. (2008). “Sparse inverse covariance estimation with the graphical lasso.” Biostatistics, 9(3): 432–441.

- Friedman (1989) Friedman, J. H. (1989). “Regularized discriminant analysis.” Journal of the American Statistical Association, 84(405): 165–175.

- Greene and Rayens (1989) Greene, T. and Rayens, W. S. (1989). “Partially pooled covariance matrix estimation in discriminant analysis.” Communications in Statistics - Theory and Methods, 18(10): 3679–3702.

- Hammel et al. (2018) Hammel, S. C., Phillips, A. L., Hoffman, K., and Stapleton, H. M. (2018). “Evaluating the Use of Silicone Wristbands To Measure Personal Exposure to Brominated Flame Retardants.” : Environ. Sci. Technol., 52: 11875–11885.

- Hoff et al. (2022) Hoff, P., Mccormack, A., and Zhang, A. R. (2022). “Core shrinkage covariance estimation for matrix-variate data.” Journal of the Royal Statistical Society, Series B.

- Hoff (2009a) Hoff, P. D. (2009a). A First Course in Bayesian Statistical Methods. New York: Springer Texts in Statistics.

- Hoff (2009b) — (2009b). “A hierarchical eigenmodel for pooled covariance estimation.” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 71(5): 971–992.

- Hoffman et al. (2018) Hoffman, K., Hammel, S. C., Phillips, A. L., Lorenzo, A. M., Chen, A., Calafat, A. M., Ye, X., Webster, T. F., and Stapleton, H. M. (2018). “Biomarkers of exposure to SVOCs in children and their demographic associations: The TESIE Study.” Environment International, 119(Cdc): 26–36.

- James-Todd et al. (2017) James-Todd, T. M., Meeker, J. D., Huang, T., Hauser, R., Seely, E. W., Ferguson, K. K., Rich-Edwards, J. W., and McElrath, T. F. (2017). “Racial and ethnic variations in phthalate metabolite concentration changes across full-term pregnancies.” Journal of Exposure Science and Environmental Epidemiology, 27(2): 160–160.

- Johnson et al. (2012) Johnson, B., Tateishi, R., and Xie, Z. (2012). “Using geographically weighted variables for image classification.” Remote Sensing Letters, 3(6): 491–499.

- Ligges et al. (2018) Ligges, U., Krey, S., Mersmann, O., and Schnackenberg, S. (2018). “tuneR: Analysis of Music and Speech.” Technical report.

- Lu and Zimmerman (2005) Lu, N. and Zimmerman, D. L. (2005). “The likelihood ratio test for a separable covariance matrix.” Statistics and Probability Letters, 73(4): 449–457.

- Mardia et al. (1979) Mardia, K., Kent, J., and Bibby, J. (1979). Multivariate Analysis. London: Academic Press, 1 edition.

- Marks and Dunn (1974) Marks, S. and Dunn, O. J. (1974). “Discriminant functions when covariance matrices are unequal.” Journal of the American Statistical Association, 69(346): 555–559.

- Rayens and Greene (1991) Rayens, W. and Greene, T. (1991). “Covariance pooling and stabilization for classification.” Computational Statistics and Data Analysis, 11(1): 17–42.

-

Warden (2017)

Warden, P. (2017).

“Speech commands: A public dataset for single-word speech

recognition.”

URL http://download.tensorflow.org/data/speech_commands_v0.01.tar.gz - Warden (2018) — (2018). “Speech commands: A dataset for limited-vocabulary speech recognition.” Technical report.

- Wu and Pourahmadi (2009) Wu, W. B. and Pourahmadi, M. (2009). “Banding sample autocovariance matrices of stationary processes.” Statistica Sinica, 19: 1755–1768.