Bayesian Disturbance Injection:

Robust Imitation Learning of Flexible Policies

Abstract

Scenarios requiring humans to choose from multiple seemingly optimal actions are commonplace, however standard imitation learning often fails to capture this behavior. Instead, an over-reliance on replicating expert actions induces inflexible and unstable policies, leading to poor generalizability in an application. To address the problem, this paper presents the first imitation learning framework that incorporates Bayesian variational inference for learning flexible non-parametric multi-action policies, while simultaneously robustifying the policies against sources of error, by introducing and optimizing disturbances to create a richer demonstration dataset. This combinatorial approach forces the policy to adapt to challenging situations, enabling stable multi-action policies to be learned efficiently. The effectiveness of our proposed method is evaluated through simulations and real-robot experiments for a table-sweep task using the UR3 6-DOF robotic arm. Results show that, through improved flexibility and robustness, the learning performance and control safety are better than comparison methods.

I Introduction

Imitation learning provides an attractive means for programming robots to perform complex high-level tasks, by enabling robots to learn skills via observation of an expert demonstrator [1, 2, 3, 4]. However, two significant limitations of this approach severely restrict applicability to real-world scenarios, these being: 1. learning policies from a human expert demonstrator is often very complex, as humans often choose between multiple optimal actions, and 2. learned policies can be vulnerable to compounding errors during online operation (i.e., there is a lack of robustness). In the former, uncertainties and probabilistic behavior of the human expert (e.g., multiple optimal actions for a task), increases the complexity of learning policies, requiring flexible policy models. In the latter case, compounding errors from environmental variations (e.g., task starting position), may induce significant differences between the expert’s training distribution and the applied policy. This drifting issue is commonly referred to as covariate shift [5], and requires robust policy models.

To address both these limitations, this paper presents a novel Bayesian imitation learning framework, that learns a probabilistic policy model capable of being both flexible to variations in expert demonstrations, and robust to sources of error in policy application. This is referred to as Bayesian Disturbance Injection (BDI).

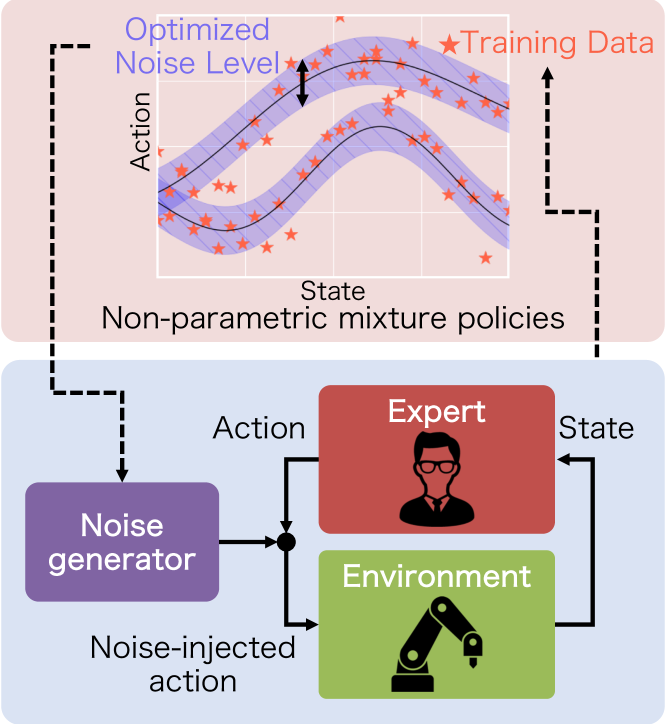

Specifically, this paper establishes robust multi-modal policy learning, with flexible regression models [6] as probabilistic non-parametric mixture policies (Fig. 1, top). To induce robustness, noise is injected into the expert’s actions (Fig. 1, bottom) to generate a richer set of demonstrations. Inference of the policy, and optimization of injection noise is performed simultaneously by variational Bayesian learning, thereby minimising covariate shift between the expert demonstrations and learned policy.

To evaluate the effectiveness of the proposed framework, an implementation of Multi-modal Gaussian Process BDI (MGP-BDI) is derived, and experiments in learning probabilistic behavior from a table-sweeping task using the UR3 6-DOF robotic arm is performed. Results show improved flexibility and robustness, with increased learning performance and control safety relative to comparison methods.

II Related Work

II-A Flexibility

A key objective of imitation learning is to ensure that models can capture the variation and stochasticity inherent in human motion. Classical dynamical frameworks for learning trajectories from demonstrations include Dynamic Movement Primitives (DMPs), which can generalize the learned trajectories to new situations (i.e., goal location or speed). However, this generalization depends on the heuristic, thus unsuitable for learning state-dependent feedback policies [7, 8, 9].

Gaussian Mixture Regression (GMR) is an non-parametric, intuitive means to learn trajectories or policies from demonstrators in the state-action-space. In this, Gaussian Mixture Modelling (GMM) [10] is used as a basis function to capture non-linearities during learning, and has been utilized in imitation learning that deals with human demonstrations [11]. However, GMR requires to engineer features (e.g., Gaussian initial conditions) by hand to deal with high-dimensional systems. [12].

As an alternative, Gaussian process regression (GPR) deals with implicit (high-dimensional) feature spaces with kernel functions. It thus can directly deal with high-dimensional observations without explicitly learning in this high-dimensional space [13]. In particular, Overlapping Mixtures of Gaussian Processes (OMGP) [14] learns a multi-modal distribution by overlapping multiple GPs, and has been employed as a policy model with multiple optimal actions on flexible task learning of robotic policies [15]. To further reduce a priori tuning, Infinite Overlapping Mixtures of Gaussian Processes (IOMGP) [6] requires only an upper bound of the number of GPs to be estimated. As such, IOMGP is an intuitive means of learning complex multi-modal policies from unlabeled human demonstration data and is employed in this paper.

II-B Robustness

While flexibility is key to capturing demonstrator motion, a major issue limiting application of learned policies is the problem of covariate shift. Specifically, environment variations (e.g., manipulator starting position) induces differences between the policy distribution as learned by the manipulator and the actual task distribution during application.

A more general approach to minimizing the covariate shift in imitation learning, is Dataset Aggregation (DAgger) [16], whereby when the robot moves to a state not included in the training data, the expert teaches the optimal recovery. However, this approach has limited applicability in practice due to the risk of running a poorly learned robot and the tediousness to have human experts continue to teach the robot the optimal actions.

An intuitive approach to robustifying learned policies against sources of error, without needing to a priori specify task-relevant learning parameters, is to exploit phenomenon similar to persistence excitation [17]. In this, noise is injected into the expert’s demonstrated actions, and the recovery behavior of the expert is learned from this perturbation. In an imitation learning context, Disturbances for Augmenting Robot Trajectories (DART) [18] exploits this phenomenon for learning a deterministic policy model with a single optimal action. Additionally, DART is well suited to creating a richer dataset, by concurrently determining the optimal noise level to be injected into the demonstrated actions during policy learning.

However, the algorithm proposed to achieve DART [18] has a major limitation. It assumes a uni-model deterministic policy, which is unsuitable for many real-world imitation learning tasks, that may consist of multiple optimal actions.

In contrast to that approach, this paper explores a novel method that can eliminate such a severe limitation by incorporating a prior distribution on policy then optimizing both the policy and the injection noise parameters simultaneously via variational Bayesian learning. As such, this novel imitation learning framework improves robustness while maintaining flexibility.

III Preliminaries

III-A Imitation Learning from Expert’s Demonstration

The objective of imitation learning is to learn a control policy by imitating the action from the expert’s demonstration data. A dynamics model is denoted as Markovian with a state , an action and a state transition distribution . A policy decides an action from a state. A trajectory which is a sequence of state-action pairs of steps. The trajectory distribution is indicated as:

| (1) |

A key aspect of imitation learning is to replicate the expert’s behavior, and as such the function which computes the similarity of two policies using trajectories is defined as:

| (2) |

A learned policy is obtained by solving the following optimization problem using a trajectory collected by an expert’s policy :

| (3) |

In imitation learning, a learned policy may suffer from the problem of error compounding, caused by covariate shift. This is defined as the distributional difference between the trajectories in data collection, and those in testing:

| (4) |

III-B Robust Imitation Learning by Injecting Noise into Expert

To learn policies that are robust to covariate shift, DART has previously been proposed [18] for imitation learning problems. In this, expert demonstrations are injected with noise to produce a richer set of demonstrated actions. The level of injection noise is optimized iteratively to reduce covariate shift during data collection.

In this, it is assumed that the injection noise is sampled from a Gaussian distribution as , where is the number of optimizations. The injection noise is added to the expert’s action .

The noise injected expert’s trajectory distribution is denoted as and the trajectory distribution with learned policy as . To reduce covariate shift, DART introduces upper bound of covariate shift by Pinsker’s inequality as:

| (5) |

where, is Kullback-Leibler divergence. However, the upper bound is intractable since the learned policy’s trajectory distribution is unknown. Therefore, DART solves the upper bound using the noise injected expert’s trajectory distribution instead of the learned policy’s trajectory distribution. Optimal injection noise distribution is optimized as:

| (6) |

The optimized injection noise distribution can be interpreted as the likelihood of the learned policy.

The learned policy used in the optimization of is obtained by following:

| (7) |

IV Proposed Method

In this section, a novel Bayesian imitation learning framework is proposed (Fig. 1) to learn a multi-modal policy via expert demonstrations with noise injection. Non-parametric mixture model is utilized as a policy prior and incorporates the injection noise distribution as a likelihood to a policy model. An imitation learning method is derived, which learns a multi-modal policy and an injection noise distribution, by variational Bayesian learning. Note, IOMGP [6] is employed as a policy prior in this paper. For simplicity but without loss of generality, this section focuses on one-dimensional action.

IV-A Policy Model

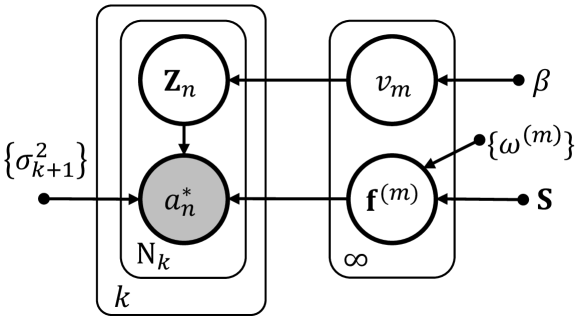

To learn a multi-modal policy, the policy prior is considered as the product of infinite GPs, inspired by IOMGP. Fig. 2 shows a policy model in which expert’s actions are estimated by where , is a size of the dataset that collected at -th iteration. The latent function is the output of -th GP given state . To allocate -th expert’s action to -th latent function , indicator matrix is defined. To estimate the optimal number of GPs, a random variable quantifies the uncertainty assigned to . In addition, the set of injection noise parameters, , indicate the distribution of the expert’s action in the distance from the latent function .

The set of latent functions is denoted as and a GP prior is given by :

| (8) |

where is the -th kernel gram matrix with the kernel function and a kernel hyperparameter . Let be the set of hyperparameters of infinite number of kernel functions.

Then the Stick Breaking Process (SBP) [19] is used as a prior of , which can be interpreted as an infinite mixture model as follows:

| (9) | ||||

| (10) |

Note that the implementation of variational Bayesian learning cannot deal with infinite-dimensional vectors, so the component is replaced with a predefined upper bound of the mixtures . is a random variable indicating the probability that the data corresponds to the -th GP. Thus, it is possible to estimate the optimal number of GPs with a high probability of allocation starting from an infinite number of GPs. is a hyperparameter of SBP denoting the level of concentration of the data in the cluster.

The above policy model differs from the IOMGP model for regression [6]; our model employs the set of injection noise parameters because of the injection noise distribution is updated at each iteration. The next injection noise optimized by (III-B) deals with the training data collected in the current iteration, while the policy inference (7) deals with all the training data gathered up to the current iteration. Thus, the heteroscedastic Gaussian noise is defined as:

| (11) |

showing the association between the injection noise parameters and the training data collected for each iteration. In this, is a vector whose size is and all components are one. In addition, the value of the likelihood (III-B) does not change even if the mean and the input are replaced, the likelihood for the is derived as :

| (12) |

This formulation is described in a graphical model that defines the relationship between the variables as shown in Fig. 2, and the joint distribution of the model as :

| (13) |

where represents a set of hyperparameters.

IV-B Optimization of Policies and Injection Noise via Variational Bayesian Learning

Bayesian learning is a framework that estimates the posterior distributions of the policies and their predictive distributions for new input data rather than point estimates the policy parameters. To obtain the posterior and predictive distributions, the marginal likelihood is calculated as :

| (14) |

However, it is intractable to calculate the log marginal likelihood of (IV-B) analytically. Therefore, the variational lower bound is derived as the objective function of variational learning. The true posterior distribution is approximated by the variational posterior distribution, which maximizes the variational lower bound. As a common fashion of variational inference, the variational posterior distribution is assumed to be factorized among all latent variables (known as the mean-field approximation [20]) as follows:

| (15) |

The variational lower bound is derived from this assumption by applying the Jensen inequality to the log marginal likelihood, as:

| (16) |

In addition, the optimization formulation is derived using the Expectation-Maximization (EM) algorithm. The variational posterior distribution is optimized with a fixed hyperparameter in E-step, and the hyperparameter is optimized with a fixed variational posterior distribution in M-step with:

| (17) |

See the SectionVII-A,B for details of update laws and lower bound of marginal likelihood. And a summary of the proposed method is shown in Algorithm 1.

IV-C Predictive Distribution

Using the hyper-parameter and the variational posterior distribution , optimized by variational Bayesian learning, the predictive distribution of the -th action and variance on an current state can be computed analytically as (see [13]).

V Evaluation

In this section, the proposed approach is evaluated through simulation and experiments on a 6-DOF robotic manipulator. Specifically, to evaluate the robustness and flexibility of the proposed approach, the following key questions are investigated: i) Does the flexibility induced by MGP-BDI allow for capturing policies with multiple optimal behaviors? ii) Does inducing model robustness via noise injection reduce covariate shift error? iii) Is the optimized injection noise of significantly low variance, allowing for safe perturbations in real-world demonstrations?

To evaluate the proposed method (MGP-BDI), comparisons are made against baseline policy learning methods: 1. Supervised imitation learning (i.e., behavior cloning (BC)[21]) using standard unimodal GPs (UGP-BC) [13], 2. BDI using standard unimodal GPs (UGP-BDI), 3. BC using infinite overlapping mixtures of Gaussian processes (MGP-BC). Specifically, these three are chosen since they represent the state-of-the-art in either flexible or robustness imitation learning. In all experiments, the maximum number of mixture GPs is fixed at ().

V-A Simulation

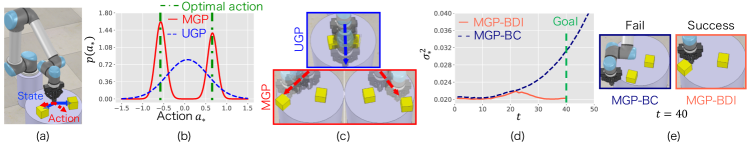

An initial experiment is presented to characterise the flexibility and robustness of the proposed approach, for learning and completing tasks in an environment. Specifically, a standard manipulator learning task (table-sweeping) involving multi-object handling is performed in the V-REP [22] environment, as shown in Fig. 3-(a).

In this experiment, learned policies are evaluated in terms of ability to flexibly learn tasks with multiple optimal actions (e.g., the order in which to sweep the objects from the table), and well as robustness to environmental covariance shift inducing disturbances (e.g., friction between the objects and table inducing variations of object movement).

V-A1 Setup

Initially, two boxes and the robot arm are placed at fixed coordinates on a table. The state of the system is defined as the relative coordinate between the robot arm and two boxes (), an action is defined as the velocity of the robot arm in the x and y axis. Demonstrations are generated using a custom PID controller to simulate the human expert, which sweeps the boxes away from the centre. Two demonstrations from these initial conditions are then performed, capturing both variations in the order of which the objects are swept from the table. For each demonstration, the performance is given by the total number of boxes swept off the table (min : 0, max: 2). If a demonstration is unsuccessful (i.e., both boxes were not swept off), the demonstration is discarded, and repeated. Given two successful demonstrations, the data is used to optimize the policy and noise parameters until (17) converges (as seen in Fig. 1). This experiment is repeated for iterations, appending the successful demonstrations to the training dataset, and continuously updating the policy and noise estimates until convergence of the noise parameters. In the following experiments, results in convergence. During the test stage, only the robot arm is randomly placed at .

A second experiment is also presented, in a more complex task. To evaluate the proposed method’s scalability, three boxes are placed in the environment, in random coordinates one by one within trisected areas on the table. The state is given by the relative coordinate between the robot arm and three boxes (), and the performance was calculated as the total number of boxes swept from the table (min : 0, max : 3). Due to the increased task complexity, the maximum number of iterations for updating the policy and noise estimates is .

V-A2 Result

The results for these experiments are seen in Fig. 3. In terms of flexibility of learning, Fig. 3-(b) shows that the unimodal policy learner fails to capture the fact there are multiple optimal actions in the environment, instead learning a mean-centred policy that fails to reach either object (as seen in Fig. 3-(c). In comparison, the proposed multi-modal approach correctly learns the multi-modal distribution, and outputs actions to sweep the two boxes.

In terms of robustness, it is seen in Fig. 3-(d) that even if a non-parametric flexible policy learner is used (MGP-BC), the variance in the learned action for this policy exponentially increases after the -th time-step, and task failure occurs as seen in Fig. 3-(e). This time-step indicates where the robot interacted with the object in the environment, and a sudden increase in uncertainty of the box position occurred. This is a possibility due to the dynamic behavior of the box being different that encountered between this demonstration and the training set, resulting in the model being unable to recover. In comparison, while the proposed noise-injected method also experiments some uncertainty at this interaction, it recovers and retains a near constant certainty throughout the remainder of the task application. In the two-box experiment, MGP-BDI and MGP-BC respectively earned performances and of the expert demonstrator, and in the three-box experiment this remained similar at and . Other models in which unimodal policies are learned (UGP-BDI and UGP-BC) have been verified to yield about 0 performances.

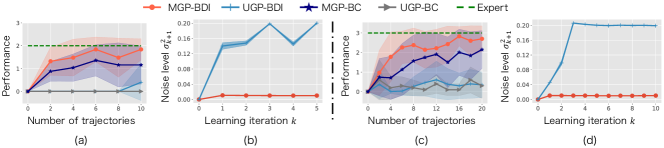

To evaluate the learning performance, the performance and optimized noise variance is evaluated. In the two-box experiment, Fig. 4-(a), it is seen that the unimodal policy methods (UGP-BC, and UGP-BDI), both fail to learn the multi-modal task, and retain a near zero performance throughout learning. In comparison, the multi-modal policy methods (MGP-BC, and MGP-BDI), both increase learning performance as the number of iterations increase, and MGP-BDI outperforms consistently. In addition to this, it is seen in Fig. 4-(b) when the expert has probabilistic behavior (i.e., multiple targets), the standard UGP-BDI learns an excessive (and potentially unsafe) amount of noise. This is due to the fact that because UGP-BDI assumes a deterministic policy model, a large modeling approximation error is induced, resulting in uncertainty in task performance, and overestimation in the noise variance when attempting to self-correct.

In contrast, the proposed method retains a very low variance in injected noise, by learning policy and noise optimization in a Bayesian framework allowing for confidence in the model to increase gradually as more training data sets are collected. This corresponds to a more stable, and safer demonstration. A similar set of results is seen in the more complex three-box experiment Fig. 4-(c-d).

V-B Real robot experiment with a human expert

V-B1 Setup

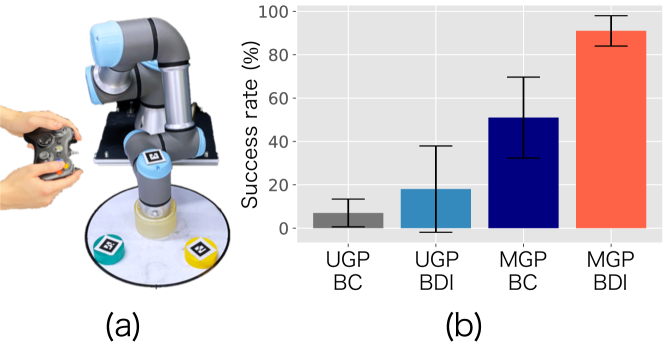

The proposed method is evaluated in a real-world robotics task, where the demonstrations of sweeping office supplies (e.g., rubber tape) from a table in the correct order are provided by a human expert, as shown in Fig. 5-(a).

Prior to the start of a demonstration, the two objects are placed at random positions in the upper semicircle of the table. Following the same procedure as outlined in Sec.V-A1, the human expert performs two demonstrations in which the objects are swept off the table in random order, and the method optimizes the measure and the noise parameter until (17) is converged. This process is repeated times (10 trajectories). To measure the state of the system (the x,y position of the objects) AR markers are attached to each object (robot arm and rubber tape) and tracked through a RGB-D camera (RealSense D435). As in the previous simulation experiment, the task action is the end-effector velocity. To validate the performance of MGP-BDI in reducing covariate shift, the initial positions of office supplies were arbitrarily placed in a circle on the table to induce scenarios this being a challenging task due to the covariate shift. The learning models’ performance is evaluated according to the success of the test episode. Success is determined by the presence of office supplies on the table at the end of the test episode.

V-B2 Result

The results of this experiment are seen in Fig. 5-(b). From this, it is seen that the unimodal policy methods (UGP-BC, UGP-BDI) have a poor success rate of and , respectively. As such, they fail to correctly learn policies to account for multiple optimal actions in the environment, and demonstrate a lack of flexibility. In contrast, the multi-modal policy methods (MGP-BC and MGP-BDI) show improved performance ( and , respectively), however it is clear that even when incorporating flexibility, the success rate for BC is poor.

VI Conclusion

This paper presents a novel Bayesian imitation learning framework, that injects noise into an expert’s demonstration, to learn robust multi-optimal policies. This framework captures human probabilistic behavior and allows for learning reduced covariate shift policies, by collecting training data on an optimal set of states. The effectiveness of the proposed method is verified on a real robot with human demonstrations. In the future, this approach will be integrated with kernel approximation methods, or deep neural networks, to learn complex multi-action tasks from unstructured demonstrations (e.g., cooking involving cutting, mixing, and pouring).

VII Appendix

VII-A Update laws in

Update of :

| (18) | ||||

| (19) | ||||

| (20) | ||||

| (21) |

Update of :

| (22) | ||||

| (23) |

where, is the digamma function.

Update of :

| (24) | ||||

| (25) |

VII-B Lower bound of marginal likelihood:

References

- [1] A. Coates, P. Abbeel, and A. Y. Ng, “Apprenticeship learning for helicopter control,” Communications of the ACM, vol. 52, no. 7, pp. 97–105, 2009.

- [2] M. Bojarski, D. Del Testa, D. Dworakowski, B. Firner, B. Flepp, P. Goyal, L. D. Jackel, M. Monfort, U. Muller, J. Zhang, et al., “End to end learning for self-driving cars,” Neural Information Processing Systems (NIPS). Deep Learning Symposium, 2016.

- [3] T. Zhang, Z. McCarthy, O. Jow, D. Lee, X. Chen, K. Goldberg, and P. Abbeel, “Deep imitation learning for complex manipulation tasks from virtual reality teleoperation,” in International Conference on Robotics and Automation (ICRA). IEEE, 2018, pp. 1–8.

- [4] T. Osa, J. Pajarinen, G. Neumann, J. A. Bagnell, P. Abbeel, J. Peters, et al., “An algorithmic perspective on imitation learning,” Foundations and Trends in Robotics, vol. 7, no. 1-2, pp. 1–179, 2018.

- [5] S. Ross and D. Bagnell, “Efficient reductions for imitation learning,” in Proceedings of the thirteenth international conference on artificial intelligence and statistics, 2010, pp. 661–668.

- [6] J. Ross and J. Dy, “Nonparametric mixture of gaussian processes with constraints,” in International Conference on Machine Learning (ICML), 2013, pp. 1346–1354.

- [7] S. Schaal, J. Peters, J. Nakanishi, and A. Ijspeert, “Learning movement primitives,” in Robotics research. the eleventh international symposium. Springer, 2005, pp. 561–572.

- [8] A. J. Ijspeert, J. Nakanishi, H. Hoffmann, P. Pastor, and S. Schaal, “Dynamical movement primitives: learning attractor models for motor behaviors,” Neural computation, vol. 25, no. 2, pp. 328–373, 2013.

- [9] S. M. Khansari-Zadeh and A. Billard, “Learning stable nonlinear dynamical systems with gaussian mixture models,” IEEE Transactions on Robotics, vol. 27, no. 5, pp. 943–957, 2011.

- [10] S. Calinon, “A tutorial on task-parameterized movement learning and retrieval,” Intelligent service robotics, vol. 9, no. 1, pp. 1–29, 2016.

- [11] M. Kyrarini, M. A. Haseeb, D. Ristić-Durrant, and A. Gräser, “Robot learning of industrial assembly task via human demonstrations,” Autonomous Robots, vol. 43, no. 1, pp. 239–257, 2019.

- [12] Y. Huang, L. Rozo, J. Silvério, and D. G. Caldwell, “Kernelized movement primitives,” The International Journal of Robotics Research, vol. 38, no. 7, pp. 833–852, 2019.

- [13] C. E. Rasmussen, “Gaussian processes in machine learning,” in Summer School on Machine Learning. Springer, 2003, pp. 63–71.

- [14] M. Lázaro-Gredilla, S. Van Vaerenbergh, and N. D. Lawrence, “Overlapping mixtures of gaussian processes for the data association problem,” Pattern recognition, vol. 45, no. 4, pp. 1386–1395, 2012.

- [15] H. Sasaki and T. Matsubara, “Multimodal policy search using overlapping mixtures of sparse gaussian process prior,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 2433–2439.

- [16] S. Ross, G. Gordon, and D. Bagnell, “A reduction of imitation learning and structured prediction to no-regret online learning,” in Proceedings of the fourteenth international conference on artificial intelligence and statistics, 2011, pp. 627–635.

- [17] S. Sastry and M. Bodson, Adaptive control: stability, convergence and robustness. Courier Corporation, 2011.

- [18] M. Laskey, J. Lee, R. Fox, A. Dragan, and K. Goldberg, “DART: Noise injection for robust imitation learning,” in Conference on Robot Learning (CoRL), 2017, pp. 143–156.

- [19] J. Sethuraman, “A constructive definition of dirichlet priors,” Statistica sinica, pp. 639–650, 1994.

- [20] G. Parisi, Statistical field theory. Addison-Wesley, 1988.

- [21] M. Bain and C. Sammut, “A framework for behavioural cloning.” in Machine Intelligence 15, 1995, pp. 103–129.

- [22] E. Rohmer, S. P. Singh, and M. Freese, “V-REP: A versatile and scalable robot simulation framework,” in International Conference on Intelligent Robots and Systems. IEEE, 2013, pp. 1321–1326.