
Bayesian Eye Tracking

Abstract

Model-based eye tracking has been a dominant approach to eye gaze tracking because it generalizes to different subjects without requiring training data or eye gaze annotations. However, model-based eye tracking is susceptible to eye feature detection errors, in particular for eye tracking in the wild. To address this issue, we propose a Bayesian framework for model-based eye tracking. The proposed system consists of a cascade Bayesian Convolutional Neural Network (c-BCNN) that captures the probabilistic relationships between eye appearance and its landmarks, and a geometric eye model that estimates eye gaze from the eye landmarks. Given a test eye image, the Bayesian framework generates, through Bayesian inference, an eye gaze distribution without explicit landmark detection or model training; from this distribution it estimates both the most likely eye gaze and its uncertainty. Furthermore, by using Bayesian inference instead of point-based inference, our model not only generalizes better to different subjects, head poses, and environments, but is also robust to image noise and landmark detection errors. Finally, the estimated gaze uncertainty allows us to construct a cascade architecture that progressively improves gaze estimation accuracy. Compared to state-of-the-art model-based and learning-based methods, the proposed Bayesian framework demonstrates significant improvement in generalization capability across several benchmark datasets and in accuracy and robustness under challenging real-world conditions.

1 Introduction

Eye tracking aims to identify a person's focus of attention (or line of sight) in 3D space or on a 2D screen. Applications of eye tracking range from marketing, psychology, and user behavior research to gaming, human-computer interaction, and medical diagnosis.

Recent gaze estimation methods can be divided into two categories: 1) learning-based and 2) model-based. Learning-based methods [13, 16, 25, 36, 37, 14] aim to learn a mapping function from the input image to eye gaze. The most popular mapping function nowadays is the convolutional neural network and its variants. Despite their impressive performance on benchmark datasets, learning-based methods have two major issues: they do not generalize well beyond their training data, and they require a significant amount of training data with eye gaze annotations to perform well. Accurate eye gaze annotation is a tedious, expensive, and time-consuming process, and it often requires specialized equipment [32]. Model-based methods leverage a geometric model of the anatomical structure of the eye to estimate eye gaze. The idea is to first detect 2D eye or facial landmarks, then reconstruct the 3D locations of the eyeball center and pupil center from these 2D observations. Finally, 3D eye gaze is estimated as the direction connecting the eyeball center and the pupil center. Early efforts focused on designing new geometric models [1, 11, 12, 10, 15, 20, 2, 33, 35, 26, 27] to better recover 3D information from 2D observations. More recently, researchers have focused on improving the accuracy of eye/facial landmark detection [6, 18, 28, 19] with powerful deep learning tools. While training-free and generalizable, the model-based approach often cannot produce accurate eye gaze estimates because of inaccurate eye landmark detection, in particular for real-world eye tracking, unless special hardware (e.g., IR illumination) is used.

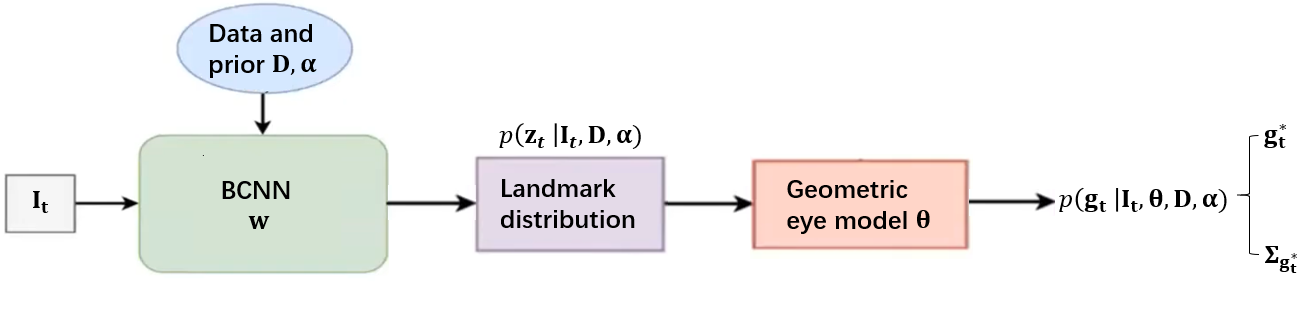

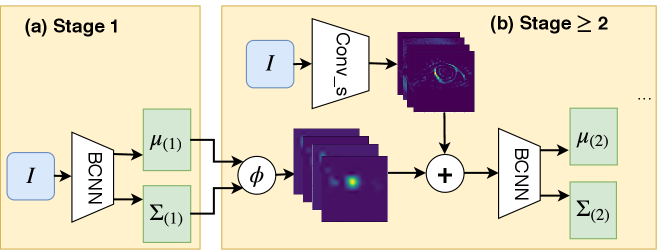

To overcome these limitations, we introduce a Bayesian framework for model-based eye tracking without explicit eye landmark detection. As shown in Fig. 1, the proposed method consists of a Bayesian Convolutional Neural Network (BCNN) module, which captures the relationship between eye appearance and eye landmarks, and a geometric model, which relates eye landmarks to eye gaze. The two modules are jointly formulated in a Bayesian probabilistic framework, and eye gaze is estimated through Bayesian inference. With Bayesian inference, the eye landmarks of an input eye image are predicted by an ensemble of BCNN models drawn from the parameter posterior distribution. The landmarks generated by each BCNN model are then used to estimate eye gaze for the input image, yielding a distribution of eye gaze. By modeling the landmark distribution and the gaze distribution, the proposed model generalizes to different subjects, head poses, and new environments, and remains robust even on noisy images. Furthermore, our model associates a level of uncertainty with each gaze prediction. Uncertainty is essential for understanding what deep networks cannot do and for avoiding over-confident predictions. Finally, by feeding the uncertainty from the current stage to the next stage in a cascade architecture, we further improve model performance. To summarize, we make the following contributions:

- We introduce a unified Bayesian framework that combines model-based eye tracking with a Bayesian convolutional neural network to enable robust and generalizable model-based eye tracking.

- With Bayesian inference, our model avoids explicit landmark detection and requires no eye gaze annotations and no model training.

- The model produces not only eye gaze predictions but also their uncertainties, which can be incorporated into a cascade framework to progressively improve gaze estimation performance.

2 Related work

In this section, we review recent work on model-based eye gaze tracking, in particular work aimed at improving eye feature detection and the generalization of eye tracking. In [27], the authors proposed to estimate 3D eye gaze from 2D facial landmarks. They introduced a 3D eye-face deformable model that relates the 3D eyeball center to 3D rigid facial landmarks. Given the detected 2D facial landmarks and the eye-face model, they recover the 3D eyeball center and obtain the final gaze direction. In [19], the authors proposed to recover the gaze direction from eye region landmarks. They first used a deep network (Hourglass) to detect eye region landmarks and then used their geometric eye model to estimate the eye gaze direction from the detected landmarks. Both methods require accurate facial and eye region landmark detection to perform well. In [21], the authors focused on robust eye feature detection in pervasive scenarios. They introduced the Pupil Reconstructor (PuRe), which is mainly based on edge segment selection and also provides a confidence measure for the detected pupil. However, PuRe fails on low-resolution eye images. Sun et al. [24] proposed a gaze estimation system consisting of three modules: gaze feature extraction, system calibration, and gaze estimation. The gaze features are fed into the gaze estimation module after the calibration procedure. During gaze feature extraction, a parameterized iris model is used to locate the iris center, which is robust to low-quality eye images and large head movements. Wood et al. [31] constructed a 3D deformable dense eye model from head scans. Their model includes shape and texture variations of the facial eye region (eyebrow, eyelid, part of the nose) and the eyeball (iris, sclera). By fitting the whole eye model instead of fitting landmarks, they obtain the 3D positions of the eyeball center and pupil center simultaneously. However, during fitting they still use landmarks as a highly weighted regularization term, and their model is not robust in real-world scenarios, especially under occlusion. Wang et al. [29] proposed to leverage Bayesian inference during adversarial learning to improve the generalization of appearance-based gaze estimation. However, as a learning-based rather than model-based method, it requires a large amount of labeled training data under different variations and a complex training procedure, and hence cannot generalize well beyond the training data.

3 Problem statement

Given data $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{|\mathcal{D}|}$, where $x_i$ and $y_i$ represent an image and its corresponding landmarks, a model prior $p(w)$, and geometric model parameters $\beta$, our goal is to estimate the gaze $g$ for a test image $x$. We emphasize that $\mathcal{D}$ contains no eye gaze annotations, and that its landmarks are not manually annotated but are generated by a third-party landmark detector.

We first introduce the deterministic formulation and then discuss its extension to a probabilistic formulation.

3.1 Deterministic formulation

In the deterministic formulation, we first map an eye image $x$ to its landmarks $y$ with a landmark detector and then estimate eye gaze $g$ from the landmarks with the geometric eye model:

$$\hat{y} = f(x; w^*), \qquad \hat{g} = h(\hat{y}; \beta) \tag{1}$$

The image-to-landmark mapping function $f(\cdot; w^*)$ is obtained through training. The function $h(\cdot; \beta)$ encodes the eye model that analytically maps eye/facial landmarks to eye gaze. The eye model parameters $\beta$ include both the eye model parameters and personal eye parameters (e.g., eyeball radius), which can be obtained through optimization and offline personal calibration.

3.2 Probabilistic formulation

The deterministic formulation has several limitations. First, gaze estimation relies on a single set of parameters $w^*$, which may not work well when the data come from different subjects, head poses, or environments, or when the eye images are noisy. Second, it requires explicit landmark detection for model-based gaze estimation. Gaze estimation is hence sensitive to landmark detection errors, and the formulation has no way to characterize those errors.

To address these limitations, we propose to employ a Bayesian convolutional neural network (BCNN) instead of a conventional convolutional neural network (CNN) to capture the probabilistic relationships between an input eye image and the eye/facial landmarks. With Bayesian inference, the BCNN produces an ensemble of CNNs by sampling its parameter posterior $p(w \mid \mathcal{D})$. The ensemble of CNNs produces a distribution of landmarks, which, after being fed into the geometric eye model, yields a distribution of eye gaze for the input eye image. By using a landmark distribution instead of explicit landmark detection with a single model, we improve the accuracy, robustness, and generalization of eye gaze estimation. Specifically, the proposed Bayesian eye tracking can be formulated as follows:

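$$p(g \mid x, \mathcal{D}) = \int p(g \mid y, \beta)\, p(y \mid x, \mathcal{D})\, dy \tag{2}$$

$$p(y \mid x, \mathcal{D}) = \int p(y \mid x, w)\, p(w \mid \mathcal{D})\, dw \tag{3}$$

$$p(g \mid x, \mathcal{D}) = \iint p(y \mid x, w)\, p(w \mid \mathcal{D})\, p(g \mid y, \beta)\, dw\, dy \tag{4}$$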

As can be seen in Eq. (2), by integrating over all possible landmarks $y$, we avoid explicit landmark detection, which makes the estimate more robust against noise. In addition, as in Eq. (3), by integrating over all possible network parameters $w$, we avoid point estimation and over-fitting, and achieve better generalization across subjects, head poses, and environments.

4 Single-stage gaze estimation

Following the order of the terms in Eq. (4), we first discuss how to obtain the landmark distribution $p(y \mid x, w)$ for a test image in Sec. 4.1, then the parameter posterior $p(w \mid \mathcal{D})$ in Sec. 4.2, and then the model-based gaze estimation term $p(g \mid y, \beta)$ in Sec. 4.3. Finally, given these three terms, Sec. 4.4 summarizes how we approximately solve Eq. (4).

4.1 Landmark distribution and BCNN

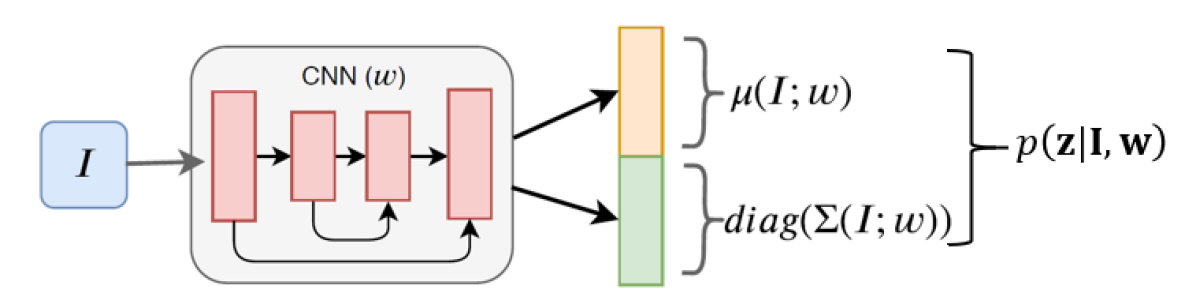

We assume the landmark distribution, i.e., the likelihood term $p(y \mid x, w)$, follows a Gaussian distribution:

$$p(y \mid x, w) = \mathcal{N}\big(y;\, \mu(x; w),\, \Sigma(x; w)\big) \tag{5}$$

whose mean $\mu(x; w)$ and covariance matrix $\Sigma(x; w)$ are output by the BCNN in Fig. 2. Note that the inner part of the CNN is a U-Net-like architecture [22]. U-Net was proposed for segmentation; since the eye region can be segmented into skin, sclera, and iris regions, landmarks are easier to detect given this segmentation information.

In this work, the covariance matrix is assumed to be a diagonal matrix and hence the BCNN in Fig. 2 only predicts the diagonal entries.
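To make the likelihood in Eq. (5) concrete, the sketch below evaluates the per-image negative log-likelihood of a diagonal Gaussian, the quantity a network predicting per-coordinate landmark means and variances would be fit to. It is a minimal illustration, not the authors' code: the function name is hypothetical, and having the network output log-variances (so the variances stay positive) is our assumption.

```python
import math


def diag_gaussian_nll(y, mu, log_var):
    """Return -log N(y; mu, diag(exp(log_var))) for one image.

    y, mu, log_var: equal-length sequences of landmark coordinates,
    e.g. flattened [x1, y1, x2, y2, ...]. Predicting log-variances is
    an assumption made here to keep the variances positive.
    """
    if not (len(y) == len(mu) == len(log_var)):
        raise ValueError("y, mu and log_var must have the same length")
    nll = 0.0
    for yi, mi, lv in zip(y, mu, log_var):
        # A diagonal covariance makes the coordinates independent,
        # so the joint NLL is a sum of one-dimensional Gaussian terms.
        nll += 0.5 * (math.log(2.0 * math.pi) + lv + (yi - mi) ** 2 / math.exp(lv))
    return nll
```

A large residual can be offset by a larger predicted variance, at the cost of the log-variance term; this trade-off is what lets the predicted variance act as a per-landmark uncertainty.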

4.2 Parameter posterior

The parameter posterior is proportional to the product of the likelihood and the prior:

$$p(w \mid \mathcal{D}) \propto \prod_{i=1}^{|\mathcal{D}|} p(y_i \mid x_i, w)\; p(w) \tag{6}$$

The likelihood term is given by the BCNN, as discussed in Sec. 4.1. For the prior term, we assume a zero-mean Gaussian $p(w) = \mathcal{N}(w; 0, \lambda^{-1} I)$, where $\lambda$ is a hyperparameter fixed in this work.
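Combining Eq. (6) with a Gaussian prior gives, up to an additive constant, the negative log posterior that posterior sampling works with. A minimal sketch, assuming a zero-mean Gaussian prior $\mathcal{N}(0, \lambda^{-1} I)$ with precision `lam` and a mini-batch likelihood estimate rescaled to the full dataset size; the function and argument names are hypothetical.

```python
def neg_log_posterior(batch_nll, w, lam, dataset_size):
    """Mini-batch estimate of U(w) = -log p(w | D), up to an additive constant.

    batch_nll: per-example negative log-likelihoods -log p(y_i | x_i, w)
               on one mini-batch
    w: flat list of network parameters
    lam: precision of the assumed zero-mean Gaussian prior N(0, lam^-1 I)
    dataset_size: |D|, used to rescale the mini-batch sum to the full dataset
    """
    if not batch_nll:
        raise ValueError("batch_nll must be non-empty")
    scale = dataset_size / len(batch_nll)
    likelihood_term = scale * sum(batch_nll)
    # -log N(w; 0, lam^-1 I) with the parameter-independent constant dropped.
    prior_term = 0.5 * lam * sum(wi * wi for wi in w)
    return likelihood_term + prior_term
```

Minimizing this quantity yields a MAP estimate (one of the point-estimator baselines in Sec. 6.1); a sampler such as SGHMC instead uses its gradient to draw parameter samples.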

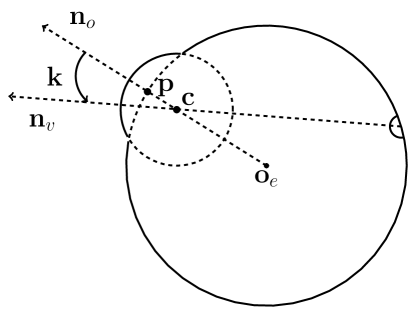

4.3 Model-based gaze estimation from landmarks

The term $p(g \mid y, \beta)$ in Eq. (4) encodes model-based eye tracking. We focus on two recent models [27, 19] that only require a web camera. As both models employ the same principle, i.e., relating facial/eye landmarks to eye gaze through a geometric eye model, we discuss [27] below. It uses an eye-face model, in which 3D eye gaze is estimated from facial landmarks (a full-face image is required), based on the classic two-sphere eye model shown in Fig. 3.

According to the eye model, the true gaze is the visual axis, which passes through the cornea center $c$ and the fovea. An approximation of the true gaze is the optical axis, which passes through the eyeball center $e$, the cornea center $c$, and the pupil center $p$. With the deformable 3D eye-face model, the authors first recover the 3D eyeball center $e$ and the model parameters by minimizing the projection error of the 3D eye-face model with respect to the 2D landmarks. Next, they compute the 3D pupil center $p$ from the observed 2D pupil center and the eyeball radius. Finally, they recover the optical axis. Denoting the 2D facial landmarks as $y$, gaze estimation can be summarized as $g = h(y; \beta)$, where $\beta$ represents the model parameters. In addition, we parameterize model-based gaze estimation probabilistically, i.e.,

$$p(g \mid y, \beta) = \mathcal{N}\big(g;\, h(y; \beta),\, \Sigma_g\big) \tag{7}$$

where $\Sigma_g$ is the noise covariance of the model-based method, which can be estimated offline for each method.
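The last step of the eye-face model, recovering the optical axis from the 3D eyeball center and the 3D pupil center, reduces to normalizing a difference vector. A minimal sketch, assuming both points are already expressed in the same camera coordinate frame; the upstream fitting that recovers them from 2D landmarks is not shown, the optical-to-visual-axis (kappa) correction is omitted, and the function name is hypothetical.

```python
import math


def optical_axis(eyeball_center, pupil_center):
    """Unit 3D vector from the eyeball center through the pupil center.

    Both points are assumed to be in the same camera coordinate frame.
    """
    d = [p - e for p, e in zip(pupil_center, eyeball_center)]
    norm = math.sqrt(sum(c * c for c in d))
    if norm == 0.0:
        raise ValueError("eyeball center and pupil center coincide")
    return [c / norm for c in d]
```

For example, `optical_axis([0.0, 0.0, 600.0], [0.0, 0.0, 588.0])` returns `[0.0, 0.0, -1.0]`: an eye 600 mm in front of the camera whose pupil center is 12 mm closer to the camera is looking straight at it.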

4.4 Model-based gaze estimation via Bayesian inference

Given the three terms in Eq. (4), we now describe how we solve it to obtain the best gaze and its uncertainty for an input image. Solving Eq. (4) directly is challenging because it integrates over both $y$ and $w$.

We instead approximate the two integrals with sample averages.

For the first integral, over $w$, the large number of parameters in $w$ leads us to employ the Stochastic Gradient Hamiltonian Monte Carlo (SGHMC) approach [3] to approximate the posterior. The key idea is to use Hamiltonian dynamics to produce better sample proposals and mini-batch gradient updates to scale to large datasets. Specifically, the Hamiltonian is a function defined over the model parameters $w$ and an auxiliary momentum variable $v$:

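$$H(w, v) = U(w) + \tfrac{1}{2}\, v^\top M^{-1} v, \qquad U(w) = -\sum_{i=1}^{|\mathcal{D}|} \log p(y_i \mid x_i, w) - \log p(w) \tag{8}$$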

where the mass matrix $M$ is set to a multiple of the identity matrix for simplicity [17], and the potential energy $U(w)$ is the negative log of the unnormalized posterior in Eq. (6). We compute the updates by simulating Hamilton's equations, which can be implemented as a stochastic gradient update with momentum:

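$$v_{t+1} = (1 - \alpha)\, v_t - \eta\, \nabla_w \tilde{U}(w_t) + \epsilon_t, \qquad \epsilon_t \sim \mathcal{N}(0,\, 2\alpha\eta I) \tag{9}$$

$$w_{t+1} = w_t + v_{t+1} \tag{10}$$

$$\tilde{U}(w) = -\frac{|\mathcal{D}|}{|\mathcal{B}|} \sum_{(x_i, y_i) \in \mathcal{B}} \log p(y_i \mid x_i, w) - \log p(w), \qquad \mathcal{B} \subset \mathcal{D}$$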

where $\eta$ is the learning rate, $\alpha$ is the friction term in SGHMC, and $\tilde{U}$ is the mini-batch estimate of $U$. Following Eqs. (9)-(10), we map a state, i.e., the value of $(w, v)$ at time $t$, to time $t+1$. By repeating the updates, we obtain samples of $w$ at different times $t$. In our experiments, we use a mini-batch of size . The samples of $w$ are collected every updates after the burn-in period of the Markov chain simulated by SGHMC.
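The update in Eqs. (9)-(10) can be illustrated on a toy problem. The sketch below runs SGHMC on a single scalar parameter whose posterior is a standard normal, using a deliberately noisy gradient to mimic mini-batch noise. It follows the simplified update of Chen et al. [3] with the gradient-noise estimate set to zero; the step size, friction, burn-in, and thinning values are illustrative choices rather than the settings used in the paper, and all names are hypothetical.

```python
import random


def sghmc(grad_u, w0, lr=0.01, friction=0.1, n_steps=20000,
          burn_in=2000, thin=10, seed=0):
    """Scalar SGHMC with the gradient-noise estimate set to zero.

    grad_u(w, rng): returns a (possibly noisy) gradient of U at w
    lr: learning rate (eta); friction: friction term (alpha)
    Returns the samples kept after burn-in, one every `thin` updates.
    """
    rng = random.Random(seed)
    w, v = w0, 0.0
    noise_std = (2.0 * friction * lr) ** 0.5
    samples = []
    for t in range(n_steps):
        # Momentum step: friction, stochastic gradient, injected noise.
        v = (1.0 - friction) * v - lr * grad_u(w, rng) + rng.gauss(0.0, noise_std)
        # Position step with the updated momentum.
        w = w + v
        if t >= burn_in and (t - burn_in) % thin == 0:
            samples.append(w)
    return samples


def noisy_grad(w, rng):
    # Exact gradient of U(w) = w**2 / 2 (standard normal posterior)
    # plus Gaussian noise standing in for mini-batch noise.
    return w + rng.gauss(0.0, 0.5)


samples = sghmc(noisy_grad, w0=3.0)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
```

With these settings the sample mean and variance should land close to 0 and 1; a larger learning rate mixes faster but increases discretization bias, which is one reason the burn-in and thinning are needed.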

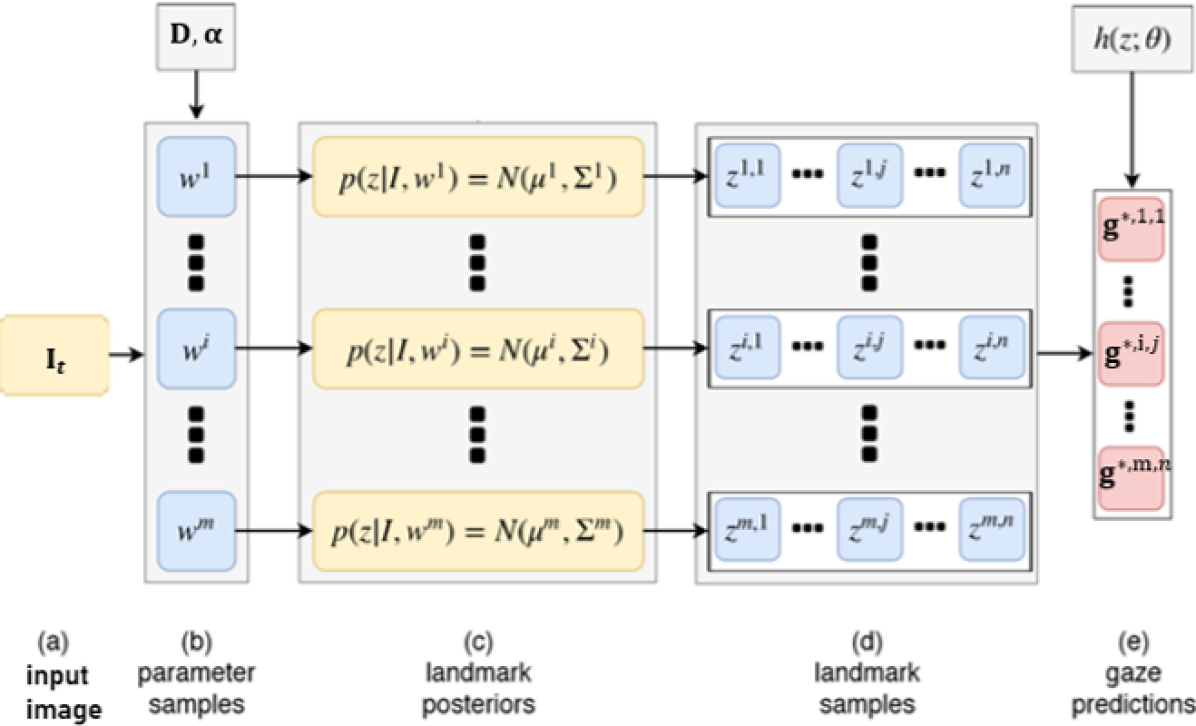

Following the SGHMC method, we draw samples $w_n \sim p(w \mid \mathcal{D})$, $n = 1, \dots, N$, for a total of $N$ samples. Eq. (4) can hence be approximated by

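$$p(g \mid x, \mathcal{D}) \approx \frac{1}{N} \sum_{n=1}^{N} \int p(y \mid x, w_n)\, p(g \mid y, \beta)\, dy \tag{11}$$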

Next, we approximate the landmark integral by drawing samples from $p(y \mid x, w_n)$ (Eq. (5)). As we assume the covariance matrix $\Sigma(x; w_n)$ is diagonal, the elements of $y$ are independent. Hence, we can sample each landmark coordinate independently from its mean and variance to generate $M$ samples $y_{nm}$, $m = 1, \dots, M$, for each $w_n$:

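$$p(g \mid x, \mathcal{D}) \approx \frac{1}{NM} \sum_{n=1}^{N} \sum_{m=1}^{M} p(g \mid y_{nm}, \beta), \qquad y_{nm} \sim \mathcal{N}\big(\mu(x; w_n), \Sigma(x; w_n)\big) \tag{12}$$

$$\hat{g} = \arg\max_{g}\, p(g \mid x, \mathcal{D}) \approx \frac{1}{NM} \sum_{n=1}^{N} \sum_{m=1}^{M} h(y_{nm}; \beta) \tag{13}$$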

Eq. (13) shows that, given the sampled landmark sets, the optimal gaze can be approximated by the average of the gazes estimated from each landmark set.
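The double sampling in Eqs. (11)-(13) amounts to two nested loops: one over posterior parameter samples and one over landmark samples per parameter sample. A minimal sketch, where `predict_landmarks` (returning per-coordinate means and variances) and `gaze_model` (the geometric model) are hypothetical callables standing in for the BCNN and the eye model.

```python
import random


def bayesian_gaze(x, weight_samples, predict_landmarks, gaze_model, beta,
                  n_landmark_samples=10, seed=0):
    """Monte Carlo gaze estimate in the spirit of Eqs. (11)-(13).

    weight_samples: N network parameter samples w_n (e.g. from SGHMC)
    predict_landmarks(x, w) -> (mu, var): per-coordinate landmark means
        and variances (diagonal covariance)
    gaze_model(y, beta) -> gaze vector computed by the geometric model
    Returns (mean gaze, list of all N * M gaze samples).
    """
    rng = random.Random(seed)
    gazes = []
    for w in weight_samples:
        mu, var = predict_landmarks(x, w)
        for _ in range(n_landmark_samples):
            # Diagonal covariance: draw each coordinate independently.
            y = [rng.gauss(m, s2 ** 0.5) for m, s2 in zip(mu, var)]
            gazes.append(gaze_model(y, beta))
    if not gazes:
        raise ValueError("need at least one weight sample and one landmark sample")
    dim = len(gazes[0])
    mean_gaze = [sum(g[k] for g in gazes) / len(gazes) for k in range(dim)]
    return mean_gaze, gazes
```

Each returned gaze sample corresponds to one $g_{nm}$, so the same list can be reused to compute the gaze uncertainty in Eq. (14).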

Each set of landmark points $y_{nm}$ produces one gaze estimate $g_{nm} = h(y_{nm}; \beta)$, yielding a total of $NM$ gaze estimates. Given these $NM$ gaze estimates, we can quantify the uncertainty of $\hat{g}$ with the sample covariance matrix:

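$$\hat{\Sigma}_g = \frac{1}{NM} \sum_{n=1}^{N} \sum_{m=1}^{M} (g_{nm} - \hat{g})(g_{nm} - \hat{g})^\top, \qquad g_{nm} = h(y_{nm}; \beta) \tag{14}$$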
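The gaze uncertainty is an ordinary sample covariance over the gaze samples. A minimal pure-Python sketch (the function name is hypothetical; a numerical library would normally be used), normalized by the number of samples.

```python
def gaze_covariance(gazes):
    """Sample covariance of the N * M gaze samples, normalized by N * M.

    Dividing by N * M - 1 instead would give the unbiased estimator.
    """
    n = len(gazes)
    dim = len(gazes[0])
    mean = [sum(g[k] for g in gazes) / n for k in range(dim)]
    cov = [[0.0] * dim for _ in range(dim)]
    for g in gazes:
        d = [g[k] - mean[k] for k in range(dim)]
        for i in range(dim):
            for j in range(dim):
                # Accumulate the outer product of the centered sample.
                cov[i][j] += d[i] * d[j] / n
    return cov
```

The diagonal gives per-angle variances; off-diagonal entries capture correlated errors between gaze components.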

5 Extension to multi-stage (c-BCNN)

As the output of the BCNN is a probability distribution over the landmark points, we can use it to improve landmark detection. Moreover, several studies show that a cascade framework can yield better landmark predictions [30]. We adopt the same cascade idea with one modification: we incorporate the uncertainty information (Fig. 5). Compared to [30], our method explicitly models the landmark distribution as a Gaussian distribution (Eq. (5)) and predicts its mean and variance, whereas [30] predicts a deterministic landmark heatmap. In addition, [30] uses artificially generated heatmaps with a fixed variance as supervision; this variance must be tuned carefully, whereas our method only requires landmark locations as ground truth, without additional assumptions.

As can be seen in Fig. 4 (c), the samples $w_n$ produce a posterior distribution of landmarks for the same image $x$, from which we can compute both the epistemic and the aleatoric uncertainty:

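$$\bar{\mu}(x) = \frac{1}{N} \sum_{n=1}^{N} \mu(x; w_n) \tag{15}$$

$$\bar{\Sigma}(x) = \underbrace{\frac{1}{N} \sum_{n=1}^{N} \big(\mu(x; w_n) - \bar{\mu}(x)\big)\big(\mu(x; w_n) - \bar{\mu}(x)\big)^\top}_{\text{epistemic}} + \underbrace{\frac{1}{N} \sum_{n=1}^{N} \Sigma(x; w_n)}_{\text{aleatoric}} \tag{16}$$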

where $\bar{\mu}$ represents the average landmark prediction over the posterior samples $w_n$, and $\bar{\Sigma}$ represents the total uncertainty about the prediction. The total uncertainty consists of an epistemic term and an aleatoric term. The epistemic uncertainty is the covariance of the predicted means. Intuitively, if the predictions are close to each other, we are more confident in the prediction; if they differ from each other, we have high uncertainty about the prediction. The aleatoric uncertainty is the mean of the predicted covariances. It encodes the uncertainty about the input $x$ and is high if $x$ differs from the training data distribution (e.g., noise, outliers).
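The decomposition into epistemic and aleatoric parts can be computed directly from the per-sample network outputs. A minimal sketch that computes only the diagonal, matching the diagonal covariance assumed in Sec. 4.1; the function name and the list-of-lists layout are hypothetical.

```python
def landmark_uncertainty(mus, variances):
    """Per-coordinate (diagonal) version of Eqs. (15)-(16) for one image.

    mus[n], variances[n]: landmark means and variances predicted with
    the n-th posterior sample w_n.
    Returns (mean, epistemic, aleatoric, total), each a per-coordinate list.
    """
    n = len(mus)
    dim = len(mus[0])
    mean = [sum(m[k] for m in mus) / n for k in range(dim)]
    # Epistemic: spread of the predicted means across posterior samples.
    epistemic = [sum((m[k] - mean[k]) ** 2 for m in mus) / n for k in range(dim)]
    # Aleatoric: average of the predicted variances.
    aleatoric = [sum(v[k] for v in variances) / n for k in range(dim)]
    total = [e + a for e, a in zip(epistemic, aleatoric)]
    return mean, epistemic, aleatoric, total
```

If all posterior samples agree on the mean, the epistemic term vanishes and the total reduces to the average predicted variance.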

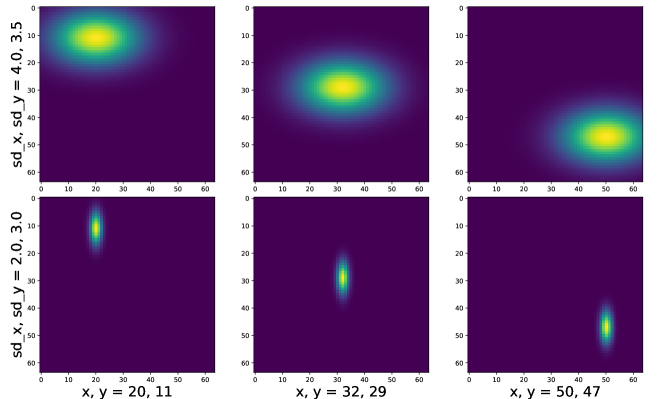

Since the total uncertainty captures the quality of the landmark predictions, we incorporate it to improve performance. We propose to encode the total uncertainty into a probability map of the same size as the feature map extracted by the convolutional layers. The probability map encodes the probability of the landmark being at each pixel location.

To this end, we design a probability map layer $\phi(\cdot)$ that converts $\bar{\mu}$ and $\bar{\Sigma}$ into a probability map $P$. The idea is to place a Gaussian kernel at location $\bar{\mu}$ with the total uncertainty $\bar{\Sigma}$ as its covariance matrix. Consider a single landmark, and assume the map is of size $H \times W$, $\bar{\mu} = (\mu_x, \mu_y)$, and $\bar{\Sigma} = \mathrm{diag}(\sigma_x^2, \sigma_y^2)$ (we assume independent dimensions and only use the diagonal entries). We first create meshgrids $X$ and $Y$ of size $H \times W$; the value at grid location $(i, j)$ is then computed as follows:

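$$P_{ij} = \frac{1}{2\pi \sigma_x \sigma_y} \exp\!\left(-\frac{(X_{ij} - \mu_x)^2}{2\sigma_x^2} - \frac{(Y_{ij} - \mu_y)^2}{2\sigma_y^2}\right) \tag{17}$$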

To better understand how Eq. (17) works, we provide several examples in Fig. 6. Intuitively, if the landmark prediction is highly uncertain, the probability map spreads out (first row of Fig. 6), encouraging the network to search the neighborhood to refine the prediction. Conversely, the network is encouraged to stay at the current location if it is confident in the prediction (peaked regions in the second row of Fig. 6). The complete c-BCNN is illustrated in Fig. 5; the blocks are shared for Stage . The probability map and the feature map are concatenated and fed to the next BCNN block. With multiple stages, we can still use Alg. 1 to perform gaze estimation. The difference is that $\mu$ and $\Sigma$ in the log-likelihood (Eq. (5)) are now parameterized by the multi-stage CNN in Fig. 5, where $w$ represents the parameters of all stages.
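The probability map layer can be sketched as evaluating a 2D Gaussian with the landmark's mean and total variance on every grid cell. This is a minimal illustration under stated assumptions: the map is evaluated as a density (renormalizing it to sum to one over the grid would be an equally plausible choice), grid coordinates are column and row indices, and the function name is hypothetical.

```python
import math


def probability_map(mu_x, mu_y, var_x, var_y, height, width):
    """Gaussian probability map for one landmark on a height x width grid.

    (mu_x, mu_y): mean landmark location in grid units (column, row)
    (var_x, var_y): diagonal entries of the total uncertainty
    Entry [i][j] is the 2D Gaussian density at column j, row i.
    """
    norm = 1.0 / (2.0 * math.pi * math.sqrt(var_x * var_y))
    return [[norm * math.exp(-(j - mu_x) ** 2 / (2.0 * var_x)
                             - (i - mu_y) ** 2 / (2.0 * var_y))
             for j in range(width)]
            for i in range(height)]
```

A larger total variance flattens the map and lowers its peak, which corresponds to the spread-out maps described for the first row of Fig. 6.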

6 Experiments and analysis

We consider four benchmark datasets for our evaluation. 1) EyeDiap [7]: the dataset contains different sessions (e.g., HD vs. VGA camera, discrete vs. floating target, small vs. large head motion). We use images captured by the VGA camera with different head poses. 2) Columbia [23]: the dataset has high-quality images from subjects. All images are used for evaluation. 3) UT [34]: the dataset contains subjects, each with different head poses and gaze directions. All images are used for evaluation. 4) MPIIGaze [37]: the dataset has subjects with head pose and lighting variations. We follow the same evaluation settings and test on the evaluation subset of the dataset.

The eye-region model is applied to all four datasets, while the eye-face model is only applied to EyeDiap and Columbia, since they provide full-face images and camera parameters (required by the eye-face model). Gaze estimation error is measured as the angular error in degrees. Note that these benchmark datasets do not provide landmark labels. For the training data, we directly apply a third-party landmark detector [8, 9] to generate landmarks (we use facial landmarks as in [27] and eye landmarks as in [19]). Despite the inaccuracies of the landmark detector, we still achieve comparable performance. For the cascade framework, we find that stages work best for facial landmarks and stages are sufficient for eye landmark detection. All network inputs (face image/eye image) are resized to . For the convolutional blocks in each stage, we adopt a 4-layer U-Net-like structure (Fig. 5). The feature map sizes for the 4 layers are , , and . For the geometric model in [27], we use the average 3D deformable eye-face model. We also use human-average eye parameters (e.g., an eyeball radius of 12 mm and kappa angles of 5.0 and 1.2 degrees) for both the eye-face model and the eye-region model. For the Bayesian inference, we use parameter samples and landmark samples.
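The angular error used throughout the experiments is the angle between the predicted and ground-truth 3D gaze directions. A minimal sketch, assuming both are given as nonzero 3D vectors in the same coordinate frame; the function name is hypothetical.

```python
import math


def angular_error_deg(g_pred, g_true):
    """Angle in degrees between two nonzero 3D gaze direction vectors."""
    dot = sum(a * b for a, b in zip(g_pred, g_true))
    norm = (math.sqrt(sum(a * a for a in g_pred))
            * math.sqrt(sum(b * b for b in g_true)))
    if norm == 0.0:
        raise ValueError("gaze vectors must be nonzero")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_sim = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_sim))
```

Because the vectors are normalized inside the function, predictions need not be unit length; only their directions matter.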

6.1 Evaluation of the Bayesian framework

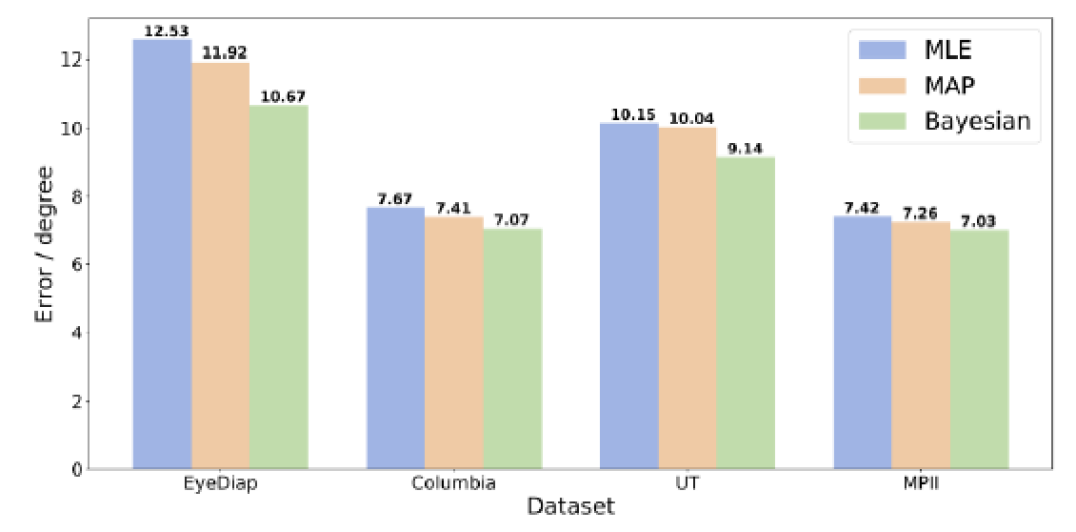

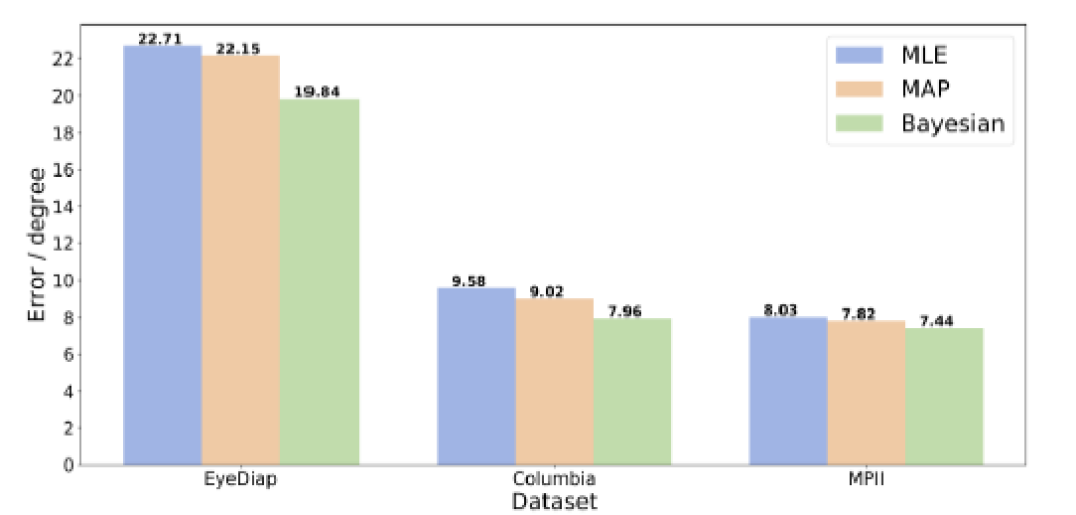

The proposed method consists of two major components: 1) Bayesian inference and 2) the cascade framework. To evaluate the contribution of Bayesian inference, we compare it with two point estimators: Maximum Likelihood Estimation (MLE) and Maximum a Posteriori (MAP) estimation. For MAP, we use the same prior as in our Bayesian inference. To evaluate the cascade framework, we consider one-stage, two-stage, and the final three-stage models. In addition, we perform cross-subject (within-dataset) and cross-dataset experiments on four benchmark datasets to evaluate the two components.

6.1.1 Contribution of Bayesian inference

Within-dataset cross-subject performance: The results on the four benchmark datasets are shown in Fig. 7. First, on all four benchmark datasets, Bayesian inference outperforms the MLE and MAP point estimators, demonstrating the importance of Bayesian inference for gaze estimation.

Second, Bayesian inference handles challenging datasets with low-quality images better. It yields larger improvements on the two more challenging datasets (EyeDiap and UT), whereas MPIIGaze has small head pose and subject variations and Columbia has 4K-resolution images and fixed head poses. When the image distribution is complex, the predicted landmark distribution is less accurate, which makes it hard for point estimators to cover the underlying distribution. In contrast, explicitly modeling the landmark distribution and performing Bayesian inference allows us to leverage the distribution learned from the training data and improves estimation accuracy. Finally, averaged over the four datasets, Bayesian inference gives an improvement of over MLE and over MAP, demonstrating the effectiveness of the proposed Bayesian inference.

Cross-dataset performance: The cross-dataset results are shown in Fig. 8; Bayesian inference improves performance on all three benchmark datasets. Cross-dataset evaluation suffers from the difference between the training and testing data distributions. A point estimator obtains only one set of parameters; although it fits the training dataset well, it does not produce good results on the testing datasets. With Bayesian inference over multiple sets of parameters, we achieve improved results in cross-dataset evaluation. On average, Bayesian inference gives an improvement of over MLE and over MAP, which is also larger than in the within-dataset experiments.

Extreme-case evaluation: To further demonstrate the advantage of Bayesian inference, we perform an extreme-case study in which we add noise and occlusions to the testing images. In particular, we use UT as the training dataset and MPIIGaze as the testing dataset. The results are shown in Tab. 1, where the Gaussian noise standard deviation is given for image intensities in the range 0 to 255. Each random occlusion is a black square whose side length is given as a percentage of the input image width. As the table shows, although MLE and MAP still give reasonable estimates, Bayesian inference outperforms them in all scenarios, demonstrating its ability to handle poor-quality images.

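Table 1: Gaze estimation error (degrees) when training on UT and testing on MPIIGaze under Gaussian noise and random occlusion.

| Method | Gaussian noise std | | | Random occlusion size | | |
|---|---|---|---|---|---|---|
| | 10 | 30 | 50 | | | |
| MLE | 9.21 | 10.77 | 12.57 | 8.93 | 11.25 | 14.36 |
| MAP | 8.48 | 10.15 | 11.69 | 9.12 | 10.79 | 14.23 |
| Bayesian | 7.97 | 9.23 | 10.26 | 8.24 | 9.57 | 13.07 |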

6.1.2 Contribution of cascade framework

We also perform within-dataset and cross-dataset evaluations with different numbers of stages. The idea of the cascade framework is that the output of one stage guides the search in the following stage. The major observations are: 1) more stages always help, and 2) the improvement from stage 1 to stage 2 is more significant than that from stage 2 to stage 3. This is also the main reason we use three stages in our c-BCNN. On average, the improvement of stage 3 over stage 1 is for within-dataset and for cross-dataset evaluation. This demonstrates the significance of the proposed cascade framework.

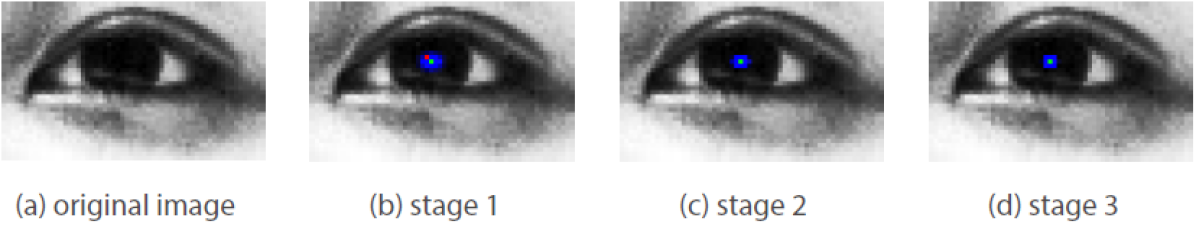

6.1.3 Visualization of landmark detections

We also visualize the landmark detection results across different stages in Fig. 9 (images from UT). In the first stage, the predicted mean (red dot) is far from the ground truth (green dot), and the predicted variance (blue region) is therefore large, indicating high uncertainty. As we proceed through more stages, the accuracy progressively improves and the uncertainty decreases.

6.2 Comparison with state of the art

In this section, we summarize the experimental results comparing our method to SOTA methods. We first compare with SOTA model-based methods and then with SOTA learning-based methods.

6.2.1 Model-based methods

We compare with three recent model-based methods [27, 19, 28] on three benchmark datasets. The within-dataset cross-subject results are shown in Tab. 2. For the eye-face model, we obtain a significant improvement on the EyeDiap dataset and a slight improvement on the Columbia dataset. For the eye-region model, we achieve larger improvements on EyeDiap over both [19] and [28], and similarly slight improvements on the two high-quality datasets, Columbia and MPIIGaze. Overall, this demonstrates that the proposed method generalizes better across subjects, especially when the data distribution is complex and the data are noisy. To compare generalization performance, we also perform a cross-dataset evaluation, whose results are shown in Tab. 3. For a fair comparison, we train the model on the UT dataset and test on the remaining three datasets. We achieve a significant improvement on EyeDiap, from 26.6 degrees to 20.6 degrees, showing the ability of c-BCNN to generalize to a new dataset. On Columbia and MPIIGaze, we achieve performance similar to the competing approaches, because Columbia has high-quality images and the variation in MPIIGaze is relatively small. Overall, the results demonstrate the better generalization capability of the proposed method.

6.2.2 Appearance-based methods

Here, we only compare with SOTA learning-based methods in the cross-dataset setting. We do not compare with SOTA methods in the within-dataset setting because, as a model-based method, our method does not require any training data with ground-truth gaze annotations, whereas the learning-based methods all require many ground-truth gaze annotations; the comparison would therefore not be fair.

We follow a similar setting, training the model on the UT dataset and testing on the MPIIGaze dataset. Prior studies show that appearance-based methods [37, 4, 38, 5] perform poorly in cross-dataset evaluation, and we observe similar results in Tab. 4. Because model-based methods leverage eye models, the proposed method outperforms most appearance-based methods. The method in [29] achieves similar cross-dataset performance by applying Bayesian inference in the process of adversarial learning. However, it requires a large amount of labeled data under different variations and a complex training process. In contrast, our proposed model requires no gaze labels and no model training, and even the landmarks in the training data are generated by third-party landmark detectors.

7 Conclusion

We introduce a novel Bayesian framework to improve the generalization and robustness of model-based eye tracking. It consists of a BCNN, which captures the probabilistic relationship between appearance and landmarks, and a geometric eye model, which relates landmarks to gaze. The two components are unified in a Bayesian framework that avoids point estimation and explicit landmark prediction. We further extend the single-stage model to a multi-stage model that incorporates uncertainty information to progressively improve performance. Experiments demonstrate better generalization and robustness with Bayesian inference and the cascade framework. Furthermore, our method outperforms SOTA model-based eye tracking methods on challenging datasets, further demonstrating its generalization power.

References

- [1] D. Beymer and M. Flickner. Eye gaze tracking using an active stereo head. In Computer Vision and Pattern Recognition, 2003.

- [2] J. Chen and Q. Ji. 3D gaze estimation with a single camera without IR illumination. In 19th International Conference on Pattern Recognition (ICPR), 2008.

- [3] T. Chen, E. Fox, and C. Guestrin. Stochastic gradient Hamiltonian Monte Carlo. In International Conference on Machine Learning, pages 1683–1691, 2014.

- [4] Y. Cheng, F. Lu, and X. Zhang. Appearance-based gaze estimation via evaluation-guided asymmetric regression. In Proceedings of the European Conference on Computer Vision (ECCV), pages 100–115, 2018.

- [5] T. Fischer, H. Jin Chang, and Y. Demiris. RT-GENE: Real-time eye gaze estimation in natural environments. In Proceedings of the European Conference on Computer Vision (ECCV), pages 334–352, 2018.

- [6] K. Funes Mora and J. Odobez. Geometric generative gaze estimation (G3E) for remote RGB-D cameras. In Computer Vision and Pattern Recognition, 2014.

- [7] K. A. Funes Mora, F. Monay, and J.-M. Odobez. EYEDIAP: A database for the development and evaluation of gaze estimation algorithms from RGB and RGB-D cameras. In ETRA, 2014.

- [8] C. Gou, Y. Wu, F.-Y. Wang, and Q. Ji. Coupled cascade regression for simultaneous facial landmark detection and head pose estimation. In 2017 IEEE International Conference on Image Processing (ICIP), pages 2906–2910. IEEE, 2017.

- [9] C. Gou, Y. Wu, K. Wang, K. Wang, F.-Y. Wang, and Q. Ji. A joint cascaded framework for simultaneous eye detection and eye state estimation. Pattern Recognition, 67:23–31, 2017.

- [10] E. D. Guestrin and E. Eizenman. General theory of remote gaze estimation using the pupil center and corneal reflections. Biomedical Engineering, IEEE Transactions on, 2006.

- [11] J. Heinzmann. 3-D facial pose and gaze point estimation using a robust real-time tracking paradigm. Face and Gesture Recognition, 1998.

- [12] T. Ishikawa, S. Baker, I. Matthews, and T. Kanade. Passive Driver Gaze Tracking with Active Appearance Models. Proc. 11th World Congress Intelligent Transportation Systems, 2004.

- [13] K.-H. Tan, D. Kriegman, and N. Ahuja. Appearance-based eye gaze estimation. In Proc. 6th IEEE Workshop on Applications of Computer Vision, 2002.

- [14] K. Krafka, A. Khosla, and P. Kellnhofer. Eye Tracking for Everyone. CVPR, 2016.

- [15] J. Li and S. Li. Eye-model-based gaze estimation by RGB-D camera. Computer Vision and Pattern Recognition Workshops, 2014.

- [16] F. Lu, Y. Sugano, T. Okabe, and Y. Sato. Inferring human gaze from appearance via adaptive linear regression. In ICCV, 2011.

- [17] R. M. Neal et al. MCMC using Hamiltonian dynamics. Handbook of Markov Chain Monte Carlo, 2011.

- [18] S. Park, A. Spurr, and O. Hilliges. Deep pictorial gaze estimation. arXiv preprint arXiv:1807.10002, 2018.

- [19] S. Park, X. Zhang, A. Bulling, and O. Hilliges. Learning to find eye region landmarks for remote gaze estimation in unconstrained settings. arXiv preprint arXiv:1805.04771, 2018.

- [20] S.-W. Shih and J. Liu. A novel approach to 3-D gaze tracking using stereo cameras. IEEE Transactions on Systems, Man and Cybernetics, Part B, 2004.

- [21] T. Santini, W. Fuhl, and E. Kasneci. PuRe: Robust pupil detection for real-time pervasive eye tracking. Computer Vision and Image Understanding, 170:40–50, 2018.

- [22] A. Shrivastava, T. Pfister, O. Tuzel, J. Susskind, W. Wang, and R. Webb. Learning from simulated and unsupervised images through adversarial training. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2107–2116, 2017.

- [23] B. A. Smith, Q. Yin, S. K. Feiner, and S. K. Nayar. Gaze Locking: Passive Eye Contact Detection for Human-Object Interaction. ACM Symposium on User Interface Software and Technology, 2013.

- [24] L. Sun, Z. Liu, and M.-T. Sun. Real time gaze estimation with a consumer depth camera. Information Sciences, 320:346–360, 2015.

- [25] R. Valenti, N. Sebe, and T. Gevers. Combining head pose and eye location information for gaze estimation. Image Processing, IEEE Transactions on, 2012.

- [26] F. Vicente, H. Zehua, X. Xuehan, F. De la Torre, Z. Wende, and D. Levi. Driver gaze tracking and eyes off the road detection system. Intelligent Transportation Systems, IEEE Transactions on, 2015.

- [27] K. Wang and Q. Ji. Real time eye gaze tracking with 3D deformable eye-face model. In Proceedings of the IEEE International Conference on Computer Vision, pages 1003–1011, 2017.

- [28] K. Wang, R. Zhao, and Q. Ji. A hierarchical generative model for eye image synthesis and eye gaze estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 440–448, 2018.

- [29] K. Wang, R. Zhao, H. Su, and Q. Ji. Generalizing eye tracking with Bayesian adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 11907–11916, 2019.

- [30] S.-E. Wei, V. Ramakrishna, T. Kanade, and Y. Sheikh. Convolutional pose machines. In CVPR, 2016.

- [31] E. Wood, T. Baltrusaitis, L. P. Morency, P. Robinson, and A. Bulling. A 3D morphable eye region model for gaze estimation. ECCV, 2016.

- [32] E. W. Wood. Gaze Estimation with Graphics. PhD thesis, University of Cambridge, 2017.

- [33] X. Xiong, Q. Cai, Z. Liu, and Z. Zhang. Eye gaze tracking using an RGBD camera: A comparison with a RGB solution. UBICOMP, 2014.

- [34] Y. Sugano, Y. Matsushita, and Y. Sato. Learning-by-synthesis for appearance-based 3d gaze estimation. CVPR, 2014.

- [35] H. Yamazoe, A. Utsumi, T. Yonezawa, and S. Abe. Remote gaze estimation with a single camera based on facial-feature tracking without special calibration actions. In Proceedings of the 2008 symposium on Eye tracking research and applications, 2008.

- [36] C. Yiu-Ming and P. Qinmu. Eye Gaze Tracking With a Web Camera in a Desktop Environment. Human-Machine Systems, IEEE Transactions on, 2015.

- [37] X. Zhang, Y. Sugano, M. Fritz, and A. Bulling. Appearance-based gaze estimation in the wild. In CVPR, 2015.

- [38] X. Zhang, Y. Sugano, M. Fritz, and A. Bulling. MPIIGaze: Real-world dataset and deep appearance-based gaze estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(1):162–175, 2017.