Bayesian hierarchical modeling of simply connected 2D shapes

Abstract

Models for distributions of shapes contained within images can be widely used in biomedical applications ranging from tumor tracking for targeted radiation therapy to classifying cells in a blood sample. Our focus is on hierarchical probability models for the shape and size of simply connected 2D closed curves, avoiding the need to specify landmarks through modeling the entire curve while borrowing information across curves for related objects. Prevalent approaches follow a fundamentally different strategy in providing an initial point estimate of the curve and/or locations of landmarks, which are then fed into subsequent statistical analyses. Such two-stage methods ignore uncertainty in the first stage, and do not allow borrowing of information across objects in estimating object shapes and sizes. Our fully Bayesian hierarchical model is based on multiscale deformations within a linear combination of cyclic basis characterization, which facilitates automatic alignment of the different curves accounting for uncertainty. The characterization is shown to be highly flexible in representing 2D closed curves, leading to a nonparametric Bayesian prior with large support. Efficient Markov chain Monte Carlo methods are developed for simultaneous analysis of many objects. The methods are evaluated through simulation examples and applied to yeast cell imaging data.

Keywords: Bayesian nonparametrics, cyclic basis, deformation, hierarchical modeling, image cytometry, multiscale, 2d shapes

1 Introduction

Collections of shapes are widely studied across many disciplines, such as biomedical imaging, cytology and computer vision. Perhaps the most fundamental issue when studying shape is the choice of representation. The simplest representations for shape are basic geometric objects, such as ellipses (?; ?; ?), polygons (?; ?; ?; ?), and slightly more involved specifications such as superellipsoids (?).

Clearly, not all shapes can be adequately characterized by simple geometric objects. The landmark-based approach was developed to describe more complex shapes by reducing them to a finite set of landmark coordinates. This is appealing because the joint distribution of these landmarks is tractable to analyze, and because landmarks make registration/alignment of different shapes straightforward. There is a very rich statistical literature on parametric joint distributions for multiple landmarks (?; ?; ?; ?; ?; ?; ?; ?; ?), with some recent work on nonparametric distributions, both frequentist (?; ?; ?; ?) and Bayesian (?; ?; ?).

Unfortunately, in many applications it is not possible to define landmarks if the target collection of objects vary greatly. Furthermore, even if landmarks can be chosen, there may be substantial uncertainty in estimating their location, which is not accounted for in landmark-based statistical analyses.

In these situations, one can instead characterize shapes by describing their boundary, using a nonparametric curve (2D) or surface (3D). Curves and surfaces are widely used in biomedical imaging and commercial computer-aided design (?; ?; ?; ?), because they provide a flexible model for a broad range of objects e.g., cells, pollen grains, protein molecules, machine parts, etc. A collection of introductory work on curve and surface modeling can be found in ? and subsequent developments in ?. Popular representations include: Bezier curves, splines, and principal curves (?) (a nonlinear generalization of principal components, involving smooth curves which ‘pass through the middle’ of a data cloud). ? and ? dealt with curve modeling based on smooth stochastic processes. Although there is a hugely vast literature on estimating curves and surfaces, most of the focus is on estimating , where a compact subset of without making any constraints on . Estimating a closed surface or a curve involves a different modeling strategy and there has been very few works in this regime, particularly from a Bayesian point of view. To our knowledge, only ? developed a Bayesian approach for fitting a closed surface using tensor-products.

Many of the above curve representations can successfully fit and describe complex shape boundaries, but they often have high or infinite dimensionality, and it is not clear how to directly analyze them. Also, they were not designed to facilitate comparison between shapes or characterize a collection of shapes. One solution is to re-express each curve using Fourier descriptors or wavelet descriptors (?; ?; ?; ?). Both approaches decompose a curve into components of different scales, so that the coarsest scale components carry the global approximation information while the finer scale components contain the local detailed information. Such multiscale transforms make it easier to compare objects that share the same coarse shape, but differ on finer details, or vice versa. The finer scale components can also be discarded to yield a finite and low-dimensional representation. Other dimensionality-reducing transformations include Principal Component Analysis and Distance Weighted Discrimination.

Note that the entire process is fragmented into three separate tasks: 1) curve fitting, 2) transformation, 3) population-level analysis. This can be problematic for several reasons. First, curve-fitting is not always accurate. If uncertainty is not accounted for, mistakes made during curve-fitting will be propagated into later analyses. Second, dimension-reducing transformations may throw away some of the information captured by curve-fitting. Finally, one suspects that the curve-fitting and transformation steps should be able to benefit from higher-level observations made during subsequent population analysis. For example, if the curve-fitting procedure is struggling to fit a missing or noisy shape boundary, it should be able to draw on similar shapes in the population to achieve a more informed fit. In this paper, we propose a Bayesian hierarchical model for 2D shapes, which addresses all of the aforementioned problems by performing curve fitting, multiscale transformation, and population analysis simultaneously within a single joint model.

The key innovation in our shape model is a shape-generating random process which can produce the whole range of simply-connected 2D shapes (shapes which contain no holes), by applying a sequence of multiscale deformations to a novel type of closed curve based on the work of ?. ?, ? and ? also proposed multiscale curves (with the latter two being more similar to our work, in their usage of Bézier curves and degree-elevation). However, none of these developed a statistical model around their representation or considered a collection of shapes. In analyzing a population of shapes, a notion of average shape or mean shape is quite important. ? discussed notions of mean shape and shape variability and various methods of estimating them pertaining to landmark based analysis. We will follow a different but related strategy for defining the average shape in terms of the basis coefficients or the control points of the Bézier curves. We call it the ‘central shape’. Refer to §2.5 for details. To characterize shape variability, we also define a notion of shape quantile in §2.5.

In §2, we describe the shape-generating random process, how it specifies a multiscale probability distribution over shapes, and how this can be used to express various modeling assumptions, such as symmetry. In §3, we provide theory regarding the flexibility of our model (support of the prior). In §4 and §5, we show how the random process can be used to fit a curve to a point cloud or an image. In §6, we show how to simultaneously fit and characterize a collection of shapes, which also naturally incorporates inter-shape alignment. In §7, we describe the computational details of Bayesian inference behind each of the tasks described earlier. This results in a fast approximate algorithm which is scalable to a huge collection of shapes having a dense point cloud each. Finally, in §8 and §9, we test our model on simulated shapes and real image data respectively. En route, we solve several important sub-problems that may be generally useful in the study of curve and surface fitting. First, we develop a model-based approach for parameterizing point cloud data. Second, we show how fully Bayesian joint modeling can be used to incorporate several pieces of auxiliary information in the process of curve-fitting, such as when each point within a point cloud also reports a surface orientation. Lastly, the concept of multi-scale deformation can be generalized to 3d surfaces in a straightforward manner.

2 Priors for Multiscale Closed Curves

2.1 Overview

Our random shape generation process starts with a closed curve and performs a sequence of multiscale deformations to generate a final shape. In §2.2, we introduce the Roth curve developed by ?, which is used to represent the shape boundary. Then, in §2.3, we demonstrate how to deform a Roth curve at multiple scales to produce any simply-connected shape. Using the mechanisms developed in §2.2 and §2.3, we present the full random shape process in §2.5.

2.2 Roth curve

A Roth curve is a closed parametric curve, , defined by a set of control points , where is the degree of the curve and we may choose it to be any positive integer, depending on how many control points are desired. For convenience, we will refer to the total number of control points as , where . For notational simplicity, we will drop the dependence of in . As a function of , the curve can be viewed as the trajectory of a particle over time. At every time , the particle’s location is defined as some convex combination of all control points. The weight accorded to each control point in this convex combination varies with time according to a set of basis functions, , where and for all .

| (1) | |||||

| (2) |

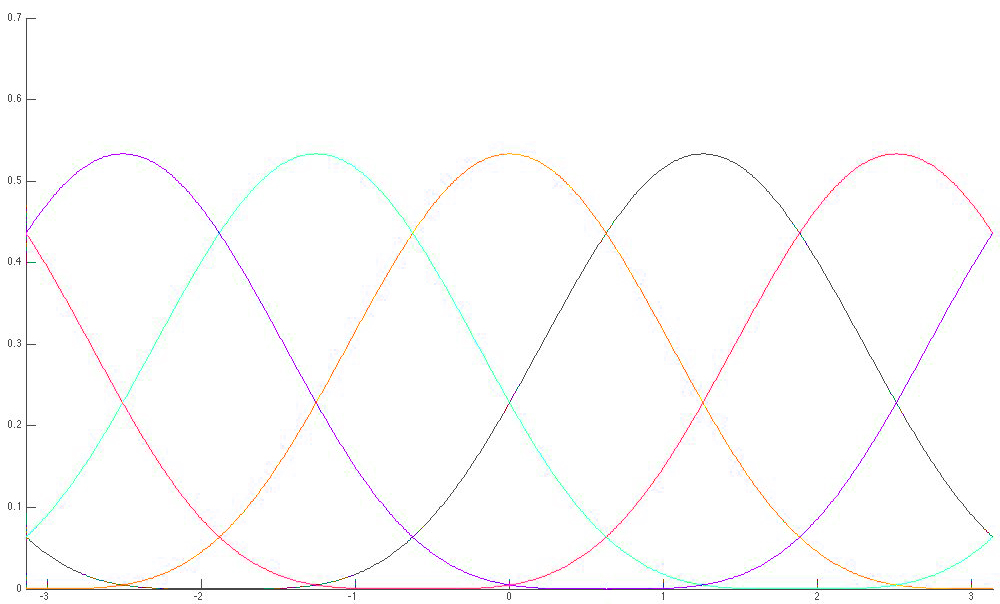

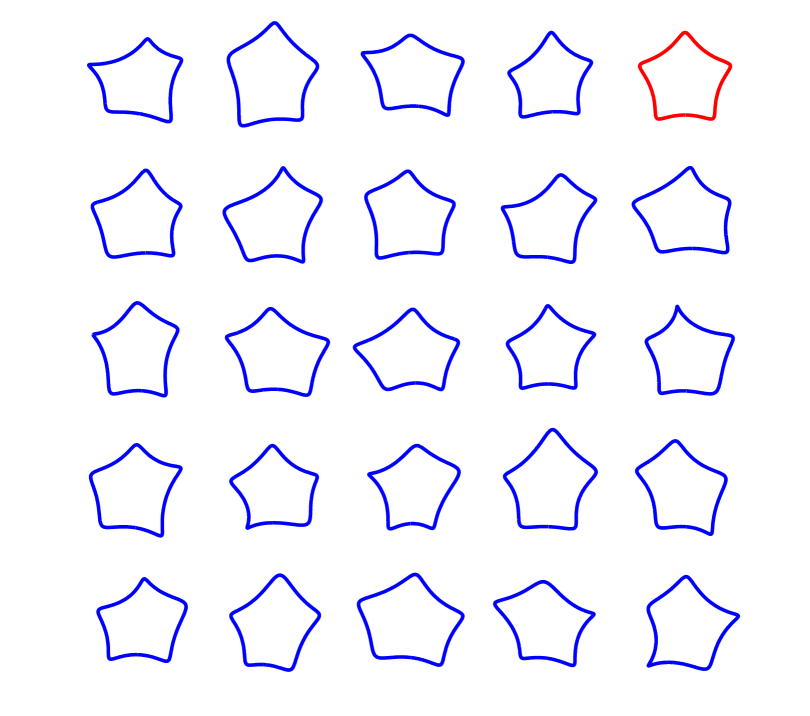

where specifies the location of the control point and is the basis function. For simplicity, we omit the superscript denoting a basis function’s degree, unless it requires special attention. This representation is a type of Bezier curve. Refer to Figure 1 for an illustration of the Roth basis functions.

|

The Roth curve has several appealing properties:

-

1.

It is fully defined by a finite set of control points, despite being an infinite dimensional curve.

-

2.

It is always closed, i.e. . This is necessary to represent the boundary of a shape.

-

3.

All basis functions are nonlinear translates of each other, and are evenly spaced over the interval . They can be cyclically permuted without altering the curve. This implies that each control point exerts the same ‘influence’ over the curve. The influence of the control points is illustrated in §3.3.

-

4.

A degree 1 Roth curve having 3 control points is always a circle or ellipse.

-

5.

Any closed curve can be approximated arbitrarily well by a Roth curve, for some large degree . This is because the Roth basis, for a given , spans the vector space of trigonometric polynomials of degree and as , the basis functions span the vector space of Fourier series. We elaborate on this in §3.

-

6.

Roth curves are infinitely smooth in the sense that they are infinitely differentiable ().

2.3 Deforming a Roth curve

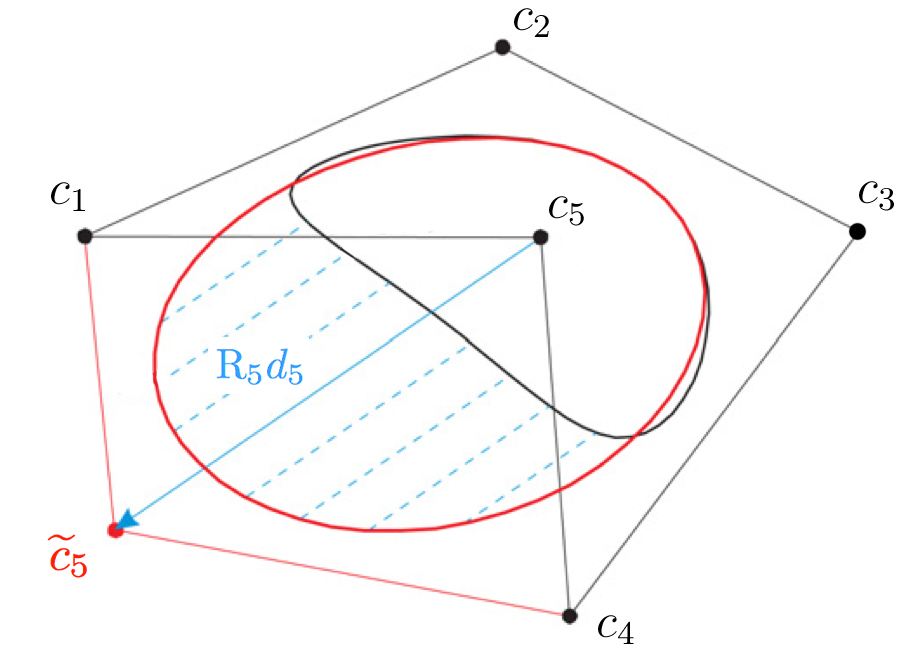

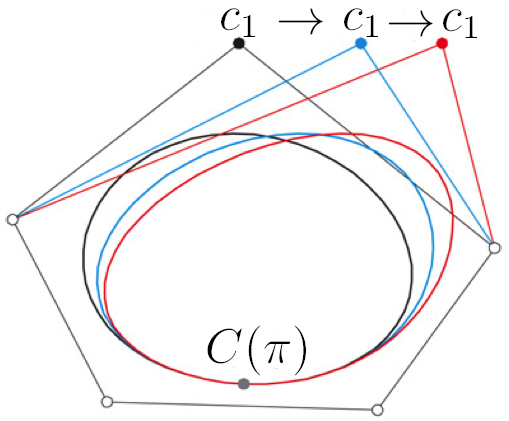

A Roth curve can be deformed simply by translating some of its control points. We now formally define deformation and illustrate it in Figure 2.

|

-

Definition

Suppose we are given two Roth curves,

(3) where for each , , and is a rotation matrix. Then, we say that is deformed into by the deformation vectors .

Each orients the deformation vector relative to the original curve’s surface. As a result, positive values for the y-component of always correspond to outward deformation, negative values always correspond to inward deformation, and ’s x-component corresponds to deformation parallel to the surface. We will call a deformation-orienting matrix. In precise terms,

| (4) |

where is the angle of the curve’s tangent line at , the point where the control point has the strongest influence: . can be obtained by computing the first-derivative of the Roth curve, also known as its hodograph.

-

Definition

The hodograph of a Roth curve is given by:

(5) (6) where . If we view as the trajectory of a particle, intuitively gives the velocity of the particle at point .

We can now use simple trigonometry to determine that

| (7) |

Note that is ultimately just a function of .

Next, we show how to alter the scale of deformation, using an important concept called degree elevation.

-

Definition

Given any Roth curve, we can use degree elevation to re-express the same curve using a larger number of control points (a higher degree). More precisely, if we are given a curve of degree , , we can elevate its degree by any positive integer , to obtain a new degree elevated curve: such that for all . In , each new degree-elevated control point, , can be defined in terms of the original control points, :

It is crucial to note that the ‘influence’ of a single control point shrinks after degree elevation. We quantify this intuition in §3.3. This is because the curve is now shared by a greater total number of control points. This implies that after degree-elevation, the translation of any single control point will cause a smaller, finer-scale deformation to the curve’s shape. Thus, degree elevation can be used to adjust the scale of deformation. We exploit this strategy in the random shape process proposed in §2.5.

To that end, we first rewrite all of the concepts described above in more compact vector notation. Note that the formulas for degree elevation, deformation, the hodograph and the curve itself all simply involve linear operations on the control points.

2.4 Vector notation

First, we rewrite the control points in a ‘stacked’ vector of length .

| (8) |

The formula for a Roth curve given in (1) can be rewritten as:

| (9) | |||||

| (10) |

The formula for the hodograph given in (5) is rewritten as:

| (11) |

Deformation can be written as:

| (12) |

where is a block diagonal matrix using matrices . We call the stacked deformation-orientating matrix. Note that is a function of , because each depends on . Degree elevation can be written as the linear operator, :

where

We will maintain this vector notation throughout the rest of the paper.

2.5 Random Shape Process

The random shape process starts with some initial Roth curve, specified by an initial set of control points, . From here on, we will refer to all curves by the stacked vector of their control points, . Then, drawing on the deformation and degree-elevation operations defined earlier, we repeatedly apply the following recursive operation times:

| (13) |

resulting in a final curve . In other words, the process simply has two steps: (i) degree elevate the current curve, (ii) randomly deform it, and repeat a total of times. Note that this random process specifies a probability distribution over .

We now elaborate on the details of this recursive process. The parameters of the process are:

-

1.

, the number of steps in the process

-

2.

, the degree of the curve , for each . The sequence of must be strictly monotonically increasing. For convenience, we will denote the number of control points at a certain step to be .

-

3.

, the average set of deformations applied at step . Note that this vector contains a stack of deformations, not just one.

-

4.

, the covariance in the set of deformations applied at step .

According to these parameters, is then the degree-elevation matrix going from degree to , is a -variate normal distribution and is the stacked deformation orienting matrix.

We take special care in defining the initial curve, . We choose to be degree , which guarantees that it is an ellipse. For , we define each control point as:

and where each is a random deformation vector. In words: we start with a curve that is just a point at the origin, , and apply three random deformations which are rotated by a radially symmetric amount: and (note that the final deformations are not radially symmetric, since each is randomly drawn). We will write this in vector notation as:

The deformations essentially ‘inflate’ the curve into some ellipse. This completes our definition of the random shape process.

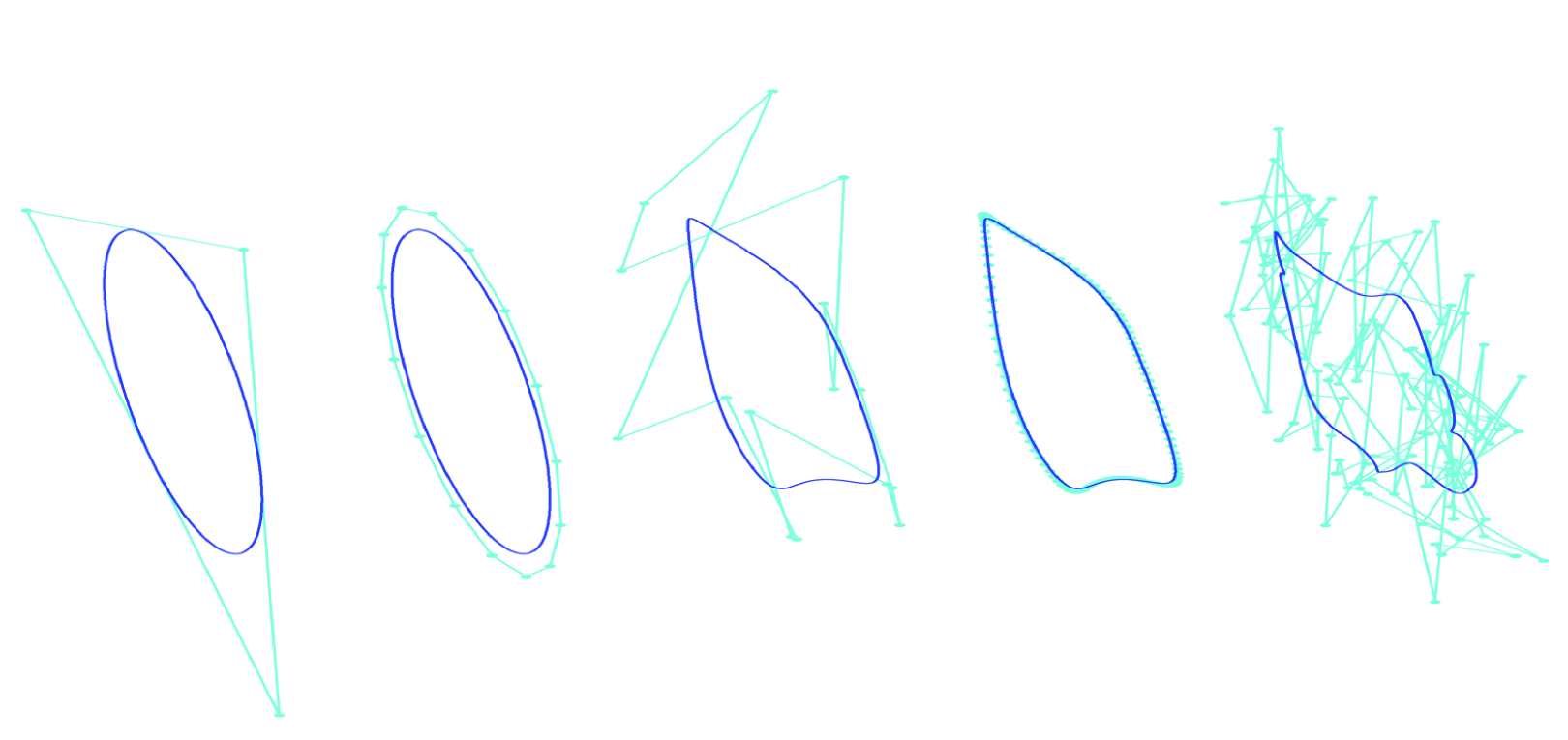

We now give some intuition about the process and each of its parameters, and define several additional concepts which make the process easier to interpret. The random shape process gives a multiscale representation of shapes, because each step in the process produces increasingly fine-scale deformations, through degree-elevation.

is then the number of scales or ‘resolutions’ captured by the process. Each specifies the number of control points at resolution . We will use to denote the class of shapes that can be exactly represented by a degree Roth curve. See the definition of in §3 for a formal characterization of a special case of . If is monotonically increasing, then . Thus, the deformations roughly describe the additional details gained going from to .

is the mean deformation at level . Based on , we define the ‘central shape’ of the random shape process, as:

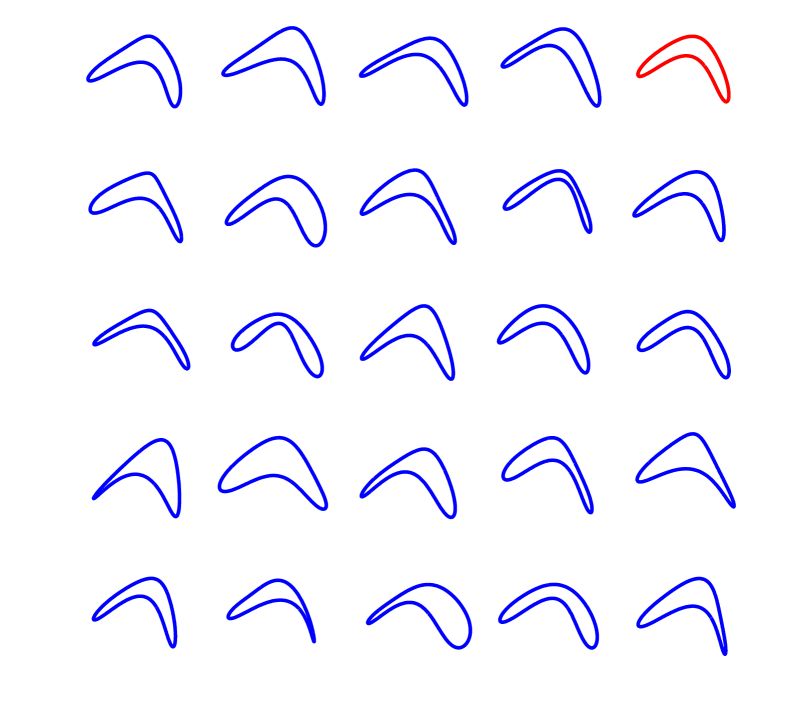

Note that is simply the deterministic result of the random shape process when each , rather than being drawn from a distribution centered on . Thus, all shapes generated by the process tend to be deformed versions of the central shape. We illustrate this in Figure 4. If the random shape process is used to describe a population of shapes, the central shape provides a good summary.

|

|

determines the covariance of the deformations at level . This naturally controls the variability among shapes generated by the process. If the variance is very small, all shapes will be very similar to the central shape. can also be chosen to induce correlation between deformation vectors at the same resolution, in the typical way that correlation is induced between dimensions of a multivariate normal distribution. This allows us to incorporate higher-level assumptions about shape, such as reflected or radial symmetry. For example, if and , we can specify perfect correlation in , such that and . The resulting shapes are guaranteed to be symmetrical along an axis of reflection.

3 Properties of the Prior

3.1 General notations

The supremum and -norm are denoted by and , respectively. We let denote the norm of , the space of measurable functions with -integrable th absolute power. The notation is used for the space of continuous functions endowed with the uniform norm. For , we let denote the Hölder space of order , consisting of the functions that have continuous derivatives with the th derivative being Lipshitz continuous of order . We write “” for inequality up to a constant multiple and to denote the order statistics of the set .

3.2 Support

Let the Hölder class of periodic functions on of order be denoted by . Define the class of closed parametric curves having different smoothness along different coordinates as

| (14) |

Consider for simplicity a single resolution Roth curve with control points . Assume we have independent Gaussian priors on each of the two coordinates of for , i.e., . Denote the prior for by . defines an independent Gaussian process for each of the components of . Technically speaking, the support of a prior is defined as the smallest closed set with probability one. Intuitively, the support characterizes the variety of prior realizations along with those which are in their limit. We construct a prior distribution to have large support so that the prior realizations are flexible enough to approximate the true underlying target object. As reviewed in ?, the support of a Gaussian process (in our case ) is the closure of the corresponding reproducing kernel Hilbert space (RKHS). The following Lemma 3.1 describes the RKHS of , which is a special case of Lemma 2 in ?.

Lemma 3.1

The RKHS of consists of all functions of the form

| (15) |

where the weights range over . The RKHS norm is given by

| (16) |

The following theorem describes how well an arbitrary closed parametric surface can be approximated by the elements of for each . Refer to Appendix A for a proof.

Theorem 3.2

For any fixed , there exists with such that

| (17) |

for some constants independent of .

This shows that the Roth basis expansion is sufficiently flexible to approximate any closed curve arbitrarily well. Although we have only shown large support of the prior under independent Gaussian priors on the control points, the multiscale structure should be even more flexible and hence rich enough to characterize any closed curve. We can also expect minimax optimal posterior contraction rates using the prior similar to Theorem 2 in ? for suitable choices of prior distributions on .

3.3 Influence of the control points

The unique maximum of basis function defined in (1) is at , therefore the control point has the most significant effect on the shape of the curve in the neighborhood of the point . Note that vanishes at , thus has no effect on the corresponding point i.e., the point of the curve is invariant under the modi cation of . The control point affects all other points of the curve, i.e. the curve is globally controlled. These properties are illustrated in Figure 5.

However, we emphasize following Proposition 5 in ? that while control points have a global effect on the shape, this in uence tends to be local and dramatically decreases on further parts of the curve, especially for higher values of .

4 Inference from Point Cloud Data

We now demonstrate how our multiscale closed curve process can be used as a prior distribution for fitting a shape to a 2D point cloud. As a byproduct of fitting, we also obtain an intuitive description of the shape in terms of deformation vectors.

Assume that the data consist of points concentrated near a 2D closed curve. Since a Roth curve can be thought of as a function expressing the trajectory of a particle over time, we view each data point, , as a noisy observation of the particle’s location at a given time ,

| (18) |

(18) shares a similar form to nonlinear factor models, where is the latent factor score. We start by specifying the likelihood and prior distributions conditionally on the s. We now rewrite the point cloud model in stacked vector notation. Defining

we have

| (19) |

where is as defined in (11).

To fit a Roth curve through the data, we want to infer , the posterior distribution over control points , given the data points . To compute this, we must specify , the likelihood, and , the prior distribution over Roth curves specified by . Refer to (13) in §2.5 for a multiscale prior . From (19), we can specify the likelihood function as,

| (20) |

This completes the Bayesian formulation for inferring , given and . In §7, we describe the exact method for performing Bayesian inference. As a byproduct of inference, we also infer the deformation vectors for , . Due to their multiscale organization, they may describe shape in a more intuitive manner than .

We propose a prior for conditionally on , which is designed to be uniform over the curve’s arc-length. This prior is motivated by the frequentist literature on arc-length parameterizations (?), but instead of replacing the points with in a deterministic preliminary step prior to statistical analysis, we use a Bayesian approach to formally accommodate uncertainty in parameterization of the points. Define the arc-length function

| (21) |

Note that is monotonically increasing and satisfies where is the length of the curve conditional on the control points and is given by .

Given , we draw and set . Thus we obtain a prior for the ’s which is uniform along the length of the curve. We will discuss a novel griddy Gibbs algorithm for implementing the arc-length parametrization in a fully Bayesian framework in §7.

5 Inferences from Pixelated Image Data

In this section, we define a hierarchical Bayesian model for point cloud data concentrated near a 2d closed curve. We also show how image data gives a bonus estimate for the object’s surface orientation, at each point . We incorporate this extra information into our model to obtain an even better shape fit, with essentially no sacrifice in computational efficiency.

A grayscale image can be treated as a function . The gradient of this function, is a vector field, where is a vector pointing in the direction of steepest ascent. In computer vision, it is well known that the gradient norm of the image, approximates a ‘line-drawing’ of all the high-contrast edges in the image. Our goal is to fit the edges in the image with our shape model.

In practice, an image is discretized into pixels but a discrete version of the gradient can still be computed by taking the difference between neighboring pixels, such that one gradient vector, is computed at each pixel. The image’s gradient norm is then just another image, where each pixel .

Finally, we extract a point cloud: where is some user-specified threshold. Each point can still be matched to a gradient vector . For convenience, we will re-index them as and . The gradient vector points in the direction of steepest change in contrast, i.e. it points across the edge of the object, approximating the object’s surface normal. The surface orientation is then just .

In the following, we describe a model relating a Roth curve to each . This model can be used together with the model we specified earlier for the .

5.1 Modeling surface orientation

Denote by the velocity vector of the curve at the parameterization location . Note that is always tangent to the curve. Since each points roughly normal to the curve, we can rotate all of them by 90 degrees, , and treat each as a noisy estimate of ’s orientation. Note that we cannot rotate the vector by 90 degrees and directly treat it as a noisy observation of . In particular, ’s magnitude bears no relationship to the magnitude of : is the rate of change in image brightness when crossing the edge of the shape, while describes the speed at which the curve passes through .

Suppose we did have some noisy observation of , denoted . Then, we could have specified the following linear model relating the curve to the ’s:

| (22) | |||||

| (23) |

for where . Instead, we only know the angle of , . In §7, we show that using this model, we can still write the likelihood for , by marginalizing out the unknown magnitude of . The resulting likelihood still results in conditional conjugacy of the control points.

6 Fitting a population of shapes

We can easily generalize the methodology above to fit a collection of separate point clouds, and characterize the resulting population of shapes, represented by a closed curve. Continuing the vector notation earlier, we will represent the point cloud in a stacked vector , the corresponding parametrizations , surface orientations , and the control points corresponding to that point cloud as . Finally, we will denote the deformations which produce the curve for shape as for each step in the shape process.

Up to this point, the parameters specifying each closed curve are separate and independent. This is sufficient if we just wish to fit each point cloud independently. However, now we aim to characterize all the curves as a single population. To do so, we treat each curve as an observation generated from a single random shape process. We borrow information across the population of curves through sharing hyperparameters of our multiscale deformation model or by shrinking to a common value by assigning a hyperprior. These inferred hyperparameters and the uncertainty in estimating them will effectively characterize the whole population of shapes. This is a hierarchical modeling strategy that is often used to characterize a population.

The hyperparameters of our random shape process are and for . We can treat each as an unknown and place the following prior on it:

| (24) |

By assuming that all shapes are generated from a single shape process with unknown ’s, we are basically assuming that all shapes are deformed versions of one ‘central shape’ (defined earlier in §2.5) where the variability in deformation at scale is .

Now suppose that each shape is rotated to a different angle. In this case, it may not be ideal to share all the deformations, because this would assume that all shapes are at exactly the same angle. One solution is to simply make the very large, allowing large variation in the . These deformations define the coarsest outline of each shape, which may be an ellipse. If these have wide variance, each coarse outline may be rotated to a different angle. Furthermore, since all subsequent deformations are defined relative to this initial coarse outline, different shapes can share the exact same deformations even if they are rotated to different angles.

Note that with this modification, all rotated shapes have essentially been aligned with each other, because all shape details expressed by the deformations for have been matched up.

7 Posterior computation

7.1 An approximation to the deformation-orienting matrix for the deformation vector

Observe that since may not be linear in , due to the in (7), the full conditional distribution of is not conditionally conjugate. Below, we develop a novel approximation to the rotation matrix of . The approximation ensures that is linear in the level control points which results in conditional conjugacy of . We resort to a Metropolis Hastings algorithm with an independent proposal suggested by the approximation to correct for the approximation error. For , let . Recall that is the hodograph evaluated at . \prop in (4) can be approximated by , where are approximate rotation matrices given by

| (25) |

and is an approximation for the length of the curve formed by the control points .

-

Proof

Refer to the definition of in (4). First we derive an approximation for and where is the angle at the point of the curve specified by . We write in vector notation

(26) Using the identities

(27) we obtain,

The magnitude of the velocity vector at the point can be well approximated by the quantity in view of the uniform arc-length parameterization discussed in §LABEL:sec:para. Hence

Plugging in in a fixed approximation for the length of the curve , we obtain the required result.

7.2 Conditional posteriors for and

Before deriving the conditional posteriors, we first introduce some simplifying notation. Recall from §2.5 that

Using the approximation , we then have . In the new arrangement, we can now cleanly write in terms of the base case, .

Hence can be approximated by

Note that the terms in are the source of approximation error. Given this expression, we can easily write in terms of and ,

We can also write in terms of and , for any

Note that as approaches , involves fewer factors and the amount of approximation error decreases.

We are now ready to derive the conditional posteriors for and (as in §5, we are using a superscript to denote variables for the shape). First, we claim that all posteriors can be written in the following form for generic ‘’, ‘’ and ‘’.

| (28) | |||||

| (29) | |||||

Note that each conditional posterior is simply a multivariate normal. We now prove that each posterior can be rearranged to match the form of (28) - (29).

7.3 Conditional update for

Where and . The conditional posterior distribution is then

where

7.4 Likelihood contribution from surface-normals

Define

| (30) | |||||

| (31) |

The likelihood contribution of the tangent directions ensures conjugate updates of the control points for a multivariate normal prior.

-

Proof

Recall the noisy tangent director vectors ’s and ’s in (22). Use a simple reparameterization

where only s are observed and ’s aren’t. Observe that

(32) Assuming a non-informative prior for the ’s on , the marginal likelihood of the tangent direction given and the parameterization is given by

It turns out the above expression has a closed form given by

The likelihood for the is given by

where

and is a matrix. Clearly, an inverse-Gamma for and a multivariate normal prior for the control points are conjugate choices.

7.5 Griddy Gibbs updates for the parameterizations

We discretize the possible values of to obtain a discrete approximation of its conditional posterior:

We can make this arbitrarily accurate, by making a finer summation over .

8 Simulation study

9 Case study

A Proofs of main results

Proof of Theorem 3.2: From (?) and observing that the basis functions span the vector space of trigonometric polynomials of degree at most , it follows that given any , there exists , with , such that for some constants . Setting , we have

with where .

Proof of Lemma 3.1:

REFERENCES

- [2] [] Amenta, N., Bern, M., and Kamvysselis, M. (1998), A new Voronoi-based surface reconstruction algorithm,, in Proceedings of the 25th annual conference on Computer graphics and interactive techniques, ACM, pp. 415–421.

- [4] [] Aziz, N., Bata, R., and Bhat, S. (2002), “Bezier surface/surface intersection,” Computer Graphics and Applications, IEEE, 10(1), 50–58.

- [6] [] Barnhill, R. (1985), “Surfaces in computer aided geometric design: A survey with new results,” Computer Aided Geometric Design, 2(1-3), 1–17.

- [8] [] Bhattacharya, A. (2008), “Statistical analysis on manifolds: A nonparametric approach for inference on shape spaces,” Sankhya: The Indian Journal of Statistics, 70(Part 3), 0–43.

- [10] [] Bhattacharya, A., and Dunson, D. (2010), “Nonparametric Bayesian density estimation on manifolds with applications to planar shapes,” Biometrika, 97(4), 851.

- [12] [] Bhattacharya, A., and Dunson, D. (2011a), “Nonparametric Bayes Classification and Hypothesis Testing on Manifolds,” Journal of Multivariate Analysis, . to appear.

- [14] [] Bhattacharya, A., and Dunson, D. (2011b), “Strong consistency of nonparametric Bayes density estimation on compact metric spaces,” Annals of the Institute of Statistical Mathematics, . to appear.

- [16] [] Bhattacharya, R., and Bhattacharya, A. (2009), “Statistics on manifolds with applications to shape spaces,” Publishers page, p. 41.

- [18] [] Bookstein, F. (1986), “Size and shape spaces for landmark data in two dimensions,” Statistical Science, 1(2), 181–222.

- [20] [] Bookstein, F. (1996a), Landmark methods for forms without landmarks: localizing group differences in outline shape,, in Mathematical Methods in Biomedical Image Analysis, 1996., Proceedings of the Workshop on, IEEE, pp. 279–289.

- [22] [] Bookstein, F. (1996b), Shape and the information in medical images: A decade of the morphometric synthesis,, in Mathematical Methods in Biomedical Image Analysis, 1996., Proceedings of the Workshop on, IEEE, pp. 2–12.

- [24] [] Bookstein, F. (1996c), “Standard formula for the uniform shape component in landmark data,” NATO ASI SERIES A LIFE SCIENCES, 284, 153–168.

- [26] [] Cinquin, P., Chalmond, B., and Berard, D. (1982), “Hip prosthesis design,” Lecture Notes in Medical Informatics, 16, 195–200.

- [28] [] Désidéri, J., Abou El Majd, B., and Janka, A. (2007), “Nested and self-adaptive Bézier parameterizations for shape optimization,” Journal of Computational Physics, 224(1), 117–131.

- [30] [] Désidéri, J., and Janka, A. (2004), Multilevel shape parameterization for aerodynamic optimization–application to drag and noise reduction of transonic/supersonic business jet,, in European Congress on Computational Methods in Applied Sciences and Engineering (ECCOMAS 2004), E. Heikkola et al eds., Jyväskyla, pp. 24–28.

- [32] [] Dryden, I., and Gattone, S. (2001), “Surface shape analysis from MR images,” Proc. Functional and Spatial Data Analysis, pp. 139–142.

- [34] [] Dryden, I., and Mardia, K. (1993), “Multivariate shape analysis,” Sankhyā: The Indian Journal of Statistics, Series A, pp. 460–480.

- [36] [] Dryden, I., and Mardia, K. (1998), Statistical shape analysis, Vol. 4 John Wiley & Sons New York.

- [38] [] Gong, L., Pathak, S., Haynor, D., Cho, P., and Kim, Y. (2004), “Parametric shape modeling using deformable superellipses for prostate segmentation,” Medical Imaging, IEEE Transactions on, 23(3), 340–349.

- [40] [] Hagen, H., and Santarelli, P. (1992), Variational design of smooth B-spline surfaces,, in Topics in surface modeling, Society for Industrial and Applied Mathematics, pp. 85–92.

- [42] [] Hastie, T., and Stuetzle, W. (1989), “Principal curves,” Journal of the American Statistical Association, pp. 502–516.

- [44] [] Kent, J., Mardia, K., Morris, R., and Aykroyd, R. (2001), “Functional models of growth for landmark data,” Proceedings in Functional and Spatial Data Analysis, pp. 109–115.

- [46] [] Kume, A., Dryden, I., and Le, H. (2007), “Shape-space smoothing splines for planar landmark data,” Biometrika, 94(3), 513–528.

- [48] [] Kurtek, S., Srivastava, A., Klassen, E., and Ding, Z. (2011), “Statistical Modeling of Curves Using Shapes and Related Features,” , .

- [50] [] Lang, J., and Röschel, O. (1992), “Developable (1, n)-Bézier surfaces,” Computer Aided Geometric Design, 9(4), 291–298.

- [52] [] Madi, M. (2004), “Closed-form expressions for the approximation of arclength parameterization for Bezier curves,” International journal of applied mathematics and computer science, 14(1), 33–42.

- [54] [] Malladi, R., Sethian, J., and Vemuri, B. (1994), “Evolutionary fronts for topology-independent shape modeling and recovery,” Computer Vision ECCV’94, pp. 1–13.

- [56] [] Malladi, R., Sethian, J., and Vemuri, B. (1995), “Shape modeling with front propagation: A level set approach,” Pattern Analysis and Machine Intelligence, IEEE Transactions on, 17(2), 158–175.

- [58] [] Mardia, K., and Dryden, I. (1989), “The statistical analysis of shape data,” Biometrika, 76(2), 271–281.

- [60] [] Mokhtarian, F., and Mackworth, A. (1992), “A theory of multiscale, curvature-based shape representation for planar curves,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 14(8), 789–805.

- [62] [] Mortenson, M. (1985), Geometrie modeling John Wiley, New York.

- [64] [] Muller, H. (2005), Surface reconstruction-an introduction,, in Scientific Visualization Conference, 1997, IEEE, p. 239.

- [66] [] Pati, D., and Dunson, D. (2011), “Bayesian modeling of closed surfaces through tensor products,” , . (submitted to Biometrika).

- [68] [] Persoon, E., and Fu, K. (1977), “Shape discrimination using Fourier descriptors,” Systems, Man and Cybernetics, IEEE Transactions on, 7(3), 170–179.

- [70] [] Rossi, D., and Willsky, A. (2003), “Reconstruction from projections based on detection and estimation of objects–Parts I and II: Performance analysis and robustness analysis,” Acoustics, Speech and Signal Processing, IEEE Transactions on, 32(4), 886–906.

- [72] [] Róth, Á., Juhász, I., Schicho, J., and Hoffmann, M. (2009), “A cyclic basis for closed curve and surface modeling,” Computer Aided Geometric Design, 26(5), 528–546.

- [74] [] Sato, Y., Wheeler, M., and Ikeuchi, K. (1997), Object shape and reflectance modeling from observation,, in Proceedings of the 24th annual conference on Computer graphics and interactive techniques, ACM Press/Addison-Wesley Publishing Co., pp. 379–387.

- [76] [] Sederberg, T., Gao, P., Wang, G., and Mu, H. (1993), 2-D shape blending: an intrinsic solution to the vertex path problem,, in Proceedings of the 20th annual conference on Computer graphics and interactive techniques, ACM, pp. 15–18.

- [78] [] Stepanets, A. (1974), “THE APPROXIMATION OF CERTAIN CLASSES OF DIFFERENTIABLE PERIODIC FUNCTIONS OF TWO VARIABLES BY FOURIER SUMS,” Ukrainian Mathematical Journal, 25(5), 498–506.

- [80] [] Su, B., and Liu, D. (1989), Computational geometry: curve and surface modeling Academic Press Professional, Inc. San Diego, CA, USA.

- [82] [] Su, J., Dryden, I., Klassen, E., Le, H., and Srivastava, A. (2011), “Fitting Optimal Curves to Time-Indexed, Noisy Observations of Stochastic Processes on Nonlinear Manifolds,” Journal of Image and Vision Computing, .

- [84] [] van der Vaart, A., and van Zanten, J. (2008), “Reproducing kernel Hilbert spaces of Gaussian priors,” IMS Collections, 3, 200–222.

- [86] [] Whitney, H. (1937), “On regular closed curves in the plane,” Compositio Math, 4, 276–284.

- [88] [] Zahn, C., and Roskies, R. (1972), “Fourier descriptors for plane closed curves,” Computers, IEEE Transactions on, 100(3), 269–281.

- [90] [] Zheng, Y., John, M., Liao, R., Boese, J., Kirschstein, U., Georgescu, B., Zhou, S., Kempfert, J., Walther, T., Brockmann, G. et al. (2010), “Automatic aorta segmentation and valve landmark detection in C-arm CT: application to aortic valve implantation,” Medical Image Computing and Computer-Assisted Intervention–MICCAI 2010, pp. 476–483.

- [91]