Bayesian Nonparametric Submodular Video Partition for Robust Anomaly Detection

Abstract

Multiple-instance learning (MIL) provides an effective way to tackle video anomaly detection by modeling it as a weakly supervised problem, since labels are usually available only at the video level and are missing at the frame level due to the high labeling cost. We propose a novel Bayesian non-parametric submodular video partition (BN-SVP) to significantly improve MIL model training, offering a highly reliable solution for robust anomaly detection in practical settings that include outlier segments or multiple types of abnormal events. BN-SVP essentially performs dynamic non-parametric hierarchical clustering with an enhanced self-transition that groups the segments of a video into temporally consistent and semantically coherent hidden states, which can be naturally interpreted as scenes. Each segment is assumed to be generated through a non-parametric mixture process that allows segments within the same scene to vary, accommodating the dynamic and noisy nature of many real-world surveillance videos. The scene and mixture component assignments of BN-SVP also induce a pairwise similarity among segments, resulting in a non-parametric construction of a submodular set function. Integrating this function with an MIL loss effectively exposes the model to a diverse set of potentially positive instances, improving its training. A greedy algorithm is developed to optimize the submodular function and support efficient model training. Our theoretical analysis provides a strong performance guarantee for the proposed algorithm. The effectiveness of the proposed approach is demonstrated on multiple real-world anomaly video datasets, where it achieves robust detection performance.

1 Introduction

Anomaly detection from videos poses fundamental challenges, as abnormal activities are usually rare, complicated, and unbounded in nature [15]. Furthermore, segment- or frame-level labels are typically unavailable due to the high labeling cost; therefore, detection models have to rely on weak video-level labels [19]. There are two main streams of work addressing this challenging task. The first treats anomaly detection as an unsupervised learning problem [21]: an event is considered abnormal if it deviates significantly from a predefined set of normal events included in the training data [2, 26, 24]. However, a model trained on limited normal data is likely to capture only the specific characteristics present in the training dataset, so normal test events that deviate significantly from the training normal events lead to a high false alarm rate [27]. The second stream addresses this limitation by formulating the problem as multiple instance learning (MIL), which models each video as a bag and its segments (or frames) as instances within the bag [19]. The goal is to learn a model that makes effective frame-level anomaly predictions while relying only on video-level labels during training. One effective MIL objective maximizes the gap between the highest anomaly score in a positive bag and the highest anomaly score in a negative bag. This maximum score based MIL model (referred to as MMIL) outperformed the unsupervised approaches and achieved promising performance on long real-world surveillance videos [19].

However, the MMIL model has two key limitations. First, noisy outliers (segments that differ from other normal events) in both abnormal and normal videos may significantly degrade overall model performance. This is because the objective function focuses solely on a single segment (the highest-scoring one) from each of the positive and negative bags, making the training process sensitive to noise. Figures 1(a)-(b) show example normal frames from real-world surveillance videos that differ significantly from other normal frames. The first frame, from a burglary video, looks similar to an abnormal frame from an arson video. The second frame, from a shooting video, looks similar to a fighting frame. Hence, they may serve as outliers in the corresponding videos.

Second, if multiple types of abnormal events (referred to as multimodal anomalies) are present in a single abnormal video, MMIL may detect only one type of anomaly while missing other important ones, due to the limitation of its objective function. Figures 1(c)-(e) show three frames with different anomaly types from an example video in the Avenue dataset [16]. In Figure 1(c), a person is running, which is regarded as a strange action in that context [16]. Figure 1(d) shows a person waiting in place while holding an object, and Figure 1(e) shows a person walking in the wrong direction. Therefore, this single video contains multiple types of abnormal frames, leading to a multimodal scenario.

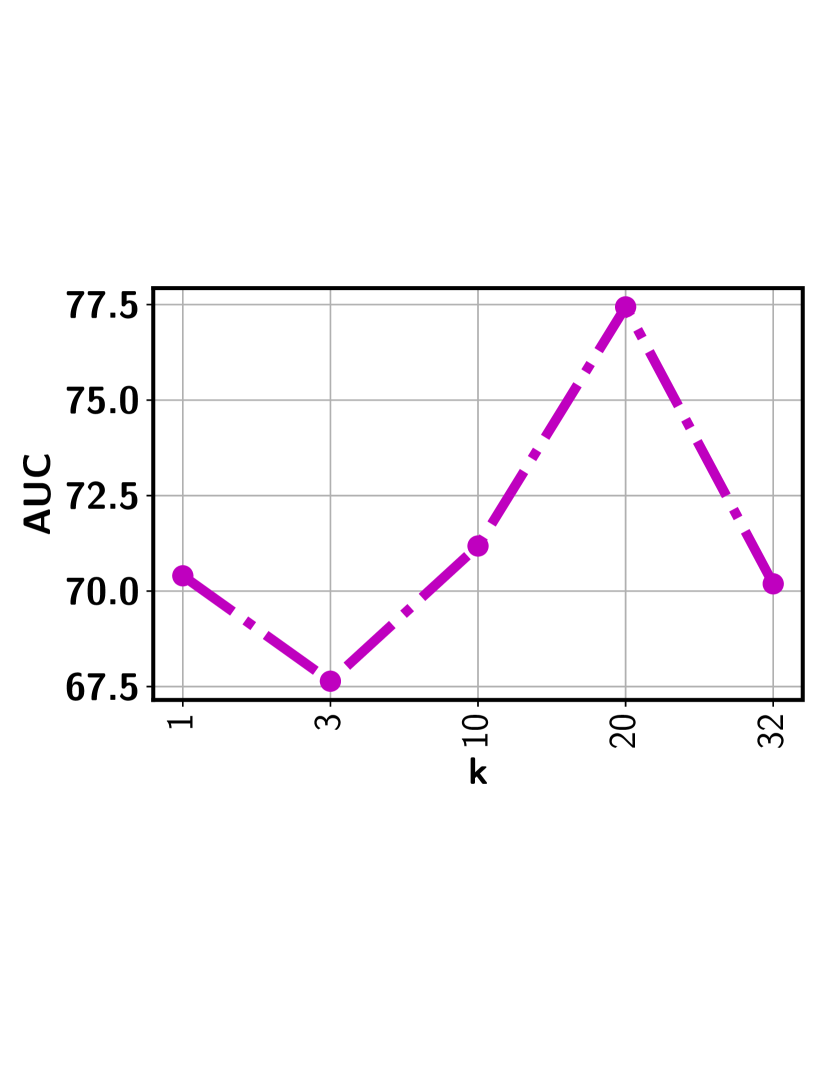

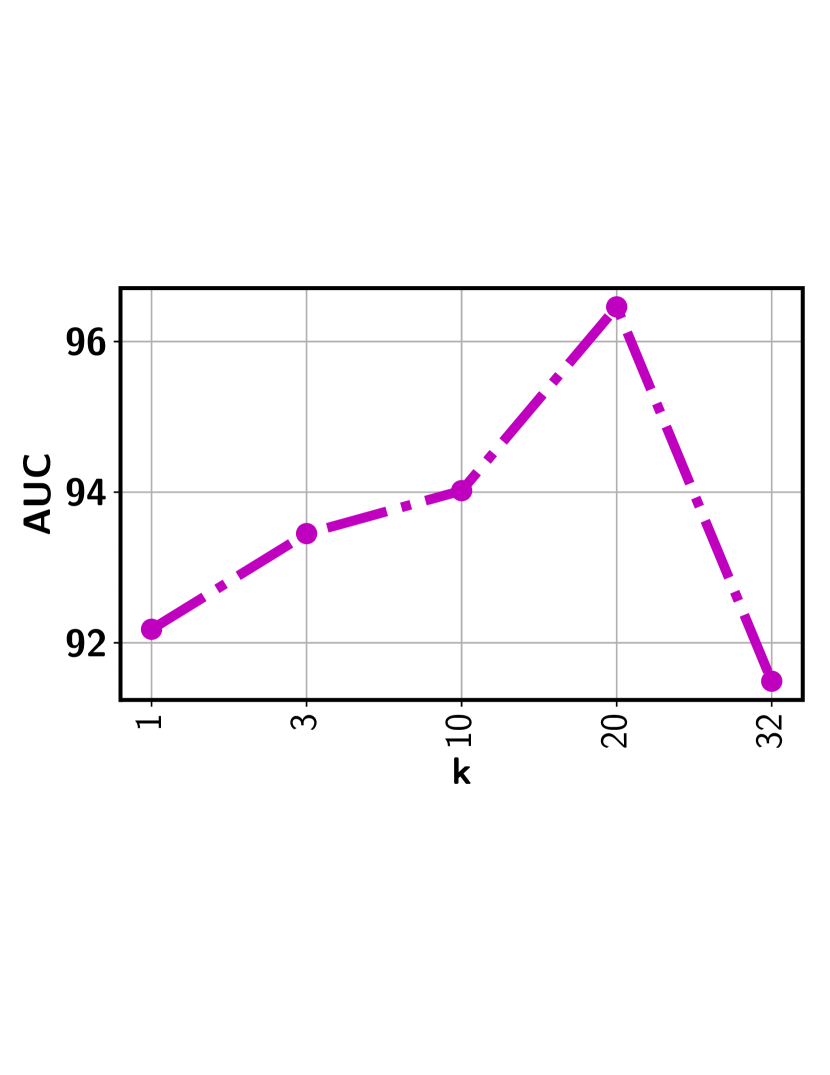

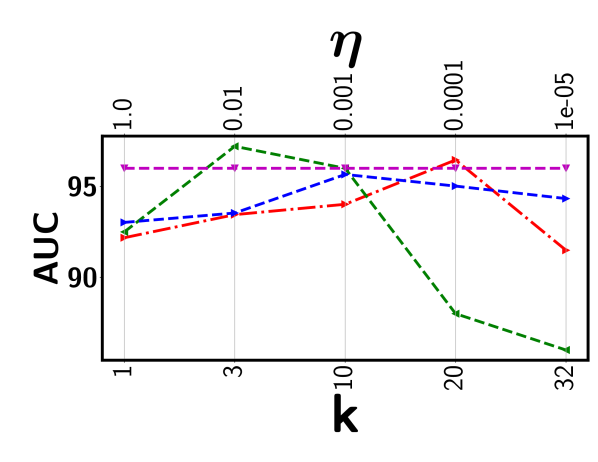

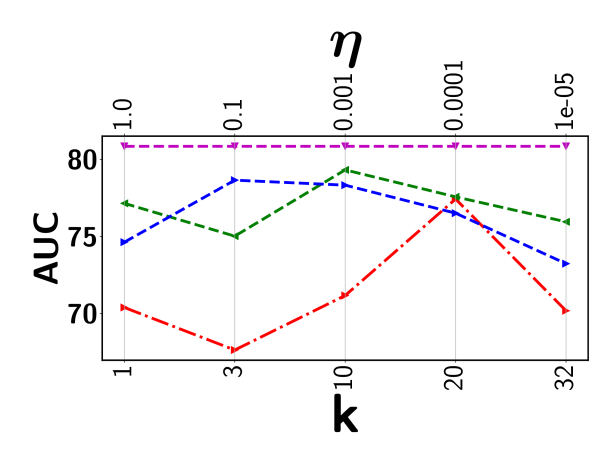

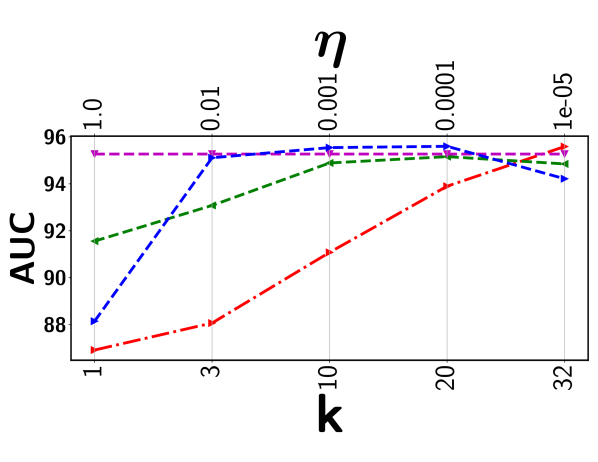

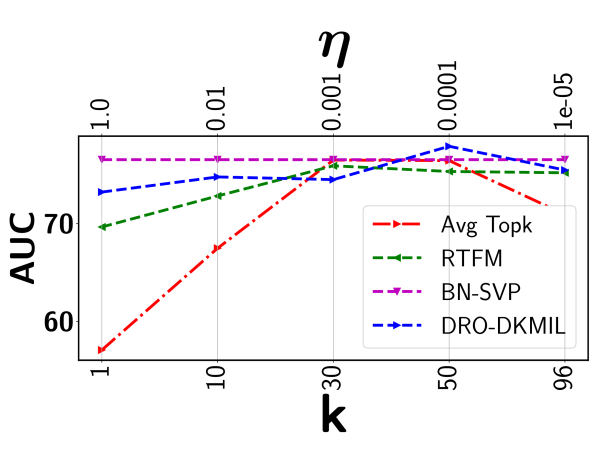

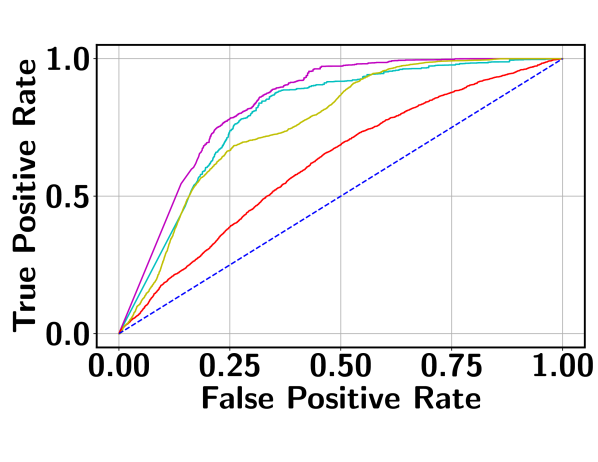

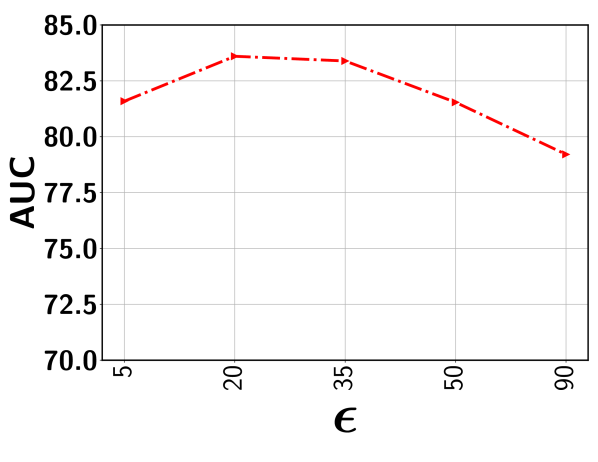

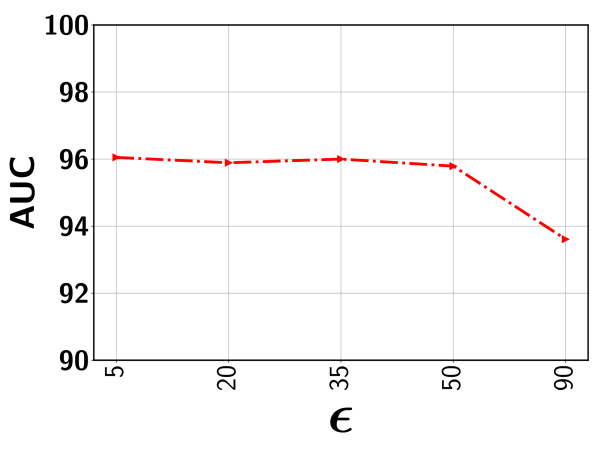

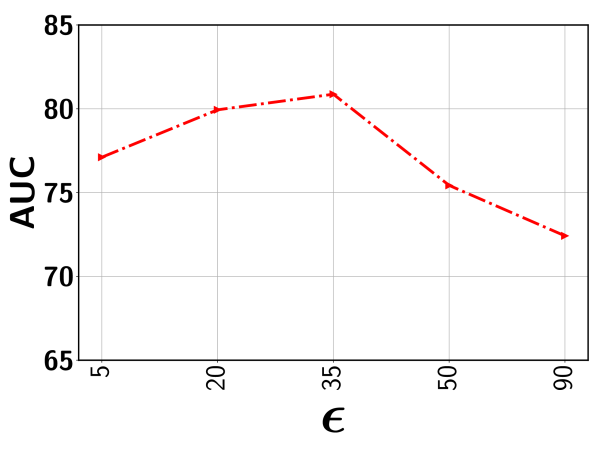

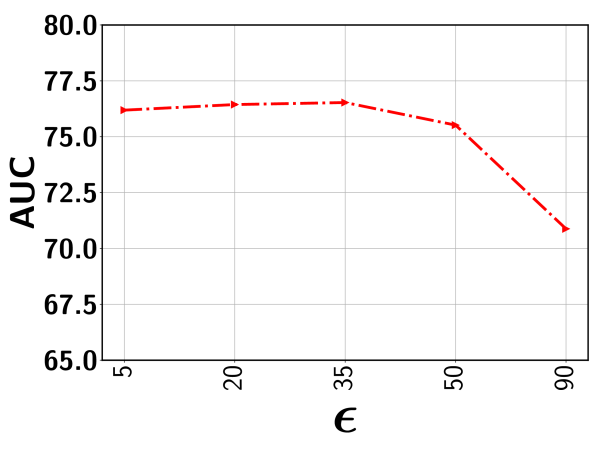

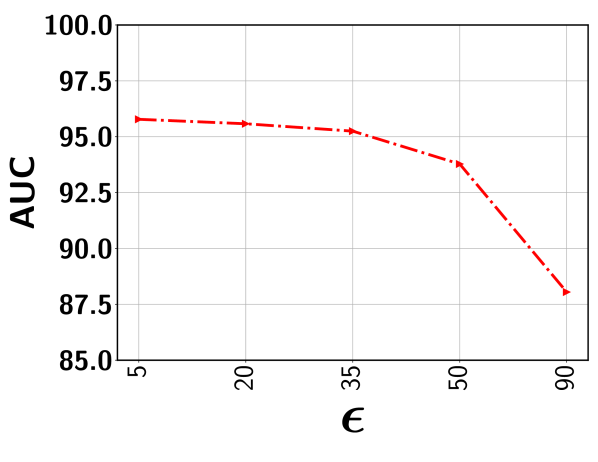

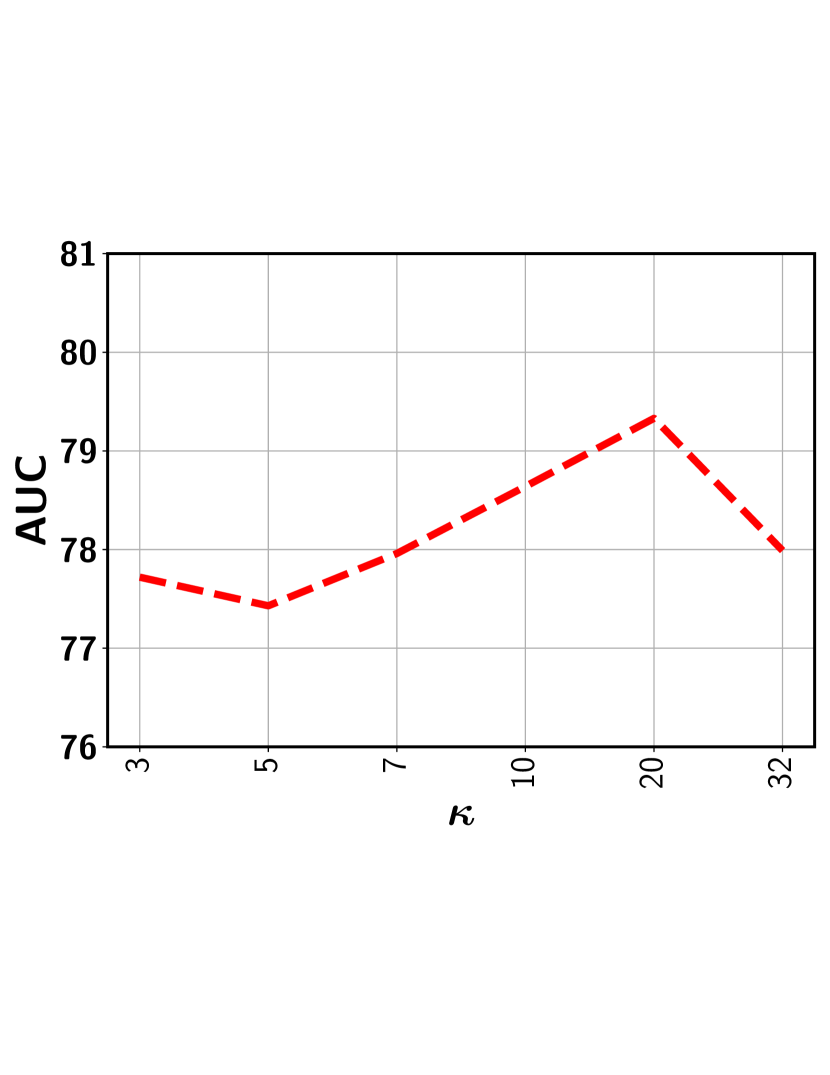

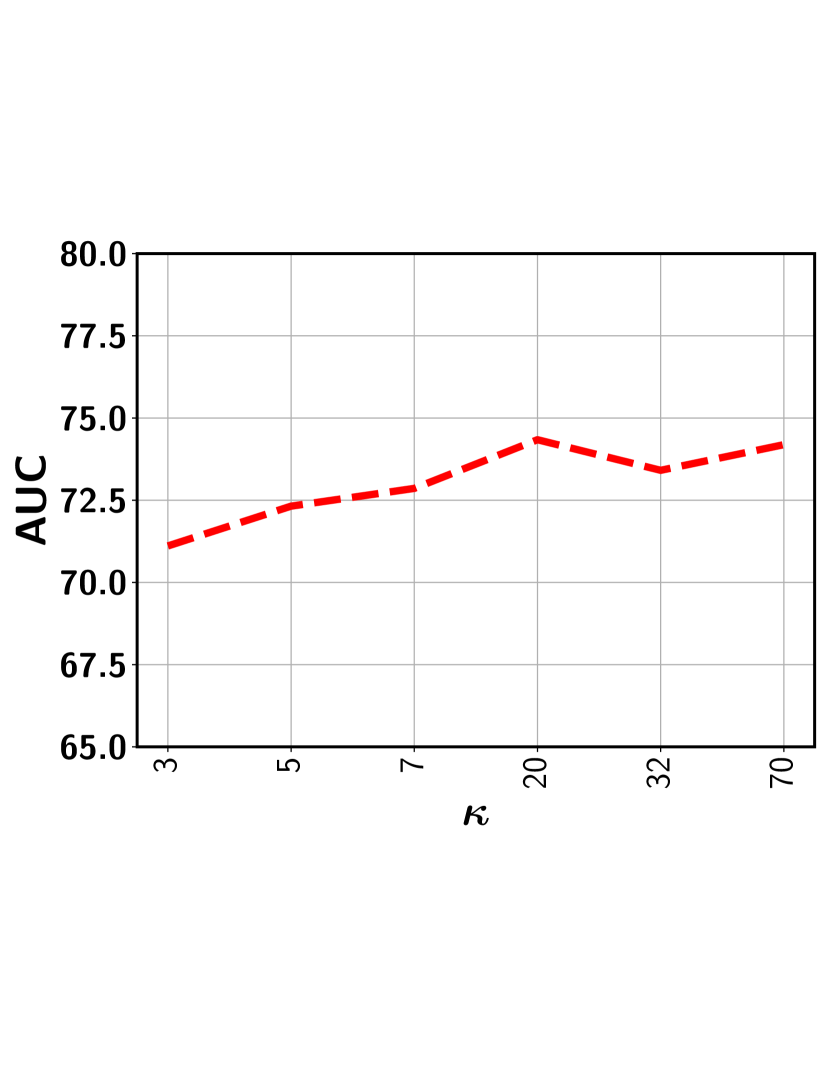

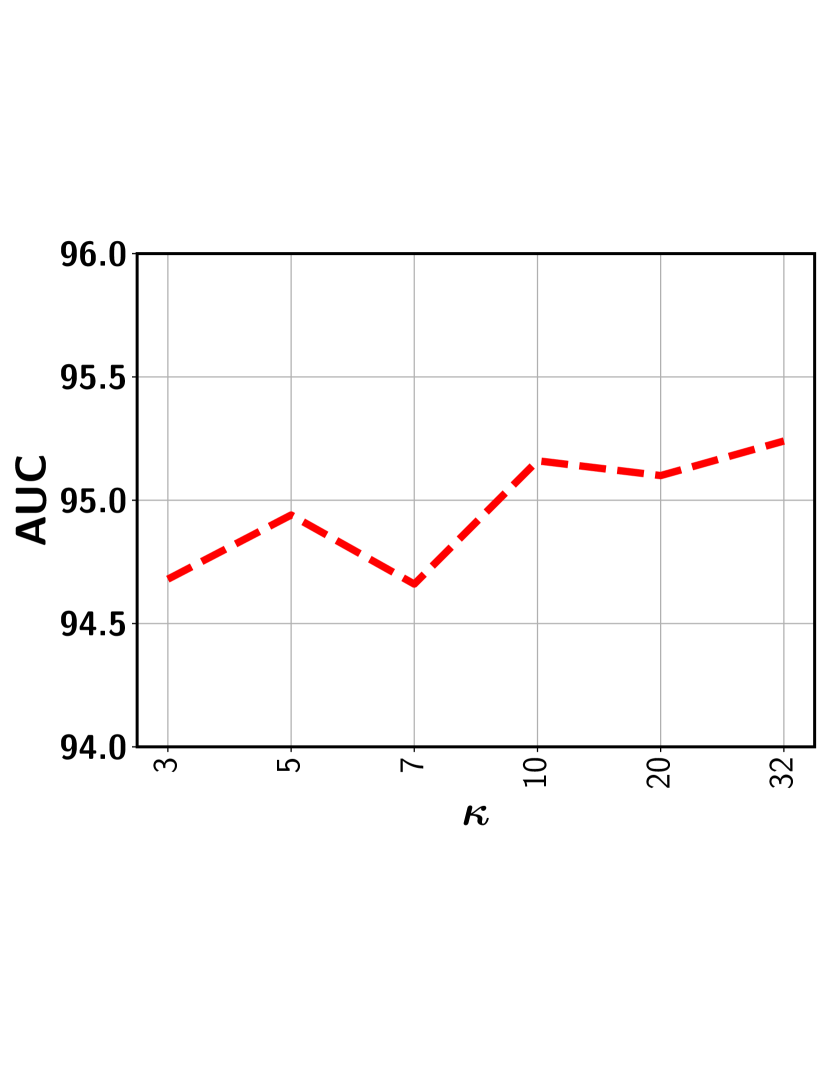

The top-k ranking loss has been adopted in an attempt to address the issues outlined above. It maximizes the gap between the mean score of the top-k predicted instances from a positive bag and that from a negative bag [22, 18]. However, using a top-k loss has inherent limitations. First, it tends to be extremely sensitive to the selected k value. Figure 2 shows highly fluctuating detection performance on two real-world surveillance video datasets. Since no frame (or segment) labels are available during model training, setting an optimal k through cross-validation is infeasible or highly costly. Furthermore, given the diversity of videos, the number of abnormal instances may vary significantly from one video to another, implying that each video should have a different k. Hence, applying the same k to all videos, as in existing approaches, fails to capture the nature of the data. Another serious but more subtle issue is that all (or most) of the selected segments may come from the same sub-sequence of the video. A consecutive set of visually similar segments is less effective for model training, making the model more likely to suffer in outlier and multimodal scenarios. As a result, top-k approaches fall short of providing reliable detection performance in most practical settings, as evidenced by our experiments.

To address these fundamental limitations of existing solutions, we propose a novel Bayesian non-parametric construction of a submodular set function, which is integrated with multiple instance learning to deliver robust video anomaly detection performance in practical settings. Instead of choosing the set of instances with the highest prediction scores, which are likely to come from a consecutive sub-sequence, maximizing a specially designed submodular function involves a more diverse set of instances and exposes the model to potentially abnormal segments throughout the video for more effective model training. Furthermore, the submodular set function is constructed in a non-parametric way, inducing a pairwise similarity among the segments of a video based on the diverse nature of the data. More specifically, an infinite Hidden Markov Model with a Hierarchical Dirichlet Process prior (HDP-HMM) [20], augmented with an enhanced self-transition, is employed to partition a video through dynamic non-parametric clustering of its segments. To better accommodate the dynamic and noisy nature of real-world surveillance videos, the emission process of the HMM is also governed by a non-parametric mixture model, allowing segments within the same hidden state to exhibit visual and spatial variations. This design is instrumental in discovering temporally consistent and semantically coherent hidden states that can be naturally interpreted as scenes. Pairwise similarity among segments is defined according to the resulting state-component structure, which leads to the construction of a submodular set function. We then develop a novel submodularity diversified MIL loss function to ensure robust anomaly detection on real-world surveillance videos with outlier and multimodal scenarios. Our key contributions include:

- Formulation of a novel submodularity diversified MIL loss that simultaneously extracts a diverse set of potentially positive instances and maximizes the gap between the mean score of these instances from a positive bag and that from a negative bag.
- Bayesian non-parametric construction of the submodular set function, which infers diversity from the video data to induce a pairwise similarity among the segments of a video and provides an upper bound on the size of the diverse set.
- A greedy algorithm that leverages the state-component hierarchical structure resulting from the non-parametric construction for submodular set function optimization and efficient model training.
- Theoretical results that ensure a strong performance guarantee for the greedy algorithm.

The proposed approach achieves state-of-the-art robust anomaly detection performance on real-world surveillance videos with noisy and multimodal scenarios.

2 Related Work

Encoding and sparse reconstruction-based approaches have been employed for anomaly detection under the assumption that abnormal events are rare and deviate from normal patterns. They aim to capture normal patterns using models such as Gaussian processes (GPs) [12] and HMMs [9], and identify anomalies as outliers based on the reconstruction loss. Sparse representation-based approaches construct a dictionary for normal events and identify events with high reconstruction error as anomalies [16]. Recent approaches consider both abnormal and normal events in the training process. For video anomaly detection, since only video-level labels are assumed to be available during model training [6], MIL offers a natural solution by modeling each video as a bag and its segments (frames) as instances of the bag. Sultani et al. proposed an MIL based approach that maximizes the gap between the highest prediction scores from a positive bag and a negative bag [19]. However, this maximum score based MIL model (i.e., MMIL) is insufficient to handle outlier and multimodal scenarios, as discussed earlier.

Top-k ranking loss based MIL models have been developed to address the limitations of the MMIL model [22, 18]. These models produce state-of-the-art detection performance, provided that a suitable k value can be assigned in advance. However, as demonstrated earlier, their detection performance is highly sensitive to the chosen k. Meanwhile, given the diverse nature of videos, applying the same k to all videos is sub-optimal. More importantly, since instance-level labels are not available during training, choosing a single k through cross-validation is infeasible or incurs a high annotation cost. Distributionally Robust Optimization (DRO) has been used to convert the top-k set into an uncertainty set that allows the model to focus on instances in proportion to their prediction scores [18]. This is equivalent to assigning soft membership to the instances included in the MIL loss function. However, the size of the uncertainty set is controlled by the radius of the uncertainty ball, which needs to be set manually. Furthermore, the model may focus more on a set of consecutive segments with the highest prediction scores and ignore other potentially positive segments.

The proposed approach constructs a novel submodular set function in a non-parametric way by inferring the diversity from data automatically. By jointly optimizing the submodular function and the MIL loss, it automatically chooses a diverse set of segments and lets the model better differentiate these (potentially positive) segments from those of a negative bag to ensure good detection performance.

3 Methodology

Following the standard MIL assumption, we assume that a positive bag contains at least one abnormal segment, whereas all segments in a negative bag are normal. Table 3 in the Appendix summarizes the major symbols and their descriptions.

3.1 Preliminaries

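Let $x_i^a$ be the $i$-th segment in the positive bag $\mathcal{B}_a$ and $x_i^n$ the $i$-th segment in the negative bag $\mathcal{B}_n$, and let $n$ be the total number of instances per bag. The maximum score based MIL (MMIL) model tries to maximize the gap between the maximum prediction score from the positive bag and that from the negative bag [19]:

$$\mathcal{L}_{\text{MMIL}}(\mathcal{B}_a, \mathcal{B}_n) = \max\Big(0,\; 1 - \max_{i \in \{1,\dots,n\}} s_i^a + \max_{i \in \{1,\dots,n\}} s_i^n\Big) \tag{1}$$

where $s_i^a$ (or $s_i^n$) is the prediction score of $x_i^a$ (or $x_i^n$), with $s_i^a, s_i^n \in [0, 1]$. As mentioned earlier, MMIL is less effective at handling outlier and multimodal scenarios. The top-k ranking loss partially addresses this limitation by maximizing the gap between the average of the $k$ highest segment predictions from the positive bag and the maximum segment prediction from the negative bag:

$$\mathcal{L}_{\text{top-}k}(\mathcal{B}_a, \mathcal{B}_n) = \max\Big(0,\; 1 - \frac{1}{k}\sum_{j=1}^{k} s_{[j]}^a + \max_{i \in \{1,\dots,n\}} s_i^n\Big) \tag{2}$$

where the positive bag segment predictions are sorted in non-increasing order, i.e., $s_{[1]}^a \geq s_{[2]}^a \geq \cdots \geq s_{[n]}^a$.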
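The two losses can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a hinge margin of 1 and bags given as 1-D arrays of segment scores; the function names `mmil_loss` and `topk_loss` are hypothetical and not taken from any original implementation. Evaluating the top-k loss on the same bag with different `k` shows how directly its value depends on that choice.

```python
import numpy as np


def mmil_loss(pos_scores, neg_scores, margin=1.0):
    """Eq. (1): hinge on the gap between the max positive-bag and max negative-bag scores."""
    pos_scores = np.asarray(pos_scores, dtype=float)
    neg_scores = np.asarray(neg_scores, dtype=float)
    return max(0.0, margin - pos_scores.max() + neg_scores.max())


def topk_loss(pos_scores, neg_scores, k, margin=1.0):
    """Eq. (2): hinge on the gap between the mean of the k highest positive-bag
    scores and the max negative-bag score."""
    pos_scores = np.asarray(pos_scores, dtype=float)
    neg_scores = np.asarray(neg_scores, dtype=float)
    top_k = np.sort(pos_scores)[::-1][:k]  # non-increasing order, keep the first k
    return max(0.0, margin - top_k.mean() + neg_scores.max())


pos = [0.9, 0.85, 0.1, 0.2]   # toy positive-bag segment scores
neg = [0.3, 0.1, 0.2, 0.05]   # toy negative-bag segment scores
# roughly 0.4, 0.425 and 0.7875: the top-k value moves with k on the same bag
print(mmil_loss(pos, neg), topk_loss(pos, neg, k=2), topk_loss(pos, neg, k=4))
```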

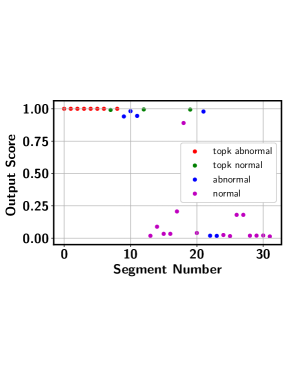

There are two major issues associated with the top-k ranking loss. First, choosing an optimal k is a key challenge, as the number of abnormal instances may vary significantly from one video to another, implying a different k for each video. Second, all the selected top-k instances may come from the same sub-sequence of the video. Including many visually similar instances contributes little to model training. Furthermore, concentrating only on a specific sub-sequence may make the approach less effective in handling multimodal and outlier scenarios. Figure 3 presents the output of the top-k based model on the Avenue dataset. It can be seen that the top-k based approach picks consecutive video segments while missing quite a few other abnormal frames.

3.2 Bayesian Non-parametric Submodular Set Function Construction

The proposed Bayesian non-parametric submodular video partition (BN-SVP) approach offers a novel integrated solution that addresses the above two fundamental challenges simultaneously. In particular, since submodular set functions provide a natural measure of diversity, we design a special submodular set function that enables the discovery of a representative set of segments from a video. This avoids choosing only visually similar consecutive video segments, as the top-k approach does, and thereby increases the model's exposure to potentially abnormal instances during training. As a result, the model's capability to handle multimodal and outlier scenarios can be effectively improved.

However, maximizing a submodular set function still requires specifying the size of the set. As mentioned above, choosing a set of optimal size in video anomaly detection is highly challenging. To this end, we propose a novel Bayesian non-parametric construction of the submodular set function. The non-parametric construction leverages both the visual features of the video segments and their temporal closeness to derive a similarity measure that allows us to define a submodular set function $F(S)$, where $S$ represents a subset of segments in a video. The size of $S$ is automatically determined through Bayesian non-parametric analysis of the video. Intuitively, most videos, especially those with anomalies, consist of multiple scenes, where each scene comprises a consecutive set of visually similar segments. Figure 4 shows example frames from three different scenes in a video that records an explosion event. Ideally, if a video could be partitioned into these scenes, we could choose representative (and potentially positive) segments from each scene, which would significantly facilitate the optimization of the submodular set function. However, neither the number nor the types of scenes are available during model training.

The proposed BN-SVP addresses the above issue through non-parametric partition of a video. It builds upon and extends the HDP-HMM, which places a Hierarchical Dirichlet Process (HDP) prior on the state transition distributions of a Hidden Markov Model (HMM) [20]. By using an HMM to model a video (as a sequence of segments), each discovered hidden state can be naturally interpreted as a scene in the video. The HDP prior allows the number of states (i.e., scenes) to be inferred from the data. However, real-world videos may be highly noisy, and directly using an HDP-HMM may extract too many scenes that differ only in less significant visual characteristics (e.g., spatial changes of objects or the addition/removal of a small number of objects). To address this issue, we follow the sticky HDP-HMM and encourage stronger self-transitions of each state [4]. This results in temporally persistent states, producing longer and semantically coherent scenes. To further accommodate spatial changes or variations in certain objects, we let the emission distribution follow another non-parametric DP that automatically determines the number of mixture components (i.e., sub-scenes) within the same scene. For example, scene 1 in Figure 4 comprises two sub-scenes: the first with a clear sky and the second with smoke in the sky.

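More specifically, consider a collection of hidden states (i.e., scenes in a video). The transition distribution from state $j$ to the other states is governed by a DP:

$$G_j = \sum_{t=1}^{\infty} \tilde{\pi}_{jt}\, \delta_{\theta_{jt}}, \qquad \tilde{\pi}_j \sim \mathrm{GEM}(\alpha), \qquad \theta_{jt} \sim G_0 \tag{3}$$

where $\tilde{\pi}_j$ is formed through a stick-breaking construction with parameter $\alpha$ [20], and each atom $\theta_{jt}$ is drawn from a base distribution $G_0$, which itself follows another DP:

$$G_0 = \sum_{k=1}^{\infty} \beta_k\, \delta_{\theta_k}, \qquad \beta \sim \mathrm{GEM}(\gamma), \qquad \theta_k \sim H \tag{4}$$

Because $G_0$ is discrete, multiple $\theta_{jt}$'s can take an identical value $\theta_k$. Collecting the unique set of atoms $\{\theta_k\}_{k=1}^{\infty}$, we can rewrite $G_j$ as

$$G_j = \sum_{k=1}^{\infty} \pi_{jk}\, \delta_{\theta_k}, \qquad \pi_j \sim \mathrm{DP}(\alpha, \beta) \tag{5}$$

Given the highly dynamic and noisy nature of many real-world surveillance videos, directly applying the standard HDP-HMM to partition a video may produce many redundant scenes with rapid switching among them. This is problematic in our setting, where it is critical to infer semantically coherent scenes with slower transitions among them. It is therefore essential to ensure the temporal persistence of the discovered scenes [4], which can be achieved through enhanced self-transitions. In particular, the transition distribution of the $j$-th state is augmented with a self-transition bias $\kappa \geq 0$:

$$\pi_j \sim \mathrm{DP}\left(\alpha + \kappa,\; \frac{\alpha\beta + \kappa\delta_j}{\alpha + \kappa}\right) \tag{6}$$

where $\delta_j$ denotes a point mass at state $j$. This increases the expected probability of staying in the same state:

$$\mathbb{E}[\pi_{jk} \mid \beta, \kappa] = \frac{\alpha\beta_k + \kappa\,\delta(j, k)}{\alpha + \kappa} \tag{7}$$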
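To make the effect of the sticky term concrete, the sketch below uses a finite truncation with L states, which is our simplifying assumption rather than the paper's inference scheme. It draws truncated stick-breaking weights for the global distribution β ~ GEM(γ) and evaluates the expected transition matrix E[π_jk] = (αβ_k + κδ(j,k)) / (α + κ) of the sticky HDP-HMM [4], where κ is the self-transition bias. The helpers `stick_breaking` and `expected_sticky_transitions` are hypothetical names used only for illustration.

```python
import numpy as np


def stick_breaking(gamma, L, rng):
    """Truncated stick-breaking weights beta ~ GEM(gamma) with L atoms (hypothetical helper)."""
    v = rng.beta(1.0, gamma, size=L)
    v[-1] = 1.0  # truncation: the last stick takes all remaining mass, so weights sum to 1
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - v[:-1])))
    return v * remaining


def expected_sticky_transitions(beta, alpha, kappa):
    """Row j holds E[pi_jk | beta, kappa] = (alpha * beta_k + kappa * delta(j, k)) / (alpha + kappa)."""
    beta = np.asarray(beta, dtype=float)
    return (alpha * beta[None, :] + kappa * np.eye(beta.size)) / (alpha + kappa)


rng = np.random.default_rng(0)
beta = stick_breaking(gamma=2.0, L=10, rng=rng)
plain = expected_sticky_transitions(beta, alpha=1.0, kappa=0.0)   # standard HDP-HMM (kappa = 0)
sticky = expected_sticky_transitions(beta, alpha=1.0, kappa=9.0)  # sticky variant
print(np.diag(plain).round(3))   # self-transition probabilities without the bias
print(np.diag(sticky).round(3))  # self-transition probabilities with the bias
```

Setting κ = 0 recovers the standard HDP-HMM expectation, so the same function covers both cases; larger κ shifts mass onto the diagonal, which is exactly the temporal persistence the scene partition relies on.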

To allow certain levels of variation within the same scene and accommodate the highly dynamic nature of a video sequence, we propose to model the emission process using a mixture distribution governed by another non-parametric DP. This design offers three unique advantages. First, it further ensures the temporal persistence of a scene: a segment with only minor visual differences can stay in the same scene by switching to a different mixture component instead of transitioning to another (redundant) scene. Second, it offers a fine-grained partition of the video sequence, which is instrumental in separating abnormal segments (e.g., frames (b) and (e) in Figure 4) from normal ones (e.g., frames (a) and (d) in Figure 4) that share a common background. Last, the number of mixture components is automatically determined by the DP (e.g., scenes 1 and 3 have two mixture components, while scene 2 has only one). For the $k$-th scene, a scene-specific stick-breaking distribution defines the weights of the mixture components within the scene. Then, given its scene assignment $z_i = k$ and mixture component assignment $c_i = m$, segment $x_i$ of a video is drawn from a component-specific multivariate Gaussian: $x_i \mid z_i = k, c_i = m \sim \mathcal{N}(\mu_{km}, \Sigma_{km})$.
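The generative story of a scene-level HMM with mixture emissions can be simulated as follows. This is a sketch under stated assumptions: a finite number of scenes L and components M stands in for the two DPs, the initial scene is drawn uniformly, and all covariances are isotropic; `simulate_segments` is a hypothetical helper, not part of the original work. With a near-diagonal transition matrix, consecutive segments tend to stay in the same scene while still switching among that scene's sub-scenes.

```python
import numpy as np


def simulate_segments(T, pi, mix_weights, means, rng, noise=0.1):
    """Draw T segment features from a finite HMM whose emissions are Gaussian mixtures.

    pi:          (L, L) transition matrix with rows summing to 1 (e.g., a sticky one)
    mix_weights: (L, M) per-scene mixture weights with rows summing to 1
    means:       (L, M, D) component means; covariances are assumed to be noise**2 * I
    """
    L, M, D = means.shape
    z = np.empty(T, dtype=int)  # scene (hidden state) of each segment
    c = np.empty(T, dtype=int)  # mixture component (sub-scene) within the scene
    x = np.empty((T, D))        # emitted segment features
    for t in range(T):
        # Uniform initial scene (assumption), then Markov transitions between scenes.
        z[t] = rng.integers(L) if t == 0 else rng.choice(L, p=pi[z[t - 1]])
        c[t] = rng.choice(M, p=mix_weights[z[t]])
        x[t] = rng.normal(means[z[t], c[t]], noise)
    return z, c, x


rng = np.random.default_rng(0)
pi = np.array([[0.95, 0.05], [0.05, 0.95]])       # strong self-transitions
mix_weights = np.array([[0.5, 0.5], [1.0, 0.0]])  # scene 0 has two sub-scenes, scene 1 has one
means = rng.normal(size=(2, 2, 4))
z, c, x = simulate_segments(32, pi, mix_weights, means, rng)
print(z)
print(c)
```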

Posterior inference of the augmented HDP-HMM with a DP mixture emission can be performed through direct assignment sampling [20] or blocked sampling with an improved mixing rate [3]. Hyper-parameters can also be inferred by placing vague priors on them and running Gibbs sampling.

The scene and component assignments of BN-SVP induce a pairwise similarity among segments in a video:

| (8) |

It is worth noting that the similarity between two segments $x_i$ and $x_j$ is evaluated using feature representations learned by a DNN rather than the raw features. The induced similarity allows us to define a submodular set function [11, 10], as summarized by the following proposition.

Proposition 1.

Let $M$ denote the number of unique mixture components across all the discovered states in a bag, and let $S$ be a subset of the bag's segments with $|S| \leq M$. Given the BN-SVP induced pairwise similarity defined in (8), the following function is a submodular set function:

| (9) |

Based on this definition, it is straightforward to show that the function in (9) is a special instance of the facility location function [14], which is submodular. Since each mixture component captures a unique sub-scene, maximizing the function in (9) extracts a diverse set of segments that best represent all the scenes (and sub-scenes) in the video. By further integrating the margin loss given in (2), we obtain a submodularity diversified MIL loss:
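Because the exact similarity in (8) is not reproduced here, the sketch below substitutes a hypothetical one: the non-negative cosine similarity between segment embeddings that share a mixture component, and zero otherwise. On top of it, it evaluates a facility location function F(S) = Σ_i max_{j∈S} W_ij and runs the standard greedy algorithm for monotone submodular maximization under a cardinality constraint. This generic greedy is a stand-in, not the structure-exploiting procedure of Section 3.3, and all function names are illustrative.

```python
import numpy as np


def component_similarity(features, components):
    """Hypothetical stand-in for Eq. (8): non-negative cosine similarity between
    segments assigned to the same mixture component, zero across components."""
    f = np.asarray(features, dtype=float)
    f = f / np.linalg.norm(f, axis=1, keepdims=True)
    sim = np.clip(f @ f.T, 0.0, None)  # non-negative weights keep F monotone
    comps = np.asarray(components)
    same = np.equal.outer(comps, comps)
    return np.where(same, sim, 0.0)


def facility_location(W, S):
    """F(S) = sum_i max_{j in S} W[i, j], with F(empty set) = 0."""
    S = list(S)
    if not S:
        return 0.0
    return float(W[:, S].max(axis=1).sum())


def greedy_max(W, budget):
    """Standard greedy maximization of F subject to |S| <= budget."""
    S = []
    candidates = set(range(W.shape[0]))
    for _ in range(min(budget, W.shape[0])):
        base = facility_location(W, S)
        best_gain, best_j = max((facility_location(W, S + [j]) - base, j) for j in candidates)
        if best_gain <= 0:
            break
        S.append(best_j)
        candidates.remove(best_j)
    return S


feats = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])  # toy embeddings
comps = np.array([0, 0, 1, 1])                                      # toy component labels
W = component_similarity(feats, comps)
S = greedy_max(W, budget=len(np.unique(comps)))
print(S, comps[S])
```

With the budget set to the number of unique components, the greedy picks one segment per component in this toy example, mirroring the intuition that every sub-scene should be represented.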

| (10) |

where the margin loss is defined over the instances in a set $S$ whose size is no larger than the number of unique mixture components $M$:

| (11) |

In essence, $S$ contains the instances in a positive bag that participate in model training. The score constraint in (10) excludes from the margin loss those representative segments that are unlikely to be abnormal (e.g., those with a very low predicted score), since including them would increase the margin loss. The trade-off coefficient in (10) controls the balance between the margin loss and the diversity among the chosen segments.

3.3 Greedy Submodular Function Optimization

We propose a greedy algorithm that optimizes the submodular function in (9) to ensure efficient model training. The proposed algorithm leverages the special structure of the state and mixture component space resulting from the HDP-HMM partition of the video segments. The performance guarantee of the greedy algorithm is established by our theoretical result at the end of this section.

Let $c_i$ denote the mixture component of the $i$-th segment in the positive bag, whose predicted score is $s_i^a$. For each mixture component $m$, let $s_m^*$ denote the maximum score among all the segments assigned to $m$, and let $i_m^*$ be the index of the corresponding representative segment:

$$s_m^* = \max_{i:\, c_i = m} s_i^a, \qquad i_m^* = \operatorname*{arg\,max}_{i:\, c_i = m} s_i^a \tag{12}$$

We construct the representative set $S$ as follows. Starting from $S = \emptyset$, for each mixture component $m$ we set

$$S \leftarrow \begin{cases} S \cup \{i_m^*\}, & \text{if } s_m^* \geq \tau, \\ S, & \text{otherwise,} \end{cases} \tag{13}$$

where $\tau$ is a threshold that excludes segments with a low prediction score and plays the same role as the score constraint in (10). In our experiments, we set $\tau$ to the 35th percentile of the prediction scores of the segments in each video, so as to avoid skipping any potentially abnormal segments. Once the representative set is constructed, model training proceeds by minimizing the following MIL loss:

| (14) |

Given the state and mixture component assignment of each segment in a video, the representative set can be constructed quickly by sorting the segments within each component by their predicted scores and comparing the top score of each component with the threshold $\tau$. Next, we provide a theoretical guarantee that the greedy algorithm ensures the inclusion of a diverse set of segments for model training.
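The representative-set construction in (12)-(13) reduces to a per-component argmax followed by a threshold test. The sketch below assumes that the scores and component labels of one positive bag are given as arrays and uses the 35th percentile of the bag's scores as the threshold, as described above. Because (14) is not reproduced here, the loss function assumes a hinge form analogous to (2) over the representative set; treat it as an illustration, not the paper's exact objective. Both function names are hypothetical.

```python
import numpy as np


def representative_set(scores, components, percentile=35.0):
    """Eqs. (12)-(13): keep the top-scoring segment of each mixture component
    if its score reaches the video-specific percentile threshold."""
    scores = np.asarray(scores, dtype=float)
    components = np.asarray(components)
    tau = float(np.percentile(scores, percentile))  # video-specific threshold
    rep = []
    for m in np.unique(components):
        members = np.flatnonzero(components == m)
        best = members[np.argmax(scores[members])]  # Eq. (12): representative of component m
        if scores[best] >= tau:                     # Eq. (13): keep it only if it clears tau
            rep.append(int(best))
    return rep, tau


def representative_mil_loss(pos_scores, neg_scores, rep, margin=1.0):
    """Assumed hinge form of Eq. (14). `rep` is never empty because the bag's
    overall top-scoring segment always clears a percentile threshold."""
    pos_scores = np.asarray(pos_scores, dtype=float)
    neg_scores = np.asarray(neg_scores, dtype=float)
    return max(0.0, margin - pos_scores[rep].mean() + neg_scores.max())


scores = [0.9, 0.8, 0.1, 0.05, 0.6, 0.55]   # toy positive-bag scores
components = [0, 0, 1, 1, 2, 2]             # toy mixture component labels
rep, tau = representative_set(scores, components)
print(rep, tau, representative_mil_loss(scores, [0.2, 0.3], rep))
```

Here component 1 is dropped because its best segment falls below the threshold, so the loss averages over one high-scoring segment from each of the two remaining components rather than over a fixed top-k.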

Theorem 1.

The representative set based MIL loss given in (14) is equivalent to the submodularity diversified MIL loss given in (10). Furthermore, using the proposed greedy algorithm to locate the representative segments essentially provides a -constrained greedy approximation to the maximization of the submodular set function . As a result, the obtained solution is guaranteed to be no worse of the optimal solution.

The detailed proof is provided in the Appendix.

4 Experiments

We conduct extensive experiments to evaluate the effectiveness of the proposed BN-SVP approach. Through these experiments, we aim to demonstrate (i) outstanding anomaly detection performance compared with competitive top-k, MIL, and other video anomaly detection models, (ii) robustness to outlier and multimodal scenarios, and (iii) deeper insights into the improved detection performance through a qualitative study.

4.1 Datasets and Experimental Settings

Our experimentation includes three video datasets of different scales: ShanghaiTech [17], Avenue [16], and UCF-Crime [19]. Table 4 in the Appendix shows how the videos are partitioned into the training/testing sets in each dataset.

- ShanghaiTech consists of 437 videos (330 normal and 107 abnormal). In the original setting, all training videos are normal. To fit our setting, we follow the data split in [27] to assign normal and abnormal videos to both the training and testing sets.
- Avenue consists of 16 training and 21 testing videos. We perform an 80:20 split separately on the abnormal and normal video sets to generate training and testing instances.
- UCF-Crime consists of 13 different anomaly types with a total of 1900 videos, of which 1610 are training videos and 290 are testing videos. In this dataset, frame labels are available only for the testing videos.

To show the robustness of the proposed approach in multimodal and outlier scenarios, we also generate Multimodal and Outlier datasets. Specifically, we create a multimodal scenario by extending the UCF-Crime dataset, and an outlier scenario by deliberately injecting outliers into the ShanghaiTech dataset. More details of these two datasets are provided in Section 4.3. For evaluation, we report the frame-level receiver operating characteristic (ROC) curve along with the corresponding area under the curve (AUC). Since the AUC summarizes performance across all decision thresholds, it indicates how robust the performance is to the choice of threshold.
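Frame-level AUC can be computed without tracing the ROC curve explicitly, as the probability that a randomly chosen abnormal frame receives a higher score than a randomly chosen normal frame, with ties counted as one half. The sketch below is a plain NumPy illustration with a hypothetical function name; in practice, a library routine such as scikit-learn's `roc_auc_score` computes the same quantity more efficiently.

```python
import numpy as np


def frame_auc(labels, scores):
    """ROC AUC via the Mann-Whitney statistic (ties count as 1/2).
    Builds a (num_abnormal x num_normal) comparison matrix, so it is meant for
    illustration rather than very long videos."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float(greater + 0.5 * ties) / (pos.size * neg.size)


labels = [0, 0, 1, 1]             # frame-level ground truth (1 = abnormal)
scores = [0.1, 0.4, 0.35, 0.8]    # frame-level anomaly scores
print(frame_auc(labels, scores))  # 0.75: three of the four abnormal/normal pairs are ordered correctly
```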

For the Avenue and ShanghaiTech datasets, we extract visual features from the FC7 layer of a pre-trained C3D network [23]. We re-size each video frame to pixels and fix the frame rate to 30 fps. We compute C3D features for every 16-frame video clip. This may yield a different number of clips (each with a 2048 dimensional feature vector) depending on the number of frames in each video. Thus, we map any number of clips to 32 segments by averaging the clip features within each segment. For UCF-Crime, we extract features using an I3D network [1], pre-trained as described in [25]. For all datasets, we use parallel GCN networks to capture feature similarity and temporal consistency. The outputs of the parallel branches are combined and passed through a 5-layer LSTM network, where each layer has 32 hidden units followed by batch normalization. Finally, an FC layer with sigmoid activation maps the prediction score to [0, 1]. For model training, we use SGD with a learning rate of and regularization with parameter . Detailed information about the network architecture is provided in the Appendix.
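The mapping from a variable number of 16-frame clip features to 32 segments can be sketched as temporally uniform binning followed by averaging. This is a minimal sketch, assuming features are stacked as a `(num_clips, dim)` array and that segment boundaries are evenly spaced over clip indices; reusing the nearest clip when a video has fewer than 32 clips is our assumption rather than a documented detail, and `clips_to_segments` is a hypothetical name.

```python
import numpy as np


def clips_to_segments(clip_features, num_segments=32):
    """Average a variable number of clip features into a fixed number of temporal segments."""
    clip_features = np.asarray(clip_features, dtype=float)
    n_clips, dim = clip_features.shape
    # Evenly spaced segment boundaries over the clip index range [0, n_clips].
    bounds = np.floor(np.linspace(0, n_clips, num_segments + 1)).astype(int)
    segments = np.empty((num_segments, dim))
    for s in range(num_segments):
        start = min(bounds[s], n_clips - 1)
        end = max(bounds[s + 1], start + 1)  # never average over an empty slice
        segments[s] = clip_features[start:end].mean(axis=0)
    return segments


clips = np.arange(64, dtype=float).reshape(64, 1)  # 64 toy clips with 1-D features
segments = clips_to_segments(clips)
print(segments.shape, segments[0, 0], segments[-1, 0])  # (32, 1) 0.5 62.5
```

Every video thus yields exactly 32 segment features regardless of its length, which is what lets bags of different videos share the same network input shape.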

4.2 Performance Comparison

Comparison with Top-k Models

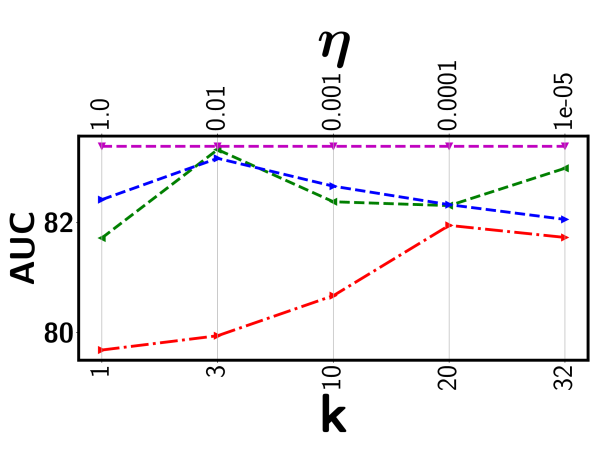

We first compare the detection performance with the two most recent top-k based models: Robust Temporal Feature Magnitude learning (RTFM) [22] and the DRO based deep kernel MIL (DRO-DKMIL) [18]. We also compare with a standard average top-k model (Avg Topk) as a baseline. Avg Topk uses the rank loss in (2) with the same network architecture as BN-SVP. For RTFM, we obtain results by re-running the original implementation with different k values. Similarly, for DRO-DKMIL, we run the original implementation with different values of the radius that controls the size of the uncertainty set. The proposed BN-SVP removes the dependency on these highly sensitive parameters through non-parametric modeling. Detailed comparison results are shown in Figure 5.

We have several important observations. First, all the top-k models are very sensitive to the selection of k (or of the uncertainty-set radius that defines a soft version of the top-k set). Both RTFM and DRO-DKMIL outperform the standard Avg Topk. DRO-DKMIL achieves relatively more stable performance across all datasets. This may be attributed to its conversion of a discrete optimization problem (i.e., choosing a specific k) into a continuous one (i.e., choosing the radius). However, on certain datasets (e.g., Avenue), its performance still varies by more than . Second, although RTFM or DRO-DKMIL achieves the best performance in a few rare cases for a specific k or radius, they under-perform BN-SVP in most cases. This is mainly because these models tend to choose a consecutive set of segments, which limits the model's exposure to other potentially positive segments. BN-SVP effectively addresses this issue by extracting a diverse set of potentially positive segments through submodular optimization.

Comparison with Other Models

We also compare with other existing techniques that do not depend on a k value. Specifically, our comparison study includes the maximum score based MIL model (MMIL) by Sultani et al. [19], the attention based deep MIL model proposed by Ilse et al. [7], a dictionary based approach proposed by Lu et al. [16], and an MIL model for soft bags (MILS) proposed by Li & Vasconcelos [13] as common baselines for all datasets. Sultani et al. [19] used the loss function in (1) along with temporal similarity and consistency as regularizers. Ilse et al. used a permutation-invariant aggregation function, whose operators are learned by an attention network, to detect the positive instances in a bag [7]. Li & Vasconcelos used a large-margin latent support vector machine with the goal of correctly classifying positive and negative bags [13]. For the approach of Zhong et al. [27], we directly report the performance from the original paper on the UCF-Crime and ShanghaiTech datasets. This approach involves multiple rounds of alternating optimization between classification and cleaning and may produce unstable performance [22]. Considering its difficulty in training and replication, we do not include it for the other datasets.

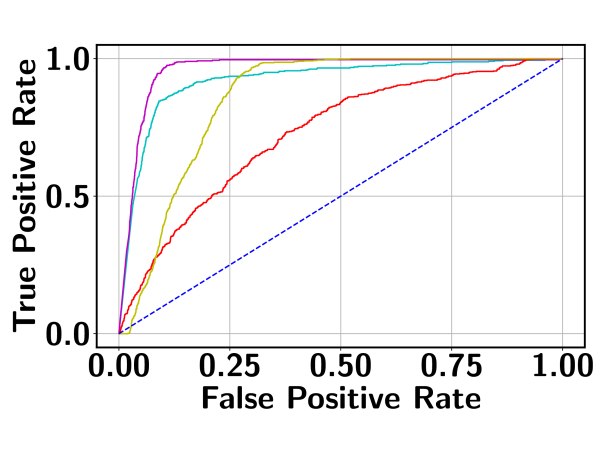

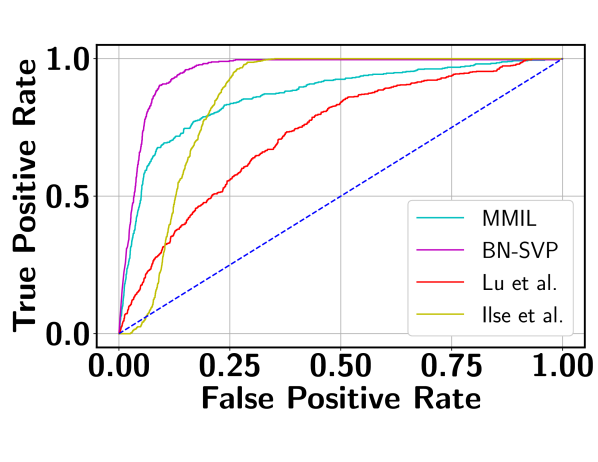

Table 1 reports the AUC scores of BN-SVP along with the results of the comparison models described above. BN-SVP clearly outperforms the other models on all datasets, with a large margin (i.e., -) on both the ShanghaiTech and Avenue datasets. The corresponding ROC curves, shown in Figure 6, demonstrate a consistent trend. For example, on UCF-Crime, BN-SVP achieves a more than better True Positive Rate (TPR) than MMIL at a False Positive Rate (FPR) of 0.2. Across varying FPRs, BN-SVP consistently outperforms the other competitive baselines, which supports its strong detection capability.

| Approach | AUC (%) |
|---|---|
| UCF-Crime | |
| Hasan et al. [5] (C3D) | |
| Lu et al. [16] (C3D) | |
| Lu et al. [16] (I3D) | |
| MMIL [19] (C3D) | |
| Li & Vasconcelos [13] (I3D) | |
| Ilse et al. [7] (I3D) | |
| Zhong et al. [27] (GCN (C3D)) | |
| Zhong et al. [27] () | |
| Zhong et al. [27] () | |
| MMIL [19] (I3D) | |
| BN-SVP (I3D) | 83.39 |
| ShanghaiTech | |
| Lu et al. [16] (C3D) | |
| Li & Vasconcelos [13] (C3D) | |
| Zhong et al. [27] (GCN (C3D)) | |
| Zhong et al. [27] () | |
| Zhong et al. [27] () | |
| Ilse et al. [7] (C3D) | |
| MMIL [19] (C3D) | |
| BN-SVP (C3D) | 96.00 |
| Avenue | |
| Binary SVM (C3D) | |
| Lu et al. [16] (C3D) | |
| Li & Vasconcelos [13] (C3D) | |
| Ilse et al. [7] (C3D) | |
| MMIL [19] | |
| BN-SVP (C3D) | 80.87 |

4.3 Detecting Multimodal and Outlier Segments

Multimodal Detection

The original UCF-Crime dataset does not explicitly consider a multimodal scenario. Even though real-world surveillance videos may indeed contain such cases (as suggested by the superior performance of BN-SVP), it is hard to identify actual videos that exhibit them. Since UCF-Crime contains different types of anomalies, we can explicitly create multimodal scenarios by combining multiple abnormal videos of different activity types. To this end, we randomly select three activity types and form an abnormal bag by concatenating three abnormal videos, one per activity type. Training bags are constructed from the training set, whereas testing bags are constructed from the testing set. In total, we construct 50 abnormal and 50 normal training bags. The testing set contains 10 normal and 10 abnormal videos. Table 2 reports the AUC scores, and the corresponding ROC curves are shown in Figure 6(d). BN-SVP outperforms the other baselines. Furthermore, BN-SVP consistently stays on top in the ROC curve, which supports the effectiveness of the approach in the multimodal scenario. As an example, at , BN-SVP is at least better than the other approaches in terms of TPR.
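The bag construction for the Multimodal dataset can be sketched with the standard library. This is illustrative only: videos are represented as lists of per-segment features keyed by anomaly type, concatenation happens at the segment level, and `make_multimodal_bags` is a hypothetical helper; the original pipeline may instead concatenate raw videos before feature extraction.

```python
import random


def make_multimodal_bags(videos_by_type, num_bags, types_per_bag=3, seed=0):
    """Build abnormal bags by concatenating one video from each of `types_per_bag`
    distinct, randomly chosen anomaly types.

    videos_by_type: dict mapping an anomaly type to a list of videos, where each
    video is a list of per-segment feature vectors (assumed representation).
    """
    rng = random.Random(seed)
    bags = []
    for _ in range(num_bags):
        types = rng.sample(sorted(videos_by_type), types_per_bag)  # distinct anomaly types
        segments = []
        for anomaly_type in types:
            segments.extend(rng.choice(videos_by_type[anomaly_type]))  # one video per type
        bags.append((types, segments))
    return bags


videos_by_type = {  # toy 2-D segment features
    "Arson": [[[0.1, 0.2]], [[0.3, 0.1], [0.2, 0.2]]],
    "Fighting": [[[0.5, 0.5]]],
    "Robbery": [[[0.9, 0.1], [0.8, 0.2]]],
    "Shooting": [[[0.4, 0.6]]],
}
bags = make_multimodal_bags(videos_by_type, num_bags=50)
print(bags[0][0], len(bags[0][1]))
```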

Outlier Detection

To assess robustness to outliers, we extend the ShanghaiTech dataset with outliers. Specifically, we randomly select 120 segments from abnormal videos and replace their features with points drawn from a standard multivariate Gaussian distribution. As shown in Table 2, MMIL is affected by the outliers much more heavily than the proposed BN-SVP. This is because an outlier segment is likely to receive the maximum prediction score in an abnormal video, and since the MMIL loss relies on that maximum score, the overall optimization process may be heavily influenced by outliers.
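A minimal sketch of the outlier injection, assuming the 120 segments are sampled across all abnormal training bags (the text does not say whether they are drawn per video) and that each bag is a `(num_segments, feature_dim)` array. The function name `inject_outliers` is hypothetical.

```python
import numpy as np


def inject_outliers(abnormal_bags, n_outliers=120, rng=None):
    """Return copies of the bags in which n_outliers randomly chosen segments
    have their features replaced by draws from N(0, I)."""
    rng = np.random.default_rng() if rng is None else rng
    bags = [np.array(b, dtype=float, copy=True) for b in abnormal_bags]
    # Every (bag index, segment index) pair is a candidate slot.
    slots = [(i, j) for i, b in enumerate(bags) for j in range(b.shape[0])]
    picked = rng.choice(len(slots), size=n_outliers, replace=False)
    for k in picked:
        i, j = slots[k]
        # Standard multivariate Gaussian: zero mean, identity covariance.
        bags[i][j] = rng.standard_normal(bags[i].shape[1])
    return bags, sorted(slots[k] for k in picked)


# Usage: 10 toy abnormal bags of 32 segments with 8-dimensional features.
clean = [np.zeros((32, 8)) for _ in range(10)]
noisy, outlier_slots = inject_outliers(clean, n_outliers=120, rng=np.random.default_rng(0))
```

The original bags are left untouched, which makes it easy to train on clean and corrupted copies side by side.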

4.4 Qualitative Analysis

To show the effectiveness of extracting a diverse set of segments for model training, we present illustrative sample frames from a stealing video in UCF-Crime, where BN-SVP correctly identifies all abnormal frames while a top-k approach (e.g., Avg Topk) misses some of them. In Figure 7, both frames are abnormal, but they occur in two distinct time intervals within the video. The first frame shows a more obvious stealing event, so both BN-SVP and Avg Topk identify it correctly. In contrast, the second frame is a less obvious instance of stealing. Nevertheless, BN-SVP still selects it during training because of its diverse coverage of potentially abnormal frames. Avg Topk, on the other hand, focuses only on frames with high prediction scores, which are usually co-located in the same time interval. This narrows the range of abnormal frames the model is exposed to. As a result, Avg Topk fails to predict the second frame correctly and classifies it as normal. More qualitative analysis demonstrating the robustness of the proposed approach in multimodal and outlier scenarios is provided in the Appendix, along with an ablation study on the prediction score threshold defined in (13).
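The contrast between the two selection strategies can be illustrated on a toy score sequence. This sketch is a simplification under stated assumptions: `topk_indices` stands in for the selection step of a top-k approach such as Avg Topk, `per_component_max` stands in for choosing one high-scoring segment per mixture component, and the component labels are hard-coded here rather than inferred by the HDP-HMM.

```python
import numpy as np


def topk_indices(scores, k):
    """Indices of the k highest-scoring segments (top-k style selection)."""
    return sorted(np.argsort(scores)[::-1][:k].tolist())


def per_component_max(scores, components):
    """Index of the highest-scoring segment within each component."""
    picks = []
    for c in np.unique(components):
        members = np.flatnonzero(components == c)
        picks.append(int(members[np.argmax(scores[members])]))
    return sorted(picks)


# Toy video of 10 segments: an obvious event at segments 2-4, a subtler one at 7-8.
scores = np.array([0.1, 0.1, 0.9, 0.95, 0.85, 0.1, 0.1, 0.6, 0.55, 0.1])
components = np.array([0, 0, 1, 1, 1, 0, 0, 2, 2, 0])
top3 = topk_indices(scores, 3)
diverse = per_component_max(scores, components)
```

Here top-3 selection returns segments 2, 3, and 4, all from the obvious interval, whereas per-component selection returns 0, 3, and 7 and therefore also reaches the subtler interval. The pick from the background component (segment 0, score 0.1) is the kind of low-score segment the threshold in (13) is meant to discard.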

5 Conclusion

In this paper, we propose a novel Bayesian non-parametric submodularity diversified MIL model for robust video anomaly detection in practical settings that involve outlier and multimodal scenarios. By integrating submodular optimization with the minimization of an MIL loss, the proposed approach identifies a diverse set of segments to ensure comprehensive coverage of all potential positive segments for effective model training. The Bayesian non-parametric construction of the submodular set function automatically determines the upper bound on the size of the diverse set, which serves as a key constraint for minimizing the submodularity diversified MIL loss function. The resulting state-component structure also leads to a greedy submodular optimization algorithm to support efficient model training. The effectiveness of the proposed approach is demonstrated through the state-of-the-art robust anomaly detection performance on real-world surveillance videos with noisy and multimodal scenarios.

Acknowledgement

This research was supported in part by an NSF IIS award IIS-1814450 and an ONR award N00014-18-1-2875. The views and conclusions contained in this paper are those of the authors and should not be interpreted as representing any funding agency.

References

- [1] J. Carreira and A. Zisserman. Quo vadis, action recognition? A new model and the Kinetics dataset. In CVPR, pages 4724–4733, 2017.

- [2] Y. Cong, J. Yuan, and J. Liu. Sparse reconstruction cost for abnormal event detection. In CVPR, pages 3449–3456, 2011.

- [3] Yanbo Fan, Siwei Lyu, Yiming Ying, and Baogang Hu. Learning with average top-k loss. In NIPS, pages 497–505, 2017.

- [4] Emily B. Fox, Erik B. Sudderth, Michael I. Jordan, and Alan S. Willsky. A sticky HDP-HMM with application to speaker diarization. The Annals of Applied Statistics, 5:1020–1056, 2011.

- [5] M. Hasan, J. Choi, J. Neumann, A. K. Roy-Chowdhury, and L. S. Davis. Learning temporal regularity in video sequences. In CVPR, pages 733–742, 2016.

- [6] Chengkun He, Jie Shao, and Jiayu Sun. An anomaly-introduced learning method for abnormal event detection. Multimedia Tools Appl., 77(22):29573–29588, Nov. 2018.

- [7] Maximilian Ilse, Jakub M. Tomczak, and Max Welling. Attention-based deep multiple instance learning. In ICML, 2018.

- [8] Thomas N. Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In ICLR, 2017.

- [9] L. Kratz and K. Nishino. Anomaly detection in extremely crowded scenes using spatio-temporal motion pattern models. In CVPR, pages 1446–1453, 2009.

- [10] Andreas Krause. Optimizing sensing: Theory and applications. Technical report, 2008.

- [11] Andreas Krause, H. Brendan McMahan, Carlos Guestrin, and Anupam Gupta. Robust submodular observation selection. Journal of Machine Learning Research, 9(93):2761–2801, 2008.

- [12] Nannan Li, Xinyu Wu, Huiwen Guo, Dan Xu, Yongsheng Ou, and Yen-Lun Chen. Anomaly detection in video surveillance via Gaussian process. Int. J. Pattern Recognit. Artif. Intell., 29:1555011:1–1555011:25, 2015.

- [13] W. Li and N. Vasconcelos. Multiple instance learning for soft bags via top instances. In CVPR, pages 4277–4285, 2015.

- [14] Hui Lin and Jeff Bilmes. How to select a good training-data subset for transcription: Submodular active selection for sequences. Technical report, University of Washington, Seattle, Department of Electrical Engineering, 2009.

- [15] Wen Liu, Weixin Luo, Zhengxin Li, Peilin Zhao, and Shenghua Gao. Margin learning embedded prediction for video anomaly detection with a few anomalies. In IJCAI, 2019.

- [16] C. Lu, J. Shi, and J. Jia. Abnormal event detection at 150 FPS in MATLAB. In ICCV, pages 2720–2727, 2013.

- [17] W. Luo, W. Liu, and S. Gao. A revisit of sparse coding based anomaly detection in stacked RNN framework. In ICCV, pages 341–349, 2017.

- [18] Hitesh Sapkota, Yiming Ying, Feng Chen, and Qi Yu. Distributionally robust optimization for deep kernel multiple instance learning. In AISTATS, volume 130 of Proceedings of Machine Learning Research, pages 2188–2196, 2021.

- [19] W. Sultani, C. Chen, and M. Shah. Real-world anomaly detection in surveillance videos. In CVPR, pages 6479–6488, 2018.

- [20] Yee Whye Teh, Michael I. Jordan, Matthew J. Beal, and David M. Blei. Hierarchical Dirichlet processes. Journal of the American Statistical Association, 101(476):1566–1581, 2006.

- [21] Kai Tian, Shuigeng Zhou, Jianping Fan, and Jihong Guan. Learning competitive and discriminative reconstructions for anomaly detection. In AAAI, pages 5167–5174. AAAI Press, 2019.

- [22] Yu Tian, Guansong Pang, Yuanhong Chen, Rajvinder Singh, Johan W. Verjans, and Gustavo Carneiro. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. In ICCV, pages 4975–4986, 2021.

- [23] Du Tran, Lubomir Bourdev, Rob Fergus, Lorenzo Torresani, and Manohar Paluri. Learning spatiotemporal features with 3D convolutional networks. In ICCV, pages 4489–4497, 2015.

- [24] Hung Vu, T. Nguyen, Trung Le, Wei Luo, and Dinh Q. Phung. Robust anomaly detection in videos using multilevel representations. In AAAI, 2019.

- [25] X. Wang, Ross B. Girshick, Abhinav Gupta, and Kaiming He. Non-local neural networks. In CVPR, pages 7794–7803, 2018.

- [26] Huan Yang, Baoyuan Wang, Stephen Lin, David Wipf, Minyi Guo, and Baining Guo. Unsupervised extraction of video highlights via robust recurrent auto-encoders. In ICCV, pages 4633–4641, 2015.

- [27] J. Zhong, N. Li, W. Kong, S. Liu, T. H. Li, and G. Li. Graph convolutional label noise cleaner: Train a plug-and-play action classifier for anomaly detection. In CVPR, pages 1237–1246, 2019.

Appendix

Organization of Appendix

Appendix A Summary of Notations

Table 3 summarizes all the major symbols along with their descriptions.

| Notation | Description |
|---|---|
| | Positive bag (video) |
| | Negative bag (video) |
| | Number of segments in each bag |
| | Segment in a positive bag |
| | Segment with the largest prediction score in a positive bag |
| | Segment in a negative bag |
| | Feature dimension of each video segment |
| w | Network parameters |
| b | Network bias |
| | Number of segments considered in the top-k formulation |
| | Learning rate |
| | Set of instances from a positive bag involved in model training |
| | Base distribution in the DP |
| | Concentration parameter for the distribution |
| | Weight associated with the atom |
| | Atom drawn from the distribution |
| | Transition probability distribution of the state |
| | Stick-breaking weight associated with the atom in the group |
| | Concentration parameter for |
| | Atom corresponding to the group |
| | Stick-breaking weight corresponding to the atom |
| | Concentration parameter for |
| | Parameter defining the self-transition |
| | Scene assignment for the segment in a video |
| | Mixture component assignment for the segment in a video |
| | Multivariate Gaussian distribution |
| | Mean of the state, mixture component |
| | Covariance of the state, mixture component |
| | Pairwise similarity between two segments |
| | Submodular set function |
| | Maximum output score among segments assigned to the same cluster |
| | Index of the representative segment |
| | Representative set constructed using the greedy algorithm |
| | Threshold to exclude segments with low prediction scores from the representative set |
| | Upper bound on the number of representative segments |

Appendix B Proof of Theorem 1

In this section, we provide the detailed proof of Theorem 1. We first show that the representative-set-based MIL loss given by (14) is equivalent to the submodularity diversified MIL loss given by (10) with a specific choice of the trade-off parameter that balances the MIL loss and the diversity of the set. We then show that the greedy algorithm used to locate the representative segments provides a cardinality-constrained greedy approximation to the maximization of the submodular set function, with a solution no worse than $(1 - 1/e)$ of the optimal solution.

Proof that the representative-set-based MIL loss in (14) is a special case of the submodularity diversified MIL loss in (10)

We first present a lemma, which is used in the proof.

Lemma 2.

Assume that with size is a solution that maximizes in (9). Then, should contain one segment from each mixture component (i.e. sub-scene).

Proof.

The lemma follows from the definition of the BN-SVP-induced pairwise similarity between segments given by (8), combined with a proof by contradiction. Assume that at least two segments, say , are chosen from the same component . Then there is at least one component, say , from which no segment is chosen by . Given the definition of in (9), for each segment in , either or could be used to compute the pairwise similarity, depending on which is closer to that segment. Since the cohesiveness of each component is guaranteed by the BN-SVP process, both and should be close to the mean of their assigned Gaussian component to ensure a high likelihood under the HDP-HMM. By the triangle inequality, and should then be close to each other. As a result, we can assume that is always chosen to evaluate the pairwise similarity with each segment in component . Next, we replace with a segment from component to construct another solution set . Since has positive similarity with each segment in , whereas the pairwise similarity between and every segment in is zero, we have , which contradicts the assumption that maximizes . ∎
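The lemma can be sanity-checked numerically on a toy example. The sketch assumes that the set function in (9) has the facility-location form $f(S) = \sum_i \max_{j \in S} s_{ij}$ and that the similarity in (8) is positive within a mixture component and zero across components; both are assumptions consistent with the proof's wording, since (8) and (9) are not reproduced in this section. The exhaustive search is only a check on tiny inputs, not a proof.

```python
import itertools
import math


def facility_location(S, sim):
    """f(S) = sum over all segments i of max_{j in S} sim[i][j]; f(empty) = 0."""
    return sum(max((row[j] for j in S), default=0.0) for row in sim)


def block_similarity(points, labels):
    """Assumed similarity: RBF-style within a mixture component, zero across components."""
    n = len(points)
    return [
        [math.exp(-(points[i] - points[j]) ** 2) if labels[i] == labels[j] else 0.0
         for j in range(n)]
        for i in range(n)
    ]


def maximizers(sim, size):
    """Brute-force every subset of the given size and return all maximizers of f."""
    scored = [(facility_location(S, sim), S)
              for S in itertools.combinations(range(len(sim)), size)]
    best = max(value for value, _ in scored)
    return [S for value, S in scored if math.isclose(value, best)]


# Toy video: 7 segments with 1-D features, grouped into 3 mixture components.
points = [0.0, 0.1, 0.2, 5.0, 5.1, 9.0, 9.2]
labels = [0, 0, 0, 1, 1, 2, 2]
sim = block_similarity(points, labels)
found = maximizers(sim, 3)
```

Every subset in `found` contains exactly one segment from each of the three components, matching the statement of Lemma 2 under these assumptions.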

Since the representative set is constructed by choosing one segment from each mixture component, it satisfies the necessary condition for an optimizer of given in the above lemma. However, choosing the set of segments with maximum diversity is not the primary goal: the overall objective function (10) includes both the MIL loss and the diversity term, which are balanced through . Due to the lack of instance-level labels, choosing a that optimally balances the MIL loss and the set diversity is challenging. We argue that constructing essentially offers an alternative way to set a specific to balance these two terms. First, since the constraint allows the set to contain fewer than segments, excludes those segments with low prediction scores. This can be viewed as setting a to increase while decreasing the MIL loss . Similarly, instead of choosing the instance with the largest pairwise similarity to all other segments in the same component, we choose the segment with the highest prediction score. Again, this can be viewed as further reducing to give more preference to the MIL loss, as such segments further reduce the training MIL loss. Thus, instead of directly setting , which is highly challenging, is constructed by leveraging both the mixture component assignments and the prediction scores of the segments. This is equivalent to implicitly setting to balance the MIL loss and the diversity of the representative set , which completes the proof of the equivalence of the two objective functions.
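The construction of the representative set described above can be sketched as follows. Assumptions: scores and component assignments are given as NumPy arrays, the threshold `tau` is applied with a `>=` comparison, and when more than `K` components survive the threshold the sketch keeps the `K` highest-scoring representatives, a rule the text does not specify. The function name `representative_set` is hypothetical.

```python
import numpy as np


def representative_set(scores, components, tau, K):
    """One highest-scoring segment per mixture component, minus picks scoring
    below tau, capped at K representatives (highest scores kept)."""
    reps = []
    for c in np.unique(components):
        members = np.flatnonzero(components == c)
        best = int(members[np.argmax(scores[members])])
        # Discard representatives whose prediction score falls below the threshold.
        if scores[best] >= tau:
            reps.append(best)
    # Assumed rule for the upper bound K: keep the K highest-scoring picks.
    reps.sort(key=lambda i: scores[i], reverse=True)
    return sorted(reps[:K])


scores = np.array([0.1, 0.1, 0.9, 0.95, 0.85, 0.1, 0.1, 0.6, 0.55, 0.1])
components = np.array([0, 0, 1, 1, 1, 0, 0, 2, 2, 0])
```

With `tau=0.3` and `K=5`, the background component's pick (score 0.1) is dropped and segments 3 and 7 remain, so the size of the set varies per video and `K` acts only as an upper bound.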

Proof of the optimality of the greedy algorithm

We first reformulate (10) as a minimization problem with defined as

| (15) |

The above optimization involves finding a subset that maximizes . This requires enumerating all possible subsets, which is expensive when a video contains a large number of segments. We define the discrete objective function , where

| (16) |

Since is monotone non-decreasing and submodular, a fast greedy procedure can be used to approximately optimize it. A typical greedy procedure evaluates the similarity between each pair of segments in a video and then chooses the segments with the largest overall similarity to all other segments. We make two important adjustments to this standard greedy process. First, our non-parametric HDP-HMM process follows the clustering-based heuristic (Lin and Bilmes 2018) by choosing one segment from each cluster, which avoids evaluating every candidate segment in the video. Unlike (Lin and Bilmes 2018), which chooses the data point closest to the cluster centroid, we choose the one with the highest output score. Second, our similarity evaluation has linear complexity with respect to the bag size because it leverages the temporal neighborhood of the segments. With the above greedy procedure, the standard result of (Nemhauser et al. 1978) guarantees that the obtained approximate solution is no worse than $(1 - 1/e)$ of the optimal solution, which completes the proof of the second part.
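For reference, the standard greedy procedure to which the Nemhauser et al. guarantee applies can be sketched as follows. This is the textbook greedy algorithm, not the one-segment-per-cluster variant described above, and the facility-location objective is an assumed stand-in for (16). On small inputs, the greedy value can be compared against an exhaustive optimum to observe the $(1 - 1/e)$ bound.

```python
import itertools
import math


def facility_location(S, sim):
    """Monotone submodular stand-in objective: sum_i max_{j in S} sim[i][j]."""
    return sum(max((row[j] for j in S), default=0.0) for row in sim)


def greedy(sim, K):
    """Standard greedy: add the element with the largest marginal gain, K times."""
    S = []
    for _ in range(K):
        base = facility_location(S, sim)
        remaining = [j for j in range(len(sim)) if j not in S]
        S.append(max(remaining, key=lambda j: facility_location(S + [j], sim) - base))
    return S


def optimum(sim, K):
    """Exact optimum by exhaustive search (only feasible for tiny inputs)."""
    return max(facility_location(S, sim)
               for S in itertools.combinations(range(len(sim)), K))


# Deterministic toy similarity matrix with entries in [0, 1].
n, K = 8, 3
sim = [[((3 * i + 5 * j) % 7) / 6 for j in range(n)] for i in range(n)]
greedy_value = facility_location(greedy(sim, K), sim)
ratio = greedy_value / optimum(sim, K)
```

The exhaustive `optimum` is exponential in `n`, which is exactly the cost the greedy procedure avoids.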

| Split | ShanghaiTech Normal | ShanghaiTech Abnormal | UCF-Crime Normal | UCF-Crime Abnormal | UCF-Crime Multimodal Normal | UCF-Crime Multimodal Abnormal | Avenue Normal | Avenue Abnormal |
|---|---|---|---|---|---|---|---|---|
| Train | | | | | | | | |
| Test | | | | | | | | |

Appendix C Additional Experimental Results

In this section, we first describe the detailed network architecture used in our training process. Next, we provide an ablation study demonstrating the impact of the hyperparameters used in our experiments. We then provide additional qualitative analysis justifying the effectiveness of the proposed approach. Finally, we show through qualitative analysis the effectiveness of the HDP-HMM technique in discovering sub-scenes of different types in a video.

C.1 Network Architecture

First, we pass the pre-trained features through two parallel GCN branches. The upper branch captures the feature similarity between segments, and the lower branch captures the temporal consistency between segments so that nearby segments produce similar predictions. The outputs of the two branches are combined and passed through five LSTM layers with 32 hidden units each. The result is then passed through a BatchNorm layer. Finally, a fully connected layer with sigmoid activation produces the final prediction score.

GCN Architecture

Next, we explain the GCN architecture in detail. Let $\mathbf{A} \in \mathbb{R}^{n \times n}$ be the adjacency matrix of a video with $n$ segments, where the $(i, j)$-th entry indicates the similarity between the $i$-th and $j$-th segments. Mathematically,

$$A_{ij} = \mathrm{sim}(\mathbf{x}_i, \mathbf{x}_j), \tag{17}$$

where $\mathbf{x}_i$ and $\mathbf{x}_j$ are the $D$-dimensional representations of the $i$-th and $j$-th segments, respectively. For the feature similarity branch, we use an RBF kernel of the following form:

| (18) |

In case of temporal consistency branch, we use the following form between and segment:

| (19) |

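This drives temporally nearby segments to have similar scores. Based on the adjacency matrix $\mathbf{A}$ and following Kipf and Welling [8], the graph Laplacian with the renormalization trick can be written as

$$\hat{\mathbf{A}} = \tilde{\mathbf{D}}^{-1/2}\,\tilde{\mathbf{A}}\,\tilde{\mathbf{D}}^{-1/2}, \qquad \tilde{\mathbf{A}} = \mathbf{A} + \mathbf{I}. \tag{20}$$

In the above equation, $\tilde{\mathbf{D}}$ is the corresponding degree matrix, with $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$. The output of the feature similarity graph is computed as

$$\mathbf{H} = \hat{\mathbf{A}}\,\mathbf{X}\,\mathbf{W}, \tag{21}$$

where $\mathbf{W}$ is a trainable parameter matrix and $\mathbf{X}$ contains the video-specific features.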
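A NumPy sketch of one graph convolution per branch, following the renormalization trick of Kipf and Welling [8]. Several details are assumptions rather than the paper's specification: the RBF bandwidth `gamma`, the temporal kernel $\exp(-|i-j|)$ used in place of (19), the absence of a nonlinearity, and summation as the way the two branch outputs are combined. The LSTM, BatchNorm, and sigmoid layers described in C.1 are omitted.

```python
import numpy as np


def renormalized_adjacency(A):
    """Kipf-Welling renormalization: D~^{-1/2} (A + I) D~^{-1/2}, D~ = degree of A + I."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    return d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]


def rbf_adjacency(X, gamma=1.0):
    """Feature-similarity branch; the bandwidth gamma is an assumed hyperparameter."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-gamma * sq_dists)


def temporal_adjacency(n):
    """Temporal branch; exp(-|i - j|) is an assumed decay, not necessarily (19)."""
    idx = np.arange(n)
    return np.exp(-np.abs(idx[:, None] - idx[None, :]).astype(float))


def gcn_layer(A, X, W):
    """One graph convolution A_hat X W, without a nonlinearity (an assumption about (21))."""
    return renormalized_adjacency(A) @ X @ W


# Toy video: 6 segments with 4-dimensional features, 3 output channels.
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 4))
W_feat, W_temp = rng.standard_normal((4, 3)), rng.standard_normal((4, 3))
# Combining the branches by summation is an assumption; the text only says "combined".
H = gcn_layer(rbf_adjacency(X), X, W_feat) + gcn_layer(temporal_adjacency(6), X, W_temp)
```

Both adjacency matrices are symmetric, so the renormalized matrix is symmetric as well; with an all-zero adjacency, the renormalization reduces to the identity and the layer falls back to a per-segment linear map.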

C.2 Ablation Study

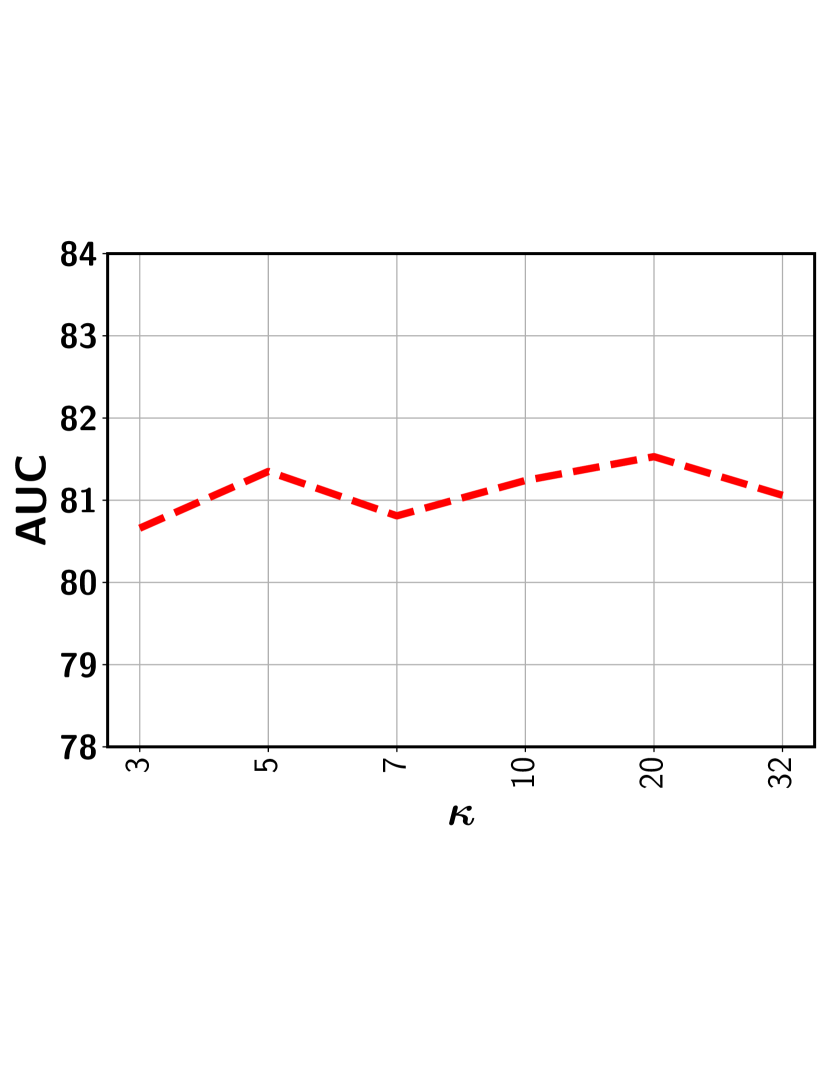

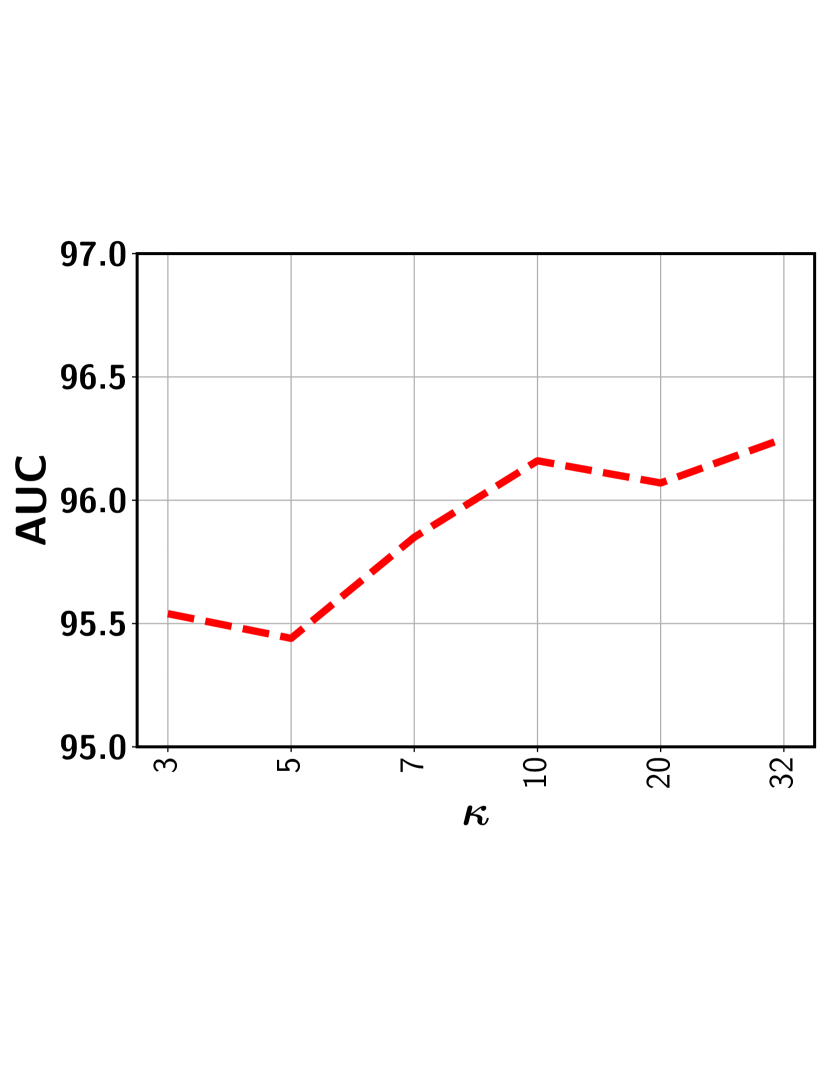

Impact of

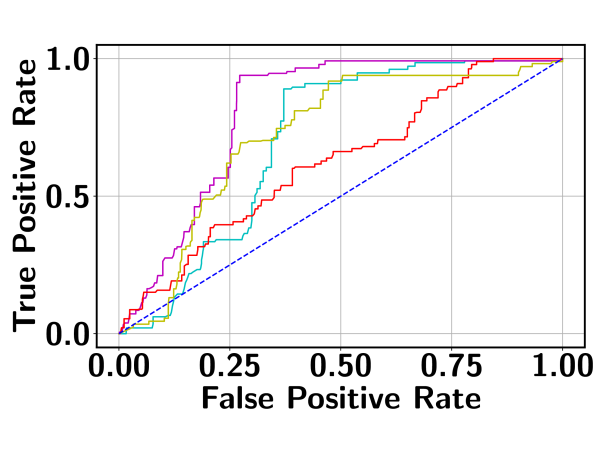

In this subsection, we show the impact of the error threshold on model performance. Note that indicates the percentile used to determine the threshold, so as to exclude, with high probability, the clusters that potentially contain only normal segments. For example, indicates that we first sort the scores of all segments in non-decreasing order and take the output score of the segment that lies at the percentile. This score is used as the threshold, and all representative segments with a predicted score below it are discarded from the representative set . Figure 8 shows the performance variation with respect to different values of for five datasets. As can be seen, for relatively low values (i.e., 20–35%), the performance is fairly stable across all datasets. This is because, with a low , the model rejects the segments of a given video that have a sufficiently low output score, which minimizes the chance of including normal segments from abnormal videos. Lowering further may admit a considerable number of normal segments, causing the model to misidentify other similar normal segments as anomalies. On the other hand, choosing a very high results in a drop in performance. In this case, some potentially abnormal segments may be left out of the loss function, so the model has less exposure to different types of abnormal frames, which degrades performance. In sum, as long as is chosen in the relatively low range (e.g., 20–35% gives very stable results), we obtain stable and nearly optimal performance.
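The percentile-based threshold can be sketched as follows, assuming the percentile is taken over the scores of the segments in a single video and that a nearest-rank convention (index $\lfloor p \cdot n / 100 \rfloor$ into the sorted scores, clipped to the last element) selects the threshold score. Both the convention and the helper names are hypothetical.

```python
def percentile_threshold(scores, p):
    """Score at the p-th percentile of the non-decreasingly sorted scores
    (nearest-rank style; an assumed convention)."""
    ordered = sorted(scores)
    idx = min(len(ordered) - 1, int(p * len(ordered) / 100))
    return ordered[idx]


def filter_representatives(rep_indices, scores, p):
    """Drop representatives whose score is below the percentile threshold."""
    tau = percentile_threshold(scores, p)
    return [i for i in rep_indices if scores[i] >= tau]


scores = [0.1, 0.1, 0.9, 0.95, 0.85, 0.1, 0.1, 0.6, 0.55, 0.1]
```

On this toy video, a 30th-percentile threshold equals the background score 0.1 and keeps every representative, while a 60th-percentile threshold of 0.6 keeps only the abnormal representatives, mirroring the trade-off discussed above.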

Impact of the constraint

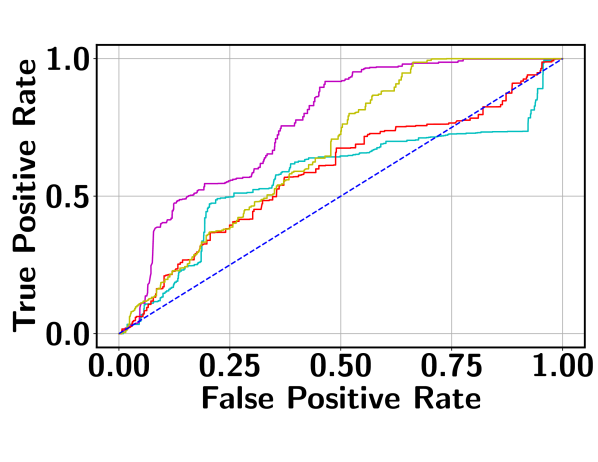

Note that in our approach only provides an upper bound on the number of selected segments; the actual number is determined by the non-parametric model together with the prediction threshold . This addresses a fundamental issue in top-k models, in which a fixed k has to be set for all videos. Figure 9 shows that stable performance can be achieved for a wide range of values, as long as it is not set so small that it excludes some representative abnormal segments.

Impact of

We would like to emphasize that BN-SVP does not require setting directly, which is highly challenging. By leveraging the prediction scores of instances and their mixture assignments, BN-SVP implicitly sets to balance the MIL loss and the diversity of the set . Specifically, because of the constraint , the set contains no more than segments. Excluding segments with low prediction scores has the effect of decreasing to reduce the MIL loss. Similarly, instead of choosing the instance with the largest pairwise similarity to all other instances with the same mixture assignment, BN-SVP chooses the instance with the highest prediction score, which can also be viewed as choosing a smaller to reduce the MIL loss.

| Dataset | UCF-Crime | Avenue | Multimodal | ShanghaiTech | Outlier |
|---|---|---|---|---|---|
| w/ augmentation | | | | | |
| w/o augmentation | | | | | |

Impact of Augmentation

We compare the performance (AUROC) with augmentation () and without augmentation (). Table 5 shows the results for different datasets. As can be seen, BN-SVP with augmentation consistently outperforms the variant without augmentation on all datasets. Without augmentation, the model switches quickly from one state to another in response to small visual changes and may fail to maintain temporal persistence when discovering scenes, which lowers performance. We also show the significance of augmentation through a qualitative analysis in Appendix C.4.
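This section does not spell out the augmentation itself. Assuming it refers to the extra self-transition mass of the sticky HDP-HMM of Fox et al. [4] (the self-transition parameter listed in Table 3), the relevant prior can be written as follows; the symbols $\alpha$, $\gamma$, $\kappa$, $\beta$, and $\pi_j$ follow Fox et al. and are not necessarily the paper's notation.

$$\beta \mid \gamma \sim \mathrm{GEM}(\gamma), \qquad \pi_j \mid \alpha, \kappa, \beta \sim \mathrm{DP}\!\left(\alpha + \kappa,\ \frac{\alpha\beta + \kappa\,\delta_j}{\alpha + \kappa}\right),$$

$$\mathbb{E}\left[\pi_{jj} \mid \beta\right] = \frac{\alpha\beta_j + \kappa}{\alpha + \kappa}.$$

Setting $\kappa = 0$ recovers the standard HDP-HMM, where the expected self-transition probability is only $\beta_j$; increasing $\kappa$ pushes it toward 1, which discourages the rapid state switching described above.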

C.3 Additional Qualitative Analysis

To show the effectiveness of the proposed approach in handling multimodality, we compare BN-SVP with MMIL on illustrative examples. Figure 10 shows two frames from the TEST06 video in Avenue with different anomaly types. In the first, an object is thrown; in the second, a person is walking in the wrong lane. As the first anomaly is more obvious, both BN-SVP and MMIL correctly predict it as abnormal. For the second, our proposed approach correctly detects it as abnormal while MMIL fails to do so. Owing to the submodularity diversified loss, BN-SVP is more likely to include less obvious frames (e.g., Figure 10 (b)) during training, and as a result it makes the correct prediction. MMIL, on the other hand, picks only the segment with the maximum score and is therefore more likely to miss less obvious frames during training, which results in similar frames being misidentified as normal.

C.4 Effectiveness of Bayesian Non-Parametric Video Partition

In this section, we present representative frames from the mixture components (i.e., sub-scenes) discovered by the proposed Bayesian non-parametric video partition process. The purpose is to demonstrate that BN-SVP automatically groups semantically coherent segments into the same mixture components. This significantly facilitates the optimization of the submodular function that chooses a diverse set of segments, and allows some of the most representative segments to participate in the MIL loss. Figure 11 shows frames randomly selected from different mixture components of video 01_0162 from the ShanghaiTech dataset. As shown in Figure 11 (a), the frame does not contain any person, and its associated component (i.e., Component 0) mostly consists of background segments (which the model predicts as normal). In Figure 11 (b), there are multiple people in the frame: someone is riding a bike in the wrong lane while a second person is pointing at another group of people. This frame is assigned to a newly created component (i.e., Component 1) since it looks quite different from the previous frames. Given the abnormal behavior in the frame, the model also predicts it as an anomaly. Next, in Figure 11 (c), as the bike starts to vanish from the camera view, the frame looks different from (b), so the model assigns it to a new component, Component 2. Although (b) and (c) are both abnormal, the latter is much less obvious than the former. Because they are distinct, they are assigned to different mixture components, so both can be chosen when optimizing the submodular function and participate in model training. Finally, in Figure 11 (d), the bike has completely disappeared from the frame and only a group of people walking normally remains. The frame is therefore assigned to Component 3, and the model predicts it as normal.

Appendix D Link to Source Code

The source code is available at https://github.com/ritmininglab/BN-SVP.