Bayesian optimization of hyper-parameters in reservoir computing

Abstract

We describe a method for searching the optimal hyper-parameters in reservoir computing, which consists of a Gaussian process with Bayesian optimization. It provides an alternative to other frequently used optimization methods such as grid, random, or manual search. In addition to a set of optimal hyper-parameters, the method also provides a probability distribution of the cost function as a function of the hyper-parameters. We apply this method to two types of reservoirs: nonlinear delay nodes and echo state networks. It shows excellent performance on all considered benchmarks, either matching or significantly surpassing results found in the literature. In general, the algorithm achieves optimal results in fewer iterations when compared to other optimization methods. We have optimized up to six hyper-parameters simultaneously, which would have been infeasible using, e.g., grid search. Due to its automated nature, this method significantly reduces the need for expert knowledge when optimizing the hyper-parameters in reservoir computing. Existing software libraries for Bayesian optimization, such as Spearmint, make the implementation of the algorithm straightforward. A fork of the Spearmint framework along with a tutorial on how to use it in practice is available at https://bitbucket.org/uhasseltmachinelearning/spearmint/

keywords:

reservoir computing , hyper-parameter optimization , Gaussian processes , Bayesian statistics1 Introduction

Error backpropagation combined with stochastic gradient descent (SGD) is a simple and highly successful training method for feedforward neural networks [1, 2]. In contrast, training recurrent neural networks with this method poses considerable difficulties [3]. Different approaches such as, e.g., more complicated training schemes [4] or different architectures [5, 6] have been explored to tackle this problem, and have been quite successful. A popular method skips the training stage of the recurrent network completely. Only the connections of the network to the output are trained. As a result, the training stage is computationally fast and straightforward to implement. This training paradigm goes by the name of reservoir computing (RC) [7]. Despite its simplicity compared to other techniques, it achieves state-of-the-art results on several machine learning tasks [7]. However, on large datasets and more challenging benchmarks, RC techniques fall short of e.g. LSTMs [5], which have become feasible in recent years. Therefore, RC techniques are now mainly being developed in the form of hardware implementations, using e.g. photonics [8, 9, 10, 11] or electronics [12], which could be computationally faster compared to digital ones.

Although the training stage is dramatically simplified, one still needs to set several hyper-parameters that determine the general properties of the network, such as its size and spectral radius. Hyper-parameter optimization (HO) requires an experienced researcher, i.e., acquiring optimal results still necessitates expert input [13]. It is, therefore, of interest to automate the search for hyper-parameters in reservoir computing. A straightforward HO method is grid or random search, which is suitable for finding the optimum when considering only a few hyper-parameters. If there are many hyper-parameters such an approach is not viable, because the volume of the hyper-parameter space grows exponentially with the number of hyper-parameters. A step by step plan for manual HO for RC is provided in [7]. As noted in [7], automated HO is a common topic in machine learning, and these methods are applicable to RC. A few methods have been proposed for automated HO, including Particle Swarm Optimization (PSO) [14, 15, 16], various forms of Genetic Algorithms (GAs) [17, 18, 19], and stochastic gradient descent applied to the hyper-parameters [13].

In this paper, we show that a Gaussian process with Bayesian optimization is able to achieve an optimal choice for RC hyper-parameters in an automated way. The method is applied to two types of reservoirs: dynamical nonlinear delay nodes (NDNs) and echo state networks (ESNs). For both the NDN and ESN, our results either match the considered benchmarks, or surpass them by one or several orders of magnitude. The implementation of Gaussian processes with Bayesian optimization is non-trivial. We therefore use the Spearmint library [20], which was developed in the context of HO in machine learning. HO with Bayesian optimization matches or surpasses human performance on several machine learning tasks and for different algorithms, such as deep learning and support vector machines [20]. However, it seems to fail completely on other tasks [21]. Because we achieve good results for all considered types of reservoirs and benchmarks, our work indicates that its performance for HO in RC is robust.

The paper is organized as follows. In Section 2, we describe the ESN and NDN formalisms. The theory of Bayesian optimization of Gaussian processes is briefly sketched in Section 3. In Section 4, we describe the benchmark tasks. Details of the implementation are discussed in Section 5. In Section 6, we present and discuss the results. In Section 7, we discuss the behaviour of the Bayesian search process. We end with a conclusion and outlook to possible future work in Section 8.

2 Reservoir Computing

2.1 Echo state networks

Echo state networks were introduced independently by Herbert Jaeger [22] and Wolfgang Maass (under the name Liquid State Machines [23]). The idea is to use a recurrent neural network which is fixed, i.e., its connection weights and any biases are not trained. One can drive this network using an input, which will change the state of the network (i.e., the value at each of the nodes). The output is then constructed using a linear combination of all, or a subset of, the node values. The weights of this linear combination are most commonly obtained using linear regression. The values of the nodes are updated according to the rule:

| (1) |

where is the -dimensional state vector of the network at time , is the -dimensional input vector at time , is the reservoir weight matrix, is the input weight matrix, is a constant bias term and is a sigmoid function (in our case we use the function).

The reservoir weight matrix is rescaled as , where is the spectral radius of the network (i.e., the largest eigenvalue of ) and is a scaling parameter (effectively the spectral radius of the rescaled network). This rescaling operation is a popular choice in the reservoir computing literature. It, however, does not guarantee the echo state property [24]. See, e.g., [25, 26] for rigorous discussions on sufficient conditions for the echo state property.

The output is given by:

| (2) |

where the output weights matrix is obtained using, e.g., linear regression. We performed linear regression using ridge regression (a.k.a. Tikhonov regularization), which is a modified version of the linear regression equations [7]:

| (3) |

where is the identity matrix, is the regularization parameter, and is the matrix of all reservoir states. Performance is measured with the normalized mean squared error (NMSE):

| (4) |

where is the predicted value and is the target value. The denominator is simply the variance of the test set under consideration. The root mean squared version (NRMSE) is:

| (5) |

2.2 Nonlinear delay nodes

The concept of a nonlinear delay node as a reservoir was introduced in [27]. It can be implemented in hardware with optical and electronic components [28, 29, 30, 12, 31, 8, 9, 10, 11, 12]. We use two different types of NDNs.

2.2.1 Mackey-Glass delay differential equation

Reservoir states can be computed by solving a delay differential equation. In this work, we consider the Mackey-Glass (MG) differential equation with delay time :

| (6) |

where , , and are adjustable parameters, is the state of the system, and is the input. We note that other delay differential equations can be used equally well.

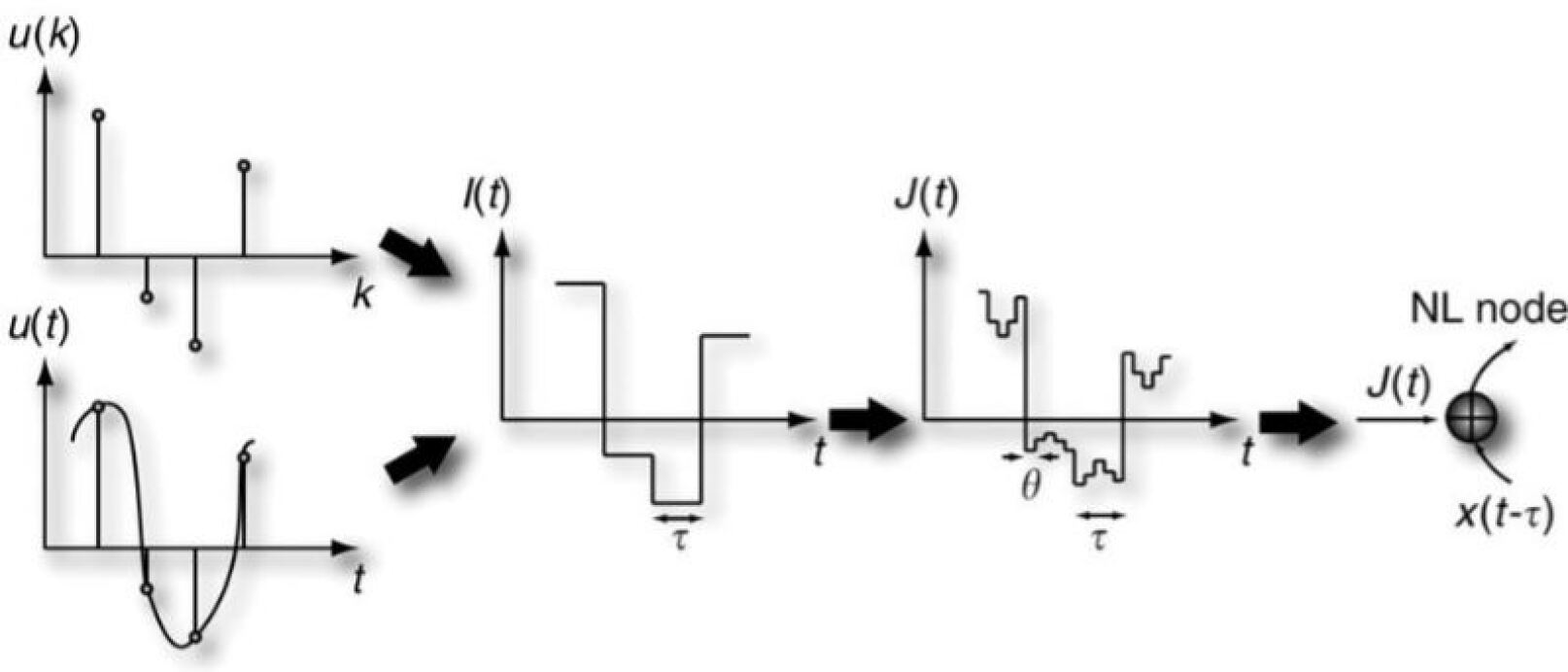

The reservoir states are calculated as follows. Consider a discrete (or discretized) one-dimensional signal , with integer. We want to map each scalar value to a reservoir state of dimension . This reservoir state is obtained by solving the MG differential equation (6). Solving over a time interval enables us to compute one reservoir state. Suppose we start at time and end at . The input is calculated by multiplying with a mask . For a reservoir of dimension , the mask is a set of values, which we take to be either -1 or 1: is a random element from the set . By multiplying the mask with the signal, , one finds values, equal to , which are constant over a time

This preprocessing sequence of the signal is illustrated in Figure 1.

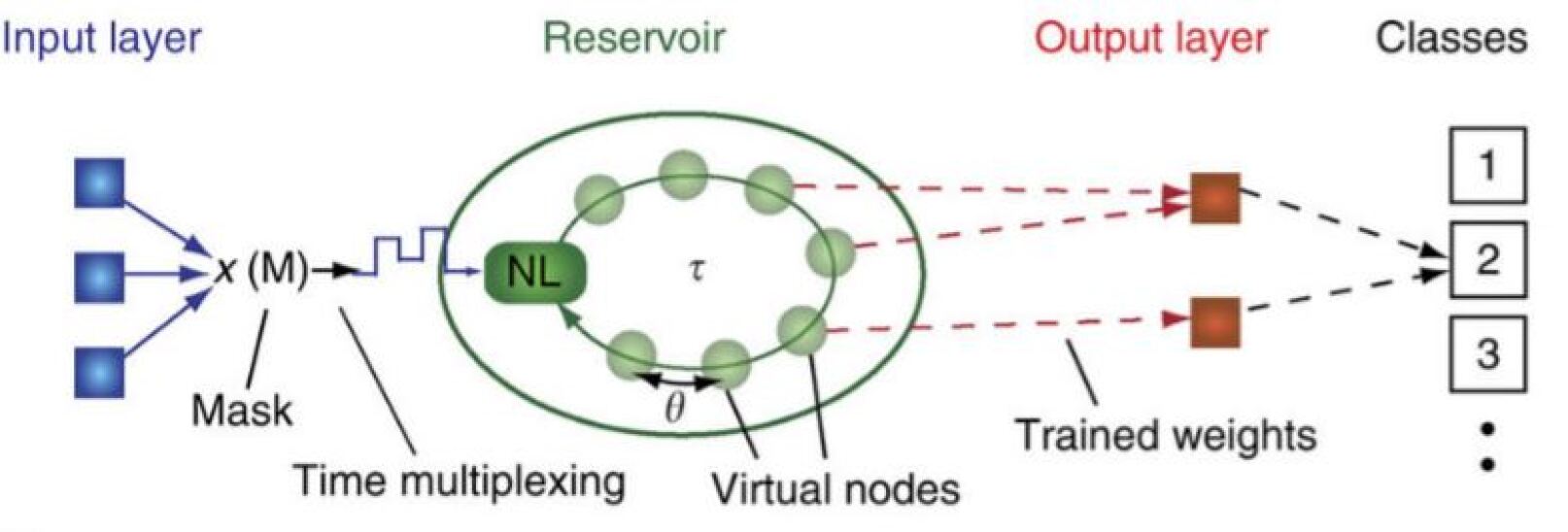

Given the input , the MG equation can be solved. The reservoir state , , is equal to the value of at time . The following reservoir state is then calculated by solving equation (6) from to with input , and so on. The times at which the solution to the MG equation is read can be interpreted as nodes from a network. They are referred to as “virtual nodes”. Training of the connections between the reservoir state and the output is done the same as with ESNs. The whole NDN system is illustrated in Figure 2.

It is clear that the reservoir states depend on the previous state, via , and the input, via . The delay-dynamical equation therefore functions as a recurrent network. It is important that the values of the input mask alternate. Otherwise, the differential equation relaxes to its stationary value, and the dynamics cannot perform a projection to a rich set of reservoir states, cf. the discussion in [27].

The parameters in the MG dynamics equation (6) have specific functions. The input scaling determines how strongly the dynamics is influenced by new input values . determines the influence of both and . The parameter determines the nonlinearity of the dynamics. If other nonlinear delay differential equations are used, the parameters in those equations will have similar roles.

2.2.2 Sine

First proposed in [29], this version of the nonlinear delay node uses an architecture similar to that of [27] (reviewed in Section 2.2.1), but with a simpler dynamics. The update rule for the reservoir states is given by:

| (7) |

where , and are adjustable parameters, represents the state of the th virtual node at discrete timestep , is the total number of virtual nodes, represents the th value of the mask (chosen at random from as before), represents the input at discrete timestep and . In our implementation we set , where denotes the floor function. Further details can be found in [29].

3 Gaussian Processes

When fitting a function, several approaches are possible. One option is to consider a class of functions, for example all linear functions. Another approach is to specify a probability distribution over all possible functions, where more likely functions have higher probabilities. The latter approach can be achieved with Gaussian processes (GPs). A GP is equivalent to Bayesian linear regression with an infinite number of basis functions [32]. It is therefore possible to fit a large class of functions exactly. A function fitted by a GP probability distribution is denoted by

| (8) |

The vector are the hyper-parameters of the reservoir and is the error function, e.g., for the Mackey-Glass NDN. Note that the symbol is also used for the reservoir states. Which one is meant should be clear from the context. Gaussian processes are able to describe probability distributions over complicated functions, which is what we need for the optimization algorithm. For an introduction to Gaussian processes, we refer to [32]. Several applications are discussed in [33].

A GP is completely specified by its mean function and covariance function . gives the average function value at . Values at different positions and are correlated by an amount , also called the kernel. In practice, this kernel is used to ensure that points close to each other in hyper-parameter space are likely to have similar values, which follows from an assumption about the behaviour of . Intuitively, we expect small changes in the objective function if the hyper-parameters are changed slightly.

A GP that models well is achieved by performing measurements of , and consequently updating and to take the obtained information into account. This updating is done with Bayesian statistics [34]. Before any measurements are performed, one needs to set a prior, i.e., guess a reasonable form for and . An example would be for all and the covariance function

| (9) |

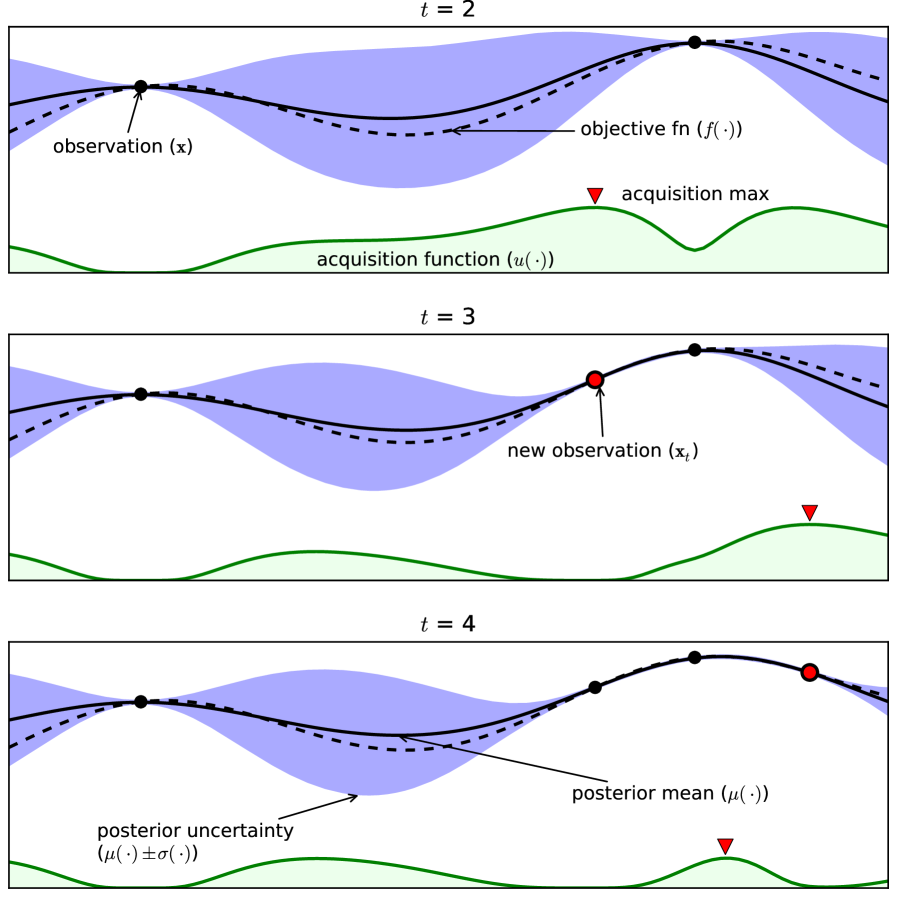

with an adjustable parameter. This covariance function is a popular choice, although it is too smooth for most machine learning problems. In this paper, the automatic relevance detection Matérn 5/2 kernel is used [20]. After each measurement one can update and to obtain the posterior GP. An illustration of a GP and its evolution when measurements are performed is shown in Figure 3. We note that it is possible to incorporate the effect of noise in the measurement process.

One can analytically calculate the mean and variance of the function distribution at each . The choice of where to perform a new measurement is not only determined by the improvement in the average of (exploitation). It is also influenced by how much new information we gain by performing the measurement, which is determined by the reduction in variance (exploration). The balance between exploitation and exploration is determined by the acquisition function, which needs to be chosen by the researcher. The acquisition function used here is the expected improvement. An example of this acquisition function is shown in Figure 3. A discussion of several acquisition functions can be found in [33].

The hyper-parameters of the GP itself, such as in Eq. (9), can also be optimized. A more practical method is to average these parameters out, e.g. with Bayesian statistics. This leads to a completely parameter free algorithm. The implementation of the GP with Bayesian updating is done with Spearmint [20]. This library contains several non-trivial extensions, such as averaging out the hyper-parameters of the GP with Bayesian statics, and input warping [35]. Input warping deals with the problem of being non-stationary, i.e., the covariance function is not invariant under translations in the input space. Error functions in machine learning are generally non-stationary: different regions in the hyper-parameter space have different length scales of function variation. Therefore, a choice of covariance function such as in Eq. (9), which is invariant under translations, cannot model most error functions well. Input warping can be seen as applying a particular kind of non-stationary kernel to the original data, which solves the problem of the non-stationarity of the error function.

Our final choice for the optimal hyper-parameters is the best set found during the search. An interesting question is which functions can be fitted with a GP. A discussion on the convergence of the GP to the average of the underlying function can be found in [32]. There are two necessary conditions for convergence in the limit of an infinite number of measurements: (1) the covariance function should be non-degenerate, which is the case for us; (2) the average of the underlying function should be sufficiently well-behaved, so that it can be represented by a generalized Fourier series. Of more practical importance is whether the search for the minimum converges. This depends on the acquisition function, the function to be estimated, and the prior on the GP. Sufficient conditions for the convergence of GPs with Bayesian optimization were given by Močkus [36]. His assumptions are quite general, so are expected to apply to a large number of error functions, although it is difficult to numerically check whether they are fulfilled. Depending on the implementation, the expected improvement acquisition function can converge near-optimally, although it is possible that the algorithm does not converge at all [37]. We note that, for practical purposes, convergence to the absolute minimum is not necessary. Rather, we are interested in a “good enough” minimum. Besides asymptotic behaviour, one is also interested in the rate of convergence. Recent work has shown that for the upper confidence bound acquisition function, there are algorithms for which convergence of a GP to the optimum is exponentially fast [38, 39]. As discussed in Section 6, the convergence behaviour we observe is also approximately exponential.

We now briefly discuss the differences with other optimization methods. The fit for unseen parameter regions is non-local: it takes into account the information of all measurements. This should be contrasted with SGD, where only local information of the error function determines the parameter update. There is also no exploration component in SGD. If the connection weights of the reservoir output are high, the SGD learning rate becomes exponentially slow [13]. This problem does not occur for GPs. When optimizing hyper-parameters step by step [7], the hyper-parameters are optimized with all others fixed. The interdependency of the hyper-parameters is therefore not fully exploited. This inevitably leads to a sub-optimal end result. In contrast, such interdependency can be represented and taken advantage of with a GP. In contrast to random search, the new measurements are directed towards interesting regions, which means we need far fewer measurements to get good results. This compensates for the slight increase in computation time needed to calculate and update the GP.

4 Benchmark Tasks

In this section we describe the data sets we use to test the performance of the algorithm.

4.1 Santa Fe

4.2 NARMA 10

The Nonlinear Auto-Regressive Moving Average (NARMA) of order 10 was originally introduced for use as a timeseries prediction benchmark in [42]. It is generated by

| (10) |

with a random term with uniform distribution in . The goal is to do a one-step ahead prediction of .

4.3 Nonlinear channel equalization

This task was first introduced in [43] and has since been used as a benchmark several times [44, 29, 45]. The data set is created as follows: An i.i.d. sequence is generated by randomly choosing values from . This signal is then passed through a linear channel described by:

which in turn is passed through a nonlinear channel:

where is an i.d.d. Gaussian noise with zero mean and a standard deviation adjusted to yield signal-to-noise ratios (SNR) ranging from to dB. The objective is to reconstruct given , for several values of the SNR (so is the input and the target is ). Following [44, 46], we shifted by +30. The quality measure of the algorithm is given by the Symbol Error Rate (SER), which is the fraction of incorrect symbols obtained.

5 Implementation

Before discussing the benchmarks, we give some details on how the algorithms are implemented.

5.1 Mackey-Glass dynamics implementation

If is uneven, the MG dynamics Eq. (6) is unstable for negative values. We therefore rescale so that it is always positive. We furthermore add an offset parameter to the mask .

For comparison purposes, the number of nodes is fixed. The (time) distance between the nodes is denoted by . A larger gives a longer relaxation time between the nodes. By definition one has that .

We employ Euler integration to solve the MG equation. The number of integration steps between adjacent nodes determines the precision of the obtained solution. However, the method used to solve the differential equation does not necessarily influence the quality of the reservoir. Put differently, one does not need an exact solution of the differential equation to have a high-quality reservoir. The essential property of the reservoir is a “good” nonlinear projection to a high-dimensional space, which is not necessarily the exact solution of the differential equation. We perform Euler integration with either several integration steps between adjacent nodes (number of steps ), or with one integration step between adjacent nodes. We refer to these methods as, respectively, multi-step integration (MSI) and one-step integration (OSI). Both choices give good results, with the latter method obviously being faster.

For all NDN implementations, ridge regression is performed with sci-kit learn [47].

5.2 Echo state network

5.3 Bayesian optimization

For the implementation of the GP we used the Spearmint library, available at https://github.com/HIPS/Spearmint. A fork of this framework, as well as a step-by-step tutorial detailing both installation and a practical example (NDN MG on NARMA 10), is available at https://bitbucket.org/uhasseltmachinelearning/spearmint/. This code replaces the MongoDB backend with an SQLite one. This removes the need for a background server process, which makes it easier to deploy in a cluster environment or as part of a Jupyter Notebook.

6 Results

6.1 Santa Fe laser data

For the MG NDN, we compare our results with those of [50]. Details of the implementation can be found in A. As in [50], we take . Before the other parameters are optimized, ten different masks are generated, and the best performing one is selected. There are six parameters to optimize: , , , , , and the regularization parameter . Spearmint runs are performed for both OSI and MSI. One needs to specify the boundaries of the hyper-parameter search space. We therefore start by running Spearmint for different boundary settings, to find reasonable areas for the hyper-parameter search. Correct order of magnitudes for the boundaries can also be estimated from previously published results and physical arguments. For different boundary values, the optimum for always converged to a value close to 1. Because a smaller number of variables implies a smaller search space, especially in high dimensions, we set in our final run.

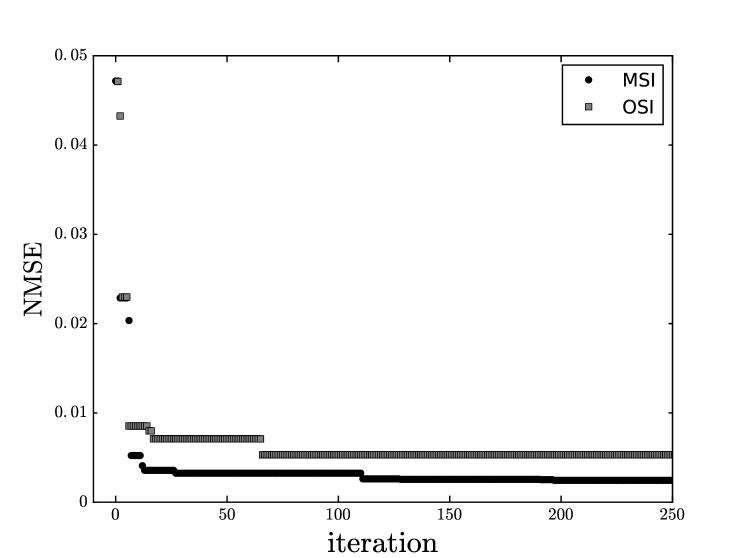

The final results are shown in Table 1. The NMSE for the MSI is an order of magnitude lower than [50], while the OSI result is four times lower. A plot of the NMSE on the validation set as a function of the number of Spearmint iterations is shown in Figure 4. If we start with good hyper-parameter boundaries, the first runs show a strong decrease in error, after which further improvements come in steps. This behaviour qualitatively resembles the exponential convergence speed found in analytical work, cf. Section 3. It should be noted that different runs with small changes in the boundaries of the search space lead to parameter sets that are quite different, but have the same performance.

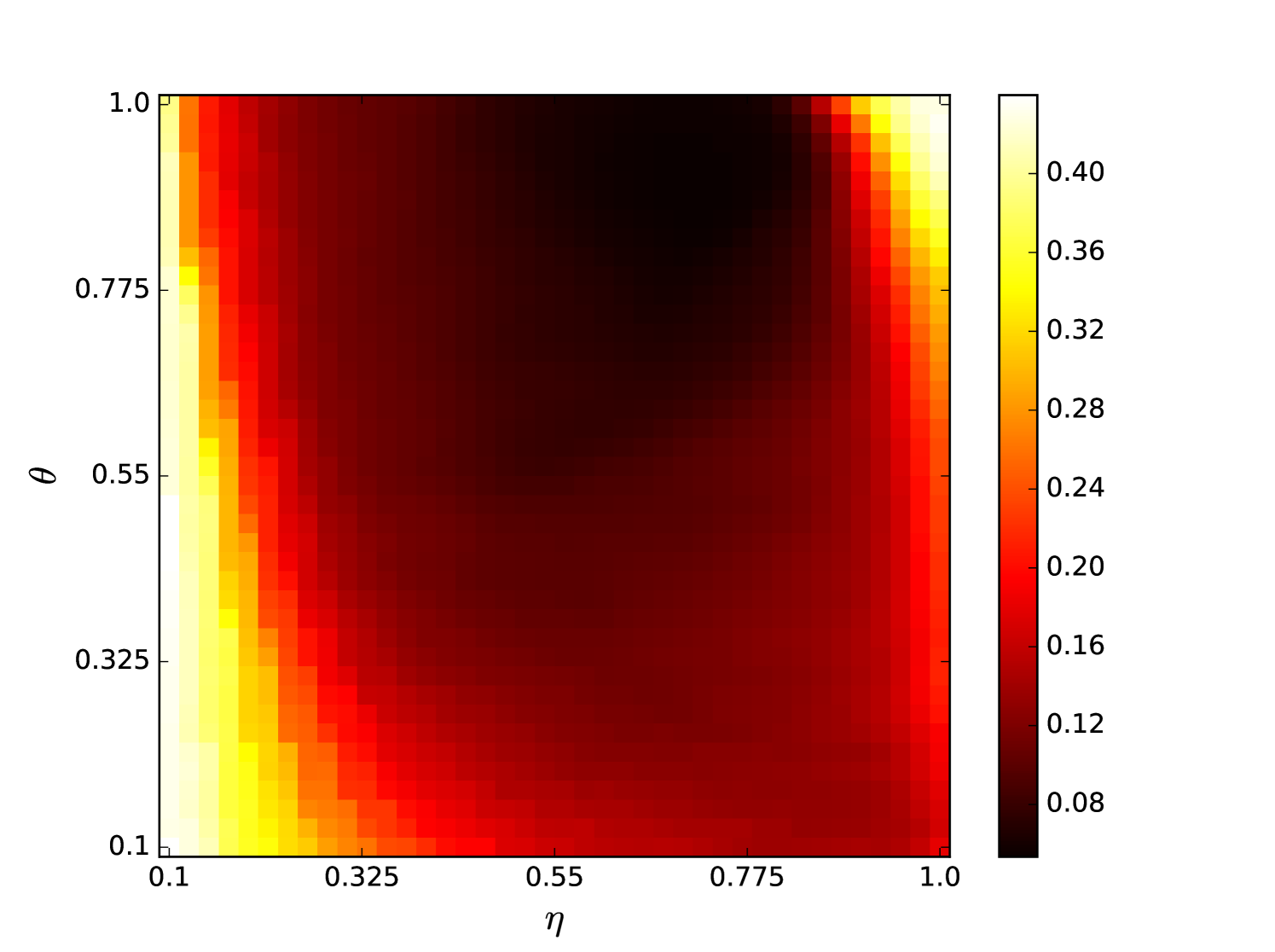

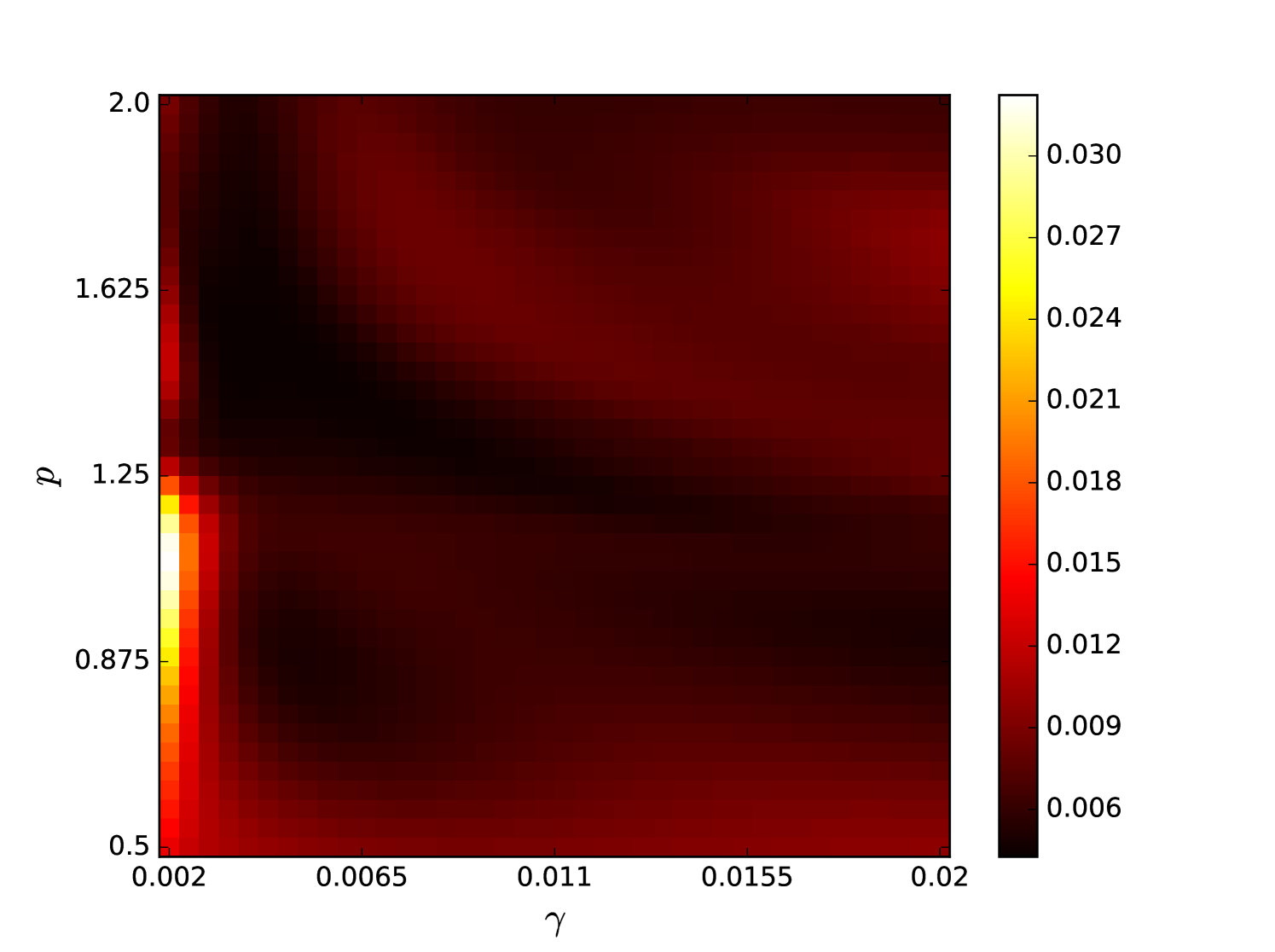

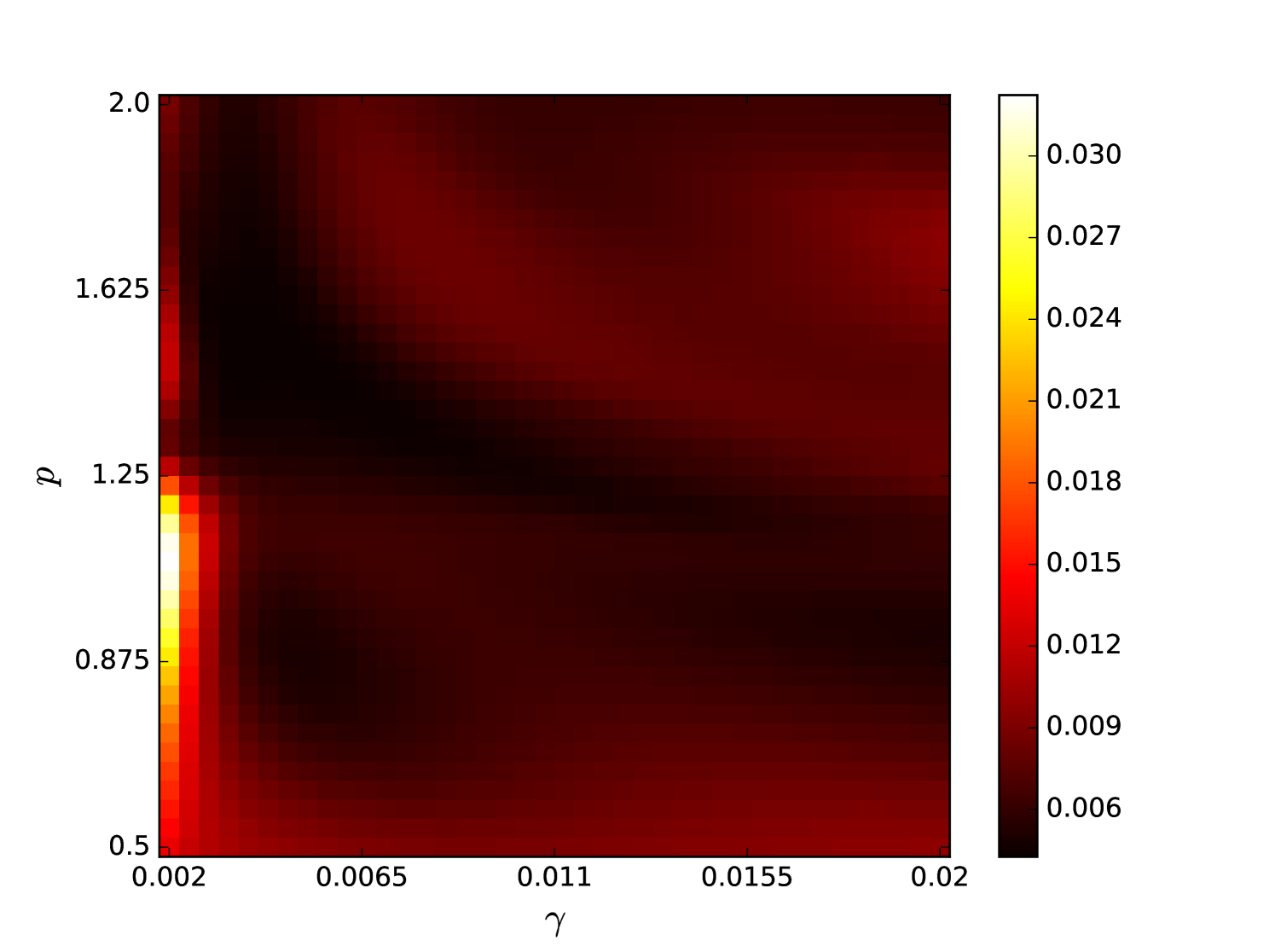

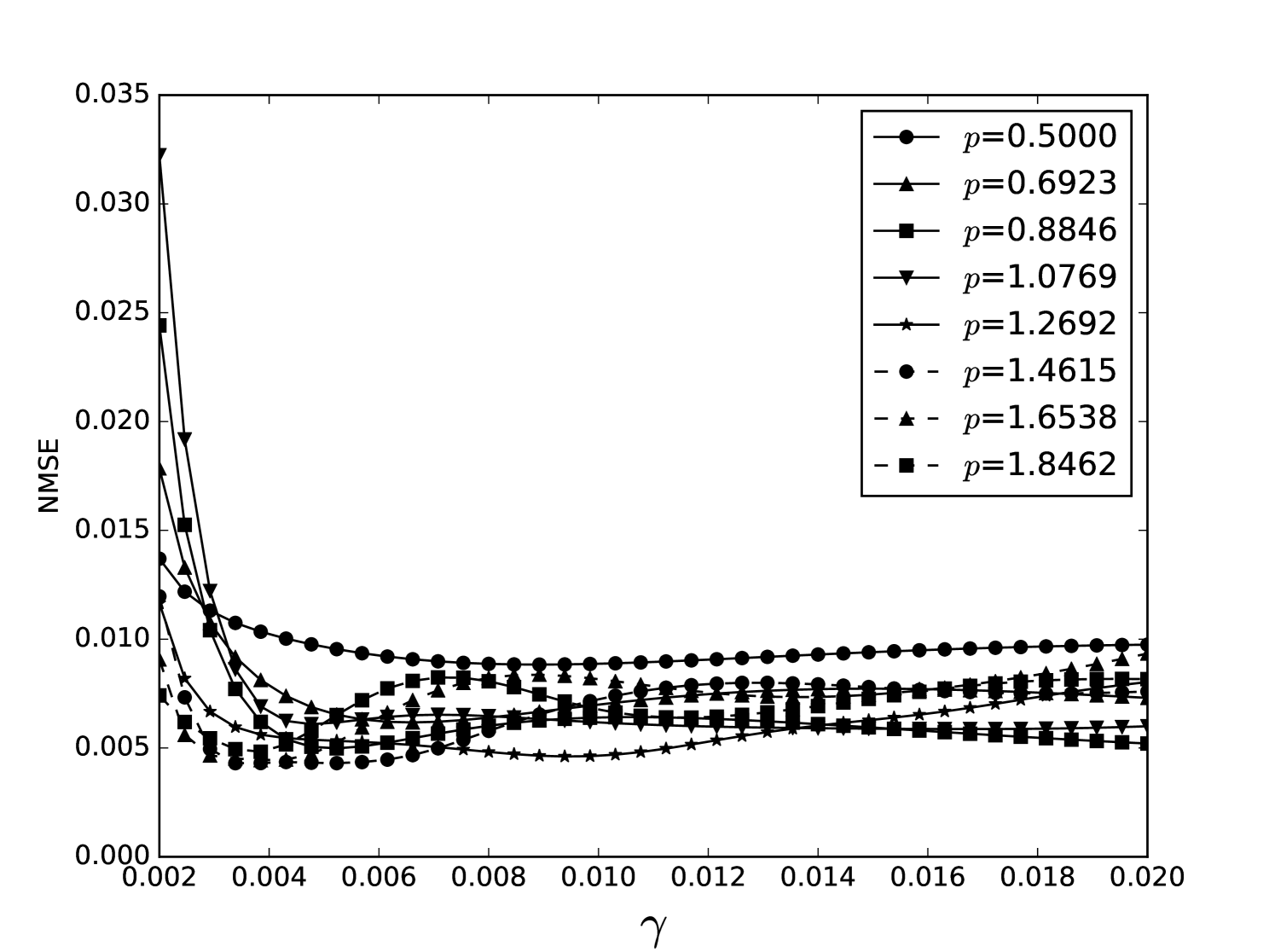

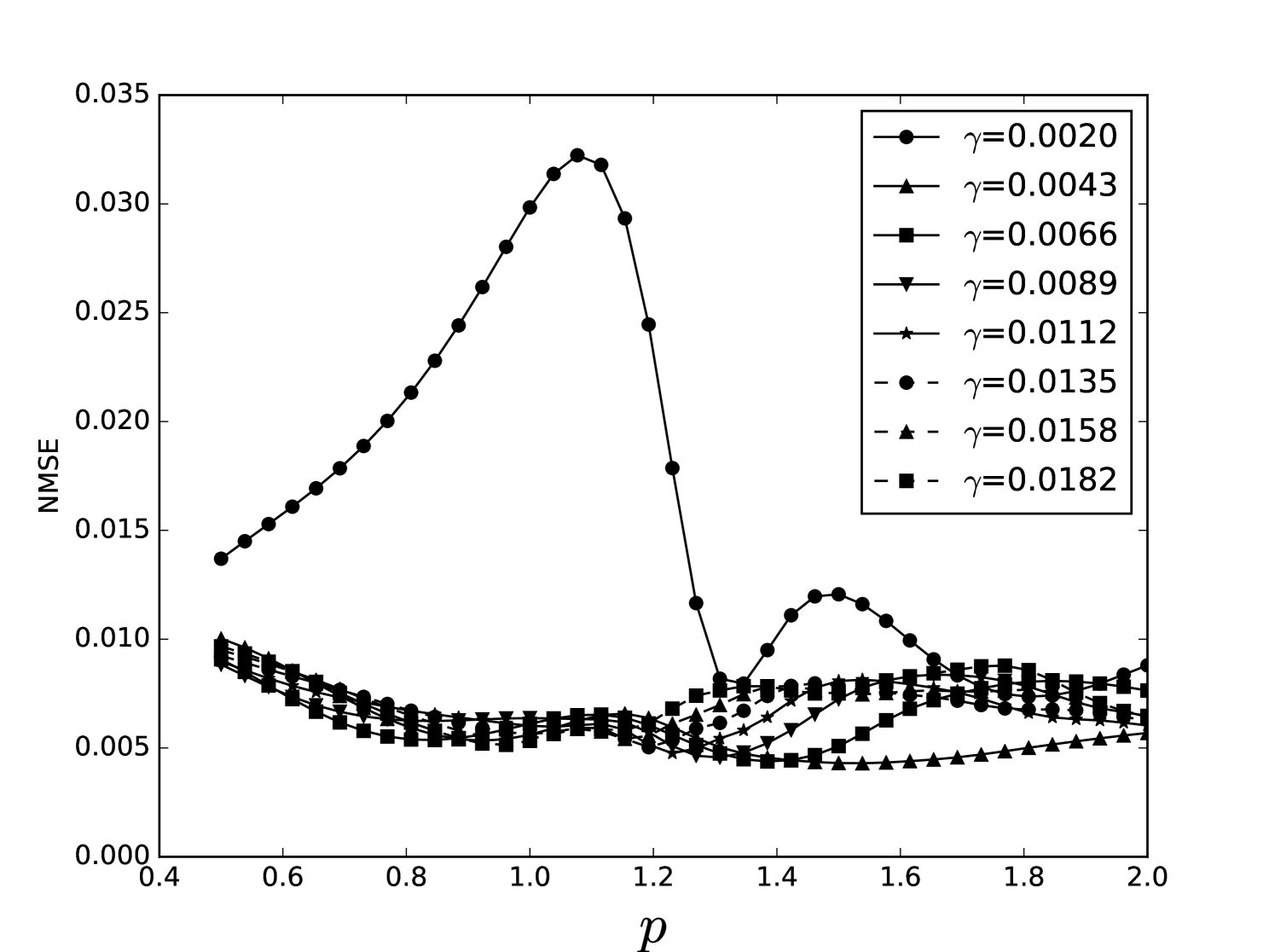

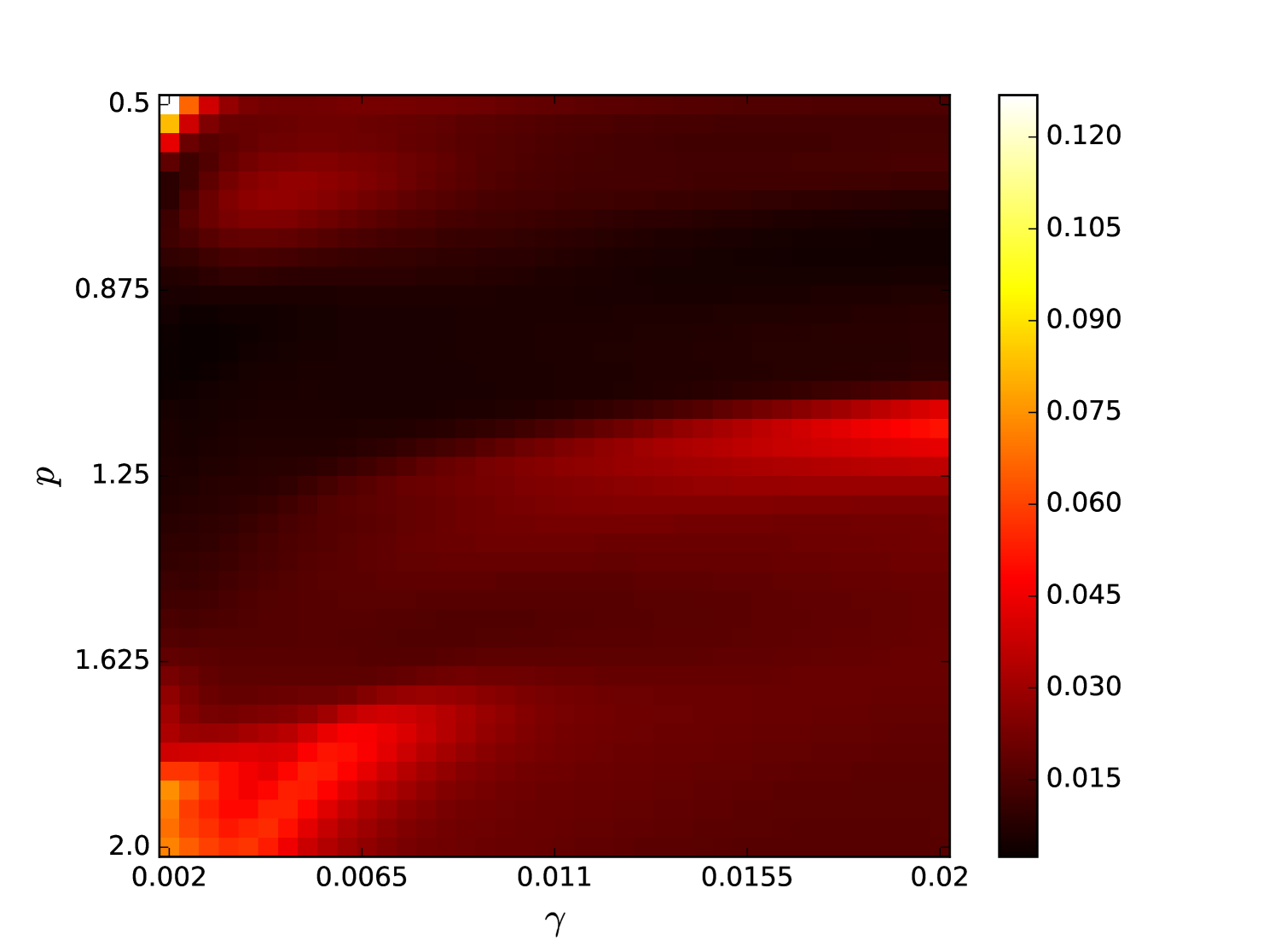

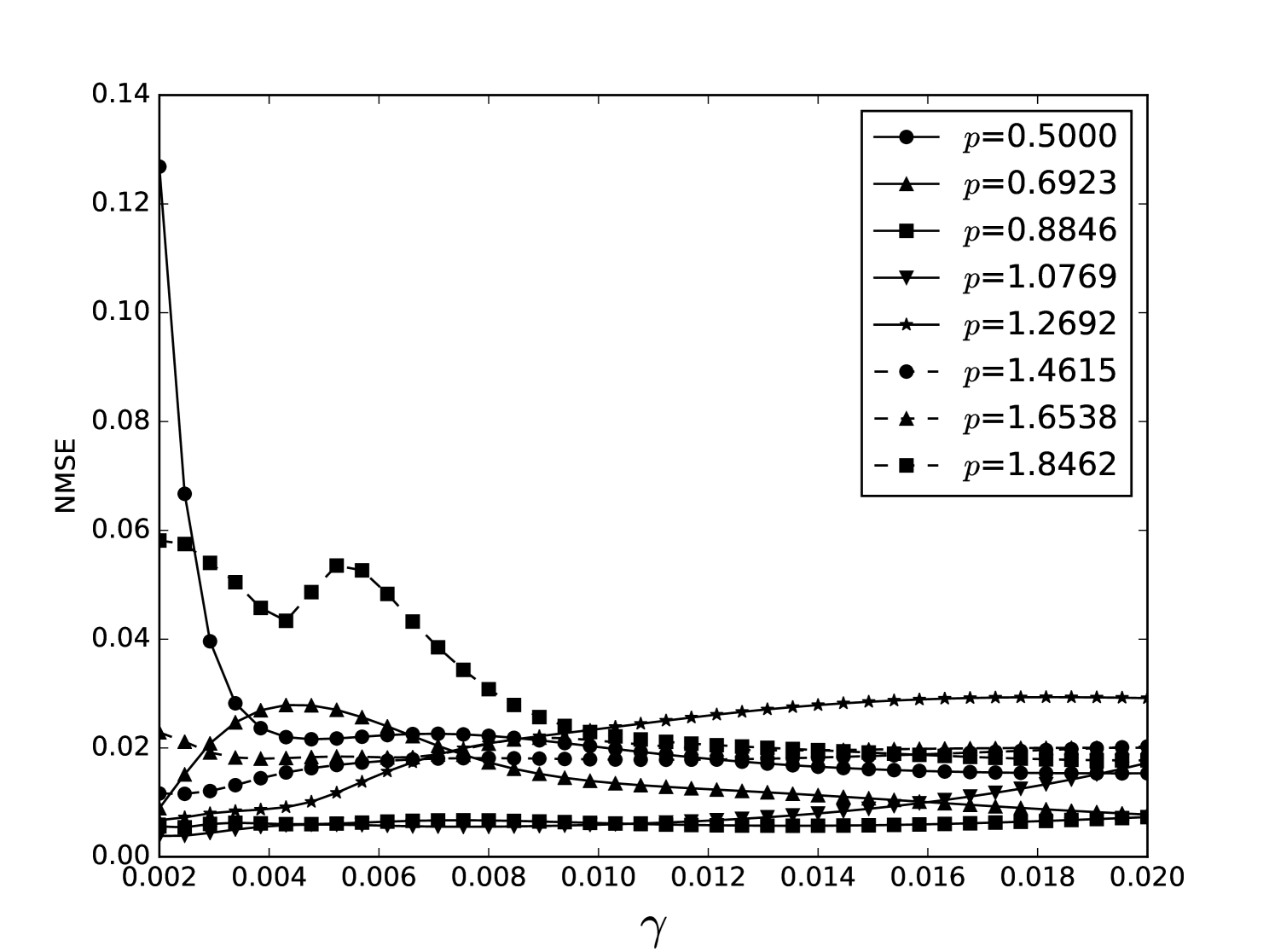

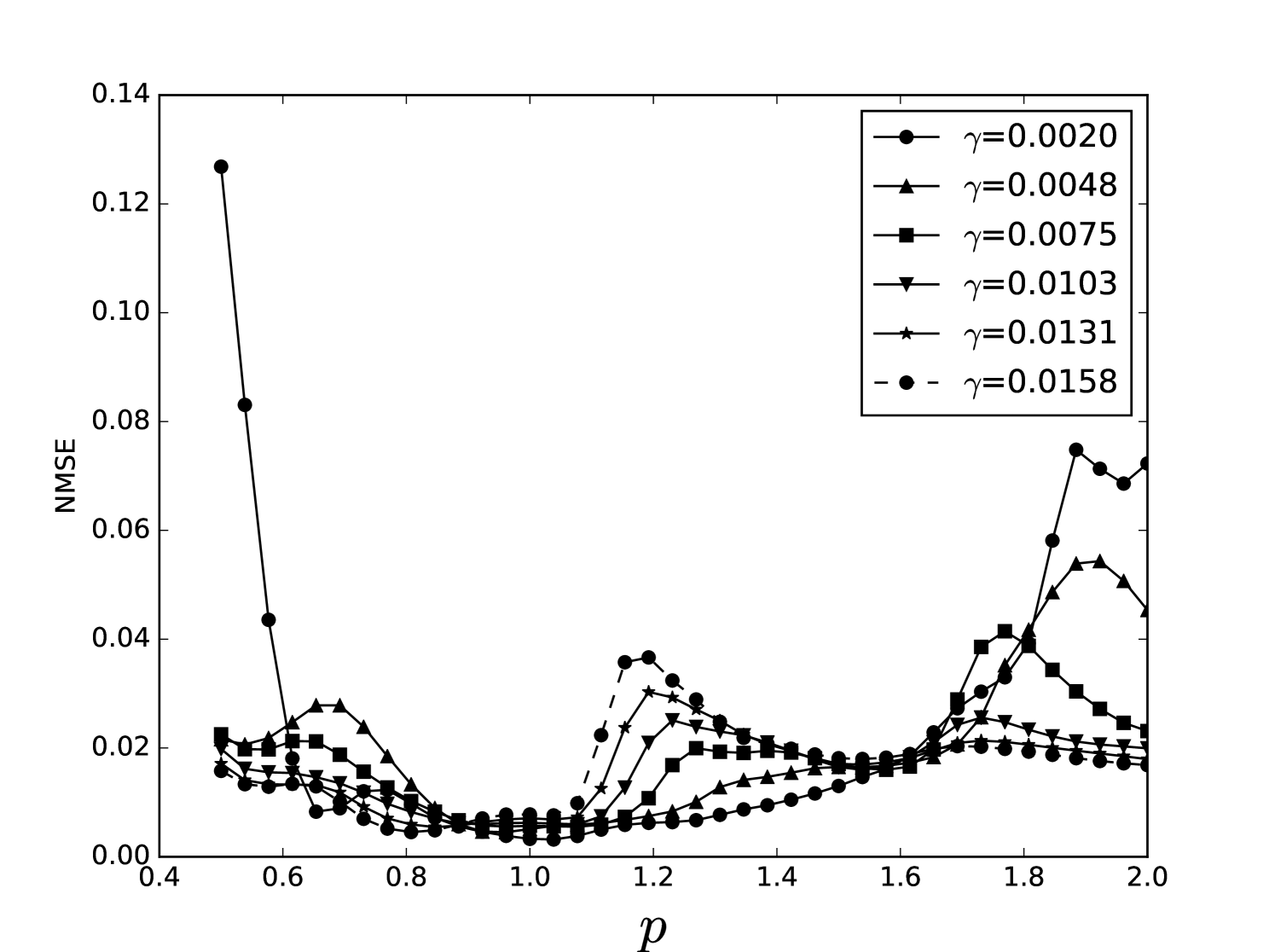

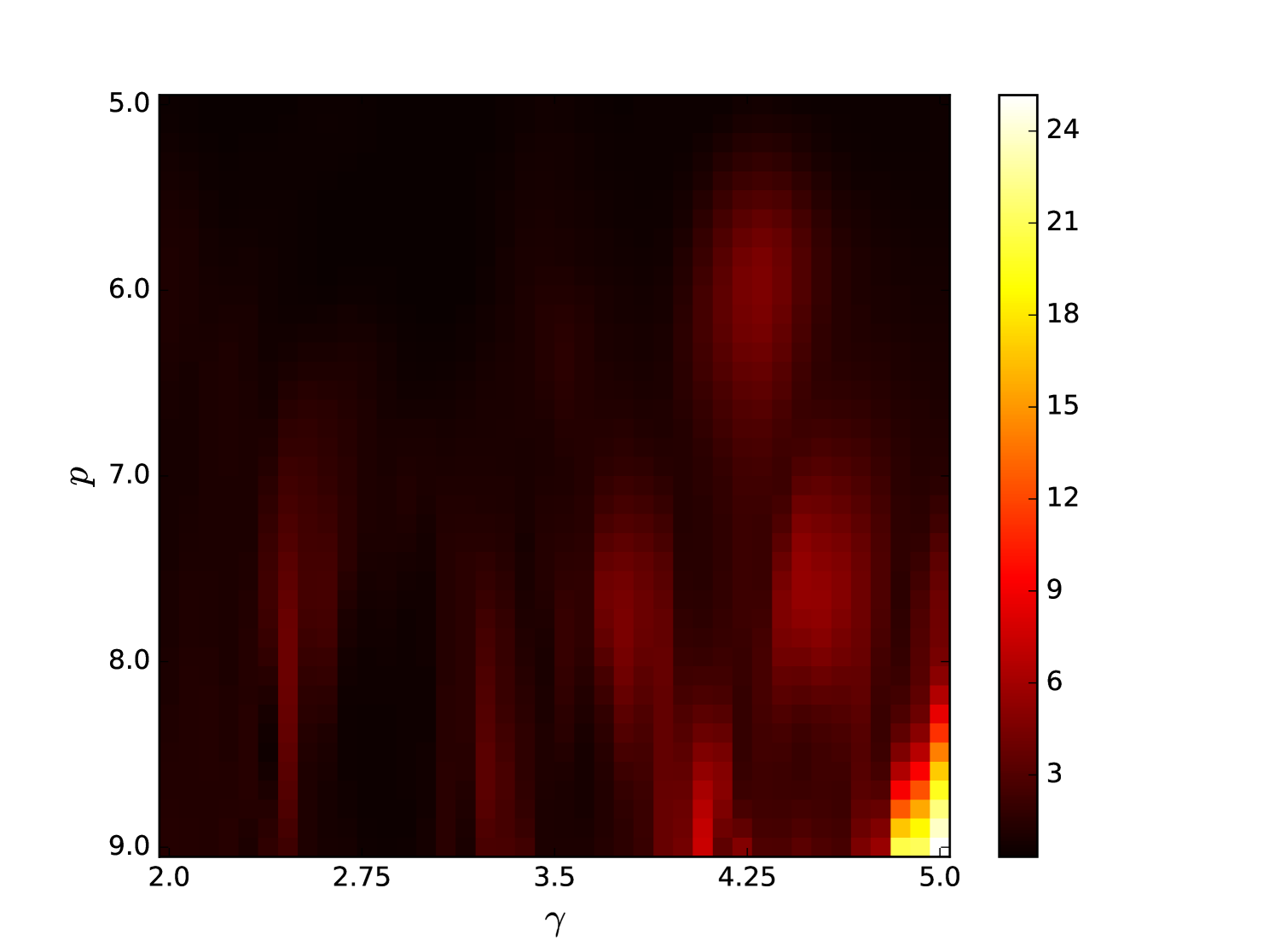

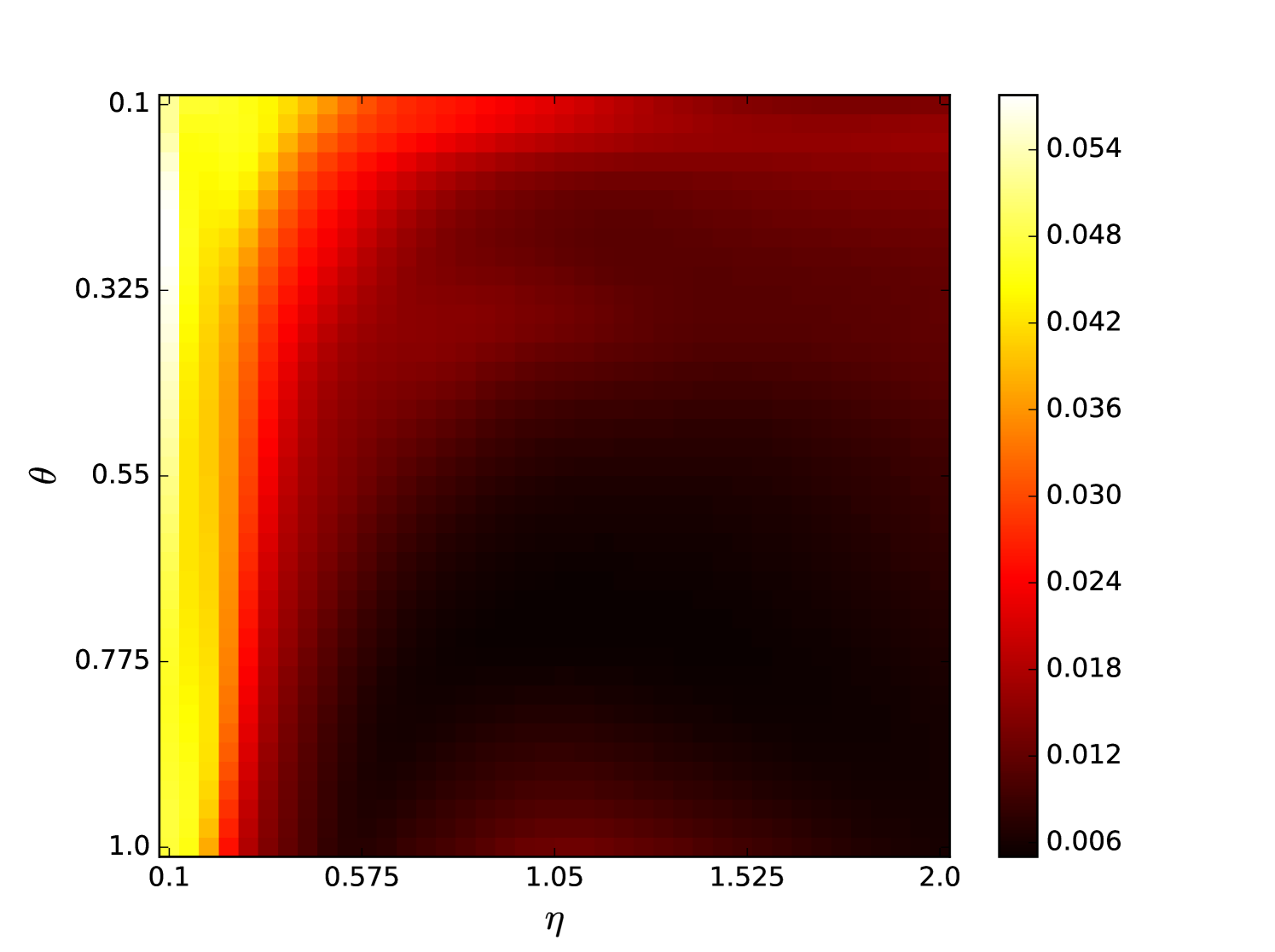

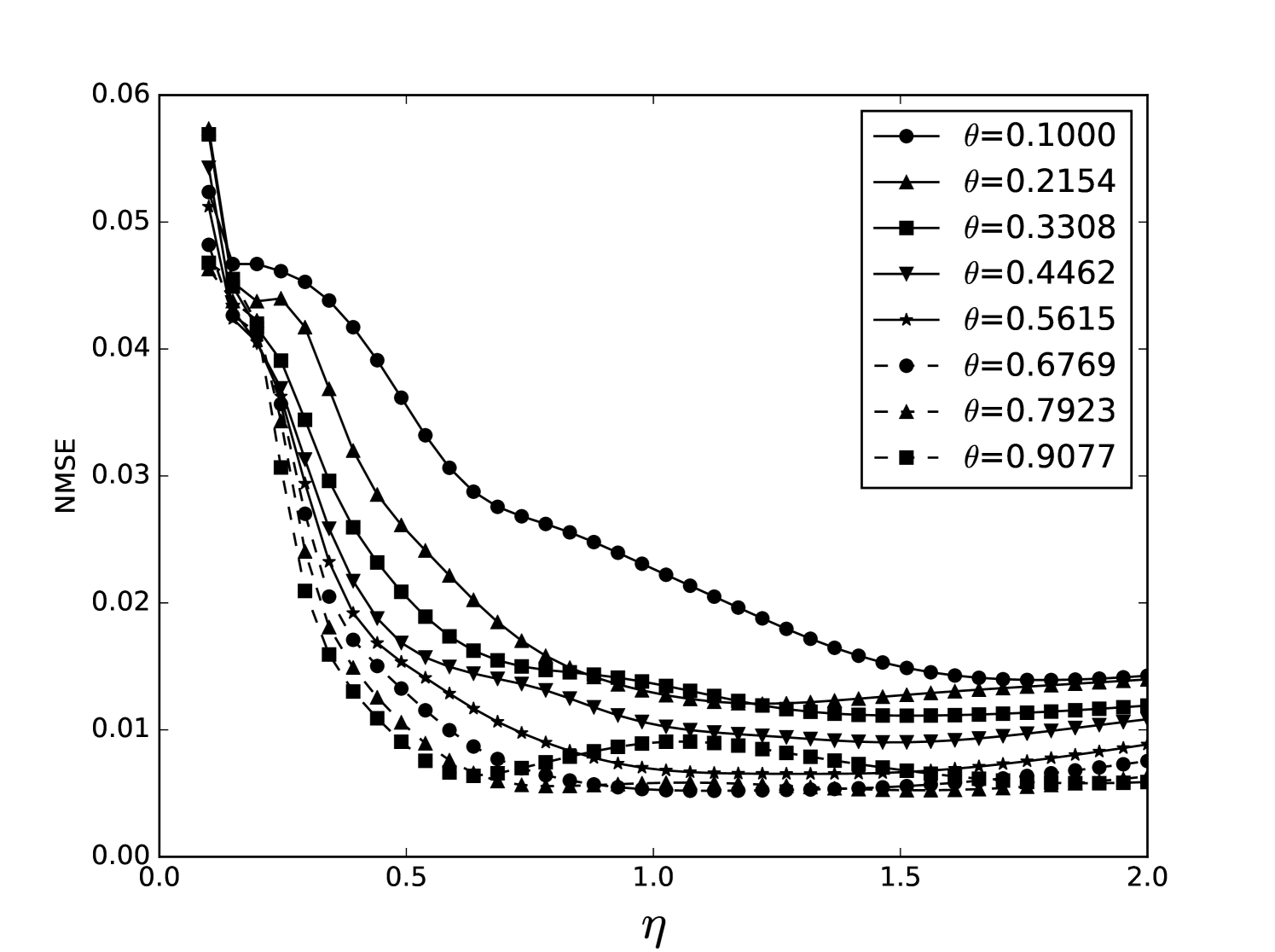

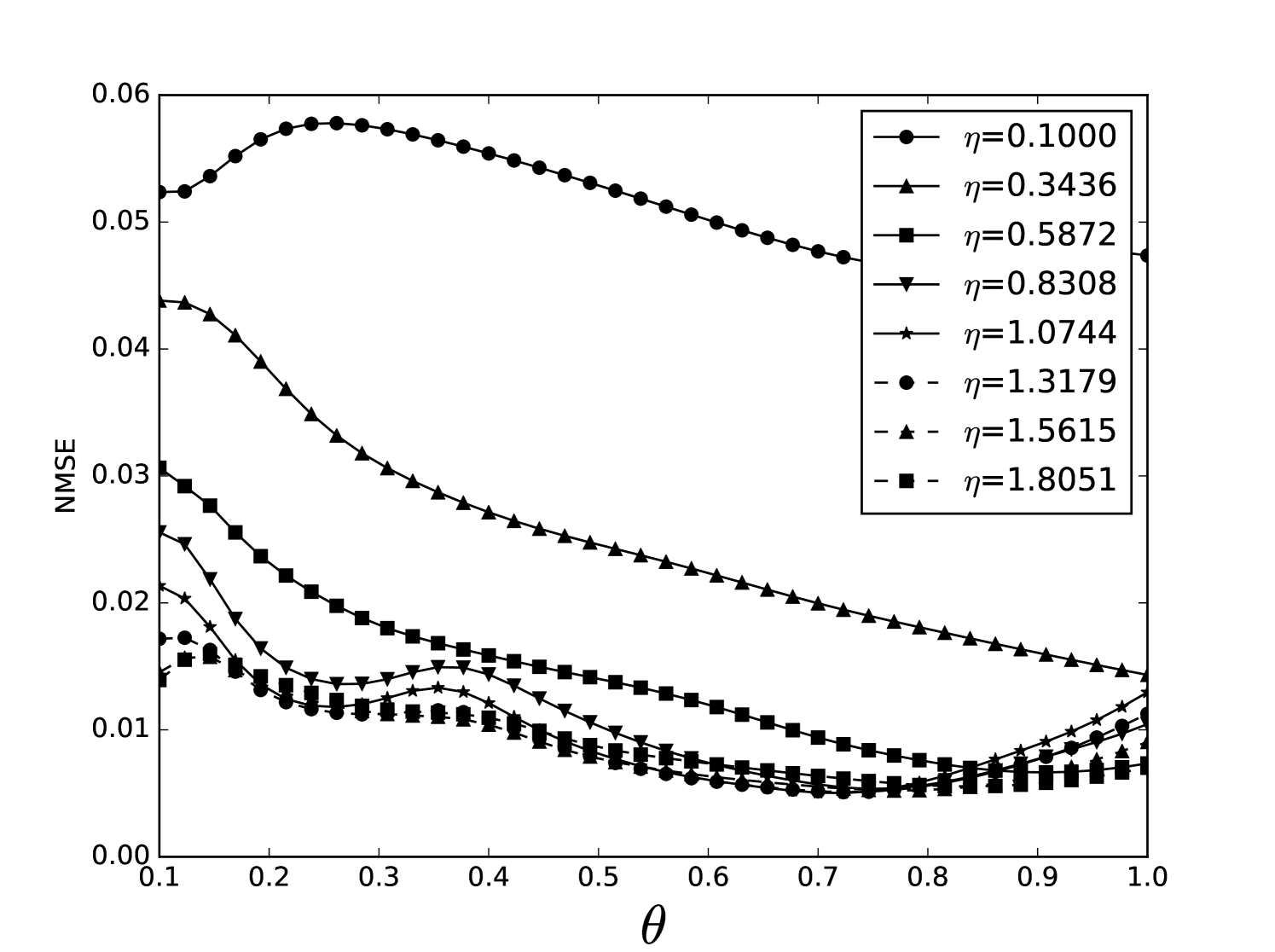

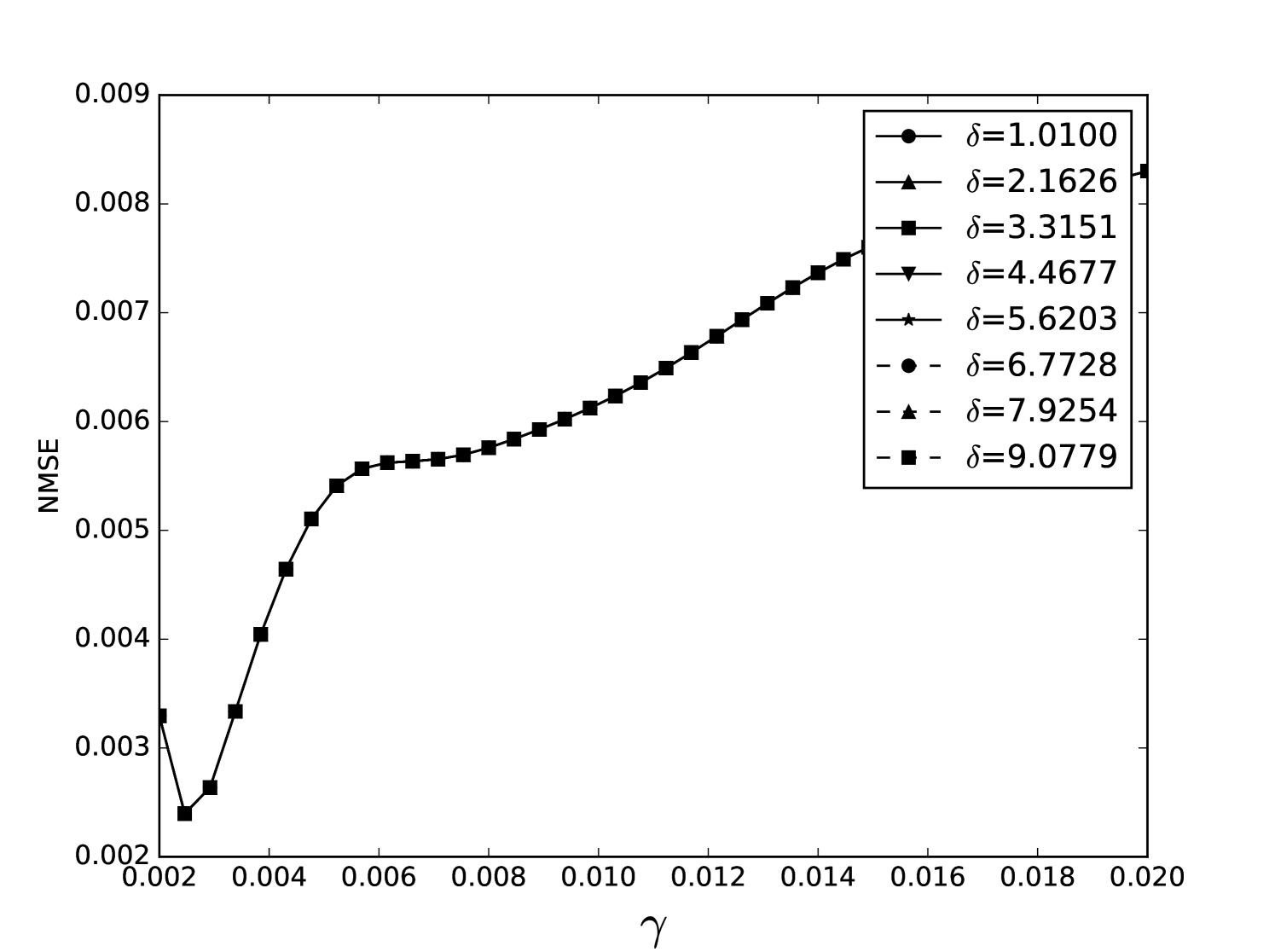

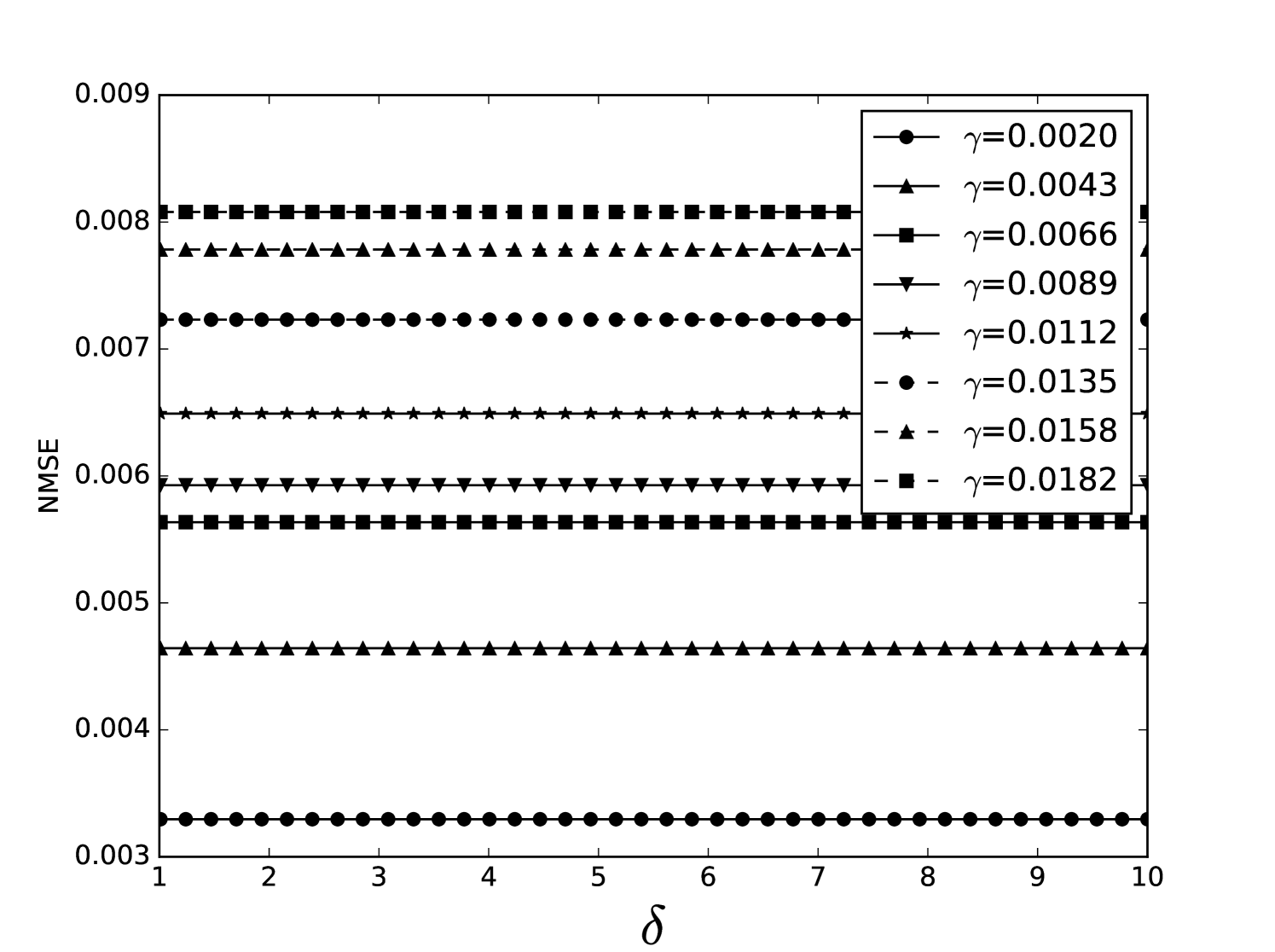

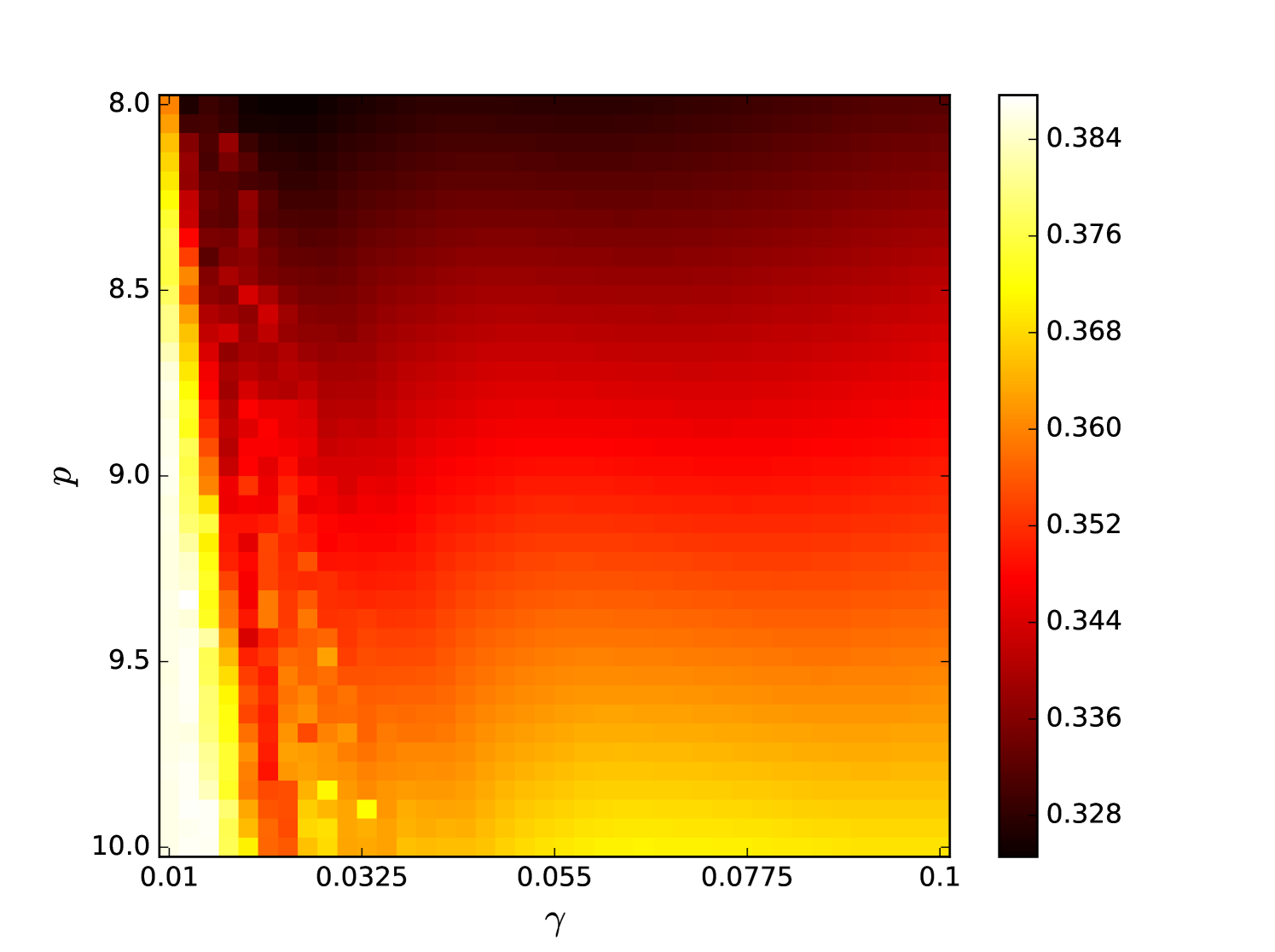

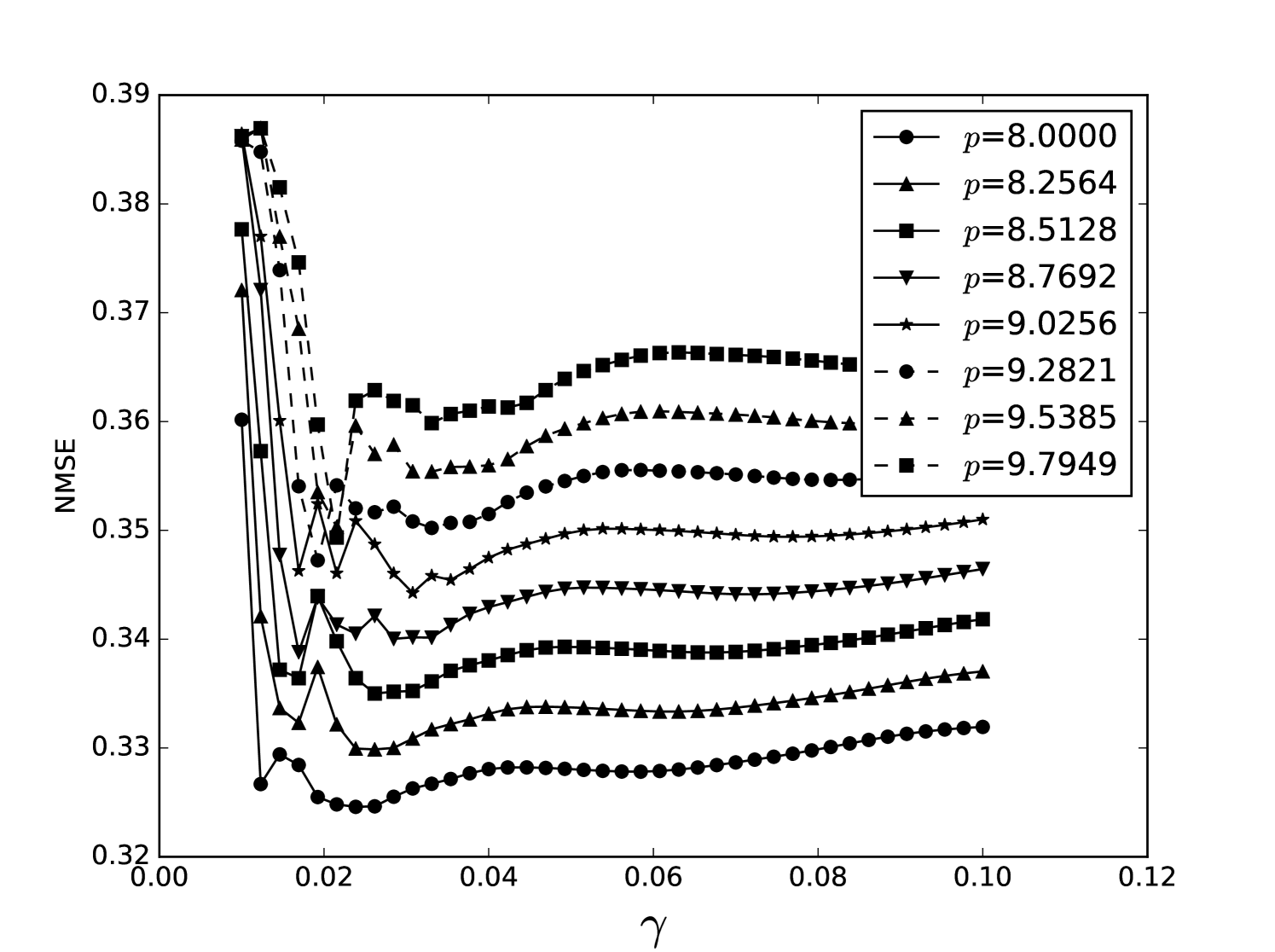

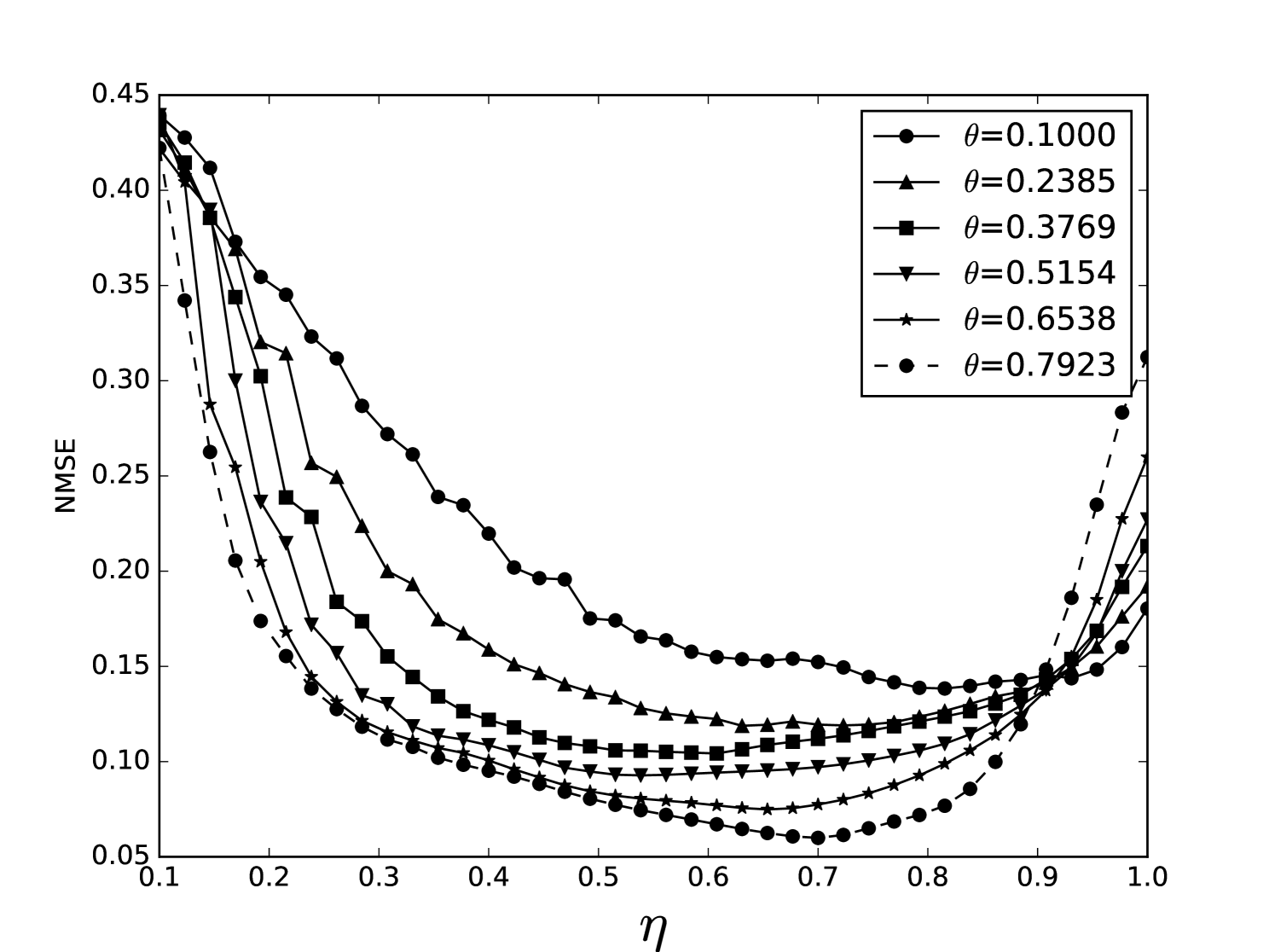

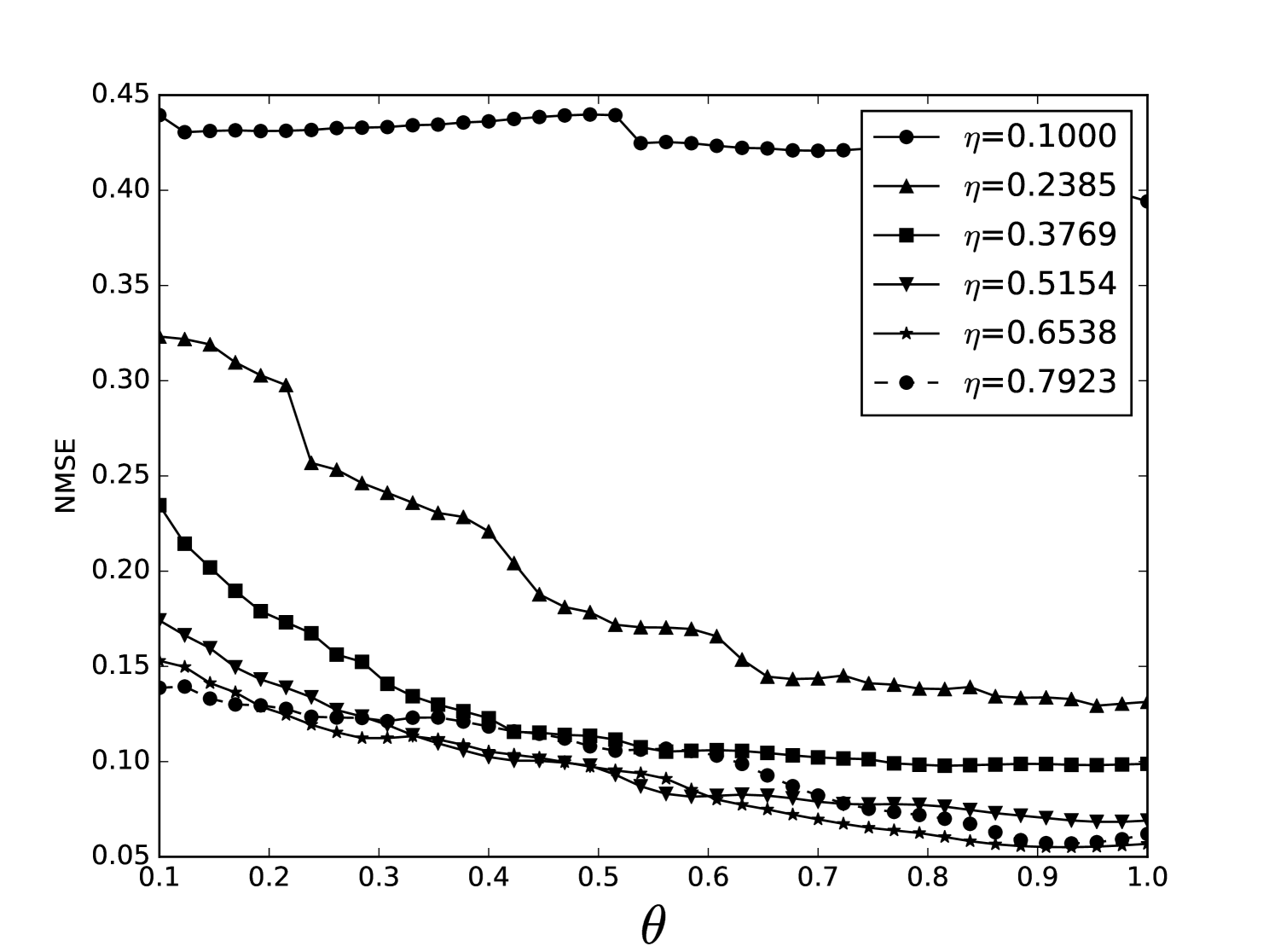

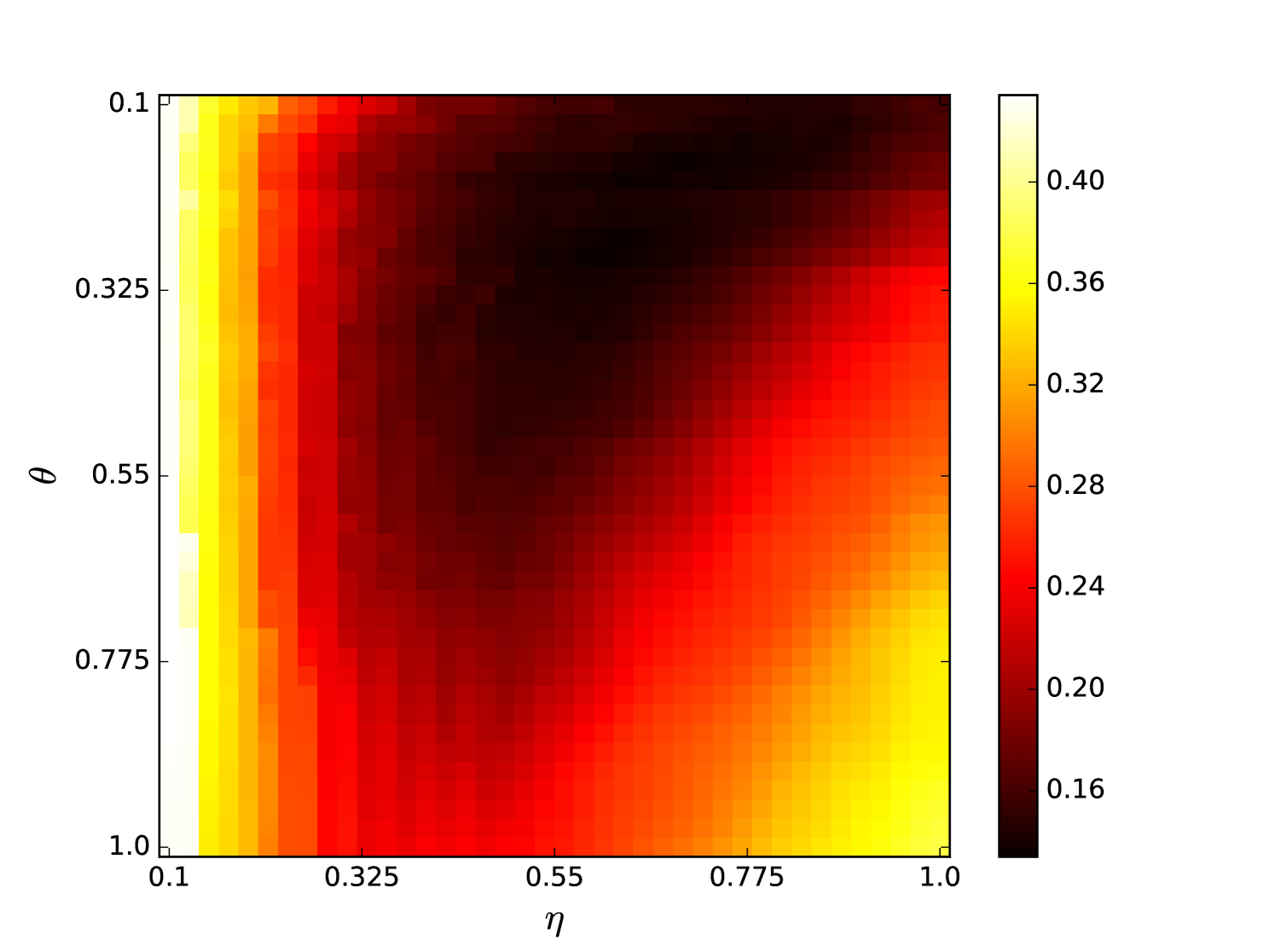

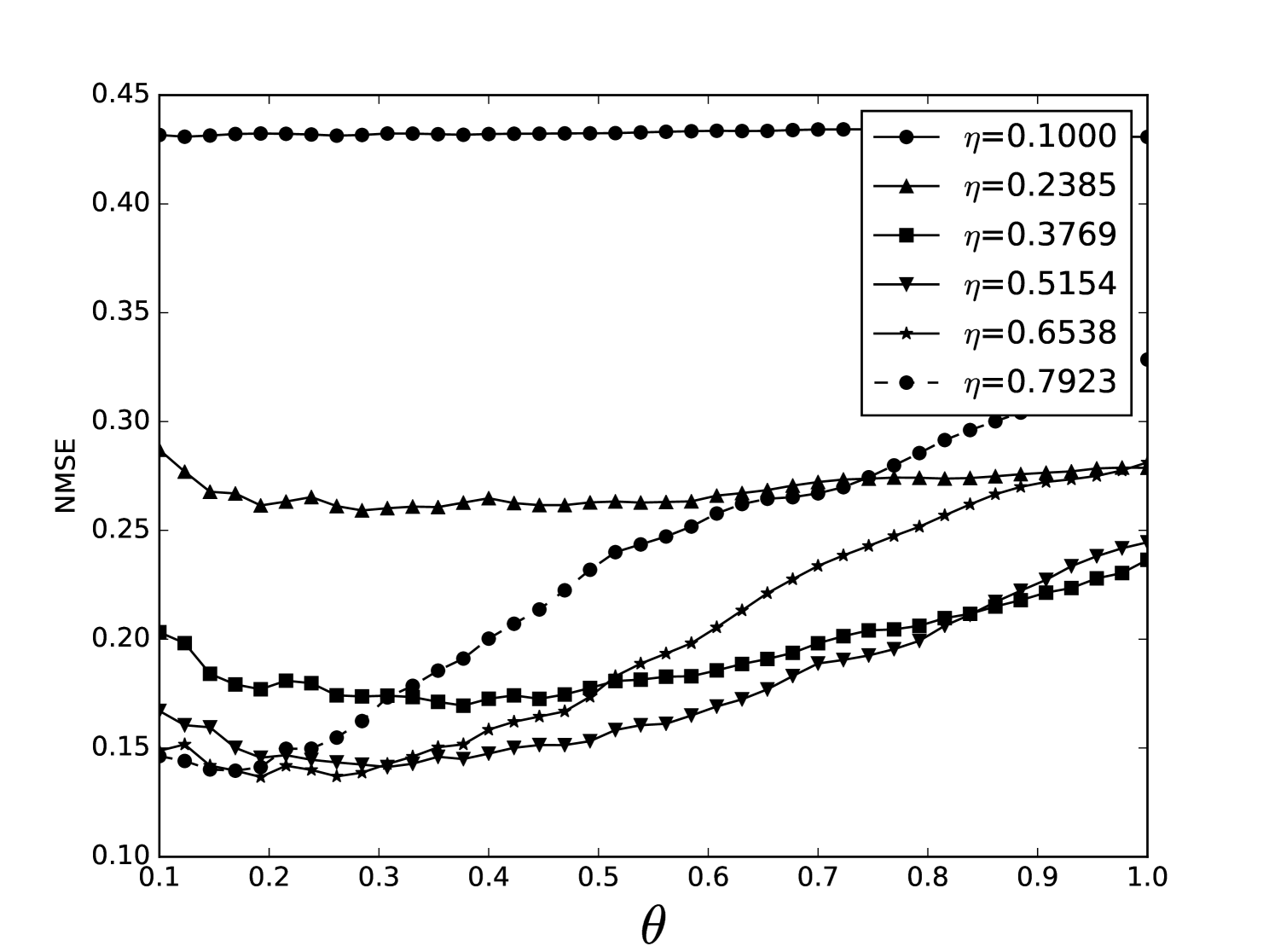

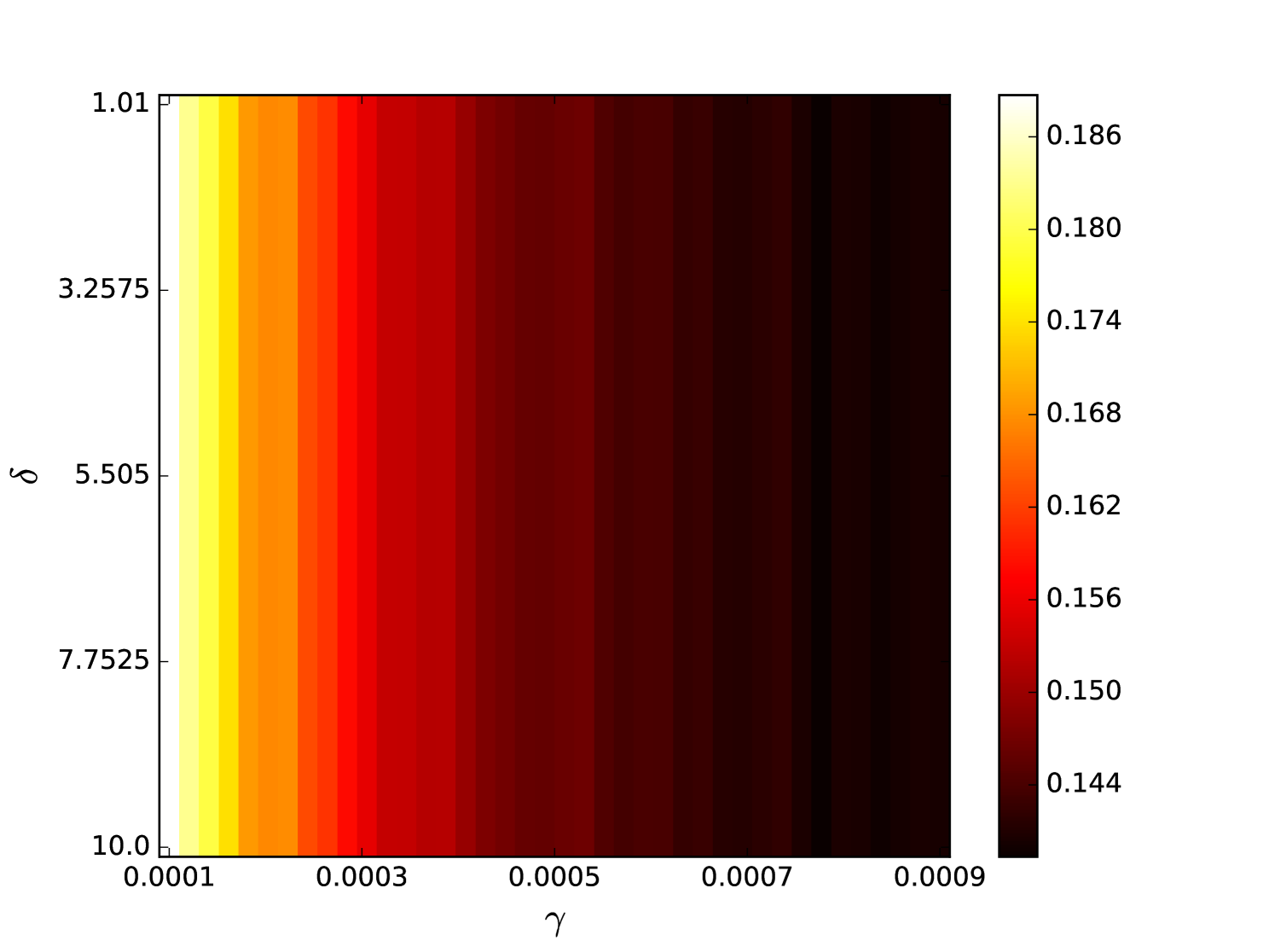

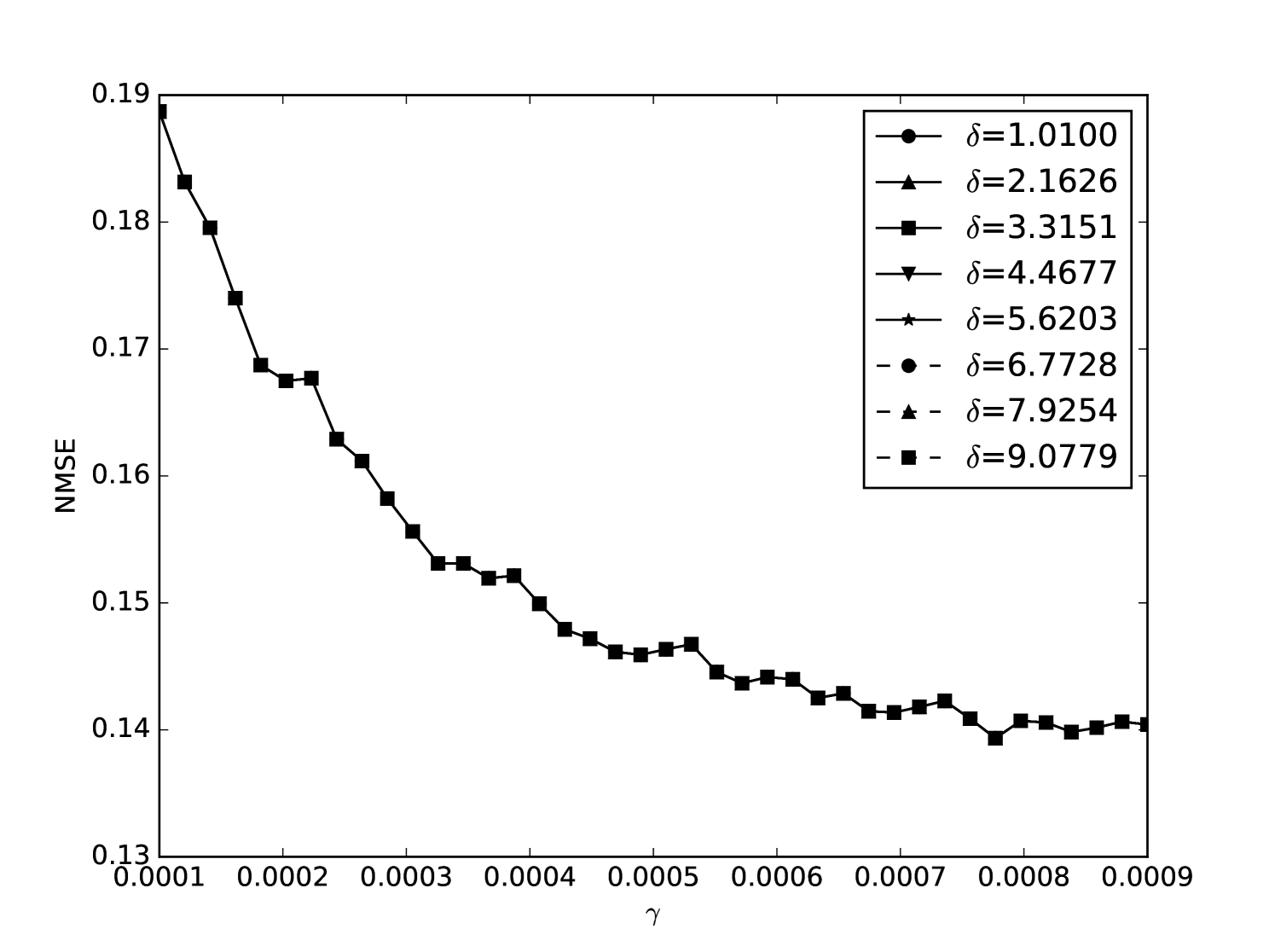

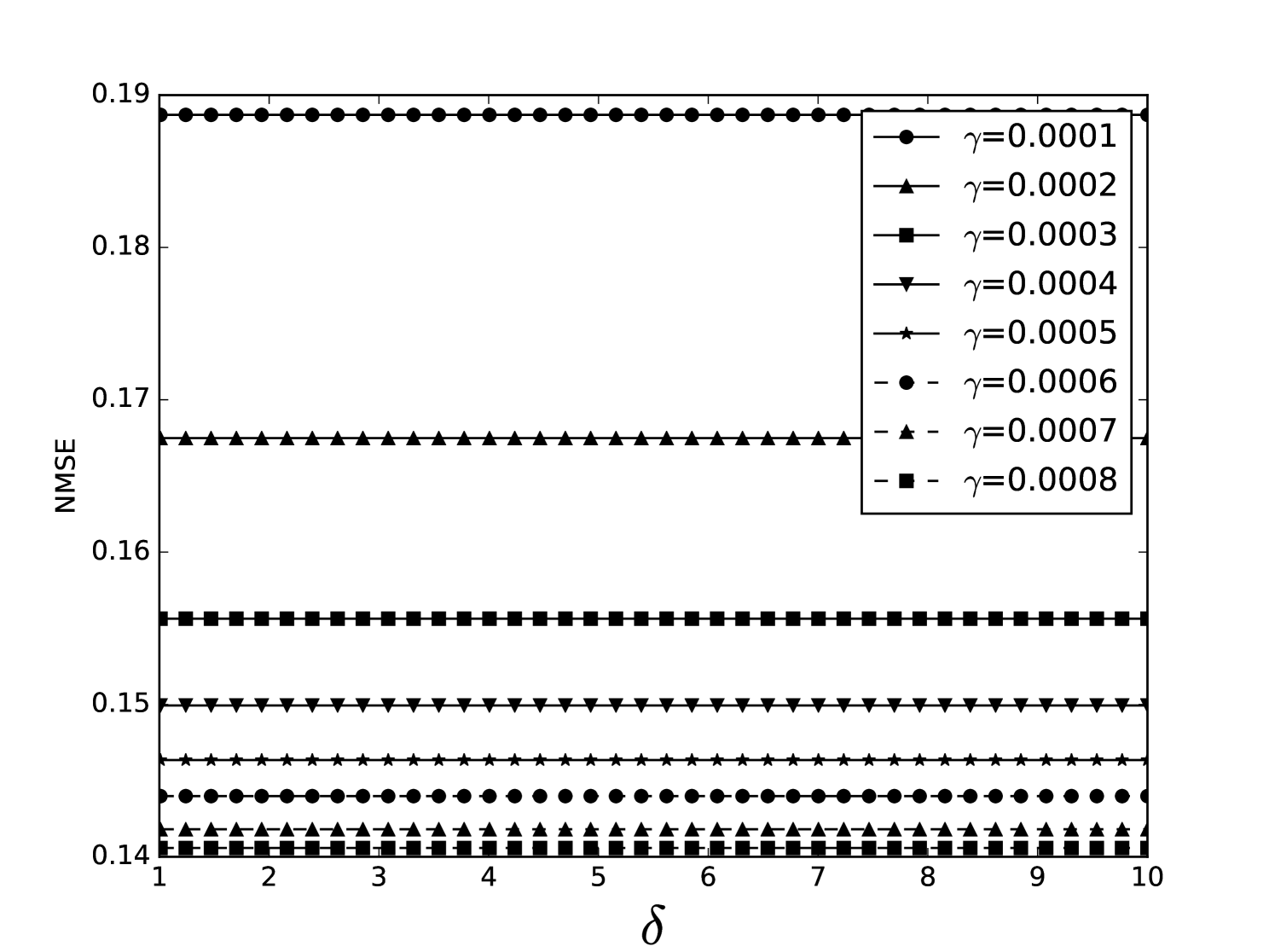

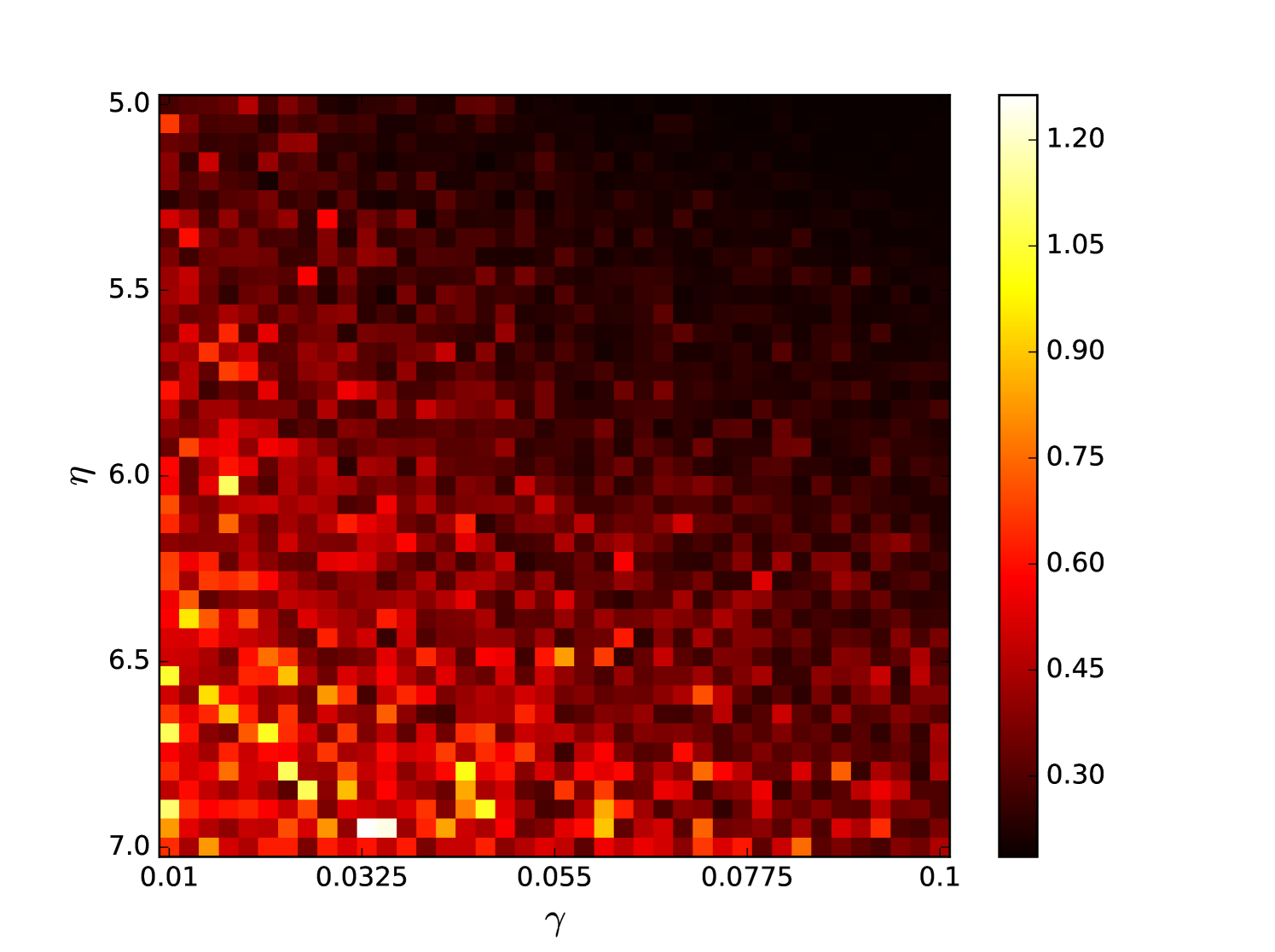

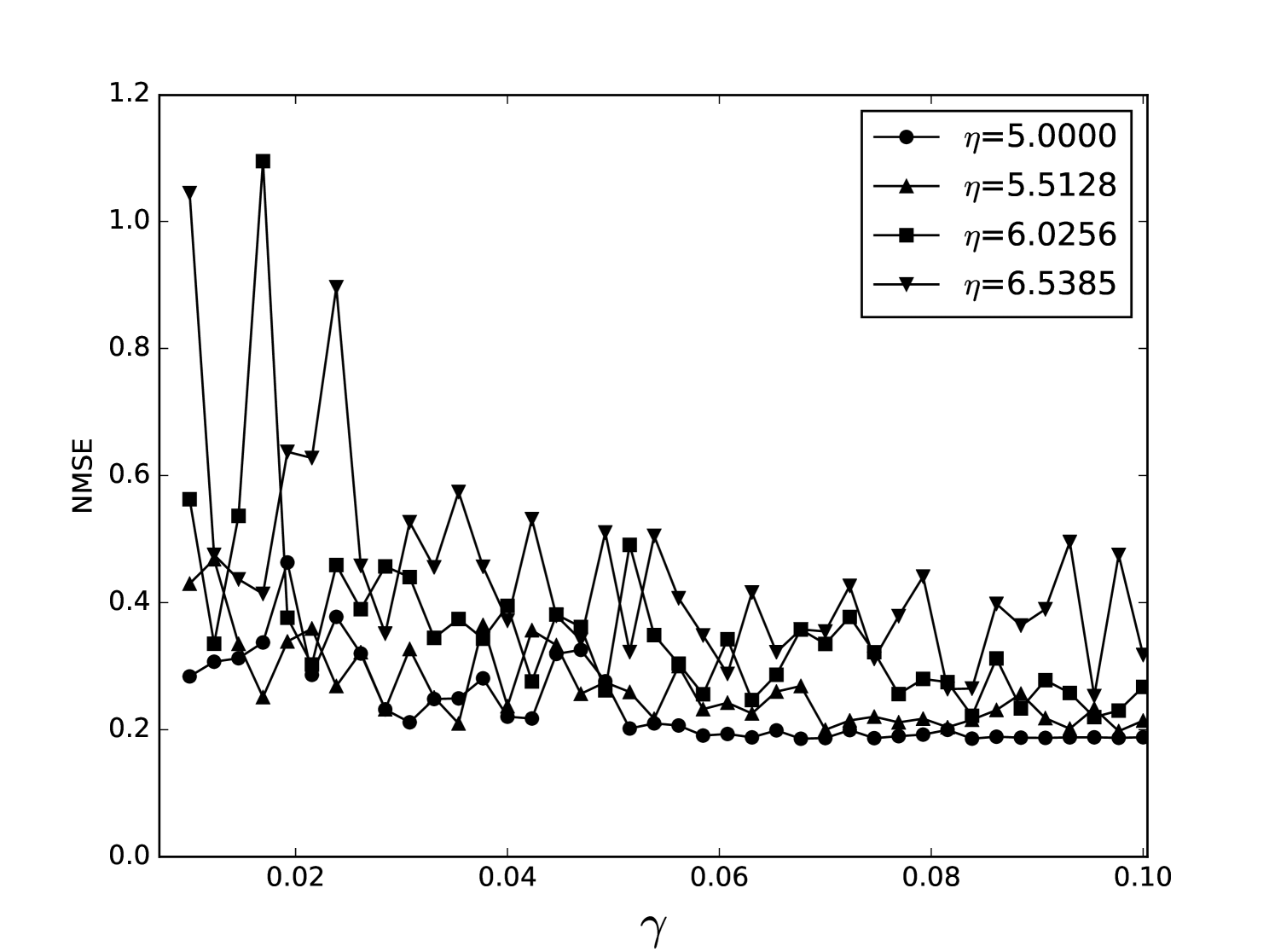

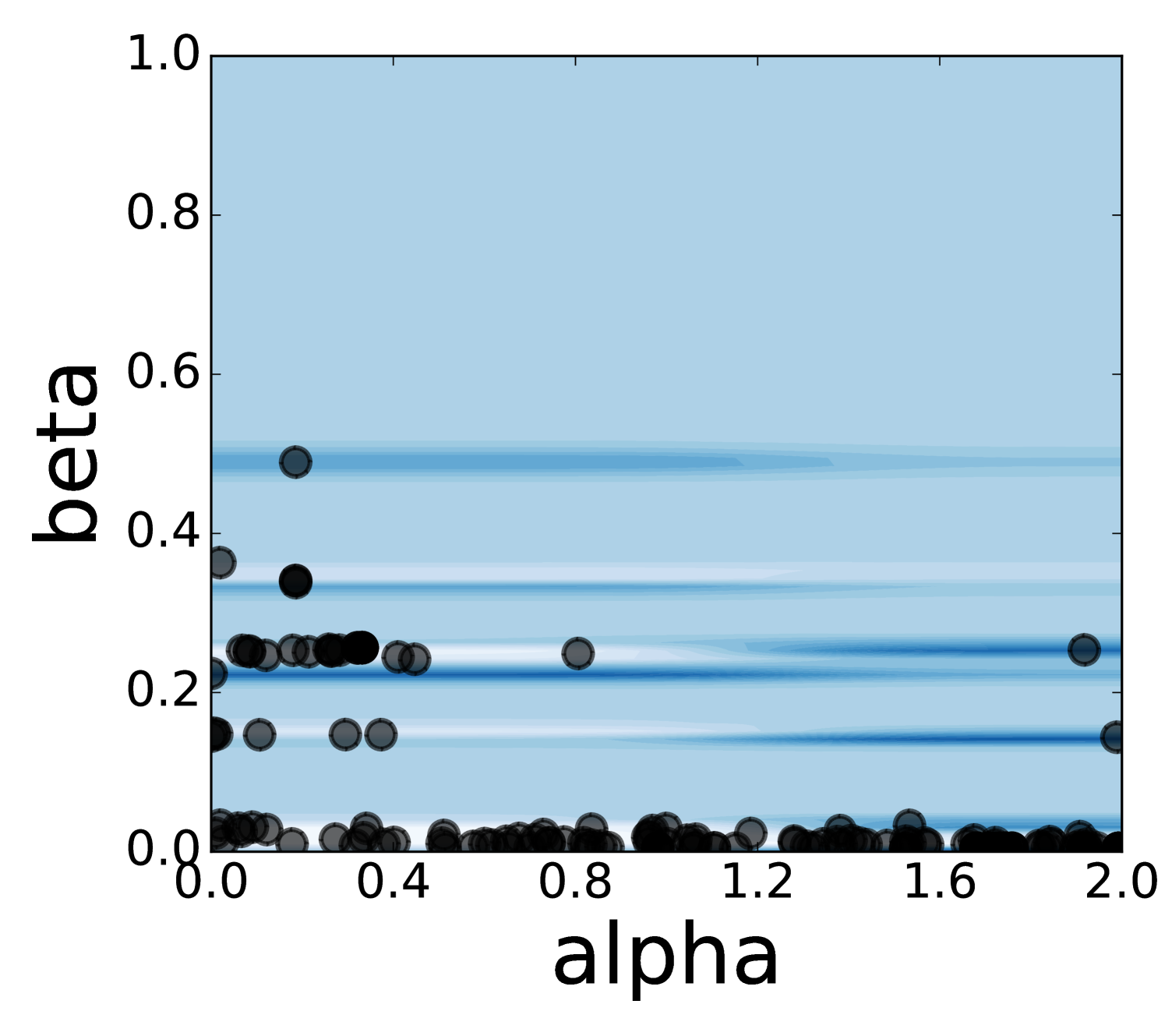

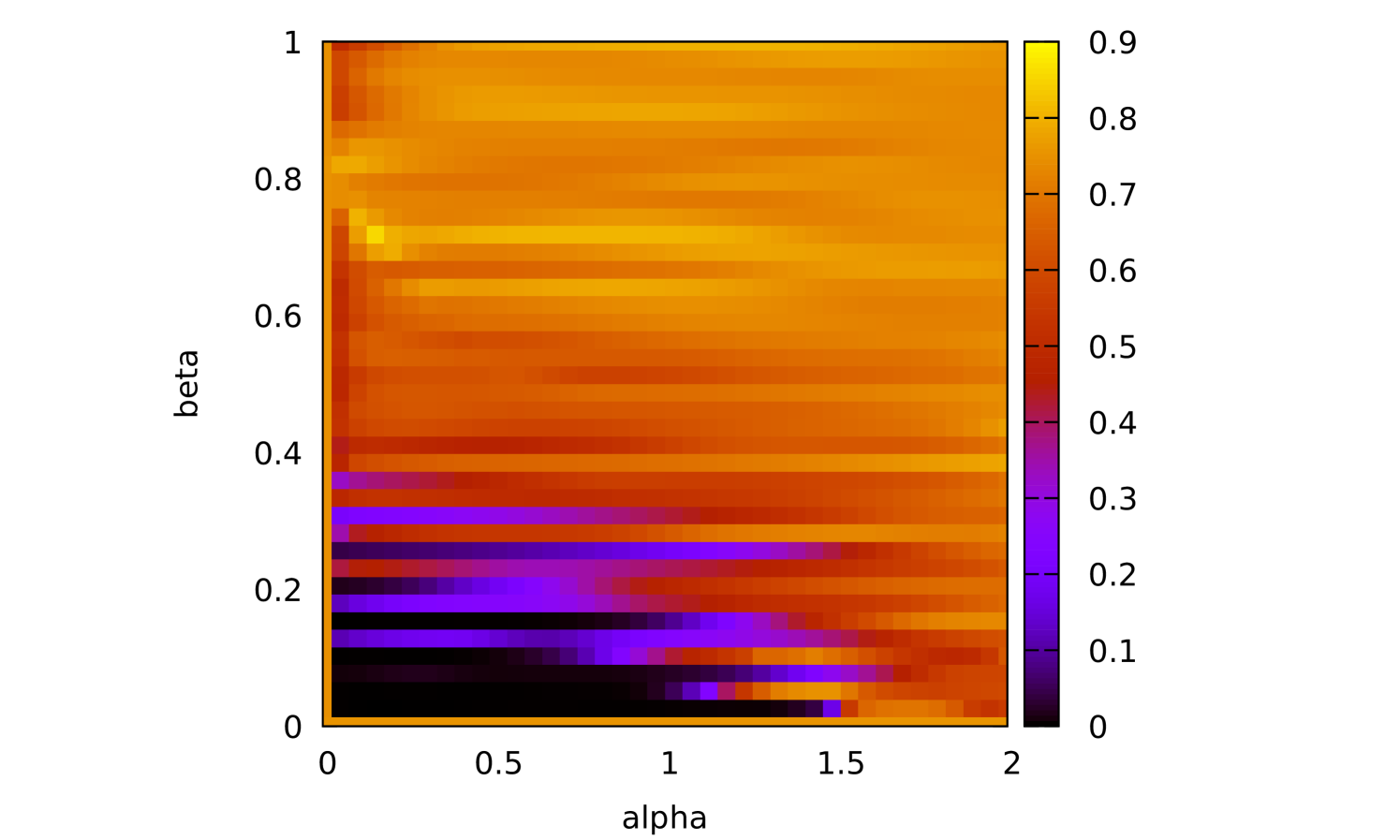

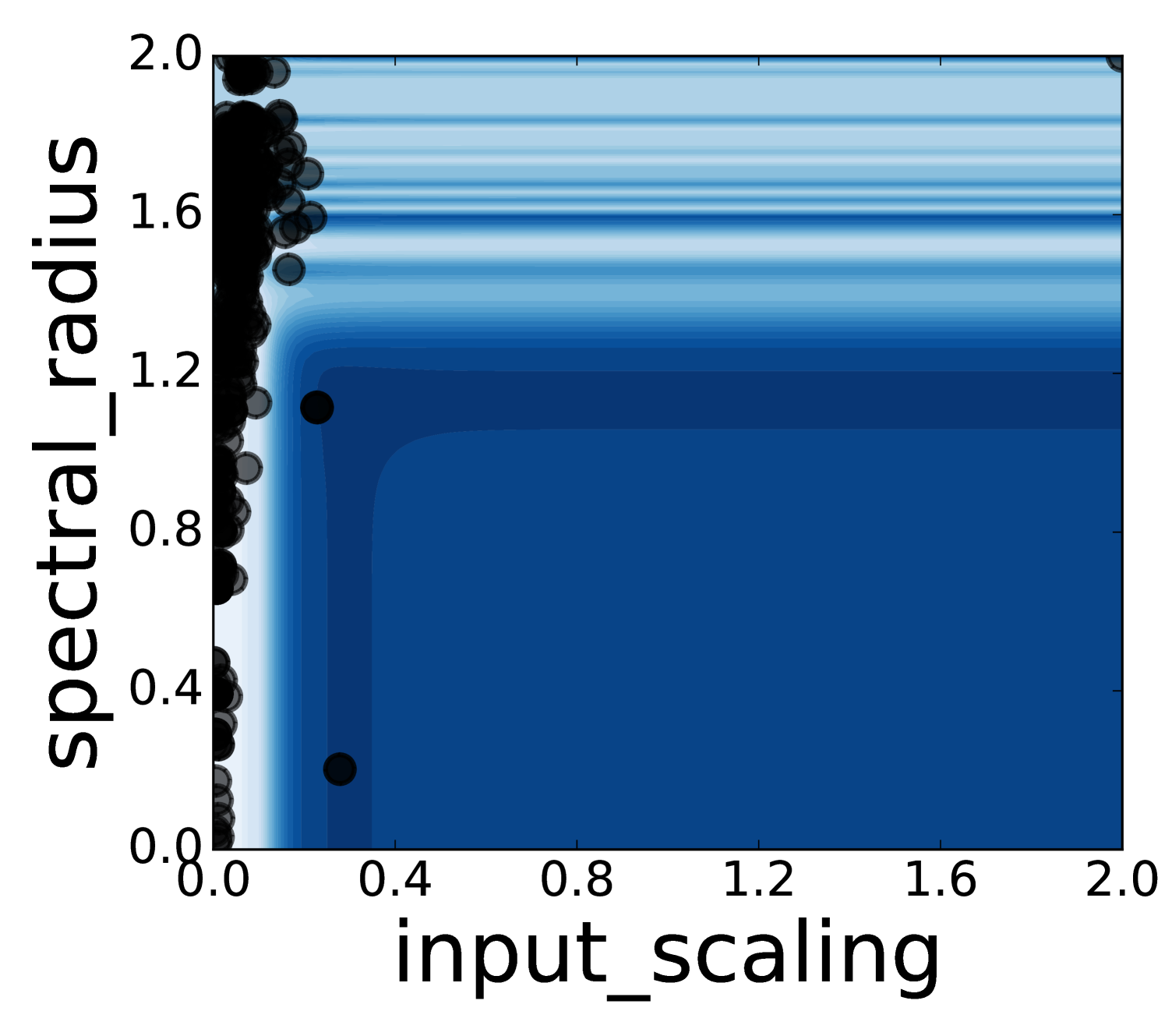

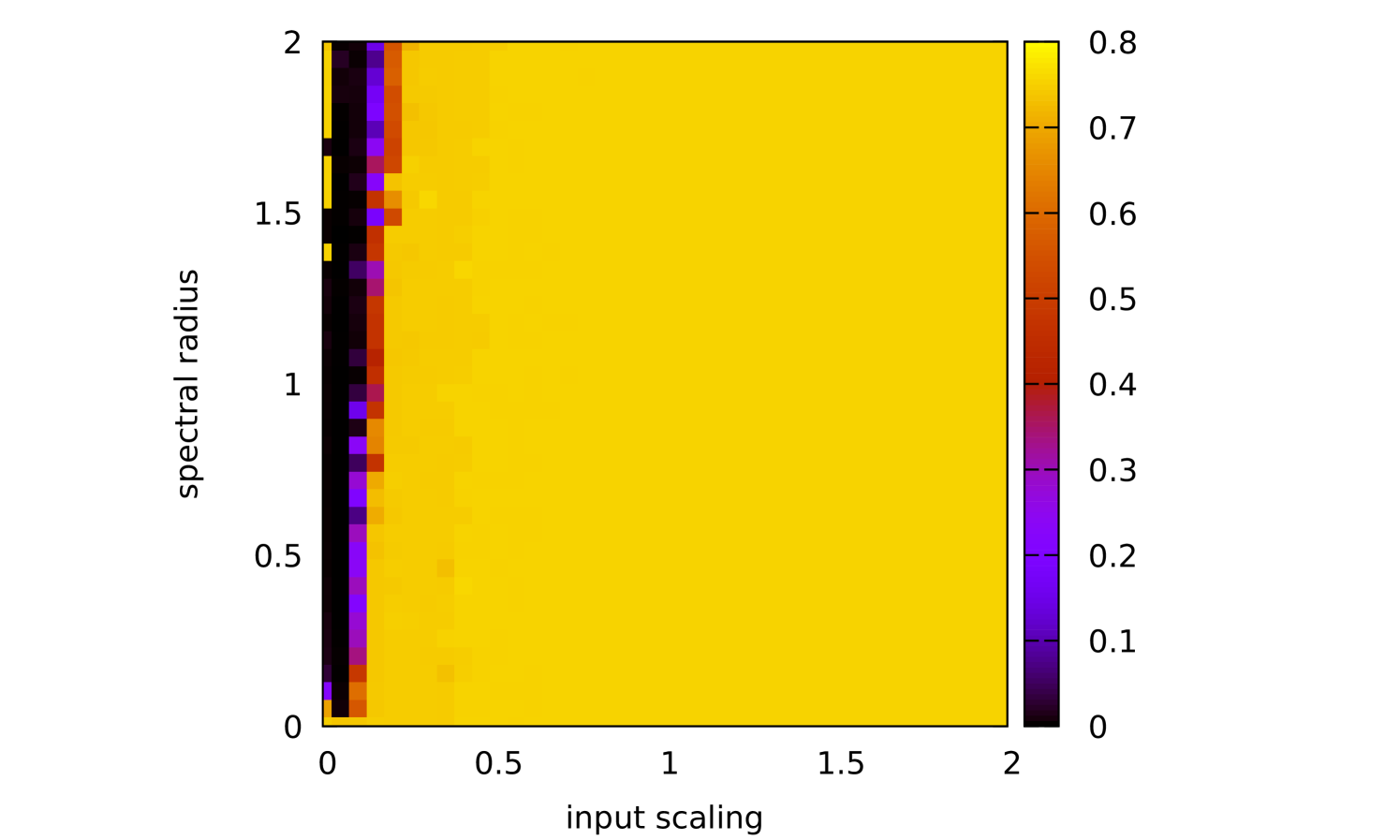

Some error surfaces are shown in B. One sees that the error surface is smooth around the optimum parameter values for both OSI and MSI. The error surfaces of the OSI and MSI differ significantly. The error surface is non-stationary. For example, the variation in the direction is low near the optimum, Figure 10, while it is high away from the optimum, Figure 12. A difference in spatial variation between different parameters can be seen in Figure 11, where exhibits a slow variation and a faster variation. This difference in variation is taken care of by Spearmint, as discussed in Section 3. Note that has little to no influence on the performance. Given the smoothness of the error surface, we expect good convergence behaviour from the GP, as is witnessed in the results.

| OSI | MSI | Appeltant [50] | |

|---|---|---|---|

| NMSE | 0.0047 | 0.0027 | 0.02 |

| 1 | 1 | 1 | |

| 0.018 | 0.0025 | 0.001 | |

| 1.26 | 2 | 0.4 | |

| 0.77 | 0.44 | 0.2 | |

| 1.01 | 2.42 | ? | |

| -6.58 | -12.32 | ? |

We now compare our optimal parameters to those from reference [50]. One should keep in mind that the integration schemes are slightly different from the implementation in [50], even though Euler integration is also used there. Our programs are written in Python, while Matlab is used in [50]. For reference, our MSI method with Appeltant’s parameters gives NMSE = 0.028, which is similar to his result NMSE = 0.02 (note that we don’t know all the parameters of [50], some are educated guesses). All parameters differ at most one order of magnitude. It appears that higher values of and are useful, although this seems to be specific to the Santa Fe task. A more important conclusion is that the value of is suboptimal. Larger values allow for better performance in most considered benchmark tasks. This is important, because the choice has become popular in the literature [28, 12, 51, 52, 53, 30, 54, 55, 56, 57]. A larger causes a longer calculation time for the MSI. The OSI calculation time is independent of , and has the same order of magnitude improvement in NMSE.

In addition to the NDN, we studied an ESN to compare the performance of the Bayesian optimization algorithm to the results from [58], who use binary PSO to optimize the ESN architecture. They use samples for training, for validation and another for testing. The optimized parameters were the input scaling (which is a scaling parameter of in Eq. (1)), spectral radius and the ridge regression parameter . The number of nodes in the network was set to . The validation error was averaged over 10 independent realizations of the ESN. Using Bayesian optimization on these parameters we obtained a NMSE of , which should be compared to in [58] for their standard ESN, and after applying their optimization algorithm. This is a significant improvement compared to their results. During testing an irregularity in the data set was found, on which we elaborate in A. In the partitioning we study, the irregularity is part of the validation set. It should be noted that the irregularity may have driven the optimization to a hyper-parameter set that minimizes the error caused by the irregularity, which isn’t necessarily the optimal set for prediction on the test set, as no such deviations occur there. In order to check the performance of the ESN on the Santa Fe laser task without the complications of the irregularity, we also performed some tests using the Appeltant division of the data set, as was used to test the performance of the delay node algorithm. The resulting NMSE was . Even though the error is approximately the same as for the other data set division, the result is better since only 1000 points instead of 6400 points are used for training.

6.2 NARMA 10

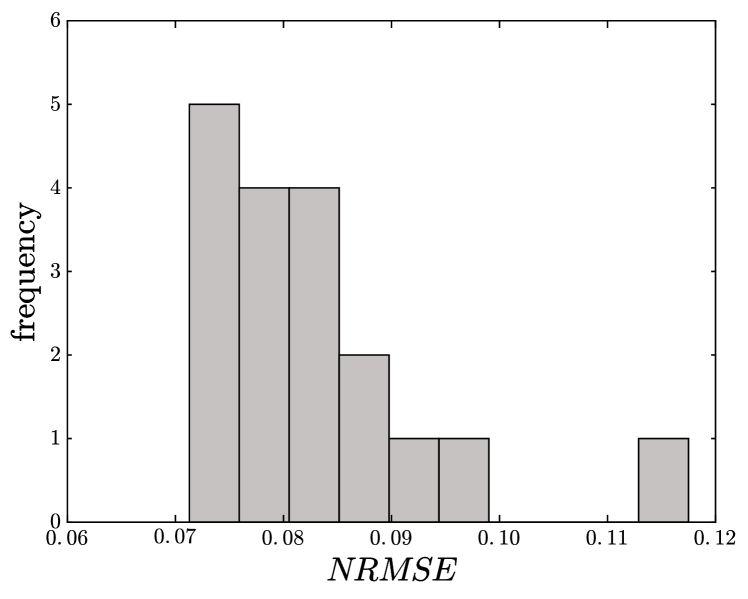

We used the same methods for the NARMA 10 time series. We train and validate on two fixed time series. The test error shows quite some variance, so we average the test error over 15 randomly generated time series of 2000 points each. We found an NRMSE for the OSI scheme, which compares favorably to NRMSE of Appeltant [50]. The histogram of test errors is shown in Figure 5. Most values lie around the average, but there are some outliers with higher NRMSE. We don’t know if such an average was taken in [50]. The MSI scheme gives NRMSE , which is worse than the result from [50]. Note that for the optimal MSI scheme (compared to for OSI). The MSI scheme for NARMA 10 was used in [50] to arrive at the choice of .

Because NARMA 10 is deterministic given the ten previous input steps, the linear regression weights of the output are extremely large (a factor 100 larger than Santa Fe, which itself has large weights because it is deterministic). Large regression weights occur when overfitting, but in this case they give good results. The regularization parameter should be set to zero , i.e., no regularization is needed. This “overfitting” issue also leads to a strong sensitivity on noise in the implementation, making hardware implementations inherently difficult [50]. It is possible that rounding errors in the MSI scheme are the reason for the worse performance, which makes the calculation of reservoir states less stable.

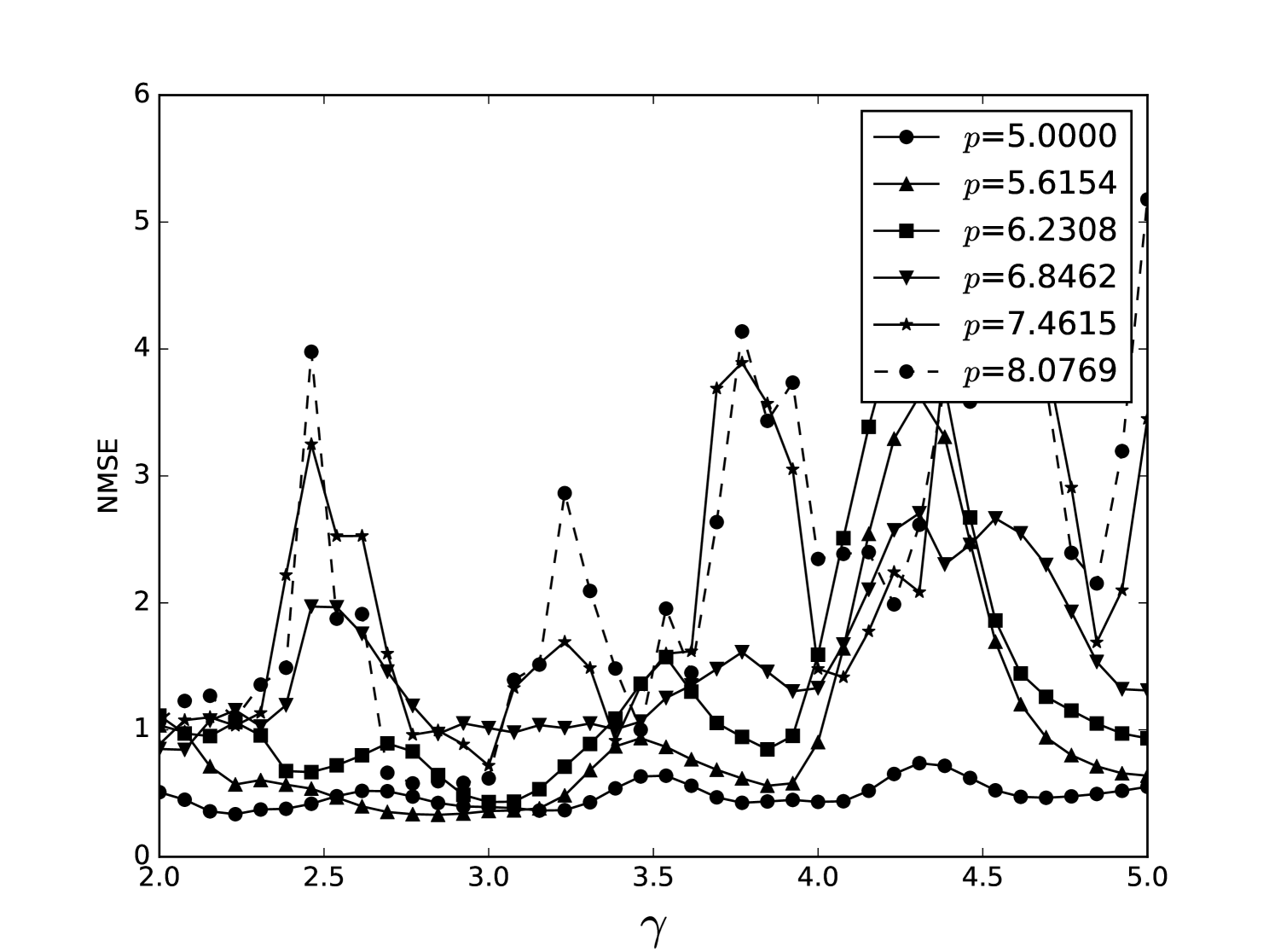

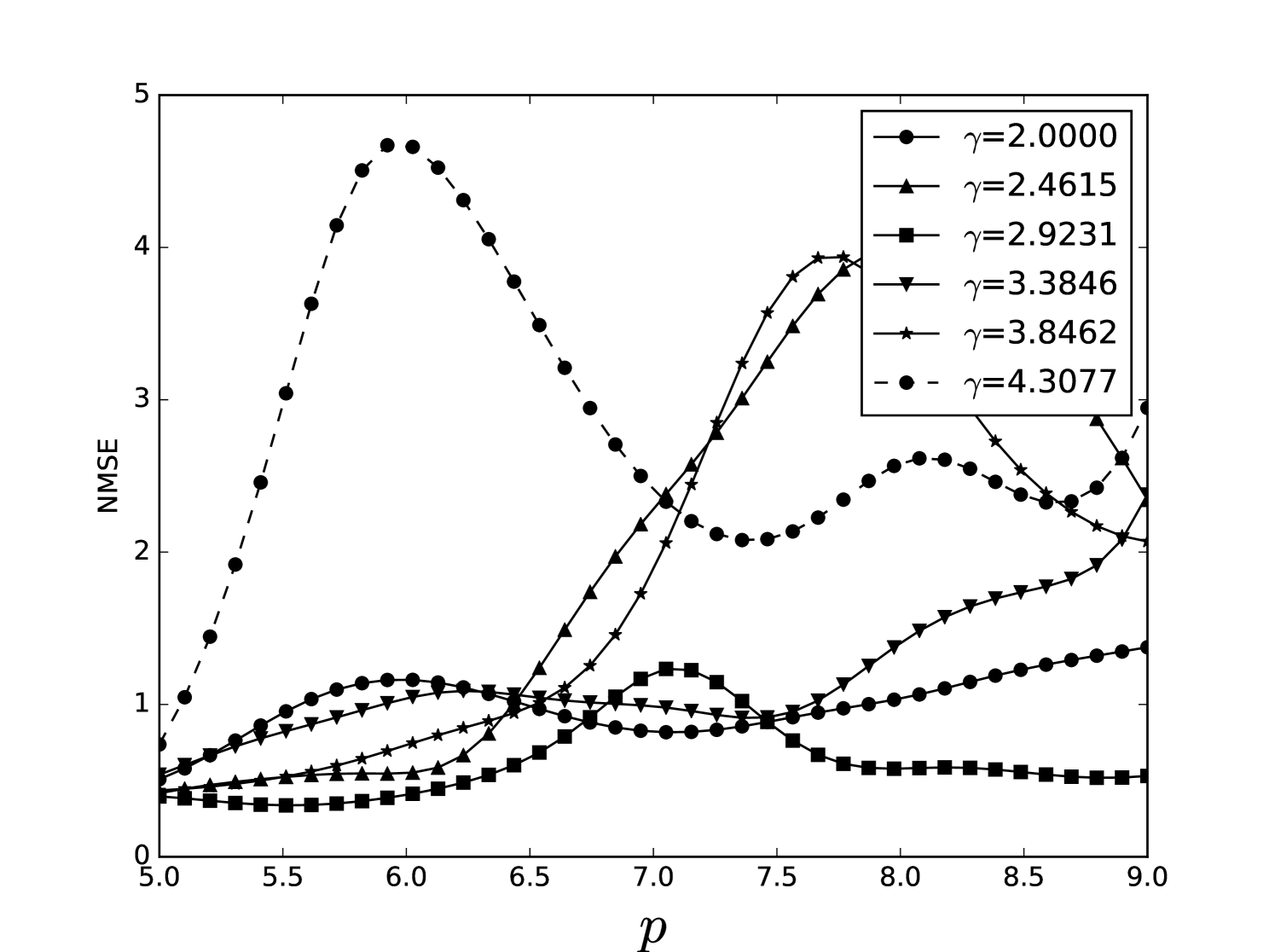

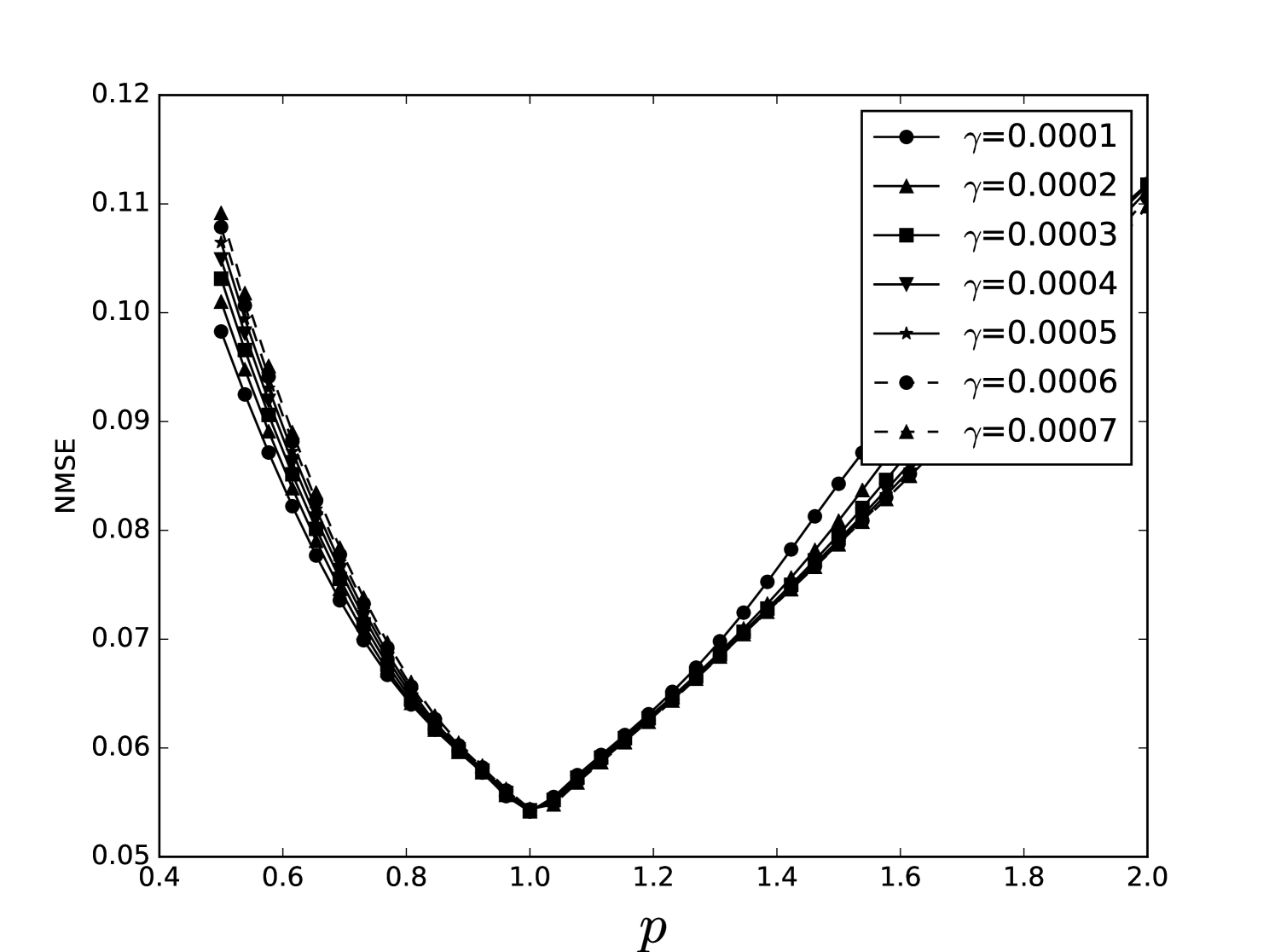

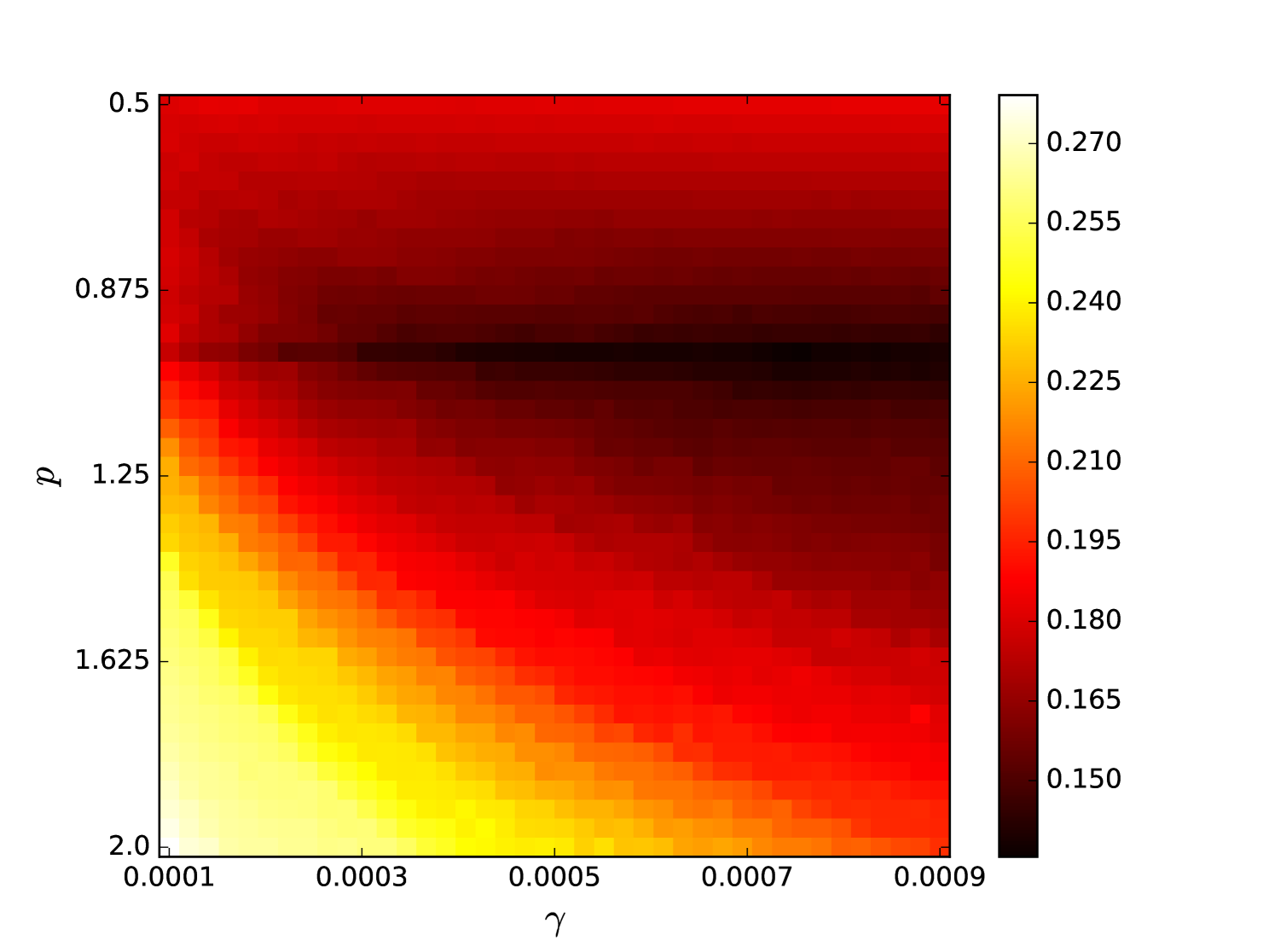

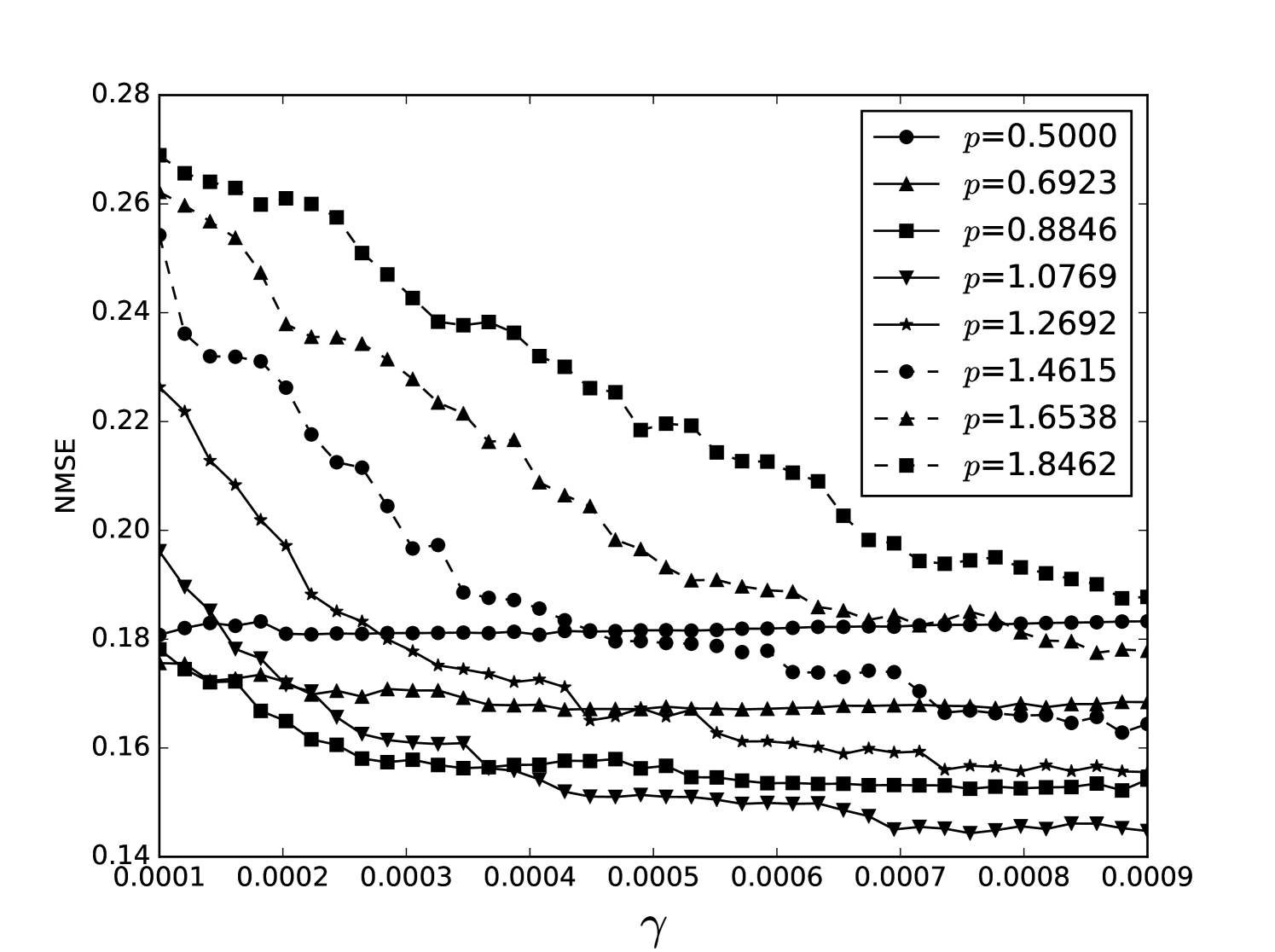

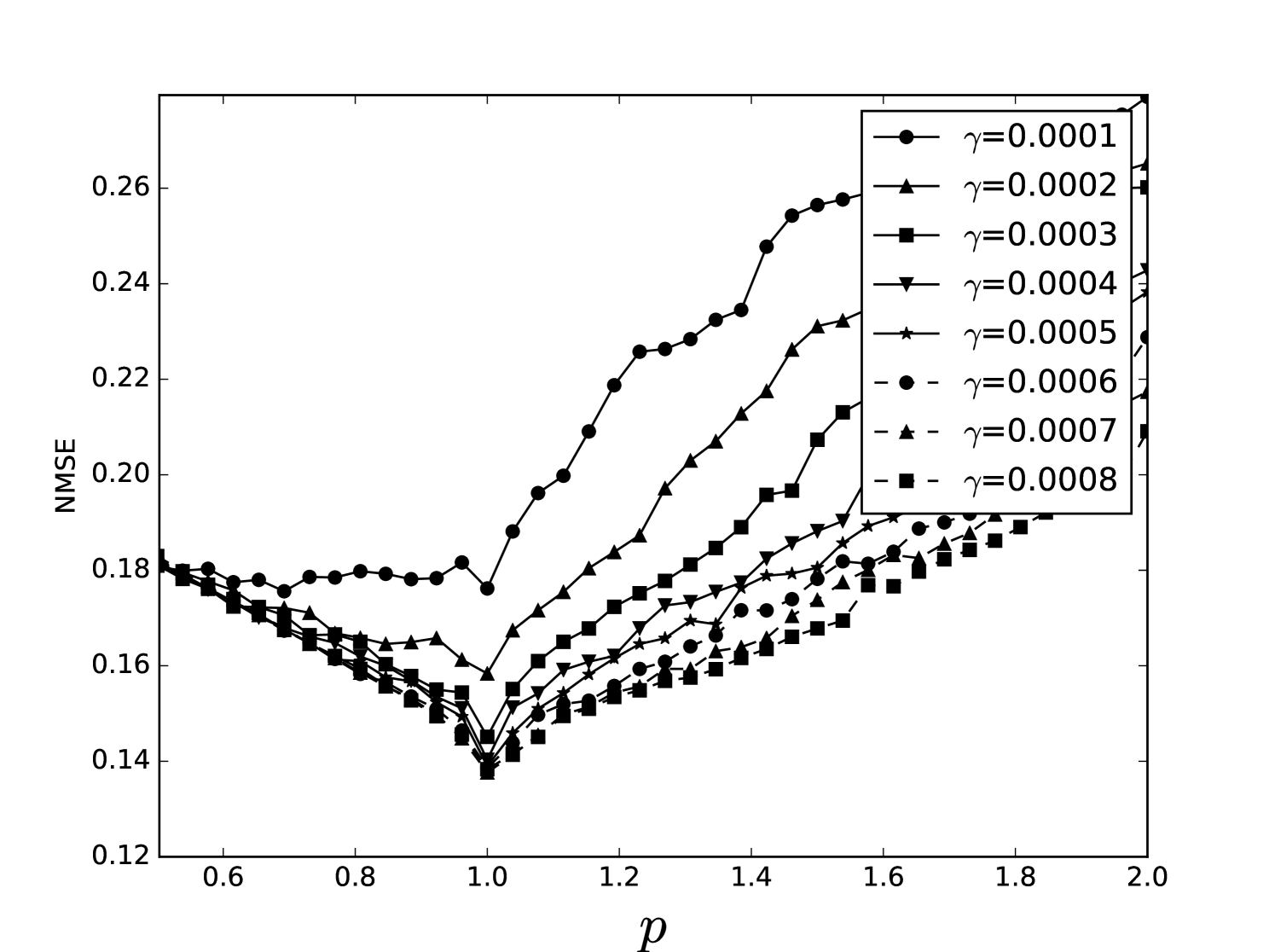

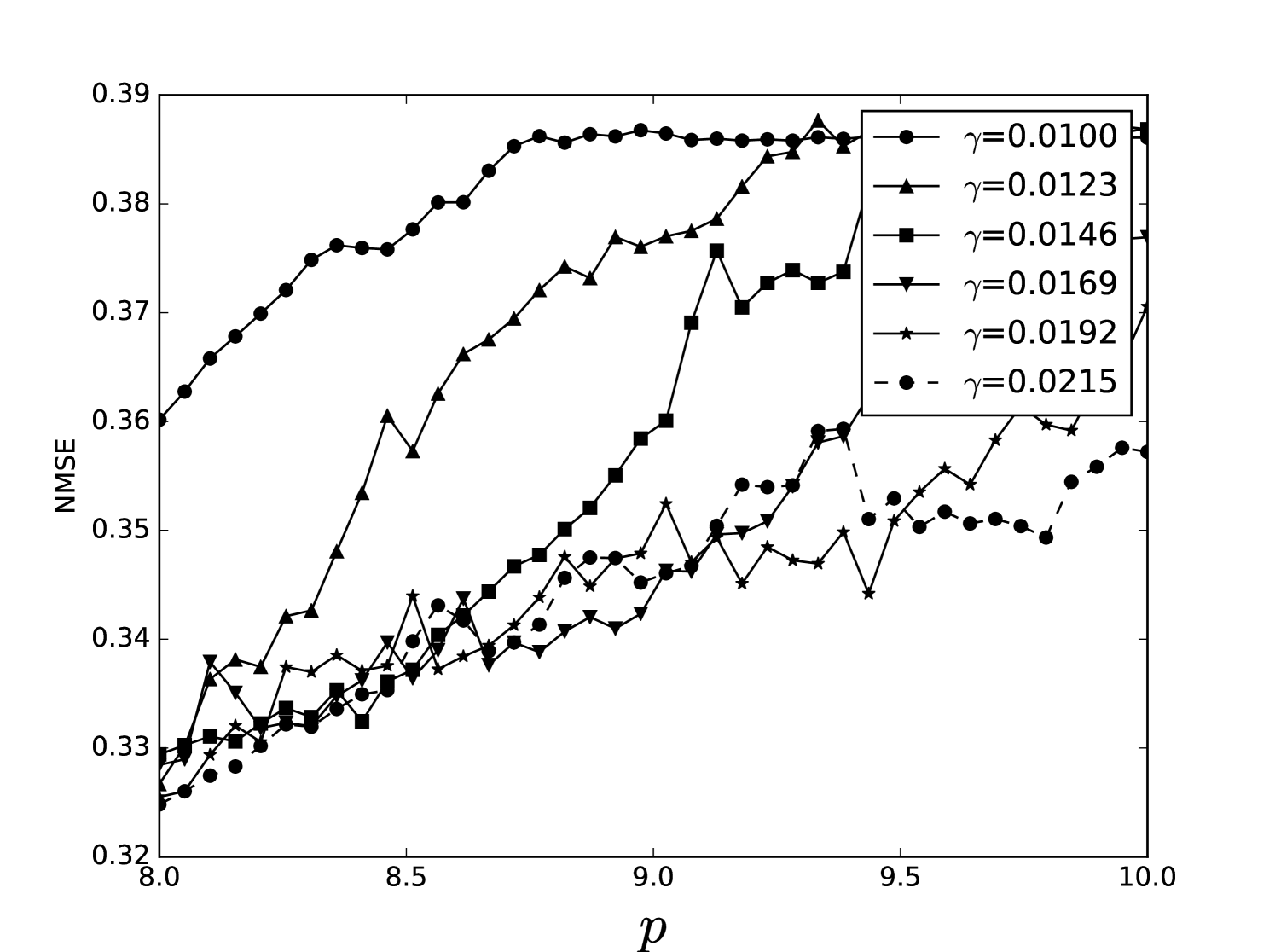

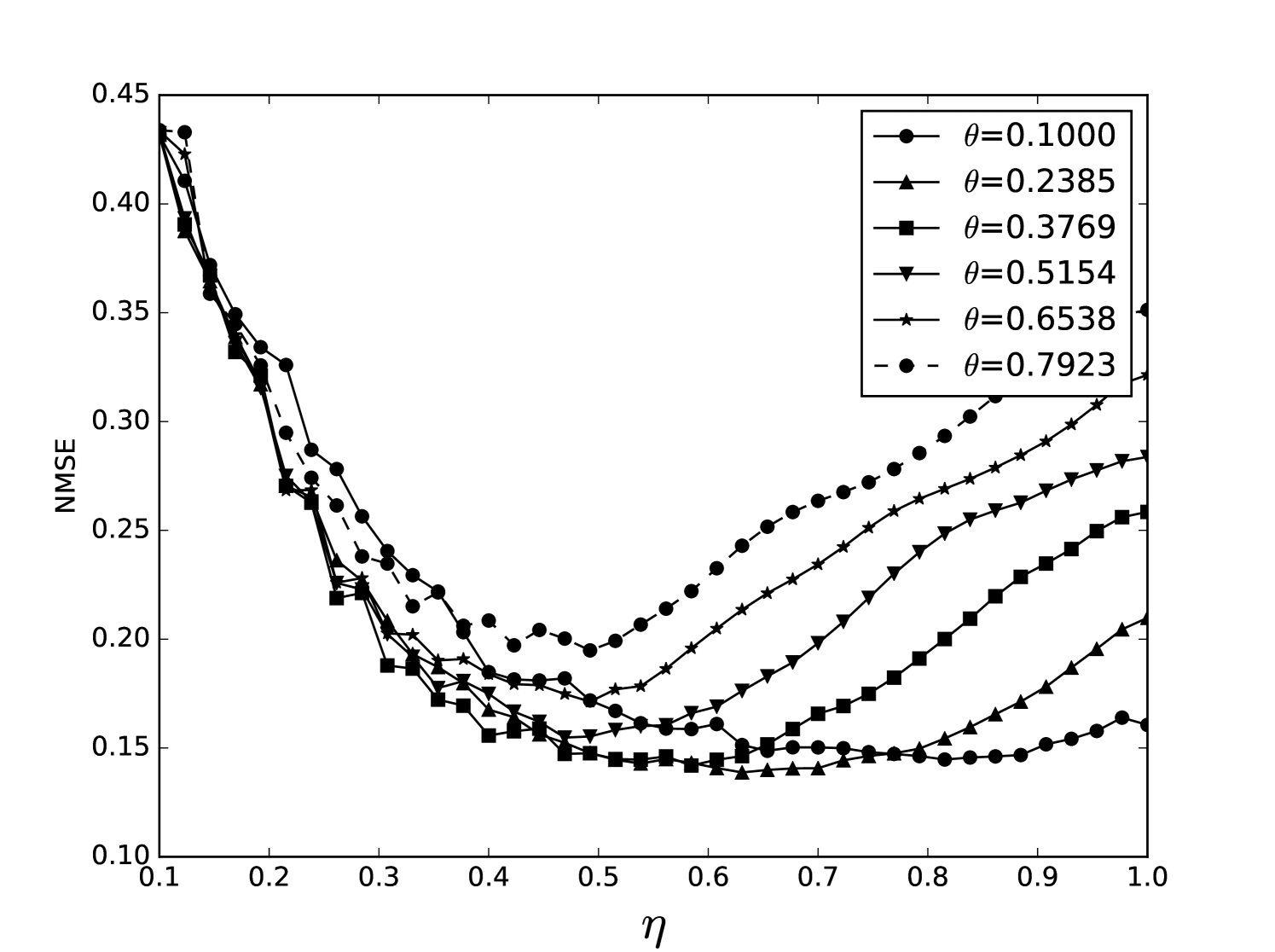

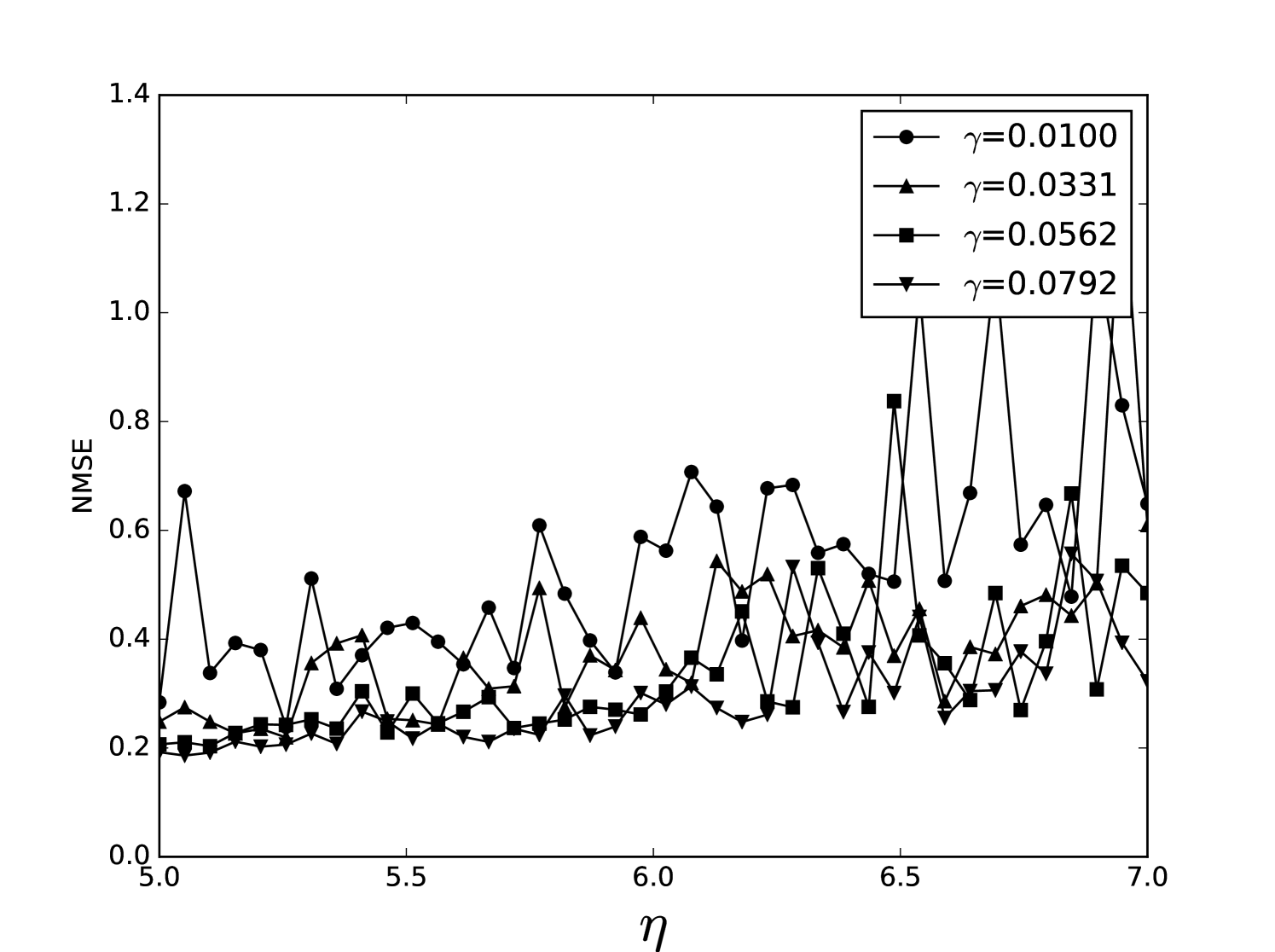

From the plots of the error surfaces in C one sees that there is a very low sensitivity for . The error surface is less smooth than the Santa Fe error surface, but still rather smooth. While the MSI scheme is indeed noisier compared to the OSI scheme, the amount of noise seems small enough for Spearmint to handle (this amount of noise was not problematic for the demo runs, see Section 7). The fact that the MSI error surface is noisier corroborates our idea that the worse performance of the MSI scheme is because of rounding error in the integration scheme. Away from the optimal parameter values, the error surface can be very noisy, cf. Figure 21. We finally note that the error surfaces are non-stationary here as well.

| one-step | multi-step | Appeltant [50] | |

| NRMSE | 0.08 | 0.146 | 0.12 |

| 1.01 | 1.04 | 1 | |

| 0.0005 | 0.0013 | 0.005 | |

| 0.738 | 0.753 | 0.4 | |

| 0.908 | 0.19 | 0.2 | |

| 1.05 | 3.812 | ? | |

| 0 | 0 | ? |

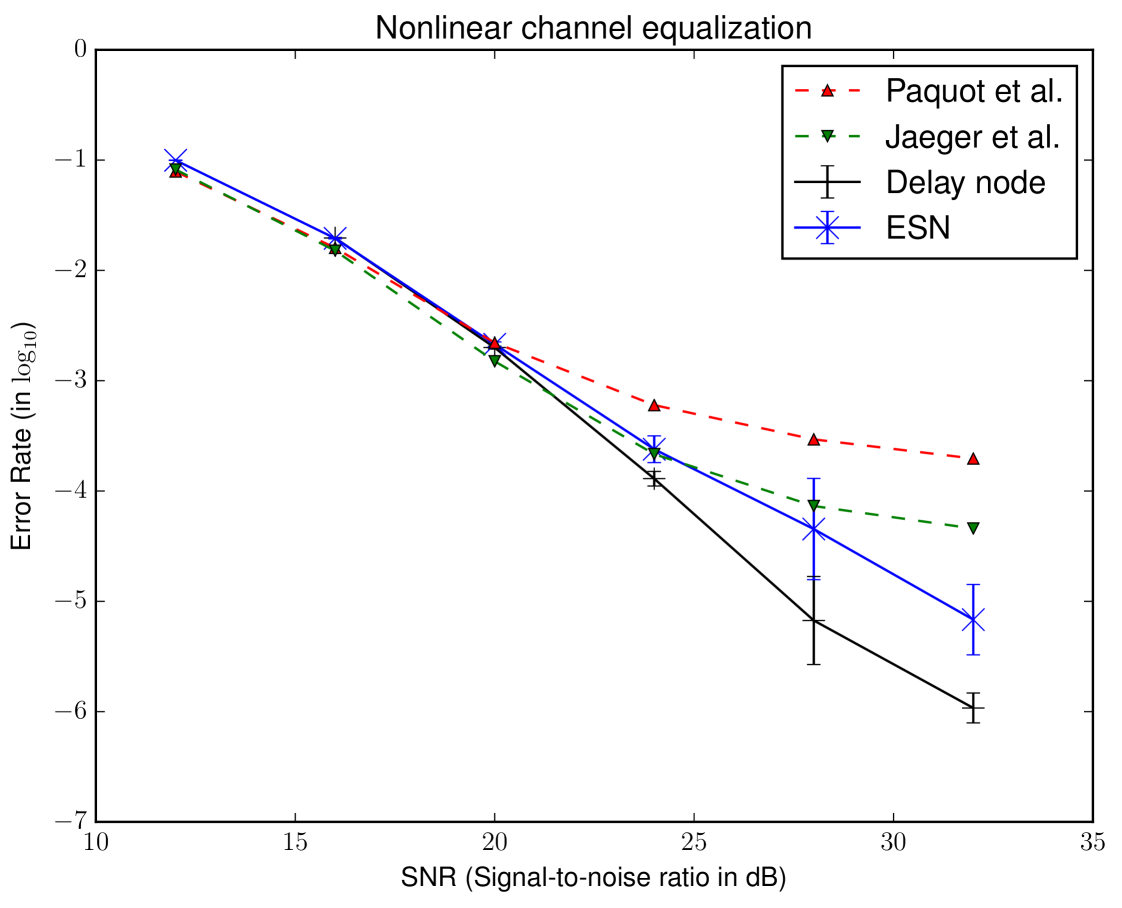

6.3 Nonlinear channel equalization

We now assess the performance on the nonlinear channel equalization task (Section 4.3). In order to compare with the results found in [29] we use the delay node system with the sine nonlinearity, as described in Section 2.2.2. Following the setup in [29] we generate different realizations of the data set, for each of the SNRs (ranging from to dB). The delay node was trained using an initial washout of samples, followed by points of training, and was validated on validation points. This was done for each of the realizations of the data set, and the validation error was the average of the error on the individual realizations. To produce the output, ridge regression was used, after which the resulting values were binned to the values . The reservoir consists of nodes.

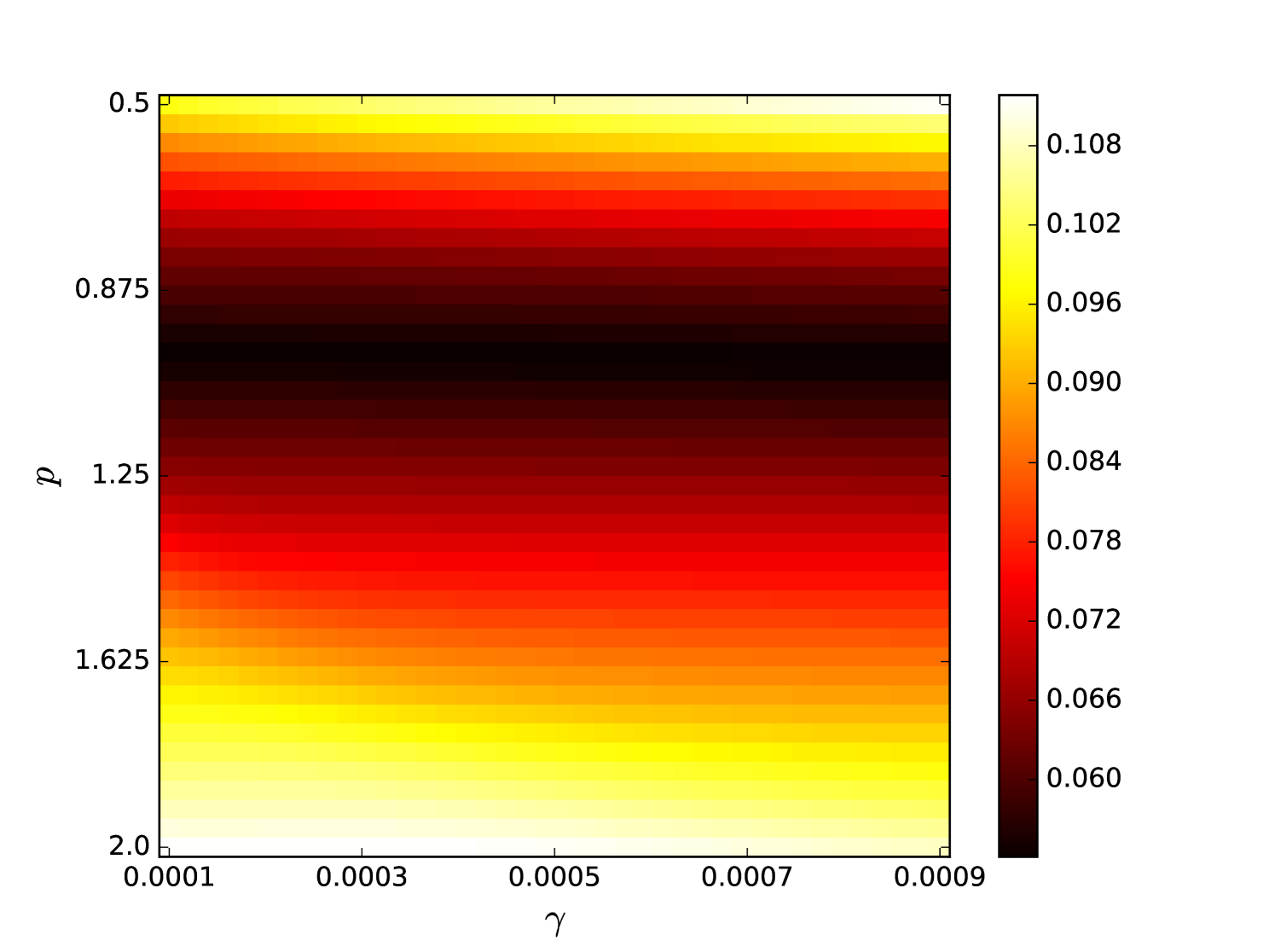

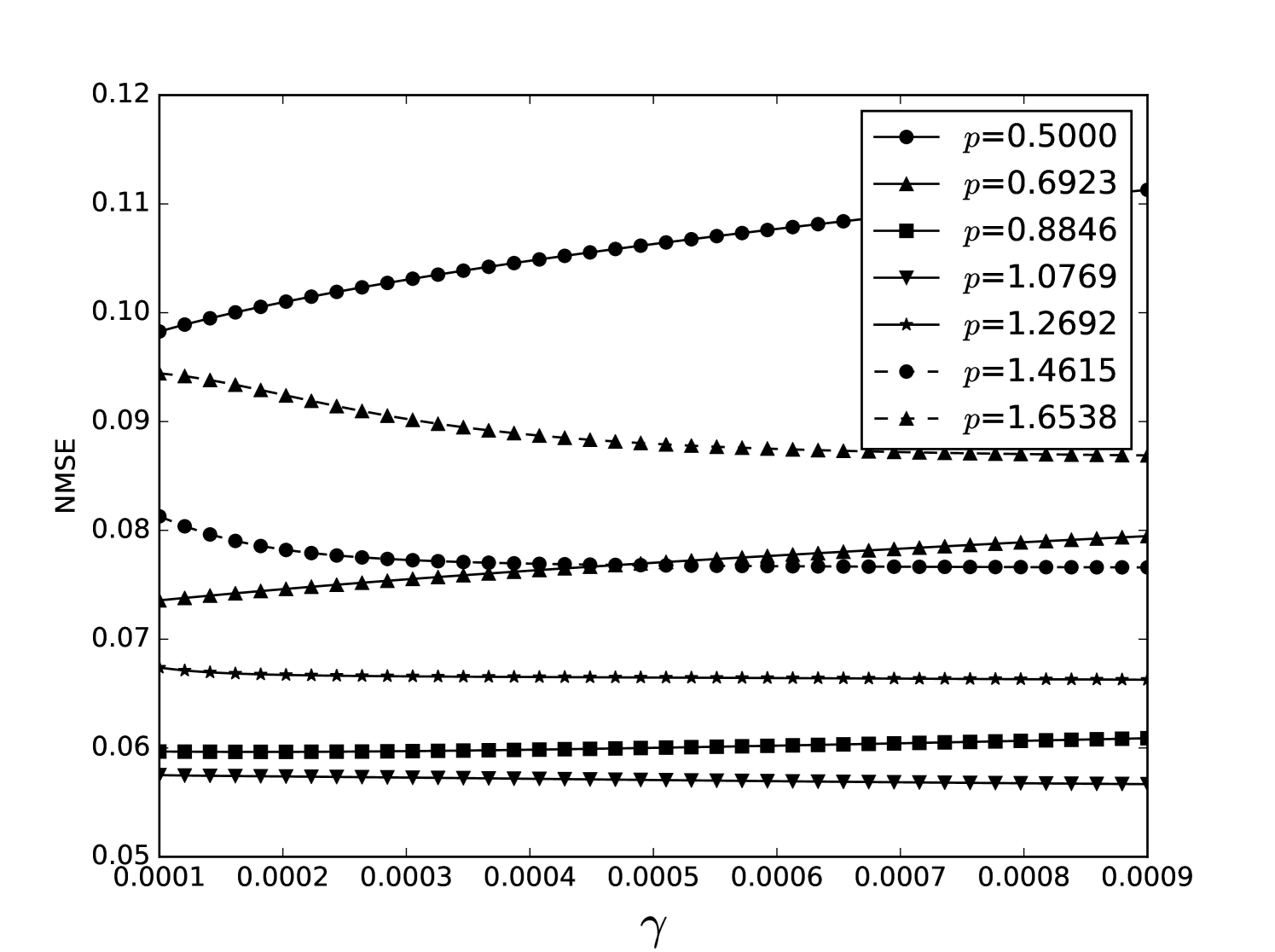

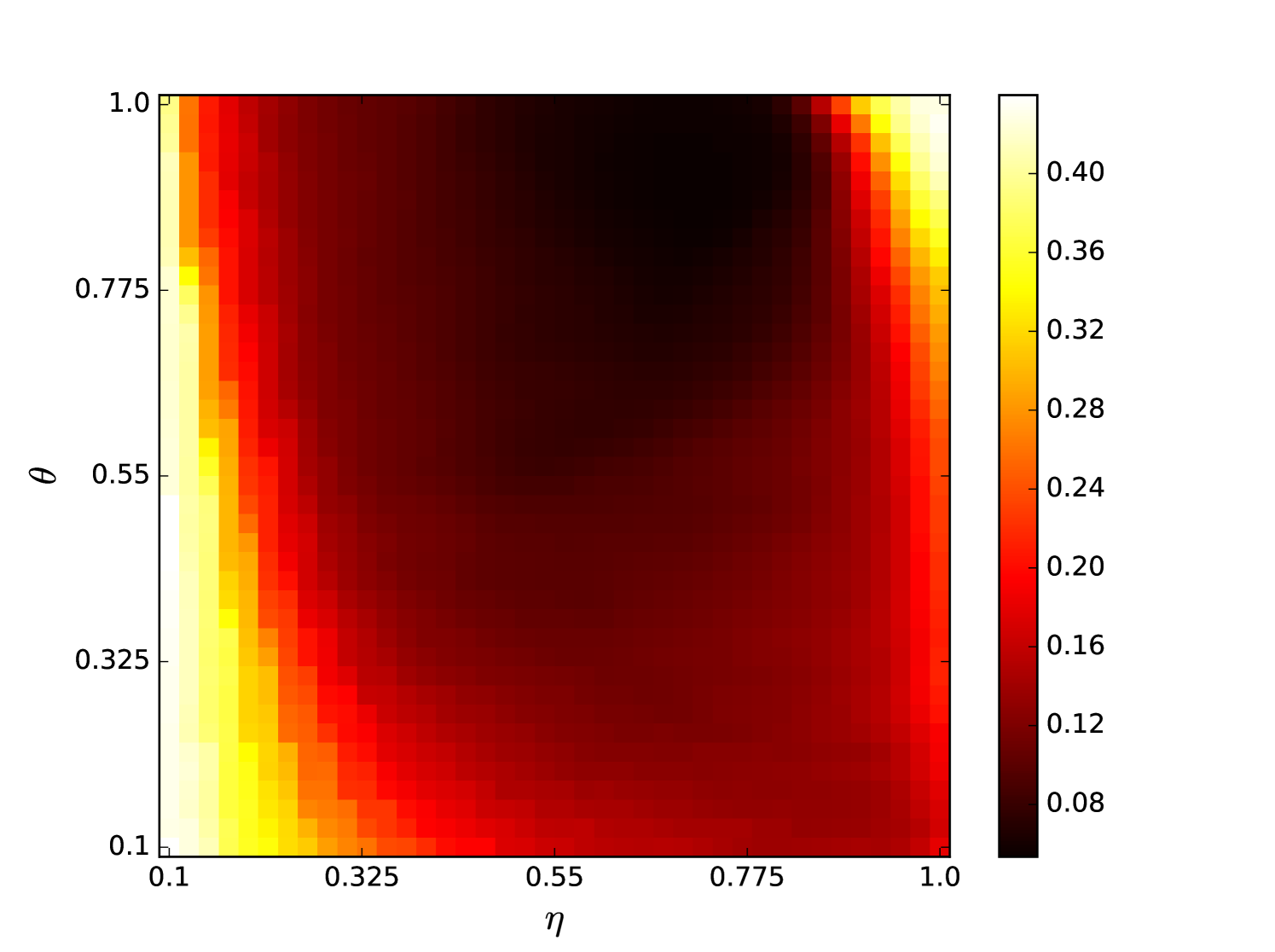

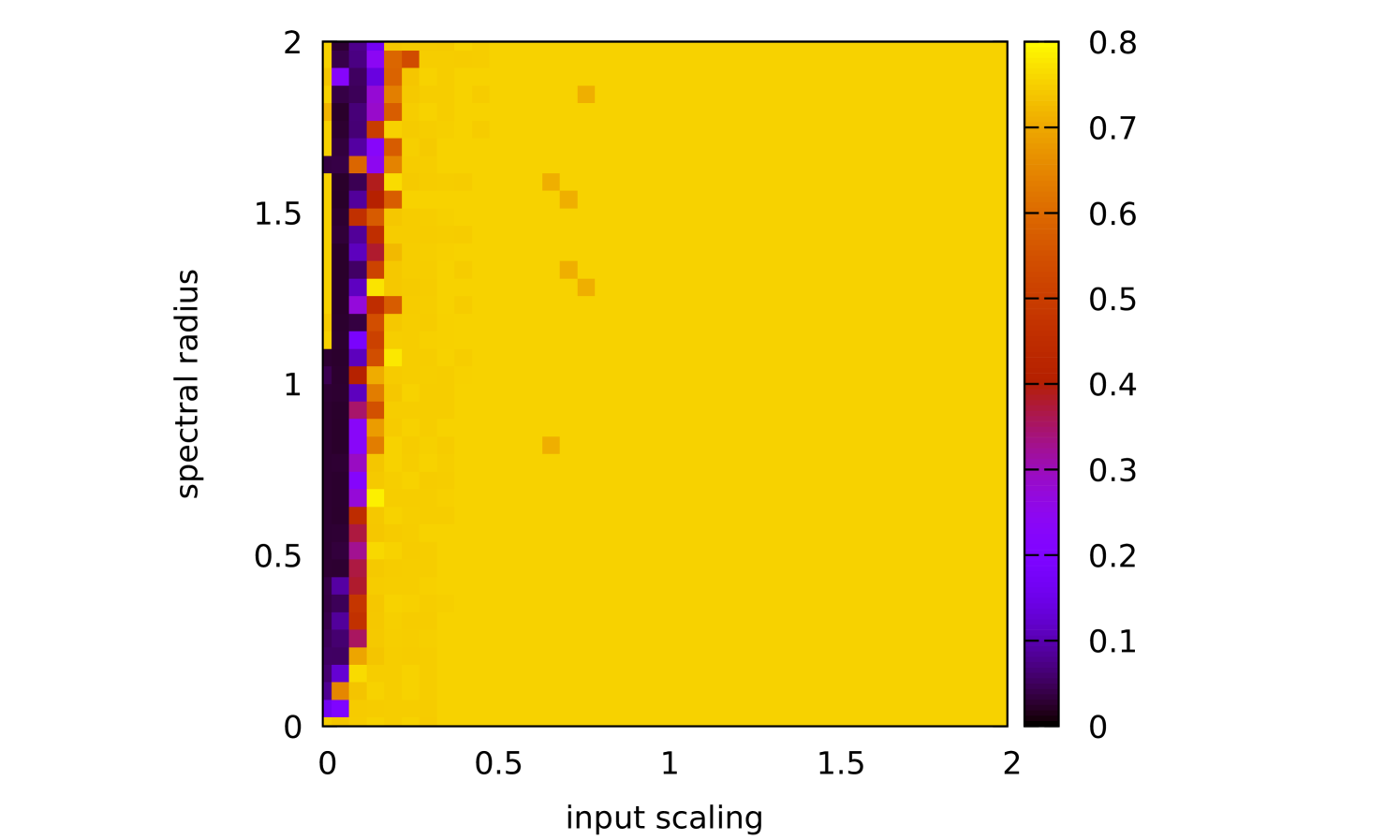

The parameters that were optimized using Bayesian optimization are , , and the regularization parameter . These were optimized separately for each of the SNRs. The results are shown in Table 3. The results of the SER are plotted in Figure 6. For low noise levels, Bayesian optimization finds parameters which result in a performance that is 2 orders of magnitude better than the literature. For high noise the performance is on par with [29]. Presumably, the error surface at high levels of noise becomes less well behaved, and the assumption of smoothness breaks down, reducing the algorithm to random sampling. This is apparent at SNR 16, where the parameter is larger by 2 orders of magnitude when compared to the other values of the SNR.

We also used an ESN on this data set, and compare our results with [44]. Mimicking their setup we used an ESN with 47 nodes, used washout points, training points and validated on points. The validation error was an average over the error on independent realizations of the network. Once the hyper-parameters were determined on the validation set, a test was performed on a test set of points, averaged over separate realizations of the network. This was done for instantiations of the data set, and the final result is the average of the performance on each of these data sets. The result is shown in Figure 6. We observe a performance which is an order of magnitude better than [44] for high values of the SNR. Similar to the results with the NDN architecture with sine nonlinearity, our method converges to the results obtained in other works for the lower values of the SNR .

| SNR (dB) | 16 | 20 | 24 | 28 | 32 |

|---|---|---|---|---|---|

| 1.631484 | 0.076573 | 0.066186 | 0.090469 | 0.056437 | |

| 0.001946 | 0.083608 | 0.082425 | 0.030920 | 0.091135 | |

| 0.086231 | 0.849972 | 0.934945 | 0.916553 | 0.238782 | |

| -12 | -11 | -13 | -15 | -11 |

7 Spearmint demos

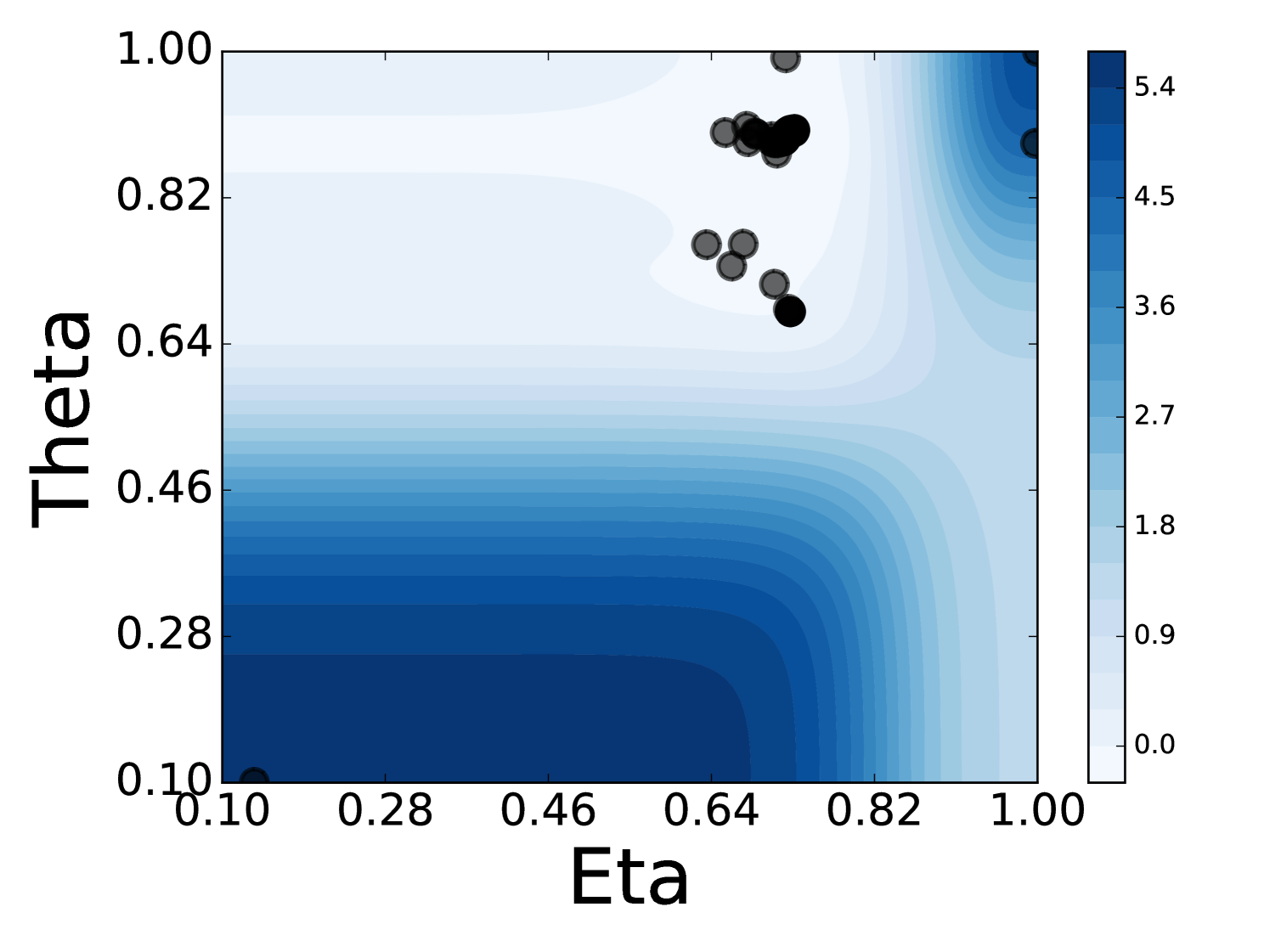

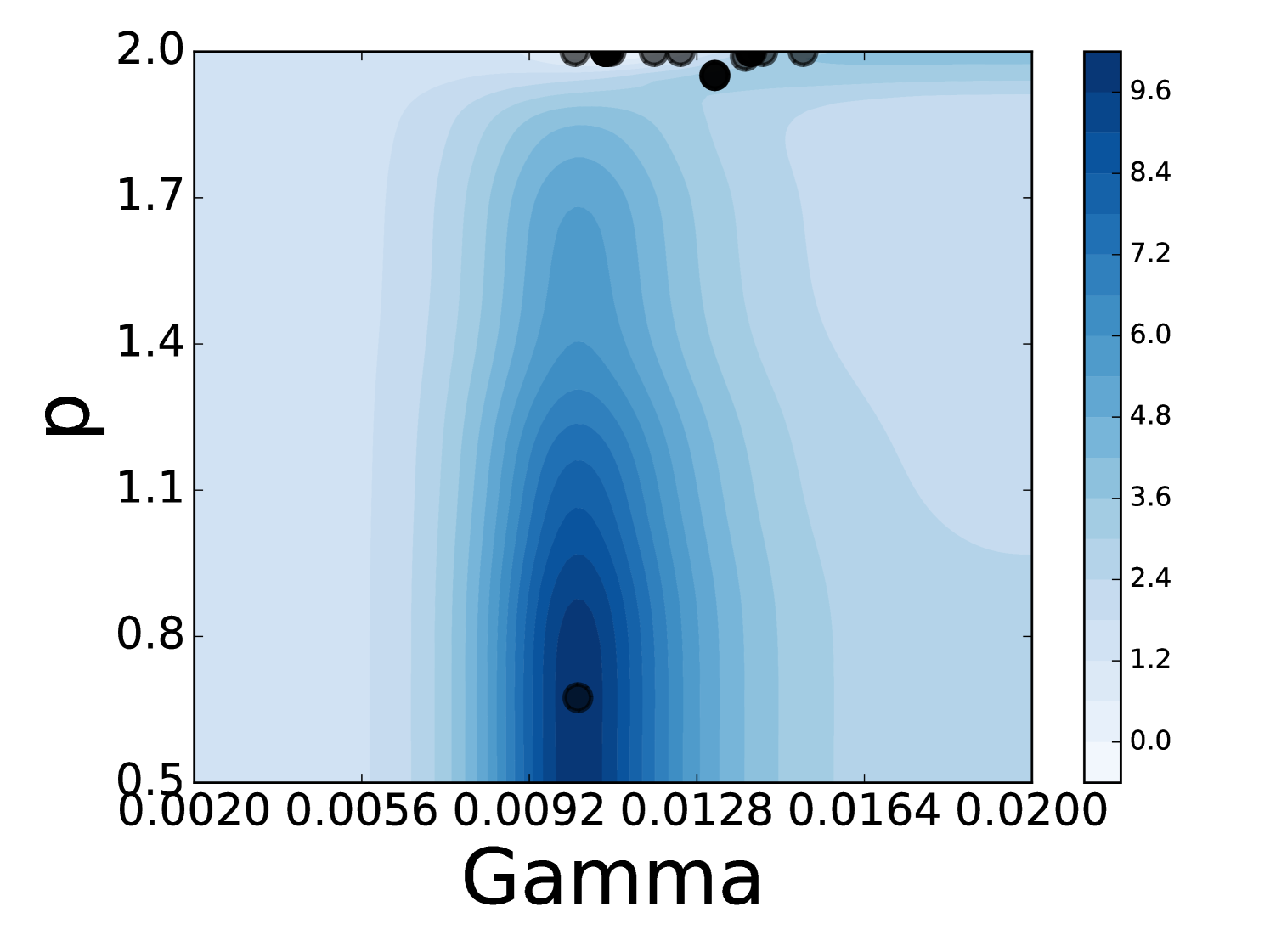

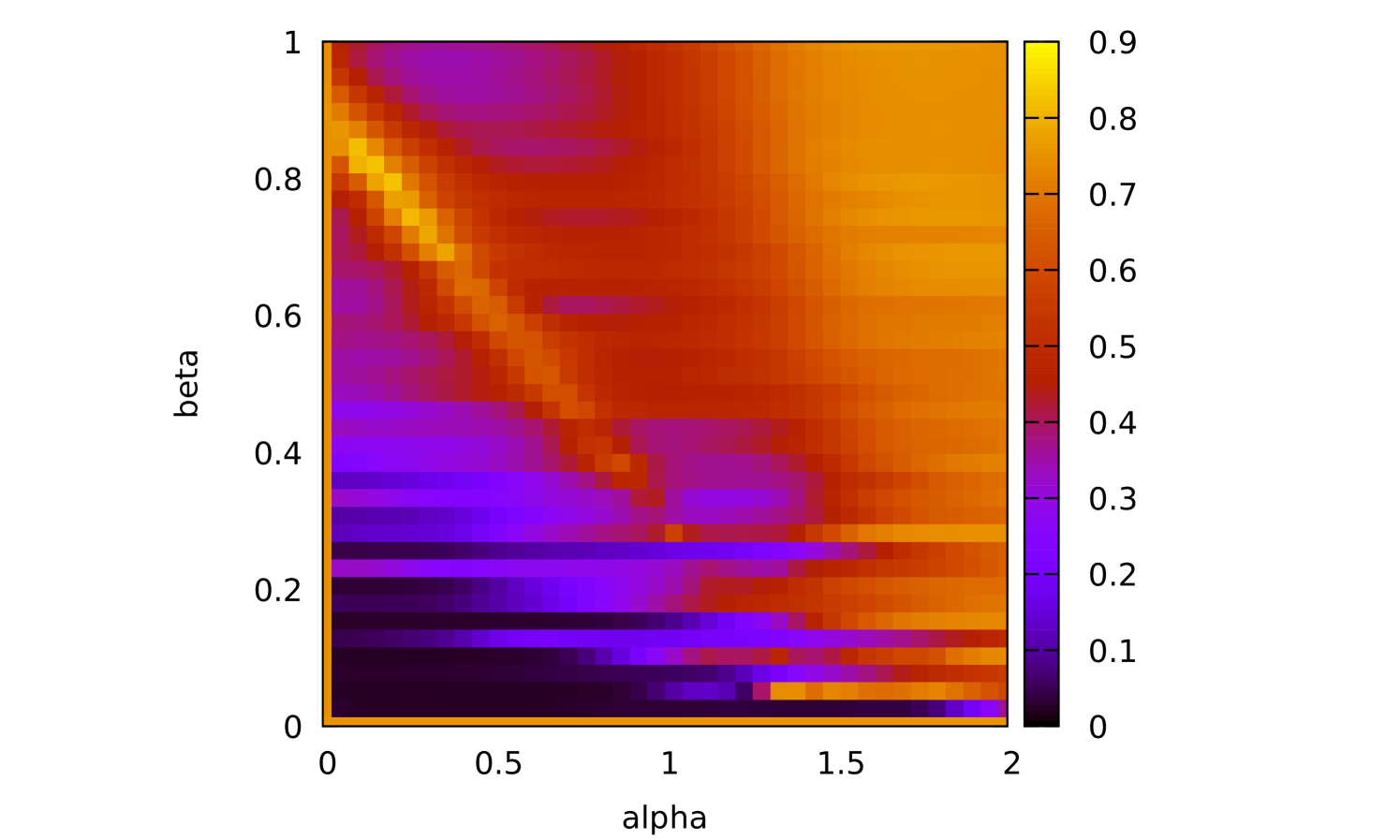

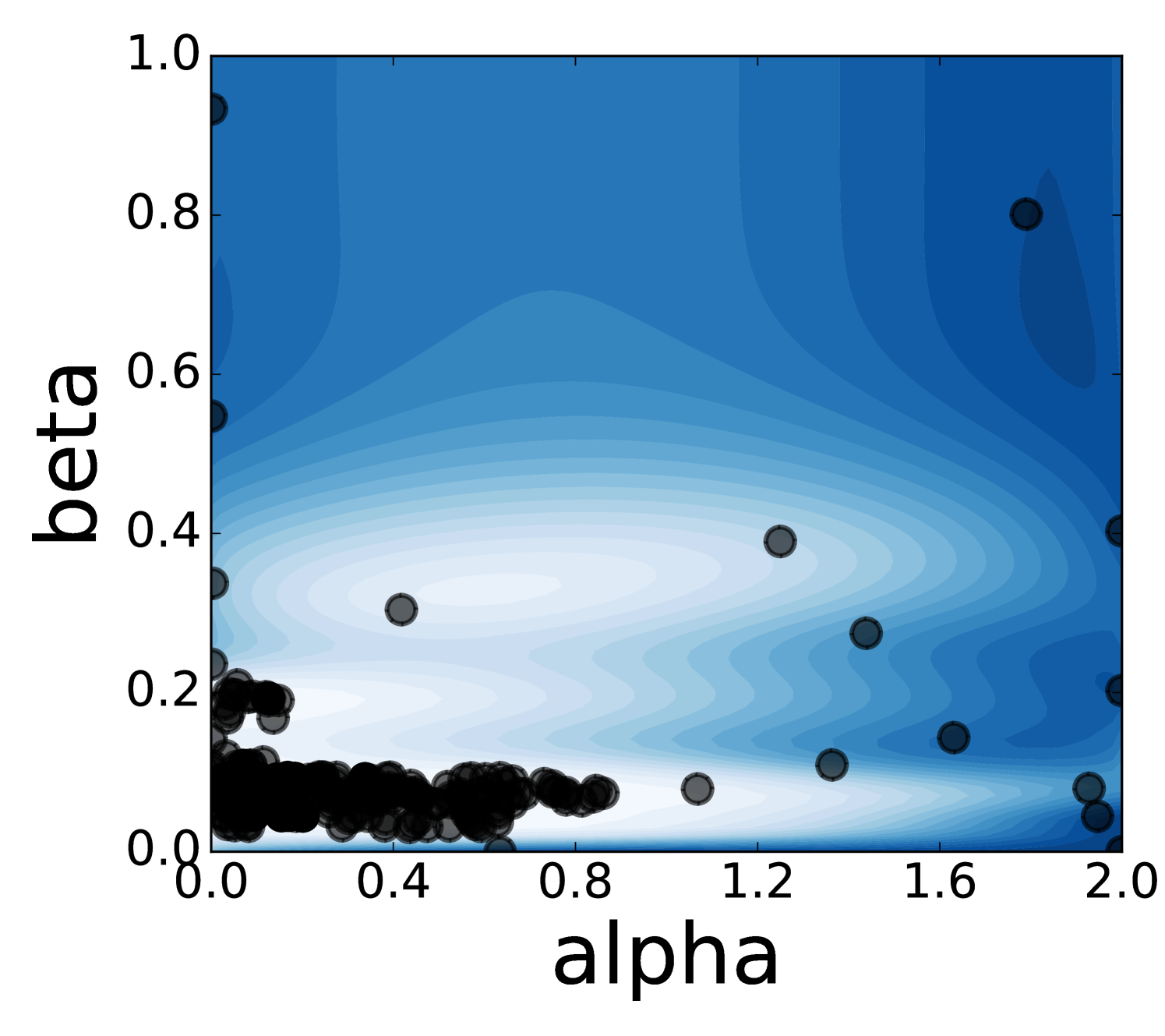

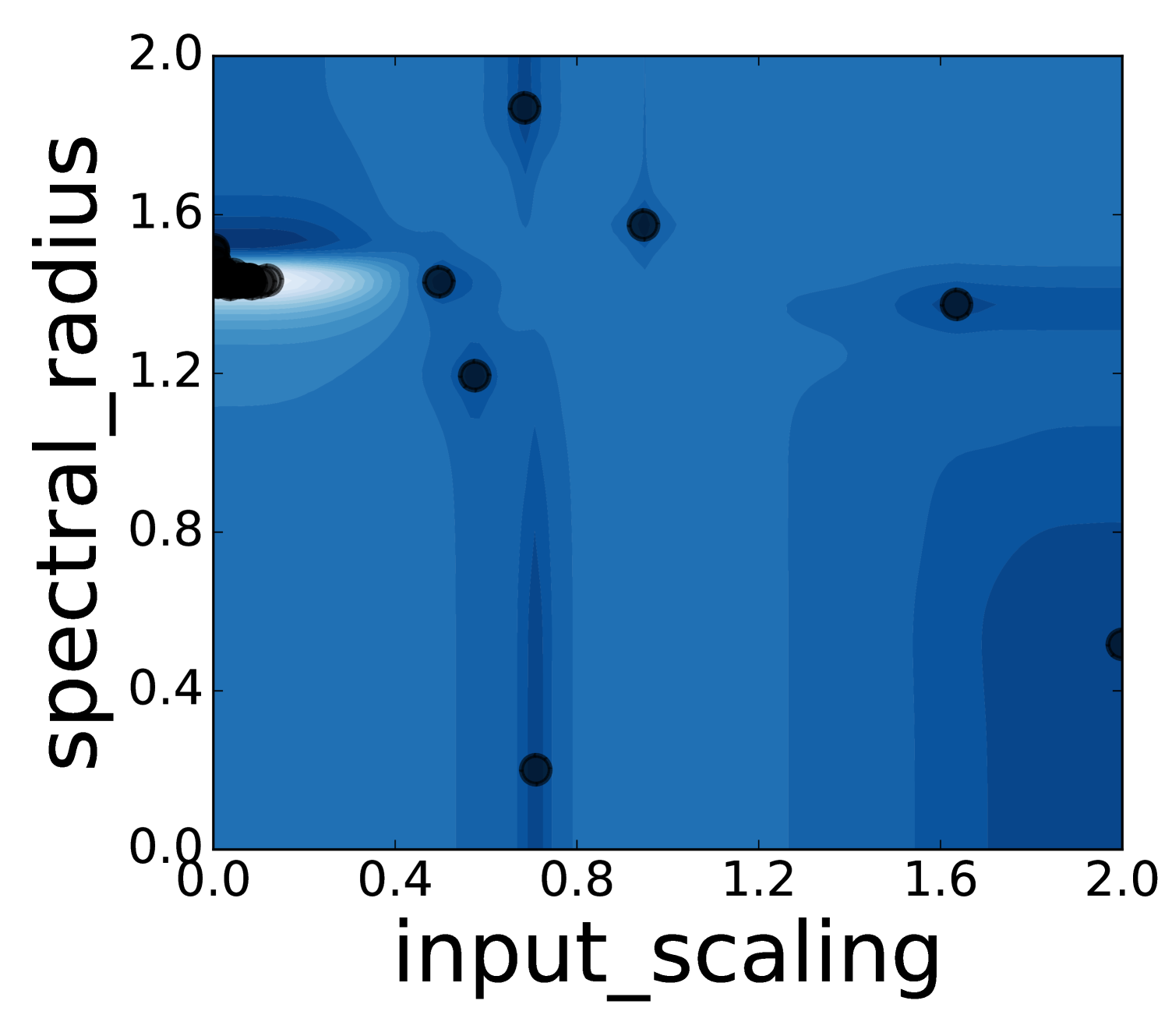

To get a better understanding of Spearmint’s behaviour, we have visualized a few runs on two-dimensional parameter regions. Animations of the search process can be found in the supplementary material. One can conclude that Spearmint settles quite fast at a certain region. This region often contains the minimum, such as in Figure 7 (see also Figures 22, 23, 24). In some cases, however, the region with the true minimum is missed, see Figure 8. In Figure 25 the region with the minimum is found, but is not fully investigated. Spearmint does find the two regions with local minima in Figures 22 and 23 (the area at the bottom left and the strip above it). The fast fixation to a region indicates that the algorithm is quite “eager” to discard large hyper-parameter regions that it hasn’t visited. This gives a qualitative understanding of the observed fast convergence behaviour: Spearmint discards large regions of the hyper-parameter space with only a few measurements, and after a while settles in a local minimum.

The estimate of the shape of the error surface is never really good, although in some cases a qualitative resemblance can be observed, cf. Figures 7 and 23. Sometimes there is almost no resemblance, cf. Figure 8. This is because the algorithm has a strong focus on finding a good minimum as soon as possible; it simply discards regions that are not of interest instead of trying to model them well.

8 Conclusion and Outlook

To conclude, we have shown that Bayesian optimization of Gaussian processes is an excellent technique for automated hyper-parameter optimization in reservoir computing. The method was implemented with the Spearmint library. On all considered benchmarks, the method performed equally well or significantly better.

For the nonlinear delay nodes reservoirs, we showed that one does not need an exact solution of the differential equation to have a high-quality reservoir. Although this seems to be known, we are not aware of publications were this is explicitly discussed. We furthermore found that the popular choice of node distance is often suboptimal. Because the algorithm is able to optimize over several parameters simultaneously, one can expect to find other such consistent optimal choices for other types of reservoirs.

Because the hyper-parameter search is automated, reservoir computing becomes a technique that requires little to no expert input. This could increase its appeal to machine learning practitioners. It remains to be seen whether this approach works well for all tasks and different types of reservoirs. We hope that the presented method is adopted by other researchers, so that a large variety of problems are studied.

Note that there is no optimization of other architectural features of the reservoir. The goal of this work is to compare the method with exactly the same architecture used in the literature. The methods presented here could possibly speed up the process of searching for new architectures, since the automated HO is able to find better results at a faster pace, without the need for a practitioner to acquire an intuition of the behaviour of the new architecture.

It would be of interest to see the performance of Spearmint for HO of hardware implementations of an NDN/ESN [28, 29, 30, 12, 59, 8, 9, 10, 11, 12], in which testing a hyper-parameter set could be more expensive compared to software implementations.

Another advantage of using Bayesian optimization to determine the hyper-parameters is that the comparison between different frameworks becomes less biased. When introducing a new framework it is not uncommon for researchers to put more effort into finding optimal parameters for the novel framework, than they do for the old method. When both methods are optimized using Bayesian optimization this bias becomes less of a factor.

Appendix A Details Santa Fe implementation

Appeltant [50] uses the first 4000 points of the data set: the first 1000 for training, the second for optimizing hyper-parameters, and the third for optimizing the regularization parameter . The NMSE is reported on the fourth set. We optimized the regularization parameter together with the other hyper-parameters on the second set, and measured the NMSE on the third and fourth set (last 2000 points).

One often uses initialization points to bring the reservoir to a stationary state. We use the first 200 points for initialization, and discard their reservoir states. So in fact we use 4200 points of the data set. We do not know if some initialization points are discarded in [50]. We checked that these small differences do not lead to qualitative differences in behaviour. The prediction to unseen data is robust, i.e., the first 1000 points and second 1000 points have similar errors.

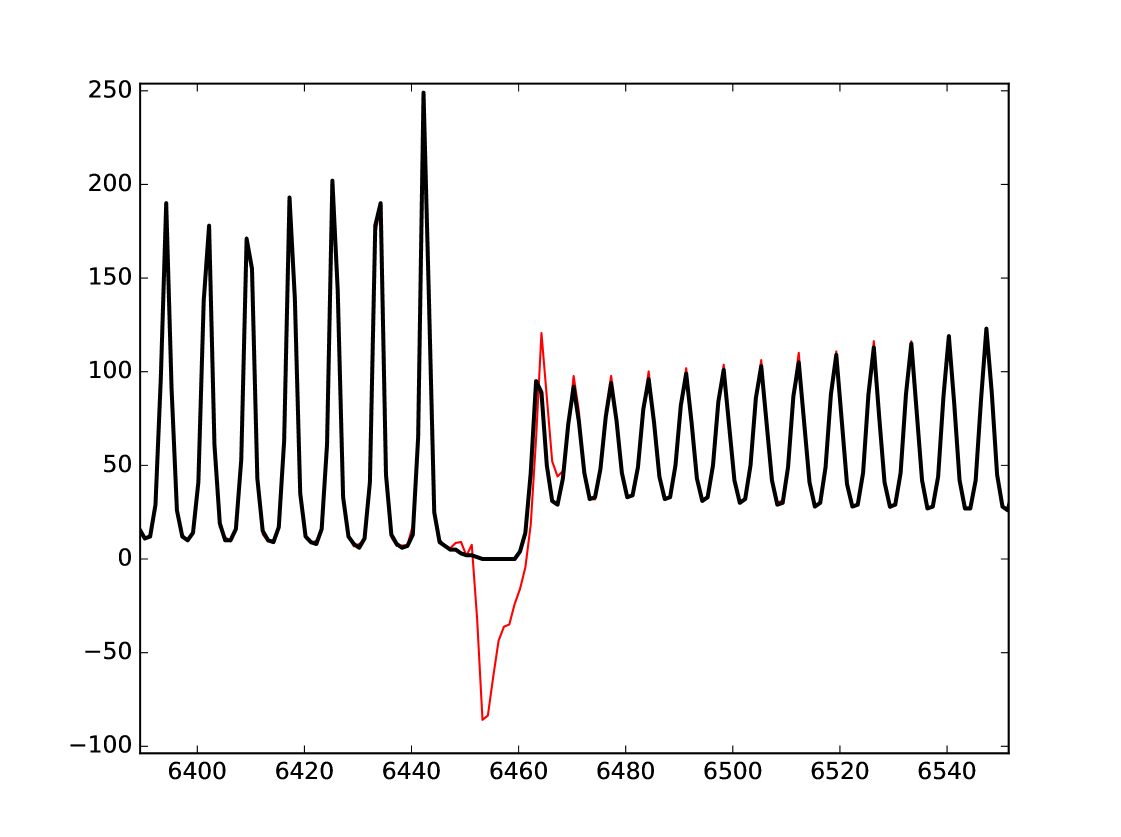

At points 6455-6461 in , the signal is exactly zero. This seems a measurement error, and the prediction of the reservoir diverges at those points, see Figure 9. Several papers include this part of the data set in their test or validation set. In this case, the reservoir structure is not only optimized with respect to predicting the next point, but also towards a greater stability for a null input.

Appendix B Heat maps Santa Fe laser data and MG NDN

We plot heat maps and one-dimensional slices of the error surface of the Santa Fe task. Parameter regions are near the optimal values unless stated otherwise.

Appendix C Heat maps NARMA 10 task and MG NDN

We plot heat maps and one-dimensional slices of the error surface of the Santa Fe task. Parameter regions are near the optimal values unless stated otherwise.

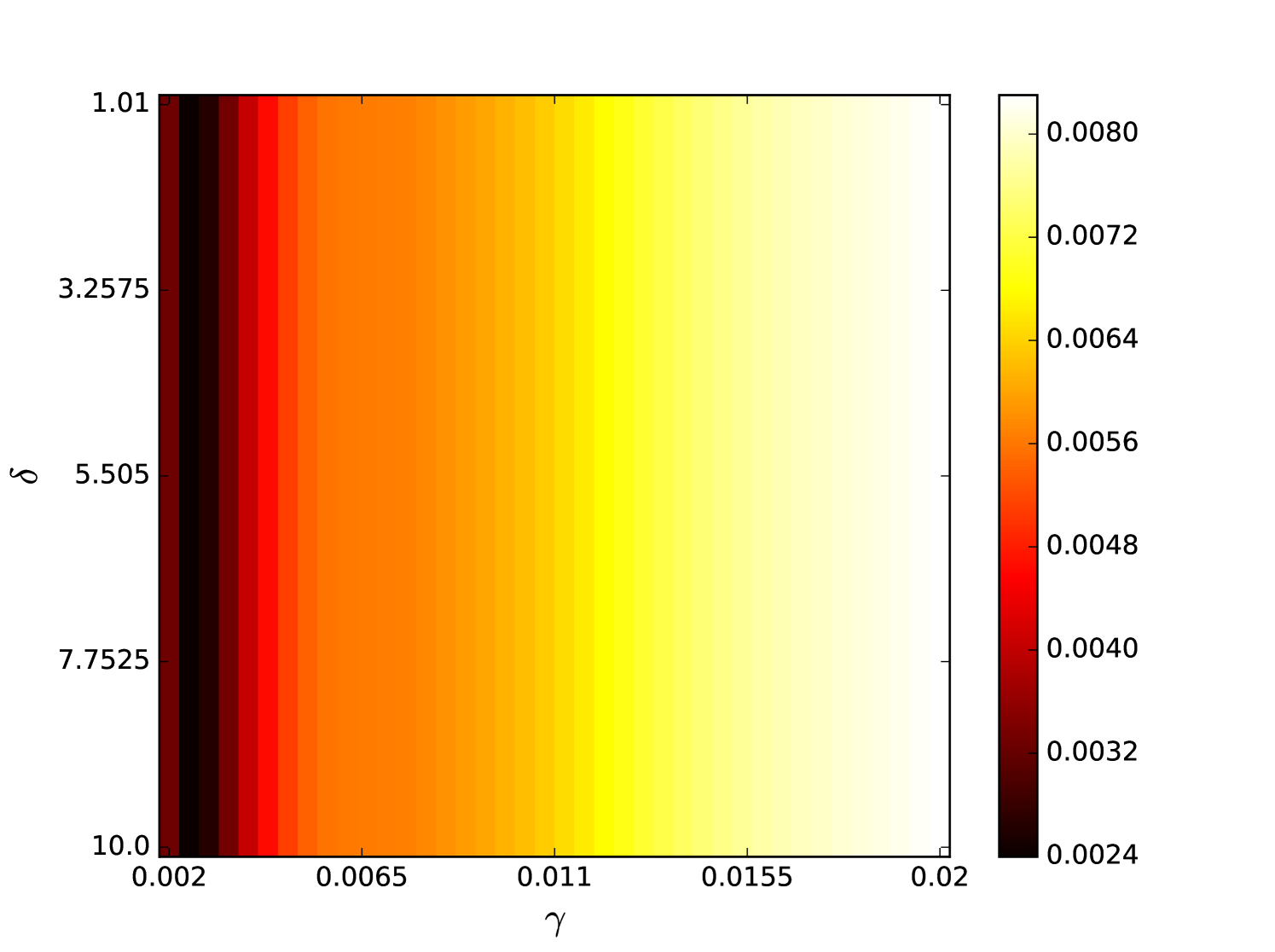

Appendix D Spearmint demos

We plot heat maps and Spearmint runs for the nonlinear channel equalization task.

Acknowledgement

Early work was done with Christian Van den Broeck, whom we thank for letting us work on this problem.

The authors would like to thank

Lars Keuninckx and Guy Van der Sande for discussions and reading the manuscript,

Bart Cleuren for reading the manuscript,

the reservoir computing group at IFISC for discussions,

and Peter Tino for answering our questions about echo state networks.

TB is supported by the Fonds voor Wetenschappelijk Onderzoek (FWO), project R4859.

The computational resources and services used in this work were provided by the VSC (Flemish Supercomputer Center), funded by the Research Foundation - Flanders (FWO) and the Flemish Government - department EWI. In this context, the authors would like to thank Geert Jan Bex for his help with the deployment of our software on the VSC.

References

- [1] Y. LeCun, L. Bottou, G. B. Orr, and K.-R. Müller, “Efficient backprop,” in Neural networks: Tricks of the trade. Springer, 2012, pp. 9–48.

- [2] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436–444, 2015.

- [3] S. Hochreiter, Y. Bengio, P. Frasconi, and J. Schmidhuber, “Gradient flow in recurrent nets: the difficulty of learning long-term dependencies,” in A field guide to dynamical recurrent neural networks, S. C. Kremer and J. F. Kolen, Eds. IEEE Press, 2001.

- [4] M. Arjovsky, A. Shah, and Y. Bengio, “Unitary evolution recurrent neural networks,” in International Conference on Machine Learning, 2016, pp. 1120–1128.

- [5] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural comput., vol. 9, no. 8, pp. 1735–1780, 1997.

- [6] M. Schuster and K. K. Paliwal, “Bidirectional recurrent neural networks,” IEEE Trans. on Signal Proc., vol. 45, no. 11, pp. 2673–2681, 1997.

- [7] M. Lukoševičius, “A practical guide to applying echo state networks,” in Neural networks: Tricks of the trade. Springer, 2012, pp. 659–686.

- [8] I. Fischer, J. Bueno, D. Brunner, M. C. Soriano, and C. Mirasso, “Photonic reservoir computing for ultra-fast information processing using semiconductor lasers,” in ECOC 2016; 42nd European Conference on Optical Communication; Proceedings of. VDE, 2016, pp. 1–3.

- [9] F. Duport, A. Smerieri, A. Akrout, M. Haelterman, and S. Massar, “Fully analogue photonic reservoir computer,” Scientific reports, vol. 6, 2016.

- [10] P. Antonik, M. Hermans, F. Duport, M. Haelterman, and S. Massar, “Towards pattern generation and chaotic series prediction with photonic reservoir computers,” in SPIE LASE. International Society for Optics and Photonics, 2016, pp. 97 320B–97 320B.

- [11] A. Katumba, M. Freiberger, P. Bienstman, and J. Dambre, “A multiple-input strategy to efficient integrated photonic reservoir computing,” Cognitive Computation, pp. 1–8, 2017.

- [12] M. C. Soriano, S. Ortín, L. Keuninckx, L. Appeltant, J. Danckaert, L. Pesquera, and G. Van der Sande, “Delay-based reservoir computing: noise effects in a combined analog and digital implementation,” IEEE Trans. Neural Netw. Learn. Syst., vol. 26, no. 2, pp. 388–393, 2015.

- [13] H. Jaeger, M. Lukoševičius, D. Popovici, and U. Siewert, “Optimization and applications of echo state networks with leaky-integrator neurons,” Neural networks, vol. 20, no. 3, pp. 335–352, 2007.

- [14] M. J. A. Rabin, M. S. Hossain, M. S. Ahsan, M. A. S. Mollah, and M. T. Rahman, “Sensitivity learning oriented nonmonotonic multi reservoir echo state network for short-term load forecasting,” in Informatics, Electronics & Vision (ICIEV), 2013 International Conference on. IEEE, 2013, pp. 1–6.

- [15] A. Sergio and T. Ludermir, “Pso for reservoir computing optimization,” Artificial Neural Networks and Machine Learning–ICANN 2012, pp. 685–692, 2012.

- [16] S. Basterrech, E. Alba, and V. Snášel, “An experimental analysis of the echo state network initialization using the particle swarm optimization,” in Nature and Biologically Inspired Computing (NaBIC), 2014 Sixth World Congress on. IEEE, 2014, pp. 214–219.

- [17] A. A. Ferreira and T. B. Ludermir, “Genetic algorithm for reservoir computing optimization,” in Neural Networks, 2009. IJCNN 2009. International Joint Conference on. IEEE, 2009, pp. 811–815.

- [18] A. A. Ferreira, T. B. Ludermir, and R. R. De Aquino, “An approach to reservoir computing design and training,” Expert systems with applications, vol. 40, no. 10, pp. 4172–4182, 2013.

- [19] M. Rigamonti, P. Baraldi, E. Zio, I. Roychoudhury, K. Goebel, and S. Poll, “Echo state network for the remaining useful life preeiction of a turbofan engine,” in Proceedings of the Third European Prognostic and Health Management Conference, PHME, 2016.

- [20] J. Snoek, H. Larochelle, and R. P. Adams, “Practical bayesian optimization of machine learning algorithms,” in Advances in Neural Information Processing Systems 25, F. Pereira, C. J. C. Burges, L. Bottou, and K. Q. Weinberger, Eds. Curran Associates, Inc., 2012, pp. 2951–2959.

- [21] I. Goodfellow, Y. Bengio, and A. Courville, Deep Learning. MIT Press, 2016, http://www.deeplearningbook.org.

- [22] H. Jaeger, “The “echo state” approach to analysing and training recurrent neural networks-with an erratum note,” Technical Report GMD Report 148, German National Research Center for Information Technology, 2001.

- [23] W. Maass, T. Natschläger, and H. Markram, “Real-time computing without stable states: A new framework for neural computation based on perturbations,” Neural comput., vol. 14, no. 11, pp. 2531–2560, 2002.

- [24] S. Scardapane and D. Wang, “Randomness in neural networks: an overview,” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, vol. 7, no. 2, 2017.

- [25] G. Manjunath and H. Jaeger, “Echo state property linked to an input: Exploring a fundamental characteristic of recurrent neural networks,” Neural computation, vol. 25, no. 3, pp. 671–696, 2013.

- [26] G. Wainrib and M. N. Galtier, “A local echo state property through the largest lyapunov exponent,” Neural Networks, vol. 76, pp. 39–45, 2016.

- [27] L. Appeltant, M. C. Soriano, G. Van der Sande, J. Danckaert, S. Massar, J. Dambre, B. Schrauwen, C. R. Mirasso, and I. Fischer, “Information processing using a single dynamical node as complex system,” Nat. Commun., vol. 2, p. 468, 2011.

- [28] L. Larger, M. C. Soriano, D. Brunner, L. Appeltant, J. M. Gutiérrez, L. Pesquera, C. R. Mirasso, and I. Fischer, “Photonic information processing beyond turing: an optoelectronic implementation of reservoir computing,” Optics express, vol. 20, no. 3, pp. 3241–3249, 2012.

- [29] Y. Paquot, F. Duport, A. Smerieri, J. Dambre, B. Schrauwen, M. Haelterman, and S. Massar, “Optoelectronic reservoir computing,” Sci. Rep., vol. 2, 2012.

- [30] D. Brunner, M. C. Soriano, C. R. Mirasso, and I. Fischer, “Parallel photonic information processing at gigabyte per second data rates using transient states,” Nat. Commun., vol. 4, p. 1364, 2013.

- [31] K. Vandoorne, P. Mechet, T. Van Vaerenbergh, M. Fiers, G. Morthier, D. Verstraeten, B. Schrauwen, J. Dambre, and P. Bienstman, “Experimental demonstration of reservoir computing on a silicon photonics chip,” Nat. Commun., vol. 5, 2014.

- [32] C. K. Williams and C. E. Rasmussen, “Gaussian processes for machine learning,” the MIT Press, vol. 2, no. 3, p. 4, 2006.

- [33] E. Brochu, V. M. Cora, and N. De Freitas, “A tutorial on bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning,” arXiv preprint arXiv:1012.2599, 2010.

- [34] D. Sivia and J. Skilling, Data analysis: a Bayesian tutorial. Oxford University Press, Oxford, 2006.

- [35] J. Snoek, K. Swersky, R. S. Zemel, and R. P. Adams, “Input warping for bayesian optimization of non-stationary functions.” in Proc. Int. Conf. Mach. Learn., 2014, pp. 1674–1682.

- [36] J. Mockus, “Application of bayesian approach to numerical methods of global and stochastic optimization,” Journal of Global Optimization, vol. 4, no. 4, pp. 347–365, 1994.

- [37] A. D. Bull, “Convergence rates of efficient global optimization algorithms,” Journal of Machine Learning Research, vol. 12, no. Oct, pp. 2879–2904, 2011.

- [38] K. Kawaguchi, L. P. Kaelbling, and T. Lozano-Pérez, “Bayesian optimization with exponential convergence,” in Advances in Neural Information Processing Systems, 2015, pp. 2809–2817.

- [39] N. De Freitas, A. Smola, and M. Zoghi, “Exponential regret bounds for gaussian process bandits with deterministic observations,” in Proceedings of the 29th International Conference on Machine Learning, 2012.

- [40] N. A. Gershenfeld and A. S. Weigend, “The future of time series: learning and understanding,” in Time series prediction: Forecasting the future and understanding the past, A. S. Weigend and N. A. Gershenfeld, Eds. Addison-Wesley Publishing Company, Reading, MA, USA, 1994.

- [41] “Santa fe laser data,” http://www-psych.stanford.edu/~andreas/Time-Series/SantaFe.html, accessed: 2016-08-31.

- [42] A. F. Atiya and A. G. Parlos, “New results on recurrent network training: unifying the algorithms and accelerating convergence,” IEEE Trans. Neural Netw., vol. 11, no. 3, pp. 697–709, 2000.

- [43] V. J. Mathews and J. Lee, “Adaptive algorithms for bilinear filtering,” in SPIE’s 1994 International Symposium on Optics, Imaging, and Instrumentation. International Society for Optics and Photonics, 1994, pp. 317–327.

- [44] H. Jaeger and H. Haas, “Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication,” Science, vol. 304, no. 5667, pp. 78–80, 2004.

- [45] A. Rodan and P. Tino, “Simple deterministically constructed recurrent neural networks,” in International Conference on Intelligent Data Engineering and Automated Learning. Springer, 2010, pp. 267–274.

- [46] ——, “Minimum complexity echo state network,” IEEE Trans. Neural Netw., vol. 22, no. 1, pp. 131–144, 2011.

- [47] F. Pedregosa et al., “Scikit-learn: Machine learning in python,” J. Mach. Learn. Res., vol. 12, pp. 2825–2830, 2011.

- [48] D. Verstraeten, B. Schrauwen, S. Dieleman, P. Brakel, P. Buteneers, and D. Pecevski, “Oger: modular learning architectures for large-scale sequential processing,” J. Mach. Learn. Res., vol. 13, no. Oct, pp. 2995–2998, 2012.

- [49] T. Zito, N. Wilbert, L. Wiskott, and P. Berkes, “Modular toolkit for data processing (mdp): A python data processing framework,” Front. Neuroinf., 2009.

- [50] L. Appeltant, “Reservoir computing based on delay-dynamical systems,” These de Doctorat, Vrije Universiteit Brussel/Universitat de les Illes Balears, 2012.

- [51] L. Appeltant, G. Van der Sande, J. Danckaert, and I. Fischer, “Constructing optimized binary masks for reservoir computing with delay systems,” Sci. Rep., vol. 4, p. 3629, 2014.

- [52] M. C. Soriano, S. Ortín, D. Brunner, L. Larger, C. R. Mirasso, I. Fischer, and L. Pesquera, “Optoelectronic reservoir computing: tackling noise-induced performance degradation,” Optics express, vol. 21, no. 1, pp. 12–20, 2013.

- [53] S. Ortín, M. C. Soriano, L. Pesquera, D. Brunner, D. San-Martín, I. Fischer, C. R. Mirasso, and J. M. Gutiérrez, “A unified framework for reservoir computing and extreme learning machines based on a single time-delayed neuron,” Sci. Rep., vol. 5, 2015.

- [54] K. Hicke, M. A. Escalona-Morán, D. Brunner, M. C. Soriano, I. Fischer, and C. R. Mirasso, “Information processing using transient dynamics of semiconductor lasers subject to delayed feedback,” IEEE J. Sel. Topics Quantum Electron., vol. 19, no. 4, pp. 1 501 610–1 501 610, 2013.

- [55] R. M. Nguimdo, G. Verschaffelt, J. Danckaert, and G. Van der Sande, “Fast photonic information processing using semiconductor lasers with delayed optical feedback: Role of phase dynamics,” Optics express, vol. 22, no. 7, pp. 8672–8686, 2014.

- [56] ——, “Simultaneous computation of two independent tasks using reservoir computing based on a single photonic nonlinear node with optical feedback,” IEEE Trans. Neural Netw. Learn. Syst., vol. 26, no. 12, pp. 3301–3307, 2015.

- [57] ——, “Reducing the phase sensitivity of laser-based optical reservoir computing systems,” Optics express, vol. 24, no. 2, pp. 1238–1252, 2016.

- [58] H. Wang and X. Yan, “Optimizing the echo state network with a binary particle swarm optimization algorithm,” Knowledge-Based Systems, vol. 86, pp. 182–193, 2015.

- [59] J. Dong, S. Gigan, F. Krzakala, and G. Wainrib, “Scaling up echo-state networks with multiple light scattering,” arXiv preprint arXiv:1609.05204, 2016.