BDDC by a Frontal Solver and the Stress Computation in a Hip Joint Replacement

Abstract

A parallel implementation of the BDDC method using the frontal solver is employed to solve systems of linear equations from finite element analysis, and it is incorporated into a standard finite element system for engineering analysis by linear elasticity. Results of the computation of stress in a hip joint replacement are presented. The part is made of titanium and loaded by the weight of the human body. The performance of BDDC with added constraints by averages and with added corners is compared.

keywords:

domain decomposition, iterative substructuring, finite elements, linear elasticity, parallel algorithms

1 Introduction

Parallel numerical solution of linear problems arising from linearized isotropic elasticity discretized by finite elements is important in many areas of engineering. The matrix of the system is typically large, sparse, and ill-conditioned. The classical frontal solver [8] has become a popular direct method for solving problems with such matrices arising from finite element analyses. However, for large problems, the computational cost of direct solvers makes them less competitive than iterative methods, such as the preconditioned conjugate gradients (PCG) [4]. The goal is then to design efficient preconditioners that result in a lower overall cost and can be implemented in parallel, which has given rise to the field of domain decomposition and iterative substructuring [17].

The Balancing Domain Decomposition by Constraints (BDDC) [6] is one of the most advanced preconditioners of this class. However, the additional custom coding effort required can be an obstacle to the use of the method in an existing finite element code. We propose an implementation of BDDC built on top of common components of existing finite element codes, namely the frontal solver and the element stiffness matrix generation. The implementation requires only a minimal amount of additional code, which makes it attractive for existing finite element software. For an important alternative implementation of BDDC, see [11].

BDDC is closely related to FETI-DP [7]. Though the methods are quite different, they can be built largely from the same components, and the eigenvalues of the preconditioned problem (other than the eigenvalue equal to one) in BDDC and FETI-DP are the same [13]. See also [2, 11, 14] for simplified proofs. Thus the performance of BDDC and FETI-DP is essentially identical, and results for one method apply immediately to the other.

The frontal solver was used to implement a limited variant of BDDC in [3, 16]. The implementation takes advantage of the existing integration of the frontal solver into the finite element methodology and of its implementation of constraints, which is well suited for BDDC. However, the frontal solver naturally treats only point constraints, while an efficient BDDC method in three dimensions requires constraints on averages. This was first observed for FETI-DP experimentally in [7] and theoretically in [10], but these observations apply to BDDC as well because of the equivalence between the methods.

In this paper, we extend the previous implementation of BDDC by the frontal solver to constraints on averages and apply the method to a problem in biomechanics. We also compare the performance of the method with added averages and with additional point constraints. The implementation relies on the separation of point constraints and enforcing the rest by Lagrange multipliers, as suggested already in [6]. One new aspect of the present approach is the use of reactions, which come naturally from the frontal solver, to avoid custom coding.

2 Mathematical formulation of BDDC

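Consider the problem in a variational form

$$ u \in U: \quad a(u, v) = \langle f, v \rangle \quad \forall v \in U, \tag{1} $$

where $U$ is a finite element space of $\mathbb{R}^3$-valued piecewise polynomial functions continuous on a given domain $\Omega \subset \mathbb{R}^3$, satisfying homogeneous Dirichlet boundary conditions, and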

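$$ a(u, v) = \int_\Omega \left( \lambda \operatorname{div} u \, \operatorname{div} v + 2 \mu \, e(u) : e(v) \right) dx, \qquad f \in U'. \tag{2} $$

Here $\lambda$ and $\mu$ are the first and the second Lamé parameter, respectively, and $e(u) = \frac{1}{2}\left( \nabla u + (\nabla u)^T \right)$ is the linearized strain tensor. Solution $u$ represents the vector field of displacement. It is known that $a$ is a symmetric positive definite bilinear form on $U$. An equivalent formulation of (1) is to find a solution $u$ to a linear system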

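$$ A u = f, \tag{3} $$

where $A$ is the stiffness matrix computed as $A = \left( a(\varphi_i, \varphi_j) \right)_{i,j=1}^{n}$, where $\{\varphi_i\}_{i=1}^{n}$ is a finite element basis of $U$ corresponding to a set of $n$ unknowns, also called degrees of freedom, defined as values of displacement at the nodes of a given triangulation of the domain. (With a slight abuse of notation, $u$ and $f$ in (3) denote the coefficient vectors with respect to this basis.) The domain $\Omega$ is decomposed into $N$ nonoverlapping subdomains $\Omega_i$, $i = 1, \dots, N$, also called substructures. Unknowns common to at least two subdomains are called boundary unknowns, and the union of all boundary unknowns is called the interface $\Gamma$.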

The first step is the reduction of the problem to the interface. This is quite standard and described in the literature, e.g., [17]. The space $U$ is decomposed as the $a$-orthogonal direct sum $U = U_1 \oplus \dots \oplus U_N \oplus U_\Gamma$, where $U_i$ is the space of all functions from $U$ with nonzero values only inside $\Omega_i$ (in particular, they are zero on $\Gamma$), and $U_\Gamma$ is the $a$-orthogonal complement of all spaces $U_i$: $U_\Gamma = \{ v \in U : a(v, z) = 0 \ \forall z \in U_i, \ i = 1, \dots, N \}$. Functions from $U_\Gamma$ are fully determined by their values at unknowns on $\Gamma$ and the discrete harmonic condition that they have minimal energy on every subdomain (i.e., they solve the system with zero right-hand side in the equations corresponding to subdomain interiors). They are represented in the computation by their values on the interface $\Gamma$. Solution $u$ may be split into the sum of interior solutions $u_o = \sum_{i=1}^{N} u_i$, $u_i \in U_i$, and $u_\Gamma \in U_\Gamma$. Then problem (3) may be rewritten as

$$ A \left( u_o + u_\Gamma \right) = f. \tag{4} $$

Let us now write problem (4) in the block form, with the first block corresponding to unknowns in subdomain interiors, and the second block corresponding to unknowns at the interface,

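$$ \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix} \begin{bmatrix} u_{o1} + u_{\Gamma 1} \\ u_{\Gamma 2} \end{bmatrix} = \begin{bmatrix} f_1 \\ f_2 \end{bmatrix}, \tag{5} $$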

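with $u_{o2} = 0$, since interior functions vanish on the interface. Using the fact that functions from $U_\Gamma$ are energy orthogonal to interior functions (so that $A_{11} u_{\Gamma 1} + A_{12} u_{\Gamma 2} = 0$) and separating the interior variable $u_{o1}$, we obtain that (5) is equivalent to

$$ A_{11} u_{o1} = f_1, \tag{6} $$

$$ \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix} \begin{bmatrix} u_{\Gamma 1} \\ u_{\Gamma 2} \end{bmatrix} = \begin{bmatrix} 0 \\ f_2 - A_{21} u_{o1} \end{bmatrix}, \tag{7} $$

and the solution is obtained as $u = u_o + u_\Gamma$. Eliminating $u_{\Gamma 1}$ from the second block row, problem (7) is equivalent to the problem

$$ \begin{bmatrix} A_{11} & A_{12} \\ 0 & S \end{bmatrix} \begin{bmatrix} u_{\Gamma 1} \\ u_{\Gamma 2} \end{bmatrix} = \begin{bmatrix} 0 \\ g \end{bmatrix}, \tag{8} $$

where $S$ is the Schur complement with respect to the interface, $S = A_{22} - A_{21} A_{11}^{-1} A_{12}$, and $g$ is the condensed right-hand side, $g = f_2 - A_{21} A_{11}^{-1} f_1$. Problem (8) can be split into two problems

$$ A_{11} u_{\Gamma 1} = -A_{12} u_{\Gamma 2}, \tag{9} $$

$$ S u_{\Gamma 2} = g. \tag{10} $$

Since $A_{11}$ has a block diagonal structure, with one block per subdomain, the solution to (6) may be found in parallel, and similarly the solution to (9).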
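The reduction (6) to (10) can be checked on a small dense example. This is a minimal sketch, assuming NumPy and dense solves in place of the frontal solver; the helper name `schur_reduce` and the test matrix (a 1D Laplacian whose unknown 3 plays the role of the interface between two "subdomains") are hypothetical choices made only for illustration.

```python
import numpy as np

def schur_reduce(A, interior, interface, f):
    """Interface reduction (6)-(10) with dense solves (illustration only)."""
    A11 = A[np.ix_(interior, interior)]
    A12 = A[np.ix_(interior, interface)]
    A21 = A[np.ix_(interface, interior)]
    A22 = A[np.ix_(interface, interface)]
    f1, f2 = f[interior], f[interface]
    u_o1 = np.linalg.solve(A11, f1)               # (6): interior solution
    S = A22 - A21 @ np.linalg.solve(A11, A12)     # Schur complement
    g = f2 - A21 @ u_o1                           # condensed right-hand side
    u_G2 = np.linalg.solve(S, g)                  # (10): interface problem
    u_G1 = -np.linalg.solve(A11, A12 @ u_G2)      # (9): discrete harmonic extension
    u = np.empty_like(f, dtype=float)
    u[interior] = u_o1 + u_G1
    u[interface] = u_G2
    return S, g, u

# 1D Laplacian with 7 unknowns; unknown 3 separates two "subdomains".
n = 7
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
f = np.ones(n)
interior, interface = [0, 1, 2, 4, 5, 6], [3]
S, g, u = schur_reduce(A, interior, interface, f)
print(np.allclose(u, np.linalg.solve(A, f)))  # True
```

Because $A_{11}$ has no entries coupling unknowns 0 to 2 with unknowns 4 to 6, the two interior solves are independent, which is the parallelism noted above.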

The BDDC method is a particular kind of preconditioner for the reduced problem (10). The main idea of the BDDC preconditioner in an abstract form [14] is to construct an auxiliary finite dimensional space $W$ such that $U \subset W$, and to extend the bilinear form $a(\cdot, \cdot)$ to a form $\tilde{a}(\cdot, \cdot)$ defined on $W \times W$ such that solving the variational problem (1) with $W$ in place of $U$ is cheaper and can be split into independent computations done in parallel. The solution projected to $U$ is then used for the preconditioning of $S$. Specifically, let $Q: W \to U$ be a given projection of $W$ onto $U$, and $r$ the residual in a PCG iteration. Then the output of the BDDC preconditioner is the interface part of $Q w$, where

$$ w \in W: \quad \tilde{a}(w, z) = \langle r, Q z \rangle \quad \forall z \in W. \tag{11} $$

In terms of operators, the preconditioner is $M_{BDDC} = Q \tilde{A}^{-1} Q^T$, where $\tilde{A}$ is the operator on $W$ associated with the bilinear form $\tilde{a}$ (but not computed explicitly as a matrix).

Note that while the residual $r$ in the PCG method applied to the reduced problem is given at the interface only, the residual in (11) has the dimension of all unknowns on the subdomain. This is resolved naturally by extending the residual to subdomain interiors by zeros (setting $r_1 = 0$), which is required by the condition that the solution is discrete harmonic inside the subdomains. Similarly, only interface values of $Q w$ are used in further PCG computation. Such an approach is equivalent to computing with explicit Schur complements.

The choice of the space $W$ and the projection $Q$ determines a particular instance of BDDC [6, 14]. All functions from $U$ are continuous on the domain $\Omega$. In order to design the space $W$, we relax the continuity on the interface $\Gamma$. On $\Gamma$, we select coarse degrees of freedom and define $W$ as the space of finite element functions with minimal energy on every subdomain, continuous across $\Gamma$ only at coarse degrees of freedom. The coarse degrees of freedom can be of two basic types: explicit unknowns (called coarse unknowns) at selected nodes (called corners), and averages over larger sets of nodes (subdomain faces or edges). The continuity condition then means that the values at the corresponding corners, or the corresponding averages, respectively, coincide on neighbouring subdomains. The bilinear form $a$ from (2) is extended to $\tilde{a}$ on $W$ by integrating (2) over the subdomains separately and adding the results.

The projection $Q: W \to U$ is defined at unknowns on the interface $\Gamma$ (the second block in (5)) as a weighted average of values from different subdomains, thus resulting in a function continuous across the interface. These averaged values on $\Gamma$ determine the projection $Q w$, because values inside subdomains (the first block) are then obtained by the solutions of local subdomain problems (9) to make the averaged function discrete harmonic. To assure good performance regardless of different stiffness of the subdomains [12], the weights are chosen proportional to the corresponding diagonal entries of the subdomain stiffness matrices. The transposed projection $Q^T$ is used for the distribution of the residual among neighbouring subdomains and represents a decomposition of unity at unknowns on the interface.
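A minimal sketch of the weighted averaging behind $Q$ and $Q^T$ at a single interface unknown, assuming NumPy; the function names `stiffness_weights`, `project`, and `distribute` are hypothetical. It shows that weights proportional to the diagonal entries reproduce continuous values and that $Q^T$ splits a residual among the subdomain copies without loss.

```python
import numpy as np

def stiffness_weights(diag_entries):
    """Weights for one interface unknown shared by several subdomains,
    proportional to the subdomain diagonal stiffness entries (they sum to 1)."""
    d = np.asarray(diag_entries, dtype=float)
    return d / d.sum()

def project(copies, weights):
    """Q at one interface unknown: weighted average of the subdomain copies."""
    return float(np.dot(weights, copies))

def distribute(residual, weights):
    """Q^T at one interface unknown: split the residual among the copies."""
    return residual * np.asarray(weights)

# An unknown shared by a softer subdomain (diagonal entry 1) and a stiffer one (3).
w = stiffness_weights([1.0, 3.0])
print(w)                          # [0.25 0.75]
print(project([2.0, 2.0], w))     # 2.0: continuous values are reproduced
print(distribute(5.0, w).sum())   # 5.0: decomposition of unity
```

The stiffer subdomain receives the larger weight, so the averaged value is dominated by the stiffer side.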

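The decomposition into subspaces used to derive the problem with the Schur complement (10) is now repeated for the space $W$, with the coarse degrees of freedom playing the role of interface unknowns and $W$ the role of $U$. Namely, the space $W$ is decomposed as the $\tilde{a}$-orthogonal direct sum $W = W_1 \oplus \dots \oplus W_N \oplus W_C$, where $W_i$ is the space of functions with nonzero values only in $\Omega_i$ outside coarse degrees of freedom (they have zero values at corners, they are generally not continuous at other unknowns on $\Gamma$, and they have zero averages), and $W_C$ is the coarse space, defined as the $\tilde{a}$-orthogonal complement of all spaces $W_i$: $W_C = \{ w \in W : \tilde{a}(w, z) = 0 \ \forall z \in W_i, \ i = 1, \dots, N \}$. Functions from $W_C$ are fully determined by their values at coarse degrees of freedom (where they are continuous) and have minimal energy. Thus, they are generally discontinuous across $\Gamma$ outside the corners. The solution $w$ from (11) is now split accordingly as $w = w_C + \sum_{i=1}^{N} w_i$, where $w_C \in W_C$, determined by

$$ w_C \in W_C: \quad \tilde{a}(w_C, z_C) = \langle r, Q z_C \rangle \quad \forall z_C \in W_C, \tag{12} $$

is called the coarse correction, and $w_i \in W_i$, determined by

$$ w_i \in W_i: \quad \tilde{a}(w_i, z_i) = \langle r, Q z_i \rangle \quad \forall z_i \in W_i, \tag{13} $$

is the substructure correction from $\Omega_i$, $i = 1, \dots, N$.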

Let us now rewrite the BDDC preconditioner in terms of matrices, following [6]. Problem (13) is formulated in a saddle point form as

$$ \begin{bmatrix} K_i & C_i^T \\ C_i & 0 \end{bmatrix} \begin{bmatrix} w_i \\ \mu_i \end{bmatrix} = \begin{bmatrix} r_i \\ 0 \end{bmatrix}, \tag{14} $$

where $K_i$ denotes the substructure local stiffness matrix, obtained by the subassembly of element matrices only of elements in substructure $i$, matrix $C_i$ represents constraints on subdomain $i$ that enforce zero values of coarse degrees of freedom, $\mu_i$ is a vector of Lagrange multipliers, and $r_i$ is the weighted residual $Q^T r$ restricted to subdomain $i$.

Matrix $K_i$ is singular for floating subdomains (subdomains not touching the Dirichlet boundary), while the augmented matrix of problem (14) is regular and may be factorized. Matrix $C_i$ contains both constraints enforcing continuity at corners (single point continuity) and constraints enforcing equality of averages over edges and faces of subdomains. The former type corresponds to just one nonzero entry, equal to 1, on a row of $C_i$, while the latter leads to several nonzero entries on a row. This structure will be exploited in the following section.

Problem (14) is solved in each iteration of the PCG method to find the correction from substructure $i$. However, the matrix of (14) is also used before the iteration process starts, to construct the local subdomain matrix of the coarse problem. First, the coarse basis functions are found independently for each subdomain as the solution to

$$ \begin{bmatrix} K_i & C_i^T \\ C_i & 0 \end{bmatrix} \begin{bmatrix} \Phi_i \\ \Lambda_i \end{bmatrix} = \begin{bmatrix} 0 \\ I \end{bmatrix}. \tag{15} $$

This is a problem with multiple right-hand sides, where $\Phi_i$ is a matrix of coarse basis functions with several columns, each corresponding to one coarse degree of freedom on the subdomain, and $\Lambda_i$ is the matrix of the corresponding Lagrange multipliers. These functions have value 0 at all coarse degrees of freedom except one, where they have value 1, and they have minimal energy on the subdomain outside coarse degrees of freedom. The identity block $I$ has the dimension of the number of constraints on the subdomain.

Once $\Phi_i$ is known, the subdomain coarse matrix is constructed as

$$ K_{Ci} = \Phi_i^T K_i \Phi_i. \tag{16} $$

Matrices $K_{Ci}$ are then assembled to form the global coarse matrix $K_C$. This procedure is the same as the standard process of assembly in finite element solution, with subdomains playing the role of elements, coarse degrees of freedom on a subdomain representing degrees of freedom on an element, and matrix $K_{Ci}$ representing the element stiffness matrix.
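The construction (15) and (16) can be sketched for a single floating subdomain. This is an illustration under stated assumptions: NumPy with a dense solve of the whole saddle point matrix (not the frontal solver), and a hypothetical 1D "subdomain" whose Neumann stiffness matrix is singular like that of a floating subdomain, with two corners at its ends and one average over its interior nodes. The function names are hypothetical. The sketch also checks the identity $K_{Ci} = -\Lambda_i$, which follows from the first block row of (15), since $\Phi_i^T K_i \Phi_i = -(C_i \Phi_i)^T \Lambda_i = -\Lambda_i$.

```python
import numpy as np

def neumann_laplacian_1d(n):
    """Singular stiffness matrix of a floating 1D subdomain (constants in its kernel)."""
    K = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    K[0, 0] = K[-1, -1] = 1.0
    return K

def coarse_basis(K, C):
    """Solve (15) for Phi and Lambda; return them with K_C = Phi^T K Phi from (16)."""
    n, m = K.shape[0], C.shape[0]
    saddle = np.block([[K, C.T], [C, np.zeros((m, m))]])
    rhs = np.vstack([np.zeros((n, m)), np.eye(m)])
    sol = np.linalg.solve(saddle, rhs)
    Phi, Lam = sol[:n], sol[n:]
    return Phi, Lam, Phi.T @ K @ Phi

n = 6
K = neumann_laplacian_1d(n)
C = np.zeros((3, n))
C[0, 0] = 1.0          # corner at the left end
C[1, n - 1] = 1.0      # corner at the right end
C[2, 1:n - 1] = 0.25   # arithmetic average over the four interior nodes
Phi, Lam, K_C = coarse_basis(K, C)
```

A constant (here the only rigid) motion has zero energy, so $K_{Ci}$ annihilates the vector of ones and inherits the singularity of $K_i$, while the saddle point matrix of (15) stays regular.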

Problem (12) is now

$$ K_C u_C = r_C, \tag{17} $$

where $r_C$ is the global coarse residual obtained by the assembly of the subdomain contributions of the form $\Phi_i^T r_i$.

The coarse solution $u_C$ has the dimension of the number of all coarse degrees of freedom. So, to add the correction to subdomain problems, we first have to restrict it to the coarse degrees of freedom on each subdomain, obtaining $u_{Ci}$, and to interpolate it to the whole subdomain by $\Phi_i u_{Ci}$. By extending $\Phi_i u_{Ci}$ and $w_i$ by zero to other subdomains, these can be summed over the subdomains to form the final vector $w = \sum_{i=1}^{N} \left( \Phi_i u_{Ci} + w_i \right)$. Finally, the preconditioned residual is obtained as $Q w$.

It is worth noticing that in the case of no constraints on averages, i.e., using only coarse unknowns for the definition of the coarse space, matrix $K_C$ of problem (17) is simply the Schur complement of the matrix $\tilde{A}$ with respect to the coarse unknowns. This fact was pointed out in [11]. If additional coarse degrees of freedom are added for averages, they correspond to new explicit unknowns in $K_C$.
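For a single subdomain with corner constraints only, the statement can be checked locally: the coarse basis is the discrete harmonic extension of unit corner values, and $\Phi^T K \Phi$ coincides with the Schur complement of $K$ onto the corner unknowns. The sketch assumes NumPy and a hypothetical floating 1D subdomain as a stand-in; it illustrates the subdomain-level identity, not the global statement about $\tilde{A}$.

```python
import numpy as np

n = 6
K = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
K[0, 0] = K[-1, -1] = 1.0            # floating 1D subdomain (singular K)
corners = np.array([0, n - 1])
f_idx = np.setdiff1d(np.arange(n), corners)   # the "f" block: non-corner unknowns

Kff, Kfc = K[np.ix_(f_idx, f_idx)], K[np.ix_(f_idx, corners)]
Kcf, Kcc = K[np.ix_(corners, f_idx)], K[np.ix_(corners, corners)]

# Coarse basis with corner constraints only: unit corner values, minimal energy elsewhere.
Phi = np.zeros((n, len(corners)))
Phi[corners] = np.eye(len(corners))
Phi[f_idx] = -np.linalg.solve(Kff, Kfc)

K_C = Phi.T @ K @ Phi
schur = Kcc - Kcf @ np.linalg.solve(Kff, Kfc)
print(np.allclose(K_C, schur))       # True
```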

Obviously, several mapping operators among various spaces are needed in the implementation, defining the embedding of subdomains into the global problem, of the local subdomain coarse problems into the global coarse problem, etc. For the sake of brevity, we have described them in words rather than defining them formally; rigorous definitions of these operators can be found in [6, 12].

3 BDDC implementation based on frontal solver

The frontal solver implements the solution of a square linear system with some of the variables having prescribed values. Equations that correspond to the fixed variables are omitted, and the values of these variables are substituted into the solution vector directly. The output of the solver consists of the solution and the resulting imbalance in the omitted equations, called reaction forces. More precisely, consider a block decomposition of the vector of unknowns $x = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$ with the second block consisting of all fixed variables, and write the system matrix $A$ with the same block decomposition (here, the decomposition is different from the one in Section 2). Then on exit from the frontal solver,

$$ \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 + r_2 \end{bmatrix}, \tag{18} $$

where the fixed variable values $x_2$ and the load vectors $b_1$ and $b_2$ are the inputs, while the solution $x_1$ and the reaction $r_2$ are the outputs. Element stiffness matrices are input instead of the whole matrix, and their assembly is done simultaneously with the factorization inside the frontal solver.
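To make the interface (18) concrete, here is a dense stand-in, assuming NumPy; the function name `solve_with_fixed` is hypothetical. A real frontal solver would assemble element matrices on the fly and eliminate variables as they leave the front rather than form and solve the whole matrix. The right-hand side may be a vector or a matrix of several columns, since multiple right-hand sides are used later.

```python
import numpy as np

def solve_with_fixed(A, B, fixed, X_fixed):
    """Dense stand-in for (18): returns the full solution X and the reactions
    R = A21 X1 + A22 X2 - B2 at the fixed variables."""
    n = A.shape[0]
    fixed = np.asarray(fixed, dtype=int)
    free = np.setdiff1d(np.arange(n), fixed)
    X = np.zeros((n,) + np.shape(B)[1:])
    X[fixed] = X_fixed
    # Equations of fixed variables are omitted; their values move to the right-hand side.
    X[free] = np.linalg.solve(A[np.ix_(free, free)],
                              B[free] - A[np.ix_(free, fixed)] @ X[fixed])
    # Reactions: the imbalance in the omitted equations.
    R = A[fixed] @ X - B[fixed]
    return X, R

A = 2 * np.eye(5) - np.eye(5, k=1) - np.eye(5, k=-1)
b = np.ones(5)
x, r = solve_with_fixed(A, b, [0, 4], [0.0, 0.0])
```

If the prescribed values happen to equal the exact solution, the reactions vanish; otherwise they measure how far the fixed values are from equilibrium.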

The key idea of this section is to split the constraints in matrix $C_i$ and to handle them in different ways. The constraints enforcing zero values at corners will be enforced by treating those unknowns as fixed variables, while the remaining constraints, corresponding to averages and denoted by $C$ below, will still be enforced using Lagrange multipliers.

In the rest of this section, we drop the subdomain subscript $i$ and write subdomain vectors in the block form $w = \begin{bmatrix} w_f \\ w_c \end{bmatrix}$, with the second block consisting of the unknowns that are involved in coarse degrees of freedom at corners (i.e., the coarse unknowns), denoted by the subscript $c$, and the first block consisting of the remaining degrees of freedom, denoted by the subscript $f$. The vector of the coarse degrees of freedom given by averages is written as $C w$, where each row of $C$ contains the coefficients of the average that makes up that degree of freedom. Then subdomain vectors $w \in W_i$ are characterized by $w_c = 0$, $C w = 0$. Assume that $C = \begin{bmatrix} C_f & C_c \end{bmatrix}$ with $C_c = 0$, that is, the averages do not involve the single-variable coarse degrees of freedom; then $C w = C_f w_f$. The subdomain stiffness matrix $K$ is singular for floating subdomains, but the block $K_{ff}$ is nonsingular if there are enough corners to eliminate the rigid body motions, which will be assumed.

We now show how to solve (14) – (17) using the frontal solver. In the case when there are no averages as coarse degrees of freedom, we recover the previous method from [3, 16].

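The local substructure problems (13) are written in the frontal solver form (18) as

$$ \begin{bmatrix} K_{ff} & K_{fc} & C_f^T \\ K_{cf} & K_{cc} & 0 \\ C_f & 0 & 0 \end{bmatrix} \begin{bmatrix} w_f \\ w_c \\ \mu \end{bmatrix} = \begin{bmatrix} r_f \\ \rho_c \\ 0 \end{bmatrix}, \tag{19} $$

where $w_c = 0$, $r_f$ is the part in the $f$ block of the residual in the PCG method distributed to the substructures by the operator $Q^T$, and $\rho_c$ is the reaction. The constraint $w_c = 0$ is enforced by marking the unknowns $w_c$ as fixed, while the remaining constraints $C_f w_f = 0$ are enforced via the Lagrange multiplier $\mu$. Using the fact that $w_c = 0$, we get from (19) that

$$ K_{ff} w_f + C_f^T \mu = r_f, \tag{20} $$

$$ K_{cf} w_f = \rho_c, \tag{21} $$

$$ C_f w_f = 0. \tag{22} $$

From (20), $w_f = K_{ff}^{-1} \left( r_f - C_f^T \mu \right)$. Now, substituting into (22), we get the problem for the Lagrange multiplier $\mu$,

$$ C_f K_{ff}^{-1} C_f^T \mu = C_f K_{ff}^{-1} r_f. \tag{23} $$

The matrix $C_f K_{ff}^{-1} C_f^T$ is dense but small, with the order equal to the number of averages on the subdomain, and it is constructed by solving the system $K_{ff} X = C_f^T$ with multiple right-hand sides by the frontal solver and then computing the product $C_f X$. The right-hand side of (23) requires one more solve with $K_{ff}$, again by the frontal solver with $w_c = 0$ fixed. After solving problem (23), we substitute for $\mu$ in (20) and find $w_f$ from

$$ \begin{bmatrix} K_{ff} & K_{fc} \\ K_{cf} & K_{cc} \end{bmatrix} \begin{bmatrix} w_f \\ w_c \end{bmatrix} = \begin{bmatrix} r_f - C_f^T \mu \\ \rho_c \end{bmatrix} \tag{24} $$

by the frontal solver, considering $w_c = 0$ fixed. The factorization in the frontal solver for (24) and the factorization of the matrix $C_f K_{ff}^{-1} C_f^T$ for (23) need to be computed only once, in the setup phase.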
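The two-stage solution (23) and (24) can be sketched and checked against a direct solve of the saddle point problem (14). Assumptions: NumPy, a dense stand-in `solve_with_fixed` for the frontal solver interface (18), and a hypothetical floating 1D subdomain with two corners and two averages; `substructure_correction` is an illustrative name, not part of any library.

```python
import numpy as np

def solve_with_fixed(A, B, fixed, X_fixed):
    """Dense stand-in for the frontal solver interface (18): solution and reactions."""
    n = A.shape[0]
    fixed = np.asarray(fixed, dtype=int)
    free = np.setdiff1d(np.arange(n), fixed)
    X = np.zeros((n,) + np.shape(B)[1:])
    X[fixed] = X_fixed
    X[free] = np.linalg.solve(A[np.ix_(free, free)],
                              B[free] - A[np.ix_(free, fixed)] @ X[fixed])
    return X, A[fixed] @ X - B[fixed]

def substructure_correction(K, r, corners, C_avg):
    """Substructure correction via (23)-(24): corners fixed at zero, averages
    (rows of C_avg, zero in corner columns) enforced by Lagrange multipliers."""
    n = K.shape[0]
    corners = np.asarray(corners, dtype=int)
    f = np.setdiff1d(np.arange(n), corners)
    Cf, Kff = C_avg[:, f], K[np.ix_(f, f)]
    G = Cf @ np.linalg.solve(Kff, Cf.T)                        # C_f K_ff^{-1} C_f^T
    mu = np.linalg.solve(G, Cf @ np.linalg.solve(Kff, r[f]))   # (23)
    b = np.zeros(n)
    b[f] = r[f] - Cf.T @ mu                                    # right-hand side of (24)
    w, _ = solve_with_fixed(K, b, corners, np.zeros(len(corners)))  # (24), reaction unused
    return w, mu

n = 8
K = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
K[0, 0] = K[-1, -1] = 1.0                  # floating subdomain: K is singular
corners = [0, n - 1]
C_avg = np.zeros((2, n))
C_avg[0, 1:4] = 1.0 / 3.0                  # average over nodes 1..3
C_avg[1, 4:7] = 1.0 / 3.0                  # average over nodes 4..6
r = np.random.default_rng(0).standard_normal(n)
w, mu = substructure_correction(K, r, corners, C_avg)
```

The sketch recomputes the solves with $K_{ff}$ for brevity; in the method described above, both factorizations are computed once in the setup and reused in every iteration.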

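The coarse problem (17) is solved by the frontal solver just like a finite element problem, with the subdomains playing the role of elements. It only remains to specify the basis functions of $W_C$ on the subdomain from (15) and to compute the local subdomain coarse matrix (16) efficiently. Denote by $\begin{bmatrix} \Phi_{fc} \\ \Phi_{cc} \end{bmatrix}$ the matrix whose columns are the coarse basis functions associated with the coarse unknowns at corners, and by $\begin{bmatrix} \Phi_{fa} \\ \Phi_{ca} \end{bmatrix}$ the matrix made out of the coarse basis functions associated with averages. To find the coarse basis functions, we proceed similarly as in (19) and write the equations for the coarse basis functions in the frontal solver form, now with multiple right-hand sides,

$$ \begin{bmatrix} K_{ff} & K_{fc} & C_f^T \\ K_{cf} & K_{cc} & 0 \\ C_f & 0 & 0 \end{bmatrix} \begin{bmatrix} \Phi_{fc} & \Phi_{fa} \\ \Phi_{cc} & \Phi_{ca} \\ \Lambda_c & \Lambda_a \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ R_c & R_a \\ 0 & I \end{bmatrix}, \qquad \Phi_{cc} = I, \quad \Phi_{ca} = 0, \tag{25} $$

where $R_c$ and $R_a$ are matrices of reactions. Denote $\Phi_f = \begin{bmatrix} \Phi_{fc} & \Phi_{fa} \end{bmatrix}$, $\Phi_c = \begin{bmatrix} I & 0 \end{bmatrix}$, $\Lambda = \begin{bmatrix} \Lambda_c & \Lambda_a \end{bmatrix}$, $R = \begin{bmatrix} R_c & R_a \end{bmatrix}$, and $E = \begin{bmatrix} 0 & I \end{bmatrix}$. Then (25) becomes

$$ K_{ff} \Phi_f + K_{fc} \Phi_c + C_f^T \Lambda = 0, \tag{26} $$

$$ K_{cf} \Phi_f + K_{cc} \Phi_c = R, \tag{27} $$

$$ C_f \Phi_f = E. \tag{28} $$

From (26), we get $\Phi_f = -K_{ff}^{-1} \left( K_{fc} \Phi_c + C_f^T \Lambda \right)$. Substituting into (28), we derive the problem for the Lagrange multipliers

$$ C_f K_{ff}^{-1} C_f^T \Lambda = -E - C_f K_{ff}^{-1} K_{fc} \Phi_c, \tag{29} $$

which is solved for $\Lambda$ as a system with multiple right-hand sides. Since $\Lambda$ is now known, we can use the frontal solver to solve (26) and (27) to find $\Phi_f$ and $R$:

$$ \begin{bmatrix} K_{ff} & K_{fc} \\ K_{cf} & K_{cc} \end{bmatrix} \begin{bmatrix} \Phi_f \\ \Phi_c \end{bmatrix} = \begin{bmatrix} -C_f^T \Lambda \\ R \end{bmatrix}, \tag{30} $$

considering $\Phi_c$ fixed. Finally, we construct the local coarse matrix corresponding to the subdomain as

$$ K_{Ci} = \Phi^T K \Phi = -\Phi_f^T C_f^T \Lambda + \Phi_c^T R = \Phi_c^T R - E^T \Lambda, \tag{31} $$

where $\Phi = \begin{bmatrix} \Phi_f \\ \Phi_c \end{bmatrix}$; the last equality follows from (28).

At the end of the setup phase, the matrix $K_C$ of the coarse problem is factored by the frontal solver, using the subdomain coarse matrices $K_{Ci}$ as input.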
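The steps (29) to (31) can be sketched and compared with a direct solve of (15). Assumptions: NumPy, a dense multi-right-hand-side stand-in `solve_with_fixed` for the frontal solver interface (18), and a hypothetical floating 1D subdomain with two corners and two averages; coarse degrees of freedom are ordered corners first, then averages. The name `coarse_basis_frontal` is illustrative only.

```python
import numpy as np

def solve_with_fixed(A, B, fixed, X_fixed):
    """Dense stand-in for (18), several right-hand sides: solution and reactions."""
    n = A.shape[0]
    fixed = np.asarray(fixed, dtype=int)
    free = np.setdiff1d(np.arange(n), fixed)
    X = np.zeros((n,) + np.shape(B)[1:])
    X[fixed] = X_fixed
    X[free] = np.linalg.solve(A[np.ix_(free, free)],
                              B[free] - A[np.ix_(free, fixed)] @ X[fixed])
    return X, A[fixed] @ X - B[fixed]

def coarse_basis_frontal(K, corners, C_avg):
    """Coarse basis Phi and local coarse matrix via (29)-(31)."""
    n = K.shape[0]
    corners = np.asarray(corners, dtype=int)
    f = np.setdiff1d(np.arange(n), corners)
    nc, na = len(corners), C_avg.shape[0]
    Cf, Kff, Kfc = C_avg[:, f], K[np.ix_(f, f)], K[np.ix_(f, corners)]
    Phi_c = np.hstack([np.eye(nc), np.zeros((nc, na))])    # corner values [I 0]
    E = np.hstack([np.zeros((na, nc)), np.eye(na)])         # average values [0 I]
    G = Cf @ np.linalg.solve(Kff, Cf.T)                     # C_f K_ff^{-1} C_f^T
    Lam = np.linalg.solve(G, -E - Cf @ np.linalg.solve(Kff, Kfc @ Phi_c))  # (29)
    B = np.zeros((n, nc + na))
    B[f] = -Cf.T @ Lam                                      # f block of (30)
    Phi, R = solve_with_fixed(K, B, corners, Phi_c)         # (30), Phi_c fixed
    K_C = Phi_c.T @ R - E.T @ Lam                           # (31)
    return Phi, K_C

n = 8
K = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
K[0, 0] = K[-1, -1] = 1.0
corners = [0, n - 1]
C_avg = np.zeros((2, n))
C_avg[0, 1:4] = 1.0 / 3.0
C_avg[1, 4:7] = 1.0 / 3.0
Phi, K_C = coarse_basis_frontal(K, corners, C_avg)
```

Only the small matrix $C_f K_{ff}^{-1} C_f^T$ is dense here; the corner constraints never enter a Lagrange multiplier system, which is the point of splitting the constraints.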

4 The algorithm

The setup starts with two types of factorization of the system matrix by the frontal algorithm, which differ only in the set of fixed variables. In the first factorization, all interface unknowns are prescribed as fixed, for the solution of problems (5) and (7). In the second factorization, only the coarse unknowns are fixed, for the solution of problems (24) and (30). Both factorizations are done subdomain by subdomain, so each interface unknown is represented by its own instance in every subdomain that contains it. This naturally leads to parallelization by subdomains. The main steps of the algorithm are then as follows.

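(A) Solve (6) in parallel on every subdomain for $u_{o1}$, and obtain $g$ from the reaction, using the frontal solver with the interface unknowns fixed at zero (the contributions from the subdomains are summed at the shared interface unknowns),

$$ \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix} \begin{bmatrix} u_{o1} \\ 0 \end{bmatrix} = \begin{bmatrix} f_1 \\ f_2 - g \end{bmatrix}. \tag{32} $$

(B) Solve (10) for $u_{\Gamma 2}$ using PCG with the BDDC preconditioner (see below for details).

(C) Compute the solution $u$ from (5) using the frontal solver with the interface values $u_{\Gamma 2}$ fixed; the interior part returned by the solver is $u_{o1} + u_{\Gamma 1}$.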
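A compact sketch of steps (A) to (C), assuming NumPy, with a hypothetical dense stand-in `solve_with_fixed` for the frontal solver and a single global matrix instead of subdomain-by-subdomain factorizations. Step (B) is replaced by a direct solve with an explicit Schur complement purely to keep the example short; the name `three_step_solve` and the test matrix are illustrative. It shows how the reaction of (32) delivers $g$ and how step (C) recovers the full solution.

```python
import numpy as np

def solve_with_fixed(A, B, fixed, X_fixed):
    """Dense stand-in for the frontal solver interface (18): solution and reactions."""
    n = A.shape[0]
    fixed = np.asarray(fixed, dtype=int)
    free = np.setdiff1d(np.arange(n), fixed)
    X = np.zeros((n,) + np.shape(B)[1:])
    X[fixed] = X_fixed
    X[free] = np.linalg.solve(A[np.ix_(free, free)],
                              B[free] - A[np.ix_(free, fixed)] @ X[fixed])
    return X, A[fixed] @ X - B[fixed]

def three_step_solve(A, f, interface):
    """Steps (A)-(C) on one global matrix; (B) is a direct-solve stand-in."""
    n, m = A.shape[0], len(interface)
    interior = np.setdiff1d(np.arange(n), interface)
    # (A): interface fixed at zero; the reaction equals -g, cf. (32).
    _, reaction = solve_with_fixed(A, f, interface, np.zeros(m))
    g = -reaction
    # (B) stand-in: explicit Schur complement and a direct solve instead of PCG + BDDC.
    A11 = A[np.ix_(interior, interior)]
    S = A[np.ix_(interface, interface)] - A[np.ix_(interface, interior)] @ np.linalg.solve(
        A11, A[np.ix_(interior, interface)])
    u_G2 = np.linalg.solve(S, g)
    # (C): interface fixed at u_G2; the interior part returned is u_o1 + u_G1.
    u, _ = solve_with_fixed(A, f, interface, u_G2)
    return u, g

n = 9
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
f = np.arange(1.0, n + 1.0)
interface = [3, 7]        # hypothetical interface unknowns separating three pieces
u, g = three_step_solve(A, f, interface)
```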

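Step (B) in detail follows. Before starting the cycle of PCG iterations, compute in advance:

- on every subdomain, the matrix $C_f K_{ff}^{-1} C_f^T$ of (23) and (29), and its factorization;
- on every subdomain, the coarse basis functions $\Phi$ from (29) and (30);
- on every subdomain, the local coarse matrix $K_{Ci}$ from (31);
- the factorization of matrix $K_C$ of (17) using the frontal solver with the local coarse matrices $K_{Ci}$ as 'element' matrices.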

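Then in every PCG iteration, compute the preconditioned residual $Q w$ from the residual $r$ in three steps:

1. Compute $Q^T r$ by distributing the residual $r$ on the interface among neighbouring subdomains. For every subdomain, compute $r_i$ as the restriction of $Q^T r$ to that subdomain.
2. Compute $w$ as the sum of all substructure corrections $w_i$ and the coarse correction. These can be computed in parallel for every $i$:
   - the substructure correction $w_i$ from (23) and (24);
   - the coarse correction $\Phi_i u_{Ci}$, where $u_C$ is the solution of (17) with the right-hand side $r_C$ assembled from the contributions $\Phi_i^T r_i$.

   We are interested in the values of $w$ only on the interface.
3. Compute the interface part of $Q w$ at every interface node as a weighted average of the values of $w$ at that node (neighbouring subdomains generally have different values of $w$ at corresponding interface nodes).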
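The three steps define the action $r \mapsto Q w$ of the preconditioner; PCG needs it only as a callback, next to the product $S p$. The following generic PCG sketch assumes NumPy and a relative residual stopping test with a hypothetical tolerance `tol`. For the demonstration only, a diagonal (Jacobi) preconditioner and an explicit random SPD matrix stand in for BDDC and $S$; `pcg`, `apply_S`, and `apply_M` are hypothetical names.

```python
import numpy as np

def pcg(apply_S, g, apply_M, tol=1e-10, maxiter=500):
    """Preconditioned conjugate gradients for S u = g, starting from u = 0.

    apply_S(p) returns S p and apply_M(r) returns the preconditioned residual;
    both S and M are assumed symmetric positive definite."""
    u = np.zeros_like(g, dtype=float)
    r = g - apply_S(u)
    z = apply_M(r)
    p = z.copy()
    rz = r @ z
    g_norm = np.linalg.norm(g)
    for it in range(1, maxiter + 1):
        Sp = apply_S(p)                   # in the text: the reaction of (34)
        alpha = rz / (p @ Sp)
        u += alpha * p
        r -= alpha * Sp
        if np.linalg.norm(r) <= tol * g_norm:
            return u, it
        z = apply_M(r)                    # in the text: steps 1-3 of the BDDC preconditioner
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return u, maxiter

# Demonstration with stand-ins: an explicit SPD matrix for S and Jacobi for M.
rng = np.random.default_rng(1)
B = rng.standard_normal((20, 20))
S = B @ B.T + 20 * np.eye(20)
g = rng.standard_normal(20)
d = np.diag(S).copy()
u, iters = pcg(lambda p: S @ p, g, lambda r: r / d)
```

Swapping the Jacobi callback for the three-step BDDC action changes nothing in the PCG loop itself, which is why the preconditioner can be developed as a separate component.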

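Note that in every iteration of PCG, the product $S p$ is needed, where $p$ is a search direction. This product is computed as the reaction returned by the frontal solver with the interface values $p$ fixed and zero load,

$$ \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix} \begin{bmatrix} v_1 \\ p \end{bmatrix} = \begin{bmatrix} 0 \\ S p \end{bmatrix}. \tag{34} $$

The interior part $v_1$ is computed only as a by-product, and it is not used in the PCG iterations.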
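The matrix-free product (34) can be sketched as follows, assuming NumPy and a dense solve with $A_{11}$ in place of the frontal solver's stored factorization; `apply_S_by_reaction` and the random SPD test matrix are hypothetical. The Schur complement $S$ is never formed: each product costs one interior solve, which splits into independent solves per subdomain because $A_{11}$ is block diagonal.

```python
import numpy as np

def apply_S_by_reaction(A, interior, interface, p):
    """S p as the reaction of (34): interface fixed to p, zero load (dense stand-in)."""
    A11 = A[np.ix_(interior, interior)]
    A12 = A[np.ix_(interior, interface)]
    A21 = A[np.ix_(interface, interior)]
    A22 = A[np.ix_(interface, interface)]
    v1 = np.linalg.solve(A11, -A12 @ p)   # interior by-product, not used by PCG
    return A21 @ v1 + A22 @ p             # reaction at the fixed interface unknowns

rng = np.random.default_rng(2)
B = rng.standard_normal((10, 10))
A = B @ B.T + 10 * np.eye(10)
interior, interface = list(range(7)), [7, 8, 9]
p = rng.standard_normal(3)
Sp = apply_S_by_reaction(A, interior, interface, p)
```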

5 Numerical results

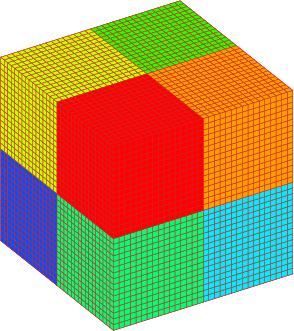

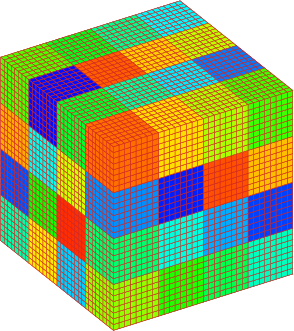

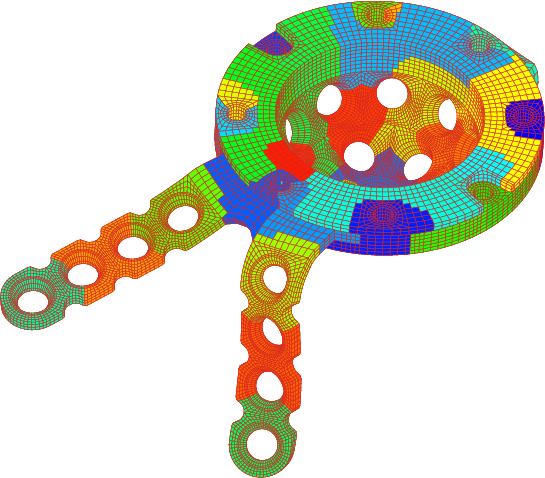

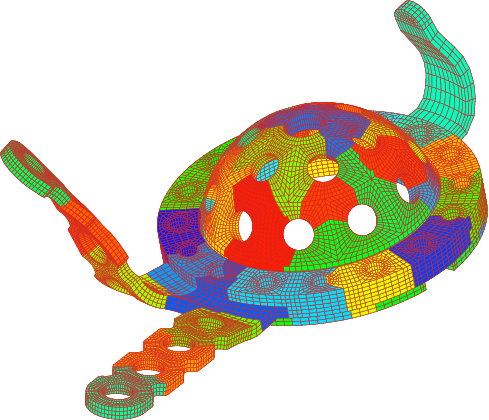

The implementation was first tested on the problem of unit cube, a classical test problem of domain decomposition methods. In our case, the cube is made of steel with Young’s modulus Pa and Poisson’s ratio . The cube is fixed at one face and loaded by the force of N, acting on one edge opposite to the fixed face in direction parallel to it and pointing outwards of the cube. The mesh consists of trilinear elements. It was uniformly divided into and subdomains, resulting in and , respectively (here denotes the characteristic size of subdomains and the characteristic size of elements). These divisions are presented in Figure 1. The interface is initially divided into corners, edges, and faces in the case of subdomains, and into corners, edges, and faces in the case of subdomains.

All experiments with this problem were computed on 8 1.5 GHz Intel Itanium 2 processors of SGI Altix 4700 computer in CTU Supercomputing Centre, Prague. The stopping criterion of PCG was chosen as . In the presented results, an external parallel multifrontal solver MUMPS [1] was used for the factorization and solution of the coarse problem (17), instead of the serial frontal solver.

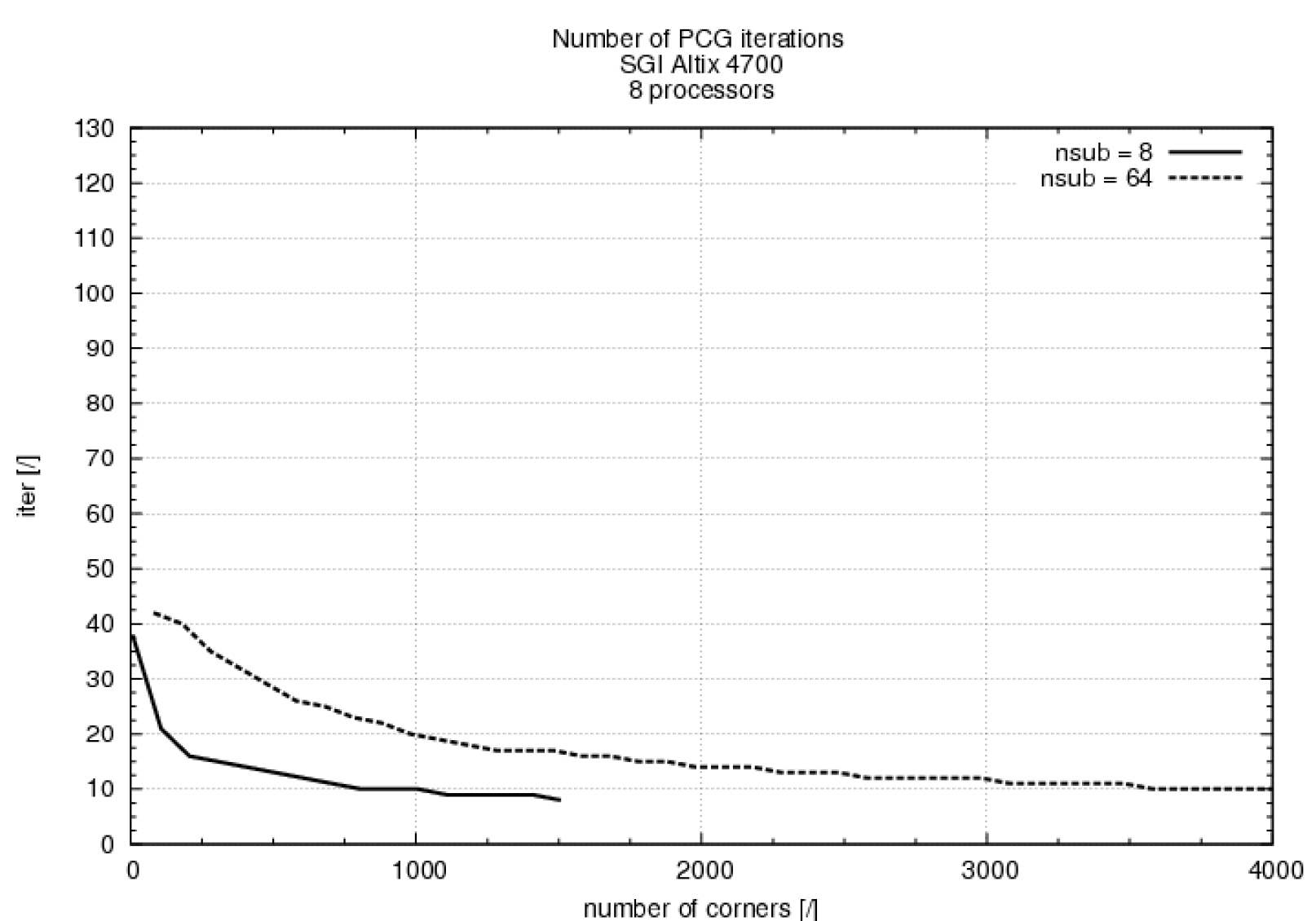

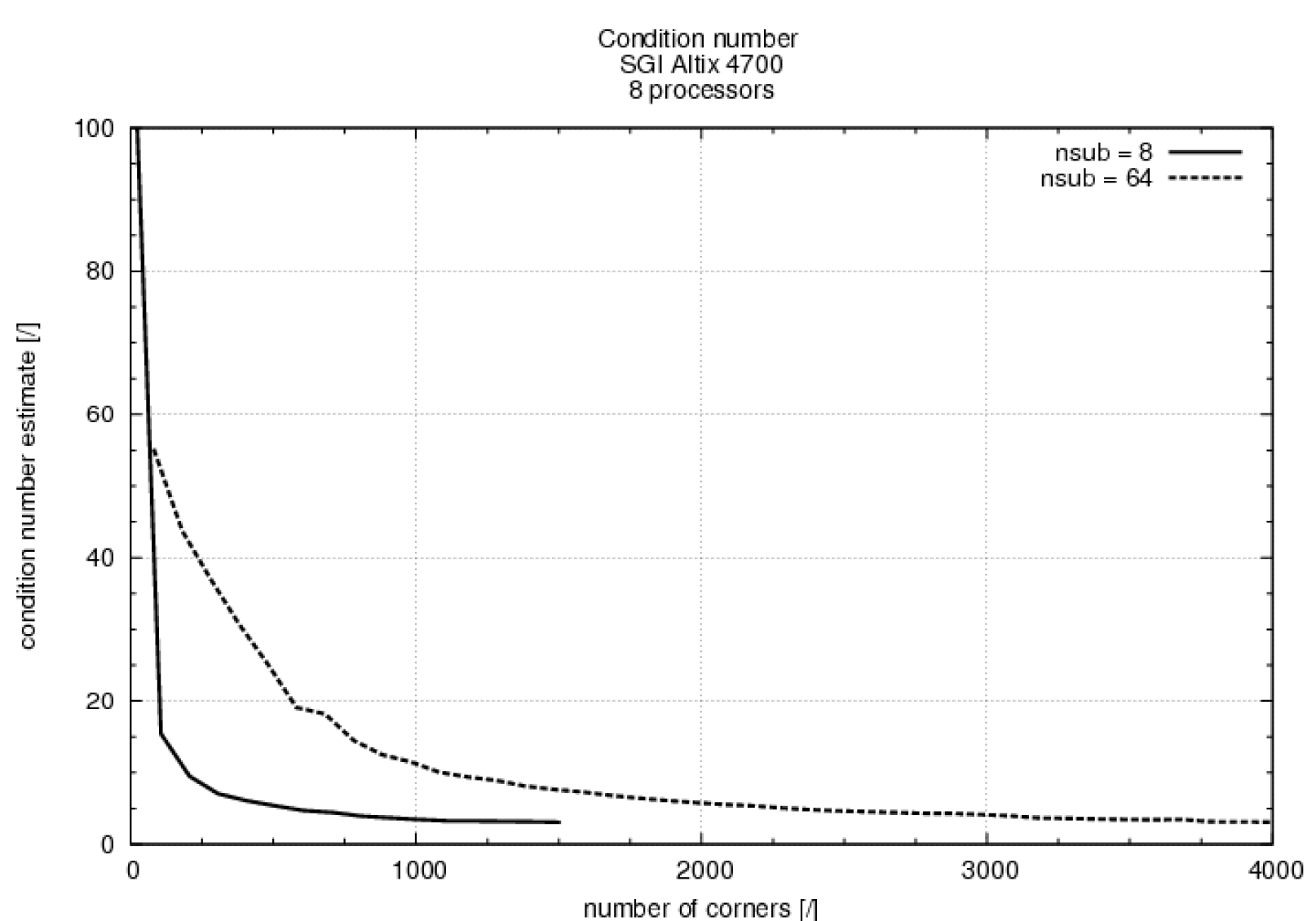

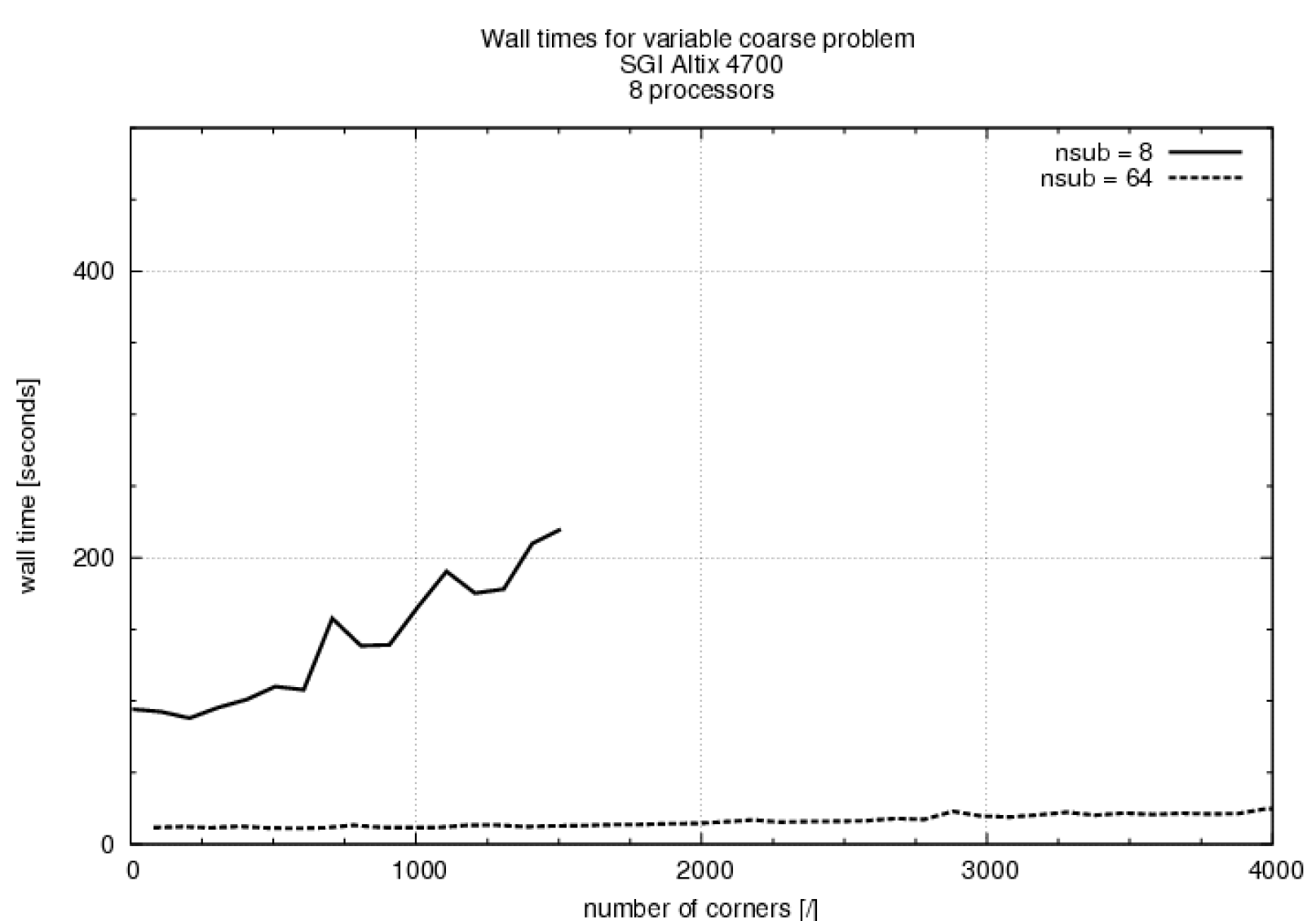

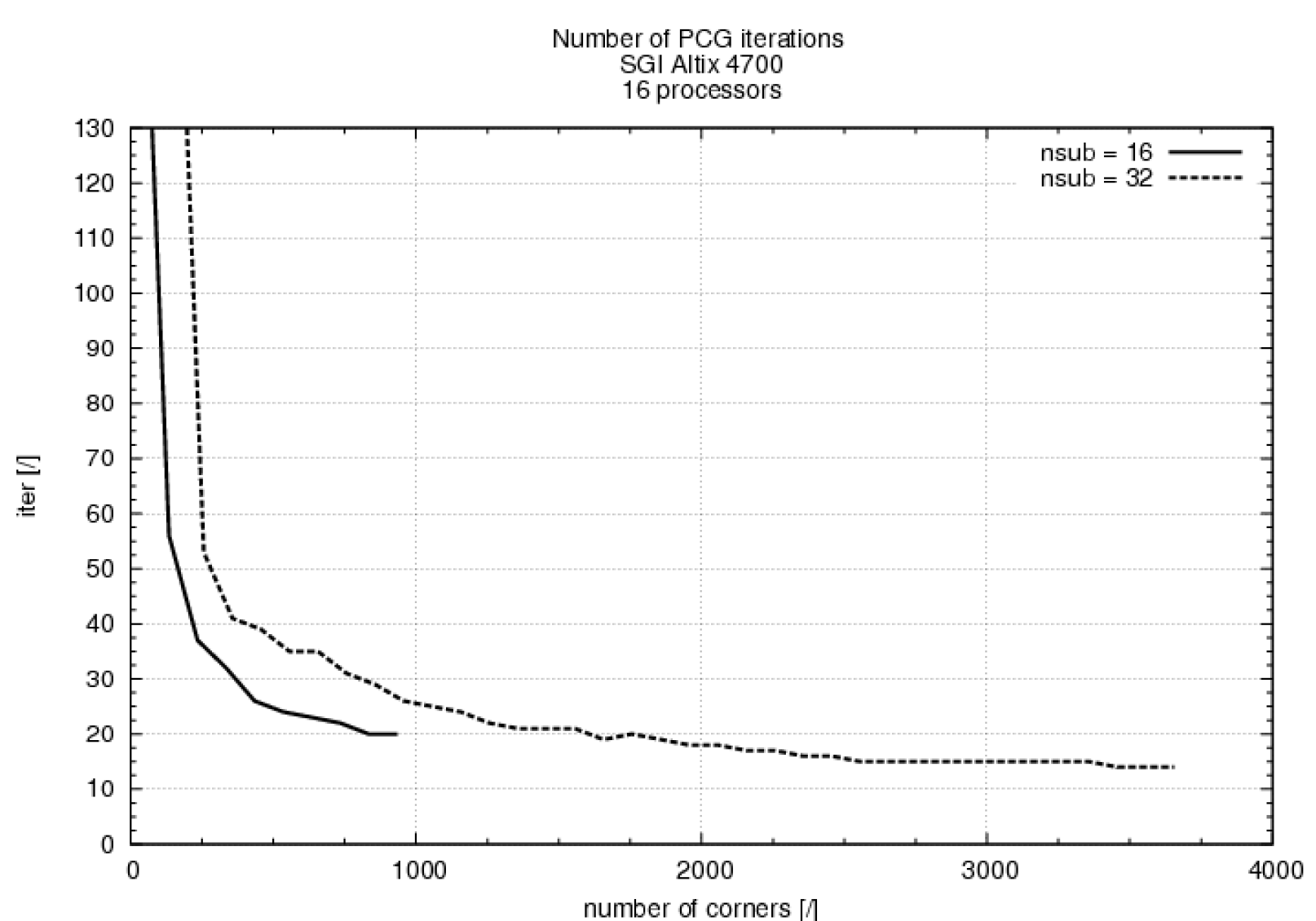

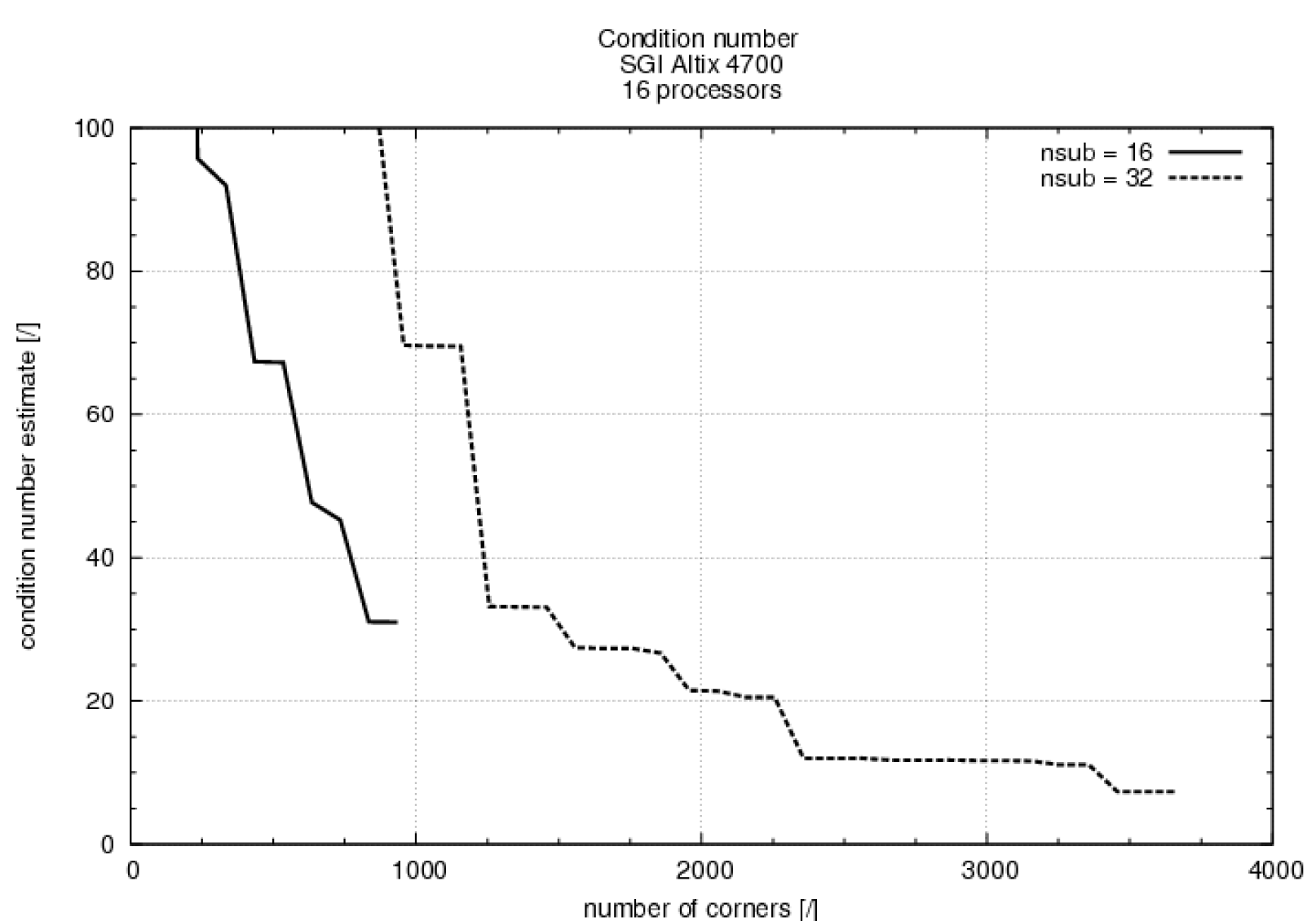

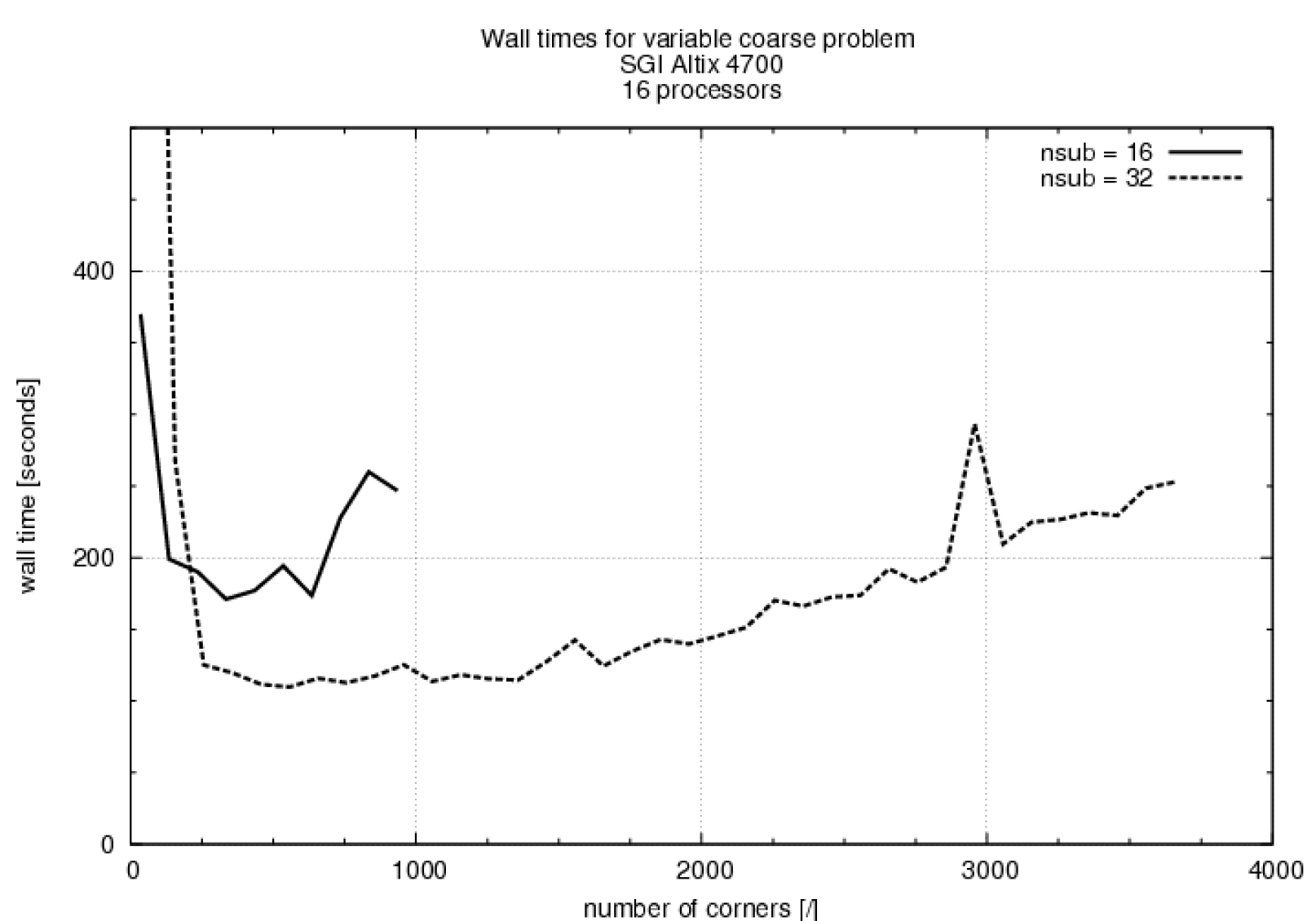

The first experiment compares two ways of enriching the coarse space $W_C$, namely by adding point constraints on randomly selected variables on the substructure interfaces, i.e., adding more "corners" (Figs. 2–4), and by adding averages to the initial set of corners (Tables 1 and 2). In the first column of these tables, no additional averages are considered and only corners are used in the construction of $W_C$. In the remaining columns, we enforce the equality of arithmetic averages over all edges, over all faces, and over all edges and faces, respectively.

Although adding corners leads to an improvement of preconditioner in terms of the condition number (Fig. 3) and the number of iterations (Fig. 2), after a slight decrease early on the total computational time increases (Fig. 4) due to the added cost of the setup and factorization of the coarse problem. This effect is particularly pronounced with subdomains, where the cost of creating the coarse matrix dominates, as the frontal solver internally involves multiplication of large dense matrices to compute reactions: (30) takes for multiple right hand sides, where is the number of variables and is the number of coarse variables in subdomain . The problem divided into subdomains requires much less time than the problem with subdomains also due to the fact that the factorization time for subdomain problems grows fast with subdomain size. Note that for subdomains, the number of processors remains the same and each of the processors handles subdomains.

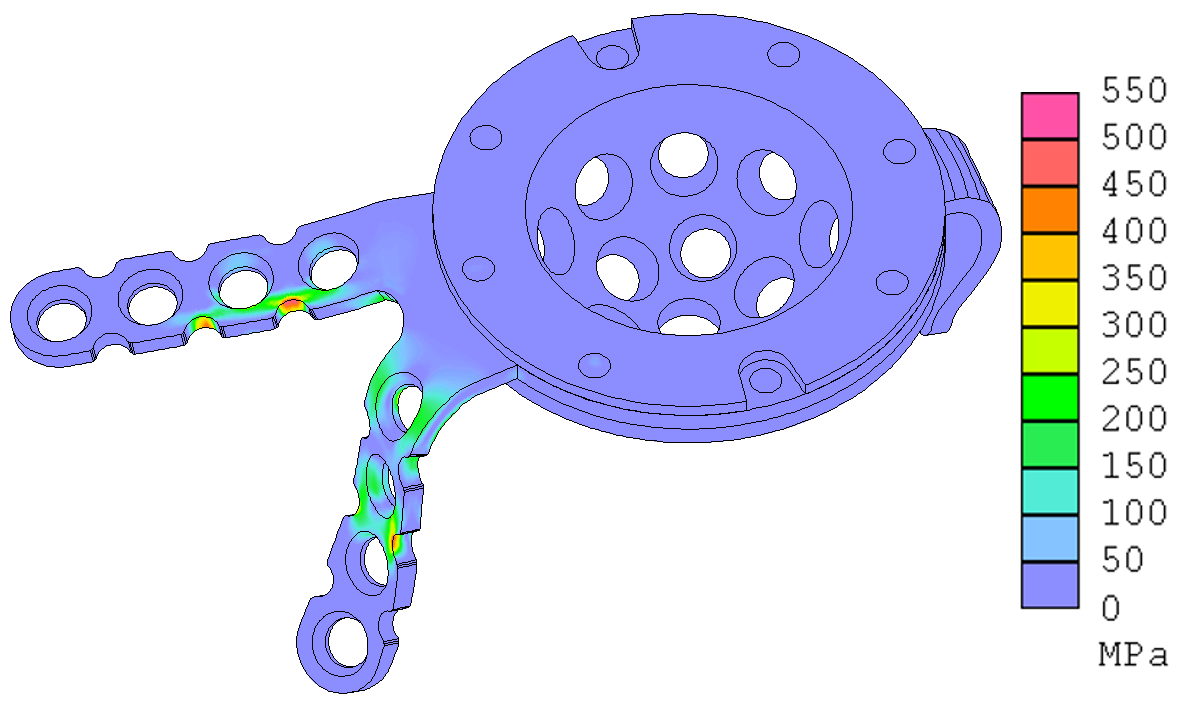

The structural analysis of the replacement of the hip joint construction loaded by pressure from body weight is an important problem in bioengineering. The hip replacement consists of several parts made of titanium; here we consider the central part of the replacement joint. The problem was simplified to stationary linearized elasticity. The highest stress was reached in the notches of the holders. In the original design, holders of the hip replacement had thickness of mm, which led to maximal von Mises stress about MPa. As the yield point of titanium is about MPa, the geometry of the construction had to be modified. The thickness of the holders was increased to 3 mm, the radii of the notches were increased, and the notches were made smaller, as in Fig. 5. The maximal von Mises stress on this new construction was only about 540 MPa, which satisfied the demands for the strength of the construction [5, 18]. The mesh consists of 33,186 quadratic elements resulting in 544,734 unknowns.

The computation takes 400 minutes with a serial frontal solver on a Compaq Alpha server ES47 at the Institute of Thermomechanics, Academy of Sciences of the Czech Republic. With 32 subdomains and corner coarse degrees of freedom only, BDDC on a single Alpha processor was ten times faster, taking only 40 minutes.

In this paper, we present results for decompositions into 16 and 32 subdomains obtained by the METIS graph partitioner [9]. In the case of 16 subdomains, the interface topology leads to 35 corners, 12 edges, and 35 faces, and in the case of 32 subdomains, to 57 corners, 12 edges, and 66 faces. All presented results were obtained on 16 processors of SGI Altix 4700. Again, the stopping criterion of PCG was chosen as . Also these results were obtained using MUMPS solver for the coarse problem solution.

We again investigate the two approaches to coarse space enrichment. The results of random addition of corners are presented in Figures 7 – 9. Unlike in the case of the cube, a substantial decrease of the total time is achieved. The optimal number of additional corners depends on the division into subdomains.

Results of adding averages are summarized in Table 3 for the case of 16 subdomains, and in Table 4 for the case of 32 subdomains, both with the initial set of corners.

The last experiment combines both approaches: we add averages to the optimal size of the set of corners, determined from Figure 9 as for the problem with subdomains, and for the problem with subdomains. These results are presented in Tables 5 and 6. We can see that this synergy can lead to the lowest overall time of the computation.

We observe that while the implementation of averages leads to a negligible increase in the computational cost of the factorizations, it considerably improves the condition number and thus reduces the overall time of solution. Also, the decomposition into 32 subdomains leads to significantly lower computational times than the division into 16 subdomains. Note that for 32 subdomains (Table 4), although the initial set of corners leads to nonsingular local matrices and a nonsingular coarse matrix, and hence to a successful setup of the preconditioner, the iterations do not converge unless averages over faces are added.

6 Conclusion

We have presented an application of a standard frontal solver within the iterative substructuring method BDDC. The method was applied to a biomechanical stress analysis problem. The numerical results show that the improvement of preconditioning by additional constraints is significant and can lead to considerable savings of computational time, while the additional cost is negligible.

For a model problem (cube), constraints by averages on edges and faces are required for good performance, as predicted by the theory [10, 13], and additional point constraints (i.e., corners) are not productive. However, for the hip replacement problem (which is far from a regularly decomposed cube), additional point constraints result in significantly lower total computational time than the averages, and the best result is obtained by combining both the added point constraints and the averages.

For large problems and a large number of processors, load balancing will be essential [15].

Acknowledgement

This research has been supported by the Czech Science Foundation under grant 106/08/0403 and by the U.S. National Science Foundation under grant DMS-0713876. It has also been partly supported by the Czech Republic under projects MSM6840770001 and AV0Z20760514. A part of this work was done while Jakub Šístek was visiting at the University of Colorado Denver. Another part of the work was performed during the stay of Jakub Šístek and Marta Čertíková at Edinburgh Parallel Computing Center funded by HPC-Europa project (RII3-CT-2003-506079). The authors would also like to thank Bedřich Sousedík for his help in both typesetting and proofreading of the manuscript.

References

- [1] P. R. Amestoy, I. S. Duff, J.-Y. L’Excellent, Multifrontal parallel distributed symmetric and unsymmetric solvers, Comput. Methods Appl. Mech. Engrg. 184 (2000) 501–520.

- [2] S. C. Brenner, L.-Y. Sung, BDDC and FETI-DP without matrices or vectors, Comput. Methods Appl. Mech. Engrg. 196 (8) (2007) 1429–1435.

- [3] P. Burda, M. Čertíková, J. Novotný, J. Šístek, BDDC method with simplified coarse problem and its parallel implementation, in: Proceedings of MIS 2007, Josefuv Dul, Czech Republic, January 13–20, Matfyzpress, Praha, 2007, pp. 3–9. URL http://ulita.ms.mff.cuni.cz/pub/MIS/MIS2007.pdf

- [4] P. Concus, G. H. Golub, D. P. O’Leary, A generalized conjugate gradient method for the numerical solution of elliptic PDE, in: J. R. Bunch, D. J. Rose (eds.), Sparse Matrix Computations, Academic Press, New York, 1976, pp. 309–332.

- [5] M. Čertíková, J. Tuzar, B. Sousedík, J. Novotný, Stress computation of the hip joint replacement using the finite element method, in: Proceedings of Software a algoritmy numerické matematiky, Srní, Czech Republic, September, Charles University, Praha, 2005, pp. 31–40.

- [6] C. R. Dohrmann, A preconditioner for substructuring based on constrained energy minimization, SIAM J. Sci. Comput. 25 (1) (2003) 246–258.

- [7] C. Farhat, M. Lesoinne, K. Pierson, A scalable dual-primal domain decomposition method, Numer. Linear Algebra Appl. 7 (2000) 687–714, Preconditioning techniques for large sparse matrix problems in industrial applications (Minneapolis, MN, 1999).

- [8] B. M. Irons, A frontal solution scheme for finite element analysis, Internat. J. Numer. Methods Engrg. 2 (1970) 5–32.

- [9] G. Karypis, V. Kumar, A fast and high quality multilevel scheme for partitioning irregular graphs, SIAM J. Sci. Comput. 20 (1) (1998) 359–392 (electronic).

- [10] A. Klawonn, O. B. Widlund, M. Dryja, Dual-primal FETI methods for three-dimensional elliptic problems with heterogeneous coefficients, SIAM J. Numer. Anal. 40 (1) (2002) 159–179.

- [11] J. Li, O. B. Widlund, FETI-DP, BDDC, and block Cholesky methods, Internat. J. Numer. Methods Engrg. 66 (2) (2006) 250–271.

- [12] J. Mandel, C. R. Dohrmann, Convergence of a balancing domain decomposition by constraints and energy minimization, Numer. Linear Algebra Appl. 10 (7) (2003) 639–659.

- [13] J. Mandel, C. R. Dohrmann, R. Tezaur, An algebraic theory for primal and dual substructuring methods by constraints, Appl. Numer. Math. 54 (2) (2005) 167–193.

- [14] J. Mandel, B. Sousedík, BDDC and FETI-DP under minimalist assumptions, Computing 81 (2007) 269–280.

- [15] O. Medek, J. Kruis, Z. Bittnar, P. Tvrdík, Static load balancing applied to Schur complement method, Comput. Struct. 85 (9) (2007) 489–498.

- [16] J. Šístek, M. Čertíková, P. Burda, E. Neumanová, S. Pták, J. Novotný, A. Damašek, Development of an efficient parallel BDDC solver for linear elasticity problems, in: Blaheta, R. and Starý, J. (ed.), Proceedings of Seminar on Numerical Analysis, SNA’07, Ostrava, Czech Republic, January 22–26, Institute of Geonics AS CR, Ostrava, 2007, pp. 105–108.

- [17] A. Toselli, O. Widlund, Domain decomposition methods—algorithms and theory, vol. 34 of Springer Series in Computational Mathematics, Springer-Verlag, Berlin, 2005.

- [18] J. Tuzar, Mathematical modeling of hip joint replacement. (matematické modelování náhrady kyčelního kloubu, in czech), master thesis (2005).

Table 1: Unit cube, first of the two decompositions; initial set of corners enriched by averages.

| coarse problem | corners | corners+edges | corners+faces | corners+edges+faces |
|---|---|---|---|---|
| iterations | 38 | 19 | 17 | 13 |
| condition number estimate | 117 | 15 | 65 | 7 |
| factorization (sec) | 49 | 56 | 52 | 57 |
| PCG iterations (sec) | 21 | 11 | 10 | 8 |
| total (sec) | 85 | 85 | 80 | 83 |

Table 2: Unit cube, second of the two decompositions; initial set of corners enriched by averages.

| coarse problem | corners | corners+edges | corners+faces | corners+edges+faces |
|---|---|---|---|---|
| iterations | 42 | 16 | 24 | 11 |
| condition number estimate | 55 | 8 | 27 | 4 |
| factorization (sec) | 2.2 | 3.2 | 2.8 | 4.0 |
| PCG iterations (sec) | 7.5 | 3.7 | 5.1 | 3.6 |
| total (sec) | 11.6 | 8.8 | 10.4 | 9.6 |

Table 3: Hip replacement, 16 subdomains; initial set of corners enriched by averages.

| coarse problem | corners | corners+edges | corners+faces | corners+edges+faces |
|---|---|---|---|---|
| iterations | 181 | 171 | 69 | 62 |
| condition number estimate | 4,391 | 3,760 | 535 | 522 |
| factorization (sec) | 100 | 70 | 86 | 80 |
| PCG iterations (sec) | 241 | 216 | 94 | 87 |
| total (sec) | 380 | 321 | 216 | 203 |

Table 4: Hip replacement, 32 subdomains; initial set of corners enriched by averages.

| coarse problem | corners | corners+edges | corners+faces | corners+edges+faces |
|---|---|---|---|---|
| iterations | 500 | 500 | 137 | 70 |
| condition number estimate | n/a | n/a | n/a | n/a |
| factorization (sec) | 79 | 65 | 53 | 52 |
| PCG iterations (sec) | 545 | 547 | 166 | 87 |
| total (sec) | 651 | 638 | 236 | 161 |

Table 5: Hip replacement, 16 subdomains; optimal set of corners (Figure 9) enriched by averages.

| coarse problem | corners | corners+edges | corners+faces | corners+edges+faces |
|---|---|---|---|---|
| iterations | 35 | 34 | 26 | 26 |
| condition number estimate | 96 | 96 | 65 | 65 |
| factorization (sec) | 91 | 80 | 78 | 106 |
| PCG iterations (sec) | 53 | 49 | 38 | 37 |
| total (sec) | 183 | 166 | 153 | 181 |

Table 6: Hip replacement, 32 subdomains; optimal set of corners (Figure 9) enriched by averages.

| coarse problem | corners | corners+edges | corners+faces | corners+edges+faces |
|---|---|---|---|---|
| iterations | 35 | 32 | 30 | 27 |
| condition number estimate | 149 | 70 | 59 | 46 |
| factorization (sec) | 60 | 57 | 59 | 62 |
| PCG iterations (sec) | 49 | 40 | 37 | 34 |
| total (sec) | 128 | 115 | 113 | 113 |