Best-response Algorithms for Integer Convex Quadratic Simultaneous Games

Abstract

We evaluate the best-response algorithm in the context of pure-integer convex quadratic games. We provide a sufficient condition that if certain interaction matrices (the product of the inverse of the positive definite matrix defining the convex quadratic terms and the matrix that connects one player’s problem to another’s) have all their singular values less than 1, then finite termination of the best-response algorithm is guaranteed regardless of the initial point. Termination is triggered through cycling among a finite number of strategies for each player. Our findings indicate that if cycling happens, a relaxed version of the Nash equilibrium can be calculated by identifying a Nash equilibrium of a smaller finite game. Conversely, we prove that if every singular value of the interaction matrices is greater than 1, the algorithm will diverge from a large family of initial points. In addition, we provide an infinite family of examples in which some of the singular values of the interaction matrices are greater than 1, cycling occurs, but any mixed-strategy with support in the strategies where cycling occurs has arbitrarily better deviations. Then, we perform computational tests of our algorithm and compare it with standard algorithms to solve such problems. We notice that our algorithm finds a Nash equilibrium correctly in every instance. Moreover, compared to a state-of-the-art algorithm, our method shows similar performance in two-player games and significantly higher speed in games involving three or more players.

1 Introduction

Advancements in computational power achieved over recent decades have significantly enhanced our ability to efficiently address large-scale optimization problems. Traditional optimization frameworks primarily consider an individual’s strategic choices without accommodating variations in payoffs resulting from the presence of strategic opponents or scenarios in which one player’s actions exert influence on others. The conceptualisation and formalisation of strategic interactions among players with misaligned objectives led to the introduction of game theory, notably by Morgenstern and Von Neumann (1953), Von Neumann and Morgenstern (1944). A pivotal moment in this field was John Nash’s groundbreaking theorem on the existence of equilibria, later referred to as mixed-strategy Nash equilibria, in finite games (Nash, 1950, 1951).

With the improvement in computational resources, the computation of equilibria for games has gained increasing significance and practical utility. Algorithms to obtain solutions for such games were developed in a series of papers (Ba and Pang, 2022, Bichler et al., 2023, Ravner and Snitkovsky, 2023, Feinstein and Rudloff, 2023, Carvalho et al., 2022, Adsul et al., 2021, Crönert and Minner, 2022). These equilibrium concepts find applications in identifying oligopolistic equilibria (Egging-Bratseth et al., 2020), assessing the impact of governmental policy (Langer et al., 2016), analysing infrastructure development (Devine and Siddiqui, 2023, Feijoo et al., 2018), determining pricing strategies (Luna et al., 2023), inventory decisions (Lamas and Chevalier, 2018), and even kidney exchange problems (Blom et al., 2022, Carvalho et al., 2017).

Some of these papers that work with large-scale models convexify their problems solely to make equilibrium identification possible. This is driven by the computational overhead of solving games that do not satisfy convexity assumptions. The literature has recently witnessed a growing interest in games with structured nonconvexities, offering new perspectives on equilibrium computation. For example, there is a surge in the study of integer linear programming games, where the objectives and the constraints are linear and some of the variables are forced to be integers (which is the source of nonconvexity). In this paper, we focus on a natural next step in this context: games where each player solves a convex quadratic optimization problem with integer constraints, a problem category we call integer convex quadratic games. For such games, we explore the suitability of best-response algorithms. We rigorously define this game class and the associated best-response algorithm in Section 3.

The best-response algorithm.

In essence, the best-response algorithm corresponds to the dynamics where each player observes the decisions made by their counterparts in a given round and, in the subsequent round, chooses a best response to the strategies adopted by the other players. This concept readily aligns with the notion of pure-strategy Nash equilibria: at such equilibria, no player has an incentive to deviate from their chosen strategy. However, when the initial state is not a Nash equilibrium, players can adjust their strategies in pursuit of improved payoffs. The central question of this study is whether this adaptive behaviour, where players respond to their competitors’ strategies under the assumption that their rivals will persist with the same strategies, leads to convergence to a Nash equilibrium. Notably, convergence holds when the game is a potential game (Monderer and Shapley, 1996) and the feasible sets are compact. When these assumptions fail, convergence depends on specific conditions. There are well-known instances of quadratic programming games with two players and one variable per player where the best-response dynamics generate diverging iterates. We present one such example below, adapted from Carvalho et al. (2022, Supplementary Material, B. Divergence of SGM).

Example 1.

Consider the following simultaneous game.

In the game given above, suppose the initial set of considered strategies for players 1 and 2 be and respectively. Setting , player 1’s objective becomes whose integer minimum is . By symmetry, setting , player 2’s optimum deviation is . Thus the best response by each player gives a strategy pair . And from there on, the next best responses would be . Moreover, we can observe that in this procedure, the successive iterates will be which diverge.

The divergence here is not driven by non-existence of a Nash equilibrium as the game has a Nash equilibrium at . Further, the above is also a potential game with an exact potential function given by . While results from Monderer and Shapley (1996) hold if the feasible sets are compact, they fail when the feasible sets are the integer lattice.
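To make this phenomenon concrete, the following minimal Python sketch simulates simultaneous best responses on a hypothetical two-player game with one integer variable per player. The costs f1(x, y) = x^2/2 + 2xy and f2(x, y) = y^2/2 + 2xy are our own illustrative choice and are not claimed to be the game of Example 1. Under this assumption the game has the exact potential x^2/2 + y^2/2 + 2xy and a Nash equilibrium at (0, 0), yet the iterates double in magnitude in every round.

```python
def f1(x, y):
    # Hypothetical cost of player 1 (illustrative, not taken from the paper).
    return 0.5 * x * x + 2 * x * y

def f2(x, y):
    # Hypothetical cost of player 2 (illustrative, not taken from the paper).
    return 0.5 * y * y + 2 * x * y

def potential(x, y):
    # Exact potential: a unilateral change in x (or y) changes the potential
    # by exactly the change in f1 (or f2).
    return 0.5 * x * x + 0.5 * y * y + 2 * x * y

def best_response(other):
    # The continuous minimizer of t^2/2 + 2*other*t is t = -2*other, which is
    # already an integer, so it is also the integer minimizer.
    return -2 * other

def simulate(x0, y0, rounds):
    path = [(x0, y0)]
    x, y = x0, y0
    for _ in range(rounds):
        x, y = best_response(y), best_response(x)  # simultaneous update
        path.append((x, y))
    return path

path = simulate(1, 1, 5)
print(path)
```

Starting exactly at the equilibrium (0, 0) keeps the iterates there, while any other integer starting point in this toy game moves away from it.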

Why best-response algorithm?

As a result of the above discussion, the fundamental question that arises is: why should we delve into understanding best-response dynamics in the first place? Why not exclusively rely on convexification-based methods for equilibrium identification? In many real-world scenarios, the assumption of complete information is often made for the sake of numerical tractability. However, it’s highly plausible that in practice, one player lacks access to all the parameters of another player’s optimization problem, i.e., their objectives and constraints. In such situations, a player may find themselves unable to compute a Nash equilibrium, resorting instead to crafting strategies based solely on observable actions taken by their opponents. This scenario gives rise to the concept of best-response dynamics.

Even when information is available, best-response dynamics remain a natural choice for players with myopic perspectives, and they have received significant attention in the academic literature as well (Hopkins, 1999, Wang et al., 2021, Bayer et al., 2023, Kukushkin, 2004, Leslie et al., 2020, Morris, 2003, Voorneveld, 2000, Baudin and Laraki, 2022). Thus, understanding the convergence properties of this algorithm is of paramount importance. In practice, the negative results in this paper, which show that best-response dynamics can be insufficient to reach an equilibrium, correspond to games where an external nudge is required to reach an equilibrium. This motivates our in-depth analysis of best-response dynamics within the framework of integer quadratic games.

Contributions.

We list the contributions of this paper below.

1. First, we present a key technical result on proximity in integer convex quadratic optimization, on which almost all of the fundamental results in this paper are based. In particular, using the flatness theorem, we show that the distance between the integer minimizer and the continuous minimizer of a (strictly) convex quadratic function is at most , where and are the largest and the smallest eigenvalues of and where is the dimension of .

2. For integer convex quadratic games, we provide necessary and sufficient conditions for when the best-response algorithm will terminate, irrespective of the initial iterate. The sufficient condition is that all singular values of a particular set of matrices are less than 1, a condition we refer to as the game having positively adequate objectives. The necessary condition is that at least one singular value of one of the same matrices is less than 1.

3. We show that finite termination in the context of integer convex quadratic games could occur due to cycling among finitely many strategies. In that case, we show that if is a mixed-strategy Nash equilibrium (MNE) of the restricted game where each player’s strategies are restricted to those about which cycling occurs, then is a -MNE to the integer convex quadratic game. The paper explicitly computes the that is possible.

4. As a form of tightness to the above result, we show the following. Let be given. There exist games where cycling occurs (without the said condition of positively adequate objectives holding), and given any MNE of the restricted game, at least one player has a deviation that improves their objective by more than . In other words, the proof of a -MNE as opposed to an actual MNE, or even a uniform bound on , is not due to a lack of tightness in the analysis, but is an inherent property of the best-response algorithm.

5. We perform computational experiments on two classes of problems. When there are at least three players, we provide empirical evidence that our algorithm significantly outperforms the SGM algorithm (Carvalho et al., 2022). Moreover, while the theorems guarantee only a -MNE with our algorithm, empirically, we always obtain an MNE. This leads us to end the paper with a conjecture that the theorem can be strengthened to state that when we have positively adequate objectives, an MNE is always retrievable.

In the appendix, we discuss the rate of convergence of the best-response algorithm to a neighbourhood of the equilibria, when our sufficient condition holds. We show that there is a linear rate of convergence, indicating that the algorithm brings the iterates close to the solution very fast. However, after reaching a neighborhood, the actual rate at which convergence occurs is not immediate from this analysis.

2 Literature Review

Finding solution strategies for games has long been of interest in the literature. As a seminal result, Lemke and Howson (1964) provided an algorithm to find Nash equilibria for bimatrix games (two-player finite games). Audet et al. (2006) improved these algorithms, and more recently, Adsul et al. (2021) provided fast algorithms to solve rank-1 bimatrix games. Also, Feinstein and Rudloff (2023) present an algorithm to solve simultaneous games with vector optimization techniques.

The term integer programming games (IPG), to the best of our knowledge, was coined in Köppe et al. (2011), marking the inception of research in this field. This unique subdomain gained prominence due to the compact representation of games with discrete strategy sets. A recent tutorial article surveys some of the algorithms in this context (Carvalho et al., 2023c).

As far as the computational complexity of IPGs goes, Carvalho et al. (2022) prove that it is -hard to decide if an IPG does or does not have a PNE or an MNE. Despite the strong complexity bounds, algorithms that match the lower bounds predicted by the complexity results have been of interest in the literature. Typically, such algorithms assume linear objective functions, compact feasible sets, or both. Algorithms that do not rely on compactness of the feasible sets typically rely on the structure of the feasible set and use a convexification approach. Carvalho et al. (2023a) identify an inner approximation-based algorithm to solve a class of problems they refer to as NASP (Nash game Among Stackelberg Players). While these problems are not directly categorised as IPGs, it is noteworthy that any bounded integer linear program can be reformulated as a continuous bilevel program. This implies the applicability of their inner-approximation algorithm to bounded integer linear programming games. As a counterpart to the inner-approximation algorithm, Carvalho et al. (2023b) propose an outer-approximation algorithm for IPGs as well as a large class of separable games. Both these algorithms iteratively improve the approximation of the convex hull.

While both Carvalho et al. (2023b, a) suggest convexification-motivated approaches and are very fast when convexification is possible, difficulty occurs when the feasible set cannot be convexified easily. Algorithms meant to handle such settings solve finite games as a subroutine. Carvalho et al. (2022) propose the SGM algorithm, an abbreviation of Sampled Generation Method where they iteratively generate more and more feasible points and compute an MNE for the finite subset, until such an MNE is also an MNE for the entire problem. Crönert and Minner (2022) extend the algorithm into an exhaustive-SGM or eSGM algorithm, where exhaustively all MNEs of a game are enumerated, when the players’ decisions are all discrete. These algorithms, while practical and fast, require that the feasible sets are compact. Schwarze and Stein (2023) provide a branch-and-prune algorithm to identify Nash equilibria in a family of games where at least one player has an objective that is strongly convex in at least one variable.

Best-response dynamics.

Best-response dynamics have been of interest starting from the seminal paper by Monderer and Shapley (1996). The authors define a potential function which, when minimized over a compact set of feasible strategies, yields a PNE of the game. When players play best responses to their opponents, their moves can be interpreted as descent steps in the potential function. Voorneveld (2000) extends the concept of potential games and defines best-response potential games, which also allow infinite improvement paths.

Best-response dynamics, being a very natural course of action for players who only have to react optimally to the other players’ strategies, have also been studied in the absence of a potential function. Hopkins (1999) provides a note comparing the best-response dynamics and other dynamics that are studied in the economics literature. Kukushkin (2004) analyses the consequences of best-response dynamics in the context of finite games with additive aggregation. Leslie et al. (2020) study best-response dynamics in zero-sum stochastic games. Baudin and Laraki (2022) extend their work and contrast these results against fictitious play, and also extend them to a family of games they call identical interest games. Lei and Shanbhag (2022) consider the convergence rate of best-response dynamics in a convex stochastic game and provide bounds on equilibria based on sample size. More recently, Bayer et al. (2023) provide sufficient conditions under which best-response dynamics converge to a PNE for a class of directed network games.

Within the scope of the problems addressed in this paper, the best-response optimization problem entails convex quadratic minimisation over integers, a well-explored challenge with connections to the shortest vector problem under the norm. This problem can be readily reduced to unconstrained convex quadratic minimisation over the integers. Conversely, any unconstrained convex quadratic minimisation over the integers can be reduced to a shortest vector problem, placing both families of problems in the same complexity class. Micciancio and Voulgaris (2013) provide an optimal algorithm for the shortest vector problem, which is exponential in the number of dimensions. Given that the best-response problem is -complete, we believe that integer convex quadratic games do not allow for very fast algorithms in general.

3 Definitions and Algorithm descriptions

3.1 Simultaneous games

First, we define integer convex quadratic games, Nash equilibrium and the best-response algorithm.

Definition 1 (Integer Convex Quadratic Simultaneous Game (ICQS)).

An Integer Convex Quadratic Simultaneous game (ICQS) is a game of the form

[Equation (ICQS): the optimization problems of the two players.]

In this definition, we assume that and are symmetric positive definite matrices. Moreover, we refer to and as interaction matrices.

For simplicity of exposition, we assume that there are only two players. The results in this paper can be generalized to multiple (finitely many) players; the generalized definitions and the ideas needed to extend the proofs are discussed in Appendix B. In this paper, we refer to the player choosing the variables as the -player, and the other one as the -player. We refer to each feasible point for a player as a strategy, and a probability distribution over any finite subset of strategies as a mixed-strategy. With that, we define -Mixed-strategy Nash Equilibrium (MNE).

Definition 2 (-Nash equilibrium).

Given ICQS, a mixed-strategy pair is an -mixed-strategy Nash equilibrium (-MNE) if

Further, the finite subset of and to which and assign non-zero probability are called the -player’s and -player’s supports of the -MNE respectively. If holds, then we call it just an MNE or a PNE.

We note the subtle difference between the -MNE that is popular in the literature and the -MNE we define above. Typically, algorithms claim to find an -MNE if such a solution can be found for any given , i.e., any allowable positive error. In contrast, our paper only guarantees solutions for a fixed value of the error term that we denote by . Besides this subtle difference, the definitions are interchangeable.
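As an illustration of how an approximate equilibrium can be checked, the sketch below computes each player's regret (expected cost minus the cost of the best pure integer deviation) for a pair of finitely supported mixed strategies. It is a minimal sketch under stated assumptions: one integer variable per player and costs of the hypothetical form 0.5*q*own^2 + (c*other + d)*own with q > 0. The function names and the tuple encoding of the game are ours, not the paper's.

```python
import math

def cost(q, c, d, own, other):
    """Assumed scalar cost: 0.5*q*own^2 + (c*other + d)*own, with q > 0."""
    return 0.5 * q * own * own + (c * other + d) * own

def expected_cost(q, c, d, own_mix, other_mix):
    """Expected cost when both players randomize independently.

    Mixed strategies are dicts mapping an integer strategy to its probability.
    """
    return sum(p * r * cost(q, c, d, a, b)
               for a, p in own_mix.items()
               for b, r in other_mix.items())

def best_pure_deviation_cost(q, c, d, other_mix):
    """Cheapest integer deviation against the opponent's mixed strategy.

    The cost is linear in the opponent's action, so only the opponent's mean
    matters. The integer minimizer of a scalar convex quadratic is the floor
    or the ceiling of its continuous minimizer.
    """
    mean_other = sum(b * r for b, r in other_mix.items())
    u = -(c * mean_other + d) / q
    return min(cost(q, c, d, t, mean_other)
               for t in (math.floor(u), math.ceil(u)))

def regrets(game, x_mix, y_mix):
    (q1, c1, d1), (q2, c2, d2) = game
    rx = (expected_cost(q1, c1, d1, x_mix, y_mix)
          - best_pure_deviation_cost(q1, c1, d1, y_mix))
    ry = (expected_cost(q2, c2, d2, y_mix, x_mix)
          - best_pure_deviation_cost(q2, c2, d2, x_mix))
    return rx, ry

def is_approximate_mne(game, x_mix, y_mix, error, tol=1e-9):
    """True if neither player can gain more than `error` by deviating."""
    return max(regrets(game, x_mix, y_mix)) <= error + tol

game = ((3.0, 1.0, 0.0), (3.0, 1.0, 0.0))   # hypothetical (q, c, d) per player
print(regrets(game, {0: 1.0}, {0: 1.0}))
print(regrets(game, {1: 1.0}, {1: 1.0}))
```

Checking only pure deviations suffices here because a mixed deviation's expected cost is an average of pure-deviation costs.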

3.2 The best-response algorithm

Definition 3 (Best Response).

Given a game ICQS, we define the best response of the -player, given as and the best response of the -player given as .

We note that and need not be singleton sets. If they are not singleton, for the purposes of the best-response algorithm, any arbitrary element from these sets can be chosen.

The notion of best response immediately presents the idea of a best-response algorithm which is formally presented in Algorithm 1.

Algorithm 1 is possibly one of the simplest algorithms that could be considered for finding Nash equilibria for simultaneous games. We begin with input “guesses” for each player’s strategy – and . In step 5, we solve integer convex quadratic programs to identify the best response to the previous strategy of the other player. We repeat this until a previously observed iterate is observed again.

Observe that it is not possible for the iterates to converge to a point without attaining the limit. This is because each iterate is a feasible point in the integer lattice, and the iterates cannot get arbitrarily close without coinciding. Thus the only two outcomes are (i) the algorithm cycles among finitely many strategies (and hence terminates) or (ii) the iterates diverge (and the algorithm does not terminate). The cycle could be of length 1, in which case the repeated strategy is a pure-strategy Nash equilibrium. Alternatively, it could be of some larger finite length.
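The loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: we assume each player's cost has the form 0.5*x'Qx + (C*other + d)'x with Q symmetric positive definite, we update both players simultaneously from the previous iterate, and we solve each integer program by brute-force enumeration, which is only sensible in tiny dimensions. The names `integer_argmin` and `best_response_dynamics` and the `max_iter` safeguard are ours.

```python
import itertools
import numpy as np

def integer_argmin(Q, c):
    """Integer minimizer of 0.5*x'Qx + c'x for symmetric positive definite Q."""
    Q = np.asarray(Q, dtype=float)
    c = np.asarray(c, dtype=float)
    u = np.linalg.solve(Q, -c)             # continuous minimizer
    r = np.round(u)                        # a feasible integer candidate
    gap = (r - u) @ Q @ (r - u)            # twice the excess cost of r
    lam_min = np.linalg.eigvalsh(Q)[0]     # smallest eigenvalue of Q
    # Any integer minimizer z satisfies
    # lam_min * ||z - u||^2 <= (z - u)'Q(z - u) <= gap,
    # so it lies in a box of this radius around u.
    radius = np.sqrt(gap / lam_min) + 1e-9
    ranges = [range(int(np.ceil(ui - radius)), int(np.floor(ui + radius)) + 1)
              for ui in u]
    best, best_val = r, gap
    for z in itertools.product(*ranges):
        z = np.array(z, dtype=float)
        val = (z - u) @ Q @ (z - u)
        if val < best_val - 1e-12:
            best, best_val = z, val
    return best.astype(int)

def best_response_dynamics(Q1, C1, d1, Q2, C2, d2, x0, y0, max_iter=10_000):
    """Return the iterates forming the cycle, or None if no repeat was seen."""
    C1, d1 = np.asarray(C1, dtype=float), np.asarray(d1, dtype=float)
    C2, d2 = np.asarray(C2, dtype=float), np.asarray(d2, dtype=float)
    x, y = np.asarray(x0, dtype=int), np.asarray(y0, dtype=int)
    seen, history = {}, []
    for k in range(max_iter):
        key = (tuple(int(v) for v in x), tuple(int(v) for v in y))
        if key in seen:                    # a previously observed iterate
            return history[seen[key]:]
        seen[key] = k
        history.append(key)
        # Both players respond to the other's previous strategy.
        x, y = (integer_argmin(Q1, C1 @ y + d1),
                integer_argmin(Q2, C2 @ x + d2))
    return None

cycle = best_response_dynamics([[3.0]], [[1.0]], [0.0],
                               [[3.0]], [[1.0]], [0.0], [5], [5])
print(cycle)
```

A returned list of length one corresponds to a pure-strategy Nash equilibrium of the assumed game; `None` only means that no repeat occurred within `max_iter` rounds, which cannot by itself certify divergence.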

3.3 Adequate objectives

Before we state the main results, we define positively and negatively adequate objectives below. A game having (positively or negatively) adequate objectives is a combined property of the objectives of both the players. More importantly, this property can be checked by inspecting only the singular values of the interaction matrices and . There are efficient routines to compute a singular value decomposition of any matrix , where are unitary matrices and is a non-negative diagonal matrix. The diagonal elements of are the singular values of .

Definition 4.

ICQS is said to have positively adequate objectives if every singular value of the interaction matrices and is strictly less than 1.

Definition 5.

ICQS is said to have negatively adequate objectives if every singular value of the interaction matrices and is strictly greater than 1.

When we have positively adequate objectives, we assume that the largest singular value is for some and when we have negatively adequate objectives, we assume that the smallest singular value is for some .
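The check in Definitions 4 and 5 can be sketched numerically as below. This is a hedged illustration: following the abstract, we assume each interaction matrix is the inverse of a player's positive definite matrix times the matrix coupling that player to the other, written here as `Q` and `C` (our names), and we take the threshold to be 1 as in the abstract. `numpy.linalg.svd` with `compute_uv=False` returns only the singular values.

```python
import numpy as np

def interaction_singular_values(Q, C):
    """Singular values of Q^{-1} C (assumed form of an interaction matrix)."""
    R = np.linalg.solve(np.asarray(Q, dtype=float), np.asarray(C, dtype=float))
    return np.linalg.svd(R, compute_uv=False)

def classify_objectives(Q1, C1, Q2, C2):
    """Classify a two-player game by the singular values of both matrices."""
    s = np.concatenate([interaction_singular_values(Q1, C1),
                        interaction_singular_values(Q2, C2)])
    if np.all(s < 1.0):
        return "positively adequate"
    if np.all(s > 1.0):
        return "negatively adequate"
    return "neither"

print(classify_objectives([[3.0]], [[1.0]], [[3.0]], [[1.0]]))
print(classify_objectives([[1.0]], [[2.0]], [[1.0]], [[2.0]]))
```

For a non-square matrix the routine returns as many singular values as the smaller dimension, so whether zero singular values of a rectangular interaction matrix should count is a modelling detail this sketch does not settle.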

4 Main Results

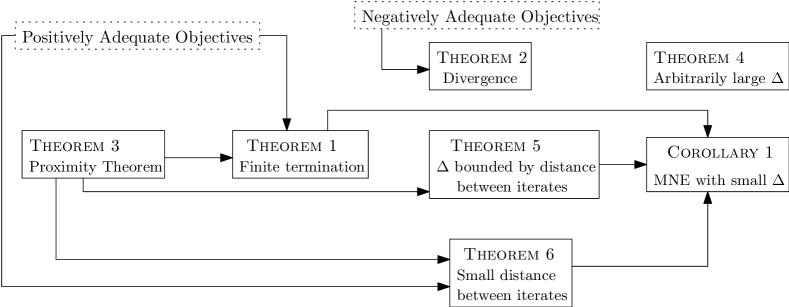

We first state the organization of the results in this paper. We begin by proving a technical result, which we call the proximity theorem (Theorem 3), that bounds the maximum distance between a continuous minimizer and an integer minimizer of a convex quadratic function; this bound is insensitive to the linear terms of the convex quadratic function. Using this result and the assumption of positively adequate objectives, we show that Algorithm 1 terminates finitely (Theorem 1). Then, we show a negative result: if the game has negatively adequate objectives, there always exist points from which Algorithm 1 generates divergent iterates (Theorem 2). Together, Theorems 2 and 1 provide necessary and sufficient conditions for finite termination of Algorithm 1.

Following the results on finite termination, we consider the part where we solve a finite game restricted to the iterates about which Algorithm 1 cycled to obtain an MNE. First, we show that in general, such an MNE to the restricted finite game could be arbitrarily bad for the original instance of ICQS (Theorem 4). Then, we show that the maximum profitable deviation any player in ICQS could obtain, given an MNE for the finitely restricted game, is bounded by the maximum distance between any pair of iterates generated (Theorem 5). Next, we show that under our assumption of positively adequate objectives, the maximum distance between any two iterates about which cycling happens is indeed bounded (Theorem 6). Tying these results together, we have Corollary 1, which says that under the assumption of positively adequate objectives, Algorithm 1 can be used to obtain a -MNE. Finally, based on our computational experience, we conjecture that, in the case of positively adequate objectives, the value can indeed be chosen as zero.

For enhanced readability, we show the implications between the theorems in the manuscript in Figure 1.

4.1 Necessary and sufficient conditions for finite termination

Theorem 1.

If the game ICQS has positively adequate objectives, then Algorithm 1 terminates finitely, irrespective of the initial points .

Theorem 2.

If the game ICQS has negatively adequate objectives, then Algorithm 1 generates divergent iterates for all but finitely many feasible initial points .

Theorems 2 and 1 can be interpreted respectively as necessary and sufficient conditions for Algorithm 1 to terminate finitely. However, proving these requires an intermediate result on proximity of integer minimizers of convex quadratic functions to the corresponding continuous minimizers. In that respect, we first define proximity bound, and then we prove that the bound is finite for any given positive definite matrix .

4.2 Proximity in integer convex quadratic programs

First, we define proximity, which bounds the maximum distance between the continuous minimum and the integer minimum of a convex quadratic function. The most famous of such results are the results in the context of linear programs provided in Cook et al. (1986), and recently improved by Paat et al. (2020), Celaya et al. (2022). Some proximity results for convex quadratic programs (Granot and Skorin-Kapov, 1990) and a subfamily of general convex programs (Moriguchi et al., 2011) are available in the literature extending the results for integer linear programs. We provide a version of proximity result for convex quadratic programs in this paper. While the results are not in full generality in line with the proximity literature in the context of integer linear programs, the version we provide here is sufficient to prove the fundamental results of the paper.

Definition 6.

Let be a given positive definite matrix. The proximity bound of with respect to the vector norm is denoted by and is the optimal objective value of the problem

[Equation (2): the optimization problem defining the proximity bound, with constraints (2a) to (2c).]

In this paper, we use norm predominantly, so when referring to we write .

In Definition 6, we consider a quadratic function whose quadratic terms (defined by ) are fixed, but the linear terms (defined by ) are allowed to vary. We want to find the linear term such that the distance between the continuous minimizer and the integer minimizer is maximized. Moreover, the inner ensures, should there be multiple integer minimizers, a minimizer which is farthest from the (unique) continuous minimizer could be chosen.

A priori, it is not clear if is finite for a given . That is, it is not clear whether, for a given , there is a sequence of and corresponding continuous minimizers and integer minimizers of the quadratic such that . However, we show in the following result that this is not the case.

Theorem 3 (Proximity Theorem).

Given a positive definite matrix of dimension ,

-

(i)

.

-

(ii)

The maximum difference between the optimal objective values when optimizing over versus optimizing over is .

where and are the largest and the smallest singular values of and is a constant dependent only on the dimension .

First, we note that since is a real symmetric matrix, the absolute values of its eigenvalues are its singular values. Moreover, since is positive definite, all its eigenvalues are positive real numbers. So, its eigenvalues are its singular values. Thus and in Theorem 3 can also be interpreted as the largest and the smallest eigenvalues of .
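The coincidence of eigenvalues and singular values for a symmetric positive definite matrix is easy to confirm numerically. The short sketch below builds an arbitrary positive definite matrix (the construction and the random seed are our own choices) and compares the two spectra.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4))
Q = A @ A.T + 4 * np.eye(4)      # symmetric positive definite by construction

eigs = np.sort(np.linalg.eigvalsh(Q))[::-1]    # eigenvalues, descending
svals = np.linalg.svd(Q, compute_uv=False)     # singular values, descending

print(np.allclose(eigs, svals))
```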

To prove Theorem 3, we use a fundamental result in convex analysis named the flatness theorem. We refer the readers to Barvinok (2002, Pg. 317, Theorem 8.3) for a complete and formal proof of the flatness theorem. The theorem states that if a convex set has no integer points in its interior, then there exists an (integer) direction along which the convex set is flat, i.e., there exists such that , a finite number dependent only on the dimension of the space in which lies. While the theorem is generally stated for any lattice, in this paper we are only interested in lattice. For , it is known that , although stronger () bounds are conjectured (Celaya et al., 2022, Rudelson, 2000). We state all our results in terms of , so if stronger bounds are found for , they are directly applicable here.

Proof of Theorem 3.

Consider a strictly convex quadratic function . For the choice (equivalently ), we can rewrite the function as . Neither the continuous minimizer nor the integer minimizer is sensitive to addition of constants to the function. Thus the continuous and the integer minimizers of the above function are same as that of . But this expression equals . We have written a general strictly convex quadratic function in the above form. Thus, it is sufficient to consider quadratic functions in this form. Define . A useful property when considering this form is that is the continuous minimizer here, and we don’t need to explicitly write as a part of the function. The corresponding value of will be . With this substitution, we can write .

Next, consider the family of ellipsoids parameterised by and , where . For any and , is a convex set. More interestingly, for any where is the integer minimizer of , . Now, if , then is a -free convex set. Thus, the flatness theorem (Barvinok, 2002) is applicable. In our context, this means, there exists a direction along which is flat.

Now, let us identify the direction along which is flattest, using our knowledge about the ellipsoid. Since is symmetric, has eigenvalues with corresponding eigenvectors that form an orthonormal basis of . We order the eigenvalues and denote their corresponding orthonormal eigenvectors as . Now, consider the function . Using Rayleigh’s theorem (Horn and Johnson, 2012, Pg. 234, Theorem 4.2.2, choose ), one can show that , and the maximizer is . Now, consider the ellipsoid . Consider the point in this ellipsoid along the direction and for some . We can observe that . Along the same line, . Thus the scalings of , namely lie on the boundary of the ellipsoid. So, the Euclidean distance between the two extreme points on the ellipsoid along direction is . Clearly, the distance is the smallest when the denominator is the largest, i.e., when . In other words, the ellipsoid translated to the origin is flattest along the direction . However, the flatness theorem guarantees that if is free, then there exists a direction where the width is at most . is the direction along which the width is minimal for , and width along a direction is invariant under translation. So, if is -free, then the largest value can take is . In other words, it is necessary that for to have no integer points. Equivalently, there is an integer point whose objective value is at most more than the continuous minimum. This proves part (ii) of the result.

However, if is given, the farthest point on from the origin is in the direction of with a distance of . However, the bound on helps us bound the above by saying that the distance from the origin to the farthest point in the ellipsoid is at most which is finite. While the above is a bound to the farthest point on the ellipsoid, the farthest integer point could only be possibly closer. The arguments continue to hold after translating to . So, we have proving (i). ∎
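The geometric facts used in the proof can be checked numerically. The sketch below is an illustration under an assumed normalization: it takes the origin-centred ellipsoid {x : x'Qx <= gamma} (the paper's scaling may differ by a constant), and the matrix `Q` and level `gamma` are arbitrary choices of ours. It verifies that the extent along a unit eigenvector with eigenvalue lambda is 2*sqrt(gamma/lambda), and that no sampled unit direction gives a smaller width than the eigenvector of the largest eigenvalue.

```python
import numpy as np

def width(Q, gamma, c):
    """Width of {x : x'Qx <= gamma} along c, i.e. max c'x - min c'x."""
    c = np.asarray(c, dtype=float)
    return 2.0 * np.sqrt(gamma * (c @ np.linalg.solve(Q, c)))

Q = np.array([[4.0, 1.0], [1.0, 2.0]])       # symmetric positive definite
gamma = 3.0
lams, vecs = np.linalg.eigh(Q)               # eigenvalues in ascending order
axis_lengths = 2.0 * np.sqrt(gamma / lams)   # extent along each eigenvector

# Sample random unit directions and record the width along each of them.
rng = np.random.default_rng(1)
dirs = rng.standard_normal((1000, 2))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
sampled = np.array([width(Q, gamma, c) for c in dirs])

print(axis_lengths, sampled.min())
```

Note that the flatness theorem concerns widths along integer directions, whereas this check uses real unit directions, so it illustrates only the ellipsoid geometry and not the flatness constant.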

Remark 1.

We note that the bound provided in Theorem 3 is a weak bound that is meant only to show finiteness of . It is a single expression that works as an upper bound for any positive definite matrix . The bound is weak fundamentally due to the weakness in the flatness bound, . Given specific matrices, tighter bounds can be obtained. For example, for the choice ( identity matrix), we can prove that without an appeal to the flatness theorem. This is significantly better than the bound provided by Theorem 3. While the proofs of in this paper rely only on the finiteness of and the exact value of is less relevant, the approximation guarantees provided by Theorems 5, 6 and 1 rely on the value of .
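For the identity matrix, one elementary argument is that the quadratic is separable, so componentwise rounding of the continuous minimizer gives an integer minimizer, and the Euclidean distance between the two is at most sqrt(n)/2. The sketch below checks this rounding bound on random samples. It is our own illustration, and we do not claim that sqrt(n)/2 is the exact expression stated in the remark.

```python
import numpy as np

def rounding_distance(u):
    """Distance from u to its componentwise rounding.

    For the identity matrix the objective is ||z - u||^2 up to a constant,
    which separates across coordinates, so rounding each coordinate yields
    an integer minimizer.
    """
    return np.linalg.norm(np.round(u) - u)

n = 5
rng = np.random.default_rng(2)
samples = rng.uniform(-10, 10, size=(10_000, n))
worst_sampled = max(rounding_distance(u) for u in samples)

# Every coordinate at a half-integer attains the rounding bound sqrt(n)/2.
worst_case = rounding_distance(np.full(n, 0.5))

print(worst_sampled, worst_case)
```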

Remark 2.

We remark on the contrast between the proximity result in Theorem 3 and the proximity results in Granot and Skorin-Kapov (1990). Theorem 3 provides a bound that is insensitive to the linear terms in the quadratic function, while the bounds in Granot and Skorin-Kapov (1990) depend upon the linear terms in the objective function, but handle more general settings where there are linear constraints as well. We also note that the availability of analogous proximity results for other families of sets (beyond ellipsoids) will naturally extend the main results of this paper to analogous families of games.

4.3 Finite termination of best-response dynamics

Now we are in a position to prove Theorem 1.

Proof of Theorem 1.

Given the iterates and in iteration , the continuous minimizers of the best-response problems, and are given by

The integer optimum is at most a distance and distance away from and for each of the players respectively. If and denote the difference between the integer and the continuous minimizers of each of the players’ best-response problem, each iteration of Algorithm 1 can be modeled as application of the function , where,

| (3c) | ||||

| (3d) | ||||

Now,

| (3e) | ||||

| (3f) | ||||

| (3g) | ||||

| (3h) | ||||

| (3i) | ||||

| (3j) | ||||

Here, the first inequality follows from the triangle inequality of norms. The second inequality follows from Theorem 3 that and . The equality in the next line is due to the fact that the expressions within in the first term are essentially the same and the next equality is due to positivity of norms. The inequality in the last line follows from the fact that (i) each singular value of is at most the largest singular value of and (due to Proposition 1 in the electronic companion), which is for some and (ii) Proposition 2 applied to .

Now, suppose

| (4a) | ||||

| (4b) | ||||

| (4c) | ||||

| (4d) | ||||

Here, we assume the first inequality. The second inequality is obtained by multiplying on both sides. The third inequality is obtained by adding on both sides, and the last inequality follows from 3.

In 4, we are saying that for all such that . We now claim that this statement implies that Algorithm 1 terminates finitely. In any bounded region, in particular in the region defined by , there are finitely many feasible . Whenever an iterate has a norm exceeding , the norm decreases monotonically over subsequent iterations until it is less than at least once, so that the iterates visit a vector in . After that, the norm could possibly increase again. However, since these monotonic decreases always end in some , after sufficiently many iterations either all vectors in will have been visited, so that the next return to visits some vector a second time, or some vector will be visited a second time even before all vectors are visited. In either case, this triggers the termination condition in 6 of Algorithm 1, leading to finite termination. 4 indicates that the choice works. ∎
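The termination argument above (stop as soon as an iterate is visited a second time) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the paper: it assumes each objective has the form ½xᵀQx + (Cy + d)ᵀx with a diagonal Q, so that the integer best response is the coordinate-wise rounding of the continuous minimizer, and it assumes both players respond simultaneously to the previous iterate. The function names and the scalar instance are hypothetical.

```python
import numpy as np

def integer_best_response(q_diag, C, d, other):
    """Integer minimizer of 0.5*x'diag(q)x + (C @ other + d)'x (separable case)."""
    continuous = -(C @ other + d) / q_diag       # continuous minimizer
    # Nearest integer per coordinate; at an exact tie either neighbour is optimal.
    return np.round(continuous).astype(int)

def best_response_dynamics(q1, C1, d1, q2, C2, d2, x0, y0, max_iter=10_000):
    """Iterate simultaneous best responses until some iterate repeats."""
    x, y = np.asarray(x0, dtype=int), np.asarray(y0, dtype=int)
    first_seen = {}      # iterate -> index of its first visit
    history = []
    for k in range(max_iter):
        key = (tuple(x.tolist()), tuple(y.tolist()))
        if key in first_seen:                    # second visit: a cycle is found
            cycle = history[first_seen[key]:]
            xs = sorted({px for px, _ in cycle})
            ys = sorted({py for _, py in cycle})
            return xs, ys
        first_seen[key] = k
        history.append(key)
        x, y = (integer_best_response(q1, C1, d1, y),
                integer_best_response(q2, C2, d2, x))
    raise RuntimeError("no repeated iterate within max_iter")

# Hypothetical scalar instance: Q1 = Q2 = 2 and C1 = C2 = 1, so Q^{-1}C = 0.5 < 1.
q = np.array([2.0])
C = np.array([[1.0]])
d = np.array([0.0])
cycle_x, cycle_y = best_response_dynamics(q, C, d, q, C, d, x0=[7], y0=[-4])
print(cycle_x, cycle_y)
```

When both returned sets are singletons, as in this instance, the repeated iterate is a pure-strategy equilibrium of the sketched game; in general the sets contain every strategy visited on the cycle.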

A natural question that arises now is the rate of convergence: how long does it take the ICQS to begin cycling? While we do not have a comprehensive answer to this question, we discuss the rate of convergence to a neighborhood of strategies about which cycling will occur in Section D in the electronic companion.

Now, we prove Theorem 2 that if we have negatively adequate objectives, then there exist initial points from where the iterates from Algorithm 1 diverge.

Proof of Theorem 2.

Let . Let each singular value of and be greater than for some . Suppose the initial iterate is a vector such that . We now show that the subsequent iterates have monotonically increasing norms, which indicates divergence and is sufficient to prove the theorem.

As in the proof of Theorem 1, the iterates generated by Algorithm 1 are obtained by recursive application of the function given by

where and . Now, like before,

From the claim made earlier and given that the smallest singular value of the matrix in RHS is at least , we can say the following.

Now, if we have , the RHS in the above expression is positive. This means that, in the following iteration, the norm of the iterate increases. The norm keeps increasing without bound, indicating that the algorithm diverges. Moreover, there are only finitely many integer points with norm at most , implying that the algorithm will diverge for all but finitely many feasible initial points. ∎
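The growth of the norms can be observed numerically on a small instance. The sketch below is illustrative only: it assumes a scalar game in which each player's objective is ½qx² + cxy (no linear term), so that the integer best response is the nearest integer to −c·(other)/q, and it assumes simultaneous updates. The helper name and the numbers are hypothetical.

```python
import math

def norms_along_dynamics(q, c, x0, y0, steps):
    """Track the Euclidean norm of (x, y) under simultaneous integer best responses."""
    x, y = int(x0), int(y0)
    norms = [math.hypot(x, y)]
    for _ in range(steps):
        # Each player responds to the other's previous strategy.
        x, y = int(round(-c * y / q)), int(round(-c * x / q))
        norms.append(math.hypot(x, y))
    return norms

# Hypothetical instance with Q^{-1}C = 3, so the only singular value exceeds 1.
diverging = norms_along_dynamics(q=1.0, c=3.0, x0=1, y0=0, steps=6)
# Starting exactly at the origin is one of the finitely many exceptional points.
stationary = norms_along_dynamics(q=1.0, c=3.0, x0=0, y0=0, steps=6)
print(diverging)
print(stationary)
```

In this instance the norm triples at every iteration from the nonzero starting point, while the origin is a fixed point of the dynamics.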

4.4 Retrieval of an approximate equilibrium

It is clear that if Algorithm 1 terminates outputting sets such that , then the pair of strategies in the output constitute a PNE for the instance of ICQS. However, if we have an output with a non-singleton set, it is not clear what guarantees we could have. In some cases, as shown below, an MNE can be found easily by solving the finite game with the strategies of each player restricted to and .

Example 2 (Cycling).

Consider the problem given as follows.

| (5) |

Let us start the best-response algorithm with initial iterates . The best response for is and for is . Now, we start the next iteration from . Now the best response for is . There is no change in ’s strategy. Now, we start the next iteration from . There is no change in ’s strategy. Now the best response for is . Now, we start the next iteration from . Now the best response for is . There is no change in ’s strategy. We are now back to the strategy and the same cycle of period 4 will keep repeating. For this problem, cycling occurred with . We can find an MNE for the bimatrix game where each player’s strategy is restricted to and respectively. The cost matrices (payoff matrices are the negatives of these matrices) for the -player (row player) and -player (column player) are

| | 0 | 1 |
|---|---|---|
| 0 | (0, 0) | (0, -0.1) |
| 1 | (0.1, 0) | (-0.1, 0.1) |

Here, if both players mix both their strategies with probability 1/2, then this is an MNE for the bimatrix game. This also turns out to be an MNE for the original game in 5.
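The equilibrium of the restricted bimatrix game can be checked directly from the table. The sketch below assumes that each table entry lists the row player's cost followed by the column player's cost, and that the mixing probability is 1/2 for each strategy (the value implied by the indifference conditions for these costs). It verifies only the restricted 2×2 game, not the original game in 5; the function name is hypothetical.

```python
import numpy as np

# Costs read from the table: entry [i, j] is the cost when the row player
# plays strategy i and the column player plays strategy j.
cost_x = np.array([[0.0, 0.0], [0.1, -0.1]])    # row player
cost_y = np.array([[0.0, -0.1], [0.0, 0.1]])    # column player

def is_equilibrium(p, q, tol=1e-9):
    """True if no pure deviation lowers either player's expected cost."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    x_pure = cost_x @ q      # row player's expected cost of each pure strategy
    y_pure = p @ cost_y      # column player's expected cost of each pure strategy
    return bool(p @ x_pure <= x_pure.min() + tol
                and q @ y_pure <= y_pure.min() + tol)

uniform_is_mne = is_equilibrium([0.5, 0.5], [0.5, 0.5])

# The four pure profiles of the restricted game are checked in the same way.
pure_profiles = [([1, 0], [1, 0]), ([1, 0], [0, 1]),
                 ([0, 1], [1, 0]), ([0, 1], [0, 1])]
has_pne = any(is_equilibrium(p, q) for p, q in pure_profiles)
print(uniform_is_mne, has_pne)
```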

The above observation raises the following question. If and are the iterates returned by Algorithm 1 as adapted for ICQS, will there be an MNE for the ICQS whose supports are subsets of and respectively? We show that this is not the case in general through the following theorem.

Theorem 4.

Suppose Algorithm 1 terminates finitely and returns iterates and for ICQS with and . Let . Given any , there exists such that an MNE of the finite game restricted to and is not a -MNE for ICQS.

In other words, even if are well-behaved matrices ( remains bounded), and even if we allow feasible strategies in the convex hull of and , the MNE to the restricted game could be arbitrarily bad for ICQS. For example, could be chosen, and there exists such that the iterates generated by Algorithm 1 would cycle, and still the restricted game’s MNE is not even a -MNE for the original game.

Proof of Theorem 4.

Consider this game, where is a large positive even integer.

| -player | (6a) | |||

| -player | (6b) | |||

This completes the description of the game.

If we start Algorithm 1 from and , the best response of is while the best response for is . Given these points, the best response for is and that of is . Given these points, the best response for is and that of is . Given these points, the best response for is and that of is . Thus, the following iterates will cycle between and . Thus, .

The cost matrices (negative of payoff matrices) for both the players, given the strategies in and are given below.

One can confirm that the above bimatrix game has no PNE, but has an MNE where the strategies are both given a probability of by both players. The cost of both the players given this MNE is . However, going back to the game in 6, we observe that is a feasible profitable deviation for the -player. Feasibility follows from the fact that was chosen as an even positive integer. The cost -player incurs by playing this strategy is . By choosing to be arbitrarily large, we can obtain arbitrarily large profitable deviations from the MNE of the restricted game. ∎

We note that the family of examples described in the proof of Theorem 4 are not problems that have positively adequate objectives. But we also observe that the output sets generated by the game in 6, and , have points far away from each other. We show that this large distance between points within and leads to arbitrarily large values of . The result below shows that and are bounded by and , the maximum distance between any two iterates in and respectively.

Theorem 5.

Suppose Algorithm 1 terminates finitely and returns iterates and for ICQS. Let be the maximum of the norm between any two points in . Analogously, let be the maximum of the norm between any two points in . Then, any MNE of the finite game restricted to the strategies and is an -MNE to ICQS, where , , is the largest eigenvalue of , and is the largest eigenvalue of .

Proof of Theorem 5.

Since and are sets of iterates over which Algorithm 1 cycles, we know that for each , there exists such that . We notate such a best response as for simplicity. Given some , we notate the continuous minimizer of the -player’s objective (which is ) as .

From Theorem 3, we know that for any , . Choose where and denote the probabilities with which is played in the MNE of the restricted finite game. Being probabilities, with . i.e., is convex combination of points in . Now, where due to Theorem 3. But this implies that .

We are given that the distance between any two points in is at most . The point (where for all ) is a convex combination of points in . This point is also, hence, at most a distance from any other point in , due to the convexity of norms. Formally, for any .

Combining and , we get for any through the triangle inequality for norms.

Now, define . By definition, is the continuous minimizer of . Since for any , we have that for any . Here, the first inequality follows from the fact that a point that is at most a distance away from the minimum of a convex quadratic, has a function value of at most over and above the minimum value of the quadratic, where is the largest eigenvalue of the matrix defining the quadratic. The second inequality follows from the fact that the continuous minimizer has an objective value that is not larger than that of an integer minimizer.

Since for each is at most suboptimal by , the maximum improvement possible over a mixed strategy that plays only a subset of is not more than , giving the value of as needed.

Analogous arguments for the -player prove the analogous result for . ∎
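The first inequality in the proof uses a standard property of convex quadratics, which can be checked numerically. The sketch below assumes the scaling f(x) = ½xᵀQx + bᵀx, for which f(x) − f(x*) = ½(x − x*)ᵀQ(x − x*) ≤ ½λ_max‖x − x*‖²; with a different scaling of the quadratic term the constant changes accordingly. The matrix, vector and helper names are hypothetical test data.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4))
Q = A.T @ A + np.eye(4)                  # symmetric positive definite
b = rng.standard_normal(4)

def f(x):
    """Convex quadratic 0.5*x'Qx + b'x."""
    return 0.5 * x @ Q @ x + b @ x

x_star = np.linalg.solve(Q, -b)          # continuous minimizer
lam_max = np.linalg.eigvalsh(Q)[-1]      # largest eigenvalue of Q

def gap_and_bound(x):
    """Suboptimality of x and the bound 0.5*lam_max*||x - x_star||^2."""
    r = np.linalg.norm(x - x_star)
    return f(x) - f(x_star), 0.5 * lam_max * r**2

samples = [gap_and_bound(x_star + rng.standard_normal(4)) for _ in range(100)]
print(max(gap - bound for gap, bound in samples) <= 1e-9)
```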

Remark 3.

While the previous result holds for any instance of ICQS, we now show that if we have positively adequate objectives, then and themselves can be bounded, providing an ex-ante guarantee on the error in equilibria.

Theorem 6.

Suppose Algorithm 1 terminates finitely and returns iterates and for an instance of ICQS with positively adequate objectives. Let be the maximum of the norm between any two points in . Analogously, let be the maximum of the norm between any two points in . Then, and , where and are the largest singular values of and respectively.

Proof of Theorem 6.

Let . Since there exist such that and . But observe that for any , , where the first term is the continuous minimizer and the second term is the error induced due to minimising over integers, and . Thus, for ,

| (7a) | ||||

| (7b) | ||||

| (7c) | ||||

| (7d) | ||||

| (7e) | ||||

| (7f) | ||||

| (7g) | ||||

where the first inequality follows from the triangle inequality of norms, the second inequality follows from the fact that the largest singular value of is less than and the last inequality follows from the fact for any by definition. However, since were arbitrary vectors in , we have . Now, following analogous arguments for the -player, as we did above for the -player, we get for any . Substituting this in 7g, we get

| (7h) | ||||

| (7i) | ||||

| (7j) | ||||

| (7k) | ||||

| (7l) | ||||

Notice that the division by in the last step is valid since and hence due to positively adequate objectives, proving the bound for .

Following analogous steps for the -player, the bound for follows. ∎

Due to Theorems 6, 5 and 1, we now have the following corollary, which captures the complete result in the context of ICQS with positively adequate objectives.

Corollary 1.

Given an instance of ICQS with positively adequate objectives, Algorithm 1 terminates finitely outputting finite sets and . Moreover, any MNE of the finite game restricted to and is a -MNE to the instance of ICQS, where

| (8a) | ||||

| (8b) | ||||

5 Computational experiments

We conduct computational experiments on two families of instances. All tests were run on a 2020 MacBook Air with an Apple M1 (3.2 GHz) processor and 16 GB of RAM. The primary comparison in both families is between the best-response (BR) algorithm in Algorithm 1 and the SGM algorithm (Carvalho et al., 2022). The initial iterate used for both algorithms is always the zero vector of appropriate dimension. The best-response optimization problems are solved using Gurobi 9.1 (Gurobi Optimization, 2019). All finite games, whether the restricted game at the end of the BR algorithm or the intermediate games solved in the SGM algorithm, are solved by posing them as mixed-integer programs as shown in Sandholm et al. (2005b, a). These mixed-integer programs are also solved using Gurobi 9.1 (Gurobi Optimization, 2019).

5.1 Pricing with substitutes and complements

Family description.

In this family of instances, we consider retailers who are competitively pricing their products. Each retailer has a disjoint set of products in the set . The demand for each product depends upon the price of that product, as well as the prices of all other products, which could be strategic complements or substitutes. In particular, we consider a linear price-response curve given by , where is the set of all products, is the price of product and is the quantity of product sold. The terms account for the cross elasticities, and is positive if and are strategic complements and is negative if and are strategic substitutes. Each player controls the prices of only the products that they sell. Each product could also have a marginal cost , and each player maximizes their profit . Substituting the price-response function for in the above results in a convex quadratic objective for each player. Further, in many realistic situations, prices are required to take discrete values rather than values in a continuum. Thus, we enforce that the prices must be integers.

This results in the first family of problems.

Instance generation.

We generated 100 instances with two players each, 100 instances with three players each, 20 instances with four players each, and 20 instances with five players each, adding up to 240 instances overall. The number of products each player controls is a random number between three and six. All the other parameters for each product as well as for each pair of products are generated uniformly at random between appropriate limits. As soon as an instance is generated, we check whether it has positively adequate objectives. If not, the instance is discarded. All other instances were retained.

Results.

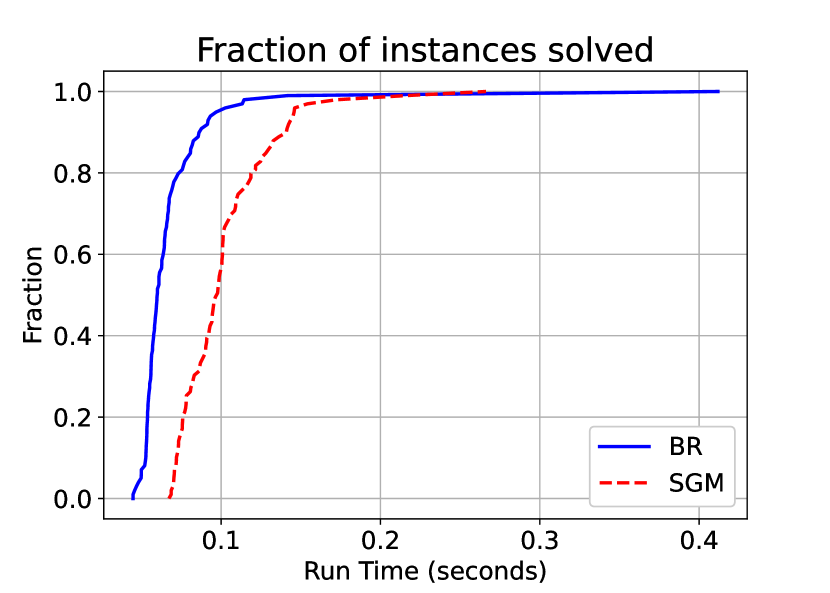

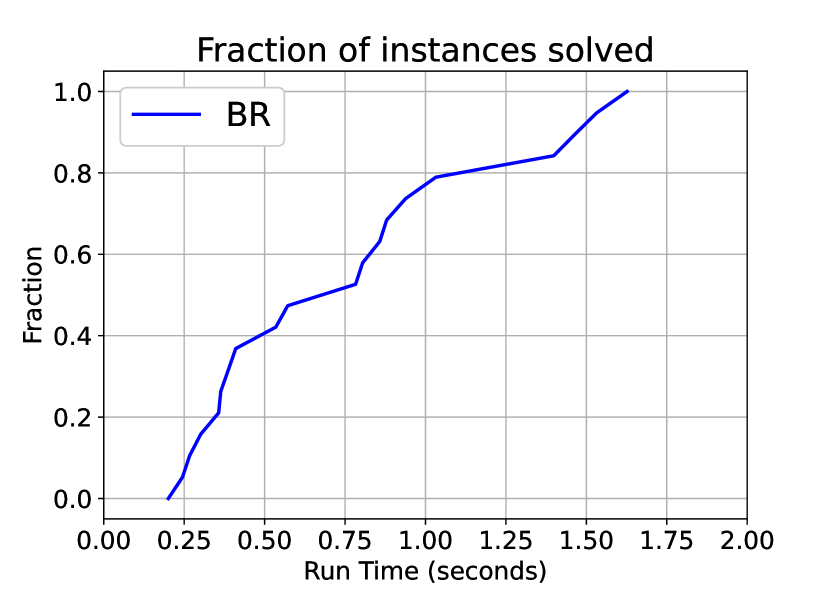

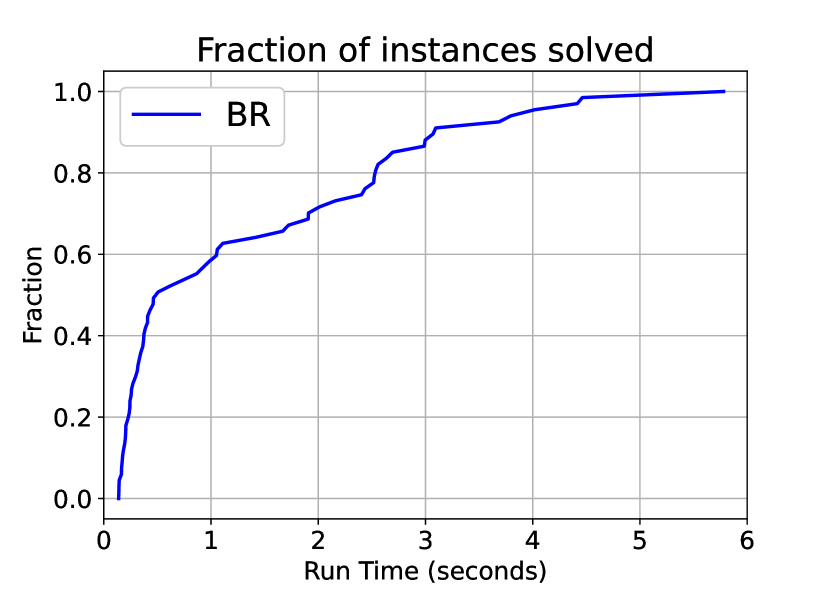

In the two-player case, we observe that BR is competitive with SGM. Figure 2(a) compares the performance profile of our algorithm with that of SGM. The BR algorithm presented in Algorithm 1 is slightly faster than SGM. The mean run-time for BR is 0.0683 seconds, as opposed to 0.1017 seconds for SGM. The median run-time for BR is 0.0599 seconds, while the median run-time for SGM is 0.0971 seconds. This is consistent with the mild speed-up discussed before. However, the mild speed-up is statistically significant: in a paired t-test between the run times of the two algorithms testing equality of means, the null hypothesis can be rejected with a p-value of .

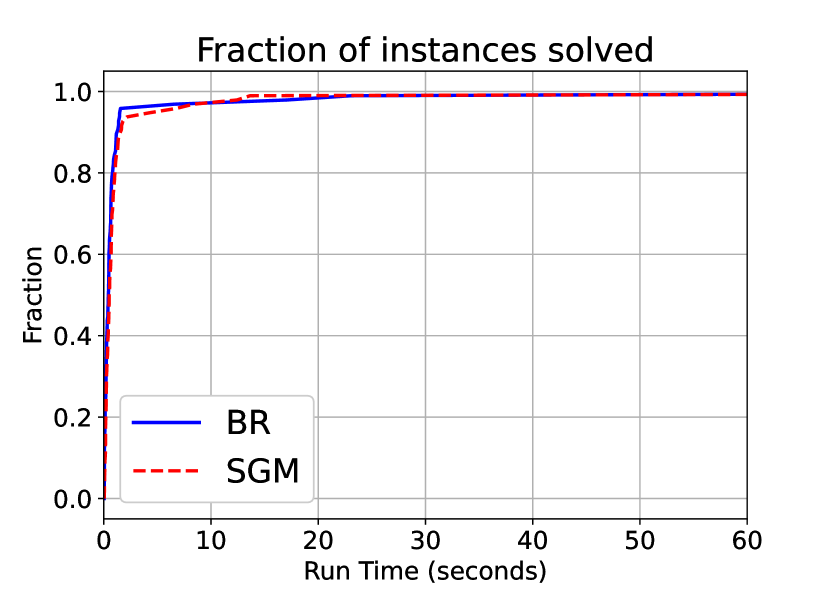

However, with three players, there is a considerable speed-up when using the BR algorithm. The performance profile is depicted in Figure 2(b). We can see that almost all the instances were solved in less than 0.5 seconds when using BR, while almost no instance is solved within that time by SGM. Correspondingly, the mean run-times for BR and SGM in this set of instances are 0.1506 seconds and 3.3608 seconds, respectively. The median run-times for BR and SGM are 0.1192 and 1.7239 seconds, respectively, indicating that our algorithm is more than ten times faster than SGM in both mean and median.
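A performance profile of the kind described above can be computed as the fraction of instances solved within a given time. The sketch below is one simple reading of that notion (the figures may use a different normalization), and the run times in it are hypothetical placeholders, not the data from these experiments.

```python
def fraction_solved_within(run_times, time_limit):
    """Fraction of instances whose run time is at most time_limit seconds."""
    return sum(t <= time_limit for t in run_times) / len(run_times)

# Hypothetical run times in seconds, for illustration only.
br_times = [0.09, 0.12, 0.15, 0.21, 0.48]
sgm_times = [0.9, 1.7, 2.4, 3.3, 8.5]

# One row per time limit: (limit, fraction solved by BR, fraction solved by SGM).
profile = [(t,
            fraction_solved_within(br_times, t),
            fraction_solved_within(sgm_times, t))
           for t in (0.1, 0.5, 1.0, 5.0, 10.0)]

for row in profile:
    print(row)
```

Plotting the second and third columns against the first gives one curve per algorithm; a curve that reaches 1 earlier corresponds to a faster algorithm on that set of instances.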

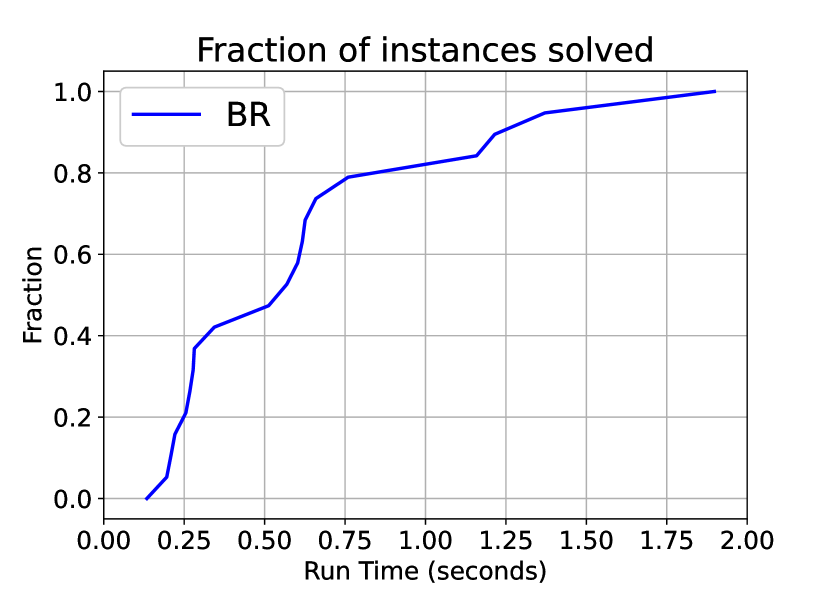

With four and five players, the difference is even more pronounced. The mean run-times for BR in the four- and five-player cases are 0.6087 seconds and 0.7477 seconds, respectively. The median run-times for BR in the four- and five-player cases are 0.5407 seconds and 0.6772 seconds, respectively. SGM was run on these instances with a maximum allotted time of 120 seconds. Not even one of the four-player or five-player instances was solved within 120 seconds, suggesting that our algorithm provides a speed-up of at least 100 times when applicable.

Finally, we also note that in each of the 240 instances, the BR algorithm always terminated after finding a PNE or an MNE, but never a -MNE with (after allowing for numerical tolerance of ). We share the instance-by-instance data on run time and the number of iterations in Appendix E in the electronic companion.

5.2 Random instances

Family description.

In this family of instances, the matrices are all randomly generated matrices with integer entries. To ensure that s are positive definite, we generate a random integer matrix , and compute , which is now guaranteed to be a positive-definite matrix with integer entries. Next, to ensure that the players have positively adequate objectives, we compute and compute the largest singular value of , which we denote as . Finally, we define . The ceiling ensures that has integer entries and the addition of the identity matrix ensures that each of the singular values of is strictly less than .
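A construction along these lines can be sketched as follows. Since the exact expressions are not reproduced here, the formulas in the sketch are one possible reading and should be treated as assumptions: the preliminary matrix is taken as AᵀA + I (the added identity guarantees definiteness even when A is singular), and the final matrix is ⌈σ⌉(AᵀA + I) + I, where σ is the largest singular value of (AᵀA + I)⁻¹C. The function name, entry ranges and dimensions are hypothetical.

```python
import math
import numpy as np

def generate_player(n, m, rng, low=-5, high=6):
    """Return integer matrices (Q, C) with Q positive definite and ||Q^{-1}C||_2 < 1."""
    A = rng.integers(low, high, size=(n, n))
    Q0 = A.T @ A + np.eye(n, dtype=int)           # assumed form; integer and definite
    C = rng.integers(low, high, size=(n, m))      # random integer interaction matrix
    sigma = np.linalg.norm(np.linalg.solve(Q0, C), 2)   # largest singular value
    # Scaling by ceil(sigma) keeps the entries integral; adding the identity
    # makes the inequality on the singular values strict.
    Q = math.ceil(sigma) * Q0 + np.eye(n, dtype=int)
    return Q, C

rng = np.random.default_rng(0)
Q, C = generate_player(n=5, m=5, rng=rng)
sigma_max = np.linalg.norm(np.linalg.solve(Q, C), 2)
print(sigma_max < 1.0)
```

Under this reading, strictness follows because every eigenvalue λ of the preliminary matrix satisfies λ/(⌈σ⌉λ + 1) < 1/⌈σ⌉ whenever ⌈σ⌉ ≥ 1.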

Instance generation.

We vary the number of players between two, three, four, and five. Further, for each of these four settings, we let the decision vector of each player have 5, 10, 15, 20, or 25 variables. For each combination, for example, three-player games with fifteen variables per player or five-player games with five variables per player, we generate 20 instances randomly. This gives a total of 4 × 5 × 20 = 400 instances. Along with the exact matrices defining these 400 instances, we also share the code used to generate them.

Results.

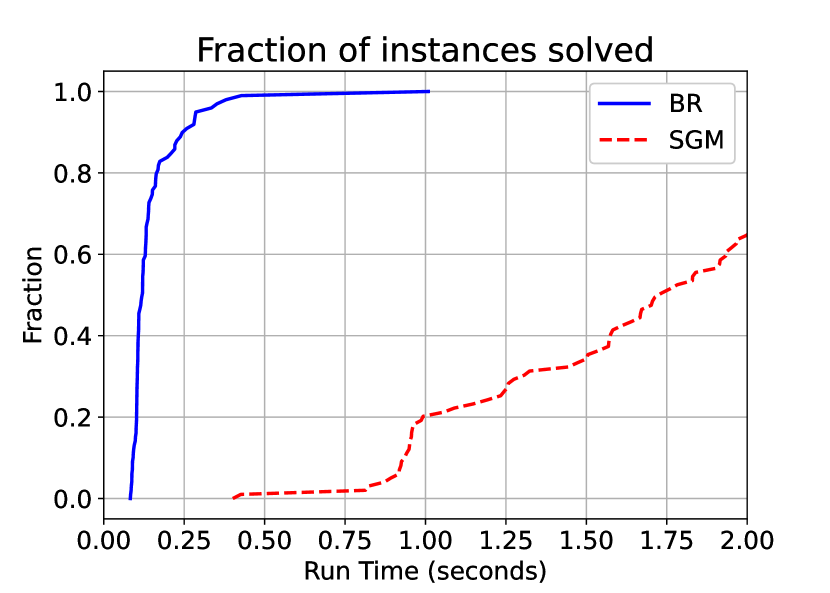

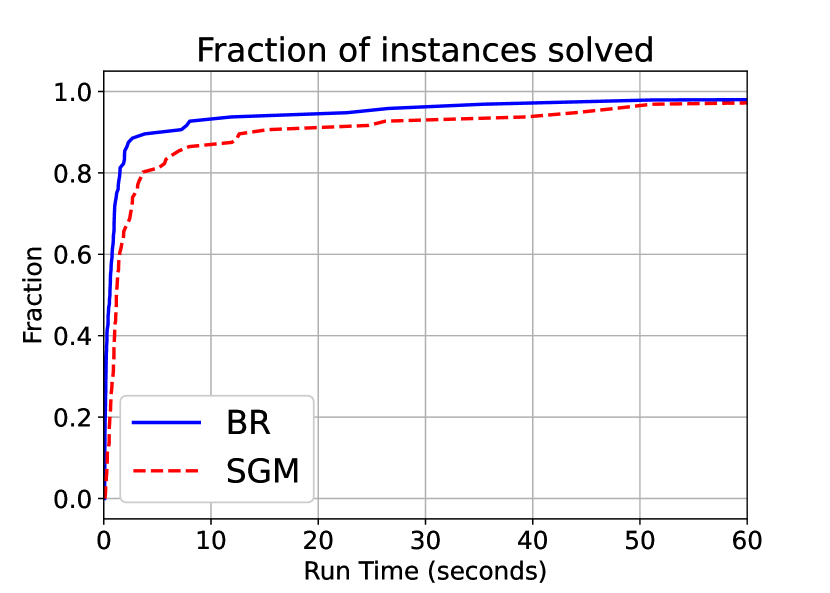

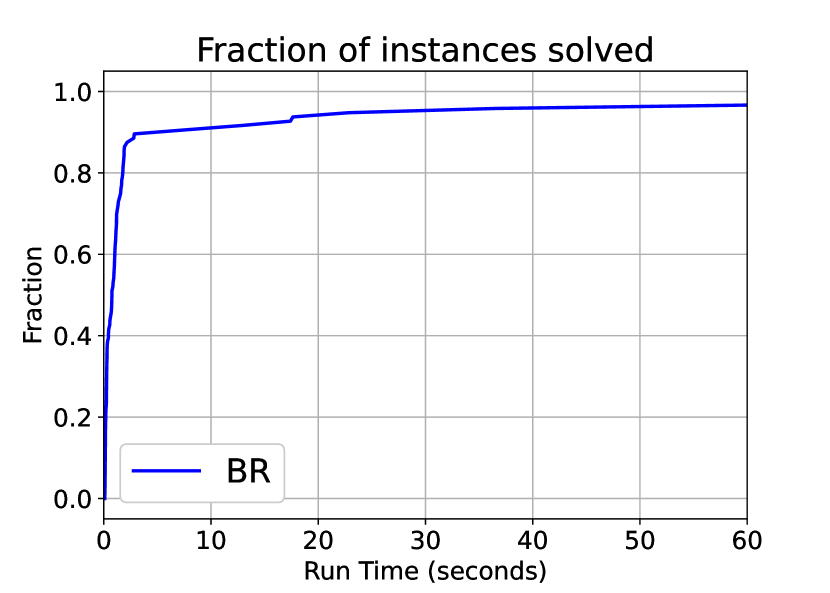

In all subfamilies of instances with two to five players, there were two to four instances in each setting where numerical instabilities caused both the BR and the SGM algorithms to fail. These instances were discarded from the analysis below.

Out of the remaining instances, in the two-player and three-player cases, the performance of BR and SGM is comparable. In fact, we do not find any statistically significant difference between the two algorithms. Their performance profiles are plotted in Figures 3(a) and 3(b). However, when we have four or five players, the BR algorithm is significantly faster than the SGM algorithm. BR always found a solution (except for the cases with numerical instabilities), with a median time of 5.348 seconds for four-player instances and 6.131 seconds for five-player instances, and with the maximum time taken for any single instance being approximately 233 seconds. In contrast, with the SGM algorithm, only eight of the hundred instances with four players were solved within a time limit of 500 seconds. The quickest one among them took over 290 seconds. Moreover, all eight solved instances are the simplest of the four-player instances, where the decision vector of each player has five variables. Among the five-player instances, only two of the hundred instances were solved within a time limit of 500 seconds, both of them taking over 400 seconds. Again, both the solved instances correspond to those where each player’s decision vector has five variables. The complete instance-by-instance details of the computational tests are presented in Appendix E in the electronic companion.

We also note that, in every single instance that was solved (i.e., the ones that did not run into numerical error), the MNE of the restricted finite game after running the BR algorithm had only a profitable deviation with maximum profit in the order of , which can be attributed to errors due to the numerical methods used within the solver, thus motivating a conjecture that is provable.

6 Future work

We end the paper with two possible avenues for future work. We first state a conjecture, which strengthens Corollary 1.

Conjecture 1.

Given an instance of ICQS with positively adequate objectives, and sets and from Algorithm 1, any MNE of the version of the game restricted to and is an MNE to ICQS.

In other words, the conjecture says that Corollary 1 holds with . The conjecture is supported by the computational experiments in Section 5. This is also consistent with the fact that the family of counterexamples provided in the proof of Theorem 4 do not have positively adequate objectives.

Second, the paper fundamentally uses the properties of quadratic functions to prove the results. It is conceivable, then, that the results should hold even if the objective functions are approximated well by quadratic functions. For example, -Lipschitz, -strongly convex functions are both under-approximated and over-approximated by quadratic functions. However, extending these results to such functions and identifying the loss in the approximation ratios when considering such functions is nontrivial, and is an interesting avenue for future work.

References

- Adsul et al. (2021) Bharat Adsul, Jugal Garg, Ruta Mehta, Milind Sohoni, and Bernhard von Stengel. Fast algorithms for rank-1 bimatrix games. Operations Research, 69(2):613–631, March 2021. ISSN 1526-5463. doi: 10.1287/opre.2020.1981.

- Audet et al. (2006) C. Audet, S. Belhaiza, and P. Hansen. Enumeration of All the Extreme Equilibria in Game Theory: Bimatrix and Polymatrix Games. Journal of Optimization Theory and Applications, 129(3):349–372, 2006. ISSN 0022-3239, 1573-2878. doi: 10.1007/s10957-006-9070-3. URL http://link.springer.com/10.1007/s10957-006-9070-3.

- Ba and Pang (2022) Qin Ba and Jong-Shi Pang. Exact penalization of generalized nash equilibrium problems. Operations Research, 70(3):1448–1464, May 2022. ISSN 1526-5463. doi: 10.1287/opre.2019.1942.

- Barvinok (2002) Alexander Barvinok. A course in convexity, volume 54. American Mathematical Soc., 2002.

- Baudin and Laraki (2022) Lucas Baudin and Rida Laraki. Fictitious play and best-response dynamics in identical interest and zero-sum stochastic games. In International Conference on Machine Learning, pages 1664–1690. PMLR, 2022.

- Bayer et al. (2023) Péter Bayer, György Kozics, and Nóra Gabriella Szőke. Best-response dynamics in directed network games. Journal of Economic Theory, 213:105720, 2023. ISSN 0022-0531. doi: 10.1016/j.jet.2023.105720.

- Bichler et al. (2023) Martin Bichler, Max Fichtl, and Matthias Oberlechner. Computing bayes–nash equilibrium strategies in auction games via simultaneous online dual averaging. Operations Research, December 2023. ISSN 1526-5463. doi: 10.1287/opre.2022.0287.

- Blom et al. (2022) Danny Blom, Bart Smeulders, and Frits C. R. Spieksma. Rejection-proof Kidney Exchange Mechanisms, 2022. URL https://arxiv.org/abs/2206.11525.

- Carvalho et al. (2017) Margarida Carvalho, Andrea Lodi, João Pedro Pedroso, and Ana Viana. Nash equilibria in the two-player kidney exchange game. Mathematical Programming, 161(1-2):389–417, 2017. ISSN 0025-5610, 1436-4646. doi: 10.1007/s10107-016-1013-7.

- Carvalho et al. (2022) Margarida Carvalho, Andrea Lodi, and João Pedro Pedroso. Computing equilibria for integer programming games. European Journal of Operational Research, 2022. ISSN 0377-2217.

- Carvalho et al. (2023a) Margarida Carvalho, Gabriele Dragotto, Felipe Feijoo, Andrea Lodi, and Sriram Sankaranarayanan. When Nash meets Stackelberg. Management Science, 2023a. doi: 10.1287/mnsc.2022.03418.

- Carvalho et al. (2023b) Margarida Carvalho, Gabriele Dragotto, Andrea Lodi, and Sriram Sankaranarayanan. The Cut and Play algorithm: Computing Nash equilibria via outer approximations. arXiv preprint arXiv:2111.05726, 2023b.

- Carvalho et al. (2023c) Margarida Carvalho, Gabriele Dragotto, Andrea Lodi, and Sriram Sankaranarayanan. Integer programming games: A gentle computational overview. TutORials in Operations Research, 2023c.

- Celaya et al. (2022) Marcel Celaya, Stefan Kuhlmann, Joseph Paat, and Robert Weismantel. Proximity and flatness bounds for linear integer optimization. arXiv preprint arXiv:2211.14941, 2022.

- Cook et al. (1986) William Cook, Albertus MH Gerards, Alexander Schrijver, and Éva Tardos. Sensitivity theorems in integer linear programming. Mathematical Programming, 34:251–264, 1986.

- Crönert and Minner (2022) Tobias Crönert and Stefan Minner. Equilibrium Identification and Selection in Finite Games. Operations Research, 2022. ISSN 0030-364X, 1526-5463. doi: 10.1287/opre.2022.2413.

- Devine and Siddiqui (2023) Mel T Devine and Sauleh Siddiqui. Strategic investment decisions in an oligopoly with a competitive fringe: An equilibrium problem with equilibrium constraints approach. European Journal of Operational Research, 306(3):1473–1494, 2023.

- Egging-Bratseth et al. (2020) Ruud Egging-Bratseth, Tobias Baltensperger, and Asgeir Tomasgard. Solving oligopolistic equilibrium problems with convex optimization. European Journal of Operational Research, 284(1):44–52, 2020.

- Feijoo et al. (2018) Felipe Feijoo, Gokul C Iyer, Charalampos Avraam, Sauleh A Siddiqui, Leon E Clarke, Sriram Sankaranarayanan, Matthew T Binsted, Pralit L Patel, Nathalia C Prates, Evelyn Torres-Alfaro, et al. The future of natural gas infrastructure development in the united states. Applied energy, 228:149–166, 2018.

- Feinstein and Rudloff (2023) Zachary Feinstein and Birgit Rudloff. Technical note—characterizing and computing the set of nash equilibria via vector optimization. Operations Research, May 2023. ISSN 1526-5463. doi: 10.1287/opre.2023.2457.

- Granot and Skorin-Kapov (1990) Frieda Granot and Jadranka Skorin-Kapov. Some proximity and sensitivity results in quadratic integer programming. Mathematical Programming, 47(1-3):259–268, 1990.

- Gurobi Optimization (2019) LLC Gurobi Optimization. Gurobi Optimizer Reference Manual, 2019. URL http://www.gurobi.com.

- Hopkins (1999) Ed Hopkins. A note on best response dynamics. Games and Economic Behavior, 29(1-2):138–150, 1999.

- Horn and Johnson (2012) Roger A Horn and Charles R Johnson. Matrix analysis. Cambridge university press, 2012.

- Köppe et al. (2011) Matthias Köppe, Christopher Thomas Ryan, and Maurice Queyranne. Rational Generating Functions and Integer Programming Games. Operations Research, 59(6):1445–1460, 2011. ISSN 0030-364X, 1526-5463.

- Kukushkin (2004) Nikolai S Kukushkin. Best response dynamics in finite games with additive aggregation. Games and Economic Behavior, 48(1):94–110, 2004.

- Lamas and Chevalier (2018) Alejandro Lamas and Philippe Chevalier. Joint dynamic pricing and lot-sizing under competition. European Journal of Operational Research, 266(3):864–876, 2018. ISSN 0377-2217. doi: 10.1016/j.ejor.2017.10.026.

- Langer et al. (2016) Lissy Langer, Daniel Huppmann, and Franziska Holz. Lifting the us crude oil export ban: A numerical partial equilibrium analysis. Energy Policy, 97:258–266, 2016.

- Lei and Shanbhag (2022) Jinlong Lei and Uday V Shanbhag. Distributed variable sample-size gradient-response and best-response schemes for stochastic nash equilibrium problems. SIAM Journal on Optimization, 32(2):573–603, 2022.

- Lemke and Howson (1964) C. E. Lemke and J. T. Howson, Jr. Equilibrium Points of Bimatrix Games. Journal of the Society for Industrial and Applied Mathematics, 12(2):413–423, 1964. ISSN 0368-4245, 2168-3484.

- Leslie et al. (2020) David S Leslie, Steven Perkins, and Zibo Xu. Best-response dynamics in zero-sum stochastic games. Journal of Economic Theory, 189:105095, 2020.

- Luna et al. (2023) Juan Pablo Luna, Claudia Sagastizábal, Julia Filiberti, Steven A. Gabriel, and Mikhail V. Solodov. Regularized equilibrium problems with equilibrium constraints with application to energy markets. SIAM Journal on Optimization, 33(3):1767–1796, 2023. doi: 10.1137/20M1353538.

- Micciancio and Voulgaris (2013) Daniele Micciancio and Panagiotis Voulgaris. A deterministic single exponential time algorithm for most lattice problems based on voronoi cell computations. SIAM Journal on Computing, 42(3):1364–1391, 2013. doi: 10.1137/100811970.

- Monderer and Shapley (1996) Dov Monderer and Lloyd S Shapley. Potential games. Games and economic behavior, 14(1):124–143, 1996.

- Morgenstern and Von Neumann (1953) Oskar Morgenstern and John Von Neumann. Theory of games and economic behavior. Princeton university press, 1953.

- Moriguchi et al. (2011) Satoko Moriguchi, Akiyoshi Shioura, and Nobuyuki Tsuchimura. M-convex function minimization by continuous relaxation approach: Proximity theorem and algorithm. SIAM Journal on Optimization, 21(3):633–668, 2011.

- Morris (2003) Stephen Morris. Best response equivalence, 2003. According to SSRN, the original version of the document was created in July 2002.

- Nash (1950) John F. Nash. Equilibrium Points in n-Person Games. Proceedings of the National Academy of Sciences of the United States of America, 36(1):48–49, 1950.

- Nash (1951) John F. Nash. Non-Cooperative Games. The Annals of Mathematics, 54(2):286, 1951. ISSN 0003486X. doi: 10.2307/1969529.

- Paat et al. (2020) Joseph Paat, Robert Weismantel, and Stefan Weltge. Distances between optimal solutions of mixed-integer programs. Mathematical Programming, 179(1-2):455–468, 2020.

- Ravner and Snitkovsky (2023) Liron Ravner and Ran I. Snitkovsky. Stochastic approximation of symmetric nash equilibria in queueing games. Operations Research, June 2023. ISSN 1526-5463. doi: 10.1287/opre.2021.0306.

- Rudelson (2000) M Rudelson. Distances between non-symmetric convex bodies and the MM*-estimate. Positivity, 4(2):161–178, 2000.

- Sandholm et al. (2005a) Tuomas Sandholm, Andrew Gilpin, and Vincent Conitzer. Mixed-Integer Programming Methods for Finding Nash Equilibria. In Proceedings of the 20th National Conference on Artificial Intelligence - Volume 2, AAAI’05, pages 495–501. AAAI Press, 2005a. ISBN 1-57735-236-X. URL https://dl.acm.org/doi/10.5555/1619410.1619413.

- Sandholm et al. (2005b) Tuomas Sandholm, Andrew Gilpin, and Vincent Conitzer. Mixed-integer programming methods for finding Nash equilibria. In AAAI, pages 495–501, 2005b.

- Schwarze and Stein (2023) Stefan Schwarze and Oliver Stein. A branch-and-prune algorithm for discrete Nash equilibrium problems. Computational Optimization and Applications, pages 1–29, 2023.

- Von Neumann and Morgenstern (1944) John Von Neumann and Oskar Morgenstern. Theory of Games and Economic Behavior. Princeton University Press, 1944. ISBN 978-1-4008-2946-0. doi: 10.1515/9781400829460.

- Voorneveld (2000) Mark Voorneveld. Best-response potential games. Economics Letters, 66(3):289–295, 2000. ISSN 0165-1765. doi: 10.1016/S0165-1765(99)00196-2.

- Wang et al. (2021) Chong Wang, Ping Ju, Feng Wu, Shunbo Lei, and Xueping Pan. Best response-based individually look-ahead scheduling for natural gas and power systems. Applied Energy, 304:117673, 2021.

Appendix A Continuous Quadratic Games

Definition 7 (Continuous Convex Quadratic Simultaneous game (CCQS)).

A Continuous Convex Quadratic Simultaneous game (CCQS) is a game of the form

| (CCQS) |

In this definition, we assume that and are symmetric positive definite matrices. Moreover, we refer to and as interaction matrices. The interaction matrices are of dimensions and respectively.

Now, we present the results for this section.

Theorem 7.

If the game CCQS has negatively adequate objectives, there exist initial points , starting from which Algorithm 1 generates divergent iterates, .

Theorem 8.

If the game CCQS has positively adequate objectives, then, irrespective of the initial points , Algorithm 1 generates iterates converging to a PNE.

Theorems 7 and 8 above can be interpreted as necessary and sufficient conditions for Algorithm 1 to converge to a PNE of CCQS. Theorem 7 only talks about the existence of initial points starting from which the iterates will diverge because, for example, one could start Algorithm 1 right at a PNE of the problem, in which case, by the definition of a PNE, the algorithm will terminate immediately. Nevertheless, the proof provides a constructive procedure to identify initial points from which Algorithm 1 is guaranteed to diverge.

Proof of Theorem 8.

Consider the best response and for both the players. There is a unique best response, since and are positive definite. The corresponding conditions for optimality are

| (9a) | ||||

| (9b) | ||||

So, in case we follow the best-response iteration, the successive iterates can be obtained by the updates defined in 9. This can be seen as the fixed-point iteration of the function

| (10c) | ||||

| (10d) | ||||

Let us analyse how maps two points, and the norm of the difference of the images of those points. We observe

where the inequality in the last step follows because the largest singular value of is at most the largest singular value of and (Proposition 1), which is at most since we have positively adequate objectives; Proposition 2 then gives the inequality.

Thus, we have established for any arbitrary vectors. This means that is a contractive mapping. By the Banach fixed-point theorem, the fixed-point iteration of a contractive mapping converges to its unique fixed point. A fixed point in this context means that two successive iterates of Algorithm 1, as adapted for CCQS, coincide. Thus the algorithm terminates in the pure-strategy condition, and the fixed point is a PNE for CCQS. ∎
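The contraction argument above can be illustrated numerically. The following is a minimal sketch, not the paper's implementation. Since the displayed definition of CCQS is not reproduced in this appendix, it assumes that player 1 minimises ½ xᵀQ1x + (C1y + d1)ᵀx and player 2 minimises ½ yᵀQ2y + (C2x + d2)ᵀy, that the interaction matrices are Q1⁻¹C1 and Q2⁻¹C2, and that both players update simultaneously. The function name and all numerical data are hypothetical.

```python
import numpy as np

def best_response_iteration(Q1, C1, d1, Q2, C2, d2, x0, y0, max_iters=500, tol=1e-10):
    """Simultaneous best responses for an ASSUMED two-player quadratic game.

    Assumed objectives (not taken verbatim from the paper):
      player 1: 0.5 x'Q1 x + (C1 y + d1)'x,  player 2: 0.5 y'Q2 y + (C2 x + d2)'y.
    """
    x, y = np.asarray(x0, dtype=float), np.asarray(y0, dtype=float)
    for k in range(1, max_iters + 1):
        # First-order optimality gives the unique minimiser of each convex quadratic.
        x_new = -np.linalg.solve(Q1, C1 @ y + d1)
        y_new = -np.linalg.solve(Q2, C2 @ x + d2)
        step = max(np.linalg.norm(x_new - x), np.linalg.norm(y_new - y))
        x, y = x_new, y_new
        if step < tol:
            return x, y, k
    return x, y, max_iters

# Hypothetical data chosen so that both interaction matrices have singular values below 1.
Q1 = np.array([[2.0, 0.5], [0.5, 2.0]])
C1 = np.eye(2)
d1 = np.array([1.0, -2.0])
Q2 = 3.0 * np.eye(2)
C2 = np.array([[1.0, 1.0], [0.0, 1.0]])
d2 = np.array([0.5, 0.5])

R1 = np.linalg.solve(Q1, C1)  # Q1^{-1} C1
R2 = np.linalg.solve(Q2, C2)  # Q2^{-1} C2
sigma_max = max(np.linalg.svd(R1, compute_uv=False).max(),
                np.linalg.svd(R2, compute_uv=False).max())

x_star, y_star, iterations = best_response_iteration(
    Q1, C1, d1, Q2, C2, d2, x0=[100.0, -50.0], y0=[-80.0, 30.0])
```

Under these assumptions, the returned pair satisfies both players' optimality conditions up to the tolerance, so it approximates a PNE of the assumed game.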

Now, we will prove Theorem 7.

Proof of Theorem 7.

Let every singular value of and be at least with . We use the fixed-point iteration of the function defined in 10. Since this tracks the iterations of Algorithm 1, if we show that this fixed-point iteration diverges, so does Algorithm 1. We observe that

where the inequality holds because the singular values of the matrix in the RHS above are all at least as large as the smallest singular value of and , which are at least , and then by applying Proposition 2.

where the inequalities are due to negatively adequate objectives and Propositions 2 and 1. Now, if , then the above becomes

But this means that the iterates successively get farther and farther from the origin: any arbitrarily large norm bound is eventually crossed, after which the iterates never return within it and never repeat. This indicates that Algorithm 1, as adapted for CCQS, diverges. ∎
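The divergence argument can likewise be seen on a small example. This is only a sketch under assumptions: the best-response step is written as z ↦ −Rz − c on the stacked vector z = (x, y), with R the block anti-diagonal matrix built from the two interaction matrices, and the data (both interaction matrices equal to 2I, zero linear terms) are hypothetical. With these choices the origin is the PNE, so starting there terminates immediately, while any other starting point doubles its norm at every step.

```python
import numpy as np

def best_response_step(z, R, c):
    """One ASSUMED simultaneous best-response step on the stacked vector z = (x, y)."""
    return -R @ z - c

# Hypothetical data: both interaction matrices equal 2*I, so every singular value is 2 > 1.
R1 = 2.0 * np.eye(2)
R2 = 2.0 * np.eye(2)
R = np.block([[np.zeros((2, 2)), R1], [R2, np.zeros((2, 2))]])
c = np.zeros(4)

# Starting away from the PNE: the norm grows by a factor of 2 per iteration.
z = np.array([1.0, 0.0, 0.0, 1.0])
norms = [np.linalg.norm(z)]
for _ in range(10):
    z = best_response_step(z, R, c)
    norms.append(np.linalg.norm(z))

# Starting exactly at the PNE (the origin here): the iterate does not move.
z_at_pne = best_response_step(np.zeros(4), R, c)
```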

Appendix B Extension for multiple players

Suppose there are multiple players . We denote the variables of player as and those of everybody except as . Now, the objective function of player is given as . For example, in a three-player game, if the objective function of player is given as , we write . Thus, .

The successive iterates, as in the proof of Theorem 1, are given by . The index refers to the player whose best response is being computed and refers to the iteration number.

We provide a proof sketch that even with players, a theorem analogous to Theorem 1 holds. In other words, when the game has positively adequate objectives, the best-response algorithm terminates. Analogous to the proof of Theorem 1, we can write the difference between two strategy profiles as . As before, we observe that if the norm of is large, then the iterate in the subsequent iteration necessarily has a smaller norm. This means that the iterates must return to a bounded region if they begin to move towards infinity. But any bounded region contains only finitely many feasible integer points, leading to cycling and hence termination. This completes the proof sketch.

Next, it is straightforward to observe that Theorem 5 translates naturally to the multiplayer case. The proof of Theorem 5 considers the strategies of player , while using the probabilities and strategies of player . In a multi-player setting, the same proof can be adapted by considering all the other players' strategies , when establishing a bound .

Finally, to provide the bounds on (which will now be denoted as when there are multiple players), we express 7g in terms of , which is the maximum distance between two valid that appear in the cycle.

Appendix C Auxiliary results

We state these auxiliary results for ready reference. The following results are available (typically in greater generality) in most standard texts on matrix analysis, for example, Horn and Johnson (2012). However, a short proof sketch is provided for the reader’s convenience.

Proposition 1.

Let be an matrix and be an matrix. Let be the singular values of and be the singular values of . Then, the singular values of the matrix are .

Proof of Proposition 1.

Consider the singular value decompositions (SVD) of matrices and . Let and . Then, , which is obtained by multiplying the block matrices. While is not diagonal, its rows and columns can be permuted according to a permutation matrix to get to get where the only non-zero elements of are along its diagonal. It can also be verified that and are unitary. The only non-zero elements of are the singular values of and , making them the singular values of , which completes the proof. ∎
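Proposition 1 can be checked numerically. The sketch below assumes, based on the permutation step in the proof, that the matrix in question is the block anti-diagonal matrix with the two given matrices as its off-diagonal blocks; the helper names are hypothetical. When the blocks are rectangular, the combined matrix has extra singular values equal to zero, so the union is padded with zeros before comparison.

```python
import numpy as np

def block_antidiagonal(A, B):
    """Return [[0, A], [B, 0]] with zero blocks sized to fit (assumed form of the matrix)."""
    m, n = A.shape
    p, q = B.shape
    return np.block([[np.zeros((m, q)), A], [B, np.zeros((p, n))]])

def padded_union_of_singular_values(A, B):
    """Singular values of A and of B together, zero-padded and sorted in decreasing order."""
    M = block_antidiagonal(A, B)
    union = np.concatenate([np.linalg.svd(A, compute_uv=False),
                            np.linalg.svd(B, compute_uv=False)])
    union = np.concatenate([union, np.zeros(min(M.shape) - union.size)])
    return np.sort(union)[::-1]

rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3))
B = rng.standard_normal((3, 2))

sv_block = np.linalg.svd(block_antidiagonal(A, B), compute_uv=False)
sv_union = padded_union_of_singular_values(A, B)
```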

Proposition 2.

Every singular value of a matrix is strictly less than if and only if for every . Every singular value of a matrix is strictly greater than if and only if for every .

Proof of Proposition 2.

Let be a matrix of dimension with real entries. Then, the singular values of are the square roots of the eigenvalues of . Let us call this matrix . Since is symmetric, all its eigenvalues are real. Moreover, from Rayleigh’s theorem, the largest value that can take is and the smallest value it can take is where and are the largest and the smallest eigenvalues of respectively. Now, observe . But the largest eigenvalue of , which is , is the square of the largest singular value of (denoted as ). Thus, we have , which implies . This proves the first part of the result. The second part can be proved using analogous arguments. ∎
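The Rayleigh-quotient argument in this proof can be illustrated as follows. This is a sketch only: the matrix is hypothetical, and the function name is an assumption. It samples random vectors and compares the ratio of the norm of the image to the norm of the vector against the smallest and largest singular values, computed as square roots of the eigenvalues of the Gram matrix.

```python
import numpy as np

def norm_ratio_bounds(M, num_samples=1000, seed=0):
    """Smallest and largest sampled values of ||M x|| / ||x|| over random nonzero x."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((M.shape[1], num_samples))
    ratios = np.linalg.norm(M @ X, axis=0) / np.linalg.norm(X, axis=0)
    return ratios.min(), ratios.max()

# Hypothetical matrix whose singular values are all below 1.
M = np.array([[0.5, 0.2], [0.1, 0.4]])

# Singular values as square roots of the eigenvalues of the symmetric matrix M'M.
eigenvalues = np.linalg.eigvalsh(M.T @ M)
sigma = np.sqrt(np.clip(eigenvalues, 0.0, None))
sigma_min, sigma_max = sigma.min(), sigma.max()

ratio_min, ratio_max = norm_ratio_bounds(M)
```

Every sampled ratio lies between the smallest and the largest singular value, so when the largest singular value is below 1 the map shrinks every nonzero vector.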

Appendix D Discussion on the rate of convergence

In the context of both CCQS and ICQS, it is important to bound the number of iterations taken by Algorithm 1 before termination. We discuss this when the game has positively adequate objectives, and hence termination is guaranteed. As in the case of descent algorithms for continuous nonlinear programs, the number of iterations is sensitive to the initial point.

We note that when we have positively adequate objectives, then the iterates converge to the neighborhood of the region where cycling occurs. In particular, we show the following. Let be a set about which cycling can possibly occur. Then, iterates will move towards a neighborhood around this set at a linearly convergent rate.

More formally, we state the same as follows. Let be some iterate generated by Algorithm 1. Let be the point in that is closest to . If for some , then we claim that . In other words, if the iterate is at least away from any point in , then there is a linear rate of convergence towards the neighborhood.

The reasoning behind this is as follows. As argued earlier, if the game ICQS has positively adequate objectives, then the matrix has all its singular values strictly less than . In particular, let each of them be less than or equal to for some . Let . Further, let be as defined in 3d, mapping each iterate in the best-response iteration to the next iterate. Now,

Here, the first inequality follows from the fact that is the closest point in to and that . The next equality is obtained from the fact that the consecutive iterates are generated by applying the function and the next equality is about substituting the function as per 3d. The following two inequalities both follow from the triangle inequality for norms. The next inequality is a consequence of Theorem 3 that the integer minimum is at a bounded distance away from the continuous minimum. The equality following that introduces a unitary matrix like before that reorders the rows of the vector as needed. The next inequality follows from the assumption that the largest singular values of both and are at most . The next equality is obtained by adding and subtracting on the RHS. The last inequality is true if , which is equivalent to saying . In other words, as claimed, if the iterates are far away from the set , then there is a linear rate of convergence, i.e., the distance goes down by a constant factor of in each iteration, until the iterates reach a neighborhood of radius around . Beyond that point, however, we believe that no guarantees are possible about when cycling occurs.

The same analysis also says that in the context of CCQS, where the term corresponding to is , linear convergence to the PNE is guaranteed.
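The behaviour discussed in this appendix can be observed on a toy integer instance. The sketch below rests on several assumptions that are not taken from the paper: the objectives have the quadratic form with linear coupling used for illustration (½ xᵀQ1x + (C1y + d1)ᵀx and its counterpart), the quadratic matrices are diagonal so that each integer best response is obtained by componentwise rounding, both players update simultaneously, and the run stops at the first repeated strategy profile. All names and data are hypothetical. Ties in rounding are broken by `np.rint` (to the even integer); either choice is optimal for a separable convex quadratic.

```python
import numpy as np

def integer_best_response(q_diag, C, d, other):
    """Integer minimiser of 0.5*x'diag(q)x + (C @ other + d)'x via componentwise rounding."""
    return np.rint(-(C @ other + d) / q_diag).astype(int)

def run_until_cycle(q1, C1, d1, q2, C2, d2, x0, y0, max_iters=1000):
    """Simultaneous integer best responses until a strategy profile repeats."""
    x, y = np.array(x0, dtype=int), np.array(y0, dtype=int)
    first_seen, history = {}, []
    for k in range(max_iters):
        key = (tuple(x.tolist()), tuple(y.tolist()))
        if key in first_seen:
            return history, first_seen[key]  # the cycle is history[first_seen[key]:]
        first_seen[key] = k
        history.append(key)
        x, y = (integer_best_response(q1, C1, d1, y),
                integer_best_response(q2, C2, d2, x))
    return history, None

# Hypothetical data: interaction matrices are 0.5*I, so all singular values are 0.5.
q1 = q2 = np.array([2.0, 2.0])
C1 = C2 = np.eye(2)
d1, d2 = np.array([-3.0, 1.0]), np.array([1.0, -3.0])

history, cycle_start = run_until_cycle(q1, C1, d1, q2, C2, d2, [1000, -1000], [500, 700])

# PNE of the continuous relaxation: solve Q1 x + C1 y = -d1 and C2 x + Q2 y = -d2.
K = np.block([[np.diag(q1), C1], [C2, np.diag(q2)]])
z_star = np.linalg.solve(K, -np.concatenate([d1, d2]))
dists = [np.linalg.norm(np.concatenate(p) - z_star) for p in history]
```

In this instance each step at most halves the distance to the continuous PNE and then adds a rounding error of norm at most 1, so the recorded distances in `dists` decrease strictly while they exceed 2, after which the iterates stay in a small neighborhood and eventually repeat.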

Appendix E Computational experiments data

For the 240 instances of the pricing game with substitutes and complements and the 500 instances of the random games, we provide the run-time data below. Each instance is identified by the unique filename that holds the data for the instance. The second column indicates the number of players in the instance. The third and fourth columns indicate the time taken by the BR and SGM algorithms, respectively, on the problem. An entry saying TL indicates that the maximum time limit was reached but no MNE was found. An entry saying Num Err indicates that the solver ran into numerical issues, as some of the matrices possibly have large entries. In particular, we report a numerical error if the integer programming solver (Gurobi) declares that the matrix used is not positive definite. This is not possible by construction, as the instances are generated by choosing for some random . However, large entries in sometimes cause Gurobi to declare that the matrix is not positive definite, and we report numerical errors in these cases. Finally, the last two columns indicate the number of iterations of the BR and SGM algorithms that ran before successful termination, reaching the time limit, or running into numerical issues.
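For readers who wish to post-process these tables, the following is a minimal parsing sketch. It assumes the six-column layout described above, with TL and Num Err appearing only in the two time columns; the record type, field names, and function names are hypothetical and not part of the paper's code. The second sample row is made up solely to exercise the TL case.

```python
from typing import NamedTuple, Optional, Tuple

class RunRecord(NamedTuple):
    instance: str
    n_players: int
    br_time: Optional[float]   # None when the entry is TL or Num Err
    br_status: str             # "ok", "TL" or "Num Err"
    sgm_time: Optional[float]
    sgm_status: str
    br_iters: int
    sgm_iters: int

def parse_time(cell: str) -> Tuple[Optional[float], str]:
    """Convert a time cell to (value, status), keeping TL / Num Err as a status."""
    cell = cell.strip()
    if cell in ("TL", "Num Err"):
        return None, cell
    return float(cell), "ok"

def parse_row(line: str) -> RunRecord:
    """Parse one pipe-delimited table row into a RunRecord."""
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    name, n_players, t_br, t_sgm, it_br, it_sgm = cells
    br_time, br_status = parse_time(t_br)
    sgm_time, sgm_status = parse_time(t_sgm)
    return RunRecord(name, int(n_players), br_time, br_status,
                     sgm_time, sgm_status, int(it_br), int(it_sgm))

# First row is taken from the table below; the second is a made-up row for illustration.
real_row = parse_row("| asymmMktGame_N2_1.json | 2 | 0.2172 | 0.1425 | 4 | 6 |")
made_up_row = parse_row("| hypothetical_instance.json | 3 | 1.5 | TL | 5 | 40 |")
```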

E.1 Pricing with substitutes and complements

| Instance name | nPlay | BR time | SGM time | BR iterations | SGM iterations |

|---|---|---|---|---|---|

| asymmMktGame_N2_1.json | 2 | 0.2172 | 0.1425 | 4 | 6 |

| asymmMktGame_N2_2.json | 2 | 0.1812 | 0.1904 | 4 | 5 |

| asymmMktGame_N2_3.json | 2 | 0.3832 | 0.3613 | 5 | 7 |

| asymmMktGame_N2_4.json | 2 | 0.1808 | 0.2887 | 5 | 7 |

| asymmMktGame_N2_5.json | 2 | 0.3706 | 0.5868 | 4 | 5 |

| asymmMktGame_N2_6.json | 2 | 0.138 | 0.1444 | 4 | 5 |

| asymmMktGame_N2_7.json | 2 | 0.4024 | 0.9749 | 4 | 6 |

| asymmMktGame_N2_8.json | 2 | 0.2808 | 0.5777 | 4 | 5 |

| asymmMktGame_N2_9.json | 2 | 0.9021 | 0.5659 | 4 | 6 |

| asymmMktGame_N2_10.json | 2 | 0.1653 | 1.3944 | 4 | 6 |

| asymmMktGame_N2_11.json | 2 | 1.1006 | 0.3641 | 4 | 6 |

| asymmMktGame_N2_12.json | 2 | 0.1449 | 0.5067 | 4 | 6 |

| asymmMktGame_N2_13.json | 2 | 0.8202 | 0.9078 | 5 | 7 |

| asymmMktGame_N2_14.json | 2 | 1.4994 | 0.9507 | 4 | 5 |

| asymmMktGame_N2_15.json | 2 | 0.2634 | 0.1862 | 6 | 5 |

| asymmMktGame_N2_16.json | 2 | 0.1037 | 0.1353 | 4 | 5 |

| asymmMktGame_N2_17.json | 2 | 0.7951 | 2.0591 | 4 | 6 |

| asymmMktGame_N2_18.json | 2 | 1.4928 | 1.1661 | 4 | 6 |

| asymmMktGame_N2_19.json | 2 | 0.3455 | 1.1541 | 3 | 5 |

| asymmMktGame_N2_20.json | 2 | 0.5566 | 0.89 | 4 | 5 |

| asymmMktGame_N2_21.json | 2 | 0.5051 | 0.5074 | 4 | 5 |

| asymmMktGame_N2_22.json | 2 | 0.9255 | 0.3385 | 3 | 5 |

| asymmMktGame_N2_23.json | 2 | 1.8685 | 0.8177 | 4 | 6 |

| asymmMktGame_N2_24.json | 2 | 1.1305 | 0.9827 | 4 | 7 |

| asymmMktGame_N2_25.json | 2 | 0.3919 | 1.0244 | 4 | 7 |

| asymmMktGame_N2_26.json | 2 | 0.6474 | 0.5421 | 4 | 6 |

| asymmMktGame_N2_27.json | 2 | 0.3688 | 0.5998 | 4 | 6 |

| asymmMktGame_N2_28.json | 2 | 0.2082 | 0.3656 | 3 | 5 |

| asymmMktGame_N2_29.json | 2 | 0.2732 | 0.5181 | 4 | 6 |

| asymmMktGame_N2_30.json | 2 | 0.5284 | 1.5531 | 4 | 5 |

| asymmMktGame_N2_31.json | 2 | 1.4595 | 0.89 | 4 | 6 |

| asymmMktGame_N2_32.json | 2 | 0.2795 | 0.3696 | 4 | 5 |

| asymmMktGame_N2_33.json | 2 | 0.392 | 0.6649 | 4 | 6 |

| asymmMktGame_N2_34.json | 2 | 1.4271 | 0.5682 | 4 | 5 |

| asymmMktGame_N2_35.json | 2 | 0.5249 | 1.1689 | 5 | 8 |

| asymmMktGame_N2_36.json | 2 | 0.2552 | 2.0878 | 4 | 6 |

| asymmMktGame_N2_37.json | 2 | 2.0422 | 1.1434 | 4 | 6 |

| asymmMktGame_N2_38.json | 2 | 0.0891 | 0.0963 | 4 | 5 |

| asymmMktGame_N2_39.json | 2 | 0.0506 | 0.0941 | 3 | 5 |

| asymmMktGame_N2_40.json | 2 | 0.059 | 0.1413 | 4 | 6 |

| asymmMktGame_N2_41.json | 2 | 0.0608 | 0.1537 | 4 | 7 |

| asymmMktGame_N2_42.json | 2 | 0.0668 | 0.1218 | 3 | 5 |

| asymmMktGame_N2_43.json | 2 | 0.0877 | 0.0879 | 5 | 5 |

| asymmMktGame_N2_44.json | 2 | 0.0524 | 0.0787 | 4 | 5 |

| asymmMktGame_N2_45.json | 2 | 0.0574 | 0.0781 | 4 | 5 |

| asymmMktGame_N2_46.json | 2 | 0.0601 | 0.0781 | 4 | 5 |

| asymmMktGame_N2_47.json | 2 | 0.049 | 0.0995 | 4 | 6 |

| asymmMktGame_N2_48.json | 2 | 0.1138 | 0.1121 | 4 | 5 |

| asymmMktGame_N2_49.json | 2 | 0.075 | 0.0916 | 4 | 5 |

| asymmMktGame_N2_50.json | 2 | 0.0673 | 0.1295 | 5 | 7 |

| asymmMktGame_N2_51.json | 2 | 0.0693 | 0.1177 | 4 | 6 |

| asymmMktGame_N2_52.json | 2 | 0.0941 | 0.167 | 5 | 7 |

| asymmMktGame_N2_53.json | 2 | 0.0933 | 0.1605 | 5 | 7 |

| asymmMktGame_N2_54.json | 2 | 0.0594 | 0.0807 | 4 | 5 |

| asymmMktGame_N2_55.json | 2 | 0.0663 | 0.0752 | 4 | 5 |

| asymmMktGame_N2_56.json | 2 | 0.1091 | 0.0791 | 3 | 5 |

| asymmMktGame_N2_57.json | 2 | 0.0952 | 0.3277 | 4 | 6 |

| asymmMktGame_N2_58.json | 2 | 0.1663 | 0.1887 | 4 | 5 |

| asymmMktGame_N2_59.json | 2 | 0.4696 | 0.5084 | 5 | 7 |

| asymmMktGame_N2_60.json | 2 | 0.363 | 0.6538 | 4 | 6 |

| asymmMktGame_N2_61.json | 2 | 0.1011 | 0.1741 | 4 | 6 |

| asymmMktGame_N2_62.json | 2 | 0.0979 | 0.1767 | 4 | 7 |

| asymmMktGame_N2_63.json | 2 | 0.3333 | 0.1599 | 4 | 5 |

| asymmMktGame_N2_64.json | 2 | 0.0902 | 0.1394 | 4 | 6 |

| asymmMktGame_N2_65.json | 2 | 0.0752 | 0.3657 | 4 | 6 |

| asymmMktGame_N2_66.json | 2 | 0.1045 | 0.1041 | 4 | 5 |

| asymmMktGame_N2_67.json | 2 | 0.077 | 0.3213 | 4 | 5 |

| asymmMktGame_N2_68.json | 2 | 0.9419 | 0.5485 | 5 | 7 |

| asymmMktGame_N2_69.json | 2 | 0.7156 | 0.7363 | 5 | 6 |

| asymmMktGame_N2_70.json | 2 | 0.4242 | 0.7839 | 5 | 6 |

| asymmMktGame_N2_71.json | 2 | 0.2864 | 0.3569 | 4 | 5 |