Beyond Linear Subspace Clustering: A Comparative Study of Nonlinear Manifold Clustering Algorithms

Abstract

Subspace clustering is an important unsupervised clustering approach. It is based on the assumption that the high-dimensional data points are approximately distributed around several low-dimensional linear subspaces. The majority of the prominent subspace clustering algorithms rely on the representation of the data points as linear combinations of other data points, which is known as a self-expressive representation. To overcome the restrictive linearity assumption, numerous nonlinear approaches were proposed to extend successful subspace clustering approaches to data on a union of nonlinear manifolds. In this comparative study, we provide a comprehensive overview of nonlinear subspace clustering approaches proposed in the last decade. We introduce a new taxonomy to classify the state-of-the-art approaches into three categories, namely locality preserving, kernel based, and neural network based. The major representative algorithms within each category are extensively compared on carefully designed synthetic and real-world data sets. The detailed analysis of these approaches unfolds potential research directions and unsolved challenges in this field.

Keywords: subspace clustering, nonlinear subspace clustering, manifold clustering, Laplacain regularization, Kernel learning, unsupervised deep learning, neural networks

1 Introduction

Understanding and processing high-dimensional data is a key component of numerous applications in many domains including machine learning, signal processing and computer vision. However, analyzing high-dimensional data using classical data mining algorithms is not only challenging due to computational costs but it can also easily lead to the well-known problem of the curse of dimensionality [8]. Luckily, in most of applications, the data often have fewer degrees of freedom than the ambient dimension and they can be approximately represented by a small number of features; see [106, 105]. This led to the development of a vast variety of algorithms for the long-standing problem of extracting latent structures from high-dimensional data; see for example [109] and references therein.

The main focus of the majority of these algorithms is to fit a single low-dimensional linear subspace to the data, with principal component analysis (PCA) being the most well-known pioneer algorithm in this area [46]. However, the data often belongs to multiple categories with different intrinsic structures, and modeling the data using only one low-dimensional subspace might be too restrictive [108]. In fact, in many applications, the data is better represented by multiple subspaces. Representing the data using a union of multiple subspaces gave rise to linear subspace clustering [15, 9]; see [107] for a survey paper.

Definition 1 (Linear subspace clustering (linear SC)).

Let be the input high-dimensional data, with -dimensional data points as its columns. Suppose the data points are distributed around unknown linear subspaces with intrinsic dimensions , respectively, such that for all . The problem of subspace clustering is defined as segmenting the data points based on their corresponding subspaces and estimating the parameters of each subspace.

In the literature, linear SC is usually referred to as SC but, to avoid any confusion, we refer to it as linear SC in this survey. Linear SC is a clustering framework where the data points are grouped together based on the underlying subspaces they belong to. In other words, the similarity between the data points is measured by how well they fit within a low-dimensional linear subspace. Modeling data with multiple subspaces has many applications in image and signal processing. In fact, data points are often collected from multiple classes/categories, and extracting latent low-dimensional structures within each class/category results in more meaningful and more compressed representations. For example, given a collection of facial images taken with variations in the lightening, cluserting these images according to the person they represent can be modeled as a linear SC problem. In fact, under the Lambertian surface assumption, facial images of a single individual under different illumination conditions lie on a roughly nine-dimensional subspace which has far less effective dimensions than the original ambient space (usually an image consists of thousands of pixels) [4]. Hence, facial images of various people under different lightening conditions can be approximated by multiple low-dimensional subspaces. Other notable examples of data that can be approximated by multiple low-dimensional subspaces include segmenting trajectories of moving objects in a video [100], clustering images of hand-written digits [32], and partitioning sequences of video frames into semantic parts [109].

Driven by this wide range of applications, many efficient algorithms for linear SC have been developed. These algorithms are divided into four categories [107, 21]: (i) iterative, (ii) statistical, (iii) algebraic, and (iv) spectral clustering based approaches. Let us briefly describe these four categories:

-

(i)

Iterative approaches were among the first ones for linear SC [9, 36, 104]. Inspired by iterative refinement in centroid-based clustering, such as k-means, two steps are carried out alternatively: assigning each data point to the closest subspace, and updating the parameters of each subspace based on the points assigned to it (e.g., using the truncated singular value decomposition). However, iterative approaches are sensitive to initialization and parameters such as the dimensions and the number of subspaces (these are usually not available in most applications).

-

(ii)

Statistical approaches [99, 28], which suffer from the same drawbacks as iterative ones, usually assume that the distribution of the data within each subspace is Gaussian, and hence the linear SC problem is mapped into the iterative problem of fitting a mixture of Gaussian distribution to the data using expectation maximization.

-

(iii)

Some approaches use algebraic matrix factorization to perform the data segmentation [16, 47]. These approaches are not only sensitive to noise but are also based on the assumption that the underlying subspaces are independent. In related algebraic-geometric approaches [108, 103], the association of the data points to the subspaces are revealed by fitting a polynomial to the data. These approaches are computationally expensive and sensitive to noise.

-

(iv)

Spectral clustering based algorithms are the most popular and successful linear SC approaches. They have received significant attention over the last decade. These approaches are based on recent advances in sparse and low-rank representation [39, 118, 146]. They first learn a directed weighted graph representing the interaction between the data points, and then apply spectral clustering to segment this graph; see Section 2 for more details.

Even though linear SC approaches have achieved impressive performances in several applications, the global linearity assumption is somewhat strong. Assuming that the data points lie close to multiple linear subspaces is limiting and might be violated in some applications. In order to extend the applicability of linear SC in real-world problems, there has been numerous attempts to generalize linear SC for data lying on the union of nonlinear subspaces or manifolds. This is the main topic of this survey paper.

Our contributions

The main contributions of this paper are summarized as follows:

-

•

We propose a new taxonomy to classify nonlinear SC algorithms, divided them into three categories: (1) locality preserving, (2) kernel based, and (3) neural network based

-

•

We provide a comprehensive overview of these nonlinear SC algorithms.

-

•

We numerically analyze and compare the representative algorithms in each category. This survey can be considered as a guiding tutorial for understanding and developing nonlinear SC algorithms.

Outline of the survey

The rest of the paper is organized as follows. Since spectral clustering based linear SC algorithms are at the core of the majority of existing nonlinear SC methods, we briefly introduce and review the most important ones in Section 2. Section 3 provides a detailed overview of nonlinear SC methods, based on our new taxonomy that divides them into three categories. Section 4 discusses the computational cost of these methods, while Section 5 provides a numerical comparison of the main representative methods on synthetic and real-world data sets. In Section 6, we discuss the existing challenges in dealing with nonlinear structures in data, and future research directions. We conclude the paper in Section 7.

2 Linear subspace clustering based on spectral clustering

Among the wide variety of linear SC approaches, the current state-of-the-art algorithms are based on spectral clustering. As illustrated in Figure 1, these algorithms follow a two-step strategy:

-

•

In the first step, an affinity matrix is constructed: each vertex in the corresponding graph corresponds to a data point while edges connect similar data points.

-

•

In the second step, spectral clustering is applied on the affinity matrix to segment the data points.

In the next two sections, we describe these two steps in more details.

2.1 Building the affinity matrix

The first and undoubtedly main step of linear SC algorithms based on spectral clustering is to build an affinity matrix. The affinity matrix should reveal the pairwise similarities between the data points, that is, the data points from the same cluster/subspace should be highly connected with high similarities, as opposed to points from different subspaces. The construction of this affinity matrix is typically carried out using a self-expressive representation; this is discussed in Section 2.1.1. It is sometimes followed by a post-processing step to polish the obtained pairwise similarities; this is discussed in Section 2.1.2.

Remark 1.

Building the affinity matrix is not only useful for clustering. It can be used in other contexts where it is useful to model the input data as a graph by capturing pairwise relationships between the data points [14]. In other words, this first step of spectral based SC approaches is also used in other applications that model and analyze the structure within the data [87].

2.1.1 Self-expressive representation

Constructing a pairwise affinity matrix that reflects the multiple subspace structure of the data points is the main challenge in the linear SC algorithms based on spectral clustering. The affinity matrix is often constructed by exploiting the self-expressiveness property of the data points. This property, which is known as collaborative representation in the sparse representation literature [141], is based on the fact that each data point can be represented as a linear combination of other data points in the same subspace. Mathematically, for all ,

| (1) |

where is the coefficient matrix, is the -th diagonal entry of , and is the -th column of . The condition eliminates the trivial solution of expressing each point by itself. However, there are typically infinitely many solutions to (1) and many of them might not be subspace preserving, that is, all data point might not be expressed using a linear combination of data points belonging only to the same subspace. Let us formally define this concept.

Definition 2 (Subspace preserving representation).

Given the data matrix drawn from the union of subspaces , let be the coefficient matrix corresponding to the output of a linear SC algorithm based on self-expressiveness; see (1). The matrix is subspace preserving if for any data point , for all such that . In other words, for all , the nonzero elements in only correspond to data points from the same subspace as .

To fulfill the goal of linear SC and reveal the association of each data point to the underlying subspace, the coefficient matrix should be (approximately) subspace preserving. Hence, to ensure that the obtained coefficient matrix is subspace preserving, several regularizations on the coefficient matrix are used in the literature, including sparsity [21] and low-rankness [59]. In general, the linear SC problem based on self-expressiveness is formulated as follows:

| (2) | ||||

| such that |

where is a regularization function for the coefficient matrix (see below), models the noise, is a regularization function for modeling the noise (for example the norm for sparse gross noise, or the Frobenius norm for Gaussian noise), and is the vector containing the diagonal entries of . The conditions under which the obtained coefficient matrix is subspace preserving strongly depends on the regularization function . Table 1 summarizes the most common regularization functions and the theoretical conditions for the corresponding coefficient matrix to be subspace preserving. In this table , , and indicate the norm (the number of nonzero entries), the component-wise norm (the sum of the absolute value of the matrix entries), the nuclear norm (the sum of singular values), and the squared Frobenius norm (the sum of the squares of the matrix entries), respectively.

| Method | Regularization Function | Available subspace preserving guarantees | ||

|---|---|---|---|---|

|

independent, disjoint and intersecting subspaces in noiseless and noisy cases | |||

|

all arrangements of noiseless distinct subspaces | |||

|

noiseless independent subspaces | |||

|

noiseless independent subspaces | |||

|

Not available | |||

|

noiseless orthogonal subspaces | |||

|

noiseless independent subspaces | |||

|

independent and disjoint subspaces for the noiseless case with strong dependency on the value of the parameter |

Effect of regularization on subspace preserving representations

The difficulty of linear SC depends on several factors, these include the arrangement of the subspaces, the separation between subspaces, the distribution of the data points within each subspace, the number of points per subspace, and the noise level. There are three main arrangements of subspaces which play a key role in identifying the subspace recovery conditions: independent, disjoint, and intersecting (or overlapping) subspaces. These arrangements are defined as follows:

Definition 3 (Independent subspaces).

A collection of subspaces are said to be independent if , where denotes the direct sum between subspaces, and is the dimension of .

Definition 4 (Disjoint subspaces).

A collection of subspaces are disjoint if and for all .

Definition 5 (Intersecting subspaces).

A collection of subspaces are intersecting/overlapping if for some .

Independent subspaces (orthogonal subspaces being a special case) are the easiest to separate, and hence most regularizations are guaranteed to be subspace preserving in this case, at least in noiseless conditions; see Table 1. An example is two distinct lines in a plane.

Clustering data from disjoint subspaces is a more challenging scenario. An example are three distinct lines in a plane. Sparsity regularization based on the and norms is the only one proven to be subspace preserving, under some conditions; see Table 1. These conditions depend on the separation between the subspaces and the distribution of the data points within each subspace. With sufficiently separated subspaces and well-spread data points (not skewed towards a specific direction), sparsity regularization is guaranteed to provide subspace preserving coefficients in noiseless and noisy cases. For detailed theoretical discussions on this topic, we refer the interested reader to [21, 116].

Intersecting/overlapping subspaces is the most general subspace arrangement for which there is no particular assumption on the subspaces, and any two subspaces can have a nontrivial intersection [116, 95]. Note that data points belonging to the intersection of two subspaces lead to non-unique membership assignments. Similar to disjoint subspaces, sparsity is the only regularization that is proven to be effective for clustering intersecting subspaces. Two distinct two-dimensional planes in three dimensions is an example of intersecting subspaces.

The two most widely used algorithms for self-expressive based linear SC are the following:

-

•

Sparse Subspace Clustering (SSC) [21] uses the component-wise norm, that is, , as a convex surrogate of the norm to enhance the sparsity of ,

-

•

Low-Rank Representation (LRR) [59] uses the nuclear norm, that is, where is the th singular value of , to promote to have low rank.

SSC is the pioneer work in this context, and has strong theoretical guarantees in noisy and noiseless cases for independent, disjoint and intersecting subspaces [21, 95, 96, 136]. LRR has shown competing results with SSC. However, its behavior in noisy and disjoint subspaces is still not well understood theoretically111Although the nuclear norm is a convex surrogate of the rank, it has different subspace preserving properties; see [66, Example 1] for a numerical example. This is in contrast to the strong theoretical guarantees of the norm regularizations and its corresponding convex surrogate, the norm; see Table 1. [117].

Remark 2 (Oversegmentation).

Accurate SC not only depends on subspace preserving representations but also on the connectivity of data points within the same subspace. Even though sparsity regularization has strong theoretical guarantees compared to other regularizations, it not only emphasizes the sparsity of between-cluster connections, but also the sparsity of the inner-cluster connections. Hence, it is possible that the data points from the same subspace form multiple connected components [73], which leads to the creation of more clusters than necessary, that is, oversegmentation. The connectivity issue is less apparent in other regularizations as they inherently promote denser representations.

2.1.2 Post-Processing

In order to enhance the quality of the coefficient matrix , several post-processing strategies exist. The goal of these approaches is to decrease the number of wrong between-subspaces connections and/or strengthening the within-subspaces connections. Even though there is no generally accepted strategy in the literature, a few common post-processing methods are as follows:

-

1.

Normalizing the columns of the coefficient matrix as where is the infinity norm [21]. This can be helpful when some data points have drastically different norms compared to other data points.

-

2.

Applying a hard thresholding operator on each column of the coefficient matrix by keeping only the largest entries in absolute value [84]. This post-processing is based on the property of Intra-subspace Projection Dominance (IPD) which states that the entries corresponding to data points in the same subspace are larger (in absolute value) than the ones corresponding to data points in different subspaces.

-

3.

Performing an ad-hoc post-processing strategy using multiple steps. First, a percentage of the top entries in each column of the coefficient matrix are preserved. Next the shape interaction matrix method [15] is applied to remove the noise; it consists in a low-rank approximation of based on the skinny singular value decomposition. Finally each entry is raised to some power larger than one (the value 4 is the often used) in order to intensify the dominant connections. This strategy depends on several parameters with no explicit theoretical justification. However, it is widely used in nonlinear SC approaches based on neural networks [41, 42].

-

4.

Finding good neighbors which correspond to the key connections in the coefficient matrix [125]. This approach is based on three parameters: . First, only the largest coefficients are kept for each data point. Then, only a subset of these connections is preserved based on the notion of good neighbors. A good neighbor is defined as a data point with at least (usually ) common data points inside the largest connections. The good neighbors of each data point with the largest coefficients form the final selected subset. A disadvantage of this approach is that it depends on parameters which are difficult to tune. (Note that using and reduces to the second post-processing approach described above.)

2.2 Spectral clustering using the coefficient matrix

After obtaining the coefficient matrix using (2), the next step is to infer the clusters using spectral clustering [94, 75]; see [110] for a tutorial. In fact, the entries in the coefficient matrix can be interpreted as the links between the data points: means that the data point is used for expressing data point , and hence it is likely that the data points and belong to the same subspace. Therefore, the coefficient matrix corresponds to a directed graph structure where the nodes of the graph () are the data points and the weights of the edges () are determined by the entries in . Using this interpretation, the problem of linear SC is mapped into the problem of segmenting the graph . The symmetric adjacency matrix is constructed as and then the celebrated algorithm of spectral clustering is applied on the affinity matrix to partition the graph.

An important advantage of spectral based approaches is that they do not need to know the dimensions () of the subspaces. Moreover, by utilizing spectral clustering, they can estimate the number of clusters by analyzing the spectrum of the Laplacian matrix corresponding to the adjacency matrix [110]. Furthermore, robustness of spectral clustering to small perturbations is advantageous for correct clustering especially with unavoidable slight violation of subspace preservation in the coefficient matrix for real-world noisy cases [116].

Linear SC based on exploiting the self-expressive property initiated an extensive amount of research in various directions. Several extensions of self-expressive based SC approaches have been proposed in the past decade to overcome notable challenges such as scalability [72, 11, 2, 133], improving robustness [34, 65], multi-view data clustering [23, 52], and the ability to deal with missing data [54]. However, a crucial limit to linear SC is the linearity assumption. In the next section, we review the approaches that were proposed to segment data points that are drawn from nonlinear manifolds.

3 Nonlinear subspace clustering

Linear SC algorithms often fail in dealing with data belonging to several nonlinear manifolds. This is expected since these approaches only consider the global linear relationship between data points. For nonlinear manifolds, the local relationship between data points plays a more important role, as we will explain later in this section. But first, let us define the problem of nonlinear subspace clustering [20].

Definition 6 (Nonlinear subspace clustering (nonlinear SC)).

Let be the input data. Suppose the data points lie on manifolds with intrinsic dimensions222 A manifold has dimension if every data point has a neighborhood homeomorphic to the Euclidean space in . For example, a circle is a one-dimensional manifold as the neighborhood of each point is locally a segment [18]. such that for all . The problem of nonlinear SC is defined as segmenting the data points based on their corresponding manifolds and obtaining a low-dimensional embedding of the data points within each manifold.

Nonlinear SC is also referred to as manifold clustering in the literature [20]. We mainly use the nonlinear SC term to emphasize that this survey focuses on the nonlinear approaches that extend the concepts in linear SC for data on nonlinear manifolds. Nonlinear SC approaches can be divided into three main categories:

-

1.

locality preserving: they exploit the local geometric structure of the manifold to bridge the gap between thinking globally, that is, using the global information from the whole data set, and fitting locally, that is, using spatial local information around each point.

-

2.

kernel based: they use implicit but predefined nonlinear mappings to transfer the data into a space where the linearity assumption is more likely to be satisfied.

-

3.

neural network based: they use the recent advances in structured neural networks to learn a nonlinear mapping of the data that respects the union of linear SC structure.

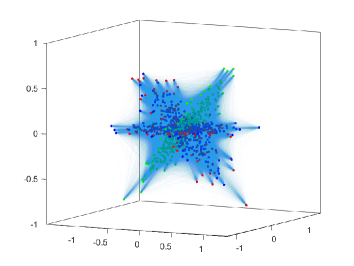

Figure 2 illustrates these three categories, along with several subcategories. In the following three sections, the major approaches within each category are presented.

3.1 Locality preserving nonlinear subspace clustering

A major disadvantage of linear SC approaches is that they are not faithful to the data structure in the high-dimensional space. In particular, the self-expressiveness property does not guarantee that the nearby points in the ambient space have similar representations in the latent coefficient space [114], that is, a small value of does not imply that is small. However, preserving the local configuration of the nearest neighbors of each point in the original ambient space is essential in being faithful to the data structure in nonlinear manifolds [90]. In order to reflect the local structures, there are three major categories: graph Laplacian regularization, avoiding cannot links, and tangent space approximation.

In fact, the root of these approaches comes from the single manifold learning algorithms based on preserving locality relationships among data points [91, 90, 6]. Inspired by these classical algorithms, two assumptions are needed for these approaches to work (although they are not always stated explicitly):

-

(i)

the underlying manifolds are smooth, and

-

(ii)

the manifolds are well-sampled, that is, there are sufficiently many data points sampled in the neighborhood of each data point.

Subsequently, these two assumptions imply that that each data point and the nearby points on the same manifold lie approximately on a local linear patch333In single manifold learning, it is proven that under the smoothness and well-sampled assumptions, for a d-dimensional manifold, each data point along with its neighbors define an approximately linear patch of the manifold [91].. Hence, the nonlinear structure of the manifolds can be captured by the local linear reconstruction of the data points. Let us now present the approaches within each of the three categories in the following sections.

3.1.1 Graph Laplacian regularization

The methods in this category exploit the local structure of the data to construct the matrix . To do so, a pairwise local similarity matrix is first constructed from the data points. The matrix is symmetric, and its -th entry measures how similar or close the data points and are in the ambient space. The entries of are computed in different ways, including the following:

-

1.

Binary k-nearest neighbors (K-NN): is equal to one if is among the nearest neighbor of , or vice versa [37], that is,

where computes the nearest samples of the data point .

- 2.

-

3.

Weighted K-NN is a combiantion of the two strategies described above [60]:

where is a similarity function, such as Gaussian kernels, between two input vectors and .

For a recent comprehensive overview of similarity and neighborhood construction algorithms, we refer the interested reader to [85].

Once the matrix is constructed, the following regularizer for is constructed using this local similarity information:

| (3) |

Let the Laplacian matrix corresponding to be defined as where is the diagonal matrix with for all . The function (3) can be rewritten as:

| (4) | ||||

where denotes the trace of a matrix. This regularizer is known as the Graph Laplacian or manifold regularization. It is used within existing global linear SC optimization problems, that is, it is added in the objective function of the optimization problem (2) with a proper penalty parameter. Manifold regularization promotes a grouping effect, which is defined as follows.

Definition 7 (Grouping Effect [37]).

Given the input data matrix , let be the obtained coefficient matrix corresponding to by an SC approach. The matrix has the grouping effect if goes to zero as and get closer, that is, as goes to zero.

Intuitively, the grouping effect encourages locally similar points (that is, pairs of points whose corresponding value in is large) to have similar coefficient representations.

The grouping effect of Graph Laplacian regularizers generates graphs that better represent the structure of the data and hence are better connected [37]. This might benefit SC approaches based on self-expressiveness as they use spectral clustering for the final step [110]. Note that the Graph Laplacian regularization is based on the assumption that the representation coefficient vector corresponding to each sample, that is, for each , is changing smoothly on each manifold [7]. Hence, nearby data points in the ambient space have similar coefficient vectors. This regularization also implicitly assumes that the data points from different clusters are not likely to be near each other and that sufficiently many data points are sampled from each manifold.

Graph Laplacian regularization can be used exclusively with the reconstruction error, , as in the Smooth Representation (SMR) algorithm [37]. SMR solves the following optimizatin problem:

Note that SMR does not enforce explicitly, because the regularization prevents the identity matrix to be an optimal solution, for sufficiently large, since the identity matrix satisfies for all .

It is proven that Graph Laplacian regularization of the coefficient matrix leads to subspace preserving coefficients for noiseless linear independent subspaces [37]. Graph Laplacian regularization can be used in combination with other coefficient regularizations such as the norm [129], or the nuclear norm [60, 131]. In particular, using the norm along with Graph Laplacian regularization leads to the algorithm referred to as Laplacian Regularized -SSC (LR-SSC) which solves the following optimization problem [129]:

However, as we will see in the numerical experiments in Section 5, promoting the smoothness of the representation over the manifold might not be sufficient to recover the complex nonlinear structures. Moreover, these methods are rather sensitive to the choice of the similarity function used to construct the pairwise local similarity matrix .

3.1.2 Avoiding cannot-links

Instead of encouraging locally nearby points to have similar coefficient representations, as presented in the previous section, one could encourage the entries of corresponding to faraway points to have small/zero values. In other words, data points should not use faraway points in their representation, which we refer to as avoiding cannot-links. The name “cannot-links” is borrowed from the graph learning and spectral clustering literature to indicate the pairwise constraints on the links that are encouraged/enforced to be avoided [68, 119].

Avoiding cannot-links attracted less attention compared to the Graph Laplacian regularization methods. Generally, the cannot-links refer to graph links/coefficients corresponding to distant points that are specified based on a dissimilarity criterion. There are two strategies to integrate pairwise cannot-links constraints:

-

1.

Explicit elimination of faraway samples: A simple approach to prevent cannot-links is to explicitly add them as proper constraints in the optimization problem; for example, in [155], Zhuang et al. use the following constraint: for all ,

A similar approach was proposed for linear SSC [31]. Instead of additional explicit constraints on the entries of the coefficient matrix, the self-expressiveness term is modified such that each sample is represented by the locally nearby data points.

-

2.

Implicit penalizing of distant pairwise connections: Cannot-links information can be added as a weighted penalty term. For example, in [148], the following regularization term was proposed:

This term is similar to (3). However, for any two faraway data points and , the corresponding is encouraged to be small while this is not the case for graph Laplacian regularization (for which will be close to zero). In other words, in graph Laplacian regularization, the nearby samples are encouraged to have similar coefficient representation but there is no explicit penalty on the coefficient representations corresponding to faraway data points. In fact, graph Laplacian regularization tend to produce dense coefficient matrices (see the numerical results in Section 5.1), hence do not have the disadvantage of oversegmentation that avoiding cannot-links may have [2].

Avoiding non-local data points in self-expression might be intuitively similar to encouraging spatially close connections, as with the Graph Laplacian regularization. However, they lack the grouping effect of Graph Laplacian regularizations.

3.1.3 Tangent space approximation

The approaches in this category rely on the estimation of the local tangent space for each data point, by fitting an affine subspace in the neighborhood of each data point. A simple strategy to approximate such tangent spaces is to consider a fixed predefined number of nearest neighbors for each sample, and approximate the tangent space by fitting an affine subspace to the data point and its neighbors. The crucial question is how to define the neighborhood of each data point in order to balance two goals: large enough to capture the local geometry of the manifolds, and small enough to avoid data points from other manifolds/clusters so as to preserve the curvature information of the underlying manifold.

Despite the fact that choosing a fixed value for neighborhood size with no prior knowledge is challenging, Deng et al. [19] proposed a multi-manifold embedding approach based on the angles between approximated tangent spaces using a fixed neighborhood size. In particular, they assumed that if two data points belong to the same manifold, the angle between their corresponding tangent space is small whereas the angle is large if they belong to different manifolds. In contrast to estimating tangent space using fixed neighborhood size, a different strategy is to allow the size of the neighborhood to be arbitrary. This can be achieved via sparse representations in order to estimate the neighboring samples and simultaneously calculate the contribution weight of each neighborhood sample. These weights would be helpful to reflect the clustering information as well. In this section, we focus on this particularly effective strategy.

Sparsity based tangent estimation for manifold clustering

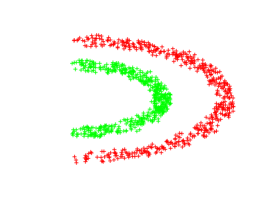

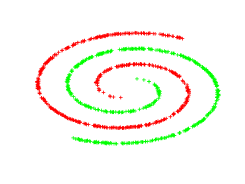

In order to obtain the neighborhood of each point, Elhamifar and Vidal [20] assumed that the tangent space of the manifold it belongs to can be approximated by the low-dimensional affine subspace constructed using a few neighboring points from the same manifold. Based on this assumption, they proposed an approach dubbed Sparse Manifold Clustering and Embedding (SMCE). It relies on two main principles, sparsity and locality, to estimate the tangent spaces.

For an illustration of the main idea behind SMCE, consider the example in Figure 3, showing two clusters of samples (gray circles) and their underlying manifolds. Suppose we want to approximate the tangent space for the sample . Based on the principle of sparsity, the sparsest affine representation for the sample should contain two other distinct samples and among all possible combinations of two samples, is close to the affine span of and . To avoid representing using the affine subspace containing , which belongs to the other manifold, the locality principle comes into effect to favor the affine span of and . However, it should be noted that locality alone is not helpful to avoid representing using the points which are spatially close to . This example illustrates the necessity of both sparsity and locality in the approximation of local tangent spaces using the samples from the same manifold.

More precisely, SMCE works as follows. To construct the tangent space to , the normalized sample differences is first constructed as follows:

Based on these directions, the affine subspace is represented using

where is the all-one vector. The goal is to estimate parameters of the local subspace such that it has minimal distance to the unknown target tangent space. Hence, the coefficients in the linear combination are calculated such that the distance between and the affine subspace is minimized:

However, this formulation does not guarantee that the data points are expressed using locally nearby points from the same manifold. To estimate the tangent space using few nearby samples, SMCE optimizes the following problem for each point :

| (5) |

where is called the proximity inducing matrix. The matrix is a positive-definite diagonal matrix. The entries of are defined by normalized distance between each data point and for , that is,

Hence the diagonal entries of that correspond to closer samples to have smaller values, allowing the corresponding entries of to be larger. In contrast, the diagonal entries of which correspond to farther samples have larger values, hence favoring smaller values of . The nonzero entries of the vector indicate the points that are estimated to be on the same manifold as . Finally, the coefficient matrix is constructed as follows: for all , and

| (6) |

Applying spectral clustering on the corresponding symmetrized affinity matrix of reveals the final manifold clustering assignments.

A very similar approach to SMCE was presented in [144]. However, the authors enforced locality and sparsity in separate steps. First a sparse representation of the data points using the affine directions is computed (without the proximity inducing matrix), and then the obtained sparse representation is weighted using the spatial locality information.

3.2 Kernel based non-linear subspace clustering

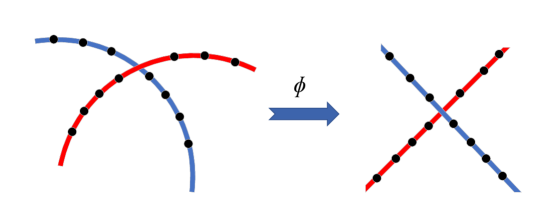

Kernel methods are commonly used to identify nonlinear structures and relationships among data points. They map the data from the input space to the reproducing kernel Hilbert space where the points might be better represented for a specific task. In nonlinear SC, the goal is to map the data points through a nonlinear transformation such that the linearity assumption is better satisfied; see Figure 4 for a simple illustration.

However, instead of explicitly using a nonlinear function, Kernel methods rely on the kernel trick to implicitly apply the nonlinear transformation. The kernel trick represents the data through pairwise similarity functions. It avoids the often computationally expensive nonlinear transformations (feature maps), that is, the explicit computation of the coordinates of the transformed data points in the feature space. Using this trick, only the inner products between all pairs of transformed data points in the feature space are computed.

Let be the explicit nonlinear feature map. The kernel function for is defined as: , where is the inner product. The matrix is a positive semidefinite matrix, known as the Gram matrix, whose entries are defined as:

| (7) |

Using the kernel function, the explicit representation for the nonlinear transformation is no longer needed.

In this section, we provide an overview of existing nonlinear SC based on kernel methods. These approaches can be divided into two categories: single kernel and multiple kernels, and are presented in the next two sections.

3.2.1 Using a single kernel

Patel et al. [79] proposed a kernelized SC approach by using the kernel trick in the (affine) sparse SC formulation. Their algorithm, dubbed Kernelized Sparse Subspace Clustering (KSSC), solves the following optimization problem

| (8) |

where . The kernel trick can be used to avoid computing explicitly, using

| (9) |

where the kernel is given in (7). As for SSC, the authors used sparse regularization for the coefficient matrix. Finally, (8) can be reformulated as follows

| (10) |

In order to reduce the computational cost of calculating the coefficient matrix for high-dimensional data, the same authors [78] extended KSSC to combine dimensionality reduction and kernelized SC. To this end, their approach learns the implicit mapping of the data onto a high-dimensional feature space using the kernel method, and then projecting onto a low-dimensional space via a linear projection.

The ease of use of the kernel trick in the self-expressive based linear SC

initiated the development of other nonlinear SC algorithms with additional regularizations, motivated by different purposes.

These algorithms include for example the kernelized multi-view SC approaches from [138, 121].

Recently Kang et al. [48] proposed additional

structure preserving regularization for KSSC in order to minimize the inconsistency between inner products of the transferred data, , and inner products for reconstructed transferred data, .

However, the traditional challenges of kernel methods are still present for kernel based SC. The two main challenges are:

-

1.

Using the Frobenius norm to compute the representation error facilitates the use of the kernel trick; see (9). However, the Frobenius norm implicitly assumes that the noise follows a Gaussian distribution. How can the kernel trick be applied on non-Gaussian noise models including, e.g., gross corruptions and outliers?

-

2.

With no prior knowledge, how can we select an appropriate kernel function and its parameters?

In the rest of this section, we review the few approaches that tried to tackle these challenging limitations.

Robust kernelized nonlinear SC

To improve the robustness, Xiao et al. [120] adopted the norm to replace the Frobenius norm in the kernelized LRR algorithm. The norm of the matrix is defined as

This norm, which enhances column-wise sparsity, models sample-specific outliers assuming that a fraction of the data points are corrupted. Based on this norm, robust nonlinear LRR solves the following optimization problem:

| (11) |

Defining , (11) can be reformulated as

| (12) |

which allows to use the kernel trick. To the best of our knowledge, no kernelized SC algorithm is proposed for other robust noise measurement norms such as the component-wise norm.

Adaptive kernel learning

All the aforementioned methods (and in fact, the majority of kernel based algorithms) make use of standard predefined kernel functions, such as Gaussian RBF kernels , and polynomial kernels . However, due to lack of a criterion for measuring the quality of a kernel function, selecting a proper kernel function is challenging. In the context of SC, a good kernel should result in a union of linear subspaces in the implicit embedded space. Following this intuition, Ji et al. [40] presented a kernel SC approach where they explicitly enforced the feature map to be of low rank and self-expressive:

However, this formulation has two limitations: (i) applying the kernel trick on the first term of the objective function is not straightforward, and (ii) the lack of constraints on the kernel leads to trivial solutions such as mapping all data points to zero. In order to overcome these issues, the authors exploited the symmetric positive definiteness of the kernel gram matrix , which implies that for some square matrix , while

| (13) |

Moreover, they restricted the unknown kernel matrix to be close (but not identical) to a predefined kernel matrix (such as Gaussian RBF or polynomial kernels) to avoid trivial solutions:

| (14) | ||||

| such that |

where is the predefined kernel matrix. Using the kernel trick (9) and substituting by , they solved this problem using ADMM. This formulation ensures that the transformed data points in the latent feature space are low-rank while an adaptive kernel function is used. The optimization problem (14) was further extended in [122] by regularizing the mapped features using the weighted Schatten p-norm as a tighter nonconvex surrogate for the rank and using correntropy for more robust measurement of the difference between the predefined kernel and the estimated kernel . The same idea was used in the robust nonlinear multi-view SC approach in [145].

However, these approaches suffer from two limitations: (i) they still need a predefined kernel matrix, , and (ii) assuming that a “good” kernel leads to a low-rank embedding of the data points is rather strong for data on multiple manifolds. In particular, there is no guarantee that learning a low-rank kernel corresponds to an implicit nonlinear transformation that preserves sufficient separability between the data points from different manifolds. In other words, a low-rank kernel (even with the additional self-expressiveness structure) does not necessary lead to a well separated embedding of the data points.

3.2.2 Using multiple kernels

Even though kernel methods are considered principled approaches to provide non-linearity in linear models, they rely on selecting and tuning a kernel. In many (unsupervised) applications, with no prior knowledge, this is a challenging problem. Multiple Kernel Learning (MKL) methods overshadow this issue, by learning a consensus kernel from a set of predefined candidate kernels , where is the number of given kernels. The sought kernel is regularized towards the predefined candidate kernels via a penalty function which is added as a penalty term in the objective function. The vector contains the weights/importance of the predefined kernels. This regularization function is typically defined in two ways:

The main difference between MKL based approaches is the enforced property for the ideal consensus kernel, using different regularizations. This relates to the criteria used to define the “goodness” of the consensus kernel, and the two most widely used ones promote

-

•

a block-diagonal coefficient matrix, or

-

•

a low-rank consensus kernel.

Let us discuss these two strategies in the next two paragraphs.

MKL encouraging a block-diagonal representation

A criterion for quantifying the quality of the unknown consensus kernel is based on the assumption that it should encourage a block diagonal representation for the coefficient matrix (up to permutation), where each block corresponds to a different manifold. In fact, the coefficient matrix is block diagonal, up to permutation, if and only if the subspace preserving property holds (see Definition 2).

A common approach to promote a block-diagonal representation is to use a proper regularizer for the coefficient matrix. Let us define the Laplacian matrix associated to as where is the affinity matrix corresponding to . Then, minimizing the sum of the smallest eigenvalues of , that is, where is the -th smallest eigenvalue of , promotes to be block diagonal. In fact, the multiplicity of the zero eigenvalue of the Laplacian matrix is equal to the number of connected components in the graph corresponding to the matrix [110]. Using the Ky Fan theorem, Lu et al [62] write this nonconvex regularizer into a convex counterpart.

As one of the first approaches presented for MKL based nonlinear SC, Kang et al. [49] proposed the Self-weighted Multiple Kernel Learning (SMKL) algorithm. SMKL combines the multi-kernel learning formulation in (15) with the block-diagonal regularizer in the kernelized LSR formulation [66]444LSR is a linear SC algorithm that regularizes the coefficient matrix with the Frobenius norm; see Table 1.:

| (17) | ||||

where the kernel trick can be easily applied on the first term in the objective function; see (9). An iterative alternative optimization approach was used to solve (17). In each iteration, the entries of the weight vector are updated adaptively during the optimization process using

However, in our implementations, we noticed that it might prevent convergence, because the parameter in (17) is modified at each iteration. Moreover, with no constraint on the summation of the weights, they tend to have very small values.

Remark 3 (Nonnegativity).

The nonnegativity and symmetry constraints in (17) make the coefficient matrix a valid affinity matrix [62], with being the corresponding Laplacian matrix. However, with the nonnegativity constraint on the entries of the matrix , the optimal solution may not be subspace preserving for the “extreme points” within the subspaces (since they are not contained within the conical hull of points from the same subspace). As discussed for affine SC in [56], the spectral clustering step is however likely to generate correct clusters. This is justified by the “collaborative effect” phenomenon which states that the coefficient representation corresponding to non-extreme points can “pull” the extreme points toward the data points corresponding to the same subspace, and hence make the spectral clustering robust to the wrong connections. However, this depends on the strength of the within subspace connectivity of non-extreme data points and the number of the extreme data points.

Similar formulations were presented in [124, 147, 88]. In these approaches, the update of was performed using variations of the Correntropy Induced Metric (CIM) for measuring the distance between entries of the kernels, that is, . The CIM between two matrices and is defined as where , and is known to be a more robust distance metric compared to the Frobenius norm [65], and hence might lead to a more accurate weighting assignment. In particular, [124] used the mere block-diagonal regularizer and eliminated the Frobenius norm regularizer for the coefficient matrix. This is motivated by the fact that block-diagonal regularizer is proven to be subspace preserving for independent subspaces (similar to the Frobenius norm) [63]. The same idea was used for multi-view nonlinear SC based on MKL in [147]. Ren et al. [88] introduced an additional regularization to learn the affinity and coefficient matrices simultaneously.

MKL encouraging a low-rank consensus kernel

Although the data might be nonlinear in the original ambient space, the linear subspace structure should be present in the implicit embedding space. Hence a proper kernel should implicitly lead to a low-rank embedded data. Based on this intuition, Kang et al. [51], proposed Low-rank Kernel learning for Graph matrix (LKG) that learns a low-rank consensus kernel from a weighted linear combination of the given kernels by solving the following optimization problem:

| (18) | ||||

However, [89] explained that minimizing does not necessarily lead to a low-rank transformed data in the implicit feature space, that is, a small value for . In fact, we have

Hence, using the observation in (13), and defining an auxiliary square matrix such that , they enforced the low-rankness of by minimizing the nuclear norm of [89].

3.3 Neural network based nonlinear SC

Neural networks have emerged as a very powerful representation learning framework and have drawn substantial attention due to many successful applications in different fields. The past four years have witnessed an increasing number of papers which tried to merge the representational power of neural networks with classical linear SC algorithms to deal with data from nonlinear manifolds. In a nutshell, the main motivation of neural networks based SC approaches is to overcome the fundamental limitation of kernel based alternatives, by learning the proper embedding of the data from the data. Geometrically, as for Kernel-based approaches, this proper embedding should ideally have a union of linear subspaces structure where classic linear self-expressiveness leads to subspace preserving representations. These algorithms can be partitioned into three main categories (see also Table 2, page 2):

-

1.

Neural network based feature learning that extract nonlinear features from the data using neural networks as a preprocessing step for linear SC algorithms,

-

2.

Self-expressive latent representation learning that enforce explicitly the neural network to learn self-expressive latent representation either by changing the architecture or the objective function, and

-

3.

Deep adversarial SC that uses generative adversarial networks to perform nonlinear SC.

In the next three sections, we describe these three categories.

3.3.1 Neural network based feature learning

Early attempts for employing neural network for nonlinear SC were mainly limited to providing better, that is, more discriminative, features (representations) for the data points. In fact, several SC papers in the literature (including linear and nonlinear methodologies) still rely on manually-designed/handcrafted features, such as Local Binary Patterns (LBP) [77], Histogram of Oriented Gradients (HOG) [17], and Scale-Invariant Feature Transform (SIFT) [61], for satisfactory results on real-world data sets. However, there is no theoretical justification on how any of these handcrafted features might affect the geometric structure of the data lying on multiple (nonlinear) subspaces. From the perspective of unsupervised feature learning, features extracted by neural networks have an important advantage over traditional handcrafted features: they are specifically learned from the data.

The focus of neural network based approaches for SC is to learn proper representations/features from the data points for the specific task of SC. The main shared characteristic of these approaches is that they treat feature learning and clustering as separate tasks, which is suboptimal. They are divided into two main subcategories: (i) transforming the unsupervised problem into a (self)-supervised problem, and (ii) using the connectivity information from the output of a classical linear SC algorithm as prior knowledge. Let us discuss, in the next paragraphs, the approaches within each category in detail.

Self-supervised subspace clustering

The success of supervised feature learning in neural networks inspired a few works to map the unsupervised SC task to a (self-)supervised problem. To do so, the clustering output of a linear SC algorithm can be used as labels to provide supervision [92, 150]. However, these labels are highly unreliable as they are obtained by applying algorithms based on the linearity assumption. There are two strategies to exploit the information from these noisy labels to train a network:

-

•

Use the concept of “confident learning”: a confidence weight is assigned to each data point quantifying its likeliness of having the correct label [76]. The highly confident samples and their corresponding labels can be used to train a neural network in a supervised fashion. Sekmen et al. [92] used the criterion of the distance of the samples to the obtained subspaces from a linear SC algorithm to choose highly confident data points for the supervision. However, there are serious drawbacks to this approach. The weighting procedure based on the criterion of distance to the estimated subspaces using linear algorithms is not reliable for nonlinear data. Moreover, selecting the highly confident labels depends on the value of a threshold which is not easy to set with no prior knowledge. However, we would like to emphasize that confident learning is an emerging topic in robust deep learning given noisy labels with significant theoretical and numerical advances over the past couple of years [43, 70]. However, how to select the “confident samples” and use them for nonlinear manifold clustering task requires more in-depth study.

-

•

Increase the number of clusters incrementally: To reduce the effect of wrong labels, Zhou et al. [150] suggested an iterative approach. Specifically, at the first iteration, the linear SSC algorithm is applied on the (raw) input data and the spectral clustering is applied on the coefficient matrix to segment the data in two clusters. A neural network is trained with the obtained labels (clusters) for a binary classification problem. In the following iterations, the features from the previously trained network are used as input data to the SSC and the number of clusters to segment the corresponding graph (in the spectral clustering step) is increased by one. The network parameters are updated using the obtained labels as a supervised classification problem. This iterative process continues until the number of clusters reaches the desired predefined number. However, there is no theoretical evidence or justification that this strategy can improve the robustness to the erroneous output of linear SC algorithms.

Linear based connectivity as prior knowledge

Another strategy to leverage linear SC algorithms as a prior information is to use the coefficient matrix to guide the geometric structure of the embedded data points in the latent space of neural networks.

As the pioneer algorithm, Peng et al. [81, 83] proposed a structured autoencoder, dubbed StructAE, where self-expressiveness of the latent representations is incorporated in the loss function of the network. StructAE relies on a previously computed coefficient matrix by a classical linear SC algorithm. The authors suggested the use of SSC and LSR, leading to StructAE-L1 and StructAE-L2 respectively555Recall that SSC and LSR use the and norms for regularization of the columns of the coefficient matrix, respectively; see Section 2.1.1.. Chen et al. [13] used LRR instead to construct the matrix . Given , consider an autoencoder with fully-connected hidden layers, where the first layers indicate the encoder and the last layers indicate the decoder. We denote the parameters of the network, that is, the weights and biases of each layer. Let us also denote the compact latent representation of the encoder output as for the input data , and, the reconstructed data, which is the output of the decoder, by . They both depend on the parameters .

The optimization problem is defined as follows

| (19) |

where the first term is the reconstruction error of the autoencoder, the second term enforces the latent representation to respect the multiple subspace structure encoded by the matrix , and the third term is regularizing the parameters of the network to avoid overfitting. The coefficient matrix contains prior information on the global structure of the data, because it is obtained by minimizing , via a linear SC approach, containing the information of all data points using self-expressiveness. The clustering membership is obtained by clustering the latent representation using algorithms such as k-means or by applying a linear SC algorithm such as SSC and LSR. The main motivation of StructAE is to integrate the individual and global structures together, and encourage the autoencoder to learn features/latent representation respecting the geometric structure provided by the coefficient matrix. However, the matrix is obtained by a linear SC based on a global linearity assumption. Hence the performance tends to significantly depend on how well the linearity assumption is satisfied.

3.3.2 Self-expressive latent representation learning

Separating the two modules of representation/feature learning and (subspace) clustering assignment typically leads to suboptimal results. To overcome this limitation, a group of approaches explicitly include the self-expressiveness term in the network loss function. These approaches are based on the intuition that minimizing self-expressiveness in the embedded space encourages the neural network to learn the appropriate embedding for the task of SC. In general, the optimization problem of these networks has the following form:

| (20) |

where and denote the network embedding and parameters, respectively. The embedding of the network, which is denoted by , is encouraged to have a union of linear subspaces structure by explicitly minmizing the self-expressive representation term. As in the rest of the paper, is the regularization on the coefficient matrix. The extra regularization plays a critical role in removing trivial solutions and specifying the properties of the nonlinear mapping and the embedding space. In particular, looking for self-expressiveness in the transformed space is not sufficient. There exists infinite nonlinear transformations that can lead to small/zero self-expressive error, that is, . Hence, the main difference between these approaches lies on the regularization function that reduces the possible nonlinear mappings space and forms the geometric structure of the data embedding. There are mainly four choices for in the literature:

-

1.

Instant normalization [82] promotes the norm of the embedded data points in the latent space to be close to 1 using the regularization . This avoids an arbitrary scaling factor in the embedding space.

- 2.

-

3.

Autoencoder reconstruction loss [42] is based on an autoencoder architecture and uses a decoder network to reconstruct the original data from the self-expressive representation, that is, . More precisely, the regularization is defined as where , and represents the decoding mapping which depends on the parameters . This regularization that minimizes the reconstruction error of the input matrix ensures that the self-expressive based embedding preserves sufficient information to recover the original data.

-

4.

Restricted transformations [71] is based on learning stacked linear transformations through multiple layers that are connected via non-negativity constraints inspired by the rectified linear unit (ReLu) that sets negative values to zero after each layer [30, 25]. Specifically, Maggu et al. [71] proposed a three layered transformation for SC, solving the following optimization problem:

(21) where are the linear transformations such that the dimension of each layer output is reduced. To avoid trivial or degenerate solutions (), the linear transformation corresponding to each layer is penalized by minimizing the Frobenius norm minus the logarithm of the determinant of the transformation matrices. Note that the non-negativity constraints are inspired by the ReLU activation but they are inherently different.

Undoubtedly, the deep SC approaches based on autoencoder regularization are the most popular. A large number of algorithms have been proposed using this regularization. In the rest of this section, we focus on these approaches.

Autoencoder regularized deep subspace clustering

The autoencoder based feature extraction for SC was first proposed as the Cascade Subspace Clustering (CSC) algorithm in [80]. In a nutshell, CSC first learns compact features by pretraining a simple autoencoder, and then discards the decoder part. Afterwards, it fine-tunes the parameters of the encoder with a novel loss function based on the invariance of distribution property. The invariance of distribution is based on the assumption that the conditional distribution of any data point given the cluster centers is invariant to different distance metrics. The conditional distribution for each data point is a -dimensional vector providing a probability that is related to the closeness between the given data point and the centroids of the clusters, so that the data point has a higher probability to be assigned to a closer cluster. The closeness is measured by different metrics such as the Euclidean and cosine similarity metrics. The invariance of distribution uses the KL-divergence to minimize the discrepancy among different distributions defined by different closeness measures. After updating the encoder parameters and the cluster centroids in the fine-tuning step, each data point is assigned to the cluster with the closest centroid. Nevertheless, it is not clear how minimizing the discrepancy among different distributions benefits the SC or affects the geometric structure of the data from multiple manifolds. Moreover, CSC only uses the autoencoder network for the initialization of the parameters of the encoder.

The idea of explicitly merging self-expressiveness in autoencoder networks for SC was first brought to light in the framework of Deep Subspace Clustering network (DSC-Net) [42]. DSC-Net is the pioneer work to identify that the linear combination in the collaborative representation corresponds to a layer of fully connected neurons without non-linear activations and biases. This layer, dubbed the self-expressive layer, is added between the encoder and decoder of a standard autoencoder. The parameters (weights) of this layer can be interpreted as the coefficient matrix . The authors promoted the use of convolutional autoencoders as the backbone architecture, reasoning that they can be trained easier compared to the classical fully connected autoencoders as they have less parameters. This has been recently proved theoretically in [57]. Let denote the parameters of the encoder and the decoder. The self-expressiveness in the latent space, that is, , is a linear layer located between the encoder and decoder, as illustrated in Figure 5. In particular, all the input data , as a single large batch, is fed into the encoder to obtain the embedded data , where corresponds to the data point . The self-expressive representation of the latent data is calculated using the subsequent linear layer. The decoder reconstructs each data point in the input batch from the columns of .

In order to seek a tradeoff between the reconstruction and self-expression errors, DSC-Net solves the following optimization problem:

| (22) | ||||

where is the component-wise norm that regularizes the coefficient matrix (that is, the weights of the fully connected layer).

Two different regularizations were considered in [42], namely , leading to DSC-Net-L1 and DSC-Net-L2 networks, respectively.

The network is trained in two steps:

(i) pretraining which includes initialization of the autoencoder without the self-expressive layer, and

(ii) fine-tuning which includes training the whole network including the self-expressive layer.

Once the network is trained, the weights of the self-expressive layer are clustered using spectral clustering, as in linear SC.

Recent extensions of self-expressive autoencoders

DSC-Net initiated many extensions that aim to integrate the representational power of neural networks with self-expressive SC algorithms. Among noticeable extensions, there are two approaches that attempt to unify the self-expressive autoencoders with the concept of self-supervision/self-training. The Self-Supervised Convolutional Subspace Clustering Network (S2ConvSCN) [140] combines DSC-Net with a classification convolutional module (for feature learning) and a spectral clustering module (for self-supervision) into a joint framework. This framework uses the (noisy) clustering labels of the spectral clustering module to supervise the training of the feature learning network and the self-expressive coefficient learning. The idea of collaborative learning between the classification module and the self-expressive layer was reused in [143], but using the concept of confidence learning. In contrast to S2ConvSCN, the model in [143] supervises the training without the use of the computationally expensive spectral clustering. In particular, two affinity matrices are constructed to supervise the training by selecting highly confident sample pairs: (i) the affinity matrix of a binary classifier module based on a convolutional network, which indicates whether two data points belong to the same cluster or not, and (ii) the affinity matrix corresponding to the weights of the self-expressiveness layer. Nonetheless, selecting confident samples depends on thresholding parameters which are hard to tune in an unsupervised setting.

Other notable extensions include the following. Sparse and low-rank regularized DSC (SLR-DSC) [154] regularizes the coefficient matrix using the nuclear and Frobenius norms. An adaptation of DSC-Net for multi-modal data was presented in [1]. Zhou et al. [151] added a distribution consistent loss term to keep the consistency between two distributions of the original data and the embedded representation (the output of the encoder). Inspired by human cognition, Jiang et al. [45] aggregated a self-paced learning framework [44] with the DSC-Net loss function to encourage the network to learn “easier” samples at first. Easier samples are defined as the ones with a small value of the reconstruction and of the self-expressive representation errors. However, similar to many self-paced frameworks, it is very sensitive to thresholding parameters [27]. Instead of focusing on the learned features from the output of the encoder, Kheirandish et al. [53] proposed a multi-level representation of DSC-Net (MLRDSC) which combines low-level information from the initial layers with high-level information from the deeper layers.

3.3.3 Deep adversarial subspace clustering

So far we have reviewed deep SC approaches that are based on a discriminative supervised neural network (such as those for self-supervised feature learning) and autoencoder architectures. There are a few approaches that rely on Generative Adversarial Networks (GANs) [26]. GANs are composed of two modules, a generator and a discriminator. The generator module produces new “fake” samples by learning to map from a latent space (often based on random uniform or Gaussian distributions) to the unknown desired distribution of the samples from the given data set. The goal of the generator is to produce samples that follow the true distribution of the data points as closely as possible. On the other hand, the goal of the discriminator is to distinguish the generated fake samples from the “real” samples of the data set. These two modules are trained through a minimax game such that the success of each module is the loss of the other one. GANs are often difficult to train and this explains the fewer number of works that combines GANs with SC.

The first SC algorithm that adopted the GAN architecture is Deep Adversarial Subspace Clustering (DASC) [152]. In order to match the goal of SC, DASC modifies the generator and discriminator modules based on well-known linear subspace properties:

-

•

DASC generator. The generator has two main components: a self-expressive autoencoder (similar to DSC-Net, with the encoder and decoder network parameters denoted by ) and a sampling layer for producing fake data for each estimated subspace. The generator first uses a deep self-expressive auto-encoder to transform the data into the latent variable such that they are encouraged to be located in a union of linear subspaces. Thereafter, spectral clustering is applied on the weights from the self-expressive layer () to obtain the clustering assignment. The generator produces new “fake” samples by sampling from the estimated subspaces using property of linear subspaces: linearly combining samples within a subspace generates a sample from the same subspace. To this end, the fake samples are generated by random linear combination of the samples within each cluster (the weights of the combinations are chosen uniformly at random in ). Apart from the fake data generation process, the definition of the “real” data is also noticeably different from the classic GAN for which the real data is the given data set. In DASC, the real data are a predefined fraction of the samples within each estimated cluster that have a small projection residual onto the learned subspaces by the discriminator. Mathematically, the generator solves

(23) where and denote the parameters of the network and the reconstructed data in the autoencoder, respectively, and is the number of fake samples for the th subspace/cluster. The matrices () are the learned bases for each subspace in the discriminator. In fact, the first term in (23) is the sum of projection residuals of the fake data for all of the estimated subspaces. The generator tries to “fool” the discriminator by producing (embedded) samples that are very close to the estimated subspaces by the discriminator.

-

•

DASC discriminator. The discriminator is parametrized by the basis vectors of each subspace of the nonlinear transformed data (). The basis vectors of each subspace/cluster are learned such that the projection residual loss function for the real data is smaller compared to the fake ones. The discriminator solves

(24) where . The first term in (24) ensures that the discriminator learns the bases such that they fit the intrinsic subspace of each cluster for the real data. The second term is the adversarial goal of the discriminator compared to the generator. This term ensures that the learned bases of the discriminator produce sufficiently large residuals for the generated fake data.

Subsequently, the generator and discriminator are trained such that the generator is encouraged to produce fake data close to the subspaces learned by the discriminator which leads to higher clustering quality.

However, DASC completely ignores the distribution of the input data and merely focuses on the latent embedded data . Recently, Yu et al. [137] proposed two extensions to DASC. In the first approach, an additional adversarial learning is utilized to model the distributions of the input data along with the adversarial learning for the corresponding latent representations (similar to DASC). In the second approach, inspired by the self-supervised SC approach in [143], the adversarial learning in the latent space is replaced by a self-supervised module to encourage the (autoencoder) network to learn discriminative embedding (features) for each subspace.

Remark 4.

Instead of using GANs to enhance SC, one could also use linear SC to overcome challenges of classical GANs, such as the “mode-collapse” issue [5], that is, the lack of sample variety in the generator’s output. For example, Liang et al. [58] suggested to use a clustering module based on linear SC to exploit subspaces within the latent representation of the data. The clustering output is used by the generator to produce samples conditioned on their corresponding subspace to promote diverse sample generation from all latent subspaces.

4 Computational cost of nonlinear SC algorithms

Despite the nice theoretical properties of self-expressive representations [95, 21] and their practical efficiency, expressing the data points using other data points from the data set can be computationally inefficient. In fact, the computational complexity for computing the coefficient matrix in the major linear SC algorithms is either quadratic or cubic in the number of data points [86], and hence using them for medium and large scale data sets is computationally prohibitive. To address the scalability issue, several approaches were proposed which either limit the self-expression over a smaller size dictionary rather than the whole data [2, 101, 11, 133] or propose specific solvers (such as the greedy OMP [135] or using proximal gradient descent framework [86]).

Remark 5.

In addition to the high computational complexity of calculating the self-expressive representation (the first step of most nonlinear SC algorithms), the spectral clustering step has in general a computational cost of as well [38]. However, structures in the affinity matrix such as sparsity can reduce the computational time significantly [11]. Moreover, there exists fast approximation algorithms for spectral clustering [123, 102, 38]. Hence, the main focus of scalable extensions of linear SC was to provide faster and more efficient approaches to (approximately) compute the coefficient matrix.

The computational burden of self-expressive representations is present in the majority of nonlinear extensions reviewed in this survey as well. Representing data points using the whole data set, whether it is in the ambient or in the (implicit) embedding space, typically has the computational cost of or . Similar to linear SC, ADMM is the standard algorithm used for the majority of the optimization problems. The major bottleneck is computing the coefficient matrix which often involves solving an -by- linear system for Kernel based and locality preserving approaches (in the subcategories of tangent space approximation and avoiding cannot-links). Solving this problem in each iteration of the ADMM framework has a computational complexity of , or using the matrix inversion lemma (also known as Sherman-Morrison-Woodbury identity [35], see [86, Remark 1] for more details). Using the graph Laplacian regularizer, an additional bottleneck of computing a pairwise similarity matrix in the ambient space is added (in operations), and, moreover, the coefficient matrix should be computed by solving a Sylvester equation with the complexity of . The high computational cost is also a very evident drawback of the self-expressive based autoencoders in neural network approaches. In fact, the number of parameters of the fully-connected self-expressive layer is . Computing the latent representation of the encoder has the time complexity of where is the dimension of the encoder output.

In addition to the time complexity, the memory requirement is often significant as well. Storing (and clustering) an coefficient matrix is usually restrictive, unless the coefficient matrix is sparse. Computing and storing (dense) Gram matrices increases the space complexity of kernel based approaches further. This is even worse for MKL algorithms which update the kernel matrix at each iteration of an ADMM based algorithm.

Scalable nonlinear SC approaches

In contrast to linear SC, the scalability issue of nonlinear alternatives has not yet been investigated much. To the best of our knowledge, the only scalable algorithm in the category of locality preserving SC is an avoiding cannot-link approach [31] which uses nearby data points to represent each data point, with a computational cost of operations.