Bifurcation Curves of Two-Dimensional Quantum Walks

Abstract

The quantum walk differs fundamentally from the classical random walk in a number of ways, including its linear spreading and its initial-condition-dependent asymmetries. Using stationary phase approximations, precise asymptotics have been derived for one-dimensional two-state quantum walks, one-dimensional three-state Grover walks, and two-dimensional four-state Grover walks. Other papers have investigated the asymptotic behavior of a much larger set of two-dimensional quantum walks, and it has been shown that in special cases the regions of polynomial decay can be parameterized. In this paper, we show that these regions of polynomial decay are bounded by algebraic curves which can be explicitly computed. We give examples of these bifurcation curves for a number of two-dimensional quantum walks.

1 Introduction

The quantum walk is a discrete quantum mechanical system which serves as an analogue of the classical random walk. While the probability distribution of a classical random walk approximates a normal distribution whose variance grows linearly in time, the quantum walk has more involved asymptotic behavior. A paper by Ambainis et al. [3] provides a detailed asymptotic description of the one-dimensional Hadamard walk (a quantum walk governed by a Hadamard matrix) for large time. The large-time behavior of the quantum walk contrasts with that of the classical random walk in both the shape of the probability density and its rate of spread. Ambainis et al. showed that the standard deviation of the Hadamard walk grows linearly in the number of steps $t$; in particular, the distribution at time $t$ is almost entirely contained in the interval $[-t/\sqrt{2}, t/\sqrt{2}]$. In this interval, the distribution contains highly oscillatory peaks near the boundaries and is approximately uniform near the origin.

These results were among the first in a line of papers describing the asymptotic behavior of more general quantum walks. A few years later, Konno [14] extended these results to two-state quantum walks governed by general unitary matrices. Inui et al. [12] provided asymptotic results on the three-state one-dimensional Grover walk with a focus on localization phenomena. Analysis of two-dimensional quantum walks started with a brief survey by Mackay et al. [19], and continued with a description of localization in the two-dimensional Grover walk by Inui et al. [11] and a differential geometric interpretation of two-dimensional quantum walks by Baryshnikov et al. [5]. The asymptotic behavior of the one-dimensional Hadamard walk described by Ambainis et al. has served as a common baseline throughout these papers. The standard deviation of these walks grows linearly in $t$, and the probability distributions are almost entirely contained in a linearly expanding subset of the domain, which we term the region of polynomial decay. In Kuklinski [17], these regions of polynomial decay are investigated further. In particular, it was shown that in certain low-state two-dimensional quantum walks, the regions of polynomial decay can be explicitly parameterized. However, these parameterizations are often unwieldy and do not easily yield a description of the boundaries of the region.

In this paper, we show that for any quantum walk defined on $\mathbb{Z}^2$, one can write down a collection of algebraic curves which bound the regions of polynomial decay. This procedure builds upon previous methods for analyzing the asymptotic behavior of the quantum walk. First, we conduct an eigenvalue decomposition of the quantum walk operator via a Fourier transform. The state of the quantum walk particle at time $t$ becomes a sum of integrals whose asymptotic behavior we analyze using the method of stationary phase. Solving for points of stationary phase in these integrals leads to the previously discussed parametric representation of the regions of polynomial decay. We take this a step further and compute bifurcation curves corresponding to these integrals by computing a Hessian determinant of the phase of the integrand. These bifurcation curves divide the space into a discrete collection of subsets, a finite number of which are found to belong to the region of polynomial decay. The bifurcation curves are found to be solutions to a system of multivariate polynomial equations, and we use a Gröbner basis computation to derive an implicit algebraic representation of these curves. However, this system of multivariate polynomial equations is quite large and puts strain on our algorithm, so only in the simplest cases can we derive the bifurcation curves this way. For more complicated quantum walks, we present a non-rigorous ad hoc method for computing bifurcation curves.

The remainder of this paper is organized as follows. The definition of the quantum walk as well as a discussion of stationary phase approximations is given in section 2. In section 3 we explicitly write the multivariate polynomial system corresponding to the bifurcation curves and discuss solution techniques. In section 4 we compute bifurcation curves for several examples of two-dimensional quantum walks.

2 Definitions and Method of Stationary Phase

We begin by defining the quantum walk on a group, as first introduced by Acevedo et al. [2]:

Definition 2.1

Let be a group, let with , and let be an unitary matrix. The quantum walk operator corresponding to the triple may be written as the composition where for and , . We denote this correspondence as .

The ordered pair can be thought of as an undirected Cayley graph which admits loops [8]. In this paper, we will primarily consider . Let be the set of unit directional vectors and let . Two of the unitary matrices which we will use in this paper are the Grover matrix and the Hadamard matrix. If $I$ is the $n \times n$ identity matrix and $J$ is the $n \times n$ matrix filled with ones, then we define the Grover matrix as $\frac{2}{n}J - I$. The Hadamard matrix is defined as .
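As a quick sanity check on the Grover matrix $\frac{2}{n}J - I$, the NumPy sketch below (the helper name `grover` is ours) confirms that it is unitary, with eigenvalue $1$ once and $-1$ with multiplicity $n-1$; this follows because $J$ has eigenvalue $n$ once and $0$ otherwise.

```python
import numpy as np

def grover(n):
    """Standard n x n Grover coin: (2/n) * J - I, with J the all-ones matrix."""
    return (2.0 / n) * np.ones((n, n)) - np.eye(n)

G4 = grover(4)
# G is real and symmetric with G @ G.T = I, so it is unitary.
is_unitary = np.allclose(G4 @ G4.conj().T, np.eye(4))
# J has eigenvalues n (once) and 0 (n - 1 times), so G has 1 (once) and -1 (n - 1 times).
eigs = np.sort(np.linalg.eigvalsh(G4))
print(is_unitary, eigs)
```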

One way to view this quantum walk operator is as a linear combination of translations. Let and let be a translation operator which acts as with . Then we can visualize acting on the vector as follows:

| (1) |

When , we can gain a better understanding of through the application of a discrete Fourier transform. If , the multi-dimensional Fourier transform acts on a translation as:

| (2) |

Applying a Fourier transform to equation (1) and using equation (2), we find:

| (3) |

We refer to as the multiplier matrix of the quantum walk operator.
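The Fourier picture can be checked numerically. The sketch below is written under our own conventions, which are assumptions and not necessarily the paper's: the coin is applied first, then component $c$ is translated by its direction $s_c$, and the transform is $\hat\psi(k) = \sum_x \psi(x) e^{-ik\cdot x}$ (NumPy's FFT sign convention). With these choices the multiplier matrix is $M(k) = \operatorname{diag}(e^{-ik\cdot s_c})\,U$; applying the shift before the coin, or flipping the transform's sign, changes it only by a similarity transformation or by $k \mapsto -k$. The code evolves a four-state Grover walk on a periodic grid both directly in position space and via $M(k)^t$, and the two results should agree to rounding error.

```python
import numpy as np

# Assumed setup: four-state walk on Z^2 with directions s_c and the Grover coin.
S = [(1, 0), (-1, 0), (0, 1), (0, -1)]
n = len(S)
U = (2.0 / n) * np.ones((n, n)) - np.eye(n)

def step_position(psi):
    """One step in position space: apply the coin U, then translate component c by s_c."""
    coined = psi @ U.T  # coined[x, y, :] = U @ psi[x, y, :]
    out = np.empty_like(coined)
    for c, (dx, dy) in enumerate(S):
        out[:, :, c] = np.roll(coined[:, :, c], shift=(dx, dy), axis=(0, 1))
    return out

def evolve_fourier(psi0, t):
    """t steps computed by applying M(k)^t pointwise in Fourier space."""
    L = psi0.shape[0]
    k = 2 * np.pi * np.fft.fftfreq(L)  # wave numbers matching np.fft's convention
    K1, K2 = np.meshgrid(k, k, indexing="ij")
    # Diagonal of D(k) = diag(exp(-i k . s_c)), stacked along the last axis.
    phases = np.stack([np.exp(-1j * (K1 * dx + K2 * dy)) for dx, dy in S], axis=-1)
    M = phases[..., :, None] * U  # M(k) = D(k) U, shape (L, L, n, n)
    Mt = np.linalg.matrix_power(M, t)
    psi_hat = np.fft.fft2(psi0, axes=(0, 1))
    psi_hat_t = np.einsum("abij,abj->abi", Mt, psi_hat)
    return np.fft.ifft2(psi_hat_t, axes=(0, 1))

# The particle starts at the centre of a periodic L x L grid with a normalized coin state.
L, t = 41, 15
psi0 = np.zeros((L, L, n), dtype=complex)
psi0[L // 2, L // 2, :] = np.array([1, 1j, -1, -1j]) / 2

psi_direct = psi0
for _ in range(t):
    psi_direct = step_position(psi_direct)
psi_fourier = evolve_fourier(psi0, t)

print(np.abs(psi_direct - psi_fourier).max())  # agreement up to rounding error
print(np.sum(np.abs(psi_direct) ** 2))         # total probability stays 1
```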

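After $t$ steps of the quantum walk, the Fourier transform of the state is obtained by applying the $t$-th power of the multiplier matrix to the Fourier transform of the initial state. Thus, if we wish to study the long-term behavior of the quantum walk, we must take large powers of the multiplier matrix. If $\{\lambda_j(k)\}_j$ is the set of eigenvalues of the multiplier matrix at wave vector $k$, then we can write the transform of a quantum walk with a given initial condition as: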

| (4) |

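Here, the vectors in the sum are scaled eigenvectors of the multiplier matrix whose weights depend on the initial condition. Since the multiplier matrix is unitary, each of its eigenvalues has unit modulus, and we can write $\lambda_j(k) = e^{i\omega_j(k)}$ with $\omega_j(k)$ real. To return to the original domain, we conduct an inverse Fourier transform on equation (4):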

| (5) |

Here, refers to the norm [16]. We rescale position by the number of steps, writing $x = tr$, so that our spatial variable of interest is now $r$. This rescaling means that we no longer observe a linearly expanding spatial region but a stationary one. Substituting this into equation (5), we have:

| (6) |

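We use the method of stationary phase [6] to asymptotically evaluate the integrals in equation (6). Consider the following $n$-dimensional oscillatory integral:

$$I(\lambda) = \int_{\mathbb{R}^n} e^{i\lambda\phi(x)}\,\psi(x)\,dx \qquad (7)$$

where the phase $\phi$ is a smooth real-valued function and the amplitude $\psi$ is a smooth function with compact support. Consider the set:

$$\{\, x \in \operatorname{supp}\psi : \nabla\phi(x) = 0 \,\},$$

whose members we refer to as points of stationary phase, or critical points. A critical point $x_0$ is nondegenerate if the Hessian matrix of $\phi$ at $x_0$ is invertible; if $\nabla\phi(x_0) = 0$ and the determinant of the Hessian matrix also vanishes, we say that $x_0$ is a degenerate critical point. We state two results from Stein [22] relating this set to the asymptotic behavior of $I(\lambda)$ in equation (7):

Proposition 2.1

Suppose $\phi$ has no critical points in the support of $\psi$. Then $I(\lambda) = O(\lambda^{-N})$ as $\lambda \to \infty$ for every $N \ge 0$.

This proposition says that if the set of critical points is empty, then the integral in equation (7) decays superpolynomially (often this superpolynomial decay can be shown to be exponential). If the set of critical points is nonempty, then we can use the following proposition:

Proposition 2.2

If $\phi$ has a nondegenerate critical point at $x_0$ and $\psi$ is supported in a sufficiently small neighborhood of $x_0$, then $I(\lambda) = O(\lambda^{-n/2})$ as $\lambda \to \infty$. More precisely, $e^{-i\lambda\phi(x_0)}I(\lambda)$ admits an asymptotic expansion $\lambda^{-n/2}\sum_{j \ge 0} a_j \lambda^{-j}$, with $a_0 \neq 0$ whenever $\psi(x_0) \neq 0$.

Thus, if the set of nondegenerate critical points is nonempty, then the integral decays polynomially, which is slower than the decay dictated by Proposition 2.1.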
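To see Propositions 2.1 and 2.2 at work in one dimension, the following NumPy sketch (our own toy example, not taken from the paper) evaluates the oscillatory integral $I(\lambda) = \int e^{i\lambda\phi(x)}\psi(x)\,dx$ for the smooth bump amplitude $\psi(x) = e^{-1/(1-x^2)}$ on $(-1, 1)$ with two phases: $\phi(x) = x$, which has no critical point, and $\phi(x) = x^2$, which has a nondegenerate critical point at $0$. At $\lambda = 200$ the first integral is many orders of magnitude smaller than the second, and the second matches the leading stationary phase term $\psi(0)\sqrt{\pi/\lambda}$ in magnitude.

```python
import numpy as np

def bump(x):
    """Smooth amplitude psi(x) = exp(-1/(1 - x^2)) on (-1, 1), zero elsewhere."""
    out = np.zeros_like(x)
    inside = np.abs(x) < 1
    out[inside] = np.exp(-1.0 / (1.0 - x[inside] ** 2))
    return out

def oscillatory_integral(phase, lam, num=400_001):
    """Riemann-sum approximation of I(lam) = integral of exp(i lam phase(x)) psi(x) dx.

    psi and all of its derivatives vanish at x = +-1, so this simple sum is very accurate.
    """
    x = np.linspace(-1.0, 1.0, num)
    dx = x[1] - x[0]
    return dx * np.sum(np.exp(1j * lam * phase(x)) * bump(x))

lam = 200.0
no_critical = oscillatory_integral(lambda x: x, lam)     # phi(x) = x: no critical point
critical = oscillatory_integral(lambda x: x ** 2, lam)   # phi(x) = x^2: nondegenerate at 0

# Proposition 2.2 with n = 1: |I(lam)| ~ psi(0) * sqrt(pi / lam) to leading order.
predicted = np.exp(-1.0) * np.sqrt(np.pi / lam)
print(abs(no_critical), abs(critical), predicted)
```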

We apply these propositions to the integrals in equation (6). Let correspond to the expression in the exponential for the integrand. This integral has a critical point if , or if for some . By letting vary in this range, we can trace out a region in on which the amplitudes decay polynomially. In this way, maps the region of integration into the spatial domain on which the quantum walk resides. This representation requires that we be able to solve analytically for the eigenvalues of , which is not always possible, especially for quantum walks with many states. Moreover, this parametric representation is often complicated, and it is not immediately apparent how to connect it to the more general structure of the region of polynomial decay, or even to a mathematical description of its boundaries.
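When closed-form eigenvalues are unavailable, points of this parametric region can still be generated numerically. One standard route, which we substitute here for illustration, is first-order perturbation theory for normal matrices: if $\lambda_j(k) = e^{i\omega_j(k)}$ is a simple eigenvalue with unit eigenvector $v$, then $\partial_{k_m}\lambda_j = v^*(\partial_{k_m}M)v$. Under an assumed convention $M(k) = \operatorname{diag}(e^{-ik\cdot s_c})\,U$ with inverse-transform kernel $e^{ik\cdot x}$, the rescaled critical point of branch $j$ is $r = -\nabla\omega_j(k) = \sum_c |v_c|^2 s_c$, the eigenvector-weighted average of the step directions. The sketch checks this for the four-state Grover walk against the closed form $(\sin k_1, \sin k_2)/(2\sin\omega)$, obtained by implicitly differentiating $2\cos\omega + \cos k_1 + \cos k_2 = 0$ on the branch with $\sin\omega > 0$.

```python
import numpy as np

S = np.array([(1, 0), (-1, 0), (0, 1), (0, -1)])  # assumed ordering of directions
n = len(S)
U = (2.0 / n) * np.ones((n, n)) - np.eye(n)       # Grover coin

def multiplier(k):
    """M(k) = diag(exp(-i k . s_c)) U (an assumed convention)."""
    return np.exp(-1j * (S @ k))[:, None] * U

def critical_points(k):
    """Eigenvalues of M(k) and the critical point r = sum_c |v_c|^2 s_c of each branch.

    Valid where the eigenvalues are simple, so each eigenvector is fixed up to a phase.
    """
    lam, V = np.linalg.eig(multiplier(k))
    V = V / np.linalg.norm(V, axis=0)  # make each eigenvector a unit vector
    weights = np.abs(V) ** 2           # weights[c, j] = |v_c|^2 for branch j
    r = weights.T @ S                  # row j is the critical point of branch j
    return lam, r

k = np.array([0.5, 1.3])
lam, r = critical_points(k)
j = np.argmax(lam.imag)                      # non-trivial branch with sin(omega) > 0
closed_form = np.sin(k) / (2 * lam[j].imag)  # (sin k1, sin k2) / (2 sin omega)
print(np.round(lam, 6))
print(r[j], closed_form)
```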

We mention that a critical condition for the stationary phase propositions is the smoothness imposed on the phase and amplitude functions. In the context of Proposition 2.1, a discontinuity in any derivative of one of these functions can lead to polynomial decay instead of the superpolynomial decay guaranteed by the proposition for smooth amplitude and phase functions. It is not trivial to show that the eigenvalues and eigenvectors of the multiplier matrix are smooth. The following proposition was proved by Rainer [20]:

Proposition 2.3

Let be a -curve of normal complex matrices, i.e., the entries belong to , such that is normally nonflat. Then there exists a global -parameterization of the eigenvalues and the eigenprojections of .

Here, is a subalgebra of (Rainer notes that it may be true that ), to which the entries of can be shown to belong. However, this proposition only applies to families of normal matrices whose entries are smoothly parameterized by a single variable. Families of normal matrices parameterized by more than one variable may not admit a smooth selection of eigenvalues (e.g., the Hermitian family $\begin{pmatrix} x & y \\ y & -x \end{pmatrix}$ has eigenvalues $\pm\sqrt{x^2 + y^2}$, which cannot be chosen differentiably near the origin). This means that for one-dimensional quantum walks, we can provably distinguish between a region of polynomial decay and a region of superpolynomial decay. This distinction is not guaranteed for higher-dimensional quantum walks, although in the examples that follow a smooth selection of eigenvalues and eigenvectors can be demonstrated. In any case, for general higher-dimensional quantum walks, a lack of smoothness in the eigenvalues and eigenvectors of the multiplier matrix would not negate the existence of a distinct region of polynomial decay bounded by algebraic curves; it would simply not guarantee qualitatively faster rates of decay outside this region.

3 Multivariate Polynomial System

Instead of focusing our attention on the locus of critical points, we illustrate a method to construct the bifurcation curves of the quantum walk as algebraic curves. These bifurcation curves describe the structure of the region of polynomial decay more directly than the aforementioned critical point parameterization does. The degenerate critical points of the integral in equation (6) must simultaneously satisfy:

| (8) |

| (9) |

Using implicit differentiation, one can represent equations (8) and (9) as multivariate polynomial equations in terms of derivatives of the characteristic polynomial. Let be the characteristic polynomial of in . Since the entries of are linear combinations of terms of the form , we can write a multivariate polynomial such that the roots in of coincide with the eigenvalues of (i.e. the roots of in ). Thus, if is an eigenvalue of , we have:

| (10) |

Recall that , and that we are searching for derivatives of to use in equations (8) and (9). As such, let us take a derivative of this equation with respect to :

| (11) |

From here, let us refer to partial derivatives via subscripts (not to be confused with the indexing of eigenvalues in the previous section) and suppress mention of . Rearranging terms, we find:

| (12) |

If , we can substitute equation (12) into equation (9) to find:

| (13) |

If we take a second derivative of equation (11) with respect to , we have:

| (14) |

By isolating and substituting the expression in equation (12) for , we may write:

| (15) |

We can similarly substitute equation (15) into equation (8) such that:

| (16) |
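The implicit differentiation steps above can be mimicked symbolically. In this SymPy sketch (an illustration with our own names, not the paper's code), $F(\omega, k) = 2\cos\omega + \cos k_1 + \cos k_2$ is the four-state Grover factor $\lambda^2 + (\cos k_1 + \cos k_2)\lambda + 1$ divided by $\lambda = e^{i\omega}$, assuming the multiplier convention used in our other sketches. Differentiating $F(\omega(k), k) = 0$ once and twice gives the gradient and Hessian of $\omega$, and hence the Hessian of the phase $\omega(k) + k\cdot r$; the result is compared with direct differentiation of the explicit branch $\omega = \arccos(-(\cos k_1 + \cos k_2)/2)$.

```python
import sympy as sp

w, k1, k2 = sp.symbols("omega k1 k2", real=True)
ks = [k1, k2]

# lambda + 1/lambda + cos k1 + cos k2, written with lambda = exp(i omega).
F = 2 * sp.cos(w) + sp.cos(k1) + sp.cos(k2)

def implicit_derivatives(F, w, ks):
    """Gradient and Hessian of omega(k) defined implicitly by F(omega(k), k) = 0."""
    Fw = sp.diff(F, w)
    grad = [-sp.diff(F, k) / Fw for k in ks]
    H = sp.zeros(len(ks), len(ks))
    for a, ka in enumerate(ks):
        for b, kb in enumerate(ks):
            # Differentiate F_a + F_w * omega_a = 0 with respect to k_b, solve for omega_ab.
            num = (sp.diff(F, ka, kb) + sp.diff(F, ka, w) * grad[b]
                   + sp.diff(F, kb, w) * grad[a] + sp.diff(F, w, 2) * grad[a] * grad[b])
            H[a, b] = -num / Fw
    return grad, H

grad, H = implicit_derivatives(F, w, ks)
# The phase omega(k) + k . r has Hessian H in k; degenerate critical points have det H = 0.
hess_det = H.det()

# Compare with the explicit branch omega = arccos(-(cos k1 + cos k2)/2) at a sample point.
omega_explicit = sp.acos(-(sp.cos(k1) + sp.cos(k2)) / 2)
point = {k1: sp.Rational(7, 10), k2: sp.Rational(19, 10)}
at = {**point, w: omega_explicit.subs(point)}
grad_implicit = [sp.N(g.subs(at)) for g in grad]
grad_explicit = [sp.N(sp.diff(omega_explicit, k).subs(point)) for k in ks]
H_implicit = H.subs(at).evalf()
H_explicit = sp.hessian(omega_explicit, ks).subs(point).evalf()
print(grad_implicit, grad_explicit)
print(sp.N(hess_det.subs(at)))
```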

Both equations (13) and (16) may be used to describe the bifurcation curves; however, these equations depend on derivatives of . We can solve for these derivatives in terms of the characteristic polynomial by taking derivatives of equation (10) with respect to . Let us take a first derivative with respect to :

| (17) |

Noticing that , we can rearrange terms to write:

| (18) |

This can be substituted into equation (13) to find:

| (19) |

We take an additional derivative of equation (17) with respect to . If , then we can write:

| (20) |

If we rearrange terms and substitute equation (18), we have:

| (21) |

Meanwhile, if then we must account for an additional term:

| (22) |

Expanding this expression and making similar substitutions, we find:

| (23) |

We can substitute equations (21) and (23) into equation (16) and eliminate the denominator factors to arrive at a polynomial equation in and , which we term the exponential Hessian determinant.

The equations (10), (16), and (19) make up a system of multivariate polynomial equations in variables; these are , , and . Using a Gröbner basis calculation [7], we can reduce this system to a single equation in the spatial variables . Unfortunately, the exponential Hessian determinant is often prohibitively large, and the system requires significant computational resources to solve. However, we present a more feasible naïve method of bifurcation curve computation which, while not rigorously supported, generates curves that bear a striking visual resemblance to the quantum walk boundaries. Consider the polynomial system $f(x) = \sum_{i=0}^{m} a_i x^i = 0$ and $g(x) = \sum_{j=0}^{n} b_j x^j = 0$. We wish to find a resultant multivariate polynomial $\operatorname{Res}_x(f, g)$ in the coefficients such that selections of coefficients which admit simultaneous solutions of the polynomial system in $x$ also satisfy the equation $\operatorname{Res}_x(f, g) = 0$. Such a resultant may be computed using the determinant of a Sylvester matrix [23]:

$$\operatorname{Res}_x(f, g) = \det\begin{pmatrix}
a_m & a_{m-1} & \cdots & a_0 & & \\
 & \ddots & \ddots & & \ddots & \\
 & & a_m & a_{m-1} & \cdots & a_0 \\
b_n & b_{n-1} & \cdots & b_0 & & \\
 & \ddots & \ddots & & \ddots & \\
 & & b_n & b_{n-1} & \cdots & b_0
\end{pmatrix},$$

where the first $n$ rows contain shifted copies of the coefficients of $f$, the last $m$ rows contain shifted copies of the coefficients of $g$, and all blank entries are zero.

In this notation, $x$ is the variable being cancelled. There are two shortcomings with this formula. First, this resultant will often overrepresent solutions in the system, in the sense that solutions of $\operatorname{Res}_x(f, g) = 0$ in the coefficients may not admit solutions of the corresponding polynomial system in $x$. For example, consider the system $ax + b = 0$ and $cx + d = 0$, for which the resultant equation is $ad - bc = 0$. If we let $a = c = 0$, then the resultant equation is trivially satisfied, but any nonzero choice of $b$ or $d$ leads to a polynomial system with no solutions. The second shortcoming of this procedure is that the resultant does not take into account any a priori restrictions on the cancelled variable. For instance, in our current quantum walk example, we will require that the cancelled variables satisfy . It is often difficult to discern which portions of the generated bifurcation curves satisfy these conditions, as these variables are absent from the resultant. Although this method overrepresents solutions of the polynomial system, it will not miss any of them, and we use our non-rigorous judgment to hypothesize which ones truly exist in the system.
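A direct transcription of the Sylvester-matrix resultant is sketched below with SymPy (the helper names are ours). It also illustrates the first shortcoming with the pair $ax + b$, $cx + d$: the resultant $ad - bc$ vanishes when $a = c = 0$, even though the system then has no solution unless $b = d = 0$.

```python
import sympy as sp

def sylvester_matrix(f, g, x):
    """Sylvester matrix of f and g, treated as polynomials in x (formal degrees)."""
    F = sp.Poly(f, x).all_coeffs()  # [a_m, ..., a_0]
    G = sp.Poly(g, x).all_coeffs()  # [b_n, ..., b_0]
    m, n = len(F) - 1, len(G) - 1
    S = sp.zeros(m + n, m + n)
    for i in range(n):              # n shifted copies of the coefficients of f
        for j, c in enumerate(F):
            S[i, i + j] = c
    for i in range(m):              # m shifted copies of the coefficients of g
        for j, c in enumerate(G):
            S[n + i, i + j] = c
    return S

def sylvester_resultant(f, g, x):
    """Resultant of f and g with respect to x, as the determinant of the Sylvester matrix."""
    return sp.expand(sylvester_matrix(f, g, x).det())

x, a, b, c, d = sp.symbols("x a b c d")
R_linear = sylvester_resultant(a * x + b, c * x + d, x)
print(R_linear)
# Setting a = c = 0 makes the resultant vanish, yet the system then needs b = d = 0.
print(R_linear.subs({a: 0, c: 0}))                                   # 0

# A common root (x = 2) gives a zero resultant; no common root gives a nonzero one.
print(sylvester_resultant((x - 2) * (x + 3), (x - 2) * (x + 5), x))  # 0
print(sylvester_resultant((x - 1) * (x + 3), x - 2, x))              # 5
```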

We use a simple extension of this method to solve a larger system of polynomial equations. Suppose we have a system of polynomial equations in variables with a set of variable coefficients. Let us write these polynomials as where . Using the Sylvester matrix determinant, let for ; in other words, we choose a base polynomial and compute resultants with the remaining polynomials in the system by cancelling . The new system has equations and variables. We can continue inductively to arrive at a total multivariate resultant polynomial in the coefficient variables. As with the Gröbner basis calculation, even small systems in multiple variables can lead to extremely large resultant polynomials. To combat this, we factor the intermediate polynomials in the system to create a tree of possible solutions, each branch of which is more feasible to compute. Also, depending on the structure of the system, different choices of base polynomials and different orders of variable cancellation can lead to significant changes in computation time.
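The inductive elimination with factoring can be sketched recursively in SymPy. In this illustration (the helper `elimination_tree` and the toy system are hypothetical, of our own choosing), each step uses the first polynomial as the base, computes resultants with the others via `sympy.resultant`, factors them with `sympy.factor_list`, and branches over the irreducible factors. For the system $x - s$, $x^2 + y^2 - r^2$, $y - x$ in the unknowns $x, y$ with coefficient variables $r, s$, it returns a single leaf proportional to $2s^2 - r^2$, which is the condition for the line $y = x$ to meet the circle of radius $r$ at $x = s$.

```python
import itertools
import sympy as sp

def elimination_tree(polys, variables):
    """Eliminate `variables` in order, using the first polynomial as the base each time.

    Every resultant is factored, and each combination of irreducible factors starts a
    separate branch; the leaves are lists of polynomials in the remaining symbols.
    """
    if not variables or len(polys) < 2:
        return [polys]
    v, rest_vars = variables[0], variables[1:]
    base, others = polys[0], polys[1:]
    factor_choices = []
    for p in others:
        res = sp.resultant(base, p, v)
        if res == 0:
            raise ValueError("identically zero resultant (shared factor); not handled here")
        _, factors = sp.factor_list(res)
        # A nonzero constant resultant has no factors, so this branch yields no leaves.
        factor_choices.append([f for f, _ in factors])
    leaves = []
    for choice in itertools.product(*factor_choices):
        leaves.extend(elimination_tree(list(choice), rest_vars))
    return leaves

x, y, r, s = sp.symbols("x y r s")
system = [x - s, x**2 + y**2 - r**2, y - x]
leaves = elimination_tree(system, [x, y])
print(leaves)  # one leaf, equal to 2*s**2 - r**2 up to sign
```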

Up to this point, the naïve method we have described for computing bifurcation curves is legitimate; we need only take care to judiciously select the correct bifurcation curves from the overrepresentation provided. However, the exponential Hessian determinant remains a massive roadblock and renders this naïve method just as intractable as the Gröbner basis method. It has been observed that replacing the exponential Hessian determinant with the far simpler equation results in bifurcation curve solutions which visually bound the regions of polynomial decay, though it is not clear how these curves can be rigorously justified. If we let , then there are no restrictions on the spatial variables in equation (20), and these are the variables of interest. A Gröbner basis calculation would fail to render spatial bifurcation curves in this case, while the naïve method still generates outputs. We have found that letting be the first base polynomial, and cancelling the variables before cancelling , leads to more digestible factors for the algorithm. In the subsequent section, we clearly state when the displayed bifurcation curves result from the Gröbner basis calculation and when they are derived from the naïve method.
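For comparison, the elimination step of the Gröbner basis route can be sketched with SymPy's `groebner` using a lexicographic order in which the variable to be eliminated is largest. The toy system below is our own choice, not a quantum walk: it encodes the rational parameterization $x(1+t^2) = 1 - t^2$, $y(1+t^2) = 2t$ of the unit circle. By the elimination theorem, the basis elements free of $t$ generate the relations between $x$ and $y$ alone, and the ideal contains $x^2 + y^2 - 1$.

```python
import sympy as sp

t, x, y = sp.symbols("t x y")
# Rational parameterization of the unit circle, written as polynomial equations.
system = [x * (1 + t**2) - (1 - t**2), y * (1 + t**2) - 2 * t]

# Lex order with t > x > y: by the elimination theorem, the basis elements that do not
# involve t generate the ideal of polynomial relations between x and y alone.
G = sp.groebner(system, t, x, y, order="lex")
implicit = [g for g in G.exprs if not g.has(t)]
print(implicit)
print(G.contains(x**2 + y**2 - 1))  # True
```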

4 Examples

In this section, we compute parameterizations of regions of polynomial decay for five different two-dimensional quantum walks and compute bifurcation curves where possible. In the following cases, characteristic polynomials of the multiplier matrix will often be symmetric (palindromic) quartic polynomials. Suppose we have a characteristic polynomial

such that and are functions of a vector . This polynomial can be factored as:

where and , otherwise and takes the opposite sign. These quadratic factors allow for an explicit representation of the eigenvalues, but recall that and we are searching for . Using implicit differentiation and previous equations, we find that the following holds:

| (24) |

This formula will grant us a parametric representation for the region of polynomial decay in these examples.
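As an illustration of this kind of factorization (our own sketch, assuming the monic palindromic form $\lambda^4 + a\lambda^3 + b\lambda^2 + a\lambda + 1$, whose sign conventions may differ from the paper's), the substitution $u = \lambda + 1/\lambda$ reduces the quartic to the quadratic $u^2 + au + (b - 2) = 0$. When the roots lie on the unit circle, $u = 2\cos\omega$ is real, so each quadratic factor $\lambda^2 - u\lambda + 1$ contributes the pair $e^{\pm i\omega}$ with $\cos\omega = u/2$.

```python
import cmath
import numpy as np

def palindromic_quartic_roots(a, b):
    """Roots of p(lam) = lam^4 + a lam^3 + b lam^2 + a lam + 1.

    Dividing p by lam^2 and setting u = lam + 1/lam gives u^2 + a u + (b - 2) = 0.
    Each root u yields the quadratic factor lam^2 - u lam + 1.
    """
    disc = cmath.sqrt(a * a - 4 * (b - 2))
    roots = []
    for u in ((-a + disc) / 2, (-a - disc) / 2):
        inner = cmath.sqrt(u * u - 4)
        roots += [(u + inner) / 2, (u - inner) / 2]
    return roots

# Unit-modulus roots exp(+-i w1), exp(+-i w2) correspond to real u = 2 cos(w) in [-2, 2].
w1, w2 = 0.4, 1.9
u1, u2 = 2 * np.cos(w1), 2 * np.cos(w2)
a, b = -(u1 + u2), u1 * u2 + 2
roots = palindromic_quartic_roots(a, b)
print(sorted(np.angle(roots)))  # approximately [-1.9, -0.4, 0.4, 1.9]
```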

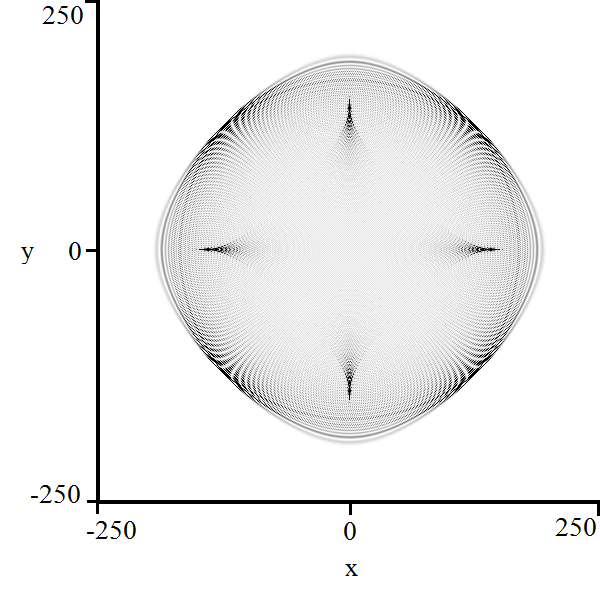

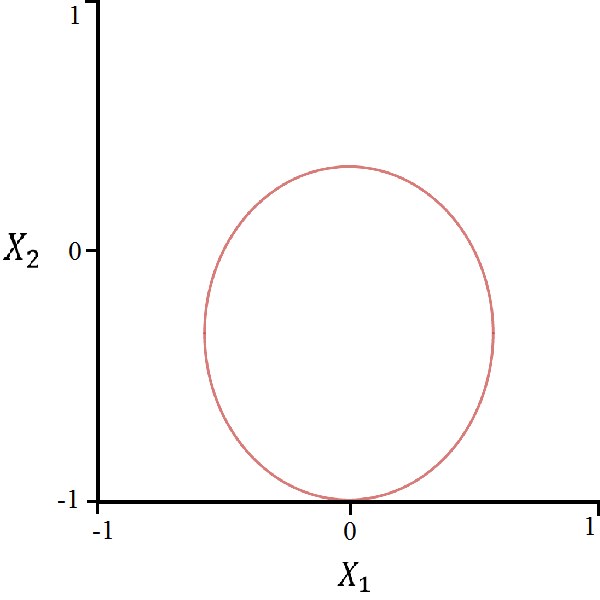

4.1 Four-State Grover Walk

We first consider the two-dimensional four-state Grover walk . Recall that the Grover matrix is written as:

We write the corresponding multiplier matrix as:

The characteristic polynomial of the multiplier matrix thus satisfies:

We pause to note that the solution causes a breakdown in the stationary phase approximation in that the only critical point that exists corresponding to this eigenvalue is at and is degenerate. This phenomenon is known as localization and has been explored by several authors [11] [12] [15] [21]; we will not elaborate any further on it in this paper. We will refer to constant solutions of the characteristic polynomial as trivial.
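Under an assumed convention $M(k) = \operatorname{diag}(e^{-ik\cdot s_c})\,G$ with directions ordered $e_1, -e_1, e_2, -e_2$, a short computation with the secular equation gives the factorization $(\lambda^2 - 1)(\lambda^2 + (\cos k_1 + \cos k_2)\lambda + 1)$, so the trivial eigenvalues are $\lambda = \pm 1$ for every $k$. The NumPy sketch below checks this factorization numerically at a few sample wave vectors; it is a consistency check, not a proof.

```python
import numpy as np

S = np.array([(1, 0), (-1, 0), (0, 1), (0, -1)])  # assumed ordering of directions
G = 0.5 * np.ones((4, 4)) - np.eye(4)             # four-state Grover coin (2/4) J - I

def multiplier(k1, k2):
    """M(k) = diag(exp(-i k . s_c)) G (an assumed convention)."""
    return np.exp(-1j * (S @ np.array([k1, k2])))[:, None] * G

def predicted_eigenvalues(k1, k2):
    """Roots of (lam^2 - 1)(lam^2 + (cos k1 + cos k2) lam + 1)."""
    return np.roots(np.polymul([1, 0, -1], [1, np.cos(k1) + np.cos(k2), 1]))

def same_roots(p, q, tol=1e-9):
    """True if every element of p is within tol of some element of q, and vice versa."""
    p, q = np.asarray(p), np.asarray(q)
    return (all(np.min(np.abs(q - z)) < tol for z in p)
            and all(np.min(np.abs(p - z)) < tol for z in q))

samples = [(0.3, 1.1), (2.0, -0.7), (1.5, 2.5)]
for k1, k2 in samples:
    eig = np.linalg.eigvals(multiplier(k1, k2))
    print(same_roots(eig, predicted_eigenvalues(k1, k2)))  # True
```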

As the non-trivial portion of the characteristic polynomial is quadratic, we can explicitly solve for the non-trivial eigenvalues and use a reduced version of equation (24) to construct a parameterization of the region of polynomial decay:

| (25) |

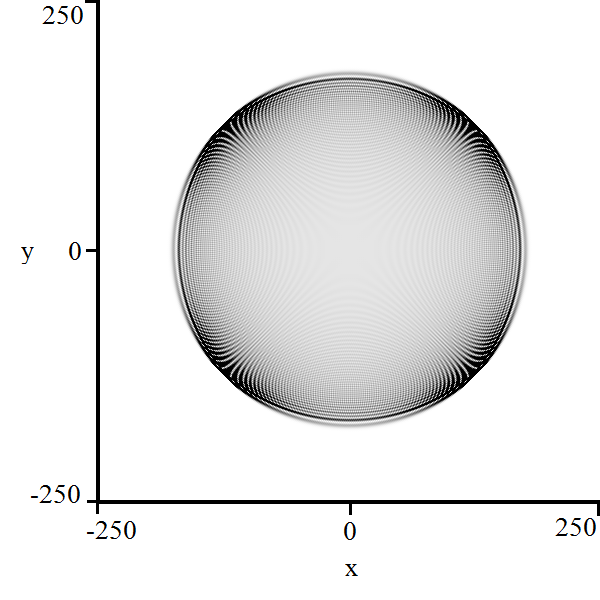

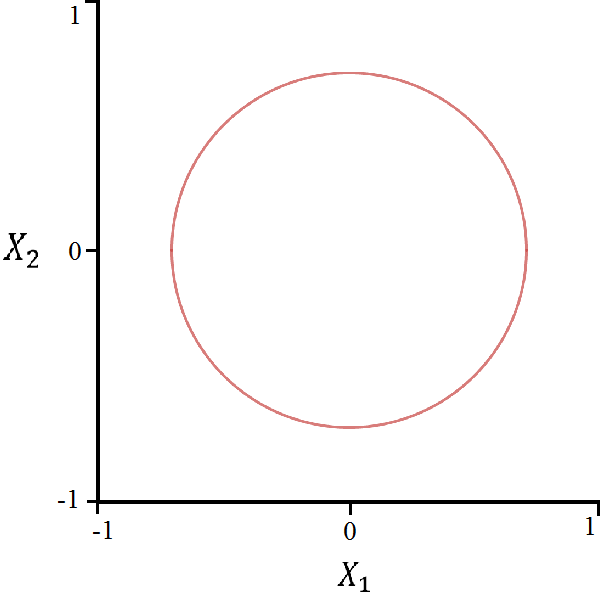

It was shown in Kuklinski [17] that this parameterization traces the circle in , albeit in an atypical way.
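The parameterization can also be probed numerically. Assuming the non-trivial factor $\lambda^2 + (\cos k_1 + \cos k_2)\lambda + 1$ (obtained under the multiplier convention $M(k) = \operatorname{diag}(e^{-ik\cdot s_c})\,G$, an assumption on our part), implicit differentiation of $2\cos\omega + \cos k_1 + \cos k_2 = 0$ places the critical point at $\pm(\sin k_1, \sin k_2)/(2\sin\omega)$, with the sign fixed by the Fourier convention. The sketch evaluates the squared distance $|r|^2$ on a grid and checks the identity $\tfrac12 - |r|^2 = (\cos k_1 - \cos k_2)^2 / \bigl(2(4 - (\cos k_1 + \cos k_2)^2)\bigr)$, which suggests that, under these assumptions, the bounding circle has radius $1/\sqrt{2}$ and is reached exactly when $\cos k_1 = \cos k_2$.

```python
import numpy as np

# Non-trivial branch of the four-state Grover walk: 2 cos(omega) = -(cos k1 + cos k2).
# Implicit differentiation gives |r|^2 = (sin^2 k1 + sin^2 k2) / (4 - (cos k1 + cos k2)^2).
N = 400
k = -np.pi + (np.arange(N) + 0.5) * (2 * np.pi / N)  # offset grid avoids k = 0 and k = pi
K1, K2 = np.meshgrid(k, k, indexing="ij")
c1, c2 = np.cos(K1), np.cos(K2)
speed_sq = (np.sin(K1) ** 2 + np.sin(K2) ** 2) / (4 - (c1 + c2) ** 2)

# Algebraic identity: 1/2 - |r|^2 = (cos k1 - cos k2)^2 / (2 (4 - (cos k1 + cos k2)^2)) >= 0,
# so every point lies in the closed disk of radius 1/sqrt(2).
gap = (c1 - c2) ** 2 / (2 * (4 - (c1 + c2) ** 2))
print(speed_sq.max())                    # close to 0.5 (attained on the diagonal k1 = k2)
print(np.allclose(0.5 - speed_sq, gap))  # True
```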

In this example, we can in fact solve for the bifurcation curve. By letting and , we can write the characteristic equation in a different way:

The corresponding exponential Hessian determinant is small enough that the system may be efficiently reduced via Gröbner basis computation. The result is as we expect:

| (26) |

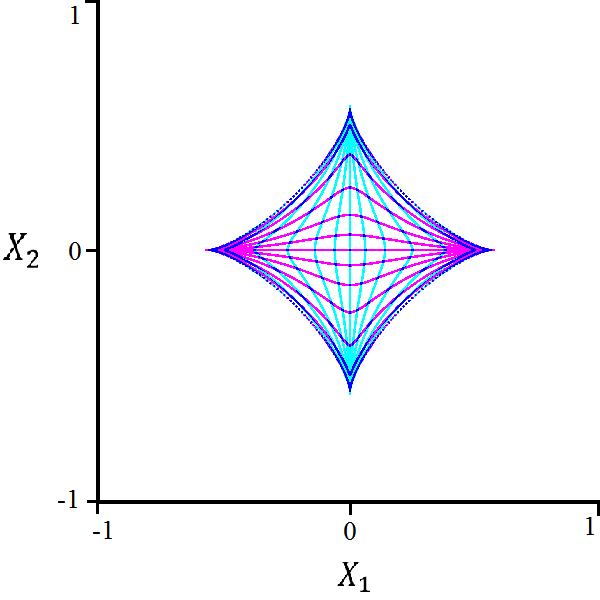

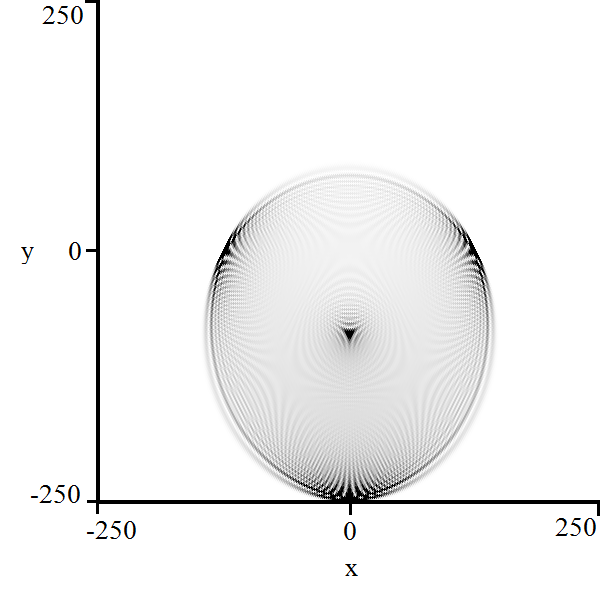

4.2 Five-State Grover Walk

We explore a variant of the four-state Grover walk [4] with the operator . The Grover matrix is written as:

We write the corresponding multiplier matrix as:

The characteristic polynomial of this multiplier matrix satisfies

where and . Notice that the eigenvalue leads to localization in this walk as well.
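Assuming the five-state operator uses the four unit moves plus a "stay" move, the $5 \times 5$ Grover coin $\frac{2}{5}J - I$, and the multiplier convention $M(k) = \operatorname{diag}(e^{-ik\cdot s_c})\,G$ (all assumptions on our part), one can check numerically that $\lambda = 1$ is an eigenvalue at a generic $k$ and that dividing it out leaves a palindromic quartic with real coefficients, which is the symmetric form factored at the start of this section.

```python
import numpy as np

# Assumed five-state setup: four unit moves plus a "stay" move, with the 5x5 Grover coin.
S = np.array([(1, 0), (-1, 0), (0, 1), (0, -1), (0, 0)])
G = 0.4 * np.ones((5, 5)) - np.eye(5)  # (2/5) J - I

def multiplier(k1, k2):
    """M(k) = diag(exp(-i k . s_c)) G (an assumed convention)."""
    return np.exp(-1j * (S @ np.array([k1, k2])))[:, None] * G

def reduced_quartic(k1, k2):
    """Characteristic polynomial of M(k) divided by (lam - 1): quotient and remainder."""
    p = np.poly(multiplier(k1, k2))  # monic coefficients, highest degree first
    return np.polydiv(p, np.array([1.0, -1.0]))

q, rem = reduced_quartic(0.4, 1.7)
print(np.abs(rem).max())   # ~0: lam = 1 is an eigenvalue
print(np.round(q.real, 6)) # palindromic: q[i] == q[4 - i]
print(np.abs(q.imag).max())  # ~0: real coefficients
```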

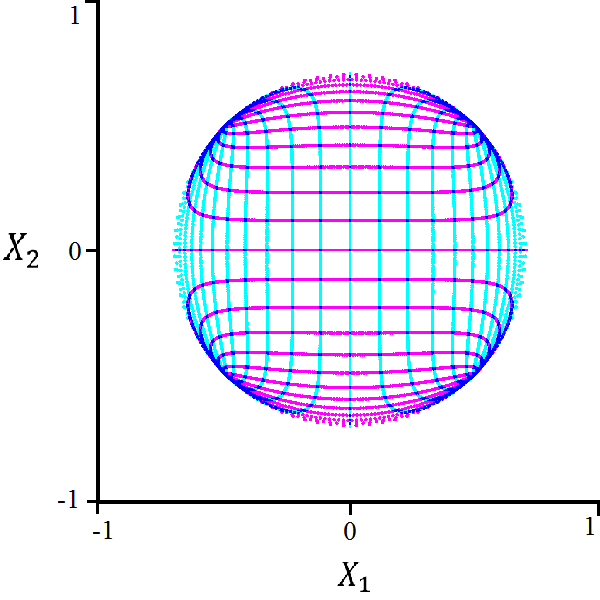

Using equation (24), we can write an explicit parameterization of the region of polynomial decay:

| (27) |

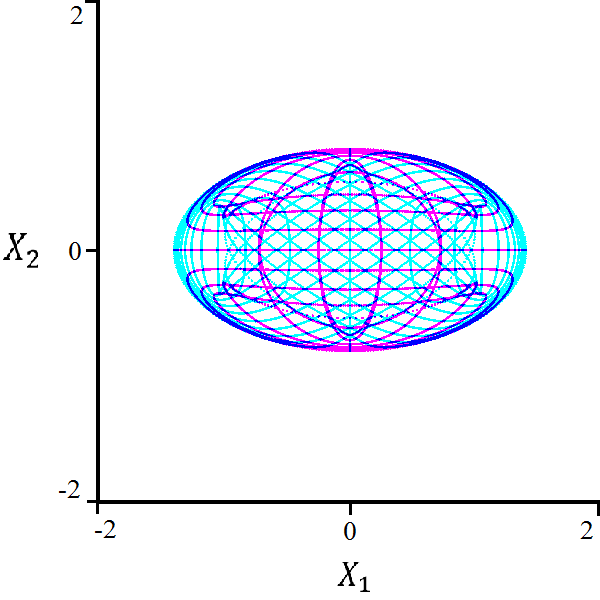

Here, , , and . Notice that in this case, the choice of plus/minus in results in different regions of polynomial decay.

To find the bifurcation curves of this quantum walk, we rewrite the characteristic polynomial:

In this example, the exponential Hessian determinant is several pages long, so a Gröbner basis calculation is computationally infeasible. We choose to illustrate the naïve method for this system. Let , , , and . We first cancel from this system by letting be the base polynomial:

The polynomials are irreducible. We can omit factors of in the second two equations as . If we substitute the factor from one of these equations, substitute into the remaining two, and then cancel from the resulting system, the generated curves do not visually fit the system, so we ignore this option and proceed with solving the system . By choosing to be the base polynomial and eliminating from the system, we find:

If we substitute from the second equation into , then we find:

| (28) | ||||

The remaining factors from the second polynomial do not satisfy , so we ignore these. Although this is not a proof, equation (28) visually replicates the boundary of both regions of polynomial decay. Notice that although we have found two distinct regions of polynomial decay in the parametric representation, this irreducible bifurcation curve traces the boundaries of both regions. We note that the outer curve has a maximum distance of and a minimum distance of from the origin, while the inner curve has a maximum distance of and a minimum distance of from the origin. These maxima are attained on the cardinal axes, and the minima are attained on the axes .

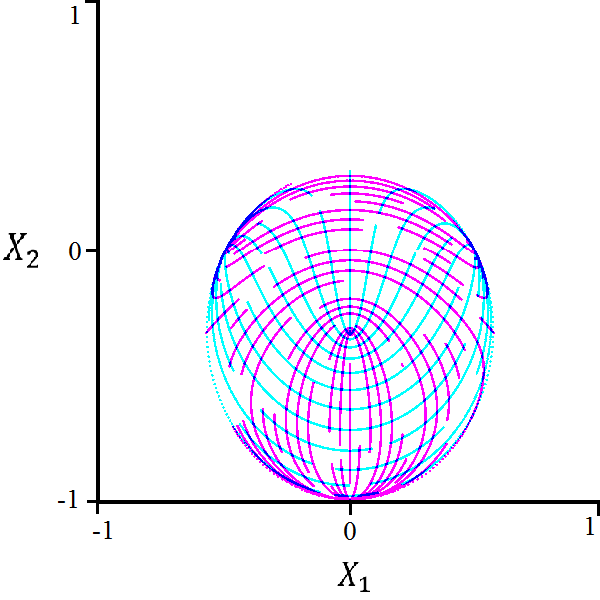

4.3 Triangular Quantum Walk

We now analyze the triangular Grover walk with operator where . The multiplier matrix of this operator may be written as:

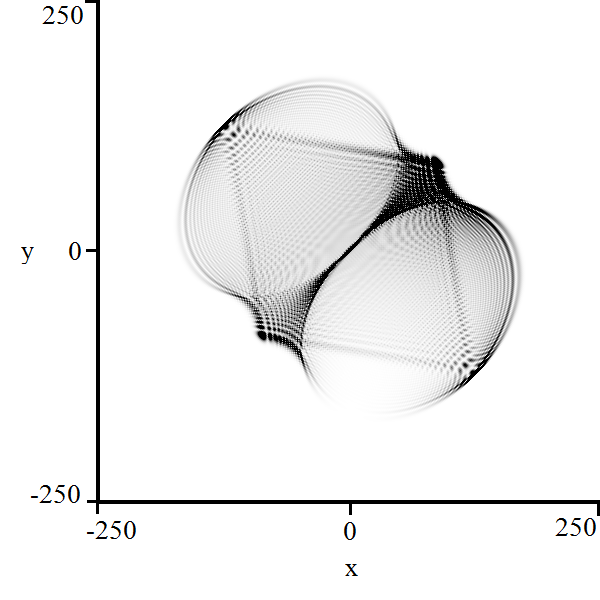

Letting , the eigenvalues of this matrix satisfy the following equation:

The structure of this polynomial does not lend itself to an analytic solution of without invoking the cubic formula [10]. We do not write the formula here, but we may graphically display this parameterization.

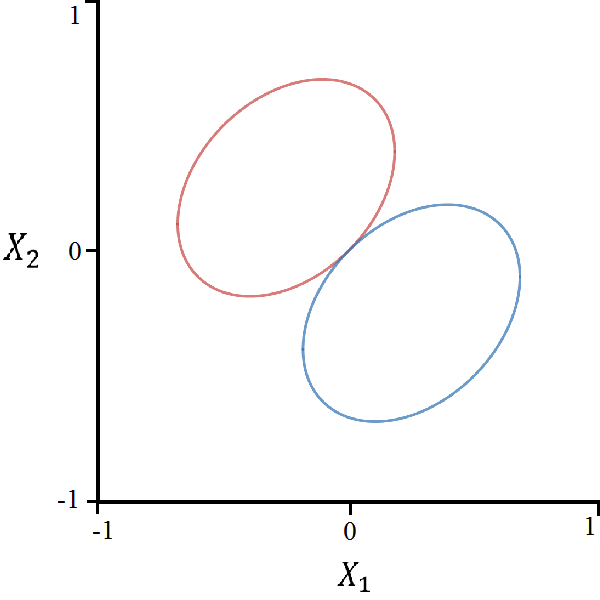

Furthermore, the Hessian determinant is too large to facilitate a Gröbner basis calculation for the bifurcation curves. However, the naïve elimination procedure outputs the bifurcation curve:

| (29) |

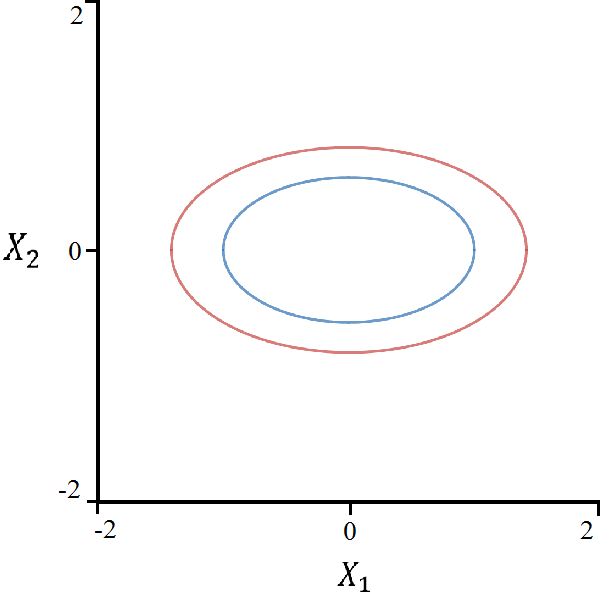

This equation represents an ellipse centered at with vertical major axis length and horizontal minor axis length .

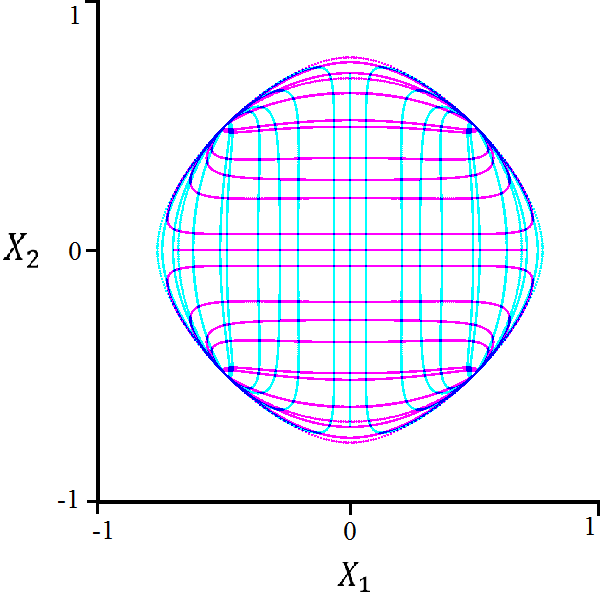

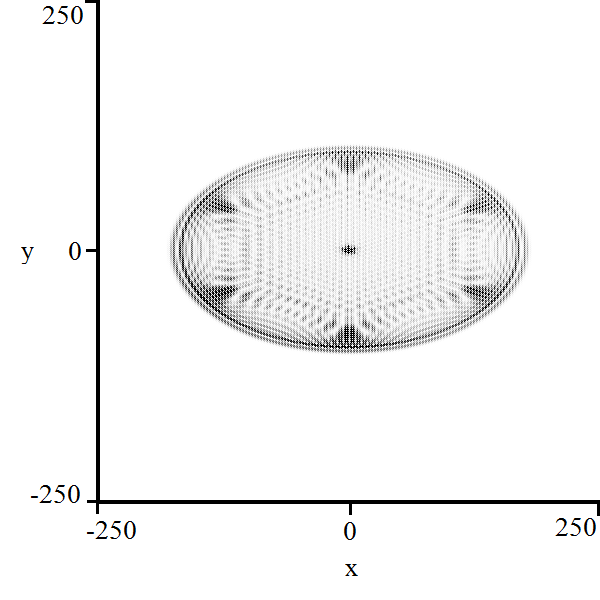

4.4 Hexagonal Quantum Walk

We now illustrate an example of a quantum walk which traverses a hexagonal lattice on . The hexagonal or honeycomb lattice has been the subject of a few quantum walk investigations [13] [18]. Let us define the set:

Consider the quantum walk operator where is the Pauli- gate [9] . In this case, amplitudes will travel on two separate hexagonal lattices. To be clear, this hexagonal Grover walk takes place on a subset of spanned by the elements of . The multiplier matrix of this quantum walk takes the form:

The characteristic polynomial of the multiplier matrix of this quantum walk operator is written as:

The factor indicates that localization is present in this walk. The remaining eigenvalues satisfy the following equation:

Since the characteristic polynomial is quadratic in , we can efficiently parameterize the region of polynomial decay:

| (30) |

Again, the characteristic polynomial is too large for a Gröbner basis calculation, but the naïve method leads to two possible bifurcation curves:

| (31) | ||||

| (32) |

These equations represent two concentric ellipses with major axis of length times the length of the minor axis. It seems likely that the larger ellipse in equation (31) is a bifurcation curve of the system, but it is uncertain whether the smaller ellipse in equation (32) is a legitimate bifurcation curve.

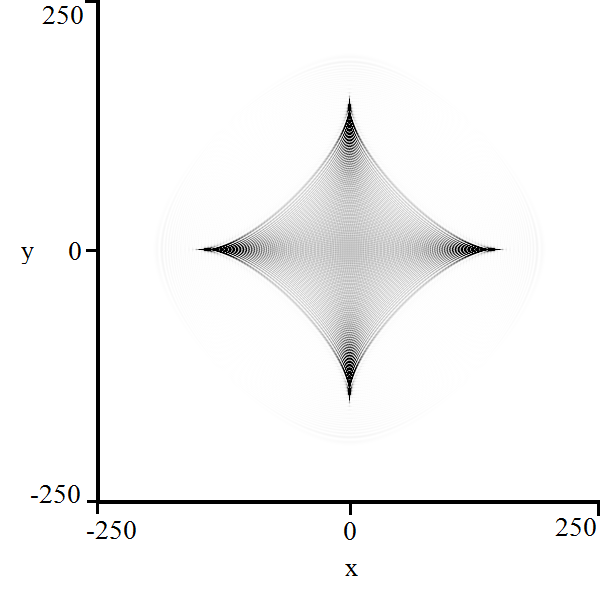

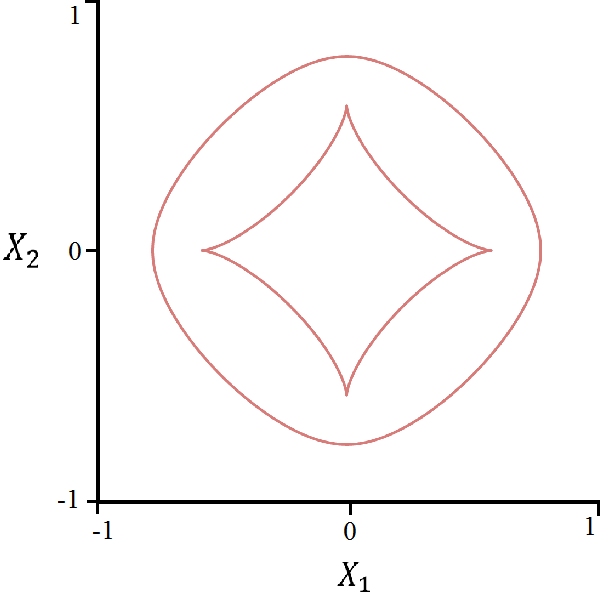

4.5 Four-State Hadamard Walk

In the final example we consider a quantum walk governed by a different unitary matrix. Let such that the corresponding multiplier matrix becomes:

The characteristic polynomial of this matrix becomes:

This is not a symmetric quartic polynomial, but the parameterization of the region of polynomial decay may still be obtained using a modification of equation (24):

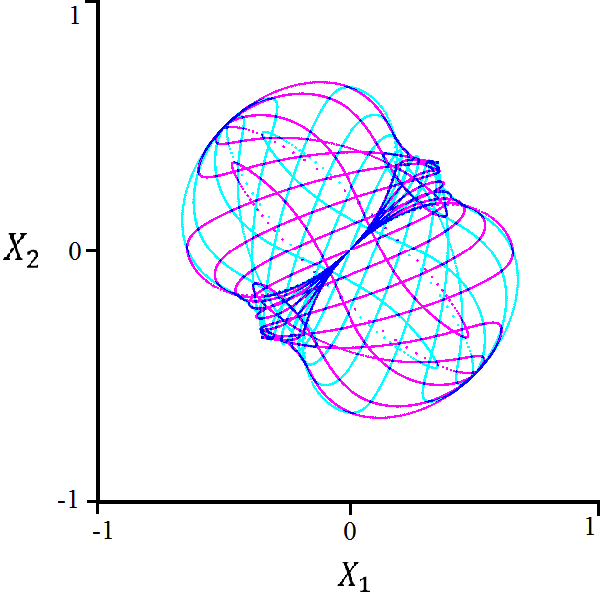

| (33) |

Here, we let , , and .

By letting and , we can rewrite the characteristic polynomial:

This characteristic polynomial is too large for a Gröbner basis calculation, and even the naïve method cannot generate a complete set of outputs. However, this algorithm is capable of generating the equations for the two main ellipses in the region of polynomial decay:

| (34) | ||||

| (35) |

The major axes of these ellipses are parallel to the line and have length 1, while the minor axes have length . Although they did not appear in the calculation, we predict that the set of bifurcation curves also includes a rhombus and a order algebraic curve.

5 Conclusion

In this paper, we have detailed a process to compute bifurcation curves of two-dimensional quantum walks and described a non-rigorous algorithm to trace bifurcation curves for more complicated examples which are difficult to solve analytically. In addition, we have provided parameterizations of the regions of polynomial decay. These methods are not unique to two-dimensional quantum walks and, with sufficient computational resources, could potentially be extended to computing bifurcation surfaces for higher-dimensional quantum walks.

References


- [2] Olga Lopez Acevedo & Thierry Gobron (2005): Quantum walks on Cayley Graphs. Journal of Physics A: Mathematical and General 39(3), p. 585, 10.1103/PhysRevE.72.026113.

- [3] Andris Ambainis, Eric Bach, Ashwin Nayak, Ashvin Vishwanath & John Watrous (2001): One-Dimensional Quantum Walks. In: Proceedings of the thirty-third annual ACM symposium on Theory of computing, pp. 37–49, 10.1145/380752.380757.

- [4] Clement Ampadu (2011): Localization of two-dimensional five-state quantum walks. ArXiv preprint arXiv:1108.0984.

- [5] Yuliy Baryshnikov, Wil Brady, Andrew Bressler & Robin Pemantle (2011): Two-dimensional quantum random walk. Journal of Statistical Physics 142(1), pp. 78–107, 10.1007/s10955-010-0098-2.

- [6] Norman Bleistein & Richard A. Handelsman (1975): Asymptotic expansions of integrals. Courier Corporation.

- [7] David A. Cox, John Little & Donal O’shea (2006): Using Algebraic Geometry. Springer Science & Business Media.

- [8] Reinhard Diestel (2005): Graph Theory. Springer-Verlag. Graduate Texts in Mathematics, No. 101.

- [9] David J. Griffiths & Darrell F. Schroeter (1982): Introduction to Quantum Mechanics. Cambridge University Press.

- [10] Lucye Guilbeau (1930): The History of the Solution of the Cubic Equation. Mathematics News Letter, pp. 8–12, 10.2307/3027812.

- [11] Norio Inui, Yoshinao Konishi & Norio Konno (2004): Localization of two-dimensional quantum walks. Physical Review A 69(5), 10.1080/00107151031000110776.

- [12] Norio Inui, Norio Konno & Etsuo Segawa (2005): One-dimensional three-state quantum walk. Physical Review E 72(5), 10.1142/S0219749905001079.

- [13] M. A. Jafarizadeh & R. Sufiani (2007): Investigation of continuous-time quantum walk on root lattice An and honeycomb lattice. Physica A: Statistical Mechanics 381, pp. 116–142, 10.1016/j.physa.2007.03.032.

- [14] Norio Konno (2002): Quantum random walks in one dimension. Quantum Information Processing 1(5), pp. 345–354, 10.1023/A:1023413713008.

- [15] Norio Konno (2010): Localization of an inhomogeneous discrete-time quantum walk on the line. Quantum Information Processing 9(3), 10.1007/s11128-009-0147-4.

- [16] Erwin Kreyszig (1978): Introductory Functional Analysis with Applications. New York: Wiley.

- [17] Parker Kuklinski (2017): Absorption Phenomena in Quantum Walks. Ph.D. thesis, Boston University.

- [18] Changuan Lyu, Luyan Yu & Shengjun Wu (2015): Localization in quantum walks on a honeycomb network. Physical Review A 92(5), p. 052305, 10.1103/PhysRevA.82.012303.

- [19] T.D. Mackay, S.D. Bartlett, L.T. Stephenson & B.C. Sanders (2002): Quantum walks in higher dimensions. Journal of Physics A: Mathematical and General 35(12), p. 2745, 10.1103/PhysRevA.65.032310.

- [20] Armin Rainer (2013): Perturbation theory for normal operators. Transactions of the American Mathematical Society 365(10), pp. 5545–5577, 10.1090/S0002-9947-2013-05854-0.

- [21] Martin Stefanak, I. Bezdekova & Igor Jex (2012): Continuous deformations of the Grover walk preserving localization. The European Physical Journal D 66, p. 142, 10.1140/epjd/e2012-30146-9.

- [22] Elias M. Stein & Timothy S. Murphy (1993): Harmonic Analysis: real-variable methods, orthogonality, and oscillatory integrals. 3, Princeton University Press.

- [23] James Joseph Sylvester (2012): The Collected Mathematical Papers of James Joseph Sylvester. Cambridge University Press.