Bootstraps for Dynamic Panel Threshold Models

Abstract

This paper develops valid bootstrap inference methods for the dynamic short panel threshold regression. We demonstrate that the standard nonparametric bootstrap is inconsistent for the first-differenced generalized method of moments (GMM) estimator. The inconsistency arises from an -consistent non-normal asymptotic distribution of the threshold estimator when the true parameter lies in the continuity region of the parameter space, which stems from the rank deficiency of the approximate Jacobian of the sample moment conditions on the continuity region. To address this, we propose a grid bootstrap to construct confidence intervals for the threshold and a residual bootstrap to construct confidence intervals for the coefficients. They are shown to be valid regardless of the model’s continuity. Moreover, we establish a uniform validity for the grid bootstrap. A set of Monte Carlo experiments demonstrates that the proposed bootstraps improve upon the standard nonparametric bootstrap. An empirical application to a firm investment model illustrates our methods.

KEYWORDS: Dynamic Panel Threshold; Kink; Bootstrap; Endogeneity; Identification; Rank Deficiency; Uniformity.

JEL: C12, C23, C24

1 Introduction

Threshold regression models have been widely used by empirical researchers, which have been more fruitful because of their extensions to the panel data context. Estimation and inference methods for the threshold model in non-dynamic panels were developed by Hansen, 1999b and Wang, (2015). Dynamic panel threshold models were considered by Seo and Shin, (2016), which proposes the generalized method of moments (GMM) estimation by generalizing the Arellano and Bond, (1991) dynamic panel estimator. A latent group structure in the parameters of the panel threshold model was investigated by Miao et al., 2020b .

Applications of the panel threshold models cover numerous topics in economics. The effect of debt on economic growth is a well-known example that has been analyzed using the panel threshold models, e.g., Adam and Bevan, (2005), Cecchetti et al., (2011) and Chudik et al., (2017). Another example is the threshold effect of inflation on economic growth such as the works by Khan and Senhadji, (2001), Rousseau and Wachtel, (2002), Bick, (2010), and Kremer et al., (2013). The benefit of foreign direct investment to productivity growth that depends on the regime determined by absorptive capacity is studied by Girma, (2005) using firm-level panel data.

It is common practice to make inference in threshold regression models based on an assumption about whether the model is continuous or not. Continuous threshold models that have kinks at the tipping points have received active research attention, e.g., Hansen, (2017); Kim et al., (2019) and Yang et al., (2020). In the literature, kink threshold models are analyzed for estimators that impose the continuity restriction as in Chan and Tsay, (1998), Hansen, (2017), and Zhang et al., (2017). On the other hand, unrestricted estimators are commonly used for discontinuous threshold models as in Hansen, (2000). However, Hidalgo et al., (2019) showed that the unrestricted least squares estimator possesses a different asymptotic property in the absence of discontinuity. Specifically, while the unrestricted model is not misspecified under continuity, failing to impose the restriction results in incorrect inference without proper care.

In the empirical literature, there has been mixed use of kink/discontinuous threshold models without much consideration of a possible specification error. Among the empirical examples referred to previously, Khan and Senhadji, (2001) use a continuous threshold model and impose continuity on their estimation procedure. They claim that the continuous model is desirable to prevent small changes in inflation rate from yielding different impacts around the threshold level. On the other hand, Bick, (2010) claims that the discontinuous threshold model is more appropriate for the same research question since overlooking a regime-dependent intercept can result in omitted variable bias. However, both of them do not provide econometric evidence that supports their choice of models.

For the dynamic panel threshold model, asymptotic normality of the GMM estimator is derived by Seo and Shin, (2016) under the fixed scheme. However, the asymptotic normality is valid only for the discontinuous models since it requires a full rank condition on the Jacobian of the population moment, which is violated in continuous models. Although the continuity-restricted estimator described in Kim et al., (2019) is asymptotically normal, it may be problematic since empirical researchers often do not agree about whether their threshold models should have a kink or a jump at the threshold as in Khan and Senhadji, (2001) and Bick, (2010). Therefore, we are focusing on the unrestricted GMM estimator and bootstrap inference methods which do not require any pretest on continuity or prior knowledge about continuity of true models.

We first show that when the true model is continuous, the asymptotic normality of the unrestricted GMM estimator breaks down and the convergence rate of the threshold estimator becomes -rate, which is slower than the standard -rate. Moreover, the standard nonparametric bootstrap is inconsistent in this case because the Jacobian from the bootstrap distribution does not degenerate fast enough due to the slow convergence rate of the threshold estimator.

We propose two different bootstrap methods to obtain confidence intervals for the parameters that are consistent regardless of whether the true model is continuous or not. One is for the threshold location, and the other is for the coefficients. The two bootstrap methods achieve the consistency irrespective of the continuity of the model by adaptively setting the recentering parameter at the bootstrap for GMM introduced by Hall and Horowitz, (1996). This means that our bootstrap moment function achieves zero not at the sample estimator but at the parameter values that we propose. In the bootstrap for the threshold location, we employ a grid bootstrap to fix the recentering parameter. The grid bootstrap was originally proposed by Hansen, 1999a for inference on an autoregressive parameter and applies the test inversion principle. In case of the bootstrap for the coefficients, the recentering parameter is set to adjust the unrestricted estimator by a data driven criterion on the model’s continuity. We also introduce a bootstrap test of model continuity.

Furthermore, we establish the uniform validity of the grid bootstrap for the unknown continuity (or discontinuity) of the threshold model. The importance of uniform validity is well recognized in the literature, notably in the works of Mikusheva, (2007), Andrews and Guggenberger, (2009), and Romano and Shaikh, (2012), among others, who have studied the uniformity of resampling procedures. In particular, Mikusheva, (2007) showed the uniform validity of the grid bootstrap for linear autoregressive models. Our work extends this advantage of the grid bootstrap to a different class of nonstandard inference problems involving continuity of the model.

A set of Monte Carlo simulations demonstrates that the grid bootstrap performs favorably for inference on the threshold location, not only when the model is continuous but also when it includes a jump for various jump sizes. However, inference on the coefficients turns out to be more challenging. Bootstrap confidence intervals for the coefficient, based on percentiles of bootstrap distributions, tend to exhibit severe undercoverage. Nevertheless, our residual bootstrap method improves upon the standard nonparametric bootstrap in both cases.

We apply our inference methods to the dynamic firm investment model, whose static version has been studied by Fazzari et al., (1988) or Hansen, 1999b . It takes financial constraints into account via the threshold effect to determine a firm’s investment decision.

In the literature, Dovonon and Renault, (2013) and Dovonon and Hall, (2018) also deal with the degeneracy of the Jacobian in the context of the common conditional heteroskedasticity testing problem. And a bootstrap based test for the common conditional heteroskedasticity feature was proposed by Dovonon and Goncalves, (2017). However, their works do not deal with a discontinuous criterion function and their null hypothesis of interest always induces the degeneracy of the first-order derivative. That is, they are only concerned with a hypothesis testing and do not consider the confidence intervals. So, they do not have to address the uncertainty associated with the potential degeneracy of the Jacobian.

Meanwhile, there is also a substantial body of literature on singularity-robust inference such as Andrews and Cheng, (2012, 2014) and Han and McCloskey, (2019), among many others. They are motivated by weak or non-identification problems, where models are not point identified. In contrast, we focus on the inference problem that does not involve identification failure even though the Jacobian of the moment restriction can become singular. Andrews and Guggenberger, (2019) study more general singular cases than non-identification, but their approach requires differentiability of sample moments for the subvector inference. Since our model exhibits discontinuity, the method of Andrews and Guggenberger, (2019) is not applicable.

This paper is organized as follows. Section 2 explains the dynamic panel threshold model. Section 3 presents the asymptotic distribution theories of the estimators and test statistics related to the threshold location and continuity. Section 4 proposes bootstrap methods. Section 5 reports Monte Carlo simulation results. Section 6 contains an empirical application. Section 7 concludes. The mathematical proofs and technical details are left to the Appendix.

2 Dynamic Panel Threshold Model

We consider the dynamic panel threshold model,

| (1) |

where , , and is a regressor vector that includes and . The threshold variable is allowed to be endogenous and is the last element of .111Our analysis still holds if researchers have two sets of regressors and such that where is an element of . However, this paper sticks to the current form to keep the exposition simple. Then, we partition and write .

When consists of the lagged dependent variables, the model becomes the well-known self-exciting threshold autoregressive (TAR) model popularized by Chan and Tong, (1985). The static version where the lagged dependent variables are excluded from was considered by Hansen, 1999b , while the current dynamic model was studied by Seo and Shin, (2016).

The parameter denotes the threshold location, where is a compact set in , and denotes the collection of coefficients. Let denote the vector of all the parameters. The fixed effect is constant across time for each individual in the panel data. It is not identified but is eliminated after first-differencing for the GMM estimation. The idiosyncratic error is independent across individuals.

For the estimation, we use the GMM after the first-difference transformation

| (2) |

where

| (3) |

Let denote a set of instrumental variables at time such that becomes a zero vector, which may include lagged dependent variables and certain lagged variables of covariates and/or , depending on the assumptions regarding exogeneity of those variables.

Then, we can define a vector of moment functions for the GMM estimation,

| (4) |

where and is the earliest period that the regressor and instrument can be defined. For example, when and . Denote the population moment by and the sample moment by

We write instead of for simplicity of notations.

We consider the two-stage GMM estimation of the dynamic panel threshold model. In the first stage, we get an initial estimate by to compute a weight matrix

and obtain the second stage estimator

where . Seo and Shin, (2016) proposed averaging of a class of GMM estimators that are constructed from randomized first stage estimators. We do not pursue the averaging since our primary goal is the bootstrap inference.

In practice, the grid search algorithm is employed to compute the estimates. Note that when is given, can be easily computed because the problem becomes the estimation of the linear dynamic panel model. Then, minimizes the profiled criterion over the grid of .

Let denote the true parameter value that lies in the interior of . For the point identification of , should hold if and only if , where . Let

and . Additionally, define , , , , , and . We write , and instead of , and , respectively, for simplicity of notation. The identification condition is stated in Theorem 1 that follows.

Theorem 1.

Let the following two conditions hold:

(i) The matrix is of full column rank.

(ii) For any , is not in the column space of .

Then, is a unique solution to .

Theorem 1 (i) is the identification condition for the coefficients once the true threshold location is identified. This means that instruments should be relevant to the first-differenced regressors appearing in when .

Theorem 1 (ii) is for the identification of the threshold location, which excludes the possibility of . In the standard GMM problem, it is usually assumed that the Jacobian of at is of full column rank for both the point identification and the asymptotic normality of the GMM estimator. The condition (ii) does not require the full rank condition on the Jacobian, which is related to the presence of a jump in the threshold model, and thus it generalizes the identification conditions in Seo and Shin, (2016). When the model is continuous and has a kink at the threshold location, the last column of the Jacobian matrix, which is the first-order derivative with respect to at the true parameter, becomes a zero vector. This degeneracy does not violate the condition (ii), but it fails the asymptotic normality of the standard GMM estimator, which relies on the linearization of near as in Newey and McFadden, (1994).

To define the continuity, recall that is the last element of such that . Accordingly, partition , where and , and . Hence, is the change in the coefficient of the threshold variable when the threshold variable surpasses the tipping point. Likewise, and are the changes in the coefficients for the other regressors, , and the intercept, respectively. The continuity of the dynamic panel threshold model is formally given in Definition 1.

Definition 1.

Let . A dynamic panel threshold model is continuous with respect to the threshold variable if and . Otherwise, it is discontinuous at the threshold location.

Note that this definition of continuity requires that ; otherwise, .

The rank of the first-order derivative matrix, say , of at is crucial to the standard asymptotic normality of the GMM estimator. Let denote the first-order derivative of with respect to at . Then,

| (5) |

where the conditional expectation and the density function of are assumed to exist. The derivation of is provided in the proof of Lemma D.1. Note that the first-order derivative of with respect to at is . The linear independence of from the other columns in is required for the standard linear approximation

Recall that the vector can be written as the product of the matrix and the vector , (5), and the first and last columns of are linearly dependent since for all due to the conditioning. Then, the standard rank condition on the first derivative matrix can follow from a more primitive rank condition on , that is, the linear independence of all the columns in and all but the last column of , for the discontinuous case. Even if the primitive condition is met, however, the continuity restriction makes since for , which leads to degeneracy of .

When the rank condition fails due to the continuity, the expansion becomes

where

| (6) |

The detailed derivation is given in the proof of Lemma D.1. It is worth noting that is identical to the first column of up to a constant multiple. Then, the rank condition on is implied by the rank condition on . Thus, the rank condition on can be viewed as a sufficient condition for both Assumptions LK and LJ in the next section, apart from the continuity restriction on . Next section formalizes this discussion and presents the asymptotic distribution of the GMM estimator under the continuity.

3 Asymptotic theory

This section considers the asymptotic analysis when is fixed, the data are independent and identically distributed across , and . Specifically, the data for each individual is determined by the realization of , where denotes the initial value. We make the following assumptions.

Assumption G.

The parameter space is compact and . is of full column rank, and is not in the column space of for any . is positive definite. , , and are finite for all .

Assumption D.

For all , (i) has a continuous distribution and a bounded density , which is continuously differentiable at and . (ii) and are continuous on and continuously differentiable at .

Assumption LK.

has full column rank.

Assumptions G and D are similar to Assumptions 1 and 2 in Seo and Shin, (2016) except for the differentiability conditions in D which allow the second-order derivative of the population moment to be defined. Since the regressors include lagged dependent variables, G requires the individual fixed effects and initial values to have finite fourth moments, too. The assumption also includes the conditions in Theorem 1. LK is a rank condition for a nondegenerate asymptotic distribution when the underlying model is continuous. This condition may be viewed as less restrictive than the standard rank assumption as discussed in the preceding section where and are defined. For easy reference, we restate the standard full rank assumption for the asymptotic normality of the GMM estimator for the discontinuous threshold regression below.

Assumption LJ.

has full column rank.

In a simple model, where , LK is equivalent to LJ because while , where is the first column of in (5).

Theorem 2 below establishes the asymptotic distribution of the GMM estimator when the dynamic panel threshold model is continuous.

Theorem 2.

We observe that the convergence rate of is , which is slower than the standard -rate. Meanwhile, Seo and Shin, (2016) show the -convergence rate for when the model is discontinuous. Intuitively, it would be more difficult to detect the precise threshold location when there is a kink than when there is a jump at the tipping point. More technically, when the threshold model is discontinuous and the Jacobian is not singular, the limit of the GMM objective function admits a quadratic approximation with respect to at the true value, while the limit admits a quartic approximation for the continuous model. Hence, the limit objective function becomes flatter in at the true value resulting in the slower convergence rate. Hidalgo et al., (2019) also showed in the least squares context that when the model is continuous, the convergence rate of the threshold estimator slows down to , while it is superconsistent -rate when the model is discontinuous.

Moreover, we can observe that the asymptotic distribution of is also shifting to a non-normal distribution. Hence, standard inference methods based on the asymptotic normality become invalid for the continuous dynamic panel threshold model.

The asymptotic distribution of the GMM estimator is identical to the distribution reported in Theorem 1 (b) in Dovonon and Hall, (2018), which studies a smooth GMM problem with the degeneracy of the Jacobian. Theorem 2 shows that even though the criterion of our threshold model is discontinuous with respect to the parameter , the same asymptotic distribution as that of Dovonon and Hall, (2018) appears.

The censored normal distribution also appears in Andrews, (2002) which studies the estimation of a parameter on a boundary. Heuristically, because our analysis depends on the second-order derivative of for the local polynomial expansion of near , only the asymptotic distribution of can be derived. Since should be nonnegative, the asymptotic censored normal distribution appears as in Andrews, (2002). Meanwhile, Dovonon and Goncalves, (2017) show that the standard nonparametric bootstrap becomes invalid when the Jacobian degenerates. To address this issue, we propose different bootstrap methods in Section 4 for the inference of the parameters.

The asymptotic distribution in Theorem 2 can be used for parameter inference when the true model is continuous, but the estimator is obtained without imposing the continuity restriction. As discussed in Seo and Shin, (2016), and can be consistently estimated, while can be nonparametrically estimated similarly to . It is then straightforward to simulate the limit distribution from Theorem 2 by generating random numbers for and . However, there are several drawbacks to that approach, and hence we do not recommend it. First, empirical researchers might construct confidence intervals based on Theorem 2 when they cannot reject the continuity. However, Leeb and Pötscher, (2005) show that confidence intervals after model selection are subject to size-distortion. Second, even if the true model is known to be continuous, the continuity-restricted estimator explained in Kim et al., (2019) is more efficient and asymptotically normal. Therefore, using the continuity-restricted estimator for estimation and inference is preferable. Finally, the nonparametric estimation of requires a tuning parameter and has a slower convergence rate.

Seo and Shin, (2016) derived the asymptotic distribution of the GMM estimator and propose an inference method when the underlying model is discontinuous. When the true model is discontinuous and Assumptions G, D, and LJ hold,

can be estimated by . Note that , and can be estimated by , while the estimation of involves nonparametric estimation of the conditional means and densities. See section 4 of Seo and Shin, (2016) for more details. Note that diverges when the model is continuous since the last column of converges to a zero vector when it is consistent. This paper does not analyze the issue and leaves it for future research.

3.1 Testing for threshold value

Since the asymptotic distribution of the threshold estimator is not standard, we consider the GMM distance test introduced by Newey and West, (1987) for a hypothesis on the location of the threshold. Let the test statistic for the threshold location at be

and let denote the chi-square distribution with 1 degree of freedom.

Theorem 3.

(iii) If , then for any , .

Theorem 3 (i) presents the asymptotic distribution of the distance statistic under the continuity. Due to the censoring, the asymptotic distribution becomes a mixture of the distribution with weight 1/2 and zero with weight 1/2. This type of distribution also arises in the context of testing parameters on a boundary; see e.g., Andrews, (2001).

Meanwhile, the chi-square limit in Theorem 3 (ii) extends Newey and West, (1987) for a discontinuous moment function. Seo and Shin, (2016) did not study the distance statistic.

Theorem 3 (iii) shows that the GMM distance test for the threshold location is consistent. It also serves as the consistency of a bootstrap test together with Theorem 5 since the bootstrap statistic is stochastically bounded whether or not the threshold location is true.

Since the limit distribution depends on the continuity of the model, we introduce a bootstrap in Section 4.1, which is valid regardless of the model continuity. Furthermore, Appendix I establishes the uniform validity of the bootstrap inference for the threshold location under some simplifying assumptions.

3.2 Testing continuity

We propose a test for the continuity of the threshold model, similar to the approach used by Gonzalo and Wolf, (2005) or Hidalgo et al., (2023) in the threshold regression literature. While empirical researchers may employ the test to select a model, we utilize the test to modify the standard nonparametric bootstrap to make the bootstrap valid irrespective of the model continuity. Details of the use of the continuity test statistic in the bootstrap method are explained in Section 4.2.

The continuity hypothesis is a joint hypothesis. We employ the GMM distance test. Let be the continuity-restricted estimator. The GMM distance test statistic is

Theorem 4.

(i) When the true model is continuous and Assumptions G, D, and LK hold,

where , , , , , , and are independent, , and

(ii) If the model is discontinuous, then for any and .

While the limit distribution in Theorem 4 (i) is non-standard, it can be simulated to obtain critical values for the test using consistent plug-in sample analogue estimators, e.g., , , , etc. Another way to obtain the critical values is via a bootstrap method, which will be introduced in Section 4.3.

Theorem 4 (ii) shows that the continuity test is consistent. It also implies the consistency of the bootstrap test together with Theorem 7, which shows that the bootstrap test statistic is stochastically bounded even when the true model is not continuous. The divergence rate of , which is faster than for any , is exploited to modify the standard nonparametric bootstrap for the coefficients as detailed in Section 4.2.

4 Bootstrap

As usual, the superscript “*” denotes the bootstrap quantities or the convergence of bootstrap statistics under the bootstrap probability law conditional on the original sample. For example, denotes the expectation with respect to the bootstrap probability law conditional on the data. “, in ” denotes the distributional convergence of bootstrap statistics under the bootstrap probability law with probability approaching one. We write “, in ” if a sequence is stochastically bounded under the bootstrap probability law with probability approaching one. More details are written in Section B.1. Let denote the empirical quantile of a bootstrap statistic .

This section introduces three different bootstrap schemes. The first bootstrap is for constructing bootstrap confidence interval(CI)s for the threshold, while the second bootstrap is for constructing bootstrap CIs for the coefficients. Both methods aim to provide valid inferences, regardless of whether the model is continuous or not. The third bootstrap is for testing continuity of the threshold model. The three bootstrap methods can be represented by means of Algorithm 1 with suitable choices of .

In step 1, we resample the regressors, the instruments, and the residuals jointly to maintain the dependence among them, unlike in the usual residual bootstrap. See e.g., Giannerini et al., (2024) for the description of the standard residual bootstrap, which resamples the residuals only, and the wild bootstrap for the testing of linearity in the threshold regression. There could be other ways of resampling not mentioned here and we do not attempt to decide which is the best here.

The parameter is used in step 2 of Algorithm 1 to generate the dependent variables in the bootstrap samples. In step 4, recentering of the bootstrap sample moment is done by subtracting . Note that the expectation of by the bootstrap probability law conditional on the data becomes zero when due to the recentering, which can be easily checked from the following equations: and for .

A different choice of leads to a different bootstrap. For example, if , then the bootstrap becomes the standard nonparametric bootstrap in Hall and Horowitz, (1996) because holds true for and in step 2. Note that, for not equal to , step 2 of Algorithm 1 generates ’s that are generally different from ’s. The following subsections detail three different choices of for three different inference problems.

4.1 Grid bootstrap for threshold location

To construct CIs for the threshold location, we propose to employ the grid bootstrap method introduced by Hansen, 1999a for autoregressive models. Let be a grid of the candidate thresholds. The grid bootstrap constructs the confidence set by inverting the bootstrap threshold location tests over . Specifically, a sequence of hypothesis tests for the hypothesized threshold locations in are performed by the bootstrap that imposes the null to generate bootstrap samples.

The null imposed bootstrap at a point can be implemented by setting in Algorithm 1, and the bootstrap test statistic is

The null hypothesis is rejected at size if . Consequently, after running the null imposed bootstrap for each point in , we can construct the % confidence set of by

| (7) |

Note that the confidence set is not necessarily a connected set, even though researchers can convexify the set to get a connected CI. The CI does not become an empty set because while . The consistency of the grid bootstrap method is implied by Theorem 5 that follows.

Theorem 5 (i) and (ii) show that the limit distribution of the bootstrap test statistic, conditional on the data, is identical to that of the sample test statistic regardless of the continuity of the true model. Therefore, the CI for the threshold location by the grid bootstrap, (7), achieves an exact coverage rate for both continuous and discontinuous models asymptotically. Specifically, for both cases (i) and (ii). Theorem 5 (iii) says that the bootstrap test statistic is still stochastically bounded, conditionally on the data, under the alternatives. As Theorem 3 (iii) shows that the sample test statistic is stochastically unbounded under the alternatives, the grid bootstrap CI has power against the alternative threshold locations.

4.1.1 Uniform validity of grid bootstrap

We extend Theorem 5 to the uniform validity of the grid bootstrap to ensure its good finite sample performance when the model is nearly continuous. We establish the uniform validity for the following simplified specification for analytical tractability:

where and in this subsection.

This section briefly states the uniformity result of the grid bootstrap and gives heuristic justification. Our derivation follows Andrews et al., (2020). It is highly complicated and involves more technical conditions, which are stated in Appendix I.

Specifically, we establish in Theorem I.1 that

where is the probability law when the model is specified by and is the distribution of . The collection of probabilistic models includes both continuous and discontinuous threshold models. More detailed discussions of technical assumptions about are given in Appendix I.

For the uniformity analysis, we need to consider drifting sequences of true parameters such that and . Here, the distance between and is induced by a specific choice of norm that is explained in Appendix I. To show the uniform validity of the grid bootstrap CI, we need to verify that the limit distribution of conditional on the data is identical to the limit distribution of under all the above drifting sequences of models. Our analysis finds that the limit distribution of the threshold location test statistic under the true null, i.e., the limit distribution of , is determined by ; see Lemma I.1 for details. When , the limit distribution of is as described in Theorem 3 (i). In contrast, when , the limit distribution is the -distribution as in Theorem 3 (ii). When is finite and nonzero, then has a nonstandard limit distribution that depends on .

Therefore, if comprises a true parameter sequence of a bootstrap scheme, then should consistently estimate for the bootstrap statistics to exhibit the same asymptotic behavior as the sample statistics.

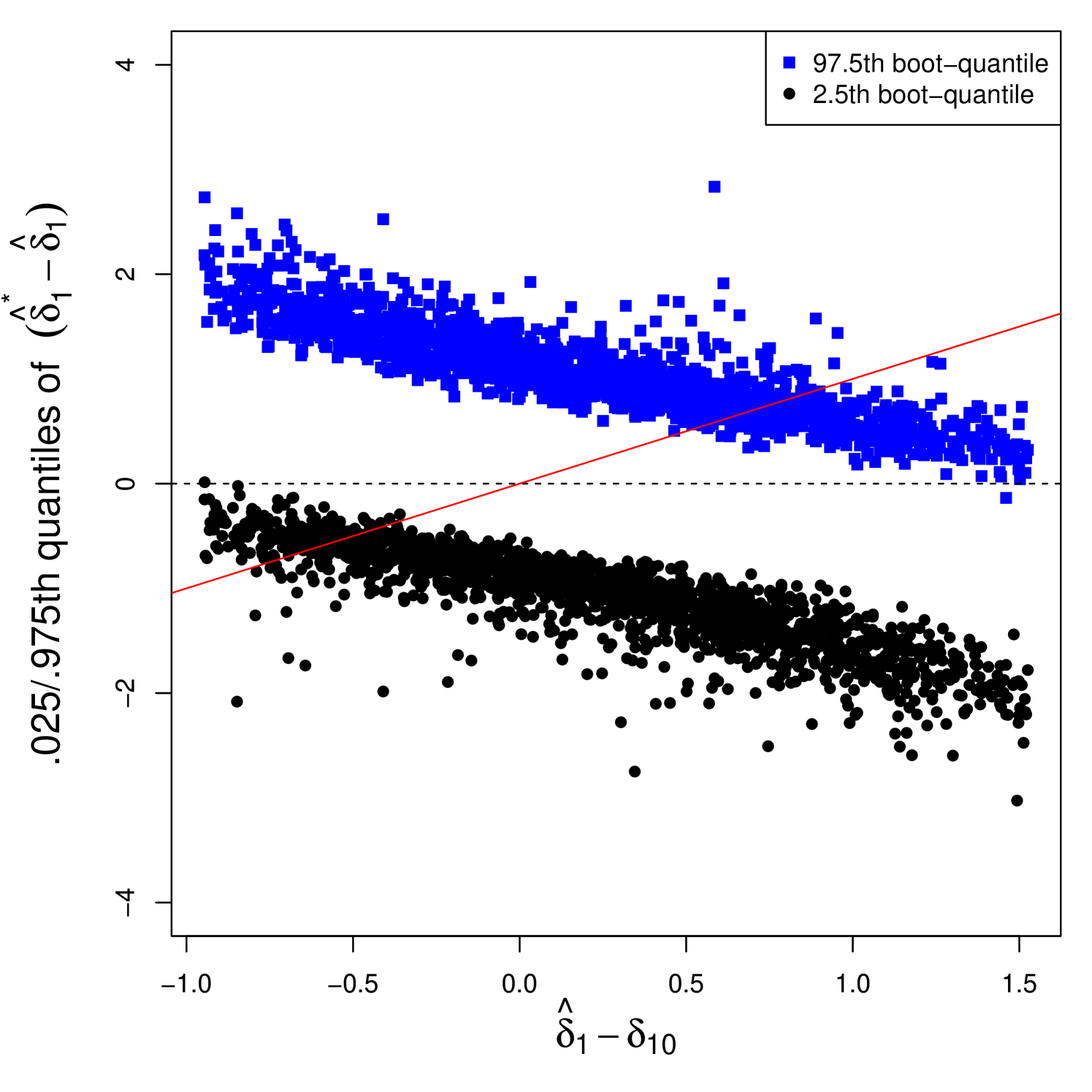

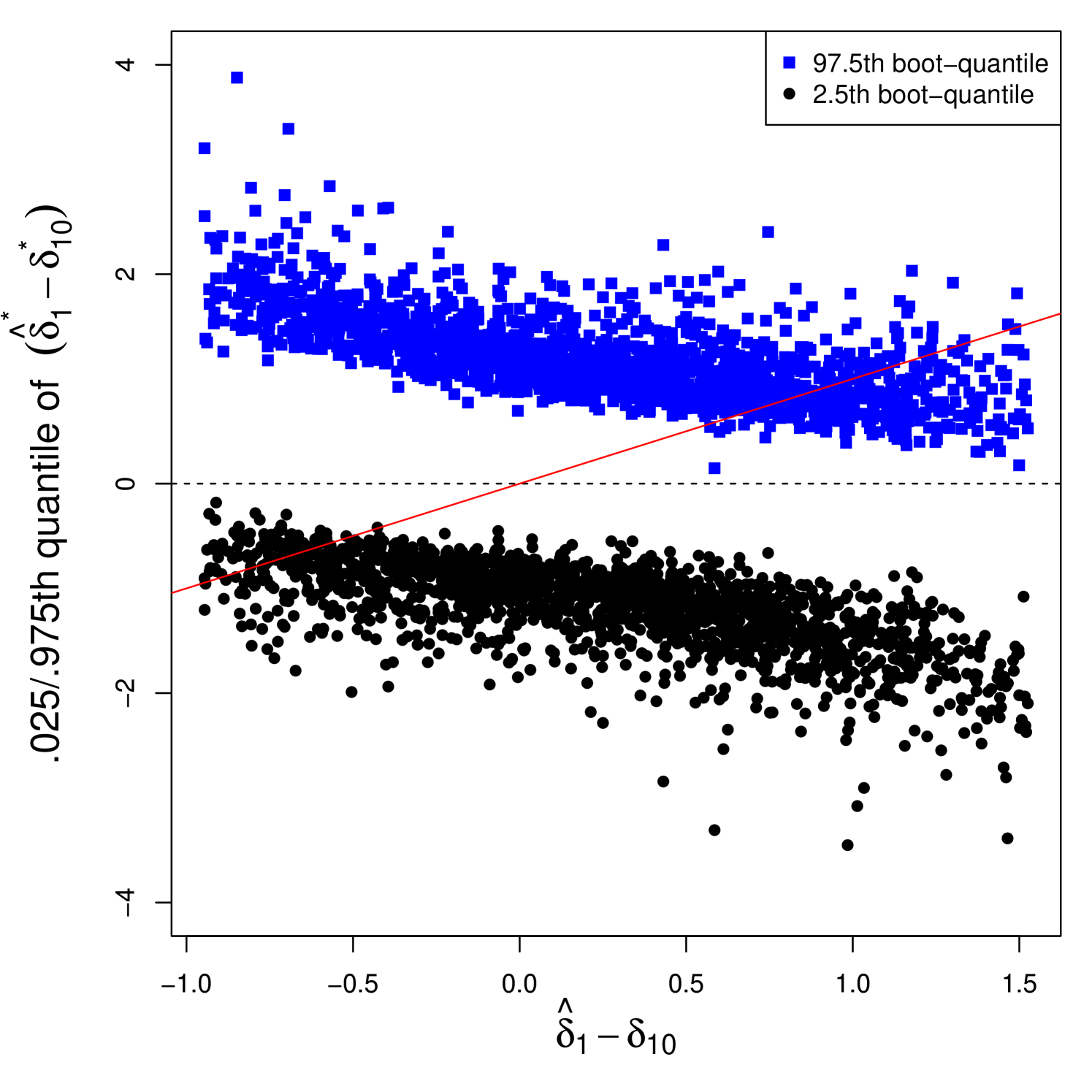

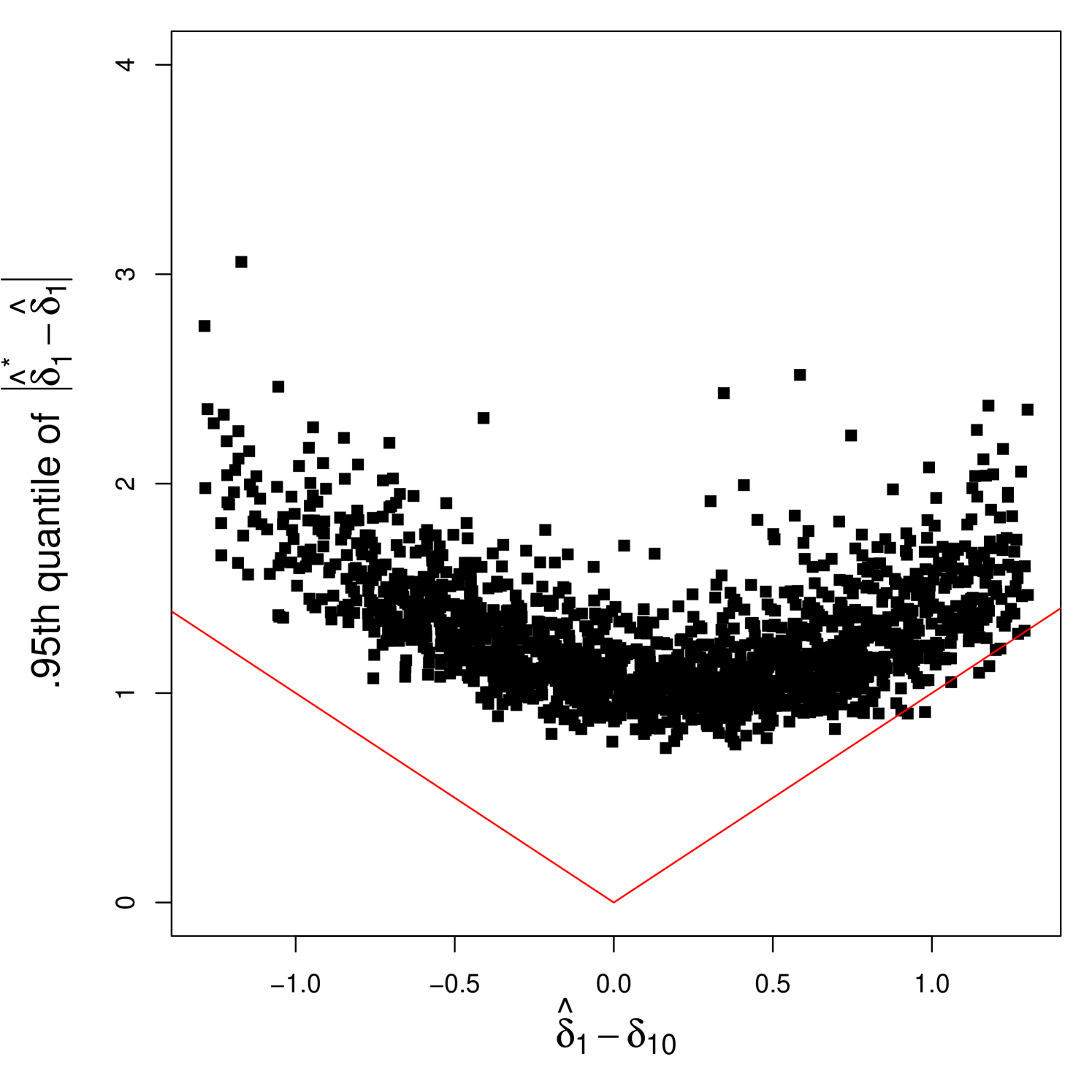

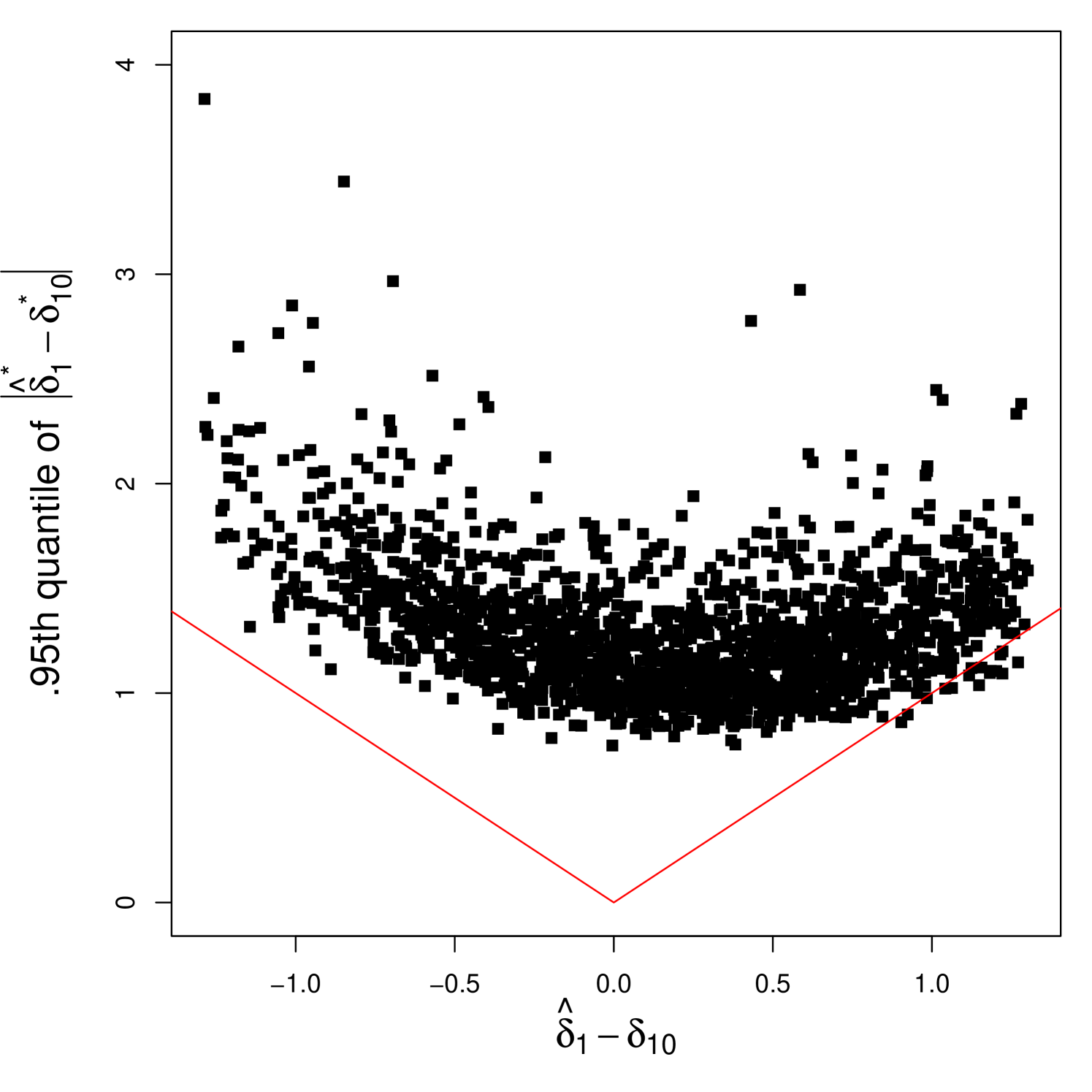

Note that under the grid bootstrap scheme, the bootstrap test statistic is drawn from the bootstrap that imposes the null threshold location . The true parameter of the bootstrap data generating process (dgp) is , where . The restricted estimator satisfies , as the problem becomes estimating a standard linear dynamic panel model, and hence . Therefore, conditionally converges to the limit distribution of , which leads to the uniform validity of the grid bootstrap confidence interval. In contrast, does not satisfy this property for some and the bootstrap building on may not be uniformly valid.

4.2 Residual bootstrap for coefficients

The bootstrap CIs for the coefficients can be obtained by applying Algorithm 1 with set as

| (8) |

where is the continuity-restricted estimator. is some estimated quantile, such as the th percentile, of the limit distribution of the continuity test statistic when the model is continuous. can be obtained either by methods in Section 3.2 or Section 4.3. Since if the true model is continuous, and if the model is discontinuous, the true parameter value for the bootstrap adapts to the model continuity.

After collecting the bootstrap estimators

we can construct the CIs for the coefficients using the percentiles of either or . Here, and are the th elements of and , respectively. The % CI for the th element of the coefficients, , can be constructed by

| (9) |

or

| (10) |

which leads to a symmetric CI. The validity of the residual bootstrap CI is implied by Theorem 6 that follows.

We make the following additional assumption to derive the limit distribution of the bootstrap estimator when the true model is discontinuous.

Assumption P.

The continuity-restricted estimator is .

The assumption holds if has full column rank for all . Details are explained in the comment after Lemma E.6.

Theorem 6.

The asymptotic distributions of the bootstrap estimators in Theorem 6, conditional on the data, match those of the sample estimators for both continuous and discontinuous cases. Therefore, the residual bootstrap CI becomes asymptotically valid in a pointwise sense, regardless of whether the model is continuous or discontinuous. We acknowledge that Theorem 6 does not guarantee the uniform validity of the bootstrap CI. The difficulty in establishing the uniform validity lies in analyzing asymptotic behaviors of and for drifting sequences of the true models. already exhibits an irregular limit distribution even in the pointwise setup, as shown in Theorem 4 (i). This paper does not provide a theoretical analysis regarding the uniformity of the residual bootstrap. Instead, we conduct Monte Carlo experiments for nearly continuous cases in Section 5 and leaves theoretical work on the uniformity of the bootstrap method to future research.

The key motivation for setting , the true parameter of the bootstrap dgp, by (8) is to make degenerate fast enough when the underlying model is continuous. The convergence rate of the unrestricted estimator to is not sufficiently fast. To see this, let the first-derivative of the population moment with respect to at be

| (11) |

for which we recall that and that under continuity. For the validity of a bootstrap method, the degeneracy of the Jacobian should be mimicked by the bootstrap dgp. In our residual bootstrap method, the Jacobian is . However, it is for the standard nonparametric bootstrap. This fails the standard nonparametric bootstrap. More formal treatment of the invalidity of the standard nonparametric bootstrap is given in Appendix F.

It is not difficult to check but not , which is directly implied by but not due to Theorem 2. Meanwhile, in our residual bootstrap method, and , which leads to . The exact formula for is provided in the comment of Lemma E.5.

According to the proof of Theorem 6 in Appendix B, is sufficient for the first-order asymptotic validity. This requirement is explicitly stated in the conditions of Lemma E.5. While our choice of decay rate for guarantees this condition, it remains an open question how fast must decay to ensure the uniform validity.

The idea of shrinking the first-order derivative in our bootstrap is closely related to other bootstrap methods developed for the case when asymptotic distributions of estimators are irregular. For example, Chatterjee and Lahiri, (2011) propose a bootstrap method for the lasso estimator, and Cavaliere et al., (2022) study bootstrap inference on the boundary of a parameter space. Both papers set up the model where the problem appears if the true parameter value is zero, and they obtain true parameters of bootstrap dgps by thresholding unrestricted estimators, i.e., , where converges to zero in a proper rate.

4.3 Bootstrap for testing continuity

The critical value for the continuity test introduced in Section 3.2 can also be obtained by bootstrapping. Recall that is the continuity-restricted estimator. By setting in Algorithm 1, and collecting the bootstrap test statistic

we can get the critical value using the empirical quantile of . To run the bootstrap continuity test at size , reject the continuity if , where is the empirical quantile of . The consistency of the bootstrap is implied by Theorem 7 that follows.

Theorem 7.

Assume that is obtained by Algorithm 1 with .

(i) When the true model is continuous and Assumptions G, D, and LK hold,

where the distributions of , , and are specified in Theorem 4.

(ii) When the model is discontinuous, then in .

Theorem 7 (i) shows that the limit distribution of , conditional on the data, is identical to that of under the null hypothesis. Moreover, Theorem 7 (ii) says that is still stochastically bounded, conditionally on the data, when the true model is discontinuous. As is shown to be stochastically unbounded under the alternative, according to Theorem 4 (ii), the bootstrap continuity test has power against the alternatives.

5 Monte Carlo results

This section executes Monte Carlo simulations to investigate finite sample performances of our bootstrap methods. The data is generated by

| (12) |

with , , , , , , , and . Note that (12) implies that the threshold variable is weakly exogenous. That is, for while for . Similar Monte Carlo results are obtained when the threshold variable is weakly endogenous, and they are reported in Appendix C.

To investigate how coverage rates of the CIs change depending on the continuity, we try different values of , which implies different degrees of (dis)continuity . If , then and the model is continuous. Otherwise, the model is discontinuous. As near continuous designs, we try and check if there is any poor performance of CIs. We generate samples of size and . The number of repetitions for the Monte Carlo simulations is 2000. Instruments used for the estimations are the lagged dependent variables that date back from period to period 1 and the lagged threshold variables from period to period 1, i.e., . The earliest period used for the estimation is , and the total number of the instruments becomes 24.

We begin with examining the finite sample coverage probabilities of bootstrap CIs for the threshold location. Specifically, the grid bootstrap CI (Grid-B) is compared with both percentile nonparametric bootstrap CI (NP-B) and symmetric percentile nonparametric bootstrap CI (NP-B(S)) that are defined as follows:

| (13) | ||||

| (14) |

The number of bootstrap repetitions is set at 500 for each bootstrap method.

Table 1 reports the coverage rates of 95% CIs for the threshold location. First, it shows that the bootstrap CI by NP-B is subject to severe undercoverage in all cases. This is the case even when , despite the theoretical validity of NP-B when the model is discontinuous. Meanwhile, NP-B(S) exhibits extreme over-coverage in all cases. The large discrepancy in the results between NP-B and NP-B(S) suggests that the distribution of the nonparametric bootstrap estimator is poorly behaved, undermining its reliability for inference. The large difference between symmetric and non-symmetric CIs also arises in the inference for the coefficients, which we analyze in more detail in Appendix C.

| n | 0 | 0.1 | 0.2 | 0.5 | 1 | |

| 400 | 0.992 | 0.995 | 0.993 | 0.988 | 0.966 | |

| Grid-B | 800 | 0.986 | 0.986 | 0.985 | 0.973 | 0.955 |

| 1600 | 0.988 | 0.987 | 0.988 | 0.979 | 0.959 | |

| 400 | 0.484 | 0.491 | 0.494 | 0.524 | 0.631 | |

| NP-B | 800 | 0.478 | 0.472 | 0.487 | 0.518 | 0.611 |

| 1600 | 0.471 | 0.468 | 0.476 | 0.521 | 0.642 | |

| 400 | 1.000 | 1.000 | 1.000 | 1.000 | 0.998 | |

| NP-B(S) | 800 | 1.000 | 1.000 | 1.000 | 0.999 | 0.994 |

| 1600 | 1.000 | 1.000 | 1.000 | 1.000 | 0.994 | |

On the other hand, Table 1 shows that Grid-B provides more reasonable coverage rates. It seems that a larger jump yields coverage rates closer to the nominal level as expected since it is easier to detect a bigger jump. As expected from the uniform validity of Grid-B against near continuity, coverage rates remain valid for all the parameter values, if somewhat over-coveraged near continuity or under smaller sample sizes.

Contrary to Grid-B, NP-B(S) exhibits higher coverage probabilities that are one or almost one for all cases. It indicates that NP-B(S) CIs are overly wide and non-informative. To investigate this further, we examine some power properties as reported in Table 2 below. It shows that NP-B(S) based tests for the threshold location are trivial for many parametrizations, specifically when the design is continuous or near-continuous or when the alternative is closer to the null. In contrast, Grid-B tests are more powerful, oftentime twice more powerful than NP-B(S) tests. Here, we report the power of the tests instead of the lengths of the bootstrap CIs due to computational burden associated with the grid bootstrap.

| Grid-B | NP-B(S) | ||||||||||

| c | n | 0 | 0.1 | 0.2 | 0.5 | 1 | 0 | 0.1 | 0.2 | 0.5 | 1 |

| 400 | 0.015 | 0.015 | 0.015 | 0.027 | 0.096 | 0.000 | 0.000 | 0.000 | 0.004 | 0.018 | |

| 0.10 | 800 | 0.011 | 0.014 | 0.015 | 0.038 | 0.112 | 0.000 | 0.000 | 0.000 | 0.004 | 0.017 |

| 1600 | 0.017 | 0.020 | 0.021 | 0.040 | 0.125 | 0.000 | 0.000 | 0.002 | 0.004 | 0.023 | |

| 400 | 0.020 | 0.030 | 0.042 | 0.100 | 0.281 | 0.002 | 0.004 | 0.009 | 0.043 | 0.135 | |

| 0.25 | 800 | 0.020 | 0.034 | 0.041 | 0.112 | 0.325 | 0.002 | 0.003 | 0.007 | 0.035 | 0.154 |

| 1600 | 0.029 | 0.034 | 0.048 | 0.126 | 0.351 | 0.002 | 0.006 | 0.007 | 0.044 | 0.152 | |

| 400 | 0.102 | 0.137 | 0.172 | 0.314 | 0.581 | 0.062 | 0.109 | 0.142 | 0.274 | 0.298 | |

| 0.50 | 800 | 0.114 | 0.162 | 0.207 | 0.362 | 0.632 | 0.078 | 0.117 | 0.169 | 0.310 | 0.327 |

| 1600 | 0.136 | 0.186 | 0.240 | 0.396 | 0.652 | 0.076 | 0.124 | 0.189 | 0.332 | 0.316 | |

Next, we turn to the coverage probabilities for the regression coefficients by different bootstrap CIs. Table 3 reports the coverage rates of bootstrap percentile CIs using the residual bootstrap (R-B), defined by (9), and the standard nonparametric bootstrap (NP-B), defined by

| (15) |

and in (8) is set as the 50th percentile of the bootstrap distribution of the test statistic under the null hypothesis that the model is continuous, using the bootstrap method explained in Section 4.3. Additional results on the coverage rates of the symmetric percentile CIs (NP-B(S) and R-B(S)) for the coefficients are reported in Appendix C.

As in the threshold inference case, the percentile CIs for the coefficients constructed using NP-B exhibit systematic undercoverage across all specifications and sample sizes. Even when , so that the model is discontinuous and the NP-B method is theoretically valid, the undercoverage remains severe. While the R-B method yields higher coverage rates than NP-B, they still fall short of the nominal 95% level. Moreover, as reported in Table 4, R-B results in wider average CI lengths compared to NP-B, partly accounting for its improved coverage.

Additional simulation results in Appendix C reveal highly asymmetric bootstrap distributions, which lead to one-sided inference failures because the bootstrap fails to reject the null when . These findings underscore the difficulty of reliable inference for the coefficients and . They echo similar concerns raised in the threshold regression literature; for instance, Hansen, (2000) documents comparable undercoverage issues for even when the threshold is estimated at a faster rate. A more comprehensive theoretical and methodological investigation is needed to address these challenges in future research.

| R-B | NP-B | ||||||||||

| n | |||||||||||

| 400 | 0.839 | 0.780 | 0.746 | 0.815 | 0.801 | 0.799 | 0.691 | 0.627 | 0.712 | 0.709 | |

| 0.0 | 800 | 0.837 | 0.790 | 0.721 | 0.807 | 0.806 | 0.790 | 0.723 | 0.607 | 0.725 | 0.716 |

| 1600 | 0.849 | 0.782 | 0.727 | 0.840 | 0.835 | 0.833 | 0.709 | 0.602 | 0.754 | 0.718 | |

| 400 | 0.837 | 0.784 | 0.749 | 0.813 | 0.799 | 0.794 | 0.697 | 0.624 | 0.706 | 0.708 | |

| 0.1 | 800 | 0.830 | 0.779 | 0.724 | 0.803 | 0.800 | 0.786 | 0.714 | 0.599 | 0.720 | 0.710 |

| 1600 | 0.853 | 0.787 | 0.727 | 0.840 | 0.829 | 0.827 | 0.700 | 0.598 | 0.760 | 0.719 | |

| 400 | 0.838 | 0.786 | 0.749 | 0.819 | 0.811 | 0.794 | 0.701 | 0.623 | 0.713 | 0.716 | |

| 0.2 | 800 | 0.833 | 0.776 | 0.720 | 0.803 | 0.794 | 0.784 | 0.707 | 0.585 | 0.718 | 0.712 |

| 1600 | 0.855 | 0.789 | 0.728 | 0.846 | 0.832 | 0.830 | 0.707 | 0.606 | 0.764 | 0.722 | |

| 400 | 0.836 | 0.775 | 0.739 | 0.820 | 0.802 | 0.787 | 0.703 | 0.601 | 0.718 | 0.724 | |

| 0.5 | 800 | 0.841 | 0.789 | 0.732 | 0.815 | 0.807 | 0.787 | 0.714 | 0.602 | 0.716 | 0.727 |

| 1600 | 0.843 | 0.799 | 0.728 | 0.826 | 0.834 | 0.815 | 0.717 | 0.595 | 0.753 | 0.737 | |

| 400 | 0.858 | 0.815 | 0.745 | 0.832 | 0.805 | 0.800 | 0.741 | 0.627 | 0.741 | 0.743 | |

| 1.0 | 800 | 0.858 | 0.827 | 0.749 | 0.846 | 0.820 | 0.808 | 0.731 | 0.620 | 0.741 | 0.738 |

| 1600 | 0.863 | 0.846 | 0.759 | 0.830 | 0.837 | 0.820 | 0.738 | 0.622 | 0.761 | 0.747 | |

| Ratios of average lengths of CIs: | ||||||

| R-B / NP-B | ||||||

| n | ||||||

| 400 | 1.076 | 1.091 | 1.099 | 1.074 | 1.046 | |

| 0.0 | 800 | 1.081 | 1.086 | 1.093 | 1.070 | 1.046 |

| 1600 | 1.088 | 1.100 | 1.111 | 1.083 | 1.057 | |

| 400 | 1.087 | 1.098 | 1.101 | 1.074 | 1.047 | |

| 0.1 | 800 | 1.080 | 1.082 | 1.090 | 1.075 | 1.043 |

| 1600 | 1.086 | 1.102 | 1.111 | 1.077 | 1.057 | |

| 400 | 1.080 | 1.088 | 1.097 | 1.074 | 1.047 | |

| 0.2 | 800 | 1.079 | 1.089 | 1.094 | 1.075 | 1.047 |

| 1600 | 1.085 | 1.100 | 1.106 | 1.077 | 1.054 | |

| 400 | 1.097 | 1.100 | 1.100 | 1.083 | 1.056 | |

| 0.5 | 800 | 1.083 | 1.095 | 1.089 | 1.076 | 1.051 |

| 1600 | 1.098 | 1.110 | 1.098 | 1.089 | 1.059 | |

| 400 | 1.164 | 1.159 | 1.084 | 1.114 | 1.074 | |

| 1.0 | 800 | 1.158 | 1.159 | 1.079 | 1.109 | 1.076 |

| 1600 | 1.158 | 1.177 | 1.084 | 1.109 | 1.079 | |

6 Empirical example

Our empirical example examines a firm’s investment decision model that incorporates financial constraints, as in Hansen, 1999b and Seo and Shin, (2016). In a perfect financial market, firms can borrow as much money as they need to finance their investment projects, regardless of their financial conditions. Therefore, the financial conditions of firms are irrelevant to their investment decisions. However, in an imperfect financial market, some firms may be restricted in their access to external financing. These firms are said to be financially constrained. Financially constrained firms are more sensitive to the availability of internal financing, as they cannot rely on external financing to fund their investment projects.

Fazzari et al., (1988) argue that firms’ investments are positively related to their cash flow if they are financially constrained, where those firms are identified by low dividend payments. Hansen, 1999b applies the threshold panel regression more systematically to show that a more positive relationship between investment and cash flow is present for firms with higher leverage.

Since there are multiple candidate measures of the financial constraint for the threshold variable, we compare the following three dynamic panel threshold models:

| (16) | ||||

| (17) | ||||

| (18) |

where . Here, is investment, is cash flow, is property, plant and equipment, and is return on assets. , and are normalized by total assets. We have two candidate threshold variables, and , which are leverage and Tobin’s Q, respectively. Choice of the regressors and threshold variables is based on previous works like Hansen, 1999b and Lang et al., (1996). Note that the regression model (18) is nested within (17) and it is closer to a continuous threshold model.

Unlike the previous works, we do not need to assume either continuity or discontinuity for valid inferences since the bootstrap methods in this paper are adaptive to each case. With an assumption that the regressors are predetermined, we use the variables dated one period before as instruments. Hence, the instruments include , , , added by or for each period.

We construct a balanced panel of 1459 U.S. firms, excluding finance and utility firms, from 2010 to 2019 available in Compustat. To deal with extreme values, we drop firms if any of their non-threshold variables’ values fall within the top or bottom 0.5% tails. Moreover, we exclude firms whose Tobin’s Q is larger than 5 for more than 5 years when the threshold variable is Tobin’s Q, leaving 1222 firms in the sample. Meanwhile, Strebulaev and Yang, (2013) claims that firms with large CEO ownership or CEO-friendly boards show persistent zero-leverage behavior. To prevent our threshold regression from capturing corporate governance characteristics rather than financial constraints, we exclude firms whose leverage is zero for more than half of the time periods when leverage is the threshold variable, leaving 1056 firms in the sample.

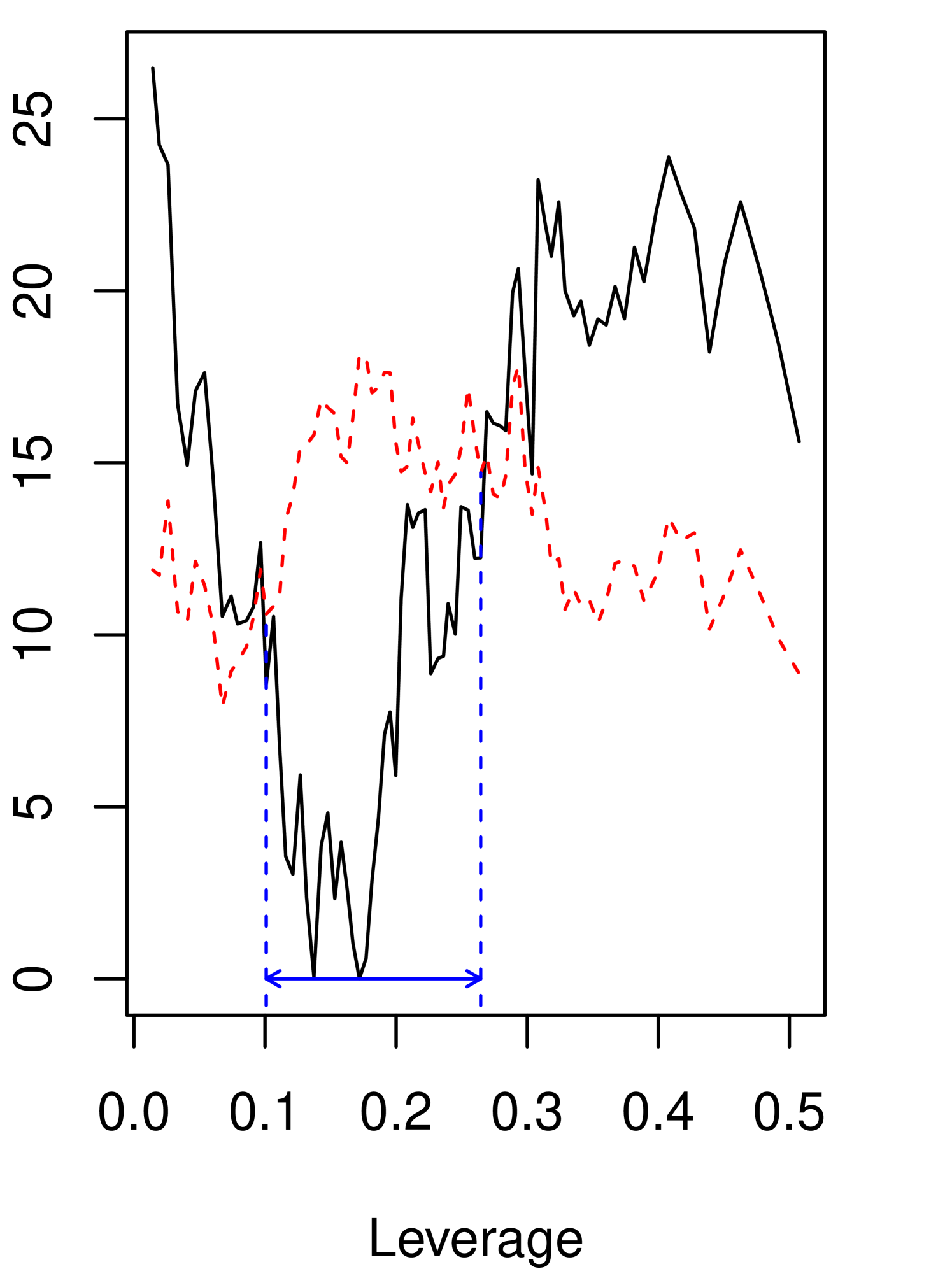

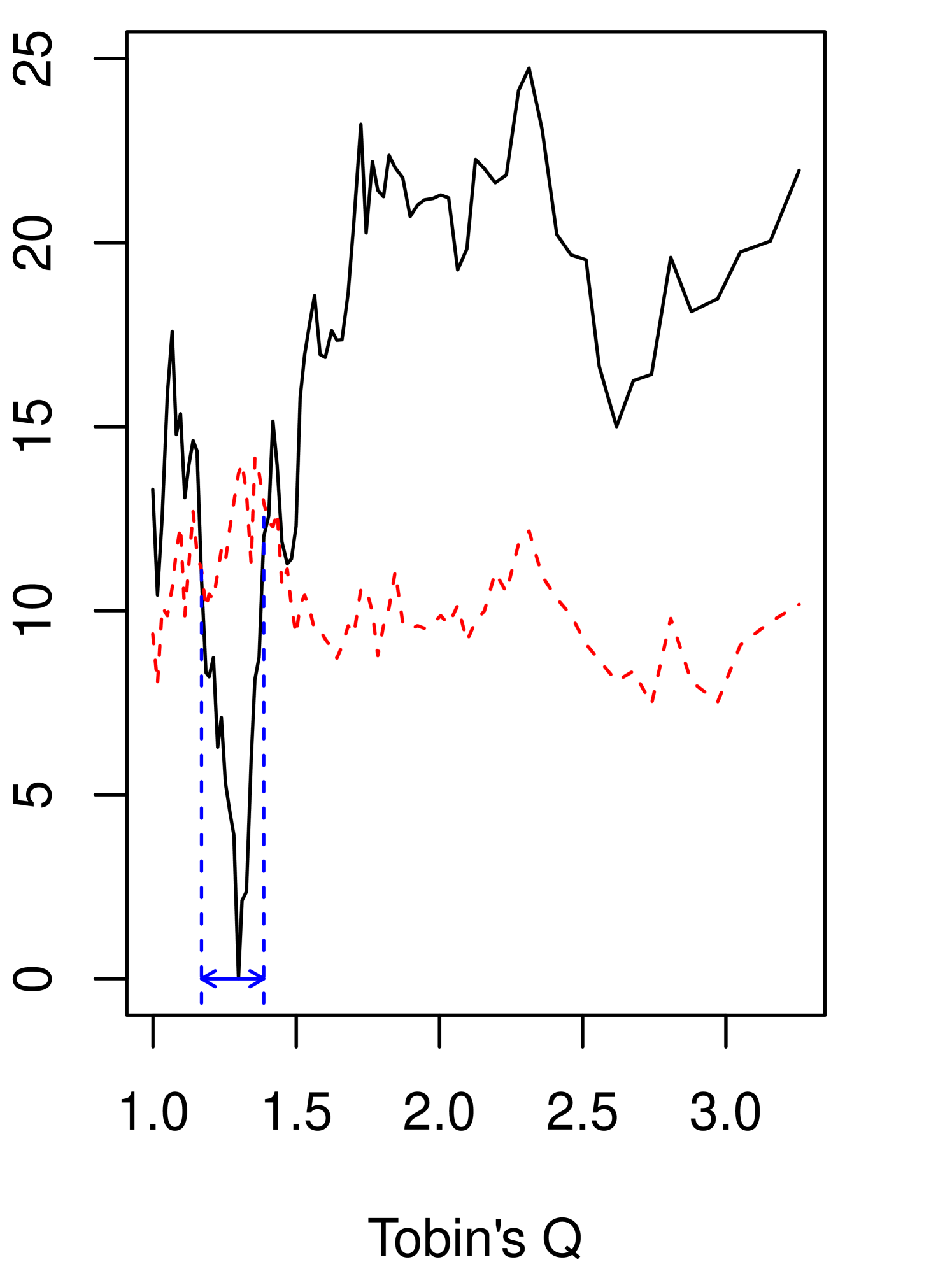

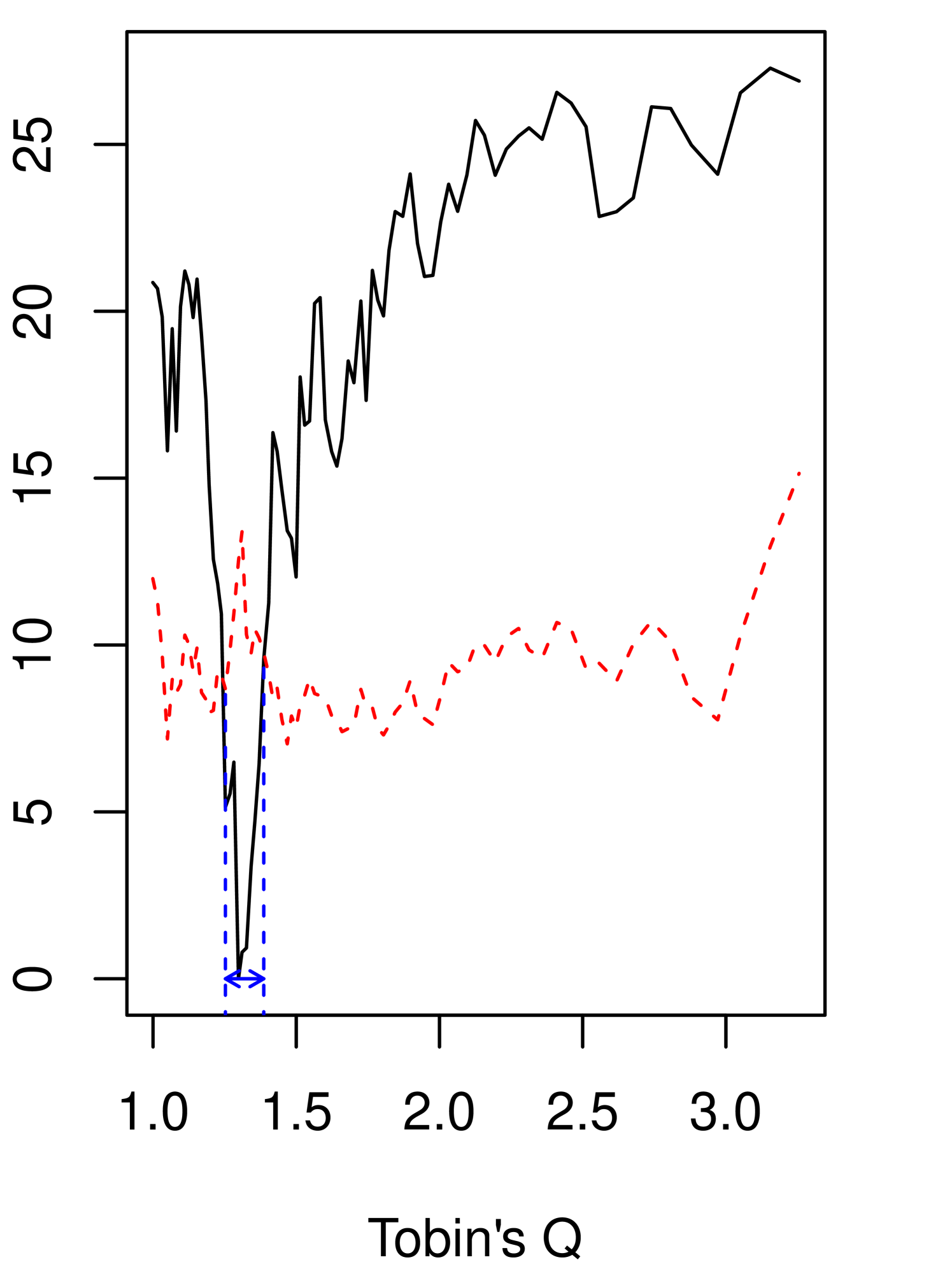

Table 5 reports the estimates and 95% CIs for (16) and (17), and Table 6 for (18). Figure 1 visualizes how the grid bootstrap CIs are obtained. The CIs for the coefficients are constructed by using the percentiles obtained from the residual bootstrap, defined as (10)222The symmetric percentile residual-bootstrap CIs that use the 0.05 quantiles of ’s return similar results, unlike in Monte Carlo results from Section 5. We report them in Appendix G.. for the precentile bootstrap is set at the 50th percentile of the bootstrap statistic for the continuity test, explained in Section 4.3. For the threshold locations, the CIs are obtained by the grid bootstrap with convexification. For the grid bootstrap, we make 500 bootstrap draws for each grid point. The grids of the threshold locations have 81 points from the 10th percentile to the 90th percentile of the threshold variables, and there are equal number of observations between two consecutive points. Table 5 and Table 6 also report the bootstrap p-values for the continuity and linearity tests by the bootstrap methods explained in Section 4.3 and Appendix H, respectively. The null hypothesis of the linearity test is , which implies no threshold effects.

We find supporting evidence for the presence of the threshold effect when the threshold variable is Tobin’s Q, but the statistical evidence is not strong for the leverage threshold model. Table 5 and Table 6 report the bootstrap p-values at .135, .011, and .011, for specifications (16) - (18), respectively. The statistical evidence to reject the continuity is not trivial for all specifications and gets stronger when it is the restricted model using Tobin’s Q. The estimated bootstrap p-values are .028 and .004 for the unrestricted and the restricted using Tobin’s Q. Furthermore, the confidence interval for the threshold location is narrower for the restricted model (18) than for the unrestricted model (17).

| (a) | (b) | ||||||

| est. | [95% CI] | est. | [95% CI] | ||||

| Lower regime | Lower regime | ||||||

| 0.778** | 0.124 | 1.154 | 0.252 | -0.258 | 0.724 | ||

| 0.047 | -0.034 | 0.145 | 0.266* | -0.003 | 0.535 | ||

| -0.147 | -0.385 | 0.171 | 0.027 | -0.103 | 0.264 | ||

| -0.032 | -0.132 | 0.047 | -0.017 | -0.180 | 0.090 | ||

| 0.231 | -0.843 | 1.849 | 0.246* | -0.031 | 0.577 | ||

| Upper regime | Upper regime | ||||||

| -0.154 | -0.717 | 0.551 | 0.410 | -0.049 | 0.751 | ||

| 0.148 | -0.015 | 0.326 | 0.081** | 0.021 | 0.200 | ||

| -0.291* | -0.519 | 0.015 | 0.044 | -0.214 | 0.398 | ||

| 0.013 | -0.066 | 0.113 | 0.050* | -0.019 | 0.153 | ||

| -0.081 | -0.234 | 0.037 | 0.005 | -0.004 | 0.012 | ||

| Difference between regimes | Difference between regimes | ||||||

| intercept | 0.068 | -0.024 | 0.200 | intercept | 0.236* | -0.014 | 0.580 |

| -0.932** | -1.830 | -0.097 | 0.158 | -0.559 | 0.843 | ||

| 0.101 | -0.107 | 0.322 | -0.185 | -0.479 | 0.108 | ||

| -0.144 | -0.519 | 0.134 | 0.017 | -0.227 | 0.275 | ||

| 0.045 | -0.111 | 0.232 | 0.066 | -0.074 | 0.287 | ||

| -0.312* | -1.893 | 0.792 | -0.242* | -0.573 | 0.038 | ||

| Threshold | Threshold | ||||||

| 0.172 | 0.101 | 0.265 | 1.298 | 1.169 | 1.386 | ||

| (38%) | (24%) | (58%) | (30%) | (21%) | (36%) | ||

| Testing (p-val) | Testing (p-val) | ||||||

| Linearity | 0.135 | Linearity | 0.011 | ||||

| Continuity | 0.033 | Continuity | 0.028 | ||||

| est. | [95% CI] | ||

| Coefficients | |||

| 0.392*** | 0.304 | 0.539 | |

| 0.122*** | 0.084 | 0.154 | |

| 0.076 | -0.027 | 0.271 | |

| 0.027*** | 0.006 | 0.046 | |

| 0.298** | 0.073 | 0.571 | |

| 0.008** | 0.001 | 0.015 | |

| Difference between regimes | |||

| intercept | 0.275** | 0.010 | 0.540 |

| -0.290** | -0.562 | -0.018 | |

| Threshold | |||

| 1.298 | 1.253 | 1.386 | |

| (30%) | (27%) | (36%) | |

| Testing (p-val) | |||

| Linearity | 0.011 | ||

| Continuity | 0.004 | ||

A notable finding concerning the coefficients estimates is that the relationship between cash flow and investment is positive and has larger magnitude for the low Tobin’s Q firms and the high leverage firms compared to their other respective regimes, although they are not statistically significant at 5% level. Even though the sign and magnitude of the estimates align with the observations by Lang et al., (1996) and Hansen, 1999b that a firm is subject to financial constraints when its Tobin’s Q is low or leverage is high, there is uncertainty in the interpretation of our results due to the lack of statistical significance.

Next, the autoregressive coefficient of the lagged investment is significant at 5% level in the low leverage regime and is larger than in the high leverage regime. This lends supporting evidence for the presence of asymmetric dynamics in investment, akin to the dynamics of leverage analyzed by Dang et al., (2012). In the meantime, we note that the autoregressive coefficients for the low and high leverage regimes in Column (a) are 0.778 and -0.154, respectively, which appear more extreme than findings of the literature where the estimates are between 0.1 and 0.5, e.g., Blundell et al., (1992). The autoregressive coefficients in the Column (b) are more in line with these estimates. Since the changes of the estimated coefficients in Column (b) are moderate, we also estimate the restricted model (18).

Turning to Table 6, we observe that the differences between the coefficients of the two regimes become significant at 5% level, and the CI for the threshold location becomes narrower while the estimate of the threshold location remains close to the estimate under the unrestricted model. The autoregressive coefficient of the lagged investment and the sensitivity of investment to both cash flow and return on assets are all positive and significant. The effect of Tobin’s Q is both positive and significant for both high and low Tobin’s Q regimes, but it almost disappears once it surpasses the threshold location. This suggests that low Tobin’s Q is related to low investment but higher Tobin’s Q does not cause higher investment once it reaches some level.

7 Conclusion

This paper studies the asymptotic properties of the GMM estimator in dynamic panel threshold models, showing that the limiting distribution depends critically on whether the true model exhibits a kink or a jump at the threshold. We demonstrate that the standard nonparametric bootstrap is inconsistent when the true model has a kink. To address this, we propose alternative bootstrap procedures for constructing confidence intervals for the threshold location and the model coefficients, which are shown to be consistent regardless of the model’s continuity. In particular, we establish that the grid bootstrap for the threshold parameter is uniformly valid. Monte Carlo simulations confirm that the proposed methods outperform the standard bootstrap in finite samples.

Several directions remain for future research. Our simulation results reveal highly asymmetric bootstrap distributions for the coefficient estimates, which distort finite sample inference. This highlights the need for a more thorough theoretical understanding of the bootstrap’s behavior. In particular, establishing the uniform validity of the bootstrap for the coefficient estimates is an important open question. Extensions of our bootstrap algorithms to incorporate latent group structures, interactive fixed effects, or threshold indices, as studied in Miao et al., 2020b , Miao et al., 2020a , and Seo and Linton, (2007); Lee et al., (2021), respectively, would also be valuable.

References

- Adam and Bevan, (2005) Adam, C. S. and Bevan, D. L. (2005). Fiscal deficits and growth in developing countries. Journal of Public Economics, 89:571–597.

- Andrews et al., (2020) Andrews, D. W., Cheng, X., and Guggenberger, P. (2020). Generic results for establishing the asymptotic size of confidence sets and tests. Journal of Econometrics, 218(2):496–531.

- Andrews, (2001) Andrews, D. W. K. (2001). Testing when a parameter is on the boundary of the maintained hypothesis. Econometrica, 69(3):683–734.

- Andrews, (2002) Andrews, D. W. K. (2002). Generalized method of moments estimation when a parameter is on a boundary. Journal of Business & Economic Statistics, 20(4):530–544.

- Andrews and Cheng, (2012) Andrews, D. W. K. and Cheng, X. (2012). Estimation and Inference With Weak, Semi-Strong, and Strong Identification. Econometrica, 80:2153–2211.

- Andrews and Cheng, (2014) Andrews, D. W. K. and Cheng, X. (2014). GMM Estimation and Uniform Subvector Inference With Possible Identification Failure. Econometric Theory, 30:287–333.

- Andrews and Guggenberger, (2009) Andrews, D. W. K. and Guggenberger, P. (2009). Hybrid and size-corrected subsampling methods. Econometrica, 77(3):721–762.

- Andrews and Guggenberger, (2019) Andrews, D. W. K. and Guggenberger, P. (2019). Identification- and singularity-robust inference for moment condition models. Quantitative Economics, 10:1703–1746.

- Arellano and Bond, (1991) Arellano, M. and Bond, S. (1991). Some Tests of Specification for Panel Data: Monte Carlo Evidence and an Application to Employment Equations. The Review of Economic Studies, 58:277–297.

- Bick, (2010) Bick, A. (2010). Threshold effects of inflation on economic growth in developing countries. Economics Letters, 108(2):126–129.

- Blundell et al., (1992) Blundell, R., Bond, S., Devereux, M., and Schiantarelli, F. (1992). Investment and Tobin’s Q: Evidence from company panel data. Journal of Econometrics, 51:233–257.

- Boyd and Vandenberghe, (2004) Boyd, S. and Vandenberghe, L. (2004). Convex Optimization. Cambridge University Press.

- Cavaliere et al., (2022) Cavaliere, G., Nielsen, H. B., Pedersen, R. S., and Rahbek, A. (2022). Bootstrap inference on the boundary of the parameter space, with application to conditional volatility models. Journal of Econometrics, 227(1):241–263.

- Cecchetti et al., (2011) Cecchetti, S. G., Mohanty, M. S., and Zampolli, F. (2011). The Real Effects of Debt. BIS Working Paper No. 352.

- Chan and Tong, (1985) Chan, K. S. and Tong, H. (1985). On the use of the deterministic lyapunov function for the ergodicity of stochastic difference equations. Advances in applied probability, 17(3):666–678.

- Chan and Tsay, (1998) Chan, K. S. and Tsay, R. S. (1998). Limiting properties of the least squares estimator of a continuous threshold autoregressive model. Biometrika, 85(2):413–426.

- Chatterjee and Lahiri, (2011) Chatterjee, A. and Lahiri, S. N. (2011). Bootstrapping lasso estimators. Journal of the American Statistical Association, 106(494):608–625.

- Cheng and Huang, (2010) Cheng, G. and Huang, J. Z. (2010). Bootstrap consistency for general semiparametric m-estimation. The Annals of Statistics, 38(5):2884–2915.

- Chudik et al., (2017) Chudik, A., Mohaddes, K., Pesaran, M. H., and Raissi, M. (2017). Is There a Debt-Threshold Effect on Output Growth? The Review of Economics and Statistics, 99:135–150.

- Dang et al., (2012) Dang, V. A., Kim, M., and Shin, Y. (2012). Asymmetric capital structure adjustments: New evidence from dynamic panel threshold models. Journal of Empirical Finance, 19:465–482.

- Dovonon and Goncalves, (2017) Dovonon, P. and Goncalves, S. (2017). Bootstrapping the GMM overidentification test under first-order underidentification. Journal of Econometrics, 201:43–71.

- Dovonon and Hall, (2018) Dovonon, P. and Hall, A. R. (2018). The asymptotic properties of gmm and indirect inference under second-order identification. Journal of Econometrics, 205(1):76–111.

- Dovonon and Renault, (2013) Dovonon, P. and Renault, E. (2013). Testing for Common Conditionally Heteroskedastic Factors. Econometrica, 81:2561–2586.

- Fazzari et al., (1988) Fazzari, S. M., Hubbard, R. G., Petersen, B. C., Blinder, A. S., and Poterba, J. M. (1988). Financing Constraints and Corporate Investment. Brookings Papers on Economic Activity, 1988:141–206.

- Giannerini et al., (2024) Giannerini, S., Goracci, G., and Rahbek, A. (2024). The validity of bootstrap testing for threshold autoregression. Journal of Econometrics, 239(1):105379.

- Gine and Zinn, (1990) Gine, E. and Zinn, J. (1990). Bootstrapping general empirical measures. The Annals of Probability, 18(2):851 – 869.

- Girma, (2005) Girma, S. (2005). Absorptive Capacity and Productivity Spillovers from FDI: A Threshold Regression Analysis. Oxford Bulletin of Economics and Statistics, 67:281–306.

- Goncalves and White, (2004) Goncalves, S. and White, H. (2004). Maximum likelihood and the bootstrap for nonlinear dynamic models. Journal of Econometrics, 119(1):199–219.

- Gonzalo and Wolf, (2005) Gonzalo, J. and Wolf, M. (2005). Subsampling inference in threshold autoregressive models. Journal of Econometrics, 127(2):201–224.

- Hall and Horowitz, (1996) Hall, P. and Horowitz, J. L. (1996). Bootstrap Critical Values for Tests Based on Generalized-Method-of-Moments Estimators. Econometrica, 64:891–916.

- Han and McCloskey, (2019) Han, S. and McCloskey, A. (2019). Estimation and Inference with a (nearly) Singular Jacobian. Quantitative Economics, 10:1019–1068.

- (32) Hansen, B. E. (1999a). The Grid Bootstrap and the Autoregressive Model. The Review of Economics and Statistics, 81:594–607.

- (33) Hansen, B. E. (1999b). Threshold effects in non-dynamic panels: Estimation, testing, and inference. Journal of Econometrics, 93:345–368.

- Hansen, (2000) Hansen, B. E. (2000). Sample Splitting and Threshold Estimation. Econometrica, 68:575–603.

- Hansen, (2017) Hansen, B. E. (2017). Regression kink with an unknown threshold. Journal of Business & Economic Statistics, 35(2):228–240.

- Hidalgo et al., (2023) Hidalgo, J., Lee, H., Lee, J., and Seo, M. H. (2023). Minimax risk in estimating kink threshold and testing continuity. In Advances in Econometrics: Essays in Honor of Joon Y. Park: Econometric Theory, Vol. 45A, pages 233–259.

- Hidalgo et al., (2019) Hidalgo, J., Lee, J., and Seo, M. H. (2019). Robust Inference for Threshold Regression Models. Journal of Econometrics, 210:291–309.

- Khan and Senhadji, (2001) Khan, M. S. and Senhadji, A. S. (2001). Threshold Effects in the Relationship between Inflation and Growth. IMF Staff Papers, 48:1–21.

- Kim et al., (2019) Kim, S., Kim, Y. J., and Seo, M. H. (2019). Estimation of Dynamic Panel Threshold Model Using Stata. The Stata Journal, 19:685–697.

- Kremer et al., (2013) Kremer, S., Bick, A., and Nautz, D. (2013). Inflation and growth: new evidence from a dynamic panel threshold analysis. Empirical Economics, 44:861–878.

- Lang et al., (1996) Lang, L., Ofek, E., and Stulz, R. (1996). Leverage, investment, and firm growth. Journal of Financial Economics, 40(1):3–29.

- Lee et al., (2021) Lee, S., Liao, Y., Seo, M. H., and Shin, Y. (2021). Factor-driven two-regime regression. The Annals of Statistics, 49(3):1656–1678.

- Lee et al., (2011) Lee, S., Seo, M. H., and Shin, Y. (2011). Testing for Threshold Effects in Regression Models. Journal of the American Statistical Association, 106:220–231.

- Leeb and Pötscher, (2005) Leeb, H. and Pötscher, B. M. (2005). Model selection and inference: facts and fiction. Econometric Theory, 21(1):21–59.

- (45) Miao, K., Li, K., and Su, L. (2020a). Panel threshold models with interactive fixed effects. Journal of Econometrics, 219(1):137–170.

- (46) Miao, K., Su, L., and Wang, W. (2020b). Panel threshold regressions with latent group structures. Journal of Econometrics, 214(2):451–481.

- Mikusheva, (2007) Mikusheva, A. (2007). Uniform inference in autoregressive models. Econometrica, 75(5):1411–1452.

- Newey and McFadden, (1994) Newey, W. K. and McFadden, D. (1994). Chapter 36 Large Sample Estimation and Hypothesis Testing. In Handbook of Econometrics, volume 4, pages 2111–2245. Elsevier.

- Newey and West, (1987) Newey, W. K. and West, K. D. (1987). Hypothesis Testing with Efficient Method of Moments Estimation. International Economic Review, 28:777–787.

- Pakes and Pollard, (1989) Pakes, A. and Pollard, D. (1989). Simulation and the Asymptotics of Optimization Estimators. Econometrica, 57:1027–1057.

- Praestgaard and Wellner, (1993) Praestgaard, J. and Wellner, J. A. (1993). Exchangeably Weighted Bootstraps of the General Empirical Process. The Annals of Probability, 21(4):2053 – 2086.

- Romano and Shaikh, (2012) Romano, J. P. and Shaikh, A. M. (2012). On the uniform asymptotic validity of subsampling and the bootstrap. The Annals of Statistics, 40(6):2798 – 2822.

- Rousseau and Wachtel, (2002) Rousseau, P. L. and Wachtel, P. (2002). Inflation thresholds and the finance–growth nexus. Journal of International Money and Finance, 21:777–793.

- Seo and Linton, (2007) Seo, M. H. and Linton, O. (2007). A smoothed least squares estimator for threshold regression models. Journal of Econometrics, 141(2):704–735.

- Seo and Shin, (2016) Seo, M. H. and Shin, Y. (2016). Dynamic Panels With Threshold Effect and Endogeneity. Journal of Econometrics, 195:169–186.

- Strebulaev and Yang, (2013) Strebulaev, I. A. and Yang, B. (2013). The mystery of zero-leverage firms. Journal of Financial Economics, 109(1):1–23.

- van der Vaart and Wellner, (1996) van der Vaart, A. W. and Wellner, J. (1996). Weak Convergence and Empirical Processes With Applications to Statistics. Springer Series in Statistics. Springer-Verlag, New York.

- Wang, (2015) Wang, Q. (2015). Fixed-effect panel threshold model using stata. The Stata Journal, 15(1):121–134.

- Yang et al., (2020) Yang, L., Zhang, C., Lee, C., and Chen, I.-P. (2020). Panel kink threshold regression model with a covariate-dependent threshold. The Econometrics Journal, 24(3):462–481.

- Zhang et al., (2017) Zhang, Y., Zhou, Q., and Jiang, L. (2017). Panel kink regression with an unknown threshold. Economics Letters, 157:116–121.

Additional Notations.

For , denotes a matrix whose elements are all zero. “” denotes the weak convergence as in section 1.3 of van der Vaart and Wellner, (1996). is a norm for either vectors or matrices. For a vector, it is the Euclidean norm. For a matrix, it is the Frobenius norm, i.e., for a matrix .

Appendix A Proofs for Section 3.

A.1 Proof of Theorem 1.

Note that due to . Hence, the population moment equation is when . The condition (ii) of Theorem 1 implies that has full column rank, and hence if . , when . The condition (i) of Theorem 1 implies that is not zero if . Therefore, if , and if , which is the standard identification condition in the literature, e.g., Section 2.2.3 in Newey and McFadden, (1994).

A.2 Proof of Theorem 2.

To obtain limit distribution of , we first establish consistency of to and rate of ’s convergence. Then, we show asymptotic distribution of the estimates using rescaled versions of the parameters and criterions.

A.2.1 Consistency.

Constrained estimator of the coefficients, , given a fixed can be expressed as

where

Therefore,

Define profiled criterion with respect to by and . The threshold location estimator is . By the law of large numbers (LLN), . By the uniform law of large numbers (ULLN) in Lemma D.2, uniformly with respect to . Hence, would imply , and then , which completes the proof.

To show consistency of to , we apply the argmin/argmax continuous mapping theorem (CMT) as in Theorem 3.2.2 in van der Vaart and Wellner, (1996). It is sufficient to check (i) uniformly converges to some function in probability, and (ii) for any open set contatining . (ii) can be shown if is uniquely minimized at and continuous as is compact.

The profiled moment can be rewritten as

Therefore,

where is a projection matrix to the column space of . The profiled objective can be written as

By , , and , we can derive that

uniformly with respect to , where . Note that in the second stage of the two-step GMM estimation. when we consider the first stage. is uniquely minimized when . This is because is positive definite, and the conditions in Theorem 1 implies that does not lie in the column space of whenever . Moreover, is continuous as is continuous with respect to by D.

A.2.2 Convergence rate.

as the consistency of is shown. Our proof follows arguments similar to the proof of Theorem 3.3 by Pakes and Pollard, (1989). By the consistency of and by Lemma D.3,

By , we can obtain

Apply triangle inequality to get

As is the minimizer of the GMM criterion, . Therefore,

, while by Lemma D.1. Thus,

which implies and .

A.2.3 Asymptotic distribution.

This section derives asymptotic distribution of the estimator through the argmin/argmax continuous mapping theorem (CMT) as in Theorem 3.2.2 in van der Vaart and Wellner, (1996).

Introduce a local reparametrization by and , and let consist of subvectors and . Additionally, define and . Note that is uniformly tight due to the convergence rate we obtained.333 A random variable is tight if for any , there exists a compact set such that , and is uniformly tight if for any , there exists a compact set such that for all . Note that by the convergence rate we derived, for any , there exists a compact such that , and such that if . Then, we can define a compact set , where is a compact set such that , which satisfies for all . Let

We show that (i) weakly converges to a stochastic process in for every compact in the Euclidean space, (ii) is continuous, and (iii) possesses an unique optimum not in but in its square since . Thus, we will establish that converges in distribution to . In the characterization of the minimizers, is shown to be tight.

The rescaled and reparametrized sample moment can be written as

By the central limit theorem (CLT),

By the LLN,

Let be arbitrary. By the ULLN in Lemma D.2,

uniformly with respect to . Then, by continuity of at ,

uniformly with respect to . By Lemma D.4,

uniformly with respect to .

Therefore, weakly converges to

in for any compact . Then, by the CMT,

Characterization of the minimizers

Next, we characterize the minimizers. The objective function of the minimization problem is strictly convex with respect to and , since has full column rank and is positive definite. Hence, a solution can be characterized by the Karush-Kuhn-Tucker (KKT) conditions. See Chapter 5 in Boyd and Vandenberghe, (2004) for more details.

The Lagrangian for this problem is

and the gradient of the Lagrangian with respect to and should vanish:

In addition, and should hold.

-

(i)

When and , we can obtain

where is the projection matrix to the column space of . because the matrix has full column rank, and cannot be in the column space of and . Therefore,

should hold for the feasibility condition .

-

(ii)

When and , we can obtain

By plugging this into the equation for , we get

Thus,

where . follows a normal distribution that is left censored at 0. Then,

Note that the two normal variables and are independent of each other, because becomes zero.

Appendix B Proofs for Section 4

B.1 Preliminaries

The bootstrap methods we consider are Algorithm 1 with different choices of . There are three bootstrap methods this paper propose: (i) for , (ii) set as (8), and (iii) which is the continuity-restricted estimator. In Appendix F, we consider the case which results in the standard nonparametric bootstrap.

The probability law for the bootstrap is formalized following Goncalves and White, (2004). Let be the probability measure for data and be the conditional probability law of bootstrap given observations. in ( in ) if for any , as . in if for any and , there exists such that . in if in for every continuous and bounded function , where is the expectation by the bootstrap probability law conditional on observations. in in if , where is the set of all Lipschitz functions on bounded in such that .

The following lemma is useful in analyzing bootstrap stochastic orders.

Lemma B.1.

-

(i)

If or , then or in , respectively.

-

(ii)

Let in and in . Then, in .

Proof.

See Lemma 3 in Cheng and Huang, (2010). ∎

Recall that . in when in . This would be the case when in and since then in by Lemma B.1.

B.2 Proof of Theorem 6.

As in the proof of Theorem 2, consistency and convergence rates of the bootstrap estimator should be derived first. These results are summarized in the following proposition, with the proof provided in Online Appendix E.

Proposition 1.

Then, we derive the (conditional) weak convergence limit of the rescaled criterion and apply the CMT to obtain the asymptotic distribution of the bootstrap estimator.

Asymptotic distribution under continuity.

Based on the convergence rate in Proposition 1, introduce the local reparametrization by and , and let consist of subvectors and .

The asymptotic distributions of the bootstrap estimators can be derived by using the argmin/argmax CMT as in the proof of Theorem 2. Let

We show that in in for every compact in the Euclidean space. Recall that .

The rescaled and reparametrized bootstrap moment can be written as

By Lemma E.2,

By the bootstrap LLN,

Let be arbitrary. By bootstrap Glivenko-Cantelli, e.g., Lemma 3.6.16 in van der Vaart and Wellner, (1996),

By continuity of at , for any , there exists such that if . For any , . Note that , and hence with probability approaching 1, while uniformly with respect to . Thus,

both uniformly with respect to . By Lemma E.5,

uniformly with respect to .

Therefore, in in for any compact . Then, by applying the argmin CMT as in the proof of Theorem 2, we can obtain the limit distribution of the bootstrap estimates conditional on the data.

Asymptotic distribution under discontinuity.

The proof for the discontinuous model only requires a slight change to the proof for the continuous model. As the convergence rate for the discontinuous model is for both coefficients and threshold location estimators, let be unchanged and for the local reparametrization. Let

We can write the rescaled and reparametrized moment as follows:

The limit of can be obtained similarly to the continuous model case, except that we use Lemma E.6 instead of Lemma E.5 to get

uniformly with respect to .

Then, conditonally weakly converges to in in for any compact . And the argmin CMT yields the asymptotic distribution of the bootstrap estimators. The limit distributions of the bootstrap estimators are normal because . ∎

Online Supplements for “Bootstraps for Dynamic Panel Threshold Models” (Not for Publication)

Woosik Gong and Myung Hwan Seo

This part of the appendix is only for online supplements. It contains supplementary results for the Monte Carlo simulations, the remaining proofs for Theorem 3, Theorem 4, Proposition 1, Theorem 5, Theorem 7, as well as additional lemmas with proofs. It also presents invalidity of the standard nonparametric bootstrap, percentile bootstrap confidence intervals for empirical application, explanation of bootstrap for linearity test, and the uniform validity of the grid bootstrap.

Appendix C Supplementary Results for Monte Carlo Simulation

In this section, we present supplementary results for the Monte Carlo simulations in Section 5.

C.1 Symmetric Percentile Confidence Intervals for Coefficients

First, we report the coverage rates of symmetric percentile CIs for the coefficients that are constructed using the nonparametric bootstrap,

| (C.1) |

and the residual bootstrap, defined by (10). Tables 7 and 8 show the coverage rates and the ratios of the average lengths of CIs by the two different bootstrap methods.

In contrast to the results based on non-symmetric percentile CIs in Table 3 in Section 5, Table 7 shows that symmetric CIs provide much higher coverage rates, often resulting in over-coverage. Note that this observation also occurs for the threshold inference as shown in Table 1. Meanwhile, Table 8 shows that the difference in the average lengths of symmetric percentile CIs between the two bootstrap methods is less pronounced compared to the non-symmetric case shown in Table 4.

| R-B(S) | NP-B(S) | ||||||||||

| 400 | 0.964 | 0.976 | 0.980 | 0.974 | 0.930 | 0.996 | 0.996 | 0.996 | 0.992 | 0.982 | |

| 0.0 | 800 | 0.951 | 0.974 | 0.971 | 0.967 | 0.931 | 0.987 | 0.992 | 0.995 | 0.988 | 0.976 |

| 1600 | 0.955 | 0.972 | 0.964 | 0.961 | 0.923 | 0.983 | 0.994 | 0.995 | 0.980 | 0.977 | |

| 400 | 0.964 | 0.976 | 0.979 | 0.974 | 0.933 | 0.994 | 0.993 | 0.995 | 0.991 | 0.982 | |