1 email: alexander.hammers@kcl.ac.uk

2 Brain Research & Imaging Centre, University of Plymouth, Plymouth, U.K.

3 Department of Biomedical Engineering, School of Biomedical Engineering & Imaging Sciences, King’s College London, London, U.K.

4 CERMEP-Imagerie du vivant, Lyon, France

5 Department of Psychosis Studies, Institute of Psychiatry, Psychology, and Neuroscience, King’s College London, London, U.K.

Brain PET-MR attenuation correction with deep learning: method validation in adult and clinical paediatric data

Abstract

Current methods for magnetic resonance-based positron emission tomography attenuation correction (PET-MR AC) are time consuming, and less able than computed tomography (CT)-based AC methods to capture inter-individual variability and skull abnormalities. Deep learning methods have been proposed to produce pseudo-CT from MR images, but these methods have not yet been evaluated in large clinical cohorts. Methods trained on healthy adult data may not work in clinical cohorts where skull morphometry may be abnormal, or in paediatric data where skulls tend to be thinner and smaller. Here, we train a convolutional neural network based on the U-Net to produce pseudo-CT for PET-MR AC. We trained our network on a mixed cohort of healthy adults and patients undergoing clinical PET scans for neurology investigations. We show that our method was able to produce pseudo-CT with mean absolute errors (MAE) of 100.4 ± 21.3 HU compared to reference CT, with a Jaccard overlap coefficient of 0.73 ± 0.07 in the skull masks. Linear attenuation maps based on our pseudo-CT (relative MAE = 8.4 ± 2.1%) were more accurate than those based on a well-performing multi-atlas-based AC method (relative MAE = 13.1 ± 1.5%) when compared with CT-based linear attenuation maps. We refined the trained network in a clinical paediatric cohort. MAE improved from 174.7 ± 33.6 HU when using the existing network to 127.3 ± 39.9 HU after transfer learning in the paediatric dataset, thus showing that transfer learning can improve pseudo-CT accuracy in paediatric data.

Keywords: 3D convolutional neural network · residual U-Net · pixel shuffle · transfer learning · computed tomography

1 Introduction

Brain positron emission tomography (PET) obtains images from radioactivity detected via 511 keV photons emerging inside the brain after positron emission. These photons undergo attenuation, especially in dense material like the skull, and attenuation correction (AC) is crucial for PET quantification. Standard PET coupled to computed tomography (CT) uses the density information from CT for AC (CT-AC). With the advent of hybrid (simultaneous) PET and magnetic resonance (MR) imaging, this is no longer possible, and MR-based methods (MR-AC) had to be developed.

The current best-performing methods for brain MR-AC are multi-atlas co-registration methods, which give average PET quantification errors within ±3% of CT-AC [12]. However, these methods rely on multiple co-registrations with healthy control atlas sets of CT and MR images, and thus tend to be time consuming, and less able than CT to capture inter-individual variability and model abnormalities. Deep learning methods for synthesising pseudo-CT from MR images are emerging [4, 11] but many are trained and tested on healthy data only, and very few have been evaluated in large clinical cohorts [13] and in children [14].

Children often require general anaesthesia for scanning. Simultaneous PET-MR allows scanning in a single session, avoiding repeat anaesthesia. However, children have smaller heads and thinner skulls than adults, so methods that perform well in adults may not necessarily perform well in children.

Here we aimed to show that transfer learning of a network trained on adult data can improve pseudo-CT accuracy in paediatric data. We trained our network on a cohort of primarily adult research data and evaluated our deep learning MR-AC against the CT-AC, used as the gold standard, and a well-performing [12] multi-atlas-based AC method, MaxProb [16]. We then used a simple transfer learning approach, implementing and refining the network trained on adult data in a clinical paediatric cohort. We showed that pseudo-CT accuracy improved with transfer learning and that the refined network could produce pseudo-CT in paediatric data with accuracy comparable to the adult dataset.

2 Materials

2.1 PET Centre Dataset

The training dataset consisted of data from research studies at the KCL & GSTT PET Centre (“PET Centre dataset”). Research studies were reviewed and approved by regional research ethics committees: West Midlands – Coventry & Warwickshire Research Ethics Committee (16/WM/0364), and West London & GTAC Research Ethics Committee (16/LO/0130). All participants provided written informed consent.

This dataset consisted of 110 individuals who were scanned as part of three PET-MR research studies at the KCL & GSTT PET Centre between 2016 and 2019: the [18F]fluorodeoxyglucose ([18F]FDG) study, the [18F]GE-179 study and the [18F]Fallypride study. All low-dose reference CT data were acquired on a whole-body GE Discovery 710 PET/CT system on the same day as the MR scan. All MR data were acquired on a 3T Siemens Biograph mMR PET-MR system. Participant demographics and MR and CT acquisition details for each sub-group of data are given in Table 1. This dataset consisted primarily of adult data; however, it also included two children aged 9 years and nine teenagers aged 13 to 17 years.

| | Tracer/Study | [18F]FDG | [18F]GE-179 | [18F]Fallypride |
|---|---|---|---|---|
| | Number | 57 | 31 | 22 |
| | Mean age ± SD (min–max) in years | 40.7 ± 19.2 (9–79) | 24.3 ± 4.1 (18–36) | 30.2 ± 8.7 (18–55) |
| | Female/Male | 30/27 | 12/19 | 19/3 |
| MPRAGE parameters | Orientation | Sagittal | Sagittal | Sagittal |
| | TE (ms) | 2.63 | 2.63 | 2.19 |
| | TR (ms) | 1.7 | 1.7 | 1.7 |
| | TI (ms) | 900 | 900 | 900 |
| | FA (°) | 9 | 9 | 9 |
| | Slice thickness (mm) | 1.1 | 1.1 | 1 |
| | Num. slices | 176 | 176 | 240 |
| | FoV (mm) | 270 | 270 | 256 |
| | Percent phase FoV | 87.5 | 87.5 | 100 |
| | Matrix size | 224 × 256 | 224 × 256 | 256 × 256 |
| | Voxel size (mm) | 1.055 × 1.055 | 1.055 × 1.055 | 1 × 1 |
| CT parameters | FoV (cm) | 15 | 30 | 30 |
| | kVp | 140 | 140 | 140 |
| | mAs | 8 | 8 | 8 |
| | Voxel size (mm) | 0.5 × 0.5 × 2.5 | 0.5 × 0.5 × 2.5 | 0.5 × 0.5 × 2.5 |

TE: echo time; TR: repetition time; TI: inversion time; FA: flip angle; FoV: field of view.

2.2 Clinical paediatric data

The “clinical paediatric dataset” consisted of anonymised clinical scans acquired at Guy’s and St Thomas’ Hospital available through an NHS clinical service evaluation of patients with dystonia from 2013 to 2017 (Service Evaluation GSTT Reference 10319). Scans were performed as part of standard clinical assessment for possible deep brain stimulation and included MR imaging, [18F]FDG-PET, and head CT under general anaesthesia. We included data from patients who had a complete set of [18F]FDG-PET, 3D T1-weighted MR and CT scans, resulting in a dataset consisting of 49 patients between 2 and 17 years old (mean age ± SD = 9.3 ± 4.3 years). Patients underwent [18F]FDG-PET-CT imaging on a GE (General Electric Medical Systems, Waukesha, WI) Discovery ST and a Discovery VCT scanner prior to October 2013, and thereafter on a GE Discovery 710 scanner, at the King’s College London & Guy’s and St Thomas’ PET Centre. CT images had voxel sizes of 0.5 × 0.5 × 2.5 mm³ (n = 32) or 0.977 × 0.977 × 3 mm³ (n = 17). 3D T1-weighted images were acquired in sagittal orientation with voxel sizes of 0.694 × 0.694 × 0.68 mm³ (n = 15), 0.977 × 0.977 × 1 mm³ (n = 28), 0.446 × 0.446 × 1 mm³ (n = 1), 0.898 × 0.898 × 1 mm³ (n = 1), and approximately 1 × 1 × 1 mm³ (n = 2). One patient had their T1-weighted MR image acquired in transverse orientation (voxel size = 0.55 × 0.55 × 1.1 mm³).

3 Methods

3.1 Data preprocessing

T1-weighted MR data were first bias corrected using N4ITK bias field correction [20]. Each individual’s CT image was rigidly realigned and resampled to their T1-weighted image using NiftyReg [17] with cubic spline interpolation, after the scanner bed was edited out of the CT images using custom scripts. In the PET Centre dataset, where voxel sizes were 1.1 mm, we did not apply any resampling but instead allowed the network to treat this as a variation in head size, similar to a scaling augmentation (± 10%). In the clinical paediatric dataset, we resampled all data to 1 mm³ isotropic resolution to make them compatible with the resolution of the PET Centre dataset.
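The voxel-size handling described above can be illustrated with a little arithmetic. The sketch below is not the authors' preprocessing code: the function names and the example matrix size are hypothetical, and the tool used for the 1 mm resampling is not stated in the text. It shows how large anatomy appears in voxel space when 1.1 mm voxels are left unresampled, and which grid shape covers the same field of view after resampling to 1 mm isotropic.

```python
def apparent_scale(voxel_size_mm, nominal_mm=1.0):
    """Relative size of an object in voxel space when voxels of
    `voxel_size_mm` are treated as if they were `nominal_mm` wide."""
    return nominal_mm / voxel_size_mm


def resampled_shape(shape, voxel_size_mm, target_mm=1.0):
    """Grid shape covering the same field of view at `target_mm` spacing."""
    return tuple(round(n * v / target_mm) for n, v in zip(shape, voxel_size_mm))


# Unresampled 1.1 mm voxels: anatomy spans roughly 9% fewer voxels,
# which is within the quoted scaling-augmentation range of +/- 10%.
scale = apparent_scale(1.1)

# Hypothetical 320 x 320 x 256 matrix at 0.694 x 0.694 x 0.68 mm.
new_shape = resampled_shape((320, 320, 256), (0.694, 0.694, 0.68))
```
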

3.2 Network architecture

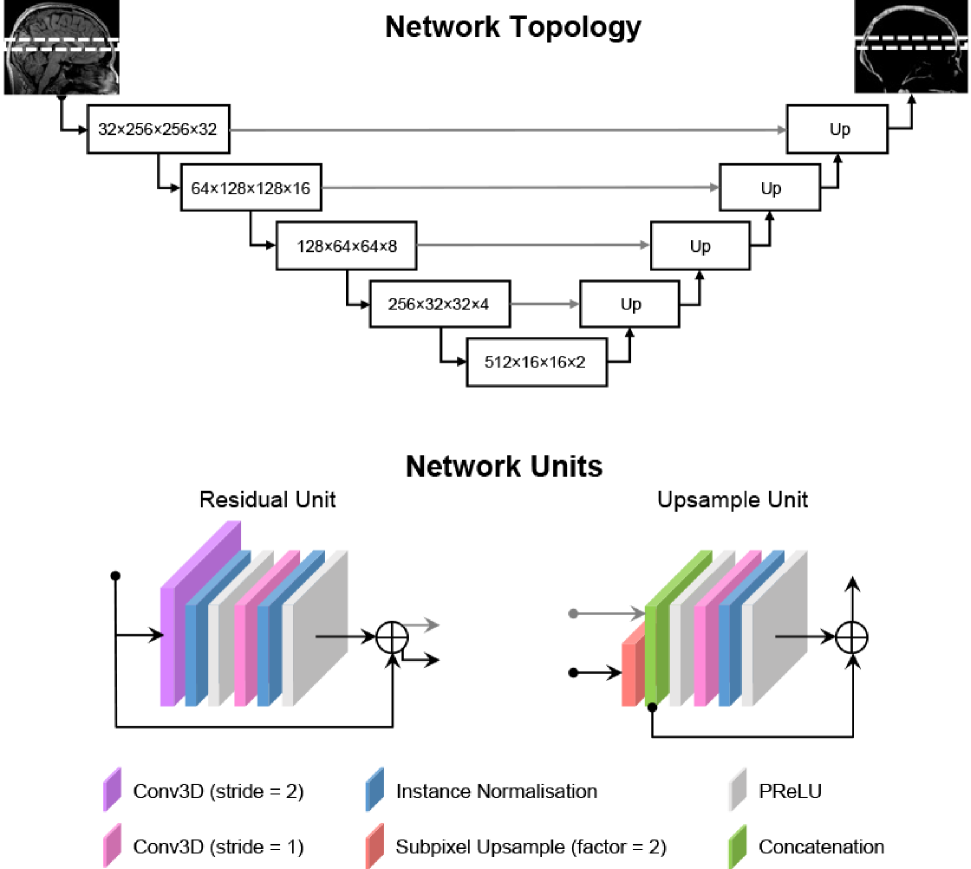

The convolutional neural network (CNN) we used for image translation is based on a residual U-Net architecture [18, 7, 10]. The network consists of blocks of 3D convolutions (kernel size = 3), each followed by instance normalisation [8] and a Parametric Rectified Linear Unit (PReLU) [6] activation. Our U-Net has five layers of these convolutional blocks with 32, 64, 128, 256 and 512 channels in each consecutive layer. In the encoding path, instead of max pooling, downsampling is performed using convolutions with a stride of 2 and a residual connection is added. In the decoding path, we use a modified 3D version of the pixel shuffle method [19] to upsample data from lower layers by a factor of 2. The upsampled input is then concatenated with the output from the encoding path in the same layer. Figure 1 illustrates the overall topology of our U-Net and the network units in the encoding and decoding paths.
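To make the layer arithmetic concrete, the following sketch traces feature-map shapes through the encoder and through one pixel-shuffle upsampling step. It is shape bookkeeping only, not the network itself. The padding of 1 for the stride-2 convolutions and the helper names are assumptions; the patch size of 256 × 256 × 32 is taken from the training description in Section 3.3.

```python
def trace_encoder_shapes(patch=(256, 256, 32), channels=(32, 64, 128, 256, 512)):
    """Return (channels, spatial shape) for each encoder level.

    Each level after the first halves every spatial dimension, as a
    stride-2 convolution with kernel 3 and padding 1 (assumed) would.
    """
    levels = []
    shape = tuple(patch)
    for i, ch in enumerate(channels):
        if i > 0:
            # floor((n + 2*1 - 3) / 2) + 1 == ceil(n / 2)
            shape = tuple((n + 1) // 2 for n in shape)
        levels.append((ch, shape))
    return levels


def pixel_shuffle_3d_shape(channels, shape, factor=2):
    """Shape after a 3D pixel shuffle: channels shrink by factor**3 and
    every spatial dimension grows by `factor`."""
    if channels % factor ** 3 != 0:
        raise ValueError("channels must be divisible by factor**3")
    return channels // factor ** 3, tuple(n * factor for n in shape)


levels = trace_encoder_shapes()
bottom_channels, bottom_shape = levels[-1]
upsampled = pixel_shuffle_3d_shape(bottom_channels, bottom_shape)
```

Because a 3D pixel shuffle with factor 2 divides the channel count by 8, a real decoder would typically apply a convolution first to set the number of channels to eight times the desired output width; that step is omitted here.
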

3.3 Network implementation

We implemented the above network architecture in PyTorch (https://pytorch.org) using the MONAI framework [2] (https://monai.io) on an NVIDIA Quadro M4000 GPU with 8 GB of RAM. Our network took a 3D patch from the T1-weighted MR as input and produced the corresponding CT patch.

In order to normalise intensities across each dataset, histogram normalisation was applied to the input T1-weighted MR images using the HistogramNormalize transform in MONAI. MR images were then rescaled between -1 and 1 (ScaleIntensity) and CT images were thresholded at -1000 Hounsfield units (HU) and clipped above 2400 HU (ThresholdIntensity).
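The two intensity steps that follow histogram normalisation can be sketched in plain Python. This is an illustration of the arithmetic rather than the MONAI transforms themselves: the function names are hypothetical, images are represented as flat lists, and we assume that "thresholded at -1000 HU" means values below -1000 HU are set to -1000 HU.

```python
def scale_to_range(values, lo=-1.0, hi=1.0):
    """Min-max rescale a list of MR intensities to [lo, hi]."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        # Constant image: map everything to the lower bound.
        return [lo for _ in values]
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]


def clip_hu(values, lo=-1000.0, hi=2400.0):
    """Clamp CT intensities to the range [lo, hi] in Hounsfield units."""
    return [min(max(v, lo), hi) for v in values]


mr_scaled = scale_to_range([0, 50, 100])
ct_clipped = clip_hu([-3024, 0, 3000])
```
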

The network was trained on batches of eight patches, each of size 256 × 256 × 32 voxels, sampled randomly from the pairs of images. We used the Adam optimiser with weight decay regularisation [15] with an initial learning rate of $3 \times 10^{-3}$, $\beta_1 = 0.95$, $\beta_2 = 0.99$, and weight decay = 0.03 (hyperparameters chosen based on [5]). We defined an epoch as one pass over all images in the training dataset, with one randomly sampled patch per image.
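For readers unfamiliar with decoupled weight decay [15], the sketch below performs a single update of one scalar parameter with the hyperparameters quoted above. It is a minimal illustration, not the training code: the value of `eps` is an assumption (it is not given in the text), and the ordering of the decay and gradient steps follows the common convention of applying the decay directly to the weight rather than adding it to the gradient.

```python
import math


def adamw_step(theta, grad, m, v, t, lr=3e-3, beta1=0.95, beta2=0.99,
               weight_decay=0.03, eps=1e-8):
    """One Adam update with decoupled weight decay for a scalar parameter.

    theta: current parameter value; grad: its gradient;
    m, v: running first and second moment estimates; t: step count (from 1).
    """
    # Decoupled weight decay: shrink the weight directly.
    theta = theta * (1.0 - lr * weight_decay)
    # Exponential moving averages of the gradient and squared gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction for the zero-initialised moments.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


theta, m, v = adamw_step(theta=1.0, grad=0.5, m=0.0, v=0.0, t=1)
```
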

We performed a five-fold cross-validation of the network, dividing the data into 80% training and 20% testing data for each fold, with one image set from the testing data used for validation. We used the mean squared error loss and tracked the mean absolute error of the validation dataset every five epochs. The network was trained until the validation loss did not improve by more than 1 HU over 100 epochs (approximately 900–1000 epochs in total for all folds). At this point, the learning rate was reduced to $3 \times 10^{-4}$ and the network was trained further until the validation loss again did not improve by more than 1 HU over 100 epochs (approximately 100 to 450 epochs). We took the network weights at the epoch showing the best validation loss as the final weights for each fold of the cross-validation. The full 3D pseudo-CT volumes were produced from the T1-weighted MR images of each test dataset by predicting overlapping patches (sliding_window_inference, overlap = 0.9, blend mode = gaussian).
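One possible reading of the stopping rule is sketched below. The exact criterion used in the paper is not spelled out, so the function name and the comparison (best validation MAE in the most recent 100 epochs versus the best before that window) are assumptions made for illustration.

```python
LEARNING_RATES = (3e-3, 3e-4)  # the two training stages described above


def has_plateaued(history, window=100, min_delta=1.0):
    """Decide whether validation MAE has stopped improving.

    history: list of (epoch, validation MAE in HU) in ascending epoch order,
    e.g. one entry every five epochs. Returns True when the best MAE within
    the last `window` epochs improves on the best earlier MAE by no more
    than `min_delta` HU.
    """
    if not history:
        return False
    cutoff = history[-1][0] - window
    before = [mae for epoch, mae in history if epoch <= cutoff]
    recent = [mae for epoch, mae in history if epoch > cutoff]
    if not before:
        return False  # fewer than `window` epochs of history so far
    return min(before) - min(recent) <= min_delta


still_improving = [(e, 500.0 - 0.5 * e) for e in range(5, 505, 5)]
flat = [(e, 100.0) for e in range(5, 305, 5)]
```

In a training loop, the first time the rule fires the learning rate would move from the first to the second entry of `LEARNING_RATES`; the second time, training would stop and the weights from the best validation epoch would be kept.
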

3.4 Evaluation of pseudo-CT accuracy

We evaluated the accuracy of pseudo-CTs produced using our deep learning method against reference CTs using the mean absolute error (MAE) metric [1] defined as $\mathrm{MAE} = \frac{1}{V}\sum_{v=1}^{V}\left|\mathrm{CT}_v - \mathrm{pCT}_v\right|$, where $V$ is the number of non-zero voxels within a head mask, and $\mathrm{CT}_v$ and $\mathrm{pCT}_v$ are the reference CT and pseudo-CT intensities at voxel $v$. The head mask was obtained by cropping the T1-weighted MR image to have a 17 cm cranio-caudal extent so as to exclude the neck using robustfov (https://fsl.fmrib.ox.ac.uk). We also evaluated the Jaccard coefficient (JC; [9]) of overlap within a skull mask, which was obtained by thresholding the CT image within the head mask at 300 HU to obtain intensities relating to the skull.
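Both metrics can be sketched on flattened images as follows. The function names are hypothetical, and treating voxels at or above 300 HU as skull (rather than strictly above) is an assumption, as is applying the same threshold to the pseudo-CT.

```python
def masked_mae(ct, pct, mask):
    """Mean absolute error in HU over voxels where `mask` is non-zero."""
    diffs = [abs(c - p) for c, p, m in zip(ct, pct, mask) if m]
    if not diffs:
        raise ValueError("head mask is empty")
    return sum(diffs) / len(diffs)


def skull_jaccard(ct, pct, mask, threshold=300.0):
    """Jaccard overlap of skull masks obtained by thresholding both images
    inside the head mask."""
    skull_ct = [bool(m) and c >= threshold for c, m in zip(ct, mask)]
    skull_pct = [bool(m) and p >= threshold for p, m in zip(pct, mask)]
    intersection = sum(a and b for a, b in zip(skull_ct, skull_pct))
    union = sum(a or b for a, b in zip(skull_ct, skull_pct))
    if union == 0:
        raise ValueError("no skull voxels in either image")
    return intersection / union


# Toy five-voxel example (made-up values, not data from the study).
ct = [-1000, 40, 800, 1200, 30]
pct = [-900, 20, 700, 250, 400]
mask = [0, 1, 1, 1, 1]
mae = masked_mae(ct, pct, mask)
jc = skull_jaccard(ct, pct, mask)
```
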

3.5 Comparison with MaxProb method

The MaxProb method [16] was implemented on a Linux machine at the KCL & GSTT PET Centre to produce linear attenuation maps (µ-maps) for all 110 individuals. For the comparison with µ-maps produced by the MaxProb method, we first converted the reference CTs and pseudo-CTs produced by our method to 511 keV µ-map according to the bilinear equation below [3]:

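Below Break Point: $\mu = 9.6 \times 10^{-5} \cdot (\mathrm{HU} + 1000)$ cm$^{-1}$,

Above Break Point: $\mu = a \cdot (\mathrm{HU} + 1000) + b$ cm$^{-1}$,

with the Break Point at 1030 on the HU + 1000 scale, $a = 5.64 \times 10^{-5}$ cm$^{-1}$ and $b = 4.08 \times 10^{-2}$ cm$^{-1}$, based on a 140 kVp CT tube voltage.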
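A sketch of the bilinear conversion is given below. Two points are assumptions drawn from the cited method [3] rather than from the text here: the slope of 9.6 × 10⁻⁵ cm⁻¹ below the break point, and the reading of the break point value 1030 as a value of HU + 1000. The latter is the interpretation under which the two line segments meet, as the continuity check in the code shows.

```python
WATER_SLOPE = 9.6e-5   # cm^-1 per unit of (HU + 1000); assumed from [3]
A_140KVP = 5.64e-5     # cm^-1 per unit of (HU + 1000), 140 kVp
B_140KVP = 4.08e-2     # cm^-1, 140 kVp
BREAK_POINT = 1030.0   # assumed to be on the HU + 1000 scale


def hu_to_mu(hu):
    """Convert a CT value in HU to a 511 keV linear attenuation
    coefficient in cm^-1 using a two-segment (bilinear) mapping."""
    shifted = hu + 1000.0
    if shifted < BREAK_POINT:
        return WATER_SLOPE * shifted
    return A_140KVP * shifted + B_140KVP


# The two segments should agree (to rounding of a and b) at the break point.
gap_at_break = abs(WATER_SLOPE * BREAK_POINT
                   - (A_140KVP * BREAK_POINT + B_140KVP))

mu_air = hu_to_mu(-1000.0)
mu_water = hu_to_mu(0.0)
mu_bone = hu_to_mu(1000.0)
```

With these constants, water (0 HU) maps to 0.096 cm⁻¹, the commonly quoted attenuation coefficient of water at 511 keV.
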

We evaluated the accuracy of the µ-maps obtained from our pseudo-CTs (µpCT) against µ-maps from the reference CTs (µCT) using the relative MAE (rMAE) metric defined in [1]. We also evaluated rMAE on the µ-maps obtained from the MaxProb method. We compared rMAE from our deep learning method against those obtained from the MaxProb method with a paired t-test.
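The paired comparison can be sketched as follows: each individual contributes one rMAE value per method, and the test is carried out on the per-individual differences. The function name is hypothetical and the example values are made up for illustration; the sketch returns the t statistic and degrees of freedom only, leaving the p-value to a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Paired t statistic and degrees of freedom for two equally long
    lists of per-subject measurements."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long lists with at least 2 entries")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Made-up rMAE values (%) for three subjects, not data from the study.
rmae_atlas = [2.0, 4.0, 6.0]
rmae_network = [1.0, 2.0, 3.0]
t_stat, dof = paired_t_statistic(rmae_atlas, rmae_network)
```
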

3.6 Transfer learning in paediatric dataset

We obtained a final deep learning model by repeating the training on the full set of images in the PET Centre dataset, using the same training scheme as in the cross-validation. We first applied this fully trained network to the clinical paediatric dataset without refinement. Next, we investigated whether we could improve the performance of our network in paediatric data through transfer learning. Using the fully trained network as a starting point, we further trained the network on the clinical paediatric dataset only, using the same training scheme as described above. We performed a four-fold cross-validation in the clinical paediatric dataset and evaluated the resulting pseudo-CT against reference CT in each fold as above.
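The fold bookkeeping for the cross-validations can be sketched as below. The function name, the random seed and the way subjects are dealt into folds are assumptions; the text does not describe how individuals were assigned to folds. In the transfer learning setting, each fold would start from the weights of the network trained on the full PET Centre dataset.

```python
import random


def k_fold_indices(n_subjects, k, seed=0):
    """Split subject indices into k disjoint test folds and return a list
    of (train_indices, test_indices) pairs."""
    indices = list(range(n_subjects))
    random.Random(seed).shuffle(indices)
    # Deal shuffled subjects round-robin so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    all_indices = set(indices)
    return [(sorted(all_indices - set(test)), sorted(test)) for test in folds]


paediatric_folds = k_fold_indices(49, 4)    # clinical paediatric dataset
pet_centre_folds = k_fold_indices(110, 5)   # PET Centre dataset
```

With 49 patients and four folds, the test sets contain 13, 12, 12 and 12 individuals; with 110 individuals and five folds, each test set contains 22.
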

4 Results

4.1 Pseudo-CT accuracy

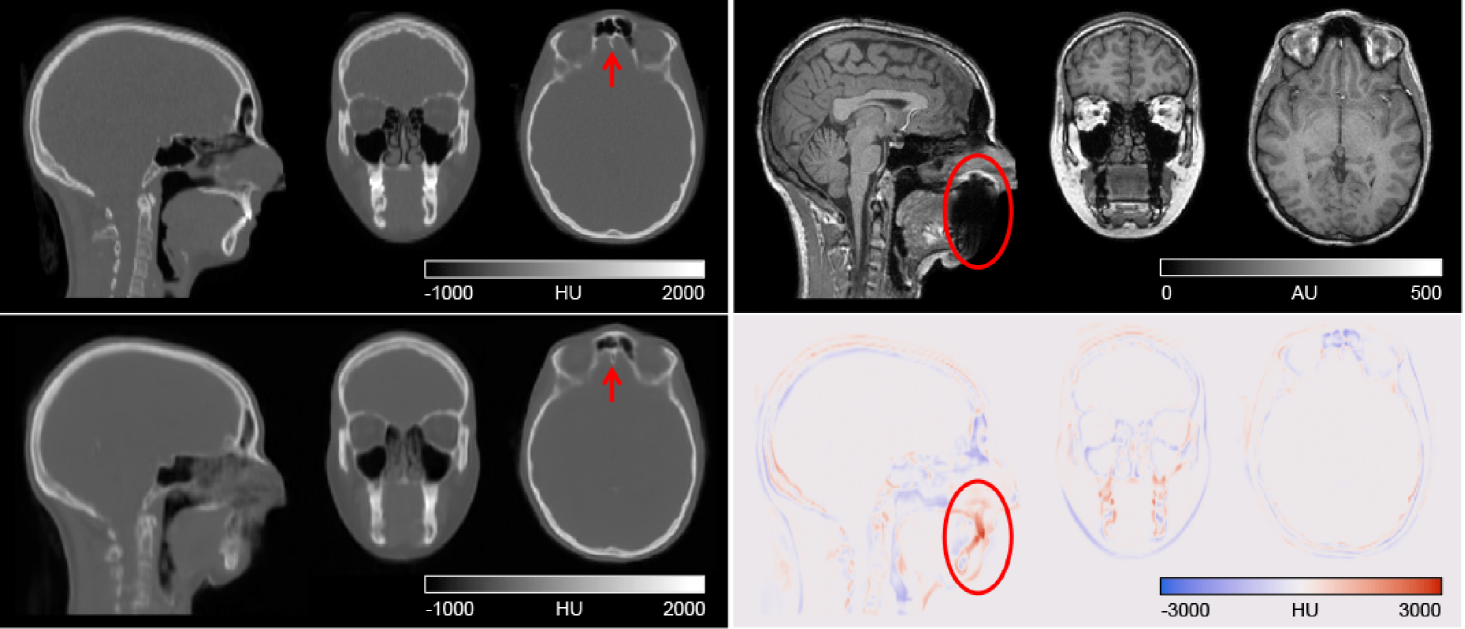

Figure 2 shows the reference CT and pseudo-CT produced by our deep learning method for a representative individual, together with the difference image (reference CT–pseudo-CT) and T1-weighted MR used to generate the pseudo-CT. Qualitatively, our pseudo-CT looks similar to the ground truth CT and our method was able to faithfully reproduce fine bone structures, for example in the tissue and air-space near the frontal sinuses (red arrow in Figure 2). The area with the largest error coincided with signal dropout on the MR image due to dental fillings (circled in Figure 2). On average, our method produced pseudo-CT with MAE of 100.4 ± 21.3 HU compared to reference CT within the head mask. The Jaccard coefficient (JC) for the amount of overlap between the skull masks from our pseudo-CT and the reference CT was 0.73 ± 0.07. Table 2 shows the results of each fold of the cross-validation.

| Fold | MAE (HU) | JC |
|---|---|---|
| 1 | 104.1 ± 28.4 | 0.73 ± 0.05 |
| 2 | 93.1 ± 9.5 | 0.75 ± 0.05 |
| 3 | 102.5 ± 19.6 | 0.71 ± 0.07 |
| 4 | 96.2 ± 16.7 | 0.75 ± 0.05 |
| 5 | 106.0 ± 25.1 | 0.72 ± 0.09 |
| Mean | 100.4 ± 21.3 | 0.73 ± 0.07 |

Values are given as mean ± SD.
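As a quick consistency check on the table, the overall means can be recomputed from the per-fold means. The sketch assumes equally sized test folds, in which case the unweighted mean of the fold means equals the mean over all individuals; the overall SD cannot be recovered exactly from the rounded per-fold values, so it is not recomputed here.

```python
fold_mae = [104.1, 93.1, 102.5, 96.2, 106.0]  # per-fold mean MAE (HU)
fold_jc = [0.73, 0.75, 0.71, 0.75, 0.72]      # per-fold mean Jaccard


def mean_of_folds(values):
    """Unweighted mean of per-fold means (valid for equal fold sizes)."""
    return sum(values) / len(values)


overall_mae = round(mean_of_folds(fold_mae), 1)
overall_jc = round(mean_of_folds(fold_jc), 2)
```
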

4.2 Comparison with MaxProb method

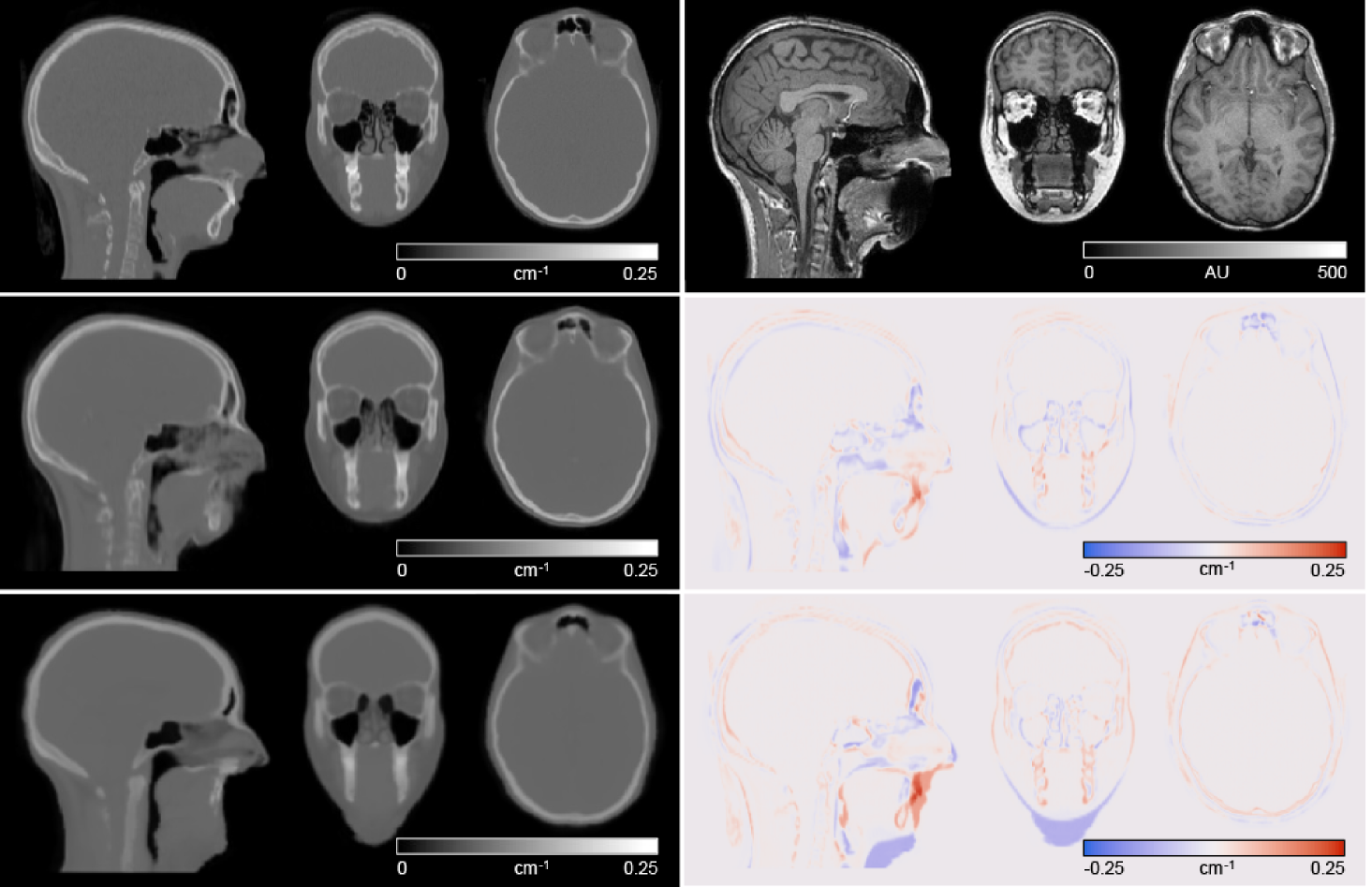

Figure 3 shows the µ-maps derived from reference CT, the MaxProb method and our pseudo-CT along with difference images, for the same individual as in Figure 2. Our method was able to learn to generate some intensities in the area where there was signal dropout on the MR image due to dental fillings, whereas the MaxProb method was not able to. The fine bone structures in the air space near the frontal sinuses were not reproduced with the MaxProb method. Compared with reference CT µ-maps, our network produced µ-maps with smaller errors than the MaxProb method: mean rMAE was 8.4 ± 2.1% for our pseudo-CT µ-maps and 13.1 ± 1.5% for the MaxProb µ-maps.

4.3 Implementation of MR-AC in paediatric dataset and impact of pre-training

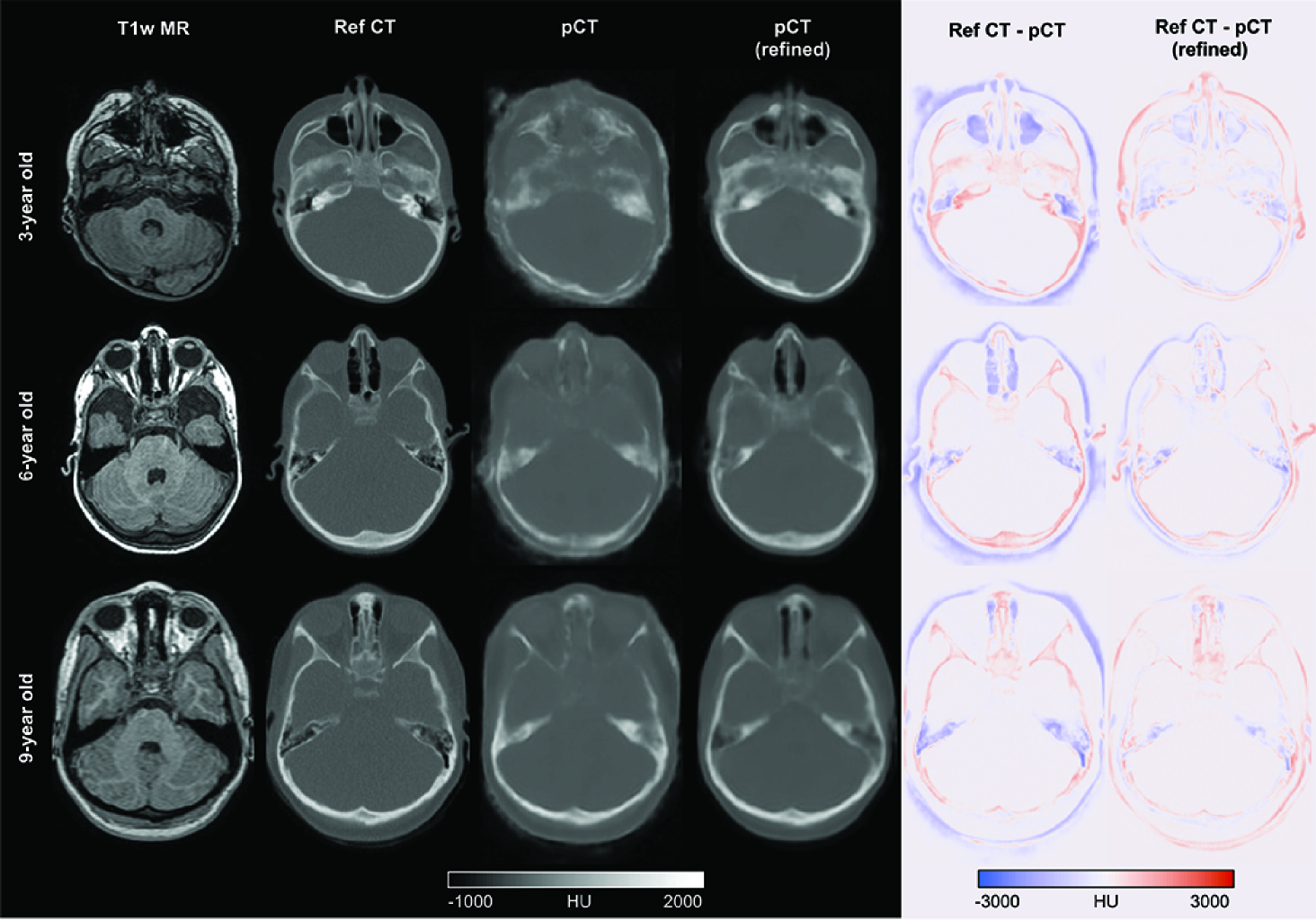

Figure 4 shows the T1-weighted MR, corresponding reference CT, pseudo-CTs generated using the network as trained on adult data (i.e. without refinement), and pseudo-CTs generated using the network refined on paediatric data, together with difference images (reference CT–pseudo-CT) for three randomly selected individuals aged 3, 6 and 9 years. Qualitative inspection shows that the pseudo-CT (without refinement) generated in the 3-year-old shows the highest errors due to thinner skull structures not being reproduced. This was slightly better in the 6- and 9-year-old patients. Pseudo-CTs generated using the refined network were qualitatively better in all three cases. MAE improved from 174.7 ± 33.6 HU when using the existing network to 127.3 ± 39.9 HU after transfer learning in the paediatric dataset.

5 Discussion

Our proposed method is able to generate a pseudo-CT with comparable accuracy to existing deep learning methods trained in adults. The µ-maps produced using our method have higher accuracy than the MaxProb multi-atlas method when compared with reference CT-based µ-maps. We refined our network on a clinical paediatric dataset and showed the benefit of transfer learning for improving the accuracy of pseudo-CT in paediatric data. The results suggest that the network can be used to produce pseudo-CTs in paediatric datasets for use in PET-MR-AC.

Our method produces pseudo-CT with MAE (100.4 ± 21.3 HU) and Jaccard coefficients of overlap in skull (0.73 ± 0.07) comparable to other published deep learning methods (cf. Jaccard coefficient in bone tissue of 0.70 in [14]). The method outperforms a well-performing multi-atlas method in terms of µ-map accuracy. In the PET Centre dataset, all five folds of the cross-validation showed similar pseudo-CT accuracy metrics, which suggests that the network and training scheme are robust and that the training dataset is balanced.

We show proof of concept that a deep learning method trained on predominantly adult data can be refined and implemented in paediatric data, and show the added benefit of transfer learning on clinical data where skull geometry and size may differ from the training dataset. Further work will include validation of the PET-MR AC method in both datasets.

Acknowledgements.

S.N.Y. is supported by the MRC grant MR/T023007/1. This work was supported by the UK Department of Health via the NIHR Comprehensive Biomedical Research Centre Award (COV-LT-0009) to Guy’s and St Thomas’ NHS Foundation Trust (in partnership with King’s College London and King’s College Hospital NHS Foundation Trust), and by the Wellcome Engineering and Physical Sciences Research Council Centre for Medical Engineering at King’s College London (WT 203148/Z/16/Z).

References

- [1] Burgos, N., Cardoso, M.J., Thielemans, K., Modat, M., Pedemonte, S., Dickson, J., Barnes, A., Ahmed, R., Mahoney, C.J., Schott, J.M., Duncan, J.S., Atkinson, D., Arridge, S.R., Hutton, B.F., Ourselin, S.: Attenuation correction synthesis for hybrid PET-MR scanners: Application to brain studies. IEEE Transactions on Medical Imaging 33(12), 2332–2341 (2014)

- [2] Cardoso, M.J., Li, W., Brown, R., Ma, N., Kerfoot, E., Wang, Y., Murrey, B., Myronenko, A., Zhao, C., Yang, D., Nath, V., He, Y., Xu, Z., Hatamizadeh, A., Myronenko, A., Zhu, W., Liu, Y., Zheng, M., Tang, Y., Yang, I., Zephyr, M., Hashemian, B., Alle, S., Darestani, M.Z., Budd, C., Modat, M., Vercauteren, T., Wang, G., Li, Y., Hu, Y., Fu, Y., Gorman, B., Johnson, H., Genereaux, B., Erdal, B.S., Gupta, V., Diaz-Pinto, A., Dourson, A., Maier-Hein, L., Jaeger, P.F., Baumgartner, M., Kalpathy-Cramer, J., Flores, M., Kirby, J., Cooper, L.A.D., Roth, H.R., Xu, D., Bericat, D., Floca, R., Zhou, S.K., Shuaib, H., Farahani, K., Maier-Hein, K.H., Aylward, S., Dogra, P., Ourselin, S., Feng, A.: MONAI: An open-source framework for deep learning in healthcare. arXiv preprint (2022)

- [3] Carney, J.P.J., Townsend, D.W., Rappoport, V., Bendriem, B.: Method for transforming CT images for attenuation correction in PET/CT imaging. Medical Physics 33(4), 976–983 (2006)

- [4] Chen, Y., Ying, C., Binkley, M.M., Juttukonda, M.R., Flores, S., Laforest, R., Benzinger, T.L.S., An, H.: Deep learning-based T1-enhanced selection of linear attenuation coefficients (DL-TESLA) for PET/MR attenuation correction in dementia neuroimaging. Magnetic Resonance in Medicine 86, 499–513 (2021)

- [5] Gugger, S., Howard, J.: AdamW and Super-convergence is now the fastest way to train neural nets (2018)

- [6] He, K., Zhang, X., Ren, S., Sun, J.: Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In: 2015 IEEE International Conference on Computer Vision (ICCV). pp. 1026–1034. IEEE (2015)

- [7] He, K., Zhang, X., Ren, S., Sun, J.: Deep Residual Learning for Image Recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). vol. 4, pp. 770–778. IEEE (2016)

- [8] Ioffe, S., Szegedy, C.: Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In: Bach, F., Blei, D. (eds.) ICML’15: Proceedings of the 32nd International Conference on Machine Learning - Volume 37. JMLR.org, Lille, France (2015)

- [9] Jaccard, P.: Distribution de la flore alpine dans le bassin des Dranses et dans quelques régions voisines. Bulletin de la Société Vaudoise des Sciences Naturelles 37, 241–272 (1901)

- [10] Kerfoot, E., Clough, J., Oksuz, I., Lee, J., King, A.P., Schnabel, J.A.: Left-Ventricle Quantification Using Residual U-Net. In: Statistical Atlases and Computational Models of the Heart. Atrial Segmentation and LV Quantification Challenges. STACOM 2018. Lecture Notes in Computer Science, vol 11395, pp. 371–380. Springer, Cham (2019)

- [11] Klaser, K., Borges, P., Shaw, R., Ranzini, M., Modat, M., Atkinson, D., Thielemans, K., Hutton, B., Goh, V., Cook, G., Cardoso, J., Ourselin, S.: A Multi-Channel Uncertainty-Aware Multi-Resolution Network for MR to CT Synthesis. Applied Sciences 11(4), 1667 (2021)

- [12] Ladefoged, C.N., Law, I., Anazodo, U., St. Lawrence, K., Izquierdo-Garcia, D., Catana, C., Burgos, N., Cardoso, M.J., Ourselin, S., Hutton, B., Mérida, I., Costes, N., Hammers, A., Benoit, D., Holm, S., Juttukonda, M., An, H., Cabello, J., Lukas, M., Nekolla, S., Ziegler, S., Fenchel, M., Jakoby, B., Casey, M.E., Benzinger, T., Højgaard, L., Hansen, A.E., Andersen, F.L.: A multi-centre evaluation of eleven clinically feasible brain PET/MRI attenuation correction techniques using a large cohort of patients. NeuroImage 147(June 2016), 346–359 (2017)

- [13] Ladefoged, C.N., Hansen, A.E., Henriksen, O.M., Bruun, F.J., Eikenes, L., Øen, S.K., Karlberg, A., Højgaard, L., Law, I., Andersen, F.L.: AI-driven attenuation correction for brain PET/MRI: Clinical evaluation of a dementia cohort and importance of the training group size. NeuroImage 222(November 2019) (2020)

- [14] Ladefoged, C.N., Marner, L., Hindsholm, A., Law, I., Højgaard, L., Andersen, F.L.: Deep Learning Based Attenuation Correction of PET/MRI in Pediatric Brain Tumor Patients: Evaluation in a Clinical Setting. Frontiers in Neuroscience 12(January), 1–9 (2019)

- [15] Loshchilov, I., Hutter, F.: Decoupled weight decay regularization. 7th International Conference on Learning Representations, ICLR 2019 (2019)

- [16] Mérida, I., Reilhac, A., Redouté, J., Heckemann, R.A., Costes, N., Hammers, A.: Multi-atlas attenuation correction supports full quantification of static and dynamic brain PET data in PET-MR. Physics in Medicine and Biology 62(7), 2834–2858 (2017)

- [17] Modat, M., Cash, D.M., Daga, P., Winston, G.P., Duncan, J.S., Ourselin, S.: Global image registration using a symmetric block-matching approach. Journal of Medical Imaging 1(2), 024003 (2014)

- [18] Ronneberger, O., Fischer, P., Brox, T.: U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab, N., Hornegger, J., Wells III, W.M., Frangi, A.F. (eds.) Medical Image Computing and Computer-Assisted Intervention MICCAI 2015, vol. 9351, pp. 234–241. Springer (2015)

- [19] Shi, W., Caballero, J., Huszár, F., Totz, J., Aitken, A.P., Bishop, R., Rueckert, D., Wang, Z.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. pp. 1874–1883 (2016)

- [20] Tustison, N.J., Avants, B.B., Cook, P.A., Yuanjie Zheng, Egan, A., Yushkevich, P.A., Gee, J.C.: N4ITK: Improved N3 Bias Correction. IEEE Transactions on Medical Imaging 29(6), 1310–1320 (2010)