22institutetext: Energy Business Unit, Commonwealth Scientific and Industrial Research Organisation (CSIRO), Newcastle, NSW, Australia

22email: subbu.sethuvenkatraman@csiro.au

Building Metadata Inference Using a Transducer Based Language Model

Abstract

Solving the challenges of automatic machine translation of Building Automation System text metadata is a crucial first step in efficiently deploying smart building applications. The vocabulary used to describe building metadata appears small compared to general natural languages, but each term has multiple commonly used abbreviations. Conventional machine learning techniques are inefficient since they need to learn many different forms for the same word, and large amounts of data must be used to train these models. It is also difficult to apply standard techniques such as tokenisation since this commonly results in multiple output tags being associated with a single input token, something traditional sequence labelling models do not allow. Finite State Transducers can model sequence-to-sequence tasks where the input and output sequences are different lengths, and they can be combined with language models to ensure a valid output sequence is generated. We perform a preliminary analysis into the use of transducer-based language models to parse and normalise building point metadata.

Keywords:

Finite State Transducer Language Model Abbreviation Expansion Text Normalisation.1 Introduction

The International Energy Agency (IEA) has estimated that buildings were responsible for 28% of global emissions in 2019 [1]. Commercial buildings are a significant energy consumer, responsible for more than 7% of global final energy consumption and up to 18% in some industrialised economies [14]. A typical commercial building has been shown to waste 30% of its energy consumption [16]. Incorporating digital technologies in buildings can reduce energy consumption, increase operational efficiency [8], and improve occupant comfort and productivity [22].

A Building Automation System (BAS) consists of ‘points’ representing ‘sensors’, ‘actuators’, ‘settings’ and ‘setpoints’. These points are described by short text fields containing unstructured metadata that may provide information such as sensor type and location and what equipment it is associated with. Novel artificial intelligence (AI) and machine learning (ML) solutions for managing buildings are being developed, such as occupancy detection [2], fault detection and diagnosis [19], and grid support services [20]. However, deployment of these applications and algorithms is hamstrung because the BAS point metadata was not designed to support these tasks [5]. Due to device memory limitations and to reduce input keystrokes, point text metadata is often heavily abbreviated, and the abbreviated forms can vary significantly from building to building. Mapping to one of many machine-readable schemas, such as Brick [3] or Haystack [15], is required, and this is usually performed manually.

Machine learning approaches to automate metadata mapping show promise. However, they require large amounts of data from many buildings in order to learn the multitude of ways engineers can express the same concept, and they’re prone to making unrecoverable mistakes [6]. In this paper, we use a hybrid approach of slot rules defined by finite-state transducers (FSTs) combined with a statistical language model. This approach allows us to more easily include expert knowledge in our model whilst retaining the power of statistical techniques to learn from data.

2 Prior Work

Most studies into the BAS metadata mapping focus on reducing the cost of manually labelling each building independently. The goal is to select the smallest subset of points from the initially unlabelled dataset that maximise the accuracy a model where . Active learning [17] is used to iteratively generate a set of representative samples which an expert then labels.

One of the earliest attempts to automate metadata mapping used a rules-based approach [4, 5] using synthesis by example [7]. Later approaches focus on statistical machine learning. Sequence labelling is used to split the text into non-overlapping chunks and apply class labels to each chunk [10, 11, 9]. This approach combined with active learning works well since the same abbreviations are used consistently between points from the same building. These approaches all train a local model, a model that is trained on a single building only. There have been some attempts to train global models on multiple buildings [12, 21]. Most of these approaches have only been validated on small datasets consisting of 3-5 buildings so it is unclear how they scale to larger datasets.

Finite-state transducers (FSTs) have been used with language models to expand abbreviations [18, 6]. FSTs are also used in speech applications because they can be constructed to ensure that the output sequence is valid [13].

In this work, we perform a preliminary investigation into the use of FSTs combined with a statistical language model to extract semantic information from BAS text metadata. To the best of our knowledge, we are the first to use a hybrid rules-based and statistical approach to this task.

3 The Metadata Mapping Task

There are two aspects of the metadata mapping task. The first is assigning tags to sequences of abbreviations. A tag is a single piece of semantic information such as air, temperature and sensor. A collection of tags is known as a tagset. The second task is identifying text chunks corresponding to physical entities such as equipment and locations. The challenges with this approach are two-fold. First, it is common for entities to nest – ‘AHU-L01-02’ is an entity of type Air_Handling_Unit containing a nested Level entity. Second, a single input token may map to multiple output tags – ‘SAF’ should be labelled with three tags, supply, air and fan. Prior work uses character-based models and multiple inference steps. This introduces inefficiencies that we address by using a Finite State Transducer slot model with a Language Model.

Two idiosyncratic practices define BAS point text descriptions and make it difficult for automated processes to extract metadata. The first is the heavy use of abbreviations. In a portfolio of over 200 commercial buildings, we observed the following:

-

•

Initialism: A group of letters, each pronounced separately.

-

•

Truncation: This type of abbreviation consists only of the first characters of a word.

-

•

Vowel Deletion: All vowels are removed, this may be combined with truncation.

-

•

Contraction: Only the first and last letter is retained.

-

•

Syllable Division: Words are split into syllables then one or more of the previous rules are applied to each syllable.

-

•

Phonetic: Similar to syllable division using phonemes.

The second is deletion or substitution of whitespace with special characters, we have observed the following practices:

-

•

camelCase: The first letter of each word other than the first word is upper case. All whitespace is deleted.

-

•

PascalCase: The first letter of each word is upper case. All whitespace is deleted.

-

•

snake_case: Whitespace is replaced with an underscore (_).

-

•

kebab-case: Whitespace is replaced with a dash (-).

-

•

UPPERCASE: All whitespace is deleted and all characters converted to upper case.

These practices make it very difficult to use rules-based methods such as regular expressions to extract information. For example, ‘AHU-01A’ is a valid entity by itself, but if it appears in the text ‘AHU-01AhrsMd’ then the correct prediction is ‘AHU-01’ should be labelled as Air_Handling_Unit and ‘AhrsMd’ as the tagset containing tags after, hours and mode.

4 Finite State Transducer and Language Model

.

A finite-state acceptor (FSA) is a state machine with no outputs. It consists of a finite alphabet of input symbols, a finite set of states, a starting state, one or more final (accepting) states and a transition function. An FSA is said to accept a sequence of input symbols if there exists a sequence of valid transitions from the start state to a final state.

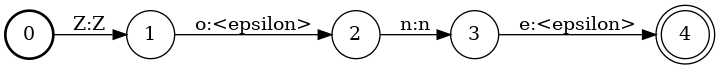

Finite-state transducers (FSTs) are generalisations of FSAs that represent the relationship between sets of strings. Each transition includes both an input and output symbol. In addition, the special character is added to represent no input or no output, allowing relationships between different length strings to be represented. For example, the FST shown in Figure 1 transduces ‘Zone’ to ‘Zn’.

Weighted finite-state transducers (WFSTs) are FSTs where the transitions have an associated weight in addition to the input and output symbols. The weights can be log probabilities, allowing different paths from the starting state to each final state to be scored based on maximum likelihood by weight summation. We use this property to generate a lattice that contains multiple potential output sequences and then use a language model to select the most likely sequence.

4.1 Finite State Transducer Slots

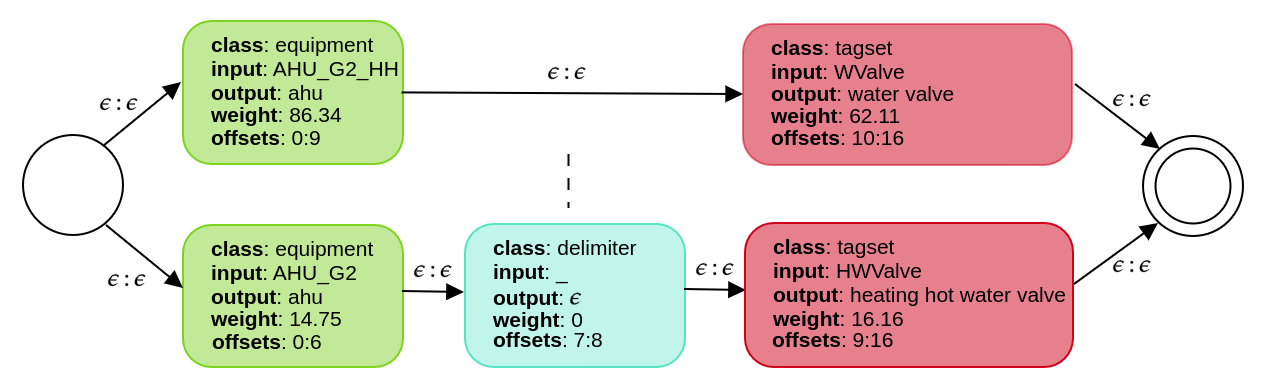

A slot is a class representing matched text. Each slot consists of a weighted finite-state transducer. The transducer outputs one or more tags, or the empty string (). Each slot has a weight, and slots form a directed graph or lattice. There is an arc between slots if the text they match is adjacent in the original string. The slot weights are either fixed, or dynamically assigned by a language model depending on the slot class.

Construction of the lattice proceeds as follows:

-

1.

All substrings of the input string are enumerated.

-

2.

Each substring is tested against each slot class. If a class accepts a substring, a slot is created for every output string generated from the input.

-

3.

The FSTs of adjacent slots are joined.

-

4.

A start and final node are added.

-

5.

The weights are updated using the language model for dynamic slots.

-

6.

The shortest path is calculated.

-

7.

The slots from the shortest path are extracted.

We used the following slots:

4.1.1 delimiter

The delimiter slot matches one of [ -_.] and outputs the empty string symbol (<epsilon>).

4.1.2 equipment

The equipment slot matches <type> <delimiter>? <identifier> (<delimiter> <identifier>)* and outputs the equipment type tag.

4.1.3 tagset

The tagset slot matches point descriptions, both abbreviated and unabbreviated. The matched text can also include delimiter characters, and the output is a sequence of expanded tags; for example inputting SAF results in the output supply air fan. Multiple tagset slots may be generated for the same input as abbreviations are often ambiguous.

4.1.4 unknown

The final rule matches any alphanumeric character and outputs <epsilon>.

4.2 Language Model

A language model is a probabilistic model that enables us to score a tagset based on the likelihood of the sequence of symbols. The equipment and tagset slots each have an associated language model. The tagset language model is trained on HVAC phrases such as zone temperature setpoint and the equipment language model is a character model trained on equipment names such as AHU-01. In both cases, a count-based language model of order=2 was used, and perplexity used to compute the slot weights. The weight of the delimiter slot is fixed at zero, and the weight of the unknown slot is a large constant value.

5 Experiment

In order to evaluate the feasibility of using FSTs to model BAS point metadata, we manually labelled 3,000 randomly selected points from 10 commercial buildings. Due to resource limitations, we choose only to label air handling units (including fan coil units and packaged units). As previously described, we used this data to construct an FST parser and language model. Finally, we tested the model on air handling unit points from five buildings that were not part of the dataset used to build the model. The buildings were diverse; some used abbreviations such as ‘ZnT’ for ‘zone temperature’ and others longer descriptions such as ‘RmTemp_Setpt’ for ‘room temperature setpoint’. The structure of points from the different buildings was also quite different, ranging from ‘AHU_G_1_Mode’ to ‘B550-NAE19/N2-2.DX-103.B550_AHU_02-03 CV-C’.

Accuracy ranged from 88.6% to 93.1%. There were two sources of errors. The first was out-of-vocabulary. Some points such as ‘minimum outside air damper’ (MOAD) were not included in the training set. A secondary issue was due to equipment such as ‘RAF_19_2’ being labelled as a tagset (return air fan) rather than as equipment. This is because our dataset contains return air fan points labelled as air handling units (since they are a component of an air handling unit) and we did not include fans when training the equipment language model. We observed that it was easy to rectify these errors by adding the missing terms and regenerating the model, a process that can be done online.

6 Discussion and Conclusions

In this study, we have demonstrated that it is possible to use a hybrid of rules with a language model to train a global model to extract semantic information from BAS text metadata.

Given the open nature of the problem at hand, training a single model that can correctly classify any BAS (Building Automation System) point is very difficult. This is why most of the prior work focuses on identifying the smallest set of points that require manual annotation through the use of active learning.

A promising alternative is continual learning, where a single model trained on multiple buildings is continuously updated with new information. To the best of our knowledge, this approach has yet to been studied using BAS metadata. To do so effectively would require a model that can be easily updated, ideally using only one or a few new examples.

A finite-state transducer abbreviation model shows promise in this area. Most of the errors we identified could be easily corrected by updating the abbreviation models vocabulary with new abbreviated words and phrases as they are encountered. Despite these errors our model preformed very well when trained on a relatively small dataset. Similar to active learning, when the model encounters out-of-vocabulary tokens, it could prompt the user to provide an expansion. In most cases, this would require the user to enter a short phrase. Since the abbreviation model is not gradient-based, it can be rapidly retrained.

6.0.1 Acknowledgements.

The work of David Waterworth is funded by the RoZetta Institute, the CSIRO and CIM Pty Ltd. Quan Z. Sheng’s work has been partially supported by an Australian Research Council (ARC) Linkage Infrastructure, Equipment and Facilities Projects ARC LE180100158 and LE220100078.

References

- Abergel et al. [2020] Abergel, T., Delmastro, C., Lane, K.: Tracking Buildings 2020. Tech. rep., IPCC (2020), URL https://www.iea.org/reports/tracking-buildings-2020

- Acquaah et al. [2020] Acquaah, Y., Steele, J.B., Gokaraju, B., Tesiero, R., Monty, G.H.: Occupancy Detection for Smart HVAC Efficiency in Building Energy: A Deep Learning Neural Network Framework using Thermal Imagery. In: 2020 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), pp. 1–6 (2020), doi:10.1109/AIPR50011.2020.9425091

- Balaji et al. [2016] Balaji, B., Bhattacharya, A., Fierro, G., Gao, J., Gluck, J., Hong, D., Johansen, A., Koh, J., Ploennigs, J., Agarwal, Y., Berges, M., Culler, D., Gupta, R., Kjærgaard, M.B., Srivastava, M., Whitehouse, K.: Brick: Towards a Unified Metadata Schema For Buildings. In: Proceedings of the 3rd ACM International Conference on Systems for Energy-Efficient Built Environments, pp. 41–50, ACM (11 2016), doi:10.1145/2993422.2993577

- Bhattacharya et al. [2014] Bhattacharya, A., Culler, D.E., Ortiz, J., Hong, D., Whitehouse, K., Culler, D.: Enabling Portable Building Applications through Automated Metadata Transformation. Tech. rep., University of California at Berkeley (2014)

- Bhattacharya et al. [2015] Bhattacharya, A.A., Hong, D., Culler, D., Ortiz, J., Whitehouse, K., Wu, E.: Automated Metadata Construction to Support Portable Building Applications. In: Proceedings of the 2nd ACM International Conference on Embedded Systems for Energy-Efficient Built Environments - BuildSys 15, pp. 3–12, ACM Press (2015), doi:10.1145/2821650.2821667

- Gorman et al. [2021] Gorman, K., Kirov, C., Roark, B., Sproat, R.: Structured abbreviation expansion in context. In: Findings of the Association for Computational Linguistics: EMNLP 2021, pp. 995–1005, Association for Computational Linguistics, Stroudsburg, PA, USA (2021), doi:10.18653/v1/2021.findings-emnlp.85

- Gulwani [2012] Gulwani, S.: Synthesis from examples: Interaction models and algorithms. In: Proceedings - 14th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing, SYNASC 2012, pp. 8–14 (09 2012), doi:10.1109/SYNASC.2012.69

- Hong et al. [2020] Hong, T., Wang, Z., Luo, X., Zhang, W.: State-of-the-art on research and applications of machine learning in the building life cycle. Energy and Buildings 212, 109831 (4 2020), doi:10.1016/j.enbuild.2020.109831

- Jiao et al. [2020] Jiao, Y., Li, J., Wu, J., Hong, D., Gupta, R., Shang, J.: SeNsER: Learning Cross-Building Sensor Metadata Tagger. In: Findings of the Association for Computational Linguistics: EMNLP 2020, pp. 950–960, Association for Computational Linguistics, Online (11 2020), doi:10.18653/v1/2020.findings-emnlp.85

- Koh et al. [2018] Koh, J., Hong, D., Gupta, R.E., Whitehouse, K., Wang, H., Agarwal, Y.: Plaster: an integration, benchmark, and development framework for metadata normalization methods. Proceedings of the 5th Conference on Systems for Built Environments (2018)

- Lin et al. [2019] Lin, L., Luo, Z., Hong, D., Wang, H.: Sequential Learning with Active Partial Labeling for Building Metadata. In: Proceedings of the 6th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, pp. 189–192, ACM (11 2019), doi:10.1145/3360322.3360866

- Ma et al. [2020] Ma, J., Hong, D., Wang, H.: Selective Sampling for Sensor Type Classification in Buildings. In: 2020 19th ACM/IEEE International Conference on Information Processing in Sensor Networks, IPSN 2020, pp. 241–252, Institute of Electrical and Electronics Engineers Inc. (4 2020), doi:10.1109/IPSN48710.2020.00028

- Mohri et al. [2002] Mohri, M., Pereira, F., Riley, M.: Weighted Finite-State Transducers in Speech Recognition. Computer Speech and Language 16(1), 69–88 (2002), doi:10.1006/csla.2001.0184

- Pérez-Lombard et al. [2008] Pérez-Lombard, L., Ortiz, J., Pout, C.: A review on buildings energy consumption information. Energy and Buildings 40(3), 394–398 (1 2008), doi:10.1016/j.enbuild.2007.03.007

- Praire et al. [2016] Praire, D., Petze, J., Petock, M., Kumar Gopan Samy, M.: Project-Haystack. Tech. rep., Continental Automated Building Association (2016)

- Pritoni et al. [2021] Pritoni, M., Paine, D., Fierro, G., Mosiman, C., Poplawski, M., Saha, A., Bender, J., Granderson, J.: Metadata Schemas and Ontologies for Building Energy Applications: A Critical Review and Use Case Analysis. Energies 14(7), 2024 (4 2021), doi:10.3390/en14072024

- Settles [2009] Settles, B.: Active Learning Literature Survey. Tech. Rep. 1648, University of Wisconsin-Madison (2009)

- Sproat and Jaitly [2016] Sproat, R., Jaitly, N.: RNN Approaches to Text Normalization: A Challenge. arXiv preprint (10 2016)

- Tun et al. [2021] Tun, W., Wong, J.K.W., Ling, S.H.: Hybrid Random Forest and Support Vector Machine Modeling for HVAC Fault Detection and Diagnosis. Sensors 21(24) (2021), doi:10.3390/s21248163

- Wang et al. [2021] Wang, J., Huang, S., Wu, D., Lu, N.: Operating a Commercial Building HVAC Load as a Virtual Battery Through Airflow Control. IEEE Transactions on Sustainable Energy 12(1), 158–168 (2021), doi:10.1109/TSTE.2020.2988513

- Waterworth et al. [2021] Waterworth, D., Sethuvenkatraman, S., Sheng, Q.Z.: Advancing smart building readiness: Automated metadata extraction using neural language processing methods. Advances in Applied Energy 3, 100041 (8 2021), doi:10.1016/j.adapen.2021.100041

- Zhang et al. [2017] Zhang, F., Haddad, S., Nakisa, B., Rastgoo, M.N., Candido, C., Tjondronegoro, D., de Dear, R.: The effects of higher temperature setpoints during summer on office workers’ cognitive load and thermal comfort. Building and Environment 123, 176–188 (2017), doi:10.1016/j.buildenv.2017.06.048