CAS-GAN for Contrast-free Angiography Synthesis

Abstract

Iodinated contrast agents are widely utilized in numerous interventional procedures, yet posing substantial health risks to patients. This paper presents CAS-GAN, a novel GAN framework that serves as a “virtual contrast agent” to synthesize X-ray angiographies via disentanglement representation learning and vessel semantic guidance, thereby reducing the reliance on iodinated contrast agents during interventional procedures. Specifically, our approach disentangles X-ray angiographies into background and vessel components, leveraging medical prior knowledge. A specialized predictor then learns to map the interrelationships between these components. Additionally, a vessel semantic-guided generator and a corresponding loss function are introduced to enhance the visual fidelity of generated images. Experimental results on the XCAD dataset demonstrate the state-of-the-art performance of our CAS-GAN, achieving a FID of and a MMD of . These promising results highlight CAS-GAN’s potential for clinical applications.

Index Terms:

Vascular intervention, X-ray angiographies, generative adversarial networks (GANs), disentanglement representation learning.I Introduction

Cardiovascular diseases (CVDs) remain the leading cause of global mortality [1]. Image-guided vascular intervention procedures offer minimal trauma and rapid recovery, becoming a mainstream treatment of CVDs [2]. X-ray angiography systems provide physicians with dynamic 2D X-ray images, widely used in interventional procedures. However, since X-rays cannot directly opacify vessels, contrast agents, typically iodine-based, are introduced into vessels to obtain X-ray angiographies [3], as depicted in Figure 1 (a). Despite their effectiveness, these contrast agents have several side effects [4], including potentially life-threatening allergic reactions [5]. Additionally, the elimination of contrast agents via kidneys can exacerbate renal damage, especially in individuals with existing kidney conditions or diabetes [6]. Thus, significantly reducing the contrast agent dose or even not using contrast agents while maintaining imaging quality to meet clinical needs is a key challenge that X-ray angiography systems must address [7].

Recent advancements in generative adversarial networks (GANs) across both natural and medical imaging domains suggest they can produce convincingly realistic images [8], [9]. This raises the possibility of using GANs as “virtual contrast agents” to generate real-looking X-ray angiographies, as illustrated in Fig. 1 (b). This will potentially reduce the reliance on iodinated agents and enhance the safety and efficiency of intervention procedures. Specifically, we treat this task as image-to-image translation, i.e., using GANs to generate X-ray angiographies from non-contrast X-ray images.

As one of the most challenging problems in computer vision, image-to-image translation has traditionally been tackled using fully supervised methods like pix2pix [10], which require paired images for training. Given the scarcity and high cost of paired medical images, researchers have explored unpaired data methods [11], [12], [13]. Cycle-consistency is a simple yet efficient method for learning transformations between source and target domains without paired data and has become a key technique in this field. Yet, existing methods face limitations in clinical application scenarios [14], [15]. In our task, we necessitate not only the style translation between two image domains but also the precise one-to-one mapping of specific images. For instance, the generated vessels must align with anatomical features in non-contrast X-ray images and maintain continuity. Unfortunately, existing methods often fail to adhere to these constraints, resulting in sub-optimal outcomes.

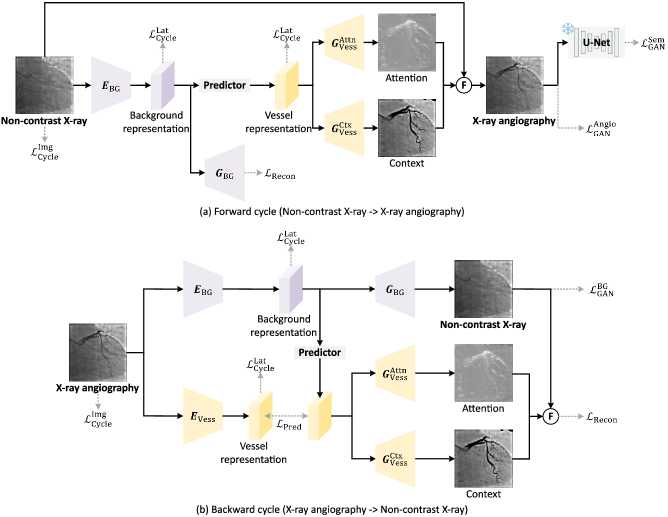

To tackle these challenges, we introduce CAS-GAN, a novel method that learns disentanglement representations to synthesize X-ray angiographies, enhanced by vessel semantic guidance for improved realism. Leveraging medical insights, we assume that anatomical structures between backgrounds and vessels have strong interrelationships. To explicitly formulate such interrelationships, we disentangle backgrounds and vessels in the latent space, using a neural network (termed “Predictor” in Fig. 2) to infer vessel representations from background representations. To further ensure the authenticity of anatomical structures in generated vessels, we introduce a vessel semantic-guided generator and a corresponding adversarial loss. The main contributions of this work are summarized as follows:

-

•

A novel CAS-GAN is proposed for contrast-free X-ray angiography synthesis. To the best of our knowledge, this is the first work in this field.

-

•

A disentanglement representation learning method is presented to decouple X-ray angiographies into background and vessel components, and their interrelationships are formulated using neural networks. Furthermore, a vessel semantic-guided generator and a corresponding adversarial loss are introduced to ensure the authenticity of generated images.

-

•

Quantitative and qualitative experimental results on the XCAD [16] demonstrate the state-of-the-art performance of our CAS-GAN compared with existing methods.

II Related works

II-A Generative adversarial networks

Generative adversarial networks (GANs), introduced by Goodfellow et al. [17] in 2014, revolutionized machine learning by establishing a minimax optimization game between a generator and a discriminator . The generator aims to mimic the distribution of real data samples, while the discriminator evaluates their authenticity. Up till now, GANs have been applied to numerous computer vision tasks including medical image generation [18], image super-resolution [19], and data augmentation [20]. Despite their effectiveness, training GANs remains challenging due to issues such as gradient vanishing and mode collapse [21], [22], [23]. Many variants have been developed to address these challenges with more stable training objectives [24], [25], [26]. In our study, we employ GANs to generate X-ray angiographies from non-contrast X-ray images.

II-B Image-to-image translation

Image-to-image translation involves transforming images from one domain to another while maintaining their underlying structures or contents [27], [28], [29], [30]. Isola et al. [10] pioneered this approach with a conditional GAN framework that utilizes both adversarial [17] and L1 reconstruction losses for training on paired data. However, obtaining a large number of paired data is often impractical, especially in clinical settings. Recent advancements have focused on unpaired data translation, introducing additional constraints like cycle-consistency [11], [31], [12] to preserve semantic consistency during domain transfer. This constraint ensures that an image converted into another domain and back again should remain largely unchanged, thereby maintaining the original content. Additionally, some studies have incorporated self-supervised contrast loss to enhance semantic relationships between different domains [32], [33], [34].

In the medical imaging field, most works focused on 3D medical imaging modalities, including CT CTA [35], MR CT [36], image denoising [37], etc. Notably, the most relevant studies to our work are [29], [38]. These works involve generating realistic X-ray angiographies from simulated images derived from projections of 3D vessel models. Our research extends these applications by focusing on the generation of vessels in non-contrast X-ray images, presenting a more complex challenge.

II-C Disentanglement representation learning

Disentanglement representation learning is gaining traction for its potential to increase the controllability and interpretability of generated images in image-to-image translation tasks [39], [40]. Many works attempt to disentangle images into content and style representations to achieve image translation through swapping style representations [41], [42], [43]. However, these representations are abstract concepts, as they cannot be directly visualized within the image space. Some other works have opted to disentangle images into components with physical significance [44], [45], such as separating scenes from elements like raindrops, occlusions, and fog [45]. Inspired by these efforts, our work seeks to disentangle X-ray angiographies into background and vessel components and construct the one-to-one mappings of these two components via neural networks.

III Methodology

The overall architecture of our method is depicted in Fig. 2. CAS-GAN is designed to learn an unpaired image-to-image translation function, , that maps non-contrast X-ray images to X-ray angiographies . To address the inherent challenges of this under-constrained mapping, we adopt a cycle-consistency approach similar to CycleGAN [10], incorporating an inverse mapping, , to couple with the forward translation. Distinct from conventional image-to-image translation models that primarily focus on style mappings between domains, CAS-GAN enhances the process by disentangling backgrounds and vessels from X-ray angiographies. This disentanglement allows us to employ neural networks to explicitly learn the interrelationships between these two distinct components (Section III-A). Considering the complex morphology of vessels, which are slender and highly branched [46], we further propose a vessel semantic-guided generator (Section III-B) and a vessel semantic-guided adversarial loss (Section III-C) to enhance the authenticity of generated images.

III-A Disentanglement representation learning

In a specific X-ray angiography , it can be conceptualized as a composite of a background (i.e., non-contrast X-ray image ) and vessel components, consistent with its imaging principles [47]. Physicians can infer vessel morphology from prior anatomical details observed in the backgrounds before injecting contrast agents. Based on this observation, our approach aims to disentangle X-ray angiographies into background and vessel components, subsequently learning a predictor to establish interrelationships between these components. Specifically, we execute this disentanglement in the latent space using two specialized encoders: a background encoder and a vessel encoder . Note that we assume the background components of and share the same latent space according to the above analysis. The encodings are formulated as follows:

| (1) | |||

| (2) |

where , , and are latent space representations. , , and denote the channel number, height, and width of X-ray images ( and ), and indicates the downsampling ratio of the encoders ( in default). Given the absence of in the forward cycle depicted in Fig. 2, we utilize the paired to train the predictor . With the powerful generalization ability of the predictor, the vessel representations of can be easily derived:

| (3) |

III-B Vessel semantic-guided generator

For the backward cycle, we employ a single generator to generate non-contrast X-ray images directly, because this task is comparatively simpler than the forward cycle. The mathematical formulation for the backward cycle is presented below:

| (4) |

The single generator approach is insufficient for the forward cycle, as it cannot discern the most discriminative features of the vessels. Drawing inspiration from attention mechanisms, we introduce a vessel semantic-guided generation to address this limitation. Rather than direct image generation, we deploy two specialized generators: for attention masks and for context masks . We employ Sigmoid to replace Tanh as the activation function in .

| (5) | |||

| (6) |

Finally, the corresponding attention masks , context masks , and non-contrast X-ray images are fused to synthesize X-ray angiographies.

| (7) |

III-C Vessel semantic-guided loss

The adversarial loss is fundamental in training generative adversarial networks (GANs). However, when two image domains are visually similar, traditional adversarial losses applied directly in the image space may not be sufficiently sensitive. For instance, the primary distinction between non-contrast X-ray images and X-ray angiographies often lies in vascular details, which may not always be distinctly opacified, rendering these images visually akin to one another. To address this, we introduce a vessel semantic-guided adversarial loss, focusing the adversarial training on semantic differences highlighted by vascular information.

Specifically, we employ a pre-trained U-Net [48] to extract vessel semantic images from both the original and the generated angiographies:

| (8) | |||

| (9) |

The vessel semantic-guided adversarial loss is then defined as follows:

| (10) |

This loss function specifically targets the semantic representations of vessels, thereby enhancing the GAN’s ability to discriminate between real and generated images based on vascular features, rather than general imaging characteristics.

III-D Training objective

Due to the highly under-constrained nature of the mappings between the two image domains, incorporating a variety of effective loss functions is crucial for training CAS-GAN.

III-D1 Prediction loss

We employ the mean square error to train the predictor, enabling it to learn mappings from background to vessel representations:

| (11) |

III-D2 Adversarial loss

III-D3 Cycle-consistency loss

Following CycleGAN [10], we implement cycle-consistency loss both in the image and latent spaces to ensure the integrity of the transformations:

| (14) |

| (15) | |||

where and are cycle translation results of and , respectively.

III-D4 Reconstruction loss

Given our use of autoencoder structures within CAS-GAN, the model aims to faithfully reconstruct images post-encoding and decoding:

| (16) |

where and are reconstruction results of and , respectively.

The training objective for CAS-GAN is a weighted sum of the above loss functions, tailored to optimize each aspect of the image translation process:

| (17) |

where , , , , and are hyperparameters.

IV Experimental setup

IV-A Dataset

IV-A1 XCAD [16]

Our experiments utilize the X-ray angiography coronary vessel segmentation dataset (XCAD), which is sourced from a General Electric Innova IGS 520 system. XCAD consists of two distinct subsets: i) The first subset includes 1,621 non-contrast X-ray images and 1,621 X-ray angiographies. These images are used for the image translation task; ii) The second subset comprises 126 X-ray angiographies with pixel-wise vessel annotations. This subset is employed to train U-Net [48] for extracting vessel semantic information. Note that non-contrast X-ray images and X-ray angiographies are not paired, so we serve this problem as an unpaired image translation task. For our experimental setup, we randomly select 621 non-contrast X-ray images and 621 X-ray angiographies as the testing set. The remaining 1,000 non-contrast X-ray images and 1,000 X-ray angiographies are designated as the training set.

IV-B Implementation details

We adopt the same generator and discriminator architectures in CycleGAN [10] for fair comparisons. The predictor within our model is implemented using a multi-layer perceptron (MLP) with a configuration of , where is set to in default. The whole framework is implemented based on PyTorch 2.1.1 [49], Python 3.8.18, and Ubuntu 20.04.6 with an NVIDIA GeForce RTX 4090 GPU. We set hyperparameters of CAS-GAN consistent with CycleGAN [11]. The input image size is set to . Adam optimizer [50] is adopted to train our model for 1,000 epochs. The initial learning rate is set to and is linearly reduced to zero after 700 epochs. Hyperparameters , , , , and are set to , , , , and .

IV-C Evaluation metrics

To evaluate the performance of CAS-GAN and other state-of-the-art methods, two popular utilized metrics in medical image translation are selected:

IV-C1 Fréchet Inception Distance (FID) [51]

The Fréchet Inception Distance (FID) measures similarities between two sets of images based on features extracted by Inception v3 [52] pre-trained on ImageNet [53]. To enhance the feature extraction capability of Inception v3 for X-ray angiographies, we fine-tuned it on our training dataset.

| Method | FID | MMD () |

|---|---|---|

| CycleGAN [11] [ICCV’17] | ||

| UNIT [12] [NeurIPS’17] | ||

| MUNIT [41] [ECCV’18] | ||

| CUT [32] [ECCV’20] | ||

| AttentionGAN [54] [TNNLS’21] | ||

| QS-Attn [34] [CVPR’22] | ||

| StegoGAN [55] [CVPR’24] | ||

| CAS-GAN [Ours] |

IV-C2 Maximum Mean Discrepancy (MMD) [56]

The Maximum Mean Discrepancy (MMD) measures statistical differences between feature distributions of real and generated images. Specifically, image features are extracted by Inception v3.

V Results

V-A Comparisons with state-of-the-arts

To demonstrate the effectiveness of CAS-GAN in X-ray angiography synthesis task, we compared it against several leading unpaired image-to-image translation methods.

Quantitative results on the XCAD dataset are presented in Table I. As seen, our CAS-GAN outperforms all baselines on FID and MMD. Compared with other methods, CAS-GAN and AttentionGAN which utilize attention-guided generation can produce more realistic results, reflecting higher FID. However, due to the integration of disentanglement representations and the vessel semantic-guided loss, CAS-GAN achieves superior performance compared to AttentionGAN on both FID and MMD.

| Index | DRL | VSGG | VSGL | FID | |

|---|---|---|---|---|---|

| 1 | ✗ | ✗ | ✗ | ||

| 2 | ✗ | ✗ | ✓ | ||

| 3 | ✗ | ✓ | ✗ | ||

| 4 | ✗ | ✓ | ✓ | ||

| 5 | ✓ | ✗ | ✗ | ||

| 6 | ✓ | ✗ | ✓ | ||

| 7 | ✓ | ✓ | ✗ | ||

| 8 | ✓ | ✓ | ✓ |

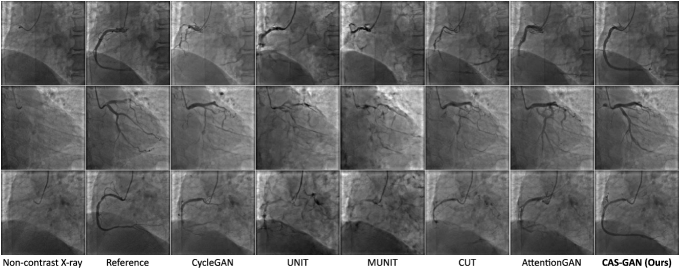

Fig. 3 showcases qualitative results generated by several baselines and our CAS-GAN. Reference images are real X-ray angiographies obtained by injecting contrast agents111Since reference and non-contrast X-ray images are captured at different moments in the cardiac cycle, quantitative metrics are not applicable for measuring their similarities.. As seen, baselines frequently exhibit significant entanglement of backgrounds and vessels, leading to highly unrealistic outputs. This issue is particularly evident in the first and third rows of Figure 3, where there is a total loss of structural consistency in vessels. Moreover, baselines fail to infer vessel bifurcations based on background prior. For example, in the second row of Figure 3, all baseline models cannot generate a crucial bifurcation at the position indicated by the catheter. In contrast, CAS-GAN can effectively disentangle and understand the complex interrelationships between backgrounds and vessels. This capability allows it to maintain structural integrity and precisely generate vessel bifurcations, markedly outperforming the baselines.

V-B Ablation studies

Extensive ablation experiments are conducted to verify the efficacy of several designs in CAS-GAN. Default configurations are highlighted in gray. Quantitative results are detailed in Table II. Our main observations are as follows: i) Learning disentanglement representations facilitates the model’s ability to synthesize accurate and realistic vessels based on the given backgrounds, thus leading to FID improvements; ii) Vessel semantic-guided generator is crucial as it directs the model’s focus toward target vessel regions while preserving backgrounds of the original images; iii) Vessel semantic-guided loss can further refine the realism of the generated X-ray angiographies. However, it is observed that integrating VSGL into a baseline model (i.e., Index 1) deteriorates performance. This suggests that VSGL requires the foundational enhancements provided by DRL and VSGG to be effective. Without them, the model struggles to generate high-quality vessels, and VSGL may exacerbate this by trying to force realism in poorly generated images.

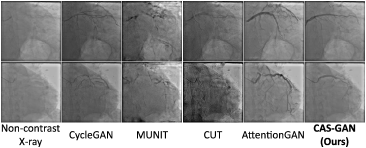

V-C External validation

We also conduct external validations to verify the generalization performance of different models. The data is derived from a collaborating hospital, consisting of 11 X-ray sequences from different patients. From each sequence, we extract two frames (before and after injecting contrast agents) to conduct experiments. Note that we directly evaluate models trained on the XCAD dataset without fine-tuning. Fig. 4 presents several qualitative results.

Despite all methods struggling with the significant variance between the XCAD and external datasets, CAS-GAN showcased relatively superior performance. The specialized design of our model, particularly its disentanglement approach, contributed to its better handling of the dataset’s challenges. In contrast, models like CycleGAN [11], MUNIT [41], and CUT [32] altered the image appearances significantly, while AttentionGAN [54] tended to generate unrealistic vessel structures, entangling with backgrounds. We anticipate that enhancing the dataset size and diversity will significantly improve model performance on external datasets and we will develop a more comprehensive dataset in future works.

VI Conclusion

This paper introduces CAS-GAN, a novel unpaired image-to-image translation model for contrast-free X-ray angiography synthesis. By effectively disentangling X-ray angiographies into background and vessel components within the latent space, CAS-GAN can effectively learn the mappings between these components. Moreover, the detailed designed generator and loss function based on vessel semantic guidance can further boost the realism of generated images. Comparative results with other state-of-the-art methods have demonstrated the superior performance of CAS-GAN. Our study offers a promising approach to reducing the reliance on iodinated contrast agents during interventional procedures. In future works, we aim to improve CAS-GAN’s capabilities by expanding the training dataset and incorporating more advanced models.

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under 2023YFC2415100, in part by the National Natural Science Foundation of China under Grant 62222316, Grant 62373351, Grant 82327801, Grant 62073325, Grant 62303463, in part by the Chinese Academy of Sciences Project for Young Scientists in Basic Research under Grant No.YSBR-104 and in part by China Postdoctoral Science Foundation under Grant 2024M763535.

References

- [1] G. A. Roth, G. A. Mensah, and V. Fuster, “The global burden of cardiovascular diseases and risks: A compass for global action,” J. Am. Coll. Cardiol., vol. 76, no. 25, pp. 2980–2981, 2020.

- [2] R. A. Chandra, F. K. Keane, F. E. Voncken, and C. R. Thomas, “Contemporary radiotherapy: Present and future,” Lancet, vol. 398, no. 10295, pp. 171–184, 2021.

- [3] M. G. Wagner et al., “Real-time respiratory motion compensated roadmaps for hepatic arterial interventions,” Med. Phys., vol. 48, no. 10, pp. 5661–5673, 2021.

- [4] M. S. D’Souza, R. Miguel, and S. D. Ray, “Radiological contrast agents and radiopharmaceuticals,” Side Eff. Drugs Annu., vol. 42, pp. 459–471, 2020.

- [5] O. Clement et al., “Immediate hypersensitivity to contrast agents: The French 5-year CIRTACI study,” Lancet Discov. Sci., vol. 1, pp. 51–61, 2018.

- [6] M. Fähling, E. Seeliger, A. Patzak, and P. B. Persson, “Understanding and preventing contrast-induced acute kidney injury,” Nat. Rev. Nephrol., vol. 13, no. 3, pp. 169–180, 2017.

- [7] M. Yin et al., “Precisely translating computed tomography diagnosis accuracy into therapeutic intervention by a carbon-iodine conjugated polymer,” Nat. Commun., vol. 13, no. 1, p. 2625, 2022.

- [8] J. Lin, Z. Chen, Y. Xia, S. Liu, T. Qin, and J. Luo, “Exploring explicit domain supervision for latent space disentanglement in unpaired image-to-image translation,” EEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 4, pp. 1254–1266, 2019.

- [9] C.-C. Fan et al., “TR-GAN: Multi-session future MRI prediction with temporal recurrent generative adversarial network,” IEEE Trans. Med. Imaging, vol. 41, no. 8, pp. 1925–1937, 2022.

- [10] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in Proc. CVPR, 2017, pp. 1125–1134.

- [11] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proc. ICCV, 2017, pp. 2223–2232.

- [12] M.-Y. Liu, T. Breuel, and J. Kautz, “Unsupervised image-to-image translation networks,” in Proc. NeurIPS, vol. 30, 2017.

- [13] Z. Yi, H. Zhang, P. Tan, and M. Gong, “DualGAN: Unsupervised dual learning for image-to-image translation,” in Proc. ICCV, 2017, pp. 2849–2857.

- [14] N. Moriakov, J. Adler, and J. Teuwen, “Kernel of CycleGAN as a principle homogeneous space,” in Proc. ICLR, 2020.

- [15] L. Kong, C. Lian, D. Huang, Z. Li, Y. Hu, and Q. Zhou, “Breaking the dilemma of medical image-to-image translation,” in Proc. NeurIPS, vol. 34, 2021, pp. 1964–1978.

- [16] Y. Ma et al., “Self-supervised vessel segmentation via adversarial learning,” in Proc. ICCV, 2021, pp. 7536–7545.

- [17] I. Goodfellow et al., “Generative adversarial nets,” in Proc. NeurIPS, vol. 27, 2014.

- [18] K. Guo et al., “MedGAN: An adaptive GAN approach for medical image generation,” Comput. Biol. Med., vol. 163, p. 107119, 2023.

- [19] J. Park, S. Son, and K. M. Lee, “Content-aware local gan for photo-realistic super-resolution,” in Proc. ICCV, 2023, pp. 10 585–10 594.

- [20] N.-T. Tran, V.-H. Tran, N.-B. Nguyen, T.-K. Nguyen, and N.-M. Cheung, “On data augmentation for GAN training,” IEEE Trans. Image Process., vol. 30, pp. 1882–1897, 2021.

- [21] N. Kodali, J. Abernethy, J. Hays, and Z. Kira, “On convergence and stability of GANs,” arXiv:1705.07215, 2017.

- [22] N.-T. Tran, T.-A. Bui, and N.-M. Cheung, “Dist-GAN: An improved GAN using distance constraints,” in Proc. ECCV, 2018, pp. 370–385.

- [23] D. Bang and H. Shim, “MGGAN: Solving mode collapse using manifold-guided training,” in Proc. ICCV, 2021, pp. 2347–2356.

- [24] T. Salimans, I. Goodfellow, W. Zaremba, V. Cheung, A. Radford, and X. Chen, “Improved techniques for training GANs,” in Proc. NeurIPS, vol. 29, 2016.

- [25] M. Arjovsky, S. Chintala, and L. Bottou, “Wasserstein generative adversarial networks,” in Proc. ICML, 2017, pp. 214–223.

- [26] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. Paul Smolley, “Least squares generative adversarial networks,” in Proc. ICCV, 2017, pp. 2794–2802.

- [27] Y. Choi, Y. Uh, J. Yoo, and J.-W. Ha, “StarGAN v2: Diverse image synthesis for multiple domains,” in Proc. CVPR, 2020, pp. 8188–8197.

- [28] E. Richardson et al., “Encoding in style: A StyleGAN encoder for image-to-image translation,” in Proc. CVPR, 2021, pp. 2287–2296.

- [29] R. Martin, P. Segars, E. Samei, J. Miró, and L. Duong, “Unsupervised synthesis of realistic coronary artery X-ray angiogram,” Int. J. Comput. Assisted Radiol. Surg., vol. 18, no. 12, pp. 2329–2338, 2023.

- [30] K. Armanious, C. Jiang, S. Abdulatif, T. Küstner, S. Gatidis, and B. Yang, “Unsupervised medical image translation using cycle-MedGAN,” in Proc. EUSIPCO, 2019.

- [31] T. Zhou, P. Krahenbuhl, M. Aubry, Q. Huang, and A. A. Efros, “Learning dense correspondence via 3D-guided cycle consistency,” in Proc. CVPR, 2016, pp. 117–126.

- [32] T. Park, A. A. Efros, R. Zhang, and J.-Y. Zhu, “Contrastive learning for unpaired image-to-image translation,” in Proc. ECCV, 2020, pp. 319–345.

- [33] W. Wang, W. Zhou, J. Bao, D. Chen, and H. Li, “Instance-wise hard negative example generation for contrastive learning in unpaired image-to-image translation,” in Proc. ICCV, 2021, pp. 14 020–14 029.

- [34] X. Hu, X. Zhou, Q. Huang, Z. Shi, L. Sun, and Q. Li, “QS-Attn: Query-selected attention for contrastive learning in I2I translation,” in Proc. CVPR, 2022, pp. 18 291–18 300.

- [35] J. Lyu et al., “Generative adversarial network–based noncontrast CT angiography for aorta and carotid arteries,” Radiol., vol. 309, no. 2, p. e230681, 2023.

- [36] V. Kearney et al., “Attention-aware discrimination for MR-to-CT image translation using cycle-consistent generative adversarial networks,” Radiol.: Artif. Intell., vol. 2, no. 2, p. e190027, 2020.

- [37] K. Armanious et al., “MedGAN: Medical image translation using GANs,” Comput. Med. Imaging Graphics, vol. 79, p. 101684, 2020.

- [38] O. Tmenova, R. Martin, and L. Duong, “CycleGAN for style transfer in X-ray angiography,” Int. J. Comput. Assisted Radiol. Surg., vol. 14, pp. 1785–1794, 2019.

- [39] X. Chen, Y. Duan, R. Houthooft, J. Schulman, I. Sutskever, and P. Abbeel, “InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets,” in Proc. NeurIPS, vol. 29, 2016.

- [40] I. Higgins et al., “-VAE: Learning basic visual concepts with a constrained variational framework,” in Proc. ICLR, vol. 3, 2017.

- [41] X. Huang, M.-Y. Liu, S. Belongie, and J. Kautz, “Multimodal unsupervised image-to-image translation,” in Proc. ECCV, 2018, pp. 172–189.

- [42] T. Park et al., “Swapping autoencoder for deep image manipulation,” in Proc. NeurIPS, vol. 33, 2020, pp. 7198–7211.

- [43] Y. Zhang, C. Li, Z. Dai, L. Zhong, X. Wang, and W. Yang, “Breath-hold CBCT-guided CBCT-to-CT synthesis via multimodal unsupervised rrepresentation disentanglement learning,” IEEE Trans. Med. Imaging, vol. 42, no. 8, pp. 2313–2324, 2023.

- [44] X. Chen et al., “Unpaired deep image dehazing using contrastive disentanglement learning,” in Proc. ECCV, 2022, pp. 632–648.

- [45] F. Pizzati, P. Cerri, and R. de Charette, “Physics-informed guided disentanglement in generative networks,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 45, no. 8, pp. 10 300–10 316, 2023.

- [46] D.-X. Huang et al., “SPIRONet: Spatial-frequency learning and topological channel interaction network for vessel segmentation,” arXiv:2406.19749, 2024.

- [47] D. P. Harrington, L. M. Boxt, and P. D. Murray, “Digital subtraction angiography: Overview of technical principles,” Am. J. Roentgenol., vol. 139, no. 4, pp. 781–786, 1982.

- [48] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in Proc. MICCAI, 2015, pp. 234–241.

- [49] A. Paszke et al., “PyTorch: An imperative style, high-performance deep learning library,” in Proc. NeurIPS, vol. 32, 2019.

- [50] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in Proc. ICLR, 2014.

- [51] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs trained by a two time-scale update rule converge to a local Nash equilibrium,” in Proc. NeurIPS, vol. 30, 2017.

- [52] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the Inception architecture for computer vision,” in Proc. CVPR, 2016, pp. 2818–2826.

- [53] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” in Proc. CVPR, 2009, pp. 248–255.

- [54] H. Tang, H. Liu, D. Xu, P. H. Torr, and N. Sebe, “AttentionGAN: Unpaired image-to-image translation using attention-guided generative adversarial networks,” IEEE Trans. Neural Networks Learn. Syst., vol. 34, no. 4, pp. 1972–1987, 2021.

- [55] S. Wu et al., “StegoGAN: Leveraging steganography for non-bijective image-to-image translation,” in Proc. CVPR, 2024, pp. 7922–7931.

- [56] A. Gretton, K. M. Borgwardt, M. J. Rasch, B. Schölkopf, and A. Smola, “A kernel two-sample test,” J. Mach. Learn. Res., vol. 13, no. 1, pp. 723–773, 2012.