CASSOD-Net: Cascaded and Separable Structures of Dilated Convolution for Embedded Vision Systems and Applications

Abstract

The field of view (FOV) of convolutional neural networks is highly related to the accuracy of inference. Dilated convolutions are known as an effective solution to the problems which require large FOVs. However, for general-purpose hardware or dedicated hardware, it usually takes extra time to handle dilated convolutions compared with standard convolutions. In this paper, we propose a network module, Cascaded and Separable Structure of Dilated (CASSOD) Convolution, and a special hardware system to handle the CASSOD networks efficiently. A CASSOD-Net includes multiple cascaded dilated filters, which can be used to replace the traditional dilated filters without decreasing the accuracy of inference. Two example applications, face detection and image segmentation, are tested with dilated convolutions and the proposed CASSOD modules. The new network for face detection achieves higher accuracy than the previous work with only 47% of filter weights in the dilated convolution layers of the context module. Moreover, the proposed hardware system can accelerate the computations of dilated convolutions, and it is 2.78 times faster than traditional hardware systems when the filter size is .

1 Introduction

Dilated convolutions in Convolutional Neural Networks (CNNs) can be applied to different kinds of applications, including audio processing [6], crowd counting [9], semantic image segmentation [3, 14, 18, 20], image classification [7], image super-resolution [11], road extraction [22], image denoising [17], face detection [8], object detection [10], and so on. For many vision applications, the receptive field, or the field of view (FOV) [12] of convolutional neural networks is highly related to the accuracy of inference. Large FOV can be obtained by increasing the filter size or by increasing the number of convolution layers. However, the computational time might also increase because the computational cost is proportional to the filter size and the number of layers. Dilated convolutions are known as an effective method to enlarge the FOV of a network without increasing the computational costs. By adjusting the dilation rate , a filter can be enlarged to a filter with Multiply-Accumulate (MAC) operations.

Deng et al. propose a context module, which is inspired by the SSH face detector [16], to increase the FOV [2]. Wu et al. propose a Joint Pyramid Up-sampling (JPU) module and formulate the task of extracting high-resolution feature maps into a joint up-sampling problem [20]. In the JPU structure, the generated feature maps are up-sampled and concatenated, and 4 separable convolutions with different dilation rates () are included in the same convolution layer. Hamaguchi et al. propose a segmentation model, which includes a front-end module, a local feature extraction (LFE) module, and a head module [3]. The dilation rate in the front-end module is gradually increased to extract the features from a large range, and the dilation rate in the subsequent LFE module is gradually decreased to aggregate local features generated by the front-end module. The above mentioned network models are designed for applications related to face detection and image segmentation. There are still many kinds of network architectures containing dilated convolution layers, which are used to improve the accuracy of other applications.

In order to implement dilated convolutions on mobile devices and embedded system platforms, it is necessary to reduce the memory size and the computational costs without decreasing the accuracy. For some hardware devices, including GPUs, convolution operations are only optimized for standard convolutions. Even though the dilated filters require only MAC operations, the overhead to skip the pixels which do not engage in the process of convolutions is not always zero. For some dedicated hardware systems based on systolic arrays, when the dilation rate is high, the memory footprint might increase since the addresses of the pixels to be processed are not consecutive.

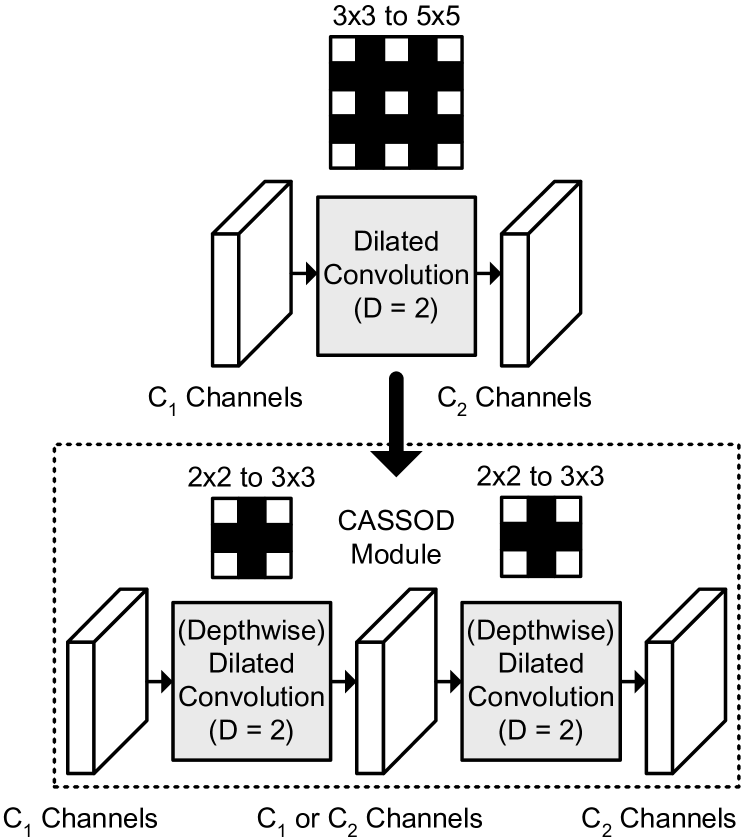

In this paper, we propose a new network module, Cascaded and Separable Structure of Dilated (CASSOD) Convolutions, to approximate the dilated convolutions with low memory cost for filter weights and low computational costs for convolutions. The concept of the proposed method is shown in Fig. 1.

2 Proposed Network Module

| Network Structure | No. of Filter Weights |

|---|---|

| Dilated Convolution | |

| CASSOD-A | |

| (1st layer: DW Conv.∗) | |

| CASSOD-C (No DW Conv.∗) | |

| or | |

| Dilated / Depthwise Convolution | |

| CASSOD-D | |

| (1st and 2nd layer: DW Conv.∗) |

∗DW Conv. denotes the depthwise convolutions.

In this section, the dilated convolutions and the proposed CASSOD module are introduced in the following subsections. The cascaded dilated convolution, which can be a separable version of the traditional dilated convolution, is an important component of the CASSOD module. In this paper, the (or ) dilated convolutions refer to the convolutions where the filter size is (or ) before the filters are dilated ().

2.1 Dilated Convolutions with Fewer Filter Weights

In previous works [3, 6, 7, 9, 11, 14, 17, 20, 22], the dilated filters are generated based on filters. A total of 9 filter weights are used to compute the convolution results for one input feature map. The FOV is expanded when the dilation rate is larger than 1. The larger the dilation rate , the larger the FOV.

The dilated filters which are generated based on filters, are proposed in this work. The output result of the proposed dilated filters is calculated based on the following equation.

| (1) |

where is the output feature map, is the -th input feature map, and is the filter weight for the -th input feature map. The parameters represent the index of the pixels in the feature maps, and the ranges of both and depend on the size of feature maps. The parameter denotes the number of channels. There are only 4 filter weights for 1 input feature map and the ranges of both and are .

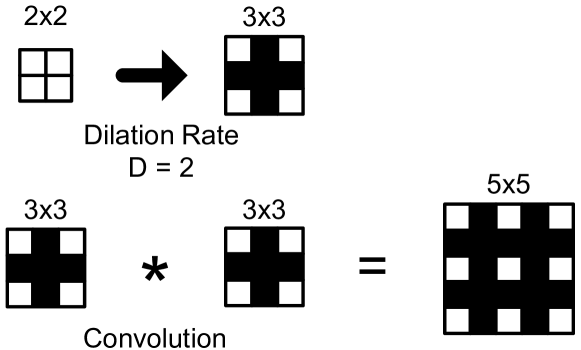

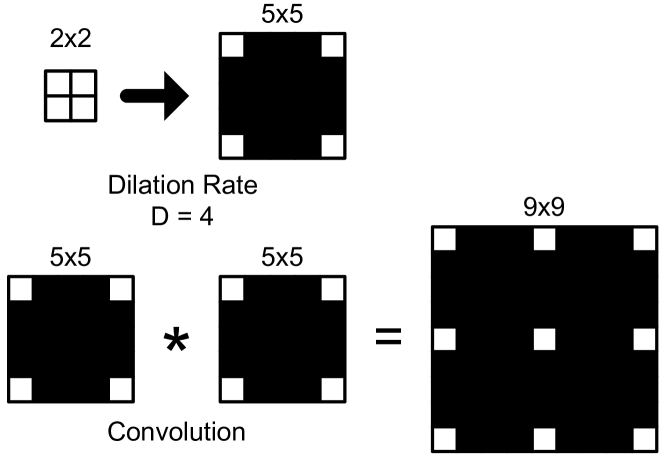

Two examples of the filters and dilated convolutions are shown in Fig. 2. Fig. 2(a) shows an example where the dilation rate is . Two cascaded dilated convolutions where the dilation rate is can be an approximation to a dilated convolution with the same dilation rate since the positions of zero weights are exactly the same. Similarly, as shown in Fig. 2(b), two cascaded dilated convolutions with the dilation rate can be an approximation to a dilated convolution with the same dilation rate. The dilation rate is always set to a multiple of 2.

(a)

(b)

2.2 Cascaded and Separable Structure of Dilated (CASSOD) Convolution

The concept of the proposed CASSOD module is shown in Fig. 1. The upper part of the figure shows an example of dilated convolutions [19] where the dilation rate is . There are channels in the input feature maps, and channels in the output feature maps. The number of filter weights of the dilated convolutions is .

The lower part of the figure shows an example of the proposed CASSOD module where the dilation rate is . There are two convolution layers in this module and 3 sets of feature maps. Either of the first convolution layer or the second convolution layer can be a depthwise convolution [5] layer. The variations (CASSOD-A,C,D) are shown in Table 1.

In the CASSOD-A module, there are , , and channels in the first, the second, and the third set of feature maps, respectively. The numbers of channels in the first and the second set of feature maps are the same because the first convolution layer includes depthwise separable operations. The first convolution layer includes a series of depthwise and dilated filters, which are different from the traditional dilated filters. The second convolution layers includes a series of dilated filters. The number of filter weights of the dilated convolutions is . Table 1 also shows the comparison of the number of filter weights. It can be observed that, when is large, the number of filter weights in the CASSOD-A module is close to (4/9) of the number of filter weights in the traditional dilated convolution.

In the CASSOD-C module, there are , (or ), and channels in the first, the second, and the third set of feature maps, respectively. Both convolution layers include a series of dilated filters. The number of filter weights of the dilated convolutions is or . It can be observed that, when is equal to , the number of filter weights of the CASSOD-C module is close to (8/9) of the number of filter weights in the traditional dilated convolution.

The CASSOD-A,C modules have lower computational costs than the traditional dilated convolutions. The number of filter weights is also proportional to the computational cost, which is equal to the number of MAC operations. Compared with the traditional dilated convolutions, 1 additional convolution layer is required to implement the CASSOD modules. The batch normalization operations and the activation functions (e.g. ReLU) can be included in the additional convolution layer if necessary. The CASSOD-A,C modules are alternatives to the dilated convolutions, and the CASSOD-D module is an alternative to the depthwise and dilated convolutions. The number of filter weights of the CASSOD-D module is close to (8/9) of the depthwise and dilated convolutions.

3 Proposed Hardware Architecture

The analysis of computational time of dilated convolutions and the proposed hardware architecture are shown in the following subsections.

3.1 Computational Time of Dilated Convolutions

Table 2 shows an example of the computational time of dilated convolutions when the size of input image is pixels. A network with 3 layers is used to measure the computational time of CPU, and a network with 10 layers is used to measure the computational time of GPU. There are 64 input channels and 64 output channels in each layer of the networks, and the dilation rates of the convolution layers are set to the same value.

The results show that the processing time does not change much when the dilation rate is larger than 1 for either CPU or GPU. The computational time of standard convolutions, where the dilation rate is set to 1, is the shortest, and the reason can be that the framework (e.g. CUDA library) includes some optimized operations to accelerate the computations of standard convolutions. The speed of dilated convolutions may depend on the performance of system platforms, but the dilated convolutions with a dilation rate larger than 1 cannot necessarily achieve the same level of speed as standard convolutions with a dilation rate of 1. The goal of this work is to design a hardware system which can handle both dilated convolutions and standard convolutions efficiently.

| Dilation Rate | Processing Time (ms) | |||

|---|---|---|---|---|

| Convolutions | Depthwise Conv. | |||

| CPU∗ | GPU∗∗ | CPU∗ | GPU∗∗ | |

| 39.53 | 6.53 | 21.57 | 11.81 | |

| 862.56 | 19.12 | 861.99 | 11.83 | |

| 868.83 | 19.17 | 865.09 | 11.79 | |

| 854.23 | 19.21 | 862.95 | 11.78 | |

| 852.42 | 19.23 | 871.42 | 11.78 | |

∗The CPU is Intel Xeon E5-2640 v4 (2.40 GHz) and the memory size is 256 GB.

∗∗The GPU is TITAN Xp (12 GB), and the version of cuDNN is 7.6.5.

For hardware implementation, the pixel values in the feature maps are usually stored in a shift register array. One solution to handle dilated convolutions using a shift register array is to add zeros to the filter weights and compute the results of standard convolutions. To handle a dilated filter where the dilation rate is , it is necessary to compute the products of 25 filter weights and 25 input pixels. A total of 16 filter weights equal to zero can be skipped. The computational time is proportional to the size of dilated filters, and the efficiency of filter processing is relatively low compared with CPU and GPU.

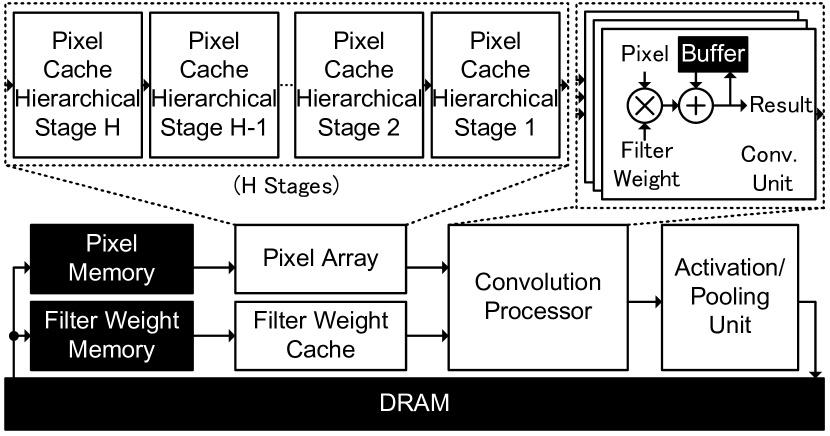

To solve this problem, a new hardware architecture is proposed to speed up dilated convolutions. An example of the architecture of the proposed hardware system is shown in Fig. 3, which includes 6 modules and DRAM. The filter weights stored in the “Filter Weight Memory” are sent to the “Filter Weight Cache,” and the feature maps of the current convolution layer stored in the “Pixel Memory” are sent to the “Pixel Array.” The filter weights and the pixels of feature maps are sent to the “Convolution Processor” to compute the convolution results, and the convolution results are sent to the “Activation and Pooling Unit” to generate the feature maps for the next convolution layer. The “Pixel Array” includes multiple hierarchical stages of pixel caches, which can generate the input pixels for dilated convolutions with a different dilation rate .

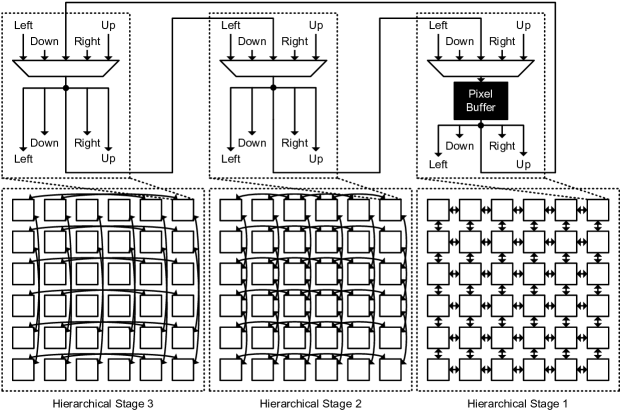

3.2 Pixel Array for Dilated Convolutions

The “Pixel Array,” including multiple hierarchical stages, can handle dilated convolutions with different dilation rates efficiently. The proposed hardware architecture can be implemented in a Field-Programmable Gate Array (FPGA) or an Application-Specific Integrated Circuit (ASIC). Fig. 4 shows an example of the architecture and the interconnections of the proposed shift register array, where there are 3 hierarchical stages. In this example, there are pixel buffers (or selectors) in each stage, and each pixel buffer (or selector) is connected to 4 other pixel buffers (or selectors) in the up, right, down, and left directions. It means that the pixel stored in the pixel buffer can be transferred to one of the 4 connected pixel buffers (or selectors). In the first hierarchical stage, the input of a pixel buffer is connected to the input of its neighboring pixel buffer and the pixel selector with the same position in the second stage. The difference of the shifted index and the original index of the pixel, , is 0 or 1 (). Similarly, in the second hierarchical stage, the difference of the shifted index and the original index of the pixel, , is 0 or 2 (), and in the third hierarchical stage, the difference of the shifted index and the original index of the pixel, , is 0 or 4 ().

The supported dilation rate, which is equal to the total difference of the shifted index and the original index of the pixel in all hierarchical stages (, , , … , ) is shown in the following equation.

| (2) |

where is the total number of the hierarchical stages. In this example, is equal to 3, but can be set to any number to support dilation rates () according to the network architectures. When the gate count of each stage is the same, the total hardware cost is proportional to the number of hierarchical stage, .

4 Experimental Results

The experimental results contain 3 parts. The first part is the comparison of accuracy of face detection. The second part is the comparison of accuracy of image segmentation. The third part is the analysis of the performance of the proposed hardware architecture.

4.1 Accuracy of Face Detection

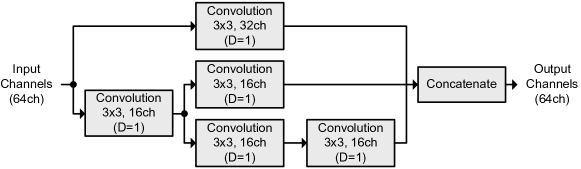

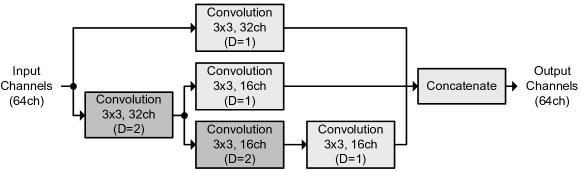

The proposed network architecture, CASSOD-Net, is evaluated based on the RetinaFace [2], which is designed for face detection tasks. The context module, inspired by the SSH face detector [16], is used to increase the FOV and enhance the rigid context modeling power. To compare the accuracy, the convolution layers in the context modules is replaced by the Feature Enhance Module (FEM), which includes dilated convolution structures, in the Dual Shot Face Detector (DSFD) [8]. Then, the dilated convolutions in the DSFD [20] are replaced by the proposed CASSOD modules. The network architecture of the modified context module is shown in Fig. 5. To keep the computational costs at the same level, the number of input channels of the FEM is changed from 256 to 64, and the number of input channels in the remaining layers is reduced by the same ratio. Fig. 5(a) shows the original context module in the RetinaFace, and Fig. 5(b) shows the modified context module with FEM, which includes dilated convolution layers with a dilation rate () of 2.

MobileNet-0.25 [5] is used as the backbone network. The accuracy of face detection is shown in Table 3. After replacing the dilated convolutions with the CASSOD-C and CASSOD-A modules, the accuracy does not decrease. Besides, the proposed CASSOD module can achieve higher accuracy than the previous architecture with batch normalization and activation. The CASSOD-C module has better performance than the CASSOD-A module on the 3 categories (easy, medium, and hard), and the reason can be that the CASSOD-C module has more parameters than the CASSOD-A module. It is shown that the CASSOD module is a good alternative to the original dilated convolutions. The accuracy of the CASSOD-A module shows that the new network achieves higher accuracy than the FEM-based network with only 47% of filter weights in the dilated convolution layers of the context module.

(a)

(b)

| Context Module of RetinaFace [2] | Easy (%) | Medium (%) | Hard (%) | Parameter Size of |

|---|---|---|---|---|

| Dilated Conv. Layers | ||||

| SSH† | 88.72 | 86.97 | 79.19 | (11,520) |

| FEM†† | 88.87 | 86.74 | 80.26 | 23,040 |

| FEM††-CASSOD-C | 89.05 | 87.46 | 81.09 | 15,360 |

| FEM††-CASSOD-C with BN∗ | 89.21 | 87.55 | 81.28 | 15,552 |

| FEM††-CASSOD-C with BN∗ and ReLU∗∗ | 89.12 | 87.62 | 81.16 | 15,552 |

| FEM††-CASSOD-A with BN∗ and ReLU∗∗ | 88.88 | 87.40 | 80.74 | 10,912 |

4.2 Accuracy of Image Segmentation

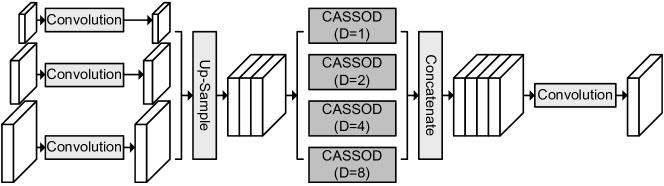

The proposed network architecture, CASSOD-Net, is evaluated based on the FastFCN [20], which is designed for semantic image segmentation tasks. The JPU module, which is included in the FastFCN, combines the up-sampled feature maps by using 4 groups of dilated convolutions with different dilation rates. The JPU modules also contain depthwise convolution layers. It can extract multiple-scale context information and increase the accuracy of image segmentation.

To compare the accuracy, the dilated convolutions in the JPU [20] are replaced by the proposed CASSOD-D modules. The network architecture of the modified JPU is shown in Fig. 6. ResNet-50 [4] is used as a backbone network and trained with Pascal Context [15] and ADE20K datasets [21]. The accuracy of image segmentation is shown in Table 4. The results [20] and our re-implementation results are very close. After replacing the dilated convolutions with the CASSOD modules, the accuracy does not decrease. Besides, the proposed CASSOD module can achieve higher accuracy than the previous architecture with batch normalization and activation. It is shown that the CASSOD module is a good alternative to the original dilated convolutions for image segmentation. Also, the proposed CASSOD modules can be applied to networks with depthwise and dilated convolutions. The computational time of GPU increases after applying the CASSOD modules because an extra layer is added. This problem can be solved by using the proposed hardware architecture.

| Datasets | Networks | pixAcc (%) | mIoU (%) | Speed (fps†) |

| Pascal Context [15] | EncNet + JPU (Table 2, 3 in [20]) | – | 51.20 | 37.56 |

| EncNet + JPU (Re-implementation) | 77.88 | 49.44 | 35.30 | |

| EncNet + JPU-CASSOD-D (Proposed Work) | 79.52 | 52.51 | 34.73 | |

| EncNet + JPU-CASSOD-D with BN and ReLU | 79.67 | 52.72 | 34.57 | |

| (Proposed Work) | ||||

| EncNet + JPU-CASSOD-D with BN | 79.75 | 52.76 | 34.12 | |

| (Proposed Work) | ||||

| ADE20K [21] | EncNet + JPU (Table 2, 4 in [20]) | 80.39 | 42.75 | 37.56 |

| EncNet + JPU (Re-implementation) | 80.04 | 42.09 | 35.30 | |

| EncNet + JPU-CASSOD-D (Proposed Work) | 80.35 | 42.72 | 34.73 | |

| EncNet + JPU-CASSOD-D with BN and ReLU | 80.48 | 42.86 | 34.57 | |

| (Proposed Work) | ||||

| EncNet + JPU-CASSOD-D with BN | 80.42 | 42.78 | 34.12 | |

| (Proposed Work) |

†Fps represents frame per second, which is the unit of processing speed of the GPU, TITAN Xp (12 GB).

4.3 Analysis of Hardware Systems

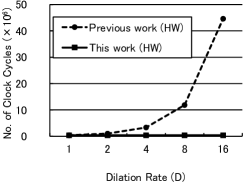

To show the advantages of the proposed hardware system, the hardware architecture and the CASSOD modules are compared with previous works. The previous works refer to the hardware systems [1, 13] which do not accelerate the algorithms with the dilated convolutions shown in Sec. 2. Since the hardware systems of the related works are not available, we re-implement a hardware system on our platforms without using the “Pixel Array” shown in Sec. 3.2 for comparison. The results are shown in Fig. 7.

Fig. 7(a) shows the relation between the computational time and the dilation rate () with filters. The number of cycles is equivalent to the computational time. In previous works, since there are no special hardware architectures to handle dilated convolutions, it is necessary to add zero-values to the filter weights after a filter is dilated. The computational time increases as the dilation rate increases and is roughly proportional to a square of the dilation rate . In the proposed hardware system, the input pixels of dilated convolutions can be adjusted according to the dilation rate and dumped consecutively, and the computational time does not vary with the dilation rate . It can be observed that the proposed hardware system can handle both dilated convolutions and standard convolutions () efficiently. When the dilation rate () of a filter is 2, the proposed hardware system is 2.78 times faster than the previous work.

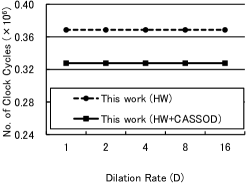

Fig. 7(b) shows the relation between the computational time and the dilation rate (). By replacing the traditional dilated convolutions with the CASSOD module, the computational cost and the parameter size can be reduced even though 1 additional convolution layer is required. In the proposed hardware system, the time to set the parameters for 1 additional layer is relatively small. The results show that the computational time of the proposed hardware system can be further reduced by using the CASSOD module, which achieves similar accuracy as the traditional dilated convolutions.

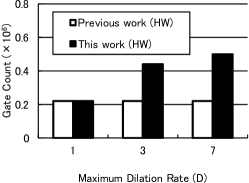

Fig. 7(c) shows the relation between the gate count of the “Pixel Array” and the maximum dilation rate (). It can be observed that the overhead of hardware cost increases as the dilation rate increases, but the difference of hardware cost between the previous work and the proposed work is not proportional to the maximum dilation rate, . The proposed hardware system scales well because it can support dilation rates () with less than times of hardware costs.

|

|

|

| (a) | (b) | (c) |

The supported dilation rates also depend on the filter size and the interface between modules. Table 5 shows the specifications of the proposed hardware architecture. Different from previous works, the proposed hardware system can handle and dilated convolutions efficiently. The maximum supported dilation rate for filters is 6, and the maximum supported dilation rate for filters is 3. By replacing the traditional dilated convolutions with the CASSOD module, an approximated dilated filters with a dilation rate of 6 can be implemented with cascaded filters with a dilation rate of 6. The computational costs and memory footprints can also be reduced. Since the ”Pixel Array” with 3 hierarchical stages only occupies only 21% of the total area, the overhead to support the dilated convolutions is relatively small. A comparison of the processing speed of the proposed system is shown in Table 6. The resolution of the input image is pixels, and the network is RetinaFace [2] with FEM [8], which is shown in Table 3. By using the proposed hardware architecture with the shift register array, 23% of processing time can be reduced. By replacing the dilated filters with the proposed CASSOD modules, 9% of processing time can be further reduced. The result clearly shows the advantages in terms of computational speed.

| Gate Count | Total: 2.4 M |

|---|---|

| (NAND-Gates) | (Pixel Array: 0.5 M) |

| Process | 28-nm CMOS technology |

| Clock Frequency | 400 MHz |

| Supported Filter Size | Maximum |

| Supported Dilation Rate | filter: |

| filter: | |

| No. of Hierarchical Stages | |

| Memory Size | 128 KB |

| Performance | 409.6 GOPS* |

∗GOPS represents giga operations per second. There are 2 operations in 1 MAC operation.

5 Conclusions and Future Work

In this paper, we propose an efficient module, which is called Cascaded and Separable Structure of Dilated (CASSOD) Convolutions, and a special hardware system to handle the CASSOD networks efficiently.

To analyze the accuracy of algorithms, two example applications, face detection and image segmentation, are tested with dilated convolutions and the proposed alternatives. For face detection, the RetinaFace [2] network architecture can achieve the same level of accuracy after replacing the dilated convolutions in the context module with the proposed CASSOD modules. For image segmentation, the FastFCN [20] can achieve the same level of accuracy after replacing the dilated convolutions in the JPU with the proposed CASSOD modules, which also contain depthwise convolutions. It is shown that the CASSOD module is a good alternative to the traditional dilated convolutions for both applications.

The performance of hardware is analyzed in terms of computational time and hardware costs. The input pixels of dilated convolutions can be adjusted according to the dilation rate and dumped consecutively, the computational time does not vary with the dilation rate . By using the proposed hardware architecture with the shift register array, 23% of processing time can be reduced for face detection applications.

The experiments clearly show that both the proposed hardware system and the proposed CASSOD modules have advantages over previous works. For future works, we plan to test the proposed hardware system and the CASSOD modules with other applications.

References

- [1] Yu-Hsin Chen, Joel Emer, and Vivienne Sze. Eyeriss: A spatial architecture for energy-efficient dataflow for convolutional neural networks. In Proceedings of ACM/IEEE International Symposium on Computer Architecture (ISCA), June 2016.

- [2] Jiankang Deng, Jia Guo, Yuxiang Zhou, Jinke Yu, Irene Kotsia, and Stefanos Zafeiriou. RetinaFace: Single-stage dense face localisation in the wild, 2019. CoRR, abs/1905.00641.

- [3] Ryuhei Hamaguchi, Aito Fujita, Keisuke Nemoto, Tomoyuki Imaizumi, and Shuhei Hikosaka. Effective use of dilated convolutions for segmenting small object instances in remote sensing imagery, 2017. CoRR, abs/1709.00179.

- [4] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition, 2015. CoRR, abs/1512.03385.

- [5] Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. MobileNets: Efficient convolutional neural networks for mobile vision applications, 2017. CoRR, abs/1704.04861.

- [6] Shehzeen Hussain, Mojan Javaheripi, Paarth Neekhara, Ryan Kastner, and Farinaz Koushanfar. FastWave: Accelerating autoregressive convolutional neural networks on FPGA. In Proceedings of IEEE/ACM International Conference on Computer-Aided Design (ICCAD), 2019.

- [7] Xinyu Lei, Hongguang Pan, and Xiangdong Huang. A dilated CNN model for image classification. IEEE Access, 7:124087–124095, July 2019.

- [8] Jian Li, Yabiao Wang, Changan Wang, Ying Tai, Jianjun Qian, Jian Yang, Chengjie Wang, Jilin Li, and Feiyue Huang. DSFD: Dual shot face detector, 2018. CoRR, abs/1810.10220.

- [9] Yuhong Li, Xiaofan Zhang, and Deming Chen. CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes, 2018. CoRR, abs/1802.10062.

- [10] Zeming Li, Chao Peng, Gang Yu, Xiangyu Zhang, Yangdong Deng, and Jian Sun. DetNet: A backbone network for object detection, 2018. CoRR, abs/1804.06215.

- [11] Guimin Lin, Qingxiang Wu, Lida Qiu, and Xixian Huang. Image super-resolution using a dilated convolutional neural network. Neurocomputing, 275:1219–1230, 2018.

- [12] Wenjie Luo, Yujia Li, Raquel Urtasun, and Richard Zemel. Understanding the effective receptive field in deep convolutional neural networks, 2017. CoRR, abs/1701.04128.

- [13] Yufei Ma, Naveen Suda, Yu Cao, Sarma Vrudhula, and Jae sun Seo. ALAMO: FPGA acceleration of deep learning algorithms with a modularized RTL compiler. Integration, 62:14–23, 2018.

- [14] Sachin Mehta, Mohammad Rastegari, Anat Caspi, Linda Shapiro, and Hannaneh Hajishirzi. ESPNet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In Proceedings of European Conference on Computer Vision (ECCV), 2018.

- [15] Roozbeh Mottaghi, Xianjie Chen, Xiaobai Liu, Nam-Gyu Cho, Seong-Whan Lee, Sanja Fidler, Raquel Urtasun, and Alan Yuille. The role of context for object detection and semantic segmentation in the wild. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2014.

- [16] Mahyar Najibi, Pouya Samangouei, Rama Chellappa, and Larry Davis. SSH: Single stage headless face detector, 2017. CoRR, abs/1708.03979.

- [17] Chunwei Tian, Yong Xu, Lunke Fei, Junqian Wang, Jie Wen, and Nan Luo. Enhanced CNN for image denoising, 2018. CoRR, abs/1810.11834.

- [18] Zhengyang Wang and Shuiwang Ji. Smoothed dilated convolutions for improved dense prediction, 2018. CoRR, abs/1808.08931.

- [19] Yunchao Wei, Huaxin Xiao, Honghui Shi, Zequn Jie, Jiashi Feng, and Thomas S. Huang. Revisiting dilated convolution: A simple approach for weakly- and semi- supervised semantic segmentation, 2018. CoRR, abs/1805.04574.

- [20] Huikai Wu, Junge Zhang, Kaiqi Huang, Kongming Liang, and Yizhou Yu. FastFCN: Rethinking dilated convolution in the backbone for semantic segmentation, 2019. CoRR, abs/1903.11816.

- [21] Bolei Zhou, Hang Zhao, Xavier Puig, Sanja Fidler, Adela Barriuso, and Antonio Torralba. Scene parsing through ADE20K dataset. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

- [22] Lichen Zhou, Chuang Zhang, and Ming Wu. D-LinkNet: LinkNet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2018.