CatFLW: Cat Facial Landmarks in the Wild Dataset

Abstract

Animal affective computing is a rapidly growing field of research in which the first efforts to go beyond animal tracking and recognize internal states, such as pain and emotions, have emerged only recently. In most mammals, facial expressions are an important channel for communicating information about these states. However, unlike in the human domain, there is an acute lack of datasets that make automated facial analysis of animals feasible.

This paper aims to fill this gap by presenting a dataset called Cat Facial Landmarks in the Wild (CatFLW), which contains 2016 images of cat faces in different environments and conditions, annotated with 48 facial landmarks specifically chosen for their relationship with underlying musculature and their relevance to cat-specific facial Action Units (CatFACS). To the best of our knowledge, this dataset has the largest number of cat facial landmarks available.

In addition, we describe a semi-supervised (human-in-the-loop) method of annotating images with landmarks, used for creating this dataset, which significantly reduces the annotation time and could be used for creating similar datasets for other animals.

The dataset is available on request.

1 Introduction

Emotion recognition based on the analysis of facial expressions and body language is an important and challenging topic within the field of human affective computing. Comprehensive surveys cover the analysis of facial expressions [27] and body behaviors [33], with the recent trend being multi-modal emotion recognition approaches [35]. Automated facial expression analysis is also studied in pain research [2], and is most crucial for addressing pain in infants [40].

In animals, facial expressions are known to be produced by most mammalian species [31, 14]. Analogously to the human domain, they are assumed to convey information about emotional states, and are increasingly studied as potential indicators of subjective states in animal emotion and welfare research [5, 29, 3, 19].

Although research in animal affective computing has so far lagged behind the human domain, this has been changing rapidly in recent years [22]. Broome et al. [7] provide a comprehensive review of more than twenty studies addressing automated recognition of animals’ internal states such as emotions and pain, with the majority of these studies focusing on the analysis of animal faces in different species, such as rodents, dogs, horses and cats.

Cats are of specific interest in the context of pain, as they are one of the most challenging species in terms of pain assessment and management. This is due to a reduced physiological tolerance of, and adverse effects from, common veterinary analgesics [25], a lack of strong consensus over key behavioural pain indicators [30], and human limitations in accurately interpreting feline facial expressions [12]. Three different manual pain assessment scales have been developed and validated in English for domestic cats: the UNESP-Botucatu multidimensional composite pain scale [6], the Glasgow composite measure pain scale (CMPS) [34] and the Feline Grimace Scale (FGS) [16]. The latter was further used in a comparative study in which FGS scores assigned by humans during real-time observations were compared with subsequent FGS scores of the same cats obtained from still images. No significant difference was found between the two scoring methods [15], indicating that facial images can be a reliable medium from which to assess pain.

Finka and colleagues [18] were the first to apply 48 geometric landmarks to identify and quantify facial shape change associated with pain in cats. These landmarks were based on both the anatomy of cat facial musculature and the range of facial expressions generated as a result of facial action units (CatFACS) [8]. The authors manually annotated the landmarks and used statistical methods (principal component analysis, PCA) to establish a relationship between PC scores and a well-validated measure of pain in cats. Feighelstein et al. [17] used the dataset and landmark annotations from [18] to automate pain recognition in cats using machine learning models based on geometric landmarks grouped into multivectors, reaching an accuracy above 72%, which was comparable to an alternative approach using deep learning. This indicates that the 48 landmarks from [18] can be a reliable basis for pain recognition from cat facial images. The main challenge in this method remains the automation of facial landmark detection. In general, one of the most crucial gaps in animal affective computing today, as emphasized in [7], is the lack of publicly available datasets. To the best of our knowledge, all datasets containing animal faces available today have very few facial landmarks, as discussed in the next section.

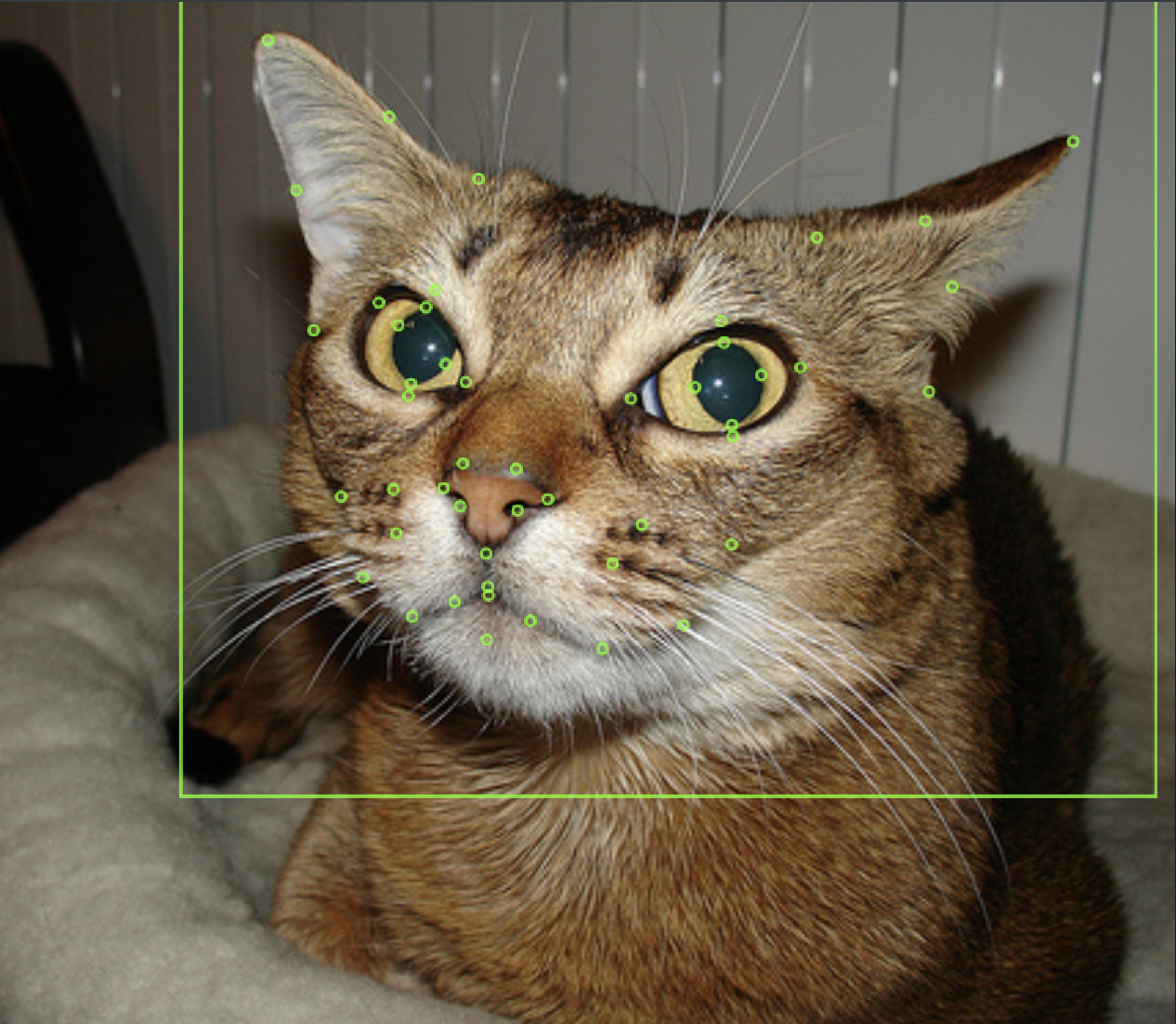

To address this gap for the feline species, where facial analysis can help address acute problems in the context of pain assessment, we present the Cat Facial Landmarks in the Wild (CatFLW) dataset, containing images of cat faces in various conditions and environments with face bounding boxes and 48 facial landmarks of [18, 17] for each image (see Figure 1). We hope that this dataset can serve as a starting point for creating similar datasets for various animals, as well as push forward automatic detection of pain and emotions in cats and other feline species.

Our main contributions are, therefore, the following:

- We present a dataset containing 2016 annotated images of cats with 48 landmarks, which are based on the cat’s facial anatomy and allow the study of complex morphological features of cats’ faces.

- We describe an AI-based ‘human-in-the-loop’ (also known as cooperative machine learning [21]) method of facial landmark annotation, and show that it significantly reduces annotation time per image.

2 Related Datasets

Our dataset is based on the original dataset collected by Zhang et al. [41], which contains 10,000 images of cats annotated with 9 facial landmarks. It includes a wide variety of cat breeds in different conditions, which can provide good generalization when training computer vision models. However, some of its images (10-15% according to our estimates) depict several animals, have visual interference in front of the animal's face, or are cropped. The 9 landmarks labeled for each image in [41] are sufficient for detecting and analyzing general information about animal faces (for example, the tilt of the head or the direction of movement), but not for analyzing complex movements of facial muscles.

| Dataset | Animal | Size (images) | Facial landmarks |
|---|---|---|---|
| Khan et al. [23] | Various | 21900 | 9 |
| Zhang et al. [41] | Cat | 10000 | 9 |
| Liu et al. [28] | Dog | 8351 | 8 |
| Cao et al. [9] | Various | 5517 | 5 |
| Mougeot et al. [32] | Dog | 3148 | 3 |
| Sun et al. [37] | Cat | 1706 | 15 |
| Yang et al. [39] | Sheep | 600 | 8 |
| CatFLW | Cat | 2016 | 48 |

Sun et al. [37] used the dataset of Zhang et al. [41] in their work, expanding the annotation to 15 facial landmarks. To the best of our knowledge, only 1,706 out of the declared 10,000 images are publicly available.

Khan et al. [23] collected the AnimalWeb dataset, consisting of an impressive number of 21,900 images annotated with 9 landmarks. Despite the wide range of represented species, only approximately 450 images can be attributed to feline species.

Other relevant datasets, containing fewer than 9 facial landmarks, are listed in Table 1, including those in [28, 9, 32, 39]. For comparison, popular datasets for human facial landmark detection [4, 26] have several dozen landmarks. There are also other datasets suitable for face detection and recognition of various animal species [11, 13, 20, 24, 10], but they have no facial landmark annotations.

3 The CatFLW Dataset

The CatFLW dataset consists of 2016 images selected from the dataset in [41] using the following inclusion criterion, which is intended to optimize the training of landmark detection models: an image should contain a single fully visible cat face, where the cat is in non-laboratory conditions (‘in the wild’). Many images from the original dataset were therefore discarded (Figure 2 shows examples).

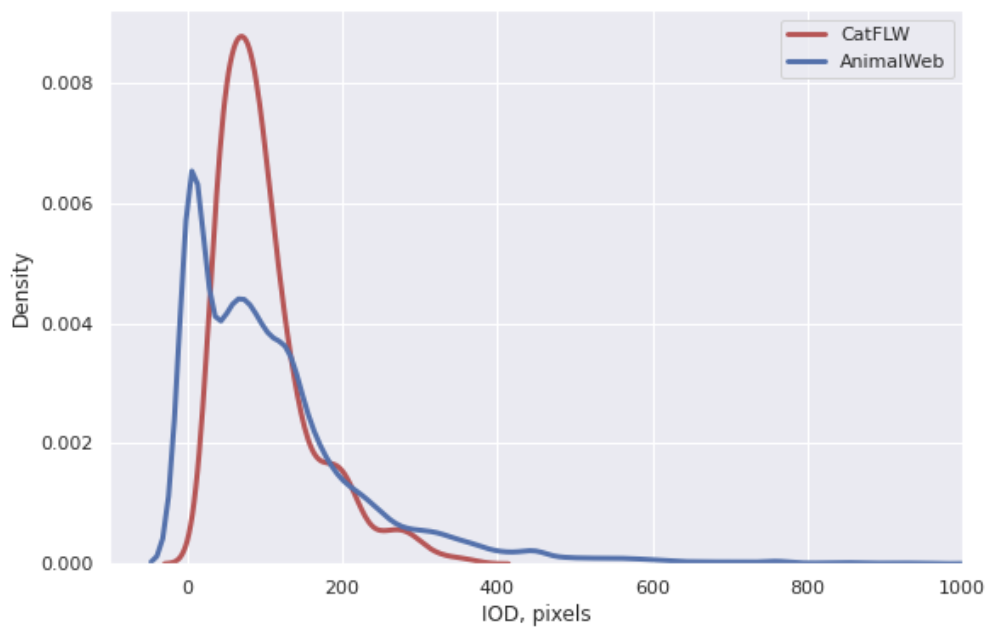

The images in this dataset vary widely in color, breed and environment, as well as in the scale, position and tilt angle of the head. Figure 3 shows the distribution of inter-ocular distances (IOD, the distance between the medial canthi of the two eyes) on CatFLW and AnimalWeb [23]. The CatFLW dataset has similar characteristics to AnimalWeb, except that the latter has about twice the standard deviation. This can be explained by the large species diversity in AnimalWeb, as well as by a greater variation in the size of its images.
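As an illustration of the IOD statistic discussed above, the following is a minimal sketch rather than the code used to produce Figure 3. The landmark indices `LEFT_MEDIAL_CANTHUS` and `RIGHT_MEDIAL_CANTHUS` are hypothetical placeholders (the actual indices depend on the ordering of the 48-landmark scheme), and each face is assumed to be a list of 48 `(x, y)` pixel coordinates.

```python
import math
import statistics

# Hypothetical positions of the two medial canthi in the 48-landmark list;
# the real indices depend on the annotation order used in the dataset.
LEFT_MEDIAL_CANTHUS = 0
RIGHT_MEDIAL_CANTHUS = 1


def inter_ocular_distance(landmarks):
    """Euclidean distance (in pixels) between the two medial canthi of one face."""
    return math.dist(landmarks[LEFT_MEDIAL_CANTHUS], landmarks[RIGHT_MEDIAL_CANTHUS])


def iod_statistics(faces):
    """Mean and population standard deviation of the IOD over a list of faces."""
    iods = [inter_ocular_distance(face) for face in faces]
    return statistics.mean(iods), statistics.pstdev(iods)
```

Comparing the standard deviations returned by `iod_statistics` for two datasets is one simple way to quantify the difference in spread described in the text.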

3.1 The Landmark Annotation Process

After selection and filtering of images, a bounding box and 48 facial landmarks were placed on each image using the Labelbox platform [1].

Bounding box. In the current dataset, the bounding box is not tied to facial landmarks (this is usually done by selecting the outermost landmarks and calculating the difference in their coordinates), but is visually placed so that the entire face of the animal fits into the bounding box, together with about 10% of the space around the face. This margin was added because, when evaluating detection models on the initial version of the dataset, we noticed that some of them tend to crop the faces and choose smaller bounding boxes. When face detection is performed as a step before the localization of facial landmarks, cropping the face can remove important parts of the cat’s face from the image, such as the tips of the ears or the mouth. Such an annotation still makes it possible to construct bounding boxes from the outermost landmarks if needed.
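For readers who prefer landmark-derived boxes, the construction from outermost landmarks mentioned above can be sketched as follows. This is an illustrative helper, not part of the dataset tooling; the function name and the `margin` parameter are assumptions, and the margin here is applied as a fraction of the tight box width and height, which only approximates the visually placed 10% margin of the annotated boxes.

```python
def bbox_from_landmarks(landmarks, margin=0.10):
    """Axis-aligned box (x_min, y_min, x_max, y_max) around a set of landmarks.

    landmarks: iterable of (x, y) pixel coordinates.
    margin: fraction of the tight box width/height added on every side.
    """
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)

    # Padding is proportional to the size of the tight box.
    pad_x = margin * (x_max - x_min)
    pad_y = margin * (y_max - y_min)
    return (x_min - pad_x, y_min - pad_y, x_max + pad_x, y_max + pad_y)
```

Setting `margin=0.0` yields the tight box spanned by the outermost landmarks. In practice the result may also need to be clipped to the image boundaries.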

Landmarks. 48 facial landmarks introduced in Finka et al. [18] and used in Feighelstein et al. [17] were manually placed on each image. These landmarks were specifically chosen for their relationship with underlying musculature and anatomical features, and relevance to cat-specific Facial Action Units (CatFACS) [8].

Annotation Process. The process of landmark annotation had several stages. At first, 10% of the images were annotated by an annotator with extensive experience in labeling facial landmarks for various animals; the same images were then annotated by a second expert following the same annotation instructions. The two sets of landmarks were compared to verify internal validity and reliability via the Intraclass Correlation Coefficient ICC2 [36], which showed strong agreement between the annotators, with a score of 0.998.

Finally, the remaining images were annotated by the first annotator using the ‘human-in-the-loop’ method described below.

After the annotation, the landmarks and bounding boxes were reviewed and corrected, and all images were re-filtered and verified.
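The inter-annotator agreement check described above can be illustrated with a small sketch of ICC(2,1), the two-way random-effects, absolute-agreement, single-rater form defined by Shrout and Fleiss [36]. How the landmark coordinates were arranged for the reported score is not specified here; the helper `landmarks_to_ratings` assumes, purely for illustration, that every x and y coordinate of every landmark is treated as one rated target with one score per annotator.

```python
def icc2_single(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of n rows (targets), each a list of k scores (one per rater).
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares of the two-way ANOVA decomposition.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)                # between-target mean square
    ms_cols = ss_cols / (k - 1)                # between-rater mean square
    ms_error = ss_error / ((n - 1) * (k - 1))  # residual mean square

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def landmarks_to_ratings(annotator_a, annotator_b):
    """Flatten two annotators' landmarks into rows of [score_a, score_b].

    Assumption: each coordinate of each landmark is one rated target.
    """
    rows = []
    for face_a, face_b in zip(annotator_a, annotator_b):
        for (xa, ya), (xb, yb) in zip(face_a, face_b):
            rows.append([xa, xb])
            rows.append([ya, yb])
    return rows
```

A value close to 1 indicates that the two annotators place landmarks at nearly identical coordinates, after accounting for any systematic offset between them.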

3.2 AI-assisted Annotation

Khan et al. [23] spent approximately 5,408 man-hours annotating all the images in the AnimalWeb dataset (each annotation was obtained by taking the median value of the annotations of five or more error-prone volunteers). This amounts to roughly 3 minutes for the annotation of one image (9 landmarks), or 20 seconds for one landmark (taken per person). Our annotation process took 140 hours, which is about 4.17 minutes per image (48 landmarks) and only 5.2 seconds per landmark. Such performance is achieved thanks to a semi-supervised ‘human-in-the-loop’ method that uses the predictions of a gradually trained model as a basis for annotation.
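The per-image and per-landmark figures above follow from simple arithmetic, reproduced in the short sketch below. The function name is illustrative, and `n_annotators=5` for AnimalWeb is an assumption taken from the lower bound of "five or more" volunteers per image.

```python
def annotation_cost(total_hours, n_images, n_landmarks, n_annotators=1):
    """Return (minutes per image, seconds per landmark) for a single annotator."""
    minutes_per_image = total_hours * 60 / n_images / n_annotators
    seconds_per_landmark = minutes_per_image * 60 / n_landmarks
    return minutes_per_image, seconds_per_landmark


# AnimalWeb: 5,408 man-hours, 21,900 images, 9 landmarks, assuming 5 annotators.
animalweb = annotation_cost(5408, 21900, 9, n_annotators=5)
# CatFLW: 140 hours, 2016 images, 48 landmarks, one annotator.
catflw = annotation_cost(140, 2016, 48)

print(f"AnimalWeb: {animalweb[0]:.2f} min/image, {animalweb[1]:.1f} s/landmark")
print(f"CatFLW:    {catflw[0]:.2f} min/image, {catflw[1]:.1f} s/landmark")
```

Under these assumptions the script gives about 2.96 minutes per image and 19.8 seconds per landmark for AnimalWeb, and about 4.17 minutes per image and 5.2 seconds per landmark for CatFLW.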

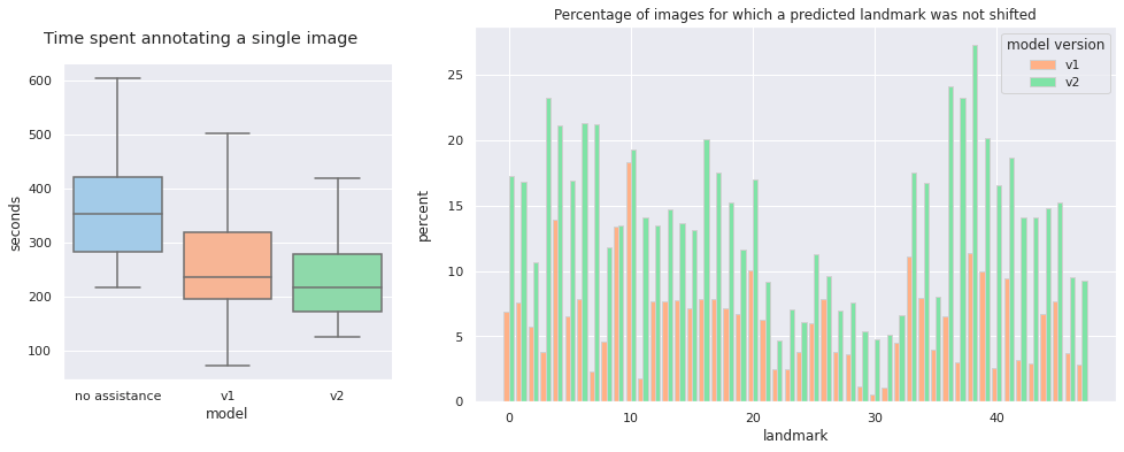

To assess the impact of AI on the annotation process, the data were divided into 3 batches. The first batch consisted of 200 images and was annotated without AI assistance. Next, we trained an EfficientNet-based [38] model on the dataset from [18] (about 600 images) combined with the first annotated batch (800 images in total). We then used this model (v1) to predict the landmarks on the second batch (900 images), and the annotator placed the landmarks for the second batch based on these predictions. This approach reduced the average annotation time per image between the first and second batches by 35%, since the annotator only needed to adjust the position of each landmark rather than place it from scratch. However, the predictions turned out to be inaccurate, so the first and second batches were combined to train the next version of the model (v2) with the same architecture (1700 images in total). After training, the v2 model was used to predict the landmarks on the third batch, which was then annotated based on these predictions.

The time spent on the annotation of one image is shown in Figure 4 (left). Increasing the accuracy of the predicted landmark positions reduces the time required to adjust them, sometimes to zero if the annotator considers a position to be completely correct. Figure 4 (right) shows the distribution of the percentage of images in which a specific facial landmark was not shifted by the annotator: between the two versions of the model, this percentage approximately doubles.
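The batch-wise procedure above can be summarized in the following sketch. It is a schematic outline under stated assumptions, not the pipeline used for CatFLW: `train` and `correct` are hypothetical callables standing in for model training and for the human annotator, and `unshifted_rate` is one possible way to compute the per-landmark statistic of Figure 4 (right), with a landmark counted as not shifted when the corrected position lies within `tol` pixels of the prediction.

```python
import math


def annotate_in_batches(batches, seed_data, train, correct):
    """Human-in-the-loop annotation over successive batches of images.

    batches:   list of lists of images, annotated in order.
    seed_data: (image, landmarks) pairs available before annotation starts.
    train:     callable(labelled pairs) -> model, where model(image) -> landmarks.
    correct:   callable(image, proposal_or_None) -> final landmarks (the human step).
    """
    labelled = list(seed_data)
    log = []      # (proposal, final) pairs for AI-assisted images
    model = None  # the first batch is annotated without AI assistance
    for index, batch in enumerate(batches):
        for image in batch:
            proposal = model(image) if model is not None else None
            final = correct(image, proposal)
            labelled.append((image, final))
            if proposal is not None:
                log.append((proposal, final))
        # Retrain on everything labelled so far, unless this was the last batch.
        if index < len(batches) - 1:
            model = train(labelled)
    return labelled, log


def unshifted_rate(predicted, corrected, tol=0.0):
    """Per-landmark fraction of images in which the prediction was left in place."""
    n_images = len(predicted)
    n_landmarks = len(predicted[0])
    rates = []
    for j in range(n_landmarks):
        kept = sum(
            1
            for i in range(n_images)
            if math.dist(predicted[i][j], corrected[i][j]) <= tol
        )
        rates.append(kept / n_images)
    return rates
```

With three batches, this loop trains two models, matching the v1 and v2 models in the text: the first on the seed data plus the first batch, the second on all data labelled up to the end of the second batch.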

4 Conclusion

In this paper we present the CatFLW dataset of annotated cat faces. It includes 2016 images of cats in various conditions, with a face bounding box and 48 facial landmarks for each cat. Each of the landmarks corresponds to a certain anatomical feature, which makes it possible to use these landmarks for various tasks involving the evaluation of the internal state of cats. The proposed AI-assisted method of image annotation significantly reduced the time needed for landmark annotation and the amount of manual work during the preparation of the dataset. The dataset opens up opportunities for the development of computer vision models for detecting facial landmarks of cats, and can be a starting point for research on behaviour observation and the well-being of cats and animals in general.

Acknowledgements

The research was supported by the Data Science Research Center at the University of Haifa. We thank Ephantus Kanyugi for his contribution to data annotation and management. We thank Yaron Yossef for his technical support.

References

- [1] Labelbox. Labelbox, 2023. [Online]. Available: https://labelbox.com.

- [2] Rasha M Al-Eidan, Hend Suliman Al-Khalifa, and AbdulMalik S Al-Salman. Deep-learning-based models for pain recognition: A systematic review. Applied Sciences, 10:5984, 2020.

- [3] Niek Andresen, Manuel Wöllhaf, Katharina Hohlbaum, Lars Lewejohann, Olaf Hellwich, Christa Thöne-Reineke, and Vitaly Belik. Towards a fully automated surveillance of well-being status in laboratory mice using deep learning: Starting with facial expression analysis. PLoS One, 15(4):e0228059, 2020.

- [4] Peter N Belhumeur, David W Jacobs, David J Kriegman, and Neeraj Kumar. Localizing parts of faces using a consensus of exemplars. IEEE transactions on pattern analysis and machine intelligence, 2013.

- [5] Tali Boneh-Shitrit, Marcelo Feighelstein, Annika Bremhorst, Shir Amir, Tomer Distelfeld, Yaniv Dassa, Sharon Yaroshetsky, Stefanie Riemer, Ilan Shimshoni, Daniel S Mills, et al. Explainable automated recognition of emotional states from canine facial expressions: the case of positive anticipation and frustration. Scientific reports, 12(1):22611, 2022.

- [6] Juliana T Brondani, Khursheed R Mama, Stelio PL Luna, Bonnie D Wright, Sirirat Niyom, Jennifer Ambrosio, Pamela R Vogel, and Carlos R Padovani. Validation of the english version of the unesp-botucatu multidimensional composite pain scale for assessing postoperative pain in cats. BMC Veterinary Research, 9(1):1–15, 2013.

- [7] Sofia Broome, Marcelo Feighelstein, Anna Zamansky, Carreira G Lencioni, Haubro P Andersen, Francisca Pessanha, Marwa Mahmoud, Hedvig Kjellström, and Albert Ali Salah. Going deeper than tracking: A survey of computer-vision based recognition of animal pain and emotions. International Journal of Computer Vision, 131(2):572–590, 2023.

- [8] Cátia C Caeiro, Anne M Burrows, and Bridget M Waller. Development and application of catfacs: Are human cat adopters influenced by cat facial expressions? Applied Animal Behaviour Science, 2017.

- [9] Jinkun Cao, Hongyang Tang, Haoshu Fang, Xiaoyong Shen, Cewu Lu, and Yu-Wing Tai. Cross-domain adaptation for animal pose estimation. CoRR, abs/1908.05806, 2019.

- [10] Peng Chen, Pranjal Swarup, Wojciech M Matkowski, Kong Adams WK, Su Han, Zhihe Zhang, and Hou Rong. A study on giant panda recognition based on images of a large proportion of captive pandas. Ecol Evol., 2020.

- [11] Melanie Clapham, Ed Miller, Mary Nguyen, and Russel C Van Horn. Multispecies facial detection for individual identification of wildlife: a case study across ursids. Mamm Biol 102, 943–955, 2022.

- [12] Lauren Dawson, Joanna Cheal, Lauren Niel, and Georgia Mason. Humans can identify cats’ affective states from subtle facial expressions. Animal Welfare, 28(4):519–531, 2019.

- [13] Debayan Deb, Susan Wiper, Sixue Gong, Yichun Shi, Cori Tymoszek, Alison Fletcher, and Anil K. Jain. Face recognition: Primates in the wild. In 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), pages 1–10, 2018.

- [14] Rui Diogo, Virginia Abdala, N Lonergan, and BA Wood. From fish to modern humans–comparative anatomy, homologies and evolution of the head and neck musculature. Journal of Anatomy, 213(4):391–424, 2008.

- [15] Marina C Evangelista, Javier Benito, Beatriz P Monteiro, Ryota Watanabe, Graeme M Doodnaught, Daniel SJ Pang, and Paulo V Steagall. Clinical applicability of the feline grimace scale: real-time versus image scoring and the influence of sedation and surgery. PeerJ, 8:e8967, 2020.

- [16] Marina C Evangelista, Ryota Watanabe, Vivian SY Leung, Beatriz P Monteiro, Elizabeth O’Toole, Daniel SJ Pang, and Paulo V Steagall. Facial expressions of pain in cats: the development and validation of a feline grimace scale. Scientific reports, 9(1):1–11, 2019.

- [17] Marcelo Feighelstein, Ilan Shimshoni, Lauren Finka, Stelio P. Luna, Daniel Mills, and Anna Zamansky. Automated recognition of pain in cats. Scientific Reports, 12, 2022.

- [18] Lauren R Finka, Stelio P Luna, Juliana T Brondani, Yorgos Tzimiropoulos, John McDonagh, Mark J Farnworth, Marcello Ruta, and Daniel S Mills. Geometric morphometrics for the study of facial expressions in non-human animals, using the domestic cat as an exemplar. Scientific reports, 9(1):1–12, 2019.

- [19] Karina B Gleerup, Björn Forkman, Casper Lindegaard, and Pia H Andersen. An equine pain face. Veterinary anaesthesia and analgesia, 42(1):103–114, 2015.

- [20] Songtao Guo, Pengfei Xu, Qiguang Miao, Guofan Shao, Colin A Chapman, Xiaojiang Chen, Gang He, Dingyi Fang, He Zhang, Yewen Sun, Zhihui Shi, and Baoguo Li. Automatic identification of individual primates with deep learning techniques. iScience, 2020.

- [21] Alexander Heimerl, Katharina Weitz, Tobias Baur, and Elisabeth André. Unraveling ml models of emotion with nova: Multi-level explainable ai for non-experts. IEEE Transactions on Affective Computing, 13(3):1155–1167, 2020.

- [22] Hilde I Hummel, Francisca Pessanha, Albert Ali Salah, Thijs JPAM van Loon, and Remco C Veltkamp. Automatic pain detection on horse and donkey faces. In FG, 2020.

- [23] Muhammad Haris Khan, John McDonagh, Salman H Khan, Muhammad Shahabuddin, Aditya Arora, Fahad Shahbaz Khan, Ling Shao, and Georgios Tzimiropoulos. Animalweb: A large-scale hierarchical dataset of annotated animal faces. CoRR, abs/1909.04951, 2019.

- [24] Matthias Körschens, Björn Barz, and Joachim Denzler. Towards automatic identification of elephants in the wild. CoRR, abs/1812.04418, 2018.

- [25] B Duncan X Lascelles and Sheilah A Robertson. Djd-associated pain in cats: what can we do to promote patient comfort? Journal of Feline Medicine & Surgery, 12(3):200–212, 2010.

- [26] Vuong Le, Jonathan Brandt, Zhe Lin, Lubomir Bourdev, and Thomas S Huang. Interactive facial feature localization. Proceedings of European Conference on Computer Vision (ECCV), Springer, 2012.

- [27] Shan Li and Weihong Deng. Deep facial expression recognition: A survey. IEEE transactions on affective computing, 13(3):1195–1215, 2022.

- [28] Jiongxin Liu, Angjoo Kanazawa, David Jacobs, and Peter Belhumeur. Dog breed classification using part localization. In European conference on computer vision, pages 172–185. Springer, 2012.

- [29] Katrina Merkies, Chloe Ready, Leanne Farkas, and Abigail Hodder. Eye blink rates and eyelid twitches as a non-invasive measure of stress in the domestic horse. Animals (Basel), 2019.

- [30] Isabella Merola and Daniel S Mills. Behavioural signs of pain in cats: an expert consensus. PloS one, 11(2):e0150040, 2016.

- [31] Alexander Mielke, Bridget M Waller, Claire Pérez, Alan V Rincon, Julie Duboscq, and Jérôme Micheletta. Netfacs: Using network science to understand facial communication systems. Behavior Research Methods 54, 1912–1927, 2022.

- [32] Guillaume Mougeot, Dewei Li, and Shuai Jia. A deep learning approach for dog face verification and recognition. Lecture Notes in Computer Science, 2019.

- [33] Fatemeh Noroozi, Ciprian Adrian Corneanu, Dorota Kamińska, Tomasz Sapiński, Sergio Escalera, and Gholamreza Anbarjafari. Survey on emotional body gesture recognition. IEEE transactions on affective computing, 12(2):505–523, 2018.

- [34] Jacky Reid, Mariann E Scott, Gillian Calvo, and Andrea M Nolan. Definitive glasgow acute pain scale for cats: validation and intervention level. Veterinary Record, 180(18):449–449, 2017.

- [35] Garima Sharma and Abhinav Dhall. A survey on automatic multimodal emotion recognition in the wild. In Gloria Phillips-Wren, Anna Esposito, and Lakhmi C. Jain, editors, Advances in Data Science: Methodologies and Applications, pages 35–64. Springer International Publishing, Cham, 2021.

- [36] Patrick E Shrout and Joseph L Fleiss. Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin, 86(2):420–428, 1979.

- [37] Yifan Sun and Noboru Murata. Cafm: A 3d morphable model for animals. IEEE Winter Applications of Computer Vision Workshops (WACVW), 2020.

- [38] Mingxing Tan and Quoc V Le. Efficientnet: Rethinking model scaling for convolutional neural networks. CoRR, abs/1905.11946, 2019.

- [39] Heng Yang, Renqiao Zhang, and Peter Robinson. Human and sheep facial landmarks localisation by triplet interpolated features. CoRR, abs/1509.04954, 2015.

- [40] Ghada Zamzmi, Rangachar Kasturi, Dmitry Goldgof, Ruicong Zhi, Terri Ashmeade, and Yu Sun. A review of automated pain assessment in infants: features, classification tasks, and databases. IEEE reviews in biomedical engineering, 11:77–96, 2017.

- [41] Weiwei Zhang, Jian Sun, and Xiaoou Tang. Cat head detection - how to effectively exploit shape and texture features. ECCV, 2008.