Cell-Free Massive MIMO Detection: A Distributed Expectation Propagation Approach

Abstract

Cell-free massive MIMO is one of the core technologies for future wireless networks. It is expected to bring enormous benefits, including ultra-high reliability, data throughput, energy efficiency, and uniform coverage. As a radically distributed system, the performance of cell-free massive MIMO critically relies on efficient distributed processing algorithms. In this paper, we propose a distributed expectation propagation (EP) detector for cell-free massive MIMO, which consists of two modules: a nonlinear module at the central processing unit (CPU) and a linear module at each access point (AP). The turbo principle in iterative channel decoding is utilized to compute and pass the extrinsic information between the two modules. An analytical framework is provided to characterize the asymptotic performance of the proposed EP detector with a large number of antennas. Furthermore, a distributed iterative channel estimation and data detection (ICD) algorithm is developed to handle the practical setting with imperfect channel state information (CSI). Simulation results will show that the proposed method outperforms existing detectors for cell-free massive MIMO systems in terms of the bit-error rate and demonstrate that the developed theoretical analysis accurately predicts system performance. Finally, it is shown that with imperfect CSI, the proposed ICD algorithm improves the system performance significantly and enables non-orthogonal pilots to reduce the pilot overhead.

Index Terms:

6G, cell-free massive MIMO, distributed MIMO detection, expectation propagation.I Introduction

The fifth-generation (5G) wireless networks have been commercialized since 2019 to support a wide range of services, including enhanced mobile broadband, ultra-reliable and low-latency communications, and massive machine-type communications[2]. However, the endeavor for faster and more reliable wireless communications will never stop. This trend is reinforced by the recent emergence of several innovative applications, including the Internet of Everything, Tactile Internet, and seamless virtual and augmented reality. Future sixth-generation (6G) wireless networks are expected to provide ubiquitous coverage, enhanced spectral efficiency (SE), connected intelligence, etc. [3, 4]. Such diverse service requirements create daunting challenges for system design. With the commercialization of massive MIMO technologies [5] in 5G, it is time to think about new MIMO-based network architectures to support the continuous exponential growth of mobile data traffic and a plethora of applications.

As a promising solution, cell-free massive MIMO was proposed [6]. It is a disruptive technology and has been recognized as a crucial and core enabler for beyond 5G and 6G networks[7, 8, 9, 10, 12, 11]. Cell-free massive MIMO can be interpreted as a combination of massive MIMO[5], distributed antenna systems (DAS)[13], and Network MIMO[14]. It is expected to bring important benefits, including huge data throughput, ultra-low latency, ultra-high reliability, a huge increase in the mobile energy efficiency, and ubiquitous and uniform coverage. In cell-free massive MIMO systems, a very large number of distributed access points (APs) are connected to a central processing unit (CPU) via a front-haul network in order to cooperate and jointly serve a large number of users using the same time or frequency resources over a wide coverage area. In contrast to current cellular systems, there is no cell or cell boundary in cell-free MIMO networks. As a result, this approach is revolutionary and will be able to relieve one of the major bottlenecks and inherent limitations of wireless networks, i.e., the strong inter-cell interference. Compared to conventional co-located massive MIMO, cell-free networks offer more uniform connectivity for all users thanks to the macro-diversity gain provided by the distributed antennas.

Investigations on cell-free massive MIMO started with some initial attempts on analyzing the SE [6], where single-antenna APs, single-antenna users, and Rayleigh fading channels were considered. The analysis has been extended to multi-antenna APs with Rayleigh fading, Rician fading, and correlated channels [15, 16, 17], showing that cell-free massive MIMO networks can achieve great SE. The energy efficiency of cell-free massive MIMO systems was then investigated [18, 19]. It was shown that cell-free massive MIMO systems can improve the energy efficiency by approximately ten times compared to cellular massive MIMO. Although cell-free massive MIMO has shown a huge potential, its deployment critically depends on effective and scalable algorithms. According to [20], cell-free massive MIMO is considered to be scalable if the signal processing tasks for channel estimation, precoder and combiner design, fronthaul overhead, and power optimization per AP can be kept within finite complexity when the number of served users goes to infinity. Unfortunately, the conventional cell-free massive MIMO is not scalable. To tackle the scalability issue, the concept of user-centric has been introduced into cell-free massive MIMO systems [20, 21, 22, 23, 24, 25]. In particular, a user-centric dynamic cooperation clustering (DCC) scheme was introduced [20], where each user is only served by a subset of APs. This scheme was called scalable cell-free massive MIMO.

In this paper, we will focus on effective data detection algorithms in cell-free massive MIMO systems. In this aspect, some early attempts were made on centralized algorithms where the detection is implemented at the CPU with the received pilots and data signals reported from all APs [6, 26]. However, the computational and fronthaul overhead of such a centralized detection scheme is prohibitively high when the network size becomes large. To address this challenge, distributed detectors have been recently investigated. In [27], one centralized and three distributed receivers with different levels of cooperation among APs were compared in terms of SE. Radio stripes were then incorporated into cell-free massive MIMO in [28]. In this case, the APs are sequentially connected and share the same fronthaul link in a radio stripe network, thus reducing the cabling substantially. Based on this structure, a novel uplink sequential processing algorithm was developed which can achieve an optimal performance in terms of both SE and mean-square error (MSE). Furthermore, it can achieve the same performance as the centralized minimum MSE (MMSE) processing, while requiring much lower fronthaul overhead by making full use of the computational resources at the APs. However, the distributed detectors investigated in [27] are linear detectors. Therefore, they are highly suboptimal or may even be ill-conditioned in terms of the bit-error rate (BER) performance. To address this problem, the local per-bit soft detection is exploited at each AP with the bit log-likelihoods shared on the front-haul link by a partial marginalization detector [29]. However, the proposed soft detection is still very complex as the approximate posterior density for each received data bit is required to be computed at each AP. Therefore, it is of great importance to develop a distributed and non-linear receiver to achieve a better BER performance with a considerably lower complexity.

To fill this gap, we propose a distributed non-linear detector for cell-free massive MIMO networks in this paper, which is derived based on the expectation propagation (EP) principle [30]. The EP algorithm, proposed in [30], provides an iterative method to recover the transmitted data from the received signal and has recently attracted extensive research interests in massive MIMO detection [31, 32, 33, 34]. Recently, it has been extended to cell-free massive MIMO systems [35, 36]. The algorithm is derived from the factor graph with the messages updated and passed between different pairs of nodes. Specifically, with the linear MMSE estimator, the APs first detect the symbols with the local channel state information (CSI) and transfer the posterior mean and variance estimates to the CPU. Then, the extrinsic information for each AP is computed and integrated at the CPU by maximum-ratio combining (MRC). Subsequently, the CPU uses the posterior mean estimator to refine the detection and the extrinsic information is transferred to each AP from the CPU via the fronthaul.

The main contributions of this paper are summarized as follows:

-

•

Different from the existing linear detectors in cell-free massive MIMO[27], we propose a distributed and non-linear detector. The detection performance is improved at the cost of slightly increasing the computation overhead at the computationally-powerful CPU. Simulation results will demonstrate that the proposed method outperforms existing distributed detectors and even the centralized MMSE detector in terms of BER performance.

-

•

To be applicable in practical scenarios with imperfect CSI, we further develop a distributed iterative channel estimation and data detection (ICD) algorithm for cell-free massive MIMO systems, where the detector takes the channel estimation error and channel statistics into consideration while the channel estimation is refined by the detected data. Simulation results will demonstrate that the proposed ICD algorithm outperforms existing distributed detectors and enables non-orthogonal pilots to reduce the pilot overhead.

-

•

We develop an analytical framework to analyze the performance of the distributed EP algorithm, which can precisely predict the performance of the proposed detector. Based on the theoretical analysis, key performance metrics of the system, such as the MSE and BER, can be analytically determined without time-consuming Monte Carlo simulation.

Notations—For any matrix , and will denote the conjugate transpose and trace of , respectively. In addition, is the identity matrix and is the zero matrix. We use to denote the real Gaussian integration measure. That is,

A complex Gaussian distribution with mean and covariance can be described by the probability density function,

The remaining part of this paper is organized as follows. Section II introduces the system model and formulates the cell-free massive MIMO detection problem. The distributed EP detector is proposed in Section III and the distributed ICD receiver is investigated in Section IV. Furthermore, an analytical framework is provided in Section V. Numerical results are then presented in Section VI and Section VII concludes the paper.

II System Model

In this section, we first present the system model and formulate the cell-free massive MIMO detection problem. Four commonly-adopted receivers are then briefly introduced.

II-A Cell-Free Massive MIMO

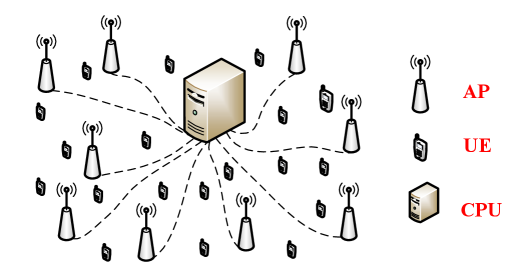

As illustrated in Fig. 1, we consider a cell-free massive MIMO network with distributed APs, each equipped with antennas to serve single-antenna users. All APs are connected to a CPU that has abundant computing resources. Denote as the channel between the -th user and the -th AP, where is the spatial correlation matrix, and as the large-scale fading coefficient involving the geometric path loss and shadowing. In the uplink data transmission phase, we consider as the subset of APs that serve the -th user and define the DCC matrices based on as

| (1) |

Specifically, is an identity matrix if the -th AP serves the -th user, and is a zero matrix, otherwise. Furthermore, we define as the set of user indices that are served by the -th AP

| (2) |

where the cardinality of is denoted as . When each AP serves all users, we have accordingly. Assuming that perfect CSI is available at the local APs, the received signal at the -th AP is then given by

| (3) |

where is the transmitted symbol drawn from an -QAM constellation, and is the transmit power at the -th user. The additive noise at the -th AP is denoted as . Let denote the transmitted vector from all users and is the channel from all users to AP . If the -th user is not associated with the -th AP, the channel vector is . Furthermore, we denote as the channel matrix between all users and APs. The uplink detection problem for cell-free massive MIMO is to detect the transmitted data based on the received signals , channel matrix , and noise power .

(a) Conventional cell-free massive MIMO system

(b) Scalable cell-free massive MIMO system.

II-B Linear Receivers

For the cell-free massive MIMO detection, there are four commonly-adopted linear receivers [27] with different levels of cooperation among APs. This is elaborated as follows.

-

•

Fully centralized receiver: The pilot and data signals received at all APs are sent to the CPU for channel estimation and data detection. The CPU may perform the MMSE or MRC detection.

-

•

Partially distributed receiver with large-scale fading decoding: First, each AP estimates the channels and uses the linear MMSE detector to detect the received signals. Then, the detected signals are collected at the CPU for joint detection for all users by utilizing the large-scale fading decoding (LSFD) method. Compared to the fully centralized receiver, only the channel statistics are utilized at the CPU but the pilot signals are not required to be sent to the CPU.

-

•

Partially distributed receiver with average decoding: It is a special case of the partially distributed receiver with LSFD. The CPU performs joint detection for all users by simply taking the average of the local estimates. Thus, no channel statistics are required to be transmitted to the CPU via the fronthaul.

-

•

Fully distributed receiver: It is a fully distributed approach in which the data detection is performed at the APs based on the local channel estimates. No information is required to be transferred to the CPU. Each AP may perform MMSE detection.

All of the above-mentioned four receivers achieve performance that is far from the optimal one due to the linear processing. In contrast, non-linear receivers have shown great advantages in terms of BER in cellular massive MIMO systems but at the cost of higher computational complexity [31]. Thanks to the relatively high computing abilities at the CPU and the large number of APs in cell-free massive MIMO systems, we can offload some of the computational-intensive operations to the CPU and distribute the partial computation tasks to APs. Following this idea, we will propose a distributed non-linear detector for cell-free massive MIMO systems.

III Proposed Distributed EP Detector

In this section, we first formulate the MIMO detection problem from the Bayesian inference. Then, we introduce the factor graph and propose the distributed EP detector. Finally, we will analyze the computational complexity and fronthaul overhead of the proposed detector.

III-A Distributed Bayesian Inference

We first apply the Bayesian inference to recover the signals from the received signal in the data detection stage with the linear model , where , and . Based on Bayes’ theorem, the posterior probability is given by

| (4) |

where is the likelihood function with the known channel matrix and is the prior distribution of . Given the posterior probability , the Bayesian MMSE estimate is obtained by

| (5) |

However, the Bayesian MMSE estimator is not tractable because the marginal posterior probability in (5) involves a high-dimensional integral, which motivates us to develop an advanced method to approximate (4) effectively. Furthermore, different from conventional cellular MIMO systems[31], the posterior probability in (4) has to be rewritten in a distributed way because of the inherent distributed characteristic of cell-free massive MIMO systems, given by

| (6) |

As a result, we can develop an efficient distributed MIMO detector to approximate the posterior probability (6) by resorting to message passing on factor graph and take the characteristic of cell-free massive MIMO into consideration. The factor graph and proposed detector will be elaborated in next sections.

III-B Factor Graph for Cell-free Massive MIMO Detection

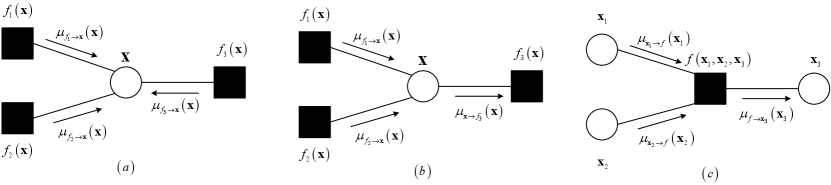

Factor graph is a powerful tool to develop efficient algorithms for solving inference problems by performing different message-passing rules. We first introduce several concepts for the factor graph [37], as illustrated in Fig. 2, where the hollow circles represent the variable nodes and the solid squares represent the factor nodes. For a factor graph with factor nodes , all connected to a set of variable nodes , the messages are computed and updated iteratively by performing the following rules.

1) Approximate beliefs: The approximate belief on variable node is , where and are the mean and average variance of the corresponding beliefs .

2) Variable-to-factor messages: The message from a variable node to a connected factor node is given by

| (7) |

which is the product of the message from other factors.

3) Factor-to-variable messages: The message from a factor node to a connected variable node is .

If we treat the APs and the CPU as the factor nodes, we can construct the factor graph for cell-free massive MIMO detection. By further applying the above message-passing rules to the constructed factor graph, we develop the distributed EP detector for cell-free massive MIMO systems. The detailed derivation is given in Appendix A-A.

III-C Distributed EP Detector

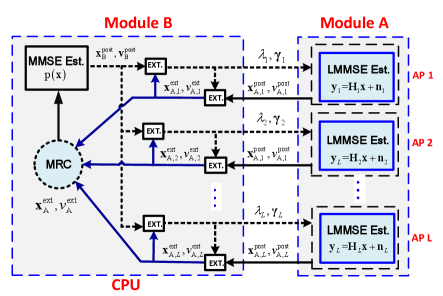

The distributed EP-based detector is illustrated in Algorithm 1. Compared with the centralized EP detector, it deploys partial calculation modules at the AP based on the local information and then sends the estimated posterior mean and variance to the CPU for combining. In particular, the input of the algorithm is the received signal , channel matrix , and noise level , while the output is the recovered signal in the -th iteration. The initial parameters are , and , where

| (8) |

Note that and are the initialized extrinsic information, and is the power of the transmitted symbol . The block diagram of the proposed distributed EP detector is illustrated in Fig. 3, which is composed of two modules, A and B. Each module uses the turbo principle in iterative decoding, where each module passes the extrinsic messages to the other module and this process repeats until convergence. It is derived from the factor graph with the messages updated and passed between different pairs of nodes that are assumed to follow Gaussian distributions. As the Gaussian distribution can be fully characterized by its mean and variance, only the mean and variance need to be calculated and passed between different modules. To better understand the distributed EP detection algorithm, we elaborate the details of each equation in Algorithm 1. Specifically, module A is the linear MMSE (LMMSE) estimator performed at the APs according to the following linear model

| (9) |

In the -iteration, the explicit expressions for the posterior covariance matrix and mean vector are given by (14) and (15), respectively. Note that each AP only uses the local channel to detect the transmitted signal . For the ease of notation, we omit the iteration index for all estimates for the mean and variance in Algorithm 1. Then, the variance estimate and mean estimate are transferred to the CPU to compute the extrinsic information (16) and (17), respectively. The extrinsic information can be regarded as the AWGN observation given by

| (10) |

where [33]. As a result, the linear model in (3) is decoupled into parallel and independent AWGN channels with equivalent noise for each AP. Subsequently, the CPU collects all extrinsic means and variances and performs MRC111In our paper, we focus on linear combining of the local estimate and from APs as . The optimal combining is MRC, which can maximize the post-combination SNR of the final AWGN observation with the linear combination of where and . The final expressions are shown in (18) and (19) and the detailed derivation is given in Appendix A-B.. The MRC expressions (18) and (19) are obtained by maximizing the post-combination signal-to-noise ratio (SNR) of the AWGN observation at the CPU, given by

| (11) |

where . The CPU uses the posterior mean estimator to detect the signal from the equivalent AWGN model (11). Then, the posterior mean and variance are computed by the posterior MMSE estimator for the equivalent AWGN model in (20) and (21). As the transmitted symbol is assumed to be drawn from the -QAM set , the corresponding expressions for each element in (20) and (21) are given by

| (12) |

| (13) |

where , , and are the -th element in , , and , respectively. The posterior mean and variance are then utilized to compute the extrinsic information and for each AP in (22) and (23), where the function is used to compute the mean of a vector. Finally, the extrinsic information and are transferred to each AP in the next iteration. The whole procedure is executed iteratively until it is terminated by a stopping criterion or a maximum number of iterations.

| (14) |

| (15) |

| (16) |

| (17) |

| (18) |

| (19) |

| (20) |

| (21) |

| (22) |

| (23) |

III-D Computational Complexity and Fronthaul Overhead

In the following, we provide the complexity analysis and fronthaul overhead for different detectors in Tables I and II, respectively. For the proposed distributed EP detector, the computational complexity at each AP is dominated by the LMMSE estimator for estimating the signal , which is because of the matrix inversion required in (14), while the computational complexity at the CPU is in each iteration. Furthermore, if the number of antennas is less than , we can use the matrix inversion lemma to carry out the matrix inversion in (14) as follows

where and the computational complexity is reduced to . Therefore, the overall computational complexity at each AP is while the overall computational complexity at the CPU is for iterations. As observed in Table I, the distritbuted EP detector mainly increases the computational complexity at the CPU when compared with other distributed linear receivers.

| Detectors | Distributed EP | Centralized MMSE | Partially distributed MMSE | Distributed MMSE |

| AP | ||||

| CPU |

We further compare the number of complex scalars that need to be transmitted from the APs to the CPU via the fronthauls of different detectors in Table II. We assume that and are the coherence time and pilot length, respectively. The results for the 4 baseline detectors are from [27]. With the proposed detector, scalars need to be passed from the APs to the CPU and no statistical parameters are required to be passed, where denotes the total number of iterations. The fronthaul overhead of the proposed distributed EP detector is similar to those of the centralized MMSE and EP detectors. The detailed comparison shall be determined by the value of system parameters.

| Detectors | Coherence block | Statistical parameters |

| Distributed EP | ||

| Centralized MMSE | ||

| Partially distributed MMSE with LSFD | ||

| Partially distributed MMSE with average decoding | ||

| Distributed MMSE | ||

| Centralized EP |

IV Distributed iterative Channel Estimation and Data Detection

In Section III, we developed a distributed EP detector assuming perfect CSI. However, the channel matrix is typically estimated at the receiver with uplink training, and thus channel estimation errors should be considered in the detection stage. In this section, we will propose a distributed ICD receiver for cell-free massive MIMO to handle imperfect CSI.

IV-A ICD Algorithm

ICD has been explored for MIMO [38, 39], OFDM [40], and massive MIMO [41] systems with a low-resolution analog-to-digital converter (ADC) [42]. Recently, it has been investigated in cell-free massive MIMO to improve the BER performance and reduce the pilot overhead. Different from [43] that solved the complex biconvex optimization problem with high complexity, we develop a low-complexity turbo-like ICD architecture. The iterative procedure is summarized in Algorithm 2.

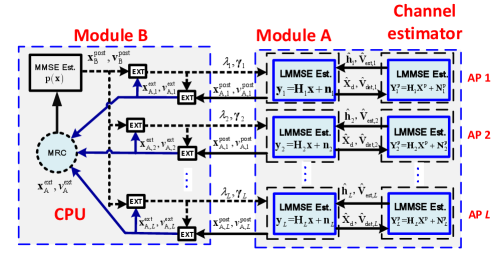

As illustrated in Fig. 3, the proposed ICD scheme employs a similar idea as iterative decoding. At each AP, the channel estimator and Module A in the proposed distributed EP detector are performed. They exchange extrinsic information iteratively. In the ICD processing stage, the channel estimation is first performed based on the received pilot signal and transmitted pilot. Then, the data detector performs signal detection by taking both the channel estimation error and channel statistics into consideration. It will then feed back the detected data and detection error to the channel estimator. Finally, data-aided channel estimation is employed with the help of the detected data. The whole ICD procedure is executed iteratively until it is terminated by a stopping criterion or a maximum number of ICD iterations.

Similar to Algorithm 1, the input of the ICD scheme is the pilot signal matrix, , the received signal matrix corresponding to the pilot matrix, , and that corresponding to the data matrix, , in each time slot for each AP. In the -th ICD iteration222 Here we use to denote the number of data feedback from the data detector to the channel estimator. For example, means no data feedback while means 3 data feedback should be performed., is the estimated channel matrix, is the estimated data matrix, and and are used to compute the covariance matrix for the equivalent noise in the signal detector and channel estimator, respectively. The final output of the signal detector is the detected data matrix , where is the total number of ICD iterations. Compared with the conventional receiver where the channel estimator and signal detector are designed separately, the ICD scheme can improve the BER performance by considering the characteristics of the channel estimation error and channel statistics. After performing signal detection, the detected payload data will be utilized for channel estimation. Next, we will elaborate the whole procedure in detail.

IV-B LMMSE Estimator

We consider adopting the classical LMMSE estimator in the channel estimation stage at each AP. To facilitate the representation of the channel estimation problem, we consider the matrix vectorization to (9) and rewrite it as

| (24) |

where , , , and . We denote as the matrix Kronecker product and as the vectorization operation. In the pilot-only based channel estimation stage, the LMMSE estimate of is given by

| (25) |

where is the channel covariance matrix and depends on the spatial correlation matrix . Based on the property of the LMMSE estimator, is a Gaussian random vector, and the channel estimation error vector is also a Gaussian random vector with zero-mean and covariance matrix given by

| (26) |

IV-C Signal Detection with Channel Estimation Errors

After performing channel estimation, the estimated channel is used to perform data detection at each AP. In the data transmission stage, the received data signal vector corresponding to the -th data in each coherence time can be expressed by , where is the AWGN vector333As each AP has similar signal models, we remove the index for the AP and use , , , and to denote , , , and , respectively. To simplify the notation, we have the same operation for the following symbols.. We can rewrite it in a matrix from as , where is the AWGN matrix in the data transmission stage. Denote the estimated channel as , where is the channel estimation error. The signal detection problem can be formulated as

| (27) |

where is the equivalent noise in the signal detector and the statistical characterization of is given in Appendix B-A.

IV-D Data-Aided Channel Estimation

To further improve the BER performance, a data-aided channel estimation approach is adopted in the channel estimation stage. First, conventional pilot-only based channel estimation is performed and the transmitted symbols are detected. Then, the detected symbols are fed back to the channel estimator as additional pilot symbols to refine the channel estimation. In the channel training stage, pilot matrix is transmitted. The received signal matrix corresponding to the pilot matrix is expressed as , where is the AWGN matrix and each column for . The estimated data matrix can be expressed as , where is the signal detection error matrix. In the data-aided channel estimation stage, the estimated are fed back to the channel estimator as additional pilots. Then, the received signal matrix corresponding to can be expressed as

| (28) |

where is the equivalent noise for the data-aided pilot . The statistical information of the -th column of is for , where will be utilized for data-aided channel estimation. We denote as the received signal matrix corresponding to the overall transmitted signal. By concatenating the received pilot and data signals, we have

| (29) |

where and can be interpreted as the equivalent pilot signal and noise in the data-aided channel estimation stage, respectively. The statistical characteristic for the equivalent noise and corresponding LMMSE estimator are derived in Appendix B-B.

V Performance Analysis

In this section, we provide an asymptotic performance analysis for the proposed distributed EP detector. We consider and fix

| (30) |

After proposing a state evolution analysis framework, we will characterize the fixed points of the proposed detector.

V-A State Evolution Analysis

We first provide a state evolution analysis framework to predict the asymptotic performance of the distributed EP detector in the large-system limit444The large-system limit means that the cell-free massive MIMO system with ..

Proposition 1.

In the large-system limit, the asymptotic behavior (such as MSE and BER) of Algorithm 1 can be described by the following equations:

| (31a) | ||||

| (31b) | ||||

| (31c) | ||||

The function is given by

| (32) |

where the expectation is with respect to . are the eigenvalues of .

The state equations illustrated in Proposition 1 can be proved rigorously in a similar way as [44]. To better understand the state evolution analysis, we give an intuitive derivation here. In Algorithm 1, we have explicit expressions for computing the mean and variance for each module. By substituting (14) into (16), we have the following expression

| (33) |

The asymptotic expressions for and can then be derived from (18) and (22). Finally, the asymptotic MSE can be interpreted as the MSE of the decoupled scalar AWGN channels (11) and is related to the distribution of the transmitted signal . Next, we will give a specific example for Proposition 1.

Example 1: If the channel matrix is an i.i.d. Gaussian distributed matrix, then will converge to the following asymptotic expression by adopting the result of the -transform555The concept of -transform can be found in [45], which enables the characterization of the asymptotic spectrum of a sum of suitable matrices from their individual asymptotic spectra. of the average empirical eigenvalue distribution given by,

| (34) |

Furthermore, if the data symbol is drawn from a quadrature phase-shift keying (QPSK) constellation, the is expressed by

| (35) |

Furthermore, the BER w.r.t. can be evaluated through the equivalent AWGN channel (11) with an equivalent and is given by

| (36) |

where is the -function. In fact, the MSE and BER are determined given the knowledge of the AWGN channel (11) with , which is known as the decoupling principle. Thus, if the data symbol is drawn from other -QAM constellations, the corresponding BER can be easily obtained using a closed-form BER expression [46].

V-B Fixed Point Characterization

The fixed points of the distributed EP detection algorithm are the stationary points of a relaxed version of the Kullback-Leibler (KL) divergence to minimize the posterior probability (4), which is given by

| (37) |

The KL divergence between two distributions is defined as,

| (38) |

Generally, it is difficult to approximate the complex posterior distribution (4) by a tractable approach. The moment matching method is utilized to exploit the relaxations of the KL divergence minimization problem [47]. The process for solving the KL divergence minimization problem is equivalent to optimizing the Bethe free energy subject to the moment-matching constraint. That is,

| (39a) | |||

| (39b) | |||

| (39c) | |||

where and is the differential entropy. In addition, , , and are given by

| (40a) | |||

| (40b) | |||

| (40c) | |||

respectively, where , , and are the normalization factors corresponding to their density functions. Based on the above-mentioned optimization process, we have

| (41a) | |||

| (41b) | |||

for any fixed points and in Algorithm 1. This fixed point characterization is helpful to perform the convergence analysis and understand the algorithm from the optimization perspective.

VI Simulation Results

In this section, we will provide extensive simulation results to demonstrate the excellent performance of the proposed distributed EP detector for cell-free massive MIMO. After showing the simulation results of the distributed EP detector in conventional and scalable cell-free massive MIMO systems, we will verify the accuracy of the proposed analytical framework. Furthermore, the performance of the distributed EP-based ICD scheme is also investigated. The codes for the simulation part is available at https://github.com/hehengtao/DeEP-cell-free-mMIMO.

VI-A Conventional Cell-Free Massive MIMO

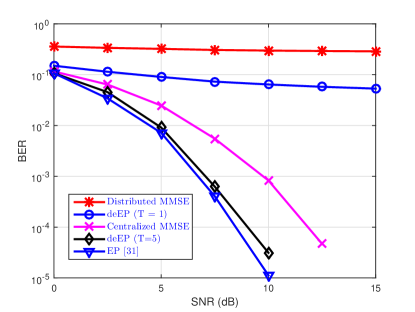

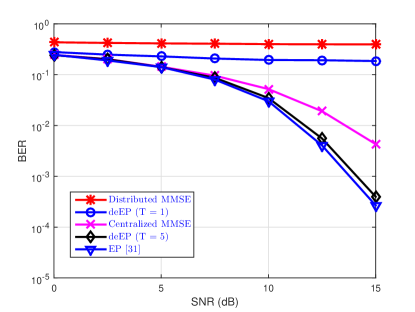

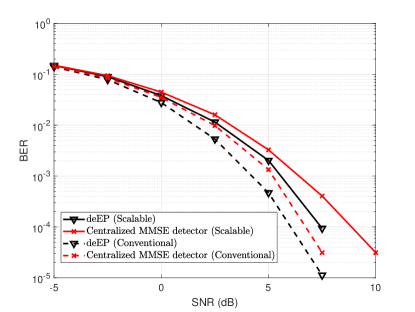

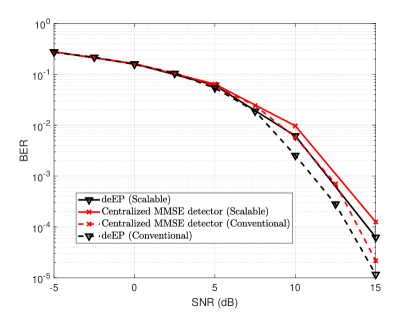

In this subsection, we consider the BER performance of the proposed detector in the conventional cell-free massive MIMO where each AP serves all users. We assume that perfect CSI can be obtained at each AP. The simulation parameters are set as , , and . Fig. 4 compares the achievable BER of the proposed distributed EP, centralized EP detectors [31], and other baselines [27] using QPSK and -QAM modulation symbols. The results are obtained by Monte Carlo simulations with independent channel realizations. We denote “deEP” as the distributed EP detector proposed in Section III. It can be observed that the proposed distributed EP detector outperforms the distributed MMSE detector with only one EP iteration. This is because deEP incorporates the prior information of the symbol into the posterior mean estimator for the equivalent AWGN channel (10). Furthermore, it outperforms the centralized MMSE detector with iterations for different modulation symbols. Compared with the centralized EP detectors [31], the distributed EP detector achieves comparable BER performance.

(a) QPSK

(b) 16-QAM

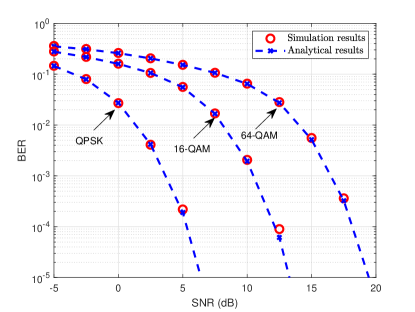

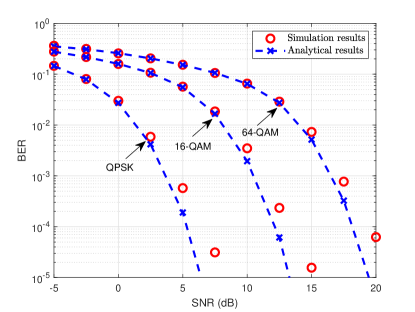

VI-B Accuracy of the Analytical Results

We next verify the accuracy of the analytical framework in Fig. 5 with different system settings and modulation schemes. As shown in the figure, the BERs of the proposed detector match well with the derived analytical results, which demonstrates the accuracy of the analytical framework developed in Section V-A. Although the analytical framework is derived in the large-system limit, it still can predict the BER performance in small-sized MIMO systems illustrated in Fig. 5(b). Therefore, instead of performing time-consuming Monte Carlo simulations to obtain the corresponding performance metrics, we can predict the theoretical behavior by the state evolution equations. Furthermore, the analytical framework can be further utilized to optimize the system design.

(a) , ,

(b) , ,

VI-C Scalable Cell-Free Massive MIMO

As the user-centric approach is more attractive for cell-free massive MIMO, we investigate the distributed EP detector with DCC framework. The user first appoints a master AP according to the large-scale fading factor and assigned a pilot by the appointed AP. Then, other neighboring APs determine whether they serve the accessing user according to the assigned pilot . Finally, the cluster for the -th user is constructed. Fig. 6 shows that the distributed EP detector outperforms both the centralized and distributed MMSE detectors. Furthermore, the performance loss is limited when compared to the conventional cell-free massive MIMO system while the computational complexity is significantly decreased from to .

(a) QPSK

(b) 16-QAM

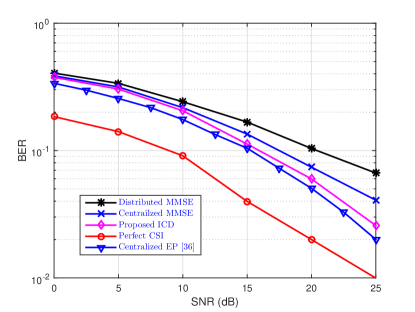

VI-D Performance of ICD with 3GPP Urban Channel

In Sections VI-A to VI-D, all detectors are investigated assuming perfect CSI. In this section, we consider a distributed EP-based ICD scheme for cell-free massive MIMO with the 3GPP Urban Channel [48] and imperfect CSI. We assume that , , and . The APs are assumed to be deployed in a area located in urban environments to match with the system settings in [27]. The large-scale fading factor is given by

| (42) |

where is the distance between the -th user and -th AP and represents shadow fading. The shadowing terms from an AP to different users are correlated as

| (43) |

where is the distance between the -th user and -th user. The second case in (43) on the correlation of shadowing terms related to two different APs can be ignored. Furthermore, the multi-antenna APs are equipped with half-wavelength-spaced uniform linear arrays and the spatial correlation is generated using the Gaussian local scattering model with a angular standard deviation. The transmit power for each user is mW and the bandwidth is MHz.

(a) QPSK

(b) 16-QAM

Fig. 7 compares the achievable BER of the proposed distributed EP detector with those of other detectors in the 3GPP Urban Channel and imperfect CSI. The channel is estimated with the LMMSE channel estimator. As can be observed from the figure, the proposed ICD scheme outperforms the centralized and distributed MMSE detectors, and shows a similar performance as the distributed EP detector with perfect CSI and -QAM symbols.

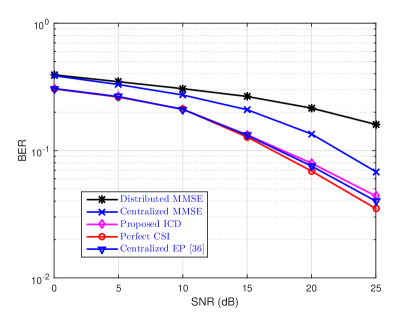

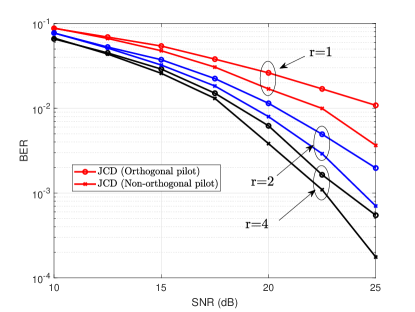

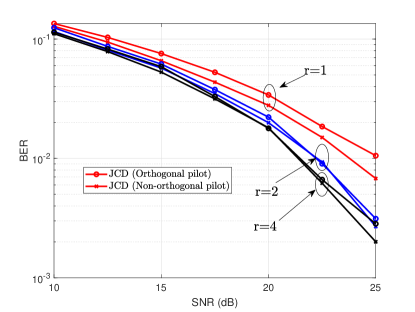

VI-E Non-Orthogonal Pilots

In this section, we consider the performance of ICD with different pilots in the channel training stage. In particular, the orthogonal pilot matrix is chosen by selecting columns from the discrete Fourier transformation (DFT) matrix . For a non-orthogonal pilot, each element of the pilot matrix is drawn from -QAM. Furthermore, we consider and for the two pilots. Fig. 8 shows the BERs of the distributed detectors in the ICD architecture, where indicates no data feedback to the channel estimator while refers to the scenario where the detected data are fed back to channel estimator once. As can be observed from the figure, the data feedback can improve the system performance significantly. Specifically, if we target for a BER = with QPSK symbols and non-orthogonal pilots, data feedback () can bring dB and data feedback () can bring dB performance gain, respectively. Furthermore, the performance gain will be enlarged if orthogonal DFT pilots are used. Interestingly, using DFT pilots has a similar performance as that of non-orthogonal pilots. This demonstrates that the ICD scheme is an efficient way to enable non-orthogonal pilots for reducing the pilot overhead in cell-free massive MIMO systems.

(a) QPSK

(b) 16-QAM

VII Conclusion

In this paper, we proposed a distributed EP detector for cell-free massive MIMO. It was shown that the proposed detector achieves better performance than linear detectors for both conventional and scalable cell-free massive MIMO networks. Compared to other distributed detectors, it achieves a better BER performance with an increase of the computational overhead at the CPU. A distributed ICD was then proposed for cell-free massive MIMO systems to handle imperfect CSI. An analytical framework was also provided to characterize the asymptotic performance of the proposed detector in a large system setting. Simulation results demonstrated that the proposed method outperforms existing distributed detectors for cell-free massive MIMO in terms of BER performance. Furthermore, the proposed ICD architecture significantly improves the system performance and enables non-orthogonal pilots to reduce the pilot overhead. For future research, it is interesting to extend the distributed EP algorithm to precoding and channel estimation for cell-free massive MIMO systems.

Appendix A Message-Passing Derivation of Algorithm 1

A-A Derivation of Distributed EP Detector

In this appendix, we present the derivation of Algorithm 1 from the message-passing perspective666We also can derive Algorithm 1 by directly utilizing the EP principle in [49], which is an extension of belief propagation and obtained by imposing an exponential family constraint on messages. Both BP and EP can be derived from the generic message passing rules introduced in Section III-B.. By applying the above message-passing rules to the factor graph in Fig. 2, we can obtain Algorithm 1. First, we give the factor graph for cell-free massive MIMO detection. As illustrated in Fig. 2, we have factor graphs for describing the centralized processing model (4) and distributed processing model (6). Specifically, the factor graph contains factor nodes and one variable node for the distributed processing model (6). The factor represents the prior information of and denotes -th AP. After constructing the factor graph for (6), we approximate the posterior distribution by computing and passing messages among the nodes in the factor graph with an iterative manner and aforementioned rules. We initialize the messages from the variable node to factors as . Then, according to Factor-to-variable messages, we have the message 777As each AP serves all users, only one variable should be considered in the message passing process. Therefore, we have a reduced form as .. The message is initialized as for each AP. Therefore, how to compute the belief is of great significance and it is given by

| (44) |

The explicit expression for KL divergence is given in (38). Since obtaining a distribution to minimize the KL divergence is very difficult, we consider is a Gaussian distribution with the same mean and covariance matrix with the distribution . Therefore, we have with and , which yields (14) and (15) in Algorithm 1. Then, we have

| (45) |

where we approximate by . The principles behind the approximation are moment-matching and self-averaging [49, 50]. According to the Gaussian production lemma, we have (16) and (17) to obtain and in Algorithm 1, respectively. Next, we consider the message to be the product of messages passing from each APs (factors) to and we have

| (46) |

where and are obtained from (18) and (19). We then approximate the belief on as . The mean and variance are obtained by moment-matching and self-averaging, and denoted by (20) and (21), respectively. As the transmitted symbol is assumed to be drawn from -QAM set, the explicit expressions for (20) and (21) are given by (12) and (13). Similar to (45), we set the message as

A-B Derivation of MRC Combining

For distributed EP-based MIMO detection, the challenge is how to combine the message from different APs. In our paper, we focus on linear combining of the local estimate and from APs as follows,

| (49) |

As the linear model (3) is decoupled into the equivalent AWGN model, we focus on the scalar form of the (49) with

| (50) |

where is the local estimate for the -user from the -th AP, and it depends on the variance . As in (10) and (11)., we write the estimate , where represents equivalent noise with known variance . For the -th user, the optimal linear combining is . Thus, we have . Because should be an unbiased estimate of , we have . After combining, the equivalent SNR is given by

| (51) |

As a result, the combing problem becomes an optimization problem

| (52) |

By using the method of Lagrange multipliers, it is easy to obtain

| (53) |

Appendix B Derivation of ICD

In this appendix, we present the derivation of the equivalent channel estimation error in the data detection stage, as well as the derivation of data detection error in channel estimation stage.

B-A Derivation of Equivalent Channel Estimation Error

The equivalent noise in the signal detector is assumed to be Gaussian distributed with zero mean and covariance matrix , which can be obtained by considering the statistical properties of the channel estimation error. For the convenience of simplicity, we omit the time index . The detailed expression for is then given by

| (54) |

where is the variance of -th element for channel estimation error matrix obtained from .

B-B Derivation of Data Detection Error

Owing to the data feedback from the signal detector to the channel estimator, the LMMSE channel estimation in the data-aided stage is given by

| (55) |

where , , , and . The covariance matrix of the channel estimation error vector can be computed as

| (56) |

The covariance matrix contains the equivalent noise power of the actual pilot and additional pilot . The explicit expression for is given by

| (59) |

where

| (63) |

and

| (64) |

Denote , , where is the -th column of the signal detection error matrix and is the variance for -th element of . The signal detection error matrix can be obtained from the posterior variance in the module A.

References

- [1] H. He, H. Wang, X. Yu, J. Zhang, S.H. Song, K. B. Letaief, “Distributed expectation propagation detection for cell-free massive MIMO,” in Proc. IEEE Global Commun. Conf. (GLOBECOM), Madrid, Spain, 2021.

- [2] J. G. Andrews, S. Buzzi, W. Choi, S. V. Hanly, A. Lozano, C. K. Soong, and J. C. Zhang, “What will 5G be?” IEEE J. Sel. Areas Commun., vol. 32, no. 6, pp. 1065-1082, 2014.

- [3] K. B. Letaief, Y. Shi, J. Lu, and J. Lu, “Edge artificial intelligence for 6G: Vision, enabling technologies, and applications,” IEEE J. Sel. Areas Commun., vol. 40, no. 1, pp. 5-36, 2022.

- [4] K. B. Letaief, W. Chen, Y. Shi, J. Zhang, and Y.-J.-A. Zhang, “The roadmap to 6G: AI empowered wireless networks,” IEEE Commun. Mag., vol. 57, no. 8, pp. 84-90, Aug. 2019.

- [5] T. L. Marzetta, “Noncooperative cellular wireless with unlimited numbers of base station antennas,” IEEE Trans. Wireless Commun., vol. 9, no. 11, pp. 3590–3600, Nov. 2010.

- [6] H. Q. Ngo, A. Ashikhmin, H. Yang, E. G. Larsson, and T. L. Marzetta, ”Cell-free massive MIMO versus small cells,” IEEE Trans. Wireless Commun., vol. 16, no. 3, pp. 1834–1850, Mar. 2017.

- [7] J. Zhang, S. Chen, Y. Lin, J. Zheng, B. Ai, and L. Hanzo, “Cell-free massive MIMO: A new next-generation paradigm,” IEEE Access, vol. 7, pp. 99878–99888, Sep. 2019.

- [8] J. Zhang, E. Björnson, M. Matthaiou, D. W. K. Ng, H. Yang, and D. J. Love, “Prospective multiple antenna technologies for beyond 5G,” IEEE J. Sel. Areas Commun., vol. 38, no. 8, pp. 1637-1660, Aug. 2020.

- [9] M. Matthaiou, O. Yurduseven, H. Q. Ngo, D. Morales-Jimenez, S. L. Cotton, and V. F. Fusco, “The road to 6G: Ten physical layer challenges for communications engineers,” IEEE Commun. Mag., vol. 59, no. 1, pp. 64-69, Jan. 2021.

- [10] H. He, X. Yu, J. Zhang, S.H. Song, K. B. Letaief, “Cell-Free Massive MIMO for 6G Wireless Communication Networks,” Journal of Communications and Information Networks, vol. 6, no. 4, pp. 321-335, Dec. 2021.

- [11] G. Interdonato, E. Björnson, H. Q. Ngo, P. Frenger, and E. G. Larsson, “Ubiquitous cell-free massive MIMO communications,” EURASIP J. Wireless Commun. and Netw., vol. 2019, no. 1, pp. 197-206, 2019.

- [12] D. Wang, C. Zhang, Y. Du, J. Zhao, M. Jiang, and X. You, “Implementation of a cloud-based cell-free distributed massive MIMO system,” IEEE Commun. Mag., vol. 58, no. 8, pp. 61-67, 2020.

- [13] W. Choi, J. G. Andrews, “Downlink performance and capacity of distributed antenna systems in a multicell environment,” IEEE Trans. Wireless Commun., vol. 6, no. 1, pp. 69-73, Jan. 2007.

- [14] J. Zhang, R. Chen, J. G. Andrews, A. Ghosh, and R. W. Heath Jr, “Networked MIMO with clustered linear precoding,” IEEE Trans. Wireless Commun., vol. 8, no. 4, pp. 1910-1921, Apr. 2009.

- [15] Ö. Özdogan, E. Björnson, and J. Zhang, “Performance of cell-free massive MIMO with rician fading and phase shifts,” IEEE Trans. Wireless Commun., vol. 18, no. 11, pp. 5299-5315, Nov. 2019.

- [16] Z. Wang, J. Zhang, E. Björnson, and B. Ai, “Uplink performance of cell-free massive MIMO over spatially correlated Rician fading channels,” IEEE Commun. Lett., vol. 25, no. 4, pp. 1348-1352, Apr. 2020.

- [17] S.-N. Jin, D.-W. Yue, and H. H. Nguyen, “Spectral and energy efficiency in cell-free massive MIMO systems over correlated Rician fading,” IEEE Syst. J., pp. 1-12, May. 2020.

- [18] H. Q. Ngo, L. Tran, T. Q. Duong, M. Matthaiou, and E. G. Larsson, “On the total energy efficiency of cell-free massive MIMO,” IEEE Trans. Green Commun. Netw, vol. 2, no. 1, pp. 25-39, 2018.

- [19] H. V. Nguyen, V. D. Nguyen, O. A. Dobre, S. K. Sharma, S. Chatzinotas, B. Ottersten, and O. S. Shin, “On the spectral and energy efficiencies of full-duplex cell-free massive MIMO,” IEEE J. Sel. Areas Commun., vol. 38, no. 8, pp. 1698-1718, Aug. 2020.

- [20] E. Björnson and L. Sanguinetti, “Scalable cell-free massive MIMO systems,” IEEE Trans. Commun., vol. 68, no. 7, pp. 4247–4261, Jul. 2020.

- [21] S. Buzzi, C. D’Andrea, “Cell-free massive MIMO: User-centric approach,” IEEE Wireless Commun. Lett., vol. 6, no. 6, pp. 706-709, Dec. 2017.

- [22] S. Buzzi, C. D’Andrea, A. Zappone, and C. D’Elia, “User-centric 5G cellular networks: Resource allocation and comparison with the cell-free massive MIMO approach,” IEEE Trans. Wireless Commun., vol. 19, no. 2, pp. 1250-1264, Feb. 2020.

- [23] H. A. Ammar, R. Adve, S. Shahbazpanahi, G. Boudreau, and K. V. Srinivas, “User-centric cell-free massive MIMO networks: A survey of opportunities, challenges and solutions,” IEEE Commun. Surv. Tutor., vol. 24, no. 1, pp. 611-652, 2022.

- [24] A. A. Polegre, F. Riera-Palou, G. Femenias, and A. G. Armada, “Channel hardening in cell-free and user-centric massive MIMO networks with spatially correlated ricean fading,” IEEE Access, vol. 8, pp. 139827-139845, 2020.

- [25] F. Riera-Palou, G. Femenias, A. G. Armada, and A. Pérez-Neira, “Clustered cell-free massive MIMO,” in Proc. IEEE Globecom Workshops (GC Wkshps), Dec. 2018, pp. 1-6.

- [26] E. Nayebi, A. Ashikhmin, T. L. Marzetta, and B. D. Rao, “Performance of cell-free massive MIMO systems with MMSE and LSFD receivers,” in Proc. 50th Asilomar Conf. Signals, Syst. Comput., Nov. 2016, pp. 203–207.

- [27] E. Björnson and L. Sanguinetti, “Making cell-free massive MIMO competitive with MMSE processing and centralized implementation,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 77–90, Jan. 2020.

- [28] Z. H. Shaik, E. Björnson, and E. G. Larsson, “MMSE-optimal sequential processing for cell-free massive MIMO with radio stripes,” IEEE Trans. Commun., vol. 69, no. 11, pp. 7775-7789, Nov. 2021.

- [29] Carmen D’Andrea, E. Bjornson, and E. G. Larsson, “Improving cell-free massive MIMO by local per-bit soft detection,” IEEE Commun. Lett., vol. 25, no. 7, pp. 2400-2404, Apr. 2021.

- [30] T. P. Minka, “A family of algorithms for approximate Bayesian Inference,” Ph.D. dissertation, Dept. Elect. Eng. Comput. Sci., MIT, Cambridge, MA, USA, 2001.

- [31] J. Céspedes, P. M. Olmos, M. Sánchez-Fernández, and F. Perez-Cruz, “Expectation propagation detection for high-order high-dimensional MIMO systems,” IEEE Trans. Commun., vol. 62, no. 8, pp. 2840–2849, Aug. 2014.

- [32] C. Jeon, K. Li, J. R. Cavallaro, and C. Studer, “Decentralized equalization with feedforward architectures for massive MU-MIMO,” IEEE Trans. Signal Process., vol. 67, no. 17, pp. 4418–4432, Sep. 2019.

- [33] H. Wang, A. Kosasih, C. Wen, S. Jin, and W. Hardjawana, “Expectation propagation detector for extra-large scale massive MIMO,” IEEE Trans. Wireless Commun., vol. 19, no. 3, pp. 2036–2051, Mar. 2020.

- [34] K. Ghavami and M. Naraghi-Pour, “MIMO detection with imperfect channel state information using expectation propagation,” IEEE Trans. Veh. Technol., vol. 66, no. 9, pp. 8129-8138, Sep. 2017.

- [35] R. Gholami, L. Cottatellucci, and D. Slock, “Message passing for a bayesian semi-blind approach to cell-free massive mimo,” in Proc. 55th Asilomar Conf. Signals Syst. Comput., Nov, 2021, pp. 1237-1241.

- [36] A. Kosasih, et al. “Improving Cell-Free Massive MIMO Detection Performance via Expectation Propagation,” in Proc. IEEE 94th Veh. Technol. Conf. (VTC-Fall), Sep. 2021, pp. 1-6.

- [37] F. R. Kschischange, B. J. Frey, and H. A. Loeliger, “Factor graphs and the sum-product algorithm,” IEEE Trans. Inf. Theory, vol. 42, no. 2, pp. 498-519, Feb. 2001.

- [38] M. Coldrey and P. Bohlin, “Training-based MIMO systems: Part II:Improvements using detected symbol information,” IEEE Trans. Signal Process., vol. 56, no. 1, pp. 296-303, Jan. 2008.

- [39] H. He, C.-K. Wen, S. Jin, and G. Y. Li, “Model-driven deep learning for MIMO detection,” IEEE Trans. Signal Process., vol. 68, pp. 1702–1715, Mar. 2020.

- [40] R. Prasad, C. R. Murthy, and B. D. Rao, “Joint channel estimation and data detection in MIMO-OFDM systems: A sparse Bayesian learning approach,” IEEE Trans. Signal Process., vol. 63, no. 20, pp. 5369-5382, Oct. 2015.

- [41] J. Ma and L. Ping, “Data-aided channel estimation in large antenna systems,” IEEE Trans. Signal Process., vol. 62, no. 12, pp. 3111-3124, Jun. 2014.

- [42] C.-K. Wen, C.-J. Wang, S. Jin, K.-K. Wong, and P. Ting, “Bayes-optimal joint channel-and-data estimation for massive MIMO with low-precision ADCs” IEEE Trans. Signal Process., vol. 64, no. 10, pp. 2541-2556, Jul. 2015.

- [43] H. Song, X. You, C. Zhang, O. Tirkkonen, C. Studer, “Minimizing pilot overhead in cell-Free massive MIMO systems via joint estimation and detection,” in Proc. IEEE 21st Int. Workshop Signal Process. Adv. Wireless Commun. (SPAWC), Atlanta, GA, USA, May. 2020, pp. 1-5.

- [44] K. Takeuchi, “Rigorous dynamics of expectation-propagation-based signal recovery from unitarily invariant measurements,” IEEE Trans. Inf. Theory., vol. 66, no. 1, 368-386, Oct. 2019.

- [45] A. M. Tulino and S. Verdu, Random Matrix Theory and Wireless Communications. Hanover, MA USA: Now Publishers Inc., 2004.

- [46] J. G. Proakis, Digital Communications. Boston, USA: McGraw-Hill Companies, 2007.

- [47] A. Fletcher, M. Sahraee-Ardakan, S. Rangan, and P. Schniter, “Expectation consistent approximate inference: Generalizations and convergence,” in Proc. IEEE Int. Symp. Inf. Theory, Barcelona, Spain, Jul. 2016, pp. 190-194.

- [48] 3GPP, “Further advancements for E-UTRA physical layer aspects (Release 9),” 3GPP TS 36.814, Tech. Rep., Mar. 2017.

- [49] T. Minka, “Divergence measures and message passing,” Microsoft Research Cambridge, Tech. Rep., 2005.

- [50] S. Rangan, P. Schniter, and A. Fletcher, “Vector approximate message passing” IEEE Trans. Inf. Theory, vol. 65, no. 10, pp. 6664-6684, Oct. 2019.